Abstract

In population genetics, the Dirichlet (also called the Balding–Nichols) model has for 20 years been considered the key model to approximate the distribution of allele fractions within populations in a multi-allelic setting. It has often been noted that the Dirichlet assumption is approximate because positive correlations among alleles cannot be accommodated under the Dirichlet model. However, the validity of the Dirichlet distribution has never been systematically investigated in a general framework. This paper attempts to address this problem by providing a general overview of how allele fraction data under the most common multi-allelic mutational structures should be modeled. The Dirichlet and alternative models are investigated by simulating allele fractions from a diffusion approximation of the multi-allelic Wright–Fisher process with mutation, and applying a moment-based analysis method. The study shows that the optimal modeling strategy for the distribution of allele fractions depends on the specific mutation process. The Dirichlet model is only an exceptionally good approximation for the pure drift, Jukes–Cantor and parent-independent mutation processes with small mutation rates. Alternative models are required and proposed for the other mutation processes, such as a Beta–Dirichlet model for the infinite alleles mutation process, and a Hierarchical Beta model for the Kimura, Hasegawa–Kishino–Yano and Tamura–Nei processes. Finally, a novel Hierarchical Beta approximation is developed, a Pyramidal Hierarchical Beta model, for the generalized time-reversible and single-step mutation processes.

Similar content being viewed by others

References

1000 Genomes Project Consortium et al (2015) A global reference for human genetic variation. Nature 526(7571):68–74

Aitchison J (1986) The statistical analysis of compositional data. Chapman and Hall, Boca Raton

Balding DJ, Nichols RA (1995) A method for quantifying differentiation between populations at multi-allelic loci and its implications for investigating identity and paternity. Genetica 96:3–12

Balding DJ, Nichols RA (1997) Significant genetic correlations among Caucasians at forensic DNA loci. Heredity 78(6):583–589

Balding DJ, Steele CD (2015) Weight-of-evidence for forensic DNA profiles, 2nd edn. Wiley, Woolloongabba

Crow JF, Kimura M (1970) An introduction to population genetics theory. Harper & Row, Publishers, New York

De Maio N, Schrempf D, Kosiol C (2015) PoMo: an allele frequency-based approach for species tree estimation. Syst Biol 64(6):1018–1031

Etheridge A (2012) Some mathematical models from population genetics. Springer, Berlin

Ewens WJ (2004) Mathematical population genetics 1: I. Theoretical introduction, vol 27. Springer, New York

Felsenstein J (2004) Inferring phylogenies. Sinauer Associates, Sunderland

Gautier M, Vitalis R (2013) Inferring population histories using genome-wide allele frequency data. Mol Biol Evol 30(3):654–68

Griffiths RC, Spanò D (2010) Diffusion processes and coalescent trees. In: Bingham NH, Goldie CM (eds) Probability and mathematical genetics: papers in honour of Sir John Kingman. Cambridge University Press, Cambridge, pp 358–379

Hasegawa M, Kishino H, Yano T (1985) Dating of human-ape splitting by a molecular clock of mitochondrial DNA. J Mol Evol 22(2):160–174

Hobolth A, Sirén J (2016) The multivariate Wright–Fisher process with mutation: moment-based analysis and inference using a hierarchical Beta model. Theor Popul Biol 108:36–50

Hodgkinson A, Eyre-Walker A (2010) Human triallelic sites: Evidence for a new mutational mechanism? Genetics 184(1):233–241

Jenkins PA, Mueller JW, Song YS (2014) General triallelic frequency spectrum under demographic models with variable population size. Genetics 196:295–311

Jukes TH, Cantor CR (1969) Evolution of protein molecules. Academic Press, New York, pp 21–132

Kimura M (1980) A simple method for estimating evolutionary rates of base substitutions through comparative studies of nucleotide sequences. J Mol Evol 16(2):111–120

Kimura M, Ohta T (1978) Stepwise mutation model and distribution of allele frequencies in a finite population. Proc Natl Acad Sci 75(6):2868–2872

Motoo K (1955a) Random genetic drift in multi-allelic locus. Evolution 9(4):419–435

Motoo K (1955b) Solution of a process of random genetic drift with a continuous model. Proc Natl Acad Sci U S A 41(3):144

Nicholson G, Smith AV, Jónsson F, Gustafsson Ó, Stefánsson K, Donnelly P (2002) Assessing population differentiation and isolation from single-nucleotide polymorphism data. J R Stat Soc Ser B (Stat Methodol) 64(4):695–715

Ongora A, Migliorati S, Monti GS (2008) A new distribution on the simplex containing the Dirichlet family. In: Proceedings of the 3rd compositional data analysis workshop, 27–30 May. University of Girona

Pickrell JK, Pritchard JK (2012) Inference of population splits and mixtures from genome-wide allele frequency data. PLoS Genet 8(11):e1002967

Ross SM (1996) Stochastic processes, 2nd edn. Wiley, Hoboken

Sirén J, Marttinen P, Corander J (2011) Reconstructing population histories from single nucleotide polymorphism data. Mol Biol Evol 28:673–683

Sirén J, Hanage WP, Corander J (2013) Inference on population histories by approximating infinite alleles diffusion. Mol Biol Evol 30(2):457–468

Swofford DL, Olsen GJ, Waddell PJ, Hillis DM (1996) Phylogenetic inference. Sinauer Associates, Sunderland

Tamura K, Nei M (1993) Estimation of the number of nucleotide substitutions in the control region of mitochondrial DNA in humans and chimpanzees. Mol Biol Evol 10(3):512–526

Tataru P, Bataillon T, Hobolth A (2015) Inference under a Wright–Fisher model using an accurate Beta approximation. Genetics 201:1133–1141

Tataru P, Simonsen M, Bataillon T, Hobolth A (2016) Statistical inference in the Wright–Fisher model using allele frequency data. Syst Biol 66:e30–e46

Teh YW, Jordan MI, Beal MJ, Blei DM (2006) Hierarchical Dirichlet processes. J Am Stat Assoc 101:1566–1581

Wong TT (2010) Parameter estimation for generalized Dirichlet distributions from the sample estimates of the first and the second moments of random variables. Comput Stat Data Anal 54(7):1756–1765

Acknowledgements

We are grateful to the associate editor and two anonymous reviewers for helpful comments and suggestions. This work is funded through a Grant from the Danish Research Council (DFF 4002-00382) awarded to Asger Hobolth.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

(Co)variance for PIM

In order to calculate the matrix exponential \(e^{Qt}=I+\sum \nolimits _{k=1}^{\infty } (Qt)^k/k!\) we need to find an expression for \(Q^k\), where \(Q=\mathbf {e}'\beta -\beta _0 I\) for \(\beta =(\beta _1,\dots ,\beta _K)\). By induction and using that \(\mathbf {e}'\beta \mathbf {e}'=\beta _0 \mathbf {e}'\), we generally get \(Q^k = (-\beta _0)^{k-1}(\mathbf {e}'\beta - \beta _0 I)\). This implies that the matrix exponential is given by

where \(a(t)=e^{-\beta _0 t}\) and \(\overline{\beta }=\beta /\beta _0\). The mean is therefore specified by

We ought to calculate the (co)variance specified in Theorem 2.1 for the WF PIM process. By using that \(x(0)\mathbf {e}{'}=\mathbf {e}x(0){'}=1\) and \(\overline{\beta }\mathbf {e}{'}=\mathbf {e}\overline{\beta }{'}=1\), the last term in the (co)variance is given by

The first term of the (co)variance involves an integral and is more tedious to calculate

by using \(\mathbf {e}{{\,\mathrm{diag}\,}}(\overline{\beta })=\overline{\beta }\), \({{\,\mathrm{diag}\,}}(x(0))\mathbf {e}{'}=x(0){'}\), \(\mathbf {e}{{\,\mathrm{diag}\,}}(x(0))=x(0)\), assuming \(\beta _0\ne 1\), and

The final expression for the (co)variance becomes

(Co)variance for IAM

This section provides more elegant calculations to obtain the (co)variance expression for the IAM process compared to the computations obtained by Sirén et al. (2013). By induction we obtain the following expression in matrix notation of the matrix exponential for the IAM process

Let \({\tilde{x}}(0)=(x_1(0),\dots ,x_{K-1}(0),x_K(0)-1)\) and \({\tilde{\mathbf {1}}}=(0,\dots ,0,1)\) be \((1\times K)\)-dimensional vectors. We provide a proof of the expression for the (co)variance in Eq. (1) in Corollary 3.3, where the last term is given by

The first term of the (co)variance becomes

The final expression for the (co)variance becomes by letting \(\widehat{x}(0)=(x_1(0),\dots ,x_{K-1}(0))\)

where

(Co)variance for HKY, TN and SSM

This section provides a proof of the expression for the (co)variance for the TN process in Eq. (3) in Corollary 3.5 (and for the HKY and SSM processes). The matrix exponential can be written on the form \(e^{Qt}=\sum _{i=1}^4 a_ie^{-b_i t}A_i\), which makes the last term in the (co)variance very easy to calculate

The first term of the (co)variance is given by

Moment-based parameter estimation

This section outlines how to estimate the free parameters in the four models: Dirichlet, Beta–Dirichlet, Hierarchical Beta and Pyramidal Hierachical Beta, using the moment-based method.

1.1 Dirichlet

Consider a K-dimensional Dirichlet distributed allele fraction data \(x(t)=(x_1(t),\dots ,x_K(t)) \sim {{\,\mathrm{Dirichlet}\,}}(\alpha )\) with parameter \(\alpha =(\alpha _1,\dots ,\alpha _K)\). The mean and (co)variance under the Dirichlet model are given by

We ought to estimate \(\alpha \) and \(\alpha _0\) from the Dirichlet means and (co)variances displayed in Table 1. An estimate of \(\alpha \) is obtained from the mean: \(\widehat{\alpha }={{\,\mathrm{E}\,}}[x(t)]\alpha _0\). The parameter \(\alpha _0\) is estimated by summing the known (co)variance over the number of allelic types

which implies

We then estimate the mean and variance of the stochastic variables as follows for \(k=1,\dots ,K-1\)

1.2 Beta–Dirichlet

Consider a K-dimensional Beta–Dirichlet distributed allele fraction data \(x(t)=((1-x_K(t))y,x_K(t))\), where

are independent. The mean and (co)variance under the Beta–Dirichlet model are given by

where

The Beta–Dirichlet model has \(K+1\) free parameter: \(\mu ,\phi ,\alpha =(\alpha _1,\dots ,\alpha _{K-1})\). Having calculated the means and (co)variances of the model displayed in Table 2, we now discuss how these parameters are estimated from the \(K+1\) parameters \(t,m,x(0)=(x_1(0),\dots ,x_K(0))\) in the IAM process. Let the expressions for the means in the Beta–Dirichlet model and the IAM process for \(x_K(t)\) and \((x_1(t),\dots ,x_{K-1}(t))=(1-x_K(t))y\), respectively, be equal

The first part of Eq. (8) estimates the parameter \(\widehat{\mu }\). The parameter \(\phi \) is estimated by letting the expressions for the (co)variance of \(x_K(t)\) in the two models be equal and applying Eq. (8)

which is equivalent to

We observe that the two expressions \(A(\mu ,\phi ,\alpha _0)\) and \(F(t,m,x_K(0))\) are equal

and can therefore estimate the parameter \(\alpha _0\) from this equality by using Eq. (8)

The second part of Eq. (8) estimates \(\widehat{\alpha }_j=(\alpha _0e^{-mt}x_j(0))/(1-\mu )\), \(j=1,\dots ,K-1\). We then estimate the mean and variance of the stochastic variables as follows for \(k=1,\dots ,K-2\)

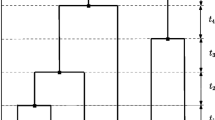

1.3 Hierarchical Beta

Consider a 4-dimensional Hierarchical Beta distributed allele fraction data \(x(t)=(x_1(t),x_2(t),x_3(t),x_4(t))\). The Hierarchical Beta model has 6 free parameter: 3 means \((\mu _{\omega }, \mu _{\eta _1}, \mu _{\eta _2})\) and 3 variances \((V_{\omega }, V_{\eta _1}, V_{\eta _2})\). Hobolth and Sirén (2016) provide the calculations of the means and (co)variances displayed in Table 3.

The mean of \((x_1, x_2, x_3, x_4)\) is a bijective function of \((\mu _{\omega }, \mu _{\eta _1}, \mu _{\eta _2})\). The means \((\mu _{\omega }, \mu _{\eta _1}, \mu _{\eta _2})\) are therefore completely determined from the means of the allele fractions

The variances are determined from the following three equations by isolating the variable of interest

where \(f_i: \mathbf {R} \rightarrow \mathbf {R}\), \(i=1,2\), are functions of the variance of interest.

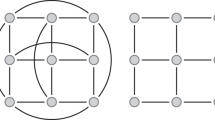

1.4 Pyramidal hierarchical Beta

The Pyramidal model has \((K-1)(K+2)/2\) free parameters (\(K-1\) means: \(\mu _{\eta _k}\), and \(K(K-1)/2\) variances: \(V_{\omega _{ij}}\), \(i=1,\dots ,K-2\), \(j=1,\dots ,i\), and \(V_{\eta _k}\), \(k=1,\dots ,K\)). The calculated means of the model are displayed in Table 4 for \(K=4\), while the variances and covariances are depicted in Table 7. We discuss how the 9 free parameters [3 means: \((\mu _{\eta _{1}},\mu _{\eta _2},\mu _{\eta _3})\) and 6 variances: \((V_{\omega _{11}},V_{\omega _{21}},V_{\omega _{22}}, V_{\eta _1},V_{\eta _2},V_{\eta _3})\)] are estimated.

The mean of \((x_1,x_2,x_3,x_4)\) is a bijective function of \((\mu _{\eta _1},\mu _{\eta _2},\mu _{\eta _3})\). The means \((\mu _{\eta _{1}},\mu _{\eta _2},\mu _{\eta _3})\) are therefore completely determined from the means as follows

The remaining variances are determined from the following (co)variances by isolating the variable of interest

where \(f_{i}: \mathbf {R}\rightarrow \mathbf {R}\), \(i=1,2,3,11,21,22\), is a function of the variances or covariances provided in Table 7. By isolating the parameter of interest, we obtain an expression for the variance parameters under the Pyramid model. A similar extended method is used to in the general case with a K-dimensional allele fraction vector \(x=(x_1,\dots ,x_K)\).

Rights and permissions

About this article

Cite this article

Speed, M.S., Balding, D.J. & Hobolth, A. A general framework for moment-based analysis of genetic data. J. Math. Biol. 78, 1727–1769 (2019). https://doi.org/10.1007/s00285-018-01325-0

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00285-018-01325-0

Keywords

- Allele fraction

- Beta–Dirichlet

- Diffusion

- Dirichlet

- Distribution of allele fractions

- Evolutionary history

- Hierarchical Beta

- Moments

- Multi-allelic Wright–Fisher

- Mutation processes

- Pyramid