Abstract

This work presents global surrogate modelling of mechanical systems with elasto-plastic material behaviour based on support vector regression (SVR). In general, the main challenge in surrogate modelling is to construct an approximation model that is able to capture the non-smooth behaviour of the system of interest. This paper investigates the ability of SVR to deal with discontinuous and highly non-smooth outputs. Two kernel functions, namely the Gaussian and the Matérn 5/2 kernel, are examined and compared for a one-dimensional, purely phenomenological elasto-plastic case. Thereafter, an essential part of this paper addresses the application of SVR to a two-dimensional elasto-plastic case computed with the finite element method. In this study, the computational cost of SVR is reduced by using an anisotropic training grid in which the number of points is increased only in the directions of the most important input parameters. Finally, the SVR accuracy is improved by smoothing the response surface based on linear regression. The SVR is constructed using an in-house MATLAB code, while Abaqus is used as the finite element solver.

1 Introduction

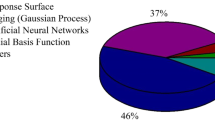

Computational simulation is increasingly used in structural mechanics for design optimisation, reliability analysis, uncertainty quantification and sensitivity analysis. In practical applications, identifying parameters and assessing the properties of mechanical structures with real physical experiments require a large number of simulations of the high-fidelity model. However, repeated simulations of the parameterised model remain extremely time-consuming and involve a huge computational effort. In order to reduce the computational burden associated with predictive modelling of complex engineering problems, the model’s response is often replaced by a simplified mathematical model known as a surrogate model, also referred to as a meta-model. In general, the surrogate model is built by treating the original model as a black box which is evaluated for only a limited number of simulations. Accordingly, the approximated model can be built to resemble the behaviour of the high-fidelity model while preserving accuracy and being computationally cheaper to evaluate. Particularly over the last decade, different surrogate modelling techniques have been proposed in the literature, such as proper orthogonal decomposition (POD) [1,2,3,4,5], polynomial response surfaces [6, 7], polynomial chaos expansion (PCE) [8,9,10], Gaussian process models [11,12,13] and support vector regression (SVR) [14,15,16]. Among these techniques, SVR has attracted considerable attention due to its efficiency and robustness in approximating the model response in various engineering problems. For instance, SVR was applied to structural crashworthiness design optimisation in [17]. Pan et al. [18] utilised SVR as a surrogate model for lightweight vehicle design. A least squares support vector regression meta-model technique for sheet metal forming optimisation was proposed in [19]. 
Moustapha et al. applied SVR to nonlinear structural analysis in [20]. More recently, SVR was applied to structural reliability analysis in [21] and to the estimation of water quality parameters in [22]. Moreover, a comprehensive comparison between SVR and various meta-model techniques, including response surface methodology, kriging, radial basis functions and multivariate adaptive regression splines, was carried out in [23]. That study shows that SVR outperformed all other meta-model techniques in terms of prediction accuracy and robustness.

Many engineering problems involve non-smooth responses which exhibit discontinuities or sharp changes for certain combinations of the input parameters. This behaviour often occurs in nonlinear finite element analysis, where the output response may behave non-smoothly in the parametric space. Consequently, approximating the non-smooth response of the underlying physical problem by a smooth global surrogate model might lead to large errors and poor prediction capability. This issue has been addressed in [24,25,26]. To overcome this problem, several approaches have been proposed in the literature based on data mining techniques such as clustering [27], which automatically determines the input domains of similar responses. In addition, a decomposition of the input space was applied in [28], where the different behaviours of the response surface are approximated locally. Multi-element approaches were applied in [29,30,31], where the parametric space is discretised into non-overlapping elements and the surrogate model is constructed element-wise, which weakens the influence of the non-smoothness within each element. However, these techniques might require additional computations to tackle the non-smooth behaviour of the output. In this paper, the optimisation of the SVR parameters is investigated in order to build a single, global surrogate model that is able to capture non-smooth responses. The developed SVR model is examined for a nonlinear elasto-plastic problem in which the output of interest exhibits highly non-smooth behaviour in the input parametric space. The accuracy and the computational cost of SVR are studied and compared for two types of kernel functions, namely the Gaussian and the Matérn 5/2 kernel. More details about the behaviour of different types of kernel functions can be found in [32]. 
Thereafter, the computational cost of SVR is reduced by applying an anisotropic training grid in which the number of training points is increased only in the direction of the input parameter for which the response is highly nonlinear. Finally, this paper provides a technique for smoothing the non-smooth output response using linear regression. The smoothing method can be applied to enhance the accuracy and prediction capability of SVR, in addition to reducing the computational cost.

2 Support vector regression

The main concept of SVR is to generate a surrogate model of the underlying complex physical response which depends only on a given training set \(S=\{{\textbf{x}}_i,y_i\}_{i=1}^{n}\) of n training points \({\textbf{x}}_i\in {\mathbb {R}}^{d}\) with corresponding output \(y_i \in {\mathbb {R}}\). The SVR aims at approximating a linear regression function

\[ {\hat{y}}\left( {\textbf{x}}\right) =\langle {\textbf{w}}\,,\varvec{\phi }\left( {\textbf{x}}\right) \rangle +b \tag{1} \]

where \(\langle \cdot \,,\cdot \rangle \) denotes the inner product, \({\textbf{w}}\) and \(b \in {\mathbb {R}}\) are the regression parameters to be estimated using the training data \({\textbf{x}}\) and \(\varvec{\phi }\left( {\textbf{x}}\right) \) is the transformation of \({\textbf{x}}\) from the input space into the so-called feature space F. Here, the vector \({\textbf{x}}\) can be mapped into the feature space by using a kernel function \(K\left( {\textbf{x}}_i\,,{\textbf{x}}_j\right) \) that defines an inner product in this feature space as

\[ K\left( {\textbf{x}}_i\,,{\textbf{x}}_j\right) =\langle \varvec{\phi }\left( {\textbf{x}}_i\right) \,,\varvec{\phi }\left( {\textbf{x}}_j\right) \rangle \tag{2} \]

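Accordingly, the solution vector \({\textbf{w}}\) can be rewritten in its dual representation as [33]

\[ {\textbf{w}}=\sum _{i=1}^{n}\left( {\hat{\alpha }}_i-\alpha _i\right) \varvec{\phi }\left( {\textbf{x}}_i\right) \tag{3} \]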

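where \({\hat{\alpha }}_i\) and \(\alpha _i\) are the Lagrange multipliers representing the dual variables. Substituting Eqs. (2) and (3) into Eq. (1), the regression function becomes

\[ {\hat{y}}\left( {\textbf{x}}\right) =\sum _{i=1}^{n}\left( {\hat{\alpha }}_i-\alpha _i\right) K\left( {\textbf{x}}_i,{\textbf{x}}\right) +b=\tilde{\varvec{\alpha }}^T{\textbf{k}}+b \tag{4} \]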

with \(\tilde{\varvec{\alpha }}=\left[ {\tilde{\alpha }}_1,\cdots ,{\tilde{\alpha }}_n\right] ^T\) and \({\textbf{k}}=\left[ k_1 ,\cdots , k_n\right] ^T\) where \(k_i=K\left( {\textbf{x}}_i,{\textbf{x}}\right) \). The variables \(\tilde{\varvec{\alpha }}\) and b are determined by means of an optimisation problem. For this purpose, the \(\varepsilon \)-insensitive loss function is introduced as follows [34]:

Evaluating Eq. (5) at the training data points \({\textbf{x}}_i\) leads to the definition of the slack variables

The interpretation of the parameter \(\varepsilon \) and the slack variables \(\xi _i\) and \({\hat{\xi }}_i\) is illustrated in Fig. 1.

As can be seen, SVR aims to construct a function such that the training points lie inside a tube of given radius \(\varepsilon \). The slack variables \(\xi \) and \({\hat{\xi }}\) indicate how far a training point lies outside the \(\varepsilon \)-tube. For instance, \({\hat{\xi }}=0\) if the corresponding training point lies above the tube. In contrast, \({\xi }=0\) if a training point is located below the tube. Finally, both slack variables take the value \(\xi ={\hat{\xi }}=0\) if the training point is inside the tube. Here, the points that are not strictly located inside the \(\varepsilon \)-tube are called the support vectors. Typically, the SVR formulation is written as an optimisation problem that minimises the norm term \(\frac{1}{2}\Vert {\textbf{w}}\Vert ^2\) together with the \(\varepsilon \)-insensitive loss as

where C is a positive weighting constant known as the box constraint.
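
The roles of \(\varepsilon \) and C can be made concrete with a small sketch. It assumes the standard \(\varepsilon \)-insensitive loss (zero inside the tube, linear outside) and the usual primal objective \(\frac{1}{2}\Vert {\textbf{w}}\Vert ^2+C\sum _i(\xi _i+{\hat{\xi }}_i)\); the function names and the numbers are purely illustrative, and the exact form of Eqs. (5) to (7) may differ in detail.

```python
def eps_insensitive_loss(y, y_hat, eps):
    """Zero inside the eps-tube, linear distance to the tube outside it."""
    return max(0.0, abs(y - y_hat) - eps)


def primal_objective(w, y, y_hat, eps, C):
    """0.5*||w||^2 + C * sum of eps-insensitive losses (assumed standard form)."""
    norm_term = 0.5 * sum(w_j * w_j for w_j in w)
    # For each training point the loss equals xi_i + xi_hat_i,
    # because at most one of the two slack variables is non-zero.
    loss_term = sum(eps_insensitive_loss(a, b, eps) for a, b in zip(y, y_hat))
    return norm_term + C * loss_term


# Hypothetical numbers, for illustration only.
y_true = [1.0, 2.0, 3.0]
y_pred = [1.05, 2.5, 2.0]
objective = primal_objective([0.6, 0.8], y_true, y_pred, eps=0.1, C=10.0)
print(objective)
```

A larger C penalises points outside the tube more strongly, whereas a larger \(\varepsilon \) widens the tube and reduces the number of support vectors.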

The primal optimisation problem represented in Eq. (7) can be transformed into its dual form with the aid of the Lagrange multiplier technique as [35, 36]

where

and by satisfying the Karush–Kuhn–Tucker complementarity conditions

The solution of the optimisation problem results in the dual variables \(\tilde{\varvec{\alpha }}=\left[ {\tilde{\alpha }}_1\cdots {\tilde{\alpha }}_n\right] ^T\) with \({\tilde{\alpha }}_i={\hat{\alpha }}_i-\alpha _i\) and an optimal bias term b that satisfies the following equations [37]

In general, the parameters C and \(\varepsilon \) can be chosen as

and

where \(\text {iqr}( {\textbf{Y}})\) is the interquartile range of the response spectrum \({\textbf{Y}}=\left\{ y_1,\cdots ,y_n\right\} \) of the input training data set.
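
A widely used heuristic of this kind, for example the documented default of MATLAB's `fitrsvm`, is \(C=\text {iqr}({\textbf{Y}})/1.349\) and \(\varepsilon =\text {iqr}({\textbf{Y}})/13.49\). Whether these are exactly the expressions of Eqs. (12) and (13) is an assumption. The sketch below also assumes the "inclusive" quartile convention of Python's `statistics` module, which need not coincide with MATLAB's `iqr` for small samples.

```python
import statistics


def iqr(values):
    """Interquartile range Q3 - Q1 (inclusive quartile convention)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q3 - q1


def heuristic_svr_parameters(y):
    """Return (C, eps) from the spread of the training responses.

    Assumed heuristic: C = iqr(Y)/1.349, eps = iqr(Y)/13.49.
    """
    spread = iqr(y)
    return spread / 1.349, spread / 13.49


# Hypothetical training responses, for illustration only.
C, eps = heuristic_svr_parameters([0.0, 1.0, 2.0, 3.0, 4.0])
print(C, eps)
```

With this choice the tube width is always one tenth of the box constraint, so both parameters scale with the spread of the training responses.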

2.1 Error measures

In order to ensure the quality and the prediction accuracy of the surrogate model, two main error measures are considered in this study. First, the overall quality of the SVR approximation is determined by using a relative error measure

where \(n^*\) is the number of the test data points and \(\epsilon _{i}\) is the relative absolute error, given by

where \(y_i=y\left( {\textbf{x}}^*_i\right) \) is the exact response evaluated at the test data points \({\textbf{x}}^*\), \({\hat{y}}_i={\hat{y}}\left( {\textbf{x}}^*_i\right) \) is the SVR surrogate model evaluated at the same test points, and \({\tilde{y}}\) is the average output obtained from the exact response

Second, a local error estimator known as the Chebyshev norm estimator is used to determine the local prediction accuracy of SVR

where \(e_i\) is the absolute error defined as the absolute difference between each data point evaluated for the exact response and the SVR surrogate model
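
The two error measures can be sketched as follows. The sketch assumes one plausible reading of the definitions above: \(\epsilon _\textrm{rel}\) is the mean over the \(n^*\) test points of \(\epsilon _i=|y_i-{\hat{y}}_i|/|{\tilde{y}}|\), and \(\epsilon _{\max }\) is the largest absolute error \(e_i=|y_i-{\hat{y}}_i|\). The averaging over \(n^*\) in particular is an assumption.

```python
def relative_error(y_exact, y_svr):
    """Global measure: mean of |y_i - yhat_i| / |mean(y)| over the test points."""
    n_test = len(y_exact)
    y_mean = sum(y_exact) / n_test
    return sum(abs(a - b) / abs(y_mean) for a, b in zip(y_exact, y_svr)) / n_test


def max_abs_error(y_exact, y_svr):
    """Local (Chebyshev norm) measure: largest absolute deviation."""
    return max(abs(a - b) for a, b in zip(y_exact, y_svr))


# Hypothetical test data, for illustration only.
y_exact = [1.0, 2.0, 3.0]
y_svr = [1.5, 2.0, 2.0]
print(relative_error(y_exact, y_svr), max_abs_error(y_exact, y_svr))
```

The global measure averages out isolated deviations, whereas the maximum error is dominated by the single worst test point, for instance a point next to a discontinuity.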

3 Numerical studies

This numerical study aims to construct a global surrogate model using SVR that is capable of capturing highly non-smooth and nonlinear responses. In order to determine the appropriate kernel function K and the optimal values of the SVR parameters C and \(\varepsilon \), a one-dimensional phenomenological elasto-plastic model is considered first. The model has three input parameters, namely the total strain \(\varepsilon \), the hardening parameter k and the yield stress \(\sigma ^y\). Thereafter, the optimised SVR parameters are applied to a two-dimensional four-point bending beam where the response of interest exhibits highly non-smooth behaviour in the parametric space. Furthermore, the computational cost of the SVR is reduced by using an anisotropic training grid. In addition, the accuracy of the constructed SVR is improved by smoothing the response surface using linear regression.

3.1 One-dimensional elasto-plastic model

Consider a one-dimensional, purely phenomenological elasto-plastic model where the strain \(\varepsilon \) is a scalar quantity. The total strain \(\varepsilon \) can be additively decomposed into an elastic and a plastic strain as

\[ \varepsilon =\varepsilon ^e+\varepsilon ^p \tag{19} \]

The splitting is done by a return-mapping algorithm as described specifically for the one-dimensional case in [38]. In this example, the associated plastic strain \(\varepsilon ^p\) is considered as the quantity of interest, with the total strain \(\varepsilon \), the hardening parameter k and the yield stress \( \sigma ^y\) as input parameters denoted by \({\textbf{x}}=\left[ \varepsilon ,\;k,\;\sigma ^y\right] \). The variation ranges of the input parameters are given in Table 1. The Young’s modulus is fixed at \(E={210000}\,\hbox {MPa}\).
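
For a single monotonic load step from the virgin state, the return mapping reduces to a closed-form expression. The sketch below assumes linear isotropic hardening with k acting as the plastic hardening modulus; this interpretation of k and the input values are assumptions and are not taken from Table 1.

```python
import math

E_MODULUS = 210000.0  # Young's modulus in MPa, as fixed in the text


def plastic_strain(total_strain, hardening, yield_stress, e_modulus=E_MODULUS):
    """One-step 1D return mapping with linear isotropic hardening (assumed)."""
    trial_stress = e_modulus * total_strain        # elastic predictor
    f_trial = abs(trial_stress) - yield_stress     # trial yield function
    if f_trial <= 0.0:
        return 0.0                                 # elastic region
    delta_gamma = f_trial / (e_modulus + hardening)  # plastic corrector
    return math.copysign(delta_gamma, trial_stress)


# Hypothetical inputs: strain 0.0028, k = 10000 MPa, yield stress 500 MPa.
eps_p = plastic_strain(0.0028, 10000.0, 500.0)
stress = E_MODULUS * (0.0028 - eps_p)
print(eps_p, stress)
```

The returned stress satisfies the yield condition \(\sigma =\sigma ^y+k\,\varepsilon ^p\) in the plastic region, while the kink of the response at \(E\varepsilon =\sigma ^y\) is the non-smooth transition that the surrogate has to capture.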

First, Cartesian grids are used as training and test points, e.g. with eight points in each dimension

The training grid (20) and the separation of the parametric space into an elastic region (\(\varepsilon ^p=0\)) and a plastic region (\(\varepsilon ^p>0\)) are shown in Fig. 2.
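
A Cartesian training grid of this kind, together with the split into elastic and plastic points, can be sketched as follows. The parameter bounds are placeholders and are not the values of Table 1; the elastic criterion \(E|\varepsilon |\le \sigma ^y\) assumes loading from the virgin state.

```python
from itertools import product

E_MODULUS = 210000.0  # MPa, as fixed in the text


def linspace(lower, upper, count):
    """Equally spaced points including both end points (count >= 2)."""
    step = (upper - lower) / (count - 1)
    return [lower + i * step for i in range(count)]


def cartesian_grid(bounds, counts):
    """Full tensor grid: one tuple per combination of axis values."""
    axes = [linspace(lo, hi, m) for (lo, hi), m in zip(bounds, counts)]
    return list(product(*axes))


# Placeholder bounds for (total strain, hardening k, yield stress).
bounds = [(0.0, 0.005), (0.0, 20000.0), (200.0, 800.0)]
train = cartesian_grid(bounds, (8, 8, 8))

# Points whose trial stress stays below the yield stress remain elastic.
elastic = [x for x in train if E_MODULUS * abs(x[0]) <= x[2]]
plastic = [x for x in train if E_MODULUS * abs(x[0]) > x[2]]
print(len(train), len(elastic), len(plastic))
```

With eight points per dimension the grid contains \(8^3=512\) training points, so the cost of a full tensor grid grows quickly with the number of points per dimension.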

The reference response surface shown in Fig. 3 is obtained from the exact solution by fixing the total strain at \(\varepsilon ={0.005}\) and \(\varepsilon ={0.0028}\), respectively. It can be seen that the exact response is merely nonlinear in Fig. 3a and becomes non-smooth when the total strain is decreased, with only \(C^0\)-continuity at the transition to the elastic region where \(\varepsilon ^p=0\), as shown in Fig. 3b.

In order to adopt a kernel function that can capture the non-smooth transition of the response surface in the input parametric space, two kernel functions are applied in this example.

First, the Gaussian kernel function defined as

is applied to build the surrogate model using SVR. The training points are distributed uniformly in the parametric space with ten points in each dimension. The exact response surface and the SVR approximation at a fixed value of the total strain \(\varepsilon ={0.0028}\) are shown in Fig. 4b.

It can be seen that the Gaussian kernel provides a qualitatively sufficient approximation of the exact response surface in the range where \(\varepsilon ^p=0\), and it exhibits a small deviation in the nonlinear region where \(\varepsilon ^p>0\).

Now, the Matérn 5/2 kernel function defined as

is applied to the same problem and compared with the Gaussian kernel using the error criteria discussed in Sect. 2.1. For both kernels, the correlation length is set to \(\rho =1\), and the optimal values of the SVR parameters, namely the box constraint C and the tube width \(\varepsilon \), are experimentally determined as

The absolute error \(\epsilon _{\max }\) and the relative error \(\epsilon _\textrm{rel}\) using the Gaussian and Matérn kernel functions with respect to the number of training points are shown in Fig. 5.

It can be seen that the Matérn kernel function outperforms the Gaussian kernel for both error measures. The Gaussian kernel exhibits strong oscillations in the absolute error and an increase in the relative error when the number of training points is increased (\(n>500\)). In contrast, the accuracy of the Matérn kernel improves with the number of training points for both error measures. However, it shows slight oscillations for large numbers of training points (\(n>10^4\)) due to over-fitting.
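
The two kernels compared above and the evaluation of the dual-form surrogate of Eq. (4) can be sketched as follows. The sketch assumes the common parametrisations \(K=\exp (-r^2/(2\rho ^2))\) for the Gaussian kernel and \(K=(1+\sqrt{5}\,r/\rho +5r^2/(3\rho ^2))\exp (-\sqrt{5}\,r/\rho )\) for the Matérn 5/2 kernel with \(r=\Vert {\textbf{x}}_i-{\textbf{x}}_j\Vert \); the scaling of \(\rho \) used in the in-house code may differ, and the dual variables below are made-up values.

```python
import math


def distance(x, z):
    """Euclidean distance between two points given as sequences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, z)))


def gaussian_kernel(x, z, rho=1.0):
    r = distance(x, z)
    return math.exp(-r * r / (2.0 * rho * rho))


def matern52_kernel(x, z, rho=1.0):
    s = math.sqrt(5.0) * distance(x, z) / rho
    return (1.0 + s + s * s / 3.0) * math.exp(-s)


def svr_predict(x, train_points, alpha_tilde, b, kernel):
    """Dual-form surrogate: sum_i alpha_tilde_i * K(x_i, x) + b."""
    return sum(a * kernel(x_i, x) for a, x_i in zip(alpha_tilde, train_points)) + b


# Hypothetical dual variables; in practice they result from the optimisation.
points = [(0.0,), (1.0,)]
alphas = [1.0, -1.0]
print(svr_predict((0.0,), points, alphas, 0.5, matern52_kernel))
```

The Gaussian kernel is infinitely differentiable, whereas the Matérn 5/2 kernel is only twice differentiable, which is one common explanation for its better behaviour on responses with kinks.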

3.2 Four-point bending beam with elasto-plastic material behaviour

In this numerical study, the SVR is investigated for a nonlinear elasto-plastic analysis of a four-point bending test. The \({200}\,\hbox {mm} \times {20}\,\hbox {mm}\) beam is subjected to displacement-controlled loading at \(x= {60}\,\hbox {mm} \) and \(x= {140}\,\hbox {mm}\) and is supported at \(x= {10}\,\hbox {mm}\) and \(x= {190}\,\hbox {mm}\). A Poisson’s ratio of \(\upsilon =0.3\) and a von Mises yield criterion with ideal plasticity under a plane stress assumption are considered in this example. The height of the non-plastified cross-section \(d^{el}\) is considered as the quantity of interest, with the Young’s modulus E, the yield stress \(\sigma ^y\) and the applied displacement \({\bar{u}}\) as input parameters. The variation ranges of the input parameters are given in Table 2.

The contour plot of the equivalent plastic strain \(\varepsilon ^p_{eq}\) obtained from the FEM simulation at a fixed displacement \({\bar{u}}={0.2}\,\hbox {mm}\) is shown in Fig. 6.

The non-plastified cross-section height at the centre of the beam is measured at the centre of gravity (centroid) of the non-plastified elements as

where \(N_e\vert _{\varepsilon ^p_{eq}=0}\) is the number of elements with zero centroid equivalent plastic strain and \(l_e\) is the element length. In general, the equivalent plastic strain can be estimated as

where \(\varvec{\varepsilon }^p\) denotes the plastic strain tensor. For the sake of higher precision, the equivalent plastic strain is evaluated at the centroid of each element, where superconvergence is guaranteed, as illustrated in Fig. 7.
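
A minimal sketch of such an estimate is given below. It assumes the common von Mises definition \(\varepsilon ^p_{eq}=\sqrt{\tfrac{2}{3}\,\varvec{\varepsilon }^p:\varvec{\varepsilon }^p}\); the finite element solver may instead report an accumulated, rate-integrated quantity, so this form is an assumption.

```python
import math


def equivalent_plastic_strain(eps_p):
    """sqrt(2/3 * eps_p : eps_p) for a 3x3 plastic strain tensor (nested lists)."""
    contraction = sum(eps_p[i][j] * eps_p[i][j] for i in range(3) for j in range(3))
    return math.sqrt(2.0 / 3.0 * contraction)


# Hypothetical isochoric uniaxial plastic strain state with axial value 0.01.
e = 0.01
tensor = [
    [e, 0.0, 0.0],
    [0.0, -0.5 * e, 0.0],
    [0.0, 0.0, -0.5 * e],
]
print(equivalent_plastic_strain(tensor))
```

For this volume-preserving uniaxial state the equivalent plastic strain equals the axial plastic strain, which is the purpose of the factor 2/3.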

In this example, the beam height at the centre is discretised with \(N_{e}=100\) finite elements of length \(l_e={0.2}\,\hbox {mm}\). The exact response surface obtained from \(n=8000\) FEM simulations on a Cartesian grid distributed uniformly in the input parametric space is shown in Fig. 8. It can be observed that the response surface is highly non-smooth and discontinuous, in addition to showing linear and nearly flat behaviour for certain combinations of the input parameters. The discontinuity is mainly caused by the fact that the non-plastified height of the beam \(d^{el}\) is measured by counting the number of elements \(N_e\) with zero equivalent plastic strain \(\varepsilon ^{p}_{eq}\) along the beam cross section. Consequently, \(d^{el}\) can only change in steps of the element length \(l_e\) for different combinations of the input parameters. In order to approximate this response surface, the SVR model is built with the Matérn 5/2 kernel function and the optimal values of the box constraint C and the tube width \(\varepsilon \) (see Eqs. (23) and (24)).
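
The element-counting measure and its step-wise character can be sketched as follows, assuming \(d^{el}=N_e\vert _{\varepsilon ^p_{eq}=0}\,l_e\) as described above. The strain values are made up, and the tolerance argument is an illustrative addition.

```python
def elastic_height(peeq_centroids, element_length, tol=0.0):
    """Non-plastified height: number of elements with zero centroid
    equivalent plastic strain times the element length."""
    n_elastic = sum(1 for value in peeq_centroids if value <= tol)
    return n_elastic * element_length


# Hypothetical cross section: 100 elements of 0.2 mm, outer 30 on each side plastified.
peeq = [1.0e-3] * 30 + [0.0] * 40 + [1.0e-3] * 30
d_el = elastic_height(peeq, 0.2)

# Plastifying one more element changes the result by exactly one element length.
peeq_next = [1.0e-3] * 31 + [0.0] * 39 + [1.0e-3] * 30
d_el_next = elastic_height(peeq_next, 0.2)
print(d_el, d_el_next)
```

Any change of the input parameters that does not plastify a further element leaves \(d^{el}\) unchanged, which produces the plateaus and jumps of the response surface.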

The accuracy of the SVR approximation in terms of the error criteria discussed in Sect. 2.1 is given in Fig. 9. The SVR approximation shows a higher local error where the response behaves discontinuously, as shown in Fig. 9a. Approximately \(n=6860\) training points are required to obtain an absolute error of \(\epsilon _{\max }=0.44\). In contrast, the global relative error is \(\epsilon _\textrm{rel}=0.017\) for the same number of training points.

In fact, the response surface shown in Fig. 8 behaves approximately linearly with respect to the yield stress \(\sigma ^y\) and the Young’s modulus E, whereas it is nonlinear with respect to the displacement loading \({\bar{u}}\). Therefore, a natural choice to reduce the computational cost of the SVR model is to assign a higher number of training points only in the direction of the input parameter \({\bar{u}}\). The reduction in the training points in specific dimensions can be achieved by using anisotropic training grids. For the sake of comparison with the previously used isotropic training grids, the number of training points in the anisotropic grid is halved in the parameter directions \(\sigma ^y\) and E, while it remains unchanged in the direction of \({\bar{u}}\). A comparison between the isotropic and anisotropic training grids is shown in Fig. 10.

The accuracy of the SVR approximation using the anisotropic training grid in comparison with the isotropic one is given in Fig. 11. It can be seen that the anisotropic training grid provides approximately the same accuracy as the isotropic grid while reducing the computational cost by a factor of approximately four. For instance, it requires only \(n=1900\) training points to achieve an absolute error of \(\epsilon _{\max }\approx {0.4}\,\hbox {mm}\) and a relative error of \(\epsilon _\textrm{rel}\approx {0.017}\), whereas the isotropic grid requires \(n=6860\) training points to obtain the same accuracy.
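
The reported grid sizes are consistent with 19 points per direction in the isotropic grid (\(19^3=6859\)) and 10 points in the two halved directions of the anisotropic grid (\(10\cdot 10\cdot 19=1900\)). These point counts are inferred from the reported totals and are not stated explicitly in the text; rounding up when halving is likewise an assumption.

```python
import math


def halve(count):
    """Halve the number of points along one direction, rounding up (assumed)."""
    return math.ceil(count / 2)


# Points per direction in the order (E, sigma_y, u_bar); inferred values.
iso_counts = (19, 19, 19)
aniso_counts = (halve(19), halve(19), 19)  # refine only along u_bar

n_iso = math.prod(iso_counts)
n_aniso = math.prod(aniso_counts)
print(n_iso, n_aniso, round(n_iso / n_aniso, 2))  # 6859 1900 3.61
```

Under these assumptions the number of training points, and hence the number of FEM simulations, is reduced by a factor of about 3.6.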

The computational time required for the SVR surrogate model to obtain the target absolute error \(\epsilon _{\max }\approx {0.43}\,\hbox {mm}\) using the anisotropic training grid is given in Table 3.

Table 3 shows that after constructing the SVR surrogate model, the computational time is reduced to 0.18 s compared with 2 s computation time to run a single FEM simulation. This allows a reduction in the computational cost by a factor of 11.

3.2.1 Smoothing the response surface

In fact, the non-plastified height of the beam \(d^{el}\) discussed in Sect. 3.2 is measured by counting the number of finite elements \(N_e\) with zero centroid equivalent plastic strain \(\varepsilon _{eq}^p=0\) using Eq. (25). This mesh dependency causes the response surface to behave discontinuously in the parametric space, since the non-plastified height \(d^{el}\) can only change in steps of the element length \(l_e\). This discontinuous behaviour can be weakened by estimating the non-plastified height of the beam from the number of integration points with zero equivalent plastic strain. However, this only weakens the non-smoothness without removing the mesh dependency of the response surface \(d^{el}\). Alternatively, this section provides a measure of the equivalent plastic strain \(\varepsilon _{eq}^{p}\) at the centre of the beam that depends on the state of all integration points across the beam cross section, including the ones with \(\varepsilon _{eq}^p>0\), by using two linear regression functions, defined as

and

where the signs \((+,-)\) indicate the positive and negative global y-coordinates of the integration points, respectively, and \(\alpha \) and \(\beta \) are the linear combination coefficients to be estimated. Once the coefficients have been estimated using the least squares method, the non-plastified height \(d^{el}\) can be calculated as

An example of the regression functions along the beam height is given in Fig. 12.
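
One possible realisation of this smoothing is sketched below. It assumes that a straight line \(\alpha +\beta y\) is fitted by least squares to the plastified integration points on each side of the centre line, and that \(d^{el}\) is the distance between the two zero crossings. Both the selection of points entering the fit and the use of the zero crossings are assumptions, since Eqs. (27) to (29) are not reproduced here.

```python
def fit_line(ys, values):
    """Least squares fit of values ~ alpha + beta * y; returns (alpha, beta)."""
    n = len(ys)
    mean_y = sum(ys) / n
    mean_v = sum(values) / n
    sxx = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((y - mean_y) * (v - mean_v) for y, v in zip(ys, values))
    beta = sxy / sxx
    return mean_v - beta * mean_y, beta


def smoothed_elastic_height(points, height):
    """points: (y, peeq) pairs across the section, y measured from the centre line."""
    upper = [(y, e) for y, e in points if y > 0.0 and e > 0.0]
    lower = [(y, e) for y, e in points if y < 0.0 and e > 0.0]
    if len(upper) < 2 or len(lower) < 2:
        return height  # too few plastified points: treated as fully elastic
    a_up, b_up = fit_line(*zip(*upper))
    a_lo, b_lo = fit_line(*zip(*lower))
    y_up = -a_up / b_up   # zero crossing on the positive side
    y_lo = -a_lo / b_lo   # zero crossing on the negative side
    return min(height, max(0.0, y_up - y_lo))


# Hypothetical profile: plastic strain grows linearly beyond |y| = 4 mm.
ys = [i + 0.5 for i in range(-10, 10)]
pts = [(y, max(0.0, 0.001 * (abs(y) - 4.0))) for y in ys]
print(smoothed_elastic_height(pts, 20.0))
```

Because the zero crossings vary continuously with the fitted coefficients, the resulting \(d^{el}\) is no longer restricted to multiples of the element length.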

A comparison of the response surface obtained from \(n=8000\) combinations of the input parameters using different measures of the equivalent plastic strain \(\varepsilon ^{p}_{eq}\), namely at the centroid of the element, integration points and by using linear regression, is plotted in Fig. 13.

Figure 13c shows that using the linear regression to measure the equivalent plastic strain provides a smooth transition of the quantity of interest \(d^{el}\) in the parametric space unlike the centroid and integration points measures presented in Figs. 13a and b, respectively. The SVR approximation of the non-plastified height \(d^{el}\) is given in Fig. 14.

First, it can be observed from the global relative error \(\epsilon _\textrm{rel}\), plotted in Fig. 14b, that the smoothed response using linear regression provides a significant improvement in the accuracy of the SVR approximation compared with the centroid and the integration point measures. In contrast, it exhibits approximately the same local accuracy as the integration point measure, as shown in Fig. 14a. This is mainly due to the fact that the quantity of interest \(d^{el}\) remains constant at \(d^{el}={20}\,\hbox {mm}\) when the beam is in a fully elastic state for different combinations of the input parameters. Thereafter, it exhibits a \(C^{0}\) transition in the parametric space where the cross section begins to be partially plastified, i.e. where \(d^{el}<{20}\,\hbox {mm}\), as shown in Fig. 13.

4 Conclusion

This study has presented global surrogate modelling for mechanical systems with nonlinear elasto-plastic material behaviour based on support vector regression (SVR). First, SVR is applied to a purely phenomenological one-dimensional material model. In this numerical study, it is shown that the Matérn 5/2 kernel function with appropriately optimised parameters represents the output (the plastic strain \(\varepsilon ^{p}\)) more accurately than the Gaussian kernel. The optimal values of the SVR parameters, namely the box constraint C and the tube width \(\varepsilon \), are determined in this first numerical study. The results obtained from the one-dimensional elasto-plastic example are then applied to continuum elasto-plasticity within the framework of the finite element method. A four-point bending test with elasto-plastic material behaviour is chosen as a technical application. The quantity of interest is the non-plastified cross-sectional height \(d^{el}\), with the displacement magnitude \({\bar{u}}\), the Young’s modulus E and the yield stress \(\sigma ^y\) as input parameters. It is shown that the post-processing and the element size of the finite element mesh have a significant impact on the accuracy of the SVR approximation, since they cause the quantity of interest to behave discontinuously in the parametric space. To overcome this problem, the quantity of interest \(d^{el}\) is smoothed over the input space by using linear regression. The results show that SVR can provide a sufficiently accurate approximation of highly nonlinear and non-smooth response surfaces while the computational cost is reduced by a factor of 11. Finally, it is worth mentioning that only three input parameters are considered in this paper. The accuracy and the performance of the SVR surrogate model might be affected for high-dimensional non-smooth engineering problems.

References

Rathinam, M., Petzold, L.R.: A new look at proper orthogonal decomposition. SIAM J. Numer. Anal. 41(5), 1893–1925 (2003). https://doi.org/10.1137/S0036142901389049

Sengupta, T.K., Dey, S.: Proper orthogonal decomposition of direct numerical simulation data of by-pass transition. Comput. Struct. 82(31), 2693–2703 (2004). https://doi.org/10.1016/j.compstruc.2004.07.008

Willcox, K., Peraire, J.: Balanced model reduction via the proper orthogonal decomposition. AIAA J. 40(11), 2323–2330 (2002). https://doi.org/10.2514/2.1570

Swischuk, R., Mainini, L., Peherstorfer, B., Willcox, K.: Projection-based model reduction: formulations for physics-based machine learning. Comput. Fluids 179, 704–717 (2019). https://doi.org/10.1016/j.compfluid.2018.07.021

Ghavamian, F., Tiso, P., Simone, A.: Pod-deim model order reduction for strain-softening viscoplasticity. Comput. Methods Appl. Mech. Eng. 317, 458–479 (2017). https://doi.org/10.1016/j.cma.2016.11.025

F, J., Larsgunnar, N.: On polynomial response surfaces and kriging for use in structural optimization of crashworthiness. Struct. Multidiscip. Optim. 29, 232–243 (2005). https://doi.org/10.1007/s00158-004-0487-8

Kleijnen, J.P.C.: Design and Analysis of Simulation Experiments, 1st edn. Springer, New York (2007)

Ghanem, R., Spanos, P.D.: Stochastic Finite Elements: A Spectral Approach. Springer, New York (1991)

Blatman, G., Sudret, B.: Adaptive sparse polynomial chaos expansion based on least angle regression. J. Comput. Phys. 230(6), 2345–2367 (2011). https://doi.org/10.1016/j.jcp.2010.12.021

Eckert, C., Beer, M., Spanos, P.D.: A polynomial chaos method for arbitrary random inputs using B-splines. Probab. Eng. Mech. 60, 103051 (2020). https://doi.org/10.1016/j.probengmech.2020.103051

Jones, B., Johnson, R.T.: Design and analysis for the gaussian process model. Qual. Reliab. Eng. Int. 25(5), 515–524 (2009)

Su, G., Peng, L., Hu, L.: A gaussian process-based dynamic surrogate model for complex engineering structural reliability analysis. Struct. Saf. 68, 97–109 (2017)

Fuhg, J.N., Marino, M., Bouklas, N.: Local approximate gaussian process regression for data-driven constitutive models: development and comparison with neural networks. Comput. Methods Appl. Mech. Eng. 388, 114217 (2022). https://doi.org/10.1016/j.cma.2021.114217

Drucker, H., Burges, C.J.C., Kaufman, L., Smola, A., Vapnik, V.: Support vector regression machines. NIPS’96, pp. 155–161. MIT Press, Cambridge (1996)

Vapnik, V., Chapelle, O.: Bounds on error expectation for support vector machines. Neural Comput. 12(9), 2013–2036 (2000). https://doi.org/10.1162/089976600300015042

Cristianini, N., Shawe-Taylor, J., et al.: An Introduction to Support Vector Machines and Other Kernel-based Learning Methods. Cambridge University Press, Cambridge (2000)

Zhu, P., Pan, F., Chen, W., Zhang, S.: Use of support vector regression in structural optimization: application to vehicle crashworthiness design. Math. Comput. Simul. 86, 21–31 (2012). https://doi.org/10.1016/j.matcom.2011.11.008

Pan, F., Zhu, P., Zhang, Y.: Metamodel-based lightweight design of b-pillar with twb structure via support vector regression. Comput. Struct. 88(1), 36–44 (2010). https://doi.org/10.1016/j.compstruc.2009.07.008

Wang, H., Li, E., Li, G.Y.: The least square support vector regression coupled with parallel sampling scheme metamodeling technique and application in sheet forming optimization. Mater. Design 30(5), 1468–1479 (2009). https://doi.org/10.1016/j.matdes.2008.08.014

Moustapha, M., Bourinet, J.-M., Guillaume, B., Sudret, B.: Comparative study of kriging and support vector regression for structural engineering applications. ASCE-ASME J. Risk Uncert. Eng. Syst. A: Civil Eng. 4(2), 04018005 (2018). https://doi.org/10.1061/AJRUA6.0000950

Cheng, K., Lu, Z.: Adaptive Bayesian support vector regression model for structural reliability analysis. Reliab. Eng. Syst. Saf. 206, 107286 (2021). https://doi.org/10.1016/j.ress.2020.107286

Najafzadeh, M., Niazmardi, S.: A novel multiple-kernel support vector regression algorithm for estimation of water quality parameters. Natural Resour. Res. (2021). https://doi.org/10.1007/s11053-021-09895-5

Clarke, S.M., Griebsch, J.H., Simpson, T.W.: J. Mech. Design 127(6), 1077–1087 (2004). https://doi.org/10.1115/1.1897403

Moustapha, M., Sudret, B.: A two-stage surrogate modelling approach for the approximation of models with non-smooth outputs (2019). https://doi.org/10.7712/120219.6346.18665

Maître, O.P.L., Knio, O.M., Najm, H.N., Ghanem, R.G.: Uncertainty propagation using Wiener-Haar expansions. J. Comput. Phys. 197(1), 28–57 (2004). https://doi.org/10.1016/j.jcp.2003.11.033

Dannert, M.M., Bensel, F., Fau, A., Fleury, R.M.N., Nackenhorst, U.: Investigations on the restrictions of stochastic collocation methods for high dimensional and nonlinear engineering applications. Probab. Eng. Mech. (2022). https://doi.org/10.1016/j.probengmech.2022.103299

Martinez, W.L., Martinez, A.R., Solka, J.L.: Exploratory Data Analysis with MATLAB, 3rd edn. Chapman and Hall/CRC, New York (2017)

Basudhar, A., Missoum, S., Harrison Sanchez, A.: Limit state function identification using support vector machines for discontinuous responses and disjoint failure domains. Probab. Eng. Mech. 23(1), 1–11 (2008). https://doi.org/10.1016/j.probengmech.2007.08.004

Basmaji, A.A., Fau, A., Urrea-Quintero, J.H., Dannert, M.M., Voelsen, E., Nackenhorst, U.: Anisotropic multi-element polynomial chaos expansion for high-dimensional non-linear structural problems. Probab. Eng. Mech. 103366 (2022). https://doi.org/10.1016/j.probengmech.2022.103366

Wan, X., Karniadakis, G.E.: Multi-element generalized polynomial chaos for arbitrary probability measures. SIAM J. Sci. Comput. 28(3), 901–928 (2006). https://doi.org/10.1137/050627630

Foo, J., Karniadakis, G.E.: Multi-element probabilistic collocation method in high dimensions. J. Comput. Phys. 229(5), 1536–1557 (2010). https://doi.org/10.1016/j.jcp.2009.10.043

Funk, S.: Support Vektor Regression für Anwendungen im Bereich der Elasto-Plastizität. PhD dissertation, Institute of Mechanics and Computational Mechanics, Leibniz University Hannover (2022)

Smola, A.J., Schölkopf, B.: A tutorial on support vector regression. Stat. Comput. 14(3), 199–222 (2004)

Vapnik, V.: The Nature of Statistical Learning Theory. Springer, New York (1999)

Jiang, P., Zhou, Q., Shao, X.: Surrogate Model-Based Engineering Design and Optimization. Springer, Singapore (2020). https://doi.org/10.1007/978-981-15-0731-1

Cristianini, N., Shawe-Taylor, J.: Support vector and kernel methods. In: Intelligent Data Analysis, pp. 169–197. Springer, Heidelberg (2007)

Fasshauer, G.E., McCourt, M.J.: Kernel-based Approximation Methods Using Matlab, vol. 19. World Scientific Publishing Company, Singapore (2015)

Yaw, L.L.: Nonlinear static—1d plasticity—various forms of isotropic hardening. Walla Walla University 25 (2012)

Acknowledgements

The support of the German Research Foundation (DFG) during the priority program IRTG 2657 (grant ID: 433082294) is gratefully acknowledged. In addition, this work was supported by the compute cluster, which is funded by the Leibniz University of Hannover, the Lower Saxony Ministry of Science and Culture (MWK) and the German Research Foundation (DFG). On behalf of all authors, the corresponding author states that there is no conflict of interest.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Funk, S., Airoud Basmaji, A. & Nackenhorst, U. Globally supported surrogate model based on support vector regression for nonlinear structural engineering applications. Arch Appl Mech 93, 825–839 (2023). https://doi.org/10.1007/s00419-022-02301-3

DOI: https://doi.org/10.1007/s00419-022-02301-3
