Abstract

We investigate the scaling limit of the range (the set of visited vertices) for a general class of critical lattice models, starting from a single initial particle at the origin. Conditions are given on the random sets and an associated “ancestral relation” under which, conditional on long-term survival, the rescaled ranges converge weakly to the range of super-Brownian motion as random sets. These hypotheses also give precise asymptotics for the probability of exiting a large ball, that is, for the extrinsic one-arm probability. We show that these conditions are satisfied by the voter model in dimensions \(d\ge 2\), sufficiently spread out critical oriented percolation and critical contact processes in dimensions \(d>4\), and sufficiently spread out critical lattice trees in dimensions \(d>8\). The latter result proves Conjecture 1.6 of van der Hofstad et al. (Ann Probab 45:278–376, 2017) and also has important consequences for the behaviour of random walks on lattice trees in high dimensions.

1 Introduction

Super-Brownian motion is a measure-valued process arising as a universal scaling limit for a variety of critical lattice models above the critical dimension in statistical physics and mathematical biology. Examples include oriented percolation [44], lattice trees [22], models for competing species such as voter models [3, 8], models for spread of disease such as contact processes [42], and percolation [18], where the full result in the latter context is the subject of ongoing research (e.g., [20]). The nature of the convergence in all these contexts is that of convergence of the associated empirical processes, conditioned on long-term survival and suitably rescaled, to super-Brownian motion conditioned on survival. Moreover, often only convergence of the finite-dimensional distributions is known. Extending this to convergence on path space for lattice trees was recently carried out in [39] with great effort.

Convergence of the actual random sets of occupied sites to the range of super-Brownian motion is one of the most natural questions, but has not been achieved in any of these settings (convergence at a fixed time was done for the voter model in [3], and for the simple setting of branching random walk it is implicit in [10]). We provide a unified solution to this problem in the form of quite general conditions under which the rescaled ranges of a single occupancy particle model on the integer lattice (in discrete or continuous time) converge to the range of super-Brownian motion, conditional on survival. The conditions include convergence of the associated integrated measure-valued processes to integrated super-Brownian motion, but a feature of our results is that convergence of finite-dimensional distributions suffices (see Lemma 2.2 below). We verify the conditions for the voter model in two or more dimensions, for critical oriented percolation and the critical contact process in more than 4 dimensions, and for critical lattice trees in more than eight dimensions.

As a consequence of the above convergence, we obtain the precise asymptotics for the extrinsic one-arm probability (i.e. the probability that the random set is not contained in the ball of radius r, centred at the origin). The simpler problem of establishing the asymptotics of the intrinsic one-arm probability (the probability that there is an occupied site at time t) has itself only recently been resolved at this level of generality [38]. For sufficiently spread-out (unoriented) percolation in dimensions \(d>6\), Kozma and Nachmias [28] have identified the correct power law decay, but have not proved a limit theorem.

Our general lattice models include a random “ancestral relation” which in the case of random graphs such as lattice trees or oriented percolation is a fundamental part of the model, but for particle models such as the voter model or contact process, arises naturally from the graphical construction of such models. Our first main result will in fact be a uniform modulus of continuity for all “ancestral paths”. This result plays a crucial role in our proof of the above convergence, but it is also important in its own right.

We begin by briefly defining the four models which motivated our general results. These models depend on a random walk step kernel (probability mass function), \(D:{\mathbb {Z}}^d\rightarrow {\mathbb {R}}_+\), with finite range and covariance matrix \( \sigma ^2_{\scriptscriptstyle D}I_{d\times d}\) for some \( \sigma ^2_{\scriptscriptstyle D}>0\), and such that \(D(-x)=D(x)\) and \(D(o)=0\), where o will denote the origin. By finite range we mean there is an \(L\ge 1\) such that \(D(x)=0\) if \(\Vert x\Vert >L\) where \(\Vert x\Vert \) is the \(L_\infty \) norm of x. Let |A| denote the cardinality of a finite set A.

The voter model

The voter model on \({\mathbb {Z}}^d\) (introduced in [7, 21]) is a spin-flip system, and so in particular, a continuous time Feller process \((\xi _t)_{t\ge 0}\) with state space \(\{0,1\}^{{\mathbb {Z}}^d}\) and flip rates as follows. With rate one each vertex, say at x, imposes its type (0 or 1) on a randomly chosen vertex y with probability \(D(y-x)\). Let \(\xi _t(x)\in \{0,1\}\) denote the type of \(x\in {\mathbb {Z}}^d\) at time \(t\ge 0\), and let \({\mathcal {T}}_t:=\{x \in {\mathbb {Z}}^d:\xi _t(x)=1\}\). In the notation of [30] the flip rate at site x in state \(\xi \) is

If \({\mathbb {E}}[|{\mathcal {T}}_0|]<\infty \), then \(|{\mathcal {T}}_t|\) is a non-negative martingale and the extinction time \(S^{\scriptscriptstyle (1)}=\inf \{t\ge 0:|{\mathcal {T}}_t|=0\}\) is a.s. finite (see Lemma 7.1(b) below). We will usually assume that the process starts with a single site of type 1 at time 0, located at the origin o, i.e.

Oriented percolation

For an introduction to oriented percolation (OP) see e.g. [44]. For simplicity we take D to be uniform on \(([-L,L]^d{\setminus } \{o\})\cap {\mathbb {Z}}^d\), where \(d>4\), although more general kernels are possible (see Remark 2.7). The bond \(((n,x),(n+1,y))\) is occupied with probability \(pD(y-x)\), where \(p\in [0,\Vert D\Vert _{\infty }^{-1}]\), independent of all other bonds. Let \({\mathbb {P}}_p\) denote the law of the model. We say that there is an occupied path from (n, x) to \((n',x')\), and write \((n,x) \rightarrow (n',x')\), if there is a sequence \(x=x_0,x_1,\dots , x_{n'-n}=x'\) in \({\mathbb {Z}}^d\) such that \(((n+i-1,x_{i-1}),(n+i,x_{i}))\) is occupied for each \(i=1,\dots , n'-n\ge 0\). We include the convention that \((n,x) \rightarrow (n,x')\) if and only if \(x=x'\). Let \({\mathcal {T}}_n=\{x\in {\mathbb {Z}}^d:(0,o) \rightarrow (n,x)\}\), and observe that \({\mathbb {P}}_p({\mathcal {T}}_0=\{o\})=1\). Define \(p_c=\sup \{p:\lim _{n \rightarrow \infty }{\mathbb {P}}_p(\mathcal {T}_n\ne \varnothing )=0\}\). By (1.12) of [44], \(p_c=1+O(L^{-d})\), and so \(p_c\in (0,\Vert D\Vert _{\infty }^{-1})\) for L large. Let \({\mathbb {P}}={\mathbb {P}}_{p_c}\). It is well known (e.g. [38]) that \(\lim _{n \rightarrow \infty }{\mathbb {P}}(\mathcal {T}_n\ne \varnothing )=0\).
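
The definition of \({\mathcal {T}}_n\) above can be illustrated with a short simulation. The following Python sketch is not part of the paper's construction: the function names `box_offsets` and `oriented_percolation` are hypothetical, and it is meant for small values of L and d only (the results here concern \(d>4\), where the box is far too large for this naive loop). It samples each bond \(((n,x),(n+1,y))\) with probability \(pD(y-x)\) the first and only time it is examined, which is consistent with the independence of bonds.

```python
import itertools
import random


def box_offsets(L, d):
    """All nonzero offsets in [-L, L]^d, i.e. the support of the uniform kernel D."""
    return [v for v in itertools.product(range(-L, L + 1), repeat=d) if any(v)]


def oriented_percolation(p, L, d, steps, seed=None):
    """Return [T_0, ..., T_steps] for spread-out oriented percolation from (0, o)."""
    rng = random.Random(seed)
    offsets = box_offsets(L, d)
    bond_prob = p / len(offsets)  # p * D(y - x), with D uniform on the box minus o
    if not 0.0 <= bond_prob <= 1.0:
        raise ValueError("need 0 <= p <= 1 / ||D||_inf")
    generations = [{(0,) * d}]
    for _ in range(steps):
        nxt = set()
        for x in generations[-1]:
            for v in offsets:
                # The bond ((n, x), (n+1, x+v)) is examined exactly once,
                # so sampling it on the fly keeps the bonds independent.
                if rng.random() < bond_prob:
                    nxt.add(tuple(a + b for a, b in zip(x, v)))
        generations.append(nxt)
    return generations
```

With `p = 0` every generation after the first is empty, and with `p` equal to the number of offsets (that is, \(p=\Vert D\Vert _\infty ^{-1}\)) every bond is occupied, so \({\mathcal {T}}_1\) is the whole punctured box.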

Lattice trees

A lattice tree (LT) T on \({\mathbb {Z}}^d\), is a finite connected simple graph in \({\mathbb {Z}}^d\) with no cycles. It consists of a set of lattice bonds, E(T) (unordered pairs of points in \({\mathbb {Z}}^d\)), together with the corresponding set of end-vertices, V(T), in \({\mathbb {Z}}^d\). By connected we mean that for any distinct \(v_1,v_2\in V(T)\) there is an \(m \in {\mathbb {N}}\) and a function \(w:\{0,\dots ,m\}\rightarrow V(T)\) so that \(w(0)=v_1\), \(w(m)=v_2\), and for all \(1\le k\le m\), \(\{w(k-1),w(k)\}\in E(T)\). We call w a path in T of length m from \(v_1\) to \(v_2\). Given any two vertices \(v_1,v_2\) in the tree, the lack of cycles means there is a unique path (length 0 if \(v_1=v_2\)) of bonds connecting \(v_1\) and \(v_2\). The number of such bonds, \(d_T(v_1,v_2)\), is the tree distance between \(v_1\) and \(v_2\). It is a metric on the set of vertices, called the tree metric. Let \({\mathbb {T}}_L(x)\) denote the countable space of lattice trees on \({\mathbb {Z}}^d\) whose vertex set contains \(x\in {\mathbb {Z}}^d\) and for which every bond has \(L_\infty \)-norm at most \(L\ge 1\). The parameter L will be taken sufficiently large for our main results. We now describe a way of choosing a “random” lattice tree \(\mathcal {T}\) containing the origin, i.e. \(\mathcal {T}\in {\mathbb {T}}_L:={\mathbb {T}}_L(o)\).
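
To make the tree metric concrete, the following Python sketch groups the vertices of a given lattice tree by tree distance from a chosen root, using breadth-first search. It is an illustration under stated assumptions: the name `tree_generations` and the representation of bonds as pairs of coordinate tuples are hypothetical choices, and the \(L_\infty \)-norm constraint on bonds is not checked.

```python
from collections import deque


def tree_generations(edges, root):
    """Group the vertices of a lattice tree by tree distance from `root`.

    `edges` is an iterable of unordered bonds (pairs of lattice points given
    as tuples). Returns a dict m -> set of vertices at tree distance m.
    Raises ValueError if the bonds do not form a tree containing `root`.
    """
    bonds = {frozenset(e) for e in edges}
    adjacency = {root: set()}
    for bond in bonds:
        if len(bond) != 2:
            raise ValueError("a bond must join two distinct vertices")
        u, v = bond
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    # Breadth-first search: in a tree the BFS depth equals the tree distance.
    distance = {root: 0}
    queue = deque([root])
    while queue:
        u = queue.popleft()
        for v in adjacency[u]:
            if v not in distance:
                distance[v] = distance[u] + 1
                queue.append(v)
    # A finite graph is a tree iff it is connected and has |V| - 1 bonds.
    if len(distance) != len(adjacency) or len(bonds) != len(adjacency) - 1:
        raise ValueError("bonds do not form a tree containing the root")
    generations = {}
    for v, m in distance.items():
        generations.setdefault(m, set()).add(v)
    return generations
```

For the tree with bonds \(\{o,(1,0)\}\), \(\{(1,0),(1,1)\}\) and \(\{o,(0,-1)\}\), the vertices at tree distance 1 from o are \((1,0)\) and \((0,-1)\), and \((1,1)\) is at tree distance 2.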

Let \(d>8\) and let \(D(\cdot )\) be the probability mass function of the uniform distribution on a finite box \(([-L,L]^d{\setminus } \{o\})\cap {\mathbb {Z}}^d\) (see Remark 2.7). For \(e=(y,x)\), let \(D(e):=D(x-y)\). For a lattice tree \(T\in {\mathbb {T}}_L(x)\) define

$$\begin{aligned} W_{z,D}(T):=z^{|T|}\prod _{e\in E(T)}D(e), \end{aligned}\tag{1.1}$$

where |T| is the number of edges in T. Since D is uniform (1.1) could also be written as \((c_{\scriptscriptstyle L}z)^{|T|}\). For any \(z>0\) such that \(\rho _z:=\sum _{T\in {\mathbb {T}}_L(o)}W_{z,D}(T)<\infty \) we can define a probability on \({\mathbb {T}}_L(o)\) by \({\mathbb {P}}_z({\mathcal {T}}=T)=\rho _z^{-1}W_{z,D}(T)\). Sub-additivity arguments show that \(z_{\scriptscriptstyle D}=\sup \{z>0:\rho _z<\infty \}\in (0,\infty )\). It is known (e.g. Theorem 1.2 of [17]) that \(\rho _{z_{\scriptscriptstyle D}}<\infty \) and that \(z_{\scriptscriptstyle D}=\sup \{z:{\mathbb {E}}_{z}[|{\mathcal {T}}|]<\infty \}\), but \({\mathbb {E}}_{z_{\scriptscriptstyle D}}[|{\mathcal {T}}|]=\infty \). Hereafter we write \(W(\cdot )\) for the critical weighting \(W_{z_{\scriptscriptstyle D},D}(\cdot )\), write \(\rho :=\rho _{z_{\scriptscriptstyle D}}\) and \({\mathbb {P}}={\mathbb {P}}_{z_{\scriptscriptstyle D}}\), and we select a random tree \({\mathcal {T}}\) according to this critical weighting.

For \(T\in {\mathbb {T}}_L(o)\) and \(m \in {\mathbb {Z}}_+\), let \(T_{m}\) denote the set of vertices in T of tree distance m from o, so \({\mathcal {T}}_m\) is the corresponding set of vertices for our random tree \({\mathcal {T}}\) and \({\mathbb {P}}({\mathcal {T}}_0=\{o\})=1\). Note that \(\lim _{n \rightarrow \infty }{\mathbb {P}}(\mathcal {T}_n\ne \varnothing )=0\) (see e.g. [38]).

Contact process

The contact process (CP) on \({\mathbb {Z}}^d\) is a spin-flip system \((\xi _t)_{t\ge 0}\) (hence a cadlag Feller process) with state space \(\{0,1\}^{{\mathbb {Z}}^d}\) and rates (\(\lambda >0\))

It describes the spread of an infection in that \(\xi _t(x)=1\) if and only if site x is infected at time t, and the above rates imply that an infected site x recovers at rate 1, and an uninfected site x with rate \(\lambda \) chooses a “neighbour” y with probability \(D(y-x)\) and becomes infected if y is infected. For simplicity (see Remark 2.7) we will take D to be the probability mass function of the uniform distribution on \(([-L,L]^d{\setminus }\{o\})\cap {\mathbb {Z}}^d\) where \(d>4\). We start from a single infected particle at the origin at time 0. Let \({\mathbb {P}}_{\lambda }\) denote a probability measure under which \((\xi _t)_{t\ge 0}\) has the law above with rates \(\lambda \). Let \(\mathcal {T}_t=\{x:\xi _t(x)=1\}\), so \({\mathbb {P}}_\lambda (\mathcal {T}_0=\{o\})=1\). Let \(\lambda _c=\sup \{\lambda :\lim _{t \rightarrow \infty }{\mathbb {P}}_\lambda (\mathcal {T}_t\ne \varnothing )=0\}\), and \({\mathbb {P}}={\mathbb {P}}_{\lambda _c}\). It is known (e.g. [38, 41]) that \(\lambda _c\in (0,\infty )\), and that \(\lim _{t \rightarrow \infty }{\mathbb {P}}(\mathcal {T}_t\ne \varnothing )=0\).
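
The verbal description of the rates can be turned into a simple event-driven simulation. The sketch below is illustrative only; the name `contact_process` and its return format are hypothetical. It uses the symmetry \(D(-x)=D(x)\) to restate the infection rule: an uninfected site x becomes infected at total rate \(\lambda \sum _y D(y-x)\mathbb {1}(y\text { infected})\), which is the same as letting each infected site y attempt, at rate \(\lambda \), to infect a target chosen according to \(D(\cdot -y)\).

```python
import itertools
import random


def contact_process(lam, L, d, t_max, seed=None):
    """Event-driven simulation of the spread-out contact process started from {o}.

    Returns a list of (time, infected_set) pairs, one per event up to t_max.
    Events include infection attempts that hit an already infected site.
    """
    rng = random.Random(seed)
    offsets = [v for v in itertools.product(range(-L, L + 1), repeat=d) if any(v)]
    infected = {(0,) * d}
    t = 0.0
    history = [(t, frozenset(infected))]
    while infected:
        # Each infected site recovers at rate 1 and attempts an infection at rate lam.
        total_rate = (1.0 + lam) * len(infected)
        t += rng.expovariate(total_rate)
        if t > t_max:
            break
        site = rng.choice(sorted(infected))
        if rng.random() < 1.0 / (1.0 + lam):
            infected.remove(site)  # recovery
        else:
            v = rng.choice(offsets)  # target offset drawn from the uniform kernel D
            infected.add(tuple(a + b for a, b in zip(site, v)))
        history.append((t, frozenset(infected)))
    return history
```

With `lam = 0` the only possible event is the recovery of the initial particle, so the history has exactly two entries and ends in the empty set.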

General models and ancestral relations

Our goal is to establish general conditions for convergence of the ranges of a wide class of rescaled lattice models (including the voter model, oriented percolation, lattice trees, the contact process, and perhaps also percolation). We introduce our general framework in this section. The time index I will either be \({\mathbb {Z}}_+\) (discrete time) or \([0,\infty )\) (continuous time). We use the notation \(I_t=\{s \in I:s\le t\}\). As we will be dealing with random compact sets, we let \(\mathcal {K}\) denote the set of compact subsets of \({\mathbb {R}}^d\). We equip it with the Hausdorff metric \(d_0\) (and note that \((\mathcal {K},d_0)\) is Polish) defined by \(d_0(\varnothing ,K)=d_0(K,\varnothing )=1\) for \(K\ne \varnothing \), while for \(K,K'\ne \varnothing \)

As our models of interest will be single occupancy models, we assume throughout that

Notation. For a metric space M, \(\mathcal {D}([0,\infty ),M)\) will denote the space of cadlag M-valued paths with the Skorokhod topology, and \(C_b(M)\) is the space of bounded \({\mathbb {R}}\)-valued continuous functions on M. \(C^2_b({\mathbb {R}}^d)\) is the set of bounded continuous functions whose first and second order partials are also in \(C_b({\mathbb {R}}^d)\). Integration of f with respect to a measure \(\mu \) is often denoted by \(\mu (f)\).

Cadlag paths are bounded on bounded intervals and so this implies

We will write

\((\mathcal {F}_t)_{t\in I}\) will denote a filtration with respect to which \(({\mathcal {T}}_t)_{t \in I}\) is adapted. In practice it may be larger than the filtration generated by \(\varvec{{\mathcal {T}}}\).

A random ancestral relation, \((s,y)\overset{\varvec{a}}{\rightarrow }(t,x)\), on \(I\times {\mathbb {Z}}^d\) will be fundamental to our analysis. If it holds we say that (s, y) is an ancestor of (t, x), and it will imply \(s\le t\). We write

and define (for \(s,t\in I\), \(x,y\in {\mathbb {Z}}^d\)),

We will assume \(\overset{\varvec{a}}{\rightarrow }\) satisfies the following conditions where (AR)(i)–(iii) will hold off a single null set which we usually ignore:

We call \(\overset{\varvec{a}}{\rightarrow }\) an ancestral relation iff (AR) holds. In this case we call \((\varvec{{\mathcal {T}}},\overset{\varvec{a}}{\rightarrow })\) an ancestral system.

Remark 1.1

(1) It is immediate from (1.8) and (1.10) (the latter with \((s_1,s_2,s_3)=(0,s,t)\)) that

$$\begin{aligned} {\mathcal {T}}_s=\varnothing \Rightarrow {\mathcal {T}}_t=\varnothing \ \forall t\ge s. \end{aligned}\tag{1.13}$$

(2) In practice it is often easiest to verify (AR)(iii) by showing \(s\mapsto e_{s,t}(y,x)\) is cadlag for each t, x, y, and that

$$\begin{aligned}&\text {For each }t\ge 0,x,y\in {\mathbb {Z}}^d\text { there is a }\delta >0\text { s.t. } \\&{{\hat{e}}}_u(y,x)={{\hat{e}}}_t(y,x),\ \forall u\in [t,t+\delta )\ \text { and } \end{aligned}\tag{1.14}$$

$$\begin{aligned}&{{\hat{e}}}_u(y,x)={{\hat{e}}}_{u'}(y,x),\ \forall u,u'\in (t-\delta ,t)\cap [0,\infty ). \end{aligned}\tag{1.15}$$

We will always assume (1.3) and (AR) when dealing with our abstract models.

In the discrete time case we can extend \({\mathcal {T}}_t\) and \(\mathcal {F}_t\) to \(t\in [0,\infty )\) by

and define \((s,y)\overset{\varvec{a}}{\rightarrow }(t,x)\) for all \(t\ge s\ge 0\) by

It is easy to check then that in the discrete case (AR)(i)–(iv) hold, where now \(s,s_i,t\) are allowed to take values in \([0,\infty )\). Moreover (1.14) and (1.15) hold.

Note: We allow n to denote a real parameter in \([1,\infty )\).

For \(A\subset {\mathbb {R}}^d\) and \(a\in {\mathbb {R}}\) define \(aA=\{ax:x \in A\}\). To rescale our model for \(n\in [1,\infty )\) we set

and for \(s,t\ge 0\), \(x,y\in {\mathbb {Z}}^d/\sqrt{n}\)

We also define for \(n\in [1,\infty )\), \(s,t\ge 0\) and \(x,y\in {\mathbb {Z}}^d/\sqrt{n}\),

and note that \({{\hat{e}}}^{\scriptscriptstyle {(n)}}_t(y,x)(s)={{\hat{e}}}_{nt}(\sqrt{n} y,\sqrt{n} x)(ns)\).

Here is a simple consequence of (AR)(ii) which will be used frequently.

Lemma 1.2

W.p.1 if \(n\in [1,\infty )\), \(M\in {\mathbb {N}}\), \(0\le s_0<s_1<\dots <s_M\), and \((s_0,y_0)\overset{\varvec{a,n}}{\rightarrow }(s_M,y_M)\), then there are \(y_1\in {\mathcal {T}}^{\scriptscriptstyle {(n)}}_{s_1},\dots , y_{M-1}\in {\mathcal {T}}^{\scriptscriptstyle {(n)}}_{s_{M-1}}\) s.t. \((s_{i-1},y_{i-1})\overset{\varvec{a,n}}{\rightarrow }(s_i,y_i)\) for \(i=1,\dots ,M\).

Proof

Fix \(\omega \) s.t. (AR)(i)–(iii) hold. By scaling we may assume \(n=1\). By (1.10) there is a \(y_1\in {\mathcal {T}}_{s_1}\) s.t. \((s_0,y_0)\overset{\varvec{a}}{\rightarrow }(s_1,y_1)\overset{\varvec{a}}{\rightarrow }(s_M,y_M)\). Repeat this argument \(M-2\) times to construct the required sequence. \(\square \)

Definition 1.3

An ancestral path to \((t,x)\in {\mathcal {\varvec{T}}}\) is a cadlag path \(w=(w_s)_{s \ge 0}\) for which \((s,w_s)\overset{\varvec{a}}{\rightarrow }(s',w_{s'})\) for every \(0\le s\le s'\le t\), and \(w_s=x\) for all \(s\ge t\). The random collection of all ancestral paths to points in \({\mathcal {\varvec{T}}}\) is denoted by \(\mathcal {W}\) and is called the system of ancestral paths for \((\varvec{{\mathcal {T}}},\overset{\varvec{a}}{\rightarrow })\).

If \(n\in [1,\infty )\) and w is an ancestral path to \((nt,\sqrt{n} x)\), we define the rescaled ancestral path \(w^{\scriptscriptstyle {(n)}}\) by \(w^{\scriptscriptstyle {(n)}}_s=w_{ns}/\sqrt{n}\), and call \(w^{\scriptscriptstyle {(n)}}\) an ancestral path to \((t,x)\in {\mathcal {T}}^{\scriptscriptstyle {(n)}}_t\).

Remark 1.4

It is easy to check that if \(I={\mathbb {Z}}_+\) then (1.16) and (1.6) imply that for any ancestral path \(w\in \mathcal {W}\), \(w_s=w_{\lfloor s \rfloor }\) for all \(s\ge 0\). For this reason we will often restrict our ancestral paths to \(s\in {\mathbb {Z}}_+\).

Proposition 1.5

With probability 1 for any \((t,x)\in {\mathcal {\varvec{T}}}\), \(\mathcal {W}\) includes at least one ancestral path to (t, x).

The elementary proof is given in Sect. 6 below.

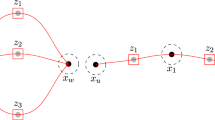

Let us briefly consider (1.3) and (AR) for our prototype models. For lattice trees (1.3) is immediate. Since a lattice tree \(T\in {\mathbb {T}}_L\) is a tree, for any \(x\in T_{m}\) there is a unique “ancestral” path \(w(m,x)=(w_k(m,x))_{k\le m}\) of length m in the tree from o to x. Moreover \(w_k(m,x)\in T_k\) for all \(0\le k\le m\). Define \((k,y)\overset{\varvec{a}}{\rightarrow }(m,x)\) iff \(x\in {\mathcal {T}}_m\), \(0\le k\le m\), and \(w_k(m,x)=y\). Here we allow \(m=0\). AR(i) and AR(ii) are then elementary to verify. It remains to verify AR(iv) which is deferred to Sect. 9 where the definition of \(\mathcal {F}_t\) is also given.
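
For a concrete tree, the ancestral relation just defined can be computed directly. The Python sketch below is an illustration, assuming the input bonds really do form a tree containing the root (this is not checked); the names `ancestral_paths` and `is_ancestor` are hypothetical. It stores, for each vertex x, the unique tree path \(w(m,x)\) from the root, and then tests \((k,y)\overset{\varvec{a}}{\rightarrow }(m,x)\) by checking that x lies in generation m, that \(0\le k\le m\), and that \(w_k(m,x)=y\).

```python
from collections import deque


def ancestral_paths(edges, root):
    """Return a dict x -> [w_0, ..., w_m], the unique tree path from root to x.

    Assumes (without checking) that `edges` are the bonds of a tree containing root.
    """
    adjacency = {root: set()}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    paths = {root: [root]}
    queue = deque([root])
    while queue:
        u = queue.popleft()
        for v in adjacency[u]:
            if v not in paths:
                paths[v] = paths[u] + [v]  # extend the parent's path by one bond
                queue.append(v)
    return paths


def is_ancestor(paths, k, y, m, x):
    """True iff x is in generation m, 0 <= k <= m, and w_k(m, x) = y."""
    w = paths.get(x)
    return w is not None and len(w) - 1 == m and 0 <= k <= m and w[k] == y
```

The case \(m=0\) is covered: the root is its own ancestor at time 0, since its path is the single vertex o.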

For the voter model there will be a unique ancestral path w(t, x) for each \((t,x)\in {\mathcal {\varvec{T}}}\) (see Lemma 7.3), obtained by tracing back the opinion 1 at x at time t to its source at the origin at time 0. Formally the ancestral paths are defined by reversing the dual system of coalescing random walks, obtained from the graphical construction of the voter model. We then define \((s,y)\overset{\varvec{a}}{\rightarrow }(t,x)\) iff \(0\le s\le t\), \(x\in {\mathcal {T}}_t\) and \(w_s(t,x)=y\). This standard construction is described in Sect. 7 where (AR) and (1.3) are then verified (see Lemmas 7.1 and 7.2).

For oriented percolation (1.3) is immediate, and we write \((n,x) \overset{\varvec{a}}{\rightarrow }(n',x')\) if \((0,o) \rightarrow (n,x)\) and \((n,x) \rightarrow (n',x')\). Here ancestral paths are not unique, since there may be many occupied paths between (n, x) and \((n',x')\). Let \(\mathcal {F}_n\) denote the \(\sigma \)-field generated by the bond occupation status for all bonds \(((m-1,x),(m,x'))\) for \(1\le m\le n\) and \(x,x'\in {\mathbb {Z}}^d\). It is easy to see that \(\overset{\varvec{a}}{\rightarrow }\) is an ancestral relation (i.e. (AR) holds).
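
The oriented percolation ancestral relation can be checked on a finite bond configuration as follows. This Python sketch is illustrative; the names `occupied_path` and `is_ancestor`, and the encoding of an occupied bond as a pair `((n, x), (n + 1, y))` of space-time points, are assumptions made for the example. It implements the definition above: \((n,x)\overset{\varvec{a}}{\rightarrow }(n',x')\) holds when both \((0,o)\rightarrow (n,x)\) and \((n,x)\rightarrow (n',x')\) hold.

```python
def occupied_path(bonds, start, end):
    """True iff start -> end, i.e. an occupied path joins the two space-time points."""
    (n, x), (n2, x2) = start, end
    if n2 < n:
        return False
    frontier = {x}  # sites reachable from (n, x) at the current time level
    for level in range(n, n2):
        frontier = {y for ((k, u), (_, y)) in bonds if k == level and u in frontier}
    # When n2 == n the loop does not run, so this reduces to x2 == x,
    # matching the convention (n, x) -> (n, x') iff x = x'.
    return x2 in frontier


def is_ancestor(bonds, origin, a, b):
    """True iff a is an ancestor of b: origin -> a and a -> b."""
    return occupied_path(bonds, origin, a) and occupied_path(bonds, a, b)
```

Note that a point \((n,x)\) which is connected to \((n',x')\) but is not itself reachable from \((0,o)\) is not an ancestor, which is why both connections are tested.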

For the contact process, the ancestral relation will be similar to that for oriented percolation, but will be obtained from the graphical construction of the contact process (see Sect. 10 below).

Survival probability and measure-valued processes

The survival time of our scaled model \({\mathcal {T}}^{\scriptscriptstyle {(n)}}\) is

The unscaled survival time is \(S^{\scriptscriptstyle (1)}\), and for \(t>0\), the unscaled survival probability is defined as

Our main results require a number of conditions on \({\varvec{{\mathcal {T}}}}\), the first of which concerns the asymptotics of the survival probability.

Notation. Write \(f(t)\sim g(t)\) as \(t\uparrow \infty \) iff \(\lim _{t\rightarrow \infty }f(t)/g(t)=1\). Similarly for \(f(t)\sim g(t)\) as \(t\downarrow 0\).

Condition 1

(Survival Probability) There is a constant \(s_D>0\) and a non-decreasing function \(m:[0,\infty )\rightarrow (0,\infty )\) such that \(m(t)\uparrow \infty \), as \(t\uparrow \infty \)

and a constant \(c_{1.21}\ge 1\) such that

The monotonicity properties of m and \(\theta \) and (1.19) easily show that

Note also that the first inequality in (1.21) with \(n=1\) implies that

For oriented percolation with \(d>4\) we set

where \(A,V>0\) are constants that depend on D;

and V is called the vertex factor (see [38] and in particular Condition 1.1 with \(r=2\) and \(\mathbf {k}=\mathbf {0}\) for the former). Similarly we have such an A and V for the contact process with \(d>4\) and for lattice trees with \(d>8\) and we set

For the voter model in two or more dimensions we set

and

In the above under \(P_o\), \((S_n)_{n \in {\mathbb {Z}}_+}\) is a discrete-time random walk with step distribution D, started at o, and \( \sigma ^2_{\scriptscriptstyle D}I_{d\times d}\) is the covariance matrix of D.

Proposition 1.6

(a) Condition 1 holds for critical sufficiently spread out oriented percolation in dimension \(d>4\) with \(s_D=2A\).

(b) Condition 1 holds for critical sufficiently spread out lattice trees in dimension \(d>8\) with \(s_D=2A\).

(c) Condition 1 holds for critical sufficiently spread out contact processes in dimension \(d>4\) with \(s_D=2A\).

(d) Condition 1 holds for the voter model in dimension \(d>1\) with \(s_D=\beta _D^{-1}\).

Proof

Conditions (1.20) and (1.21) are obvious in all four cases.

(a),(b),(c) For all 3 models (1.19) is a special case of Theorem 1.4 of [38] (for oriented percolation this was first proved in [36, 37]).

(d) Theorem \(1^\prime \) of [4] (or (1.5) of [3]) gives (1.19) for the voter model. \(\square \)

We can reinterpret the state of our rescaled models in terms of an empirical measure given by

So \(X_t^{\scriptscriptstyle {(n)}}\) takes values in the Polish space \(\mathcal {M}_F({\mathbb {R}}^d)\) of finite measures on \({\mathbb {R}}^d\) equipped with the topology of weak convergence. It follows from (1.3) that the measure-valued process \(\varvec{X}^{\scriptscriptstyle {(n)}}=(X_t^{\scriptscriptstyle {(n)}})_{t\ge 0}\) is in the Polish space \(\mathcal {D}:=\mathcal {D}([0,\infty ),\mathcal {M}_F({\mathbb {R}}^d))\). We define the survival map \(\mathcal {S}:\mathcal {D} \rightarrow [0,\infty )\) for \(\varvec{\nu }=(\nu _t)_{t\ge 0}\in \mathcal {D}\) by

so that our survival times satisfy \(S^{\scriptscriptstyle {(n)}}=\mathcal {S}(\varvec{X}^{\scriptscriptstyle {(n)}})\), and \(X^{\scriptscriptstyle {(n)}}_t=0\) for all \(t\ge S^{\scriptscriptstyle {(n)}}\) by (1.18).

Weak convergence and super-Brownian motion

An adapted a.s. continuous \(\mathcal {M}_F({\mathbb {R}}^d)\)-valued process, \(\varvec{X}=(X_s)_{s\ge 0}\), on a complete filtered probability space \((\varOmega ,\mathcal {F},\mathcal {F}_t,{\mathbb {P}}_{X_0})\) is said to be a super-Brownian motion (SBM) with branching rate \(\gamma >0\) and diffusion parameter \( \sigma _0^2>0\) (or a \((\gamma , \sigma _0^2)\)-SBM) starting at \(X_0\in \mathcal {M}_F({\mathbb {R}}^d)\) iff it solves the following martingale problem (see [32, Section II.5] for well-posedness of this martingale problem):

Let \(S=\mathcal {S}(\varvec{X})\). Associated with such a SBM is a \(\sigma \)-finite measure, \({\mathbb {N}}_o={\mathbb {N}}_o^{\gamma , \sigma _0^2}\), on the space of continuous measure-valued paths satisfying \(\nu _0=0\), \(0<S<\infty \) and \(\nu _s=0\) for all \(s\ge S\); let \(\varOmega ^{Ex}_C\) denote the space of such paths. \({\mathbb {N}}_o\) is called the canonical measure for super-Brownian motion. The connection between \({\mathbb {N}}_o\) and super-Brownian motion is that if \(\varXi \) is a Poisson point process on the space \(\varOmega _C^{Ex}\) with intensity \({\mathbb {N}}_o\), then

defines a SBM starting at \(\delta _0\). It is known that

Intuitively \({\mathbb {N}}_o\) governs the evolution of the descendants of a single ancestor at time zero, starting from the origin. For the above and more information on the canonical measure of super-Brownian motion see, e.g., Section II.7 of [32]. We will sometimes work with the unconditioned measures (\(n\in [1,\infty )\))

$$\begin{aligned} \mu _n(\cdot )=m(n){\mathbb {P}}(\cdot ). \end{aligned}$$

Note that (1.19) and (1.20) of Condition 1 together imply

Combining (1.22) with (1.21) and taking limits from the left, we arrive at

Suppressing dependence on \(\gamma , \sigma _0^2\), for \(s>0\) we define probabilities by

and

We slightly abuse the above notation and will also denote super-Brownian motion under \({\mathbb {N}}_o\), or the probabilities \({\mathbb {N}}_o^s\), by \(\varvec{X}=(X_t)_{t\ge 0}\). Then for \(\phi _k(x):=e^{ik \cdot x}\) we have

For LT, OP, and CP and d large as usual, we will use the fact that our rescaled models converge (at least in the sense of finite-dimensional distributions) to SBM with \(\gamma =1\) and

where \(\sigma ^2(=d \sigma ^2_{\scriptscriptstyle D})\) and v are as in [22, 42, 44], and the model dependent constant \(v>0\) is non-trivial (it involves so-called lace expansion coefficients). v satisfies (see e.g. [40, page 295] with \(p^*=2\) for LT and \(p^*=1\) for OP,CP)

For the VM and LT, the above convergence holds on path space:

Proposition 1.7

Consider either critical lattice trees with \(d>8\) and L sufficiently large, or the voter model with \(d>1\). Then for any \(s>0\),

where \({\mathbb {N}}_o^s\) has parameters \((\gamma , \sigma _0^2)=(1, \sigma ^2_{\scriptscriptstyle D}v)\) for lattice trees, and \((\gamma , \sigma _0^2)=(2\beta _D, \sigma ^2_{\scriptscriptstyle D})\) for the voter model.

Proof

For the voter model this is Theorem 4(b) of [3]. For lattice trees this is an immediate consequence of Theorem 1.2 of [39] (and an elementary rescaling) and (1.30). The reader should note, however, that the definition of \(\mu _n\) in [39] and that given above differ by a constant factor of A. \(\square \)

Remark 1.8

The size of our random tree, \(|\mathcal {T}|\), is random. If we were to condition on \(|\mathcal {T}|=n\) then (since all trees with n edges have equal weight) our conditional probability measure chooses a tree with n edges uniformly at random. If one rescales the \(n+1\) vertex locations by \(D_1^{-1}n^{-1/4}\) (where \(D_1\) is a constant depending on L) and considers the random mass distribution obtained by assigning mass \((n+1)^{-1}\) to each vertex, Aldous (Section 4.2 of [1]) conjectured, and Derbez and Slade [11, 34] then proved, that the resulting rescaled empirical measures converge weakly to Integrated Super-Brownian Excursion (ISE). The latter is essentially super-Brownian motion integrated over time and conditioned to have total mass one.

For oriented percolation and the contact process, convergence of the finite-dimensional distributions (f.d.d.’s) to those of a \((1, \sigma ^2_{\scriptscriptstyle D}v)\)-SBM, where v is as in (1.36), is known [42, 44] (see also [24, 38]) but tightness (and convergence on path space) remains open. The actual weak convergence result we will impose on our lattice models (Condition 6 below) will in fact follow from convergence of the f.d.d.’s and a moment bound on the total mass (see Lemma 2.2).

Our main objective is to give general conditions for the convergence of the rescaled sets of occupied sites. This convergence follows neither from the notions of weak convergence above, nor from the weak convergence of the so-called historical processes (see e.g. [9, 23]).

Range

The range of \(\mathbf{\mathcal {T}}\) is \(R^{\scriptscriptstyle (1)}=\cup _{t\in I}{\mathcal {T}}_t\), which by (1.4) and (1.13) is a finite subset of \({\mathbb {Z}}^d\) on \(\{S^{\scriptscriptstyle (1)}<\infty \}\), and hence under Condition 1 will be finite a.s. The range of \({\mathcal {T}}^{\scriptscriptstyle {(n)}}\) is \(R^{\scriptscriptstyle {(n)}}=R^{\scriptscriptstyle (1)}/\sqrt{n}=\cup _{t\ge 0}{\mathcal {T}}^{\scriptscriptstyle {(n)}}_t\). So by the above we see that

Let \(\mathcal {R}:\mathcal {D} \rightarrow \) closed subsets of \({\mathbb {R}}^d\) be defined by

where \({\mathrm {supp}}(\mu )\) is the closed support of a measure \(\mu \). Clearly \(R^{\scriptscriptstyle {(n)}}=\mathcal {R}(\varvec{X}^{\scriptscriptstyle {(n)}})\) for all \(n\ge 1\). The radius mapping \(r_0: \mathcal {K}\rightarrow [0,\infty )\) on the space of compact subsets of \({\mathbb {R}}^d\) is given by

Of particular interest is the extrinsic one-arm probability

In the setting of high-dimensional critical percolation, Kozma and Nachmias [28] have proved that as \(r\rightarrow \infty \), \(r^2\eta _r\) is bounded above and below by positive constants. It is believed (see e.g. [19, Open Problem 11.2] and [39, Conjecture 1.6]) that in fact \(r^2\eta _r\rightarrow C>0\) for various critical models (percolation, voter, lattice trees, oriented percolation, and the contact process) all above their respective critical dimensions. To understand this \(r^{-2}\) behaviour in terms of the above weak convergence results, consider the one-arm probabilities for the limiting super-Brownian motion.

The range of a \((\gamma , \sigma _0^2)\)-SBM \({\varvec{X}}\) is denoted by

The a.e. continuity of \((X_t)_{t\ge 0}\) easily shows that

We note that R is a compact subset \({\mathbb {N}}_o\)-a.e. This is well-known under \({\mathbb {P}}_{\delta _0}\) (see, e.g. Corollary III.1.4 of [32]) and then follows easily under \({\mathbb {N}}_o\) using (1.28). We now state a quantitative version of this from [27]. For \(d\ge 1\), let \(v_d:B_d(0,1)\rightarrow {\mathbb {R}}_+\) be the unique positive radial solution of

(See Theorem 1 of [27] for existence and uniqueness of \(v_d\).)

Lemma 1.9

For all \(d\ge 1\) and \(r>0\), \({\mathbb {N}}_o^{\gamma , \sigma _0^2}(r_0(R)>r)=\frac{v_d(0) \sigma _0^2}{\gamma }r^{-2}\).

Proof

Theorem 1 of [27] and a simple scaling argument show that

On the other hand, the left-hand side of the above is \(1-\exp (-{\mathbb {N}}_o(r_0(R)>r))\) by (1.28). Combining these equalities completes the proof. \(\square \)
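
Written out, the last step is a comparison of exponents. The display below is a sketch under an assumption: that the identity obtained from Theorem 1 of [27] is a formula for \({\mathbb {P}}_{\delta _0}(r_0(R)>r)\) of the form forced by the statement of the lemma.

$$\begin{aligned} 1-\exp \Big (-{\mathbb {N}}_o(r_0(R)>r)\Big )={\mathbb {P}}_{\delta _0}(r_0(R)>r)=1-\exp \Big (-\frac{v_d(0) \sigma _0^2}{\gamma r^2}\Big ) \ \Longrightarrow \ {\mathbb {N}}_o(r_0(R)>r)=\frac{v_d(0) \sigma _0^2}{\gamma }r^{-2}. \end{aligned}$$

The first equality reflects the Poisson representation: the range under \({\mathbb {P}}_{\delta _0}\) stays inside the ball of radius r exactly when no point of the Poisson point process \(\varXi \) has range leaving that ball.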

2 Statement of main results

We continue to state the general conditions which will imply our main results. Recall our standing assumptions (1.3) and (AR), the function m from (1.21), and the unconditioned measures (\(n\ge 1\)) \(\mu _n(\cdot )=m(n){\mathbb {P}}(\cdot )\). Recall also that \((\mathcal {F}_t)_{t\in I}\) is the filtration introduced prior to (AR) which will contain the filtration generated by \(({\mathcal {T}}_s)_{s\le t}\) or equivalently by \((X^{\scriptscriptstyle (1)}_s)_{s \le t}\).

2.1 Conditions

We now introduce additional conditions on \((\varvec{{\mathcal {T}}},\overset{\varvec{a}}{\rightarrow })\). Condition 2 is simple for the voter model, and is one of the outputs of the inductive approach to the lace expansion [40, 43] for other models, while Condition 3 will usually follow from Condition 1 and a form of the Markov property or Markov inequality.

Condition 2

(Total Mass)\(\quad \sup _{t\in I}{\mathbb {E}}\big [|{\mathcal {T}}_t|\big ]=c_{2}<\infty \).

Condition 3

(Conditional Survival Probability) There exists \(c_{3}>0\) such that for all \(s\ge 0,t>0\), on the event \(\{y \in {\mathcal {T}}_s\}\) we have (recall the function \(m(\cdot )\) from (1.21))

The next condition is the main input for our uniform modulus of continuity for ancestral paths (e.g. Theorem 1 below).

Condition 4

(Spatial Increments) There exists a \(p>4\) and \(c_{4}=c_{4}(p)>0\) such that for every \(0<s\le t\),

We will need an additional hypothesis to control the ancestral paths just before the terminal value.

Condition 5

(Local Jumps) There are \(\kappa >4\) and \(c_{5}>0\) such that for all \(s\ge 0\), \(y\in {\mathbb {Z}}^d\), and \(N>0\),

Remark 2.1

In discrete time if for some \(L>0\),

then Condition 5 holds for any \(\kappa >4\). This is obvious since the conditional probability on the left-hand side of (2.2) is then zero if \(N>2\sqrt{d} L\).

If \(\varvec{\nu }=(\nu _t)_{t\ge 0}\in \mathcal {D}\) and \(r>0\), define \({\bar{\nu }}_{r}\in M_F({\mathbb {R}}^d)\) by \({\bar{\nu }}_r(\cdot )=\int _0^r \nu _t(\cdot ) {\mathrm {d}}t\), and \(\bar{\nu }^{}_\infty \in M_F({\mathbb {R}}^d)\) by \(\bar{\nu }^{}_\infty (\cdot )=\mathbb {1}(\mathcal {S}(\nu )<\infty )\int _0^\infty \nu _t(\cdot ) {\mathrm {d}}t\).

Condition 6

(Measure Convergence) There are parameter values \((\gamma , \sigma _0^2)\) for \({\mathbb {N}}_o\) so that for every \(s>0\) and \(0\le t_0<t_1<\infty \), as \(n \rightarrow \infty \),

For critical lattice trees (\(d>8\)) with L sufficiently large, and voter models (\(d\ge 2\)) the above is immediate from Proposition 1.7 with the parameter values therein. For applications to other models (including oriented percolation and the contact process) it is worth noting that convergence of finite-dimensional distributions and boundedness of arbitrary moments of the total mass suffice. The hypotheses of the next lemma will be verified for OP and CP in Sects. 8 and 10, respectively.

Lemma 2.2

Fix \(s>0\) and let \({\mathbb {P}}_n^s\) and \({\mathbb {N}}_o^s\) be as in (1.32) and (1.33), respectively. Assume that for all \(p \in {\mathbb {N}}\):

Then Condition 6 holds.

The proof is an easy Fubini argument and is given in Sect. 6.

The final condition is needed to ensure the rescaled ranges of \(\varvec{{\mathcal {T}}}\) converge weakly to the range of super-Brownian motion. Together with uniform control of the ancestral paths, it will ensure that any occupied regions will be close to regions of positive integrated mass of the limiting super-Brownian motion.

Condition 7

(Low Density Inequality) There exists \(c_{7}>0\) such that for any \(t\ge 0\), \(M>0\), and \(\varDelta \ge 4\),

Here is a condition which implies the above and is more user-friendly in discrete time. The elementary proof is given in Sect. 6.

Lemma 2.3

Assume the discrete time setting and there is a \(c_{7}\) such that for all \(\ell \in {\mathbb {Z}}_+\), \(m\in {\mathbb {N}}^{\ge 4}\) and \(M>0\),

Then Condition 7 holds.

Note that Condition 4 for \(s=t\) gives bounds on the spatial moments \(\sum _x|x|^p{\mathbb {P}}(x\in {\mathcal {T}}_t)\). In fact a subset of the above conditions (including Condition 4) give exact asymptotics on these moments as \(t\rightarrow \infty \):

Proposition 2.4

Assume Condition 1 and that the conclusion of Condition 4 holds for \(s=t\) and all \(p>4\). Assume also that (2.4) holds for \(p=1\) and (2.5) holds for \(p=2\). If Z denotes a d-dimensional standard normal random vector, then for all \(p>0\),

The easy proof will be given in Sect. 6. Such exact asymptotics were established for OP (\(d>4\)) in [6] under more general conditions on D.

2.2 Main results

We start with a uniform modulus of continuity for the system of ancestral paths in either discrete or continuous time. As was noted before Condition 7, this modulus plays an important role in the convergence of the rescaled ranges but is also of independent interest. For critical branching Brownian motion such a modulus was first given in Theorem 4.7 of [10]. Although we assume Condition 1 for convenience, in fact the proof only requires the existence of a non-decreasing function m satisfying (1.21) and Condition 3, as well as Conditions 2, 4, and 5 but not the exact asymptotics in (1.19) or (1.20). We will often assume

where p is as in Condition 4. We will also sometimes assume

where \(\kappa \) is as in Condition 5. Note that such \(\alpha ,\beta \) exist since \(\kappa ,p>4\).

Theorem 1

Assume Conditions 1 to 5 for \(I={\mathbb {Z}}_+\) or \({\mathbb {R}}_+\), and \(\alpha ,\beta \) satisfy (2.9) and (2.10). Set \(q=\frac{\kappa (1/2-\alpha )-2}{2}\wedge 1\in (0,1]\). There is a constant \(C_{1}\ge 1\) and for all \(n\ge 1\), a random variable \(\delta _n\in [0,1]\), such that if

then

Moreover, \(\delta _n\) satisfies

In the discrete time setting recall that \({\mathbb {Z}}_+/n=\{j/n:j\in {\mathbb {Z}}_+\}\) is the natural time line. In this setting we can get a cleaner statement if we also assume the stronger (2.3) in place of Condition 5.

Theorem 1′

Assume Conditions 1 to 4 and (2.3), where \(I={\mathbb {Z}}_+\). Assume also that \(\alpha ,\beta \) are as in (2.9). There is a constant \(C_{1'}\) and for all \(n\ge 1\) a random variable \(\delta _n\in (0,1]\) such that if

then

and \(\delta _n\) satisfies

Moreover if \(s_1,s_2, y_1,y_2\) are as in (2.14) but with \(s_i\in {\mathbb {R}}_+\), then (2.12) holds.

Theorems 1 and \(1^\prime \) can be reinterpreted as (uniform) moduli of continuity for all ancestral paths.

Definition 2.5

For \(\alpha ,\beta >0\), the system of ancestral paths \(\mathcal {W}\) for \((\varvec{{\mathcal {T}}},\overset{\varvec{a}}{\rightarrow })\) is said to satisfy an \((\alpha ,\beta )\)-modulus of continuity if there exist a random function \(\delta :[1,\infty )\rightarrow [0,1]\), a function \(\varepsilon :[1,\infty )\rightarrow [0,\infty )\) such that \(\lim _{n\rightarrow \infty }\varepsilon (n)=0\), and a constant \(c>0\) such that for any \(n\in [1,\infty )\):

For every ancestral path \(w\in \mathcal {W}\), and all \(0\le s_1<s_2\),

Corollary 1

Assume Conditions 1 to 5 for \(I={\mathbb {Z}}_+\) or \({\mathbb {R}}_+\). Then for \(\alpha ,\beta ,q\) as in Theorem 1, \(\mathcal {W}\) satisfies an \((\alpha ,\beta )\)-modulus of continuity with \(\varepsilon (n)=C_{1}n^{-q}\).

Proof

If w is an ancestral path to \((nt,\sqrt{n} x)\) and \(0\le s_1<s_2\), then for \(s_i\le t\), \((s_1,w^{\scriptscriptstyle {(n)}}_{s_1})\overset{\varvec{a,n}}{\rightarrow }(s_2,w^{\scriptscriptstyle {(n)}}_{s_2})\). (1) and (2) of Definition 2.5 now follow immediately from Theorem 1 with \(\delta _n\) as in the theorem and \(\varepsilon (n)\) as claimed. In general the result follows because \(w^{\scriptscriptstyle {(n)}}_s=w^{\scriptscriptstyle {(n)}}_{s\wedge t}\). \(\square \)

Corollary 1′

Assume Conditions 1 to 4 and (2.3), where \(({\mathcal {T}}_t)_{t\in {\mathbb {Z}}_+}\) is in discrete time, and let \(\alpha ,\beta \) and \(\delta _n\) be as in Theorem \(1^\prime \). Then for any \(n\ge 1\) and \(w\in \mathcal {W}\),

If \(s_i\) are as above but now in \({\mathbb {R}}_+\), then \(|w^{\scriptscriptstyle {(n)}}_{s_2}-w^{\scriptscriptstyle {(n)}}_{s_1}|\le C_{1}[|s_2-s_1|^\alpha +n^{-\alpha }]\).

Proof

As above but now use Theorem \(1^\prime \) in place of Theorem 1. \(\square \)

We will use this modulus of continuity as a tool for proving weak convergence of the range; however, the result is useful more generally. For example, it provides a means to check tightness of the spatial component of the model in the extended Gromov–Hausdorff–Prohorov topology, cf. [2, Lemma 4.3]. For LT in particular, this has implications for random walk on LT (see e.g. [2]).

Our second main result is that, conditional on longterm survival, the rescaled range converges weakly to the range of (conditioned) SBM.

Theorem 2

(Convergence of the range) Assume Conditions 1–7, and let \({\mathbb {N}}_o\) be the canonical measure with parameters \((\gamma , \sigma _0^2)\) from Condition 6. Then for every \(s>0\),

as probability measures on \(\mathcal {K}\) equipped with the Hausdorff metric.

With Lemma 2.2 in mind it is perhaps a bit surprising that such a result could be proved without a formal tightness condition. It is Theorem 1 that effectively gives tightness of the approximating ranges.

Recall that \(v_d:B_d(0,1)\rightarrow {\mathbb {R}}_+\) is the unique solution of (1.38). The next result uses both Theorems 1 and 2 to give exact leading asymptotics for the extrinsic one-arm probability.

Theorem 3

(One-arm asymptotics) Assume Conditions 1–7. Then

and so (Condition 1)

Remark 2.6

The proof in Sect. 5 only uses Condition 1 and the conclusions of Theorems 1 and 2.

We finally show that all of the above conditions are satisfied by the voter model (\(d\ge 2\)), OP (\(d>4\)), LT (\(d>8\)), and the contact process (\(d>4\)).

Theorem 4

(Voter model) For the voter model, Conditions 1–7 hold in dimensions \(d\ge 2\) for any \(p>4\) in Condition 4, any \(\kappa >4\) in Condition 5, and \((\gamma , \sigma _0^2)=(2\beta _D, \sigma ^2_{\scriptscriptstyle D})\) in Condition 6. Hence for \(d\ge 2\), if \({\mathbb {N}}_o\) is the canonical measure of SBM with parameters \((2\beta _D, \sigma ^2_{\scriptscriptstyle D})\), then

- (v1)

For any \(\alpha \in (0,1/2)\), the system of ancestral paths, \(\mathcal {W}\), satisfies an \((\alpha ,1)\)-modulus of continuity with \(\varepsilon (n)=C_{1}n^{-1}\) for some \(C_1\ge 1\),

- (v2)

\({\mathbb {P}}_n^{s}(R^{\scriptscriptstyle (n)}\in \cdot )\overset{w}{\longrightarrow }{\mathbb {N}}_o^{s}(R\in \cdot )\) in \(\mathcal {K}\), as \(n\rightarrow \infty \), for every \(s>0\),

- (v3)

(i) \(\lim _{r\rightarrow \infty }r^2{\mathbb {P}}(r_0(R^{\scriptscriptstyle (1)})>r)=\frac{ \sigma ^2_{\scriptscriptstyle D}v_d(0)}{2\beta _D}\) if \(d>2\),

(ii) \(\lim _{r\rightarrow \infty }\frac{r^2}{\log r}{\mathbb {P}}(r_0(R^{\scriptscriptstyle (1)})>r)=v_2(0)(2\pi )^{-1}\) if \(d=2\).

Part (v1) will give a uniform modulus of continuity for all of the rescaled dual coalescing random walks between 1’s in a voter model conditioned on longterm survival. This is stated and proved in Sect. 7 (Corollary 7.4).

In the next three results v is the model-dependent constant satisfying (1.36).

Theorem 5

(Oriented percolation) For critical OP with \(d>4\) and L sufficiently large, Conditions 1–7 hold for any \(p>4\) in Condition 4, any \(\kappa >4\) in Condition 5, and \((\gamma , \sigma _0^2)=(1, \sigma ^2_{\scriptscriptstyle D}v)\) in Condition 6. Hence for \(d>4\), if \({\mathbb {N}}_o\) is the canonical measure of SBM with parameters \((1, \sigma ^2_{\scriptscriptstyle D}v)\), then

- (op1)

For \(\alpha \in (0,1/2)\) the system of ancestral paths, \(\mathcal {W}\), satisfies an \((\alpha ,1)\)-modulus of continuity with \(\varepsilon (n)\equiv 0\),

- (op2)

\({\mathbb {P}}_n^{s}(R^{\scriptscriptstyle {(n)}}\in \cdot )\overset{w}{\longrightarrow }{\mathbb {N}}_o^{s}(R\in \cdot )\) in \(\mathcal {K}\), as \(n\rightarrow \infty \), for every \(s>0\),

- (op3)

\(\lim _{r\rightarrow \infty }r^2{\mathbb {P}}(r_0(R^{\scriptscriptstyle (1)})>r)=\frac{ \sigma ^2_{\scriptscriptstyle D}vv_d(0)}{AV}\).

Theorem 6

(Lattice trees) For critical lattice trees with \(d>8\) and L sufficiently large, Conditions 1–7 hold for any \(p>4\) in Condition 4, any \(\kappa >4\) in Condition 5, and \((\gamma , \sigma _0^2)=(1, \sigma ^2_{\scriptscriptstyle D}v)\) in Condition 6. Hence for \(d>8\), if \({\mathbb {N}}_o\) is the canonical measure of SBM with parameters \((1, \sigma ^2_{\scriptscriptstyle D}v)\), then

- (t1)

For \(\alpha \in (0,1/2)\) the system of ancestral paths, \(\mathcal {W}\), satisfies an \((\alpha ,1)\)-modulus of continuity with \(\varepsilon (n)\equiv 0\),

- (t2)

\({\mathbb {P}}_n^{s}(R^{\scriptscriptstyle {(n)}}\in \cdot )\overset{w}{\longrightarrow }{\mathbb {N}}_o^{s}(R\in \cdot )\) in \(\mathcal {K}\), as \(n\rightarrow \infty \), for every \(s>0\),

- (t3)

\(\lim _{r\rightarrow \infty }r^2{\mathbb {P}}(r_0(R^{\scriptscriptstyle (1)})>r)=\frac{ \sigma ^2_{\scriptscriptstyle D}vv_d(0)}{AV}\).

Theorem 7

(Contact process) For the critical contact process with \(d>4\) and L large enough, Conditions 1–7 hold for any \(p>4\) in Condition 4, any \(\kappa >4\) in Condition 5, and \((\gamma , \sigma _0^2)=(1, \sigma ^2_{\scriptscriptstyle D}v)\) in Condition 6. Hence for \(d>4\), if \({\mathbb {N}}_o\) is the canonical measure of SBM with parameters \((1, \sigma ^2_{\scriptscriptstyle D}v)\), then

- (cp1)

For any \(\alpha \in (0,1/2)\), the system of ancestral paths, \(\mathcal {W}\), satisfies an \((\alpha ,1)\)-modulus of continuity with \(\varepsilon (n)=C_{{1}}n^{-1}\) for some \(C_1\ge 1\),

- (cp2)

\({\mathbb {P}}_n^{s}(R^{\scriptscriptstyle (n)}\in \cdot )\overset{w}{\longrightarrow }{\mathbb {N}}_o^{s}(R\in \cdot )\) in \(\mathcal {K}\), as \(n\rightarrow \infty \), for every \(s>0\),

- (cp3)

\(\lim _{r\rightarrow \infty }r^2{\mathbb {P}}(r_0(R^{\scriptscriptstyle (1)})>r)=\frac{ \sigma ^2_{\scriptscriptstyle D}vv_d(0)}{AV}\).

Remark 2.7

Although we have assumed D is uniform on \([-L,L]^d{\setminus }\{o\}\) for OP, LT and CP, the results (and proofs) for these models also hold for more general kernels. Namely, if \(h:{\mathbb {R}}^d\rightarrow [0,\infty )\) is bounded, continuous almost everywhere, supported on \([-1,1]^d\), invariant under the symmetries of \({\mathbb {Z}}^d\) and such that \(\int h(x)dx =1\), then for \(L\ge 1\) we may define D by

The case \(h=2^{-d}1_{[-1,1]^d}\) gives the uniform distribution. This is simply a matter of noting that the references we provide for checking these conditions hold for this class of more general D’s, and that our own arguments never make use of the uniform nature of D.

Remark 2.8

In Sects. 8–10 the hypotheses of Lemma 2.2 will be verified for LT, OP and the CP (for the dimensions noted in the above theorems). As Conditions 1–7 also hold by the above, we see in particular that Proposition 2.4 applies for all of these models. Recalling from the above that \(s_D=2A\), \(\gamma =1\) and \( \sigma _0^2= \sigma ^2_{\scriptscriptstyle D}v\) for all of these models, Proposition 2.4 gives

Recall that for lattice trees \(w_k(m,x)\) is the location of the ancestor of \(x\in {\mathcal {T}}_m\) in generation \(k\le m\) of the tree. We can also apply Corollary \(1^\prime \) to obtain a modulus of continuity for the large scale behaviour of \(k\mapsto w_k(m,x)\) conditional on longterm survival; see Corollary 9.1 in Sect. 9.

Note that the lower bounds on \({\mathbb {P}}(r_0(R^{(1)})>r)\) in (v3) and (t3) above follow easily from Proposition 1.7 and the lower semi-continuity of the support map on \(\mathcal {M}_F({\mathbb {R}}^d)\) (see Lemma 4.3 below). So the importance of (v3) and (t3) in these cases lies in the matching upper bounds.

For the voter model, in an interesting article Merle [31] has studied the probability that a distant site x ever holds the opinion 1 (i.e. \({\mathbb {P}}(\xi _t(x)=1 \text { for some }t\ge 0)\) in the limit as \(|x| \rightarrow \infty \)). Although clearly related to the behaviour of the range of the voter model, and in particular (v3) above, neither result implies the other.

2.3 Discussion on conditions and extensions

Although the above list of conditions may appear lengthy, we shall see that for our prototype models all of the conditions are either already proved in the literature or fairly easy to establish from known results; the exception is Condition 4, which was in some cases proved very recently in response to this work. For the voter model this condition is elementary for any \(d\ge 2\) and any \(p>4\); see Sect. 7. For our prototype models, a Markov (or sub-Markov) argument reduces Condition 4 to the \(s=t\) case, which is a bound of the form

For the voter model such a reduction is implicit in (7.18), while for OP, the CP, and LT the reductions are given by Lemma 8.2, Lemma 10.2 and Lemma 9.5 respectively, with \(f(x)=|x|^p\). For OP, (2.20) was established by Chen and Sakai [6] (see Theorem 1.2 of [6] and the ensuing comment there to allow \(\alpha =\infty \)) where in fact exact asymptotics are given for large t. In response to our main results, Sakai and Slade [33] established (2.20) for sufficiently spread-out critical CP (\(d>4\)) and LT (\(d>8\)) for any \(p>4\) (their method also applies to OP). For LT, their result extends the result for \(p=6\) that appeared in an earlier version of this paper [25] (and their method is simpler). Thus the earlier version [25] has been superseded by this paper and [33]. Note that (2.19) shows that their upper bounds then immediately give exact asymptotics as \(t\rightarrow \infty \).

Recent work [20] suggests that it may be of interest to consider these results in the setting of critical sufficiently spread out percolation (\(d>6\)). In this setting, for example, Theorem 3 would refine a result of Kozma and Nachmias [28], by giving a bona fide limit for the one-arm probability. However, we hasten to point out that there is much work still to be done here. For example, the important Condition 6 has yet to be verified in this setting (see [18] for partial results). In a very interesting paper Tim Hulshof [26] has shown that there is a phase transition in the one-arm exponents which corresponds to the condition \(p>4\) in Condition 4, both for critical percolation (\(d>6\)) and critical branching random walk (BRW) \((d>2)\). He works with infinite range kernels D satisfying \(D(x)\approx |x|^{-d-\alpha }\) as \(|x|\) becomes large (where \(f(x)\approx g(x)\) means \(cg\le f\le Cg\) for some \(0<c\le C<\infty \)) and shows that if \(R^{\scriptscriptstyle (1)}\) is the open cluster of the origin (resp. set of vertices visited by BRW), then

For \(\alpha \ge 4\) this gives the one-arm exponent 2, found for the finite-range setting in [28], but for \(\alpha <4\) the one-arm exponent is no longer 2. It is easy to check that \(\alpha >4\) implies that \(\sum _z|z|^p D(z)<\infty \) for some \(p>4\). Although we have assumed single occupancy, our proof is modelled after the proof for branching Brownian motion, which is implicit in [10], and minor modifications to our arguments will give similar results for branching random walk. (One needs to define (AR) for individual particles at each site, for example by using the arboreal labelling in [10]). Hulshof’s results, described above, strongly suggest that the restriction \(p>4\) in Condition 4 is sharp. In fact we conjecture that Theorems 1–3 will continue to hold without the finite range assumption on D, but will all fail if we then allow \(p<4\) in Condition 4 for our four prototype models with such “long-range” kernels.

The remainder of this paper is organised as follows. In Sect. 3 we establish the moduli of continuity, i.e., Theorems 1 and \(1^\prime \). In Sect. 4 we prove our general result on convergence of the rescaled ranges, Theorem 2. In Sect. 5 both of the above ingredients are used to prove the one-arm result, Theorem 3. Lemmas 2.2 and 2.3 (dealing with checking Conditions 6 and 7, respectively) and Proposition 1.5 (existence of ancestral paths) are proved in Sect. 6. In Sects. 7–10, respectively, we verify our conditions for the voter model, OP, LT and CP, and so establish Theorems 4, 5, 6 and 7.

3 Modulus of continuity

In this section we prove Theorems 1 and \(1^\prime \).

Proposition 3.1

Assume Conditions 1, 3, 4, and let \(\alpha ,\beta \) satisfy (2.9). There is a constant \(C_{3.1}\), and for all \(n\ge 1\) a random variable \(\delta _n\in (0,1]\) such that if

then

implies

Moreover \(\delta _n\) satisfies

Proof

We first note that it suffices to consider \(n=2^{n_0}\) for some \(n_0\in {\mathbb {N}}\). Assuming the result for this case, for \(n\ge 1\), choose \(n_0\in {\mathbb {N}}\) so that \(2^{n_0-1}\le n<2^{n_0}\) and set \(\delta _n(\omega )=\delta _{2^{n_0}}(\omega )\in (0,1]\). The monotonicity of m(n) from Condition 1 (survival probability) shows that

Assume now that the conditions in (3.1) are satisfied by \(t=k/n\), \(x=z/\sqrt{n}\) and \(s_i=k_i/n\) where \(k,k_i\in {\mathbb {Z}}_+\) and \(z\in {\mathbb {Z}}^d\), and that (3.2) is satisfied by \(y_i=\frac{x_i}{\sqrt{n}}\), where \(x_i\in {\mathbb {Z}}^d\) for \(i=1,2\). Then \(k_2\le k-1\), which implies \(k_2 2^{-n_0}\le k 2^{-n_0}-2^{-n_0}\), and \(0<(k_2-k_1)2^{-n_0}<(k_2-k_1)n^{-1}\le \delta _{2^{n_0}}\). By scaling this implies \((\frac{k_1}{2^{n_0}},\frac{x_1}{2^{n_0/2}})\overset{\varvec{a,2^{n_0}}}{\rightarrow }(\frac{k_2}{2^{n_0}},\frac{x_2}{2^{n_0/2}})\overset{\varvec{a,2^{n_0}}}{\rightarrow }(\frac{k}{2^{n_0}},\frac{z}{2^{n_0/2}})\). So the result for \(2^{n_0}\) implies that

So the result follows for general \(n\ge 1\) by increasing \( C_{3.1}\) to \(\sqrt{2} C_{3.1}\).
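The origin of the factor \(\sqrt{2}\) can be sketched as follows. The display (3.3) is not reproduced above, so this assumes that the conclusion for \(2^{n_0}\) has the form \(|x_2-x_1|2^{-n_0/2}\le C_{3.1}\big ((k_2-k_1)2^{-n_0}\big )^{\alpha }\). Using \(2^{n_0-1}\le n<2^{n_0}\), so that \(2^{n_0}/n\le 2\) and \((k_2-k_1)2^{-n_0}<(k_2-k_1)/n\), one gets

$$\begin{aligned} |y_2-y_1|&=\frac{|x_2-x_1|}{\sqrt{n}}=\Big (\frac{2^{n_0}}{n}\Big )^{1/2}\frac{|x_2-x_1|}{2^{n_0/2}}\le \sqrt{2}\,C_{3.1}\big ((k_2-k_1)2^{-n_0}\big )^{\alpha }\\&\le \sqrt{2}\,C_{3.1}\Big (\frac{k_2-k_1}{n}\Big )^{\alpha }=\sqrt{2}\,C_{3.1}|s_2-s_1|^{\alpha }. \end{aligned}$$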

We set \(n=2^{n_0}\) for \(n_0\in {\mathbb {N}}\). For a natural number m define

and for \(k\in I(n_0,m)\) define

Note that the \(\max \) exists by (1.3). For \(\ell \in {\mathbb {Z}}_+\), let

Lemma 3.2

Assume (2.9). There is a constant \(c_{3.2}\) so that for all \(m\in {\mathbb {N}}\),

- (a)

for all \(k\in I(n_0,m)\), \(\mu _{2^{n_0}}(A_k(n_0,m))\le c_{3.2}2^{-k(p/2-p\alpha -1)}\),

- (b)

$$\begin{aligned} \mu _{2^{n_0}}(B_\ell (n_0,m))\le {\left\{ \begin{array}{ll} c_{3.2}2^{-\ell (p/2-p\alpha -1)},&{}\qquad \text {if } 0\le \ell \le n_0,\\ 0, &{} \qquad \text {if }\ell > n_0.\end{array}\right. } \end{aligned}$$

Proof

(a) Clearly we have

By Condition 3 (conditional survival probability),

Recall that \({\mathcal {T}}_{m-2^{n_0-k}}\) is \(\mathcal {F}_{m-2^{n_0-k}}\)-measurable, and by AR(iv),

is also \(\mathcal {F}_{m-2^{n_0-k}}\)-measurable. We therefore can use (3.6) in (3.5) to conclude

where Condition 4 (spatial increments) and (1.21) are used in the last.

(b) Note first that (2.9) implies \(p/2-p\alpha >2\). Sum the bound in (a) over \(k>\ell \), note that \(n_0\in I(n_0,m+1)\) (to apply (a) to \(A_{n_0}(n_0,m+1)\)), and use \(B_\ell (n_0,m)=\varnothing \) if \(\ell > n_0\) to derive (b) (where we can adjust the constants after the fact). \(\square \)
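For concreteness, the summation in (b) is a geometric series. Write \(\theta =p/2-p\alpha -1\), which is positive by the first line of the proof of (b), and let c denote the constant obtained in (a). The definition of \(B_\ell (n_0,m)\) is not reproduced above, so the following sketch assumes, as the proof indicates, that \(B_\ell (n_0,m)\) is covered by the events \(A_k(n_0,m)\) with \(\ell <k\le n_0\) together with \(A_{n_0}(n_0,m+1)\). Then for \(0\le \ell \le n_0\),

$$\begin{aligned} \mu _{2^{n_0}}(B_\ell (n_0,m))\le \sum _{k=\ell +1}^{n_0}c\,2^{-k\theta }+c\,2^{-n_0\theta }\le \frac{c\,2^{-(\ell +1)\theta }}{1-2^{-\theta }}+c\,2^{-\ell \theta }=\frac{c}{1-2^{-\theta }}\,2^{-\ell \theta }. \end{aligned}$$

So under this assumption the constant in (b) can be taken to be \(c/(1-2^{-\theta })\), which is the adjustment of constants mentioned in the proof.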

Lemma 3.3

Assume (2.9). There is a \(c_{3.3}=c_{3.3}(\alpha ,L)\) such that if \(m\in {\mathbb {N}}\) and \(\ell \in \{0,\dots ,n_0\}\) satisfies \(2^{n_0-\ell }\le m\), then

Proof

The conditions on \(\ell \) and m show that \(\{\ell +1,\dots ,n_0\}\subset I(n_0,m)\). This implies that

Choose \(\omega \in B_\ell (n_0,m)^c\) and assume \((m-2^{n_0-\ell },y)\overset{\varvec{a}}{\rightarrow }(m,x')\overset{\varvec{a}}{\rightarrow }(m+1,x)\) for some \(x\in {\mathcal {T}}_{m+1}\). By (1.10) we may choose \(x''\) so that \((m-2^{n_0-\ell },y)\overset{\varvec{a}}{\rightarrow }(m-1,x'')\overset{\varvec{a}}{\rightarrow }(m,x')\) (set \(x''=y\) if \(\ell =n_0\)). Then since \(\omega \not \in A_{n_0}(n_0,m+1)\),

Let \(y_\ell =y\). By (1.10) for \(k=\ell +1,\dots ,n_0\) we may choose \(y_k\in {\mathcal {T}}_{m-2^{n_0-k}}\) such that \(y_{n_0}=x''\), and

By \(\omega \notin A_k(n_0,m)\) and the triangle inequality, we have

This and (3.7) imply \(|x'-y|\le C2^{(n_0/2)-\ell \alpha }\) and so completes the proof. \(\square \)

Returning now to the proof of Proposition 3.1, we define

Note that \(C_r(n_0)\) is non-increasing in r. Set \(\delta _{2^{n_0}}(\omega )=2^{-K_{n_0}(\omega )}\in (0,1]\). Then for \(r\in \{0,\dots ,n_0\}\),

where we have used Lemma 3.2(b) and Condition 3 with \((s,y)=(0,o)\) and \(t=2^{r\beta }2^{n_0}\). Since \(2^{r\beta }\le 2^{n_0}\) (recall \(\beta \le 1\)) we may use (1.21) to see that

and so from the above,

where (2.9) is used in the last inequality. If \(r\in \{n_0+1,n_0+2,\dots \}\) then (3.8) is trivial since the left-hand side is zero. If \(\rho \in (0,1]\) choose \(r\in {\mathbb {Z}}_+\) so that \(2^{-r-1}<\rho \le 2^{-r}\). Then by (3.8),

Take limits from the right in \(\rho <1\) in the above to derive (3.4).

Turning now to (3.3), we can rescale and restate our objective as (note that \(t=(m+1)/n\) for some \(m\in {\mathbb {N}}\) or the conclusion is vacuously true):

If

then

It suffices to consider \(k_2=m\) in the above because if we choose (by (1.10)) \(x'\) s.t. \((k_2,y_2)\overset{\varvec{a}}{\rightarrow }(k_2+1,x')\overset{\varvec{a}}{\rightarrow }(m+1,x)\), then we can work with \((k_2+1,x')\) in place of \((m+1,x)\). So let us assume

We must show that

If \(K_{n_0}=n_0+1\), then (3.9) leads to a contradiction and so we have

By (3.9), \(2^{-n_0}\le (m-k)2^{-n_0}\le 2^{-K_{n_0}}\), and so we may choose \(r\in {\mathbb {Z}}_+\) so that

Using the fact that \(0<(m-k)2^{-n_0}\le 2^{-r}\), we can choose \(i_r,j_r\in {\mathbb {Z}}_+\) with \(j_r-i_r\in \{0,1\}\) and \(i_\ell ,j_\ell \in \{0,1\}\) for \(\ell \in (r,n_0]\cap {\mathbb {N}}\) so that

For \(q\in (r,n_0]\cap {\mathbb {N}}\) define

so that \(k_{n_0}=k\) and \(m_{n_0}=m\). By Lemma 1.2 there are \((y_q)_{q=r}^{n_0}\) and \((z_q)_{q=r}^{n_0}\) s.t. \(y_{n_0}=y\), \(z_{n_0}=x'\) and

(if \(k_{q-1}=k_q\) set \(y_q=y_{q-1}\) and similarly if \(m_{q-1}=m_q\)). In addition if \(i_r=j_r\) so that \(k_r=m_r\) we may assume

On the other hand if \(j_r=i_r+1\), then

and so (by Lemma 1.2) we may choose \(z_r\) in the above s.t. \((k_{n_0},y_{n_0})\overset{\varvec{a}}{\rightarrow }(m_r,z_r)\). Hence we have

Recalling from (3.11), and (3.12) that \(r\ge K_{n_0}\ge K^1_{n_0}\), we have from the definition of \(K^1_{n_0}\) that \(\omega \notin C_r(n_0)\) and so for all \(\ell \in [r,n_0]\cap {\mathbb {Z}}_+\),

which by Lemma 3.3 (and \(2^{n_0-\ell }\le i2^{n_0-\ell }\)) implies

As \(r\ge K_{n_0}\ge K^2_{n_0}\), we also have \(S^{\scriptscriptstyle (1)}\le 2^{r\beta +n_0}\le 2^{\ell \beta +n_0}\) for all \(\ell \ge r\) and so if \(i>\lceil 2^{\ell (1+\beta )}\rceil \), then

and so \({\mathcal {T}}_{i2^{n_0-\ell }+1}=\varnothing \). This implies (3.16) holds vacuously and we may conclude

By \(y_{n_0}=y\), \(z_{n_0}=x'\) and the triangle inequality, we have

Note that for \(q\in (r,n_0]\cap {\mathbb {N}}\), \(m_q={{\bar{j}}}_q2^{n_0-q}\) for some \({{\bar{j}}}_q\in {\mathbb {Z}}_+\) and also \(m_{q-1}=({{\bar{j}}}_q-j_q)2^{n_0-q}\), where \(j_q=0\) or 1. Therefore (3.13) implies

and so by (1.10) there is a \(z\in {\mathcal {T}}_{{{\bar{j}}}_q 2^{n_0-q}+1}\) s.t.

Therefore (3.17) implies

(note here that if \({{\bar{j}}}_q=0\), then \(j_q=0\) and so \(z_q=z_{q-1}\) and the above inequality is trivial). Similar reasoning shows

To handle the last term in (3.18), first observe that if \(j_r-i_r=1\), then \(k_r=(j_r-1)2^{n_0-r}\) and so (by (3.15)) \((k_r,y_r)\overset{\varvec{a}}{\rightarrow }(m_r,z_r)\overset{\varvec{a}}{\rightarrow }(m+1,x)\) implies

for some \(z\in {\mathcal {T}}_{j_r2^{n_0-r}+1}\) (by (1.10)). It follows from (3.17) with \(\ell =r\) that

Use (3.19)–(3.20) and (3.14) in (3.18) and so conclude that

which gives (3.10), as required. \(\square \)

Proof of Theorem \(1^\prime \). Let \(n\in [1,\infty )\) and take \(\delta _n\) as in Proposition 3.1, so that (2.16) and \(\delta _n\in (0,1]\) are immediate from that proposition. Assume \(s_1,s_2,y_1,y_2\) are as in (2.14). If \(s_1=s_2\) then \(y_1=y_2\) by (AR)(i), and the result is trivial. Otherwise \(s_1<s_2\), and by (1.10) we may choose \(x'\) s.t.

We also have \(|s_2-n^{-1}-s_1|\le |s_2-s_1|\le \delta _n\). Proposition 3.1 and (2.3) imply

the last since \(|s_2-s_1|\ge 1/n\) and \(\alpha <1/2\). This proves (2.15) where \(C_{1'}=\sqrt{d} L+C_{3.1}\).
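The last step can be spelled out as follows. The displays are not reproduced above, so this sketch assumes that (2.3) bounds the final rescaled step by \(\sqrt{d}Ln^{-1/2}\) and that Proposition 3.1 bounds the remaining increment by \(C_{3.1}|s_2-n^{-1}-s_1|^{\alpha }\). Since \(n\ge 1\) and \(\alpha <1/2\) give \(n^{-1/2}\le n^{-\alpha }\), and \(|s_2-s_1|\ge 1/n\) gives \(n^{-\alpha }\le |s_2-s_1|^{\alpha }\),

$$\begin{aligned} |y_2-y_1|\le |y_2-x'|+|x'-y_1|\le \sqrt{d}Ln^{-1/2}+C_{3.1}|s_2-s_1|^{\alpha }\le \big (\sqrt{d}L+C_{3.1}\big )|s_2-s_1|^{\alpha }. \end{aligned}$$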

Now suppose instead that \(s_1,s_2\in {\mathbb {R}}_+\) (otherwise as above). The validity of (2.12) now follows by an elementary argument using (2.3) and the fact that for \(0\le s_1<s_2\), \((s_1,y_1)\overset{\varvec{a,n}}{\rightarrow }(s_2,y_2)\) iff \((\lfloor s_1n \rfloor /n,y_1)\overset{\varvec{a,n}}{\rightarrow }(\lfloor s_2n \rfloor /n,y_2)\). \(\square \)

Consider next the proof of Theorem 1 and assume the hypotheses of that theorem. We first use Conditions 5 (local jumps) and 2 (total mass) to handle the small increments of \(w^{\scriptscriptstyle {(n)}}(t,x)\) near t.

Lemma 3.4

There is a \(C_{3.4}\) such that for any \(\alpha \in (0,1/2)\), \(t^*\ge 1\) and \(n\ge 1\),

Proof

If \(s=j/n\in {\mathbb {Z}}_+/n\) is fixed, then by scaling,

Now use Conditions 5 and 2, and (1.23) to see the above is at most

Finally sum over \(s\in ({\mathbb {Z}}_+/n)\cap [0,t^*]\) to obtain the desired upper bound. \(\square \)

Proof of Theorem 1

Fix \(n\ge 1\) and \(q\in (0,1]\) as in the Theorem and define

By Lemma 3.4 and (1.31) and the fact that \(n^q\le n\),

where the definition of q is used in the last line. To avoid confusion we denote the \(\delta _n\) arising in Proposition 3.1 by \(\delta _n^{3.1}\) and then define

Then for \(\rho \in [0,1)\),

the last by (3.21) and Proposition 3.1. This proves (2.13).

In proving that (2.11) implies (2.12), to reduce subscripts we assume

This implies \(\delta _n>0\) and so \(\omega \in \varOmega _n\), which in turn implies \(S^{\scriptscriptstyle {(n)}}\le n^q\) and so (as \({\mathcal {T}}_t^{\scriptscriptstyle {(n)}}\) is non-empty),

We must show that, for an appropriate \(C_{1}\),

If \(t\le 2/n\) this follows easily from \(\omega \in \varOmega _n\) and the triangle inequality, so assume without loss of generality that \(t>2/n\). Write \([u]_n=\lfloor nu \rfloor /n\), and set \(t_n=[t-n^{-1}]_n, t'_n=t_n+n^{-1}, s_n=[s]_n\wedge t_n\le t'_n\), all in \({\mathbb {Z}}_+/n\). Therefore

(we can assume \(s_n=[s]_n\) in the above derivation, since otherwise \(s_n=t_n\) and the desired upper bound in (3.24) is trivial), and

Since \(s_n\le s\) and \(y\in {\mathcal {T}}^{\scriptscriptstyle {(n)}}_s\) by Lemma 1.2 there exists \(y_n\) s.t.

We also have \(s_n\le t_n<t_n+n^{-1}\le t\le n^q\) (by (3.22)) and \(x\in {\mathcal {T}}^{\scriptscriptstyle {(n)}}_t\), so by Lemma 1.2 there are \(x_n,x_n'\) s.t.

Therefore by \(\omega \in \varOmega _n\) and (3.25) we may conclude,

the last by (3.24). This proves (3.23) and the proof is complete. \(\square \)

4 Convergence of the range

In this section we prove Theorem 2. Recall that \({\mathbb {N}}_o^s(\cdot )={\mathbb {N}}_o(\cdot \,|S>s)\).

Lemma 4.1

Proof

First recall from (1.29) that \({\mathbb {N}}_o(S>1)=2/\gamma \), and by Theorem II.7.2(iii) of [32]

Let \(v^{(\lambda )}_t=\sqrt{\frac{2\lambda }{\gamma }}\frac{[{\mathrm {e}}^{t \sqrt{2\gamma \lambda }}-1]}{[{\mathrm {e}}^{t \sqrt{2\gamma \lambda }}+1]}\) be the unique solution of

Then the Markov property under \({\mathbb {N}}_o\) and exponential duality (see Theorem II.5.11(c) of [32]), together with (4.2) gives

Since \(v_1^{(\lambda )}\sqrt{\gamma /(2 \lambda )} \rightarrow 1\) as \(\lambda \rightarrow \infty \),

A Tauberian theorem (e.g. Theorems 2 and 3 in Section XIII.5 of [15]) now gives

Now

If \(a\le \gamma \) and so \(\lambda :=1/a\ge \gamma ^{-1}\), then using \(\mathbb {1}_{Y\le a}\le {\mathrm {e}}^{\lambda (a-Y)}\) we have

For \(a>\gamma \) the bound is trivial. \(\square \)
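Two computations in this proof can be checked by hand; both are sketches, since the corresponding displays are not reproduced above. First, put \(a_\lambda =\sqrt{2\lambda /\gamma }\) and \(b_\lambda =\sqrt{\gamma \lambda /2}\), so that \(a_\lambda b_\lambda =\lambda \) and \(b_\lambda /a_\lambda =\gamma /2\). The identity \(({\mathrm {e}}^{x}-1)/({\mathrm {e}}^{x}+1)=\tanh (x/2)\) gives \(v^{(\lambda )}_t=a_\lambda \tanh (b_\lambda t)\), and hence

$$\begin{aligned} \frac{{\mathrm {d}}v^{(\lambda )}_t}{{\mathrm {d}}t}=a_\lambda b_\lambda \big (1-\tanh ^2(b_\lambda t)\big )=\lambda -\frac{\gamma }{2}\big (v^{(\lambda )}_t\big )^2,\qquad v^{(\lambda )}_0=0, \end{aligned}$$

which is presumably the equation referred to in the proof. In particular \(v_1^{(\lambda )}\sqrt{\gamma /(2\lambda )}=\tanh (b_\lambda )\rightarrow 1\) as \(\lambda \rightarrow \infty \). Second, for a non-negative random variable Y and \(\lambda =1/a\), integrating the pointwise bound \(\mathbb {1}_{Y\le a}\le {\mathrm {e}}^{\lambda (a-Y)}\) against any measure Q (a placeholder for the measure used in the proof) gives

$$\begin{aligned} Q(Y\le a)\le {\mathrm {e}}^{\lambda a}\int {\mathrm {e}}^{-\lambda Y}\,{\mathrm {d}}Q={\mathrm {e}}\int {\mathrm {e}}^{-Y/a}\,{\mathrm {d}}Q. \end{aligned}$$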

Lemma 4.2

\(0\in R\quad {\mathbb {N}}_o\text {-a.e.}\)

Proof

This is immediate from the description of the integral of SBM in terms of the Brownian snake, and the continuity of the snake under \({\mathbb {N}}_o\). See Proposition 5 and Section 5 of Chapter IV of [29]. \(\square \)

Recall that \(\mathcal {K}\) is the Polish space of compact subsets of \({\mathbb {R}}^d\), equipped with the Hausdorff metric \(d_0\) and \(\varDelta _1\) is as in (1.2).

Lemma 4.3

If \(\nu _n \rightarrow \nu \) in \(M_F({\mathbb {R}}^d)\), and \({\mathrm {supp}}(\nu )\in \mathcal {K}\), then

Proof

Fix \(\varepsilon >0\). We must show that \({\mathrm {supp}}(\nu )\subset {\mathrm {supp}}(\nu _n)^\varepsilon \) for all n sufficiently large. Let \(x\in {\mathrm {supp}}(\nu )\). Then \(\liminf _{n \rightarrow \infty }\nu _n(B(x,\varepsilon /2))\ge \nu (B(x,\varepsilon /2))>0\). Therefore there exists n(x) such that for every \(n\ge n(x)\),

and therefore \(x \in {\mathrm {supp}}(\nu _n)^{\varepsilon /2}\). As \({\mathrm {supp}}(\nu )\) is compact there exist \(x_1,\dots , x_k \in {\mathrm {supp}}(\nu )\) such that \({\mathrm {supp}}(\nu )\subset \cup _{i=1}^k B(x_i,\varepsilon /2)\). Thus if \(n\ge n_0=\max _{i\le k}n(x_i)\) then

as required. \(\square \)
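The covering step at the end of this proof can be written out as follows. This is a sketch of the omitted display, where \(A^{\varepsilon }\) denotes the \(\varepsilon \)-neighbourhood of a set A, as the notation in the proof suggests. For \(n\ge n_0\) each \(x_i\) lies in \({\mathrm {supp}}(\nu _n)^{\varepsilon /2}\), so

$$\begin{aligned} {\mathrm {supp}}(\nu )\subset \bigcup _{i=1}^k B(x_i,\varepsilon /2)\subset \big ({\mathrm {supp}}(\nu _n)^{\varepsilon /2}\big )^{\varepsilon /2}\subset {\mathrm {supp}}(\nu _n)^{\varepsilon }. \end{aligned}$$

Equivalently, for \(x\in {\mathrm {supp}}(\nu )\) one picks i with \(|x-x_i|<\varepsilon /2\) and a point \(z\in {\mathrm {supp}}(\nu _n)\) within \(\varepsilon /2\) of \(x_i\), and the triangle inequality gives \(|x-z|\le \varepsilon \).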

In the rest of this section we will assume Conditions 1–7, let \(\alpha ,\beta \) satisfy (2.9) and (2.10), and assume \((\gamma , \sigma _0^2)\) are as in Condition 6 (measure convergence). The parameter q is as in Theorem 1. We start with some simple consequences of Theorem 1. Recall from (1.37) that \(R^{\scriptscriptstyle {(n)}}\) is a.s. finite. For \(t\ge 0\), define

Lemma 4.4

- (a)

There is a \(c_{4.4}>0\) such that on \(\{\delta _n\ge n^{-1}\}\) we have

$$\begin{aligned} r_0(R^{\scriptscriptstyle {(n)}})\le c_{4.4}(S^{\scriptscriptstyle {(n)}}\delta _n^{\alpha -1}+1). \end{aligned}\qquad (4.3)$$

- (b)

\(\eta _{4.4}(u):=\sup _{n\ge 1}\mu _n(r_0(R^{\scriptscriptstyle {(n)}})\ge u)\rightarrow 0\text { as }u\rightarrow \infty \).

- (c)

For any \(\tau _0,\varepsilon ,s>0\) there is a \(\tau =\tau (\tau _0,\varepsilon ,s)>0\) and \(n_0(\tau _0,\varepsilon ,s)\ge 1\) so that

$$\begin{aligned} {\mathbb {P}}(R_\tau ^{\scriptscriptstyle {(n)}}\not \subset B(0,\tau _0)|S^{\scriptscriptstyle {(n)}}>s)\le \varepsilon \quad \forall n\ge n_0. \end{aligned}$$

Proof

(a) Assume \(\delta _n(\omega )\ge 1/n\). Assume also \(x\in {\mathcal {T}}_s^{\scriptscriptstyle {(n)}}\) for some \(s> 0\). Choose \(M\in {\mathbb {N}}\) so that \((M-1)\delta _n<s\le M\delta _n\), and set \(s_i=i\delta _n\) for \(0\le i<M\) and \(s_M=s\). Clearly \(s<S^{\scriptscriptstyle {(n)}}\) (since \(\mathcal {T}^{\scriptscriptstyle {(n)}}_s\) is non-empty) and so

By Lemma 1.2 there are \(y_i\in {\mathcal {T}}_{s_i}\) for \(0\le i\le M\) s.t. \(y_0=o\), \(y_M=x\) and \((s_{i-1},y_{i-1})\overset{\varvec{a,n}}{\rightarrow }(s_i,y_i)\) for \(1\le i\le M\). Theorem 1 implies for all \(1\le i\le M\), \(|y_i-y_{i-1}|\le 2C_{1}\delta _n^{\alpha }\), and so by the triangle inequality, (4.4), and \(\delta _n\le 1\),

This gives (a) with \(c_{4.4}=2C_{1}\).
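The chaining estimate behind (a) can be sketched as follows, assuming that (4.4) is the count bound \(M<s\delta _n^{-1}+1<S^{\scriptscriptstyle {(n)}}\delta _n^{-1}+1\) implied by the choice of M and \(s<S^{\scriptscriptstyle {(n)}}\) (this reading of (4.4) is an inference from the surrounding text). Since \(y_0=o\) and \(y_M=x\),

$$\begin{aligned} |x|\le \sum _{i=1}^M|y_i-y_{i-1}|\le 2C_{1}\delta _n^{\alpha }M\le 2C_{1}\delta _n^{\alpha }(S^{\scriptscriptstyle {(n)}}\delta _n^{-1}+1)\le 2C_{1}(S^{\scriptscriptstyle {(n)}}\delta _n^{\alpha -1}+1), \end{aligned}$$

where the last step uses \(\delta _n^{\alpha }\le 1\), which holds because \(\delta _n\le 1\) and \(\alpha >0\). Taking the supremum over \(x\in R^{\scriptscriptstyle {(n)}}\) then gives a bound of the form (4.3).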

(b) Use (a) to see that for \(u,n\ge 1\) and \(u> 2c_{4.4}\),

where in the last line we have used Theorem 1 and the lower bound on u. Now (1.31) implies that

Using this in the bound (4.5) we see that for any \(\varepsilon >0\) there is an \(n_0\ge 1\) such that

But for \(n \in [1,n_0)\cap {\mathbb {N}}\) we have

for \(u>u_0(\varepsilon )\). The result follows from this last inequality and (4.6).

(c) Fix \(\tau _0,\varepsilon ,s>0\) and then choose \(n_1(\tau _0)\ge 1\) and \(\tau _1(\tau _0)>0\) so that

So for \(n>n_1\) and \( \tau \in (0,\tau _1)\), by Theorem 1 (and the fact that \(y\in {\mathcal {T}}^{\scriptscriptstyle {(n)}}_s\) for some \(s\le \tau \) implies \((0,o)\overset{\varvec{a,n}}{\rightarrow }(s,y)\)) we have

Therefore using (1.31) and (2.13) we see that for \(n>n_1\) and \(\tau \in (0,\tau _1)\),

where the last inequality holds for \(\tau \) sufficiently small and n sufficiently large, depending only on \(\varepsilon \) and s. The result follows. \(\square \)

Lemma 4.5

For every \(s>0\),

Proof

Fix \(s>0\) and \(\varepsilon >0\). Choose \(t_\varepsilon >\max \{1/\varepsilon ,s\} \) and let \(\varphi \in C_b(M_F({\mathbb {R}}^d))\). Then \(\big |{\mathbb {P}}_n^{s}[\varphi (\bar{X}^{\scriptscriptstyle {(n)}}_\infty )]-{\mathbb {N}}_o^{s}[\varphi (\bar{X}_\infty )]\big |\) is at most

For n sufficiently large (depending on \(s,t_\varepsilon ,\varepsilon \)) the term (4.7) is less than \(\varepsilon \) by Condition 6 (measure convergence). The quantity (4.8) is equal to

This is less than \(\varepsilon \) for n sufficiently large depending on \(s,t_\varepsilon ,\varepsilon \) by Condition 6 and Condition 1 (survival probability).

Similarly, (4.9) is equal to

which is less than or equal to \(c \varepsilon \) for n sufficiently large (where c depends on s and \(\Vert \varphi \Vert _\infty \)) due to Condition 1 and (1.29). \(\square \)

Recalling Condition 7 (low density inequality), we set \(K=1024\) and for \(i\in {\mathbb {Z}}_+\), \(\ell \in {\mathbb {N}}\) and \(n\in [1,\infty ),\) define

and \({\hat{\varOmega }}_\ell ^n=\cup _{i=0}^{2^{2\ell }-1}\varOmega ^n_{i,\ell }\). The following result together with the modulus of continuity will ensure that, with high probability, points in the discrete range are near areas of significant integrated mass.

Lemma 4.6

There is a \(c_{4.6}>0\) and for any \(n\ge 1\) there is an \(M_n\in {\mathbb {N}}\) so that \(M_n\uparrow \infty \) as \(n\rightarrow \infty \), and if \(\varOmega _m^n=\cup _{\ell =m}^{M_n}{\hat{\varOmega }}_\ell ^n\) for \(m \in {\mathbb {N}}\), then

Proof

Let \(\ell \in {\mathbb {N}}\), \(i\in \{0,1,\dots ,2^{2\ell }-1\}\) and assume

A simple change of variables (\(u=ns\)) in the time integral in the definition of \(\varOmega ^n_{i,\ell }\) shows that

So using Condition 7 with \(\varDelta =n 2^{-\ell }\ge 4\) (by (4.10)) and \(t=ni2^{-\ell }\) yields

where in the last inequality we used (1.21), which applies because \(n2^{-\ell }\ge 1\) by (4.10).

If \(I_{n,\ell }=[1,1+\frac{2^{\ell +1}}{n}]\cup [2-\frac{2^{\ell +1}}{n},2]\), then

where Condition 2 (total mass) and (1.22) are used in the last inequality.

Use the upper bound from (4.11), (1.21), and then (1.22) to conclude that

Markov’s inequality and (4.12) imply that

By Condition 6 (measure convergence) and the upper bound in (4.1),

Therefore there is an increasing sequence \(\{n(\ell ):\ell \in {\mathbb {N}}\}\) such that \(n(\ell )\ge K^{3\ell /2}\) and

Use (4.14) and (4.15) in (4.13) and sum over \(i=0,\dots , 2^{2\ell }-1\) to deduce that

For \(n\ge 1\), define \(M_n=\max \{\ell :n(\ell )\le n\}\uparrow \infty \) as \(n\rightarrow \infty \) (\(\max \varnothing =0\)). Then by (4.16) for any \(m\in {\mathbb {N}}\),

because \(\ell \le M_n\) implies that \(n(\ell )\le n\). \(\square \)
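The definition of \(M_n\) is a generalized inverse of the sequence \(n(\ell )\). A minimal sketch, assuming the hypothetical choice \(n(\ell )=K^{3\ell /2}=2^{15\ell }\), the smallest value allowed by the constraint \(n(\ell )\ge K^{3\ell /2}\) (the sequence used in the proof must also satisfy (4.15), so it may be larger; the function names `n_of` and `M` are illustrative):

```python
K = 1024  # as fixed before Lemma 4.6

def n_of(ell):
    """Hypothetical n(ell): the smallest value allowed by n(ell) >= K**(3*ell/2).
    Since K = 2**10, K**(3*ell/2) = 2**(15*ell) exactly."""
    return 2 ** (15 * ell)

def M(n):
    """M_n = max{ell >= 1 : n(ell) <= n}, with the convention max(empty set) = 0.
    Relies on n_of being increasing in ell."""
    ell = 0
    while n_of(ell + 1) <= n:
        ell += 1
    return ell

print([M(n) for n in (1, 2 ** 15, 2 ** 30)])
```

With this choice \(M_n\) grows like \(\log _2(n)/15\), so \(M_n\uparrow \infty \) but very slowly; any \(\ell \le M_n\) automatically satisfies \(n(\ell )\le n\), which is the only property used in the last step of the proof.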

Proof of Theorem 2. Recall (1.5). Let

where (1.11) is used to show that, using the usual countable product metric,

For \(s>0\), the joint probability law of \((\varvec{X}^{\scriptscriptstyle {(n)}},\tilde{e}^{\scriptscriptstyle {(n)}})\) (on \(\mathcal {D}\times \tilde{\mathcal {D}}\)) conditional on \(S^{\scriptscriptstyle {(n)}}>s\) is written as

Although \(\mu ^s_n={\mathbb {P}}^s_n\), we will soon be trading in our familiar \({\mathbb {P}}\) to apply Skorokhod’s Theorem, and so, to avoid confusion, it will be useful to work with \(\mu ^s_n\) on our original probability space.

Fix \(s>0\). It suffices to show weak convergence along any sequence \(n_k\rightarrow \infty \) and to ease the notation we will simply assume \(n\in {\mathbb {N}}\) (the proof being the same in the general case). By Lemma 4.5 and the Skorokhod Representation Theorem we may work on some \((\varOmega ,\mathcal {F},{\mathbb {P}}^{\scriptscriptstyle \{s\}})\) on which there are random measures \((\bar{X}^{\scriptscriptstyle {(n)}}_\infty )_{n\in {\mathbb {N}}}\) and \(\bar{X}^{}_\infty \) such that \(\bar{X}^{\scriptscriptstyle {(n)}}_\infty \) has law \(\mu _n^s(\bar{X}^{\scriptscriptstyle {(n)}}_\infty \in \cdot )\) for each n, \(\bar{X}^{}_\infty \) has law \({\mathbb {N}}_o^s(\bar{X}^{}_\infty \in \cdot )\), and

For now we may assume \(\mathcal {F}=\sigma ((\bar{X}^{\scriptscriptstyle {(n)}}_\infty )_{n\in {\mathbb {N}}}, \bar{X}^{}_\infty )\). We claim that we may assume that for all \(n\in {\mathbb {N}}\) there are processes \((\varvec{X}^{\scriptscriptstyle {(n)}},{\tilde{e}}^{\scriptscriptstyle {(n)}})\in \mathcal {D}\times \tilde{\mathcal {D}}\) defined on \((\varOmega ,\mathcal {F},{\mathbb {P}}^{\scriptscriptstyle \{s\}})\) such that

To see this, work on \({{\bar{\varOmega }}}=\varOmega \times \mathcal {D}\times \tilde{\mathcal {D}}\) with the product \(\sigma \)-field \(\bar{\mathcal {F}}\), and define \({{\bar{{\mathbb {P}}}}}^{\{s\}}\) on \(\bar{\mathcal {F}}\) by

where the above conditional probabilities are taken to be regular conditional probabilities. In short, given \((\bar{X}^{}_\infty ,(\bar{X}^{\scriptscriptstyle {(n)}}_\infty )_{n\in {\mathbb {N}}})\), we take \((\varvec{X}^{\scriptscriptstyle {(n)}},\tilde{e}^{\scriptscriptstyle {(n)}})_{n\in {\mathbb {N}}}\) to be conditionally independent and with laws \(\mu _n^s((\varvec{X}^{\scriptscriptstyle {(n)}},{\tilde{e}}^{\scriptscriptstyle {(n)}})\in \cdot |\bar{X}^{\scriptscriptstyle {(n)}}_\infty )\). Clearly the resulting enlarged space satisfies the above claim. We now relabel the enlarged space as \((\varOmega ,\mathcal {F},{\mathbb {P}}^{\scriptscriptstyle \{s\}})\) to ease the notation.

Note that for each fixed n, \(\varvec{{\mathcal {T}}}^{\scriptscriptstyle {(n)}}=({\mathcal {T}}^{\scriptscriptstyle {(n)}}_t)_{t\ge 0}:=(\text {supp}(X^{\scriptscriptstyle {(n)}}_t))_{t\ge 0}\) is a copy of our rescaled set-valued process so we can define \(S^{\scriptscriptstyle {(n)}}, R^{\scriptscriptstyle {(n)}}\) and \(\overset{\varvec{a,n}}{\rightarrow }\) just as before using \({\tilde{e}}^{\scriptscriptstyle {(n)}}\) and \(\varvec{{\mathcal {T}}}^{\scriptscriptstyle {(n)}}\). For the latter, set \(e^{\scriptscriptstyle {(n)}}_{s,t}(y,x)={\tilde{e}}^{\scriptscriptstyle {(n)}}_t(y,x)(s)\) and write \((s,y)\overset{\varvec{a,n}}{\rightarrow }(t,x)\) iff \(s\le t\) and \(e^{\scriptscriptstyle {(n)}}_{s,t}(y,x)=1\) (recall (1.5) and (1.17)). In particular \(\varOmega ^n_{i,\ell }\) and \(\varOmega ^n_m\), as in Lemma 4.6, can be defined as subsets of \(\varOmega \), and that result and (1.31) imply

To reinterpret Theorem 1 we introduce

and note that the law of \(\varDelta ^{\scriptscriptstyle {(n)}}(\rho )\) is the same under \({\mathbb {P}}^{\scriptscriptstyle \{s\}}\) and \(\mu _n^s\). Therefore

On the original space \(\varDelta ^{\scriptscriptstyle {(n)}}(\rho )>C_{1}(\rho ^\alpha +n^{-\alpha })\) implies \(\delta _n<\rho \) and so by Theorem 1 and (1.31) (as used in (4.18))

Next note that (1.31) implies for \(m\in {\mathbb {N}}\),

Finally use (1.31) and recall Lemma 4.4(b) to see that for \(m\in {\mathbb {N}}\),

We have \(R^{\scriptscriptstyle {(n)}}=\mathcal {R}(\varvec{X}^{\scriptscriptstyle {(n)}})={\mathrm {supp}}(\bar{X}^{\scriptscriptstyle {(n)}}_\infty )\), and \(R={\mathrm {supp}}(\bar{X}^{}_\infty )\) defines the range of a SBM. It suffices to show

Lemma 4.3 and (4.17) show that \(\varDelta _1(R,R^{\scriptscriptstyle {(n)}})\overset{a.s.}{\longrightarrow }0\), and therefore if we set \(R^\delta :=\{x:d(x,R)\le \delta \}\) it suffices to fix \(\tau _0>0\) and prove

Fix \(\varepsilon >0\) and let \(\tau (\tau _0,\varepsilon ,s)\), \(n_0(\tau _0,\varepsilon ,s)\) be as in Lemma 4.4(c), and choose \(0<\tau <\tau (\tau _0,\varepsilon ,s)\). Recalling \(R^{\scriptscriptstyle {(n)}}_\tau =\cup _{s\le \tau }{\mathcal {T}}_s^{\scriptscriptstyle {(n)}}\), we see from Lemmas 4.2 and 4.4(c) that

For \(m\in {\mathbb {N}}\), define the finite grid of points

where \(K_0\in {\mathbb {N}}\) is chosen so that

Define the finite collection

Fix \(m\in {\mathbb {N}}\) sufficiently large so that

If \((W_u,u\ge 0)\) denotes a d-dimensional Brownian motion with variance parameter \( \sigma _0^2\) starting at o under a probability measure \({\mathbb {P}}_o\), then for any open ball B,

where the second line is standard (e.g. Theorem II.7.2(iii) of [32]). Therefore by (4.17), the above equality, and standard properties of the weak topology we have (recall \(K=1024\))

So there is an \(n_1=n_1(m,\varepsilon ,\tau _0,s)\) so that for \(n\ge n_1\) we have

and, if \(M_n\) is as in Lemma 4.6, then for \(n\ge n_1\)

Assume \(n\ge n_1\), and then choose \(\omega \) so that (\(\varDelta ^{\scriptscriptstyle (n)}\) is as in (4.19))

Let \(x\in R^{\scriptscriptstyle {(n)}}{\setminus } R_\tau ^{\scriptscriptstyle {(n)}}\), and then choose \(s>\tau \) so that \(x\in {\mathcal {T}}_s^{\scriptscriptstyle {(n)}}\). By (4.27), (iii) in (4.34), and \(s<S^{\scriptscriptstyle {(n)}}\) (since \({\mathcal {T}}_s^{\scriptscriptstyle {(n)}}\) is non-empty), we have

and so we can choose \(i\in \{0,\dots ,2^{2m-1}\}\) so that \(s\in [(i+1)2^{-m},(i+2)2^{-m})\). Since \(|x|\le r_0(R^{\scriptscriptstyle {(n)}})<2^{m-1}\) (by (iv) of (4.34)), (4.25) shows there is a \(q\in G_m\) so that

the last by (4.26). By (1.10) \(\exists \, x_0\in {\mathcal {T}}^{\scriptscriptstyle {(n)}}_{i2^{-m}}\) such that \((i2^{-m},x_0)\overset{\varvec{a,n}}{\rightarrow }(s,x)\). Assume \(u\in [(i+1)2^{-m},(i+2)2^{-m}]\) and \((i2^{-m},x_0)\overset{\varvec{a,n}}{\rightarrow }(u,y)\) for some \(y\in {\mathcal {T}}^{\scriptscriptstyle {(n)}}_u\). Then use (4.36), (4.19), and (ii) in (4.33) to see that

where we have used (4.26) and (4.31) in the last line. This implies that

By (1.10) and \((i2^{-m},x_0)\overset{\varvec{a,n}}{\rightarrow }(u,y)\), there is an \(x'\in {\mathcal {T}}_{(i+1)2^{-m}}\) s.t.

The fact that \(\omega \notin \varOmega _m^n\) (by (i) of (4.33)), \(m\le M_n\) (by (4.30)), \(i\in \{0,\dots ,2^{2m-1}\}\), \(x_0\in {\mathcal {T}}_{i2^{-m}}^{\scriptscriptstyle {(n)}}\), and \((i2^{-m},x_0)\overset{\varvec{a,n}}{\rightarrow }((i+1)2^{-m},x')\) shows that the right-hand side of (4.37) is at least \(K^{-m}\). This inequality and (4.35) imply

Use this and (4.36) to conclude \(\bar{X}^{}_\infty (B(x,\tau _0))>K^{-m}/2\) and therefore that \(x\in R^{\tau _0}\). We have shown that

Now use (4.18), (4.20), (4.21), (4.22), and (4.29) to see that the probability \(P(m,n)\) that one of the 5 conditions listed in (4.33)-(4.35) fails is at most

Our lower bounds on m and n (in particular use (4.28) and (4.32)) show that the above is at most \(3\varepsilon \), and so we have shown

Recalling (4.38) we conclude that

This, together with (4.24), proves (4.23) and so completes the proof. \(\square \)

5 The extrinsic one-arm probability

In this section we prove Theorem 3. Recall that \(r_0(G)=\sup \{|x|: x\in G\}\).

Lemma 5.1

The map \(r_0: \mathcal {K} \rightarrow [0,\infty )\) is continuous.

Proof

Let \(K_n \rightarrow K\), and let \(\varepsilon >0\). Choose \(n_0\) sufficiently large so that \(K_n \subset K^\varepsilon \) and \(K\subset K_n^\varepsilon \) for all \(n\ge n_0\). Then for such n, \(r_0(K_n)\le r_0(K)+\varepsilon \) and \(r_0(K)\le r_0(K_n)+\varepsilon \). \(\square \)
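The argument in fact shows that \(r_0\) is 1-Lipschitz for the Hausdorff metric, that is \(|r_0(K_1)-r_0(K_2)|\le d_0(K_1,K_2)\). A minimal numerical sketch on finite subsets of the plane, assuming \(|\cdot |\) is the Euclidean norm (the point sets and the helper names `hausdorff` and `r0` are illustrative choices):

```python
import math

def dist(p, q):
    """Euclidean distance between two points of the plane."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def hausdorff(A, B):
    """Hausdorff distance between two finite, non-empty point sets."""
    a_to_b = max(min(dist(a, b) for b in B) for a in A)
    b_to_a = max(min(dist(a, b) for a in A) for b in B)
    return max(a_to_b, b_to_a)

def r0(G):
    """r_0(G) = sup{|x| : x in G} for a finite set G."""
    return max(math.hypot(x[0], x[1]) for x in G)

# Illustrative sets: K_n is a small perturbation of K.
K = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0)]
K_n = [(0.1, 0.0), (1.0, 0.05), (0.0, 1.9)]

gap = abs(r0(K) - r0(K_n))
d = hausdorff(K, K_n)
print(gap, d, gap <= d + 1e-12)
```

The small tolerance in the final comparison only guards against floating-point rounding; the inequality itself is exact.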

Proof of Theorem 3