Abstract

In recent years, deep learning has become one of the most popular techniques in the computer vision community. As a data-driven technique, a deep model requires enormous amounts of accurately labelled training data, which are often inaccessible in many real-world applications. A data-space solution is Data Augmentation (DA), which can artificially generate new images from original samples. Image augmentation strategies can vary by dataset, as different data types might require different augmentations to facilitate model training. However, the design of DA policies has largely been decided by human experts with domain knowledge, a process considered highly subjective and error-prone. To mitigate this problem, a novel direction is to automatically learn the image augmentation policies from the given dataset using Automated Data Augmentation (AutoDA) techniques. The goal of AutoDA models is to find the optimal DA policies that maximize model performance gains. This survey discusses the underlying reasons for the emergence of AutoDA technology from the perspective of image classification. We identify three key components of a standard AutoDA model: a search space, a search algorithm and an evaluation function. Based on their architecture, we provide a systematic taxonomy of existing image AutoDA approaches. This paper presents the major works in the AutoDA field, discusses their pros and cons, and proposes several potential directions for future improvements.

1 Introduction

Driven by recent advances in neural network architectures, deep learning has made great progress in Computer Vision (CV) [1,2,3,4]. In particular, deep learning models have been successfully applied to image classification tasks in diverse areas from medical imaging [5, 6] to agriculture [7, 8]. However, to achieve enhanced performance, deep learning, as a data-driven technology, places significant demands on both the quantity and quality of data for model training and testing. Effectively training a supervised model relies heavily on enormous amounts of annotated data, which are often challenging to obtain in most practical applications [9].

To address the issue of data insufficiency, Data Augmentation (DA) is widely utilized. In general, data augmentation refers to the process of artificially generating data samples to increase the size of training data [10]. In the imaging domain, this is usually done by applying image Transformation Functions (TFs), such as translation, rotation or flipping. For computer vision tasks, image DA has been utilised in nearly all supervised neural network architectures to increase data volume and variety, including traditional data-driven models [11,12,13,14], and few/zero-shot learning [15]. Besides supervised approaches, DA techniques are also extensively applied in the field of unsupervised learning. For example, contrastive self-supervised learning relies on image transformations to incorporate data invariance in the representation space across various augmentations [16].

In the context of image augmentation, a DA policy refers to a set of image operations, which are used to transform the image data. When applying image DA, choosing a carefully designed augmentation scheme (i.e. DA policy) is necessary to improve the effectiveness of DA and hence the associated network training [1, 17]. For instance, data augmented by random image operations can be redundant. But overly aggressive TFs might corrupt the original semantics, and introduce potential biases into the training dataset [13]. Therefore, different datasets or domains may require different types of augmentations. Specifically, when standard supervised approaches are applied, classification tasks with limited data may require label-preserving augmentations to provide direct semantic supervision. However, for few/zero-shot learning models, more emphasis is placed on increasing data diversity in order to generate an enriched training set [18], which might promote more aggressive augmentation TFs.

Despite the ubiquity and importance of DA techniques, there is little systematic strategy for designing a DA policy for a given dataset. Unlike other machine learning topics that have been thoroughly explored, less attention has been paid to finding effective DA policies that benefit a particular dataset and hence improve model accuracy. Instead, DA policies are often decided intuitively based on past experience or limited trials [19]. Decisions on augmentation strategies are still made by human experts, based on prior knowledge. For example, the standard augmentation policy on ImageNet data was proposed in 2012 [1] and is still widely used in most contemporary networks without much modification [20]. Furthermore, criteria for selecting good augmentation methods on different datasets may vary greatly due to the nature of the given tasks. The traditional trial-and-error approach based on training loss or accuracy can give rise to extensive, redundant data collections, wasting computational effort and resources.

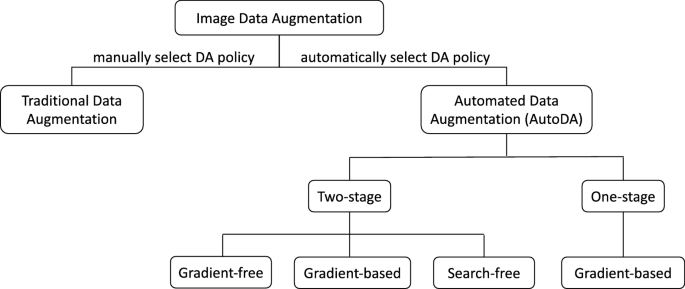

Motivated by progress in Automated Machine Learning (AutoML), there has been rising interest in automatically searching for effective augmentation policies from training data [20,21,22,23]. Such techniques are often referred to as Automated Data Augmentation (AutoDA). Figure 1 shows a basic taxonomy of DA techniques, depicting the relationship between traditional DA and advanced AutoDA, as well as several sub-classes of AutoDA. Compared with standard DA, AutoDA emphasizes the automation aspect of DA policy selection. Recent research has found that, instead of manually designing the DA schemes, directly learning a DA strategy from the target dataset has the potential to significantly improve model performance [10, 24,25,26]. Specifically, the DA policy that yields the largest performance gain on a classification model is regarded as the optimal augmentation policy for a given dataset. Among various contemporary works, AutoAugment (AA) stands out as the first AutoDA model, achieving state-of-the-art results on several popular image classification datasets, including CIFAR-10/100 [27], ImageNet [28] and SVHN [29]. More importantly, AA provides an essential theoretical foundation for later works that support automated augmentation [21,22,23, 30, 31].

Progress in automating DA policy search can potentially change the existing process of model training. An AutoDA model can automatically select the most effective combination of augmentation transformations to form the final DA policy. Once the optimal augmentation policy is found, the training set augmented by the learned policy can dramatically boost model performance without extra input. Furthermore, AutoDA methods can be designed to be applied directly to the datasets of interest. The optimal DA policy learned from the data is regarded as the best augmentation formula for the target task, and is hence expected to yield the best model performance. Another desirable aspect of AutoDA techniques is their transferability. According to the findings in [20], learned DA policies can also be applied to other similar datasets with promising results.

Although considerable progress has been made in DA policy search, there is still no comprehensive survey that systematically summarizes the diverse methods. To the best of our knowledge, no one has conducted a qualitative comparison of existing AutoDA methods or provided a systematic evaluation of their advantages and disadvantages. To fill this gap, this paper aims to identify the current state of research in the field of Automated Data Augmentation (AutoDA), especially for image classification tasks.

In this paper, we mainly review contemporary AutoDA works in the imaging domain. We provide a systematic analysis, identifying three key components of standard AutoDA techniques, i.e. the search space, the search algorithm and the evaluation function. Based on the different choices of search algorithm in the reviewed works, we then propose a two-layer taxonomy of all AutoDA approaches. We also evaluate AutoDA approaches in terms of the efficiency of the search algorithm, as well as the final performance of the trained classification model. Through comparative analysis, we identify the major contributions and limitations of these methods. Lastly, we summarise several main challenges and propose potential future directions in the AutoDA field.

Our main contributions can be summarized as follows:

1. Background on image data augmentation, including traditional approaches and Automated Data Augmentation (AutoDA) models (Sect. 2).
2. Introduction of three key components within standard AutoDA models, along with evaluation metrics and benchmarks used in most works (Sect. 3).
3. A hierarchical taxonomy of the mainstream AutoDA algorithms for image classification tasks from the perspective of hyper-parameter optimization (Sect. 4).
4. Thorough review of each AutoDA method in the taxonomy, detailing the search algorithm applied (Sects. 5 and 6).
5. Discussion about the current state of AutoDA technique, as well as the existing challenges and potential opportunities in future (Sect. 7).

2 Background

This section introduces background information about data augmentation in the computer vision field, with a focus on image classification tasks. We first provide a general overview of how DA techniques developed and have been applied to computer vision tasks. Then we briefly describe several traditional image processing operations that are involved in most AutoDA models. Finally, we discuss the recent advances in AutoDA techniques and how such techniques relate to Automated Machine Learning (AutoML).

2.1 Historical overview of image data augmentation

The early application of image augmentation can be traced back to LeNet-5 [32], where a Data Augmentation (DA) technique was applied by distorting images for recognizing handwritten and machine-printed characters. This work was one of the earliest pre-trained Convolutional Neural Networks (CNNs) that used DA for image classification tasks. Generally, DA can be regarded as an oversampling method. The objective of oversampling is to mitigate the negative influence of limited data or class imbalances by increasing the number of data samples. A naive approach to oversampling is random oversampling, which randomly duplicates data points in minority classes until a desired data amount or data distribution is achieved. However, the duplicate images created by this technique can result in model overfitting towards the minority class. This problem becomes even more notable when deep learning techniques are used. To add more variety to generated samples, DA via image transformations has emerged.

The most famous early use case of image DA was the AlexNet model [1]. AlexNet significantly improved classification results on ImageNet data [28] through the use of a revolutionary CNN architecture. In that work, image augmentation was used to artificially expand the dataset. Multiple image operations were applied to the original training set, including random cropping, horizontal flipping and colour adjustment in RGB space. These transformation functions helped mitigate overfitting problems during model training. According to the experimental results in [1], image DA reduced the final error rate by approximately \(1\%\). Since then, image augmentation has been regarded as a necessary pre-processing procedure before training complex CNNs, from VGG [3] to ResNet [4] and Inception [33].

Image augmentation is not limited to basic image processing. Following the proposal of the Generative Adversarial Network (GAN) in [34], related works flourished in the following decade. Among them, the most influential technique was Neural Architecture Search (NAS) [35]. NAS is a type of AutoML technique that automates the search for model architectures. The advancement of NAS greatly promoted the development of DA technology in the imaging field. Applying concepts and techniques from NAS and AutoML has gained increasing interest in the CV community. Recent progress includes Neural Augmentation [36], which tests the effectiveness of GANs in image augmentation; Smart Augmentation [10], which generates synthetic image data using neural networks; and AA [20], which aims to automate the selection of image transformations for DA. The last of these forms the basis for AutoDA and is the focus of this survey.

Most of the augmentation methods mentioned above were designed for image classification. The ultimate goal of image DA in classification tasks is to improve the prediction accuracy of discriminative models. However, the same technique is applicable to other computer vision tasks, for example Object Detection (OD), where image augmentation can be combined with advanced deep neural networks including YOLO [37] and the R-CNN series [38,39,40]. Semantic segmentation tasks [41] can also benefit from DA before training complex networks such as U-Net [42]. In this study, we focus on the application of Automated DA (AutoDA) to image classification tasks, as there exist more published datasets in this domain that allow a fair evaluation. For some AutoDA methods, we also discuss the possibility of applying them to object detection tasks if experimental results are available.

2.2 Traditional image augmentation techniques

Image augmentation aims to enhance both the quantity and quality of datasets so that neural networks can be better trained [43]. Usually, DA does this in one of two ways, either through traditional image operations or through deep learning technology. Traditional augmentation often emphasizes preserving the image’s original label while transforming existing images into a new form [43]. This can be achieved through various image processing approaches, including but not limited to geometric transformations, adjustments in colour space, or combinations of them.

Another augmentation technique based on deep learning attempts to generate synthetic images as the training set. Major techniques involve Mixup augmentation [44], GANs and transformations in feature space [25]. Due to the complexity of deep learning DA, only the basic image processing operations are considered in recent automated DA methods. Hence, we focus on the basic image transformations that can be easily parameterized. The rest of this section briefly introduces several basic image processing functions that are usually considered in AutoDA models, including geometric transformations, flipping, rotation, cropping, colour adjustment and kernel filters. Another two augmentation algorithms are also covered due to their presence in AutoAugment work [20], namely Cutout [45] and SamplePairing [46].

2.2.1 Geometric transformations

The simplest place to start image augmentation is with geometric transformation functions, such as image translation or scaling. These operations are easy to implement and can also be combined with other transformations to form more advanced DA algorithms. An important consideration when applying such operations is whether they preserve the original image label after the transformation [47]. From the perspective of image augmentation, the ability to keep labels consistent can also be called the safety feature of transformation functions [43]. In other words, transformations that risk corrupting annotation information are considered unsafe. In general, geometric transformations tend to preserve the labels as they only change the position of key features. However, depending on the magnitude of the transformation function, the chosen operation might not always be safe. For example, a translation along the y axis with a high magnitude may shift the object of interest completely outside the visible area, so the label of the post-processed image is no longer preserved.
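The effect of magnitude on safety can be illustrated with a minimal sketch. It assumes a toy image stored as a list of rows and a hypothetical helper `translate_y`; neither comes from the surveyed works. A small shift keeps the object in view, while a larger shift in the wrong direction removes it entirely.

```python
def translate_y(image, shift, fill=0):
    """Shift a 2-D image (list of rows) down by `shift` pixels (up if negative).

    Rows that move outside the frame are discarded and replaced by `fill`,
    so the output keeps the same height and width as the input.
    """
    height = len(image)
    width = len(image[0])
    shifted = []
    for row in range(height):
        src = row - shift  # source row that lands on this output row
        if 0 <= src < height:
            shifted.append(list(image[src]))
        else:
            shifted.append([fill] * width)
    return shifted


# A 4x4 toy image whose "object" (value 1) sits in the top row.
image = [
    [1, 1, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

small = translate_y(image, 1)   # object moves one row down, still visible
large = translate_y(image, -1)  # object is pushed out of the frame

object_visible_small = any(1 in row for row in small)
object_visible_large = any(1 in row for row in large)
```

In this toy case the second translation would be unsafe, since nothing of the labelled object remains in the output.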

2.2.2 Rotation

Rotating the image by a given angle is another common DA technique. It is a special type of geometric transformation, which also has the risk of removing meaningful semantic information from the visible area. Aggressive operations with a large rotation angle are usually unsafe, especially for text-related data, e.g. "6" and "9" in SVHN data [29]. However, according to [43], slight rotation within the range of \(1^{\circ }\) to \(30^{\circ }\) can be helpful for most image classification tasks.

2.2.3 Flipping

Flipping is different from rotation augmentation as it generates mirror images. Flipping can be done either horizontally or vertically, while the former is more commonly used in computer vision [43]. This is one of the simple yet most effective augmentation techniques on image data, especially for CIFAR-10/100 [27] and ImageNet [28]. The safety feature of flipping largely depends on the type of input data. For normal classification or object detection tasks, flipping augmentation preserves the original label. But it can be unsafe for data involving digits or texts, such as SVHN data [29].

2.2.4 Cropping

Cropping is not only a basic DA method, but also an important pre-processing step when the input data contain image samples of various sizes. Before being fed into the model, training data need to be cropped to a unified \(x \times y\) dimension for later matrix calculations. As an augmentation technique, cropping has a similar effect to geometric translation. Both augmentation methods remove part of the original image. However, image translation keeps the spatial resolution of the output identical to that of the input, whereas cropping reduces the size of the processed image. As described previously, cropping can be safe or unsafe depending on its associated magnitude value. Aggressive operations might crop out the distinguishing features, affecting label consistency, whereas a reasonable magnitude value helps to improve the quality of the training data.
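As a rough illustration of cropping to a unified size, the sketch below uses a hypothetical `random_crop` helper on toy images stored as lists of rows. The 2 x 2 target size and the pixel encoding are arbitrary choices made for this example only.

```python
import random


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position inside `image`."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop_h)    # randint includes both ends
    left = rng.randint(0, width - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


rng = random.Random(0)

# Two toy images of different sizes; pixel value encodes (row * 10 + column).
images = [
    [[r * 10 + c for c in range(5)] for r in range(4)],  # 4 x 5 image
    [[r * 10 + c for c in range(3)] for r in range(6)],  # 6 x 3 image
]

# After cropping, every sample has the same 2 x 2 shape.
crops = [random_crop(img, 2, 2, rng) for img in images]
```

Unlike translation, the output here is smaller than the input, which is the difference described above.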

2.2.5 Colour adjustment

Adjusting values in colour space is another practical and commonly adopted augmentation strategy. By jittering the values of single colour channels, it is possible to quickly obtain different colour representations of an image. These RGB values can also be manipulated through matrix operations to mimic different lighting conditions. Alternatively, self-defined rules on pixel values can be applied to implement transformations such as the Solarize, Equalize and Posterize functions in the Python Imaging Library (PIL) [48]. Unlike the previous DA transformations, colour adjustment preserves the original size and content of input images. However, it might discard some colour information and thus raise safety issues. For example, if colour is a discriminative feature of an object of interest, manipulating the colour values may make the distinctive colour of the object hard to observe and hence confuse the model. The magnitude of the colour transformation is again the determining factor that affects its safety property.
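To make the idea of self-defined rules on pixel values concrete, here is a minimal pure-Python sketch of two such rules. It is not PIL code: the function names, the default threshold of 128 and the bit-masking rule are assumptions intended to follow the usual definitions of solarize (invert bright pixels) and posterize (reduce bit depth) for 8-bit values.

```python
def solarize(pixels, threshold=128):
    """Invert every 8-bit value at or above `threshold`; keep the rest."""
    return [p if p < threshold else 255 - p for p in pixels]


def posterize(pixels, bits):
    """Keep only the `bits` most significant bits of each 8-bit value."""
    mask = 0xFF & ~((1 << (8 - bits)) - 1)
    return [p & mask for p in pixels]


# One colour channel of a toy image, flattened to a list of 8-bit values.
channel = [0, 100, 128, 200, 255]

solarized = solarize(channel)             # [0, 100, 127, 55, 0]
posterized = posterize(channel, bits=2)   # [0, 64, 128, 192, 192]
```

Lowering `threshold` or `bits` plays the role of a larger magnitude: more colour information is discarded, which is where the safety concern comes from.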

2.2.6 Kernel filters

Instead of being changed directly in colour space, pixel values can be manipulated via kernel filters. This is a widely used image processing technique in the computer vision field. A filter is usually a matrix of self-defined numbers that is much smaller than the input image. Depending on the element values in the matrix, kernel filters can provide various functionalities. The most common kernel filters perform blurring and sharpening. To apply a kernel filter to an input image, we treat it as a sliding window and move it over the whole image, computing each output pixel as the sum of element-wise products between the filter and the image region beneath it. A Gaussian kernel produces a blurring effect on the filtered image, which can better prepare the model for low-quality images with limited resolution. In contrast, a sharpening filter emphasizes the details in the image, which can help the model gain more information about the key features.
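The sliding-window procedure can be sketched as follows. This is an illustrative implementation without padding, using a hypothetical `apply_kernel` helper. A simple box blur is substituted for the Gaussian kernel to keep the numbers easy to follow, and the sharpening kernel is one common choice among many.

```python
def apply_kernel(image, kernel):
    """Slide `kernel` over `image` (both lists of rows) without padding.

    Each output pixel is the sum of element-wise products between the kernel
    and the image window under it, so each output dimension shrinks by
    (kernel size - 1).
    """
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for top in range(out_h):
        row = []
        for left in range(out_w):
            total = 0.0
            for i in range(k_h):
                for j in range(k_w):
                    total += kernel[i][j] * image[top + i][left + j]
            row.append(total)
        output.append(row)
    return output


# Box blur (stand-in for a Gaussian kernel) and a common sharpening kernel.
BOX_BLUR = [[1 / 9] * 3 for _ in range(3)]
SHARPEN = [[0, -1, 0], [-1, 5, -1], [0, -1, 0]]

# A 5x5 toy image with a single bright pixel in the centre.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]

blurred = apply_kernel(image, BOX_BLUR)    # bright pixel spread over 3x3 output
sharpened = apply_kernel(image, SHARPEN)   # centre amplified, neighbours negative
```

The blur spreads the single bright pixel evenly across its neighbourhood, while the sharpening kernel amplifies it and pushes adjacent values negative, exaggerating the local contrast.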

2.2.7 Cutout

Besides simple transformations, another interesting augmentation technique is Cutout [45]. Cutout is inspired by the concept of dropout regularisation, but is performed on the input data instead of on embedded units within the neural network [49]. This algorithm is specifically designed for object occlusion problems. Occlusion happens when some parts of the object are vague or occluded (hidden) by other non-relevant objects, in which case only partial observation of the object is possible. This is a common problem, especially in real-world scenarios. Cutout augmentation combats this by randomly masking out a small patch of the original image to simulate occlusion. Training on such transformed data forces models to learn from the whole picture rather than just a section of it, which enhances their ability to distinguish object features. Another convenient feature of the Cutout algorithm is that it can be applied along with other image augmentation methods, such as geometric or colour transformations, to generate more diverse training data.
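A minimal sketch of the masking step is given below. The `cutout` helper, the square patch shape, the fill value of 0 and the choice to let the patch be clipped at the image border are all assumptions made for illustration and may differ from the configuration used in [45].

```python
import random


def cutout(image, patch_size, rng, fill=0):
    """Return a copy of `image` with one random square patch set to `fill`.

    The patch centre is drawn uniformly over the image, so the patch may be
    clipped at the borders (an assumption of this sketch).
    """
    height, width = len(image), len(image[0])
    centre_y = rng.randrange(height)
    centre_x = rng.randrange(width)
    half = patch_size // 2
    top = max(0, centre_y - half)
    left = max(0, centre_x - half)
    bottom = min(height, centre_y - half + patch_size)
    right = min(width, centre_x - half + patch_size)

    masked = [list(row) for row in image]  # copy, leave the input untouched
    for y in range(top, bottom):
        for x in range(left, right):
            masked[y][x] = fill
    return masked


rng = random.Random(0)
image = [[1] * 8 for _ in range(8)]          # 8x8 toy image, all ones
occluded = cutout(image, patch_size=4, rng=rng)
hidden_pixels = sum(row.count(0) for row in occluded)
```

Because the function returns a new image of the same size, it can be chained with geometric or colour operations as described above.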

2.2.8 SamplePairing

SamplePairing [46] is an example of a complex augmentation algorithm that combines several simple transformations. It creates a completely new image by randomly choosing two data samples from the training set and mixing them. In standard SamplePairing, this combination is done by averaging the pixel values of the two samples. The label of the generated image follows that of the first image, and the annotation of the second sample in the input pair is ignored. One of the advantages of SamplePairing augmentation is that it can create up to \(N^2\) new data points from a dataset of size N via simple permutation. SamplePairing is a straightforward augmentation method that generates synthetic data points from the original data. The enhancement of data quantity and variety significantly improves model performance and avoids model overfitting problems. This technique is especially helpful for computer vision tasks with limited training data.
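The mixing rule described above can be sketched in a few lines. The helper names `mix_pair` and `sample_pairing` and the toy dataset are hypothetical; only the averaging step and the label rule are taken from the text.

```python
import random


def mix_pair(sample_a, sample_b):
    """Average two images pixel by pixel and keep the label of the first."""
    image_a, label_a = sample_a
    image_b, _ignored_label = sample_b
    mixed = [
        [(pa + pb) / 2 for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(image_a, image_b)
    ]
    return mixed, label_a


def sample_pairing(dataset, rng):
    """Draw two samples at random (with replacement) and mix them."""
    return mix_pair(rng.choice(dataset), rng.choice(dataset))


# Toy dataset of (image, label) pairs with 2x2 single-channel images.
dataset = [
    ([[0, 0], [0, 0]], "dark"),
    ([[200, 200], [200, 200]], "bright"),
]

mixed_image, mixed_label = mix_pair(dataset[0], dataset[1])
random_image, random_label = sample_pairing(dataset, random.Random(0))
```

Since the two samples are drawn independently, a dataset of size N admits N x N ordered pairs, which matches the \(N^2\) upper bound mentioned above.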

2.3 Development of automated data augmentation (AutoDA)

With various image augmentation operations available, the question is how to choose an effective DA policy from these transformations for CV tasks. A naive solution is to apply random augmentations, generating vast amounts of transformed data for training. However, without appropriate control on the type and magnitude of augmentation TFs, the augmented data points might be simple duplicates or even semantically corrupted, which can lead to performance loss. Furthermore, overly augmented data might require excessive computational resources during model training, causing efficiency issues. A systematic selection strategy for a DA policy is therefore needed. A DA policy refers to a composition of various image distortion functions, which can be applied to training data for data augmentation.

Despite extensive research on DA transformations, the selection of a given augmentation policy usually relies on human experts. Especially in the context of CV tasks, the decision on which image operations to use is mainly made by machine learning engineers based on past experience or domain knowledge. Therefore, the optimal strength of a given DA policy is highly task-specific. For example, geometric and colour transformations are commonly used on standard classification datasets, including CIFAR-10/100 [27] and ImageNet [28], whereas resizing and elastic deformations are more popular on digit images such as the MNIST [50, 51] and SVHN [29] datasets. There is no universal agreement on augmentation strategies for all types of CV tasks. In most cases, DA policies need to be manually selected based on prior knowledge. However, human effort involved in deep learning is usually considered biased and error-prone. There is no theoretical evidence that human-decided DA policies are optimal, and it is infeasible to manually search for the DA policy that achieves the best model performance. Additionally, without the help of advanced ML techniques, finding an effective DA policy must rely on empirical results from multiple experiments, which can be excessively time-consuming.

To reduce the potential bias and accelerate the design process, there has been increasing interest in automating the selection of DA policies. This technique is known as Automated Data Augmentation (AutoDA). The development of AutoDA is motivated by the advancements in Neural Architecture Search (NAS) [35], which automatically searches for the optimal architecture for deep neural networks instead of by manual approach. The majority of AutoDA techniques rely on different search algorithms to search for the most effective (optimal) augmentation policy for a given dataset. In the context of AutoDA, an optimal DA policy is the augmentation scheme that can yield the most performance gain and highest accuracy score.

The earliest AutoDA work can be traced back to Transformation Adversarial Networks for Data Augmentations (TANDA) [52] in 2017. This was the first attempt to automatically construct and tune DA policies according to the provided data. The parameterization in TANDA inspired the design of the search space in AutoAugment (AA) [20], and provided a standard problem formulation for the AutoDA field. AA used Reinforcement Learning (RL) to perform the augmentation search. During the search, augmentation policies were sampled via a Recurrent Neural Network (RNN) controller and then used for model training. Instead of searching directly on the target data, AA created a subset of the original training set as a proxy task. The evaluation of augmentation policies was also conducted on a simplified network instead of the final classification model. Unfortunately, the search in AA requires thousands of GPU hours to complete, even under this reduced setting.

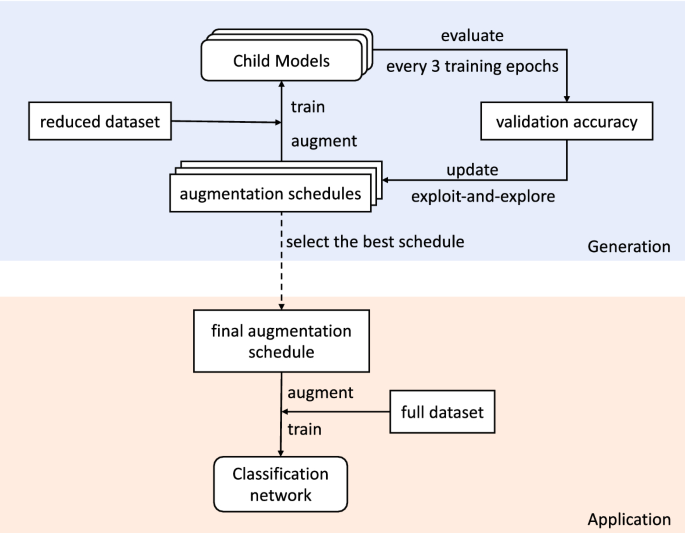

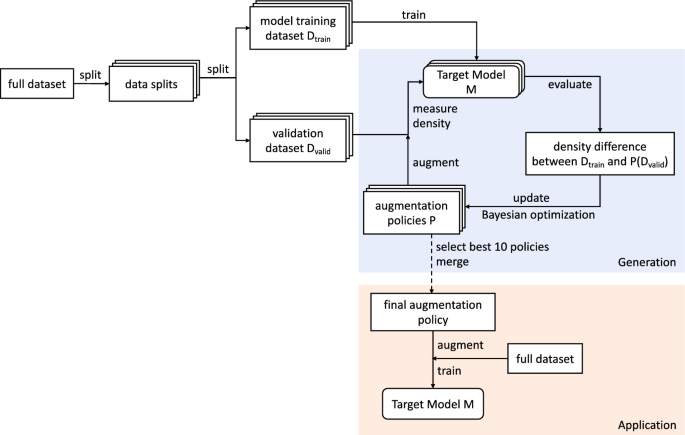

With the augmentation search space established, efficiency has become the focus of later AutoDA works. Fast AutoAugment (Fast AA) [21] is one of the most popular improved versions of the original AA. Instead of an RNN, Fast AA applies Bayesian optimization to sample the next augmentation policy to be evaluated, which greatly reduces the search cost. Additionally, Fast AA is the first to use density matching for policy evaluation, which completely eliminates the need for repeated training. Another approach to improving search efficiency is parallel computation. Population-Based Augmentation (PBA) [23] adopts Population-Based Training to optimize the augmentation policy using several subsets of the target data simultaneously. The search goal in PBA is also slightly different from that of previous approaches: PBA aims to find a dynamic schedule during model training, rather than a static policy. Both Fast AA and PBA substantially reduce the complexity of the AA algorithm while maintaining comparable performance. However, these models still include an expensive search phase, especially when faced with large datasets or complicated models, which inevitably leads to poor efficiency.

To further enhance the scalability of AutoDA models, techniques such as gradient-based hyper-parameter optimization have been explored recently. AutoDA based on gradient is usually achieved by various gradient approximators to estimate the gradient of augmentation hyper-parameters with regard to model performance. This process ensures the hyper-parameters can be differentiated and hence optimized along with the model training. Adversarial AutoAugment (AAA) [53] and Online Hyper-parameter Learning AutoAugment (OHL-AA) [54] apply the REINFORCE gradient estimator [55] to achieve gradient approximation. Other gradient estimators are also applicable in AutoDA. For example, DARTS [56] is employed in Faster AutoAugment (Faster) [22] and Differentiable Automatic Data Augmentation (DADA) [57]. Using the same policy model as in Fast AA [21], OHL-AA optimizes augmentation policies in an online fashion during model training. There is no separate stage for searching in OHL-AA. Instead, it adopts a bi-level optimization framework, where the algorithm updates the weights of the classification model and hyper-parameters of augmentation policy at the same time. This scheme significantly reduces the search time. Similarly, there are two optimization objectives in AAA, one of which is the minimization of training loss, and the other is the minimization of adversarial loss [53]. Two objectives are optimized simultaneously in AAA, providing a much more computationally affordable solution.

Even though gradient-based approaches are more efficient than vanilla AA, these methods are still based on an expensive policy search. The bi-level setting also increases the complexity of the model training stage. Recent advancements in AutoDA aim to further enhance the efficiency of augmentation design by removing the need for search. Proposed in 2020, RandAugment (RA) [31] reparameterizes the classical search space. It replaces the individual parameters for each transformation with two global variables. A simple grid search is performed in RA to optimize these two hyper-parameters. The findings in RA not only suggest that the traditional search phase may not be necessary, but also indicate that search using surrogate models could be sub-optimal. The effectiveness of a DA policy was found to depend on the size of the model and the training set, which challenges all previous approaches based on proxy tasks. Another AutoDA model that does not rely on searching is UniformAugment (UA) [58]. UA further simplifies the augmentation space through invariance theory. The authors hypothesize an approximately invariant augmentation space, such that any augmentation policy sampled from that space leads to similar model performance, thus completely removing the search phase. Despite the promising speed, model performance is a bottleneck in these search-free methods. Neither approach makes significant progress on model accuracy when compared with previous approaches. To apply AutoDA techniques in practice, further research and experiments are required.
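The reduced search described for RA can be sketched as a grid search over two global hyper-parameters. In this sketch they are taken to be the number of operations applied per image and a single shared magnitude. The operation names, grid values and `toy_score` function are hypothetical placeholders; in practice the score would come from training and validating a classifier.

```python
import itertools
import random


def sample_policy(operations, num_ops, magnitude, rng):
    """Pick `num_ops` operations uniformly; all share one global magnitude."""
    return [(rng.choice(operations), magnitude) for _ in range(num_ops)]


def grid_search(num_ops_grid, magnitude_grid, evaluate):
    """Return the (num_ops, magnitude) pair with the highest score."""
    return max(
        itertools.product(num_ops_grid, magnitude_grid),
        key=lambda pair: evaluate(*pair),
    )


def toy_score(num_ops, magnitude):
    """Hypothetical stand-in for validation accuracy after training."""
    return -((num_ops - 2) ** 2) - ((magnitude - 9) ** 2)


OPERATIONS = ["rotate", "translate_y", "solarize", "posterize"]

best_num_ops, best_magnitude = grid_search([1, 2, 3], [5, 9, 13], toy_score)
policy = sample_policy(OPERATIONS, best_num_ops, best_magnitude, random.Random(0))
```

With only two hyper-parameters the grid stays small (nine candidates here), which is why an exhaustive sweep is feasible where a per-operation search space is not.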

3 Automated data augmentation techniques

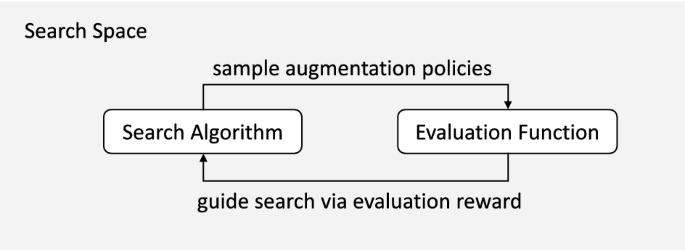

This section introduces the basic concepts and terminology of Automated Data Augmentation (AutoDA) techniques. In general, finding an optimal DA policy is formulated as a standard search problem in most works [20,21,22,23, 54, 57]. A standard AutoDA model consists of three major components: a search space, a search algorithm and an evaluation function. In this section, the functionalities and relationships of these three components are discussed. We also describe how to assess the proposed AutoDA models, including two different evaluations based on direct and indirect approaches. Lastly, we introduce several commonly used datasets and benchmarks for comparative analysis.

3.1 Key components

For image classification tasks, the major objective of AutoDA models is to achieve the best classification accuracy using an optimal DA policy automatically learned from a given dataset. Inspired by the DA strategy modelling in Transformation Adversarial Networks for Data Augmentations (TANDA) [52], AutoAugment (AA) [20] is considered to be the first work that attempted to automate the augmentation policy search. AA formulates the AutoDA task as a search problem and provides a basic parameterization of the search space. The parameterization in AA is largely adopted in later AutoDA works, and is regarded as the de facto standard [21, 23, 59]. Specifically, there are three key components within a standard AutoDA formulation:

Definition 1 (Search Space). The search space is the domain of DA policies to be optimized, in which all candidate solutions are defined via augmentation hyper-parameters.

Definition 2 (Search Algorithm). The search algorithm retrieves augmentation policies from the search space and samples the next search point based on a reward signal returned by an evaluation function.

Definition 3 (Evaluation Function). The evaluation function is the procedure of assessing or ranking sampled DA policies by assigning reward values. This usually relies on the training of the classification model.

3.1.1 Search space

The search space defines how DA policies are formed for subsequent searches. Specifically for image classification tasks, the augmentation policy refers to a composition of several image operations, which can be described by augmentation hyper-parameters. Generally, a complete augmentation policy consists of multiple sub-policies, each of which is used to augment one training batch. A sub-policy is composed of several basic image Transformation Functions (TFs). An augmentation policy is usually parameterized by two hyper-parameters: the application probability and the operation magnitude. The probability describes the probability of applying a certain transformation function on input images, while the magnitude determines the strength of the operation. Each TF within a DA policy is associated with a pair of probability and magnitude hyper-parameters. Depending on the choice of search or optimization algorithm, the formulation of the search space can vary greatly. For example, some works completely re-parameterize the search space to reduce the search complexity [31, 58]. However, the DA policy parameterization proposed in AA [20] has been widely used in later works with little or no modification.
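The hierarchy described above (policy, sub-policies, transformation functions with a probability and a magnitude) can be sketched as a small data structure. This is an illustrative sketch only: the TF names and the bin counts used to discretise probability and magnitude are assumptions chosen for the example, not values taken from a specific paper.

```python
import random
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Operation:
    name: str           # which transformation function (TF) to apply
    probability: float  # chance of applying the TF to an input image
    magnitude: int      # discretised strength of the TF

SubPolicy = List[Operation]  # several TFs applied in sequence
Policy = List[SubPolicy]     # one sub-policy is used per training batch

# Assumed, illustrative discretisation of the search space.
TF_NAMES = ["rotate", "shear_x", "translate_y", "solarize"]
PROB_BINS = [i / 10 for i in range(11)]
MAG_BINS = list(range(10))

def sample_policy(num_sub_policies, ops_per_sub_policy, rng):
    """Draw one candidate policy uniformly from the discretised search space."""
    return [
        [Operation(rng.choice(TF_NAMES), rng.choice(PROB_BINS), rng.choice(MAG_BINS))
         for _ in range(ops_per_sub_policy)]
        for _ in range(num_sub_policies)
    ]

def search_space_size(ops_per_sub_policy, num_sub_policies):
    """Number of distinct policies under the discretisation above."""
    per_op = len(TF_NAMES) * len(PROB_BINS) * len(MAG_BINS)
    return (per_op ** ops_per_sub_policy) ** num_sub_policies
```

Even with only four TFs, `search_space_size(2, 5)` is already astronomically large, which is why the choice of search algorithm and any re-parameterization of the space matter so much for cost.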

3.1.2 Evaluation functions

The evaluation of augmentation policies is conducted from two perspectives: effectiveness and safety. The former emphasizes the impact of DA on the final classification results, while the latter focuses on the label preservation of the transformed data. Generally, the efficacy of augmentations is judged by the performance of classification models based on training loss or accuracy values. Such procedures can also be called direct evaluation functions, since the strength of the DA strategy is directly reflected in how much performance gain the augmentation policy produces. The higher the classification accuracy, the better the associated DA policy.

Another alternative evaluation is an indirect method, emphasizing the safety feature of data augmentation. Examining the safety of DA policy often resorts to the use of density matching [21]. The main objective of density matching is to match the distribution of the augmented data to the original training data. The basic idea behind this indirect evaluation is to treat the transformed images as missing data points of the input data, thereby improving the generalizability of the classification model. Smaller density differences indicate higher similarity of data distributions, which can lead to better augmentation strategies. Using density matching, the policy evaluation does not require back-propagation of the model training. Such algorithms can be regarded as the indirect evaluation function of AutoDA tasks.

3.2 Overall workflow

The relationship between these components is depicted in Fig. . First, the AutoDA model specifies the parameterization of DA policies for the given task, providing a finite number of potential solutions to be searched and evaluated. Within the defined search space, the search algorithm then samples DA policies and passes the candidates to the evaluation function. In earlier AutoDA works, augmentation policies were sampled one by one [20, 60], while later approaches tend to employ multi-threaded processes, sampling multiple candidates and evaluating them in a distributed fashion. This substantially improves the search efficiency. After a DA policy is selected by the search algorithm, it is rated by the evaluation function to compute the reward signal. Each augmentation strategy is associated with a reward value, indicating its effectiveness in improving model performance. Finally, the reward information is used to update the search algorithm, guiding the sampling of the next DA policy to be evaluated. The iterative search process ends when the optimal policy is found. This can be determined by examining the difference in performance gain between the current search point and the previous point. However, such a stopping criterion might lead to excessive searching with little improvement, especially in the later phases, resulting in a waste of resources. In most practical implementations of AutoDA algorithms, the search therefore stops when a self-defined stopping condition is fulfilled, for example after a certain number of search epochs [21, 23, 61].
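The sample, evaluate and update cycle described above can be sketched as a generic loop. This is a minimal sketch under stated assumptions: the three callbacks are hypothetical, and the toy instantiation uses a simple hill-climbing sampler and an analytic reward in place of a real search algorithm and model training.

```python
import random

def search(sample_policy, evaluate, update, max_steps, rng):
    """Generic sample -> evaluate -> update loop; returns the best policy seen."""
    best_policy, best_reward = None, float("-inf")
    state = None  # whatever the search algorithm needs to remember
    for _ in range(max_steps):
        policy = sample_policy(state, rng)      # search algorithm proposes a candidate
        reward = evaluate(policy)               # evaluation function returns a reward
        state = update(state, policy, reward)   # reward guides the next sample
        if reward > best_reward:
            best_policy, best_reward = policy, reward
    return best_policy, best_reward

# Toy instantiation: a policy is a (probability, magnitude) pair in [0, 1]^2.
def toy_sample(state, rng):
    if state is None:
        return (rng.random(), rng.random())
    (p, m), _ = state
    clip = lambda x: min(1.0, max(0.0, x))
    return (clip(p + rng.gauss(0, 0.1)), clip(m + rng.gauss(0, 0.1)))

def toy_update(state, policy, reward):
    # Keep the best (policy, reward) pair as the centre of the next proposal.
    if state is None or reward > state[1]:
        return (policy, reward)
    return state

def toy_evaluate(policy):
    # Stand-in for "train a model and report accuracy"; peak at (0.6, 0.3).
    p, m = policy
    return -((p - 0.6) ** 2 + (m - 0.3) ** 2)
```

Here the fixed `max_steps` budget plays the role of the self-defined stopping condition. In an actual AutoDA system, `evaluate` dominates the cost because each call involves model training.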

3.3 Two stages of AutoDA

The standard AutoDA pipeline can be divided into two major stages:

Definition 4

(Generation stage) is the process of generating the optimal augmentation policy when given certain datasets. A DA policy is typically described by a sequence of augmentation hyper-parameters. Usually, the final DA solution is generated by a search or optimization algorithm, which samples various candidate strategies from the defined search space, and relies on an evaluation function to assess efficacy of the searched policies.

Definition 5

(Application stage) is the process of applying the policy learned in the generation stage. This is done by augmenting the target dataset using the obtained DA policy to artificially increase both the data quantity and variety, and then training the classification model on the transformed training set.

With the aim of finding the best augmentation strategy for the target dataset, a typical AutoDA problem is mainly solved in the policy generation stage. The best DA policy here specifically refers to the hyper-parameter setting that can maximize the classification model accuracy or minimize the training loss in the later phase, i.e. it can best solve the classification task in the application phase. We identify several criteria used in published studies to determine the completion of policy generation:

1. The sampled DA policy can help train the classification model to achieve the highest accuracy scores.
2. The sampled DA policy can provide comparable performance gains to the optimal policy, or
3. A certain number of training/searching epochs has been completed.

Theoretically, the policy generation stage can only end when the first criterion is achieved, i.e. the sampled policy is evaluated to be the optimal augmentation strategy for the given dataset. However, it is often impractical to thoroughly explore the entire search space to identify the best DA policy. A potential solution is to set a specific threshold for model accuracy or training loss to help decide whether the policy is optimal. However, in application scenarios, the optimal strength of data augmentation for classification models is often unknown. It is therefore tricky to set such thresholds as success criteria.

An alternative strategy to stop the generation phase is to relax the optimal criteria. In other words, if the sampled policy can produce comparable improvement in model performance to the optimal DA strategy, it is considered to be optimal. This idea has been widely adopted in many existing AutoDA works [21, 60, 62, 63]. It can be implemented by using the performance difference. For example, if the difference in performance gains between the sampled policy and the best rewarded one is smaller than a certain value, then this policy can be treated as the final output of the generation stage [60, 63]. A more popular alternative is to use density matching. Instead of directly training the classification model, density matching compares the distribution/density of the original data and the augmented samples. The assumption of density matching is that the optimal DA policy can best generalize the classification model by matching the density of given data with the density of the transformed data [21, 62].

In practice, the most commonly used stopping criterion is a manually set search limit. Once training has run for a certain number of epochs, policy generation is forced to stop and outputs the DA policy with the best model performance so far. The number of epochs usually depends on the available computational resources as well as the complexity of the given task. There is no universal agreement on the stopping criterion.
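The relaxed criterion and the fixed budget can be combined in a single check, sketched below. The function name, the `tolerance` and `patience` parameters and the use of the running best reward are illustrative assumptions, not a rule taken from any particular AutoDA work.

```python
def should_stop(reward_history, step, max_steps, tolerance=None, patience=1):
    """Return True when the generation stage should end.

    reward_history: rewards of the policies evaluated so far, in order.
    max_steps:      hard budget on search steps (fixed-budget criterion).
    tolerance:      if set, stop once the best reward has improved by less than
                    this amount over the last `patience` steps (relaxed criterion).
    """
    if step >= max_steps:
        return True
    if tolerance is not None and len(reward_history) > patience:
        best_now = max(reward_history)
        best_before = max(reward_history[:-patience])
        return best_now - best_before < tolerance
    return False
```

Leaving `tolerance` unset reduces the check to the pure epoch limit, which mirrors the most common practice described above.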

3.4 Datasets

This section aims at providing a brief overview of the datasets employed in the considered approaches. Annotated datasets are generally used as benchmarks to provide a fair comparison among different AutoDA algorithms and architectures. Furthermore, the growth in size of data and complexity of application scenarios increases the challenge, resulting in constant development of new and improved techniques.

The most used datasets for the task of automated augmentation search are: (i) CIFAR-10/100 [27], (ii) SVHN [29], (iii) ImageNet [64]. CIFAR stands for Canadian Institute for Advanced Research. CIFAR-10 and CIFAR-100 share the same name as both come from CIFAR research, while the number specifies the total number of classes in the dataset. SVHN stands for Street View House Numbers. ImageNet is used in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [64]. The characteristics of each dataset are shown in Table , while their statistics are summarized in Table .

The CIFAR and ImageNet datasets were published in the same year; both present standard image classification tasks and are commonly used in computer vision research. However, ImageNet is much larger than the CIFAR series in scale and diversity. There are over 5,000 different categories in the original ImageNet set, with 3.2 million hand-annotated images [28]. For the AutoDA search problem, the enormous quantity of ImageNet data might require significant amounts of computational resources. Training on the complete ImageNet is usually infeasible in practice. Instead, it is often more suitable to use a reduced ImageNet subset for the target task. Additionally, the distribution of instances among different classes can vary considerably, which can decrease the performance of AutoDA models. To address these issues, each AutoDA work that conducts experiments using ImageNet data uses its own trimming method to set up a smaller and cleaner subset for model evaluation. Nevertheless, due to the diversity of data and the imbalanced class distribution, classification on an ImageNet subset is still considered to be a relatively difficult task for augmentation search when compared with other datasets. In AutoDA works, the reduced ImageNet datasets are constructed differently, with varying sizes and class numbers. Each of them will be described in the works where they are employed.

The CIFAR series consists of far fewer categories, as indicated by the number in each name [27]. The CIFAR-10 dataset consists of 60,000 \(32\times 32\) colour images in total. The data distribution among classes in CIFAR-10 is more controlled and uniform: the 60,000 images are evenly distributed over 10 classes, giving 6,000 images per class. The train:test split ratio is 5:1. In CIFAR-10, the test batch contains 10,000 images, randomly selected from the full dataset such that each class contributes exactly 1,000 images. The training set contains the remaining 50,000 instances. The formulation of the CIFAR-100 dataset is similar to CIFAR-10, except that there are 100 classes in CIFAR-100, each of which comprises 600 images. The train:test ratio is also 5:1, providing 500 training images and 100 test images per class. With a more balanced data distribution and a limited number of classes, CIFAR data is usually more suitable for benchmarking proposed AutoDA algorithms.

SVHN refers to Street View House Numbers. It is also collected from real-world scenarios and is widely used in deep learning research. Similar to MNIST data [32], images in SVHN are digits, cropped from house numbers in Google Street View images [29]. The major task of SVHN is to recognize numbers in natural scene images. There are 10 categories in total, each of which represents one digit, e.g. digit 1 has label 1. In SVHN, there are 73,257 digits for training, 26,032 digits for testing, and 531,131 additional data items that can be used as extra training data. In contrast to the previous datasets, SVHN specifically focuses on pictures of digits and numbers. This might reveal the relationship between DA selection strategy and data types. However, unlike CIFAR, the SVHN data distribution among classes is biased. There are more 0 and 1 digits present in the data, resulting in a skewed class distribution in both the training and test sets. As shown in Table 2, the number of images per class in SVHN ranges from 5,000 to 14,000. Such imbalance poses a challenge that helps to assess AutoDA models from different perspectives.

3.5 Evaluation metrics

To measure the effectiveness of AutoDA approaches, an intuitive way is to evaluate the performance of the final classification model, i.e., after it has been trained using datasets augmented by AutoDA methods. There are two major steps in AutoDA evaluation. First, the AutoDA model to be tested is applied to the original dataset in order to generate the optimal DA policy, which is then used to augment the data. Second, the target classifier is trained on the augmented training data to solve the given classification task. A commonly used evaluation metric for classification networks is accuracy, which is defined as the ratio of the number of correct predictions to the total number of cases examined:

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \]

where T and F stand for true and false respectively, indicating whether a prediction is correct or not, and P and N represent positive and negative results. Accuracy is considered to be a valid classification metric in this study because all datasets used in experiments, e.g. CIFAR-10/100, SVHN and reduced ImageNet, are well balanced without any class skew. The metric equivalent to accuracy is the error rate, which is the proportion of erroneously classified instances among all instances:

\[ \text{Error rate} = \frac{FP + FN}{TP + TN + FP + FN} = 1 - \text{Accuracy} \]
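As a small illustration, both metrics can be computed from the four confusion counts of a binary problem: accuracy as correct predictions over all predictions, and error rate as incorrect predictions over all predictions. The helper names below are illustrative, and the label lists are made-up toy data.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary labelling."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn

def accuracy(tp, tn, fp, fn):
    """Share of correct predictions among all predictions."""
    return (tp + tn) / (tp + tn + fp + fn)

def error_rate(tp, tn, fp, fn):
    """Share of incorrect predictions; always equals 1 - accuracy."""
    return (fp + fn) / (tp + tn + fp + fn)

# Toy data: 8 predictions, 6 of them correct.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
counts = confusion_counts(y_true, y_pred)
```

For multi-class benchmarks such as CIFAR-10, the same ratio is usually computed directly as the fraction of test images whose predicted class matches the label.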

Another dimension to evaluate AutoDA algorithms is their efficiency. Despite the impressive efficacy of some DA approaches, the total computational cost of DA policy generation can be significant [20], which hinders their large-scale application to many real world scenarios. A more comprehensive measure of AutoDA models should incorporate both their efficacy and their performance efficiency. Following the tradition of most AutoDA works, we consider the total GPU time (GPU hours) needed for a single run of a given AutoDA algorithm as a key evaluation metric used in this work.

4 Taxonomy of image AutoDA methods

Table shows a summary of primary works in the AutoDA field. The column Key technique describes the most important technique adopted in each AutoDA model to formulate the augmentation search problem. These methods are usually borrowed from other ML-related fields, such as NAS or hyper-parameter optimization. Policy optimizer indicates the algorithm or controller that is used to optimize or update the augmentation policy during the search. These AutoDA approaches are classified into two major types, one-stage and two-stage, based on the number of stages involved in solving the classification task with the learned DA policy. Additionally, from the perspective of hyper-parameter optimization, these methods can be further categorized into three classes: gradient-based, gradient-free and search-free. Table 3 provides a categorization based on the underlying optimization algorithm used by each method.

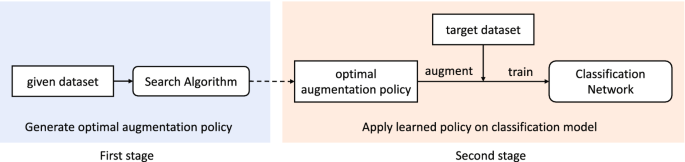

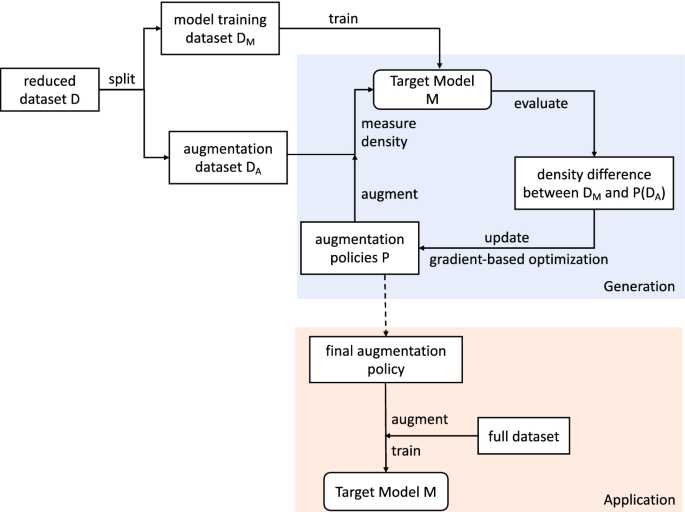

Based on the application sequence of the two stages of the AutoDA model, we classify all existing works into two major categories: one-stage and two-stage approaches (as shown in Fig. 1). Two-stage approaches conduct generation and application separately. In a typical two-stage method, the optimal augmentation policy is generated from the task dataset in the first stage. After that, the learned augmentation strategy is applied to the training set to train the discriminative model. Two separate stages are therefore required when utilizing a two-stage algorithm. One-stage approaches combine generation and application through the use of gradient approximation methods. By estimating the gradient of a DA policy with regard to model performance, one-stage approaches are able to optimize the augmentation policy and the classification model simultaneously. As a result, they can obtain the final results and the trained model in a single run.

4.1 Two-stage methods

There are two steps involved in applying AutoDA to discriminative tasks in the imaging domain. Generally, an AutoDA model searches for the optimal augmentation strategy and then applies the obtained policy on the target data for model training. Due to the separate processes of searching and training, this kind of approach is described as two-stage in this paper. The general framework of a typical (two-stage) approach is displayed in Fig. . In the first stage, given a specific dataset, the search algorithm looks for the best composition of image transformation functions, also known as the DA policy. The generation stage ends once the optimal policy is identified by the evaluation function or the searching reaches a given time limit. In the second stage, the learnt policy is applied on the target training set - ideally with additional data of increased quantity and targeted variety. Then the augmented training samples are fed into the classification model for final training.

The algorithm used to find the best scheme for data augmentation has been explored in a wide range of existing works. We categorize them into three different classes according to the problem formulation. Some works treat augmentation searching as a standard gradient-free optimization problem [20, 21, 23, 30, 52, 59, 60], whilst other methods approach it from the gradient perspective by means of various gradient approximation algorithms [22]. Other options re-parameterize the entire AutoDA problem in a way that eliminates the need for searching - so called search-free approaches.

4.1.1 Gradient-free

Gradient-free approaches search for the best parameters of the augmentation policy through model hyper-parameter optimization without gradient approximation. Intuitively, such optimization can be accomplished by selecting several values for each hyper-parameter, training a model on the target task for each combination, and then comparing the evaluation metrics of the resulting models across all hyper-parameter values. The first attempt to automate such a search process was Transformation Adversarial Networks for Data Augmentations (TANDA) [52], which utilizes a Generative Adversarial Network (GAN) architecture at its core. The objective of the generator is to propose appropriate sequences of arbitrary augmentation operations, which are then sent to a discriminator for effectiveness assessment. The problem formulation in [66] motivated the deep learning community to explore other methods such as AA [20]. AA inherits the augmentation sequence modeling in [10], but applies a different strategy based on Reinforcement Learning (RL). Several possible augmentation policies are sampled via a Recurrent Neural Network (RNN) controller and are then assessed by training a simplified child model instead of the given classification model. Despite its promising performance in terms of model improvement, AA has a non-negligible limitation: its extremely low efficiency. It can take up to 15,000 GPU hours to complete a search over ImageNet data. Even with the smallest CIFAR-10 set, AA still requires thousands of GPU hours to complete a single run.

The majority of the later works on this topic aim to improve efficiency and reduce computational cost. For example, [30] utilizes a similar reinforcement learning method, but slightly modifies the search procedure by sharing the same augmentation parameters from earlier stages. Such auto-augment techniques can be further improved through the application of advanced evolutionary algorithms such as Population-Based Training (PBT) [67]. Some simple search algorithms have also been found to help accelerate the first stage. For instance, [60, 63] replace the original RNN controller with a traditional Greedy Breadth First Search algorithm to simplify the process, and therefore reduce the overall computation cost. In addition to the selection of the search algorithm, modification of the evaluation function can also greatly reduce the computational demands. A landmark work in this direction is Fast AutoAugment (Fast AA) [21], which takes advantage of the variable kernel density [68] and proposes an efficient density matching algorithm as a substitute. In the AutoDA context, the data density represents the overall distribution of data. Instead of training a classification model, density matching evaluates DA policies by comparing the distribution of the original training set with that of the transformed data. Such algorithms eliminate the need for re-training the model and hence result in a significant efficiency boost.

Another approach is to focus on the effectiveness or precision of the learned augmentation policy. All of the aforementioned methods focus on the resource and time consumption of the search phase, and not much progress has been made in terms of improving classification accuracy. To fill this gap, [65] proposes a more fine-grained Patch AutoAugment (PAA) technique, which optimizes augmentation transformations targeted at local regions of images rather than the whole image. Other state-of-the-art methods from the Neural Architecture Search (NAS) field also help to increase the augmentation precision. One example is Knowledge Distillation (KD) [69] as used in [59].

4.1.2 Gradient-based

In contrast to gradient-free algorithms, approaches that approximate the gradient of the hyper-parameters to be searched are referred to as gradient-based optimizations. So far, the only two-stage approach based on gradients is Faster AutoAugment (Faster AA) [22]. It achieves a more efficient augmentation search for image classification tasks than prior methods including AutoAugment [20], Fast AA [21] and PBA [23]. The authors of Faster AA adopt a gradient approximation method, namely the Relaxed Bernoulli distribution [70], to relax the non-differentiable distributions of hyper-parameters and use their gradients as input to a standard optimization algorithm. The two consecutive phases can therefore be carried out within a single pass: the Faster AA model jointly optimizes the hyper-parameters of the augmentation policy (i.e. the generation phase) and the weights of the classification model (i.e. the application phase). The simplification of the policy search space significantly reduces the search cost compared to previous algorithms whilst maintaining performance. It should be emphasized, however, that the model trained during the search in Faster AA is discarded afterwards. To obtain the final classification result, the learned policy is applied to train the target classification model again. Hence there are still two stages involved in the Faster AA scheme.
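The relaxation idea can be illustrated with the generic relaxed Bernoulli (binary Concrete) sampler. This is a sketch of the distribution itself, not of the Faster AA code: a hard 0/1 decision on whether to apply a transformation is replaced by a value in [0, 1] that is a smooth function of the probability `p`, so a gradient with respect to `p` exists. The function names and the temperature value are assumptions for illustration.

```python
import math
import random

def _sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relaxed_bernoulli(p, temperature, rng):
    """Draw a soft sample in [0, 1] from the relaxed Bernoulli distribution.

    p:           probability of the underlying Bernoulli variable, 0 < p < 1.
    temperature: > 0; smaller values push samples towards the hard values 0 and 1.
    """
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)    # avoid log(0)
    logistic_noise = math.log(u) - math.log(1.0 - u)  # sample of Logistic(0, 1)
    logit_p = math.log(p) - math.log(1.0 - p)
    return _sigmoid((logit_p + logistic_noise) / temperature)
```

Thresholding the soft sample at 0.5 recovers an ordinary Bernoulli draw with probability `p`, while the soft value itself can be used to blend the original and the transformed image during the search. Deep learning frameworks ship equivalents, for example `torch.distributions.RelaxedBernoulli` in PyTorch.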

4.1.3 Search-free

Despite the advantages of the aforementioned approaches, the added complexity of standard two-stage AutoDA methods might require prohibitive computing resources, as in the original implementation of AA in [20]. Subsequent works mainly aim to reduce the search cost [21, 23] and utilize gradient approximation [22]. However, these approaches still require an expensive search stage, which usually relies on a simplified proxy task to alleviate efficiency issues. This setting presumes that the DA policy learned on the proxy task can be transferred to the larger target dataset. However, such assumptions are challenged in [31]. According to the findings in [31], a proxy task might produce sub-optimal DA policies.

To solve the aforementioned problems, several works aim to re-formulate the search problem in AutoDA. These approaches are acknowledged as search-free methods due to the complete exclusion of the search phase. By challenging the optimality of traditional AutoDA methods, search-free approaches re-parameterize the entire search space, resulting in a small number of hyper-parameters, which can be manually adjusted. Therefore, there is no need to conduct the search anymore [62]. Additionally, it is now feasible to directly learn from the full target dataset instead of a reduced proxy task. Therefore, AutoDA models may learn an augmentation policy more tailored to the task of interest instead of through small proxy tasks.

Existing works such as [58] and [31] both belong to the search-free category. Both approaches completely re-parameterize the entire search space so that there is no need to perform resource-intensive searches at all. RandAugment (RA) replaces the enormous search space with a small search space controlled by only two parameters. Both parameters are human-interpretable such that a simple grid search is quite effective. Inspired by RA, UniformAugment (UA) further reduces the complexity of the search space by assuming the approximate invariance of the augmentation space, where uniform sampling is sufficient. Both methods completely avoid a search phase and dramatically increase the efficiency of AutoDA algorithms while maintaining their performance.
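The uniform-sampling idea can be sketched as follows. This is a sketch under assumptions rather than the UA implementation: it assumes that, for every image, a few transformation functions are drawn uniformly, each with a probability and a magnitude drawn uniformly from [0, 1). The TF names and the `tfs` mapping from names to callables are hypothetical.

```python
import random

TF_NAMES = ["rotate", "shear_x", "posterize", "color"]  # illustrative TF set

def sample_uniform_policy(num_ops, rng):
    """Draw (tf_name, probability, magnitude) triples uniformly at random."""
    return [(rng.choice(TF_NAMES), rng.random(), rng.random()) for _ in range(num_ops)]

def apply_policy(image, policy, tfs, rng):
    """Apply each TF with its sampled probability; `tfs` maps names to callables."""
    for name, prob, magnitude in policy:
        if rng.random() < prob:
            image = tfs[name](image, magnitude)
    return image
```

Nothing in this procedure is tuned, which is what removes the search phase entirely. The only remaining design choices are the TF set and the number of operations per image.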

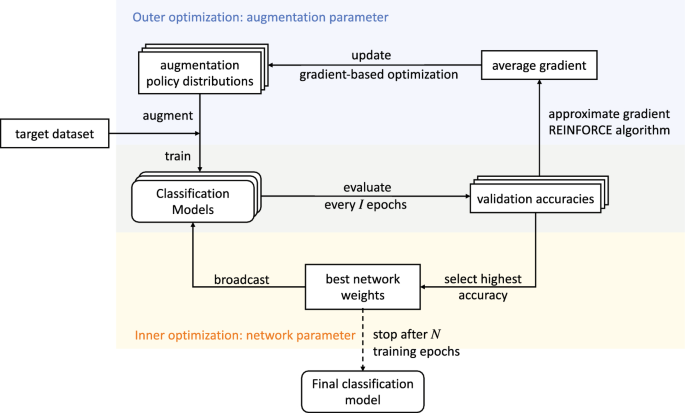

4.2 One-stage methods

The biggest difference between two-stage and one-stage approaches is the joint optimization process in the latter. Previous approaches in the two-stage category mainly rely on an additional surrogate model for policy sampling. They then evaluate the sampled policies via full training on another classification network. The expensive training and evaluation procedure leads to efficiency bottlenecks of AutoDA techniques. To mitigate this issue, one-stage approaches complete the policy generation and application in one single step, eliminating the need for repetitive model training. In standard one-stage schemes, the weights of the classification network and the hyper-parameters of the augmentation policy are optimized simultaneously. This is implemented by a bi-level optimization scheme [71].

At the inner level, they seek to optimize the weights of the discriminative network, whilst at the outer level they look for the hyper-parameters that describe the optimal augmentation policy, under which the best-performing model is obtained as the solution to the inner problem. Due to the dependency between inner and outer level optimization, the learning of these two goals is conducted in an interleaved way. Specifically, a separate augmentation network is adopted to describe the probability distribution of sampled policies. The parameters of such a policy model are regarded as hyper-parameters, which are updated after a given number of epochs of inner training [53, 54]. In this bi-level framework, the distribution hyper-parameters and network weights are optimized simultaneously. The minimization of the training loss (inner objective) can be easily achieved through classical Stochastic Gradient Descent (SGD), while the vanilla gradient of the outer objective is relatively hard to obtain, as the model accuracy is non-differentiable with regard to the augmentation hyper-parameters. Therefore, one-stage AutoDA models need to leverage gradient approximation to estimate such gradients for later optimization. In other words, all one-stage approaches in AutoDA are based on gradients.
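One common way to write this bi-level problem is given below. The notation is an assumption introduced for illustration: \(w\) denotes the network weights, \(\theta\) the hyper-parameters of the policy distribution \(p_{\theta}\), \(\tau\) a sampled augmentation policy, and \(\mathcal{L}_{\text{train}}\), \(\mathcal{L}_{\text{val}}\) the training and validation losses.

\[
\theta^{*} = \arg\min_{\theta} \; \mathcal{L}_{\text{val}}\big(w^{*}(\theta)\big)
\quad \text{s.t.} \quad
w^{*}(\theta) = \arg\min_{w} \; \mathbb{E}_{\tau \sim p_{\theta}} \Big[ \mathcal{L}_{\text{train}}\big(w;\, \tau(D_{\text{train}})\big) \Big]
\]

The inner problem is solved approximately by a few SGD steps on \(w\). The outer update requires \(\nabla_{\theta} \mathcal{L}_{\text{val}}\), which is not directly available because sampling \(\tau\) is a discrete operation; this is where the gradient approximation enters.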

4.2.1 Gradient-based

As the name suggests, gradient-based models optimize the augmentation policy from the perspective of gradients. They have to rely on gradient approximation because the original model accuracy is non-differentiable with regard to the augmentation policy distribution. Only after the distribution has been relaxed can the gradient of the validation accuracy or training loss with regard to the hyper-parameters be obtained. Gradient-based approaches have several advantages. Because the relaxed objective is differentiable, gradient-based methods can directly optimize the hyper-parameters according to the estimated gradient. There is no need to invest a significant amount of time in training child models to test sampled policies, which substantially reduces the workload of policy evaluation. The removal of expensive evaluation procedures also enables the AutoDA algorithm to scale up to even larger datasets and deeper models. The first one-stage AutoDA work based on gradients was Online Hyper-parameter Learning AutoAugment (OHL-AA) [54] in 2019, based on the REINFORCE gradient estimator [55]. The augmentation policy model in OHL-AA is similar to previous works [20, 21], while the original search problem is reformulated as a bi-level optimization task. Published in the same year, Adversarial AutoAugment (AAA) [53] employs the same gradient approximator in an adversarial framework, which further eases the efficiency issue. As NAS techniques developed in 2020, Differentiable Automatic Data Augmentation (DADA) [57] and Automated Dataset Optimization (AutoDO) [62] adopted the more advanced DARTS estimator [56].
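The REINFORCE estimator mentioned above can be illustrated on a toy problem. The sketch below is the generic score-function estimator for a categorical distribution over candidate operations, not the OHL-AA or AAA implementation. The reward table stands in for the validation accuracy obtained with each operation, and the learning rate and moving-average baseline are illustrative choices.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_step(logits, reward_fn, rng, lr=0.1, baseline=0.0):
    """One REINFORCE update of categorical logits; returns (new_logits, reward)."""
    probs = softmax(logits)
    action = rng.choices(range(len(logits)), weights=probs, k=1)[0]
    reward = reward_fn(action)
    advantage = reward - baseline
    # Gradient of log p(action) w.r.t. logit k is 1[k == action] - p_k.
    new_logits = [
        logit + lr * advantage * ((1.0 if k == action else 0.0) - probs[k])
        for k, logit in enumerate(logits)
    ]
    return new_logits, reward

def train_policy(rewards, steps=3000, seed=0):
    """Learn a distribution over operations from sampled rewards only."""
    rng = random.Random(seed)
    logits = [0.0] * len(rewards)
    baseline = 0.0
    for _ in range(steps):
        logits, r = reinforce_step(logits, lambda a: rewards[a], rng, baseline=baseline)
        baseline = 0.9 * baseline + 0.1 * r  # moving average reduces variance
    return softmax(logits)
```

The update only needs the reward of the sampled action, so the reward itself never has to be differentiable. The price is high variance, which is one reason later works moved to relaxation-based estimators.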

5 Two-stage approaches

In this section, we review two-stage strategies in detail, with focus on the pipeline of the algorithms. We start from the fundamental definition of the augmentation parameters and corresponding search space used in each method. After that, the core algorithms are explored along with their overall workflow. Following that, the major contribution of each method is covered based on experimental results provided in the original paper. Then, we provide a systematic analysis and evaluate the pros and cons of the different two-stage category approaches. Finally, we compare all available two-stage algorithms from the perspective of their accuracy and efficiency, and give suggestions on model selection from a practical application perspective.

5.1 Gradient-free optimization

5.1.1 Transformation adversarial networks for data augmentations (TANDA)

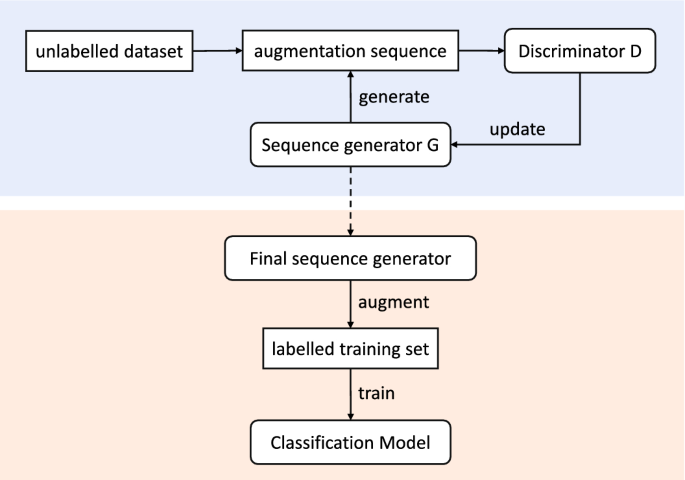

TANDA is considered to be the earliest work supporting automatic discovery of optimised data augmentation policies. Even though other works aimed at automating data augmentation, most of them focused on either creating innovative augmentation algorithms [10], or generating synthetic training data based on a given set of starting images [24]. TANDA, on the other hand, used only the basic image operations based on a user’s specification, and output a sequence of transformation functions as the final augmentation policy. This made it more relevant to many scenarios with diverse data augmentation demands.

An augmentation policy is represented as a sequence of image processing operations in [52]. Users need to specify a range of augmentation operations for the TANDA model to select from, which are also called Transformation Functions (TFs). In order to support various types of TFs, TANDA regards them as black-box functions, ignoring implementation details and considering only the final effect of each transformation. For instance, a \(30^{\circ }\) rotation can be achieved with one single TF, or alternatively it can be split into a combination of three \(10^{\circ }\) rotation transformations. The policy modelling in TANDA might not be deterministic or differentiable, but it provides an applicable way of tuning the TF hyper-parameters.

The major objective of TANDA is to learn a model that can generate augmentation policies composed of a fixed number of TFs. Depending on the types of TFs, the DA policy is modelled in two different ways. The first policy model, namely the straightforward mean field model, assumes that each TF in an augmentation policy is selected independently. Therefore, the probability of each operation is optimized individually. Mean field modelling largely reduces the number of learnable hyper-parameters during the search. However, this independent representation can be biased, especially when TFs affect each other. In practical scenarios, a certain image processing operation can lead to totally different effects if applied together with other TFs. The actual order of TF application also matters when some of the TFs are not commutative. To fully represent the interaction among augmentation TFs, TANDA offers another option to model DA policies, the Long Short-Term Memory (LSTM) network. The LSTM model in TANDA outputs probability distributions over all TFs, which emphasizes the relationships among the searched TFs.
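The independence assumption of the mean field model, and the reason why it can be limiting, can be shown in a few lines. This is an illustrative sketch only: the sampler, the TF names and the two toy operations on a one-dimensional "image" are hypothetical and are not taken from the TANDA code.

```python
import random

def sample_mean_field(tf_probs, length, rng):
    """Mean field model: each position picks a TF independently of the others."""
    names = list(tf_probs)
    weights = [tf_probs[n] for n in names]
    return rng.choices(names, weights=weights, k=length)

# Two toy TFs on a 1-D "image" whose order of application matters.
def shift_right(img):
    """Move every pixel one position to the right, padding with 0."""
    return [0.0] + img[:-1]

def zero_first(img):
    """Mask the first pixel."""
    return [0.0] + img[1:]

img = [1.0, 2.0, 3.0]
shift_then_mask = zero_first(shift_right(img))  # [0.0, 1.0, 2.0]
mask_then_shift = shift_right(zero_first(img))  # [0.0, 0.0, 2.0]
```

Since the two orders give different results, a model that scores each TF in isolation cannot distinguish them. A sequence model such as the LSTM conditions each choice on the TFs already selected and can therefore capture such order effects.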

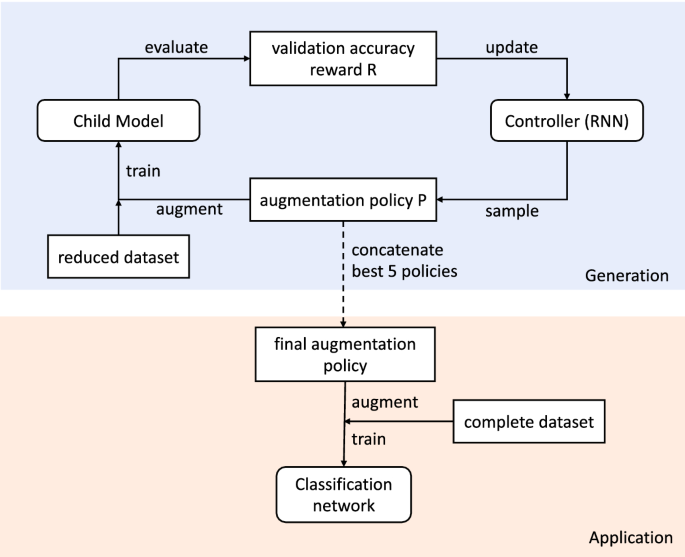

TANDA workflow [52]. Upper/lower sections indicate the policy generation/application stage respectively

The TANDA model applies the standard GAN architecture, consisting of a generator G and a discriminator D. The general workflow of TANDA is illustrated in the figure above. There are two stages involved in TANDA: policy generation and application. The policy generation phase can be viewed as a classical min-max game in GAN. The goal of the sequence generator G is to sample DA policies that are most likely to fool the discriminator D, while D tries to distinguish the transformed images from the original data. This is done by assigning reward values to the input data. Ideally, in-distribution data points will get higher values, whereas the images generated via augmentation will be assigned lower rewards. The reward information is then used to update G for the next policy sampling. After the search is completed, the final generator is used to augment the original training set to better train the classification network.

TANDA has several advantages. Firstly, the performance improvement delivered by TANDA is convincing. In the experimental results of [52], TANDA outperforms most contemporaneous heuristic DA approaches. In terms of problem formulation, the LSTM policy model tends to be more effective than the mean field representation in most cases, which empirically encourages sequence modelling in the AutoDA scheme. The proposal of these two policy models is considered to be the most significant contribution of TANDA. The representation of augmentation transformations inspired AutoAugment (AA) [20], which also utilized the LSTM model for policy prediction. Furthermore, the positive influence resulting from sequential modelling provides empirical support for the later Population-Based Augmentation (PBA) [23], which outputs application schedules rather than a fixed policy. The use of unlabelled data is also a favorable characteristic, especially for tasks with limited data. Additionally, a trained TANDA model shows a certain degree of robustness against TF mis-specification. TANDA places no limitation on the selection of the TF range and no safety requirement on the available transformations, so it is much easier for users to apply in practice. More importantly, TANDA is open-source and can be adapted and applied to any task with limited datasets, not only in the imaging domain but also for text data.

5.1.2 AutoAugment (AA)

AutoAugment (AA) [20] is one of the most popular AutoDA approaches. The majority of subsequent works in this field [21,22,23] adopt a setup similar to that of AA, especially in the definition of the search space and policy model. The AA algorithm itself does not provide an optimal solution to the policy search problem because of its severe efficiency issues. However, as the authors of AA emphasize, the fundamental contribution of AA lies in the automated approach to DA and the development of the search space, rather than the search strategy.

AA formulates the automation of DA policy design as a discrete search problem. In AA, an augmentation policy is a composition of 5 sub-policies, each of which is applied to one training batch. One sub-policy consists of two sequential transformation functions, such as geometric translation, flipping or colour distortion. Each TF in an augmentation policy is described by two hyper-parameters, i.e. the probability of applying this transformation and the magnitude of the application. Inspired by TANDA, the application sequence of these TFs is emphasized. For simplification, the range of probability and magnitude is discrete. The probability is evenly discretized into 11 values, ranging from 0 to 1, whilst the magnitude is selected from the positive integers from 1 to 10. The 14 operations implemented in AA are all from the standard Python Imaging Library (PIL). Two additional augmentation techniques, Cutout [45] and SamplePairing [46], are also considered due to their effectiveness in classification tasks. Overall, there are 16 distinct TFs in AA’s search space. Since a policy contains \(5\times 2=10\) TFs, each with 16 operations, 10 magnitudes and 11 probabilities to choose from, finding an augmentation policy via AA has \((16\times 10\times 11)^{10}\approx 2.9\times 10^{32}\) possibilities.
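
The policy structure and the size of the search space described above can be checked with a short sketch. The class and function names (`Op`, `sample_policy`) are hypothetical and introduced only for illustration; the operations are represented by integer indices rather than real image transformations.

```python
import random
from dataclasses import dataclass

NUM_OPS = 16           # distinct TFs in AA's search space
NUM_PROBS = 11         # probabilities 0.0, 0.1, ..., 1.0
NUM_MAGS = 10          # magnitudes 1, 2, ..., 10
NUM_SUBPOLICIES = 5    # sub-policies per policy
OPS_PER_SUBPOLICY = 2  # sequential TFs per sub-policy


@dataclass(frozen=True)
class Op:
    """One TF with its two hyper-parameters (hypothetical container)."""
    op_id: int
    prob: float
    magnitude: int


def sample_policy(rng):
    """Sample one policy uniformly at random from the discrete search space."""
    return [
        tuple(
            Op(
                op_id=rng.randrange(NUM_OPS),
                prob=rng.randrange(NUM_PROBS) / 10,
                magnitude=rng.randint(1, NUM_MAGS),
            )
            for _ in range(OPS_PER_SUBPOLICY)
        )
        for _ in range(NUM_SUBPOLICIES)
    ]


choices_per_op = NUM_OPS * NUM_MAGS * NUM_PROBS  # 1760 settings per TF slot
search_space = choices_per_op ** (NUM_SUBPOLICIES * OPS_PER_SUBPOLICY)
print(f"{search_space:.1e}")  # 2.9e+32

policy = sample_policy(random.Random(0))
```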

AutoAugment workflow [20]. Upper/lower sections indicate the policy generation/application stage respectively

To automate the process of constructing DA policy, AA has to search over an enormous search space. It now becomes a discrete search problem using the aforementioned formulation. At a high level, the workflow of AA is displayed in the figure above. One of the key components in the AutoDA model is the search algorithm. In the search phase, the search algorithm is used to generate an augmentation policy, which is then evaluated for updates. AA chooses a simple Recurrent Neural Network (RNN) as its search algorithm/controller to sample policy P. The evaluation procedure is done through model training, but using reduced data and a simplified model. Such a model is also called a child model, due to its similar but much simpler architecture when compared to the final classification network. After testing the trained child model on a validation set, the validation accuracy is regarded as reward R to update the search controller. Generally, the reward signal R reflects how effective a policy P is in improving the performance of a child model. The training of the child model has to be done multiple times, because R is not differentiable with respect to the policy hyper-parameters, i.e. probability and magnitude.
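
The sample, evaluate and update loop described above can be sketched in a heavily simplified form. This is not AA's implementation: a plain REINFORCE update over a single categorical choice stands in for the RNN controller, and the hypothetical function `toy_child_reward` replaces child-model training and validation. All names and constants are assumptions for illustration.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_child_reward(op_index, rng):
    """Hypothetical stand-in for the child model's validation accuracy R."""
    return 0.5 + 0.1 * op_index + rng.gauss(0.0, 0.01)


def search(num_ops=4, steps=200, lr=0.5, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * num_ops  # controller parameters
    baseline = 0.0            # moving average of past rewards
    for _ in range(steps):
        probs = softmax(logits)
        op = rng.choices(range(num_ops), weights=probs, k=1)[0]  # sample P
        reward = toy_child_reward(op, rng)                       # evaluate P
        advantage = reward - baseline
        # REINFORCE: d log p(op) / d logit_j = 1[j == op] - p_j
        for j in range(num_ops):
            grad = (1.0 if j == op else 0.0) - probs[j]
            logits[j] += lr * advantage * grad
        baseline = 0.9 * baseline + 0.1 * reward
    return softmax(logits)


final_probs = search()
print([round(p, 3) for p in final_probs])
```

The reward enters the update only as a scalar weight on the log-probability gradient, which is why the controller can be trained even though R is not differentiable with respect to the policy hyper-parameters. In AA each reward requires training a child model, so every iteration of this loop is expensive.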

Through extensive experiments, AA achieves excellent results. It can be directly applied to the target data and achieves competitive model accuracy. Experiments in [20] report state-of-the-art results for common datasets, including CIFAR-10/100, ImageNet and SVHN. AA not only shows superiority in terms of DA policy design, but also provides the option of transferring the searched policy to other similar data. For example, the augmentation policy learned on CIFAR-10 can function well on the similar CIFAR-100 data. There is no need to conduct expensive searches on the latter, as the policies discovered by AA generalize over multiple models and datasets. This is a viable alternative, especially when direct search is unaffordable. Another advantage of AA is its simple structure and procedure. The search phase is actually conducted over a subset of data, using a simplified child model. Those simplifications provide direct evaluation of augmentation policies, without recourse to any complicated approximation algorithms. More importantly, AA standardizes the modelling of the augmentation policy and search space in the AutoDA field. The policy model it designs has been widely acknowledged as the de facto solution.

However, AA has serious disadvantages. The choice of algorithms in AA can be substantially improved. It applies Reinforcement Learning as the search algorithm, but this selection is made mainly out of convenience. The authors of AA also indicate that other search algorithms, such as genetic programming [72] or even random search [73, 74], may further improve the final performance. Furthermore, the reduced dataset and simplified model used during the search phase can result in sub-optimal results. According to [31], the power of an augmentation policy largely depends on the size of the model and dataset. Therefore, simplification in AA is likely to introduce bias into the found policy. Additionally, the final policy is formed by a simple concatenation of the 5 best policies found in the data batch. The application schedule of these policies is not considered in AA. The greatest shortcoming of AA lies in its efficiency. Evaluation of augmentation policies relies on expensive model training. Due to the stochasticity of DA policies introduced by the probability hyper-parameter, such training has to be conducted for a certain number of epochs until the policy starts to take effect. In most cases, running AA is extremely resource-intensive, which raises time and cost issues. This has also become the major challenge for AutoDA tasks and has motivated multiple later methods that aim at improving efficiency.

5.1.3 Augmentation-wise weight sharing (AWS)

A major reason for the inefficiency of AA is the repeated training process during policy evaluation. To enhance the efficiency of evaluation, some methods [23, 53, 54] sacrifice reliability to some extent. In contrast, Augmentation-wise Weight Sharing (AWS) designs a proxy task based on the weight sharing concept in NAS, proposing a faster but still accurate evaluation process. The augmentation policies found by AWS also achieve competitive accuracy when compared to other AutoDA methods.

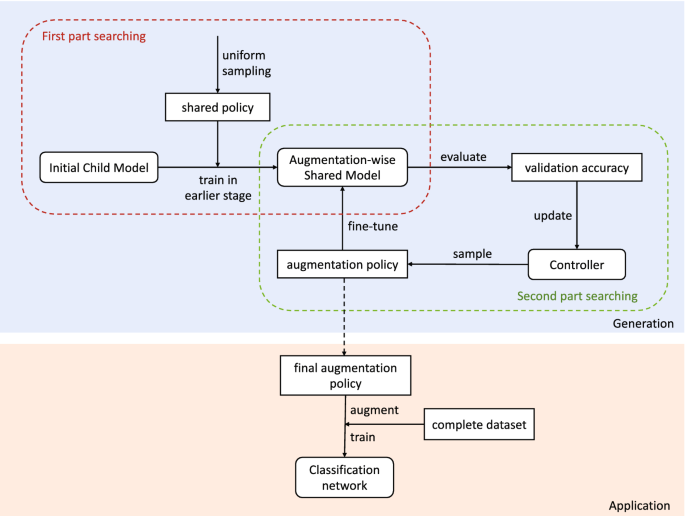

Augmentation-wise Weight Sharing workflow [30]. Upper/lower sections indicate the policy generation/application stage respectively

Inspired by the idea of early stopping, the authors of AWS hypothesize that the benefit of DA is mainly shown in the later phase of training. This assumption is supported through empirical observations in [30]. Motivated by this observation, AWS proposes a new proxy task to test the sampled policies. In this proxy task, the original search stage is split into two parts. The AWS pipeline is displayed in the figure above. In the early stage, the child model is trained using a fixed augmentation strategy, i.e. a shared policy. During this phase, the search controller will not sample policies or be updated. Only the child model used for policy evaluation will be trained for a certain number of epochs. The network weights obtained after the first part of training will be shared and reused during the later evaluation, so that the AWS model does not need to repeat the full training for each of the sampled policies. The major challenge here is to select a representative shared policy for the initial stage. According to the findings in [30], simple uniform sampling can work for most tasks.

In the second part of searching, AWS samples augmentation policies via a controller, and updates the model according to an associated accuracy reward. The reward information is obtained from the shared model, instead of an untrained child model. Therefore, in order to evaluate the sampled policies, it is only necessary to resume training for a few epochs using these policies. Since the training of the child model in AWS is divided into two parts based on the different DA policies utilized, AWS is an augmentation-wise algorithm. The idea of weight sharing originates from NAS, where training from scratch is prohibitively expensive. This scheme substantially accelerates the overall evaluation procedure. The design of proxy tasks in AWS is flexible, so it can be combined with other search algorithms. Standard AWS follows a similar setting to the original AA [20] applying Reinforcement Learning (RL) techniques.
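
The two-part evaluation described above can be sketched as follows. The `ToyChildModel` class is a hypothetical stand-in that only counts training epochs, and the epoch budgets and number of candidate policies are assumed values rather than settings reported in [30]. The controller update is omitted; the sketch only shows how shared weights reduce the training cost.

```python
import copy


class ToyChildModel:
    """Hypothetical child network that only records how long it was trained."""

    def __init__(self):
        self.epochs_trained = 0

    def train(self, policy, epochs):
        # A real model would apply `policy` to each batch; here we only count.
        self.epochs_trained += epochs


def aws_evaluate(candidate_policies, shared_policy, shared_epochs, finetune_epochs):
    """Train once with the shared policy, then resume briefly per candidate."""
    shared_model = ToyChildModel()
    shared_model.train(shared_policy, shared_epochs)  # part 1: done only once
    total_epochs = shared_epochs
    trained = {}
    for policy in candidate_policies:
        model = copy.deepcopy(shared_model)           # reuse the shared weights
        model.train(policy, finetune_epochs)          # part 2: a few epochs
        total_epochs += finetune_epochs
        trained[policy] = model.epochs_trained
    return trained, total_epochs


def naive_cost(num_policies, full_epochs):
    """Cost in epochs when every candidate is trained from scratch."""
    return num_policies * full_epochs


policies = [f"policy_{i}" for i in range(100)]
trained, aws_cost = aws_evaluate(
    policies, "uniform_shared", shared_epochs=190, finetune_epochs=10
)
print(aws_cost, naive_cost(len(policies), 200))  # 1190 20000
```

Under these assumed budgets, each candidate is still evaluated on a model that has seen 200 epochs in total, while the overall cost drops from 20000 to 1190 epochs.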

The major contribution of AWS is its finding about the period in which data augmentation is effective. The empirical conclusion in [30] is that DA policies mainly improve the model in the late training phase. This observation underlies the greatest innovation in AWS: its unique augmentation-wise proxy task, which substitutes for the traditional evaluation procedure. By sharing the policy in the early phase of searching, the child model only needs to be pre-trained once. The shared augmentation policy in the first part of the search is selected via uniform sampling over the search space. The network weights are then re-used in the second part of the search to evaluate each of the sampled augmentation policies. There is no need to conduct child model training from scratch thousands of times. Compared to the original AA [20], it is much more efficient to obtain reward signals in AWS through the use of weight-sharing strategies. The efficiency gains of AWS give it the potential to scale to even larger datasets. Moreover, according to [30], the evaluation process in AWS is still reliable. This is because in the second part of searching, the child model will be fine-tuned by DA policies to reflect the strength of each of the policies.

The disadvantages of AWS cannot be ignored, however. Overall, AWS makes excessive simplifications aimed at increasing search efficiency. For example, sampled policies are evaluated by child models on reduced data, and the early stage of training is substituted by shared model weights. Such settings might lead to sub-optimal results. According to the findings in [31], the final policy that the AWS model outputs may be tailored more to the proxy task than to the target dataset. In terms of the search algorithm, AWS utilizes the same RL framework as AA and brings little improvement, especially when compared with methods such as Fast AA [21] and PBA [23]. Lastly, AWS is not open-source, which makes it less accessible for users.

5.1.4 Greedy AutoAugment (GAA)

To improve the search efficiency, Greedy AutoAugment (GAA) [60, 63] adopts a completely different algorithm. The GAA model applies a greedy search algorithm to exponentially reduce its complexity when sampling the next policy to be searched. In the experiments conducted in [60], the TFs learned by GAA are able to further enhance the generalization ability of the classification model. Moreover, the greedy idea in GAA can be a reliable complement to other search approaches in AutoDA tasks.

The policy model of GAA follows a setup similar to that of AA [20]. A complete augmentation policy comprises k sub-policies, each of which contains two consecutive TFs. Each TF is described by two essential hyper-parameters: probability and magnitude. The values of these two parameters are modeled following the same discretization as described earlier. There are 11 values for the probability parameter, ranging from 0 to 1 with uniform spacing, whilst the discrete values for magnitude are positive integers ranging from 1 to 10. However, GAA employs a wider range of augmentation transformations. There are 20 available image transformation functions in GAA that can be selected to form the DA policy, including 4 extra operations compared to the original AA. Assuming each augmentation policy contains L image operations, where L is a positive integer, the size of the search space is \((20\times 11\times 10)^L\). In this setup, the search space grows exponentially with L, so an exhaustive search becomes infeasible for larger values of L.
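
The exponential growth of this search space can be made concrete with a few values of L. The function name `gaa_search_space` and the chosen values of L are hypothetical and used only for illustration.

```python
def gaa_search_space(num_operations, num_tfs=20, num_probs=11, num_mags=10):
    """Number of distinct policies containing `num_operations` image operations."""
    return (num_tfs * num_probs * num_mags) ** num_operations


for L in (1, 2, 4, 10):
    print(L, f"{gaa_search_space(L):.2e}")
```

Each additional operation multiplies the number of candidate policies by \(20\times 11\times 10=2200\), which is the growth that the greedy search in GAA is designed to avoid.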