Abstract

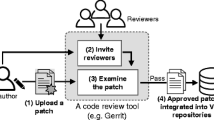

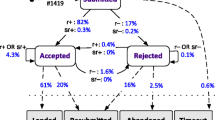

Modern Code Review (MCR) plays a key role in software quality practices. In the MCR process, a new patch (i.e., a set of code changes) should be examined by reviewers to identify weaknesses in the source code before it is integrated into the main software repositories. To mitigate the risk of future defects, prior work suggests that MCR should be performed with sufficient review participation. Indeed, recent work shows that a low number of participating reviewers is associated with poor software quality. However, a new patch may still suffer from poor review participation even though reviewers were invited. Hence, in this paper, we set out to investigate the factors that are associated with the participation decision of an invited reviewer. Through a case study of 230,090 patches spread across the Android, LibreOffice, OpenStack and Qt systems, we find that (1) 16%–66% of patches have at least one invited reviewer who did not respond to the review invitation; (2) human factors play an important role in predicting whether or not an invited reviewer will participate in a review; and (3) the review participation rate and the code authoring experience of an invited reviewer are highly associated with that reviewer's participation decision. These results can help practitioners better understand how human factors are associated with the participation decision of reviewers, and can serve as guidelines for inviting reviewers, leading to better invitation decisions and better reviewer participation.
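Finding (1) and the participation-rate factor in finding (3) are both simple ratios over review-invitation records. The sketch below shows one way they might be computed, assuming a hypothetical record layout (`Invitation` with `patch_id`, `reviewer`, `responded`) in which a reviewer counts as having responded if they voted or commented on the patch. It is a simplified illustration rather than the study's exact metric definitions: for instance, the participation rate here is computed over the whole history, whereas a predictive setting would more likely count only invitations received before the patch under review.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Invitation:
    """One review invitation (hypothetical record layout, not the study's schema)."""
    patch_id: str
    reviewer: str
    responded: bool  # assumption: True if the reviewer voted or commented on the patch


def share_of_patches_with_non_responder(invitations):
    """Fraction of patches with at least one invited reviewer who did not respond."""
    patches = {inv.patch_id for inv in invitations}
    if not patches:
        return 0.0
    silent = {inv.patch_id for inv in invitations if not inv.responded}
    return len(silent) / len(patches)


def participation_rate(invitations):
    """Per-reviewer share of received invitations that the reviewer responded to.

    Simplification: uses all invitations, ignoring their time order.
    """
    received = defaultdict(int)
    responded = defaultdict(int)
    for inv in invitations:
        received[inv.reviewer] += 1
        responded[inv.reviewer] += int(inv.responded)
    return {r: responded[r] / received[r] for r in received}


# Made-up sample data for illustration only.
SAMPLE = [
    Invitation("p1", "alice", True),
    Invitation("p1", "bob", False),
    Invitation("p2", "alice", True),
    Invitation("p3", "bob", True),
    Invitation("p3", "carol", False),
]

if __name__ == "__main__":
    print(round(share_of_patches_with_non_responder(SAMPLE), 3))  # 0.667
    print(participation_rate(SAMPLE))  # {'alice': 1.0, 'bob': 0.5, 'carol': 0.0}
```

In the sample, patches p1 and p3 each have one silent invitee, so two of the three patches count toward the first ratio.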

Notes

We provide a full list of questions online at https://goo.gl/forms/Du48JXAsbBhKSeSx2.

Acknowledgments

This research was partially supported by JSPS KAKENHI Grant Numbers 16J02861 and 17H00731, and by the Support Center for Advanced Telecommunications Technology Research, Foundation (SCAT). We would also like to thank Dr. Chakkrit Tantithamthavorn for his insightful comments and the survey participants for their time.

Additional information

Communicated by: Lin Tan

About this article

Cite this article

Ruangwan, S., Thongtanunam, P., Ihara, A. et al. The impact of human factors on the participation decision of reviewers in modern code review. Empir Software Eng 24, 973–1016 (2019). https://doi.org/10.1007/s10664-018-9646-1

DOI: https://doi.org/10.1007/s10664-018-9646-1