Abstract

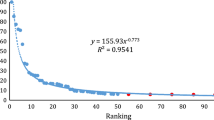

League tables that rank universities may use reputational measures, performance measures, or both. Each type of measure has strengths and weaknesses. In this paper, we rank disciplines in Australian universities both by reputation, using an international survey of senior academics, and with actual performance measures. We then compare the two types of measures to see how closely they match. The criterion we use for both sets of measures is ‘international academic standing’. We find a high correlation between the survey results and the various measures of research performance. We also find a correlation between the quality of student intake and the survey rankings, but the satisfaction levels of recent graduates do not correlate well with the rankings by academics. We then construct an overall measure of performance, which gives very similar rankings to the survey results, especially for the top-ranked institutions.

Introduction

Globalisation has affected higher education by opening up the choices for students, academics and funding agencies. These groups now explore a wider range of options than in the past before deciding where to study, where to teach and research, and what work to fund. To make informed choices, stakeholders need information about the nature and quality of institutions and programs. This need is being met by a proliferation of rating and ranking exercises, as summarized in Dill and Soo (2005), Van Dyke (2005) and Sadlak and Liu (2007).

League tables that rank universities may use reputational measures, performance measures, or both. The reputation of a university is based on its performance over a period of time. In this paper, we are interested in examining the extent to which reputation matches reality, or current performance.

The reputation of a university may differ across groups in society, ranging from the opinions of the population as a whole to those of academics within a discipline. The better informed the respondents, the narrower the gap between reputation and current performance is likely to be. In the longer run, of course, there is a limit to the extent to which reputation and performance can diverge.

In general terms, reputation is measured through surveys, and current performance is measured from compilations of data relating to attributes such as research output and its impact, and teaching quality. Some rankings, such as those developed by researchers at Shanghai Jiao Tong University (SJTU), use only performance measures, whereas others, such as those by the Times Higher Education Supplement-QS (THES-QS), combine both reputational and performance measures. Footnote 1

In this paper, we rank disciplines in Australian universities using ‘international academic standing’ as the criterion. Given this criterion, we measure reputation by surveying leading scholars in the various disciplines, within Australia and internationally. This group is relatively well informed, and so we expect less divergence here between reputation and performance than if we surveyed, say, all past university graduates in the discipline. Were we to use a different criterion, we might choose a different group of people to survey. Different groups of respondents may use quite different criteria, so the choice of respondents is important. The institutional rankings developed by THES-QS, for example, use surveys of both academics and employers in order to encompass different attributes of institutions.

The performance measures we use relate to research output and its impact, research training, and teaching. The seven disciplines or groupings of disciplines that we evaluate are: Arts & Humanities, Business & Economics, Education, Engineering, Law, Medicine, and Science.

By relating survey results back to the quantitative performance measures we are able to throw light on the question of what determines peer opinion of international standing, and the extent to which performance and reputation, at least according to academics, converge. Footnote 2

Surveys versus performance measures

What are the relative strengths and weaknesses of surveys and performance indicators? Survey results are subjective whereas performance measures are objective, but objectivity does not ensure that the measures actually chosen are always appropriate. For example, measuring research output in the humanities based on articles in refereed journals may be misleading because much output is in the form of books.

Moreover, if the overall academic standing of an institution in a discipline is to be based purely on performance measures, rating or ranking requires that a set of weights be attached to the various measures used. The choice of weights by an investigator is arguably the most controversial aspect of ranking or rating institutions. Footnote 3 A major advantage of a survey in which respondents are asked to provide rankings or ratings is that the choice of weights (including zero weights, which rule out certain performance measures) is implicitly left to the respondents.

A limitation of surveys is that respondents may be ill-informed, or may be well informed only about an institution as it was some time ago. Another limitation of surveys is that, on their own, they do not provide information about how an institution might improve its academic standing, although this deficiency may be overcome by linking the survey results with explicit performance measures.

Survey results indicate the average view of respondents. Linking survey results to performance indicators helps to establish whether the two methods are alternative ways of arriving at the same result.

The surveys

We evaluate the performance of disciplines in the 38 universities that are members of the Australian Vice-Chancellors Committee (AVCC). Footnote 4 A full listing with abbreviations used is given in Appendix Table A1.

We sent questionnaires by mail to deans, departmental heads and full professors, in the seven discipline areas, in Australia and overseas. We used separate questionnaires for each of the disciplines and we asked each academic only about their own discipline.

Not all universities teach all disciplines. Therefore, the number of universities included in the questionnaire ranges from the full set for Arts & Humanities, Business & Economics, and Science, to 14 for Medicine. We discuss later the methods we used to decide which universities to include in each discipline.

We selected overseas scholars from universities which fell into at least one of the following groupings: universities in the top 100 in the 2005 rankings by Shanghai Jiao Tong University (SJTU), Canadian and UK universities in the SJTU top 202 or members of the UK Russell Group, representative Asian universities in the SJTU top 400, and eight New Zealand universities. This selection method resulted in a total of 131 overseas universities. Because a few first-rate schools are located in institutions which are not included using the criteria set out above, we added for each of Business, Engineering, Medicine and Law one or two U.S. institutions that are highly ranked in the US News and World Report discipline rankings (see http://www.usnews.com/section/rankings) but would otherwise not have entered our sample. Australian scholars were selected from the universities we include in each discipline list.

We chose academics so as to provide a balance among subdisciplines. In Science, for example, we included scholars who span the biological and physical sciences. We chose sample numbers for each discipline in proportion to the diversity of the discipline, with the largest samples for Science and the Humanities and the smallest samples for Economics, Law and Education.

We sent questionnaires to overseas academics in March 2006 and to Australian scholars in June 2006. We chose large sample sizes (1620 for overseas scholars, 1029 for Australian) because previous work indicated that response rates would be low, especially for overseas scholars.

As Dill and Soo (2005) have emphasized, it is unrealistic to expect academics in one country to have a detailed knowledge of all universities in another country. Therefore, rather than asking respondents to rank all Australian universities in a discipline, we first asked them to rate all the universities using a five-point scale that included a “don’t know” option. We then asked respondents to rank only the top five universities in a discipline on the basis of international academic standing.

In the first question we asked respondents to rate universities by placing the academic standing of the discipline in each university into one of the following five categories:

- Comparable to top 100 in world
- Comparable to top 101–200 in world
- Comparable to top 201–500 in world
- Not comparable to top 500 in world
- Don’t know well enough to rate

In the second question, in addition to asking respondents to rank the top five Australian universities, we asked them to state whether they considered each of these universities to be rated in the top 25 or top 50 in the world. Combining the results of question two with those from question one enabled us to construct a seven-point scale of perceived international standing for each discipline.

In order to combine the ratings into single measures we use a linear rating scale, allocating 6 points to a university discipline in the top 25, 5 to one in the top 50, and so on, with 1 if not in the top 500 and 0 if not known. In order to combine the rankings into a single measure we again use a linear scale, allocating 5 to the first-ranked university, 4 to the second-ranked university, and so on. The top five rankings should of course be consistent with the ratings; the rankings have the effect of separating out more precisely the top-rated universities, which may have the same ratings.
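
As an illustration only, the two linear scales can be written as point lookups. This Python sketch assumes that each respondent's ratings arrive as a mapping from university to category (with the question-two top-25/top-50 answers already merged in) and that rankings arrive as an ordered top-five list. The category labels and function names are hypothetical, and summing points across respondents is our assumption about how individual returns are combined.

```python
from collections import defaultdict

# Seven-point rating scale (hypothetical labels): question one's categories,
# refined by question two's top-25 / top-50 answers for the top five.
RATING_POINTS = {
    "top 25": 6,
    "26-50": 5,
    "51-100": 4,
    "101-200": 3,
    "201-500": 2,
    "not top 500": 1,
    "don't know": 0,
}


def rating_totals(responses):
    """Sum rating points per university over respondents' {university: category} dicts."""
    totals = defaultdict(int)
    for response in responses:
        for university, category in response.items():
            totals[university] += RATING_POINTS[category]
    return dict(totals)


def ranking_totals(top_five_lists):
    """Sum ranking points: 5 for first place, 4 for second, ..., 1 for fifth."""
    totals = defaultdict(int)
    for ranked in top_five_lists:
        for position, university in enumerate(ranked[:5]):
            totals[university] += 5 - position
    return dict(totals)


# Two hypothetical respondents.
print(rating_totals([{"A": "top 25", "B": "101-200"}, {"A": "26-50", "B": "don't know"}]))
# → {'A': 11, 'B': 3}
print(ranking_totals([["A", "B"], ["B", "A", "C"]]))
# → {'A': 9, 'B': 9, 'C': 3}
```

The tie between A and B on the rankings in this toy example shows why the ratings and rankings are kept as separate components before they are combined.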

We calculate both the ratings and rankings measures separately for returns from overseas academics and from Australian academics, standardizing each of the four results by giving the highest ranked or rated university a score of 100 and expressing all other university scores as a percentage of the highest score. We then obtain an overall measure by weighting equally the four components and rescaling so that the top university is given a score of 100.
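
The standardization and equal weighting of the four survey components might be sketched as follows. The function names are hypothetical, and giving a university a zero on a component in which it does not appear (for example, one never placed in any respondent's top five) is our assumption rather than a documented step.

```python
def standardize(scores):
    """Express each score as a percentage of the highest score, so the top scores 100."""
    top = max(scores.values())
    return {u: 100.0 * s / top for u, s in scores.items()}


def overall_survey_score(components):
    """Average standardized components with equal weights, then rescale to top = 100.

    `components` is a list of {university: raw_score} dicts, e.g. overseas ratings,
    overseas rankings, Australian ratings and Australian rankings.
    """
    standardized = [standardize(c) for c in components]
    universities = set().union(*standardized)
    # Assumption: a university absent from a component scores 0 on it.
    averaged = {
        u: sum(c.get(u, 0.0) for c in standardized) / len(standardized)
        for u in universities
    }
    return standardize(averaged)


# Hypothetical raw totals for the four components.
result = overall_survey_score(
    [{"A": 10, "B": 5}, {"A": 4, "B": 8}, {"A": 6, "B": 3}, {"A": 9, "B": 9}]
)
print(result["A"], round(result["B"], 1))  # → 100.0 85.7
```

Because each component is divided by its own maximum, it makes no difference to this measure whether raw points are summed or averaged across respondents within a component.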

The range of overall scores across universities in each discipline shows some sensitivity to the method of aggregating the four survey measures, but the rankings of universities are relatively insensitive. To check the robustness of our findings, when comparing the survey results with the performance measures we also use a survey measure based just on the ratings data. This measure is obtained by averaging the ratings data for Australian and overseas respondents.

Respondents were selected in each of the disciplines as follows.

- Arts & Humanities: We selected scholars predominantly from English, History/Classics, and Philosophy; all universities were included.

- Business & Economics: We conducted separate surveys for Business and for Economics. We did so because, unlike in Australian universities, Economics in many overseas universities is located separately from Business, although the business school may also employ economists. We included all universities in the Business questionnaire, which was sent to academics in the areas of management, marketing, accounting and finance. For Economics, we included 29 universities, based on the list used by Neri and Rodgers (2006), chosen as having both Ph.D. programs in economics and identifiable staff in the area. Footnote 5 We combined the results of the two surveys using a weight of 1/4 for Economics and 3/4 for Business, based roughly on staff and student numbers; for those universities in which Economics was not surveyed, we allocated the full weight to Business.

- Education: The size and nature of education schools vary across universities, but we used a wide definition of a ‘school of education’: any university which had more than four academic staff in ‘Education’ as classified by the Department of Education, Science and Training (DEST). Under this definition, we included 35 universities. Footnote 6

- Engineering: We excluded institutions if no academic staff member was classified by DEST as “Engineering and Related Technologies”. This definition left us with 28 universities. Footnote 7 We sent the questionnaire to academics in each of the main branches of engineering.

- Law: The included universities are the 29 that are members of the Council of Australian Law Deans.

- Medicine: The recent and proposed establishment of new schools of medicine makes it less clear-cut than in other disciplines which schools should be included. We included all medical schools that are represented on the Committee of Deans of Australian Medical Schools except for the University of Notre Dame, Australia, giving a total of 14. It is inevitable that the relatively new schools (ANU, Bond, James Cook and Griffith) will score lower in our survey. We surveyed academics based in both clinical and non-clinical departments and in a range of specialist areas.

- Science: We included all universities in the questionnaire. Respondents were selected from the biological sciences, physics, chemistry and earth sciences.
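
The Business & Economics weighting described in the list above can be expressed as a short Python sketch. It assumes both inputs are survey scores on a common scale (for example, standardized so the top university scores 100); the function name is hypothetical, and the paper does not say at which stage of the aggregation the weights are applied. Since every university was included in the Business survey, iterating over the Business scores covers all of them.

```python
def business_economics_score(business, economics):
    """Weight Economics 1/4 and Business 3/4; Business alone where Economics was not surveyed."""
    combined = {}
    for university, business_score in business.items():
        if university in economics:
            combined[university] = 0.75 * business_score + 0.25 * economics[university]
        else:
            # No Economics survey for this university: full weight to Business.
            combined[university] = business_score
    return combined


# Hypothetical scores: Economics surveyed only at university A.
print(business_economics_score({"A": 80, "B": 60}, {"A": 100}))  # → {'A': 85.0, 'B': 60}
```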

Performance measures

The variables we use are listed below, grouped under two headings: quality of staff and quality of programs. The variables we chose meet the two criteria of conceptual relevance and data availability for more than one discipline.

Quality/international standing of academic staff

The variables we use to measure the quality of academic staff are publications and citations of journal articles, using the Thomson Scientific (TS) databases; highly-cited authors as defined by TS; membership of Australian learned academies; success in national competitive grants; and downloads of papers.

For Engineering, Medicine and Science we use the TS Essential Science Index (ESI) data for the period 1 January 1996–30 April 2006. The ESI data are available for 22 fields of scholarship. There is some arbitrariness in allocating the fields to Medicine and Science. We allocate publications (and citations) in the two fields of Biology & Biochemistry and Molecular Biology & Genetics equally between Medicine and Science. We allocate all output in the following fields to Medicine: Clinical Medicine, Immunology, Microbiology, Neuroscience & Behaviour, Pharmacology & Toxicology, and Psychiatry/Psychology. In publications and citations we rank universities for clinical and non-clinical medicine separately. We allocate all of Chemistry, Geosciences, Physics, Plant & Animal Science and Space Science to Science.
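
A sketch of the field-to-discipline allocation just described, applied to one university's ESI publication (or citation) counts. The field names follow the text; the function name and input format are our assumptions, and any field outside the three lists (Engineering, for instance) is simply left out of this split.

```python
# Fields split equally between Medicine and Science.
SHARED_FIELDS = {"Biology & Biochemistry", "Molecular Biology & Genetics"}
MEDICINE_FIELDS = {
    "Clinical Medicine", "Immunology", "Microbiology",
    "Neuroscience & Behaviour", "Pharmacology & Toxicology", "Psychiatry/Psychology",
}
SCIENCE_FIELDS = {
    "Chemistry", "Geosciences", "Physics", "Plant & Animal Science", "Space Science",
}


def allocate(field_counts):
    """Split one university's ESI counts (papers or citations) between Medicine and Science."""
    medicine = science = 0.0
    for field, count in field_counts.items():
        if field in SHARED_FIELDS:
            medicine += count / 2
            science += count / 2
        elif field in MEDICINE_FIELDS:
            medicine += count
        elif field in SCIENCE_FIELDS:
            science += count
        # Other ESI fields are outside this split and are ignored here.
    return {"Medicine": medicine, "Science": science}


# Hypothetical counts for one university.
print(allocate({"Clinical Medicine": 100, "Physics": 50,
                "Biology & Biochemistry": 40, "Engineering": 30}))
# → {'Medicine': 120.0, 'Science': 70.0}
```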

For Arts & Humanities, Business & Economics, and Education we use the TS University Statistical Indicators (USI) for the period 1996–2005. Footnote 8 A feature of the ESI data is that universities enter only if a threshold is reached; namely, an institution must be in the top 1% in the world in a field. The USI database does not have this constraint, although only journals in the TS database are included. Footnote 9 The database is therefore biased towards journals of international standard, but this bias matches our aim of measuring international standing.

For all disciplines we also present publication counts for the period 2001–2005. The citations data in both ESI and USI relate to citations to articles published within the period. We judge that for many disciplines a 5-year window is too short, and so we confine the presentation of citations to ESI data for 1996–2006, which uses a 10-year window. Footnote 10 No suitable database for books exists, and so we excluded this form of output. The time period for ISI highly-cited authors is 1981–1999.

We extracted membership of the four academies (Science, Humanities, Social Sciences and Technological Sciences and Engineering) from the relevant web pages in March 2006. Footnote 11 We included all academics who provided a university affiliation other than those listed as ‘visiting’.

Downloads of papers from the Social Sciences Research Network (SSRN) relate to Law and Business for the 12 months ending 1st October 2006 and were obtained from http://www.ssrn.com.

Our final measure of research standing is success in national competitive grants. For Medicine we use the total value of funding from the National Health and Medical Research Council in the 2005 and 2006 rounds. For other disciplines we use the number of Australian Research Council (ARC) discovery projects and linkage projects funded in the last two rounds. Footnote 12 We map the Research Fields, Courses and Disciplines (RFCD) codes into our disciplines in a way that is broadly consistent with the sample we used in the surveys.

Because we are interested in evaluating the international academic standing of disciplines, we focus on total research performance rather than performance per academic staff member. In our previous work (Williams and Van Dyke 2007) we concluded that such standing was related primarily to the former rather than the latter. Of course, size is relevant for other measures, such as research productivity. Gathering accurate data on staff sizes is not an easy matter, however. There is a particular difficulty in measuring the input of auxiliary staff, especially in professional faculties. To match output against academic staff numbers would require time series on the number of staff, however defined, who were capable of contributing to that output. In the Appendix tables we do, however, provide some data on the relative size of disciplines in each university, where size is defined as full-time equivalent numbers of research-only and teaching-and-research staff (we exclude casual and teaching-only staff).

Quality of programs

The variables we use to measure quality of programs are quality of undergraduate student intake, undergraduate student evaluation of programs, doctorates by research, Footnote 13 and staff–student ratio. The ratio of teaching staff to students is a proxy measure for resources devoted to teaching. We take completions of research doctorates over the 3 years 2002–2004.

We measure the quality of undergraduates by the average Tertiary Entry Score (TES) for students entering bachelors degrees (including those with graduate entry) and undergraduate diplomas in 2004.

We use results from the Course Experience Questionnaire (CEQ) to measure student evaluation of courses. We use responses to the question, “Overall, I was satisfied with the quality of the course”, for each of our discipline groups. The results are coded on a five-point scale ranging from ‘strongly agree’ to ‘strongly disagree’ and are converted to a single number with a maximum of 100 using the weights −100, −50, 0, 50, 100. We use 2-year averages of those who graduated with a bachelor’s degree in 2003 and 2004.
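
The CEQ conversion amounts to a weighted mean of the response distribution. In this sketch the label for the middle category and the function names are hypothetical, and we assume the 2-year figure is the simple mean of the two annual scores rather than a score computed from pooled responses.

```python
# Weights from the text; the middle-category label is hypothetical.
CEQ_WEIGHTS = {
    "strongly agree": 100,
    "agree": 50,
    "neutral": 0,
    "disagree": -50,
    "strongly disagree": -100,
}


def ceq_score(counts):
    """Weighted mean of responses to the overall-satisfaction item (range -100 to 100)."""
    total = sum(counts.values())
    return sum(CEQ_WEIGHTS[answer] * n for answer, n in counts.items()) / total


def two_year_average(year1_counts, year2_counts):
    """Simple mean of the two annual scores (assumption; pooling is an alternative)."""
    return (ceq_score(year1_counts) + ceq_score(year2_counts)) / 2


# Hypothetical response counts for two graduating cohorts.
cohort_2003 = {"strongly agree": 20, "agree": 50, "neutral": 20, "disagree": 10}
cohort_2004 = {"strongly agree": 10, "agree": 10}
print(ceq_score(cohort_2003), two_year_average(cohort_2003, cohort_2004))  # → 40.0 57.5
```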

Staff–student ratios relate to 2004; we measure student and academic staff numbers in equivalent full-time units; we exclude research-only staff and off-shore students. Our staff–student ratios are valid in those cases in which there are no off-shore courses or in which off-shore students are taught by offshore staff (who are not included in our data); for cases in which off-shore students are taught by Australian staff, the staff–student ratios we use are over-estimates. In practice, of course, universities may teach off-shore students using a combination of Australian and off-shore staff.
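
The direction of the bias can be seen with a small worked example. The function name and all numbers below are hypothetical and are not data from the study; they only show why excluding off-shore students taught by Australian staff overstates the ratio.

```python
def staff_student_ratio(teaching_staff_fte, onshore_students_eft,
                        offshore_taught_by_onshore_staff=0.0):
    """Teaching staff per equivalent full-time student.

    The measure used in the text excludes off-shore students, which corresponds
    to leaving the third argument at its default of 0.
    """
    return teaching_staff_fte / (onshore_students_eft + offshore_taught_by_onshore_staff)


measured = staff_student_ratio(50, 1000)      # off-shore students excluded
actual = staff_student_ratio(50, 1000, 250)   # if the same staff also teach 250 off-shore EFT students
print(measured, actual)  # → 0.05 0.04
```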

Results

Response rates for the surveys were 31% for Australian scholars and a low 13% for overseas academics. We know little about response bias, but it seems reasonable to assume that the overseas respondents tend to be those academics who are most familiar with Australian universities. Footnote 14 We are comforted by the high correlations between the results from overseas and Australian academics (see Table 1).

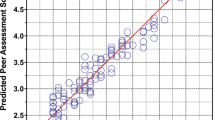

We now turn to the question of how well the survey results correlate with actual performance. In Table 1 we present correlation coefficients between the overall survey measure and those individual performance measures which we deem to be reliable for the particular discipline. We also present the correlation between the survey results and an overall measure of performance that is a simple average of the performance indicators for which we have reliable data for the discipline. We do, however, use a half weight on the staff–student ratio because of the data problems relating to offshore students as explained above. In the absence of any reliable data on publications in Law we do not construct an overall measure of performance for this discipline.
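
A minimal sketch of the overall performance measure and its correlation with the survey score. It assumes each indicator has already been standardized so that the top university scores 100 (the text does not state the scaling used before averaging), and the indicator names are hypothetical. The correlation is the ordinary Pearson coefficient, which we take to be what Table 1 reports.

```python
from math import sqrt

# Hypothetical indicator name; every other indicator gets weight 1.
HALF_WEIGHT = {"staff_student_ratio": 0.5}


def overall_performance(indicators, weights=None):
    """Weighted average of standardized indicators (0-100 scale), per university.

    `indicators` maps indicator name -> {university: score}.
    """
    weights = HALF_WEIGHT if weights is None else weights
    universities = set().union(*indicators.values())
    total_weight = sum(weights.get(name, 1.0) for name in indicators)
    return {
        u: sum(weights.get(name, 1.0) * scores.get(u, 0.0)
               for name, scores in indicators.items()) / total_weight
        for u in universities
    }


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical standardized indicators and survey scores for three universities.
perf = overall_performance({
    "articles": {"A": 100, "B": 50, "C": 20},
    "citations": {"A": 100, "B": 40, "C": 30},
    "staff_student_ratio": {"A": 60, "B": 100, "C": 80},
})
survey = {"A": 100, "B": 70, "C": 45}
unis = sorted(perf)
print(round(pearson([survey[u] for u in unis], [perf[u] for u in unis]), 3))
```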

Correlations between the survey findings and overall performance are high, ranging from 0.86 for Education to 0.96 for Arts & Humanities and Engineering. The high correlation for Arts & Humanities is of particular interest given that we had no data on book publications—the result suggests high correlations among various forms of output. Footnote 15 There is a tendency for the correlation between the survey results and overall performance to be higher than for the correlations with individual performance indicators. This result suggests that scholars take account of a range of performance measures. The only cases in which individual coefficients are higher than for the overall measure are competitive grants for Medicine and citations for Science.

Looking at the correlations between the surveys and articles published over 10 and over 5 years, the only noticeable difference occurs in Education in which the survey results are more highly correlated with articles over the most recent 5 years. There is thus no evidence that the reputational measure lags reality. On the other hand, the detailed results in the Appendix Tables A2–A8 suggest that relative publication rates are slow to change, although there are a few notable changes in rankings between the two time periods in Arts & Humanities and Business & Economics.

The correlation between the surveys and staff–student ratios is small, suggesting either that the latter, at least as measured, is not a good measure of teaching resources or that academics do not consider teaching resources in their evaluation of standing. Footnote 16

The relationship between the survey of academics and the survey of recent graduates is weak: the correlation is negative for Business & Economics and less than 0.1 for Engineering and Law. Footnote 17 However, the low correlation between peer opinion and student perceptions does not in itself imply that academics undervalue undergraduate teaching. It may reflect, for example, differing beliefs between staff and students about what constitutes good teaching, or resource constraints that limit the quality of programs. In addition, overseas respondents to our survey are unlikely to be well informed about relative teaching standards in Australian universities.

Our results imply that peer opinion is determined largely by research performance, and that the correlation between research performance and student evaluations is weak, a pattern previously noted for Australian data by Ramsden (1999) and Ramsden and Moses (1992).

In Table 2 we compare the rankings for the top 12 universities in each discipline (10 in Medicine) using the survey results and aggregate performance measures. Results for all universities are given in the Appendix, Tables A2–A8. The self-styled Group of Eight (Go8) comprehensive research universities tend to dominate both sets of rankings. These universities are Adelaide, ANU, Melbourne, Monash, Queensland, Sydney, UNSW and UWA. Note, however, that in Education non-Go8 universities rank highly, in many cases reflecting the history of the institutions that incorporated teachers’ colleges.

We have already seen from the correlation coefficients that there is broad consistency between the two sets of measures. The strongest correspondence occurs in Arts & Humanities, where the ranking of the top five universities is the same for both the surveys and the overall performance measure. In Business & Economics the first and second ranked universities are the same on each measure and, considered as a block, the next five ranked are the same but with some differences in ordering. The rankings in Medicine and Science are also very consistent. The relationship between the survey and performance rankings is a little less strong in Engineering and Education. In Engineering, the publication measures favour what might be termed traditional engineering; in Education the performance measures we use do not fully reflect output and influence.
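
The two kinds of comparison used in this paragraph, identical ordering at the top and agreement "as a block" further down, can be checked mechanically. A hypothetical sketch, assuming scores are held as dictionaries and ties are broken alphabetically; the function names and scores are illustrative only.

```python
def ranking(scores):
    """Universities ordered from highest to lowest score (ties broken alphabetically)."""
    return sorted(scores, key=lambda u: (-scores[u], u))


def same_top_order(a, b, k):
    """True if the first k positions hold the same universities in the same order."""
    return ranking(a)[:k] == ranking(b)[:k]


def same_block(a, b, start, stop):
    """True if positions start..stop-1 hold the same universities, in any order."""
    return set(ranking(a)[start:stop]) == set(ranking(b)[start:stop])


survey = {"A": 100, "B": 90, "C": 80, "D": 70, "E": 60}
performance = {"A": 100, "B": 95, "C": 70, "D": 75, "E": 50}
print(same_top_order(survey, performance, 2))  # → True
print(same_top_order(survey, performance, 3))  # → False
print(same_block(survey, performance, 2, 4))   # → True
```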

Among the Go8 universities, we note that the University of Queensland is rated more highly using the performance measures than using the survey scores. Combined with the finding that Australian academics rate Queensland higher than do their overseas colleagues, this suggests that Queensland’s reputation lags its performance.

Concluding remarks

In this paper, we were interested in measuring the academic standing of disciplines in Australian universities using the criterion, ‘international standing’. In order to do so, we surveyed academics both in Australia and internationally and gathered a range of actual performance measures which we deem to determine this criterion. We then compared the results.

Although reputation and reality cannot diverge too far over time, it is an interesting question which of the two matters more in the short run. In a study of student decision making, Roberts and Thompson (2007) concluded that general reputation is much more important than current league table position. On the other hand, in formula-funded research, governments give full weight to current performance.

A second interesting but related question is the extent to which reputation and reality are in alignment for different groups of stakeholders. Our study shows that if reputation in a discipline is measured by peer opinion, then it is highly correlated with a range of research measures and with an overall measure of performance comprising determinants of international standing. We leave for further research the question of what the main determinants of reputation are in the eyes of students, employers and the general community.

Turning to measures of research performance only, the correlations among our various research measures are high. When correlations are this high, the simplest and cheapest methods of measuring research performance are to be preferred. There are lessons in this for governments trying to develop research measures for the purpose of allocating funds: use of a plethora of measures is unlikely to pass a cost-benefit test.

Our empirical results show that high international academic standing among peers is achieved through research performance, which in turn translates into high demand for places by students who are then not always pleased with what they get, at least immediately after graduation. Under any system of education, high academic standing in research is the main driver for postgraduate students and scholars seeking positions. A weakness of the current Australian system is that good teaching is not a major driver of student preferences at the undergraduate level. To overcome this deficiency would require appropriate funding measures, and incentives and encouragement for students to change institutions as they progress through their studies and research training.

While discipline ratings are important in themselves, they can be aggregated up to university ratings that reflect the relative importance of each discipline in each university. Such aggregation overcomes the difficulties that often arise in attempting to compare institutions with quite different profiles—for example, the London School of Economics and the California Institute of Technology. The main constraint on the aggregating up approach is the availability of data. Also, the appropriate performance measures are not the same across disciplines: those for music, nursing, law and science, for example, are all quite different. Representative professional groups and societies have an important role to play in improving performance measurement.

Notes

1. For the Shanghai rankings see http://www.ed.sjtu.edu.cn/ranking.htm; for the THES-QS ranking see http://www.topuniversities.com

2. Toutkoushian et al. (1998) undertook a similar analysis for the ratings of US graduate programs.

3. As part of our previous work we attempted to overcome investigator bias by asking survey respondents to provide weights on a set of attributes; see Williams and Van Dyke (2007). Of course, the data can be presented in a form which enables the user to choose the weights. Indeed, one of the ‘Berlin Principles’ for rating or ranking universities is that “[users] should have some opportunity to make their own decisions about how these indicators should be weighted” (see Sadlak and Liu 2007, p. 28).

4. The 38 universities comprise all the 37 ‘public’ universities plus the private Bond University. We included the private university Notre Dame Australia in our surveys, but lack of comparable data on performance indicators means that comparisons between the two types of measures are not possible.

5. Neri and Rodgers list 29 universities, to which we add Bond and from which we delete the Australian Defence Force Academy.

6. The universities which did not reach our threshold of a school of education were ANU, Bond University, Swinburne University of Technology, and the University of the Sunshine Coast. All included universities had more than 10 academic staff except Adelaide, which had only 6 teaching staff in 2004.

7. The institutions with no academic staff classified as Engineering in 2004 were: Australian Catholic University, Bond, Charles Darwin, Charles Sturt, Flinders, Murdoch, Southern Cross, Canberra, New England, Notre Dame Australia and the University of the Sunshine Coast. The institutions included differ from the membership of the deans of engineering; that list does not include Macquarie University but does include Charles Darwin, Flinders, Murdoch and the University of Canberra. The difference between the lists seems to be due largely to the fact that we have not included staff in information technology.

8. We are indebted to Thomson Scientific for permission to use these data.

9. The USI database does not include the University of Ballarat and the University of Southern Queensland.

10. The external reviewers of the research output of the World Bank noted the limitations of using short citation periods in Economics. They write (World Bank 2006, p. 45): “The period we are assessing here is 1998 to 2005, and there has not been time for the citation record to accumulate”.

11. Details are as follows: the Australian Academy of the Humanities (http://www.humanities.org.au), the Australian Academy of Science (http://www.science.org.au), the Academy of Social Sciences in Australia (http://www.assa.edu.au), and the Australian Academy of Technological Sciences and Engineering (http://www.atse.org.au).

12. These are Discovery Projects with funding to commence in 2006 and 2007 and Linkage Projects to commence in 2007 and July 2006. Sources for the data on grants are http://www.nhmrc.edu.au and http://www.arc.edu.au/grant_programs. We ignore the fact that some NHMRC grants are allocated to disciplines other than Medicine and some ARC grants are allocated to Medicine.

13. In most disciplines the data relate to Ph.D. completions, but other research doctorates are numerically important in areas such as Business and Education.

14. More detail on the survey results is contained in Williams and Van Dyke (2006).

15. The correlations are not sensitive to the manner in which we construct the overall result from the surveys. If we exclude the ranking data and use just the ratings data from the surveys (combining both Australian and overseas responses), the correlations with the overall performance measure are: Arts & Humanities, 0.968; Business & Economics, 0.951; Education, 0.860; Engineering, 0.963; Medicine, 0.919; Science, 0.958.

16. Apart from the difficulty of dealing with off-shore students, there are difficulties in adequately capturing the role of casual and support staff.

17. Recall, however, that the student survey does not include those graduating with post-bachelor’s degrees.

References

Dill, D. D., & Soo, M. (2005). Academic quality, league tables, and public policy: A cross-national analysis of university ranking systems. Higher Education, 49, 495–533.

Neri, F., & Rodgers, J. R. (2006). Ranking Australian economics departments by research productivity. Economic Record, 83(Special Issue), S74–S84.

Ramsden, P. (1999). Predicting institutional research performance from published indicators: A test of a classification of Australian university types. Higher Education, 37, 341–358.

Ramsden, P., & Moses, I. (1992). Associations between research and teaching in Australian higher education. Higher Education, 23, 273–295.

Roberts, D., & Thompson, L. (2007). Reputation management for universities. Working Paper Series 2, The Knowledge Partnership (http://www.theknowledgepartnership.com).

Sadlak, J., & Liu, N. C. (Eds.) (2007). The world-class university and ranking: Aiming beyond status. Bucharest: UNESCO-CEPES.

Toutkoushian, R. K., Dundar, H., & Becker, W. E. (1998). The National Research Council Graduate Program ratings: What are they measuring? Review of Higher Education, 21, 427–443.

Van Dyke, N. (2005). Twenty years of university report cards. Higher Education in Europe, 103–125.

Williams, R., & Van Dyke, N. (2006). Rating major disciplines in Australian universities: Perceptions and reality. Melbourne Institute (available at http://www.melbourneinstitute.com).

Williams, R., & Van Dyke, N. (2007). Measuring the international standing of universities with an application to Australian universities. Higher Education, 53, 819–841.

World Bank. (2006). An evaluation of World Bank Research 1998–2005. Retrieved 29 January 2007 from http://www.tinyurl.com/yck7wc

Acknowledgements

We owe thanks to Emayenesh Seyoum and Carol Smith for assistance with the data. We are greatly indebted to the Department of Education, Science and Training (DEST) and the Graduate Careers Council of Australia (GCCA) for providing data. The use to which we put the data is of course our responsibility. Two anonymous referees provided insightful comments.

Appendix
Cite this article

Williams, R., Van Dyke, N. Reputation and reality: ranking major disciplines in Australian universities. High Educ 56, 1–28 (2008). https://doi.org/10.1007/s10734-007-9086-0