Abstract

The operation of squaring (coproduct followed by product) in a combinatorial Hopf algebra is shown to induce a Markov chain in natural bases. Chains constructed in this way include widely studied methods of card shuffling, a natural “rock-breaking” process, and Markov chains on simplicial complexes. Many of these chains can be explicitly diagonalized using the primitive elements of the algebra and the combinatorics of the free Lie algebra. For card shuffling, this gives an explicit description of the eigenvectors. For rock-breaking, an explicit description of the quasi-stationary distribution and sharp rates to absorption follow.


1 Introduction

A Hopf algebra is an algebra \(\mathcal{H}\) with a coproduct \(\Delta:\mathcal{H}\to\mathcal{H}\otimes\mathcal{H}\) which fits together with the product \(m:\mathcal{H}\otimes\mathcal{H}\rightarrow\mathcal{H}\). Background on Hopf algebras is in Sect. 2.2. The map \(m\Delta:\mathcal{H}\to\mathcal{H}\) is called the Hopf-square (often denoted \(\Psi^{2}\) or \(x^{[2]}\)). Our first discovery is that the coefficients of \(x^{[2]}\) in natural bases can often be interpreted as a Markov chain. Specializing to familiar Hopf algebras can give interesting Markov chains: the free associative algebra gives the Gilbert–Shannon–Reeds model of riffle shuffling. Symmetric functions give a rock-breaking model of Kolmogoroff [54]. These two examples are developed first for motivation.

Example 1.1

(Free associative algebra and riffle shuffling)

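Let \(x_1,x_2,\ldots,x_n\) be noncommuting variables and \(\mathcal {H}=k\langle x_{1},\ldots,x_{n}\rangle\) be the free associative algebra. Thus \(\mathcal{H}\) consists of finite linear combinations of words \(x_{i_{1}}x_{i_{2}}\cdots x_{i_{k}}\) in the generators with the concatenation product. The coproduct \(\Delta\) is the algebra map defined by \(\Delta(x_i)=1\otimes x_i+x_i\otimes 1\) and extended multiplicatively and linearly. Consider

\[
m\Delta(x_{i_1}x_{i_2}\cdots x_{i_k})=m\bigl((1\otimes x_{i_1}+x_{i_1}\otimes 1)(1\otimes x_{i_2}+x_{i_2}\otimes 1)\cdots(1\otimes x_{i_k}+x_{i_k}\otimes 1)\bigr).
\]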

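A term in this product results from a choice of left or right from each factor. Equivalently, for each subset \(S\subseteq\{1,2,\ldots,k\}\), there corresponds the term

\[
\prod_{j\in S}x_{i_j}\otimes\prod_{j\notin S}x_{i_j},
\]

where both products are taken in increasing order of \(j\).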

Thus \(m\Delta\) is a sum of \(2^k\) terms resulting from removing \(\{ x_{i_{j}}\}_{j\in S}\) and moving them to the front. For example,

\[
m\Delta(x_1x_2x_3)=4x_1x_2x_3+x_1x_3x_2+x_2x_1x_3+x_2x_3x_1+x_3x_1x_2.
\]

Dividing \(m\Delta\) by \(2^k\), the coefficient of a word on the right is exactly the chance that this word appears in a Gilbert–Shannon–Reeds inverse shuffle of a deck of cards labeled by \(x_i\) in initial order \(x_{i_{1}}x_{i_{2}}\cdots x_{i_{k}}\). Applying \(\frac{1}{2^{k}}m\Delta\) in the dual algebra gives the usual model for riffle shuffling. Background on these models is in Sect. 5. As shown there, this connection between Hopf algebras and shuffling gives interesting new theorems about shuffling.
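The computation above is easy to mechanize. In the sketch below (the function names are ours, not the paper's), a word is a Python string whose characters play the role of the primitive letters \(x_i\); `hopf_square` lists the \(2^k\) terms of \(m\Delta\), and dividing by \(2^k\) gives the inverse-shuffle transition probabilities.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations


def hopf_square(word):
    """m∘Δ on a word whose letters are primitive: for each subset S of
    positions, move the letters in S (in their original order) to the front."""
    k = len(word)
    result = Counter()
    for size in range(k + 1):
        for S in combinations(range(k), size):
            front = "".join(word[j] for j in S)
            back = "".join(word[j] for j in range(k) if j not in S)
            result[front + back] += 1
    return result


def inverse_shuffle_row(word):
    """Divide m∘Δ by 2^k: the chance of each deck after one GSR inverse shuffle."""
    total = 2 ** len(word)
    return {w: Fraction(c, total) for w, c in hopf_square(word).items()}


row = inverse_shuffle_row("123")
# row["123"] == Fraction(1, 2); each other reachable deck has chance 1/8
```

The identity arrangement collects the four subsets \(S=\emptyset,\{1\},\{1,2\},\{1,2,3\}\), matching the coefficient 4 in the example above.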

Example 1.2

(Symmetric functions and rock-breaking)

Let us begin with the rock-breaking description. Consider a rock of total mass \(n\). Break it into two pieces according to the symmetric binomial distribution:

\[
P\{\text{left piece has mass } j\}=\binom{n}{j}\Big/2^{n},\qquad 0\leq j\leq n.
\]

Continue, at the next stage breaking each piece into \(\{j_1,j-j_1\}\), \(\{j_2,n-j-j_2\}\) by independent binomial splits (pieces of mass zero are discarded). The process continues until all pieces have mass one, at which point it stops. This description gives a Markov chain on partitions of \(n\), absorbing at \(1^n\).
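A minimal simulation sketch of this rock-breaking chain follows. Partitions are stored as decreasing tuples, the binomial split of a piece of mass \(j\) is drawn as \(j\) fair coin flips, and the function names are hypothetical.

```python
import random


def rock_breaking_step(partition, rng):
    """Split every piece of mass j into (J, j - J), J ~ Binomial(j, 1/2),
    independently across pieces; pieces of mass zero are discarded."""
    pieces = []
    for j in partition:
        left = sum(rng.random() < 0.5 for _ in range(j))  # j fair coin flips
        pieces.extend(p for p in (left, j - left) if p > 0)
    return tuple(sorted(pieces, reverse=True))


def run_until_absorbed(n, rng):
    """Run from the one-part partition (n) until the absorbing state 1^n."""
    state = (n,)
    path = [state]
    while state != (1,) * n:
        state = rock_breaking_step(state, rng)
        path.append(state)
    return path


path = run_until_absorbed(8, random.Random(2024))
print(len(path) - 1, "steps:", path)
```

Pieces never merge, so the number of parts is non-decreasing along any path, and a piece of mass one always survives as a single piece of mass one.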

This process arises from the Hopf-square map applied to the algebra \(\varLambda=\varLambda(x_1,x_2,\ldots,x_n)\) of symmetric functions, in the basis of elementary symmetric functions \(\{e_\lambda\}\). This is an algebra under the usual product. The coproduct, following [38], is defined by

\[
\Delta(e_i)=\sum_{j=0}^{i}e_j\otimes e_{i-j},\qquad e_0=1,
\]

extended multiplicatively and linearly. This gives a Hopf algebra structure on \(\varLambda\) which is a central object of study in algebraic combinatorics. It is discussed in Sect. 2.4. Rescaling the basis elements to \(\{\hat{e}_{i}:=i!e_{i}\}\) gives \(\Delta(\hat{e}_n)=\sum_{j=0}^{n}\binom{n}{j}\hat{e}_j\otimes\hat{e}_{n-j}\), and a direct computation shows that \(m\Delta\) in the \(\{\hat{e}_\lambda\}\) basis, divided by \(2^n\), gives the rock-breaking process; see Sect. 4.1.
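The direct computation can be sketched with exact arithmetic. Because \(\varLambda\) is commutative, \(m\Delta\) is an algebra map, so it can be applied one part at a time to \(\hat e_\lambda=\hat e_{\lambda_1}\cdots\hat e_{\lambda_l}\) using \(m\Delta(\hat e_i)=\sum_j\binom{i}{j}\hat e_j\hat e_{i-j}\). In this sketch monomials \(\hat e_\lambda\) are encoded as decreasing tuples \(\lambda\), and all function names are hypothetical.

```python
from collections import Counter
from fractions import Fraction
from math import comb


def psi2_single(i):
    """mΔ(ê_i) = Σ_j C(i, j) ê_j ê_{i-j}; ê_0 = 1 is dropped from monomials."""
    out = Counter()
    for j in range(i + 1):
        parts = tuple(sorted((p for p in (j, i - j) if p > 0), reverse=True))
        out[parts] += comb(i, j)
    return out


def multiply(a, b):
    """Product of linear combinations of ê-monomials (Λ is commutative)."""
    out = Counter()
    for x, cx in a.items():
        for y, cy in b.items():
            out[tuple(sorted(x + y, reverse=True))] += cx * cy
    return out


def psi2(partition):
    """Ψ² is an algebra map on the commutative algebra Λ: apply it part by part."""
    result = Counter({(): 1})
    for part in partition:
        result = multiply(result, psi2_single(part))
    return result


def transition_row(partition):
    """Normalize by 2^n to read off rock-breaking transition probabilities."""
    n = sum(partition)
    return {lam: Fraction(c, 2 ** n) for lam, c in psi2(partition).items()}
```

For example, `transition_row((3,))` gives \((3)\) with chance \(1/4\) and \((2,1)\) with chance \(3/4\), which is the binomial split of a rock of mass 3.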

A similar development works for any Hopf algebra which is either a polynomial algebra as an algebra (for instance, the algebra of symmetric functions, with generators \(e_n\)), or is cocommutative and a free associative algebra as an algebra (e.g., the free associative algebra), provided each object of degree greater than one can be broken non-trivially. These results are described in Theorem 3.4.

Our second main discovery is that this class of Markov chains can be explicitly diagonalized using the Eulerian idempotent and some combinatorics of the free associative algebra. This combinatorics is reviewed in Sect. 2.3. It leads to a description of the left eigenvectors (Theorems 3.15 and 3.16) which is often interpretable and allows exact and asymptotic answers to natural probability questions. For a polynomial algebra, we are also able to describe the right eigenvectors completely (Theorem 3.19).

Example 1.3

(Shuffling)

For a deck of \(n\) distinct cards, the eigenvalues of the Markov chain induced by repeated riffle shuffling are \(1,1/2,\ldots,1/2^{n-1}\) [45]. The multiplicity of the eigenvalue \(1/2^{n-i}\) equals the number of permutations in \(S_n\) with \(i\) cycles. For example, the second eigenvalue, \(1/2\), has multiplicity \(\binom{n}{2}\). For \(1\leq i<j\leq n\), results from Sect. 5 show that a right eigenvector \(f_{ij}\) is given by

Summing over \(i<j\) shows that \(d(w)-\frac{n-1}{2}\) is an eigenvector with eigenvalue \(1/2\), where \(d(w)\) is the number of descents in \(w\). Similarly, \(p(w)-\frac{n-2}{3}\) is an eigenvector with eigenvalue \(1/4\), where \(p(w)\) is the number of peaks in \(w\). These eigenvectors are used to determine the mean and variance of the number of carries when large integers are added.
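The eigenvalue multiplicities can be checked for small decks. Since \(\operatorname{tr}K^m\) is the sum of the \(m\)th powers of the eigenvalues, the statement above implies \(\operatorname{tr}K^m=\sum_{\sigma\in S_n}2^{-m(n-c(\sigma))}\), where \(c(\sigma)\) is the number of cycles of \(\sigma\). The sketch below (exact arithmetic; function names are ours) builds the Gilbert–Shannon–Reeds transition matrix, in which each cut-and-interleaving pair has chance \(1/2^n\), and compares both sides for \(n=3,4\). It is a consistency check, not a proof.

```python
from fractions import Fraction
from itertools import combinations, permutations


def riffle_matrix(n):
    """Exact GSR riffle-shuffle transition matrix on decks of n distinct cards.
    Every (cut c, interleaving) pair has probability 1/2^n."""
    decks = list(permutations(range(1, n + 1)))
    index = {d: i for i, d in enumerate(decks)}
    K = [[Fraction(0)] * len(decks) for _ in decks]
    for d in decks:
        for c in range(n + 1):
            # choose which positions of the new deck receive the top packet
            for top_slots in combinations(range(n), c):
                top, bottom = iter(d[:c]), iter(d[c:])
                slots = set(top_slots)
                new = tuple(next(top) if p in slots else next(bottom) for p in range(n))
                K[index[d]][index[new]] += Fraction(1, 2 ** n)
    return K


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def trace_of_power(K, m):
    P = K
    for _ in range(m - 1):
        P = matmul(P, K)
    return sum(P[i][i] for i in range(len(P)))


def cycles(perm):
    """Number of cycles of a permutation of 0..n-1 given as a tuple of images."""
    seen, count = set(), 0
    for start in range(len(perm)):
        if start not in seen:
            count += 1
            j = start
            while j not in seen:
                seen.add(j)
                j = perm[j]
    return count


def predicted_trace(n, m):
    """Sum of beta^m, with 1/2^(n-i) repeated (number of perms with i cycles) times."""
    return sum(Fraction(1, 2 ** (m * (n - cycles(p)))) for p in permutations(range(n)))


K3 = riffle_matrix(3)
print([trace_of_power(K3, m) for m in (1, 2, 3)])
```

For \(n=3\) the predicted spectrum is \(1\) once, \(1/2\) three times and \(1/4\) twice, so \(\operatorname{tr}K=3\).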

Our results work for decks with repeated values allowing us to treat cases when, e.g., the suits do not matter and all picture cards are equivalent to tens. Here, fewer shuffles are required to achieve stationarity. For decks of essentially any composition we show that all eigenvalues \(1/2^i\), \(0\leq i\leq n-1\), occur and determine multiplicities and eigenvectors.

Example 1.4

(Rock-breaking)

Consider the rock-breaking process of Example 1.2 started at (n), the partition with a single part of size n. This is absorbing at the partition 1n. In Sect. 4, this process is shown to have eigenvalues 1,1/2,…,1/2n−1 with the multiplicity of 1/2n−l the number of partitions of n into l parts. Thus, the second eigenvalue is 1/2 taken on uniquely at the partition 1n−22. The corresponding eigenfunction is

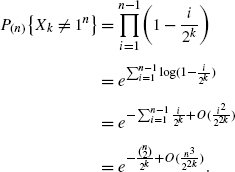

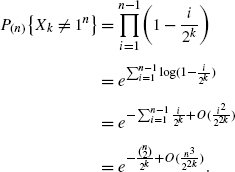

This is a monotone function in the usual partial order on partitions and equals zero if and only if λ=1n. If X 0=(n),X 1,X 2,… are the successive partitions generated by the Markov chain then

Using Markov’s inequality,

This shows that for k=2log2 n+c, the chance of absorption is asymptotic to 1−1/2c+1 when n is large. Section 4 derives all of the eigenvectors and gives further applications.
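The eigenfunction and the absorption bound can be checked exactly for small \(n\). The sketch below (hypothetical helper names, exact rational arithmetic) computes one-step laws of the chain, verifies \(Kf=f/2\) on several partitions, and compares the absorption probability with \(\prod_{i=0}^{n-1}(1-i/2^k)\). That product formula is our addition, not a statement from the text: it rests on the observation that a binomial split is the same as each unit of mass choosing a side by a fair coin, so absorption by time \(k\) means that \(n\) independent uniform \(k\)-bit strings are all distinct.

```python
from fractions import Fraction
from math import comb, prod


def transition(partition):
    """Exact one-step law of rock-breaking: pieces split independently,
    a piece of mass j going to (i, j - i) with chance C(j, i) / 2^j."""
    dist = {(): Fraction(1)}
    for j in partition:
        new = {}
        for state, p in dist.items():
            for i in range(j + 1):
                pieces = state + tuple(x for x in (i, j - i) if x > 0)
                key = tuple(sorted(pieces, reverse=True))
                new[key] = new.get(key, 0) + p * Fraction(comb(j, i), 2 ** j)
        dist = new
    return dist


def f(partition):
    """The eigenfunction f(λ) = Σ_i C(λ_i, 2)."""
    return sum(comb(part, 2) for part in partition)


def law_after(n, k):
    """Distribution of X_k when X_0 = (n)."""
    dist = {(n,): Fraction(1)}
    for _ in range(k):
        new = {}
        for state, p in dist.items():
            for nxt, q in transition(state).items():
                new[nxt] = new.get(nxt, 0) + p * q
        dist = new
    return dist


n, k = 6, 4
law = law_after(n, k)
absorbed = law.get((1,) * n, Fraction(0))
# absorption by time k <=> n i.i.d. uniform k-bit strings are distinct
birthday = prod(Fraction(2 ** k - i, 2 ** k) for i in range(n))
print(float(absorbed), float(1 - Fraction(comb(n, 2), 2 ** k)))
```

The printed pair shows the exact absorption probability next to the Markov-inequality lower bound.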

Section 2 reviews Markov chains (including uses for eigenvectors), Hopf algebras, and some combinatorics of the free associative algebra. Section 3 gives our basic theorems, generalizing the two examples to polynomial Hopf algebras and cocommutative, free associative Hopf algebras. Section 4 treats rock-breaking; Section 5 treats shuffling. Section 6 briefly describes other examples (e.g., graphs and simplicial complexes), counter-examples (e.g., the Steenrod algebra), and questions (e.g., quantum groups).

Two historical notes: The material in the present paper has roots in work of Patras [65–67], whose notation we are following, and Drinfeld [34]. Patras studied shuffling in a purely geometric fashion, making a ring out of polytopes in \(\mathbb{R}^{n}\). This study led to natural Hopf structures, Eulerian idempotents, and generalization of Solomon’s descent algebra in a Hopf context. His Eulerian idempotent maps decompose a graded commutative or cocommutative Hopf algebra into eigenspaces of the \(a\)th Hopf-powers; we improve upon this result, in the case of polynomial algebras or cocommutative, free associative algebras, by algorithmically producing a full eigenbasis. While there is no hint of probability in the work of Patras, it deserves to be much better known. More detailed references are given elsewhere in this paper.

We first became aware of Drinfeld’s ideas through their mention in Shnider–Sternberg [82]. Consider the Hopf-square, acting on a Hopf algebra \(\mathcal{H}\). Suppose that \(x\in\mathcal{H}\) is primitive, \(\Delta(x)=1\otimes x+x\otimes 1\). Then \(m\Delta(x)=2x\), so \(x\) is an eigenvector of \(m\Delta\) with eigenvalue 2. If \(x\) and \(y\) are primitive then \(m\Delta(xy)=3xy+yx\), so \(m\Delta(xy+yx)=4(xy+yx)\); similarly, if \(x_1,\ldots,x_k\) are primitive then the sum of symmetrized products is an eigenvector of \(m\Delta\) with eigenvalue \(2^k\). Drinfeld [34, Prop. 3.7] used these facts without comment in his proof that any formal deformation of the cocommutative universal enveloping algebra \(\mathcal{U}(\mathfrak{g})\) results already from deformation of the underlying Lie algebra \(\mathfrak{g}\). See [82, Sect. 3.8] and Sect. 3.4 below for an expanded argument and discussion. For us, a description of the primitive elements and their products gives the eigenvectors of our various Markov chains. This is developed in Sect. 3.
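The eigenvalue computation for symmetrized products of primitives can be checked mechanically in the free associative algebra of Example 1.1, where every letter is primitive. In the sketch below, elements are dictionaries from words to integer coefficients, the helper names are hypothetical, and the commutator \(ab-ba\) serves as a primitive element that is not a single letter.

```python
from collections import Counter
from itertools import combinations, permutations


def hopf_square_word(word):
    """mΔ on a word of primitive letters: move each subset of letters to the front."""
    k = len(word)
    out = Counter()
    for size in range(k + 1):
        for S in combinations(range(k), size):
            rest = [j for j in range(k) if j not in S]
            out["".join(word[j] for j in S) + "".join(word[j] for j in rest)] += 1
    return out


def clean(c):
    """Drop zero coefficients (Counter arithmetic would also drop negatives)."""
    return {w: v for w, v in c.items() if v != 0}


def hopf_square(elem):
    """Extend mΔ linearly to {word: coefficient}."""
    out = Counter()
    for word, c in elem.items():
        for w, m in hopf_square_word(word).items():
            out[w] += c * m
    return clean(out)


def times(a, b):
    """Concatenation product of two linear combinations of words."""
    out = Counter()
    for u, cu in a.items():
        for v, cv in b.items():
            out[u + v] += cu * cv
    return clean(out)


def symmetrized(elems):
    """Sum over all orderings of the product of the given elements."""
    out = Counter()
    for order in permutations(elems):
        term = {"": 1}
        for e in order:
            term = times(term, e)
        for w, c in term.items():
            out[w] += c
    return clean(out)


commutator = {"ab": 1, "ba": -1}  # [a, b] = ab - ba is primitive
```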

2 Background

This section gives notation and background for Markov chains (including uses for eigenvectors), Hopf algebras, the combinatorics of the free associative algebra and symmetric functions. All of these are large subjects and pointers to accessible literature are provided.

2.1 Markov chains

Let \(\mathcal{X}\) be a finite set. A Markov chain on \(\mathcal{X}\) may be specified by a transition matrix \(K(x,y)\) \((x,y\in\mathcal {X})\) with \(K(x,y)\geq 0\), \(\sum_y K(x,y)=1\). This is interpreted as the chance that the chain moves from \(x\) to \(y\) in one step. If the chain is denoted \(X_0,X_1,X_2,\ldots\) and \(X_0=x_0\) is a fixed starting state then

\[
P\{X_1=x_1,\ldots,X_k=x_k\}=\prod_{i=0}^{k-1}K(x_i,x_{i+1}).
\]

Background and basic theory can be found in [50] or [15]. The readable introduction [58] is recommended as close in spirit to the present paper. The analytic theory is developed in [75].

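Let \(K^2(x,y)=\sum_z K(x,z)K(z,y)\) denote the probability of moving from \(x\) to \(y\) in two steps. Similarly, \(K^l\) is defined. Under mild conditions [58, Sect. 1.5] Markov chains have unique stationary distributions \(\pi(x)\): thus \(\pi(x)\geq 0\), \(\sum_x\pi(x)=1\), \(\sum_x\pi(x)K(x,y)=\pi(y)\), so \(\pi\) is a left eigenvector of \(K\) with eigenvalue 1. Set

\[
L^2(\pi)=\{f:\mathcal{X}\to\mathbb{R}\},\qquad \langle f_1|f_2\rangle=\sum_x f_1(x)f_2(x)\pi(x).
\]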

Then \(K\) operates as a contraction on \(L^2(\pi)\) with \(Kf(x)=\sum_y K(x,y)f(y)\). The Markov chains considered in this paper are usually not self-adjoint (equivalently, not reversible); nonetheless, they are diagonalizable over the rationals with eigenvalues \(1=\beta_{0}\geq \beta_{1}\geq\cdots\geq\beta_{|\mathcal{X}|-1}>0\). We have a basis of left eigenfunctions \(\{g_{i}\}_{i=0}^{|\mathcal{X}|-1}\) with \(g_0(x)=\pi(x)\) and \(\sum_x g_i(x)K(x,y)=\beta_i g_i(y)\), and, in some cases, a dual basis of right eigenfunctions \(\{f_{i}\}_{i=0}^{|\mathcal {X}|-1}\) with \(f_0(x)\equiv 1\), \(Kf_i(x)=\beta_i f_i(x)\), and \(\sum_x f_i(x)g_j(x)=\delta_{ij}\). As is customary in discussions of random walks on algebraic structures, we will abuse notation and think of the eigenfunctions \(f_i\) both as functions on the state space and as linear combinations of the states; in other words, \(\sum_x f_i(x)x\) will also be denoted \(f_i\).

Throughout, we are in the unusual position of knowing \(\beta_i\), \(g_i\) and possibly \(f_i\) explicitly. This is rare enough that it is worth indicating some uses of eigenfunctions.

Use A

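For any function \(f:\mathcal{X}\to\mathbb{R}\), expressed in the basis of right eigenfunctions \(\{f_i\}\) as

\[
f=\sum_{i=0}^{|\mathcal{X}|-1}a_i f_i, \tag{2.1}
\]

the expectation of \(f\) after \(k\) steps, having started at \(x_0\), is given by

\[
E_{x_0}\{f(X_k)\}=\sum_{i=0}^{|\mathcal{X}|-1}a_i\beta_i^k f_i(x_0).
\]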

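For example, for shuffling, the normalized number of descents \(d(w)-(n-1)/2\) is the sum of the \(1/2\)-eigenfunctions for riffle shuffling; see Example 5.8. Thus, since \(d(\mathrm{id})=0\), with \(x_0=\mathrm{id}\) and all \(k\), \(0\leq k<\infty\),

\[
E_{\mathrm{id}}\{d(X_k)\}=\frac{n-1}{2}\Bigl(1-\frac{1}{2^k}\Bigr).
\]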

In [27, 28] it is shown that the number of descents in repeated riffle shuffles has the same distribution as the number of carries when n integers are added. Further, the square of this eigenfunction has a simple eigenfunction expansion leading to simple formulas for the variance and covariance of the number of carries.

Use B

If \(f\) is a right eigenfunction with eigenvalue \(\beta\), then the self-correlation after \(k\) steps (starting in stationarity) is

\[
E_\pi\{f(X_0)f(X_k)\}=\beta^k E_\pi\{f(X_0)^2\}.
\]

This indicates how certain correlations fall off and gives an interpretation of the eigenvalues.

Use C

For \(f\) a right eigenfunction with eigenvalue \(\beta\), let \(Y_i=f(X_i)/\beta^i\), \(0\leq i<\infty\). Then \(Y_i\) is an \(\mathcal{F}_{i}\) martingale with \(\mathcal {F}_{i}=\sigma (X_{0},X_{1}, \ldots, X_{i} )\). One may try to use optional stopping, maximal and concentration inequalities and the martingale central limit theorem to study the behavior of the original \(X_i\) chain.

Use D

One standard use of right eigenfunctions is to prove lower bounds for mixing times for Markov chains. The earliest use of this is the second moment method [26]. Here, one uses the second eigenfunction as a test function and expands its square in the eigenbasis to get concentration bounds. An important variation is Wilson’s method [95] which only uses the first eigenfunction but needs a careful understanding of the variation of this eigenfunction. A readable overview of both methods and many examples is in [76].

Use E

The left eigenfunctions come into computations since \(\sum_x g_i(x)f_j(x)=\delta_{ij}\). Thus in (2.1), \(a_i=\langle g_i|f/\pi\rangle\). (Here \(f/\pi\) is just the density of \(f\) with respect to \(\pi\).)

Use F

A second prevalent use of left eigenfunctions throughout this paper: the dual of a Hopf algebra is a Hopf algebra and left eigenfunctions of the dual chain correspond to right eigenfunctions of the original chain. This is similar to the situation for time reversal. If \(K^{*}(x,y)=\frac{\pi(y)}{\pi(x)}K(y,x)\) is the time-reversed chain (note \(K^{*}(x,y)\) is a Markov chain with stationary distribution \(\pi\)), then \(g_i/\pi\) is a right eigenfunction of \(K^{*}\).

Use G

The left eigenfunctions also come into determining the quasi-stationary distribution of absorbing chains such as the rock-breaking chain. A useful, brief introduction to quasi-stationarity is in [50]. The comprehensive survey [91] and annotated bibliography [68] are also useful. Consider the case where there is a unique absorbing state \(x_\bullet\) and the second eigenvalue \(\beta_1\) of the chain satisfies \(1=\beta_0>\beta_1>\beta_2\geq\cdots\). This holds for rock-breaking. There are two standard notions of “the limiting distribution of the chain given that it has not been absorbed”:

In words, \(\pi_1(x)\) is the limiting distribution of the chain given that it has not been absorbed up to time \(k\) and \(\pi_2(x)\) is the limiting distribution of the chain given that it is never absorbed. These quasi-stationary distributions can be expressed in terms of the eigenfunctions:

These results follow from simple linear algebra and are proved in the references above. For rock-breaking, results in Sect. 4 show that \(\pi_1=\pi_2\) is point mass at the partition \(1^{n-2}2\).
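The first notion can be checked numerically for small \(n\). The sketch below, assuming exact rational arithmetic and our own helper names, computes \(P\{X_k=x\mid X_k\neq 1^n\}\) for the rock-breaking chain started at \((5)\); for large \(k\) nearly all of the conditional mass sits on \(1^{3}2\), consistent with the point-mass statement above.

```python
from fractions import Fraction
from math import comb


def transition(partition):
    """Exact one-step law of rock-breaking from a partition (decreasing tuple)."""
    dist = {(): Fraction(1)}
    for j in partition:
        new = {}
        for state, p in dist.items():
            for i in range(j + 1):
                pieces = state + tuple(x for x in (i, j - i) if x > 0)
                key = tuple(sorted(pieces, reverse=True))
                new[key] = new.get(key, 0) + p * Fraction(comb(j, i), 2 ** j)
        dist = new
    return dist


def conditional_law(n, k):
    """P{X_k = x | X_k != 1^n} for the chain started at X_0 = (n)."""
    dist = {(n,): Fraction(1)}
    for _ in range(k):
        new = {}
        for state, p in dist.items():
            for nxt, q in transition(state).items():
                new[nxt] = new.get(nxt, 0) + p * q
        dist = new
    absorbing = (1,) * n
    alive = 1 - dist.get(absorbing, 0)
    return {x: p / alive for x, p in dist.items() if x != absorbing}


cond = conditional_law(5, 25)
print(max(cond, key=cond.get), float(cond[(2, 1, 1, 1)]))
```

The remaining conditional mass decays like \(2^{-k}\), reflecting the gap between \(\beta_1=1/2\) and \(\beta_2=1/4\).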

Use H

Both sets of eigenfunctions appear in the formula

\[
K^l(x,y)=\sum_{i=0}^{|\mathcal{X}|-1}\beta_i^l f_i(x)g_i(y).
\]

This permits, in principle, the determination of convergence rates, although carrying this out can be difficult for chains with large state spaces. See the examples and discussion in [29].

To conclude this discussion of Markov chains we mention that convergence is customarily measured by a few standard distances:

Here \(\|K_{x_{0}}^{l}-\pi\|_{\operatorname{TV}}\leq\operatorname{sep}_{x_{0}}(l)\leq l_{\infty}(l)\) and all distances are computable by determining the maximizing or minimizing values of A or y and using (2.5)–(2.8). See [58, Lemma 6.13] for further discussion of these distances.
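The displays defining these distances are not reproduced here; the sketch below assumes the standard definitions \(\|K^l_{x_0}-\pi\|_{\mathrm{TV}}=\frac12\sum_y|K^l(x_0,y)-\pi(y)|\) and \(\operatorname{sep}_{x_0}(l)=\max_y\bigl(1-K^l(x_0,y)/\pi(y)\bigr)\). It evaluates both for riffle shuffling started at the identity, using the Bayer–Diaconis formula \(K^l(\mathrm{id},w)=\binom{2^l+n-r(w)}{n}/2^{ln}\), where \(r(w)\) is the number of rising sequences of \(w\). The function names are ours.

```python
from fractions import Fraction
from itertools import permutations
from math import comb, factorial, prod


def rising_sequences(deck):
    """1 + the number of values v such that card v+1 lies above card v."""
    where = {card: i for i, card in enumerate(deck)}
    return 1 + sum(where[v + 1] < where[v] for v in range(1, len(deck)))


def riffle_law(n, l):
    """Bayer–Diaconis: after l GSR riffle shuffles started from the identity,
    P(deck) = C(2^l + n - r, n) / 2^(l n), r = number of rising sequences."""
    a = 2 ** l
    return {d: Fraction(comb(a + n - rising_sequences(d), n), a ** n)
            for d in permutations(range(1, n + 1))}


def tv_and_separation(n, l):
    u = Fraction(1, factorial(n))  # the uniform stationary distribution
    law = riffle_law(n, l)
    tv = sum(abs(p - u) for p in law.values()) / 2
    sep = max(1 - p / u for p in law.values())
    return tv, sep


for l in range(1, 8):
    tv, sep = tv_and_separation(6, l)
    print(l, float(tv), float(sep))
```

The separation is attained at the reversed deck, which has \(n\) rising sequences, giving \(1-\prod_{i=1}^{n-1}(1-i/2^l)\); the printout also illustrates \(\|\cdot\|_{\mathrm{TV}}\leq\operatorname{sep}\).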

2.2 Hopf algebras

A Hopf algebra is an algebra \(\mathcal{H}\) over a field \(k\) (usually the real numbers in the present paper). It is associative with unit 1, but not necessarily commutative. Let us write \(m\) for the multiplication in \(\mathcal{H}\), so \(m(x\otimes y)=xy\). Then \(m^{[a]}:\mathcal{H}^{\otimes a}\to\mathcal{H}\) will denote \(a\)-fold products (so \(m=m^{[2]}\)), formally \(m^{[a]}=m(\iota\otimes m^{[a-1]})\) where \(\iota\) denotes the identity map.

\(\mathcal{H}\) comes equipped with a coproduct \(\Delta :\mathcal{H}\to\mathcal{H}\otimes\mathcal{H}\), written \(\Delta(x)=\sum_{(x)}x_{(1)}\otimes x_{(2)}\) in Sweedler notation [89]. The coproduct is coassociative in that

\[
(\Delta\otimes\iota)\Delta=(\iota\otimes\Delta)\Delta,
\]

so there is no ambiguity in writing \(\Delta^{[3]}(x)=\sum_{(x)}x_{(1)}\otimes x_{(2)}\otimes x_{(3)}\). Similarly, \(\Delta ^{[a]}:\mathcal{H}\to\mathcal{H}^{\otimes a}\) denotes the \(a\)-fold coproduct, where \(\Delta\) is applied \(a-1\) times, to any one tensor-factor at each stage; formally \(\Delta^{[a]}=(\iota\otimes\cdots\otimes\iota\otimes\Delta)\Delta^{[a-1]}\). The Hopf algebra \(\mathcal{H}\) is cocommutative if \(\sum_{(x)}x_{(1)}\otimes x_{(2)}=\sum_{(x)}x_{(2)}\otimes x_{(1)}\); in other words, an expression in Sweedler notation is unchanged when the indices permute. An element \(x\) of \(\mathcal{H}\) is primitive if \(\Delta(x)=1\otimes x+x\otimes 1\).

The product and coproduct have to be compatible so Δ is an algebra homomorphism, where multiplication on \(\mathcal{H}\otimes \mathcal{H}\) is componentwise; in Sweedler notation this says Δ(xy)=∑(x),(y) x (1) y (1)⊗x (2) y (2). All of the algebras considered here are graded and connected, i.e., \(\mathcal{H}=\bigoplus_{i=0}^{\infty}\mathcal{H}_{i}\) with \(\mathcal {H}_{0}=k\) and \(\mathcal{H}_{n}\) finite-dimensional. The product and coproduct must respect the grading so \(\mathcal{H}_{i}\mathcal{H}_{j}\subseteq\mathcal{H}_{i+j}\), and \(x\in\mathcal{H}_{n}\) implies \(\Delta(x)\in\bigoplus_{j=0}^{n}\mathcal{H}_{j}\otimes\mathcal {H}_{n-j}\). There are a few more axioms concerning a counit map and an antipode (automatic in the graded case); for the present paper, the most important is that the counit is zero on elements of positive degree, so, by the coalgebra axioms, \(\bar{\Delta}(x):=\Delta(x) - 1 \otimes x - x \otimes1 \in\bigoplus_{j=1}^{n-1}\mathcal{H}_{j}\otimes\mathcal{H}_{n-j}\), for \(x\in\mathcal{H}_{n}\). The free associative algebra and the algebra of symmetric functions, discussed in Sect. 1, are examples of graded Hopf algebras.

The subject begins in topology when H. Hopf realized that the presence of the coproduct leads to nice classification theorems which allowed him to compute the cohomology of the classical groups in a unified manner. Topological aspects are still a basic topic [46] with many examples which may provide grist for the present mill. For example, the cohomology groups of the loops on a topological space form a Hopf algebra, and the homology of the loops on the suspension of a wedge of circles forms a Hopf algebra isomorphic to the free associative algebra of Example 1.1 [14].

Joni and Rota [49] realized that many combinatorial objects have a natural breaking structure which gives a coalgebra structure to the graded vector space on such objects. Often there is a compatible way of putting pieces together, extending this to a Hopf algebra structure. Often, either the assembling or the breaking process is symmetric, leading to commutative or cocommutative Hopf algebras, respectively. For example, the symmetric function algebra is commutative and cocommutative while the free associative algebra is just cocommutative.

The theory developed here is for graded commutative or cocommutative Hopf algebras with one extra condition: that there is a unique way to assemble any given collection of objects. This amounts to the requirement that the Hopf algebra is either a polynomial algebra as an algebra (and therefore commutative) or a free associative algebra as an algebra and cocommutative (and therefore noncommutative). (We write a free associative algebra to refer to the algebra structure only, as opposed to the free associative algebra, which has a specified coalgebra structure: namely, the generating elements are primitive.)

Increasingly sophisticated developments of combinatorial Hopf algebras are described by [4, 77–80] and [1]. This last is an expansive extension which unifies many common examples. Below are two examples that are prototypes for their Bosonic Fock functor and Full Fock functor constructions, respectively [1, Ch. 15]; they are also typical of constructions detailed in other sources.

Example 2.1

(The Hopf algebra of unlabeled graphs) [79, Sect. 12] [35, Sect. 3.2]

Let \(\bar{\mathcal{G}}\) be the vector space spanned by unlabeled simple graphs (no loops or multiple edges). This becomes a Hopf algebra with product disjoint union and coproduct

\[
\Delta(G)=\sum_{S}G_S\otimes G_{S^{\mathcal{C}}},
\]

where the sum is over subsets of vertices \(S\) with \(G_S\), \(G_{S^{\mathcal{C}}}\) the induced subgraphs. Graded by number of vertices, \(\bar{\mathcal{G}}\) is both commutative and cocommutative, and is a polynomial algebra as an algebra. The associated random walk is described in Example 3.1 below.

Example 2.2

(The noncommutative Hopf algebra of labeled graphs) [79, Sect. 13] [35, Sect. 3.3]

Let \(\mathcal{G}\) be the vector space spanned by the set of simple graphs where vertices are labeled {1,2,…,n}, for some n. The product of two graphs G 1 G 2 is their disjoint union, where the vertices of G 1 keep their labels, and the labels in G 2 are increased by the number of vertices in G 1. The coproduct is

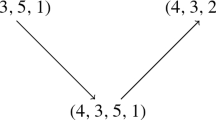

where we again sum over all subsets \(S\) of vertices of \(G\), and \(G_S\), \(G_{S^{\mathcal{C}}}\) are relabeled so the vertices in each keep the same relative order. For example,

where 1 denotes the empty graph. \(\mathcal{G}\) is noncommutative and cocommutative and a free associative algebra as an algebra; the associated random walk is detailed in Example 3.2. As the notation suggests, \(\bar{\mathcal{G}}\) is a quotient of \(\mathcal {G}\), obtained by forgetting the labels on the vertices.
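A small sketch of this coproduct, assuming a graph is encoded as a pair (number of vertices, frozenset of edges \((i,j)\) with \(i<j\)); this encoding and the names `induced`, `coproduct`, and `product` are ours. Besides listing the terms of \(\Delta\), it checks cocommutativity and that \(\Delta\) is multiplicative on one example.

```python
from collections import Counter
from itertools import combinations


def induced(graph, subset):
    """Induced subgraph on `subset`, relabeled 1..|subset| keeping relative order."""
    _, edges = graph
    relabel = {v: i + 1 for i, v in enumerate(sorted(subset))}
    kept = frozenset((relabel[a], relabel[b]) for a, b in edges
                     if a in relabel and b in relabel)
    return (len(relabel), kept)


def coproduct(graph):
    """Δ(G) = Σ_S G_S ⊗ G_{S^C}, as a Counter of (left, right) pairs."""
    n, _ = graph
    vertices = range(1, n + 1)
    out = Counter()
    for size in range(n + 1):
        for S in combinations(vertices, size):
            rest = [v for v in vertices if v not in S]
            out[(induced(graph, S), induced(graph, rest))] += 1
    return out


def product(g1, g2):
    """Disjoint union; the labels of g2 are shifted up by the size of g1."""
    (n1, e1), (n2, e2) = g1, g2
    return (n1 + n2, e1 | frozenset((a + n1, b + n1) for a, b in e2))


EMPTY = (0, frozenset())                 # the unit 1 (empty graph)
path = (3, frozenset({(1, 2), (2, 3)}))  # 1 - 2 - 3
edge = (2, frozenset({(1, 2)}))          # 1 - 2
```

For the path \(1-2-3\), the subsets \(\{1,2\}\) and \(\{2,3\}\) both give the term (edge) \(\otimes\) (single vertex), so that term appears with coefficient 2.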

Aguiar–Bergeron–Sottile [4] define a combinatorial Hopf algebra as a Hopf algebra \(\mathcal{H}\) with a character \(\zeta :\mathcal{H}\to k\) which is both additive and multiplicative. They prove a universality theorem: any combinatorial Hopf algebra has a unique character-preserving Hopf morphism into the algebra of quasisymmetric functions. They show that this unifies many ways of building generating functions. When applied to the Hopf algebra of graphs, their map gives the chromatic polynomial. In Sect. 3.7 we find that their map gives the probability of absorption for several of our Markov chains. See also the examples in Sect. 6.

A good introduction to Hopf algebras is in [82]. A useful standard reference is [64], and our development does not use much outside of her Chap. 1. The broad-ranging text [62] is aimed towards quantum groups but contains many examples useful here. Quantum groups are neither commutative nor cocommutative and need special treatment; see Example 6.3.

A key ingredient in our work is the Hopf-square map \(\Psi^2=m\Delta\); \(\Psi^2(x)\) is also written \(x^{[2]}\). In Sweedler notation, \(\Psi^2(x)=\sum_{(x)}x_{(1)}x_{(2)}\); in our combinatorial setting, it is useful to think of “pulling apart” \(x\) according to \(\Delta\), then using the product to put the pieces together. On graded Hopf algebras, \(\Psi^2\) preserves the grading and, appropriately normalized, gives a Markov chain on appropriate bases. See Sect. 3.2 for assumptions and details. The higher power maps \(\Psi^a=m^{[a]}\Delta^{[a]}\) will also be studied, since under our hypotheses they present no extra difficulty. For example, \(\Psi^3(x)=\sum_{(x)}x_{(1)}x_{(2)}x_{(3)}\). In the shuffling example, \(\Psi^a\) corresponds to the “\(a\)-shuffles” of [10]. A theorem of [90] shows that, for commutative or cocommutative Hopf algebras, the power rule holds: \((x^{[a]})^{[b]}=x^{[ab]}\), or \(\Psi^a\Psi^b=\Psi^{ab}\). See also the discussion in [56]. In shuffling language this becomes “an \(a\)-shuffle followed by a \(b\)-shuffle is an \(ab\)-shuffle” [10]. In general Hopf algebras this power law often fails [51]. Power maps are actively studied as part of a program to carry over to Hopf algebras some of the rich theory of groups. See [44, 59] and their references.
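For the free associative algebra, \(\Delta^{[a]}\) sends each primitive letter to one of \(a\) tensor slots and \(m^{[a]}\) concatenates the slots, so \(\Psi^a\) can be computed by brute force. The sketch below (the function name `psi` is ours) checks the power rule \(\Psi^a\Psi^b=\Psi^{ab}\) on a four-letter word; it is a finite check, not a proof.

```python
from collections import Counter
from itertools import product


def psi(a, elem):
    """Ψ^a = m^[a] Δ^[a] on the free associative algebra with primitive letters:
    Δ^[a] puts each letter in one of a tensor slots, m^[a] concatenates the slots."""
    out = Counter()
    for word, c in elem.items():
        k = len(word)
        for slots in product(range(a), repeat=k):
            new = "".join(word[i] for s in range(a) for i in range(k) if slots[i] == s)
            out[new] += c
    return out


w = Counter({"1234": 1})
print(psi(2, psi(3, w)) == psi(6, w))
```

In inverse-shuffle language, a label in \(\{0,\ldots,a-1\}\) followed by a label in \(\{0,\ldots,b-1\}\) amounts to a single label in \(\{0,\ldots,ab-1\}\), which is why the two sides agree term by term.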

2.3 Structure theory of a free associative algebra

The eigenvectors of our Markov chains are described using combinatorics related to the free associative algebra, as described in the self-contained [60, Chap. 5].

A word in an ordered alphabet is Lyndon if it is strictly smaller (in lexicographic order) than its cyclic rearrangements. So 1122 is Lyndon but 21 and 1212 are not. A basic fact [60, Th. 5.1.5] is that any word \(w\) has a unique Lyndon factorization, that is, \(w=l_1l_2\cdots l_k\) with each \(l_i\) a Lyndon word and \(l_1\geq l_2\geq\cdots\geq l_k\). Further, each Lyndon word \(l\) has a standard factorization: if \(l\) is not a single letter, then \(l=l_1l_2\) where each \(l_i\) is a non-trivial Lyndon word and \(l_2\) is the longest proper right factor of \(l\) that is Lyndon. (The standard factorization of a letter is just that letter by definition.) Thus \(13245=13\cdot245\). Using this, define, for Lyndon \(l\), its standard bracketing \(\lambda(l)\) recursively by \(\lambda(a)=a\) for a letter and \(\lambda(l)=[\lambda(l_1),\lambda(l_2)]\) for \(l=l_1l_2\) in standard factorization. As usual, \([x,y]=xy-yx\) for words \(x,y\). Thus

\[
\lambda(13245)=[\lambda(13),\lambda(245)]=[[1,3],[2,[4,5]]]
\]

and

\[
\lambda(1122)=[1,\lambda(122)]=[1,[[1,2],2]].
\]

Garsia and Reutenauer [37, Sect. 2] describe how to visualize the standard bracketing of a Lyndon word as a rooted binary tree: given a Lyndon word \(l\) with standard factorization \(l=l_1l_2\), inductively set \(T_l\) to be the tree with \(T_{l_{1}}\) as its left branch and \(T_{l_{2}}\) as its right branch. \(T_{13245}\) and \(T_{1122}\) are shown below.

Observe that a word \(w\) appears in the expansion of \(\lambda(l)\) only if, after exchanging the left and right branches at some vertices of \(T_l\), the leaves of \(T_l\), when read from left to right, spell out \(w\). The coefficient of \(w\) in \(\lambda(l)\) is then the signed number of ways to do this (the sign is the parity of the number of exchanges required). For example,

-

25413 has coefficient 1 in \(\lambda(13245)\) since the unique way to rearrange \(T_{13245}\) so the leaves spell 25413 is to exchange the branches at the root and the highest interior vertex;

-

21345 does not appear in \(\lambda(13245)\) since, however the branches of \(T_{13245}\) are exchanged, 2 must appear adjacent to either 4 or 5, which does not hold for 21345;

-

1221 has coefficient 0 in \(\lambda(1122)\) as, to make the leaves of \(T_{1122}\) spell 1221, we can either exchange branches at the root, or exchange branches at both of the other interior vertices. These two rearrangements have opposite signs, so the signed count of rearrangements is 0.
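The following sketch computes Lyndon words, standard factorizations, and the expansion of \(\lambda(l)\), assuming words are Python strings of single-character letters ordered by character code (so the digits 1 to 9 behave as in the text). The function names are ours; it reproduces the three coefficients listed above.

```python
def is_lyndon(w):
    """A word is Lyndon if it is strictly smaller than each proper cyclic rotation."""
    return all(w < w[i:] + w[:i] for i in range(1, len(w)))


def standard_factorization(l):
    """Split a Lyndon word l = l1 l2 with l2 its longest proper Lyndon right factor."""
    for i in range(1, len(l)):
        if is_lyndon(l[i:]):
            return l[:i], l[i:]
    raise ValueError("a single letter has no proper factorization")


def bracket(l):
    """Expand the standard bracketing λ(l) into {word: coefficient}."""
    if len(l) == 1:
        return {l: 1}
    l1, l2 = standard_factorization(l)
    left, right = bracket(l1), bracket(l2)
    out = {}
    for u, a in left.items():
        for v, b in right.items():
            out[u + v] = out.get(u + v, 0) + a * b  # the xy term of [x, y]
            out[v + u] = out.get(v + u, 0) - a * b  # the -yx term of [x, y]
    return {w: c for w, c in out.items() if c != 0}


print(standard_factorization("13245"), bracket("13245")["25413"])
```

Zero coefficients are dropped, so a word such as 1221, whose two rearrangements cancel, simply does not appear in `bracket("1122")`.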

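A final piece of notation is the following symmetrized product: let \(w=l_1l_2\cdots l_k\) in Lyndon factorization. Then set

\[
\operatorname{sym}(w)=\sum_{\sigma\in S_k}\lambda(l_{\sigma(1)})\lambda(l_{\sigma(2)})\cdots\lambda(l_{\sigma(k)}).
\]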

Viewing \(\operatorname{sym}(w)\) as a polynomial in the letters \(w_1,w_2,\ldots,w_l\) will be useful for Theorem 3.16.

Garsia and Reutenauer’s tree construction can be extended to visualize \(\operatorname{sym}(w)\), using what Barcelo and Bergeron [9] call decreasing Lyndon hedgerows, which simply consist of \(T_{l_{1}},T_{l_{2}},\ldots,T_{l_{k}}\) placed in a row. Denote this as \(T_w\) also. The example \(T_{35142}\) is shown below.

We can again express the coefficient of \(w'\) in \(\operatorname {sym}(w)\) as the signed number of ways to rearrange \(T_w\) so the leaves spell \(w'\). Now there are two types of allowed moves: exchanging the left and right branches at a vertex, and permuting the trees of the hedgerow. The latter move does not come with a sign. Thus 14253 has coefficient −1 in \(\operatorname{sym}(35142)\), as the unique rearrangement of \(T_{35142}\) which spells 14253 requires transposing the trees and permuting the branches labeled 3 and 5.

It is clear from this pictorial description that every term appearing in \(\operatorname{sym}(w)\) is a permutation of the letters in \(w\). Garsia and Reutenauer [37, Th. 5.2] show that \(\{\operatorname{sym}(w)\}\) form a basis for a free associative algebra. This will turn out to be a left eigenbasis for inverse riffle shuffling, and similar theorems hold for other Hopf algebras.
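\(\operatorname{sym}(w)\) can be expanded the same way. The sketch below obtains the Lyndon factorization with Duval's algorithm (a standard linear-time method that we substitute here; it is not discussed in the text) and reuses the bracketing recursion, again with single-character letters and hypothetical function names.

```python
from itertools import permutations


def lyndon_factorization(w):
    """Duval's algorithm: w = l1 l2 ... lk with Lyndon factors l1 >= l2 >= ... >= lk."""
    factors, i, n = [], 0, len(w)
    while i < n:
        j, k = i + 1, i
        while j < n and w[k] <= w[j]:
            k = i if w[k] < w[j] else k + 1
            j += 1
        while i <= k:
            factors.append(w[i:i + j - k])
            i += j - k
    return factors


def is_lyndon(w):
    return all(w < w[i:] + w[:i] for i in range(1, len(w)))


def bracket(l):
    """Standard bracketing λ(l) of a Lyndon word, as {word: coefficient}."""
    if len(l) == 1:
        return {l: 1}
    i = next(i for i in range(1, len(l)) if is_lyndon(l[i:]))  # longest Lyndon suffix
    left, right = bracket(l[:i]), bracket(l[i:])
    out = {}
    for u, a in left.items():
        for v, b in right.items():
            out[u + v] = out.get(u + v, 0) + a * b
            out[v + u] = out.get(v + u, 0) - a * b
    return {w: c for w, c in out.items() if c != 0}


def sym(w):
    """sym(w) = Σ_σ λ(l_σ(1)) ... λ(l_σ(k)) over all orderings of the Lyndon factors."""
    out = {}
    for order in permutations(lyndon_factorization(w)):
        term = {"": 1}
        for l in order:
            new = {}
            for u, a in term.items():
                for v, b in bracket(l).items():
                    new[u + v] = new.get(u + v, 0) + a * b
            term = new
        for u, c in term.items():
            out[u] = out.get(u, 0) + c
    return {u: c for u, c in out.items() if c != 0}


print(lyndon_factorization("35142"), sym("35142")["14253"])
```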

2.4 Symmetric functions and beyond

A basic object of study is the vector space \(\varLambda_{k}^{n}\) of homogeneous symmetric polynomials in \(k\) variables of degree \(n\). The direct sum \(\varLambda_{k}=\bigoplus_{n=0}^{\infty}\varLambda_{k}^{n}\) forms a graded algebra with familiar bases: the monomial (\(m_\lambda\)), elementary (\(e_\lambda\)), homogeneous (\(h_\lambda\)), and power sums (\(p_\lambda\)). For example, \(e_2(x_1,\ldots,x_k)=\sum_{1\leq i<j\leq k}x_ix_j\) and for a partition \(\lambda=(\lambda_1\geq\lambda_2\geq\cdots\geq\lambda_l>0)\) with \(\lambda_1+\cdots+\lambda_l=n\), \(e_{\lambda}=e_{\lambda_{1}}e_{\lambda_{2}}\cdots e_{\lambda_{l}}\). As \(\lambda\) ranges over partitions of \(n\), \(\{e_\lambda\}\) form a basis for \(\varLambda_{k}^{n}\), from which we construct the rock-breaking chain of Example 1.2. Splendid accounts of symmetric function theory appear in [61] and [86]. A variety of Hopf algebra techniques are woven into these topics, as emphasized by [38] and [96]. The comprehensive account of noncommutative symmetric functions [39] and its follow-ups furthers the deep connection between combinatorics and Hopf algebras. However, this paper will only involve its dual, the algebra of quasisymmetric functions, as they encode information about absorption rates of our chains; see Sect. 3.7. A basis of this algebra is given by the monomial quasisymmetric functions: for a composition \(\alpha=(\alpha_1,\ldots,\alpha_k)\), define \(M_{\alpha}=\sum_{i_{1} < i_{2} < \cdots<i_{k}} x_{i_{1}}^{\alpha_{1}} \cdots x_{i_{k}}^{\alpha_{k}}\). Further details are in [86, Sect. 7.19].
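To make these definitions concrete, the sketch below evaluates \(M_\alpha\) at numerical values of finitely many variables (a choice made purely for illustration; the function names are ours). Evaluating at \((1,2,3)\) and at \((3,2,1)\) shows that \(M_{(2,1)}\) is not symmetric, while \(M_{(2,1)}+M_{(1,2)}\) is the monomial symmetric function \(m_{(2,1)}\) and \(e_2=M_{(1,1)}\).

```python
from itertools import combinations
from math import prod


def monomial_quasisymmetric(alpha, xs):
    """M_alpha(x_1, ..., x_m): sum over i_1 < ... < i_k of
    x_{i_1}^alpha_1 * ... * x_{i_k}^alpha_k."""
    return sum(prod(x ** a for x, a in zip(chosen, alpha))
               for chosen in combinations(xs, len(alpha)))


def elementary(i, xs):
    """e_i is the quasisymmetric function M_(1, ..., 1) with i ones."""
    return monomial_quasisymmetric((1,) * i, xs)


xs = [1, 2, 3]
print(elementary(2, xs), monomial_quasisymmetric((2, 1), xs),
      monomial_quasisymmetric((2, 1), [3, 2, 1]))
```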

3 Theory

3.1 Introduction

This section states and proves our main theorems. This introduction sets out definitions. Section 3.2 develops the reweighting schemes needed to have the Hopf-square maps give rise to Markov chains. Section 3.3 explains that these chains are often acyclic. Section 3.4 addresses a symmetrization lemma that we will use in Sections 3.5 and 3.6 to find descriptions of some left and right eigenvectors, respectively, for such chains. Section 3.7 determines the stationary distributions and gives expressions for the chance of absorption in terms of generalized chromatic polynomials. Applications of these theorems are in the last three sections of this paper.

As mentioned at the end of Sect. 2.2, we will be concerned with connected, graded (by positive integers) Hopf algebras \(\mathcal{H}\) with a distinguished basis \(\mathcal{B}\) satisfying one of two “freeness” conditions (in both cases, the number of generators may be finite or infinite):

-

1.

\(\mathcal{H}=\mathbb{R} [c_{1},c_{2},\ldots ]\) as an algebra (i.e., \(\mathcal{H}\) is a polynomial algebra) and \(\mathcal {B}= \{ c_{1}^{n_{1}}c_{2}^{n_{2}}\cdots\mid n_{i}\in\mathbb{N} \}\), the basis of monomials. The c i may have any degree, and there is no constraint on the coalgebra structure. This will give rise to a Markov chain on combinatorial objects where assembling is symmetric and deterministic.

-

2.

\(\mathcal{H}\) is cocommutative, \(\mathcal{H}=\mathbb{R}\langle c_{1},c_{2},\ldots \rangle \) as an algebra, (i.e., \(\mathcal{H}\) is a free associative algebra) and \(\mathcal{B}= \{ c_{i_{1}}c_{i_{2}}\cdots \mid i_{j}\in\mathbb{N} \}\), the basis of words. The c i may have any degree, and do not need to be primitive. This will give rise to a Markov chain on combinatorial objects where pulling apart is symmetric, assembling is non-symmetric and deterministic.

By the Cartier–Milnor–Moore theorem [19, 63], any graded connected commutative Hopf algebra has a basis which satisfies the first condition. However, we will not make use of this, since the two conditions above are reasonable properties for many combinatorial Hopf algebras and their canonical bases. For example, the Hopf algebra of symmetric functions, with the basis of elementary symmetric functions e λ , satisfies the first condition.

Write \(\mathcal{H}_{n}\) for the subspace of degree \(n\) in \(\mathcal {H}\), and \(\mathcal{B}_{n}\) for the degree \(n\) basis elements. The generators \(c_i\) can be identified as those basis elements which are not the non-trivial product of basis elements; in other words, generators cannot be obtained by assembling objects of lower degree. Thus, all basis elements of degree one are generators, but there are usually generators of higher degree; see Examples 3.1 and 3.2 below. One can view the conditions 1 and 2 above as requiring the basis elements to have unique factorization into generators, allowing the convenient view of \(b \in\mathcal{B}\) as a word \(b=c_1c_2\cdots c_l\). Its length \(l(b)\) is then well defined: it is the number of generators one needs to assemble together to produce \(b\). Some properties of the length are developed in Sect. 3.3. For a noncommutative Hopf algebra, it is useful to choose a linear order on the set of generators refining the ordering by degree, i.e., if \(\deg(c)<\deg(c')\), then \(c<c'\). This allows the construction of the Lyndon factorization and standard bracketing of a basis element, as in Sect. 2.3. Example 3.17 demonstrates such calculations.

The \(a\)th Hopf-power map is \(\varPsi^{a}:=m^{[a]}\Delta^{[a]}\), the \(a\)-fold coproduct followed by the \(a\)-fold product. These power maps are the central object of study of [65–67]. Intuitively, \(\varPsi^{a}\) corresponds to breaking an object into \(a\) pieces (some possibly empty) in all possible ways and then reassembling them. The \(\varPsi^{a}\) preserve degree, thus mapping \(\mathcal{H}_{n}\) to \(\mathcal{H}_{n}\).

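As noted in [66], the power map \(\varPsi^{a}\) is an algebra homomorphism if \(\mathcal{H}\) is commutative:

\(\varPsi^{a}(xy)=\varPsi^{a}(x)\varPsi^{a}(y),\)

and a coalgebra homomorphism if \(\mathcal{H}\) is cocommutative:

\(\Delta\varPsi^{a}(x)=(\varPsi^{a}\otimes\varPsi^{a})\Delta(x).\)
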
Only the former will be necessary for the rest of this section.

3.2 The Markov chain connection

The power maps can sometimes be interpreted as a natural Markov chain on the basis elements \(\mathcal{B}_{n}\) of \(\mathcal{H}_{n}\).

Example 3.1

(The Hopf algebra of unlabeled graphs, continuing from Example 2.1)

The set of all unlabeled simple graphs gives rise to a Hopf algebra \(\bar{\mathcal{G}}\) with disjoint union as product and

\(\Delta(G)=\sum_{S\subseteq V(G)}G_{S}\otimes G_{S^{\mathcal{C}}},\)

where the sum is over subsets of vertices \(S\), with \(G_{S}\), \(G_{S^{\mathcal{C}}}\) the induced subgraphs. Graded by the size of the vertex set, \(\bar{\mathcal{G}}\) is a commutative and cocommutative polynomial Hopf algebra with basis \(\mathcal{B}\) consisting of all graphs. The generators are precisely the connected graphs, and the length of a graph is its number of connected components.

The resulting Markov chain on graphs with n vertices evolves as follows: from G, color the vertices of G red or blue, independently with probability 1/2. Erase any edge with opposite colored vertices. This gives one step of the chain; the process terminates when there are no edges. Observe that each connected component breaks independently; that Δ is an algebra homomorphism ensures that, for any Hopf algebra, the generators break independently. The analogous Hopf algebra of simplicial complexes is discussed in Sect. 6.
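The step just described can be simulated directly. The following Python sketch is illustrative only: it assumes a graph on vertices 0, …, n−1 stored as a set of edges, and the helper names (`graph_step`, `num_components`, `run_until_absorbed`) are hypothetical. It runs the chain until no edges remain and records the number of connected components (the length) after each step, which never decreases.

```python
import random


def graph_step(n, edges, rng):
    """One step of the chain on unlabeled graphs (Example 3.1).

    Each of the n vertices is colored red or blue independently with
    probability 1/2, and every edge whose endpoints got different colors
    is erased. Vertices are 0..n-1; edges are pairs (u, v).
    """
    red = [rng.random() < 0.5 for _ in range(n)]
    return {(u, v) for (u, v) in edges if red[u] == red[v]}


def num_components(n, edges):
    """Number of connected components, i.e. the length of the graph."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for u, v in edges:
        parent[find(u)] = find(v)
    return len({find(x) for x in range(n)})


def run_until_absorbed(n, edges, seed=0):
    """Run the chain until the edgeless graph is reached.

    Returns the list of component counts observed along the way.
    """
    rng = random.Random(seed)
    lengths = [num_components(n, edges)]
    while edges:
        edges = graph_step(n, edges, rng)
        lengths.append(num_components(n, edges))
    return lengths


if __name__ == "__main__":
    complete4 = {(u, v) for u in range(4) for v in range(u + 1, 4)}
    print(run_until_absorbed(4, complete4, seed=1))
```

Storing only the edge set keeps the step a one-line filter; connected components are recomputed with a small union-find.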

Example 3.2

(The noncommutative Hopf algebra of labeled graphs, continuing from Example 2.2)

Let \(\mathcal{G}\) be the linear span of the simple graphs whose vertices are labeled \(\{1,2,\ldots,n\}\) for some \(n\). The product of two graphs \(G_{1}G_{2}\) is their disjoint union, where the vertices of \(G_{1}\) keep their labels, and the labels in \(G_{2}\) are increased by the number of vertices in \(G_{1}\). The coproduct is

\(\Delta(G)=\sum_{S\subseteq V(G)}G_{S}\otimes G_{S^{\mathcal{C}}},\)
where the sum again runs over all subsets \(S\) of vertices of \(G\), and \(G_{S}\), \(G_{S^{\mathcal{C}}}\) are relabeled so the vertices in each keep the same relative order. An example of a coproduct calculation is in Example 2.2. \(\mathcal{G}\) is cocommutative and a free associative algebra; its distinguished basis \(\mathcal{B}\) is the set of all graphs. A graph in \(\mathcal{G}\) on \(n\) vertices is a product if and only if there is an \(i\) with \(1\leq i<n\) such that no edge connects a vertex with label \(\leq i\) to a vertex with label \(>i\). Thus, all connected graphs are generators, but there are non-connected generators, such as the graph on vertices \(\{1,2,3\}\) whose only edge joins 1 and 3.

Each step of the associated random walk on \(\mathcal{B}_{n}\), the graphs with \(n\) vertices, has this description: from \(G\), color the vertices of \(G\) red or blue, independently with probability 1/2. Suppose \(r\) vertices received the color red; now erase any edge with opposite colored vertices, and relabel so the red vertices are \(1,2,\ldots,r\) and the blue vertices are \(r+1,r+2,\ldots,n\), keeping their relative orders. For example, starting at the complete graph on three vertices, the chain reaches each of the graphs shown below with probability 1/8:

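So, forgetting the colors of the vertices, the chain moves from the complete graph \(K_{3}\) back to \(K_{3}\) with probability \(2/8=1/4\) (all vertices of one color), to the graph whose only edge joins 2 and 3 with probability \(3/8\) (exactly one red vertex), and to the graph whose only edge joins 1 and 2 with probability \(3/8\) (exactly two red vertices).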
As with \(\bar{\mathcal{G}}\), the chain on \(\mathcal{G}_{n}\) stops when all edges have been removed.
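For a small case the one-step law can be computed exactly by enumerating all \(2^{n}\) colorings. The Python sketch below does this; the representation (a graph as a frozenset of edges \((i,j)\) with \(i<j\) on labels \(1,\ldots,n\)) and the function name are illustrative choices, not notation from the text. Started at the complete graph on three vertices, it returns probability 1/4 for the complete graph itself and 3/8 for each of the two one-edge graphs.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def labeled_step_distribution(n, edges):
    """Exact one-step distribution of the chain on labeled graphs (Example 3.2).

    For every red/blue coloring (each with probability 2^-n), erase edges with
    differently colored endpoints, then relabel so the red vertices become
    1..r and the blue vertices r+1..n, each keeping their relative order.
    """
    dist = Counter()
    for colors in product("RB", repeat=n):
        red = [v for v in range(1, n + 1) if colors[v - 1] == "R"]
        blue = [v for v in range(1, n + 1) if colors[v - 1] == "B"]
        new_label = {v: k for k, v in enumerate(red + blue, start=1)}
        kept = frozenset(
            tuple(sorted((new_label[i], new_label[j])))
            for (i, j) in edges
            if colors[i - 1] == colors[j - 1]
        )
        dist[kept] += Fraction(1, 2 ** n)
    return dict(dist)


if __name__ == "__main__":
    triangle = frozenset({(1, 2), (1, 3), (2, 3)})
    for graph, p in labeled_step_distribution(3, triangle).items():
        print(sorted(graph), p)
```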

When is such a probabilistic interpretation possible? To begin, the coefficients of \(m\Delta(b)\) must be non-negative real numbers for \(b\in\mathcal{B}\). This usually holds for combinatorial Hopf algebras, but the free associative algebra and the above algebras of graphs have an additional desirable property: for any \(b\in\mathcal{B}\), the coefficients of \(\varPsi^{2}(b)\) sum to \(2^{\deg(b)}\), regardless of \(b\). Thus the operator \(\frac{1}{2^{n}}\varPsi^{2}(b)=\sum_{b'}K(b,b')b'\) defines a Markov transition matrix on basis elements of degree \(n\). Indeed, the coefficients of \(\varPsi^{a}(b)\) sum to \(a^{\deg(b)}\) for all \(a\), so \(\frac{1}{a^{n}}\varPsi^{a}(b)=\sum_{b'}K_{a}(b,b')b'\) defines a transition matrix \(K_{a}\). For other Hopf algebras, the sum of the coefficients in \(\varPsi^{2}(b)\) may depend on \(b\), so simply scaling \(\varPsi^{2}\) does not always yield a transition matrix.
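For the free associative algebra of Example 1.1, where the generators are primitive, \(\varPsi^{a}\) applied to a word sends each letter to one of \(a\) tensor-factors and then concatenates the factors in order, so its \(a^{\deg(b)}\) terms can be enumerated directly. The Python sketch below (function name hypothetical) does this and checks that the coefficients sum to \(a^{\deg(b)}\).

```python
from collections import Counter
from itertools import product


def hopf_power_word(word, a):
    """Psi^a = m^[a] Delta^[a] on a word whose letters are primitive.

    Each letter is sent to one of a tensor-factors (Delta(x) = 1 (x) x + x (x) 1);
    multiplying the factors back together concatenates the subwords in order.
    Returns a Counter mapping words (tuples) to integer coefficients.
    """
    result = Counter()
    for assignment in product(range(a), repeat=len(word)):
        pieces = [[x for x, f in zip(word, assignment) if f == j] for j in range(a)]
        result[tuple(x for piece in pieces for x in piece)] += 1
    return result


if __name__ == "__main__":
    w = ("x1", "x2", "x3")
    print(sum(hopf_power_word(w, 2).values()))  # 8 = 2**3
```

Dividing these coefficients by \(a^{n}\) gives the inverse \(a\)-shuffle probabilities, so no rescaling of the basis is needed here.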

Zhou’s rephrasing [97, Lemma 4.4.1.1] of the Doob transform [58, Sect. 17.6.1] provides a solution: if K is a matrix with non-negative entries and ϕ is a strictly positive right eigenfunction of K with eigenvalue 1, then \(\hat{K}(b,b'):= \phi(b)^{-1}K(b,b')\phi(b')\) is a transition matrix. Here \(\hat{K}\) is the conjugate of K by the diagonal matrix whose entries are ϕ(b). Theorem 3.4 below gives conditions for such ϕ to exist, and explicitly constructs ϕ recursively; Corollary 3.5 then specifies a non-recursive definition of ϕ when there is a sole basis element of degree 1. The following example explains why this construction is natural.

Example 3.3

(Symmetric functions and rock-breaking)

Consider the algebra of symmetric functions with basis \(\{e_{\lambda}\}\), the elementary symmetric functions. The length \(l(e_{\lambda})\) is the number of parts in the partition \(\lambda\), and the generators are the partitions with a single part. The coproduct is defined by

\(\Delta(e_{\lambda})=\sum_{\lambda'}e_{\lambda'}\otimes e_{\lambda-\lambda'},\)

with the sum over all compositions \(\lambda'=\lambda_{1}',\lambda_{2}',\ldots,\lambda_{l}'\) with \(0\leq\lambda'_{i}\leq\lambda_{i}\), and \(\lambda-\lambda'\) is the composition \(\lambda_{1}-\lambda_{1}',\ldots,\lambda_{l}-\lambda_{l}'\) (here \(e_{0}=1\), and \(e_{\lambda'}:=e_{\lambda'_{1}}\cdots e_{\lambda'_{l}}\) for a composition \(\lambda'\)). When reordered, some parts may be empty and some parts may occur several times. There are \((\lambda_{1}+1)\cdots(\lambda_{l}+1)\) possible choices of \(\lambda'\), so the coefficients of \(\varPsi^{2}(e_{\lambda})\) sum to \((\lambda_{1}+1)\cdots(\lambda_{l}+1)\), which depends on \(\lambda\).

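Consider degree 2, where the basis elements are \(e_{1^{2}}\) and \(e_{2}\). For \(K\) such that \(\frac{1}{2^{2}}\varPsi^{2}(b)=\sum_{b'}K(b,b')b'\), since \(\varPsi^{2}(e_{1^{2}})=4e_{1^{2}}\) and \(\varPsi^{2}(e_{2})=2e_{2}+e_{1^{2}}\), ordering rows and columns as \(e_{1^{2}},e_{2}\) gives

\(K=\begin{pmatrix}1&0\\ \frac{1}{4}&\frac{1}{2}\end{pmatrix},\)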
which is not a transition matrix as the second row does not sum to 1. Resolve this by performing a diagonal change of basis: set \(\hat{e}_{1^{2}}=\phi(e_{1^{2}})^{-1}e_{1^{2}}\), \(\hat{e}_{2}=\phi(e_{2})^{-1}e_{2}\) for some positive function \(\phi:\mathcal{B}\rightarrow\mathbb{R}\), and consider \(\hat{K}\) with \(\frac{1}{2^{2}}\varPsi^{2}(\hat{b})=\sum_{\hat{b}'}\hat{K}(\hat{b},\hat{b}')\hat{b}'\). Since the first row of \(K\), corresponding to \(e_{1^{2}}\), poses no problems, set \(\phi(e_{1^{2}})=1\). In view of the upcoming theorem, it is better to think of this as \(\phi(e_{1^{2}})=(\phi(e_{1}))^{2}\) with \(\phi(e_{1})=1\). Equivalently, \(\hat{e}_{1^{2}}=\hat{e}_{1}^{2}\) with \(\hat{e}_{1}=e_{1}\). Turning attention to the second row, observe that \(\Delta(\hat{e}_{2})=\phi(e_{2})^{-1}(e_{2}\otimes1+e_{1}\otimes e_{1}+1\otimes e_{2})\), so \(\varPsi^{2}(\hat{e}_{2})=\hat{e}_{2}+\phi(e_{2})^{-1}\hat{e}_{1^{2}}+\hat{e}_{2}\), which means

\(\hat{K}=\begin{pmatrix}1&0\\ \frac{1}{4}\phi(e_{2})^{-1}&\frac{1}{2}\end{pmatrix},\)
so \(\hat{K}\) is a transition matrix if \(\frac{1}{4}\phi (e_{2})^{-1}+\frac{1}{2}=1\), i.e. if \(\phi(e_{2})= \frac{1}{2}\).

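Continue to degree 3, where the basis elements are \(e_{1^{3}}\), \(e_{12}\) and \(e_{3}\). Now define \(K\) such that \(\frac{1}{2^{3}}\varPsi^{2}(b)=\sum_{b'}K(b,b')b'\); since \(\varPsi^{2}(e_{1^{3}})=8e_{1^{3}}\), \(\varPsi^{2}(e_{12})=4e_{12}+2e_{1^{3}}\) and \(\varPsi^{2}(e_{3})=2e_{3}+2e_{12}\), ordering rows and columns as \(e_{1^{3}},e_{12},e_{3}\) gives

\(K=\begin{pmatrix}1&0&0\\ \frac{1}{4}&\frac{1}{2}&0\\ 0&\frac{1}{4}&\frac{1}{4}\end{pmatrix}.\)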
Again, look for \(\phi(e_{1^{3}})\), \(\phi(e_{12})\) and \(\phi(e_{3})\) so that \(\hat{K}\), defined by \(\frac{1}{2^{3}}\varPsi^{2}(\hat{b})=\sum_{\hat{b}'}\hat{K}(\hat{b},\hat{b}')\hat{b}'\), is a transition matrix, where \(\hat{e}_{1^{3}}=\phi(e_{1^{3}})^{-1}e_{1^{3}}\), \(\hat{e}_{12}=\phi(e_{12})^{-1}e_{12}\), \(\hat{e}_{3}=\phi(e_{3})^{-1}e_{3}\). Note that, taking \(\phi(e_{1^{3}})=(\phi(e_{1}))^{3}=1\) and \(\phi(e_{12})=\phi(e_{2})\phi(e_{1})=\frac{1}{2}\), the first two rows of \(\hat{K}\) sum to 1. View this as \(\hat{e}_{1^{3}}=\hat{e}_{1}^{3}\) and \(\hat{e}_{12}=\hat{e}_{2}\hat{e}_{1}\). Then, as \(\varPsi^{2}(\hat{e}_{3})=\phi(e_{3})^{-1}(e_{3}+e_{2}e_{1}+e_{1}e_{2}+e_{3})=\hat{e}_{3}+\frac{1}{2}\phi(e_{3})^{-1}\hat{e}_{12}+\frac{1}{2}\phi(e_{3})^{-1}\hat{e}_{12}+\hat{e}_{3}\), the transition matrix is given by

\(\hat{K}=\begin{pmatrix}1&0&0\\ \frac{1}{2}&\frac{1}{2}&0\\ 0&\frac{1}{8}\phi(e_{3})^{-1}&\frac{1}{4}\end{pmatrix},\)
and choosing \(\phi(e_{3})=\frac{1}{6}\) makes the third row sum to 1.
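The degree 2 and degree 3 computations above amount to solving \(K\phi=\phi\) and then conjugating. The Python sketch below, using exact rational arithmetic, takes the matrices \(K\) obtained from \(\varPsi^{2}(e_{1^{2}})=4e_{1^{2}}\), \(\varPsi^{2}(e_{2})=2e_{2}+e_{1^{2}}\), \(\varPsi^{2}(e_{12})=4e_{12}+2e_{1^{3}}\) and \(\varPsi^{2}(e_{3})=2e_{3}+2e_{12}\) (rows and columns ordered by decreasing length), solves for \(\phi\) by forward substitution, and applies the Doob transform \(\hat{K}(b,b')=\phi(b)^{-1}K(b,b')\phi(b')\). The helper names are hypothetical, and the substitution step assumes \(K\) is lower triangular with \(K(b,b)\neq1\) away from the first row.

```python
from fractions import Fraction as F


def solve_phi_lower_triangular(K):
    """Solve K phi = phi with phi[0] = 1 for lower-triangular K.

    Row i reads sum_{j<i} K[i][j] phi[j] + K[i][i] phi[i] = phi[i],
    so phi[i] = (sum_{j<i} K[i][j] phi[j]) / (1 - K[i][i]).
    Assumes K[0] = (1, 0, ..., 0) and K[i][i] != 1 for i > 0.
    """
    phi = [F(1)]
    for i in range(1, len(K)):
        off_diagonal = sum(K[i][j] * phi[j] for j in range(i))
        phi.append(off_diagonal / (1 - K[i][i]))
    return phi


def doob_transform(K, phi):
    """K_hat(b, b') = phi(b)^{-1} K(b, b') phi(b')."""
    size = len(K)
    return [[K[i][j] * phi[j] / phi[i] for j in range(size)] for i in range(size)]


# Rows/columns: (e_{1^2}, e_2) and (e_{1^3}, e_{12}, e_3).
K2 = [[F(1), F(0)],
      [F(1, 4), F(1, 2)]]
K3 = [[F(1), F(0), F(0)],
      [F(1, 4), F(1, 2), F(0)],
      [F(0), F(1, 4), F(1, 4)]]

for K in (K2, K3):
    phi = solve_phi_lower_triangular(K)
    K_hat = doob_transform(K, phi)
    # phi is (1, 1/2), then (1, 1/2, 1/6); every row sum of K_hat is 1
    print([str(p) for p in phi], [str(sum(row)) for row in K_hat])
```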

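Continuing, we find that \(\phi(e_{i})=\frac{1}{i!}\), so \(\hat{e}_{i}=i!\,e_{i}\); more generally, \(\hat{e}_{\lambda}=\prod_{i}(i!)^{a_{i}(\lambda)}e_{\lambda}\), with \(i\) appearing \(a_{i}(\lambda)\) times in \(\lambda\). Then, for example,

\(\Delta(\hat{e}_{n})=n!\sum_{i=0}^{n}e_{i}\otimes e_{n-i}=\sum_{i=0}^{n}\binom{n}{i}\hat{e}_{i}\otimes\hat{e}_{n-i}.\)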
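So, for any partition \(\lambda\) of \(n\),

\(m\Delta(\hat{e}_{\lambda})=\sum_{\lambda'\leq\lambda}\binom{\lambda_{1}}{\lambda'_{1}}\cdots\binom{\lambda_{l}}{\lambda'_{l}}\,\hat{e}_{\lambda'}\hat{e}_{\lambda-\lambda'},\)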
and the coefficients of \(m\Delta(\hat{e}_{\lambda})\) sum to \(\sum_{\lambda'\leq\lambda}\binom{\lambda_{1}}{\lambda'_{1}}\cdots\binom{\lambda_{l}}{\lambda'_{l}}=2^{\lambda_{1}}\cdots2^{\lambda_{l}}=2^{n}\), irrespective of \(\lambda\). Thus \(\frac{1}{2^{n}}m\Delta\) describes a transition matrix, which has the rock-breaking interpretation of Sect. 1.
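Reading off the binomial coefficients above, one step of the rescaled chain splits a rock of size \(k\) into pieces of sizes \(j\) and \(k-j\) with probability \(\binom{k}{j}/2^{k}\), independently over rocks. The Python sketch below (function name hypothetical, partitions stored as non-increasing tuples) computes the one-step law exactly; for \(\lambda=(3)\) it reproduces the third row of \(\hat{K}\) with \(\phi(e_{3})=1/6\), namely probability 1/4 to stay and 3/4 to move to \((2,1)\).

```python
from fractions import Fraction
from itertools import product
from math import comb


def rock_breaking_step(parts):
    """Exact one-step law of the rock-breaking chain (1/2^n) m Delta on e_hat.

    Each rock of size k independently splits into sizes j and k - j with
    probability C(k, j) / 2^k; empty pieces are dropped and the new state is
    sorted into a non-increasing tuple.
    """
    dist = {}
    for cut in product(*(range(k + 1) for k in parts)):
        prob = Fraction(1)
        pieces = []
        for k, j in zip(parts, cut):
            prob *= Fraction(comb(k, j), 2 ** k)
            pieces += [j, k - j]
        state = tuple(sorted((p for p in pieces if p > 0), reverse=True))
        dist[state] = dist.get(state, 0) + prob
    return dist


if __name__ == "__main__":
    for start in [(2,), (3,), (2, 1)]:
        print(start, rock_breaking_step(start))
```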

The following theorem shows that this algorithm works in many cases. Observe that, in the above example, it is the non-zero off-diagonal entries that change; the diagonal entries cannot be changed by rescaling the basis. Hence the algorithm would fail if some row had all off-diagonal entries equal to 0, and diagonal entry not equal to 1. This corresponds to the existence of \(b \in\mathcal{B}_{n}\) with \(\frac {1}{2^{n}}\varPsi^{2} (b)=\alpha b\) for some α≠1; the condition \(\bar{\Delta}(c):=\Delta(c) - 1 \otimes c - c\otimes1 \neq0\) below precisely prevents this. Intuitively, we are requiring that each generator of degree greater than one can be broken non-trivially. For an example where this condition fails, see Example 6.5.

Theorem 3.4

(Basis rescaling)

Let \(\mathcal{H}\) be a graded Hopf algebra over \(\mathbb{R}\) which is either a polynomial algebra or a free associative algebra that is cocommutative. Let \(\mathcal{B}\) denote the basis of monomials in the generators. Suppose that, for all generators \(c\) with \(\deg(c)>1\), all coefficients of \(\Delta(c)\) (in the \(\mathcal{B}\otimes\mathcal{B}\) basis) are non-negative and \(\bar{\Delta}(c)\neq0\). Let \(K_{a}\) be the transpose of the matrix of \(a^{-n}\varPsi^{a}\) with respect to the basis \(\mathcal{B}_{n}\); in other words, \(a^{-n}\varPsi^{a}(b)=\sum_{b'}K_{a}(b,b')b'\) (suppressing the dependence of \(K_{a}\) on \(n\)). Define, by induction on degree,

\(\hat{c}:=c\ \text{ if } \deg(c)=1,\qquad \hat{c}:=\frac{2^{\deg(c)}-2}{\sum_{b\neq c}\phi(b)\,2^{\deg(c)}K_{2}(c,b)}\,c\ \text{ if } \deg(c)>1,\qquad \hat{b}:=\hat{c}_{1}\cdots\hat{c}_{l}\ \text{ for } b=c_{1}\cdots c_{l},\)

where \(\phi(b)\) satisfies \(b=\phi(b)\hat{b}\). Write \(\hat{\mathcal{B}}:=\{\hat{b}\mid b\in\mathcal{B}\}\) and \(\hat{\mathcal{B}}_{n}:=\{\hat{b}\mid b\in\mathcal{B}_{n}\}\). Then the matrix of the \(a\)th power map with respect to the \(\hat{\mathcal{B}}_{n}\) basis, when transposed and multiplied by \(a^{-n}\), is a transition matrix. In other words, the operator \(\hat{K}_{a}\) on \(\mathcal{H}_{n}\), defined by \(a^{-n}\varPsi^{a}(\hat{b})=\sum_{b'}\hat{K}_{a}(\hat{b},\hat{b}')\hat{b}'=\sum_{b'}\phi(b)^{-1}K_{a}(b,b')\phi(b')\hat{b}'\), has \(\hat{K}_{a}(\hat{b},\hat{b}')\geq0\) and \(\sum_{b'}\hat{K}_{a}(\hat{b},\hat{b}')=1\) for all \(b\in\mathcal{B}_{n}\), and all \(a\geq1\) and \(n\geq0\) (the same scaling works simultaneously for all \(a\)).

Remarks

-

1.

Observe that, if b=xy, then the definition of \(\hat{b}\) ensures \(\hat{b}=\hat{x}\hat{y}\). Equivalently, ϕ is a multiplicative function.

-

2.

The definition of \(\hat{c}\) is not circular: since \(\mathcal{H}\) is graded with \(\mathcal{H}_{0}=\mathbb{R}\), the counit is zero on elements of positive degree so that \(\bar{\Delta}(c)\in\bigoplus_{j=1}^{\deg(c)-1}\mathcal{H}_{j}\otimes\mathcal{H}_{\deg(c)-j}\). Hence \(K_{2}(c,b)\) is non-zero only if \(b=c\) or \(l(b)>1\), so the denominator in the expression for \(\hat{c}\) only involves \(\phi(b)\) for \(b\) with \(l(b)>1\). Such \(b\) can be factorized as \(b=xy\) with \(\deg(x),\deg(y)<\deg(b)\), whence \(\phi(b)=\phi(x)\phi(y)\), so \(\hat{c}\) only depends on \(\phi(x)\) with \(\deg(x)<\deg(c)\).

Proof

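First note that \(\hat{K}_{2}(c,c)=\phi(c)^{-1}K_{2}(c,c)\phi(c)=K_{2}(c,c)=2^{1-\deg(c)}\), since \(m\Delta(c)=2c+m\bar{\Delta}(c)\) and \(\bar{\Delta}(c)\in\bigoplus_{j=1}^{\deg(c)-1}\mathcal{H}_{j}\otimes\mathcal{H}_{\deg(c)-j}\) means no \(c\) terms can occur in \(m\bar{\Delta}(c)\). (For \(\deg(c)=1\) this sum is empty, so \(c\) is primitive and \(\hat{K}_{2}(c,c)=1\).) So, for a generator \(c\) with \(\deg(c)>1\), the definition of \(\hat{c}\) gives

\(\sum_{b}\hat{K}_{2}(\hat{c},\hat{b})=2^{1-\deg(c)}+\phi(c)^{-1}\sum_{b\neq c}K_{2}(c,b)\phi(b)=2^{1-\deg(c)}+\frac{2^{\deg(c)}-2}{2^{\deg(c)}}=1,\)

as desired.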

Let \(\eta_{c}^{xy}\) denote the coefficients of Δ(c) in the \(\mathcal{B}\otimes\mathcal{B}\) basis, so \(\Delta(c)= \sum_{x,y\in \mathcal{B}}\eta_{c}^{xy}x\otimes y\). Then \(K_{2}(c,b)=2^{-\deg(c)}\sum_{xy=b}\eta_{c}^{xy}\), and

So, if \(b\) has factorization into generators \(b=c_{1}\cdots c_{l}\), then

so

Thus

as desired, where the third equality is due to multiplicativity of ϕ.

The above showed each row of \(\hat{K}_{2}\) sums to 1, which means \((1,1,\ldots,1)\) is a right eigenvector of \(\hat{K}_{2}\) of eigenvalue 1. \(\hat{K}_{a}\) describes \(\varPsi^{a}\) in the \(\hat{\mathcal{B}}\) basis, which is also a basis of monomials/words, in a rescaled set of generators \(\hat{c}\), so, by Theorems 3.19 and 3.20, the eigenspaces of \(\hat{K}_{a}\) do not depend on \(a\). Hence \((1,1,\ldots,1)\) is a right eigenvector of \(\hat{K}_{a}\) of eigenvalue 1 for all \(a\), thus each row of \(\hat{K}_{a}\) sums to 1 also.

Finally, to see that the entries of \(\hat{K}_{a}\) are non-negative, first extend the notation \(\eta_{c}^{xy}\) so \(\Delta^{[a]}(c)=\sum_{b_{1},\ldots,b_{a}}\eta_{c}^{b_{1},\ldots,b_{a}}b_{1}\otimes\cdots\otimes b_{a}\). As \(\Delta^{[a]}=(\iota\otimes\cdots\otimes\iota\otimes\Delta)\Delta^{[a-1]}\), it follows that \(\eta_{c}^{b_{1},\ldots,b_{a}}=\sum_{x}\eta_{c}^{b_{1},\ldots,b_{a-2},x}\eta_{x}^{b_{a-1},b_{a}}\), which inductively shows that \(\eta_{c}^{b_{1},\ldots,b_{a}}\geq0\) for all generators \(c\) and all \(b_{i}\in\mathcal{B}\). So, if \(b\) has factorization into generators \(b=c_{1}\cdots c_{l}\), then

where the sum is over all sets \(\{b_{i,j}\}_{i=1,j=1}^{i=l,j=a}\) such that the product \(b_{1,1}b_{2,1}\cdots b_{l,1}b_{1,2}\cdots b_{l,2}\cdots b_{1,a}\cdots b_{l,a}=b'\). Finally, \(\hat{K}_{a}(\hat{b},\hat{b}')=\phi(b)^{-1}K_{a}(b,b')\phi(b')\geq0\). □

Combinatorial Hopf algebras often have a single basis element of degree 1: for the algebra of symmetric functions, this is the unique partition of 1; for the Hopf algebra \(\mathcal{G}\) of graphs, this is the graph with a single vertex. After the latter example, denote this basis element by \(\bullet\). Then there is a simpler definition of the eigenfunction \(\phi\), and hence \(\hat{b}\) and \(\hat{K}\), in terms of \(\eta_{b}^{b_{1},\ldots,b_{r}}\), the coefficient of \(b_{1}\otimes\cdots\otimes b_{r}\) in \(\Delta^{[r]}(b)\):

Corollary 3.5

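Suppose that, in addition to the hypotheses of Theorem 3.4, \(\mathcal{B}_{1}=\{\bullet\}\). Then \(\hat{b}=\frac{(\deg b)!}{\eta_{b}^{\bullet,\ldots,\bullet}}b\), so \(\hat{K}_{a}\) is defined by

\(\hat{K}_{a}(\hat{b},\hat{b}')=a^{-n}\,\frac{\eta_{b'}^{\bullet,\ldots,\bullet}}{\eta_{b}^{\bullet,\ldots,\bullet}}\times\big(\text{coefficient of } b' \text{ in } \varPsi^{a}(b)\big).\)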
Proof

Work on \(\mathcal{H}_{n}\) for a fixed degree \(n\). Recall that \(\phi\) is a right eigenvector of \(K_{a}\) of eigenvalue 1, and hence, by the notation of Sect. 3.6, an eigenvector of \(\varPsi^{*a}\) of eigenvalue \(a^{n}\). By Theorems 3.19 and 3.20, this eigenspace is spanned by \(f_{b}\) for \(b\) with length \(n\). Then \(\mathcal{B}_{1}=\{\bullet\}\) forces \(b=\bullet^{n}\), so \(f_{\bullet^{n}}(b')=\frac{1}{n!}\eta_{b'}^{\bullet,\ldots,\bullet}\) spans the \(a^{n}\)-eigenspace of \(\varPsi^{*a}\). Consequently, \(\phi\) is a multiple of \(f_{\bullet^{n}}\). To determine this multiplicative factor, observe that Theorem 3.4 defines \(\phi(\bullet)\) to be 1, so \(\phi(\bullet^{n})=1\), and \(f_{\bullet^{n}}(\bullet^{n})=1\) also, so \(\phi=f_{\bullet^{n}}\). As \(b=\phi(b)\hat{b}\), this gives \(\hat{b}=\frac{n!}{\eta_{b}^{\bullet,\ldots,\bullet}}b\). □
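For the basis \(\{e_{\lambda}\}\), where \(\bullet=e_{1}\), the coefficient \(\eta_{e_{\lambda}}^{\bullet,\ldots,\bullet}\) can be checked by brute force. Since \(\Delta^{[n]}(e_{k})=\sum_{k_{1}+\cdots+k_{n}=k}e_{k_{1}}\otimes\cdots\otimes e_{k_{n}}\) and \(\Delta^{[n]}\) is multiplicative, one counts the ways to give each part \(\lambda_{i}\) a 0/1 vector of length \(n\) with \(\lambda_{i}\) ones so that every tensor slot receives exactly one \(e_{1}\). The Python sketch below (function name hypothetical) confirms \(n!/\eta_{e_{\lambda}}^{\bullet,\ldots,\bullet}=\lambda_{1}!\cdots\lambda_{l}!\), in agreement with \(\hat{e}_{\lambda}=\prod_{i}(i!)^{a_{i}(\lambda)}e_{\lambda}\) from Example 3.3.

```python
from itertools import product
from math import factorial, prod


def eta_all_bullets(parts):
    """Coefficient of e_1 (x) ... (x) e_1 (n = sum(parts) factors) in Delta^[n](e_lambda).

    A slot ends up holding e_1 exactly when one part contributes e_1 there and
    all other parts contribute e_0 = 1, so each part lambda_i must choose a
    0/1 vector with lambda_i ones, and the chosen vectors must cover every
    slot exactly once.
    """
    n = sum(parts)
    count = 0
    for vectors in product(*(product((0, 1), repeat=n) for _ in parts)):
        right_sizes = all(sum(v) == k for v, k in zip(vectors, parts))
        exact_cover = all(sum(column) == 1 for column in zip(*vectors))
        if right_sizes and exact_cover:
            count += 1
    return count


if __name__ == "__main__":
    for parts in [(2,), (2, 1), (3, 1), (2, 2)]:
        n = sum(parts)
        print(parts, eta_all_bullets(parts), factorial(n) // eta_all_bullets(parts))
```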

3.3 Acyclicity

Observe that the rock-breaking chain (Examples 1.2 and 3.3) is acyclic: it can never return to a state it has left, because the only way to leave a state is to break the rocks into more pieces. More specifically, at each step the chain either stays at the same partition or moves to a partition which refines the current state; as refinement of partitions is a partial order, the chain cannot return to a state it has left. The same is true for the chain on unlabeled graphs (Example 3.1): the number of connected components increases whenever the state changes, and the chain never returns to a previous state. Such behavior can be explained by the way the length changes under the product and coproduct. (Recall that the length \(l(b)\) is the number of factors in the unique factorization of \(b\) into generators.) Define a relation on \(\mathcal{B}\) by \(b\rightarrow b'\) if \(b'\) appears in \(\varPsi^{a}(b)\) for some \(a\). If \(\varPsi^{a}\) induces a Markov chain on \(\mathcal{B}_{n}\), then this precisely says that \(b'\) is accessible from \(b\).

Lemma 3.6

Let \(b,b_{i},b_{(i)}\) be monomials/words in a Hopf algebra which is either a polynomial algebra or a free associative algebra that is cocommutative. Then

-

(i)

\(l(b_{1}\cdots b_{a})=l(b_{1})+\cdots+l(b_{a})\);

-

(ii)

For any summand \(b_{(1)}\otimes\cdots\otimes b_{(a)}\) in \(\Delta^{[a]}(b)\), \(l(b_{(1)})+\cdots+l(b_{(a)})\geq l(b)\);

-

(iii)

if \(b\rightarrow b'\), then \(l(b')\geq l(b)\).

Proof

(i) is clear from the definition of length.

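Prove (ii) by induction on \(l(b)\). The claim is immediate if \(b\) is a generator: then \(l(b)=1\), each \(l(b_{(i)})\geq0\), and not all \(l(b_{(i)})\) can be zero, since the degrees of the \(b_{(i)}\) sum to \(\deg(b)>0\). If \(b\) factorizes non-trivially as \(b=xy\), then, as \(\Delta^{[a]}(b)=\Delta^{[a]}(x)\Delta^{[a]}(y)\), it must be the case that \(b_{(i)}=x_{(i)}y_{(i)}\), for some \(x_{(1)}\otimes\cdots\otimes x_{(a)}\) in \(\Delta^{[a]}(x)\) and \(y_{(1)}\otimes\cdots\otimes y_{(a)}\) in \(\Delta^{[a]}(y)\). So \(l(b_{(1)})+\cdots+l(b_{(a)})=l(x_{(1)})+\cdots+l(x_{(a)})+l(y_{(1)})+\cdots+l(y_{(a)})\) by (i), and by the inductive hypothesis, this is at least \(l(x)+l(y)=l(b)\).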

(iii) follows trivially from (i) and (ii): if \(b\rightarrow b'\), then \(b'=b_{(1)}\cdots b_{(a)}\) for a term \(b_{(1)}\otimes\cdots\otimes b_{(a)}\) in \(\Delta^{[a]}(b)\). So \(l(b')=l(b_{(1)})+\cdots+l(b_{(a)})\geq l(b)\). □

If \(\mathcal{H}\) is a polynomial algebra, more is true. The following proposition explains why chains built from polynomial algebras (i.e., with deterministic and symmetric assembling) are always acyclic; in probability language, it says that, if the current state is built from \(l\) generators, then, with probability \(a^{l-n}\), the chain stays at this state; otherwise, it moves to a state built from more generators. Hence, if the states are totally ordered so as to refine the partial ordering by length, then the transition matrices are upper-triangular with \(a^{l-n}\) on the main diagonal.
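For the rock-breaking chain the diagonal entry \(a^{l-n}\) can be checked directly. Assuming, as in Example 3.3, that on the rescaled basis \(a^{-n}\varPsi^{a}\) splits a rock of size \(k\) into \((k_{1},\ldots,k_{a})\) with multinomial probability \(\binom{k}{k_{1},\ldots,k_{a}}a^{-k}\) (the \(a\)-fold analogue of the binomial weights there), the Python sketch below computes one step exactly and confirms that the chain stays put with probability \(a^{l-n}\) and otherwise moves to a partition with more parts. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod


def compositions(k, a):
    """All tuples (k_1, ..., k_a) of non-negative integers summing to k."""
    if a == 1:
        yield (k,)
        return
    for first in range(k + 1):
        for rest in compositions(k - first, a - 1):
            yield (first,) + rest


def a_rock_breaking_step(parts, a):
    """Exact one-step law of a^{-n} Psi^a on the rescaled basis e_hat_lambda.

    Each rock of size k independently splits into (k_1, ..., k_a) with
    multinomial probability k! / (k_1! ... k_a!) * a^{-k}; empty pieces are
    dropped and the new state is sorted into a non-increasing tuple.
    """
    dist = {}
    for splits in product(*(list(compositions(k, a)) for k in parts)):
        prob = Fraction(1)
        for k, split in zip(parts, splits):
            prob *= Fraction(factorial(k), prod(factorial(j) for j in split) * a ** k)
        state = tuple(sorted((j for split in splits for j in split if j > 0), reverse=True))
        dist[state] = dist.get(state, 0) + prob
    return dist


if __name__ == "__main__":
    parts, a = (3, 1), 3
    dist = a_rock_breaking_step(parts, a)
    print(dist[parts], Fraction(1, a ** (sum(parts) - len(parts))))
```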

Proposition 3.7

(Acyclicity)

Let \(\mathcal{H}\) be a Hopf algebra which is a polynomial algebra as an algebra, and \(\mathcal{B}\) its monomial basis. Then the relation \(\rightarrow\) defines a partial order on \(\mathcal{B}\), and the ordering by length refines this order: if \(b\rightarrow b'\) and \(b\neq b'\), then \(l(b)<l(b')\). Furthermore, for any positive integer \(a\) and any \(b\in\mathcal{B}\) with length \(l(b)\),

\(\varPsi^{a}(b)=a^{l(b)}b+\sum_{l(b')>l(b)}\alpha_{bb'}b'\)

for some \(\alpha_{bb'}\).

Proof

It is easier to first prove the expression for \(\varPsi^{a}(b)\). Suppose \(b\) has factorization into generators \(b=c_{1}c_{2}\cdots c_{l(b)}\). As \(\mathcal{H}\) is commutative, \(\varPsi^{a}\) is an algebra homomorphism, so \(\varPsi^{a}(b)=\varPsi^{a}(c_{1})\cdots\varPsi^{a}(c_{l(b)})\). Recall from Sect. 2.2 that \(\bar{\Delta}(c)=\Delta(c)-1\otimes c-c\otimes1\in\bigoplus_{i=1}^{\deg(c)-1}\mathcal{H}_{i}\otimes\mathcal{H}_{\deg(c)-i}\); in other words, \(1\otimes c\) and \(c\otimes1\) are the only terms in \(\Delta(c)\) which have a tensor-factor of degree 0. As \(\Delta^{[3]}=(\iota\otimes\Delta)\Delta\), the only terms in \(\Delta^{[3]}(c)\) with two tensor-factors of degree 0 are \(1\otimes1\otimes c\), \(1\otimes c\otimes1\) and \(c\otimes1\otimes1\). Inductively, we see that the only terms in \(\Delta^{[a]}(c)\) with all but one tensor-factor having degree 0 are \(1\otimes\cdots\otimes1\otimes c,\ 1\otimes\cdots\otimes1\otimes c\otimes1,\ \ldots,\ c\otimes1\otimes\cdots\otimes1\). So \(\varPsi^{a}(c)=ac+\sum_{l(b')>1}\alpha_{cb'}b'\) for generators \(c\). As \(\varPsi^{a}(b)=\varPsi^{a}(c_{1})\cdots\varPsi^{a}(c_{l})\), and length is additive over products (Lemma 3.6(i)), the expression for \(\varPsi^{a}(b)\) follows.

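It is then clear that \(\rightarrow\) is reflexive (\(b\) appears in \(\varPsi^{a}(b)\) with coefficient \(a^{l(b)}\)) and antisymmetric (every other term has strictly greater length). Transitivity follows from the power rule: if \(b\rightarrow b'\) and \(b'\rightarrow b''\), then \(b'\) appears in \(\varPsi^{a}(b)\) for some \(a\) and \(b''\) appears in \(\varPsi^{a'}(b')\) for some \(a'\). So \(b''\) appears in \(\varPsi^{a'}\varPsi^{a}(b)=\varPsi^{a'a}(b)\). □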

The same argument applied to a cocommutative free associative algebra shows that all terms in \(\varPsi^{a}(b)\) are either a permutation of the factors of \(b\), or have length greater than that of \(b\). The relation \(\rightarrow\) is only a preorder; the associated chains need not be acyclic, as they may oscillate between such permutations of factors. For example, in the noncommutative Hopf algebra of labeled graphs, the following transition probabilities can occur:

(the bottom state is absorbing). The probability of going from \(b\) to some permutation of its factors (as opposed to a state of greater length, from which there is no return to \(b\)) is \(a^{l(b)-n}\).

Here is one more result in this spirit, necessary in Sect. 3.5 to show that the eigenvectors constructed there have good triangularity properties and hence form an eigenbasis:

Lemma 3.8

Let \(b,b_{i},b_{i}'\) be monomials/words in a Hopf algebra which is either a polynomial algebra or a free associative algebra that is cocommutative. If \(b=b_{1}\cdots b_{k}\) and \(b_{i}\rightarrow b'_{i}\) for each \(i\), then \(b\rightarrow b'_{\sigma(1)}\cdots b'_{\sigma(k)}\) for any \(\sigma\in S_{k}\).

Proof

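For readability, take \(k=2\) and write \(b=xy\), \(x\rightarrow x'\), \(y\rightarrow y'\). By definition of the relation \(\rightarrow\), it must be that \(x'=x_{(1)}\cdots x_{(a)}\) for some summand \(x_{(1)}\otimes\cdots\otimes x_{(a)}\) of \(\Delta^{[a]}(x)\). Likewise \(y'=y_{(1)}\cdots y_{(a')}\) for some \(a'\). Suppose \(a>a'\) (the case \(a<a'\) is symmetric). Coassociativity implies that \(\Delta^{[a]}(y)=(\iota\otimes\cdots\otimes\iota\otimes\Delta^{[a-a'+1]})\Delta^{[a']}(y)\), and \(y_{(a')}\otimes1\otimes\cdots\otimes1\) is certainly a summand of \(\Delta^{[a-a'+1]}(y_{(a')})\), so \(y_{(1)}\otimes\cdots\otimes y_{(a')}\otimes1\otimes\cdots\otimes1\) occurs in \(\Delta^{[a]}(y)\). So, taking \(y_{(a'+1)}=\cdots=y_{(a)}=1\), we can assume \(a=a'\). Then \(\Delta^{[a]}(b)=\Delta^{[a]}(x)\Delta^{[a]}(y)\) contains the term \(x_{(1)}y_{(1)}\otimes\cdots\otimes x_{(a)}y_{(a)}\). Hence \(\varPsi^{a}(b)\) contains the term \(x_{(1)}y_{(1)}\cdots x_{(a)}y_{(a)}\), and this product is \(x'y'\) if \(\mathcal{H}\) is a polynomial algebra.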

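If \(\mathcal{H}\) is a cocommutative, free associative algebra, the factors in \(x_{(1)}y_{(1)}\otimes\cdots\otimes x_{(a)}y_{(a)}\) must be rearranged to conclude that \(b\rightarrow x'y'\) and \(b\rightarrow y'x'\). Coassociativity implies \(\Delta^{[2a]}=(\Delta\otimes\cdots\otimes\Delta)\Delta^{[a]}\), and \(\Delta(x_{(i)}y_{(i)})=\Delta(x_{(i)})\Delta(y_{(i)})\) contains \((x_{(i)}\otimes1)(1\otimes y_{(i)})=x_{(i)}\otimes y_{(i)}\), so \(\Delta^{[2a]}(b)\) contains the term \(x_{(1)}\otimes y_{(1)}\otimes x_{(2)}\otimes y_{(2)}\otimes\cdots\otimes x_{(a)}\otimes y_{(a)}\). As \(\mathcal{H}\) is cocommutative, any permutation of the tensor-factors, in particular \(x_{(1)}\otimes x_{(2)}\otimes\cdots\otimes x_{(a)}\otimes y_{(1)}\otimes\cdots\otimes y_{(a)}\) and \(y_{(1)}\otimes y_{(2)}\otimes\cdots\otimes y_{(a)}\otimes x_{(1)}\otimes\cdots\otimes x_{(a)}\), must also be a summand of \(\Delta^{[2a]}(b)\), and multiplying these tensor-factors together shows that both \(x'y'\) and \(y'x'\) appear in \(\varPsi^{2a}(b)\). □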

Example 3.9

(Symmetric functions and rock-breaking)

Recall from Example 3.3 the algebra of symmetric functions with basis \(\{e_{\lambda}\}\), which induces the rock-breaking process. Here, \(e_{\lambda}\rightarrow e_{\lambda'}\) if and only if \(\lambda'\) refines \(\lambda\). Lemma 3.8 for the case \(k=2\) is the statement that, if \(\lambda\) is the union of two partitions \(\mu\) and \(\nu\), and \(\mu'\) refines \(\mu\), \(\nu'\) refines \(\nu\), then \(\mu'\amalg\nu'\) refines \(\mu\amalg\nu=\lambda\).

3.4 The symmetrization lemma

The algorithmic construction of left and right eigenbases for the chains created in Sect. 3.2 will go as follows:

-

(i)

Make an eigenvector of smallest eigenvalue for each generator c;

-

(ii)

For each basis element \(b\) with factorization \(c_{1}c_{2}\cdots c_{l}\), build an eigenvector of larger eigenvalue out of the eigenvectors corresponding to the factors \(c_{i}\), produced in the previous step.

Concentrate on the left eigenvectors for the moment. Recall that the transition matrix \(K_{a}\) is defined by \(a^{-n}\varPsi^{a}(b)=\sum_{b'}K_{a}(b,b')b'\), so the left eigenvectors for our Markov chain are the usual eigenvectors of \(\varPsi^{a}\) on \(\mathcal{H}\). Step (ii) is simple if \(\mathcal{H}\) is a polynomial algebra, because then \(\mathcal{H}\) is commutative so \(\varPsi^{a}\) is an algebra homomorphism. Consequently, the product of two eigenvectors is an eigenvector with the product eigenvalue. This fails for cocommutative, free associative algebras \(\mathcal{H}\), but can be fixed by taking symmetrized products:

Theorem 3.10

(Symmetrization lemma)

Let \(x_{1},x_{2},\ldots,x_{k}\) be primitive elements of any Hopf algebra \(\mathcal{H}\). Then \(\sum_{\sigma\in S_{k}}x_{\sigma(1)}x_{\sigma(2)}\cdots x_{\sigma(k)}\) is an eigenvector of \(\varPsi^{a}\) with eigenvalue \(a^{k}\).

Proof

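For concreteness, take \(a=2\). Since \(\Delta\) is an algebra homomorphism and each \(x_{i}\) is primitive, \(\Delta(x_{\sigma(1)}\cdots x_{\sigma(k)})=\sum_{A\subseteq\{1,\ldots,k\}}x_{\sigma|_{A}}\otimes x_{\sigma|_{A^{\mathcal{C}}}}\), where \(x_{\sigma|_{A}}\) denotes the product of the \(x_{\sigma(i)}\), \(i\in A\), taken in increasing order of \(i\). For fixed \(A\), sending \(\sigma\) to the permutation \(\tau\) that lists the \(\sigma(i)\) with \(i\in A\) and then those with \(i\notin A\), each in increasing order of \(i\), is a bijection of \(S_{k}\), so

\(m\Delta\Big(\sum_{\sigma\in S_{k}}x_{\sigma(1)}\cdots x_{\sigma(k)}\Big)=\sum_{A\subseteq\{1,\ldots,k\}}\sum_{\sigma\in S_{k}}x_{\sigma|_{A}}x_{\sigma|_{A^{\mathcal{C}}}}=\sum_{A\subseteq\{1,\ldots,k\}}\sum_{\tau\in S_{k}}x_{\tau(1)}\cdots x_{\tau(k)}=2^{k}\sum_{\tau\in S_{k}}x_{\tau(1)}\cdots x_{\tau(k)}.\)

□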
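The symmetrization lemma is easy to test in the free associative algebra of Example 1.1, whose letters are primitive. The Python sketch below (helper names hypothetical) applies \(\varPsi^{a}\) by sending each letter to one of \(a\) tensor-factors and concatenating, and checks that a symmetrized product of \(k\) letters is multiplied by \(a^{k}\), whereas a single word such as \(xy\) is not an eigenvector.

```python
from collections import Counter
from itertools import permutations, product


def hopf_power(poly, a):
    """Psi^a on a linear combination of words (dict word -> coefficient) in the
    free associative algebra with primitive letters: every letter is sent to
    one of a tensor-factors, then the factors are concatenated in order."""
    out = Counter()
    for word, coeff in poly.items():
        for assignment in product(range(a), repeat=len(word)):
            new = tuple(x for j in range(a) for x, f in zip(word, assignment) if f == j)
            out[new] += coeff
    return out


def symmetrize(letters):
    """sum over sigma in S_k of x_{sigma(1)} ... x_{sigma(k)} (repeats allowed)."""
    return Counter(permutations(letters))


if __name__ == "__main__":
    print(hopf_power(symmetrize(("x", "y")), 2))  # 4*(xy) + 4*(yx)
    print(hopf_power({("x", "y"): 1}, 2))         # 3*(xy) + 1*(yx): not an eigenvector
```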

In Sects. 3.5 and 3.6, the fact that the eigenvectors constructed give a basis will follow from triangularity arguments based on Sect. 3.3. These rely heavily on the explicit structure of a polynomial algebra or a free associative algebra. Hence it is natural to look for alternatives that will generalize this eigenbasis construction plan to Hopf algebras with more complicated structures. For example, one may ask whether some good choice of x i exists with which the symmetrization lemma will automatically generate a full eigenbasis. When \(\mathcal{H}\) is cocommutative, an elegant answer stems from the following two well-known structure theorems:

Theorem 3.11

(Cartier–Milnor–Moore) [19, 63]

If \(\mathcal{H}\) is graded, cocommutative and connected, then \(\mathcal{H}\) is Hopf isomorphic to \(\mathcal {U}(\mathfrak{g})\), the universal enveloping algebra of a Lie algebra \(\mathfrak{g}\), where \(\mathfrak{g}\) is the Lie algebra of primitive elements of \(\mathcal{H}\).

Theorem 3.12

(Poincaré–Birkhoff–Witt) [48, 60]

If \(\{x_{1},x_{2},\ldots\}\) is a basis for a Lie algebra \(\mathfrak{g}\), then the symmetrized products \(\sum_{\sigma\in S_{k}}x_{i_{\sigma(1)}}x_{i_{\sigma(2)}}\cdots x_{i_{\sigma(k)}}\), for \(1\leq i_{1}\leq i_{2}\leq\cdots\leq i_{k}\), form a basis for \(\mathcal{U}(\mathfrak{g})\).

Putting these together reduces the diagonalization of Ψ a on a cocommutative Hopf algebra to determining a basis of primitive elements:

Theorem 3.13

(Strong symmetrization lemma)

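Let \(\mathcal{H}\) be a graded, cocommutative, connected Hopf algebra, and let \(\{x_{1},x_{2},\ldots\}\) be a basis for the subspace of primitive elements in \(\mathcal{H}\). Then, for each \(k\in\mathbb{N}\),

\(\Big\{\sum_{\sigma\in S_{k}}x_{i_{\sigma(1)}}x_{i_{\sigma(2)}}\cdots x_{i_{\sigma(k)}}\;\Big|\;i_{1}\leq i_{2}\leq\cdots\leq i_{k}\Big\}\)

is a basis of the \(a^{k}\)-eigenspace of \(\varPsi^{a}\).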

Much work [2, 3, 35] has been done on computing a basis for the subspace of primitives of particular Hopf algebras; those formulas are in general more efficient than our universal method here, and using them will be the subject of future work. Alternatively, the theory of good Lyndon words [55] gives a Gröbner basis argument to further reduce the problem to finding elements which generate the Lie algebra of primitives, and understanding the relations between them. This is the motivation behind our construction of the eigenvectors in Theorem 3.16, although the proof is independent of this theorem and more analogous to that of Theorem 3.15, the case of a polynomial algebra.

3.5 Left eigenfunctions

This section gives an algorithmic construction of an eigenbasis for the Hopf power maps \(\varPsi^{a}\) on the Hopf algebras of interest. If \(K_{a}\) as defined by \(a^{-n}\varPsi^{a}(b)=\sum_{b'}K_{a}(b,b')b'\) is a transition matrix, then this eigenbasis is precisely a left eigenbasis of the associated chain, though the results below stand whether or not such a chain may be defined (e.g., the construction works when some coefficients of \(\Delta(c)\) are negative, and when there are primitive generators of degree \(>1\)). The first step is to associate each generator with an eigenvector of smallest eigenvalue; this is achieved using the (first) Eulerian idempotent map

\(e(x):=\sum_{a\geq1}\frac{(-1)^{a-1}}{a}m^{[a]}\bar{\Delta}^{[a]}(x).\)
Here \(\bar{\Delta}(x)=\Delta(x)-1\otimes x-x\otimes1\in\bigoplus_{j=1}^{n-1}\mathcal{H}_{j}\otimes\mathcal{H}_{n-j}\) for \(x\in\mathcal{H}_{n}\), as explained in Sect. 2.2. Then inductively define \(\bar{\Delta}^{[a]}=(\iota\otimes\cdots\otimes\iota\otimes\bar{\Delta})\bar{\Delta}^{[a-1]}\), which picks out the terms in \(\Delta^{[a]}(x)\) where each tensor-factor has strictly positive degree. This captures the notion of breaking into \(a\) non-trivial pieces. Observe that, if \(x\in\mathcal{H}_{n}\), then \(\bar{\Delta}^{[a]}(x)=0\) whenever \(a>n\), so \(e(x)\) is a finite sum for all \(x\). (By convention, \(e\equiv0\) on \(\mathcal{H}_{0}\).)
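In the free associative algebra with primitive letters, \(\bar{\Delta}^{[a]}\) of a word corresponds to assigning its letters to \(a\) non-empty tensor-factors, so \(e\) can be evaluated by enumeration. The Python sketch below (helper names hypothetical) computes \(e(w)\) with exact rational coefficients, finds \(e(xy)=\frac{1}{2}(xy-yx)\), and checks that \(e(w)\) is an eigenvector of \(\varPsi^{a}\) of eigenvalue \(a\), in line with Patras [66].

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def regroup(word, assignment, a):
    """m^[a] applied to word_{f=0} (x) ... (x) word_{f=a-1}."""
    return tuple(x for j in range(a) for x, f in zip(word, assignment) if f == j)


def eulerian_idempotent(word):
    """e(w) = sum_{a>=1} (-1)^(a-1)/a m^[a] Delta-bar^[a](w), letters primitive.

    Delta-bar^[a] keeps only the assignments of letters to a tensor-factors
    in which every factor is non-empty (surjective assignments).
    """
    out = Counter()
    for a in range(1, len(word) + 1):
        for assignment in product(range(a), repeat=len(word)):
            if len(set(assignment)) == a:
                out[regroup(word, assignment, a)] += Fraction((-1) ** (a - 1), a)
    return {w: c for w, c in out.items() if c != 0}


def hopf_power(poly, a):
    """Psi^a = m^[a] Delta^[a] on a dict word -> coefficient."""
    out = Counter()
    for word, coeff in poly.items():
        for assignment in product(range(a), repeat=len(word)):
            out[regroup(word, assignment, a)] += coeff
    return {w: c for w, c in out.items() if c != 0}


if __name__ == "__main__":
    print(eulerian_idempotent(("x", "y")))
```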

This map \(e\) is the first of a series of Eulerian idempotents \(e_{i}\) defined by Patras [66]; he proves that, in a commutative or cocommutative Hopf algebra of characteristic zero where \(\bar{\Delta}\) is locally nilpotent (i.e., for each \(x\), there is some \(a\) with \(\bar{\Delta}^{[a]}x=0\)), the Hopf-powers are diagonalizable, and these \(e_{i}\) are orthogonal projections onto the eigenspaces. In particular, this weight decomposition holds for graded commutative or cocommutative Hopf algebras. We will not need the full series of Eulerian idempotents, although Example 3.18 makes the connection between them and our eigenbasis.

To deduce that the eigenvectors we construct are triangular with respect to \(\mathcal{B}\), one needs the following crucial observation (recall from Sect. 3.3 that b→b′ if b′ occurs in Ψ a(b) for some a):

Proposition 3.14

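For any generator \(c\),

\(e(c)=c+\sum_{c\rightarrow b',\,b'\neq c}\alpha_{cb'}b'=c+\sum_{l(b')>1}\alpha_{cb'}b'\)

for some real \(\alpha_{cb'}\).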

Proof

The summand \(\frac{(-1)^{a-1}}{a}m^{[a]}\bar{\Delta}^{[a]}(c)\) involves terms of length at least a, from which the second expression of e(c) is immediate. Each term b′ of e(c) appears in Ψ a(c) for some a, hence c→b′. Combine this with the knowledge from the second expression that c occurs with coefficient 1 to deduce the first expression. □

The two theorems below detail the construction of an eigenbasis for Ψ a in a polynomial algebra and in a cocommutative free associative algebra, respectively. These are left eigenvectors for the corresponding transition matrices. A worked example will follow immediately; it may help to read these together.

Theorem 3.15

Let \(\mathcal{H}\) be a Hopf algebra (over a field of characteristic zero) that is a polynomial algebra as an algebra, with monomial basis \(\mathcal{B}\). For \(b\in\mathcal{B}\) with factorization into generators \(b=c_{1}c_{2}\cdots c_{l}\), set

\(g_{b}:=e(c_{1})e(c_{2})\cdots e(c_{l}).\)

Then \(g_{b}\) is an eigenvector of \(\varPsi^{a}\) of eigenvalue \(a^{l}\) satisfying the triangularity condition

\(g_{b}=b+\sum_{b\rightarrow b',\,l(b')>l(b)}\alpha_{bb'}b'.\)
Hence \(\{g_{b}\mid b\in\mathcal{B}_{n}\}\) is an eigenbasis for the action of \(\varPsi^{a}\) on \(\mathcal{H}_{n}\), and the multiplicity of the eigenvalue \(a^{l}\) in \(\mathcal{H}_{n}\) is the coefficient of \(x^{n}y^{l}\) in \(\prod_{i}(1-yx^{i})^{-d_{i}}\), where \(d_{i}\) is the number of generators of degree \(i\).
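As an illustration of the multiplicity formula, take symmetric functions with generators \(e_{1},e_{2},\ldots\), so \(d_{i}=1\) for every \(i\); the formula then predicts that the multiplicity of \(a^{l}\) in degree \(n\) is the number of partitions of \(n\) with \(l\) parts. The Python sketch below (function names hypothetical) expands \(\prod_{i}(1-yx^{i})^{-d_{i}}\) using \((1-yx^{i})^{-d}=\sum_{m\geq0}\binom{d+m-1}{m}y^{m}x^{im}\) and compares with a direct count of partitions.

```python
from collections import Counter
from math import comb


def multiplicity_series(d, n_max):
    """Coefficients of x^n y^l (n <= n_max) in prod_i (1 - y x^i)^(-d[i]).

    d maps a degree i >= 1 to the number of generators of that degree.
    Returns a dict {(n, l): coefficient}.
    """
    series = {(0, 0): 1}
    for i in range(1, n_max + 1):
        d_i = d.get(i, 0)
        if d_i == 0:
            continue
        new = {}
        for (n, l), coeff in series.items():
            m = 0
            while n + i * m <= n_max:
                key = (n + i * m, l + m)
                new[key] = new.get(key, 0) + coeff * comb(d_i + m - 1, m)
                m += 1
        series = new
    return series


def partitions(n, max_part=None):
    """Partitions of n as non-increasing tuples."""
    if max_part is None:
        max_part = n
    if n == 0:
        yield ()
        return
    for first in range(min(n, max_part), 0, -1):
        for rest in partitions(n - first, first):
            yield (first,) + rest


if __name__ == "__main__":
    n_max = 8
    series = multiplicity_series({i: 1 for i in range(1, n_max + 1)}, n_max)
    for n in range(1, n_max + 1):
        by_length = Counter(len(p) for p in partitions(n))
        print(n, [series.get((n, l), 0) for l in range(1, n + 1)], dict(by_length))
```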

Theorem 3.16

Let \(\mathcal{H}\) be a cocommutative Hopf algebra (over a field of characteristic zero) that is a free associative algebra with word basis \(\mathcal{B}\). For \(b\in\mathcal{B}\) with factorization into generators \(b=c_{1}c_{2}\cdots c_{l}\), set \(g_{b}\) to be the polynomial \(\operatorname{sym}(b)\) evaluated at \((e(c_{1}),e(c_{2}),\ldots,e(c_{l}))\). In other words, in the terminology of Sect. 2.3,

-

for \(c\) a generator, set \(g_{c}:=e(c)\);

-

for \(b\) a Lyndon word, inductively define \(g_{b}:=[g_{b_{1}},g_{b_{2}}]\), where \(b=b_{1}b_{2}\) is the standard factorization of \(b\);

-

for \(b\) with Lyndon factorization \(b=b_{1}\cdots b_{k}\), set \(g_{b}:=\sum_{\sigma\in S_{k}}g_{b_{\sigma(1)}}g_{b_{\sigma(2)}}\cdots g_{b_{\sigma(k)}}\).

Then \(g_{b}\) is an eigenvector of \(\varPsi^{a}\) of eigenvalue \(a^{k}\) (\(k\) the number of Lyndon factors in \(b\)) satisfying the triangularity condition

Hence \(\{g_{b}\mid b\in\mathcal{B}_{n}\}\) is an eigenbasis for the action of \(\varPsi^{a}\) on \(\mathcal{H}_{n}\), and the multiplicity of the eigenvalue \(a^{k}\) in \(\mathcal{H}_{n}\) is the coefficient of \(x^{n}y^{k}\) in \(\prod_{i}(1-yx^{i})^{-d_{i}}\), where \(d_{i}\) is the number of Lyndon words of degree \(i\) in the alphabet of generators.
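For Theorem 3.16 the simplest instance is the free associative algebra on two primitive letters of degree 1, so \(d_{i}\) is the number of binary Lyndon words of length \(i\). The Python sketch below (helper names hypothetical) counts words of length \(n\) by their number \(k\) of Lyndon factors and compares with the coefficient of \(x^{n}y^{k}\). It uses Duval's algorithm for the Lyndon factorization, a standard choice rather than anything prescribed in the text; the number of factors does not depend on this choice.

```python
from collections import Counter
from itertools import product
from math import comb


def lyndon_factorization(w):
    """Duval's algorithm: split w into a non-increasing sequence of Lyndon words."""
    factors, i, n = [], 0, len(w)
    while i < n:
        j, k = i + 1, i
        while j < n and w[k] <= w[j]:
            k = i if w[k] < w[j] else k + 1
            j += 1
        while i <= k:
            factors.append(w[i:i + j - k])
            i += j - k
    return factors


def is_lyndon(w):
    """w is Lyndon iff it is strictly smaller than all its proper rotations."""
    return len(w) > 0 and all(w < w[r:] + w[:r] for r in range(1, len(w)))


def multiplicity_series(d, n_max):
    """Coefficients of x^n y^k (n <= n_max) in prod_i (1 - y x^i)^(-d[i])."""
    series = {(0, 0): 1}
    for i in range(1, n_max + 1):
        d_i = d.get(i, 0)
        if d_i == 0:
            continue
        new = {}
        for (n, k), coeff in series.items():
            m = 0
            while n + i * m <= n_max:
                key = (n + i * m, k + m)
                new[key] = new.get(key, 0) + coeff * comb(d_i + m - 1, m)
                m += 1
        series = new
    return series


def words(alphabet, n):
    """All words of length n over the alphabet, as strings."""
    return ("".join(p) for p in product(alphabet, repeat=n))


def compare(alphabet="ab", n_max=7):
    """Return (d, series, counts); counts[(n, k)] = number of words of length n
    whose Lyndon factorization has k factors."""
    d = {i: sum(is_lyndon(w) for w in words(alphabet, i)) for i in range(1, n_max + 1)}
    series = multiplicity_series(d, n_max)
    counts = Counter(
        (n, len(lyndon_factorization(w)))
        for n in range(1, n_max + 1)
        for w in words(alphabet, n)
    )
    return d, series, counts


if __name__ == "__main__":
    d, series, counts = compare()
    print(d)
    print(sorted(counts.items()))
```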

Remarks

-

1.

If \(\varPsi^{a}\) defines a Markov chain, then the triangularity of \(g_{b}\) (in both theorems) has the following interpretation: the left eigenfunction \(g_{b}\) takes non-zero values only on states that are reachable from \(b\).

-

2.

The expression of the multiplicity of the eigenvalues (in both theorems) holds for Hopf algebras that are multigraded, if we replace all \(x\)'s, \(n\)'s and \(i\)'s by tuples, and read the formula as multi-index notation. For example, for a bigraded polynomial algebra \(\mathcal{H}\), the multiplicity of the \(a^{l}\)-eigenspace in \(\mathcal{H}_{m,n}\) is the coefficient of \(x_{1}^{m}x_{2}^{n}y^{l}\) in \(\prod_{i,j}(1-yx_{1}^{i}x_{2}^{j})^{-d_{i,j}}\), where \(d_{i,j}\) is the number of generators of bidegree \((i,j)\). This idea will be useful in Sect. 5.

-

3.

Theorem 3.16 essentially states that any cocommutative free associative algebra is in fact isomorphic to the free associative algebra generated by the primitive elements \(e(c)\). But there is no analogous interpretation for Theorem 3.15; being a polynomial algebra is not a strong enough condition to force all Hopf algebras with this condition to be isomorphic. A polynomial algebra \(\mathcal{H}\) is isomorphic to the usual polynomial Hopf algebra (i.e., with primitive generators) only if \(\mathcal{H}\) is cocommutative; then \(e(c)\) gives a set of primitive generators.

Example 3.17

As promised, here is a worked example of this calculation, in the noncommutative Hopf algebra of labeled graphs, as defined in Example 3.2. Let b be the graph

which is the product of three generators as shown. (Its factors happen to be its connected components, but that is not always true.) Since the ordering of generators refines the ordering by degree, a vertex (degree 1) comes before an edge (degree 2), so the Lyndon factorization of \(b\) is

So g b is defined to be

The first Lyndon factor of b has standard factorization

so

The Eulerian idempotent map fixes the single vertex, and

thus substituting into the previous equation gives

Since

returning to the first expression for \(g_{b}\) gives the following eigenvector of eigenvalue \(a^{2}\):

Proof of Theorem 3.15 (polynomial algebra)

By Patras [66], the Eulerian idempotent map is a projection onto the \(a\)-eigenspace of \(\Psi^{a}\), so, for each generator \(c\), \(e(c)\) is an eigenvector of eigenvalue \(a\). As \(\mathcal{H}\) is commutative, \(\Psi^{a}\) is an algebra homomorphism, so the product of two eigenvectors is another eigenvector with the product eigenvalue. Hence \(g_{b}:=e(c_{1})e(c_{2})\cdots e(c_{l})\) is an eigenvector of eigenvalue \(a^{l}\).

To see triangularity, note that, by Proposition 3.14,

Lemma 3.8 shows that \(b \rightarrow c_{1} ' \cdots c_{l}'\) in each summand, and the condition \(c_{i}' \neq c_{i}\) for some i means precisely that \(c_{1}' \cdots c_{l}' \neq b\). Also, by Proposition 3.14,

and thus \(l(c_{1}' \cdots c_{l}')>l\) as length is multiplicative.

The multiplicity of the eigenvalue \(a^{l}\) is the number of basis elements \(b\) with length \(l\). The last assertion of the theorem is then immediate from [94, Th. 3.14.1]. □

Example 3.18

We show that \(g_{b}=e_{l(b)}(b)\), where the higher Eulerian idempotents are defined by
\[ e_{i}=\frac{1}{i!}\,m^{[i]}(e\otimes e\otimes\cdots\otimes e)\Delta^{[i]}. \]

By Patras [66], \(e_{i}\) is a projection onto the \(a^{i}\)-eigenspace of \(\Psi^{a}\), so, given the triangularity condition of the eigenbasis \(\{g_{b}\}\), it suffices to show that \(b\) is the only term of length \(l(b)\) in \(e_{l(b)}(b)\) and that its coefficient is 1. Write \(l=l(b)\). Note that \(e_{l}(b)\) is a sum of terms of the form \(e(b_{(1)})e(b_{(2)})\cdots e(b_{(l)})\) for some \(b_{(i)}\) with \(b_{(1)}\otimes\cdots\otimes b_{(l)}\) a summand of \(\Delta^{[l]}(b)\). As \(e\equiv0\) on \(\mathcal{H}_{0}\), the \(b_{(i)}\) must be non-trivial. Hence each term \(b'\) of \(e_{l}(b)\) has the form \(b'=b_{(1)}'\cdots b_{(l)}'\), with \(b_{(i)}\rightarrow b_{(i)}'\) and \(b\rightarrow b_{(1)}\cdots b_{(l)}\). It follows from Lemma 3.8 that \(b_{(1)} \cdots b_{(l)} \rightarrow b_{(1)}' \cdots b_{(l)}'\), so \(b\rightarrow b'\) by transitivity, which, by Lemma 3.7, means \(l(b')>l(b)\) unless \(b'=b\).

It remains to show that the coefficient of \(b\) in \(e_{l(b)}(b)\) is 1. Let \(b=c_{1}\cdots c_{l}\) be the factorization of \(b\) into generators. With notation from the previous paragraph, taking \(b'=b\) results in \(b \rightarrow b_{(1)} \cdots b_{(l)} \rightarrow b_{(1)}' \cdots b_{(l)}' =b\), so \(b=b_{(1)}\cdots b_{(l)}\). This forces \(b_{(i)}=c_{\sigma(i)}\) for some \(\sigma\in S_{l}\). As \(b_{(i)}\) occurs with coefficient 1 in \(e(b_{(i)})\), the coefficient of \(b_{(1)}\otimes\cdots\otimes b_{(l)}\) in \((e\otimes\cdots\otimes e)\Delta^{[l]}(b)\) is the coefficient of \(c_{\sigma(1)}\otimes\cdots\otimes c_{\sigma(l)}\) in \(\Delta^{[l]}(b)=\Delta^{[l]}(c_{1})\cdots\Delta^{[l]}(c_{l})\), to which each \(\sigma\in S_{l}\) contributes exactly 1. Each such contribution gives rise to a \(b\) term in \(m^{[l]}(e\otimes e\otimes\cdots\otimes e)\Delta^{[l]}(b)\) with the same coefficient, hence \(b\) has coefficient \(l!\) in \(m^{[l]}(e\otimes e\otimes\cdots\otimes e)\Delta^{[l]}(b)=l!\,e_{l}(b)\).

The same argument also shows that, if \(i<l(b)\), then \(e_{i}(b)=0\), as there is no term of length \(i\) in \(e_{i}(b)\). In particular, \(e(b)=0\) if \(b\) is not a generator.
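As a hedged check of Theorem 3.15 and Example 3.18 in a familiar case, the Python sketch below works in the algebra of symmetric functions, regarded as the polynomial algebra on the complete homogeneous symmetric functions \(h_{1},h_{2},\ldots\) with coproduct \(\Delta(h_{n})=\sum_{i=0}^{n}h_{i}\otimes h_{n-i}\) (\(h_{0}=1\)). It assumes the standard formula \(e=\sum_{k\geq1}\frac{(-1)^{k-1}}{k}m^{[k]}(\mathrm{id}-\eta\varepsilon)^{\otimes k}\Delta^{[k]}\) for the Eulerian idempotent, and \(e_{l}=\frac{1}{l!}m^{[l]}(e\otimes\cdots\otimes e)\Delta^{[l]}\). It builds \(g_{b}=e(c_{1})\cdots e(c_{l})\), and can be used to confirm that \(g_{b}\) is an eigenvector of \(\Psi^{a}\) of eigenvalue \(a^{l(b)}\) and that it agrees with \(e_{l(b)}(b)\). All function names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import factorial

# Hypothetical model: Lambda = Q[h_1, h_2, ...], Delta(h_n) = sum_i h_i ⊗ h_{n-i}.
# An element is a dict {partition (non-increasing tuple): Fraction}; the key
# (2, 1) stands for the monomial h_2 h_1 and () for the unit 1.

def weak_compositions(n, k):
    """All k-tuples of non-negative integers summing to n."""
    if k == 0:
        if n == 0:
            yield ()
        return
    for first in range(n + 1):
        for rest in weak_compositions(n - first, k - 1):
            yield (first,) + rest

def mono(parts):
    """Key of the monomial prod h_p: drop h_0 = 1 and sort (Lambda is commutative)."""
    return tuple(sorted((p for p in parts if p > 0), reverse=True))

def accumulate(pairs):
    """Sum (key, coefficient) pairs into a dict, dropping zero coefficients."""
    out = {}
    for key, c in pairs:
        out[key] = out.get(key, 0) + c
    return {key: c for key, c in out.items() if c != 0}

def multiply(f, g):
    return accumulate((mono(k1 + k2), c1 * c2) for k1, c1 in f.items() for k2, c2 in g.items())

def iterated_coproduct(key, k):
    """Yield the terms t_1 ⊗ ... ⊗ t_k of Delta^[k](h_key), with multiplicity."""
    for choice in product(*[list(weak_compositions(n, k)) for n in key]):
        yield tuple(mono([alpha[t] for alpha in choice]) for t in range(k))

def hopf_power(f, a):
    """Psi^a = m^[a] Delta^[a]; the product m^[a] just merges all parts."""
    return accumulate((mono(sum(t, ())), c) for key, c in f.items()
                      for t in iterated_coproduct(key, a))

def eulerian(f):
    """e = sum_{k>=1} (-1)^(k-1)/k * m^[k] (id - eta eps)^{⊗k} Delta^[k]."""
    return accumulate((mono(sum(t, ())), c * Fraction((-1) ** (k - 1), k))
                      for key, c in f.items()
                      for k in range(1, sum(key) + 1)
                      for t in iterated_coproduct(key, k)
                      if all(t))  # drop terms with a tensor-factor equal to 1

def higher_eulerian(f, l):
    """e_l = (1/l!) m^[l] (e ⊗ ... ⊗ e) Delta^[l]."""
    pairs = []
    for key, c in f.items():
        for t in iterated_coproduct(key, l):
            term = {(): Fraction(c) / factorial(l)}
            for factor in t:
                term = multiply(term, eulerian({factor: 1}))
            pairs.extend(term.items())
    return accumulate(pairs)

def g_left(b):
    """Left eigenvector g_b = e(h_{c_1}) ... e(h_{c_l}) of Theorem 3.15."""
    out = {(): Fraction(1)}
    for part in b:
        out = multiply(out, eulerian({(part,): 1}))
    return out

print(eulerian({(2,): 1}))    # e(h_2)
print(eulerian({(2, 1): 1}))  # e(h_2 h_1)
print(higher_eulerian({(2, 1): 1}, 2) == g_left((2, 1)))
```

For instance, \(e(h_{2})=h_{2}-\frac{1}{2}h_{1}^{2}\), which is \(p_{2}/2\) in terms of power sums, while \(e(h_{2}h_{1})=0\), in line with the last paragraph of Example 3.18.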

Proof of Theorem 3.16 (cocommutative and free associative algebra)

Schmitt [79, Thm. 9.4] shows that the Eulerian idempotent map \(e\) projects a graded cocommutative Hopf algebra onto its subspace of primitive elements, so \(g_{c}:=e(c)\) is primitive. A straightforward calculation shows that, if \(x,y\in\mathcal{H}\) are primitive, then so is \([x,y]\). Iterating this implies that, if \(b\) is a Lyndon word, then \(g_{b}\) (which is the standard bracketing of the \(e(c)\)s) is primitive. Now apply the symmetrization lemma (Lemma 3.10) to deduce that, if \(b\in\mathcal{B}\) has \(k\) Lyndon factors, \(g_{b}\) is an eigenvector of eigenvalue \(a^{k}\).
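The straightforward calculation can be written out as follows. Suppose \(x\) and \(y\) are primitive, that is, \(\Delta(x)=1\otimes x+x\otimes1\) and \(\Delta(y)=1\otimes y+y\otimes1\). Since \(\Delta\) is an algebra map and \((u\otimes v)(u'\otimes v')=uu'\otimes vv'\) in \(\mathcal{H}\otimes\mathcal{H}\),
\[ \begin{aligned} \Delta(xy) &= (1\otimes x+x\otimes1)(1\otimes y+y\otimes1)=1\otimes xy+y\otimes x+x\otimes y+xy\otimes1,\\ \Delta(yx) &= 1\otimes yx+x\otimes y+y\otimes x+yx\otimes1,\\ \Delta([x,y]) &= \Delta(xy)-\Delta(yx)=1\otimes[x,y]+[x,y]\otimes1, \end{aligned} \]
so the cross terms \(x\otimes y\) and \(y\otimes x\) cancel and \([x,y]\) is primitive.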

To see triangularity, first recall that \(\operatorname{sym}\) is a linear combination of the permutations of its arguments, so \(g_{b}\) is a linear combination of products of the form \(e(c_{\sigma(1)})\cdots e(c_{\sigma(l)})\) for some \(\sigma\in S_{l}\). Hence, by Proposition 3.14, each term in \(g_{b}\) has the form \(c'_{\sigma(1)} \cdots c'_{\sigma(l)}\) with \(c_{i} \rightarrow c_{i}'\), and by Lemma 3.8, we have \(b \rightarrow c'_{\sigma(1)} \cdots c'_{\sigma(l)}\). Also, by Proposition 3.14,

and all terms of the sum have length greater than l, as length is multiplicative, and \(\operatorname{sym}\) is a linear combination of the permutations of its arguments.

The multiplicity of the eigenvalue \(a^{k}\) is the number of basis elements with \(k\) Lyndon factors. The last assertion of the theorem is then immediate from [94, Th. 3.14.1]. □

3.6 Right eigenvectors

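To obtain the right eigenvectors for our Markov chains, consider the graded dual \(\mathcal{H}^{*}\) of the algebras examined above. The multiplication \(\Delta^{*}\) and comultiplication \(m^{*}\) on \(\mathcal{H}^{*}\) are given by
\[ \Delta^{*}(x^{*}\otimes y^{*})(z)=(x^{*}\otimes y^{*})(\Delta z),\qquad m^{*}(x^{*})(z\otimes w)=x^{*}(zw) \]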

for any \(x^{*},y^{*}\in\mathcal{H}^{*}\), \(z,w\in\mathcal{H}\). Then \(\Psi^{*a}:=\Delta^{*[a]}m^{*[a]}\) is the dual map to \(\Psi^{a}\). So, if \(K_{a}\), defined by \(a^{-n}\Psi^{a}(b)=\sum_{b'}K_{a}(b,b')b'\), is a transition matrix, then its right eigenvectors are the eigenvectors of \(\Psi^{*a}\). The theorems below express these eigenvectors in terms of \(\{b^{*}\}\), the dual basis to \(\mathcal{B}\). Dualizing a commutative Hopf algebra creates a cocommutative Hopf algebra, and vice versa, so Theorem 3.19 below, which diagonalizes \(\Psi^{*a}\) on a polynomial algebra, will share features with Theorem 3.16, which diagonalizes \(\Psi^{a}\) on a cocommutative free associative algebra. Similarly, Theorems 3.20 and 3.15 will involve common ideas. However, Theorems 3.19 and 3.20 are not direct applications of Theorems 3.16 and 3.15 to \(\mathcal{H}^{*}\), because \(\mathcal{H}^{*}\) need not be a polynomial or free associative algebra: a breaking and recombining chain with a deterministic recombination does not dualize to one with a deterministic recombination. For example, the recombination step is deterministic for inverse shuffling (place the left pile on top of the right pile), but not for forward riffle shuffling (shuffle the two piles together).
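The link between right eigenvectors and \(\Psi^{*a}\) is a one-line computation. Identify a function \(f\) on \(\mathcal{B}_{n}\) with the element \(\sum_{b'}f(b')\,b'^{*}\) of \(\mathcal{H}_{n}^{*}\). Then, for \(b\in\mathcal{B}_{n}\),
\[ (\Psi^{*a}f)(b)=f(\Psi^{a}b)=a^{n}\sum_{b'}K_{a}(b,b')f(b'), \]
so \(\Psi^{*a}f=\lambda f\) holds exactly when \(K_{a}f=a^{-n}\lambda f\), that is, when \(f\) is a right eigenvector of \(K_{a}\) with eigenvalue \(a^{-n}\lambda\).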

The two theorems below give the eigenvectors of \(\Psi^{*a}\); sample computations are in Sect. 4.2. Theorem 3.19 gives a complete description of these for \(\mathcal{H}\) a polynomial algebra, and Theorem 3.20 yields a partial description for \(\mathcal{H}\) a cocommutative free associative algebra. Recall that \(\eta_{b}^{b_{1},\ldots,b_{a}}\) is the coefficient of \(b_{1}\otimes\cdots\otimes b_{a}\) in \(\Delta^{[a]}(b)\).

Theorem 3.19

Let \(\mathcal{H}\) be a Hopf algebra (over a field of characteristic zero) that is a polynomial algebra as an algebra, with monomial basis \(\mathcal{B}\). For \(b\in\mathcal{B}\) with factorization into generators \(b=c_{1}c_{2}\cdots c_{l}\), set

where the normalizing constant \(A(b)\) is calculated as follows: for each generator \(c\), let \(a_{c}(b)\) be the power of \(c\) in the factorization of \(b\), and set \(A(b)=\prod_{c}a_{c}(b)!\). Then \(f_{b}\) is an eigenvector of \(\Psi^{*a}\) of eigenvalue \(a^{l}\), and

where the sum on the second line runs over all \(\sigma\) giving distinct tuples \((c_{\sigma(1)},\ldots,c_{\sigma(l)})\) (i.e., over a set of coset representatives of the stabilizer of \((c_{1},\ldots,c_{l})\) in \(S_{l}\)). The eigenvector \(f_{b}\) satisfies the triangularity condition

Furthermore, \(\{f_{b}\}\) is the dual basis to \(\{g_{b}\}\). In other words, \(f_{b}(g_{b'})=0\) if \(b\neq b'\), and \(f_{b}(g_{b})=1\). Yet another formulation: the change of basis matrix from \(\{f_{b}\}\) to \(\mathcal{B}\), which has the \(f_{b}\) as its columns, is the inverse of the matrix with the \(g_{b}\) as its rows.

Remarks

1. If \(\mathcal{H}\) is also cocommutative, then it is unnecessary to symmetrize; just define \(f_{b}=\frac {1}{A(b)}c_{1}^{*}c_{2}^{*}\cdots c_{l}^{*}\).

2. If \(\Psi^{a}\) defines a Markov chain on \(\mathcal{B}_{n}\), then the theorem says \(f_{b}(b')\) may be interpreted as the number of ways to break \(b'\) into \(l\) pieces so that the result is some permutation of the \(l\) generators that are factors of \(b\). In particular, \(f_{b}\) takes only non-negative values, and \(f_{b}\) is non-zero only on states which can reach \(b\). Thus \(f_{b}\) may be used to estimate the probability of being in states that can reach \(b\); see Corollary 4.10 for an example.

Theorem 3.20

Let \(\mathcal{H}\) be a cocommutative Hopf algebra (over a field of characteristic zero) which is a free associative algebra as an algebra, with word basis \(\mathcal{B}\). For each Lyndon word \(b\), let \(f_{b}\) be the eigenvector of \(\Psi^{*a}\) of eigenvalue \(a\) such that \(f_{b}(g_{b})=1\) and \(f_{b}(g_{b'})=0\) for all other Lyndon \(b'\). In particular, \(f_{c}=c^{*}\), which is primitive. For each basis element \(b\) with Lyndon factorization \(b=b_{1}\cdots b_{k}\), let

where the normalizing constant \(A'(b)\) is calculated as follows: for each Lyndon basis element \(b'\), let \(a'_{b'}(b)\) be the number of times \(b'\) occurs in the Lyndon factorization of \(b\), and set \(A'(b)=\prod_{b'}a'_{b'}(b)!\). Then \(f_{b}\) is an eigenvector of \(\Psi^{*a}\) of eigenvalue \(a^{k}\), and \(\{f_{b}\}\) is the dual basis to \(\{g_{b}\}\). If \(b=c_{1}c_{2}\cdots c_{l}\) with \(c_{1}\geq c_{2}\geq\cdots\geq c_{l}\) in the ordering of generators, then

Proof of Theorem 3.19 (polynomial algebra)

Suppose \(b^{*}\otimes b'^{*}\) is a term in \(m^{*}(c^{*})\), where \(c\) is a generator. This means \(m^{*}(c^{*})(b\otimes b')\) is non-zero. Since comultiplication in \(\mathcal{H}^{*}\) is dual to multiplication in \(\mathcal{H}\), \(m^{*}(c^{*})(b\otimes b')=c^{*}(bb')\), which is non-zero only if \(bb'\) is a scalar multiple of \(c\). Since \(c\) is a generator, this can only happen if one of \(b,b'\) is \(c\) and the other is 1. Hence \(c^{*}\) is primitive. Apply the symmetrization lemma (Lemma 3.10) to the primitives \(c_{1}^{*}, \ldots, c_{l}^{*}\) to deduce that \(f_{b}\) as defined above is an eigenvector of eigenvalue \(a^{l}\).

Since multiplication in \(\mathcal{H}^{*}\) is dual to the coproduct in \(\mathcal{H}\), \(c_{\sigma(1)}^{*} c_{\sigma(2)}^{*}\cdots c_{\sigma(l)}^{*}(b')=c_{\sigma (1)}^{*} \otimes c_{\sigma(2)}^{*} \otimes\cdots\otimes c_{\sigma(l)}^{*} (\Delta^{[l]}(b') )\), from which the first expression for \(f_{b}(b')\) is immediate. To deduce the second expression, note that the size of the stabilizer of \((c_{\sigma(1)},c_{\sigma(2)},\ldots,c_{\sigma(l)})\) under \(S_{l}\) is precisely \(A(b)\).

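It is apparent from the formula that \(b'^{*}\) appears in \(f_{b}\) only if \(c_{\sigma(1)}\cdots c_{\sigma(l)}=b\) appears in \(\Psi^{l}(b')\), hence \(b'\rightarrow b\) is necessary. To calculate the leading coefficient \(f_{b}(b)\), note that, up to the normalizing factor in the definition of \(f_{b}\), this is the sum over \(S_{l}\) of the coefficients of \(c_{\sigma(1)}\otimes\cdots\otimes c_{\sigma(l)}\) in \(\Delta^{[l]}(b)=\Delta^{[l]}(c_{1})\cdots\Delta^{[l]}(c_{l})\). Each term in \(\Delta^{[l]}(c_{i})\) contributes at least one generator to at least one tensor-factor, and each tensor-factor of \(c_{\sigma(1)}\otimes\cdots\otimes c_{\sigma(l)}\) is a single generator, so each occurrence of \(c_{\sigma(1)}\otimes\cdots\otimes c_{\sigma(l)}\) is a product of terms from the \(\Delta^{[l]}(c_{i})\) in each of which one tensor-factor is \(c_{i}\) and all other tensor-factors are 1. Such products place \(c_{1},\ldots,c_{l}\) in the tensor-factors in all \(l!\) possible orders, and exactly \(A(b)\) of these orders give \(c_{\sigma(1)}\otimes\cdots\otimes c_{\sigma(l)}\); so, for each fixed \(\sigma\), the coefficient of \(c_{\sigma(1)}\otimes\cdots\otimes c_{\sigma(l)}\) in \(\Delta^{[l]}(b)\) is \(A(b)\). This proves the first equality in the triangularity statement. Triangularity of \(f_{b}\) with respect to length follows, as ordering by length refines the relation \(\rightarrow\) (Proposition 3.7).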

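To see duality, first note that, since \(\Psi^{*a}\) is the dual map to \(\Psi^{a}\), \(f_{b}(\Psi^{a}g_{b'})=(\Psi^{*a}f_{b})(g_{b'})\). Now, using that \(f_{b}\) and \(g_{b'}\) are eigenvectors, it follows that
\[ a^{l(b')}f_{b}(g_{b'})=f_{b}(\Psi^{a}g_{b'})=(\Psi^{*a}f_{b})(g_{b'})=a^{l(b)}f_{b}(g_{b'}), \]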

so \(f_{b}(g_{b'})=0\) if \(l(b')\neq l(b)\).

Now suppose l(b′)=l(b)=l. Then

which is 0 when b≠b′, and 1 when b=b′. □
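A companion sketch for Theorem 3.19 uses the symmetric functions again, viewed as the polynomial algebra \(\mathbb{Q}[h_{1},h_{2},\ldots]\) with \(\Delta(h_{n})=\sum_{i}h_{i}\otimes h_{n-i}\). This Hopf algebra is cocommutative, so by Remark 1 one may take \(f_{b}=\frac{1}{A(b)}c_{1}^{*}\cdots c_{l}^{*}\), evaluated through \(\Delta^{[l]}\). The left eigenvectors \(g_{b}=e(c_{1})\cdots e(c_{l})\) of Theorem 3.15 are built from the assumed formula \(e=\sum_{k\geq1}\frac{(-1)^{k-1}}{k}m^{[k]}(\mathrm{id}-\eta\varepsilon)^{\otimes k}\Delta^{[k]}\). The sketch is meant for checking \(f_{b}(b)=1\), the duality \(f_{b}(g_{b'})=\delta_{b,b'}\) and the eigenvalue \(a^{l(b)}\) in low degrees; all names are hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import product
from math import factorial

# Hypothetical model: Lambda = Q[h_1, h_2, ...] with Delta(h_n) = sum_i h_i ⊗ h_{n-i}.
# Elements are dicts {partition (non-increasing tuple): coefficient}; (2, 1) is h_2 h_1.

def weak_compositions(n, k):
    """All k-tuples of non-negative integers summing to n."""
    if k == 0:
        if n == 0:
            yield ()
        return
    for first in range(n + 1):
        for rest in weak_compositions(n - first, k - 1):
            yield (first,) + rest

def partitions(n, largest=None):
    """Partitions of n as non-increasing tuples: the monomial basis in degree n."""
    largest = n if largest is None else largest
    if n == 0:
        yield ()
        return
    for first in range(min(n, largest), 0, -1):
        for rest in partitions(n - first, first):
            yield (first,) + rest

def mono(parts):
    """Key of the monomial prod h_p, dropping h_0 = 1."""
    return tuple(sorted((p for p in parts if p > 0), reverse=True))

def iterated_coproduct(key, k):
    """Yield the terms t_1 ⊗ ... ⊗ t_k of Delta^[k](h_key), with multiplicity."""
    for choice in product(*[list(weak_compositions(n, k)) for n in key]):
        yield tuple(mono([alpha[t] for alpha in choice]) for t in range(k))

def collect(counter):
    return {key: c for key, c in counter.items() if c != 0}

def multiply(f, g):
    out = Counter()
    for k1, c1 in f.items():
        for k2, c2 in g.items():
            out[mono(k1 + k2)] += c1 * c2
    return collect(out)

def hopf_power(f, a):
    """Psi^a = m^[a] Delta^[a]; the product merges all parts."""
    out = Counter()
    for key, c in f.items():
        for t in iterated_coproduct(key, a):
            out[mono(sum(t, ()))] += c
    return collect(out)

def g_left(b):
    """g_b = e(h_{c_1}) ... e(h_{c_l}), e = sum_k (-1)^(k-1)/k m^[k] (reduced Delta)^[k]."""
    out = {(): Fraction(1)}
    for n in b:
        e_n = Counter()
        for k in range(1, n + 1):
            for t in iterated_coproduct((n,), k):
                if all(t):  # reduced coproduct: no tensor-factor equal to 1
                    e_n[mono(sum(t, ()))] += Fraction((-1) ** (k - 1), k)
        out = multiply(out, collect(e_n))
    return out

def f_right(b, x):
    """f_b(x): coefficient of h_{c_1} ⊗ ... ⊗ h_{c_l} in Delta^[l](x), divided by A(b)."""
    target = tuple((c,) for c in b)
    A = 1
    for mult in Counter(b).values():
        A *= factorial(mult)
    hits = sum(c for key, c in x.items() for t in iterated_coproduct(key, len(b)) if t == target)
    return Fraction(hits) / A

# Print the matrix (f_b(g_{b'})) over the degree-3 basis.
basis = list(partitions(3))
for b in basis:
    print(b, [f_right(b, g_left(b2)) for b2 in basis])
```

Because \(\Psi^{*a}f_{b}\) is evaluated as \(x\mapsto f_{b}(\Psi^{a}x)\), the eigenvalue claim amounts to \(f_{b}(\Psi^{a}x)=a^{l(b)}f_{b}(x)\) for every basis element \(x\) of the same degree, which the same helpers can check directly.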

Proof of Theorem 3.20 (cocommutative and free associative algebra)

Since \(\mathcal{H}\) is cocommutative, \(\mathcal{H}^{*}\) is commutative, so the Hopf-power map \(\Psi^{*a}\) is an algebra homomorphism. Then, since \(f_{b}\) is defined as the product of \(k\) eigenvectors, each of eigenvalue \(a\), \(f_{b}\) is an eigenvector of eigenvalue \(a^{k}\).

For any generator \(c\), \(c^{*}\) is primitive by the same reasoning as in the proof of Theorem 3.19, the case of a polynomial algebra. To check that \(c^{*}(g_{c})=1\) and \(c^{*}(g_{b})=0\) for all other Lyndon \(b\), use the triangularity of \(g_{b}\):

Each summand \(c^{*}(b')\) in the second term is 0 as \(l(c)=1\leq l(b)<l(b')\). As \(\operatorname{sym}(b)\) consists of terms of length \(l(b)\), \(c^{*}(\operatorname{sym}(b))\) is 0 unless \(l(b)=1\), in which case \(\operatorname{sym}(b)=b\). Hence \(c^{*}(g_{b})=c^{*}(\operatorname {sym}(b))\) is non-zero only if \(c=b\), and \(c^{*}(g_{c})=c^{*}(\operatorname {sym}(c))=c^{*}(c)=1\).

Turn now to duality. An argument analogous to the polynomial algebra case shows that \(f_{b}(g_{b'})\neq0\) only when \(f_{b}\) and \(g_{b'}\) have the same eigenvalue, which happens precisely when \(b\) and \(b'\) have the same number of Lyndon factors. So let \(b_{1}\cdots b_{k}\) be the decreasing Lyndon factorization of \(b\), and let \(b'_{1}\cdots b'_{k}\) be the decreasing Lyndon factorization of \(b'\). To evaluate

observe that

As \(g_{b'_{\sigma(i)}}\) is primitive, each term in \(\Delta^{[k]} (g_{b'_{\sigma(i)}} )\) has \(g_{b'_{\sigma(i)}}\) in one tensor-factor and 1 in the k−1 others. Hence the only terms of \(\Delta^{[k]} (g_{b'_{\sigma (1)}} )\cdots\Delta^{[k]} (g_{b'_{\sigma(k)}} )\) without 1s in any tensor factors are those of the form \(g_{b'_{\tau \sigma(1)}}\otimes\cdots\otimes g_{b'_{\tau\sigma(k)}}\) for some τ∈S k . Now \(f_{b_{i}}(1)=0\) for all i, so \(f_{b_{1}}\otimes\cdots\otimes f_{b_{k}}\) annihilates any term with 1 in some tensor-factor. Hence

As \(f_{b}\) is dual to \(g_{b}\) for Lyndon \(b\), the only summands which contribute are those with \(b_{i}=b'_{\sigma\tau(i)}\) for all \(i\). In other words, this is zero unless the \(b_{i}\) are some permutation of the \(b'_{i}\). But both sequences are ordered decreasingly, so this can only happen if \(b_{i}=b'_{i}\) for all \(i\), hence \(b=b'\). In that case, for each fixed \(\sigma\in S_{k}\), the number of \(\tau\in S_{k}\) with \(b_{i}=b_{\sigma\tau(i)}\) for all \(i\) is precisely \(A'(b)\), so \(f_{b}(g_{b})=1\).

The final statement is proved in the same way as in the proof of Theorem 3.19, for a polynomial algebra, since, when \(b=c_{1}c_{2}\cdots c_{l}\) with \(c_{1}\geq c_{2}\geq\cdots\geq c_{l}\) in the ordering of generators, \(f_{b}=\frac{1}{A'(b)}c_{1}^{*}c_{2}^{*}\cdots c_{l}^{*}\). □
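The final statement of Theorem 3.20 can be made concrete in the free associative algebra of Example 1.1, where every letter is primitive. Then the product of dual words in \(\mathcal{H}^{*}\) is the shuffle product, since \((u^{*}v^{*})(w)=(u^{*}\otimes v^{*})(\Delta w)\) counts the ways of splitting the positions of \(w\) into a copy of \(u\) and a copy of \(v\). The Python sketch below computes \(f_{b}=\frac{1}{A'(b)}c_{1}^{*}\cdots c_{l}^{*}\) for a word with non-increasing letters, assuming (hypothetically) that the letters are ordered alphabetically, and compares \(f_{b}(\Psi^{a}w)\) with \(a^{l}f_{b}(w)\). Function names are illustrative.

```python
from collections import Counter
from fractions import Fraction
from itertools import product
from math import factorial


def shuffle(u, v):
    """Shuffle product of two words, as a Counter {word: multiplicity}."""
    if not u:
        return Counter({v: 1})
    if not v:
        return Counter({u: 1})
    out = Counter()
    for w, c in shuffle(u[1:], v).items():  # first letter taken from u
        out[u[0] + w] += c
    for w, c in shuffle(u, v[1:]).items():  # first letter taken from v
        out[v[0] + w] += c
    return out


def f_right(b):
    """f_b = (1/A'(b)) c_1^* c_2^* ... c_l^* for a word b = c_1 ... c_l with c_1 >= ... >= c_l.

    Returned as a dict {word w: f_b(w)}. The product of dual words is their shuffle
    product, because every letter is primitive: Delta(x_i) = 1 ⊗ x_i + x_i ⊗ 1.
    """
    dual_product = Counter({"": 1})
    for letter in b:
        nxt = Counter()
        for w, c in dual_product.items():
            for w2, c2 in shuffle(w, letter).items():
                nxt[w2] += c * c2
        dual_product = nxt
    A = 1
    for mult in Counter(b).values():
        A *= factorial(mult)
    return {w: Fraction(c, A) for w, c in dual_product.items()}


def hopf_power(w, a):
    """Psi^a(w) = m^[a] Delta^[a](w): send each letter to one of a piles, keeping the
    letters' relative order, then concatenate the piles (a Counter of words)."""
    out = Counter()
    for labels in product(range(a), repeat=len(w)):
        out["".join(ch for pile in range(a) for ch, lab in zip(w, labels) if lab == pile)] += 1
    return out


fb = f_right("bba")
print(fb)
# Compare f_b(Psi^2 w) with 2^3 f_b(w) for every word w of length 3 in the letters a, b.
for letters in product("ab", repeat=3):
    w = "".join(letters)
    print(w, sum(c * fb.get(u, 0) for u, c in hopf_power(w, 2).items()), 8 * fb.get(w, 0))
```

In this example \(f_{b}\) turns out to be the indicator function of the rearrangements of \(b\): the iterated shuffle of the letters of \(b\) produces each rearrangement exactly \(A'(b)\) times.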

3.7 Stationary distributions, generalized chromatic polynomials, and absorption times

This section returns to probabilistic considerations, showing how the left eigenvectors of Sect. 3.5 determine the stationary distribution of the associated Markov chain. In the absorbing case, “generalized chromatic symmetric functions”, based on the universality theorem in [4], determine rates of absorption. Again, these general theorems are illustrated in the three sections that follow.

3.7.1 Stationary distributions

The first proposition identifies all the absorbing states when \(\mathcal{H}\) is a polynomial algebra:

Proposition 3.21

Suppose \(\mathcal{H}\) is a polynomial algebra where \(K_{a}\), defined by \(a^{-n}\Psi^{a}(b)=\sum_{b'}K_{a}(b,b')b'\), is a transition matrix. Then the absorbing states are the basis elements \(b\in\mathcal{B}_{n}\) which are products of \(n\) (possibly repeated) degree-one elements, and these give a basis of the 1-eigenspace of \(K_{a}\).

Example 3.22