Abstract

This paper considers computing the eigenpairs of differential eigenvalue problems (DEPs) whose eigenvalues lie in a prescribed region of the complex plane. Recently, based on a “solve-then-discretize” paradigm, an operator analogue of the FEAST method has been proposed for DEPs without discretization of the coefficient operators. Compared to conventional “discretize-then-solve” approaches that discretize the operators and solve the resulting matrix problem, the operator analogue of FEAST exhibits much higher accuracy; however, it involves solving a large number of ordinary differential equations (ODEs). In this paper, to reduce the computational costs, we propose operator analogues of Sakurai–Sugiura-type complex moment-based eigensolvers for DEPs using higher-order complex moments and analyze the error bound of the proposed methods. We show that the number of ODEs to be solved can be reduced by a factor of the degree of complex moments without degrading accuracy. Numerical results verify this and demonstrate that the proposed methods are over five times faster than the operator analogue of FEAST for several DEPs while maintaining almost the same high accuracy. This study is expected to promote the “solve-then-discretize” paradigm for solving DEPs and contribute to faster and more accurate solutions in real-world applications.

1 Introduction

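This paper considers solving differential eigenvalue problems (DEPs)

$$ \mathcal{A} u_{i} = \lambda_{i} {\mathscr{B}} u_{i}, \quad \lambda_{i} \in {\Omega} \subset \mathbb{C}, \qquad (1) $$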

with boundary conditions, where \(\mathcal {A}\) and \({\mathscr{B}}\) are linear ordinary differential operators acting on functions from a Hilbert space \({\mathscr{H}}\) and Ω is a prescribed simply connected open set. Here, \(\lambda_{i}\) and \(u_{i}\) are an eigenvalue and the corresponding eigenfunction, respectively. This type of problem appears in various fields such as physics [1, 2] and materials science [3,4,5]. We assume that the boundary Γ of Ω is a rectifiable, simple closed curve and that the spectrum of (1) is discrete and does not intersect Γ, while only m finite eigenvalues, counting multiplicities, are in Ω. We also assume that there are eigenfunctions of (1) that form a basis for the invariant subspace associated with \(\lambda_{i} \in {\Omega}\).

A conventional way to solve (1) is to discretize the operators \(\mathcal {A}\) and \({\mathscr{B}}\) and solve the resulting matrix eigenvalue problem using a matrix eigensolver, e.g., the QZ and Krylov subspace methods [6]. Fine discretization reduces the discretization error but leads to large matrix eigenvalue problems. Owing to their parallel efficiency, complex moment-based eigensolvers, such as Sakurai–Sugiura’s approach [7] and FEAST eigensolvers [1], are practical choices for large eigenvalue problems. This class of eigensolvers constructs an approximation of the target invariant subspace using a contour integral and computes an approximation of the target eigenpairs using a projection onto the subspace. Because of the high efficiency of parallel computation of the contour integral [2, 3], which is the most time-consuming part, complex moment-based eigensolvers have attracted considerable attention.

In contrast to the above “discretize-then-solve” paradigm, a “solve-then-discretize” paradigm has emerged, motivated by the mathematical software Chebfun [8]. Chebfun enables highly adaptive computation with operators and functions in the same manner as with matrices and vectors. This paradigm has extended numerical linear algebra techniques in finite-dimensional spaces to infinite-dimensional spaces [9,10,11,12,13,14]. In this context, an operator analogue of FEAST was recently developed [15] for solving (1) and dealing with operators \(\mathcal {A}\) and \({\mathscr{B}}\) without their discretization. On the one hand, the operator analogue of FEAST exhibits much higher accuracy than methods based on the traditional “discretize-then-solve” paradigm. On the other hand, a large number of ordinary differential equations (ODEs) must be solved for the construction of invariant subspaces, which is computationally expensive, although the method can be efficiently parallelized.

In this paper, we propose operator analogues of Sakurai–Sugiura’s approach for DEPs (1) in the “solve-then-discretize” paradigm. The difference between the operator analogue of FEAST and the proposed methods lies in the order of complex moments used: the operator analogue of FEAST uses only complex moments of order zero, whereas the proposed methods use complex moments of higher order. This difference enables the proposed methods to reduce the number of ODEs to be solved by a factor of the degree of complex moments without degrading accuracy. The proposed methods can be extended to higher dimensions in a straightforward manner for simple geometries.

The remainder of this paper is organized as follows. Section 2 briefly introduces the complex moment-based matrix eigensolvers. In Section 3, we propose operator analogues of Sakurai–Sugiura’s approach for DEPs (1). We also introduce a subspace iteration technique and analyze an error bound. Numerical experiments are reported in Section 4. The paper concludes with Section 5.

We use the following notation for quasi-matrices. Let \(V = [v_{1}, v_{2}, \dots , v_{L}]\), \(W = [w_{1}, w_{2}, {\dots } ,w_{L}]\): \(\mathbb {C}^{L} \rightarrow {\mathscr{H}}\) be quasi-matrices, whose columns are functions defined on an interval [a, b], \(a, b \in \mathbb {R}\). Then, we define the range of V by \({\mathscr{R}}(V) = \{ y \in {\mathscr{H}} \mid y = V {\boldsymbol {x}}, {\boldsymbol {x}} \in \mathbb {C}^{L} \}\). In addition, the L × L matrix X, whose (i, j) element is \(X_{ij} = (v_{i}, w_{j})_{{\mathscr{H}}}\), is expressed as \(X = V^{\textsf {H}} W\). Here, \(V^{\textsf {H}}\) is the conjugate transpose of a quasi-matrix V such that its rows are the complex conjugates of functions \(v_{1}, v_{2}, {\dots } , v_{L}\).
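The product \(V^{\textsf {H}} W\) can be illustrated numerically. The following is a minimal sketch, assuming that \({\mathscr{H}}\) is \(L^2(a, b)\) with the inner product conjugate-linear in its first argument and that the integrals are approximated by Gauss–Legendre quadrature; the helper name `gram` and the sample columns are hypothetical and not taken from the paper.

```python
import numpy as np

def gram(V, W, a, b, n_quad=64):
    """Return X = V^H W, i.e. X[i, j] = integral over [a, b] of conj(v_i(x)) * w_j(x)."""
    # Gauss-Legendre nodes and weights on [-1, 1], mapped affinely to [a, b].
    t, w = np.polynomial.legendre.leggauss(n_quad)
    x = 0.5 * (b - a) * t + 0.5 * (b + a)
    w = 0.5 * (b - a) * w
    Vx = np.array([v(x) for v in V], dtype=complex)  # row i holds samples of v_i
    Wx = np.array([f(x) for f in W], dtype=complex)  # row j holds samples of w_j
    # Weighted sum over the quadrature nodes approximates each inner product.
    return (Vx.conj() * w) @ Wx.T

# Hypothetical quasi-matrix with columns v_1(x) = 1 and v_2(x) = x on [-1, 1].
V = [lambda x: np.ones_like(x), lambda x: x]
X = gram(V, V, -1.0, 1.0)
print(X.real)
```

For these two columns the exact Gram matrix is diagonal with entries 2 and 2/3, which gives a quick check of the convention.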

2 Complex moment-based matrix eigensolvers

The complex moment-based eigensolvers proposed by Sakurai and Sugiura [7] are intended for solving matrix generalized eigenvalue problems:

$$ A {\boldsymbol{x}}_{i} = \lambda_{i} B {\boldsymbol{x}}_{i}, \quad A, B \in \mathbb{C}^{n \times n}, \quad \lambda_{i} \in {\Omega} \subset \mathbb{C}, $$

where zB − A is nonsingular on the boundary Γ of the target region Ω. These eigensolvers use Cauchy’s integral formula to form complex moments. Complex moments can extract the target eigenpairs from random vectors or matrices.

We denote the kth order complex moment by

$$ M_{k} = \frac{1}{2 \pi \mathrm{i}} \oint_{\Gamma} z^{k} (zB - A)^{-1} B \, \mathrm{d}z, $$

where π is the circular constant, i is the imaginary unit, and Γ is a positively oriented closed Jordan curve of which Ω is the interior. Then, the complex moment \(M_{k}\) applied to a matrix \(V \in \mathbb {C}^{n \times L}\) serves as a filter that stops undesired eigencomponents in the column vectors of V from passing through. To achieve this role of a complex moment, we introduce a transformation matrix \(S \in \mathbb {C}^{n \times LM}\)

$$ S = [S_{0}, S_{1}, \dots, S_{M-1}], \quad S_{k} = M_{k} V, \qquad (2) $$

where \(V \in \mathbb {C}^{n \times L}\) and M − 1 is the largest order of complex moments. Note that L and M are regarded as parameters. The special case M = 1 in S reduces to FEAST [1, (3)]. Thus, the range \({{\mathscr{R}}}(S)\) of S forms the eigenspace of interest (see, e.g., [16, Theorem 1]).

Practical algorithms of the complex moment-based eigensolvers approximate the contour integral in the transformation matrix of (2), \(\widehat {S}_{k} \simeq S_{k}\), using a quadrature rule

$$ \widehat{S}_{k} = \sum_{j=1}^{N} \omega_{j} z_{j}^{k} (z_{j} B - A)^{-1} B V, $$

where \(z_{j}, \omega _{j} \in \mathbb {C}\) \((j = 1, 2, \dots , N)\) are quadrature points and the corresponding weights, respectively.
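To make the filtering role of the quadrature-approximated moments concrete, the following is a minimal sketch for a small matrix pencil. It assumes a circular contour with center `gamma` and radius `rho` and the N-point trapezoidal rule with points \(z_j = \gamma + \rho e^{\mathrm{i}\theta_j}\), \(\theta_j = 2\pi(j - 1/2)/N\), and weights \(\omega_j = \rho e^{\mathrm{i}\theta_j}/N\) (so that the factor \(1/(2\pi\mathrm{i})\) is absorbed in the weights). The function name `ss_moments` and the test matrices are hypothetical.

```python
import numpy as np

def ss_moments(A, B, V, gamma, rho, N, M):
    """Approximate S = [S_0, ..., S_{M-1}] with S_k ~ sum_j w_j z_j^k (z_j B - A)^{-1} B V."""
    n, L = V.shape
    S = np.zeros((n, L * M), dtype=complex)
    for j in range(N):
        e = np.exp(2j * np.pi * (j + 0.5) / N)
        z = gamma + rho * e          # quadrature point on the circle
        w = rho * e / N              # weight, including the factor 1/(2*pi*i)
        # One linear solve per quadrature point; it is reused for every order k.
        Y = np.linalg.solve(z * B - A, B @ V)
        for k in range(M):
            S[:, k * L:(k + 1) * L] += w * z**k * Y
    return S

# Diagonal test pencil: eigenvalues 1, 2, 3 lie inside the circle |z - 2| < 1.5.
A = np.diag([1.0, 2.0, 3.0, 10.0, 20.0])
B = np.eye(5)
V = np.random.default_rng(0).standard_normal((5, 2))
S = ss_moments(A, B, V, gamma=2.0, rho=1.5, N=32, M=2)
print(np.abs(S[3:, :]).max())   # components along the eigenvalues 10 and 20
```

Because the test matrix is diagonal, the rows of `S` are eigencomponents: the last two rows (eigenvalues outside the circle) are damped to nearly zero, while the first three rows of `S[:, :2]` stay close to the corresponding rows of `V`. The solves do not depend on `k`, so raising `M` enlarges the subspace without extra linear systems.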

The most time-consuming part of complex moment-based eigensolvers involves solving linear systems at each quadrature point. These linear systems can be solved independently, so the eigensolvers have good scalability, as demonstrated in [2, 3]. For this reason, complex moment-based eigensolvers have attracted considerable attention, particularly in physics [1, 2], materials science [3,4,5], power systems [17], data science [18], and so on. Currently, there are several methods, including direct extensions of Sakurai and Sugiura’s approach [19,20,21,22,23,24,25], the FEAST eigensolver [1] developed by Polizzi, and its improvements [2, 26, 27]. We refer to [23] and the references therein for the relationships among typical complex moment-based methods: the methods using the Rayleigh–Ritz procedure [19, 21], the methods using Hankel matrices [7, 20], the method using the communication-avoiding Arnoldi procedure [24], the FEAST eigensolver [1], and so on.

3 Complex moment-based methods

In the “solve-then-discretize” paradigm, an operator analogue of the FEAST method was proposed [15] for solving (1) without requiring discretization of the operators \(\mathcal {A}\) and \({\mathscr{B}}\). The operator analogue of FEAST (contFEAST) is a simple extension of the matrix FEAST eigensolver and is based on an accelerated subspace iteration only with complex moments of order zero (see Algorithm 1). In each iteration, contFEAST requires solving a large number of ODEs to construct a subspace. In this study, to reduce computational costs, we propose operator analogues of Sakurai–Sugiura-type complex moment-based eigensolvers: contSS-RR, contSS-Hankel, and contSS-CAA using complex moments of higher order.

3.1 Complex moment subspace and its properties

For the differential eigenvalue problem (1), spectral projectors \(\mathcal {P}_{i}\) and \(\mathcal {P}_{\Omega }\) associated with a finite eigenvalue λi and the target eigenvalues λi ∈Ω are defined as

respectively, where Γi is a positively oriented closed Jordan curve in which λi lies and contour paths Γi and Γj do not intersect each other for i≠j (see [28, pp.178–179] for the case of \({\mathscr{B}}=\mathcal {I}\)). Here, spectral projectors \(\mathcal {P}_{i}\) satisfy

where δij is the Kronecker delta.

Analogously to the complex moment-based eigensolvers for matrix eigenvalue problems, we define the kth order complex moment as

$$ {\mathscr{M}}_{k} = \frac{1}{2 \pi \mathrm{i}} \oint_{\Gamma} z^{k} (z {\mathscr{B}} - \mathcal{A})^{-1} {\mathscr{B}} \, \mathrm{d}z \qquad (4) $$

and the transformation quasi-matrix as

$$ S = [S_{0}, S_{1}, \dots, S_{M-1}], \quad S_{k} = {\mathscr{M}}_{k} V \qquad (5) $$

for \(k = 0, 1, \dots , M-1\), where M − 1 is the highest order of complex moments and \(V: \mathbb {C}^{L} \rightarrow {\mathscr{H}}\) is a quasi-matrix. Here, L is a parameter. Note the identity \(\mathcal {P}_{i} = {\mathscr{M}}_{0}\) for Γ = Γi. Then, the range \({\mathscr{R}}(S)\) has the following properties.

Theorem 1

The columns of S defined in (5) form a basis of the target eigenspace \(\mathcal {X}_{\Omega }\) corresponding to Ω, i.e.,

if rank(S) = m, where m is the number of eigenvalues, counting multiplicity, in Ω of (1).

Proof

Cauchy’s integral formula shows

Therefore, from the definitions of S and Sk, the quasi-matrix S can be written as

which provides (6) if rank(S) = m. □

Remark 1

Theorem 1 shows that the target eigenpairs of (1) can be obtained by using a projection method onto \({\mathscr{R}}(S)\).

Theorem 2

Let S0 and S be defined as in (5). Then, the range \({\mathscr{R}}(S)\) and the block Krylov subspace

are the same, i.e.,

where

Here, \(\mathcal {P}_{i}\) is defined in (3). Moreover, the eigenvalue problem of linear operator \(\mathcal {C}\)

has the same finite eigenpairs as \(\mathcal {A}u_{i} = \lambda _{i}{\mathscr{B}}u_{i}\).

Proof

The quasi-matrix Sk is written as

which provides (7). Hence, the eigenspace of \(\mathcal {C}\) and that of (1) are the same. □

Remark 2

Theorem 2 shows that several techniques for block Krylov subspace can be used to form \({\mathscr{R}}(S)\) and the target eigenpairs of (1) can be obtained by solving (8).

Theorems 1 and 2 are used to derive methods in Section 3.2 and provide an error bound in Section 3.3.

3.2 Derivations of methods

Using Theorems 1 and 2, based on the complex moment-based eigensolvers, SS-RR, SS-Hankel, and SS-CAA, we develop complex moment-based differential eigensolvers for solving (1) without the discretization of operators \(\mathcal {A}\) and \({\mathscr{B}}\). The proposed methods are projection methods based on \({\mathscr{R}}(S)\), which is a larger subspace than \({\mathscr{R}}(S_{0})\) used in contFEAST (Algorithm 1).

In practice, we numerically deal with operators, functions, and the contour integrals. The contour integral in (4) is approximated using the quadrature rule:

$$ \widehat{S}_{k} = \sum_{j=1}^{N} \omega_{j} z_{j}^{k} (z_{j} {\mathscr{B}} - \mathcal{A})^{-1} {\mathscr{B}} V, \qquad (9) $$

where \(z_{j}, \omega _{j} \in \mathbb {C}\) \((j = 1, 2, \dots , N)\) are quadrature points and the corresponding weights, respectively. As in contFEAST, we avoid discretizing the operators; instead, we construct polynomial approximations of a basis of the invariant subspace by approximately solving ODEs of the form

$$ (z_{j} {\mathscr{B}} - \mathcal{A}) y_{i,j} = {\mathscr{B}} v_{i}, \quad i = 1, 2, \dots, L, \quad j = 1, 2, \dots, N, \qquad (10) $$

with boundary conditions. Note that the number of ODEs to be solved does not depend on the degree of complex moments M.

For real operators \(\mathcal {A}\) and \({\mathscr{B}}\), if the quadrature points and the corresponding weights are chosen symmetrically about the real axis, \((z_{j}, \omega _{j}) = (\overline {z}_{j+N/2}, \overline {\omega }_{j+N/2}), j = 1,2,\dots , N/2\), we can halve the number of ODEs to be solved as follows:

As another efficient computation technique for real self-adjoint problems, we can avoid complex ODEs using the real rational filtering technique [29] for matrix eigenvalue problems. Using the real rational filtering technique, quasi-matrix S is approximated by (9) with the N Chebyshev points of the first kind and the corresponding barycentric weights,

for \(j = 1,2, \dots , N\), where γ and ρ are the center and radius of the target interval. Note that \(z_{j}, \omega _{j} \in \mathbb {R}\) for \(j = 1,2, \dots , N\).

3.2.1 ContSS-RR method

An operator analogue of the complex moment-based method using the Rayleigh–Ritz procedure for matrix eigenvalue problems [19, 21] is presented. Theorem 1 shows that the target eigenpairs of (1) can be obtained by a Rayleigh–Ritz procedure based on \({\mathscr{R}}(S)\), i.e.,

where \((\lambda_{i}, u_{i}) = (\theta_{i}, S {\boldsymbol {t}}_{i})\). We approximate this Rayleigh–Ritz procedure using an \({\mathscr{H}}\)-orthonormal basis of the approximated subspace \({\mathscr{R}}(\widehat {S})\). Here, to reduce computational costs and improve numerical stability, we use a low-rank approximation of quasi-matrix \(\widehat {S}\) based on its truncated singular value decomposition (TSVD) [10], i.e.,

where \({\Sigma }_{\text {S1}} \in \mathbb {R}^{d \times d}\) is a diagonal matrix whose diagonal entries are the d largest singular values such that \(\sigma_{d}/\sigma_{1} \geq \delta \geq \sigma_{d+1}/\sigma_{1}\) \((\sigma _{i} \geq \sigma _{i+1}, i = 1, 2, \dots , d)\) and \(U_{\text {S1}}: \mathbb {C}^{d} \rightarrow {\mathscr{H}}\) and \(W_{\text {S1}} \in \mathbb {C}^{LM \times d}\) are column-orthonormal (quasi-)matrices corresponding to the left and right singular vectors, respectively.

Thus, the target problem (1) is reduced to a d-dimensional matrix generalized eigenvalue problem

where the approximated eigenpairs are computed as \((\widehat {\lambda }_{i}, \widehat {u}_{i}) = (\theta _{i}, U_{\text {S1}} {\boldsymbol {t}}_{i})\). The procedure of the contSS-RR method is summarized in Algorithm 2.
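The structure of this procedure (quadrature, TSVD, Rayleigh–Ritz projection) can be sketched on a matrix pencil. The code below is a matrix analogue written for illustration only, not the operator-level contSS-RR of Algorithm 2; it assumes a circular contour with the trapezoidal rule \(z_j = \gamma + \rho e^{\mathrm{i}\theta_j}\), \(\omega_j = \rho e^{\mathrm{i}\theta_j}/N\), \(\theta_j = 2\pi(j - 1/2)/N\), and the function name `ss_rr` and the test matrix are hypothetical.

```python
import numpy as np

def ss_rr(A, B, V, gamma, rho, N=32, M=2, delta=1e-14):
    """Matrix sketch: quadrature for S, truncated SVD, then Rayleigh-Ritz projection."""
    n, L = V.shape
    S = np.zeros((n, L * M), dtype=complex)
    for j in range(N):
        e = np.exp(2j * np.pi * (j + 0.5) / N)
        z, w = gamma + rho * e, rho * e / N
        Y = np.linalg.solve(z * B - A, B @ V)      # L solves per quadrature point
        for k in range(M):
            S[:, k * L:(k + 1) * L] += w * z**k * Y
    # Truncated SVD: keep the singular values with sigma_i / sigma_1 >= delta.
    U, sig, _ = np.linalg.svd(S, full_matrices=False)
    d = int(np.sum(sig / sig[0] >= delta))
    U1 = U[:, :d]
    # Reduced d-dimensional generalized eigenproblem U1^H A U1 t = theta U1^H B U1 t.
    Ap = U1.conj().T @ A @ U1
    Bp = U1.conj().T @ B @ U1
    theta, T = np.linalg.eig(np.linalg.solve(Bp, Ap))
    inside = np.abs(theta - gamma) < rho           # keep Ritz values inside the contour
    return theta[inside], (U1 @ T)[:, inside]

# Test pencil: second-difference matrix with eigenvalues 2 - 2 cos(i pi / (n + 1)).
n = 30
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
B = np.eye(n)
V = np.random.default_rng(0).standard_normal((n, 4))
theta, U = ss_rr(A, B, V, gamma=0.2, rho=0.2, N=32, M=2)
print(np.sort(theta.real))
```

Here six eigenvalues lie in the disk \(\lvert z - 0.2 \rvert < 0.2\) and the subspace has dimension at most LM = 8, obtained from L = 4 right-hand sides per quadrature point.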

3.2.2 ContSS-Hankel method

An operator analogue of the complex moment-based method using Hankel matrices for matrix eigenvalue problems [7, 20] is presented. Let \(\mu _{k} \in \mathbb {C}^{L \times L}\) be a reduced complex moment of order k defined as

with \(\widetilde {V}: \mathbb {C}^{L} \rightarrow {\mathscr{H}}\). We also define block Hankel matrices

which have the following property.

Theorem 3

If \(\text{rank}(H_{M}) = \text{rank}(H_{M}^<) = m\), where m is the number of eigenvalues in Ω of (1), then the nonsingular part of a matrix pencil \(zH_{M} - H_{M}^<\) and \(z - \mathcal {P}_{\Omega }\) have the same spectrum, where \(\mathcal {P}_{\Omega }\) is defined in (3).

Proof

The complex moment μk can be written as

where \(\mathcal {C}\) is defined in Theorem 2. Letting

the block Hankel matrices are written as

which proves Theorem 3. □

Theorem 3 shows that the target eigenpairs of (1) can be computed via a matrix eigenvalue problem:

Note that from the equivalence

this approach can be regarded as a Petrov–Galerkin-type projection for (8), which has the same finite eigenpairs as \(\mathcal {A}u_{i} = \lambda _{i}{\mathscr{B}}u_{i}\). In practice, block Hankel matrices \(H_{M}^<\) and \(H_{M}\) are approximated by block Hankel matrices \(\widehat {H}_{M}^<\) and \(\widehat {H}_{M}\) whose block (i, j) entries are \(\widehat {\mu }_{i+j+1}\) and \(\widehat {\mu }_{i+j}\), respectively, where

for \(k = 1, 2, \dots , 2M-1\). To reduce computational costs and improve numerical stability, we use a low-rank approximation of \(\widehat {H}_{M}\) based on TSVD, i.e.,

where \({\Sigma }_{\text {H1}} \in \mathbb {R}^{d \times d}\) is a diagonal matrix whose diagonal entries are the d largest singular values such that \(\sigma_{d}/\sigma_{1} \geq \delta \geq \sigma_{d+1}/\sigma_{1}\) \((\sigma _{i} \geq \sigma _{i+1}, i = 1, 2, \dots , d)\) and \(U_{\text {H1}}, W_{\text {H1}} \in \mathbb {C}^{LM \times d}\) are column-orthonormal matrices corresponding to the left and right singular vectors, respectively.

Then, the target problem (1) is reduced to a d-dimensional standard matrix eigenvalue problem of the form

where the approximated eigenpairs can be computed as \((\widehat {\lambda }_{i}, \widehat {u}_{i}) = (\theta _{i}, \widehat {S} W_{\text {H1}} {\Sigma }_{\text {H1}}^{-1} {\boldsymbol {t}}_{i})\). The procedure of the contSS-Hankel method is summarized in Algorithm 3.
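The Hankel-based reduction can likewise be sketched on a matrix pencil. The code below is an illustrative matrix analogue, not Algorithm 3 itself. It assumes \(\widetilde{V} = V\), reduced moments \(\widehat{\mu}_k = \widetilde{V}^{\textsf H} \widehat{S}_k\), zero-based block indices i, j = 0, …, M − 1, a circular contour with the trapezoidal rule, and a looser threshold `delta = 1e-12` than in the experiments; the name `ss_hankel` and the diagonal test matrix are hypothetical.

```python
import numpy as np

def ss_hankel(A, B, V, gamma, rho, N=32, M=2, delta=1e-12):
    """Matrix sketch: reduced moments, block Hankel matrices, TSVD, small eigenproblem."""
    n, L = V.shape
    S = np.zeros((n, L * M), dtype=complex)
    mu = np.zeros((2 * M, L, L), dtype=complex)
    for j in range(N):
        e = np.exp(2j * np.pi * (j + 0.5) / N)
        z, w = gamma + rho * e, rho * e / N
        Y = np.linalg.solve(z * B - A, B @ V)
        for k in range(2 * M):
            mu[k] += w * z**k * (V.conj().T @ Y)     # reduced moment of order k
            if k < M:
                S[:, k * L:(k + 1) * L] += w * z**k * Y
    # Block Hankel matrices with (i, j) blocks mu[i + j] and mu[i + j + 1].
    H = np.block([[mu[i + j] for j in range(M)] for i in range(M)])
    Hs = np.block([[mu[i + j + 1] for j in range(M)] for i in range(M)])
    U, sig, Wh = np.linalg.svd(H)
    d = int(np.sum(sig / sig[0] >= delta))
    U1, W1, s1 = U[:, :d], Wh[:d].conj().T, sig[:d]
    # Standard eigenproblem U1^H Hs W1 Sigma1^{-1} t = theta t (column scaling by 1/s1).
    theta, T = np.linalg.eig((U1.conj().T @ Hs @ W1) / s1)
    return theta, S @ (W1 / s1) @ T                  # eigenvectors S W1 Sigma1^{-1} t

# Diagonal test pencil: eigenvalues -0.8, -0.2, 0.5 lie inside the unit circle.
lam = np.array([-0.8, -0.2, 0.5] + [5.0 + i for i in range(17)])
A, B = np.diag(lam), np.eye(20)
V = np.random.default_rng(1).standard_normal((20, 2))
theta, U = ss_hankel(A, B, V, gamma=0.0, rho=1.0)
print(np.sort(theta.real))
```

No orthogonalization of the basis is performed here: only the small \(LM \times LM\) Hankel matrices are decomposed, which reflects the cost advantage the text attributes to the Hankel variant.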

3.2.3 ContSS-CAA method

An operator analogue of the complex moment-based method using the communication avoiding Arnoldi procedure for matrix eigenvalue problems [24] is presented. Theorem 2 shows that the target eigenpairs of (1) can be obtained by using a block Arnoldi method with \({{\mathscr{R}}}(S) = {\mathscr{K}}_{M}(\mathcal {C},S_{0})\) for (8), which has the same finite eigenpairs as \(\mathcal {A}u_{i} = \lambda _{i}{\mathscr{B}}u_{i}\). In this algorithm, a quasi-matrix \(Q : \mathbb {C}^{LM} \rightarrow {\mathscr{H}}\) whose columns form an orthonormal basis of \({{\mathscr{R}}}(S) = {\mathscr{K}}_{M}(\mathcal {C},S_{0})\) and a block Hessenberg matrix \(T_{M} = Q^{\textsf {H}} \mathcal {C} Q\) are constructed, and the target eigenpairs are computed by solving the standard matrix eigenvalue problem

Therefore, we have \((\lambda_{i}, u_{i}) = (\theta_{i}, Q {\boldsymbol {t}}_{i})\).

Further, we consider using a block version of the communication-avoiding Arnoldi procedure [30]. Let \(S_{+}= [S_{0}, S_{1}, \dots , S_{M}]: \mathbb {C}^{L(M+1)} \rightarrow {\mathscr{H}}\) be a quasi-matrix. From Theorem 2, we have

Here, based on the concept of a block version of the communication-avoiding Arnoldi procedure, using the QR factorizations

where \(Q = Q_{+}(:,1\!:\!LM)\) and \(R = R_{+}(1\!:\!LM,1\!:\!LM)\), the block Hessenberg matrix \(T_{M}\) is obtained by

In practice, we approximate the block Hessenberg matrix \(T_{M}\) by

where

are the QR factorizations of \(\widehat {S}_{+}\) and \(\widehat {S}\), respectively, \(\widehat {Q} = \widehat {Q}_{+}(:,1\!:\!LM)\), and \(\widehat {R}=\widehat {R}_{+}(1\!:\!LM,1\!:\!LM)\), and use a low-rank approximation of \(\widehat {S}\) based on TSVD, i.e.,

where \({\Sigma }_{\text {R1}} \in \mathbb {R}^{d \times d}\) is a diagonal matrix whose diagonal entries are the d largest singular values such that \(\sigma_{d}/\sigma_{1} \geq \delta \geq \sigma_{d+1}/\sigma_{1}\) \((\sigma _{i} \geq \sigma _{i+1}, i = 1,2, \dots , d)\) and \(U_{\text {R1}}, W_{\text {R1}} \in \mathbb {C}^{LM \times d}\) are column-orthonormal matrices corresponding to the left and right singular vectors of \(\widehat {R}\), respectively.

Then, the target problem (1) is reduced to a d-dimensional matrix standard eigenvalue problem of the form

The approximate eigenpairs are obtained as \((\widehat {\lambda }_{i},\widehat {u}_{i}) = (\theta _{i}, \widehat {Q} U_{\text {R1}} {\boldsymbol {t}}_{i})\). The coefficient matrix \(U_{\text {R1}}^{\textsf H} \widehat {T}_{M} U_{\text {R1}}\) is efficiently computed by

The procedure of the contSS-CAA method is summarized in Algorithm 4.

3.3 Subspace iteration and error bound

We consider improving the accuracy of the eigenpairs via a subspace iteration technique, as in the matrix version of complex moment-based eigensolvers. We construct \(\widehat {S}_{0}^{(\ell -1)}\) via the following iteration step:

with the initial quasi-matrix \(\widehat {S}_{0}^{(0)} = V\). Then, instead of \(\widehat {S}\) in each method, we use \(\widehat {S}^{(\ell )}\) constructed from \(\widehat {S}_{0}^{(\ell -1)}\) by

The orthonormalization of the columns of \(\widehat {S}_{0}^{(\nu )}\) in each iteration may improve the numerical stability.

Now, we analyze the error bound of the proposed methods with the subspace iteration technique as introduced in (13) and (14). We assume that \({\mathscr{B}}\) is invertible and all the eigenvalues are isolated. Then, we have

where \(\mathcal {P}_{i}\) is a spectral projector associated with λi s.t. \(\mathcal {P}_{i} \mathcal {P}_{j} = \delta _{ij} \mathcal {P}_{i}\) [28, VII Section 6]. We also assume that the numerical quadrature satisfies

Under the above assumptions, for \(k = 0, 1, \dots , M-1\), the quasi-matrix \(\widehat {S}_{k}^{(\ell )}\) can be written as

where

From the definitions of \(\mathcal {C}\) and \(\mathcal {F}\), these operators are commutative, \(\mathcal {C}\mathcal {F} = \mathcal {F}\mathcal {C}\). Therefore, we have

Here, \(f_{N}(\lambda_{i})\) is called the filter function; it is used for error analyses of complex moment-based matrix eigensolvers [16, 23, 26, 27]. Figure 1 shows the magnitude of the filter function \(\lvert f_{N}(\lambda _{i}) \rvert \) for the N-point trapezoidal rule with N = 16, 32, and 64 for the unit circle region Ω. Here, note that the oscillations at \(\lvert f_{N}(\lambda ) \rvert \approx 10^{-16}\) are due to roundoff errors. The filter function has \(\lvert f_{N}(\lambda ) \rvert \approx 1\) inside Ω, \(\lvert f_{N}(\lambda ) \rvert \approx 0\) far from Ω, and \(0 < \lvert f_{N}(\lambda ) \rvert < 1\) outside but near the region. Therefore, \(\mathcal {F}\) is a bounded linear operator.
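The qualitative behavior of the filter function described above can be reproduced with a few lines. This is a minimal sketch, assuming the common form \(f_N(\lambda) = \sum_{j=1}^{N} \omega_j / (z_j - \lambda)\) and the trapezoidal rule on a circle with \(z_j = \gamma + \rho e^{\mathrm{i}\theta_j}\), \(\omega_j = \rho e^{\mathrm{i}\theta_j}/N\), \(\theta_j = 2\pi(j - 1/2)/N\); the function name is hypothetical.

```python
import cmath

def filter_function(lam, N, gamma=0.0, rho=1.0):
    """f_N(lam) = sum_j w_j / (z_j - lam) for the N-point trapezoidal rule on a circle."""
    total = 0.0 + 0.0j
    for j in range(N):
        e = cmath.exp(2j * cmath.pi * (j + 0.5) / N)
        z, w = gamma + rho * e, rho * e / N
        total += w / (z - lam)
    return total

# Magnitude inside (0.5), outside but near (1.2), and far from (3.0) the unit circle.
for N in (16, 32, 64):
    print(N, [abs(filter_function(x, N)) for x in (0.5, 1.2, 3.0)])
```

Under these assumptions the sum has the closed form \(1/(1 + ((\lambda - \gamma)/\rho)^{N})\), which makes the three regimes explicit: close to 1 inside the circle, rapidly decaying far outside, and between 0 and 1 near the boundary.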

Applying [15, Theorem 5.1] to (15) under the above assumptions, we have the following theorem for an error bound of the proposed methods.

Theorem 4

Let \((\lambda_{i}, u_{i})\) be exact finite eigenpairs of the differential eigenvalue problem \(\mathcal {A} u_{i} = \lambda _{i} {\mathscr{B}} u_{i}, i = 1, 2, \dots , LM\). Assume that the eigenvalues are ordered by decreasing magnitude of the filter function, \(\lvert f_{N}(\lambda _{i}) \rvert \geq \lvert f_{N}(\lambda _{i+1}) \rvert \). We denote the orthogonal projector onto the subspace \({\mathscr{R}}(\widehat {S}^{(\ell )} )\) and the spectral projector onto the invariant subspace \(\text{span}\{u_{1}, u_{2}, \dots, u_{LM}\}\) by \(\mathcal {P}^{(\ell )}\) and \(\mathcal {P}_{LM}\), respectively. Assume that \(\mathcal {P}_{LM} \widehat {S}^{(0)}\) has full rank, where \(\widehat {S}_{0}\) is defined in (14). Then, for each eigenfunction \(u_{i}, i = 1, 2, \dots, LM\), there exists a unique function \(s_{i} \in \mathcal {K}_{M}^{\square }(\mathcal {C},V)\) such that \(\mathcal {P}_{LM} s_{i} = u_{i}\). Thus, the following inequality is satisfied:

where α is a constant and \(\beta _{i} = \| u_{i} - s_{i} \|_{{\mathscr{H}}}\).

Theorem 4 indicates that, using a sufficiently large number of columns LM in the transformation quasi-matrix \(\widehat {S}\) such that \(\lvert f_{N}(\lambda _{LM+1}) \rvert ^{\ell } \approx 0\), the proposed methods achieve high accuracy for the target eigenpairs even if N is small and some eigenvalues exist outside but near the region.

3.4 Summary and advantages over existing methods

We summarize the proposed methods and present their advantages over existing methods.

3.4.1 Summary of the proposed methods

ContSS-RR is a Rayleigh–Ritz-type projection method that explicitly solves (1); on the other hand, contSS-Hankel and contSS-CAA are a Petrov–Galerkin-type projection method and block Arnoldi method that implicitly solve (8), respectively. If the computational cost for explicit projection, i.e., \(U_{\text {S1}}^{\textsf H} \mathcal {A} U_{\text {S1}}\) and \(U_{\text {S1}}^{\textsf H} {\mathscr{B}} U_{\text {S1}}\) in contSS-RR, is large, contSS-Hankel and contSS-CAA can be more efficient than contSS-RR.

Orthogonalization of basis functions is required for contSS-RR (step 3 of Algorithm 2) and contSS-CAA (step 5 of Algorithm 4) for accuracy but is not performed in contSS-Hankel. This gives contSS-Hankel an advantage in computational cost over the other methods. It is also advantageous when contSS-Hankel is applied to DEPs over a domain for which it is difficult to construct accurate orthonormal bases, such as triangular and tetrahedral domains.

As in contFEAST, solving the LN ODEs (10) is the most time-consuming part of the proposed methods and is fully parallelizable; hence, the proposed methods can be efficiently parallelized.

3.4.2 Advantages over contFEAST

ContFEAST is a subspace iteration method based on the L dimensional subspace \({\mathscr{R}}(\widehat {S}_{0})\) for (1). Instead, the proposed methods are projection methods based on the LM dimensional subspace \({\mathscr{R}}(\widehat {S})\). From Theorem 4, we can also observe that the proposed methods using higher-order complex moments achieve higher accuracy than contFEAST, since \( \lvert f_{N}(\lambda _{LM+1}) \rvert < \lvert f_{N}(\lambda _{L+1})\rvert \). In other words, the proposed methods can use a smaller number of initial functions L than contFEAST to achieve almost the same accuracy. Since the number of ODEs to solve is LN in each iteration, the reduction of L drastically reduces the computational costs.

Therefore, the proposed methods exhibit smaller elapsed time than contFEAST, while maintaining almost the same high accuracy, as experimentally verified in Section 4.

3.4.3 Advantages over complex moment-based matrix eigensolvers

Methods using a “solve-then-discretize” approach, including the proposed methods and contFEAST, automatically preserve the normality or self-adjointness of the problems with respect to a relevant Hilbert space \({\mathscr{H}}\). In addition, the stability analysis in [15] shows that the sensitivity of the eigenvalues is preserved by Rayleigh–Ritz-type projection methods with an \({\mathscr{H}}\)-orthonormal basis for self-adjoint DEPs, but can be increased by methods using a “discretize-then-solve” approach. As with contFEAST, this result applies to contSS-RR.

Based on these properties, the proposed methods exhibit much higher accuracy than the complex moment-based matrix eigensolvers using a “discretize-then-solve” approach, as experimentally verified in Section 4.

4 Numerical experiments

In this section, we evaluate the performances of the proposed methods, contSS-RR (Algorithm 2), contSS-Hankel (Algorithm 3), and contSS-CAA (Algorithm 4), and compare them with that of contFEAST (Algorithm 1) for solving DEPs (1). Although the target problem of this paper is DEPs with ordinary differential operators, here we apply the proposed methods to DEPs with partial differential operators and evaluate their effectiveness.

The compared methods use the N-point trapezoidal rule to approximate the contour integrals. In Sections 4.1–4.4 (Experiments I–IV) for ordinary differential operators, the quadrature points for the N-point trapezoidal rule lie on an ellipse with center γ, major axis ρ, and aspect ratio α, i.e.,

The corresponding weights are set as

Here, for real problems, we used (11) to reduce the number of ODEs to be solved. In Section 4.5 (Experiment V) for partial differential operators, we used the real rational filtering technique (12) to avoid complex partial differential equations (PDEs). For the proposed methods, we set \(\delta = 10^{-14}\) for the threshold of the low-rank approximation. In all the methods, we set \(V: \mathbb {C}^{L} \rightarrow {\mathscr{H}}\) to a random quasi-matrix, whose columns are randomly generated functions represented by using 32 Chebyshev points on the same domain as the target problem.
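The quadrature setup can be sketched as follows. The formulas used here, \(z_j = \gamma + \rho(\cos\theta_j + \mathrm{i}\alpha\sin\theta_j)\) and \(\omega_j = \rho(\alpha\cos\theta_j + \mathrm{i}\sin\theta_j)/N\) with \(\theta_j = 2\pi(j - 1/2)/N\), are the form commonly used for elliptical contours and are an assumption; the function name `ellipse_rule` is hypothetical. The second part illustrates, for a real shift, why points placed symmetrically about the real axis allow half of the solves to be skipped.

```python
import math

def ellipse_rule(gamma, rho, alpha, N):
    """Trapezoidal points and weights on an ellipse (assumed standard form)."""
    pts, wts = [], []
    for j in range(N):
        theta = 2.0 * math.pi * (j + 0.5) / N
        pts.append(gamma + rho * (math.cos(theta) + 1j * alpha * math.sin(theta)))
        # The weight absorbs the factor 1/(2*pi*i) of the contour integral.
        wts.append(rho * (alpha * math.cos(theta) + 1j * math.sin(theta)) / N)
    return pts, wts

pts, wts = ellipse_rule(gamma=10.0, rho=10.0, alpha=1.0, N=16)

# For a real point lam, the terms at conjugate points are complex conjugates,
# so the full sum equals twice the real part of the sum over the upper half.
lam = 3.0
full = sum(w / (z - lam) for z, w in zip(pts, wts))
half = 2.0 * sum(w / (z - lam) for z, w in zip(pts[:8], wts[:8])).real
print(full, half)
```

With this ordering the first N/2 points lie in the upper half-plane; the pairing by index may differ from the one used in (11), but the halving argument is the same.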

Methods were implemented using MATLAB and Chebfun [8]. ODEs and PDEs were solved by using the “\” command of Chebfun. All the numerical experiments were performed on a serial computer with the Microsoft (R) Windows (R) 10 Pro Operating System, an 11th Gen Intel(R) Core(TM) i7-1185G7 @ 3.00GHz CPU, and 32GB RAM.

4.1 Experiment I: proof of concept

For a proof of concept of the proposed methods, i.e., to show an advantage of the proposed method in the “solve-then-discretize” paradigm over a “discretize-then-solve” approach in terms of accuracy, we tested on the one-dimensional Laplace eigenvalue problem

Note that the true eigenpairs are \((\lambda _{i}, u_{i}) = (i^{2}, \sin (ix)), i \in \mathbb {Z}_{+}\). We computed four eigenpairs such that \(\lambda_{i} \in [0, 20]\).

First, we apply standard “discretize-then-solve” approaches for solving (16) in which the coefficient operator is discretized by a three-point central difference. The obtained matrix eigenvalue problem of size n is

and its eigenvalues can be written as

Note that we have \(\lim _{n \rightarrow \infty } \lambda _{i}^{(n)} = i^{2}\). We computed the eigenvalues using (18) with increasing n. We also applied SS-RR [21] with (L, M, N) = (3,2,16) to the discretized problem (17) for each n.
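The convergence of the discretized eigenvalues can be checked directly. The sketch below assumes that (16) is \(-u'' = \lambda u\) on \([0, \pi]\) with homogeneous Dirichlet conditions (consistent with the stated eigenpairs), so that the three-point central difference with mesh width \(h = \pi/(n+1)\) gives the well-known eigenvalues \(\lambda_i^{(n)} = 2(1 - \cos(ih))/h^2\); the function name is hypothetical.

```python
import math

def fd_eigenvalue(i, n):
    """i-th eigenvalue of the n x n three-point central-difference matrix on [0, pi]."""
    h = math.pi / (n + 1)
    return 2.0 * (1.0 - math.cos(i * h)) / h**2

# Absolute error of the smallest eigenvalue (exact value 1) for increasing n.
errors = {n: abs(fd_eigenvalue(1, n) - 1.0) for n in (10, 100, 1000, 10**4, 10**5, 10**6)}
for n, err in errors.items():
    print(n, err)
```

The discretization error decays like \(h^2\), while the subtraction \(1 - \cos(ih)\) loses digits as h shrinks and the factor \(1/h^2\) amplifies that loss, so in floating-point arithmetic the error cannot be reduced indefinitely by refining the grid.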

The absolute errors of approximate eigenvalues computed by the “discretize-then-solve” approaches are shown in Fig. 2. The errors decrease with increasing n; however, they begin to increase at \(n \approx 10^{5}\) when using (18) due to rounding error and at \(n \approx 10^{6}\) for SS-RR due to quadrature and rounding errors. The error reaches a minimum of approximately \(10^{-8}\) and \(10^{-10}\) when using (18) and SS-RR, respectively.

Absolute error of eigenvalue of “discretize-then-solve” approaches for the Laplace eigenvalue problem (16)

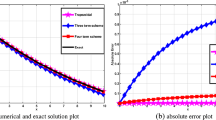

Next, we apply contSS-RR with (L, M, N) = (3,2,16) to (16). Here, we set (γ, ρ, α) = (10,10,1) for the contour path. The obtained eigenvalues and eigenfunctions are shown in Table 1 and Fig. 3, respectively. In contrast to the “discretize-then-solve” approach, the proposed method achieves much higher accuracy (absolute errors are approximately \(10^{-14}\)), which shows the effectiveness of the “solve-then-discretize” approach over the “discretize-then-solve” approach. This is one of the greatest advantages of the proposed method over complex moment-based matrix eigensolvers.

Obtained eigenfunction of the contSS-RR for the Laplace eigenvalue problem (16)

These results demonstrate that the proposed methods work well for solving DEPs without discretization of the coefficient operator.

4.2 Experiment II: parameter dependence

We evaluate the parameter dependence of contSS-RR with the subspace iteration technique and contFEAST on the convergence. We computed the same four eigenpairs λi ∈ [0,20] of (16) using the same (γ, ρ, α) = (10,10,1) for the contour path as used in Section 4.1. We evaluate the convergence of contSS-RR with (L, N) = (4,4) varying M = 1,2,3,4 and with (M, N) = (1,4) varying L = 4,8,12,16, and contFEAST with N = 4 varying L = 4,8,12,16.

We show the residual history of each method in Fig. 4(a)–(c) regarding residual norm \(\| r_{i} \|_{{\mathscr{H}}} = \| \mathcal {A} \widehat {u}_{i} - \widehat {\lambda }_{i} {\mathscr{B}} \widehat {u}_{i} \|_{{\mathscr{H}}}\). The convergences of contFEAST and contSS-RR with M = 1 are almost identical and improve with an increase in L (Fig. 4(a) and (b)). We also observed that, in contSS-RR, increasing M also improves the convergence to the same degree as increasing L (Fig. 4(c)).

Convergence for the Laplace eigenvalue problem (16)

We also show in Fig. 4(d) the theoretical convergence rate obtained from Theorem 4, i.e., \(\max \limits _{\lambda _{i} \in {\Omega }} \lvert f_{N}(\lambda _{LM+1}) / f_{N}(\lambda _{i}) \rvert \), and the evaluated convergence rate of each method, i.e., the ratio of the residual norm between the first and second iterations. Although, in contSS-RR, increasing L indicates a slightly smaller convergence rate than increasing M, both evaluated convergence rates are almost the same as the theoretical convergence rate obtained from Theorem 4.
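The theoretical rate quoted above can be evaluated for the Laplace problem, whose eigenvalues are \(i^2\). This is a minimal sketch, assuming \(f_N(\lambda) = \sum_j \omega_j/(z_j - \lambda)\) with the trapezoidal rule on the circle (γ, ρ) = (10, 10), points \(z_j = \gamma + \rho e^{\mathrm{i}\theta_j}\), \(\theta_j = 2\pi(j - 1/2)/N\), and weights \(\omega_j = \rho e^{\mathrm{i}\theta_j}/N\); the function names and the cut-off `n_eigs` are hypothetical.

```python
import cmath

def f_N(lam, N, gamma, rho):
    """Filter function of the N-point trapezoidal rule on a circle."""
    total = 0.0 + 0.0j
    for j in range(N):
        e = cmath.exp(2j * cmath.pi * (j + 0.5) / N)
        total += (rho * e / N) / (gamma + rho * e - lam)
    return total

def rate(LM, N=4, gamma=10.0, rho=10.0, n_eigs=200):
    """max over targets of |f_N(lambda_{LM+1}) / f_N(lambda_i)|, eigenvalues sorted by |f_N|."""
    lams = [i * i for i in range(1, n_eigs + 1)]          # eigenvalues of the Laplace problem
    mags = [abs(f_N(l, N, gamma, rho)) for l in lams]
    inside = [m for l, m in zip(lams, mags) if abs(l - gamma) < rho]
    ordered = sorted(mags, reverse=True)                  # decreasing |f_N|
    return ordered[LM] / min(inside)                      # ordered[LM] is the (LM+1)-th value

rates = {LM: rate(LM) for LM in (4, 8, 12, 16)}
for LM, r in rates.items():
    print(LM, r)
```

The rate depends on L and M only through the product LM, which is consistent with the observation that increasing M improves convergence to about the same degree as increasing L.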

These results demonstrate that the proposed method with M ≥ 2 achieves fast convergence even with a small value of L. This contributes to the reduction in elapsed time, which will be shown in Section 4.3.

4.3 Experiment III: performance for real-world problems

Next, we evaluate the performances of the proposed methods without iteration (ℓ = 1) and compare them with those of contFEAST and the “eigs” function [31] in Chebfun for the following six eigenvalue problems: two for computing real outermost eigenvalues, two for computing real interior eigenvalues, and two for computing complex eigenvalues.

- Real Outermost: Mathieu eigenvalue problem [32]:

$$ \left( - \frac{ \mathrm{d}^{2} }{ \mathrm{d}x^{2} } + 2 q \cos(2x) \right) u = \lambda u, \quad u(0) = u(\pi/2) = 0 $$

with q = 2. It has only real eigenvalues. We computed 15 eigenpairs corresponding to outermost eigenvalues λi ∈ [0,1000].

- Real Outermost: Schrödinger eigenvalue problem [33, Chapter 6]:

$$ \left( - \frac{\hbar}{2m} \frac{ \mathrm{d}^{2} }{ \mathrm{d}x^{2} } + V(x) \right) u = \lambda u, \quad u(-1) = u(1) = 0 $$

with a double-well potential V(x) = 1.5, x ∈ [−0.2, 0.3], where we set \(\hbar /2m = 0.01\). It has only real eigenvalues. We computed 19 eigenpairs corresponding to outermost eigenvalues λi ∈ [0,10].

- Real Interior: Bessel eigenvalue problem [34]:

$$ \left( x^{2} \frac{ \mathrm{d}^{2} }{ \mathrm{d}x^{2} } + x \frac{ \mathrm{d} }{ \mathrm{d}x} - \alpha^{2} \right) u = - \lambda x^{2} u, \quad u(0) = u(1) = 0 $$

with α = 1. It has only real eigenvalues. We computed 11 eigenpairs corresponding to interior eigenvalues λi ∈ [500,3000].

- Real Interior: Sturm–Liouville-type eigenvalue problem:

$$ \left( - \frac{ \mathrm{d}^{2} }{ \mathrm{d}x^{2} } + x^{2} \right) u = \lambda \cosh(x) u, \quad u(-1) = u(1) = 0, $$

which is used in [15]. It has only real eigenvalues. We computed 12 eigenpairs corresponding to interior eigenvalues λi ∈ [200,1000].

- Complex: Orr–Sommerfeld eigenvalue problem [35]:

$$ \begin{array}{@{}rcl@{}} & \left\{ \frac{1}{ Re } \left( \frac{ \mathrm{d}^{2} }{ \mathrm{d}x^{2} } - \alpha^{2}\right)^{2} - \mathrm{i} \alpha \left[ U \left( \frac{ \mathrm{d}^{2}}{ \mathrm{d}x^{2}} - \alpha^{2} \right) + U^{\prime\prime} \right] \right\} u = \lambda \left( \frac{ \mathrm{d}^{2}}{ \mathrm{d}x^{2}} - \alpha^{2} \right) u, \\ & u(-1) = u(1) = 0 \end{array} $$

with α = 1 and \(U = 1 - x^{2}\). We solved two cases with Re = 1000 and Re = 2000. They have complex eigenvalues. We computed 18 eigenpairs for Re = 1000 and 28 eigenpairs for Re = 2000, shown in Fig. 5.

Tables 2 and 3 give the contour path and values of parameters for each problem. The “eigs” function in Chebfun with parameters k and σ computes k closest eigenvalues to σ and the corresponding eigenfunctions. We set the parameters k and σ to the number of input functions L of contFEAST in Table 3 and the center of contour path γ in Table 2, respectively.

Figures 6 and 7 show the residual norms \(\| r_{i} \|_{{\mathscr{H}}} = \| \mathcal {A} \widehat {u}_{i} - \widehat {\lambda }_{i} {\mathscr{B}} \widehat {u}_{i} \|_{{\mathscr{H}}}\) for each problem and Fig. 8 shows the elapsed times for each problem. In Fig. 8, “Solve ODEs,” “Orthonormalization,” “Matrix Eig,” and “MISC” denote the elapsed times for solving ODEs (10), orthonormalization of the column vectors of \(\widehat {S}\), construction and solution of the matrix eigenvalue problem, and other parts including computation of the contour integral, respectively.

First, we discuss the accuracy of the presented methods. For the real outermost and interior problems (Fig. 6), the residual norms of contFEAST decrease with more iterations reaching \(\| r_{i} \|_{{\mathscr{H}}} \approx 10^{-10}\) at ℓ = 3 for the target eigenpairs. ContSS-RR and contSS-CAA demonstrate almost the same high accuracy (\(\| r_{i} \|_{{\mathscr{H}}} \approx 10^{-10}\)) as contFEAST with ℓ = 3; on the other hand, contSS-Hankel shows lower accuracy than the others except for the Bessel eigenvalue problem. The residual norms for the eigenvalues outside the target region tend to be large depending on the distance from the target region. The experimental results exhibit a similar trend for both outermost and interior problems.

For the complex problems (Fig. 7), the residual norms of contFEAST stagnate at \(\| r_{i} \|_{{\mathscr{H}}} \approx 10^{-7}\) for Re = 1000 and \(\| r_{i} \|_{{\mathscr{H}}} \approx 10^{-6}\) for Re = 2000 at ℓ = 2. ContSS-RR achieves almost the same accuracy as contFEAST; on the other hand, contSS-Hankel and contSS-CAA are less accurate than contFEAST and contSS-RR.

Next, we discuss the elapsed times of the methods (Fig. 8). For the complex moment-based methods, most of the elapsed time is spent on solving the ODEs. The total elapsed time of contFEAST increases in proportion to the number of iterations ℓ. Although contSS-RR and contSS-CAA spend a larger portion of the elapsed time on orthonormalization of the basis functions of \({\mathscr{R}}(\widehat {S})\) because they use a larger dimensional subspace (Section 3.4.2), the proposed methods require much less total elapsed time than contFEAST. The proposed methods are over eight times faster than contFEAST with ℓ = 3 for real problems and over four times faster than contFEAST with ℓ = 2 for complex problems, while maintaining almost the same high accuracy.

We also compare the performance of the proposed methods with that of the “eigs” function in Chebfun. As shown in Fig. 8, the “eigs” function is much faster than the proposed methods and contFEAST. On the other hand, Figs. 6 and 7 show that the “eigs” function exhibits significant losses of accuracy in several cases (\(\| r_{i} \|_{{\mathscr{H}}} \approx 10^{-5}\) for the Bessel eigenvalue problem, \(\| r_{i} \|_{{\mathscr{H}}} \approx 10^{-4}\) for the Orr–Sommerfeld eigenvalue problem with Re = 1000, and \(\| r_{i} \|_{{\mathscr{H}}} \approx 10^{-2}\) for the Orr–Sommerfeld eigenvalue problem with Re = 2000) and is less robust in accuracy than the complex moment-based methods.

4.4 Experiment IV: parallel performance

As demonstrated in Section 4.3, the most time-consuming part of the complex moment-based methods is solving the LN ODEs (10). Since these LN ODEs can be solved independently, the methods are expected to have high parallel performance.

Here, we estimated the strong scalability of the methods using the following performance model. We assume that the elapsed time \(T_{\text {ODE}}^{(j)}\) for solving ODEs (10) depends on the quadrature point \(z_{j}\) but is independent of the right-hand side \({\mathscr{B}} v_{i}\). We also assume that the elapsed time \(T_{\text {QP}}\) for the other computations at each quadrature point is independent of the quadrature point \(z_{j}\). In addition, we let \(T_{\text {other}}\) be the elapsed time for the remaining parts of each method. The LN ODEs are solved in parallel by P processes, computations at quadrature points are parallelized over \(\min (P,N)\) processes, and the other parts are computed in serial.

Then, using the measured elapsed times \(T_{\text {ODE}}^{(j)}\), \(T_{\text {QP}}\), and \(T_{\text {other}}\), we estimate the total elapsed time \(T_{\text {total}}(P)\) of each method in P processes as

where \({\mathscr{J}}_{p}\) is the index set of quadrature points equally assigned to each process p and ⌈⋅⌉ denotes the ceiling function.
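A model of this kind is straightforward to evaluate from measured timings. The following is a minimal sketch of one possible reading of the assumptions stated above, not the exact formula used for Fig. 9. It assumes that, for P ≤ N, the quadrature points are dealt out to the processes in round-robin fashion and each process solves all L ODEs at its points, and that, for P > N, each quadrature point receives ⌊P/N⌋ processes that split the L right-hand sides. The function name and the timing values in the usage lines are hypothetical.

```python
import math


def estimate_total_time(t_ode, t_qp, t_other, L, P):
    """Estimated elapsed time with P processes (one assumed reading of the model).

    t_ode[j] : measured time of one ODE solve at quadrature point j
    t_qp     : time for the other computations at one quadrature point
    t_other  : time of the serial remainder
    """
    N = len(t_ode)
    if P <= N:
        # Assumption: points are assigned round-robin; process p owns the
        # index set J_p = {p, p + P, p + 2P, ...} and solves L ODEs per point.
        index_sets = [range(p, N, P) for p in range(P)]
        t_solve = max(sum(L * t_ode[j] for j in J_p) for J_p in index_sets)
    else:
        # Assumption: each point gets floor(P / N) processes that share the
        # L independent right-hand sides; the slowest point dominates.
        procs_per_point = P // N
        t_solve = math.ceil(L / procs_per_point) * max(t_ode)
    t_quad = math.ceil(N / min(P, N)) * t_qp
    return t_solve + t_quad + t_other


if __name__ == "__main__":
    timings = [1.0, 2.0, 3.0, 4.0]  # hypothetical per-point ODE solve times
    t1 = estimate_total_time(timings, 0.5, 1.0, L=2, P=1)
    for P in (1, 2, 4, 8):
        tP = estimate_total_time(timings, 0.5, 1.0, L=2, P=P)
        print(f"P={P}: time = {tP}, speedup = {t1 / tP:.2f}")
```

In such a model the serial term caps the attainable speedup, so a method with fewer ODE solves reaches that plateau at a smaller P.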

We estimated the strong scalability of the methods for solving the Orr–Sommerfeld eigenvalue problem with Re = 2000. We used the same parameter values as in Section 4.3. Figure 9 shows the estimated time and strong scalability of the methods. This result demonstrates that all the methods exhibit high parallel performance. The proposed methods, especially contSS-Hankel, are much faster than contFEAST even with a large number of processes P, although contFEAST shows slightly better scalability than the proposed methods.

4.5 Experiment V: performance for partial differential operators

The complex moment-based methods can be extended to partial differential operators in a straightforward manner: L PDEs are solved at each quadrature point.

Here, we evaluate the performances of the proposed methods without iteration (ℓ = 1) and compare them with that of contFEAST for two real self-adjoint problems:

- 2D Laplace eigenvalue problem:

$$ - \left( \frac{ \partial^{2} }{ \partial x^{2} } + \frac{ \partial^{2} }{ \partial y^{2} } \right) u = \lambda u $$

in a domain [0,π] × [0,π] with zero Dirichlet boundary condition. The true eigenvalues are \({i_{x}^{2}} + {i_{y}^{2}}\) with \(i_{x}, i_{y} \in \mathbb {Z}_{+}\). We computed 4 eigenpairs, counting multiplicity, corresponding to λi ∈ [0,9]. Note that the target eigenvalues are 2, 5, and 8, where the eigenvalue 5 has multiplicity 2.

- 2D Schrödinger eigenvalue problem:

$$ \left[ - \frac{\hbar}{2m} \left( \frac{ \partial^{2} }{ \partial x^{2} } + \frac{ \partial^{2} }{ \partial y^{2} } \right) + V(x,y) \right] u = \lambda u $$

in a domain [−1,1] × [−1,1] with a potential \(V(x,y) = 0.1(x + 0.4)^{2} + 0.1(y - 0.8)^{2}\) and zero Dirichlet boundary condition, where we set \(\hbar /2m = 0.01\). We computed 5 eigenpairs corresponding to λi ∈ [0.15,0.4].
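For the 2D Laplace problem in the list above, the stated targets and multiplicities can be checked by enumerating the true eigenvalues \({i_{x}^{2}} + {i_{y}^{2}}\) over positive integers. The following short sketch does this; the function name is hypothetical.

```python
import math
from collections import Counter


def laplace_2d_eigenvalues(lower, upper):
    """Eigenvalues ix^2 + iy^2 (ix, iy >= 1) in [lower, upper] with multiplicities."""
    k_max = math.isqrt(upper)  # no index can exceed sqrt(upper)
    counts = Counter()
    for ix in range(1, k_max + 1):
        for iy in range(1, k_max + 1):
            lam = ix * ix + iy * iy
            if lower <= lam <= upper:
                counts[lam] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    print(laplace_2d_eigenvalues(0, 9))  # {2: 1, 5: 2, 8: 1}
```

The multiplicities sum to 4, matching the number of eigenpairs computed for this problem.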

For both problems, we set (L, M, N) = (2,4,24) for the proposed methods and (L, N) = (6,24) for contFEAST.
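As a rough count under these parameter values, taking L PDE solves per quadrature point as described at the start of this section:

$$ \underbrace{L N}_{\text{proposed methods}} = 2 \times 24 = 48, \qquad \underbrace{L N}_{\text{contFEAST}} = 6 \times 24 = 144 \quad \text{(per iteration)}. $$

The proposed methods thus solve one third as many PDEs as a single contFEAST iteration, while working with a subspace of dimension \(LM = 2 \times 4 = 8\) compared with \(L = 6\) for contFEAST.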

Table 4 gives the obtained eigenvalues of contSS-RR for the 2D Laplace eigenvalue problem. In addition, residual norms \(\| r_{i} \|_{{\mathscr{H}}} = \| \mathcal {A} \widehat {u}_{i} - \widehat {\lambda }_{i} {\mathscr{B}} \widehat {u}_{i} \|_{{\mathscr{H}}}\) for each problem are presented in Fig. 10 and the elapsed times for each problem are presented in Fig. 11.

We observed from Table 4 and Fig. 10 that, as in the case of ordinary differential operators, the proposed methods work well for solving DEPs with partial differential operators, even in a case with a multiple eigenvalue (the 2D Laplace eigenvalue problem). In addition, the proposed methods exhibit much lower elapsed times than contFEAST (see Fig. 11), although the elapsed times for orthonormalization of the column vectors of \(\widehat {S}\) and construction of the matrix eigenvalue problem are relatively larger than in the cases of ordinary differential operators in Section 4.3.

4.6 Summary of numerical experiments

From the numerical experiments, we observed the following:

- Like contFEAST, the proposed methods in the “solve-then-discretize” paradigm exhibit much higher accuracy than the “discretize-then-solve” approach for solving DEPs (1).

- Using higher-order complex moments improves the accuracy to the same degree as increasing the number of input functions L.

- Thanks to the higher-order complex moments, the proposed methods are over eight times faster for real problems and over four times faster for complex problems than contFEAST, while maintaining almost the same high accuracy.

5 Conclusion

In this paper, based on the “solve-then-discretize” paradigm, we proposed operation analogues of the Sakurai–Sugiura approach, namely contSS-Hankel, contSS-RR, and contSS-CAA, for DEPs (1), without discretization of the operators \(\mathcal {A}\) and \({\mathscr{B}}\). Theoretical and numerical results indicate that the proposed methods significantly reduce the number of ODEs to solve and the elapsed time by using higher-order complex moments, while maintaining almost the same high accuracy as contFEAST.

Like contFEAST, the proposed methods based on the “solve-then-discretize” paradigm exhibit much higher accuracy than methods based on the traditional “discretize-then-solve” paradigm. This study successfully reduced the computational costs of contFEAST and is expected to promote the “solve-then-discretize” paradigm for solving differential eigenvalue problems and contribute to faster and more accurate solutions in real-world applications.

This paper did not intend to investigate practical parameter settings, a rounding error analysis, or a parallel performance evaluation. In future work, we will further develop the proposed methods and evaluate their parallel performance, specifically for higher-dimensional problems. Furthermore, based on the concept in [36], we will rigorously evaluate the truncation error of the quadrature and the numerical errors in the proposed methods and investigate a verified computation method based on the proposed methods for differential eigenvalue problems.

References

Polizzi, E.: A density matrix-based algorithm for solving eigenvalue problems. Phys. Rev. B 79, 115112 (2009). https://doi.org/10.1103/physrevb.79.115112

Kestyn, J., Kalantzis, V., Polizzi, E., Saad, Y.: PFEAST: A high performance sparse eigenvalue solver using distributed-memory linear solvers. In: SC’16 Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, pp 178–189 (2016). https://doi.org/10.1109/SC.2016.15

Iwase, S., Futamura, Y., Imakura, A., Sakurai, T., Ono, T.: Efficient and scalable calculation of complex band structure using Sakurai–Sugiura method. In: SC’17 Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, pp 1–12 (2017). https://doi.org/10.1145/3126908.3126942

Huang, T.-M., Liao, W., Lin, W.-W., Wang, W.: An efficient contour integral based eigensolver for 3D dispersive photonic crystal. J. Comput. Appl. Math. 395, 113581 (2021). https://doi.org/10.1016/j.cam.2021.113581

Kurz, S., Schöps, S., Unger, G., Wolf, F.: Solving Maxwell’s eigenvalue problem via isogeometric boundary elements and a contour integral method. Mathematical Methods in the Applied Sciences 44(13), 10790–10803 (2021). https://doi.org/10.1002/mma.7447

Chatelin, F.: Eigenvalues of Matrices: Revised Edition. SIAM, Philadelphia (2012)

Sakurai, T., Sugiura, H.: A projection method for generalized eigenvalue problems using numerical integration. J. Comput. Appl. Math. 159(1), 119–128 (2003). https://doi.org/10.1016/S0377-0427(03)00565-X

Driscoll, T.A., Hale, N., Trefethen, L.N.: Chebfun Guide. Pafnuty Publications, Oxford (2014)

Battles, Z., Trefethen, L.N.: An extension of MATLAB to continuous functions and operators. SIAM J. Sci. Comput. 25(5), 1743–1770 (2004). https://doi.org/10.1137/s1064827503430126

Trefethen, L.N.: Householder triangularization of a quasimatrix. IMA J. Numer. Anal. 30(4), 887–897 (2010). https://doi.org/10.1093/imanum/drp018

Olver, S., Townsend, A.: A practical framework for infinite-dimensional linear algebra. In: 2014 First Workshop for High Performance Technical Computing in Dynamic Languages, pp 57–62 (2014). https://doi.org/10.1109/HPTCDL.2014.10

Townsend, A., Trefethen, L.N.: Continuous analogues of matrix factorizations. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences 471(2173), 20140585 (2015). https://doi.org/10.1098/rspa.2014.0585

Gilles, M.A., Townsend, A.: Continuous analogues of Krylov subspace methods for differential operators. SIAM J. Numer. Anal. 57(2), 899–924 (2019). https://doi.org/10.1137/18M1177810

Mohr, S., Nakatsukasa, Y., Urzúa-Torres, C.: Full operator preconditioning and the accuracy of solving linear systems. arXiv:2105.07963 (2021)

Horning, A., Townsend, A.: FEAST for differential eigenvalue problems. SIAM J. Numer. Anal. 58(2), 1239–1262 (2020). https://doi.org/10.1137/19M1238708

Imakura, A., Du, L., Sakurai, T.: Error bounds of Rayleigh–Ritz type contour integral-based eigensolver for solving generalized eigenvalue problems. Numer. Algorithms 71(1), 103–120 (2016). https://doi.org/10.1007/s11075-015-9987-4

Tzounas, G., Dassios, I., Liu, M., Milano, F.: Comparison of numerical methods and open-source libraries for eigenvalue analysis of large-scale power systems. Appl. Sci. 10(21) (2020). https://doi.org/10.3390/app10217592

Imakura, A., Matsuda, M., Ye, X., Sakurai, T.: Complex moment-based supervised eigenmap for dimensionality reduction. In: Proceedings of the AAAI Conference on Artificial Intelligence, pp 3910–3918 (2019). https://doi.org/10.1609/aaai.v33i01.33013910

Sakurai, T., Tadano, H.: CIRR: A Rayleigh–Ritz type method with contour integral for generalized eigenvalue problems. Hokkaido Math. J. 36, 745–757 (2007). https://doi.org/10.14492/hokmj/1272848031

Ikegami, T., Sakurai, T., Nagashima, U.: A filter diagonalization for generalized eigenvalue problems based on the Sakurai–Sugiura projection method. J. Comput. Appl. Math. 233(8), 1927–1936 (2010). https://doi.org/10.1016/j.cam.2009.09.029

Ikegami, T., Sakurai, T.: Contour integral eigensolver for non-Hermitian systems: a Rayleigh–Ritz-type approach. Taiwan. J. Math., 825–837 (2010). https://doi.org/10.11650/twjm/1500405869

Imakura, A., Du, L., Sakurai, T.: A block Arnoldi-type contour integral spectral projection method for solving generalized eigenvalue problems. Appl. Math. Lett. 32, 22–27 (2014). https://doi.org/10.1016/j.aml.2014.02.007

Imakura, A., Du, L., Sakurai, T.: Relationships among contour integral-based methods for solving generalized eigenvalue problems. Jpn. J. Ind. Appl. Math. 33(3), 721–750 (2016). https://doi.org/10.1007/s13160-016-0224-x

Imakura, A., Sakurai, T.: Block Krylov-type complex moment-based eigensolvers for solving generalized eigenvalue problems. Numer. Algorithms 75(2), 413–433 (2017). https://doi.org/10.1007/s11075-016-0241-5

Imakura, A., Futamura, Y., Sakurai, T.: Structure-preserving technique in the block SS–Hankel method for solving Hermitian generalized eigenvalue problems. In: International Conference on Parallel Processing and Applied Mathematics, pp 600–611. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-78024-5_52

Tang, P.T.P., Polizzi, E.: FEAST as a subspace iteration eigensolver accelerated by approximate spectral projection. SIAM J. Matrix Anal. Appl. 35(2), 354–390 (2014). https://doi.org/10.1137/13090866X

Güttel, S., Polizzi, E., Tang, P.T.P., Viaud, G.: Zolotarev quadrature rules and load balancing for the FEAST eigensolver. SIAM J. Sci. Comput. 37(4), 2100–2122 (2015). https://doi.org/10.1137/140980090

Kato, T.: Perturbation Theory for Linear Operators, 2nd edn., vol. 132. Springer, Berlin, Heidelberg (1995). https://doi.org/10.1007/978-3-642-66282-9

Austin, A.P., Trefethen, L.N.: Computing eigenvalues of real symmetric matrices with rational filters in real arithmetic. SIAM J. Sci. Comput. 37(3), 1365–1387 (2015). https://doi.org/10.1137/140984129

Hoemmen, M.: Communication-avoiding Krylov subspace methods. Technical Report UCB/EECS-2010-37, University of California, Berkeley (2010)

Driscoll, T.A., Bornemann, F., Trefethen, L.N.: The chebop system for automatic solution of differential equations. BIT Numer. Math. 48(4), 701–723 (2008). https://doi.org/10.1007/s10543-008-0198-4

Mathieu, E.: Mémoire sur le mouvement vibratoire d’une membrane de forme elliptique. Journal de Mathématiques Pures et Appliquées 13, 137–203 (1868)

Trefethen, L.N., Birkisson, A., Driscoll, T.A.: Exploring ODEs. Society for Industrial and Applied Mathematics, Philadelphia, PA (2017). https://doi.org/10.1137/1.9781611975161

Watson, G.N.: A treatise on the theory of Bessel functions. Cambridge mathematical library. Cambridge University Press, New York, NY (1995)

Schmid, P.J., Henningson, D.S.: Stability and transition in shear flows. Springer, New York, NY (2001). https://doi.org/10.1007/978-1-4613-0185-1

Imakura, A., Morikuni, K., Takayasu, A.: Verified partial eigenvalue computations using contour integrals for Hermitian generalized eigenproblems. J. Comput. Appl. Math. 369, 112543 (2020). https://doi.org/10.1016/j.cam.2019.112543

Acknowledgements

The authors would like to thank the reviewers for their careful reading and constructive comments.

Funding

This work was supported in part by the Japan Society for the Promotion of Science (JSPS), Grants-in-Aid for Scientific Research (Nos. JP18K13453, JP19KK0255, JP20K14356, and JP21H03451).

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Dedicated to Claude Brezinski on the occasion of his eightieth birthday.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Akira Imakura, Keiichi Morikuni, and Akitoshi Takayasu contributed equally to this work.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Imakura, A., Morikuni, K. & Takayasu, A. Complex moment-based methods for differential eigenvalue problems. Numer Algor 92, 693–721 (2023). https://doi.org/10.1007/s11075-022-01456-y

DOI: https://doi.org/10.1007/s11075-022-01456-y