Abstract

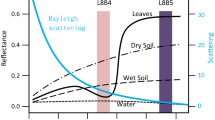

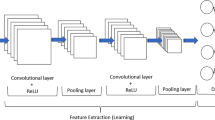

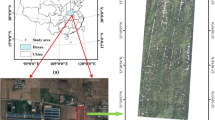

This paper introduces a new method for fusing low resolution multispectral and high resolution RGB images in order to detect Gramineae weeds in rice fields with plants at 50 days after emergence (DAE). The images were taken from a fixed-wing unmanned aerial vehicle (UAV) at 60 and 70 m altitude. The proposed method combines the texture information given by a high resolution red–green–blue (RGB) image with the reflectance information given by a low resolution multispectral (MS) image to obtain a fused RGB-MS image with better weed discrimination features. After analyzing the normalized difference vegetation index (NDVI) and the normalized green red difference index (NGRDI) for weed detection, it was found that NGRDI presents better features. The fusion method consists of decomposing the RGB image using the intensity, hue and saturation (IHS) transformation; a second order Haar wavelet transformation is then applied to the intensity layer (I) and the NGRDI image. From this transformation, the low–low (LL) coefficients of the NGRDI image are replaced by the LL coefficients of the I layer. Finally, the fused image is obtained by transforming the new wavelet coefficients to RGB space. To test the method, a one hectare experimental plot of rice plants at 50 DAE with Gramineae weeds was selected. To compare the performance of the method, two indices were used: the M/MGT index, which is the percentage of detected weed area, and the MP index, which indicates the precision of weed detection. These indices were evaluated in four validation zones using three neural network (NN) detection systems based on three types of images, namely RGB, RGB + NGRDI, and fused RGB-NGRDI. The best weed detection performance was obtained by the NN with the fused image, with an M/MGT index between 80 and 108% and an MP index between 70 and 85%.
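The fusion steps described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation and rests on several assumptions that the abstract does not confirm: NGRDI is taken in its usual form, (G − R)/(G + R); the index image has already been resampled to the RGB grid; "second order" is read as a two-level Haar decomposition; intensity is the mean of the three channels; and the return to RGB uses a simple additive intensity substitution in place of a full inverse IHS transform. The function names `ngrdi`, `haar2d`, `ihaar2d` and `fuse` are hypothetical.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def ngrdi(green, red, eps=1e-9):
    """Normalized green red difference index, (G - R) / (G + R)."""
    return (green - red) / (green + red + eps)

def haar2d(x):
    """One level of an orthonormal 2-D Haar transform: LL, LH, HL, HH."""
    a = (x[0::2, :] + x[1::2, :]) / SQRT2   # sums of adjacent rows
    d = (x[0::2, :] - x[1::2, :]) / SQRT2   # differences of adjacent rows
    ll = (a[:, 0::2] + a[:, 1::2]) / SQRT2
    lh = (a[:, 0::2] - a[:, 1::2]) / SQRT2
    hl = (d[:, 0::2] + d[:, 1::2]) / SQRT2
    hh = (d[:, 0::2] - d[:, 1::2]) / SQRT2
    return ll, lh, hl, hh

def ihaar2d(ll, lh, hl, hh):
    """Inverse of haar2d."""
    rows, cols = ll.shape
    a = np.empty((rows, 2 * cols))
    d = np.empty((rows, 2 * cols))
    a[:, 0::2], a[:, 1::2] = (ll + lh) / SQRT2, (ll - lh) / SQRT2
    d[:, 0::2], d[:, 1::2] = (hl + hh) / SQRT2, (hl - hh) / SQRT2
    x = np.empty((2 * rows, 2 * cols))
    x[0::2, :], x[1::2, :] = (a + d) / SQRT2, (a - d) / SQRT2
    return x

def fuse(rgb, index_img):
    """Fuse an H x W x 3 RGB image with an H x W index image.

    H and W must be divisible by 4 (two decomposition levels).
    """
    intensity = rgb.mean(axis=2)            # assumed intensity definition
    # Two-level decomposition of the intensity layer: keep only its LL band.
    ll1_i, *_ = haar2d(intensity)
    ll2_i, *_ = haar2d(ll1_i)
    # Two-level decomposition of the index image: keep its detail bands.
    ll1_n, *details1 = haar2d(index_img)
    _, *details2 = haar2d(ll1_n)
    # Replace the index image's level-2 LL band with that of the intensity.
    fused_ll1 = ihaar2d(ll2_i, *details2)
    fused_i = ihaar2d(fused_ll1, *details1)
    # Assumed shortcut back to RGB: shift every channel by the intensity change.
    return rgb + (fused_i - intensity)[..., None]
```

A call might look like `fused = fuse(rgb, ngrdi(ms_green, ms_red))`, where `ms_green` and `ms_red` are hypothetical names for the resampled multispectral bands. One property of this sketch is that passing the intensity layer itself as the index image returns the RGB image unchanged, which gives a quick sanity check of the wavelet round trip.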

Cite this article

Barrero, O., Perdomo, S.A. RGB and multispectral UAV image fusion for Gramineae weed detection in rice fields. Precision Agric 19, 809–822 (2018). https://doi.org/10.1007/s11119-017-9558-x

DOI: https://doi.org/10.1007/s11119-017-9558-x