Abstract

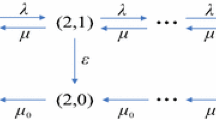

We investigate the behavior of equilibria in an M/M/1 feedback queue where price- and time-sensitive customers are homogeneous with respect to service valuation and cost per unit time of waiting. Upon arrival, customers observe the number of customers in the system and then decide whether to join or to balk. Customers are served in order of arrival. After each service, a customer either completes the service successfully and departs the system, with probability q, or, otherwise (with probability 1 − q), the service fails and she immediately rejoins the end of the queue to wait to be served again, until she completes the service successfully. We analyze this decision problem as a noncooperative game among the customers. We show that there exists a unique symmetric Nash equilibrium threshold strategy, and we then prove that this strategy is evolutionarily stable. Moreover, if we relax the strategy restrictions by allowing customers to renege, customers have a greater incentive to join in the new Nash equilibrium. However, this does not necessarily increase the equilibrium expected payoff; for some parameter values, it decreases it.
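The feedback mechanism has a simple consequence for the total amount of service a customer receives: the number of service passes is geometric with mean 1/q, so, if each pass is exponential with rate \(\mu\), the total service time is again exponential, with rate \(\mu q\) and mean \(1/(\mu q)\). The Monte Carlo sketch below checks this numerically; it uses only the Python standard library, assumes i.i.d. exponential service passes (the usual M/M/1 assumption), ignores waiting time, and its function names are illustrative.

```python
import random

def total_service_time(mu, q, rng):
    """Total service received by one customer: Exp(mu) passes, repeated until a
    pass succeeds (probability q); a failed pass sends her back to the queue."""
    total = 0.0
    while True:
        total += rng.expovariate(mu)   # one service pass
        if rng.random() < q:           # successful completion: she departs
            return total
        # failure: fed back to the end of the queue (waiting time not counted here)

def mean_total_service(mu, q, n_samples=200_000, seed=1):
    """Monte Carlo estimate of the mean total service time per customer."""
    rng = random.Random(seed)
    return sum(total_service_time(mu, q, rng) for _ in range(n_samples)) / n_samples

if __name__ == "__main__":
    mu, q = 2.0, 0.4
    print(mean_total_service(mu, q), 1.0 / (mu * q))   # estimate vs. exact 1/(mu q)
```

The estimate should approach \(1/(\mu q)\) as the number of samples grows.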



Acknowledgements

P. G. Taylor’s research is supported by the Australian Research Council (ARC) Laureate Fellowship FL130100039 and the ARC Centre of Excellence for the Mathematical and Statistical Frontiers (ACEMS). M. Fackrell’s research is supported by the ARC Centre of Excellence for the Mathematical and Statistical Frontiers (ACEMS). J. Wang would like to thank the University of Melbourne for supporting her work through the Melbourne Research Scholarship.


Appendices

The nonreneging case


B.1 Proof of Lemma 1

For integer \(d \ge 1\), we first define

where \(V^d\) is the dth power of V and \(\varvec{v}_k\) is the kth element of a vector \(\varvec{v}\). We now prove by mathematical induction that \(^{d}\nu ^{(x)}_{j,j}\) and \(^{d}\nu ^{(x)}_{i,j}\) are increasing in j for any d and \(1 \le i \le j \le \lceil x \rceil \).

When \(d =1\),

That is, \(^{1}\nu ^{(x)}_{j+1,j+1} = \, ^{1}\nu ^{(x)}_{j,j} > \, ^{1}\nu ^{(x)}_{1,1}\) for \(1 < j \le \lceil x \rceil \), and \(^{1}\nu ^{(x)}_{i,j+1} = \, ^{1}\nu ^{(x)}_{i,j}\) for \(1 \le i \le j \le \lceil x \rceil \).
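The one-step values behind these relations can be written out explicitly. As a sketch, assume (consistently with the term \(\frac{\mu q}{\lambda +\mu }\) used below) that transitions are uniformized at rate \(\lambda +\mu \) and that the tagged customer can leave only from a state with \(i = 1\), at a successful service completion. Then

$$^{1}\nu ^{(x)}_{1,j} = 1-\frac{\mu q}{\lambda +\mu }, \qquad ^{1}\nu ^{(x)}_{i,j} = 1, \quad 2 \le i \le j \le \lceil x \rceil +1 ,$$

and each relation stated for \(d = 1\) follows directly from these two values.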

Next, suppose that the induction hypothesis holds at the dth transition,

Before proving that (B.4) holds for \(d+1\), we first show that \(^{d}\nu ^{(x)}_{i,j} \, \ge \, ^{d+1}\nu ^{(x)}_{i,j}\). By definition, \(^{d}\nu ^{(x)}_{i,j}\) is the sum of the probabilities of being in each state in \({\mathcal {S}}\) at the dth transition, that is, the probability that the tagged customer is still in the system at the dth transition, given the initial state (i, j). It follows from this physical interpretation that \(^{d}\nu _{i,j}^{(x)} = 1\) when \(d < i\), because she cannot leave before at least i transitions have occurred. Furthermore, since the event that the tagged customer is still in the system after \(d+1\) transitions is a subset of the event that she is still in the system after d transitions, \(^d\nu ^{(x)}_{i,j}\) is decreasing in d.
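The survival probabilities \(^{d}\nu ^{(x)}_{i,j}\), the entries of \((P^{(x)})^d \varvec{e}\), are easy to compute numerically, which gives a quick sanity check of the properties used in this proof. The Python sketch below is not the authors' code: the matrix it builds is a hypothetical reconstruction of \(P^{(x)}\) from the model description, assuming uniformization at rate \(\lambda +\mu \), that another arriving customer who finds j customers in the system joins with probability \(\min \{\max \{x-j,0\},1\}\), and that after each service the customer in service departs with probability q and is otherwise fed back to the end of the queue. The names `state_index`, `transition_matrix` and `survival_probabilities` are illustrative.

```python
import math
import numpy as np

def state_index(i, j):
    """0-based position of state (i, j), 1 <= i <= j, in the level-by-level
    ordering (1,1), (1,2), (2,2), (1,3), (2,3), (3,3), ..."""
    return j * (j - 1) // 2 + (i - 1)

def transition_matrix(x, lam, mu, q):
    """Hypothetical reconstruction of the substochastic matrix P^(x) for a tagged
    customer at position i among j customers; others use threshold x."""
    levels = math.ceil(x) + 1                    # j ranges over 1..ceil(x)+1
    size = levels * (levels + 1) // 2
    P = np.zeros((size, size))
    a, s = lam / (lam + mu), mu / (lam + mu)     # uniformization at rate lam + mu
    for j in range(1, levels + 1):
        join = min(max(x - j, 0.0), 1.0)         # assumed joining probability at level j
        for i in range(1, j + 1):
            k = state_index(i, j)
            if join > 0.0:
                P[k, state_index(i, j + 1)] += a * join
            P[k, k] += a * (1.0 - join)          # arrival balks: no change
            if i == 1:
                # tagged customer in service: leaves w.p. q (row mass lost),
                # otherwise she is fed back to the end of the queue
                P[k, state_index(j, j)] += s * (1.0 - q)
            else:
                P[k, state_index(i - 1, j - 1)] += s * q        # head customer departs
                P[k, state_index(i - 1, j)] += s * (1.0 - q)    # head customer fed back
    return P

def survival_probabilities(P, d_max):
    """nu[d] = P^d e for d = 0..d_max: nu[d, k] is the probability that the tagged
    customer is still in the system after d transitions, starting from state k."""
    nu = np.empty((d_max + 1, P.shape[0]))
    nu[0] = 1.0
    for d in range(d_max):
        nu[d + 1] = P @ nu[d]
    return nu

if __name__ == "__main__":
    P = transition_matrix(2.5, lam=1.0, mu=1.0, q=0.5)
    nu = survival_probabilities(P, 3)
    print(nu[2, state_index(1, 1)])   # 0.5625
```

For these parameter values one can check by hand that \(^{1}\nu ^{(2.5)}_{1,1} = 0.75\) and \(^{2}\nu ^{(2.5)}_{1,1} = 0.5625\).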

Then, by expanding both \(^{d+1}\nu ^{(x)}_{i,j+1}\) and \(^{d+1}\nu ^{(x)}_{i,j}\) as in Eq. (3.1) and collecting like terms, we have, for \(1 \le i \le j \le \lceil x \rceil \),

Again, by expanding \(^{d+1}\nu ^{(x)}_{j+1,j+1}\), using Eq. (3.1), and collapsing the term \(\displaystyle \frac{\mu q}{\lambda +\mu } \, ^{d}\nu ^{(x)}_{j,j}\), we obtain, for \(1 \le i \le j \le \lceil x \rceil \),

where the inequality in (B.6) is strict if and only if \(d > j-1\). It follows from the induction hypothesis (B.4) that \(^{d}\nu ^{(x)}_{j+1,j+2} \ge \,^{d}\nu ^{(x)}_{j+1,j+1} \ge \,^{d}\nu ^{(x)}_{j,j}\), and hence, for \(1 \le i \le j \le \lceil x \rceil \),

and

Finally, from Eq. (3.2),

It follows that

are increasing in j. \(\square \)
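The passage from the survival probabilities to the expected sojourn times can be made explicit. Assuming Eq. (3.2) has the same form as (B.12), namely \(( I-P^{(x)}) \varvec{w}^{(x)} = \frac{1}{\lambda +\mu }\varvec{e}\), and using the Neumann series, which converges because the tagged customer eventually leaves the system with probability one,

$$\varvec{w}^{(x)} = \frac{1}{\lambda +\mu }\left( I-P^{(x)}\right) ^{-1}\varvec{e} = \frac{1}{\lambda +\mu }\sum _{d=0}^{\infty } \left( P^{(x)}\right) ^{d}\varvec{e}, \qquad w^{(x)}_{i,j} = \frac{1}{\lambda +\mu }\sum _{d=0}^{\infty } {}^{d}\nu ^{(x)}_{i,j},$$

with \(^{0}\nu ^{(x)}_{i,j} = 1\). Each term of the last sum is increasing in j for \(1 \le i \le j \le \lceil x \rceil \), and therefore so is the sum.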

B.2 Proof of Lemma 2

- When \(n< x_1 = n+ p_1 < n+ p_2 = x_2 \le n+1\), from Eq. (3.2),

$$\left( I-P^{(x_1)} \right) \varvec{w}^{(x_1)} = \frac{1}{\lambda +\mu }\varvec{e}, \tag{B.12}$$

$$\left( I-P^{(x_2)} \right) \varvec{w}^{(x_2)} = \frac{1}{\lambda +\mu }\varvec{e}, \tag{B.13}$$

where the probability transition matrices \(P^{(x_1)}\) and \(P^{(x_2)}\) have the same dimension. Since \(P^{(x_2)}\) and \(P^{(x_1)}\) differ only in n rows, we have

$$\begin{aligned} \left( I-P^{(x_2)} \right) \left( \varvec{w}^{(x_2)}-\varvec{w}^{(x_1)}\right) &= \left( P^{(x_2)}-P^{(x_1)}\right) \varvec{w}^{(x_1)} \\ &= \frac{\lambda (p_2-p_1)}{\lambda +\mu } \, \begin{bmatrix} {\mathbf {0}}_{\frac{n(n-1)}{2} \times 1} \\ w^{(x_1)}_{1,n+1} - w^{(x_1)}_{1,n} \\ w^{(x_1)}_{2,n+1} - w^{(x_1)}_{2,n} \\ \vdots \\ w^{(x_1)}_{n,n+1} - w^{(x_1)}_{n,n} \\ {\mathbf {0}}_{(2n+3) \times 1} \end{bmatrix} . \end{aligned} \tag{B.14}$$

We know from Eq. (B.10) that \(w^{(x_1)}_{i,n+1} - w^{(x_1)}_{i,n} > 0\). Also, since the \(((i_1,j_1),(i_2,j_2))\)th entry of \((I-P^{(x_2)})^{-1}\) is the tagged customer’s expected number of visits to state \((i_2,j_2)\), starting from state \((i_1,j_1)\) in \({\mathcal {S}}\), before she leaves the system,

$$(I-P^{(x_2)})^{-1} > 0 .$$

Hence

$$\varvec{w}^{(x_2)}-\varvec{w}^{(x_1)} = (I-P^{(x_2)})^{-1} \, (P^{(x_2)}-P^{(x_1)}) \, \varvec{w}^{(x_1)} > 0 . \tag{B.15}$$

- When \(x_1 = n\) and \(x_2 =n + p_2 \) or \(x_2 = n+1\), \(P^{(x_1)}\) and \(P^{(x_2)}\) have different sizes. However, we can write \(P^{(x_2)}\) as

$$P^{(x_2)} = \left[ \begin{array}{c c c c c | c} A_0^{(1)} & A_1^{(1)} & \cdots & \cdots & \cdots & 0\\ A_{-1}^{(2)} & A_0^{(2)} & A_1^{(2)} & \cdots & \cdots & 0\\ \vdots & A_{-1}^{(3)} & A_0^{(3)} & A_1^{(3)} & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \ddots & \vdots \\ \vdots & \vdots & \vdots & A_{-1}^{(n+1)} & A_0^{(n+1)} & 0 \\ \hline 0 & \cdots & \cdots & \cdots & A_{-1}^{(n+2)} & A_0^{(n+2)} \end{array} \right] \tag{B.16}$$

$$= \left[ \begin{array}{c | c} {\bar{P}}^{(x_2)} & \varvec{0}_{\frac{(n+1)(n+2)}{2} \times (n+2)}\\ \hline \varvec{0}_{(n+2) \times \frac{n(n+1)}{2}} \quad A_{-1}^{(n+2)} & A_0^{(n+2)} \end{array} \right] , \tag{B.17}$$

and

$$(I-P^{(x_2)})^{-1} = \left[ \begin{array}{c | c} ( I-{\bar{P}}^{(x_2)})^{-1} & {\mathbf {0}} \\ \hline N \, ( I-{\bar{P}}^{(x_2)})^{-1} & ( I- A_0^{(n+2)} )^{-1} \end{array} \right] , \tag{B.18}$$

where \(N = \begin{bmatrix} \varvec{0}_{(n+2) \times \frac{n(n+1)}{2}} & ( I- A_0^{(n+2)} )^{-1} \, A_{-1}^{(n+2)} \end{bmatrix}\). When \(x_1 = n\), the tagged customer can join at position at most \(n+1\), so we compare \(\varvec{w}^{(x_1)}_k\) with \(\varvec{w}^{(x_2)}_k\) for \(k = 1, \ldots , \displaystyle \frac{(n+1)(n+2)}{2}\). If we define \(\varvec{{\bar{w}}}^{(x_2)}: = \left[ {I}_{\frac{(n+1)(n+2)}{2}} \quad \varvec{0}\right] \varvec{w}^{(x_2)} \), then

$$\varvec{{\bar{w}}}^{(x_2)} = \frac{1}{\lambda +\mu }( I-{\bar{P}}^{(x_2)})^{-1} \, \varvec{e} , \tag{B.19}$$

and thus

$$\varvec{{\bar{w}}}^{(x_2)}-\varvec{w}^{(x_1)} = \frac{\lambda \, p_2}{\lambda +\mu } \, (I-{\bar{P}}^{(x_2)})^{-1}\, \begin{bmatrix} {\mathbf {0}}_{\frac{n(n-1)}{2} \times 1} \\ w^{(x_1)}_{1,n+1} - w^{(x_1)}_{1,n} \\ w^{(x_1)}_{2,n+1} - w^{(x_1)}_{2,n} \\ \vdots \\ w^{(x_1)}_{n,n+1} - w^{(x_1)}_{n,n} \\ {\mathbf {0}}_{(n+1) \times 1} \end{bmatrix} > 0 . \tag{B.20}$$

In (B.20), with an abuse of notation, we include the case \(p_2 = 1\).

- When \(x_1 = n_1 +p_1\), \(x_2= n_2 +p_2\), and \(n_1 <n_2\), chaining the comparisons of the two cases above through the consecutive integers between \(x_1\) and \(x_2\) gives

$$w_{i,j}^{(x_1)} < w_{i,j}^{(\lceil x_1 \rceil )}, \qquad 1 \le i \le j \le \lceil x_1 \rceil +1, \tag{B.21}$$

$$\vdots$$

$$w_{i,j}^{(\lceil x_2 \rceil -2)} < w_{i,j}^{(\lceil x_2 \rceil -1)}, \qquad 1 \le i \le j \le \lceil x_2 \rceil -1, \tag{B.22}$$

$$w_{i,j}^{(\lceil x_2 \rceil -1)} < w_{i,j}^{(x_2)}, \qquad 1 \le i \le j \le \lceil x_2 \rceil . \tag{B.23}$$

Hence, \( w_{i,j}^{(x_1)} < w_{i,j}^{(x_2)}\) for \(1 \le i \le j \le \lceil x_1\rceil +1\).
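Because Eq. (3.2) is a linear system, the comparisons in this proof can be checked numerically for particular parameter values. The Python sketch below is a hypothetical reconstruction, not the authors' code. It builds \(P^{(x)}\) under assumed dynamics: uniformization at rate \(\lambda +\mu \); another arriving customer who finds j customers in the system joins with probability \(\min \{\max \{x-j,0\},1\}\); after each service the customer in service departs with probability q and is otherwise fed back to the end of the queue. It orders the states (i, j) level by level and solves \((I-P^{(x)})\varvec{w}^{(x)} = \frac{1}{\lambda +\mu }\varvec{e}\) as in (B.12). All function names are illustrative.

```python
import math
import numpy as np

def state_index(i, j):
    """0-based position of state (i, j), 1 <= i <= j, ordered level by level."""
    return j * (j - 1) // 2 + (i - 1)

def transition_matrix(x, lam, mu, q):
    """Hypothetical reconstruction of P^(x) (assumed dynamics, see lead-in)."""
    levels = math.ceil(x) + 1                    # j ranges over 1..ceil(x)+1
    size = levels * (levels + 1) // 2
    P = np.zeros((size, size))
    a, s = lam / (lam + mu), mu / (lam + mu)     # uniformization at rate lam + mu
    for j in range(1, levels + 1):
        join = min(max(x - j, 0.0), 1.0)         # assumed joining probability at level j
        for i in range(1, j + 1):
            k = state_index(i, j)
            if join > 0.0:
                P[k, state_index(i, j + 1)] += a * join
            P[k, k] += a * (1.0 - join)          # arrival balks: no change
            if i == 1:
                # tagged customer in service: leaves w.p. q, else fed back to the end
                P[k, state_index(j, j)] += s * (1.0 - q)
            else:
                P[k, state_index(i - 1, j - 1)] += s * q        # head customer departs
                P[k, state_index(i - 1, j)] += s * (1.0 - q)    # head customer fed back
    return P

def expected_sojourn(x, lam, mu, q):
    """Solve (I - P^(x)) w = e / (lam + mu); entry state_index(i, j) is w_{i,j}^(x)."""
    P = transition_matrix(x, lam, mu, q)
    size = P.shape[0]
    return np.linalg.solve(np.eye(size) - P, np.full(size, 1.0 / (lam + mu)))

if __name__ == "__main__":
    lam, mu, q = 1.0, 1.5, 0.6
    w1, w2 = expected_sojourn(2.3, lam, mu, q), expected_sojourn(2.8, lam, mu, q)
    print((w2 - w1).min())   # smallest entry of w^(x2) - w^(x1), case n = 2
```

Two closed-form cases make useful sanity checks under these assumptions: with \(q = 1\) there is no feedback and \(w_{i,j} = i/\mu \) for every j, and with \(x = 1\) no other customer joins while the tagged customer is present, so \(w_{1,1} = 1/(\mu q)\). States of a smaller threshold form a prefix of the ordering, so vectors of different sizes can be compared on their common entries, as in (B.20) and (B.21)–(B.23).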

The reneging case


Proof of Lemma 4

Proof

It follows from the explanation at the beginning of Sect. 3.1 that the tagged customer will never renege after joining if she uses \(x_{tag} \ge \lceil x \rceil \) when others use x. Thus, comparing \(z^{(x)}_{i,j}\) with \({\hat{z}}_{i,j}^{(\lfloor x \rfloor +1,x)}\) amounts to comparing the corresponding expected sojourn times \(w^{(x)}_{i,j}\) and \({\hat{w}}_{i,j}^{(\lfloor x \rfloor +1,x)}\). As in Lemma 1, the tagged customer’s expected sojourn time is her expected total number of visits to states in \({\mathcal {S}}\) before she leaves the system, multiplied by \(\frac{1}{\lambda +\mu }\). Following the proof of Lemma 1, we first define

which is the probability that the tagged customer is still in the system at the dth transition, if her initial state is (i, j), she never reneges, and others join and renege with threshold x. We prove Eq. (4.6) by mathematical induction.

First, it follows from the physical interpretation that \(^d \nu ^{(x)}_{i,j} = \, ^d{\hat{\nu }}^{(\lfloor x \rfloor +1,x)}_{i,j}\) when \(d \le \lfloor x \rfloor \). This is because others’ reneging cannot affect the tagged customer unless the queue size has reached \(\lfloor x \rfloor + 1\) before she is in service for the first time and she has then rejoined the queue and is in service for the second time, which is possible only when \(d > \lfloor x \rfloor \). Indeed,

Figure 11 shows an example of Eq. (D.2) with \((i,j) = (2,3)\) in (a), \((i,j) = (3,3)\) in (b), and \(2< x < 3\). The gray block represents the tagged customer, and the white ones represent other customers in the system. It shows the sample paths where the reneging of others affects the tagged customer at the earliest possible transition. The first row is when others use threshold 2, and the second row is when others use threshold 3. In both Fig. 11a, b, the reneging of others affects the queue size at the first transition, but will not affect the tagged customer until \(d >3\).

Next, we assume that \( ^d \nu ^{(x)}_{i,j} \ge \,^d{\hat{\nu }}^{(\lfloor x \rfloor +1,x)}_{i,j}\). Then we write the difference between \(P^{(x)}\) and \({\hat{P}}^{(\lfloor x \rfloor +1,x)}\) in the form

where

Hence,

The first inequality follows from Lemma 1, which shows that \(^{d}\nu ^{(x)}_{i,j}\) is increasing in j for any d and \(1 \le i \le j \le \lceil x \rceil \). Since \(^{d}\nu ^{(x)}_{i,\lfloor x \rfloor + 1} \ge \, ^{d}\nu ^{(x)}_{i,\lfloor x \rfloor }\) for \(i = 1, \dots , \lfloor x \rfloor \),

The second inequality is from the induction assumption. Hence,

This concludes the proof for inequality (4.6).

When \(x = \lfloor x \rfloor \), \({\hat{P}}^{(\lfloor x \rfloor , \lfloor x \rfloor )} = P^{(\lfloor x \rfloor )}\), and so \(\varvec{{\hat{z}}}^{(\lfloor x \rfloor ,\lfloor x\rfloor )} = \varvec{z}^{(\lfloor x \rfloor )}\). Also, \(A_1^{(\lfloor x \rfloor )} = 0\), so

Multiplying both sides of Eq. (3.2) on the left by \(\left[ I_{\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}} \quad 0_{\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2} \times (\lfloor x \rfloor +1)} \right] \) and applying Eq. (D.8), we have

where \({\bar{P}}^{(\lfloor x \rfloor )}:= \left[ I_{\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}} \quad 0_{\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2} \times (\lfloor x \rfloor +1)} \right] P^{(\lfloor x \rfloor )} \begin{bmatrix} I_{\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}} \\ 0_{(\lfloor x \rfloor +1) \times \frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}} \end{bmatrix}\). Hence,

Similarly,

where \(\bar{{\hat{P}}}^{(\lfloor x \rfloor )}:= \left[ I_{\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}} \quad 0_{\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2} \times (\lfloor x \rfloor +1)} \right] {\hat{P}}^{(\lfloor x \rfloor +1, \lfloor x \rfloor )} \begin{bmatrix} I_{\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}} \\ 0_{(\lfloor x \rfloor +1) \times \frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}} \end{bmatrix}\). Since \({\hat{P}}^{(\lfloor x \rfloor +1, x )}\) and \(P^{(x)}\) have the same leading principal block of order \(\displaystyle \frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}\), that is, the same first \(\displaystyle \frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}\) rows and columns, it follows that \(\varvec{w}_{1:\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}}^{(\lfloor x \rfloor )} = \varvec{{\hat{w}}}_{1:\frac{\lfloor x \rfloor (\lfloor x \rfloor +1)}{2}}^{(\lfloor x \rfloor +1, \lfloor x \rfloor )}\). \(\square \)
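The last step only uses the fact that, when the block above the diagonal is zero (here \(A_1^{(\lfloor x \rfloor )} = 0\)), the leading entries of the solution of \((I-P)\varvec{w} = \frac{1}{\lambda +\mu }\varvec{e}\) depend only on the leading block \({\bar{P}}\), so two matrices sharing that block give the same leading entries. The NumPy sketch below illustrates this on random substochastic matrices with that zero pattern; the random blocks are placeholders, not the matrices \(P^{(\lfloor x \rfloor )}\) and \({\hat{P}}^{(\lfloor x \rfloor +1, \lfloor x \rfloor )}\) themselves, and the helper names are hypothetical.

```python
import numpy as np

def random_block_lower(m, r, rng, row_sum=0.9):
    """Random substochastic (m + r) x (m + r) matrix whose top-right m x r block
    is zero: none of the first m states can move to the last r states."""
    P = rng.random((m + r, m + r))
    P[:m, m:] = 0.0
    return P * (row_sum / P.sum(axis=1, keepdims=True))   # rows sum to row_sum < 1

def sojourn(P, rate):
    """Solve (I - P) w = e / rate, the analogue of Eq. (3.2)."""
    size = P.shape[0]
    return np.linalg.solve(np.eye(size) - P, np.full(size, 1.0 / rate))

def leading_sojourn(P, m, rate):
    """Same system restricted to P_bar = [I 0] P [I 0]^T, the leading m x m block."""
    return sojourn(P[:m, :m], rate)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m, r, rate = 6, 4, 2.5                 # e.g. floor(x) = 3 gives m = 6, r = 4
    P1 = random_block_lower(m, r, rng)
    P2 = P1.copy()
    P2[m:, :] = random_block_lower(m, r, rng)[m:, :]   # change only the last r rows
    w1, w2 = sojourn(P1, rate), sojourn(P2, rate)
    print(np.allclose(w1[:m], w2[:m]), np.allclose(w1[:m], leading_sojourn(P1, m, rate)))  # True True
```

Changing the rows of the last \(\lfloor x \rfloor +1\) states alters only the trailing entries of the solution, which is why the two leading subvectors coincide.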

E

E.1 The derivative of function f(p)

The calculation of \(f'(p)\) is implemented in Wolfram Mathematica.

E.2 Social welfare expressions

The social welfare in the N-case:

The social welfare in the R-case:


Cite this article

Fackrell, M., Taylor, P. & Wang, J. Strategic customer behavior in an M/M/1 feedback queue. Queueing Syst 97, 223–259 (2021). https://doi.org/10.1007/s11134-021-09693-z


Keywords

- Feedback queue

- Nash equilibrium

- Reneging

- Nonhomogeneous quasi-birth-and-death processes

- Matrix analytic methods