Abstract

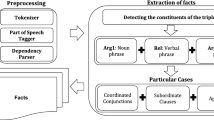

Several techniques for the automatic acquisition of Information Extraction (IE) systems have used dependency trees as the basis of an extraction pattern representation. These approaches have used a variety of pattern models (schemes for representing IE patterns based on particular parts of the dependency analysis). An appropriate pattern model should be expressive enough to represent the information to be extracted from text without being overly complex. Previous investigations into the appropriateness of the currently proposed models have been limited. This paper compares a variety of pattern models, including previously reported ones and variations of them. Each model is evaluated using existing data consisting of IE scenarios from two very different domains (newswire stories and biomedical journal articles). The models are analysed in terms of their ability to represent relevant information, the number of patterns generated and performance on an IE scenario. The best performance was observed for two models which use the majority of relevant portions of the dependency tree without including irrelevant sections.
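The trade-off between expressiveness and the number of patterns generated can be made concrete with a small sketch. The toy dependency tree, the example sentence and both pattern definitions below are illustrative assumptions, not the models or data evaluated in the paper: one restrictive scheme keeps only downward paths from the root verb, while a permissive scheme admits every connected fragment that contains the root.

```python
# Toy dependency tree (hypothetical): head word -> list of dependents.
# Assumed sentence: "Acme hired Smith as president".
TREE = {
    "hired": ["Acme", "Smith", "as"],
    "as": ["president"],
    "Acme": [],
    "Smith": [],
    "president": [],
}
ROOT = "hired"


def chains(tree, root):
    """Restrictive illustrative model: every downward path that starts
    at the root and contains at least two nodes."""
    found = []

    def walk(node, path):
        path = path + [node]
        if len(path) > 1:
            found.append(tuple(path))
        for child in tree[node]:
            walk(child, path)

    walk(root, [])
    return found


def count_rooted_subtrees(tree, node):
    """Permissive illustrative model: number of connected fragments that
    contain `node` as their top element. Each child is either left out
    or contributes one of its own rooted fragments, hence the product."""
    total = 1
    for child in tree[node]:
        total *= 1 + count_rooted_subtrees(tree, child)
    return total


if __name__ == "__main__":
    for chain in chains(TREE, ROOT):
        print(" -> ".join(chain))
    print("chains:", len(chains(TREE, ROOT)))
    print("rooted subtrees:", count_rooted_subtrees(TREE, ROOT))
```

Even for this five-word tree the permissive scheme yields three times as many candidate patterns as the restrictive one (12 against 4), and the gap widens quickly as trees grow, which is why the number of patterns generated matters when comparing models.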

Cite this article

Stevenson, M., Greenwood, M.A. Dependency Pattern Models for Information Extraction. Res on Lang and Comput 7, 13–39 (2009). https://doi.org/10.1007/s11168-009-9061-2

DOI: https://doi.org/10.1007/s11168-009-9061-2