Abstract

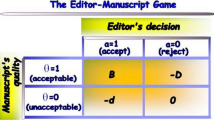

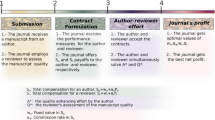

In this paper, we study the reviewer’s compensation problem in the presence of quality standard considerations. We examine a typical scenario in which a journal has to match the uncertain manuscript quality with a specified quality standard, but observes the reviewer’s effort only imperfectly. In the extreme case of this imperfect observability, editors cannot tell the quality or effort of the review at all. We find that the journal always chooses an incentive scheme that rewards the reviewer for achieving the highest quality outcome. However, this can lead to an inefficiency when the journal’s quality standard is below the highest possible quality outcome, because reviewers usually seek only to ensure that the manuscript’s quality acceptably matches the journal’s standard. Therefore, the journal can have an incentive to raise the quality standard in order to improve the observability of the review outcome and to obtain a better signal of the reviewer’s effort. This, however, is beneficial only for non-extreme costs. In addition, we find that when high-quality review outcomes are imperfectly observed because of a limited quality standard, the journal must give the reviewer a larger reward to motivate hard work. In sum, we show that a failure to observe the reviewer’s effort motivates higher quality standards, and that quality standard considerations lead to higher-powered compensation for the reviewer.

References

Bianchi, F., Grimaldo, F., Bravo, G., et al. (2018). The peer review game: an agent-based model of scientists facing resource constraints and institutional pressures. Scientometrics, 116, 1401–1420. https://doi.org/10.1007/s11192-018-2825-4.

Bornmann, L. (2008). Scientific peer review: An analysis of the peer review process from the perspective of sociology of science theories. Human Architecture: Journal of the Sociology of Self-Knowledge, 6(2), 23–38.

Bornmann, L. (2011). Scientific peer review. Annual Review of Information Science and Technology, 45(1), 197–245.

Cabotà, J., Grimaldo, F., Cadavid, L., Squazzoni, F., et al. (2014). A few bad apples are enough. An agent-based peer review game. In Conference: Social simulation conference 2014 (SSC’14). https://ddd.uab.cat/pub/poncom/2014/128567/ssc14_a2014a97iENG.pdf.

Collabra: Psychology. (2020). https://www.collabra.org/.

Dai, T., & Jerath, K. (2013). Salesforce compensation with inventory considerations. Management Science, 59(11), 2490–2501. https://doi.org/10.1287/mnsc.2013.1809.

Davis, P. (2013). Rewarding reviewers: Money, prestige, or some of both? The Scholarly Kitchen. Retrieved from https://scholarlykitchen.sspnet.org/2013/02/22/rewarding-reviewers-money-prestige-or-some-of-both/.

El-Omar, E. M. (2014). How to publish a scientific manuscript in a high-impact journal. Advances in Digestive Medicine, 1, 105–109.

Garcia, J. A., Rodriguez-Sanchez, R., & Fdez-Valdivia, J. (2015a). The author-editor game. Scientometrics, 104, 361–380. https://doi.org/10.1007/s11192-015-1566-x.

Garcia, J. A., Rodriguez-Sanchez, R., & Fdez-Valdivia, J. (2015b). Adverse selection of reviewers. Journal of the Association for Information Science and Technology, 66(6), 1252–1262. https://doi.org/10.1002/asi.23249.

Garcia, J. A., Rodriguez-Sanchez, R., & Fdez-Valdivia, J. (2018). Competition between academic journals for scholars’ attention: the ‘Nature effect’ in scholarly communication. Scientometrics, 115, 1413–1432. https://doi.org/10.1007/s11192-018-2723-9.

Garcia, J. A., Rodriguez-Sanchez, R., & Fdez-Valdivia, J. (2019). The optimal amount of information to provide in an academic manuscript. Scientometrics, 121, 1685–1705. https://doi.org/10.1007/s11192-019-03270-1.

Garcia, J. A., Rodriguez-Sanchez, R., & Fdez-Valdivia, J. (2020a). Confirmatory bias in peer review. Scientometrics, 123, 517–533. https://doi.org/10.1007/s11192-020-03357-0.

Garcia, J. A., Rodriguez-Sanchez, R., & Fdez-Valdivia, J. (2020b). The author-reviewer game. Scientometrics, 124, 2409–2431. https://doi.org/10.1007/s11192-020-03559-6.

Garcia, J. A., Rodriguez-Sanchez, R., & Fdez-Valdivia, J. (2020c). Quality censoring in peer review. Scientometrics. https://doi.org/10.1007/s11192-020-03693-1.

Gasparyan, A. Y., Gerasimov, A. N., Voronov, A. A., & Kitas, G. D. (2015). Rewarding peer reviewers: Maintaining the integrity of science communication. Journal of Korean Medical Science, 30(4), 360–364. https://doi.org/10.3346/jkms.2015.30.4.360.

Johnson, R., Watkinson, A., & Mabe, M. (2018). The STM report: An overview of scientific and scholarly publishing (5th ed.). STM Association. https://www.stm-assoc.org/2018\_10\_04\_STM\_Report\_2018.pdf.

Kovanis, M., Porcher, R., Ravaud, P., et al. (2016). Complex systems approach to scientific publication and peer-review system: Development of an agent-based model calibrated with empirical journal data. Scientometrics, 106, 695–715. https://doi.org/10.1007/s11192-015-1800-6.

Mavrogenis, A. F., Sun, J., Quaile, A., et al. (2019). How to evaluate reviewers: The international orthopedics reviewers score (INOR-RS). International Orthopaedics (SICOT), 43, 1773–1777. https://doi.org/10.1007/s00264-019-04374-2.

Messias, A. M. V., Lira, R. P. C., Furtado, J. M. F., Paula, J. S., & Rocha, E. M. (2017). How to evaluate and acknowledge a scientific journal peer reviewer: a proposed index to measure the performance of reviewers. Arquivos Brasileiros de Oftalmologia, 80(6), 5. https://doi.org/10.5935/0004-2749.20170084.

Mulligan, A., Hall, L., & Raphael, E. (2013). Peer review in a changing world: An international study measuring the attitudes of researchers. Journal of the American Society for Information Science and Technology, 64(1), 132–161.

Mulligan, A., & Mabe, M. (2011). The effect of the internet on researcher motivations, behaviour and attitudes. Journal of Documentation, 67(2), 290–311.

Peer Review Survey. (2019). Sense About Science. Retrieved from https://senseaboutscience.org/activities/peer-review-survey-2019/.

PRC Peer Review Survey. (2015). Mark Ware Consulting. Retrieved from http://publishingresearchconsortium.com/index.php/134-news-main-menu/prc-peer-review-survey-2015-key-findings/172-peer-review-survey-2015-key-findings.

Publons. (2018). Global state of peer review. Retrieved from https://publons.com/community/gspr.

Publons. (2020). Mission. Retrieved from https://publons.com/about/mission.

Roebber, P., & Schultz, D. M. (2011). Peer review, program officers and science funding. PLOS ONE. https://doi.org/10.1371/journal.pone.0018680.

Squazzoni, F., & Gandelli, C. (2013). Opening the black-box of peer review: An agent-based model of scientist behaviour. Journal of Artificial Societies and Social Simulation, 16(2), 3. https://doi.org/10.18564/jasss.2128.

Stinchcombe, A. L., & Ofshe, R. (1969). On journal editing as a probabilistic process. American Sociologist, 4, 116–117.

Thurner, S., & Hanel, R. (2011). Peer-review in a world with rational scientists: Toward selection of the average. The European Physical Journal B, 84, 707–711. https://doi.org/10.1140/epjb/e2011-20545-7.

Van Noorden, R. (2013). Open access: The true cost of science publishing. Nature, 495, 426–429.

Van Rooyen, S., Godlee, F., Evans, S., Black, N., & Smith, R. (1999). Effect of open peer review on quality of reviews and on reviewers’ recommendations: a randomised trial. BMJ, 318(7175), 23–27.

Zhuo, J., Cai, N., & Li, Y. (2016). Analysis of peer review system based on fewness distribution function. In 6th International Conference on Management, Education, Information and Control (MEICI 2016) (pp. 1133–1136). Amsterdam: Atlantis Press.

Acknowledgements

This research was sponsored by the Spanish Board for Science, Technology, and Innovation under grant PID2020-112579GB-I00, and co-financed with European FEDER funds. We would like to thank the reviewers for their thoughtful comments and efforts towards improving our manuscript.

Appendices

Appendix A: For the Benchmark scenario, the journal’s standard must be either high quality (H) or medium quality (M)

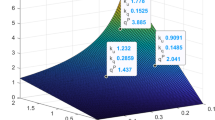

For the Benchmark scenario, the scholarly journal solves for the optimal value of the standard of quality \(Q_b\) to maximize the journal’s net profit:

Then the optimal quality standard \(Q_b\) must be either high quality (H) or medium quality (M). To see this, suppose that the journal chooses a quality standard \(Q_b\) intermediate between M and H, i.e., \(M< Q_b <H\). Then the journal’s expected profit is

and therefore, if \(p_\mathrm{H} r -c <0\), then, the profit is dominated by choosing a medium-quality journal standard \(Q_b =M\), and if \(p_\mathrm{H} r -c \ge 0\), then, this profit is weakly dominated by choosing a high-quality journal standard \(Q_b =H\). Note that the dominance is strict unless \(p_\mathrm{H} r -c = 0\).
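The display equations of this appendix did not survive extraction. As a minimal sketch, assume the benchmark profit takes the censored form of Dai and Jerath (2013): the journal earns \(r\) per unit of quality up to its standard, pays \(c\) per unit of standard, and faces outcome probabilities \(p_\mathrm{H}, p_\mathrm{M}, p_\mathrm{L}\) under high effort. The symbol \(\pi_b\), the outcome variable \(s\), and the omission of any fixed payment to the reviewer are assumptions made here, not notation confirmed by the source. Under these assumptions, the profit for an intermediate standard would read:

```latex
\begin{align*}
\pi_b(Q_b) &= r\,\mathbb{E}\big[\min(s, Q_b)\big] - c\,Q_b \\
           &= r\,\big(p_\mathrm{H} Q_b + p_\mathrm{M} M + p_\mathrm{L} L\big) - c\,Q_b \\
           &= (p_\mathrm{H} r - c)\,Q_b + r\,\big(p_\mathrm{M} M + p_\mathrm{L} L\big),
           \qquad M < Q_b < H .
\end{align*}
```

In this form the profit is linear in \(Q_b\) on \([M, H]\) with slope \(p_\mathrm{H} r - c\), so it is maximized at an endpoint: at \(Q_b = M\) when the slope is negative and at \(Q_b = H\) when it is nonnegative. This agrees with the dominance argument above, and a payment to the reviewer that does not depend on \(Q_b\) would leave the comparison unchanged.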

Appendix B: A sufficient condition on the reviewer’s effort cost

Following Dai and Jerath (2013), to ensure that the journal has the incentive to induce high effort \(e_\mathrm{H}\) rather than low effort \(e_\mathrm{L}\), we need the journal’s profit under the optimal quality standard \(Q_{e_\mathrm{H}}\), when the reviewer exerts high effort \(e_\mathrm{H}\),

is greater than or equal to the journal’s profit for the optimal quality standard \(Q_{e_\mathrm{L}}\) if the reviewer exerts a low effort \(e_\mathrm{L}\)

or equivalently

Now we can prove that \(r [ (p_\mathrm{H}+p_\mathrm{M} - q_\mathrm{H} -q_\mathrm{M})M + (p_\mathrm{L} -q_\mathrm{L})L ] - c(H-L)\) is a lower bound of the right-hand side of the above inequality. To see this, taking into account that the optimal quality standard \(Q_{e_\mathrm{L}}\) (if the reviewer exerts low effort \(e_\mathrm{L}\)) is less than the optimal quality standard \(Q_{e_\mathrm{H}}\) (if the reviewer exerts a high effort \(e_\mathrm{H}\)), i.e., \(Q_{e_\mathrm{L}} < Q_{e_\mathrm{H}}\), we obtain:

since \(Q_{e_\mathrm{L}} < Q_{e_\mathrm{H}}\), \(L\le Q_{e_\mathrm{L}}\), and \(Q_{e_\mathrm{H}} \le H\), and

since \(Q_{e_\mathrm{H}} \ge M\).

Therefore, we have that the following condition on the reviewer’s effort cost \(\psi\) is sufficient to ensure that the journal wants to motivate a high effort \(e_\mathrm{H}\) from the reviewer
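The lower bound used in this appendix can be checked numerically. The sketch below assumes a censored profit of the form \(r\,\mathbb{E}[\min(s, Q)] - cQ\) before any payment to the reviewer, and it assumes \(p_\mathrm{H} \ge q_\mathrm{H}\); the function names and all numbers are illustrative and are not taken from the paper.

```python
def expected_censored_quality(probs, levels, standard):
    """E[min(s, standard)] over the quality outcomes in `levels` (H, M, L)."""
    return sum(pr * min(level, standard) for pr, level in zip(probs, levels))


def profit_gap(p, q, levels, r, c, q_high, q_low):
    """Profit under high effort at standard q_high minus profit under low
    effort at standard q_low, before any payment to the reviewer."""
    gain = expected_censored_quality(p, levels, q_high)
    loss = expected_censored_quality(q, levels, q_low)
    return r * (gain - loss) - c * (q_high - q_low)


def lower_bound(p, q, levels, r, c):
    """r[(p_H + p_M - q_H - q_M) M + (p_L - q_L) L] - c (H - L)."""
    H, M, L = levels
    return r * ((p[0] + p[1] - q[0] - q[1]) * M + (p[2] - q[2]) * L) - c * (H - L)


# Illustrative numbers only (assumptions, not values from the paper).
levels = (3.0, 2.0, 1.0)   # H, M, L
p = (0.5, 0.3, 0.2)        # outcome probabilities under high effort
q = (0.2, 0.3, 0.5)        # outcome probabilities under low effort
r, c = 10.0, 0.5

H, M, L = levels
steps = 20
grid = [L + (H - L) * k / steps for k in range(steps + 1)]
bound = lower_bound(p, q, levels, r, c)

# All pairs with L <= q_low < q_high and M <= q_high <= H.
gaps = [
    profit_gap(p, q, levels, r, c, q_high, q_low)
    for q_high in grid if q_high >= M
    for q_low in grid if q_low < q_high
]
bound_holds = all(gap >= bound - 1e-9 for gap in gaps)
print(f"bound = {bound:.3f}, smallest gap on grid = {min(gaps):.3f}, holds = {bound_holds}")
```

A grid check of this kind is not a proof; it only illustrates that the two steps of the argument (replacing \(Q_{e_\mathrm{L}}\) by \(Q_{e_\mathrm{H}}\), then \(Q_{e_\mathrm{H}}\) by \(M\)) never push the gap below the stated bound for these parameter values.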

Appendix C: The reviewer’s incentive should be given only for the best review outcome

Firstly, we consider the case when the journal chooses a quality standard \(Q > M\). In that case, if the journal only pays a positive incentive for the highest quality outcome of the review process, then the reviewer’s expected compensation is \(\frac{\psi }{1-q_\mathrm{H}/p_\mathrm{H}}\).

Suppose that the journal uses a different incentive scheme where the reviewer receives a reward \(t_s \ge 0\) for \(s \in \{H,M,L\}\), and at least one of \(t_\mathrm{M}\) and \(t_\mathrm{L}\) is positive. Following Dai and Jerath (2013), we must prove that the second scheme is dominated by the first one.

For the second incentive strategy, the incentive compatibility constraint that ensures that the reviewer chooses a high effort level \(e_\mathrm{H}\) rather than a low effort level \(e_\mathrm{L}\) is

which gives

where \(\epsilon _s = t_s (p_s -q_s)\) for \(s \in \{M,L\}\).

Then, it follows that the reviewer’s expected incentive is

where the last inequality is true because of the monotone likelihood ratio property (MLRP).
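The displayed steps of this first case are missing from the extracted text. A hedged reconstruction, assuming nonnegative rewards, \(p_\mathrm{H} > q_\mathrm{H}\), and the MLRP in the form \(p_\mathrm{H}/q_\mathrm{H} \ge p_\mathrm{M}/q_\mathrm{M} \ge p_\mathrm{L}/q_\mathrm{L}\), is the following; it uses only the symbols defined above but is a sketch, not the authors' typeset derivation:

```latex
\begin{align*}
&\sum_{s \in \{H,M,L\}} p_s t_s - \psi \;\ge\; \sum_{s \in \{H,M,L\}} q_s t_s
\quad\Longrightarrow\quad
t_\mathrm{H} \;\ge\; \frac{\psi - \epsilon_\mathrm{M} - \epsilon_\mathrm{L}}{p_\mathrm{H} - q_\mathrm{H}}, \\[4pt]
&\sum_{s \in \{H,M,L\}} p_s t_s
\;\ge\; \frac{p_\mathrm{H}\,(\psi - \epsilon_\mathrm{M} - \epsilon_\mathrm{L})}{p_\mathrm{H} - q_\mathrm{H}}
      + p_\mathrm{M} t_\mathrm{M} + p_\mathrm{L} t_\mathrm{L} \\
&\qquad = \frac{\psi}{1 - q_\mathrm{H}/p_\mathrm{H}}
      + \sum_{s \in \{M,L\}} t_s\,\frac{p_\mathrm{H} q_s - p_s q_\mathrm{H}}{p_\mathrm{H} - q_\mathrm{H}}
\;\ge\; \frac{\psi}{1 - q_\mathrm{H}/p_\mathrm{H}} .
\end{align*}
```

The last step uses \(p_\mathrm{H} q_s \ge p_s q_\mathrm{H}\) for \(s \in \{M, L\}\), which is the MLRP rearranged. If the likelihood ratios are strictly ordered, the inequality is strict whenever \(t_\mathrm{M}\) or \(t_\mathrm{L}\) is positive.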

Secondly, we consider the case when the journal chooses a quality standard \(Q = M\). In that case, if the journal only pays a positive incentive for the medium quality outcome of the review process, then the reviewer’s expected compensation is \(\frac{\psi }{1-(q_\mathrm{H}+q_\mathrm{M})/(p_\mathrm{H}+ p_\mathrm{M})}\). Suppose that the journal uses a different incentive scheme where the reviewer receives a reward \(t_s > 0\) for \(s \in \{M,L\}\). Again, we have to prove that the second scheme is dominated by the first one.

For the second incentive strategy in this new case, the incentive compatibility constraint that ensures that the reviewer chooses a high effort level \(e_\mathrm{H}\) rather than a low effort level \(e_\mathrm{L}\) is

which gives

where \(\epsilon _{L} = t_\mathrm{L} (p_\mathrm{L} -q_\mathrm{L})\).

Therefore, in this second case, the reviewer’s expected incentive is

where the last inequality is true because of the MLRP.
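Both cases of this appendix can be illustrated with a small numerical comparison. The sketch below assumes nonnegative rewards and a binding incentive compatibility constraint; the helper `min_expected_pay` and all probabilities are hypothetical choices made for illustration, chosen only so that the MLRP holds.

```python
def min_expected_pay(p_top, q_top, psi, other_rewards=()):
    """Cheapest expected payment that satisfies incentive compatibility when
    the rewards for the lower outcomes are held fixed.

    p_top, q_top:  probability of the rewarded top observable outcome under
                   high and low effort.
    other_rewards: iterable of (t_s, p_s, q_s) for the remaining outcomes.
    """
    other_rewards = list(other_rewards)
    eps = sum(t * (p_s - q_s) for t, p_s, q_s in other_rewards)
    # Binding IC constraint for the top reward, kept nonnegative.
    t_top = max(0.0, (psi - eps) / (p_top - q_top))
    return p_top * t_top + sum(t * p_s for t, p_s, _ in other_rewards)


# Illustrative numbers only (assumptions, not values from the paper).
p_H, p_M, p_L = 0.5, 0.3, 0.2   # high effort
q_H, q_M, q_L = 0.2, 0.3, 0.5   # low effort
psi = 1.0                       # reviewer's cost of high effort

# Case Q > M: reward only the highest outcome H.
pay_top_only = min_expected_pay(p_H, q_H, psi)
pay_with_medium = min_expected_pay(p_H, q_H, psi, [(0.5, p_M, q_M)])
pay_with_low = min_expected_pay(p_H, q_H, psi, [(0.5, p_L, q_L)])

# Case Q = M: outcomes H and M are pooled into one observable outcome.
pay_pooled_only = min_expected_pay(p_H + p_M, q_H + q_M, psi)
pay_pooled_with_low = min_expected_pay(p_H + p_M, q_H + q_M, psi, [(0.5, p_L, q_L)])

print(f"Q > M: top only {pay_top_only:.4f}, plus t_M {pay_with_medium:.4f}, plus t_L {pay_with_low:.4f}")
print(f"Q = M: pooled only {pay_pooled_only:.4f}, plus t_L {pay_pooled_with_low:.4f}")
```

With these numbers, paying anything for a lower outcome raises the expected payment in both cases. The pooled case \(Q = M\) also costs more than the case \(Q > M\), consistent with the closed forms \(\psi/(1-q_\mathrm{H}/p_\mathrm{H})\) and \(\psi/(1-(q_\mathrm{H}+q_\mathrm{M})/(p_\mathrm{H}+p_\mathrm{M}))\) stated above.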

In practice, many academic publishers now routinely keep records of the review history of each reviewer in the journal’s editorial manager system (e.g., Springer). These records include information such as current review statistics, historical reviewer invitation statistics, historical reviewer performance summaries, reviewing variables scored by the assigned editors, and historical reviewer averages. Publishers’ evaluations are usually related to the readability, downloads and marketability of their journals.

Nevertheless, there have been some attempts to evaluate reviewers on the quality of their peer-reviews (Van Rooyen et al. 1999; Mavrogenis et al. 2019; Messias et al. 2017). For example, Van Rooyen et al. (1999) described the Review Quality Instrument (RQI) for reviewers. The RQI is an easy-to-calculate, seven-item instrument that assesses whether a review addresses the importance of the research question, the originality of the manuscript, and the strengths and weaknesses of the methods, and whether it provides comments on writing and presentation, constructive criticism, evidence to support its comments, and comments on the interpretation of the results. Van Rooyen et al. (1999) concluded that reviewers performed best on aspects that help the authors to improve the quality of their manuscripts, and less well on aspects that help the editors select papers (originality of the research). This is unsurprising, because most reviewers have experience as authors rather than as editors. An open peer commentary or open peer-review and a post-publication peer-review have also been suggested (Van Rooyen et al. 1999). Reviewers would record authors’ complaints, authors would formally reply to all reviewers’ comments, and their reply would be published in its entirety for all readers of the journal to see and appraise. In these situations, a non-anonymous peer-review is recommended, on the basis that reviewers who have to sign their peer-reviews may put in more effort and produce better analysis (Van Rooyen et al. 1999).

Also, Messias et al. (2017) described a Reviewer Index (RI). This index is a ratio that reflects the reliability of a reviewer as measured by the number of peer-reviews completed, the time elapsed from peer-review acceptance to submission, and the cube of the editor’s score (Messias et al. 2017).

As described in Mavrogenis et al. (2019), to identify the best reviewers and to determine whether any characteristics tend to predict the quality of their peer-reviews, the authors routinely review the peer-reviews performed in their journal by the reviewers included in the journal’s reviewers’ panel. They evaluate the time taken to reply to an invitation, the numbers of accepted assignments and returned reviews, and the scientific quality of reviews. Based upon these observations, they created an index to measure the performance of the reviewers. This index, named the International Orthopaedics Reviewers Score (INOR-RS), considers reviewers’ and reviewing variables calculated using the outputs of the “Editorial Manager” workflow platform and scored by the assigned editor. The INOR-RS can be used specifically for surgery journals or in general for any scientific publication. More specifically, the INOR-RS is a five-item score; each letter stands for a variable related to the peer-review(er) and is scored from 0 to 2 points by the assigned editor. The sum of the points (0–10 points) gives the INOR-RS for the respective reviewer for the respective manuscript. This score is entered by the assigned editor in the review rate section of the Editorial Manager workflow platform, and it accompanies the reviewer in the reviewer’s details section of the reviewers’ panel of the Editorial Manager for the respective reviewed paper. The score may change at the next peer-review performed by the reviewer for another manuscript, and then the mean score is entered in the reviewer’s details section of the Editorial Manager workflow platform.

Appendix D: The assumption on c that rules out the case in which the journal does not need to motivate any effort from the reviewer

Comparing the journal’s expected profit for the cases of medium and low quality standard, i.e., \(\pi ^*_{Q=M}\) and \(\pi ^*_{Q=L}\), we obtain:

if and only if

or equivalently

Therefore, if the marginal cost \(c\) associated with the journal’s standard \(Q\) is not too high, i.e., \(c < C\), then the journal’s expected profit for a medium-quality standard is strictly higher than that for a low-quality standard. Hence, the assumption \(c < C\) rules out the case in which the journal does not need to motivate any effort from the reviewer because the journal’s quality standard is strictly lower than medium quality.
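The displayed comparison and the threshold \(C\) are missing from the extracted text. One way they could be reconstructed is sketched below. It assumes the censored profit form \(r\,\mathbb{E}[\min(s,Q)] - cQ\), no payment to the reviewer when \(Q = L\) (every outcome reaches the standard), and the Appendix C compensation \(\psi / \big(1 - (q_\mathrm{H}+q_\mathrm{M})/(p_\mathrm{H}+p_\mathrm{M})\big)\) when \(Q = M\). These are assumptions, and the paper's own expression for \(C\) may be written differently.

```latex
\begin{align*}
\pi^*_{Q=L} &= (r - c)\,L, \\
\pi^*_{Q=M} &= r\,\big[(p_\mathrm{H} + p_\mathrm{M})\,M + p_\mathrm{L} L\big] - c\,M
               - \frac{\psi}{1 - (q_\mathrm{H}+q_\mathrm{M})/(p_\mathrm{H}+p_\mathrm{M})}, \\[4pt]
\pi^*_{Q=M} > \pi^*_{Q=L}
&\iff c\,(M - L) < r\,(p_\mathrm{H} + p_\mathrm{M})(M - L)
               - \frac{\psi}{1 - (q_\mathrm{H}+q_\mathrm{M})/(p_\mathrm{H}+p_\mathrm{M})} \\
&\iff c < C := r\,(p_\mathrm{H} + p_\mathrm{M})
               - \frac{\psi}{(M - L)\,\big[1 - (q_\mathrm{H}+q_\mathrm{M})/(p_\mathrm{H}+p_\mathrm{M})\big]} .
\end{align*}
```

The second line of the equivalence uses \(p_\mathrm{L} L - L = -(p_\mathrm{H} + p_\mathrm{M})\,L\), which follows from the three probabilities summing to one.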

Cite this article

Garcia, J.A., Rodriguez-Sánchez, R. & Fdez-Valdivia, J. The interplay between the reviewer’s incentives and the journal’s quality standard. Scientometrics 126, 3041–3061 (2021). https://doi.org/10.1007/s11192-020-03839-1

DOI: https://doi.org/10.1007/s11192-020-03839-1