Abstract

In this paper, we develop a penalized realized variance (PRV) estimator of the quadratic variation (QV) of a high-dimensional continuous Itô semimartingale. We adapt the principle idea of regularization from linear regression to covariance estimation in a continuous-time high-frequency setting. We show that under a nuclear norm penalization, the PRV is computed by soft-thresholding the eigenvalues of realized variance (RV). It therefore encourages sparsity of singular values or, equivalently, low rank of the solution. We prove our estimator is minimax optimal up to a logarithmic factor. We derive a concentration inequality, which reveals that the rank of PRV is—with a high probability—the number of non-negligible eigenvalues of the QV. Moreover, we also provide the associated non-asymptotic analysis for the spot variance. We suggest an intuitive data-driven subsampling procedure to select the shrinkage parameter. Our theory is supplemented by a simulation study and an empirical application. The PRV detects about three–five factors in the equity market, with a notable rank decrease during times of distress in financial markets. This is consistent with most standard asset pricing models, where a limited amount of systematic factors driving the cross-section of stock returns are perturbed by idiosyncratic errors, rendering the QV—and also RV—of full rank.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The covariance matrix of asset returns is a central component, which is required in several contexts in financial economics, such as portfolio composition, pricing of financial instruments, or risk management (e.g., Andersen et al. (2003)). In the past few decades, estimation of quadratic variation (QV) from high-frequency data has been intensively studied in the field of econometrics. The standard estimator of QV, \(\Sigma \), say over a window [0, 1], is the realized variance (RV) (e.g., Andersen and Bollerslev (1998); Barndorff-Nielsen and Shephard (2002, 2004); Jacod (1994)). Given a decreasing sequence \((\Delta _{n})_{n \ge 1}\) with \(\Delta _{n} \rightarrow 0\) and equidistant observations \((Y_{i \Delta _{n}})_{i = 0}^{ \lfloor \Delta _{n}^{-1} \rfloor }\) of a d-dimensional semimartingale \((Y_{t})_{t \in [0,1]}\), the RV is defined as

where \(\Delta _{k}^{n} Y = Y_{k \Delta _{n}} - Y_{(k-1) \Delta _{n}}\) is the increment, and \(^{ \top }\) denotes the transpose operator. The asymptotic properties of \(\widehat{\Sigma }_n\) are generally known, when the dimension d is fixed (e.g., Barndorff-Nielsen et al. (2006); Diop et al. (2013); Heiny and Podolskij (2020); Jacod (1994, 2008)). In particular, for any semimartingale \(\widehat{\Sigma }_n\) is by definition a consistent estimator of \(\Sigma \).

When modeling the dynamics of a large financial market over a relatively short time horizon, one is often faced with an ill-posed inference problem, where the number of assets d is comparable to (or even exceeding) the number of high-frequency data \(\lfloor \Delta _{n}^{-1} \rfloor \) available to compute \(\widehat{ \Sigma }_{n}\). This renders RV inherently inaccurate, and it also implies that d cannot be treated as fixed in the asymptotic analysis. To recover an estimate of \(\Sigma \) in such a high-dimensional setting, the covariance matrix has to display a more parsimonious structure.

Currently, the literature on high-dimensional high-frequency data, mostly based on a continuous semimartingale model for the security price process, is rather scarce. Wang and Zou (2010) investigate optimal convergence rates for estimation of QV under sparsity constraints on its entries. Zheng and Li (2011) apply random matrix theory to estimate the spectral eigenvalue distribution of QV in a one-factor diffusion setting. Related work based on random matrix theory and eigenvalue cleaning, or on approximating the high-dimensional system by a low-dimensional factor model, can be found in, e.g., Cai et al. (2020); Hautsch et al. (2012); Lunde et al. (2016). Aït-Sahalia and Xiu (2017) study a high-dimensional factor model, where the eigenvalues of QV are assumed to be dominated by common components (spiked eigenvalue setting), and estimate the number of common factors under sparsity constraints on the idiosyncratic components.

In the context of joint modeling of the cross-section of asset returns, if a factor model is adopted, one should expect a small number of eigenvalues of \(\Sigma \) to be dominant (see also Sect. 4). In other settings, such as when the market is complete and some entries of \(Y_{t}\) reflect prices on derivative contracts (spanned by the existing assets and therefore redundant), one should even expect the rank of the volatility to be strictly smaller than the dimension. In such an instance, the rank of the covariance matrix is smaller than d, meaning that the intrinsic dimension of the system can be represented in terms of a driving Brownian motion of lower dimension. In general, identifying a low rank can, for instance, help with the economic interpretation of the model, or it may lighten the computational load related to its estimation or simulation. We refer to several recent studies on identification and estimation of the intrinsic dimension of the driving Brownian motion and eigenvalue analysis of QV in Aït-Sahalia and Xiu (2017, 2019); Fissler and Podolskij (2017); Jacod et al. (2008); Jacod and Podolskij (2013, 2018), see also Kong (2017, 2020) in the high-dimensional setting. A related contribution of Pelger (2019) incorporates a finite-activity jump process in the model.

In this paper, we develop a regularized estimator of \(\Sigma \) called the penalized realized variance (PRV). We draw from the literature of shrinkage estimation in linear regression (e.g., Hoerl and Kennard (1970); Tibshirani (1996)). In particular, we propose the following LASSO-type estimator of \(\Sigma \) based on the RV in (1.1):

Here, \(\lambda \ge 0\) is a tuning parameter that controls the degree of penalization, while \(\Vert \, \cdot \, \Vert _{1}\) and \(\Vert \, \cdot \, \Vert _{2}\) denote the nuclear and Frobenius matrix norm. It has been shown in various contexts that estimators based on nuclear norm regularization possess good statistical properties, such as having optimal rates of convergence and being of low rank. For instance, Koltchinskii et al. (2011) study such properties in the trace regression model and, in particular, in the matrix completion problem, where one attempts to fill out missing entries of a partially observed matrix. Negahban and Wainwright (2011) adopt a rather general observation model and, among other things, cover estimation in near low rank multivariate regression models and vector autoregressive processes. Further references in this direction include Argyriou et al. (2008); Bach (2008); Candès and Recht (2009); Recht et al. (2010). While previous papers on nuclear norm penalization are solely dedicated to discrete-time models, the current work is—to the best of our knowledge—the first to study this problem in a continuous-time Itô setting. This implies a number of subtle technical challenges, since the associated high-frequency data are dependent and potentially non-stationary.

We provide a complete non-asymptotic theory for the PRV estimator in (1.2) by deriving bounds for the Frobenius norm of its error. We show that it is minimax optimal up to a logarithmic factor. The derivation relies on advanced results from stochastic analysis combined with a Bernstein-type inequalities presented in Theorems 2.3 and 2.4, which constitute some of the major theoretical contributions of the paper. The latter builds upon recent literature on concentration inequalities for matrix-valued random variables, but it is more general as the variables are allowed to be both dependent and unbounded. We discuss the necessary conditions on d and \(\Delta _n\) to ensure consistency results. We further show that in a “local-to-deficient” rank setting, where some eigenvalues are possibly decreasing as the dimension of the system increases, the penalized estimator in (1.2) identifies the number of non-negligible eigenvalues of \(\Sigma \) with high probability.

The estimator depends on a tuning parameter \(\lambda \). We exploit the subsampling approach of Christensen et al. (2017) to choose a data-driven amount of shrinkage in the implementation. We also provide a related theoretical analysis for the local volatility, which is more likely to exhibit a lower rank than QV (the latter is only expected to have a near low rank in many settings).

In the empirical analysis, we look at the 30 members of the Dow Jones Industrial Average index to demonstrate that our results are consistent with a standard Fama–French three-factor structure, and one may expect a lower number of factors during crisis.

The paper proceeds as follows. In Sect. 2, we establish concentration inequalities for the estimation error \(\Vert \widehat{\Sigma }^\lambda _n - \Sigma \Vert _{2}\) and show minimax optimality up to a logarithmic factor. In Sect. 2.3, we present sufficient conditions to ensure that the rank of \(\widehat{\Sigma }^\lambda _n\) coincides with the number of non-negligible eigenvalues of \(\Sigma \) with high probability. In Sect. 2.4, we show how the tuning parameter \(\lambda \) can be selected with a data-driven approach. In Sect. 3, the associated non-asymptotic theory for estimation of the instantaneous variance is developed. In Sect. 4, we design a simulation study to demonstrate the ability of our estimator to identify a low-dimensional factor model through \({{\,\textrm{rank}\,}}( \widehat{ \Sigma }_{n}^{ \lambda })\). In Sect. 5, we implement the estimator on empirical high-frequency data from the 30 members of the Dow Jones Industrial Average index. The proofs are included in the appendix.

1.1 Notation

This paragraph introduces some notation used throughout the paper. For a vector or a matrix A the transpose of A is denoted by \(A^{ \top }\). We heavily employ the fact that any real matrix \(A \in \mathbb {R}^{m \times q}\) admits a singular value decomposition (SVD) of the form:

where \(s_{1} \ge \cdots \ge s_{m \wedge q}\) are the singular values of A, and \(\{u_1,\dots , u_{m\wedge q}\}\) and \(\{v_1,\dots , v_{m\wedge q}\}\) are orthonormal vectors. If \(m = q\) and A is symmetric and positive semidefinite, its singular values coincide with its eigenvalues and the SVD is the orthogonal decomposition (meaning that one can take \(v_{k} = u_{k}\)). Sometimes we write \(s_{k}(A)\), \(v_{k}(A)\) and \(u_{k}(A)\) to explicitly indicate the dependence on the matrix A. The rank of A is denoted by \({{\,\textrm{rank}\,}}(A)\), whereas

is the number of singular values of A exceeding a certain threshold \(\varepsilon \in [0, \infty )\). Note that \(\varepsilon \mapsto {{\,\textrm{rank}\,}}(A; \varepsilon )\) is non-increasing on \([0,\infty )\), and càdlàg and piecewise constant on \((0,\infty )\). Furthermore, \({{\,\textrm{rank}\,}}(A;0) = m\wedge q\) and \(\lim _{ \varepsilon \downarrow 0} {{\,\textrm{rank}\,}}(A;\varepsilon ) = {{\,\textrm{rank}\,}}(A)\). We denote by \(\Vert A \Vert _{p}\) the Schatten p-norm of \(A \in \mathbb {R}^{m \times q}\), i.e.,

for \(p \in (0,\infty )\). Moreover, \(\Vert A \Vert _{ \infty }\) denotes the maximal singular value of A, i.e., \(\Vert A\Vert _{\infty }= s_{1}\). In particular, in this paper we work with \(p=1\), \(p=2\), and \(p = \infty \) corresponding to the nuclear, Frobenius, and spectral norm. The Frobenius norm is also induced by the inner product \(\langle A,B \rangle = {{\,\textrm{tr}\,}}(A^{ \top } B)\) and we have the trace duality

For a linear subspace \(S \subseteq \mathbb {R}^m\), \(S^{\perp }\) denotes the linear space orthogonal to S and \(P_{S}\) stands for the projection onto S.

Given two sequences \((a_{n})_{n \ge 1}\) and \((b_{n})_{n \ge 1}\) in \((0, \infty )\), we write \(a_{n} = o(b_{n})\) if \(\lim _{n \rightarrow \infty } a_{n}/b_{n} = 0\) and \(a_{n} = O (b_{n})\) if \(\limsup _{n \rightarrow \infty } a_{n}/b_{n}\) is bounded. Furthermore, if both \(a_{n} = O(b_{n})\) and \(b_{n} = O(a_{n})\), we write \(a_{n} \asymp b_{n}\). Finally, to avoid trivial cases we assume throughout the paper that the dimension d of the process \((Y_t)_{t\in [0,1]}\) is at least two.

2 Theoretical framework

We suppose a d-dimensional stochastic process \(Y_{t} = (Y_{1,t}, \dots , Y_{d,t})^{ \top }\) is defined on a filtered probability space \((\Omega , \mathcal {F}, ( \mathcal {F}_{t})_{t \in [0,1]}, \mathbb {P})\). In our context, \(Y_{t}\) is a time t random vector of log-prices of financial assets, which are traded in an arbitrage-free, frictionless market and therefore semimartingale (e.g., Delbaen and Schachermayer (1994)). In particular, we assume \((Y_t)_{t \in [0,1]}\) is a continuous Itô semimartingale, which can be represented by the stochastic differential equation:

where \((W_{t})_{t \in [0,1]}\) is an adapted d-dimensional standard Brownian motion, \(( \mu _{t})_{t \in [0,1]}\) is a progressively measurable and locally bounded drift with values in \(\mathbb {R}^{d}\), and \((\sigma _{t})_{t \in [0,1]}\) is a predictable and locally bounded stochastic volatility with values in \(\mathbb {R}^{d \times d}\). In the above setting the QV (or integrated variance) is \(\Sigma = \int _{0}^{1} c_{t} \textrm{d}t\), where \(c_t \equiv \sigma _{t} \sigma _{t}^{ \top }\). We remark that the length \(T = 1\) of the estimation window [0, T] is fixed for expositional convenience and is completely without loss of generality, since a general T can always be reduced to the unit interval via a suitable time change.

Note that we exclude a jump component from (2.1). It should be possible to extend our analysis and allow for at least finite-activity jump processes. To do so, we can replace the RV with its truncated version, following the ideas of Mancini (2009). We leave the detailed analysis of more general jump processes for future work. Apart from these restrictions, our model is essentially nonparametric, as it allows for almost arbitrary forms of random drift, volatility, and leverage.

2.1 Upper bounds for the PRV

The goal of this section is to derive a sharp upper bound on the estimation error \(\Vert \widehat{ \Sigma }_{n}^{ \lambda } - \Sigma \Vert _{2}\) that apply with high probability, where the PRV estimator \(\widehat{ \Sigma }_{n}^{ \lambda }\) has been defined in (1.2). We first show that the PRV can alternatively be found by soft-thresholding of the eigenvalues in the orthogonal decomposition of \(\widehat{ \Sigma }_{n}\).

Proposition 2.1

Let \(\widehat{ \Sigma }_{n} = \sum _{k=1}^{d} s_{k} u_{k}u_{k}^{ \top }\) be the orthogonal decomposition of \(\widehat{ \Sigma }_{n}\). Then, the unique solution \(\widehat{ \Sigma }_{n}^{ \lambda }\) to (1.2) is given by

Proposition 2.1 is standard (see Koltchinskii et al. (2011)), but we state it explicitly for completeness. The interpretation of the PRV representation in (2.2) is that all “significant” eigenvalues of \(\widehat{ \Sigma }_{n}\) are shrunk by \(\lambda /2\), while the smallest ones are set to 0. Hence, we only retain the principal eigenvalues in the orthogonal decomposition of \(\widehat{ \Sigma }_{n}\). The PRV can therefore account for a (near) low rank constraint. In the next proposition, we present a general non-probabilistic oracle inequality for the performance of \(\widehat{ \Sigma }_{n}^{ \lambda }\).

Proposition 2.2

Assume that \(2 \Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{ \infty } \le \lambda \). Then, it holds that

In particular, \(\Vert \widehat{ \Sigma }_{n}^{ \lambda } - \Sigma \Vert _{2}^{2} \le \min \left\{ 2 \lambda \Vert \Sigma \Vert _{1}, 3 \lambda ^{2} {{\,\textrm{rank}\,}}(\Sigma ) \right\} \).

The statement of Proposition 2.2 is also a general algebraic result that is standard for optimization problems under nuclear penalization (e.g., Koltchinskii et al. (2011)). It says that an oracle inequality for \(\widehat{ \Sigma }_{n}^{ \lambda }\) is available if we can control the stochastic error \(\Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{ \infty }\). The latter is absolute key to our investigation.

In order to assess this error, we need to impose the following assumption on the norms of the drift and volatility processes.

Assumption (A): \(\sup _{t\in [0,1]} \Vert \mu _{t} \Vert _{2}^{2} \le \nu _{ \mu }\), \(\sup _{t \in [0,1]} {{\,\textrm{tr}\,}}(c_{t}) \le \nu _{c,2}\), and \(\sup _{t \in [0,1]} \Vert c_{t} \Vert _{ \infty } \le \nu _{c, \infty }\) for some constants \(\nu _{ \mu }\), \(\nu _{c,2}\), and \(\nu _{c, \infty }\) with \(\nu _{c, \infty } \le \nu _{c,2}\).

Mathematically speaking, Assumption (A) appears a bit strong, since it imposes an almost sure bound on the drift and volatility. We can weaken the requirement on the drift to a suitable moment condition without affecting the rate of \(\Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{ \infty }\) in Theorem 2.4 below, but as usual the cost of this is more involved expressions. If the volatility does not meet the boundedness condition, which it does not for most stochastic volatility models, one can resort to the localization technique from Sect. 4.4.1 in Jacod and Protter (2012). In most financial applications, however, Assumption (A) is not too stringent if the drift and volatility do not vary strongly over short time intervals, such as a day.

The constants \(\nu _{ \mu }\), \(\nu _{c,2}\), and \(\nu _{c, \infty }\) may depend on d, but they should generally be as small as possible to get the best rate (see, e.g., (2.13)). For example, if the components of \(\mu \) and c are uniformly bounded, we readily deduce that

To study the concentration of \(\Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{ \infty }\), we present an exponential Bernstein-type inequality, which applies to matrix-valued martingale differences as long as the conditional moments are sufficiently well-behaved. While there are several related concentration inequalities (see, e.g., Minsker (2017); Tropp (2011, 2012, 2015) and references therein), existing results require that summands are either independent or bounded. Thus, to be applicable in our setting we needed to modify these.

Theorem 2.3

Suppose that \((X_{k})_{k=1}^{n}\) is a martingale difference sequence with respect to a filtration \(( \mathcal {G}_{k})_{k=0}^{n}\) and with values in the space of symmetric \(d \times d\) matrices. Assume that, for some predictable sequence \((C_{k})_{k=1}^{n}\) of random variables and constant \(R \in (0, \infty )\),

Then, for all \(x,\nu \in (0, \infty )\), it holds that

Assume that Theorem 2.3 applies and that \(C_{1}, \dots , C_{n}\) in (2.5) can also be chosen to be deterministic. Then, with \(\nu = \sum _{k=1}^{n} C_{k}\),

so \(\Vert \sum _{k=1}^n X_k \Vert _\infty \) has a sub-exponential tail. The next theorem derives a concentration inequality for \(\Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{ \infty }\). We remark that, although we have Theorem 2.3 at our disposal, the derivation of Theorem 2.4 requires a number of non-standard inequalities in order to prove that R in (2.5) can be chosen sufficiently small. For further details, see the discussion in relation to its proof in the supplementary file.

Theorem 2.4

Suppose that Assumption (A) holds. Then, there exists an absolute constant \(\gamma \) such that

for all \(\displaystyle x \ge \gamma \max \left\{ \frac{ \nu _{c,2} \nu _{\mu } \Delta _{n}}{ \nu _{c, \infty }}, \sqrt{ \nu _{c,2} \nu _{ \mu } \Delta _{n}}, \nu _{c, \infty } \Delta _{n} \right\} \).

We now combine Proposition 2.2 and Theorem 2.4 to deliver a result on the concentration of \(\Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{2}\), which is the main statement of this section.

Theorem 2.5

Suppose that Assumption (A) holds. For a given \(\tau \in (0,\infty )\) consider a regularization parameter \(\lambda \) such that

where \(\gamma \) is a large absolute constant. Then, with probability at least \(1 - e^{- \tau }\),

for all \(A \in \mathbb {R}^{d \times d}\).

If the drift term is non-dominant, in the sense that \(\nu _{ \mu } \le \nu _{c, \infty }\), it follows that the regularization parameter \(\lambda \) should meet

for an absolute constant \(\gamma \). To get a concentration probability as large as possible without impairing the rate implied by (2.10), we should choose \(\tau \asymp \log (d)\). Moreover, the first term of the maximum in (2.11) is largest for \(1/ \Delta _{n} \ge ( \log (d)+ \tau ) \nu _{c,2}/ \nu _{c, \infty }\). In light of these observations, the following corollary is an immediate consequence of Theorem 2.5, so we exclude the proof.

Corollary 2.6

Suppose that Assumption (A) holds with \(\nu _{ \mu } \le \nu _{c, \infty } \le \nu _{c,2}\). Assume further that \(\Delta _{n}^{-1} \ge 2 \frac{ \nu _{c,2}}{ \nu _{c, \infty }} \log (d)\) and choose the regularization parameter

where \(\gamma \) is a sufficiently large absolute constant. Then, it holds that

with probability at least \(1-d^{-1}\).

It follows from (2.13) that the estimation error of \(\widehat{ \Sigma }_{n}^{ \lambda }\) is closely related to the size of \(\nu _{c,2}\) and \(\nu _{c, \infty }\), which are both determined from the properties of the volatility process. If c is uniformly bounded, \(\nu _{c,2} = O(d)\). As emphasized by Tropp (2015, Section 1.6.3), we can also often assume that \(\Vert c_{s} \Vert _{ \infty }\) and, hence, \(\nu _{c, \infty }\) can be chosen independently of d. When this is the case, the rate implied by (2.13) is \({{\,\textrm{rank}\,}}( \Sigma ) \Delta _{n}d \log (d)\), and since \({{\,\textrm{rank}\,}}( \Sigma ) \le d\), Corollary 2.6 implies consistency of \(\widehat{ \Sigma }_{n}^{ \lambda }\) when both \(\Delta _{n} \rightarrow 0\) and \(d \rightarrow \infty \) so long as \(d^{2} \log (d) = o( \Delta _{n}^{-1})\). If \({{\,\textrm{rank}\,}}( \Sigma )\) can be further bounded by a constant (that does not depend on d), the growth condition on d improves to \(d \log (d) = o( \Delta _{n}^{-1})\). In contrast, for the estimation error \(\Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{2}^{2} \rightarrow 0\), one cannot expect a better condition than \(d^{2} = o( \Delta _{n}^{-1})\), since this estimation error corresponds to a sum of \(d^{2}\) squared error terms, each of the order \(\Delta _{n}\).

2.2 Minimax optimality

We denote by \(\mathcal {C}_{r}\), for a given non-zero integer \(r \le d\), the subclass of \(d \times d\) symmetric positive semidefinite matrices \(\mathcal {S}_{+}^{d}\) whose effective rank is bounded by r:

where

Compared to the rank, the effective rank is a more stable measure for the intrinsic dimension of a covariance matrix (see, e.g., (Vershynin 2010, Remark 5.53)). In the following we argue that \(\widehat{ \Sigma }_{n}^{ \lambda }\), with \(\lambda \) of the form (2.12), is a minimax optimal estimator of \(\Sigma \) in the parameters \(\nu _{c,2}\), \(\nu _{c, \infty }\), and \({{\,\textrm{rank}\,}}( \Sigma )\) over the parametric class of continuous Itô processes generated by (2.1) with no drift \(\mu _{s} \equiv 0\) and a constant volatility \(\sigma _{s} \equiv \sqrt{A}\) for \(A \in \mathcal {C}_{r}\). To this end, denote by \(\mathbb {P}_{A}\) a probability measure for which \((Y_{t})_{t \in [0,1]}\) is defined as in (2.1) with no drift and constant volatility \(c_{s} \equiv A\). In this setting, we can choose \(\nu _{c,2} = {{\,\textrm{tr}\,}}(A)\) and \(\nu _{c,\infty } = \Vert A \Vert _{\infty }\), which by Corollary 2.6 means that

for an absolute constant \(\overline{ \gamma }\) and \(\Delta _{n}^{-1} \ge 2r \log (d)\).

Now, since the log-price increments \(\Delta _{1}^{n} Y, \dots , \Delta _{ \lfloor \Delta _{n}^{-1} \rfloor }^{n} Y\) are i.i.d. Gaussian random vectors under \(\mathbb {P}_{A}\), we can exploit existing results from the literature to assess the performance of \(\widehat{ \Sigma }_{n}^{ \lambda }\). Hence, the following is effectively an immediate consequence of Theorem 2 in Lounici (2014). It shows that, up to a logarithmic factor, no estimator can do better than (2.16).

Theorem 2.7

Let \(\mathcal {C}_r\) be given as in (2.14) and suppose that \(\lfloor \Delta _n^{-1}\rfloor \ge r^2\). Then, there exist absolute constants \(\beta \in (0,1)\) and \(\underline{\gamma }\in (0,\infty )\) such that

where the infimum runs over all estimators \(\widehat{\Sigma }\) of \(\Sigma \) based on \(( \Delta _{1}^{n}Y, \dots , \Delta _{ \lfloor \Delta _{n}^{-1} \rfloor }^{n}Y)\).

2.3 Bound on the rank of PRV

In this section, we study the rank of \(\widehat{ \Sigma }_{n}^{ \lambda }\) relative to the number of non-negligible eigenvalues and, in particular, the true rank of \(\Sigma \). In line with Sect. 2.1, we begin by stating a general non-probabilistic inequality in Theorem 2.8. In the formulation of this result, we recall that \({{\,\textrm{rank}\,}}(A; \varepsilon )\) is the number of singular values of A exceeding \(\varepsilon \in [0, \infty )\).

Theorem 2.8

Suppose that \(2 \Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{ \infty } \le \bar{ \lambda }\) for some \(\bar{ \lambda } \in (0, \infty )\). Then

for any \(\lambda \in ( \bar{ \lambda }, \infty )\). In particular, if \(\lambda \in ( \bar{ \lambda }, s]\), where s is the smallest non-zero eigenvalue of \(\Sigma \), then \({{\,\textrm{rank}\,}}( \widehat{ \Sigma }_{n}^{ \lambda }) = {{\,\textrm{rank}\,}}( \Sigma )\).

With this result in hand we can rely on the exponential inequality for the quantity \(\Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{ \infty }\) established in Theorem 2.4 to show that, with high probability and in addition to converging to \(\Sigma \) at a fast rate, \(\widehat{ \Sigma }_{n}^{ \lambda }\) automatically has the rank of \(\Sigma \) when we neglect eigenvalues of sufficiently small order. In particular, if \(\Sigma \) has full rank, but many of its eigenvalues are close to zero, \(\widehat{ \Sigma }_{n}^{ \lambda }\) is of low rank and reflects the number of important components (or factors) in \(\Sigma \).

Corollary 2.9

Suppose that Assumption (A) holds with \(\nu _{ \mu } \le \nu _{c, \infty } \le \nu _{c,2}\) and fix \(\delta \in (0,1/2)\). Assume further that \(\Delta _{n}^{-1} \ge 2 \frac{ \nu _{c,2}}{ \nu _{c, \infty }} \log (d)\) and, for a sufficiently large constant \(\gamma \) depending only on \(\delta \), choose the regularization parameter \(\lambda \) as follows:

Then, with probability at least \(1-d^{-1}\), it holds that

and

If, in addition, \(\lambda \le s\), where s is the smallest non-zero eigenvalue of \(\Sigma \), then both \({{\,\textrm{rank}\,}}( \widehat{ \Sigma }_{n}^{ \lambda }) = {{\,\textrm{rank}\,}}( \Sigma )\) and (2.20) hold with probability at least \(1-d^{-1}\).

In the setting of Corollary 2.9, it follows that with high probability an eigenvalue s of \(\Sigma \) affects \({{\,\textrm{rank}\,}}( \widehat{ \Sigma }_{n}^{ \lambda })\) for large n if \(\lambda = o(s)\), while it does not if \(s = o( \lambda )\). The first condition says that, relative to the level of shrinkage, an eigenvalue is significant (or non-negligible), whereas the second says the opposite. Hence, the notion of negligibility depends on \(\lambda \), which in turn depends on the model through the constants \(\nu _{c,2}\) and \(\nu _{c, \infty }\). However, we know that for \(\widehat{ \Sigma }_{n}^{ \lambda }\) to be a consistent estimator of \(\Sigma \), a necessary condition is that \(\lambda \rightarrow 0\) as \(n \rightarrow \infty \), which implies that for an eigenvalue of \(\Sigma \) to be negligible, it must tend to zero as n increases. In particular, if d is fixed, \({{\,\textrm{rank}\,}}( \widehat{ \Sigma }_{n}^{ \lambda }) = {{\,\textrm{rank}\,}}( \Sigma )\) with a probability tending to one as \(n \rightarrow \infty \).

The following example illustrates a stylized setting, where many eigenvalues of \(\Sigma \) are negligible.

Example 2.10

Let \(r\in \mathbb {N}\) be an absolute constant (i.e., independent of d and n). In line with the factor model studied in Aït-Sahalia and Xiu (2017), suppose that \(c_{t}\) is of the form

where \(e_{t} \in \mathbb {R}^{r \times r}\) and \(g_{t} \in \mathbb {R}^{d \times d}\) are predictable, symmetric, and positive definite processes, which are uniformly bounded such that \(\Vert e_{t} \Vert _{ \infty } \le C_{e}\) and \(\Vert g_{t} \Vert _{ \infty } \le C_{g}\) for some constants \(C_{e}, C_{g} = O(1)\). The matrix \(\beta \in \mathbb {R}^{d \times r}\) of factor loadings is deterministic and constant in time; a common assumption in the literature, which is also supported by Reiss et al. (2015). The form (2.22) of \(c_{t}\) can be motivated by a standard multi-factor model, where the total risk associated with any of the d assets can be decomposed into a systematic and an idiosyncratic component. The systematic component \(\beta e_{t} \beta ^{ \top }\) is composed of loadings on the r underlying priced common factors in the economy, \(\beta \), and the risk of those factors, \(e_{t}\). The idiosyncratic component \(g_{t}\) is asset-specific and can therefore be reduced by diversification.

Suppose that, given an initial set of d individual securities, we start forming a new set of (orthogonalized) portfolios by taking linear combinations of the original assets, as prescribed by the APT model of Ross (1976), and a standard way to implement factor models in practice when constructing sorting portfolios. Then, these new assets are generally diversified enough to assume that \(C_{g} = O(d^{-1})\). To identify the number of driving factors, r, we assume that \(\Vert d^{- \gamma } \beta ^{ \top } \beta - I_{r} \Vert _{ \infty } = o(1)\) (as \(d \rightarrow \infty \)) and \(s_{r}(e_{t}) \ge \varepsilon \) for some absolute constants \(\gamma \in [0,1]\) and \(\varepsilon \in (0, \infty )\). This corresponds to Assumption 4 in Aït-Sahalia and Xiu (2017), but it is slightly more general as the assets are assumed to be diversified portfolios.

Now, given a suitable drift process \(( \mu _{t})_{t \in [0,1]}\), Corollary 2.9 applies with regularization parameter \(\lambda = O \left( d^{ \gamma } \sqrt{ \Delta _{n} \log (d)} \right) \). In particular, due to the fact \(0 \le s_{k} ( \Sigma ) - s_{k} ( \beta E \beta ^{ \top }) \le \Vert \Gamma \Vert _{ \infty }\) with \(E = \int _0^1 e_t \textrm{d} t\) and \(\Gamma = \int _0^1 g_t \textrm{d} t\), it follows from (2.21) that

with probability at least \(1 - d^{-1}\). By assumption \(\vert s_{r}( \beta E \beta ^{ \top }) - d^{ \gamma } s_{r}(E) \vert = o(d^{ \gamma })\) and \(s_{r}(E) \ge \varepsilon \) (the former follows from arguments as in the proof of Theorem 1 in Aït-Sahalia and Xiu (2017)), and hence \(d^{ \gamma } = O(s_{r}( \beta E \beta ^{ \top }))\). Combining this with (2.23), we conclude that \({{\,\textrm{rank}\,}}( \widehat{ \Sigma }_{n}^{ \lambda }) = r\) with probability at least \(1 - d^{-1}\) if both \(\log (d) = o( \Delta _{n}^{-1})\) and \(\Delta _{n}^{-1}= o(d^{2+2 \gamma } \log (d))\), and n is large. Or to put it differently, \({{\,\textrm{rank}\,}}( \widehat{ \Sigma }_{n}^{ \lambda })\) is, with a probability tending to one, exactly the number of underlying factors in the model.

We remark that the restrictions imposed above are too weak to ensure that the Frobenius estimation error \(\Vert \widehat{ \Sigma }_{n}^{ \lambda } - \Sigma \Vert _{2}\) tends to zero, meaning that our estimator can be used to detect the underlying factor structure even in very high-dimensional settings. To ensure the estimation error is small, we need \(d^{1+2 \gamma } \log (d) = o( \Delta _{n}^{-1})\) rather than \(\log (d) = o( \Delta _{n}^{-1})\).

2.4 Selection of tuning parameter

In view of Theorem 2.7 and Corollary 2.9, it follows that \(\widehat{ \Sigma }_{n}^{ \lambda }\) can be of low rank and accurate, given optimal tuning of \(\lambda \). However, as evident from (2.19), \(\lambda \) depends on the latent spot variance process \((c_t)_{t \in [0,1]}\) through \(\nu _{c,2}\) and \(\nu _{c, \infty }\) as well as the unknown absolute constant \(\gamma \), whose value can be important in finite samples.

We remark that \(\widehat{ \Sigma }_{n} - \Sigma \) provides an estimate of the null matrix, but it is perturbed by randomness in the data. Hence, a good choice of shrinkage parameter exactly shrinks the eigenvalues of \(\widehat{ \Sigma }_{n} - \Sigma \) to zero (in view of problem (1.2) with \(\widehat{ \Sigma }_{n}\) replaced by \(\widehat{ \Sigma }_{n} - \Sigma \)). By Proposition 2.1, this means \(\lambda = 2 \Vert \widehat{ \Sigma }_{n} - \Sigma \Vert _{ \infty }\).

The above is unobservable. To circumvent this problem and facilitate the calculation of our estimator in Sect. 4 and 5, we adopt a data-driven shrinkage selection by exploiting the subsampling technique of Christensen et al. (2017), see also Politis et al. (1999) and Kalnina (2011). To explain the procedure in short, suppose for notational convenience that \(n = \Delta _{n}^{-1}\). We select an integer L—the number of subsamples—that divides n and assign log-returns successively to each subsample. Hence, the lth subsample consists of the increments \(\left( \Delta _{(k - 1)L + l}^{n} Y \right) _{k = 1, \ldots , n/L}\) for \(l = 1, \dots , L\). We denote the associated subsampled RV by

Note that the random matrices in the sequence \((\widehat{ \Sigma }_{n,l})_{l = 1}^{L}\) are asymptotically conditionally independent, as \(n \rightarrow \infty \). Moreover, it follows from Christensen et al. (2017) that as \(n \rightarrow \infty \), \(L \rightarrow \infty \) and \(n/L \rightarrow \infty \), the half-vectorization of \(\sqrt{n/L}( \widehat{\Sigma }_{n,l} - \widehat{\Sigma }_{n})\) and \(\sqrt{n}(\widehat{\Sigma }_{n} - \Sigma )\) converge in law to a common mixed normal distribution, derived in Barndorff-Nielsen and Shephard (2004, Theorem 1).

Hence, in each subsample \(\lambda = 2 \Vert \widehat{\Sigma }_{n} - \Sigma \Vert _{ \infty }\) can be approximated by

As an estimator of \(\lambda \), we therefore take the sample average:

3 Estimation of the local variance

As noted in Sect. 1, the rank of the local variance \(c_{t}\) is often much smaller than the rank of \(\Sigma \). In fact, although \(\Sigma \) may be well-approximated by a matrix of low rank, we should expect that \({{\,\textrm{rank}\,}}(\Sigma ) = d\). On the other hand, there are many situations where it is reasonable to expect that \({{\,\textrm{rank}\,}}(c_{t})\) is small, in which case it provides valuable insight about the complexity of the underlying model. This motivates developing a theory for the spot version of our penalized estimator with particular attention on its ability to identify \({{\,\textrm{rank}\,}}(c_t)\).

To estimate \(c_{t}\), we follow Jacod and Protter (2012) and apply a localized realized variance, which is defined over a block of \(\lfloor h_{n} / \Delta _{n} \rfloor \ge 2\) log-price increments with \(h_{n} \in (0,1)\). The RV computed over the time window \([t, t+h_{n}]\), for \(t \in (0,1-h_{n}]\), is then defined as follows:

The corresponding penalized version \(\widehat{ \Sigma }_{n}^{ \lambda } (t;t+h_{n})\) is computed as

or by using Proposition 2.1 with \(\widehat{ \Sigma }_{n}\) replaced by \(\widehat{ \Sigma }_{n}(t;t+h_{n})\). Then, the penalized estimator \(\hat{c}_{n}^{ \lambda }(t)\) of \(c_{t}\) is given by

Also, we write

for the QV over \((t_{1},t_{2}]\) for arbitrary time points \(t_{1},t_{2} \in [0,1]\) with \(t_{1} < t_{2}\).

Recall that for a convex and increasing function \(\psi :[0, \infty ) \rightarrow [0, \infty )\) with \(\psi (0) = 0\), the Orlicz norm of a random variable Z with values in the Hilbert space \(( \mathbb {R}^{d \times d}, \Vert \, \cdot \, \Vert _{2})\) is defined as:

This setting includes, in particular, the \(L^{p}( \mathbb {P})\) norms (with \(\psi (s) = s^{p}\)), but also the \(\psi _{1}\) and \(\psi _{2}\) norms for sub-exponential (\(\psi (s) = e^{s}-1\)) and sub-Gaussian (\(\psi (s) = e^{s^{2}}-1\)) random variables. For convenience, we further impose the mild restriction that the range of \(\psi \) is \([0, \infty )\), so it admits an inverse \(\psi ^{-1}\) on \([0, \infty )\).

Theorem 3.1

Suppose that Assumption (A) holds with \(\nu _{ \mu } \le \nu _{c, \infty } \le \nu _{c,2}\). Furthermore, let \(t \in \big [ \frac{h_n}{2},1- \frac{3h_n}{2} \big ]\) and assume \(\sup _{0 \le u<s \le 1} \Vert c_{s} - c_{u} \Vert _{ \psi }/ \sqrt{s-u} \le \nu _{c, \psi }\) and \(h_{n} \ge 2 \Delta _{n} \frac{ \nu _{c,2}}{ \nu _{c, \infty }} \log (d)\). Take the regularization parameter \(\lambda \) as

for a sufficiently large absolute constant \(\gamma \). Then,

with probability at least \(1-d^{-1} - \psi ( \sqrt{ \log (d)})^{-1}\) for some absolute constant \(\kappa \).

The bound on the estimation error of \(\hat{c}_{n}^{ \lambda }(t)\) builds on Corollary 2.6, but Theorem 3.1 further enforces a smoothness condition on \((c_{t})_{t \in [0,1]}\). It entails a trade-off between the smoothness of spot variance and the concentration probability associated with (3.7). For example, if \((c_{t})_{t \in [0,1]}\) is 1/2-Hölder continuous in \(L^{2}( \mathbb {P})\), which corresponds to setting \(\psi (s) = s^{2}\), then we end up with a concentration probability converging to one at rate \(\log (d)^{-1}\), which is slower than for the PRV. On the other hand, if \((c_{t})_{t \in [0,1]}\) is 1/2-Hölder continuous in the sub-Gaussian norm \(\psi (s) = e^{s^{2}}-1\), the concentration probability converges to one at the rate \(d^{-1}\), which is equivalent to the PRV.

Note that with d fixed, (3.7) reveals how to select the length of the estimation window \(h_{n}\) optimally. The upper bound depends on \(\Delta _{n}/h_{n}\) and \(h_{n}\), and for these terms to converge to zero equally fast, we should take \(h_{n} \asymp \sqrt{ \Delta _{n}}\). This is consistent with the literature on spot variance estimation; see, e.g., Jacod and Protter (2012).

The next result, which relies on Corollary 2.9, shows that the rank and performance of \(\hat{c}_{n}^{ \lambda }(t)\) can be controlled simultaneously.

Theorem 3.2

Suppose that Assumption (A) holds with \(\nu _{ \mu } \le \nu _{c, \infty } \le \nu _{c,2}\) and fix \(\delta \in (0,1/4)\). Furthermore, let \(t \in [ \frac{h_n}{2},1 - \frac{3h_n}{2}]\) and assume that \(\sup _{0 \le u < s \le 1} \Vert c_{s} - c_{u} \Vert _{ \psi } / \sqrt{s-u} \le \nu _{c, \psi }\). Suppose further that \(h_{n} \ge 2 \Delta _{n} \frac{ \nu _{c,2}}{ \nu _{c, \infty }} \log (d)\) and consider a regularization parameter \(\lambda \) such that

for a sufficiently large constant \(\gamma \) depending only on \(\delta \). Then, with probability at least \(1-d^{-1}- \psi ( \sqrt{ \log (d)})^{-1}\), it holds that

and

where

and \(\kappa \) is an absolute constant. If, in addition, \(\underline{ \varepsilon } > 0\) and \(\overline{ \varepsilon } \le s_{ {{\,\textrm{rank}\,}}(c_{t})} (c_{t})\), then both (3.9) and \({{\,\textrm{rank}\,}}(\hat{c}_{n}^{ \lambda }(t)) = {{\,\textrm{rank}\,}}(c_{t})\) hold with probability at least \(1-d^{-1}- \psi ( \sqrt{ \log (d)})^{-1}\).

Consider the setting of Theorem 3.2. For the upper bound in (3.10) to be useful, we must have that \(\underline{ \varepsilon } > 0\) or, equivalently,

Suppose that \(\nu _{c,2}\), \(\nu _{c,\infty }\), and \(\nu _{c,\psi }\) do not depend on n. The inequality (3.12) can then be achieved in large samples by choosing \(h_{n} = o( \sqrt{ \Delta _{n}})\). However, as pointed out above one should take \(h_{n} \asymp \sqrt{ \Delta _{n}}\) in order to achieve an optimal rate for the estimation error \(\Vert \hat{c}_{n}^{ \lambda }(t) - c_{t} \Vert _{2}^{2}\). In that case, (3.12) translates directly into an upper bound on \(\nu _{c, \psi }\), which concerns the smoothness of \((c_t)_{t \in [0,1]}\).

When can the term \({{\,\textrm{rank}\,}}( \Sigma (t- \frac{h_{n}}{2}; t+ \frac{3h_{n}}{2}))\) in the upper bound of (3.9) be replaced by \({{\,\textrm{rank}\,}}(c_t)\)? This question appears difficult to answer in general, but it is not too difficult to show that the replacement is valid if the volatility process is locally constant.

4 Simulation study

In this section, we do a Monte Carlo analysis to inspect the finite sample properties of the PRV, \(\widehat{\Sigma }_n^\lambda \). The aim here is to demonstrate the ability of our estimator to detect the presence of near deficient rank in a large-dimensional setting. In view of Theorem 3.2, this can be achieved by a proper selection of \(\lambda \). We simulate a \(d = 30\)-dimensional log-price process \(Y_{t}\). The size of the vector corresponds to the number of assets in our empirical investigation. We assume \(Y_{t}\) follows a slightly modified version of the \(r = 3\)-factor model proposed in Aït-Sahalia and Xiu (2019):

where \(F_{t} \in \mathbb {R}^{r}\) is a vector of systematic risk factors, which has the dynamic:

and \(W_{t} \in \mathbb {R}^{r}\) is a standard Brownian motion. The drift term \(\alpha \in \mathbb {R}^{r}\) is constant \(\alpha = (0.05,0.03,0.02)^{ \top }\). The random volatility matrix \(\sigma _{t} = {{\,\textrm{diag}\,}}( \sigma _{1,t}, \sigma _{2,t}, \sigma _{3,t}) \in \mathbb {R}^{r \times r}\), where \({{\,\textrm{diag}\,}}( \, \cdot \,)\) is a diagonal matrix with coordinates \(\,\cdot \,\), is simulated as a Heston (1993) square-root process:

for \(j = 1, \dots , r\), where \(\widetilde{W}_{t} \in \mathbb {R}^{r}\) is an r-dimensional standard Brownian motion independent of \(W_{t}\). As in Aït-Sahalia and Xiu (2019), the parameters are \(\kappa = (3, 4, 5)^{ \top }\), \(\theta = (0.05, 0.04, 0.03)^{ \top }\), \(\eta = (0.3, 0.4, 0.3)^{ \top }\), and \(\rho = (-0.60, -0.40, -0.25)^{ \top }\). The factor correlation is captured by the Cholesky component L:

The idiosyncratic component \(Z_{t} \in \mathbb {R}^{d}\) is given by

where \(g_{t} = {{\,\textrm{diag}\,}}( \gamma _{1,t}^2, \dots , \gamma _{d,t}^2)\) with

for \(j = 1, \dots , d\), and \(B_{t} \in \mathbb {R}^{d}\) and \(\widetilde{B}_{t} \in \mathbb {R}^{d}\) are independent d-dimensional standard Brownian motions. Thus, \(g_{t}\) is the instantaneous variance of the unsystematic component. We set \(\kappa _{Z} = 4\), \(\theta _{Z} = 0.25\), and \(\eta _{Z} = 0.06\). Hence, the idiosyncratic error varies independent in the cross-section of assets, but the parameters controlling the dynamics are common.

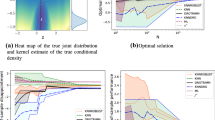

Relative importance of eigenvalues in simulated model. Note. In Panel A, we plot for daily RV the relative size of the three largest eigenvalues, and also the average of the remaining 27 eigenvalues. In Panel B, we plot a histogram of the sample average of the relative size of each of the eigenvalues across simulations

The above setup implies

so the spot covariance matrix has full rank at every time point t. However, it has only \(r = 3\) large eigenvalues associated with the systematic factors, while the remaining \(d-r\) associated with the idiosyncratic variance are relatively small. Note that in contrast to Aït-Sahalia and Xiu (2019), we allow for time-varying idiosyncratic variance.

We set \(\Delta _{n} = 1/n\) with \(n = 78\). This corresponds to 5-minute sampling frequency within a 6.5 hour window. Hence, d is relatively large compared to n. We construct 10,000 independent replications of this model. At the beginning of each simulation, we draw the associated factor loadings \(\beta \in \mathbb {R}^{d \times r}\) at random such that the first column (interpreted as the loading on the market factor) are from a uniform distribution on (0.25, 1.75), i.e. \(\beta _{i1} \sim U(0.25,1.75)\), \(i = 1, \dots , d\). The range of the support is consistent with the realized beta values reported in Table 1 in Sect. 5. The remaining columns are generated as \(\beta _{ij} \sim N(0, 0.5^{2})\), \(i = 1, \dots , d\) and \(j = 2\) and 3.

In Panel A of Fig. 1, we plot the relative size of the three largest eigenvalues, extracted from the corresponding RV, together with the average of the remaining 27 eigenvalues. In Panel B, we include a histogram of the relative size of the eigenvalues across simulations. We observe that it is generally challenging to distinguish important factors from idiosyncratic variation, since the relative size of the eigenvalues decays smoothly with the exception of the largest (and perhaps the second largest) eigenvalue.

In each simulation, we employ the subsampling procedure described in Sect. 2.4 with \(L = 6\) to choose \(\lambda ^{*}\).Footnote 1

In Panel A of Fig. 2, we report the distribution of the \(\lambda ^{ *}\) parameter across simulations, which is here expressed in percent of the maximal eigenvalue of RV. Overall, the penalization is relatively stable with a tendency to shrink around one-fourth to one-third of the largest eigenvalue most of the times.

In Panel B, we plot a histogram of the relative frequency of the rank of the PRV. In general, the PRV does a good job at identifying the number of driving factors, given the challenging environment. Although there are variations in the rank across simulations, caused by the various sources of randomness incorporated in our model, the picture is close to the truth. This means we tend to identify about one-to-three driving factors. The average rank estimate is 1.79, so as expected there is a slight tendency to overshrink leading to a small downward bias. Based on these findings, we can confidently apply the PRV in the empirical application.

5 Empirical application

In this section, we apply the PRV to an empirical dataset. The sample period is from January 3, 2007 through May 29, 2020 (3,375 trading days in total) and includes both the financial crisis around 2007 – 2009 and partly the recent events related to Covid-19.

We look at high-frequency data from the 30 members of the Dow Jones Industrial Average (DJIA) index as of April 6, 2020.Footnote 2 The ticker codes of the various firms are listed in Table 1, along with selected descriptive statistics. As readily seen from Table 1, most of these companies are very liquid. In order to compute a daily RV matrix estimate we collect the individual 5-minute log-return series spanning the 6.5 hours of trading on U.S. stock exchanges from 9:30am to 4:00pm EST.Footnote 3 Hence, our analysis is based on a relatively large cross-section (i.e., \(d = 30\)) compared to the sampling frequency (i.e., \(n = 78\)). The realized beta in Table 1 is calculated with SPY acting as market portfolio. The latter is an exchange-traded fund that tracks the S &P500 and its evolution is representative of the overall performance of the U.S. stock market. The dispersion of realized beta is broadly consistent with the simulated values generated in Sect. 4.

Proportion of variance explained by each eigenvalue. Note. In Panel A, we plot for each day in the sample the relative size of the three largest eigenvalues of RV, as well as the average of the remaining 27. In Panel B, we plot a histogram of the time series average of the relative size of each eigenvalues

In Fig. 3, we depict the factor structure in the time series of RV. In Panel A, we compute on a daily basis the proportion of the total variance (i.e., trace) explained by each eigenvalue, whereas Panel B reports the sample average of this statistic. As consistent with Aït-Sahalia and Xiu (2019), we observe a pronounced dynamic evolution in the contribution of each eigenvalue to RV with notably changes corresponding to times of severe distress in financial markets. The first factor captures the vast majority of aggregate return variation (about 35% on average). It is followed by a few smaller—but still important—factors accountable for an incremental 25% – 30% of the total variance, whereas the last 25 or so eigenvalues are relatively small.

Next, we turn our attention to the PRV estimator. To select the shrinkage parameter \(\lambda \), we follow the subsampling implementation from the simulation section. The relative frequency histogram of the rank of the PRV is reported in Panel A of Fig. 4, whereas Panel B reports a three-month (90-day) moving average of the rank. The vast majority (around 95%) of the daily rank estimates are between one and three, which is consistent with a standard Fama–French three-factor interpretation of the data. There are relatively few rank estimates at four, and it never exceeds five (with about a dozen of the latter). In Panel B, we observe the rank varies over time in an intuitive fashion, often dropping close to a single-factor representation during times of crisis, where correlations tend to rise. As a comparison, we compute the effective rank of the RV (cf. (2.15)), which does not depend on a shrinkage parameter. There is a high association between the series, which is consistent with a soft-thresholding that eliminates smaller eigenvalues of the RV.

6 Conclusion

In this paper, we develop a novel and powerful penalized realized variance estimator, which is applicable to estimate the quadratic variation of high-dimensional continuous-time semimartingales under a low rank constraint. Our estimator relies on regularization and adapts the principle ideas of the LASSO from regression analysis to the field of high-frequency volatility estimation in a high-dimensional setting. We derive a non-asymptotic analysis of our estimator, including bounds on its estimation error and rank. The estimator is found to be minimax optimal up to a logaritmic factor. We design a completely data-driven procedure for selecting the shrinkage parameter based on a subsampling approach. In a simulation study, the estimator is found to possess good properties. In our empirical application, we confirm a low rank environment that is consistent with a three-factor structure in the cross-section of log-returns from the large-cap segment of the U.S. stock market.

Notes

We also employed \(L = 13\) subsamples, but there were no discernible change in the results.

On April 6, 2020, Raytheon Technologies (RTX) replaced United Technologies (UTX) in the DJIA index following a merger of United Technologies and Raytheon Company. Moreover, on April 2, 2019, Dow replaced DowDuPont following a spin-off from the parent company, which itself was a fusion between Dow Chemical Company and DuPont in 2017. We employ high-frequency data for the preceding member prior to each index update.

We truncate 5-minute log-returns that exceed three local standard deviations, as gauged by the daily bipower variation estimator, in order to get shelter from potential jumps.

References

Aït-Sahalia Y, Xiu D (2017) Using principal component analysis to estimate a high dimensional factor model with high-frequency data. J Econ 201(2):384–399

Aït-Sahalia Y, Xiu D (2019) Principal component analysis of high-frequency data. J Am Stat Assoc 114(525):287–303

Andersen TG, Bollerslev T (1998) Answering the skeptics: yes, standard volatility models do provide accurate forecasts. Int Econ Rev 39(4):885–905

Andersen TG, Bollerslev T, Diebold FX, Labys P (2003) Modeling and forecasting realized volatility. Econometrica 71(2):579–625

Argyriou A, Evgeniou T, Pontil M (2008) Convex multi-task feature learning. Mach Learn 73(3):243–272

Bach FR (2008) Consistency of trace norm minimization. J Mach Learn Res 9(35):1019–1048

Barndorff-Nielsen OE, Graversen SE, Jacod J, Podolskij M, Shephard N (2006) A central limit theorem for realized power and bipower variations of continuous semimartingales. In: Kabanov Y, Lipster R, Stoyanov J (eds) From stochastic calculus to mathematical finance: the shiryaev festschrift. Springer, Heidelberg, pp 33–68

Barndorff-Nielsen OE, Shephard N (2002) Econometric analysis of realized volatility and its use in estimating stochastic volatility models. J Roy Stat Soc B 64(2):253–280

Barndorff-Nielsen OE, Shephard N (2004) Econometric analysis of realized covariation: high frequency based covariance, regression, and correlation in financial economics. Econometrica 72(3):885–925

Cai TT, Hu J, Li Y, Zheng X (2020) High-dimensional minimum variance portfolio estimation based on high-frequency data. J Econ 214(2):482–494

Candès EJ, Recht B (2009) Exact matrix completion via convex optimization. Found Comput Math 9(6):717–772

Christensen K, Podolskij M, Thamrongrat N, Veliyev B (2017) Inference from high-frequency data: a subsampling approach. J Econ 197(2):245–272

Clarke FH (1990) Optimization and Nonsmooth Analysis. Society for Industrial and Applied Mathematics, Philadelphia, 1st edn

Delbaen F, Schachermayer W (1994) A general version of the fundamental theorem of asset pricing. Math Ann 300(1):463–520

Diop A, Jacod J, Todorov V (2013) Central limit theorems for approximate quadratic variations of pure jump Itô semimartingales. Stoch Process Appl 123(3):839–886

Fissler T, Podolskij M (2017) Testing the maximal rank of the volatility process for continuous diffusions observed with noise. Bernoulli 23(4B):3021–3066

Hautsch N, Kyj LM, Oomen RCA (2012) A blocking and regularization approach to high dimensional realized covariance estimation. J Appl Econom 27(4):625–645

Heiny J, Podolskij M (2020) “On estimation of quadratic variation for multivariate pure jump semimartingales,” preprint arXiv:2009.02786

Heston SL (1993) A closed-form solution for options with stochastic volatility with applications to bond and currency options. Rev Financ Stud 6(2):327–343

Hoerl AE, Kennard RW (1970) Ridge regression: biased estimation for nonorthogonal problems. Technometrics 12(1):55–67

Jacod J (1994) “Limit of random measures associated with the increments of a Brownian semimartingale,” Preprint number 120, Laboratoire de Probabilitiés, Université Pierre et Marie Curie, Paris

Jacod J (2008) Asymptotic properties of realized power variations and related functionals of semimartingales. Stoch Process Appl 118(4):517–559

Jacod J, Lejay A, Talay D (2008) Estimation of the Brownian dimension of a continuous Itô process. Bernoulli 14(2):469–498

Jacod J, Podolskij M (2013) A test for the rank of the volatility process: the random perturbation approach. Ann Stat 41(5):2391–2427

Jacod J, Podolskij M (2018) On the minimal number of driving Lévy motions in a multivariate price model. J Appl Probab 55(3):823–833

Jacod J, Protter PE (2012) Discretization of processes, 2nd edn. Springer, Berlin

Kalnina I (2011) Subsampling high frequency data. J Econom 161(2):262–283

Koltchinskii V, Lounici K, Tsybakov AB (2011) Nuclear-norm penalization and optimal rates for noisy low-rank matrix completion. Ann Stat 39(5):2302–2329

Kong X-B (2017) On the number of common factors underlying large panel high-frequency data. Biometrika 104(2):397–410

Kong X-B (2020) A random-perturbation-based rank estimator of the number of factors. Biometrika 107(2):505–511

Lounici K (2014) High-dimensional covariance matrix estimation with missing observations. Bernoulli 20(3):1029–1058

Lunde A, Shephard N, Sheppard K (2016) Econometric analysis of vast covariance matrices using composite realized kernels and their application to portfolio choice. J Bus Econ Stat 34(4):504–518

Mancini C (2009) Non-parametric threshold estimation for models with stochastic diffusion coefficient and jumps. Scand J Stat 36(2):270–296

Marinelli C, Röckner M (2016) On the maximal inequalities of Burkholder, Davis and Gundy. Expo Math 34(1):1–26

Minsker S (2017) On some extensions of Bernstein’s inequality for self-adjoint operators. Stat Probab Lett 127(1):111–119

Negahban S, Wainwright MJ (2011) Estimation of (near) low-rank matrices with noise and high-dimensional scaling. Ann Stat 39(2):1069–1097

Pelger M (2019) Large-dimensional factor modeling based on high-frequency observations. J Econom 208(1):23–42

Politis DN, Romano JP, Wolf M (1999) Subsampling, 1st edn. Springer, Berlin

Recht B, Fazel M, Parrilo PA (2010) Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev 52(3):471–501

Reiss M, Todorov V, Tauchen G (2015) Nonparametric test for a constant beta between Itô semi-martingales based on high-frequency data. Stoch Process Appl 125(8):2955–2988

Ross SA (1976) The arbitrage theory of capital asset pricing. J Econ Theory 13(3):341–360

Seidler J, Sobukawa T (2003) Exponential integrability of stochastic convolutions. J Lond Math Soc 67(1):245–258

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J Roy Stat Soc B 58(1):267–288

Tropp J (2011) Freedman’s inequality for matrix martingales. Electron Commun Probab 16(1):262–270

Tropp JA (2012) User-friendly tail bounds for sums of random matrices. Found Comput Math 12(4):389–434

Tropp JA (2015) An introduction to matrix concentration inequalities.’ Foundations and Trends® in Machine Learning 8(1–2):1–230

Vershynin R (2010) Introduction to the non-asymptotic analysis of random matrices. In: Eldar YC, Kutyniok G (eds) Compressed sensing: theory and applications. Cambridge University Press, Cambridge, pp 210–268

Wang Y, Zou J (2010) Vast volatility matrix estimation for high-frequency financial data. Ann Stat 38(2):943–978

Watson GA (1992) Characterization of the subdifferential of some matrix norms. Linear Algebra Appl 170(1):33–45

Zheng X, Li Y (2011) On the estimation of integrated covariance matrices of high dimensional diffusion processes. Ann Stat 39(6):3121–3151

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Christensen and Nielsen were supported by the Independent Research Fund Denmark under grant 1028–00030B and 9056–00011B. Podolskij acknowledges funding from the ERC Consolidator Grant 815703 “STAMFORD: Statistical Methods for High Dimensional Diffusions”.

A Appendix of proofs

A Appendix of proofs

This appendix presents the proofs of the results from the main text.

Proof of Proposition 2.1

Since the map \(A \mapsto L_{n} (A) \equiv \Vert \widehat{\Sigma }_{n} - A \Vert _{2}^{2} + \lambda \Vert A \Vert _1\) is strictly convex, the minimizer A of \(L_{n}\) is uniquely determined by the property that \(0 \in \mathbb {R}^{d \times d}\) belongs to the set

Here, \(\partial L_{n}(A)\) denotes the subdifferential of \(L_{n}\) at A, and we employ Watson (1992) to get the explicit expression of this set in (A.1). Moreover, \(\{u_{1}(A), \dots , u_{d}(A) \}\) and \(\{v_{1}(A), \dots , v_{d}(A) \}\) are the vectors introduced in the notation paragraph associated with the SVD of A, whereas \(S_{1}(A)\) and \(S_{2}(A)\) are the corresponding linear spans, and \(P_{S}\) is the projection matrix onto the subspace S. With \(\widehat{ \Sigma }_{n}^{ \lambda }\) given as in (2.2), we find that \(\partial L_{n}( \widehat{ \Sigma }_{n}^{ \lambda })\) coincides with the set of matrices V of the form:

where \(W \in \mathbb {R}^{d \times d}\) is such that \(\Vert W \Vert _{ \infty } \le 1\), and U is the linear span of \(\{u_{k}\, :\, s_{k} \le \lambda /2 \}\). With \(W = \frac{2}{ \lambda } \sum _{k :s_{k} \le \lambda /2}s_{k} u_{k} u_{k}^{ \top }\), it follows that \(\Vert W \Vert _{ \infty } \le 1\) by submultiplicativity of the norm and \(P_{U} WP_{U} = W\). Hence, (A.2) shows that \(0 \in \partial L_{n} ( \widehat{ \Sigma }_{n}^{ \lambda })\), so \(\widehat{ \Sigma }_{n}^{ \lambda }\) is the unique minimizer of \(L_{n}\). \(\square \)

Proof of Proposition 2.2

The structure of the proof is based similar to the one of Theorem 1 in Koltchinskii et al. (2011), but is modified to fit our setting. Starting from the definition of \(\widehat{ \Sigma }_{n}^{ \lambda }\), and since \(L_{n}(A) = \Vert \widehat{ \Sigma }_{n} - A \Vert _{2}^{2} + \lambda \Vert A \Vert _1\), it follows that

for any \(A \in \mathbb {R}^{d \times d}\). Note also that

By using the above identity and (A.3),

The first term in the minimum follows by observing that

where we employ the trace duality \(\langle A_{1}, A_{2} \rangle \le \Vert A_{1} \Vert _{1} \Vert A_{2} \Vert _{ \infty }\), the triangle inequality, and the assumption that \(2\Vert \widehat{ \Sigma }_{n}-\Sigma \Vert _\infty \le \lambda \). To show the second part of the proposition, we observe that if B is a subgradient of \(L_{n}\) at \(\widehat{ \Sigma }_{n}^{ \lambda }\), then by definition

or, equivalently,

for all matrices A. This shows that \(B + 2 \widehat{ \Sigma }_{n}\) is a subgradient of the function \(A \mapsto \Vert A \Vert _{2}^{2} + \lambda \Vert A \Vert _{1}\) at \(\widehat{ \Sigma }_{n}^{ \lambda }\) and, thus, \(B = 2 \widehat{ \Sigma }_{n}^{ \lambda } - 2 \widehat{ \Sigma }_{n} + \lambda \widehat{V}\) for an appropriate \(\widehat{V} \in \partial \Vert \widehat{ \Sigma }_{n}^{ \lambda } \Vert _{1}\). Moreover, because \(\widehat{ \Sigma }_{n}^{ \lambda }\) is a minimizer of \(L_{n}\), there must exist \(B \in \partial L_{n}( \widehat{ \Sigma }_{n}^{ \lambda })\) such that \(\langle B, \widehat{ \Sigma }_{n}^{ \lambda } -A \rangle \le 0\) for all \(A \in \mathbb {R}^{d \times d}\) (see, e.g., (Clarke 1990), Section 2.4). Combining these facts, we establish that

for some \(\widehat{V} \in \partial \Vert \widehat{ \Sigma }_{n}^{ \lambda } \Vert _{1}\) and any \(A \in \mathbb {R}^{d \times d}\). Note the identity

Now, fix \(A \in \mathbb {R}^{d \times d}\) and consider a generic matrix \(V \in \partial \Vert A \Vert _{1}\). Then, if we subtract \(\langle 2 \Sigma + \lambda V, \widehat{ \Sigma }_{n}^{ \lambda } - A \rangle \) on both sides of (A.4), exploit (A.5), and note that \(\langle \widehat{V} - V, \widehat{ \Sigma }_{n}^{ \lambda } - A \rangle \ge 0\) by the monotonicity of subdifferentials (see (Clarke 1990, Proposition 2.2.9)), we deduce that

We shall bound each of the last two terms on the right-hand side of (A.6), starting with \(\langle V,A - \widehat{ \Sigma }_{n}^{ \lambda } \rangle \). According to Watson (1992), with \(r = {{\,\textrm{rank}\,}}(A)\) and \(A = \sum _{k=1}^{r} s_{k} u_{k} v_{k}^{ \top }\) being the SVD of A, the subdifferential \(\partial \Vert A\Vert _1\) has the characterization:

Here, \(S_{1}\) and \(S_{2}\) denote the span of \(\{u_{1}, \dots , u_{r} \}\) and \(\{v_{1}, \dots , v_{r} \}\), respectively. By the polar decomposition, one finds that \(W \in \mathbb {R}^{d \times d}\) with \(\Vert W \Vert _{ \infty } = 1\) and \(\langle W,P_{S_{1}^{ \perp }} \widehat{ \Sigma }_{n}^{ \lambda }P_{S_{2}^{ \perp }} \rangle = \Vert P_{S_{1}^{ \perp }} \widehat{ \Sigma }_{n}^{ \lambda } P_{S_{2}^{ \perp }} \Vert _{1}\). Fixing V to be the subgradient associated with this choice of W,

The last term on the right-hand side of (A.6) can be handled by setting \(B = \widehat{ \Sigma }_{n} - \Sigma - P_{S_{1}^{ \perp }}( \widehat{ \Sigma }_{n} - \Sigma )P_{S_{2}^{ \perp }}\). The Cauchy–Schwarz inequality and trace duality then delivers the estimate:

For any given matrix \(M \in \mathbb {R}^{d \times d}\):

Thus,

This shows that \(\Vert B \Vert _{2} \le \lambda \sqrt{r}\). Hence, by combining (A.6) – (A.8) we get

By subtracting \(\Vert \widehat{ \Sigma }_{n}^{ \lambda } - A \Vert _{2}^{2}\) on both sides and using the inequality \(\alpha ^{2}/4 \ge \alpha \beta - \beta ^{2}\) with \(\alpha = 3 \lambda \sqrt{r}\) and \(\beta = \Vert \widehat{ \Sigma }_{n}^{ \lambda } - A \Vert _{2}\), we arrive at the second term in the minimum (with \(A = \Sigma \)):

\(\square \)

Proof of Theorem 2.3

We set \(S_{n} = \sum _{k=1}^{n} X_{k}\) and \(\nu _{n} = \sum _{k=1}^{n} C_{k}\). The subadditivity of the maximal eigenvalue function \(\lambda _{ \max } ( \cdot )\) implies that

whenever \(\lambda _{ \max }(S_{n}) \ge x\), \(\nu _{n} \le \nu \) and \(\theta \in (0,1)\). By exponentiating both sides of (A.9), applying the spectral mapping theorem and that \(\lambda _{ \max }(A) \le {{\,\textrm{tr}\,}}(A)\) for any positive semidefinite matrix A,

where \(\displaystyle Y_{n} = {{\,\textrm{tr}\,}}\bigg [ \exp \left( \theta S_{n} - \frac{ \theta ^{2}}{2(1- \theta )} \nu _{n} I_{d} \right) \bigg ]\). Suppose for the moment that \(R=1\), so that \(\displaystyle \Vert \mathbb {E}[ X_{k}^{p} \mid \mathcal {G}_{k-1}] \Vert _{ \infty } \le \frac{p!}{2} C_{k}\) for all k and integers \(p \ge 2\). Since \(\mathbb {E} \bigl [X_{k} \mid \mathcal {G}_{k-1} \bigr ] = 0\), it then follows that

Thus, the matrix

is positive semidefinite, and hence it follows by Tropp (2011, Lemma 2.1) that \((Y_{k})_{k=1}^{n}\) is a positive supermartingale with \(Y_{0} = d\). By combining this observation with (A.10) and choosing \(\theta = x / (x+ \nu )\), we conclude that

The general result can now be deduced by taking \(\widetilde{X}_{k} = X_{k}/R\). \(\square \)

A key ingredient to verify the conditions of Theorem 2.3 and prove Theorem 2.4 below is a suitable version of the Burkholder–Davis–Gundy inequality. We shall employ the one given in (Seidler and Sobukawa 2003, Lemma 2.2), which is restated here for convenience. Note that, although their result applies to martingales with values in a general Hilbert space, we specialize the formulation here to the finite-dimensional setting.

Lemma A.1

(Seidler and Sobukawa (2003)) Let \(m \in \mathbb {N}\) and \(T \in (0, \infty )\). For any constant \(p \in [2, \infty )\) there exists \(\gamma _{p} \in (0, \infty )\) such that

for all predictable processes \(\varphi :\Omega \times [0,T] \rightarrow \mathbb {R}^{m \times d}\) with \(\int _{0}^{T} \mathbb {E} \big [ \Vert \varphi _{t} \Vert _{2}^{p} \big ] \textrm{d} t < \infty \). Moreover, one can always take

With the choice in (A.11), \(\gamma _{p}\) is of smaller asymptotic order (as \(p \rightarrow \infty \)) than the constant associated with the usual Burkholder–Davis–Gundy inequalities available by application of Itô’s formula (cf. the proof of Proposition 2.1 in Marinelli and Röckner (2016)).

Proof of Theorem 2.4

We decompose the log-price into the drift and volatility component \(Y_{t} = Y_{t}^{ \mu } + Y_{t}^{ \sigma }\), where \(Y_{t}^{ \mu } = \int _{0}^{t} \mu _{s} \textrm{d}s\) and \(Y_{t}^{ \sigma } = \int _{0}^{t} \sigma _{s} \textrm{d} W_{s}\). By the triangle inequality,

Jensen’s inequality implies that for the first term in (A.12),

The fourth term can be bounded as:

To handle the third term in (A.12), we note it has the form \(a_{3} = \sum _{k=1}^{ \lfloor \Delta _{n}^{-1} \rfloor } (A_{k} - B_{k})\), where

and that \((A_{k} - B_{k})_{k=1}^{ \lfloor \Delta _{n}^{-1} \rfloor }\) is a martingale difference sequence with respect to the filtration \((\mathcal {F}_{k \Delta _{n}})_{k=0}^{ \lfloor \Delta _{n}^{-1} \rfloor }\). Thus, we can apply Theorem 2.3 if we can control

for integer \(p \ge 2\). First note that, since \(\Vert B_{k} \Vert _{ \infty } \le \nu _{c, \infty } \Delta _{n}\), the sub-multiplicativity of \(\Vert \, \cdot \, \Vert _\infty \) and the binomial theorem imply that

We start by analyzing the second term on the right-hand side of (A.15). For an arbitrary integer \(m \ge 1\) and any \(\mathcal {F}_{(k-1) \Delta _{n}}\)-measurable set A, it follows from Lemma A.1 that

and, thus,

for a suitable absolute constant \(\alpha \ge 1\). In particular, (A.16) shows that \(\mathbb {E} \big [ \Vert A_{k} \Vert _{ \infty }^{p-i} \mid \mathcal {F}_{(k-1) \Delta _{n}}] \le ( \alpha p \nu _{c,2} \Delta _{n})^{p-i}\) for \(i = 1, \dots , p\). Together with the mean value theorem and Stirling’s formula, we can therefore establish that

Now, look at the first term on the right-hand side of (A.15). For a fixed \(u\in \mathbb {R}^d\) with \(\Vert u \Vert _2 = 1\), it follows from the Cauchy–Schwarz inequality that

The first term in (A.18) is handled by (A.16) with \(m=2(p-1)\):

The second term is treated as:

where Lemma A.1 is used here for the conditional expectation. Consequently, by combining (A.18) – (A.20), we get the estimate

Since this analysis holds for an arbitrary unit vector u, the right-hand side of (A.21) is an upper bound for \(\big \Vert \mathbb {E}[A_{k}^{p} \mid \mathcal {F}_{(k-1) \Delta _{n}}] \big \Vert _{ \infty }\). This fact, together with (A.15) and (A.17), shows that

for a suitable absolute constant \(\tilde{\alpha } \ge 2\). Now, it follows that Theorem 2.3 is applicable with \(R = \tilde{ \alpha } \nu _{c,2} \Delta _{n}\) and \(C_{k} = C_{1} = \nu _{c, \infty }/ \nu _{c,2}\). Since

and \(4 \nu R^{2} \le 4 \tilde{ \alpha }^{2} \nu _{c,2} \nu _{c, \infty } \Delta _{n}\), the inequality (2.7) implies that

In particular, (A.23) shows that

Finally, for the second term in (A.12) we invoke the Cauchy–Schwarz inequality and consider an x for which \(\frac{x^{2}}{25 \nu _{ \mu } \Delta _{n}} - \nu _{c,2} \ge \frac{ \nu _{c,2}}{5 \nu _{c, \infty }}x\) to deduce the following initial bound:

Next, observe that

is a martingale difference sequence. By (A.16), we see that

for a suitable absolute constant \(\alpha \ge 2\), and thus (2.7) ensures that

where we assume \(\tilde{ \alpha }\) in \(\tau \) is larger than \(\alpha \). This estimate together with (A.25) means that

By combining (A.13), (A.14), (A.24), and (A.26) we conclude that

if \(x \ge 5 \Delta _{n} \max \{ \nu _{ \mu }, \nu _{c, \infty } \}\) and \(\frac{x^{2}}{25 \nu _{ \mu } \Delta _{n}} - \nu _{c,2} \ge \frac{ \nu _{c,2}}{5 \nu _{c, \infty }}x\). In particular, (A.27) holds whenever \(x \ge \gamma \max \big \{ \frac{ \nu _{c,2} \nu _{ \mu } \Delta _{n}}{ \nu _{c, \infty }}, \sqrt{ \nu _{c,2} \nu _{ \mu } \Delta _{n}}, \nu _{c, \infty } \Delta _{n} \big \}\), where \(\gamma \) is a sufficiently large absolute constant. \(\square \)

Note that, in the above calculations, one has to be careful in order to achieve optimal rates. Indeed, instead of the estimate (A.22), it might have been more natural to rely on the following (seemingly harmless) sequence of inequalities using (A.16) and Stirling’s formula:

However, with notation as in Theorem 2.3, this would result in \(\nu R \asymp \nu _{c,2}\), while (A.22) satisfies \(\nu R \asymp \nu _{c,\infty }\). Since \(\nu R\) determines the rate and \(\nu _{c,\infty }\) can be of strictly smaller order than \(\nu _{c,2}\) (see the discussion in the end of Sect. 2.1), this implies that a concentration inequality based on (6.28) cannot be optimal.

Proof of Theorem 2.5

Consider a fixed \(\tau \in (0,\infty )\). Theorem 2.4 implies the existence of an absolute constant \(\alpha \) such that

as long as \(\lambda \ge \alpha \max \bigl \{\frac{\nu _{c,2}\nu _{\mu } \Delta _n}{\nu _{c,\infty }}, \sqrt{\nu _{c,2}\nu _\mu \Delta _n}, \nu _{c,\infty }\Delta _n \bigr \}\). Thus, under this restriction on \(\lambda \) it follows that \(2\Vert \widehat{\Sigma }_n -\Sigma \Vert _\infty \le \lambda \) with probability at least \(1-e^{-\tau }\) if

or, in particular, if

for a suitably chosen absolute constant \(\gamma \). In view of Proposition 2.2, the proof is complete. \(\square \)

Proof of Theorem 2.7

Under \(\mathbb {P}_A\), we have that \(Z_k = \Delta _k^n Y/\sqrt{\Delta _n}\), \(k=1,\dots , \lfloor \Delta _n^{-1}\rfloor \), are i.i.d. Gaussian random vectors with covariance matrix A. Consequently, since \(\lfloor \Delta _n^{-1}\rfloor \ge r^2\), (Lounici 2014, Theorem 2) implies that

for suitable absolute constants \(\beta \in (0,1)\) and \(\underline{\gamma } \in (0,\infty )\), and this proves the result. \(\square \)

Proof of Theorem 2.8

Set \(\hat{r}= {{\,\textrm{rank}\,}}(\widehat{\Sigma }^\lambda _n)\). To show the upper bound in (2.18) it suffices to argue that \(\Sigma \) has at least \(\hat{r}\) singular values which are larger than \((\lambda -\bar{\lambda })/2\) or, equivalently, \(s_{\hat{r}}(\Sigma )\ge (\lambda - \bar{\lambda })/2\). Observe first that the representation (2.2) implies \(s_{\hat{r}}(\widehat{\Sigma }_n)>\lambda /2\) (recall that \(s_k(A)\) refers to the kth largest singular value of A). By using this inequality together with the fact that \(A\mapsto s_k(A)\) is Lipschitz continuous with constant 1 with respect to the spectral norm, we establish that

In order to prove the lower bound of (2.18), we need to show that \(s_{r_\lambda }(\widehat{\Sigma }^\lambda _n) >0\) with \(r_\lambda = {{\,\textrm{rank}\,}}(\Sigma ;\lambda )\). However, this follows immediately from the following sequence of inequalities:

The last part of the result is a direct consequence of the inequality (2.18), since \({{\,\textrm{rank}\,}}(\Sigma ;x) = {{\,\textrm{rank}\,}}(\Sigma )\) for \(x \in (0,s]\). This finishes the proof. \(\square \)

Proof of Corollary 2.9

For an arbitrary number \(\bar{\lambda }\in (0,\infty )\), Proposition 2.2 and Theorem 2.8 imply that both

and

on the set \(A(\bar{\lambda })\equiv \{2\Vert \widehat{\Sigma }_n-\Sigma \Vert _\infty \le \bar{\lambda }\}\) whenever \(\lambda > \bar{\lambda }\). By the proof of Theorem 2.5, in particular (A.29) together with the fact that \(\nu _\mu \le \nu _{c,\infty }\), it follows that for any \(\tau \in (0,\infty )\), the set \(A(\bar{\lambda })\) has probability at least \(1-\exp (-\tau )\) if

for a sufficiently large absolute constant \(\bar{\gamma }\). By considering \(\tau =\log (d)\) and using that \(\Delta _n^{-1}\ge 2 \frac{\nu _{c,2}}{\nu _{c,\infty }} \log (d)\), the maximum in (A.33) equals its first term. With this choice of \(\tau \), the set \(A(\bar{\lambda })\) has probability at least \(1-d^{-1}\), and \((\lambda -\bar{\lambda })/2\ge \delta \lambda \) when \(\lambda \) is given by (2.19) as long as we choose \(\gamma \ge \bar{\gamma }\sqrt{2}/(1-2\delta )\). Consequently, by plugging the specific values of \(\lambda \) and \(\bar{\lambda }\) into (A.31) and (A.32), we have established the first part of the result. The last part of the result follows immediately from Theorem 2.8. \(\square \)

Proof of Theorem 3.1

First, let \(\delta _n\in \{0,1\}\) be defined such that

and set \(\tau (s) = \varepsilon s + \lfloor t \Delta _n^{-1}\rfloor \Delta _n\) with \(\varepsilon = h_n + \delta _n \Delta _n\). Clearly, \(\bar{Y}_s = Y_{\tau (s)}\), \(s\in [0,1]\), is a diffusion with drift \(\bar{\mu }_s = \varepsilon \mu _{\tau (s)}\), volatility \(\bar{\sigma }_s = \sqrt{\varepsilon } \sigma _{\tau (s)}\), and driving (standard) Brownian motion \(\bar{W}_s = (W_{\tau (s)}-W_{\tau (0)})/\sqrt{\varepsilon }\). Moreover, its RV at time 1 based on a sampling frequency of \(\bar{\Delta }_n = \Delta _n/\varepsilon \) coincides with \(\widehat{\Sigma }_n(t;t+h_n)\):

Hence, the first step is to apply Corollary 2.6 to the process \((\bar{Y}_s)_{s\in [0,1]}\) with sampling frequency \(\bar{\Delta }_n\). To this end, note that

Since we also have \(\varepsilon \in [h_n,2h_n]\) and \(h_n/\Delta \ge 2\frac{\nu _{c,2}}{\nu _{c,\infty }}\log (d)\), it follows that the assumptions of Corollary 2.6 are satisfied and we deduce that

with probability at least \(1-d^{-1}\) when \(\lambda \) meets (3.6) and \(\gamma \) is a sufficiently large absolute constant. Here \(\bar{\Sigma }\) denotes the QV of \((\bar{Y}_s)_{s\in [0,1]}\) at time 1. By dividing both sides of the inequality (A.35) by \(h_n^2\),

The error \(\Vert h_n^{-1}\bar{\Sigma }- \varepsilon ^{-1}\bar{\Sigma }\Vert _2\) can be bounded in the following way using that \(h_n/\Delta _n\ge \frac{\nu _{c,2}}{2\nu _{c,\infty }}\):

Now, for any given \(\beta \in (1,\infty )\), we want to argue that \(\Vert \varepsilon ^{-1}\bar{\Sigma }-c_t \Vert _2\) is small with probability \(1-\beta ^{-1}\). To do so, note initially that \(t\in A_n\equiv [\lfloor t \Delta _n^{-1}\rfloor \Delta _n, \lfloor t \Delta _n^{-1}\rfloor \Delta _n+ \varepsilon ]\) and that, for any \(s,u\in A_n\), we have \(\nu _{c,\psi } \ge \Vert c_s-c_u \Vert _\psi /\sqrt{2h_n}\). Using these two facts, and by relying on Jensen’s and Markov’s inequality, we do the following computations for an arbitrary \(x>0\):

It follows that, with probability at least \(1-\beta ^{-1}\),

By choosing \(\beta = \psi (\sqrt{\log (d)})\) and combining (A.36) – (A.38) we conclude that, with probability at least \(1-d^{-1}-\psi (\sqrt{\log (d)})^{-1}\),

for a suitably chosen absolute constant \(\kappa \). Since \(A_n \subseteq [t-\Delta _n,t+h_n+\Delta _n]\) and \(h_n \ge 2 \Delta _n\), it follows that \({{\,\textrm{rank}\,}}(\bar{\Sigma })\le {{\,\textrm{rank}\,}}(\Sigma (t-\tfrac{h_n}{2};t+\tfrac{3h_n}{2}))\), and the proof is complete. \(\square \)

Proof of Theorem 3.2

Similarly to the proof of Theorem 3.1, we consider the time-changed process \(\bar{Y}_s = Y_{\tau (s)}\), \(s\in [0,1]\), and apply Corollary 2.9 to deduce that, with probability at least \(1-d^{-1}\),

and

Here we recall that \(\tau (s) = \varepsilon s + \lfloor t \Delta _n^{-1}\rfloor \Delta _n\) and \(\varepsilon = h_n + \delta _n \Delta _n\), where \(\delta _n\in \{0,1\}\) is chosen such that (A.34) holds. By following the exact same arguments as in the proof of Theorem 3.1 we obtain the estimate

which applies with probability at least \(1-d^{-1}-\psi (\sqrt{\log (d)})^{-1}\) for a suitably chosen absolute constant \(\kappa \). The inequality (3.9) follows immediately from the fact that \({{\,\textrm{rank}\,}}(\bar{\Sigma })\le {{\,\textrm{rank}\,}}(\Sigma (t-\tfrac{h_n}{2};t+\tfrac{3h_n}{2}))\). To establish the bounds (3.10) on \({{\,\textrm{rank}\,}}(\hat{c}^\lambda _n(t))\) note initially that, from the proof of Theorem 3.1 (particularly, (A.38)), we may in fact assume that

on the event that we are considering. Consequently, since singular values are Lipschitz continuous with constant 1 with respect to \(\Vert \, \cdot \, \Vert _2\),

for \(k=1,\dots , d\). By using this observation, the fact that \(\varepsilon \in [h_n,2h_n]\), and the explicit expression for \(\lambda \) the following two implications can be deduced:

We have also imposed the innocent assumption that \(\gamma \) is chosen such that \(\delta \gamma \ge \sqrt{2}\).

From these two implications we conclude that \({{\,\textrm{rank}\,}}(\bar{\Sigma };2\delta \lambda )\le {{\,\textrm{rank}\,}}(c_t;\underline{\varepsilon })\) and \({{\,\textrm{rank}\,}}(\bar{\Sigma };\lambda )\ge {{\,\textrm{rank}\,}}(c_t;\overline{\varepsilon })\), which (in view of (A.39)) establishes (3.10). The last statement in the result is a direct consequence of (3.10), and thus the proof is complete. \(\square \)

Rights and permissions