Abstract

Empathy plays a fundamental role in building relationships. To foster close relationships and lasting engagement between humans and robots, empathy models can provide direct clues into how this can be done. In this study, we focus on quantitatively capturing indicators of early empathy realization between a human and a robot, using a process that encompasses affective attachment, trust, expectations and reflecting on the other’s perspective within a set of collaborative strategies. We hypothesize that an active collaboration strategy is more conducive than a passive one to a meaningful and purposeful engagement in realizing empathy between a human and a robot. By deliberate design, the interaction with the robot was presented as a maze game in which a human and a robot must collaborate to reach the goal using one of two strategies: one in which each maintains control individually by taking turns (passive strategy), and one in which both must agree on their next move based on reflection and argumentation (active strategy). Quantitative and qualitative analysis of the pilot study confirmed that a general sense of closeness with the robot was perceived when applying the active strategy. Regarding the specific indicators of empathy realization: (1) for affective attachment, affective emulation was equally present throughout the experiment in both conditions, and thus no conclusion could be reached; (2) for trust, quantitative analysis partially supported the hypothesis that an active collaborative strategy promotes teamwork attitudes, where the human is open to the robot’s suggestions and willing to act as a teammate; and (3) for regulating expectation, quantitative analysis confirmed that a collaborative strategy promoted a discovery process that regulates the subject’s expectation toward the robot. Overall, we conclude that an active collaborative strategy favorably impacts the process of realizing empathy compared to a passive one. The results are compelling enough to move the design of this experiment forward into more comprehensive studies, ultimately leading to a path where we can clearly study engagements that reduce abandonment and disillusionment, with the process of realizing empathy as the core design for active collaborative strategies.

1 Introduction

Empathy is central to the development of artificial intelligence and technologies that focus on improving human decision-making, supporting people’s intentions and sustaining their overall engagement (Asada 2015; Bickmore and Picard 2005; Paiva et al. 2017). The interaction between people, objects and the technology in their surrounding context is under constant experimentation on topics related to empathy, from affective computing to collaborative strategies between human and machine (Picard 2000; Shneiderman and Maes 1997). Current research on empathy suggests examining it as a set of stages with well-defined mechanisms (Asada 2015). This means viewing empathy not as a singular phenomenon of emotional mirroring, but as an active body of constant emotional and cognitive exchanges that may help engage and sustain a person’s experience with other agents through time; in other words, developing a relationship with them. We refer to this as the process of realizing empathy.

In previous work (Corretjer et al. 2020), we used a qualitative approach to explore initial insights into realizing empathy between a human and a robot. We observed that the shared space and the opportunity to argue with each other led to a shift in the perception of the experience, highlighting trustworthiness and affective care. These insights, along with new references from the literature, led us to expand our previous work and further examine the role of regulating expectation, trust and affective attachment as key indicators of realizing empathy.

We thus introduce a pilot study whose goal is to capture, in further detail, quantitative indicators of the early realization of empathy between a human and a social robot. In the study, the actors, a human and a social robot, apply two different collaborative strategies to solve a maze: (1) a strategy based on turns, where one actor has no choice but to accept the other’s decision at each turn (passive collaboration), and (2) a strategy based on an exchange of viewpoints to reach an agreement (active collaboration). We evaluate the participants’ experience of regulating their expectations, trust and affective attachment toward the robot as indicators of realizing empathy. We hypothesize that a strategy that offers space for argumentation (active collaboration) will promote the realization of empathy more effectively than an individualized collaborative one (passive collaboration).

In the following section, we summarize the theoretical background that provides the foundation for our theoretical approach and study goals, as well as examples of past works where empathy has been applied in human–robot and human–computer interaction settings. Section 3 briefly describes the theoretical process to realize empathy used in this study and Sect. 4 its application in a ludic scenario of a maze-like game challenge. The study design is then introduced in Sect. 5, followed by the pilot study results in Sect. 6. Finally, a discussion of the results obtained and a conclusion are presented in Sects. 7 and 8, respectively.

2 Related work

2.1 Theoretical background

Empathy, as a term, has undergone considerable evolution and transformation since its origin in the late nineteenth century. It has captivated scientists across time and become pervasive among multiple disciplines (Singer and Lamm 2009), and has therefore been applied with nuanced interpretations. The concept evolved with science from the definition of “feeling into” an art piece to being represented by mirror neurons that provoke emotional impulses as a reflection of others’ states (Gallese 2003). Going beyond emotional mirroring, Hoffman (2001) defines empathy as a psychological process that does not need to render an exact match of the expressed feelings, but rather feelings that are congruent with the other’s situation. This appraisal is based not only on how people perceive another person’s emotions, but also on how they interpret the other’s situation (Wondra and Ellsworth 2015; Preston and de Waal 2002). It is thus essential that the observer receives all the necessary information from context (including affective states) to correctly interpret the other, resulting in a formed expectation. Such an expectation becomes more accurate and nuanced as more layers of information are provided within the situation through time and engagement (Trapp et al. 2018).

Furthermore, empathy can be viewed as a shared experience that involves a willingness to engage in dialogue together toward a common goal (Rich et al. 2001; Ashar et al. 2017). Early empathy theories focused on the actors’ role as observers who internalize the emotions and understandings of the other. Later theories linked to empathetic interactions, such as perspective-taking and collaborative discourse, add the element of active response, which becomes necessary for strengthened empathetic relationships (De Waal 2009; Strickfaden et al. 2009). These active responses take the form of affective care, altruism, acts of trust and willingness to exchange perspectives.

In a scenario of collaboration, the actors manage their expectations of what may happen, whether their beliefs, thoughts and goals are aligned or need to shift, coordinating their actions toward an objective (Rich et al. 2001). It is precisely this exchange of ideas and discussions of potential solutions from each other’s perspectives that add value to the collaborative dialogue between the actors and enhance their expectations (Fong et al. 2003). Within this context, Bond-Barnard et al. (2018) suggest that “Trust can be defined as a function of the predictability and expectations of others’ behaviors or a belief in others’ competencies, which affects performance through activation of cooperation or other collaborative processes.” While trust can be generated by the matching expectations of an observer, based on their cognitive appraisal of the other’s situation and behavior, trust can be empowered when there is a secure affective attachment between the actors (Gillath et al. 2021). When affective care is present, a positive correlation is seen between an increased sense of trust and a secure attachment with the other, particularly if they ask for help.

As Preston and de Waal (2002) suggest, these sets of processes, affective attachment and cognitive appraisals resulting in trust between the actors, are part of the broader understanding of the psychological process behind empathy. Thus, in this work, based on the literature and our initial study, we infer that the presence of trust, through regulated expectations and affective attachment, can be relevant initial indicators for the process of realizing empathy.

2.2 Empathy in artificial agents

Given the strong role empathy plays in shaping communication and social relationships, it has been identified as a major element in human–machine interaction (Bickmore and Picard 2005). There is evidence that people can feel, care for or distress over their peers as well as game characters and even robots (Ashar et al. 2017). Paiva et al. (2017)’s survey comprehensively addresses different case studies and agent–empathy models describing how robots and artificial agents can induce empathy with their users. The authors distinguish between two perspectives for defining the nature of such empathic situations: (i) empathy is evoked in users because of the observed states, i.e., the agent’s features and contextual situation the agent is immersed in (e.g., Riek et al. (2009); Rosenthal-von der Pütten et al. (2013); Hayes et al. (2014)) and (ii) the agent displays an empathic response toward the observed user’s emotional state. In this case, mirroring the user’s emotional state (emotional mimicry) is typically proposed as a means to adopt an empathic response of the robot (Leite et al. 2013) such as in Gonsior et al. (2012) and Hegel et al. (2006). More comprehensive models for emulating empathy beyond mimicry have been also proposed. For instance, Leite et al. (2013) propose a perspective-taking-based empathy model. The robot infers the user’s affective state by analyzing the game the user is playing from his/her perspective and reacts accordingly through facial expressions and verbal responses. Obaid et al. (2018) present a rule-based system to trigger appropriate empathic responses of a tutoring system for children. More recently, Bagheri et al. (2020) propose a reinforcement learning model based on facial emotional recognition to learn empathic responses (verbal utterances) according to different states.

Besides understanding the features of the agents that induce an empathetic response from the user, we believe that it is equally important to provide a process by which the involved agents can be led to the realization of empathy. Dialogue has often been used as a means to create such spaces of exchange and understanding to promote the development of empathy. For instance, Aylett et al. (2005) introduce FearNot!, where through dialogue users interact and engage in empathic responses toward bullied virtual agents. Rosis et al. (2005) present an embodied conversational agent that acts as a therapist. The goal is to achieve involvement between the agent and the user to support changes in eating habits.

More recently, Morris et al. (2018) propose a conversational agent that could express empathetic support to enhance digital mental health interventions. Alves-Oliveira et al. (2019) show that a robot endowed with empathetic competencies fosters meaningful discussions among a group of children. Cunha et al. (2020) set up a shared space between a user and a social robot that expressed affective states as the two collaborated, deciding together how to resolve a physical construct of pipes. In these recent studies, we can observe a shift in the robot’s role from being a mere companion that shares the emotional state of the user (e.g., Leite et al. (2013)) toward an agent that “shares understanding, offering new perspectives” (Morris et al. 2018) and that is a “means to open up discussions and raise awareness of others” (Alves-Oliveira et al. 2019) among the participants. Moreover, Cunha et al. (2020)’s study revealed that for “fluent interaction and building trust, the robot needed to actively contribute to the work and continuously communicate its reasoning and decisions to its human co-workers.” It is thus suggested that a shared immersive physical space, together with the opportunity for the robot to argue as decisions were made, enhanced the collaborative experience for the participants. These concepts are reinforced by other studies: on the one hand, work supporting the significance of having a physical shared space with a robot while playing a collaborative game (Seo et al. 2015); on the other, work showing that the use of an argumentation-based dialogue framework between user and robot results in the user being more satisfied with their collaboration and feeling more trust for and from the robot (Azhar 2015).

2.3 Contribution

Early works on empathy in artificial agents mostly addressed the provocation of empathy through passive observation of a situation or studied the computational modeling of empathy in social agents (Paiva et al. 2017). Similarly to the latest works (Morris et al. 2018; Alves-Oliveira et al. 2019; Cunha et al. 2020; Azhar 2015), we take Paiva’s perspective of provoking empathy a step further, where the actors are meant to actively collaborate to resolve a task by deciding together at each step while exchanging their points of view. We propose that the robot take the role of a teammate with an active contribution to the task at hand, instead of a companion that mainly shares emotional states. In this sense, its decisions have an impact both on the development of the task and on its human partner’s internal state (beliefs, intentions and emotions). Moreover, we focus on studying the act of realizing empathy as an experience of developing trust and attachment between those involved through time. Thus, we argue that, along with the existing computational models, a context of active exchange, where the involved agents have a chance to expose and reflect on the different views, feeling listened to and understood, while in a shared space and with clear intentions, is essential to effectively create empathetic experiences and thus achieve longer engagements in human–robot interaction.

This paper studies whether providing an explicit space for exchange of views and reflection on the other’s point of view, along with simple empathy mechanisms of affective expression, promotes the presence of indicators of empathetic interaction (attachment and trust) between subjects and robots.

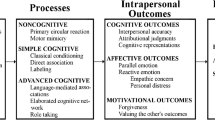

3 The process of realizing empathy

We next provide an overview of the process of realizing empathy described in Corretjer et al. (2020) and used in this work. The primary framing comes from the use of dialogue as an instrument “aimed at the understanding of thought as well as exploring the problematic nature of a day to day relationship and communication with the goal of making a shared meaning” (Bohm 2013). Dialogue is therefore used as a way of connecting and engaging those involved in a process of desired understanding of the other, emotionally and cognitively, in order to drive the process to realize empathy (Head 2012).

3.1 Indicators

Based on the above literature, affective attachment and trust form during the process in which actors come to understand each other, in particular within collaborative scenarios. As affect and trust impact each other during an empathetic exchange, they also tend to strengthen each other through time (Gillath et al. 2021). Therefore, we look for these as the indicators of the process in which the actors realize empathy.

- Affective attachment: occurs when the actors believe they are in a secure relationship with the other as they interact and respond with behaviors reminiscent of care, for example asking how the other feels or reacting emotionally as the user would in a given situation (Gillath et al. 2021).

- Trust: takes place when there is an intrinsic motivation to willingly be with the other, to help them, pay attention, or collaborate with the other (Ashar et al. 2017). Trust exists when there is a clear sense of capability relevant to the context of interaction, but also when in that willingness the actors accept their vulnerability based on the positive expectation they have of the other (Rousseau et al. 1998). Hence the initial sense of trust begins to form when there is a reduced perception of risk in the interaction and future exchanges between actors (Gillath et al. 2021). Despite this, affect and emotional connections empower trust or help stabilize the opportunities to trust each other.

3.2 Stable elements

In order to provoke growing affective attachment and trust and make the process of realizing empathy actionable, a set of elements is needed to stabilize the exchange:

- Environment: the space of interaction, which depends on the context of the study and the actors involved. It must be open and intimate enough that the subjects can comfortably observe and embody the other and their context. If the space between them is too distant or broad for mutual acknowledgment, the message may get partially or completely lost, failing to create a sense of immersive experience. If, instead, the space is too close in relation to the context, the personal boundaries of those involved might feel violated, restricting the incentive for trust in and attachment to each other (Mumm and Mutlu 2011).

- Communication: a common tool of communication, agreed upon by those involved, to get the message across, whether it is a spoken language, metaphors, body movement, art, music or stimulating elements. If those involved are not willing to adapt, accept or agree on a common language, the communication fails or may be misinterpreted (Bohm 2013).

- Open mindset: those involved are required to consciously position their mental state to practice an open behavior (Head 2012). The subject becomes the receiver of what the other wants to express. This does not mean that the subject must agree with the other’s viewpoint or accept it as their own. It merely means that they welcome new information for consideration.

3.3 The process

The following steps activate the realization of empathy:

- Regulate Expectation: Establishing a level of expectation of the other based on current exchange of information or previous experiences the actors have with each other is fundamental as the first step in the process. Depending on the type and amount of information, the matching of that expectation may be misled, or generate a biased expectation of the other. Throughout the interaction, expectations will naturally regulate as the actors clearly state their intentions, gather information and connect with each other, either to make it more precise in matching or at the risk of losing trust and attachment in the other (Trapp et al. 2018).

- Listen: Refers to actively and widely collecting what the other is expressing externally. Thus, the mind of the receiver must not hold preconceived notions or judgments. Listening does not mean to silently wait until the other finishes their turn to express themselves. It means to actively take in the message for comprehension to generate meaning (Lim 2013; Bohm 2013).

- Consider: Describes the process through which the actors internalize and cognitively reflect on the context, perspective and the message collected both through the regulating expectation and listening phases. It is the cognitive, emotional and embodied trial and error occurring inside the mind of each actor before they respond. This is the moment in which the actors choose tools to use in order to examine if their interpretation mirrors what the other is trying to express (Lisetti et al. 2013).

- Act: The last step of the process is where the actors externalize their interpretations in the hopes that the other accepts this as reminiscent of their own message. This expression brings them closer to each other’s perspective as a result of what they have considered and the matching of their expectation for each other.

As presented in Fig. 1, this process continues iterating until a series of meaningful insights flourish between the subjects. These interactions act like a wave opening and converging, as each previous step informs and adds to the following set of insights. If the wave is consistent, the meaning created is valuable enough for the subjects to sustain the empathetic exchange with growing attachment and trust between them, therefore sparking continued motivation in the process (Corretjer et al. 2020).

4 A test bed for empathy evaluation

We have designed a test bed where a user interacts with a robot through a game activity to study the development of empathy between humans and robots according to the process presented above. We next describe the test bed and the reasoning behind the design decisions we considered throughout its conceptualization.

4.1 The maze test bed

The main requirements for the test bed were the following:

1. In order to trigger initial trust, the sense of risk shall be reduced, which in turn will increase the willingness to interact with the system (McKnight et al. 2002). Thus, the setting had to involve an entertaining activity that required no expertise and was inclusive of users with different backgrounds and educational levels;

2. It had to involve interaction with a robot in a shared physical space, i.e., it could not be fully digital (as suggested by Seo et al. (2015), physical robots achieve higher empathetic responses from users than virtual ones);

3. The activity should foster collaboration between robot and user, rather than competitiveness, to encourage empathetic behavior (Paiva et al. 2017);

4. It should allow the instantiation of the empathy process elements and steps described in Sect. 3.

The activity we opted for after brainstorming was solving a maze, where user and robot collaborate to reach the exit (this satisfies requirements (1), (2) and (3); requirement (4) is addressed in Sect. 4.3). At each time step, user and robot have to agree on which movement to perform together, i.e., move forward, backward, left or right one step. As shown in Fig. 2, a token on the maze (white dot) represents their position, and the game ends when the token reaches the exit sign. To make the game more challenging, the maze is only partially visible to both players, and its walls are unveiled as the players move together around the maze.

Fig. 2 Maze GUI. The maze appears in the main area; icons at the bottom allow the user to state his/her affective state; and panels on the right provide feedback on the game state and written utterances of the robot’s speech. The maze is discovered as the players move around it: initial state (left) and halfway (right)

To facilitate the development of the test bed and to avoid spending time on overcoming the robot’s potential limitations when performing the task, we opted for a digital maze (Footnote 1). Hence, the user and the robot solve the game looking at a projection of the maze on the floor (Fig. 3). We preferred the projection to a laptop or monitor because it allows the experience to become immersive (reducing the feeling of observing through a display (Slater and Wilbur 1997)) and focuses the interaction and dialogue between the robot and the subject in a shared space (the environment concept described in Sect. 3.2). In this sense, both players (human and robot) share the same physical environment with equivalent capabilities (i.e., no need to physically “walk” in the environment) and with equivalent information (both discover the maze as the game evolves).
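
The partially visible maze can be sketched in a few lines of code. The following is only a minimal illustration, not the implementation used in the study: the text-grid encoding, the `PartialMaze` class and the rule that each move reveals the cells immediately around the token are all assumptions made for this example.

```python
from dataclasses import dataclass, field

# Assumed encoding (not from the study): '#' wall, '.' free cell, 'E' exit.
MOVES = {"forward": (-1, 0), "backward": (1, 0), "left": (0, -1), "right": (0, 1)}


@dataclass
class PartialMaze:
    grid: list                               # list of equal-length strings
    token: tuple                             # (row, col) shared by both players
    seen: set = field(default_factory=set)   # cells unveiled so far

    def __post_init__(self):
        self._reveal()

    def _inside(self, r, c):
        return 0 <= r < len(self.grid) and 0 <= c < len(self.grid[0])

    def _reveal(self):
        # Assumption: the 3x3 neighbourhood of the token becomes visible.
        r, c = self.token
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if self._inside(r + dr, c + dc):
                    self.seen.add((r + dr, c + dc))

    def move(self, direction):
        """Move the shared token one step; return False if blocked."""
        dr, dc = MOVES[direction]
        r, c = self.token[0] + dr, self.token[1] + dc
        if not self._inside(r, c) or self.grid[r][c] == "#":
            return False
        self.token = (r, c)
        self._reveal()
        return True

    def solved(self):
        r, c = self.token
        return self.grid[r][c] == "E"
```

Because both players act on the same `PartialMaze` instance, neither has more information than the other, which mirrors the equivalence of capabilities and information described above.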

4.2 Robobo, the robot

The robot used in the test bed is Robobo (Footnote 2). It is composed of a small mobile base and a smartphone attached on top (Fig. 4 left) representing its “head,” which can pan and tilt. The free Robobo application runs on the smartphone and is used to interact with the robot. Once the app is running, the screen shows a pictorial face that can display up to 9 emotional expressions provided by the company (Fig. 4 right).

To promote the development of empathy, we designed the robot’s behavior to be social and expressive (fundamental to trigger the empathy process (Paiva et al. 2017)). Thus, we used a combination of at least three different modalities to stress the robot’s internal state based on the different game states and user’s responses. Each combination was triggered through a manually defined rule-based approach. For instance, if the user refuses the robot’s suggestion, the robot displays a “sad” face. On the contrary, if the user accepts its choice, the robot displays a “happy” face and acknowledges the user. Each speech act includes at least three different utterances that are randomly chosen to avoid repetitiveness. Moreover, utterances were generally positive or neutral when encountering failure situations, for example, “we reached a dead end, let’s go back” or “I don’t think it’s a good idea.”

We also provided the robot with different levels of emphasis when suggesting a move in the maze to the user. The first time the robot suggests a move, it communicates its decision through a gentle motion, e.g., to indicate “left,” it only slightly pans its head while verbalizing its choice. However, if the user refuses the suggestion and the robot wants to continue persuading its peer, it turns the base to the left to emphasize its choice and displays a “sad” face. The most emphatic level includes repetitive base movements (e.g., turning left back and forth), the use of LEDs and a disappointed face. Overall, we used facial expression, body motion (head and base separately), sound, LEDs and verbal utterances to communicate with the user through different modalities.
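
The rule-based, escalating behavior described above could be organized roughly as follows. This is a hedged sketch rather than the rules used in the study: the function names `suggest` and `react`, the command strings, and the utterance templates are hypothetical, and the sketch assumes the robot keeps verbalizing its choice at every level. It does not call the Robobo API.

```python
import random

# Hypothetical utterance templates; the study's actual wording is not given.
UTTERANCES = [
    "I think we should go {d}.",
    "How about going {d}?",
    "Let's try {d}.",
]


def suggest(direction, refusals, rng=random):
    """Return modality commands for suggesting `direction`.

    `refusals` is how many times the user has rejected this suggestion.
    """
    level = min(refusals, 2)  # 0: gentle, 1: firmer, 2: most emphatic
    # A random utterance is drawn to avoid repetitiveness.
    commands = {"speech": rng.choice(UTTERANCES).format(d=direction)}
    if level == 0:
        commands["head"] = f"pan_{direction}"        # slight head pan only
    elif level == 1:
        commands["base"] = f"turn_{direction}"       # base turn for emphasis
        commands["face"] = "sad"
    else:
        commands["base"] = f"turn_{direction}_back_and_forth"
        commands["leds"] = True
        commands["face"] = "disappointed"
    return commands


def react(user_accepted):
    """Facial reaction to the user's answer to a robot suggestion."""
    if user_accepted:
        return {"face": "happy", "speech": "acknowledge"}
    return {"face": "sad"}
```

Keeping the rules in plain functions that return command dictionaries makes each modality combination easy to inspect before it is sent to the robot.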

4.3 Instantiating the empathy process

As described in Sect. 3, the process to realize empathy is defined by three elements, which rule the context, and four iterative steps. We instantiate them according to our test bed context as follows:

4.3.1 Stable elements

- Environment: the space the user and the robot share has a deliberately intimate, yet ample design where they interact in a physical world, i.e., a space in a room where the user sits on the floor next to the robot; and a digital world, i.e., both observe a floor projection of the maze.

- Communication: the user communicates with the robot system through a keyboard and a mouse to indicate actions (using the 4 arrows to move in the maze up, down, left or right), arguments (numbered justifications for his/her decisions, described in the next subsection and available in the top right panel) and emotional state (pointing at 5 iconic faces below the maze as shown in Fig. 2). The robot, in turn, communicates with the user through speech, movement (either head or base movements indicating the direction to take), sounds, LEDs, facial expressions mimicking affective states and text within the projection. The robot’s general speech is colloquial to sound familiar to all subjects.

- Mindset: while the user’s mindset is mostly out of our control, i.e., we cannot force the user’s mindset, initial instructions were provided not only to set an initial level of expectation for the subject, but also to nudge the subject toward a state of open-mindedness and consideration for the robot. On the other hand, we do control the robot’s mindset by defining its behavior as receptive, i.e., open to listening to its peers and to considering their opinions and emotions during decision-making.

4.3.2 The process

- Regulate expectation: the robot’s behavior is designed to be respectful toward its human peer, i.e., being polite with the user and avoiding any harm to them. At the beginning of the game, a familiarization phase takes place with a series of instructions to set a baseline of expectation of the experience with the robot (Gillath et al. 2021), thus adding to initial trust as early seeds of empathy. Throughout the game, the experience itself will naturally regulate the expectation they had previously set.

- Listen: the available information for both players (human and robot) is the map of the maze (partially observable for both), the movements within the maze, the arguments justifying their decision-making and their emotional state. While the robot’s behavior is designed to take all these aspects into consideration in a systematic way to ensure the Listen step of the process, we cannot guarantee that its human partner will use such input in his/her decision process.

- Consider: the information gathered in the Listen step is taken into account in the decision-making to determine what to do next (Algorithm 1). Given the current state, if both players suggest moving in the maze in the same direction (\(a^r\)), then the decision is clear and no further consideration is necessary (line 1). Otherwise, if each player chooses a different action, a joint decision has to be made. This is where the exchange becomes an active provocation: the robot is no longer in a supportive role, but actively participates, adding to or shifting how the user perceives, trusts and cares for it. The available options for both players are (i) to accept their peer’s suggestion, i.e., make the suggested movement; (ii) to reject the peer’s suggestion up to N times and provide an argument for such a decision; or (iii) to toss a coin to decide whose suggestion is performed. While the strategy for the human player is uncontrolled, i.e., she can consider any option at will, we have designed a parametric strategy for the robot’s decision-making as follows. The robot accepts its peer’s suggestion in the first m rounds (MIN_ROUNDS) so that the user gains confidence and the history of the game execution starts to be populated (line 1). From then on, the robot takes into consideration the game history as well as the user’s emotional state when making decisions. If the user has reported a negative emotional state, then the robot yields in an attempt to raise the user’s internal state (lines 4 and 5). Otherwise, an argumentation process takes place, where the robot tries to convince the user to accept its suggestion (lines 7 and 8). If no arguments are available or the maximum number of rejections has been reached, then the robot randomly chooses to either yield or toss a coin to decide where to move next. In the argumentation phase, the user is free to accept the robot’s argument and then yield, or to counter-argue.

  The scope of this work is not to build an argumentation framework, but to provide an opportunity where the different actors’ views (user and robot) are taken into consideration, which is essential in the development of empathy. Hence, to simplify the argumentation phase, we have pre-defined a set of potential arguments from which each player selects the most appropriate one, given the current state and history of the game, to counter-argue its peer’s suggestions as follows:

-

fairness: frequency of actions for each player is unbalanced, where my own suggestions have been less accepted. This principle is represented by the argument “We’re following your suggestions most of the time, it’s my turn to choose.”

-

leadership: frequency of actions for each player is unbalanced, where the other’s suggestions have been less accepted. This principle is represented by the argument “I’ve decided most of the time, let me continue leading.”

-

failure rate: frequency of actions that have led to dead ends in the maze. This principle is represented by the argument “Your decisions have taken us to dead ends, let me decide now.”

-

invalid path: when the next step will only lead toward a dead end. This principle is represented by the argument “There is no way out” (Footnote 3).

-

Act: this step represents the execution of the action decided in the previous step, which can be: indicate the next movement to perform (the user through the keyboard and the robot through one of the modalities described earlier); argue (the user through the keyboard and the robot through speech and written text); yield; or toss a coin. Along with these actions, emotional expression can also be provided (the user through the mouse and the robot through its face, sounds and LEDs).
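The robot's side of the Consider step can be sketched in a few lines of Python. This is an illustrative reading of the prose above, not a transcription of Algorithm 1: the `History` fields, the function names, the default values of `min_rounds` and `max_rejections`, the dead-end threshold and the order in which arguments are tried are all assumptions made for the example.

```python
import random
from dataclasses import dataclass

NEGATIVE_EMOTIONS = {"angry", "sad"}


@dataclass
class History:
    """Hypothetical game history; field names are illustrative only."""
    disagreements: int = 0   # disagreements resolved so far
    robot_accepted: int = 0  # robot suggestions that were executed
    user_accepted: int = 0   # user suggestions that were executed
    user_dead_ends: int = 0  # user-led moves that ended in a dead end
    rejections: int = 0      # rejections in the current argumentation


def available_arguments(h, user_move_is_dead_end):
    """Return the pre-defined arguments whose condition currently holds."""
    args = []
    if user_move_is_dead_end:
        args.append("invalid path")
    if h.user_dead_ends > 0:  # assumed threshold
        args.append("failure rate")
    if h.robot_accepted < h.user_accepted:
        args.append("fairness")
    elif h.robot_accepted > h.user_accepted:
        args.append("leadership")
    return args


def robot_consider(robot_move, user_move, user_emotion, h,
                   user_move_is_dead_end=False,
                   min_rounds=2, max_rejections=3, rng=random):
    """Return (decision, detail) for one Consider step of the robot."""
    if robot_move == user_move:
        return ("move", robot_move)        # agreement: nothing to discuss
    if h.disagreements < min_rounds:
        return ("yield", user_move)        # early rounds: always yield
    if user_emotion in NEGATIVE_EMOTIONS:
        return ("yield", user_move)        # yield to a frustrated user
    args = available_arguments(h, user_move_is_dead_end)
    if args and h.rejections < max_rejections:
        return ("argue", args[0])          # try to convince the user
    choice = rng.choice(["yield", "toss"])  # nothing left to argue
    return (choice, user_move if choice == "yield" else None)
```

For example, `robot_consider("up", "left", "happy", History(disagreements=2, user_accepted=2))` returns `("argue", "fairness")`, because the robot's suggestions have been accepted less often than the user's.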

5 Methodology

This section describes the pilot study design to evaluate whether initial steps of realizing empathy are triggered in people while using the test bed described above.

5.1 Experimental conditions

Two different collaborative strategies were designed for the players (human and robot) to follow when solving the maze. Each strategy corresponds to one experimental condition:

-

ARG-based: the first strategy, the argumentative condition (an active collaborative strategy), corresponds to the one described in the Consider step in Sect. 4.3. In this case, both players have the opportunity to justify (to some extent) their choices and to influence the decision-making of their partner. Moreover, the robot also takes into account the reported emotional state of its peer during its reasoning. When the user indicates feeling angry or sad, the robot always yields when a discrepancy occurs, aiming to ease the participant’s frustration.

-

TURN-based: the second one is based on turns (passive collaborative strategy), where at each round of the game, the players alternate to decide which move to make next. The action is immediately executed, without space for discussion on the appropriateness of such move. Thus, each player has to accept the other’s choice. Moreover, in contrast to the ARG-based condition, the robot does not take into account the emotional state of the user in its decision-making at all.

5.2 Participants

We recruited 18 participants (age range between 18 and 42, \(M=27\), SD\(=7.1\)) from our university campus mainly from the Engineering Department. We asked participants about their previous experiences with robots. While some self-reported previous experiences with robots, most of the participants indicated not having or having very little experience with them. Table 1 describes our sample demographics.

Due to the difficulty of recruiting participants during the COVID pandemic period, the study was designed as a within-subject experiment, where the order of the two experimental conditions was randomly assigned.

5.3 Procedure

The experiments were performed in a closed room, where a projector displayed the game environment on a flat board on the floor. The participant sat on the floor during the experiment, and the robot was placed facing him/her to allow the user full view of the game and the robot itself.

Participants signed a consent form upon arrival, including a description of the study (avoiding specific references to empathy evaluation to prevent biasing their answers). Next, they went through a familiarization stage aimed at “nudging” an open mindset and setting the same baseline for both conditions, i.e., regulating their expectation (first step of the process). To this end, a set of instructions describing the game, the robot (named Bobby in the study), its limitations and how to interact with it was provided. Additionally, the participant watched a video recording of the robot itself acting as if speaking directly to the subject.

Next, a demographic survey was filled in, as well as an empathy baseline questionnaire, the Toronto Empathy Questionnaire (TEQ) (Spreng et al. 2009). The aim was to assess the empathy level of the participant to evaluate any further correlation between their response to the experiment and their base empathy level.

The participant would then sit on the floor to undergo the first trial in one of the conditions, either the ARG-based condition or the TURN-based condition. After the first trial was finished, the participant filled in a post-questionnaire. Next, the experimenter and the participant sat together to annotate the recorded trial based on the user descriptions (post-recall procedure). The second trial then took place, applying the alternative condition. When the trial was over, the participant completed once again the post-questionnaire and the post-recall procedure. At this point the participant was debriefed. Overall, each experiment lasted around 50 to 60 min per participant.

5.4 Measures

The objective of this pilot study is to evaluate the realization of empathy by observing (i) the participant’s regulation of expectation, (ii) the presence of attachment through affective emulation and (iii) behaviors related to trust. If such indicators are present, then a form of empathy has been reached. Moreover, it is an added assurance if the expectation is not only regulated throughout the experiment, but is also perceived as matched, i.e., as mutual understanding.

To this end, we have designed three types of data analysis: (1) subject perception of the experience through a set of questionnaires, (2) annotation of their perceptions and motivations behind their decision-making in the game through a cued-recall method and (3) subject performance based on their actions and movements throughout the interaction, taken from the logged information of the game. Moreover, qualitative data was gathered as the subjects freely expressed their feelings, observations and natural reactions throughout the experiment, and through an additional open question at the end of the post-questionnaire.

5.4.1 Self-reporting of the experience

Inspired by existing questionnaires, we decided to work with the following bodies of work: (1) the Networked Minds Measure of Social Presence (NMMSP, Footnote 4) (Biocca et al. 2001); and (2) the Game Experience Questionnaire (GEQ, Footnote 5), specifically the Measure of Social Presence section (IJsselsteijn et al. 2013). Though these questionnaires do not use the same language and modeling as we do in the theoretical process of realizing empathy, they tackle aspects of empathy, interrelation and teamwork that relate very closely to it. Therefore, we have designed our own set of questionnaires (Q1, Q2 and Q3) tailored to our context, focusing on the three indicators of our objective:

-

Attachment: Both NMMSP and GEQ questionnaires define empathy primarily as an emotional phenomenon that translates to our indicator of attachment. Items related to affective states in empathy were highly similar in both questionnaires. We thus chose the more comprehensive of the two questionnaires, taking items 4, 8, 9, 10, 11 and 12 from the GEQ Social Presence module.

-

Trust: We analyze trust in collaborative mindset as a concept interlinked to the notions of willingness to be with the other, observing their actions, teamwork, collaborating and having affective trust (Gillath et al. 2021; Rousseau et al. 1998; Ashar et al. 2017). We thus used items 2, 3, 5, 6, 13, 14 and 15 from the GEQ Social Presence module, on the one hand, and all items from the Mutual Assistance NMMSP Behavioral Engagement module, on the other hand. Such items describe the perception of teamwork and assistance of each other.

-

Regulating expectation: While expectation regulation is a dynamic process that takes place throughout the interaction, in this questionnaire we are interested in assessing the outcome of such process as a matched expectation, or mutual understanding. In this sense, we took all items in NMMSP Mutual Understanding module, as well as items 3 and 4 from the Behavioral Interdependence module which relate to our concept of matched expectation.

Table 2 summarizes the items included in our questionnaire (Q1, Q2 and Q3), their sources and the Likert scale adopted for each.

Moreover, we also wanted to assess how the participant felt about the overall experience with the robot in terms of relational closeness (Branand et al. 2019). We thus used the Inclusion of Other in the Self (IOS) scale (Aron et al. 1992) (Q4 from now on). This scale measures how close the respondent feels to another person or group. The scale consists of a set of circles with different degrees of overlap representing the “self” and the “other.” Participants should answer the question: “Which picture best describes your relationship with xxx,” where xxx is replaced with Bobby in our setting. Figure 5 depicts the scale participants used to answer the question. Besides this scale, we also offered an opportunity for the subject to express in their own words how they perceived the robot’s behavior and the experience overall through an open question at the end of each trial.

5.4.2 Cued-recall debrief

We were interested in gathering details of the participant’s perception and motivations behind their decision-making throughout the experiment to best evaluate how these fell within the parameters of our realizing empathy process. We decided against questioning the participant during the game since we believed that such interruptions would have a negative impact on the natural interaction flow with the robot. Hence, we opted for using a cued-recall approach, a form of situated recall (Footnote 6), as a method to elicit information about the user during system use (Bentley et al. 2005). We thus video recorded the participant while s/he was solving the maze with the robot. Once the trial was over, we asked the participant to watch the recorded video while answering a set of structured questions after each user action, classified in three categories:

-

1.

Predictability, to observe if the situation or behaviors were unexpected with respect to the robot or the game. We link these answers to the regulating expectation indicator of realizing empathy.

-

2.

Motivation, to categorize why participants acted the way they did. Responses related to teamwork and testing are linked to empathy development. In the first case, taking into consideration our teammate’s opinion and emotional state is a clear indicator of involvement with the other, and of not basing our decision only on a rational mindset. In this sense, we refer to “implicit yielding” as the act of agreeing or accepting the robot’s choice without further discussion. Such a decision indicates that the participant trusts the robot’s suggestion, hence being a strong indicator of teamwork. In the second case, testing is usually driven by curiosity, a key element in the realization of empathy as the participant regulates his/her expectations in order to develop trust. We link this category to regulating expectation. Finally, random choices indicate that no clear stance has been adopted, neither trust in the self nor in the other. In any case, a lack of trust is evidenced. We thus link this category to the trust indicator of empathy.

-

3.

Joint affective expression, to observe any shift in the way participants describe their emotional state. We specifically look into the use of singular or plural subject pronouns in their speech, i.e., “I” vs “we.” While the former relates to an egocentric point of view, the latter relates to working as a team. We thus link this category to the indicator of attachment.

We allowed the participants to freely answer each question to avoid biasing their responses. We then coded these as described in Table 3.
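The coded annotations lend themselves to simple per-trial ratios, which is how the cued-recall categories are compared between conditions later on. The sketch below is only an illustration: the `(category, code)` tuple layout, the function name and the sample trial are hypothetical, and normalizing within a category is an assumption about how the ratios are formed.

```python
from collections import Counter


def code_ratios(annotations, category, codes):
    """Share of each code among one trial's annotations in `category`.

    `annotations` is a list of (category, code) pairs, one per coded answer.
    """
    counts = Counter(code for cat, code in annotations if cat == category)
    total = sum(counts[c] for c in codes)
    if total == 0:
        return {c: 0.0 for c in codes}  # no coded answer in this category
    return {c: counts[c] / total for c in codes}


# Hypothetical annotations for a single trial.
trial = [
    ("motivation", "teamwork"),
    ("motivation", "rational"),
    ("motivation", "teamwork"),
    ("motivation", "testing"),
    ("affective", "self/other"),
    ("affective", "self"),
]
motivation = code_ratios(trial, "motivation",
                         ["rational", "teamwork", "testing", "random"])
affective = code_ratios(trial, "affective", ["self", "self/other"])
```

Averaging these per-trial dictionaries over participants gives one ratio per code and condition, which can then be compared with a paired test.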

5.4.3 Game performance

While the participant plays with the robot, all actions from both user and robot are stored in log files, along with general statistics on game duration, number of steps taken in the game, paths followed and success or failure in solving the game, among others. In this work, we are only interested in observing in which situations the participant opted for either explicitly accepting (yielding) or denying the robot’s choice in an argumentative process when using the ARG-based condition (Footnote 7). The aim is to provide additional objective evidence related to trust, based on the participants’ motivations for opting for one action or another when facing different stances on where to move next.

Table 4 summarizes the different tools and items used to measure indicators of empathy realization.

5.5 Statistical analyses

We used the Wilcoxon matched pairs test to compare the different responses (both individual and overall scores) obtained from the follow-up questionnaires and cued-recall annotations for the two strategies. Furthermore, we assessed the influence of the degree of empathy of the participants on their responses using Spearman correlation analyses. Finally, we performed additional tests and analyses, namely the Wilcoxon rank-sum test to explore the degree of influence of the first strategy used and gender of the participants on their responses and Spearman correlation analysis to explore the influence of the age of the participants on their responses. We set the significance level to 0.05. All statistical analyses were performed using MATLAB R2018b Update 7 (9.5.0.1298439).
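To make the paired comparison concrete, here is a minimal standard-library sketch of an exact two-sided Wilcoxon signed-rank (matched pairs) test. It is not the MATLAB code used in the study: zero differences are dropped, tied absolute differences receive average ranks, and the p value is taken from the exact distribution over sign assignments, all of which are choices made for this example. The scores are invented.

```python
def wilcoxon_signed_rank(x, y):
    """Exact two-sided Wilcoxon signed-rank test for paired samples.

    Returns (W_plus, p_value), where W_plus is the sum of the ranks of the
    positive differences x[i] - y[i].
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    # Average ranks of the absolute differences (ties share a rank).
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    # Doubled ranks are integers, which keeps the counting below exact.
    r2 = [int(round(2 * r)) for r in ranks]
    w_plus2 = sum(r for r, d in zip(r2, diffs) if d > 0)
    total2 = sum(r2)
    # ways[s]: number of sign assignments whose doubled W+ equals s.
    ways = [0] * (total2 + 1)
    ways[0] = 1
    for r in r2:
        for s in range(total2, r - 1, -1):
            ways[s] += ways[s - r]
    # The null distribution is symmetric, so double the smaller tail.
    tail = min(w_plus2, total2 - w_plus2)
    p = 2 * sum(ways[:tail + 1]) / 2 ** n
    return w_plus2 / 2, min(1.0, p)


# Hypothetical paired scores (same participants, two conditions).
arg_scores = [5, 6, 4, 7, 5, 6]
turn_scores = [3, 4, 4, 5, 2, 6]
w_plus, p_value = wilcoxon_signed_rank(arg_scores, turn_scores)
```

With samples as small as in a pilot study, the exact distribution is cheap to enumerate; statistical packages may switch to a normal approximation for larger samples, so their p values can differ slightly from this sketch.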

5.6 Hypotheses

The pilot study aims at verifying whether early indicators of empathy realization are present after interacting with the robot in the maze game when applying different collaborative strategies. Thus, we seek to validate the following hypotheses:

H1 An active collaborative strategy between human and robot will elicit affective response from the subject, developing a perceived and observed sense of attachment. We expect to observe higher scores in Q1, and more frequent occurrences of the “self/other” item and less frequent occurrences of the “self” item in the cued-recall joint affective expression category when applying the ARG-based condition (active collaborative strategy) compared to the TURN-based condition (passive collaborative strategy).

H2 An active collaborative strategy between human and robot will promote teamwork attitudes where the human is open to the robot’s suggestions. We expect to observe higher scores in Q2, more frequent occurrences of the “teamwork” item and less frequent occurrences of the “random” and “rational” items in the cued-recall motivation category when applying the ARG-based condition (active collaborative strategy) compared to the TURN-based condition (passive collaborative strategy), reflecting a basis of trust.

H3 An active collaborative strategy between human and robot will increase the perception of matched expectation from the subject toward the robot compared to a passive collaborative one. We expect to observe higher scores in Q3 when applying the ARG-based condition (active collaborative strategy) compared to the TURN-based condition (passive collaborative strategy).

H4 An active collaborative strategy between human and robot will promote a discovery process driven by curiosity to regulate the expectation of the subject toward the robot. We expect to observe in the cued-recall predictability category more frequent occurrences of the “intelligence” and “behavior” items and less frequent occurrences of the “situational” one, and more frequent occurrences of the “testing” item in the cued-recall motivation category when applying the ARG-based condition (active collaborative strategy) compared to the TURN-based condition (passive collaborative strategy).

H5 An active collaborative strategy between human and robot will promote higher levels of closeness perception compared to a passive collaborative one. We expect to observe higher scores in Q4 when applying the ARG-based condition (active collaborative strategy) compared to the TURN-based condition (passive collaborative strategy), unveiling a general indicator of empathy realization.

6 Results

We next describe the quantitative data gathered in this pilot study.

6.1 Influence of gender, age, empathy baseline and order of strategies

First, we wanted to know whether there was any difference in the results obtained throughout the study with regard to gender, age, empathy base level and order of strategies applied in the within-subject experiment. The aim was to evaluate whether there was any bias in the data that should be taken into consideration when analyzing the participants’ scores. We ran the following statistical analyses, considering the difference between the two strategies as the outcome variable:

-

1.

We performed a Spearman correlation analysis to assess the influence of the degree of empathy base level of the participants on their responses. We found a statistically significant association between the degree of empathy and the differences in items T8 in Q2 (p value\( = 0.0402\)) and E2 in Q3 (p value\( = 0.0120\)), respectively.

-

2.

We used a Wilcoxon rank-sum test to assess the degree of influence on the participants’ responses of the first strategy used with respect to the second one. In other words, whether the first trial had any impact on the responses of the second one (regardless of the strategy used). In this case, we found a statistically significant association between the first strategy used and the difference in item E1 in Q3 (p value\( = 0.0334\)).

-

3.

We performed a Spearman correlation analysis to assess the influence of age of the participants on their responses. Age was statistically associated with the difference in item T1 (p value\( = 0.0194\)) and T6 (p value\( = 0.0164\)) in Q2, respectively.

-

4.

A Wilcoxon rank-sum test was used to assess the influence of gender on the participants’ responses. The difference in item T3 in Q2 was statistically different across gender groups (p value\( = 0.0043\)).

Despite obtaining significant differences for a few items, the items involved in the previous associations did not show statistical associations with the scores (see next Sections). Therefore, we can compare the different results in both conditions independently of gender, age, empathy level and first strategy used.
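As an illustration of the correlation analyses above, the following sketch computes Spearman's rank correlation between a baseline score and the per-participant difference between conditions, using only the standard library. The function names and the numbers are hypothetical, ties receive average ranks, and the associated p value is deliberately left out.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the average ranks.

    Raises ZeroDivisionError if either input is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(var_x * var_y)


# Hypothetical data: baseline empathy score and (ARG - TURN) item difference.
baseline = [40, 52, 47, 60, 55]
item_difference = [0, 1, 1, 2, 2]
rho = spearman_rho(baseline, item_difference)
```

A value of `rho` near 1 or -1 would indicate that the difference between conditions varies monotonically with the baseline score; whether it is significant requires the corresponding test.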

6.2 Attachment

The following analysis of attachment is based on questionnaire Q1 and the joint affective expression category from the cued-recall method.

6.2.1 Questionnaire Q1

No significant differences could be observed in any of the items of Q1. As observed in Fig. 6 (left), both strategies scored very similarly in all items. We thus cannot reach any conclusion from this evaluation alone.

6.2.2 Joint affective expression

As shown in Fig. 6 (right), we have computed the average ratio of annotations related to “self” and to “self/other,” i.e., percentage of occurrences where the participant described his/her affective state using singular vs. plural pronouns. Contrary to our expectations, the TURN-based condition achieved higher scores for the “self/other” item compared to the ARG-based one, which means that more often participants in the TURN-based condition made joint references.

Overall, we cannot confirm H1. However, we did observe differences in the language used when participants naturally described specific situations while solving the maze, or even in the way they directly addressed the robot. As we will discuss in Sect. 7, such differences might provide insights on the development of attachment which could not be observed in the quantitative data presented here. In any case, no significant differences were found between the two conditions.

6.3 Trust

The following analysis of trust is based on questionnaire Q2, the motivation category from the cued-recall method and the game performance log.

6.3.1 Questionnaire Q2

Figure 7 depicts the average outcomes for each item in Q2. A significant difference (p value\( = 0.0107\)) between the two conditions was found on item T3 (“Bobby paid close attention to me”). No significant differences were found in the remaining items. Moreover, no clear indications toward higher values for the ARG-based condition can be observed either. Hence, we cannot confirm H2 based on Q2.

6.3.2 Motivation

As described earlier, we are interested in understanding the motivation behind the actions taken by the participant, i.e., why they opted for one action or another. We thus compute the average ratio of annotations coded as rational, teamwork, testing and random. Significant differences were found between the two conditions with respect to teamwork (p value\( = 0.0074\)) and testing (p value\( = 0.0313\)) (Fig. 8). For teamwork, we can thus confirm that the ARG-based strategy promoted teamwork behaviors (i.e., accepting the robot’s suggestions and taking into consideration its emotional state). The testing item is related to a process of expectation regulation discussed later on.

With respect to the other two items, we can observe higher average rational responses for the ARG-based strategy compared to the TURN-based one, although the difference is not significant. On the contrary, more random choices were made during the TURN-based strategy, which suggests that participants more often relied on luck instead of trusting the robot’s opinions. Nevertheless, no significant difference was found either.

Based on these results, we can state that H2 is partially supported.

6.3.3 Game performance

We were interested in observing the game state and argumentation outcome when the participant’s choice did not match the robot’s suggestion, i.e., when disagreement on the direction to follow next took place (Footnote 8). The only possible outcomes were: user explicitly yields, robot explicitly yields, user forces the robot to yield and coin toss (Footnote 9).

Figure 9 depicts a set of heatmaps representing the frequency of argumentation outcomes throughout all trials where the ARG-based strategy was used (the TURN-based strategy is not included in this analysis since it does not allow space for discussion on the next move to perform). We can observe that:

-

As described in Sect. 4.3, the robot always yields when the first two disagreements arise. We can see in Fig. 9b that most occurrences (26 out of 36) took place in the first part of the maze (Fig. 9a), where most uncertainty is present. This is not only because very few walls are visible when the players start solving the maze (the walls only appear as the players walk through it), but also because it is the area where most crossroads are placed. Hence, opposing views easily arise, and it is an opportunity for the robot to yield to build a first sense of involvement and trust between the players.

-

The next two critical situations, where the direction in which to move is unclear, are within the middle–right area of the maze. At this point, it is the user’s chance to demonstrate trust in the robot and accept its suggestion. As we can observe in Fig. 9c, 5 out of 7 occurrences of yielding to the robot’s suggestion took place at one of these junctions (cells (4,4), (5,4) and (6,3)).

-

There are two obvious circumstances where a dead end will be reached if the players follow that path: cells (6,1) and (4,4) or (4,3) (depending on how the maze has been explored and thus, which walls have been unveiled). However, the robot is not aware of such a situation until it is too late, i.e., when the dead end is reached (Footnote 10). Thus, only the user can foresee non-visited visible dead ends. Figure 9d shows that participants forced the robot to yield to their choice on 8 occasions, but mainly when a dead end was on the way (7 out of 8 times).

-

Finally, coin tossing is also an option when uncertainty is present. As shown in Fig. 9e, 4 single occurrences of this choice are scattered around the first and second areas of the maze, while the highest frequency (3 occurrences) takes place at the final crossroad before reaching the goal.

Overall, when an argumentative process started in situations where no clear path was foreseeable, participants equally balanced the option of yielding to the robot’s suggestion or tossing the coin. However, except for one occasion, participants only used their option to force the robot to yield when strictly necessary, i.e., when dead ends were present.

6.4 Regulating expectation

The following analysis of regulating expectation is based on questionnaire Q3, the predictability category and the testing item of the motivation category from the cued-recall method.

6.4.1 Questionnaire Q3

A significant difference (p value\( = 0.0012\)) between the two conditions was found in the overall measure of regulating expectation (summed scores of Q3 items), as shown in Fig. 10 (left). Moreover, individual significant differences were obtained in items E1 (p value\( = 0.0071\)), E3 (p value\( = 0.0166\)) and E5 (p value\( = 0.0313\)) (Fig. 10 right). We can thus confirm H3, where a perception of matched expectation from the subject toward the robot develops when using an argumentative-based strategy.

6.4.2 Predictability

We finally looked into understanding why certain situations in the game could be unexpected to the participants. No significant differences were found between the two conditions in any of the categories. The average ratio of unexpected situations is very similar in both conditions: \(M=17.20\%\) (SD\(=10.91\%\)) for the ARG-based, and \(M=16.89\%\) (SD\(=15.80\%\)) for the TURN-based. Interestingly, the distribution of unanticipated situations varies considerably for each condition (Fig. 11). While situational elements are the most frequent source of surprise in the TURN-based condition, the robot features (both intelligence and behavior) are the most noticed in the ARG-based one. Such results suggest that participants in the TURN-based condition were more focused on or aware of the game features (mainly unexpected dead ends encountered along the path) than on the interaction with the robot. Despite observing a trend where the participants were more interested in the robot, no clear evidence could be obtained through this measure.

6.4.3 Motivation

We did find a significant difference in the “testing” item of the motivation category (Fig. 8). This item describes “messing with the robot” behaviors, related to an attempt to satisfy participants’ curiosity toward the robot’s behavior and capabilities.

Based on the two measures described above, we can thus partially confirm hypothesis H4, where a collaborative strategy promoted a discovery process driven by curiosity to regulate the expectation of the subject toward the robot.

6.5 Overall empathic response

The analysis of the responses to Q4 indicated a significant difference (p value\( = 0.0045\)) in the level of perceived closeness between the robot and the participant across conditions. As shown in Fig. 12 (left), the ARG-based condition achieves a higher average score (\(M=5.11\), SD\(=1.13\)) compared to the TURN-based one (\(M=3.83\), SD\(=1.79\)). A closer look at the frequency of participants’ answers for each condition (Fig. 12 right) shows that:

-

ARG-based: Most scores fall between “equal overlap” and “very strong overlap” (17 out of 18), showing clear indication that closeness was positively perceived at least equally and to even higher extent by almost all participants.

-

TURN-based: The most rated statement was “some overlap” (6 participants), followed by “little overlap” (3 participants). The remaining participants’ responses are distributed along the different scores, thus evidencing little consistency across the perception of closeness with the robot.

With these results, we can thus confirm hypothesis H5, i.e., an argumentative-based strategy does have an impact on the perception of the closeness with the other, which in turn, reveals potential growth of empathy.

7 Discussion

We next discuss the results obtained in this study, both from a quantitative and a qualitative perspective. Since we focus on the process of realizing empathy and not on empathy as a singular phenomenon, we measure indicators of such process through observing the development of affective attachment, trust and regulating expectations.

7.1 On attachment

Based on the Q1 results presented, we observe that attachment indicators are equally present throughout the experiment in both conditions (achieving scores above 3 on average). Moreover, contrary to our expectations, joint affective expressions showed a trend to be more frequent in a passive collaborative strategy compared to an active one.

Nevertheless, qualitative data does suggest a shift in affective attachment between the two conditions. Such data was collected at two stages: (1) during the trials, where participants’ natural reactions while interacting with the robot or when describing situations in the cued-recall process were annotated; and (2) through participants’ written feedback in an open question at the end of each trial. In the first case, participants in the ARG-based condition reacted naturally to the experience when: (1) they would “speak” to the robot saying things like “We are done,” “we are happy,” “we have done it!,” or (2) they would comment things about the experience such as “It’s fun. Should have last longer,” or “in the experience you get into the character, it feels natural.” In the latter case, multiple participants wrote they felt the “experience was better,” “more involved,” “more fun,” that the robot was “nice to me” or that “this time around I felt the robot’s emotions more.”

In contrast, participants in the TURN-based condition greatly varied in the use of affective expressions throughout the trials. Some were more self-indicative, such as “I completed the maze,” while others focused on how they viewed Bobby’s feelings: “I feel bad for Bobby,” “Sorry Bobby!,” “Bobby is sad,” “it doesn’t feel right to be happy [when Bobby is sad].” With regard to the open question, the most frequently used phrase in reference to attachment was that they felt the robot acted “not emotionally connected.”

It is also worth mentioning that in both conditions participants had an embodied instinctive reaction to the robot’s affective cues, i.e., by laughing, pouting or smiling. Overall, there is no clear evidence, nor trend, that an active collaborative strategy between human and robot as designed in this experiment promotes the development of attachment more than a passive one. We thus believe that either the measures used in this study or the experience itself were not appropriate to reflect the indicator of attachment. In future assessments, we recommend enhancing tools that allow the participant to share their affective states more seamlessly, as well as using more thorough quantitative measures of the enhanced experience. Though we understand the limitations we had in our experiment in this regard, we continue to see signs of the potential impact of affective attachment in the qualitative speech we gathered. Hence, there is much room for improvement in both aspects that merits further research.

7.2 On trust

The results obtained in the quantitative analysis partially support the hypothesis that an active collaborative strategy will promote teamwork attitudes, where the human is open to the robot’s suggestions. Yet, it also suggests that there is more to it than just agreeing with the robot, i.e., the willingness to act as a teammate.

Participants indicated that the motivation driving their decisions lay in their desire to follow the robot’s suggestion and not wanting to contradict or oppose the robot, believing that the robot might be right or that “its suggestions make more sense.” This idea is supported by qualitative statements of their experience. Quite a few participants specifically used the term “cooperative” when describing the ARG-based condition, while others described the robot as having “leadership” qualities. In natural response statements, we have recorded participants saying things such as “we both agree” or “we made mistakes together,” “we are in-tuned,” “I trust Bobby” or “he was excited about going that way. I respect his wishes, it is all the same to me.” In contrast, most participants in the TURN-based condition lacked a sense of teamwork, and when in doubt about where to go, they preferred to make decisions based on luck. The open question at the end of the trial reflected that most participants viewed the robot as being “more individual and independent” and the experience “not being about teamwork.”

On deeper inspection, based on the game performance analysis and the cued-recall process, we realized that trust in the robot was not present at the same level throughout the movements in the maze. Instead, it depended on the participants’ position on the map. When the trial started, trust was initiated through the robot’s concessions. As they progressed, we observed that the subject shifted back and forth between trusting the robot, often explicitly, and implicitly yielding to him/herself. These observations are supported by the qualitative comments of the participants.

We thus believe that trust itself evolves as a process. Of the measures used in this study, the cued-recall method was capable of evidencing such progress, while the questionnaire items in Q2 were not. Thus, alternative measures are required to assess the development of trust and to capture what happens when human and robot balance leadership in an active collaborative scenario, and how this affects trust. It is important to note that this study addressed a ludic and neutral experience useful for gathering initial insights. However, a context that carries higher risk or consequences, such as a professional setting, may heighten the value and contrast of trust and the sense of connection in relation to the process of realizing empathy.

7.3 On regulating expectation

Contrary to trust and attachment, we clearly observed through the designed measures that, when it came to regulating expectation, users were both adapting that expectation and, ultimately, self-reporting whether it was matched.

As our hypothesis states, a collaborative strategy between human and robot will promote a discovery process to regulate the expectation of the subject toward the robot. Results show that the ARG-based condition opened the door for participants to discover and test the robot, driven by curiosity. Participants wanted to see what it was ultimately capable of, which led to more subjects reporting unpredictable events related to the robot’s behavior and focused on their play with their partner. Again, these observations are supported by qualitative remarks that users made during the experiment, such as: “don’t use that argument! neither of us could have known,” “its not a bad idea to try something different,” “[after arguing with the robot to play with it and eventually yielding] I was good to Bobby, it was just a joke, so Bobby could laugh a little bit,” “I feel synchronized with Bobby,” or “I can see that he respects that I went to the right, so I will do the same.” In stark contrast, in the TURN-based condition participants reported that unpredictable events were mostly associated with the maze itself, i.e., the environment rather than the robot, as their frustrations stemmed from finding walls along the way.

After the trials, it was pivotal to gather data on how participants self-reported their expectations and whether these were matched. We consider that matching occurs when the subject reports a mutual understanding, clear intentions and knowledge of the other. Results in Q3 showed a significant impact: participants felt more understood and believed they had clearer knowledge of the robot’s intentions in the ARG-based condition. The qualitative responses support this finding, as several participants used the term “clear behavior” to describe the robot’s intentions. A clear difference can also be observed in the participants’ responses in the TURN-based condition, where the data become rather neutral with no significant pattern, highlighting the lack of connectivity, understanding and teamwork. In the case of regulating expectation, both the design of the study and the measures served the purpose of this experiment well, but there is still untapped potential in understanding these shifts and their relation to the affective attachment and trust processes.

7.4 Overall

Taking all these results into consideration, we had one more element from which to draw a cohesive conclusion: a general indicator of how participants felt overall about their experience with the robot, in terms of the subject’s self-reported relational closeness to the robot (as a result of affective presence, trust behavior and matched expectation). Q4 helps us visualize this generalization, and the results show a very significant difference: the ARG-based condition induced participants to feel closer to the robot, despite moments of frustration, unexpectedness and waves of trust. In contrast, the TURN-based condition revealed what many participants had already spoken and written: they felt the experience was more individual and not as close.

7.5 Limitations

We next summarize the main limitations of the current pilot study that should be considered when evaluating the results obtained:

- Gender bias: our sample includes more male than female participants (2/3 male, 1/3 female). Although we found no statistical association between gender and the outcomes in our study, there is some evidence that women tend to exhibit more empathetic responses than men (Rueckert et al. 2011), which should be taken into account in future studies.

- Background bias: most of our participants had a technology-related background. While such a background could have had some influence on the evaluation, the within-subject study design means that it should not affect the comparison between conditions. However, understanding how people with different backgrounds and past experiences empathize with robots is essential, and should be included in future work.

- Number of participants: the small sample size of this study did not allow us to find a larger number of statistically significant differences in certain items where the mean values appeared to differ depending on the strategy used (e.g., expectation item E7). However, we aim to leverage the information gathered through this pilot study to design a better-powered study. In this regard, a sample size calculation using a t-test with a two-sided 0.05 significance level revealed that a sample size of 49 provides 80% statistical power to detect statistically significant differences in E7 scores (mean of difference = 0.89; standard deviation of difference = 2.1; effect size = 0.42).

- Experiment duration: this particular experiment allowed us to capture a good amount of data that reflects our current objective of observing indicators of early empathy realization. The next step is thus to evaluate whether the realization of empathy is sustained through time, in which case longer and recurrent trials will be necessary.

- Tools: data collection and measurement tools need revision according to the nature of the elements being studied. For example, if trust is a process and is not meant to carry a constant weight on one side or the other, the measuring tool must reflect this. We thus ask to what extent questionnaires should be used to capture processes or, on the contrary, should focus only on outcomes.
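The sample size estimate given under "Number of participants" can be illustrated with a short calculation. The sketch below is only an approximation under stated assumptions: it treats the test as a paired (within-subject) t-test and uses the standard normal approximation, neither of which is spelled out in the text. The function names are hypothetical and the code is not the procedure actually used in the study.

```python
import math
from statistics import NormalDist


def effect_size(mean_diff: float, sd_diff: float) -> float:
    """Standardized effect size for paired differences (mean / SD)."""
    return mean_diff / sd_diff


def paired_sample_size(mean_diff: float, sd_diff: float,
                       alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of pairs for a two-sided paired t-test.

    Assumption: normal approximation
        n = ((z_{1 - alpha/2} + z_{power}) / d) ** 2
    which tends to give a slightly smaller n than an exact
    calculation based on the noncentral t distribution.
    """
    d = effect_size(mean_diff, sd_diff)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = NormalDist().inv_cdf(power)          # quantile for target power
    return math.ceil(((z_alpha + z_power) / d) ** 2)


if __name__ == "__main__":
    # Values reported for expectation item E7.
    d = effect_size(0.89, 2.1)
    n = paired_sample_size(0.89, 2.1)
    print(f"effect size d = {d:.2f}")
    print(f"approximate number of pairs = {n}")
```

With the E7 values, the effect size rounds to the reported 0.42, and the approximation gives a sample size in the mid-forties. This is slightly below the reported 49, as expected from a normal approximation; the exact figure depends on the software and settings used, which the text does not specify.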

7.6 Takeaway message

Despite the limitations and areas that need further refinement, the results are in line with the stated hypotheses, and we have taken a step further in that we see clear indications that empathy was being realized. The analyzed data signal that a collaborative strategy, which allows for reflection as well as argumentation, became a powerful tool in how participants see their relation with the robot. It means that both parties get to have a say, a sense of freedom and control, even though the participants always had all the tools needed to make all the decisions themselves. In other words, participants decided that they wanted to play and collaborate with the robot, instead of ignoring or playing against it. This is evidenced by the fact that participants associated the ARG-based condition with a “cooperative experience” with the robot as a “team member,” while also feeling connected and automatically referring to the experience as “more fun” or “feeling more emotionally connected to the robot.”

As we analyzed the quantitative data in this new study, we realized that the measures did capture individual instances of the empathetic interaction indicators. However, it was also revealed that we need to capture with quantitative measures the rich detail in the nuances throughout the participant’s experience, and the cause/effect between the appraised situations and their rational/emotional response, as observed in the qualitative data.

Therefore, we believe that we need to develop measures that can truly capture the internal processes that the participant experiences as s/he engages with the robot. We envision a set of mapped trends for each of the variables under study (affective attachment, trust, and regulating expectation), where specific events happening at one layer may impact the other layers, thus allowing us to clearly observe correlations between them that may reflect the divergent and convergent effects of the process of realizing empathy.

8 Conclusion

As discussed in the theoretical background, empathy is viewed as a combination of emotional mirroring, situational embodiment from both an emotional and a cognitive standpoint, and a multilayered mechanism that includes active response and willingness to share experiences. Therefore, in this body of work, we focus on the process of realizing empathy, which goes beyond viewing empathy as a singular phenomenon of emotional mirroring and instead treats it as an active body of constant emotional and cognitive exchanges that may help best engage and sustain a person’s experience with other agents through time; in other words, developing a relationship with them. Thus, our aim is not to evaluate empathy as a singular event, but to measure indicators of this process by observing the development of affective attachment, trust and regulating expectations. Through this process, the involved actors engage in mutual understanding of intent, listening, reflecting and acting, all within a conducive environment, with an open mindset and tools for communication.

This work presents an extended version of our prior work (Corretjer et al. 2020), where we focus on quantitatively studying indicators of early empathy realization between a human and a robot in a maze challenge scenario. To this end, we designed a pilot study in which we specifically look into attachment, trust and expectation regulation as indicators to validate our hypotheses. Two collaborative strategies were used by the human and robot to solve the maze: an active one (ARG-based condition) and a passive one (TURN-based condition). The main difference between them is that the active one opens space for discussion between those involved, where consideration of additional factors (such as emotional state, justification of different viewpoints, etc.) plays a key role in the process of understanding and supporting the other. The results obtained confirmed that a general sense of closeness with the robot was perceived within the ARG-based condition, in both the quantitative and the qualitative data. Focusing on the individual indicators of empathy realization, we could not confirm strong differences between the two conditions with respect to the development of attachment. However, we did partially confirm the development of trust based on the motivations behind the decisions taken by the participants. Here, participants clearly displayed teamwork attitudes when accepting the robot’s suggestions in the face of uncertainty, evidencing a sense of trust. Unfortunately, the questionnaires did not confirm differences between the two conditions, and hence did not fully validate this hypothesis. Finally, we did confirm that an active strategy influences the process of expectation regulation.

Thus, despite the limitations and areas that need further refinement, we can confidently conclude that an active collaborative strategy favorably impacts the process of realizing empathy compared to a passive one. The active strategy allows a mutual exchange in reflection and argumentation based on reason and affection, which became a powerful tool in how participants view their relation with a social robot. To them, it means that both get to have a say and to have freedom and control.

De Vignemont and Singer (2006) suggest that the social role of empathy “is to serve as the origin of the motivation for cooperative and pro-social behavior, as well as help for effective social communication.” Based on our results and analysis, we are emboldened to believe that we have taken a step closer toward this view, where we may be able to promote people’s willingness to interact with social robots, reducing disillusionment and abandonment of the engagement by inducing an empathetic interaction with specific tools. We now look forward to next steps that close some of the gaps and limitations of this study, particularly by introducing a more seamless affective experience and measurement tools that best reflect the nature of trust and attachment. To do so, we plan to endow the robot with a more complex ability to express affective states and to observe the participant’s affective states and behaviors, while continuing to refine the use of the realizing empathy process.

Notes

The goal of this work is not to develop a robotic system capable of moving through a maze to reach the exit, but to study the development of empathy between humans and robots.

There are situations where dead ends become visible prior to visiting the cells in the maze. Unlike the human, the robot is only aware of the maze cells once they are visited. Hence, this argument is only available to the human actor and, if raised, the robot will immediately yield without further arguing.