Abstract

We consider a large population dynamic game in discrete time where players are characterized by time-evolving types. It is a natural assumption that the players’ actions cannot anticipate future values of their types. Such games are known as dynamic Cournot-Nash games, and were first studied by Acciaio et al. (SIAM J Control Optim 59:2273–2300, 2021), as a time/information dependent version of the games devised by Blanchet and Carlier (Math Oper Res 41:125–145, 2016) for the static situation, under an extra assumption that the game is of potential type. The latter means that the game can be reduced to the resolution of an auxiliary variational problem. In the present work we study dynamic Cournot-Nash equilibria in their natural generality, namely going beyond the potential case. As a first result, we derive existence and uniqueness of equilibria under suitable assumptions. Second, we study the convergence of the natural fixed-point iteration scheme in the quadratic case. Finally, we illustrate the previously mentioned results in a toy model of optimal liquidation with price impact, which is a game of non-potential kind.


1 Introduction

In this paper we consider a discrete-time dynamic game of mean field type. In this game, a representative player takes actions in time so as to minimize a cost functional which depends on her type, her action, and the distribution of actions of the whole population of players. Crucially, players’ types may encode different characteristics or preferences, and may change progressively in time. The players’ actions on a given date are only allowed to depend on their types up to that date, introducing an adaptedness, or non-anticipativity, constraint into the game. The solutions to this game are dubbed dynamic Cournot-Nash equilibria following Acciaio et al. [2]. As in mean field games, searching for equilibria in dynamic Cournot-Nash games boils down to solving a fixed point problem, and an equilibrium of these games allows one to build approximate equilibria in related large population games. See [2, Section 2] for a detailed discussion on the connection between finite and infinite population versions of dynamic Cournot-Nash games.

Building on the work [16] by Blanchet and Carlier, it was shown in [2] that the emerging field of causal optimal transport provides the right framework to describe dynamic Cournot-Nash games. However, when it comes to establishing existence or uniqueness of equilibria, the aforementioned paper makes the crucial assumption of the game being of potential type. In a nutshell, this amounts to a structural assumption under which equilibria correspond to minimizers of an auxiliary variational problem. However, the assumption of being of potential type is not ideal for multiple reasons. First, there are commonly used games/models of non-potential structure. Second, the link between causal optimal transport and dynamic Cournot-Nash games is blurred when one superimposes such a structural assumption. Finally, the method proposed in [2] was not only restricted to the potential case, but also relied on a further cost-separability assumption, namely that the type of a player does not interact with the distribution of actions within the cost function. The goal of the present paper is to remedy these shortcomings, following the blueprint set forth in [15], by Blanchet and Carlier, for the static case.

We now summarize our contributions in some detail.

In Sect. 2 we define the problem, recall its connection with causal optimal transport together with the elements of that theory we need, and study the question of existence of (mixed) Nash equilibria. As customary, this is done by considering the best-response correspondence, which in our case assigns to any prior distribution \({\nu }\) of actions for the population of players the set \(\Phi ({\nu })\) of optimal responses by a single player. Using causal transport, we establish the closedness and convexity of the set \(\Phi ({\nu })\). Applying the Kakutani fixed point theorem, we obtain the existence of equilibria in our games under suitable assumptions. Finally, a uniqueness result is derived from a Lasry-Lions monotonicity condition.

In Sect. 3 we assume a specific structure of the cost functional of the game, which allows us to find the equilibrium using the contraction mapping theorem. To do so, we use the structure of the game in order to get a hold on the best response correspondence. To this end we use the fact that, conditioning on the past evolution of types, the optimal response can be constructed backwards (i.e. recursively) in time. Under appropriate Lipschitz and convexity assumptions, we prove that the best response is a contraction.

In Sect. 4, we introduce and study a simple optimal liquidation problem in a price impact model. We first describe this model, and then establish the applicability of the results of Sect. 3. We prove that the game is not of potential type, and hence cannot be covered by the existing literature. Furthermore, we provide an example which illustrates how to compute the optimal response map and equilibrium.

We close this introduction by giving a broader overview of the related literature.

1.1 Related literature

The games we are concerned with are closely related to mean field games (MFG) in a discrete-time setting (see e.g. Gomes et al. [20]). For this parallel, the different types of agents considered in our setup correspond to different subpopulations of players in the MFG. The theory of mean field games aims at studying dynamic games as the number of agents tends to infinity. It was established independently by Lasry and Lions [24, 25] and by Huang, Malhamé and Caines [21, 22], and has since seen a burst in activity, as e.g. documented in the monograph by Carmona and Delarue [18]. See Cardaliaguet’s notes [17], based on P.L. Lions’ lectures at Collège de France, for seminal results on mean field games, and also Bayraktar et al. [9,10,11] or Cecchin and Fischer [19] for the study of finite state mean field games. The key assumption is that players are symmetric and weakly interacting through their empirical distributions, and the idea is to approximate large N-player systems by studying the behaviour as \(N \rightarrow \infty \).

On the other hand, the notion of Cournot-Nash games has been pioneered by Blanchet and Carlier [14, 16] who, building on the seminal contribution of Mas-Colell [27], developed a connection between static Cournot-Nash equilibria and optimal transport. From a probabilistic perspective, large static anonymous games have been studied by Lacker and Ramanan in [23], with an emphasis on large deviations and the asymptotic behaviour of the Price of Anarchy. We also refer to this paper for a thorough review of the (vast) game theoretic literature. Building on this body of work, Acciaio et al. introduced in [2] the concept of dynamic Cournot-Nash game/equilibria. Working in the so-called potential case, that article studied questions of existence, convergence from finite to infinite populations, and computational aspects. Crucially, the article observed that instead of optimal transport, it is the theory of causal optimal transport, which we discuss in the next paragraph, that plays the main role in the mathematical analysis of these games. Another article that took a similar, variational point of view is [12], wherein competitive games with mean field effect were studied. The advantage of the potential / variational setting is that instead of studying an equilibrium problem, an auxiliary optimization problem is solved, which is in many ways better suited for analysis and computational resolution. To the best of our knowledge, the only article where non-potential (with non-separable costs) static Cournot-Nash games have been studied is Blanchet and Carlier’s [15]. That article serves us as inspiration as we carry out our analysis of the dynamic case in similar non-potential settings.

As already mentioned, to deal with our dynamic setting, it is the tools from causal optimal transport (COT) rather than classical optimal transport that play a role. In a nutshell, COT is a relative of the optimal transport problem where an extra constraint, which takes into account the arrow of time (filtrations), is added. This in turn is crucial to ensure, in our application, the adaptedness of players’ actions to their types in a dynamic framework. The theory of COT, used to reformulate our asymptotic equilibrium problem, has been developed in the works [7, 26]. This theory has been successfully employed in various applications, e.g. in mathematical finance and stochastic analysis [1, 3, 6, 8], in operations research [28,29,30], and in machine learning [4].

We close this part by clarifying the similarities and differences between Cournot-Nash and mean field games (see also [2, Remark 3.8] for a related explanation). To simplify the matter we only discuss a static situation. In an N-player symmetric game, if players adopt the decisions \(\{y^N_j\}_j\) then the cost faced by player i is \(F(y^N_i,\frac{1}{N}\sum _{j\ne i}\delta _{y^N_j})\). In a (pure) Nash equilibrium we have \(F(y^N_i,\frac{1}{N}\sum _{j\ne i}\delta _{y^N_j})\le F(z_i,\frac{1}{N}\sum _{j\ne i}\delta _{y^N_j}) \) for each \(i\le N\) and any \(z_i\). Taking averages in these inequalities and a compactness argument, which gives a subsequence that \(\frac{1}{N}\sum _{j\le N}\delta _{y^N_j}\rightarrow {\hat{\nu }}\), provides us heuristically with a static mean field equilibrium: \(\int F(y,{\hat{\nu }}){\hat{\nu }}(dy)\le \int F(y,{\hat{\nu }})\nu (dy)\) for all \(\nu \) probability measure over decisions. For the static Cournot-Nash case the N-player game story is quite similar, but now player i has a type \(x^N_i\) and faces the type-dependent cost \(F(x_i^N, y^N_i,\frac{1}{N}\sum _{j\ne i}\delta _{y^N_j})\). In a Nash equilibrium we thus have \(F(x_i^N, y^N_i,\frac{1}{N}\sum _{j\ne i}\delta _{y^N_j})\le F(x_i^N, z_i,\frac{1}{N}\sum _{j\ne i}\delta _{y^N_j}) \) for each \(i\le N\) and any \(z_i\). If we take averages, assume that the types \(x_i^N\) are e.g. i.i.d. samples distributed according to \(\eta \), and apply a compactness argument, which yields a subsequence \(\frac{1}{N}\sum _{j\le N}\delta _{(x_j^N, y^N_j)}\rightarrow {\hat{\pi }}\), we get heuristically a static Cournot-Nash equilibrium: \(\int F(x,y,{\hat{\nu }}){\hat{\pi }}(dx,dy)\le \int F(x,y,{\hat{\nu }})\pi (dx,dy)\) for all \(\pi \) probability measures over types and decisions with x-marginal \(\eta \), while here \({\hat{\nu }}\) is the y-marginal of \({\hat{\pi }}\). 
If we consider players of the same type belonging to the same sub-population, then Cournot-Nash games are very close to multi-population mean field games (cf. [5]), with the caveat that it is the aggregate distribution of decisions that is included in the cost criterion, i.e. we do not disaggregate the decisions of the population along the various sub-populations. Mathematically this corresponds to \({\hat{\nu }}\) (the second marginal of \({\hat{\pi }}\)) being the last argument of F, instead of \(x\mapsto {\hat{\pi }}_x\) (the family of conditional probabilities given the first coordinate).

Notation. Let \(\mathcal {X}_1, \cdots , \mathcal {X}_N, \mathcal {Y}_1, \cdots , \mathcal {Y}_N\) be Polish spaces, and take \(\mathcal {X}:=\mathcal {X}_1 \times \cdots \times \mathcal {X}_N, \mathcal {Y}:=\mathcal {Y}_1 \times \cdots \times \mathcal {Y}_N\). Define \(\mathcal {X}_{s:t}= \mathcal {X}_s \times \cdots \times \mathcal {X}_t\) and \(\mathcal {Y}_{s:t}= \mathcal {Y}_s \times \cdots \times \mathcal {Y}_t\) for \(1 \le s \le t \le N\). For \(x \in \mathcal {X}\), we denote \(x_{s:t}=(x_s, \cdots , x_t)\) for \(1 \le s \le t \le N\), and similarly define \(y_{s:t}\) for \(y \in \mathcal {Y}\). Denote the canonical filtration on \(\mathcal {X}\) and \(\mathcal {Y}\) by \((\mathcal {F}^{\mathcal {X}}_t)_{t=1}^N\) and \((\mathcal {F}^{\mathcal {Y}}_t)_{t=1}^N\) respectively. For any Polish space \(\mathcal {Z}\), we denote by \(\mathcal {P}(\mathcal {Z})\) the space of Borel probability measures on \(\mathcal {Z}\). Given \(\eta \in \mathcal {P}(\mathcal {X})\), and \(\nu \in \mathcal {P}(\mathcal {Y})\), we denote the set of all couplings between \(\eta \) and \(\nu \) by

$$\begin{aligned} \Pi (\eta , \nu ):=\left\{ \pi \in \mathcal {P}(\mathcal {X}\times \mathcal {Y}): \, \pi \text { has } \mathcal {X}\text {-marginal } \eta \text { and } \mathcal {Y}\text {-marginal } \nu \right\} . \end{aligned}$$

The letter \({\mathcal {L}}\) stands for Law and if \(T:\mathcal {X}\rightarrow \mathcal {Y}\) is measurable we denote by \(T(\eta ):=\eta \circ T^{-1}\in \mathcal {P}(\mathcal {Y})\) the push-forward of \(\eta \) by T.

2 Existence by set-valued fixed point theorem

In this section, we formulate the Cournot-Nash equilibrium as a fixed point problem, and solve it by applying the Kakutani fixed point theorem. First we recall the notion of causal coupling.

Definition 2.1

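Suppose \(\eta \in \mathcal {P}(\mathcal {X}), \, \nu \in \mathcal {P}(\mathcal {Y})\). A coupling \(\pi \in \Pi (\eta , \nu )\) is said to be causal if under \(\pi \) it holds that

$$\begin{aligned} \mathcal {F}^{\mathcal {Y}}_{t} \underset{\mathcal {F}^{\mathcal {X}}_{t}}{\perp \!\!\! \perp } \mathcal {F}^{\mathcal {X}}_{N} \quad \text {for each } t \in \{1, \cdots , N\}. \end{aligned}$$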

Denote by \(\Pi _c(\eta , \nu )\) the collection of all causal couplings from \(\eta \) to \(\nu \).

Remark 2.1

In words, the above means that \(\mathcal {F}^{\mathcal {Y}}_{t}\) and \(\mathcal {F}^{\mathcal {X}}_{N}\) are conditionally independent under \(\pi \) given the information in \(\mathcal {F}^{\mathcal {X}}_{t}\), and this for each t. See [7, 26] for equivalent formulations of this condition, or our proof of Lemma 2.2 below. The set \(\Pi _c(\eta , \nu )\) is never empty, as the product of \(\eta \) and \(\nu \) is always an element thereof. It is instructive to consider the case when \(\pi \) is supported on the graph of a function T from \(\mathcal {X}\) to \(\mathcal {Y}\): in this case causality essentially boils down to this function being adapted, i.e. \(T(x)=(T_1(x_1),T_2(x_{1:2}),\dots , T_N(x_{1:N}))\).
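
To make the conditional independence in Remark 2.1 concrete, the following sketch checks causality for a finitely supported coupling when N = 2. In that case the only non-trivial requirement is that \(y_1\) be conditionally independent of \(x_2\) given \(x_1\). The dictionary encoding of couplings, the function name `is_causal_two_period`, and the three toy couplings are assumptions made for illustration; they are not taken from the paper.

```python
from collections import defaultdict


def is_causal_two_period(pi, tol=1e-12):
    """Check causality of a finitely supported coupling for N = 2.

    `pi` maps tuples (x1, x2, y1, y2) to probabilities (hypothetical encoding).
    For N = 2 the condition reduces to: y1 is independent of x2 given x1, i.e.
    p(x1, x2, y1) * p(x1) == p(x1, x2) * p(x1, y1) for all values.
    """
    p_x1 = defaultdict(float)
    p_x1x2 = defaultdict(float)
    p_x1y1 = defaultdict(float)
    p_x1x2y1 = defaultdict(float)
    for (x1, x2, y1, _y2), w in pi.items():
        p_x1[x1] += w
        p_x1x2[(x1, x2)] += w
        p_x1y1[(x1, y1)] += w
        p_x1x2y1[(x1, x2, y1)] += w

    for (x1, x2), w_x in list(p_x1x2.items()):
        for (x1_b, y1), w_y in list(p_x1y1.items()):
            if x1_b != x1:
                continue
            lhs = p_x1x2y1.get((x1, x2, y1), 0.0) * p_x1[x1]
            rhs = w_x * w_y
            if abs(lhs - rhs) > tol:
                return False
    return True


# Toy type law: x1 = 0 almost surely, x2 uniform on {0, 1}.
# Adapted map: y1 = x1, y2 = x1 + x2 (y1 uses only x1).
adapted_coupling = {(0, 0, 0, 0): 0.5, (0, 1, 0, 1): 0.5}
# Anticipative map: y1 = x2 (y1 peeks at the future type).
anticipative_coupling = {(0, 0, 0, 0): 0.5, (0, 1, 1, 0): 0.5}
# Product of the type law with an arbitrary action law.
product_coupling = {
    (0, x2, y, y): 0.25 for x2 in (0, 1) for y in (0, 1)
}

print(is_causal_two_period(adapted_coupling))
print(is_causal_two_period(anticipative_coupling))
print(is_causal_two_period(product_coupling))
```

The adapted and product couplings pass the check, in line with the remark that the product coupling is always causal, while the coupling induced by the anticipative map fails it.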

In the rest of this paper, N stands for a fixed time horizon. At each time \(t \in \{1, \cdots , N\}\), a representative player is characterized by her type at that time, denoted by \(x_t\in \mathcal {X}_t\), and her control/action undertaken at that time, denoted by \(y_t\in \mathcal {Y}_t\). Hence \(x\in \mathcal {X}\) and \(y\in \mathcal {Y}\) denote the type-path and action-path of a player. We fix once and for all \(\eta \in \mathcal {P}(\mathcal {X})\). The measure \(\eta \) is the distribution of the types in the population of players, and is known in advance by the players.

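We denote

$$\begin{aligned} \Pi _c(\eta , \cdot ):=\bigcup _{\nu \in \mathcal {P}(\mathcal {Y})} \Pi _c(\eta , \nu ). \end{aligned}$$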

We now recall the notion of dynamic Cournot-Nash equilibrium (see [2]), which we will simply call equilibrium in the rest of the work.

Definition 2.2

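An equilibrium is a coupling \(\hat{\pi } \in \Pi _c(\eta ,\cdot )\) such that

$$\begin{aligned} \hat{\pi } \in \mathop {\textrm{argmin}}\limits _{\pi \in \Pi _c(\eta , \cdot )} \int _{\mathcal {X}\times \mathcal {Y}} F(x,y,\hat{\nu }) \, \pi (dx ,dy), \quad \text {where } \hat{\nu } \text { denotes the } \mathcal {Y}\text {-marginal of } \hat{\pi }. \end{aligned}\tag{2.1}$$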

Above \(F: \mathcal {X}\times \mathcal {Y}\times \mathcal {P}(\mathcal {Y}) \rightarrow \mathbb {R}\) is a given cost function, assumed lower-bounded for the time being. Here \( \hat{\nu }\) represents the distribution of controls/actions by the population of players, which is only determined at equilibrium, and \(\hat{\pi }\) characterizes the optimal response of each type of player given the cost function that they face \((x,y) \mapsto F(x,y, \hat{\nu })\).

Remark 2.2

The above should be interpreted as randomized, or mixed strategies, equilibrium. A pure equilibrium would be an adapted map \({\hat{T}}:\mathcal {X}\rightarrow \mathcal {Y}\) satisfying

As usual in game theory we introduce the best-response set-valued map, or correspondence, defined by

and also the projection from \(\Pi _c(\eta , \cdot )\) to \(\mathcal {P}(\mathcal {Y})\)

Finally we introduce

the \(\mathcal {Y}\)-marginals of the best responses to \(\hat{\nu }\), i.e. the possible distributions of actions in response to \(\hat{\nu }\).

It can be readily seen that \(\hat{\nu }\) is a fixed point as in (2.1) if and only if \(\hat{\nu } \in R(\hat{\nu })\). We will show the existence of fixed points of R by applying the Kakutani fixed point theorem, which we recall in the following lemma.

Lemma 2.1

Let \(R: \mathcal {Z}\rightarrow 2^{\mathcal {Z}}\) be a set-valued map. Then R has a fixed point, i.e. \(\exists z\) s.t. \(z \in R(z)\), if

(i) \(\mathcal {Z}\) is a nonempty, compact, convex set in a locally convex space.

(ii) R is upper semi-continuous, and the set R(z) is nonempty, closed, and convex for all \(z \in \mathcal {Z}\).

Proof

See [31, Theorem 9.B]. \(\square \)

The following lemma will be used to show that \(R(\nu )\) is closed and convex for any \(\nu \in \mathcal {P}(\mathcal {Y})\). See [7, 26] for similar statements; we present it here separately for the sake of clarity.

Lemma 2.2

Causality is preserved under weak convergence, i.e., \(\pi \in \Pi _c(\eta , \cdot )\) if \(\pi =\lim \limits _{n \rightarrow \infty } \pi _n\) for a sequence \((\pi _n)_{n \ge 0} \subset \Pi _c(\eta , \cdot )\), and so \(\Pi _c(\eta , \cdot )\) is closed. Also \(\Pi _c(\eta , \cdot )\) is convex, i.e., \(a\pi _1+(1-a)\pi _2 \in \Pi _c(\eta , \cdot )\) for any \( \pi _1, \pi _2 \in \Pi _c(\eta , \cdot )\) and \(a \in [0,1]\).

Proof

Clearly the \(\mathcal {X}\)-marginal of \(\pi \) is \(\eta \). Let us prove that \(\mathcal {F}^{\mathcal {Y}}_{t} \underset{\mathcal {F}^{\mathcal {X}}_{t}}{\perp \!\!\! \perp } \mathcal {F}^{\mathcal {X}}_{N}\) under \(\pi \) for any \(t \in \{1, \cdots , N\}\). This is equivalent to proving that, for any bounded continuous function \(g: \mathcal {Y}_{1:t} \rightarrow \mathbb {R}\), it holds

where \(Y_{1:t}: \mathcal {Y}\rightarrow \mathcal {Y}_{1:t}\) is the projection map on the first t coordinates. Denote by \(\eta _{x_{1:t}}(dx_{t+1:N})\) the disintegration of \(\eta \) on the first t components \(x_{1:t}\). Then it suffices to prove that

for any bounded continuous function \(f: \mathcal {X}\rightarrow \mathbb {R}\). Since the function

is measurable, by Lusin’s Theorem, for any \(\delta >0\) there exists a closed \(\mathcal {V}\subset \mathcal {X}_{1:t}\) such that \(\eta (\mathcal {V}) > 1-\delta \) and \(\bar{f}\) is continuous restricted to \(\mathcal {V}\). Then by Tietze’s Theorem, we extend \(\bar{f}|_{\mathcal {V}}\) to a bounded continuous function \(\bar{f}'\) on \(\mathcal {X}_{1:t}\), and it is clear that \(\bar{f}|_{\mathcal {V}}=\bar{f}'|_{\mathcal {V}}\) and \(\Vert \bar{f}-\bar{f}' \Vert _{\infty } \le 2\Vert f \Vert _{\infty }\).

The equality (2.3) holds for each causal coupling \(\pi _n \). It can be readily seen that

and

Therefore we conclude that

Letting \(\delta \rightarrow 0\), we finish proving (2.3).

Convexity of \(\Pi _c(\eta , \cdot )\) is a direct consequence of (2.3). \(\square \)

Now we are ready to show our main result of this section. The precise assumption on the cost function F is:

Assumption 2.1

(i) \(F: \mathcal {X}\times \mathcal {Y}\times \mathcal {P}(\mathcal {Y}) \rightarrow \mathbb {R}\) is non-negative, \(F(\cdot ,\cdot ,\nu )\) is continuous for each \(\nu \), and \(\nu \mapsto F(\cdot ,\cdot ,\nu )\) is continuous in supremum norm.

(ii) \(\left\{ y: \inf _{(x,{\nu }) \in \mathcal {X}\times \mathcal {P}(\mathcal {Y})} F(x,y,{\nu }) \le r \right\} \) is compact for any \(r>0\).

(iii) There exist \(y_0 \in \mathcal {Y}\) and \(C<+\infty \) such that

$$\begin{aligned} \sup _{\nu \in \mathcal {P}(\mathcal {Y})} \int F (x,y_0,\nu ) \, \eta (dx)\le C. \end{aligned}$$

Here are two simple examples that satisfy Assumption 2.1.

Example 2.1

(i) Suppose \(\mathcal {X}\) and \(\mathcal {Y}\) are compact. Then any non-negative continuous function \(F: \mathcal {X}\times \mathcal {Y}\times \mathcal {P}(\mathcal {Y}) \rightarrow \mathbb {R}\) satisfies Assumption 2.1.

(ii) Suppose \(\mathcal {X}=\mathcal {Y}=\mathbb {R}^N\) and \(\eta \) has finite second moment. Let \(\alpha , \beta , \gamma \) be three positive constants and \(g:\mathbb {R}^N \times \mathbb {R}^N \rightarrow \mathbb {R}\) be a non-negative, bounded and uniformly continuous function. Then it can be easily verified that

$$\begin{aligned} F(x,y,\nu )=\alpha \Vert x-y\Vert ^2 + \beta \Vert y \Vert ^2+ \gamma \int g(y, {\bar{y}}) \, \nu (d {\bar{y}}) \end{aligned}$$

satisfies Assumption 2.1.
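
As a small illustration of the cost in Example 2.1 (ii), the sketch below evaluates \(F(x,y,\nu )\) when \(\nu \) is an empirical measure with finitely many atoms. The Gaussian kernel chosen for g, the parameter values, and the names `g` and `cost` are hypothetical choices made only for this example; the paper only requires g to be non-negative, bounded and uniformly continuous.

```python
import math


def g(y, y_bar):
    """Hypothetical interaction kernel: non-negative, bounded by 1, uniformly continuous."""
    return math.exp(-sum((a - b) ** 2 for a, b in zip(y, y_bar)))


def cost(x, y, nu_atoms, alpha=1.0, beta=0.5, gamma=2.0):
    """Evaluate F(x, y, nu) = alpha*|x-y|^2 + beta*|y|^2 + gamma * int g(y, .) d nu.

    `nu_atoms` is a list of equally weighted atoms representing nu (an assumption:
    nu is taken to be an empirical measure).
    """
    tracking = sum((a - b) ** 2 for a, b in zip(x, y))
    penalty = sum(b * b for b in y)
    interaction = sum(g(y, atom) for atom in nu_atoms) / len(nu_atoms)
    return alpha * tracking + beta * penalty + gamma * interaction


# Type path and action path over N = 2 periods, and a two-atom action distribution.
x = [1.0, 2.0]
y = [0.5, 1.5]
nu_atoms = [[0.0, 0.0], [1.0, 1.0]]
print(cost(x, y, nu_atoms))
```

Since the interaction term is non-negative, the value is always at least \(\beta \Vert y \Vert ^2\), which is the coercivity in y behind the compact sublevel sets required by Assumption 2.1 (ii).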

Theorem 2.1

Under Assumption 2.1, a solution to the fixed point problem (2.1) exists.

Proof

We show that the composition \(R= Pj \circ \Phi \) has a fixed point. In Step 1, we prove that \(R(\nu )\) is relatively compact for any \(\nu \in \mathcal {P}(\mathcal {Y})\), and hence we can restrict R to a compact domain. In Step 2, invoking Lemma 2.2, we show that \(R(\nu )\) is closed and convex. In Step 3, we prove that R is upper-semicontinuous, and therefore obtain the existence of a fixed point of R from Lemma 2.1.

Step 1: Take \(y_0 \in \mathcal {Y}\) and \(C<+\infty \) as in Assumption 2.1 (iii). It is clear that \(\eta (dx)\delta _{y_0}(dy)\in \Pi _c(\eta , \cdot ) \). Then for any putative \(\pi \in \Phi ({\nu })\) we would have

$$\begin{aligned} \int _{\mathcal {X}\times \mathcal {Y}} F(x,y,{\nu }) \, \pi (dx ,dy) \le \int _{\mathcal {X}} F(x,y_0,{\nu }) \, \eta (dx) \le C. \end{aligned}$$

From Assumption 2.1 (ii), we know that for any \( r >0\), a compact subset \(\mathcal {V}_{r} \subset \mathcal {Y}\) exists such that

$$\begin{aligned} F(x,y,\nu ) \ge r\, (\text { all }x,\nu ) \quad \text { whenever } \quad y \not \in \mathcal {V}_{r} . \end{aligned}$$

Therefore we obtain the inequality

$$\begin{aligned} Pj({\pi })[y \not \in \mathcal {V}_r] \le \pi [(x,y):F(x,y,\nu ) \ge r]\le \frac{\int _{\mathcal {X}\times \mathcal {Y}} F(x,y,{\nu }) \, \pi (dx ,dy)}{r} \le \frac{C}{r}. \end{aligned}$$

Define a subset \(\mathcal {E}\subset \mathcal {P}(\mathcal {Y})\) as

$$\begin{aligned} \mathcal {E}:=\left\{ \nu \in \mathcal {P}(\mathcal {Y}): \, \nu [y \not \in \mathcal {V}_r] \le C/r, \, \forall r >0 \right\} . \end{aligned}$$

It is clear that \(\mathcal {E}\) is relatively compact, by Prokhorov’s theorem, as it is tight. By the Portmanteau theorem, \(\mathcal {E}\) is also closed, since each set \(\mathcal {Y}\backslash \mathcal {V}_r\) is open. Hence \(\mathcal {E}\) is compact, and clearly convex too. By design we have \(R({\nu }) \subset \mathcal {E}\) for any \({\nu } \in \mathcal {P}(\mathcal {Y})\). We restrict the domain of R to \({\mathcal {E}}\), which is a compact and convex subset of the space of finite signed measures equipped with the weak topology.

Step 2: We define \(\Pi _c(\eta , \mathcal {E})\) as the subset of \(\Pi _c(\eta , \cdot )\) consisting of measures with a \(\mathcal {Y}\)-marginal lying in \(\mathcal {E}\). Note that \(\Phi ({\nu }) \subset \Pi _c(\eta , \mathcal {E})\), by Step 1. The compactness of \(\mathcal {E}\), Lemma 2.2, and Prokhorov’s theorem yield that \(\Pi _c(\eta , \mathcal {E})\) is compact and so \(\Phi ({\nu })\) is relatively compact. We notice that

$$\begin{aligned} \Phi (\nu )&=\left\{ \pi \in \Pi _c(\eta , \mathcal {E}): \, \int F(x,y,\nu ) \, \pi (dx ,dy) \right. \\&\left. \le \int F(x,y,\nu ) \, \pi '(dx ,dy), \forall \pi ' \in \Pi _c(\eta , \mathcal {E}) \right\} , \end{aligned}$$

and by the compactness of \(\Pi _c(\eta , \mathcal {E})\) and Assumption 2.1 (i) we obtain that \(\Phi (\nu )\) is non-empty. By the same token, \(\Phi (\nu )\) is closed and hence compact, and clearly \(\Phi (\nu )\) is convex too. On the other hand, the map Pj is continuous and linear. Hence \(R({\nu })=Pj(\Phi ({\nu }))\) is also nonempty, convex and compact.

Step 3: We prove that \(R:\mathcal {E}\rightarrow \mathcal {E}\) is an upper-semicontinuous set-valued map. Thus there exists a fixed point in \({\mathcal {E}}\), as a result of Lemma 2.1. Since \({\mathcal {E}}\) is compact, it is equivalent to show that the graph of R is closed in \({\mathcal {E}} \times {\mathcal {E}}\). Take any sequence \(({\nu }_n, \nu _n')_{n\ge 0} \subset \mathcal {E}\times \mathcal {E}\) such that

$$\begin{aligned} \nu _n' \in R({\nu }_n),\quad {\nu }_n \rightarrow \hat{\nu },\quad \nu _n' \rightarrow \hat{\nu }'. \end{aligned}$$

Let us prove that \(\hat{\nu }' \in R(\hat{\nu })\). Note that for each n, there exists a \(\pi _n \in \Phi ({\nu }_n)\) such that \(Pj (\pi _n)=\nu _n'\). Since \((\pi _n)_{n \ge 0} \subset \Pi _c(\eta , \mathcal {E})\), there exists a subsequence \((\pi _{n_k})_{k \ge 0}\) converging to \(\hat{\pi }\). According to Lemma 2.2, we know that \(\hat{\pi } \in \Pi _c(\eta , \cdot )\) as well. It is clear then that \(Pj(\hat{\pi })=\hat{\nu }'\). Let us verify that

$$\begin{aligned} \int _{\mathcal {X}\times \mathcal {Y}} F(x,y,\hat{\nu }) \, \hat{\pi }(dx ,dy) \le \int _{\mathcal {X}\times \mathcal {Y}} F(x,y,\hat{\nu }) \, \pi '(dx ,dy), \quad \forall \pi ' \in \Pi _c(\eta , \cdot ). \end{aligned}\tag{2.4}$$

According to the definition of \(\pi _{n_k} \in \Phi ({\nu }_{n_k})\), we know that

$$\begin{aligned} \int _{\mathcal {X}\times \mathcal {Y}} F(x,y,{\nu }_{n_k}) \, \pi _{n_k}(dx ,dy) \le \int _{\mathcal {X}\times \mathcal {Y}} F(x,y,{\nu }_{n_k}) \, \pi '(dx ,dy), \quad \forall \pi ' \in \Pi _c(\eta , \cdot ). \end{aligned}$$

Now using the uniform continuity of F in Assumption 2.1 (i), and letting \(k \rightarrow \infty \) in the above inequality, we conclude (2.4).

\(\square \)

Remark 2.3

Inspection of the previous proof shows that Assumption 2.1 (i) could be weakened to

(i’) The function \(\nu \mapsto F(\cdot ,\cdot ,\nu )\) is continuous in sup-norm and for each \(\nu \) the function \(F(\cdot ,\cdot ,\nu )\) is jointly lower semicontinuous and continuous in its second argument.

As this seems to be a technicality, we do not develop this further.

To guarantee the uniqueness of the fixed point, we impose the following monotonicity condition on F.

Assumption 2.2

For any \(\pi \in \Pi _c ( \eta , {\nu }), \pi ' \in \Pi _c(\eta , {\nu }')\), if \(\pi \ne \pi '\) then

Corollary 2.1

There exists at most one equilibrium under Assumption 2.2.

Proof

Suppose there are two distinct equilibria \(\pi \in \Pi _c ( \eta , \hat{\nu }) \) and \(\pi '\in \Pi _c ( \eta , \hat{\nu }')\), so \(\pi \in \Phi (\hat{\nu })\) and \( \pi ' \in \Phi (\hat{\nu }')\). Then by definition

Adding the above inequalities, we obtain that

which contradicts Assumption 2.2. \(\square \)

Here is a simple example of F that satisfies Assumption 2.2.

Example 2.2

\(F(x,y,{\nu })=c(x,y)+V[{\nu }](y)\), where V is strictly Lasry-Lions monotone:

3 Fixed point iterations in the quadratic case

In this section, we apply fixed point iterations, i.e. the contraction mapping theorem, in order to find the fixed point of (2.1). As is well known, this provides an algorithmic recipe, unlike the existence result obtained via Lemma 2.1. Let us assume that \(\mathcal {X}_t=\mathcal {Y}_t=\mathbb {R}\), \(t=1, \cdots , N\), and

where \(y \mapsto V[{\nu }](y)\) is lower semicontinuous and bounded from below for any \({\nu } \in \mathcal {P}(\mathcal {Y})\). Due to the explicit structure of F, for any \(\nu \in \mathcal {P}(\mathcal {Y})\) we can actually solve the minimization problem

recursively. We first present the construction of minimizers of (3.1), and hence obtain a map \(\Psi : \mathcal {P}(\mathcal {Y}) \rightarrow \mathcal {P}(\mathcal {Y})\). Then we prove that \(\Psi \) is actually a contraction under further assumptions.
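
The iteration \(\nu \mapsto \Psi (\nu )\) can be illustrated on a deliberately simple one-period toy problem. The sketch below assumes, purely for illustration, the cost \(\frac{1}{2}(x-y)^2 + \frac{\lambda }{2}y^2 + c\, y\, m(\nu )\), where \(m(\nu )\) is the mean of \(\nu \); this cost, the sample of types, and the function names are hypothetical and are not the model studied in the paper. In this toy case the first order condition gives the best response \(y=(x-c\,m(\nu ))/(1+\lambda )\) in closed form.

```python
def best_response(types, actions_mean, lam=1.0, c=0.5):
    """Pointwise minimizer of 0.5*(x-y)^2 + 0.5*lam*y^2 + c*y*actions_mean (toy cost)."""
    return [(x - c * actions_mean) / (1.0 + lam) for x in types]


def fixed_point_iteration(types, lam=1.0, c=0.5, tol=1e-12, max_iter=1000):
    """Iterate nu -> law of best response to nu, with nu an empirical measure."""
    actions = [0.0] * len(types)  # arbitrary initial guess for the action sample
    for k in range(max_iter):
        mean = sum(actions) / len(actions)
        new_actions = best_response(types, mean, lam, c)
        gap = max(abs(a - b) for a, b in zip(actions, new_actions))
        actions = new_actions
        if gap < tol:
            return actions, k + 1
    return actions, max_iter


types = [1.0, 2.0, 3.0, 6.0]  # hypothetical sample of player types
actions, n_iter = fixed_point_iteration(types)
equilibrium_mean = sum(actions) / len(actions)
print(equilibrium_mean, n_iter)
```

Under these assumed parameters the mean of the action distribution is updated through \(m \mapsto (\mathbb {E}[x]-c\,m)/(1+\lambda )\), which is a contraction whenever \(|c|<1+\lambda \), so the iterates approach \(\mathbb {E}[x]/(1+\lambda +c)\).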

3.1 Minimizer of (3.1)

We first sketch the idea. For any \(\eta \in \mathcal {P}(\mathcal {X})\), define its disintegration

Then we have that \(\eta =\eta _1 \otimes \eta ^{x_1} \otimes \cdots \otimes \eta ^{x_{1:N-1}}\). Denote \(V[{\nu }]_N(x,y):= V[{\nu }](y)\). For \(t=N,\cdots , 1\), we define recursively

and also

with the understanding that, when \(t=1\), we interpret \(1:0=\emptyset \) and hence \( \eta ^{x_{1:t-1}}:=\eta _1\) and so forth, in the above equation. We assume implicitly, for the time being, that the optimal value (3.2) depends measurably on the various parameters, and likewise that at least one optimizing kernel (3.3) exists. With each measurable choice of optimizing kernels in (3.3) it is possible to paste together a coupling as follows: by induction one defines first \(\pi [\nu ]_1\in \mathcal {P}(\mathcal {X}_1\times \mathcal {Y}_1)\) as \(\eta _1(dx_1)T[\nu ]_1^{\emptyset }(x_1)(dy_1)\) and then \(\pi [\nu ]^{(x,y)_{1:t-1}}(dx_t,dy_t):=\eta ^{x_{1:t-1}}(dx_t)T[{\nu }]_{t}^{(x,y)_{1:t-1}}(x_t)(dy_t)\). Setting

we construct a causal coupling with \(\mathcal {X}\)-marginal \(\eta \). It can be proven that, given \(\nu \), the set of all such couplings \(\pi [\nu ]\) is equal to \(\Phi (\nu )\), i.e. the best responses to \(\nu \). In particular \(R(\nu )\), the set of \(\mathcal {Y}\)-marginals of best responses, is equal to the set of \(\mathcal {Y}\)-marginals of all such \(\pi [\nu ]\).

In the particular case that the selection (3.3) is a Dirac measure (we still denote by \(T[{\nu }]_{t}^{(x,y)_{1:t-1}}(x_t)\) the support of such a Dirac measure), the above recipe allows us to build an adapted map \(\mathcal {T}[\nu ](x)=(\mathcal {T}[\nu ]_1(x_1),\mathcal {T}[\nu ]_2(x_{1:2}),\dots ,\mathcal {T}[\nu ]_N(x_{1:N}) )\) inductively as follows: \(\mathcal {T}[\nu ]_1(x_1):= T[\nu ]_1(x_1)\) and \(\mathcal {T}[\nu ]_t(x_{1:t}):= T[{\nu }]_t^{\left( x_{1:t-1}, \mathcal {T}[{\nu }]_{1:t-1}(x_{1:t-1})\right) }(x_t)\) for \(t=2,\dots ,N\). Hence this defines a causal coupling with \(\mathcal {X}\)-marginal \(\eta \), supported on the graph of an adapted map, via \(\pi [\nu ]:=(id,\mathcal {T}[\nu ])(\eta )\).
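
The inductive construction of the adapted map can be sketched as follows. The helper `build_adapted_map` and the two one-step selectors are hypothetical stand-ins for the optimizers \(T[\nu ]_t\); the point is only to show how each one-step map receives the past types, the past actions already chosen, and the current type, so that the resulting map never looks ahead.

```python
def build_adapted_map(step_maps):
    """Paste one-step selectors into an adapted map x -> y.

    Each entry of `step_maps` is a function (x_past, y_past, x_t) -> y_t,
    a stand-in for the one-step optimizer at time t (hypothetical interface).
    """
    def adapted_map(x):
        y = []
        for t, step in enumerate(step_maps):
            # Only x[0..t] and the previously built actions are used here.
            y.append(step(tuple(x[:t]), tuple(y), x[t]))
        return tuple(y)

    return adapted_map


# Hypothetical one-step selectors for N = 2.
def step_1(x_past, y_past, x_t):
    return x_t / 2.0


def step_2(x_past, y_past, x_t):
    return (x_t + y_past[0]) / 2.0


T = build_adapted_map([step_1, step_2])
print(T((2.0, 4.0)))
print(T((2.0, 10.0)))
```

Changing only the second type leaves the first action unchanged, which is exactly the adaptedness that makes the induced coupling causal.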

Proposition 3.1

If (3.2) admits a minimizer (for any \( t=1, \cdots , N\), \(x_{1:t} \in \mathcal {X}_{1:t}\) and \(y_{1:t-1} \in \mathcal {Y}_{1:t-1}\)), then \(\pi [\nu ]\) defined in (3.5) minimizes (3.1). If (3.2) admits a unique minimizer (for any \( t=1, \cdots , N\), \(x_{1:t} \in \mathcal {X}_{1:t}\) and \(y_{1:t-1} \in \mathcal {Y}_{1:t-1}\)), then so does (3.1) and its unique minimizer is supported on the graph of an adapted map.

Proof

First of all we stress that the proposed construction of \(\pi [\nu ]\) is well-founded. This is proved by backwards induction from \(t=N-1\) to \(t=0\), and standard measurable selection arguments: Details aside, one applies [13, Proposition 7.50] so that (3.2) is analytically measurable in its parameters, and (3.3) admits analytically measurable selectors. By the same token (3.4) is well-defined and analytically measurable. Then one iterates these arguments. The same arguments, applied to the case when (3.2) admits a unique minimizer (for any \( t=1, \cdots , N\), \(x_{1:t} \in \mathcal {X}_{1:t}\) and \(y_{1:t-1} \in \mathcal {Y}_{1:t-1}\)), show the well-foundedness of the mentioned coupling supported on the graph of an adapted map. Hence, it remains to discuss optimality.

Let \(\gamma \in \Pi _c ( \eta , \cdot )\). Denote its disintegration by \(\gamma _1 \otimes \gamma ^{(x,y)_1} \otimes \cdots \otimes \gamma ^{(x,y)_{1:N-1}}\). Since \(\gamma \) is causal, the \(\mathcal {X}_t\)-marginal of \(\gamma ^{(x,y)_{1:t-1}}\) is just \(\eta ^{x_{1:t-1}}\), and hence we have the disintegration \(\gamma ^{(x,y)_{1:t-1}}(dx_t,dy_t)=\eta ^{x_{1:t-1}}(dx_t) \otimes \gamma ^{(x,y)_{1:t-1}}(x_t,d y_t )\).

For any fixed \((x,y)_{1:N-1}\), according to our construction of \(\pi \), it is clear that

since by definition \(\pi [\nu ]^{(x,y)_{1:N-1}}(x_N,dy_N)\) is concentrated on the set of minimizers of (3.2). Similarly, for any fixed \((x,y)_{1:N-2}\), it can be readily seen that

Repeating the above argument iteratively for \(t= N-2, \cdots , 1\), one can show that

\(\square \)

3.2 \(\mathcal {W}_1\) contraction

As a first step, the convexity of \(y_{1:t} \mapsto V[\nu ]_t(x_{1:t},y_{1:t})\) will be analyzed quantitatively in Proposition 3.2 under a convexity assumption on \(V[\nu ]\). Then we will prove the contractivity of the best response map in Propositions 3.3 and 3.4 using the convexity of \(y_{1:t} \mapsto V[\nu ]_t(x_{1:t},y_{1:t})\) together with a Lipschitz property of \(\nu \mapsto \nabla V[\nu ](y)\). In addition, to exchange derivatives and integrals in Proposition 3.2, we need to assume that \(\eta \) has finite first moment. We now give the precise assumptions we need. These will be of quantitative flavor. The reason is that, as we will be arguing with backwards induction, we will need to make sure that neither convexity nor the Lipschitz property are destroyed.

Assumption 3.1

- (i) For any \({\nu } \in \mathcal {P}(\mathcal {Y})\), \(y \mapsto V[{\nu }](y)\) is twice continuously differentiable, and there exist two constants \(\kappa \ge \lambda \ge 0\) such that \(\kappa I_N \ge \nabla ^2 V[{\nu }] \ge \lambda I_N\), and

$$\begin{aligned} \kappa +\lambda \ge 3 \times 5 \times \cdots \times (2N-1) \times (\kappa -\lambda ). \end{aligned}$$

- (ii) There exists a constant \(L>0\) such that \(\nu \mapsto \nabla V [{\nu }](y)\) is L-Lipschitz for any \(y \in \mathcal {Y}\).

- (iii) \(\eta \) has finite first moment.
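The numerical condition in Point (i) is easy to test for given constants. The following is a minimal sketch, not part of the original argument; the function names are hypothetical and chosen only for illustration. It evaluates the product \(3 \times 5 \times \cdots \times (2N-1)\) and checks the inequality \(\kappa +\lambda \ge 3 \times 5 \times \cdots \times (2N-1) \times (\kappa -\lambda )\).

```python
from math import prod


def odd_product(n_steps: int) -> int:
    """Return 3 * 5 * ... * (2N - 1); for N = 1 the product is empty and equals 1."""
    return prod(range(3, 2 * n_steps, 2))


def satisfies_point_i(kappa: float, lam: float, n_steps: int) -> bool:
    """Check kappa >= lam >= 0 and kappa + lam >= 3*5*...*(2N-1) * (kappa - lam)."""
    if not (kappa >= lam >= 0):
        return False
    return kappa + lam >= odd_product(n_steps) * (kappa - lam)


if __name__ == "__main__":
    # Illustrative values only: the condition becomes more demanding as N grows.
    for n in (2, 3, 4):
        print(n, odd_product(n), satisfies_point_i(2.0, 1.0, n))
```

Since the product grows very quickly with \(N\), the condition forces \(\kappa -\lambda \) to be small relative to \(\kappa +\lambda \) when the time horizon is long.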

Remark 3.1

In Point (ii) of Assumption 3.1, the Lipschitz property is meant to hold under the 1-Wasserstein distance, defined by:

For the convexity of \(y_{1:t} \mapsto V[{\nu }]_{t}(x_{1:t}, y_{1:t})\), we need the following lemma, whose proof is elementary and is therefore omitted.

Lemma 3.1

Suppose M is a symmetric \(N \times N\) matrix such that \(\kappa \, I_N \ge M \ge \lambda \, I_N\). Then

In the rest of the paper, let us denote by \(\nabla _{y_{1:k}}V[\nu ]_k\) and \(\nabla ^2_{y_{1:k}}V[\nu ]_k\) the gradient and Hessian of \( y_{1:k} \mapsto V[\nu ]_k(x_{1:k},y_{1:k})\) respectively.

Proposition 3.2

Under Points (i) and (iii) of Assumption 3.1, the function \(y_{1:k} \mapsto V[{\nu }]_k(x_{1:k},y_{1:k})\) is twice continuously differentiable, and \(\kappa _k I_k \ge \nabla ^2_{y_{1:k}} V[{\nu }]_k \ge \lambda _k I_k\), where

Proof

Suppose \(t=N-1\). The minimization problem (3.2) is strictly convex for each value of x and \(y_{1:N-1}\). Hence the first order conditions of (3.3) completely characterize the unique minimizer \(T[{\nu }]_{N}^{(x,y)_{1:N-1}}(x_N)\), and we obtain that

Let us show that \(T[{\nu }]_{N}^{(x,y)_{1:N-1}}(x_N)\) is Lipschitz in \(x_N\), which is necessary for us to exchange integral and derivative later in this argument. Denote \(y_N= T[{\nu }]_{N}^{(x,y)_{1:N-1}}(x_N)\), \(y_N' = T[{\nu }]_{N}^{(x,y)_{1:N-1}}(x_N')\). Due to the first order condition, we have that

According to Assumption 3.1 (i), the left hand side is bounded from below by \((1+\lambda ) (y_N-y_N')^2\), and hence we obtain that

As abbreviations, we take \(T_N:=T[{\nu }]_{N}^{(x,y)_{1:N-1}}(x_N)\), \(V_N=V[{\nu }]_N(x,y_{1:N-1}, T_N)\), and

According to the implicit function theorem, which is applicable thanks to Assumption 3.1(i), \(T_N\) is continuously differentiable in y. By the envelope theorem, \(V_{N-1}\) is continuously differentiable (as \(V_N\) is) in y, and we have

We can deduce from (3.8) and Lemma 3.1 that \(\partial _{y_t} V[\nu ]_N(x,y_{1:N-1}, T_N)\) is Lipschitz in \(x_N\) and y, which, together with Assumption 3.1 (iii), justifies the exchange of derivative and integral in (3.9). By the same token, we deduce that \(V_{N-1}\) is in fact twice continuously differentiable in y and we have

Taking derivative of (3.7) with respect to \(y_k\), it can be seen that

and hence

Therefore we obtain that

Take any vector \(\xi =(\xi _1, \cdots , \xi _{N-1})\). Using (3.10), Cauchy-Schwarz inequality, and Lemma 3.1, it can be easily seen that

and similarly

Therefore, we obtain that

or equivalently, that

By induction, following the exact same arguments as above, we can get that for each \(1\le k \le N-1\) the function \(V_k\) is twice continuously differentiable in y and

where \(\lambda _k, \kappa _k\) are defined as in (3.6). \(\square \)

By Proposition 3.2, we know that \(V[{\nu }]_t\) is convex in \(y_t\) for any \(t =1,\cdots , N\) under Assumption 3.1 (i). It follows that the problems (3.2) admit a unique minimizer. Then, by Proposition 3.1, it follows that Problem (3.1) admits a unique minimizer \(\pi [\nu ]\). This minimizer is furthermore supported on the graph of an adapted map \(\mathcal {T}[\nu ]\). To simplify notation, we write

which is now an actual function, rather than a set-valued one. Observe that any minimizer of the problem

is also the minimizer of (3.1). Hence we conclude that \(\pi [\nu ]\) is also the unique minimizer of (3.12).

Now we analyze the Lipschitz property of the function \(({\nu },y) \mapsto T[{\nu }]_k^{(x,y)_{1:k-1}}(x_k)\), and after that we will show that \(\Psi \) is a contraction under Assumption 3.1. Here the contraction property is meant to hold under the 1-Wasserstein distance.

Proposition 3.3

Under Assumption 3.1, it holds that

where \(L_N:=L\) and

Proof

Step 1: First we prove that

$$\begin{aligned} \left| T[{\nu }]_{k}^{(x,y)_{1:k-1}}(x_k)- T[{\nu }']_k^{(x,y)_{1:k-1}}(x_k) \right| \le \frac{L_k}{1+ \lambda _k} \mathcal {W}_1({\nu }, {\nu }') . \end{aligned}\tag{3.14}$$

Denote \(\overline{y}_N=T[{\nu }]^{(x,y)_{1:N-1}}_N(x_N)\), \(\overline{y}_N'=T[{\nu }']^{(x,y)_{1:N-1}}_N(x_N)\). It can be easily seen, by the first order optimality conditions as in (3.7), that

$$\begin{aligned} \overline{y}_N-\overline{y}_N'+\partial _{y_N}V[{\nu }]_N(x,y_{1:N-1}, \overline{y}_N) - \partial _{y_N}V[{\nu }']_N(x,y_{1:N-1},\overline{y}_N')=0, \end{aligned}$$

and hence

$$\begin{aligned}&(\overline{y}_N-\overline{y}_N')^2+(\overline{y}_N-\overline{y}_N')\left( \partial _{y_N}V[{\nu }]_N(x,y_{1:N-1}, \overline{y}_N) - \partial _{y_N}V[{\nu }]_N(x,y_{1:N-1},\overline{y}_N')\right) \\&=(\overline{y}_N-\overline{y}_N')\left( \partial _{y_N}V[{\nu }']_N(x,y_{1:N-1}, \overline{y}_N') - \partial _{y_N}V[{\nu }]_N(x,y_{1:N-1},\overline{y}_N')\right) . \end{aligned}\tag{3.15}$$

Using the convexity of \(V[{\nu }]_N\) in \(y_N\), the left hand side of (3.15) is greater than

$$\begin{aligned} (1+\lambda ) (\overline{y}_N-\overline{y}_N')^2, \end{aligned}$$

while the right hand side is smaller than \(L|\overline{y}_N-\overline{y}_N'|\mathcal {W}_1({\nu }, {\nu }')\). Therefore we obtain that

$$\begin{aligned} |\overline{y}_N-\overline{y}_N'| \le \frac{L\mathcal {W}_1({\nu }, {\nu }')}{1+\lambda }. \end{aligned}\tag{3.16}$$

According to (3.9), we know that

The first term on the right hand side is bounded above by \(L\mathcal {W}_1({\nu }, {\nu }')\) due to Point (ii) of Assumption 3.1. By Lemma 3.1, we obtain

and thus (3.16) implies

Combining these estimates, we get that

Recursively, we get that for \(k=N-1, \cdots , 1\),

and also (3.14).

Step 2: Let us estimate \(|T[{\nu }]_k^{(x,y)_{1:k-1}}(x_k)-T[{\nu }]_k^{(x,y')_{1:k-1}}(x_k)|\). By the first order condition, we have that

$$\begin{aligned}&T[{\nu }]_k^{(x,y)_{1:k-1}}(x_k)-T[{\nu }]_k^{(x,y')_{1:k-1}}(x_k) \\&\quad + \partial _{y_k} V[{\nu }]_k\left( x_{1:k}, y_{1:k-1}, T[{\nu }]_k^{(x,y)_{1:k-1}}(x_k)\right) \\&\quad - \partial _{y_k} V[{\nu }]_k\left( x_{1:k}, y'_{1:k-1}, T[{\nu }]_k^{(x,y')_{1:k-1}}(x_k)\right) =0. \end{aligned}$$

Similar to the derivation of (3.16), using Proposition 3.2 and Lemma 3.1 we get that

$$\begin{aligned} \left| T[{\nu }]_k^{(x,y)_{1:k-1}}(x_k)-T[{\nu }]_k^{(x,y')_{1:k-1}}(x_k) \right| \le \frac{(\kappa _k -\lambda _k)\sum _{t=1}^{k-1} |y_t-y'_t|}{1+\lambda _k}. \end{aligned}\tag{3.17}$$

Step 3: We combine the first two steps using the triangle inequality.

\(\square \)

Proposition 3.4

Under Assumption 3.1 the function \(\Psi \) defined in (3.11) is a contraction in \(\mathcal {W}_1\) metric if

Proof

Let us recall the construction from Sect. 3.1: Using \(T[{\nu }]_1, \cdots , T[{\nu }]_N\), we can define \(\mathcal {T}[{\nu }]=(\mathcal {T}[{\nu }]_1, \cdots , \mathcal {T}[{\nu }]_N): \mathcal {X}\rightarrow \mathcal {Y}\) inductively via

It is clear that \(\Psi ({\nu })= (\mathcal {T}[{\nu }]) ({\eta })\), and therefore

Now according to Proposition 3.3, we have that

and

By induction, one can prove that

and hence

Therefore \(\Psi \) is a contraction if (3.18) is satisfied. \(\square \)

In the contracting case, it is well known that there exists a unique fixed point, which can furthermore be computed by repeatedly applying the map (fixed-point iterations). This tells us how to completely solve our equilibrium problem:

Corollary 3.1

Under Assumption 3.1 and Condition (3.18), we have

- (1) The Cournot-Nash problem (3.1) has a unique equilibrium \(\pi \).

- (2) The second marginal of \(\pi \) is the unique fixed point of \(\Psi \), and it can be determined by the usual fixed-point iterations “\(\nu _{m+1}=\Psi (\nu _m)\)”.

- (3) Conversely, after determining \(\nu \) the unique fixed point of \(\Psi \), the unique Cournot-Nash equilibrium \(\pi \) is determined by minimizing (3.12) or equivalently by taking \(\pi =(id,T[\nu ])(\eta )\) with \(T[\nu ]\) adapted and being uniquely (\(\eta \)-a.s.) determined via the recursions (3.3).
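The fixed-point iterations of Point (2) can be sketched generically. The code below is an illustration under stated assumptions and not the authors' implementation: the map `psi`, the metric `dist`, and the scalar toy contraction \(x \mapsto 0.5x+1\) are hypothetical stand-ins for \(\Psi \) and \(\mathcal {W}_1\). It also evaluates the classical a priori bound of the Banach fixed-point theorem, \(d(x_m,x^*) \le \frac{q^m}{1-q} d(x_1,x_0)\) for a contraction with constant \(q<1\).

```python
def fixed_point_iteration(psi, x0, dist, tol=1e-12, max_iter=1000):
    """Iterate x_{m+1} = psi(x_m) until dist(x_{m+1}, x_m) < tol.

    Returns the last iterate and the number of applications of psi.
    """
    x = x0
    for m in range(1, max_iter + 1):
        x_next = psi(x)
        if dist(x_next, x) < tol:
            return x_next, m
        x = x_next
    raise RuntimeError("fixed-point iteration did not converge")


def a_priori_bound(q, first_step, m):
    """Banach bound: dist(x_m, x*) <= q**m / (1 - q) * dist(x_1, x_0)."""
    return q ** m / (1.0 - q) * first_step


if __name__ == "__main__":
    # Hypothetical toy contraction with constant q = 0.5 and fixed point 2.
    toy_map = lambda x: 0.5 * x + 1.0
    distance = lambda a, b: abs(a - b)
    x_star, n_iter = fixed_point_iteration(toy_map, 0.0, distance)
    print(x_star, n_iter)
```

In the setting of Corollary 3.1 the iterates are probability measures rather than numbers, so an actual implementation would need a representation of \(\nu _m\) and a way to evaluate \(\Psi \); the sketch only shows the structure of the scheme.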

4 Application to optimal liquidation in a price impact model

We give a description of the price impact model in discrete time. An agent holds at time 0 a number \(Q_0>0\) of shares of a stock. At time 1, based on the available information, she aims to sell \(y_1\) shares for their current price \(S_1\), after which she is left with \(Q_1=Q_0-y_1\) shares. This is iterated until time N, where she chooses to sell \(y_{N}\) shares based on her current information, at the current price of \(S_{N}\), leaving her with \(Q_{N}=Q_{N-1}-y_{N}\) shares. The total earnings from this strategy are then

As for the behaviour of the share prices \(S_i\), we suppose that \(S_0\in \mathbb {R}\) is known and that otherwise

where \(x\sim \eta \) is noise (wlog. we assume \(x_0=0\)) and \(m_i[\nu ]\) stands for the mean of the i-th marginal of a measure \(\nu \). The idea is that the i-th marginal of \(\nu \) is (in equilibrium) the distribution of the number of shares sold at time i, and so the term \(m_i[\nu ]\) in the dynamics of S indicates a permanent market impact caused by a population of identical, independent and negligible agents who at time i decide to sell a number of shares.

We define

where the first term accounts for a final cost of inventory and the second term models the accumulated transaction costs. Given a distribution \(\nu \) of decisions taken by a population of agents, a negligible agent will aim to minimize the \(\eta \)-expectation of F over the strategies adapted to the information of the share prices, or equivalently, the strategies adapted to x. More precisely, a pure equilibrium for this game would be an adapted map \(\hat{T}\) and a measure \(\hat{\nu }\) such that

- (i) \(\hat{T}\in {\mathop {\textrm{argmin}}\limits _{T \, \text {adapted}}}\int F(x, T(x),\hat{\nu }) \, \eta (dx);\)

- (ii) \(\hat{T}(\eta )=\hat{\nu }\).

For this model we easily check that \(F(x,y,\nu )=\frac{1}{2}\Vert x-y\Vert ^2+V[\nu ](y)\) where

Let us denote by \(\mathbbm {1}_N\) an N-dimensional vector with 1 in each coordinate, and by \(\mathbbm {1}_{N \times N}\) an \(N \times N\) matrix equal to \(\mathbbm {1}_N^\top \mathbbm {1}_N\). On the other hand \(I_N\) denotes the identity matrix. Hence \(\nabla V[\nu ](y)=(2K-1)y+\{2A(\sum y_i-Q_0)-S_0\}\mathbbm {1}_{N}+(\sum _{k\le i}m_k[\nu ] )_{i=1}^N\), and so \(\nu \mapsto \nabla V[\nu ](y)\) is N-Lipschitz with respect to the 1-Wasserstein distance, uniformly in y. Moreover, \(\nabla ^2V[\nu ](y)=2A\mathbbm {1}_{N\times N}+(2K-1)I_N\), and so we have that \(\kappa I_N \ge \nabla ^2V[\nu ](y) \ge \lambda I_N\), where \(\kappa =2K-1+2AN\) and \(\lambda =2K-1\).
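The stated values of \(\kappa \) and \(\lambda \) are the extreme eigenvalues of the Hessian \(2A\mathbbm {1}_{N\times N}+(2K-1)I_N\): the all-ones vector is an eigenvector with eigenvalue \(2K-1+2AN\), and every vector whose coordinates sum to zero is an eigenvector with eigenvalue \(2K-1\). The following sketch checks this directly; the function names and the numerical values of \(N\), \(A\), \(K\) are illustrative assumptions, and \(A \ge 0\) is assumed so that \(\kappa \ge \lambda \).

```python
def hessian(n_steps, A, K):
    """Return 2A * ones(N, N) + (2K - 1) * I_N as a list of rows."""
    return [
        [2.0 * A + (2.0 * K - 1.0 if i == j else 0.0) for j in range(n_steps)]
        for i in range(n_steps)
    ]


def matvec(M, v):
    """Multiply the matrix M (list of rows) by the vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def curvature_constants(n_steps, A, K):
    """Return (kappa, lambda) = (2K - 1 + 2AN, 2K - 1), assuming A >= 0."""
    lam = 2.0 * K - 1.0
    return lam + 2.0 * A * n_steps, lam


if __name__ == "__main__":
    N, A, K = 3, 0.5, 2.0  # illustrative values only
    H = hessian(N, A, K)
    kappa, lam = curvature_constants(N, A, K)
    print(matvec(H, [1.0] * N), kappa)      # H applied to the all-ones vector
    print(matvec(H, [1.0, -1.0, 0.0]), lam)  # H applied to a zero-sum vector
```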

Corollary 4.1

Take \(L_N=N\), \(\kappa =2K-1+2AN\), \(\lambda =2K-1\), and define \(L_t, \kappa _t, \lambda _t\), \(t=N-1, \cdots 1\) recursively as in (3.6) and (3.13). Then there exists a unique equilibrium if Assumption 3.1 (i) and (3.18) are satisfied.

In our model, it can be readily seen that the assumptions of Corollary 4.1 are satisfied if \( N +A \ll K \). Now we show that the game is not of potential type, and therefore is not covered by [2]. For simplicity, we prove this only in the case \(N=2\).

Lemma 4.1

There exists no Fréchet differentiable \(\mathcal {E}: \mathcal {P}(\mathbb {R}^2) \rightarrow \mathbb {R}\) such that

for any \(\mu , \nu \in \mathcal {P}(\mathbb {R}^2)\).

Proof

Let us define

and

It can easily be verified that

for any \(\mu , \nu \in \mathcal {P}(\mathbb {R}^2)\). Therefore it suffices to show that \(m_1[\nu ]y_2\) is not potential. Suppose otherwise that there exists some \(\mathcal {E}\) such that (4.2) holds with \(V[\nu ](y)=m_1[\nu ]y_2\).

Then it can be readily seen that

Therefore we obtain that

which is a contradiction. \(\square \)

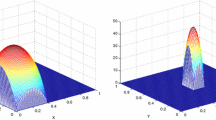

To finish the article, let us present a simple example where we can illustrate how to compute the best response map \( \mathcal {T}[\nu ]\) and the fixed point \(\nu \).

Example 4.1

Suppose \(N=2\) and \(\eta =\frac{1}{2}(\delta _{0} +\delta _1) \times \frac{1}{2}(\delta _{0} + \delta _1)\). Take \(F^{\epsilon }(x,y,\nu )= \frac{1}{2} \Vert x-y \Vert ^2 + \epsilon V[\nu ](y)\), where V is given by (4.1). In the case of \(\epsilon =1\), it is just the price impact model above. Hence we know that \(F^{\epsilon }\) is non-potential for \(\epsilon >0\). Let us compute the best response given \(\nu \):

Plugging the above equation into

one can express \(V^{\epsilon }[\nu ]_1(x_1,y_1)\) in terms of \(m_1[\nu ],m_2[\nu ]\) and \(y_1\). Then using the first order condition

one can find a formula for \(T^{\epsilon }[\nu ]_1(x_1)\).

After some computation, one finds constants \(a_1^{\epsilon },\cdots , a_4^{\epsilon }\), \(\tilde{b}_1^{\epsilon }, b_1^{\epsilon }, \cdots , b_4^{\epsilon }\) such that

Since we assume that \(\eta =\frac{1}{2}(\delta _{0} +\delta _1) \times \frac{1}{2}(\delta _{0} + \delta _1)\), the optimal response measure is given by

and hence is completely determined by the means \( m_1 [\nu ]\) and \(m_2[\nu ]\). Computing the means of \({\hat{\nu }} \), we obtain that

Therefore, the equilibrium is given by the solution of the linear system

where the variables \(m_1^{\epsilon }\), \(m_2^{\epsilon }\) stand for the means of the first and second marginals of the equilibrium.

It can be verified that if \(F(x,y,\nu )\) satisfies the assumptions of Proposition 3.4, e.g. when K is large, then so does \(F^{\epsilon }(x,y,\nu )\) for any \( \epsilon \in [0,1]\). Therefore, there always exists a unique solution of \(\Psi (\nu ) =\nu \) in (4.3). As discussed in the paragraph above, the solution of (4.3) is provided by the linear system (4.4). Consequently, (4.4) always admits a unique solution.
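To make the reduction to a two-dimensional linear system concrete, here is a small sketch. It assumes, purely for illustration, that the map sending the means \((m_1[\nu ],m_2[\nu ])\) to the means of the best response has the affine form \(m \mapsto Mm+c\); the entries of `M` and `c` below are hypothetical numbers, not the coefficients of (4.4). The fixed point then solves \((I-M)m=c\), which is computed by Cramer's rule and checked against one application of the map.

```python
def apply_affine(M, c, m):
    """Return M m + c for a 2x2 matrix M and 2-vectors c, m."""
    return [M[i][0] * m[0] + M[i][1] * m[1] + c[i] for i in range(2)]


def solve_fixed_point(M, c):
    """Solve (I - M) m = c by Cramer's rule; requires det(I - M) != 0."""
    a, b = 1.0 - M[0][0], -M[0][1]
    d, e = -M[1][0], 1.0 - M[1][1]
    det = a * e - b * d
    if det == 0.0:
        raise ValueError("I - M is singular: no unique fixed point")
    return [(c[0] * e - b * c[1]) / det, (a * c[1] - d * c[0]) / det]


if __name__ == "__main__":
    # Hypothetical coefficients; absolute row sums below 1 make the map a
    # contraction in the sup norm, so the fixed point is unique.
    M = [[0.2, -0.1], [0.05, 0.3]]
    c = [0.4, 0.3]
    m_star = solve_fixed_point(M, c)
    print(m_star, apply_affine(M, c, m_star))
```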

Although it is not immediate how to interpret this equilibrium, we do notice that as \(\epsilon \rightarrow 0\) the unique equilibria converge to the intuitive solution for \(\epsilon =0\). In the case \(\epsilon =0\), the solution is the identity map, sending \((x_1,x_2)\) to \((y_1,y_2)=(x_1,x_2)\), i.e., \( \mathcal {T}^{0}[\nu ]_1(x_1)=x_1\), \( \mathcal {T}^{0}[\nu ]_2(x_1,x_2)=x_2\). Indeed, as \(\epsilon \rightarrow 0\), we have \( a_1^{\epsilon }, b_1^{\epsilon } \rightarrow 1\) and \( a_2^{\epsilon }, a_3^{\epsilon },a_4^{\epsilon }, \tilde{b}_1^{\epsilon }, b_2^{\epsilon }, b_3^{\epsilon }, b_4^{\epsilon } \rightarrow 0\). Therefore the equilibrium means \(m_1^{\epsilon }, m_2^{\epsilon }\) both converge to \(\frac{1}{2}\), and thus \(\lim _{\epsilon \rightarrow 0} \mathcal {T}^{\epsilon }[\nu ]_1(x_1)=x_1\), \(\lim _{\epsilon \rightarrow 0} \mathcal {T}^{\epsilon }[\nu ]_2(x_1,x_2)=x_2\).
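The limiting case \(\epsilon =0\) can be checked by hand on the four atoms of \(\eta \). The sketch below, with hypothetical helper names, pushes \(\eta =\frac{1}{2}(\delta _{0} +\delta _1) \times \frac{1}{2}(\delta _{0} + \delta _1)\) forward through an adapted map \(x \mapsto (T_1(x_1), T_2(x_1,x_2))\) and computes the means of the two marginals of the image measure. For the identity map, both means equal \(\frac{1}{2}\).

```python
from itertools import product


def push_forward(T1, T2):
    """Image of eta under x -> (T1(x1), T2(x1, x2)), as a dict {atom: mass}.

    eta is the uniform measure on {0, 1}^2, so each atom carries mass 1/4.
    Adaptedness is built in: T1 only sees x1, while T2 sees x1 and x2.
    """
    nu = {}
    for x1, x2 in product((0, 1), repeat=2):
        y = (T1(x1), T2(x1, x2))
        nu[y] = nu.get(y, 0.0) + 0.25
    return nu


def marginal_means(nu):
    """Return the means (m_1, m_2) of the two marginals of nu."""
    m1 = sum(mass * y[0] for y, mass in nu.items())
    m2 = sum(mass * y[1] for y, mass in nu.items())
    return m1, m2


if __name__ == "__main__":
    # Identity map, i.e. the best response in the case epsilon = 0.
    nu0 = push_forward(lambda x1: x1, lambda x1, x2: x2)
    print(nu0, marginal_means(nu0))
```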

References

[1] Acciaio, B., Backhoff-Veraguas, J., Carmona, R.: Extended mean field control problems: stochastic maximum principle and transport perspective. SIAM J. Control. Optim. 57, 3666–3693 (2019)

[2] Acciaio, B., Backhoff-Veraguas, J., Jia, J.: Cournot-Nash equilibrium and optimal transport in a dynamic setting. SIAM J. Control. Optim. 59, 2273–2300 (2021)

[3] Acciaio, B., Backhoff-Veraguas, J., Zalashko, A.: Causal optimal transport and its links to enlargement of filtrations and continuous-time stochastic optimization. Stoch. Process. Their Appl. 130, 2918–2953 (2020)

[4] Acciaio, B., Munn, M., Wenliang, L.K., Xu, T.: COT-GAN: generating sequential data via causal optimal transport. NeurIPS 33, 8798 (2020)

[5] Achdou, Y., Bardi, M., Cirant, M.: Mean field games models of segregation. Math. Models Methods Appl. Sci. 27, 75–113 (2017)

[6] Backhoff-Veraguas, J., Bartl, D., Beiglböck, M., Eder, M.: Adapted Wasserstein distances and stability in mathematical finance. Financ. Stoch. 24, 601–632 (2020)

[7] Backhoff-Veraguas, J., Beiglböck, M., Lin, Y., Zalashko, A.: Causal transport in discrete time and applications. SIAM J. Optim. 27, 2528–2562 (2017)

[8] Bartl, D., Beiglböck, M., Pammer, G.: The Wasserstein space of stochastic processes (2021) arXiv:2104.14245

[9] Bayraktar, E., Cecchin, A., Cohen, A., Delarue, F.: Finite state mean field games with Wright-Fisher common noise. J. Math. Pures Appl. 147, 98–162 (2021)

[10] Bayraktar, E., Cohen, A.: Analysis of a finite state many player game using its master equation. SIAM J. Control. Optim. 56, 3538–3568 (2018)

[11] Bayraktar, E., Zhang, X.: On non-uniqueness in mean field games. Proc. Am. Math. Soc. 148, 4091–4106 (2020)

[12] Benamou, J.-D., Carlier, G., Santambrogio, F.: Variational mean field games. In: Active Particles, vol. 1, pp. 141–171. Springer, Cham (2017)

[13] Bertsekas, D.P., Shreve, S.E.: Stochastic optimal control. Volume 139 of Mathematics in Science and Engineering, Academic Press, Inc. [Harcourt Brace Jovanovich, Publishers], New York-London (1978)

[14] Blanchet, A., Carlier, G.: From Nash to Cournot-Nash equilibria via the Monge-Kantorovich problem. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 372, 20130398 (2014)

[15] Blanchet, A., Carlier, G.: Remarks on existence and uniqueness of Cournot-Nash equilibria in the non-potential case. Math. Financ. Econ. 8, 417–433 (2014)

[16] Blanchet, A., Carlier, G.: Optimal transport and Cournot-Nash equilibria. Math. Oper. Res. 41, 125–145 (2016)

[17] Cardaliaguet, P.: Notes on mean field games (from P.-L. Lions’ lectures at Collège de France) (2010)

[18] Carmona, R., Delarue, F.: Probabilistic Theory of Mean Field Games with Applications I-II. Springer, Cham (2018)

[19] Cecchin, A., Fischer, M.: Probabilistic approach to finite state mean field games. Appl. Math. Optim. 81, 253–300 (2020)

[20] Gomes, D.A., Mohr, J., Souza, R.R.: Discrete time, finite state space mean field games. J. Math. Pures Appl. 93, 308–328 (2010)

[21] Huang, M., Caines, P.E., Malhamé, R.P.: Large-population cost-coupled LQG problems with nonuniform agents: individual-mass behavior and decentralized \(\epsilon \)-Nash equilibria. IEEE Trans. Autom. Control 52, 1560–1571 (2007)

[22] Huang, M., Malhamé, R.P., Caines, P.E.: Large population stochastic dynamic games: closed-loop McKean–Vlasov systems and the Nash certainty equivalence principle. Commun. Inform. Syst. 6, 221–252 (2006)

[23] Lacker, D., Ramanan, K.: Rare Nash equilibria and the price of anarchy in large static games. Math. Oper. Res. 44, 400–422 (2019)

[24] Lasry, J.-M., Lions, P.-L.: Jeux à champ moyen. I - Le cas stationnaire. Comptes Rendus Mathématique 343, 619–625 (2006)

[25] Lasry, J.-M., Lions, P.-L.: Mean field games. Jpn. J. Math. 2, 229–260 (2007)

[26] Lassalle, R.: Causal transport plans and their Monge–Kantorovich problems. Stoch. Anal. Appl. 36, 452–484 (2018)

[27] Mas-Colell, A.: On a theorem of Schmeidler. J. Math. Econ. 13, 201–206 (1984)

[28] Pflug, G.: Version-independence and nested distributions in multistage stochastic optimization. SIAM J. Optim. 20, 1406–1420 (2009)

[29] Pflug, G., Pichler, A.: A distance for multistage stochastic optimization models. SIAM J. Optim. 22, 1–23 (2012)

[30] Pflug, G., Pichler, A.: Multistage Stochastic Optimization. Springer Series in Operations Research and Financial Engineering, Springer, Cham (2014)

[31] Zeidler, E.: Nonlinear Functional Analysis and its Applications I: Fixed-Point Theorems. Springer, New York (1986)

Funding

Open access funding provided by University of Vienna.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Backhoff-Veraguas, J., Zhang, X. Dynamic Cournot-Nash equilibrium: the non-potential case. Math Finan Econ 17, 153–174 (2023). https://doi.org/10.1007/s11579-022-00327-3
