Abstract

Since December 2019, the novel coronavirus disease 2019 (COVID-19) has claimed the lives of more than 3.75 million people worldwide. Consequently, methods for accurate COVID-19 diagnosis and classification are necessary to facilitate rapid patient care and terminate viral spread. Lung infection segmentations are useful to identify unique infection patterns that may support rapid diagnosis, severity assessment, and patient prognosis prediction, but manual segmentations are time-consuming and depend on radiologic expertise. Deep learning-based methods have been explored to reduce the burdens of segmentation; however, their accuracies are limited due to the lack of large, publicly available annotated datasets that are required to establish ground truths. For these reasons, we propose a semi-automatic, threshold-based segmentation method to generate region of interest (ROI) segmentations of infection visible on lung computed tomography (CT) scans. Infection masks are then used to calculate the percentage of lung abnormality (PLA) to determine COVID-19 severity and to analyze the disease progression in follow-up CTs. Compared with other COVID-19 ROI segmentation methods, on average, the proposed method achieved improved precision (\(47.49\%\)) and specificity (\(98.40\%\)) scores. Furthermore, the proposed method generated PLAs with a difference of \(\pm 3.89\%\) from the ground-truth PLAs. The improved ROI segmentation results suggest that the proposed method has potential to assist radiologists in assessing infection severity and analyzing disease progression in follow-up CTs.

1 Introduction

The coronavirus disease 2019 (COVID-19), caused by severe acute respiratory syndrome coronavirus 2, originated in China in December 2019 and rapidly spread to 1,000 people in only 48 days [1]. As of June 2021, there were 175 million global cases and 3.75 million deaths spanning more than 192 countries/regions (http://coronavirus.jhu.edu/map.html).

Reverse transcription-polymerase chain reaction (RT-PCR) is the gold standard diagnostic method for COVID-19 [2]. However, RT-PCR may take several days to generate results [3], and in initial disease presentation and asymptomatic cases [4, 5], has shown relatively low sensitivity (\(71\%\)) [6]. Because of these challenges, researchers have explored other methods to classify COVID-19 patients. Chest computed tomography (CT) plays a key role in evaluating the extent of pulmonary involvement and prognostication [7]. Using visual inspection of 51 COVID-19 patients who underwent chest CT and RT-PCR testing within 3 days, Fang et al. [8] achieved classification sensitivity of \(98\%\) from chest CT. In another study [9] of 1014 patients suspected of COVID-19, \(59\%\) had positive RT-PCR results, while \(88\%\) had positive chest CT scans, determined by radiologists. The sensitivity of chest CT to classify COVID-19 was \(97\%\). Thus, CT may exhibit higher sensitivity than RT-PCR. Additionally, CT is widely accessible and already routinely used in hospitals for high-resolution views of lung features [10]. Given the many advantages, CT has become a promising complementary tool for identifying and monitoring COVID-19.

Numerous COVID-19 studies [9, 11,12,13,14] have proven that chest CT characteristics accurately correlate with patient conditions. Some CT features detected in COVID-19 cases are ground-glass opacities (GGO), consolidative opacities, crazy paving pattern, linear consolidations, and reverse halo sign. In advanced stages, the number of lesions increases significantly with newly formed GGO and superimposed consolidation. Also, predominant consolidation and crazy paving patterns can be suggestive of late/advanced stages [15] and GGOs with consolidation decrease in the recovery stage. Furthermore, in severe phases infected areas may appear in all segments of the lungs, expressing as “white lung” [16]. Since distinct chest CT features and extent of involvement relate to COVID-19 severity stage, quantitative assessment of patient severity may be useful for accurate diagnosis. However, to achieve applicable results, region of interest (ROI) segmentations must be precise and easily computed.

Several COVID-19 studies have used ROI segmentation to classify COVID-19 and determine COVID-19 severity [11,12,13] with deep learning (DL)-based systems [17]. Recently introduced DL methods for classification and segmentation have achieved outstanding results. Fan et al. [18] developed Semi-Inf-Net, pre-trained on 1600 CT images with pseudolabels and fine-tuned on 50 CT images with ground-truth (GT) labels, to segment infected regions from chest CT, yielding a dice similarity coefficient of \(73.9\%\) and a specificity rate of \(96.0\%\). MiniSeg [19], another DL method for automatic segmentation of ROI, was evaluated on 100 COVID-19 CT slices of 60 patients and achieved a dice similarity coefficient of \(75.91\%\) and a specificity rate of \(97.72\%\). Even though these DL methods show impressive advances in artificial intelligence-based systems, they are limited because GT lung ROI segmentations are often lacking: manual annotation and segmentation are time-consuming and expertise-dependent tasks and are susceptible to subjectivity driven by individual methodologies [17, 20]. As a result, the analysis of COVID-19 CT findings has been mainly limited to qualitative or semi-quantitative evaluations [14]. In addition, DL algorithms are often trained on datasets acquired by the same CT machine and are annotated by the same radiologists [21]. This limits the model’s usage because it becomes dataset-specific and lacks generalizability.

For these reasons, we propose a threshold-based semiautomatic segmentation method to generate ROI segmentations from lung CT available on COVID-19 Data Archive (COVID-ARC) (https://covid-arc.loni.usc.edu) which curates and disseminates multimodal and longitudinal datasets related to COVID-19 [22]. This segmentation method is used to calculate the percentage of lung abnormality (PLA) to determine COVID-19 severity and improve analysis of disease progression in follow-up CT. It is primarily based on the image segmentation method of thresholding. Using Yen [23], IJ Isodata (ImageJ’s [24] default thresholding method adapted from Ridler et al. [25]), and Region Adjacency Graph (RAG) methods in combination with gray-level binary thresholding, we developed an efficient image processing pipeline for ROI segmentation in lung CT. We compared our method with seven existing thresholding methods to determine the best fit methods.

Fig. 1 Diagram of the pipeline. A preprocessed lung segmentation of a CT image is first input, and Yen thresholding is applied to it. The lung mask of the CT image is then used to compute the percentage of lung abnormality (PLA). If the PLA is \(100\%\), IJ Isodata thresholding is applied to the lung segmentation image instead; if the PLA is less than \(100\%\), the Yen-thresholded binary image is used for the next steps. Finally, the Region Adjacency Graph method and gray-level binary thresholding are applied to generate the ROI segmentation.

2 Materials and Methods

2.1 Dataset

We applied our segmentation methodology to heterogeneous datasets to assess generalizability. We combined 2 datasets containing CT images of COVID-19-positive patients and segmented ROI images which we used as GT images to evaluate the results. These datasets were accessed from COVID-ARC (https://covid-arc.loni.usc.edu/). Dataset 1 was collected by the Italian Society of Medical Radiology and included 9 CT images, 9 lung masks, and 9 ROI masks (http://medicalsegmentation.com/covid19). Dataset 2 was collected from Wenzhou Medical University in Wenzhou, China, and Netanya, Israel: 19 CT images, 19 lung masks, and 19 ROI segmentation images [26]. Considering the two datasets, we used a total of 84 images from 28 patients: 28 CT images, 28 lung masks, and 28 ROI segmentation images. There are still limited publicly available annotations despite the drive for publicizing datasets; therefore, we evaluated the pipeline on a small, annotated dataset. Additionally, all GT ROI segmentations were performed by radiologists from each source.

2.2 Lung Segmentation and Preprocessing

Lung segmentation is an essential preprocessing step because the thoracic tissues surrounding the lungs and other anatomical structures on a CT image must be removed to allow for accurate analysis of lung features. To generate lung segmentation of each CT image, we first created lung segmentation masks using the lungmask command line tool that provided the trained U-net (R231CovidWeb) model [27]. The lung masks were then used to extract the lung areas from the CT image to process the lung segmentation. As shown in Fig. 1, which represents the different steps to obtain ROI segmentation for each image, the lung segmentation image is first inputted into the pipeline.

2.3 Image Thresholding

A threshold value is used to classify pixel values as either 0 or 1, corresponding in our case to normal lung tissue and ROI, respectively. We explored the pixel intensity distributions of the lung segmentations to identify which images had a unimodal intensity histogram and which had a multimodal intensity histogram. We then divided the images into two groups depending on their intensity histograms: images of type I (IM1), which have unimodal histograms, and images of type II (IM2), which have multimodal histograms. To determine the best fit thresholding method for each image type (IM1 and IM2), we evaluated seven thresholding methods and compared the generated PLA with the corresponding GT PLA. The methods we evaluated are Huang [28], Ridler [25], Li [29], Kapur [30], Otsu [31], Yen, and IJ Isodata. These were chosen based on their strong performance in converting gray-scale images to binary; each algorithm is discussed in more detail by Sezgin et al. [32]. In the first binarization, all seven thresholding methods are applied to the image using ImageJ. We first loaded the image in 8-bit format (Image/Types/8-bit) and used the Process/Binary/Options command to select Dark Background. Next, to generate a threshold-based binary image, we opened the threshold tool (Image/Adjust/Threshold), selected Black Background, chose a thresholding method, and selected Apply. This produces the binarized image using the threshold value that the chosen method computes automatically.
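To make the idea of an automatically computed threshold concrete, the following is a minimal sketch of iterative intermeans thresholding in the spirit of Ridler et al. [25]. It is an illustration only and is not ImageJ's implementation of Ridler or IJ Isodata; the function names, the convergence tolerance, and the convention that pixels brighter than the threshold are labeled 1 (ROI) are assumptions made here.

```python
def histogram_8bit(image):
    """Count how many pixels fall on each of the 256 gray levels."""
    hist = [0] * 256
    for row in image:
        for px in row:
            hist[px] += 1
    return hist


def intermeans_threshold(histogram, max_iter=256):
    """Iterate t = (mean below t + mean above t) / 2 until it stabilizes."""
    levels = range(len(histogram))
    total = sum(histogram)
    if total == 0:
        raise ValueError("histogram is empty")
    # Start from the overall mean gray level.
    t = sum(g * histogram[g] for g in levels) / total
    for _ in range(max_iter):
        low_n = sum(histogram[g] for g in levels if g <= t)
        high_n = total - low_n
        if low_n == 0 or high_n == 0:
            break  # every pixel lies on one side of t
        low_mean = sum(g * histogram[g] for g in levels if g <= t) / low_n
        high_mean = sum(g * histogram[g] for g in levels if g > t) / high_n
        new_t = (low_mean + high_mean) / 2
        converged = abs(new_t - t) < 0.5
        t = new_t
        if converged:
            break
    return int(t)


def binarize(image, threshold):
    """Label pixels above the threshold as 1 (assumed ROI) and the rest as 0."""
    return [[1 if px > threshold else 0 for px in row] for row in image]
```

For a toy image with two well-separated intensity clusters, the returned threshold falls between the clusters, so `binarize` separates them cleanly. Methods such as Yen or Kapur replace the intermeans update with an entropy-based criterion but are used in the same way.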

2.4 Region Adjacency Graph

After the first binarization, segmentation of the ROI is partially completed since there are other features of the lungs present along with the ROI, which would affect the calculation of PLA or other analyses of the ROI segmentation. To generate an accurate segmentation of only the ROI, RAG thresholding is implemented onto the binary image. This is a region connecting algorithm, which uses graph representation to describe relationships between different regions [33].

The graph connects different superpixels through an edge, and each superpixel region is considered a vertex. The edges between superpixels are colored depending on their weights corresponding to similarities between the regions [34, 35]. The regions covered with dark edges have similar pixel characteristics, while others covered with light-colored edges have different pixel characteristics. Each region \(R_i\) is characterized by two parameters given by Eqs. (1,2): \(\mu _{i}\) is the mean intensity of the set of pixels in the region and \(\sigma _{i}\) is the standard deviation:
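Eqs. (1,2) are not reproduced in this text. Under the usual definitions, they would take the following form. This is a reconstruction under stated assumptions: \(I(p)\) denotes the intensity of pixel \(p\), \(N_{Ri}\) is the number of pixels in \(R_i\), and population normalization is assumed; the source may use a different normalization.

```latex
\begin{align}
\mu_i &= \frac{1}{N_{Ri}} \sum_{p \in R_i} I(p) \\
\sigma_i &= \sqrt{\frac{1}{N_{Ri}} \sum_{p \in R_i} \bigl(I(p) - \mu_i\bigr)^2}
\end{align}
```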

Each edge \(e_{ij}\) corresponds to a pair of adjacent regions (\(R_i\), \(R_j\)) and carries an intensity distance \(d^2(R_i, R_j)\), given by the Fisher distance in Eq. (3), which measures the similarity between the intensity distributions of the two regions and defines the weight of the edge \(e_{ij}\) connecting them.
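Eq. (3) is likewise not reproduced in this text. One form of the Fisher distance that appears in the region-merging literature is shown here as an assumption rather than as the authors' exact expression. It is written with the symbols defined above and assumes that the denominator is nonzero:

```latex
\begin{equation}
d^2(R_i, R_j) = \frac{\left(N_{Ri} + N_{Rj}\right)\left(\mu_i - \mu_j\right)^2}{N_{Ri}\,\sigma_i^2 + N_{Rj}\,\sigma_j^2}
\end{equation}
```

In this form, a small distance means the two regions have similar mean intensities relative to their pooled spread, so the edge between them represents a strong similarity.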

where \(N_{Ri}\) and \(N_{Rj}\) are the numbers of pixels in regions \(R_i\) and \(R_j\), respectively. In the RAG output, border pixels that were removed are converted to gray-scale pixels. Since the gray-scaled image may cause pixel miscalculations, a gray-level binarization is used to generate accurate segmentations.

2.5 Calculations

Pixel densities are calculated using the image processing program, ImageJ [24], which calculates pixel density without manually segmenting the ROI. The lung mask is also used to calculate the pixel density of the total lung. After calculating the pixel densities, we used the mathematical expression shown in Eq. (4) to calculate COVID-19 PLA:
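Written out from the description above, Eq. (4) expresses the PLA as the ROI pixel density as a percentage of the total lung pixel density. This is a reconstruction; the symbols \(D_{\mathrm{ROI}}\) and \(D_{\mathrm{lung}}\) are names introduced here.

```latex
\begin{equation}
\mathrm{PLA} = \frac{D_{\mathrm{ROI}}}{D_{\mathrm{lung}}} \times 100\%
\end{equation}
```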

Using this equation, we calculated the PLA for each CT image in the dataset.
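As a rough illustration of this calculation outside ImageJ, the sketch below computes a PLA from two binary masks stored as nested lists. It assumes that pixel density can be approximated by counting nonzero pixels and that ROI pixels outside the lung mask are ignored. Both are simplifications made here, and the function name is hypothetical.

```python
def percentage_lung_abnormality(roi_mask, lung_mask):
    """Return 100 * (ROI pixels inside the lung) / (lung pixels)."""
    lung_pixels = 0
    roi_pixels = 0
    for roi_row, lung_row in zip(roi_mask, lung_mask):
        for roi_px, lung_px in zip(roi_row, lung_row):
            if lung_px:
                lung_pixels += 1
                if roi_px:
                    roi_pixels += 1
    if lung_pixels == 0:
        raise ValueError("lung mask is empty")
    return 100.0 * roi_pixels / lung_pixels
```

With a lung mask of four pixels of which one is marked as ROI, the function returns 25.0.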

Several evaluation metrics were computed to evaluate the pixels correctly and not correctly classified in terms of true positive (TP), true negative (TN), false positive (FP), and false negative (FN), where positive pixels are the pixels affected by the infection and negative pixels are uninfected pixels. The Dice similarity coefficient is the overlap index which represents the similarity between the GT and the prediction maps [36]. The Matthews correlation coefficient (MCC) measures the similarity of the GT PLA and the calculated PLA with values between -1 and +1 [37]. Positive values indicate strong similarities between the GT PLA and the calculated PLA, thus corresponding to a good model, and negative values indicate weak similarities as the result of a poor model. Precision measures the reproducibility of the method and is the number of correct positive results divided by the number of positive results predicted by the method. Sensitivity is the proportion of positive pixels that are correctly considered positive with respect to all positive pixels. Finally, specificity is the proportion of negative pixels that are correctly considered negative with respect to all negative pixels.
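The metrics above can be sketched from pixel-level counts as follows. This uses the standard confusion-matrix definitions; the function names are hypothetical, and returning NaN when a denominator is zero is a choice made here rather than something stated in the study.

```python
import math


def confusion_counts(pred_mask, gt_mask):
    """Count TP, TN, FP, FN between a predicted and a ground-truth binary mask."""
    tp = tn = fp = fn = 0
    for pred_row, gt_row in zip(pred_mask, gt_mask):
        for pred, gt in zip(pred_row, gt_row):
            if pred and gt:
                tp += 1
            elif pred and not gt:
                fp += 1
            elif gt:
                fn += 1
            else:
                tn += 1
    return tp, tn, fp, fn


def segmentation_metrics(tp, tn, fp, fn):
    """Dice, MCC, precision, sensitivity, and specificity from pixel counts."""
    def ratio(num, den):
        return num / den if den else float("nan")

    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "mcc": ratio(tp * tn - fp * fn, mcc_den),
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }
```

Because infected pixels are usually a small fraction of the lung, specificity tends to be high even for imperfect segmentations, which is why it is reported alongside Dice and precision.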

3 Results

3.1 Evaluation of Best Fit ImageJ Thresholding Methods

Table 1 shows the optimal thresholds for accurate binarization results and the corresponding PLA difference from GT for the different thresholding methods and for the two types of images (IM1 and IM2) analyzed in this study. As shown in Fig. 2, when only the generated ROI segmentations are inspected, five methods (Ridler, Kapur, Otsu, Yen, and IJ Isodata) appear to produce images that are visually indistinguishable from one another for IM1s, as a result of their similar threshold values. Indeed, as listed in Table 1, Ridler and Otsu shared a threshold value of 87, while Kapur generated 97, Yen generated 86, and IJ Isodata generated 117. After comparing the calculated PLA with the GT PLA for each method, we found that, of the seven methods, Yen thresholding generated the most accurate segmentations for IM1 images, with a difference of \(1.53\%\) from the GT segmentations, and IJ Isodata generated the most accurate segmentations for IM2 images, with a difference of \(3.24\%\) from the GT segmentations. Therefore, to generate the initial binary images in the proposed method, Yen for IM1 images and IJ Isodata for IM2 images are the best fit methods, as they generate the most accurate threshold values.

3.2 Quantitative Segmentation Results

To evaluate the performance of our method, we compared it with five existing segmentation methods, including deep neural network-based models: Inf-Net [18], Semi-Inf-Net [18], MiniSeg [19], nCoVSegNet [38], and Oulefki [39]. Table 2 shows the statistics of the evaluations. Oulefki was evaluated on only 19 CT images from the dataset, whereas the other four methods were evaluated on all images from the dataset. Compared with the five methods, the proposed method achieved the highest precision (\(47.49\%\)) and specificity (\(98.40\%\)) scores.

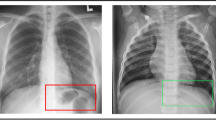

3.3 Qualitative Segmentation Results

To further evaluate the performance of our method, we compared the qualitative ROI segmentation results of our method with those of three other DL methods. Figure 3 shows six representative examples of segmentation results; in these examples, our method's results consistently outperform those of the other DL methods.

3.4 Quantitative Validation

To validate the performance, we compared the PLA of the single-slice dataset computed from the GT-infected region masks and the ROI segmentations generated by the proposed method. In Fig. 4, the PLA of the GT images and our segmentation images are displayed for each patient. On average, the percentage difference between the PLA obtained with the proposed method and the PLA obtained with GT images is \(\pm 3.89\%\).

Additionally, throughout the dataset IM1s tended to have lower PLAs and IM2s had higher PLAs. To demonstrate the method’s effectiveness in analysis of severity, Fig. 5 shows a diagram of three cases of COVID-19 patients: Fig. 5a IM1 with a low PLA of \(3.33\%\), Fig. 5b IM1 with a medium PLA of \(11.64\%\), and Fig. 5c IM2 with a high PLA of \(67.96\%\). Beginning with the first column, each axial lung CT image of a COVID-19 patient from the dataset is followed by its lung mask in the second column. Lastly, in the third column, the ROI segmentations generated by our method showing the increasing progression of the infection are highlighted in red.

4 Discussion

In this study, we proposed a method for the quantification of lung abnormalities from CT images of COVID-19 patients. Based on our analysis, the proposed method is highly effective in calculating accurate PLA for most cases of COVID-19-positive patients. On average, the proposed method generated PLAs within \(\pm 3.89\%\) of the GT PLAs. To our knowledge, this study is the first to specifically validate a thresholding method to generate precise ROI segmentations for COVID-19 CT images. By evaluating seven thresholding methods (Huang, Ridler, Li, Kapur, Otsu, Yen, and IJ Isodata), we found that the Yen thresholding method generates the most accurate results for IM1 images compared with the other six methods. Specifically, for IM1 images the Yen method yielded the lowest difference from the GT PLA (\(1.53\%\)) of all the evaluated methods. Additionally, we found that IJ Isodata yields the best segmentations for IM2 images, which have multimodal intensity histograms. The fact that we obtain higher PLA for the IM2 images and lower PLA for the IM1 images may suggest that the two types of pixel intensity distributions (unimodal and multimodal) correspond to two different severity levels of COVID-19 infection. In this view, the IJ Isodata method seems to be more appropriate for severe infection cases, while the Yen method appears to be more appropriate for mild cases. However, further clinical investigations are needed to assess whether different image types correspond to different infection severity levels.

Several methods performed poorly due to large pixel intensities and physical variances of the ROIs. Since the cross-entropies of the images are not convex, Li generated under-segmentations. Ridler and Otsu are cluster-based methods that distinguish natural clusters, and the sharp valley of the gray-level histogram that they rely on degrades when they are applied to the dataset. Huang generated several local maxima that resulted in under-segmentations. Kapur did not generate the best threshold value due to the large intensity differences between ROI and background and the presence of outlying pixels. Conversely, Yen used the entropic correlation to obtain the maximizing threshold, and IJ Isodata used a variation of the iterative intermeans method to compute the best threshold values.

Additionally, to design a consistent pipeline that applies to both types of COVID-19 CT images, we included a decision step that selects either Yen or IJ Isodata thresholding for a lung segmentation image, as shown in Fig. 1. Yen thresholding mostly applies to mild cases; when applied to IM2 images, it returns only the lung mask, which corresponds to a PLA of \(100\%\), as shown in Fig. 2. For this reason, the user must first apply Yen thresholding. If the calculated PLA is less than \(100\%\), the user continues to the next step and applies RAG to the Yen binary image; if the PLA is equal to \(100\%\), the user instead applies IJ Isodata thresholding to the lung segmentation CT image. This decision step provides an objective criterion for choosing between the Yen and IJ Isodata thresholding methods on lung CT images.
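The decision step can be sketched as follows. This is a simplified illustration, not the ImageJ workflow used in the study. The two threshold functions are passed in as hypothetical callables supplied by the caller, pixels brighter than the threshold are assumed to be ROI, and the subsequent RAG and gray-level binarization steps are omitted.

```python
def select_initial_binarization(lung_image, lung_mask, yen_threshold, isodata_threshold):
    """Try Yen first; fall back to IJ Isodata when Yen marks the whole lung as ROI.

    yen_threshold and isodata_threshold are caller-supplied functions that
    take the image and return a gray-level threshold (hypothetical interface).
    """
    lung_pixels = sum(1 for row in lung_mask for m in row if m)
    if lung_pixels == 0:
        raise ValueError("lung mask is empty")

    def binarize(threshold):
        # Only pixels inside the lung mask can be labeled as ROI.
        return [
            [1 if m and px > threshold else 0 for px, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(lung_image, lung_mask)
        ]

    def pla(binary):
        return 100.0 * sum(px for row in binary for px in row) / lung_pixels

    binary = binarize(yen_threshold(lung_image))
    if pla(binary) < 100.0:
        return "Yen", binary
    # A PLA of 100% means the Yen result is just the lung mask.
    return "IJ Isodata", binarize(isodata_threshold(lung_image))
```

Passing the threshold functions as arguments keeps the decision rule separate from any particular implementation of Yen or IJ Isodata, so the same rule could wrap ImageJ output or another library's thresholds.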

In particular, we were interested in developing an unsupervised image processing method to facilitate rapid COVID-19 diagnosis. Since annotated medical images of lung segmentation are lacking, unsupervised and semi-supervised methods are in high demand for COVID-19 studies [17]. After further analysis of the method's potential, as discussed in the Introduction and Results sections, we found that it is also effective in quantitatively analyzing the progression of the disease with information available in lung CT. That is, in addition to assisting medical professionals in the rapid diagnosis of COVID-19-positive patients, the proposed method has the potential to help radiologists quantify severity and visualize disease progression or remission.

A number of studies have established scoring systems useful for the diagnosis and follow-up of COVID-19 patients. For example, Li et al. [12] reviewed the clinical and CT features of 58 mild/ordinary COVID-19 cases and 25 severe/critical cases using a 0–5 thin-section CT scoring system for infection region involvement in each lobe. They found that consolidation was significantly more frequent in severe/critical patients than in the mild/ordinary group. As a result, the CT scores of severe/critical patients were significantly higher than those of mild/ordinary patients. In essence, since the severity score computed from a chest CT corresponds to the patient's condition, it may be useful in assisting level-of-care decisions for COVID-19-positive patients.

Additionally, Lessmann et al. [11] developed and validated an Artificial Intelligence algorithm (CORADS-AI) to score the likelihood and extent of COVID-19 on chest CT scans using the COVID-19 Reporting and Data System (CO-RADS) [40] and CT severity score (CTSS). The assigned standardized scores of 367 patients were found to be in good agreement with eight independent observers and generalized well to external data. In another study, Lieveld et al. [13] validated CO-RADS and evaluated its corresponding CTSS with 741 adult patients suspected COVID-19 positive from two tertiary centers in The Netherlands. Since there was a significant association between CTSS and hospital admission (stage 1), ICU admission (stage 2), and 30-day mortality (stage 3), the study findings support the use of CTSS in triage, diagnosis, and management decisions of potential COVID-19-positive patients at hospital emergency departments.

As shown by these studies, the quantified severity of COVID-19 lung abnormalities based on chest CT correlates with patient condition; therefore, the PLA estimated by our proposed method can potentially help in evaluating the prognosis and assessing treatment methods for rapid care. Our method generated segmentations of lung abnormalities due to COVID-19 whose PLAs agree closely with the GT PLA. COVID-19 lung infection severity correlates with the patient condition [12, 13]; similarly, in our study each PLA directly describes the ROI segmentation. Our method could therefore also be used to quantify follow-up CT images of patients. Also, when compared with other state-of-the-art methods, the proposed method produced the highest precision and specificity scores.

Even though the proposed method achieved promising results, there are limitations to this study. First, the lack of COVID-19 datasets that include ROI segmentations to use as GT prevented us from validating this method on a wider variety of datasets, so the reported results are specific to the two datasets used. Second, since patient demographic information was not provided for either dataset, we were not aware of any underlying conditions that might have contributed to the patients' lung CT characteristics. Finally, as future work, we consider extending this pipeline to quantify other types of pneumonia lung infections.

5 Conclusion

In this study, we proposed a semi-automatic threshold-based segmentation method to generate lung ROI segmentations from the lung CT images of COVID-19-positive patients. Our results demonstrated the method's effectiveness in generating accurate ROI segmentations and, hence, in calculating precise PLA to determine COVID-19 severity. Also, given the accurate ROI segmentations obtained, the proposed method may be useful for assessing the severity of pneumonia in initial imaging and disease progression or remission in follow-up CT, which could assist medical professionals with patient management and prognosis of the disease course. In future experiments, this method could be utilized to develop a fully automatic model with advanced features to assist medical professionals in rapid patient care.

References

Boulos, M.N.K., Geraghty, E.M.: Geographical tracking and mapping of coronavirus disease covid-19/severe acute respiratory syndrome coronavirus 2 (sars-cov-2) epidemic and associated events around the world: how 21st century gis technologies are supporting the global fight against outbreaks and epidemics (2020)

Wang, S., Kang, B., Ma, J., Zeng, X., Xiao, M., Guo, J., Cai, M., Yang, J., Li, Y., Meng, X., et al.: A deep learning algorithm using ct images to screen for corona virus disease (covid-19). Eur. Radiol. pp. 1–9 (2021)

Harvard Medical School: If you’ve been exposed to the coronavirus. Available at https://www.health.harvard.edu/diseases-and-conditions/if-youve-been-exposed-to-the-coronavirus (2021/06/09)

Gao, Z., Xu, Y., Sun, C., Wang, X., Guo, Y., Qiu, S., Ma, K.: A systematic review of asymptomatic infections with covid-19. J. Microbiol. Immunol. Infect. 54(1), 12–16 (2021)

Xiao, A.T., Tong, Y.X., Zhang, S.: False negative of rt-pcr and prolonged nucleic acid conversion in covid-19: rather than recurrence. J. Med. Virol. 92(10), 1755–1756 (2020)

Zhou, S., Zhu, T., Wang, Y., Xia, L.: Imaging features and evolution on ct in 100 covid-19 pneumonia patients in wuhan, china. Eur. Radiol. 30(10), 5446–5454 (2020)

Kwee, T.C., Kwee, R.M.: Chest ct in covid-19: what the radiologist needs to know. RadioGraphics 40(7), 1848–1865 (2020)

Fang, Y., Zhang, H., Xie, J., Lin, M., Ying, L., Pang, P., Ji, W.: Sensitivity of chest ct for covid-19: comparison to rt-pcr. Radiology 296(2), E115–E117 (2020)

Ai, T., Yang, Z., Hou, H., Zhan, C., Chen, C., Lv, W., Tao, Q., Sun, Z., Xia, L.: Correlation of chest ct and rt-pcr testing for coronavirus disease 2019 (covid-19) in china: a report of 1014 cases. Radiology 296(2), E32–E40 (2020)

Bhandary, A., Prabhu, G.A., Rajinikanth, V., Thanaraj, K.P., Satapathy, S.C., Robbins, D.E., Shasky, C., Zhang, Y.D., Tavares, J.M.R., Raja, N.S.M.: Deep-learning framework to detect lung abnormality-a study with chest x-ray and lung ct scan images. Pattern Recognit. Lett. 129, 271–278 (2020)

Lessmann, N., Sánchez, C.I., Beenen, L., Boulogne, L.H., Brink, M., Calli, E., Charbonnier, J.P., Dofferhoff, T., van Everdingen, W.M., Gerke, P.K., et al.: Automated assessment of covid-19 reporting and data system and chest ct severity scores in patients suspected of having covid-19 using artificial intelligence. Radiology 298(1), E18–E28 (2021)

Li, K., Wu, J., Wu, F., Guo, D., Chen, L., Fang, Z., Li, C.: The clinical and chest ct features associated with severe and critical covid-19 pneumonia. Investig. Radiol. (2020)

Lieveld, A.W., Azijli, K., Teunissen, B.P., van Haaften, R.M., Kootte, R.S., van den Berk, I.A., van der Horst, S.F., de Gans, C., van de Ven, P.M., Nanayakkara, P.W.: Chest ct in covid-19 at the ed: validation of the covid-19 reporting and data system (co-rads) and ct severity score: a prospective, multicenter, observational study. Chest 159(3), 1126–1135 (2021)

Pan, F., Ye, T., Sun, P., Gui, S., Liang, B., Li, L., Zheng, D., Wang, J., Hesketh, R.L., Yang, L., et al.: Time course of lung changes on chest ct during recovery from 2019 novel coronavirus (covid-19) pneumonia. Radiology (2020)

Salehi, S., Abedi, A., Balakrishnan, S., Gholamrezanezhad, A.: Coronavirus disease 2019 (covid-19) imaging reporting and data system (covid-rads) and common lexicon: a proposal based on the imaging data of 37 studies. Eur. Radiol. 30(9), 4930–4942 (2020)

Li, M., Lei, P., Zeng, B., Li, Z., Yu, P., Fan, B., Wang, C., Li, Z., Zhou, J., Hu, S., et al.: Coronavirus disease (covid-19): spectrum of ct findings and temporal progression of the disease. Acad. Radiol. 27(5), 603–608 (2020)

Shi, F., Wang, J., Shi, J., Wu, Z., Wang, Q., Tang, Z., He, K., Shi, Y., Shen, D.: Review of artificial intelligence techniques in imaging data acquisition, segmentation and diagnosis for covid-19. IEEE Rev. Biomed. Eng. (2020)

Fan, D.P., Zhou, T., Ji, G.P., Zhou, Y., Chen, G., Fu, H., Shen, J., Shao, L.: Inf-net: automatic covid-19 lung infection segmentation from ct images. IEEE Trans. Med Imaging 39(8), 2626–2637 (2020)

Qiu, Y., Liu, Y., Li, S., Xu, J.: Miniseg: An extremely minimum network for efficient covid-19 segmentation. arXiv preprint arXiv:2004.09750 (2020)

Huang, L., Han, R., Ai, T., Yu, P., Kang, H., Tao, Q., Xia, L.: Serial quantitative chest ct assessment of covid-19: a deep learning approach. Radiol. Cardiothorac. Imaging 2(2), e200075 (2020)

Zhou, L., Li, Z., Zhou, J., Li, H., Chen, Y., Huang, Y., Xie, D., Zhao, L., Fan, M., Hashmi, S., et al.: A rapid, accurate and machine-agnostic segmentation and quantification method for ct-based covid-19 diagnosis. IEEE Trans. Med. Imaging 39(8), 2638–2652 (2020)

Duncan, D.: COVID-19 data sharing and collaboration. Commun. Inf. Syst. 21(3), 325–340 (2021)

Yen, J.C., Chang, F.J., Chang, S.: A new criterion for automatic multilevel thresholding. IEEE Trans. Image Proces. 4(3), 370–378 (1995)

Abràmoff, M.D., Magalhães, P.J., Ram, S.J.: Image processing with image j. Biophotonics Int. 11(7), 36–42 (2004)

Ridler, T., Calvard, S., et al.: Picture thresholding using an iterative selection method. IEEE Trans. Syst. Man Cybern. 8(8), 630–632 (1978)

Jun, M., Cheng, G., Yixin, W., Xingle, A., Jiantao, G., Ziqi, Y., Minqing, Z., Xin, L., Xueyuan, D., Shucheng, C., et al.: Covid-19 ct lung and infection segmentation dataset. Zenodo, 20 (2020)

Hofmanninger, J., Prayer, F., Pan, J., Röhrich, S., Prosch, H., Langs, G.: Automatic lung segmentation in routine imaging is primarily a data diversity problem, not a methodology problem. Eur. Radiol. Exp. 4(1), 1–13 (2020)

Huang, L.K., Wang, M.J.J.: Image thresholding by minimizing the measures of fuzziness. Pattern Recognit. 28(1), 41–51 (1995)

Li, C., Tam, P.K.S.: An iterative algorithm for minimum cross entropy thresholding. Pattern Recognit. Lett. 19(8), 771–776 (1998)

Kapur, J.N., Sahoo, P.K., Wong, A.K.: A new method for gray-level picture thresholding using the entropy of the histogram. Comput. Vis. Gr. Image Proces. 29(3), 273–285 (1985)

Otsu, N.: A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9(1), 62–66 (1979)

Sezgin, M., Sankur, B.: Survey over image thresholding techniques and quantitative performance evaluation. J. Electron. Imaging 13(1), 146–165 (2004)

Shanahan, M.: Perception as abduction: turning sensor data into meaningful representation. Cogn. Sci. 29(1), 103–134 (2005)

Jaworek-Korjakowska, J., Kleczek, P.: Region adjacency graph approach for acral melanocytic lesion segmentation. Appl. Sci. 8(9), 1430 (2018)

Trémeau, A., Colantoni, P.: Regions adjacency graph applied to color image segmentation. IEEE Trans. Image Proces. 9(4), 735–744 (2000)

Taha, A.A., Hanbury, A.: Metrics for evaluating 3d medical image segmentation: analysis, selection, and tool. BMC Med. Imaging 15(1), 1–28 (2015)

Brown, J.B.: Classifiers and their metrics quantified. Mol. Inf. 37(1–2), 1700127 (2018)

Liu, J., Dong, B., Wang, S., Cui, H., Fan, D.P., Ma, J., Chen, G.: COVID-19 lung infection segmentation with a novel two-stage cross-domain transfer learning framework. Med. Image Anal. 74, 102205 (2021)

Oulefki, A., Agaian, S., Trongtirakul, T., Laouar, A.K.: Automatic COVID-19 lung infected region segmentation and measurement using CT-scans images. Pattern Recognit. 114, 107747 (2021)

Prokop, M., Van Everdingen, W., Beenen, L., Geurts, B., Gietema, H., Krdzalic, J., Schaefer-Prokop, C., Van Rees Vellinga, T., Van Ufford, H.Q., Stöger, L., et al.: Co-rads: a categorical ct assessment scheme for patients suspected of having covid-definition and evaluation. Radiology 296(2), 97–104 (2020)

Ethics declarations

Conflict of interest

The authors declare they have no conflict of interest.

Additional information

This work was supported by the National Science Foundation under Award Number 2027456 (COVID-ARC)

About this article

Cite this article

Khan, A., Garner, R., Rocca, M.L. et al. A Novel Threshold-Based Segmentation Method for Quantification of COVID-19 Lung Abnormalities. SIViP 17, 907–914 (2023). https://doi.org/10.1007/s11760-022-02183-6

DOI: https://doi.org/10.1007/s11760-022-02183-6