Abstract

Arithmetic Optimization Algorithm (AOA) is a recently developed population-based nature-inspired optimization algorithm (NIOA). AOA is inspired by the distribution behavior of the main arithmetic operators in mathematics; hence, it also belongs to the class of mathematics-inspired optimization algorithms (MIOAs). MIOA is a powerful subset of NIOA, and AOA is a proficient member of it. AOA was published in early 2021 and has received considerable recognition from the research community owing to its efficacy in different optimization fields. Therefore, this study presents an up-to-date survey of AOA, its variants, and its applications.

1 Introduction

The goal of all human endeavors is to accomplish as much as possible with the fewest resources (or efforts). For instance, from a corporate perspective, the greatest profit at the lowest expenditure is desired [1]. This gives rise to the idea of optimization, which is fundamental to achieving the optimum (minimum or maximum) in a given situation and is of great importance in both human activities and the laws of nature [1, 2]. It is therefore evident that the study of design optimization can aid not only human activities, such as devising the best designs for systems, processes, and products, but also the comprehension, examination, and solution of mathematical problems and the study of mathematical and physical phenomena [1]. Moreover, in actual engineering practice, every optimization problem has several necessary constraints or prerequisites. Because of this, creating a feasible design that accounts for all these objectives and restrictions is already a demanding endeavor, and guaranteeing that the design is "the best" is even more challenging. Because such problems are often highly nonlinear and multimodal, conventional optimization strategies are frequently unable to solve them. Therefore, sophisticated and intelligent algorithms are required to find optimal or near-optimal solutions to real-world problems with the least computational effort. Many NIOAs that mimic the characteristics of biological and natural systems have been developed [3]. These algorithms are robust and effective at solving practical optimization problems in a reasonable amount of time [3,4,5]. The goal of developing such algorithms is to generate novel and effective solutions to the challenging optimization problems that arise in the real world.
In recent years, NIOAs have played an increasingly important role in numerous domains, including computational intelligence (CI), artificial intelligence (AI), machine learning (ML) and others.

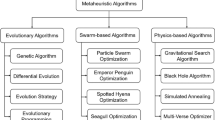

Therefore, NIOAs are widely employed to address real-world problems, and they have proven their efficacy by delivering encouraging results. The scientific era of such optimization algorithms began in 1975, when Holland introduced the Genetic Algorithm (GA). Since then, the literature has reported various NIOAs, developed up to the year 2022, that are based not only on natural laws but also on physical, social, and biological phenomena. Accordingly, the literature [1, 3] has classified NIOAs into six heterogeneous classes: (a) Evolutionary Algorithms (EA) [1, 2]; (b) Bio-inspired optimization algorithms (BIOA) [1, 2]; (c) Physics-inspired optimization algorithms (PIOA) [5]; (d) Chemistry-inspired optimization algorithms (CIOA) [4]; (e) Mathematics-inspired optimization algorithms (MIOA) [4]; and (f) Human-inspired optimization algorithms (HIOA) [6]. The classification of NIOAs is shown in Fig. 1.

EAs implement concepts from the Darwinian Theory of Evolution (DTE) in computational terms. GA [7], Evolution Strategies (ES) [8], Differential Evolution (DE) [9], Genetic Programming (GP) [10], and Evolutionary Programming (EP) are examples of EAs.

The idea of BIOA is to create new exploration and exploitation operators by abstracting biological systems. Most existing algorithms belong to this class of NIOAs, which is further divided into two main sub-classes: Swarm Intelligence (SI) algorithms and Non-Swarm Intelligence (NSI) algorithms. SI relies on the coordinated behaviour of many agents to solve complicated problems. These population-based algorithms are motivated by the ways in which ants, bees, and other animal species interact with their natural surroundings. The best-known algorithm in this category is Particle Swarm Optimization (PSO) [11], which is inspired by the swarming behaviour of animals such as birds and fish. Other examples include the Firefly Algorithm (FA) [12], Cuckoo Search (CS) [13], Ant Colony Optimization (ACO) [14], and the Artificial Bee Colony (ABC) [15] algorithm. NSI algorithms draw on biological metaphors and on communication mechanisms abstracted from natural events, but without modelling the collective behaviour of individuals. The Flower Pollination Algorithm (FPA) [16] is one example of an NSI approach; it was created by simulating the pollination of flowers by pollinating insects.

PIOA is a subset of NIOA derived from real-world physical laws, in which search solutions communicate and move according to rules built into physical processes. Simulated Annealing [17], Gravitational Search Algorithm [18], Archimedes Optimization Algorithm [19], Atomic Orbital Search (AOS) [20], Flow Direction Algorithm (FDA) [21], Equilibrium Optimizer (EO) [22], Atom Search Optimization (ASO) [23], and Nuclear Reaction Optimization (NRO) [24] are some examples of PIOA.

CIOAs are devised based on chemical reactions, which are formulated as solution-update rules to solve problems; examples include Henry Gas Solubility Optimization [25], Chemical Reaction Optimization [26], Artificial Chemical Reaction Optimization [27], and Water Evaporation Optimization [28].

MIOAs imitate numerical techniques, mathematical programming, and mathematical operators to solve a wide range of constrained real-world optimization problems. Some widely known mathematics-based algorithms are the Sine Cosine Algorithm (SCA) [29], the Arithmetic Optimization Algorithm [30], and the Golden Ratio Optimization Method [31].

Lastly, HIOA imitates human behaviour, supremacy, and intelligence. Some examples of HIOA are Teaching Learning-Based Optimization [32], Brain Storm Optimization [33], Human Behavior-Based Optimization [34], etc.

AOA is considered a prominent member of the MIOA class. According to Google Scholar, AOA had received 915 citations as of 30 December 2022. Several variants of AOA have been proposed in the literature and applied in numerous optimization fields. However, to the best of our knowledge, no review or survey of AOA has been published to date, and this is the main motivation of this work. Therefore, the goal of this study is to critically appraise and summarize existing research on AOA. This study identifies, categorizes, and analyses AOA and its improved variants that have been used to solve different optimization problems. It also examines how AOA is applied in different real-world situations and provides a thorough analysis. Lastly, it makes suggestions for future studies.

The remainder of this paper is structured as follows. Section 2 presents the research methodology and AOA survey taxonomy. Section 3 gives a brief mathematical description of AOA and its time complexity. Section 4 discusses and analyzes the improved variants of AOA. Section 5 discusses the binary variants of AOA. Multi-objective AOA variants are presented in Sect. 6. Applications of AOA and its variants are discussed in Sect. 7. AOA is evaluated in the image clustering field in Sect. 8. Section 9 concludes the paper and outlines potential future directions.

2 Research Methodology and AOA Survey Taxonomy

Swarm intelligence algorithms, metaheuristic algorithms, multi-objective optimization, optimization algorithms, and evolutionary algorithms are some of the pertinent search phrases used to collect the research papers. These search terms are combined with the keywords "Arithmetic Optimization Algorithm" and "AOA" to perform a thorough search of the following databases:

i. Google Scholar: https://scholar.google.com
ii. IEEE Xplore: https://ieeexplore.ieee.org
iii. ScienceDirect: https://www.sciencedirect.com
iv. SpringerLink: https://www.springerlink.com
v. ACM Digital Library: https://dl.acm.org

After removing duplicates and studies not directly related to AOA, 140 research papers were collected. The roadmap for selecting the research articles is presented in Fig. 2. New taxonomies are then proposed based on the compiled papers. The collected papers are classified as "enhanced", "single-objective", or "multi-objective". Because much of the research on AOA and its enhanced variants is single-objective, this class is further divided into mechanism-based, hybrid-based, and discrete-based variants. The collected papers are then analysed and summarized by application domain. Energy optimization, engineering optimization, machine learning, image processing, and scheduling optimization are among the most popular areas in which AOA is used as an optimization tool. The number of enhanced AOA variants published by different publishers among the surveyed papers is depicted in Fig. 3. Figure 4 shows the top five journals ranked by the number of publications on AOA variants. The number of AOA-related research papers published per year is depicted in Fig. 5.

3 Overview of AOA

Abualigah et al. [30] proposed a novel population-based metaheuristic technique named the Arithmetic Optimization Algorithm (AOA), which uses the distribution characteristics of the four major arithmetic operators in mathematics, namely Multiplication (\(M\), \(\times\)), Division (\(D\), \(\div\)), Subtraction (\(S\), \(-\)), and Addition (\(A\), \(+\)).

In AOA, the initial population is generated randomly using Eq. (1):

\[ x = \partial \times (U - L) + L \qquad (1) \]

where \(x\) represents a solution in the population, \(U\) and \(L\) denote the upper and lower limits of the search space for the objective function, respectively, and \(\partial\) is a uniformly distributed random number in [0, 1].
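As a minimal sketch of this initialization step, the Python function below draws each coordinate as \(\partial \times (U - L) + L\) with \(\partial\) uniform in [0, 1]. The function name `init_population` and the use of per-dimension bound lists are illustrative assumptions, not part of the source paper.

```python
import random


def init_population(n, lower, upper, rng=random):
    """Random initial population: x = d * (U - L) + L, with d uniform in [0, 1).

    `lower` and `upper` are per-dimension bound lists (hypothetical interface).
    """
    return [
        [rng.random() * (hi - lo) + lo for lo, hi in zip(lower, upper)]
        for _ in range(n)
    ]


rng = random.Random(42)  # seeded so the example is reproducible
pop = init_population(n=30, lower=[-5.0] * 3, upper=[10.0] * 3, rng=rng)
print(len(pop), len(pop[0]))  # 30 3
```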

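At each iteration, AOA selects between exploration and exploitation based on the value of the Math Optimizer Accelerated \((MOA)\) function, computed by Eq. (2): a random number \(r_1 \in [0, 1]\) is drawn, and the exploration phase is applied if \(r_1 > MOA\); otherwise, the exploitation phase is applied.

\[ MOA\left(C\_Iter\right) = Min + C\_Iter \times \left(\frac{Max - Min}{M\_Iter}\right) \qquad (2) \]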

where \(MOA\left(C\_Iter\right)\) represents the function value at the tth iteration, and \(C\_Iter\) denotes the current iteration, which lies between 1 and the maximum number of iterations \((M\_Iter)\). \(Min\) and \(Max\) denote the minimum and maximum values of the MOA function, respectively.

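The exploration, or global search, phase of AOA uses search strategies based on the Division \((D)\) and Multiplication \((M)\) operators, formulated in Eq. (3), where a random number \(r_2 \in [0, 1]\) selects between the two operators:

\[ x_{i,j}(t+1) = \begin{cases} best(x_j) \div (MoPr + \epsilon) \times \left((U_j - L_j) \times \mu + L_j\right), & r_2 < 0.5 \\ best(x_j) \times MoPr \times \left((U_j - L_j) \times \mu + L_j\right), & \text{otherwise} \end{cases} \qquad (3) \]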

where \({x}_{i}\left(t+1\right)\) represents the ith solution at the \(\left(t+1\right)\)th iteration, \({x}_{i,j}\left(t\right)\) represents the jth position of the ith individual at the current iteration, and \(best\left({x}_{j}\right)\) is the jth position of the best solution obtained so far. \(\epsilon\) is a small positive number, and the upper and lower limits of the jth position are represented by \({U}_{j}\) and \({L}_{j}\), respectively. \(\mu\) is a control parameter set to 0.5 in the source paper. The Math Optimizer Probability \((MoPr)\) coefficient is computed as follows:

\[ MoPr(t) = 1 - \frac{t^{1/\theta}}{M\_Iter^{1/\theta}} \qquad (4) \]

where \(MoPr\left(t\right)\) is the value of the \(MoPr\) function at the tth iteration and \(M\_Iter\) is the maximum number of iterations. \(\theta\) is a critical parameter that controls the exploitation efficiency over the iterations; it is set to 5 in the source paper.
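The two schedules can be sketched as follows, assuming \(Min = 0.2\) and \(Max = 1.0\) for MOA (illustrative values, not stated in this survey) and \(\theta = 5\) as in the source paper. Since \(MoPr(t) = 1 - (t/M\_Iter)^{1/\theta}\), a larger \(\theta\) makes MoPr smaller at any given iteration, which narrows the step size used in Eqs. (3) and (5).

```python
def moa(t, max_iter, mo_min=0.2, mo_max=1.0):
    """MOA(t) = Min + t * (Max - Min) / M_Iter: grows linearly toward Max."""
    return mo_min + t * ((mo_max - mo_min) / max_iter)


def mopr(t, max_iter, theta=5.0):
    """MoPr(t) = 1 - t**(1/theta) / M_Iter**(1/theta): decays toward 0."""
    return 1.0 - t ** (1.0 / theta) / max_iter ** (1.0 / theta)


M_ITER = 100
for t in (1, 25, 50, 100):
    # Exploration happens when r1 > MOA, i.e. with probability 1 - MOA,
    # so the search shifts from exploration to exploitation over time.
    print(f"t={t:3d}  MOA={moa(t, M_ITER):.3f}  MoPr={mopr(t, M_ITER):.3f}")

# Sensitivity to theta at mid-run: larger theta gives a smaller MoPr.
for theta in (2.0, 5.0, 10.0):
    print(f"theta={theta:4.1f}  MoPr(50)={mopr(50, M_ITER, theta):.3f}")
```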

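In AOA, the exploitation strategy uses the Subtraction \((S)\) and Addition \((A)\) operators, as formulated in Eq. (5), where a random number \(r_3 \in [0, 1]\) selects between the two operators. Here, \(\mu\) is the same constant, fixed at 0.5 in the source paper.

\[ x_{i,j}(t+1) = \begin{cases} best(x_j) - MoPr \times \left((U_j - L_j) \times \mu + L_j\right), & r_3 < 0.5 \\ best(x_j) + MoPr \times \left((U_j - L_j) \times \mu + L_j\right), & \text{otherwise} \end{cases} \qquad (5) \]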
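A per-coordinate sketch of the exploration and exploitation rules is given below. Drawing the random numbers \(r_1\), \(r_2\), \(r_3\) separately for each coordinate, clipping the result to the bounds, and the value chosen for \(\epsilon\) are assumptions made for illustration; the function name `aoa_update` is hypothetical.

```python
import random

EPS = 1e-12  # small positive constant epsilon (assumed value)


def aoa_update(best_j, lower_j, upper_j, moa_val, mopr_val, mu=0.5, rng=random):
    """Return a new value for coordinate j using the AOA operator rules."""
    scale = (upper_j - lower_j) * mu + lower_j   # (U_j - L_j) * mu + L_j
    r1, r2, r3 = rng.random(), rng.random(), rng.random()
    if r1 > moa_val:                  # exploration, Eq. (3)
        if r2 < 0.5:
            new = best_j / (mopr_val + EPS) * scale   # Division
        else:
            new = best_j * mopr_val * scale           # Multiplication
    else:                             # exploitation, Eq. (5)
        if r3 < 0.5:
            new = best_j - mopr_val * scale           # Subtraction
        else:
            new = best_j + mopr_val * scale           # Addition
    return min(max(new, lower_j), upper_j)           # clip to bounds (assumed)


rng = random.Random(0)
# random() < 1.0, so with MOA = 1.0 the condition r1 > MOA never holds and
# only the exploitation rule is used: the result is 1.0 -/+ 0.3 * 2.5.
print(aoa_update(1.0, -5.0, 10.0, moa_val=1.0, mopr_val=0.3, rng=rng))
```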

The AOA procedure has been described in Algorithm 1 based on the proposed exploration and exploitation strategies. The AOA flowchart is presented in Fig. 6.
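As a complement to Algorithm 1, the following is a compact, self-contained sketch of the AOA main loop for minimization, written from the MOA, MoPr, exploration, and exploitation rules described above. Greedy replacement (a solution is replaced only when its fitness improves), clipping to the bounds, \(Min = 0.2\), \(Max = 1.0\), and \(\epsilon = 10^{-12}\) are assumptions of this sketch rather than details confirmed by the survey.

```python
import random


def aoa(f, lower, upper, n=30, max_iter=200, mu=0.5, theta=5.0,
        mo_min=0.2, mo_max=1.0, eps=1e-12, seed=0):
    """Minimal AOA sketch that minimizes f over the box [lower, upper]."""
    rng = random.Random(seed)
    dim = len(lower)
    # Initialization: x = d * (U - L) + L with d uniform in [0, 1).
    pop = [[rng.random() * (upper[j] - lower[j]) + lower[j] for j in range(dim)]
           for _ in range(n)]
    fit = [f(x) for x in pop]
    b = min(range(n), key=fit.__getitem__)
    best, best_fit = pop[b][:], fit[b]

    for t in range(1, max_iter + 1):
        moa = mo_min + t * (mo_max - mo_min) / max_iter               # Eq. (2)
        mopr = 1.0 - t ** (1.0 / theta) / max_iter ** (1.0 / theta)  # MoPr(t)
        for i in range(n):
            cand = []
            for j in range(dim):
                scale = (upper[j] - lower[j]) * mu + lower[j]
                if rng.random() > moa:                 # exploration, Eq. (3)
                    if rng.random() < 0.5:
                        v = best[j] / (mopr + eps) * scale   # Division
                    else:
                        v = best[j] * mopr * scale           # Multiplication
                else:                                  # exploitation, Eq. (5)
                    if rng.random() < 0.5:
                        v = best[j] - mopr * scale           # Subtraction
                    else:
                        v = best[j] + mopr * scale           # Addition
                cand.append(min(max(v, lower[j]), upper[j]))  # clip to bounds
            fc = f(cand)
            if fc < fit[i]:                            # greedy replacement (assumed)
                pop[i], fit[i] = cand, fc
                if fc < best_fit:
                    best, best_fit = cand[:], fc
    return best, best_fit


def sphere(x):
    return sum(v * v for v in x)


best, best_fit = aoa(sphere, lower=[-5.0] * 5, upper=[10.0] * 5)
print(f"best fitness after 200 iterations: {best_fit:.3e}")
```

The bounds [-5, 10] are deliberately asymmetric: with \(\mu = 0.5\) and symmetric bounds such as [-100, 100], the term \((U_j - L_j) \times \mu + L_j\) equals zero, so every update would either jump to the origin or leave the coordinate unchanged.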

3.1 The Computational Complexity of AOA

The computational complexity of AOA depends on the population size, the initialization procedure, the evaluation of the fitness function, and the updating of solutions. The complexity of the population initialization procedure is \(O\left(n\right)\), where \(n\) is the population size. Because the fitness function is problem-dependent, its complexity is not considered here. If \({M}_{Iter}\) denotes the maximum number of iterations and \(Nd\) the problem dimension, then the complexity of AOA is \(O\left(n\times {M}_{Iter}\times Nd+n\right).\)
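As a worked example of this bound, take the source paper's population size \(n = 30\) together with \(M_{Iter} = 1000\) and \(Nd = 30\) (the last two values are chosen purely for illustration):

\[ n \times M_{Iter} \times Nd + n = 30 \times 1000 \times 30 + 30 = 900{,}030 \]

so the bound counts on the order of 900,030 basic operations, excluding the cost of the fitness function itself, which is evaluated \(n \times M_{Iter} + n = 30{,}030\) times.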

Since each NIOA has its own search mechanism, it is not possible to reliably predict how long a stochastic algorithm will take to find the global optimum. This makes it extremely challenging to estimate the complexity of such methods theoretically. For this reason, as noted in the literature, CPU time measured in experiments can be used to compare NIOAs.

3.2 Parameter Sensitivity

In AOA, \(\mu\) and \(\theta\) are very significant parameters because they mainly govern the trade-off between exploration and exploitation, and maintaining a well-balanced exploration–exploitation trade-off in any NIOA is a very challenging task. In the source paper of AOA, \(\mu\) is fixed at 0.5 for both exploration and exploitation, while \(\theta\), a crucial parameter that affects the effectiveness of exploitation across iterations, is set to 5. Both values were chosen through extensive experiments: the authors tested different values of \(\mu\) and \(\theta\) and found that \(\mu =0.5\) and \(\theta =5\) produced the best results most of the time for unimodal and multimodal test functions over different dimensions. The authors also measured the performance of AOA across different dimensions and found that it performed well on both low- and high-dimensional test functions.

However, the authors did not test the effect of population size, which is also an important parameter of any NIOA. AOA performance is significantly affected by the size and composition of the initial population. In the source paper of AOA, the authors set the population size \(\left(n\right)\) to 30 for all tested benchmark functions and other problems. However, the literature shows that a single fixed population size is not well justified for all kinds of problems and dimensions; this issue is called the curse of population size [35, 36]. According to the literature, varying the population size is one type of adaptation strategy for achieving well-balanced exploration and exploitation in an NIOA. Experiments were performed in [37] under three conditions: a large population with a small number of iterations, a small population with a large number of iterations, and a large population with a large number of iterations. The findings revealed that AOA is affected by the population size and that a large population is required for the best results. Nevertheless, more in-depth research on population size is still needed.

4 Improved Variants of AOA

According to the No Free Lunch (NFL) theorem, an algorithm that outperforms another on one class of problems is not guaranteed to outperform it on all classes of problems [38]. As a result, the effectiveness and superiority of AOA and its variants depend on the problem. However, the effectiveness of AOA is also significantly influenced by algorithm design aspects, such as the recombination of and diversity among individuals and a proper balance between exploration and exploitation. Although AOA has performed effectively in different optimization fields, it suffers from premature convergence and an imperfect balance between exploration and exploitation. Therefore, researchers have developed different improved variants of AOA across several optimization fields to overcome these shortcomings. Compared with classical AOA, the improved variants are better able to handle a variety of intricate real-world optimization problems. The improvement strategies used by these variants are listed in Table 1, and the proportion of variants using each strategy is presented in Fig. 7. Research articles based on these strategies are discussed below.

4.1 Opposition Based Learning (OBL)

OBL [108] was introduced in 2005; it evaluates the current guess (estimate) and its opposite simultaneously to obtain a better solution. When the aim of a method is to find the optimal solution of an objective function, evaluating a guess and its opposite at the same time can help the algorithm perform better. In classical OBL, the opposite \(\overline{X}\) of a solution \(X\in [l, u]\) is calculated as follows:

\[ \overline{X} = l + u - X \]

Several variants of OBL have also been developed [109], including (i) random opposition-based learning (ROBL), (ii) quasi-reflection-based OBL, and (iii) archive-solution-based OBL. OBL techniques are used for three major purposes: (i) creating a high-quality initial population, (ii) creating better solutions during optimization, and (iii) performing opposition-based jumping.
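The Python sketch below illustrates classical OBL and two common variants for a box-constrained solution, together with OBL-based initialization (purpose (i) above). The quasi-opposite and quasi-reflected definitions used here (a uniform draw between the interval centre \(c = (l+u)/2\) and, respectively, the opposite point or the current point) are the commonly cited forms and are assumptions with respect to the exact formulations in [109]; all function names are hypothetical.

```python
import random


def opposite(x, lower, upper):
    """Classical OBL: the opposite of x in [l, u] is l + u - x (per dimension)."""
    return [lo + hi - v for v, lo, hi in zip(x, lower, upper)]


def quasi_opposite(x, lower, upper, rng=random):
    """Uniform point between the centre c = (l + u) / 2 and the opposite point."""
    out = []
    for v, lo, hi in zip(x, lower, upper):
        c, xo = (lo + hi) / 2.0, lo + hi - v
        out.append(rng.uniform(min(c, xo), max(c, xo)))
    return out


def quasi_reflected(x, lower, upper, rng=random):
    """Uniform point between the centre c = (l + u) / 2 and x itself."""
    out = []
    for v, lo, hi in zip(x, lower, upper):
        c = (lo + hi) / 2.0
        out.append(rng.uniform(min(c, v), max(c, v)))
    return out


def obl_init(f, n, lower, upper, rng=random):
    """OBL initialization: keep the n fittest of a random population plus its opposites."""
    pop = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)] for _ in range(n)]
    union = pop + [opposite(x, lower, upper) for x in pop]
    return sorted(union, key=f)[:n]


def sphere(x):
    return sum(v * v for v in x)


rng = random.Random(7)
print(opposite([1.0, 2.0], [0.0, 0.0], [10.0, 10.0]))  # [9.0, 8.0]
population = obl_init(sphere, 10, [-5.0] * 2, [5.0] * 2, rng)
```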

OBL and its variants have also been incorporated into AOA and have proved to be an effective improvement strategy. Classical OBL [71], quasi-reflection-based OBL [76], and chaotic quasi-reflection-based OBL [81] have been used to improve AOA. Izci et al. [71] proposed a new hybrid metaheuristic algorithm that improves the effectiveness of AOA by applying a modified opposition-based learning mechanism (mOBL). In experiments on benchmark functions, the proposed method produced better results than the other tested algorithms and the original AOA. Zivkovic et al. [76] presented a new algorithm, HAOA, which fuses AOA and SCA with a quasi-reflection-based learning (QRL) approach to overcome the limitations of the original AOA. The technique begins with an AOA search operation; if the solution does not improve after several iterations, it switches to the SCA search technique, which is governed by an auxiliary trial parameter. If the solution still does not improve, it is eventually replaced by its quasi-reflective opposite solution, achieving a better balance between exploration and exploitation during optimization. HAOA was tested on the CEC2017 benchmark functions and compared with other state-of-the-art metaheuristics, and the results demonstrate that HAOA performed well compared with the other algorithms. Turgut et al. [81] proposed a chaotic quasi-reflection-based AOA for the thermo-economic optimization of a shell and tube condenser operating with refrigerant mixtures. The method was used to determine the minimum annual cost of the condenser by iteratively adjusting nine decision variables.
Through tests on benchmark functions and comparisons with AOA and its quasi-oppositional variant, the proposed method found the best configuration for the shell and tube condenser in a reasonable amount of time.

4.2 Hybridization Based AOA Variants

Hybridization is one of the most notable and effective techniques for improving the ability of NIOAs. It helps improve the exploration–exploitation balance and enables algorithms to incorporate problem-specific knowledge. Hybrid AOA variants can be categorized into three classes based on the implementation approach: (i) entire-NIOA-based hybridization, (ii) NIOA operator-based hybridization, and (iii) the Eagle strategy.

In entire-NIOA-based hybridization, the participating NIOAs run independently but share information between their populations during the optimization process. It is the simplest form of hybridization and exploits the strengths of several NIOAs to find the optimal solution.

In NIOA operator-based hybridization, operators of one NIOA are incorporated into another NIOA to achieve a deep fusion between the algorithms and enrich their search capability.

Yang and Deb's Eagle strategy (ES) [110] is a two-stage optimization technique that combines extensive exploration with intensive exploitation through a well-balanced combination of several techniques. In the first stage, ES searches the entire search space for promising solutions using an effective global search technique. The promising solutions found in this global search stage are then refined with a more efficient local optimizer. After that, the two-stage procedure begins again with fresh global exploration, followed by a local search in a new or more promising region. A brief summary of the hybridized variants of AOA is presented in Table 2.
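A minimal Python sketch of the two-stage Eagle strategy loop is shown below. For brevity, uniform random sampling stands in for the global search stage (Yang and Deb used a Lévy-flight random search) and a Gaussian hill climber with a shrinking step stands in for the local optimizer; both choices, the function name `eagle_strategy`, and all parameter values are illustrative assumptions.

```python
import math
import random


def eagle_strategy(f, lower, upper, cycles=20, global_samples=50,
                   local_steps=100, step0=0.5, seed=1):
    """Two-stage Eagle-strategy-style search for minimizing f in a box."""
    rng = random.Random(seed)

    def clip(x):
        return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]

    best, best_fit = None, math.inf
    for _ in range(cycles):
        # Stage 1: global exploration over the whole search space.
        samples = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
                   for _ in range(global_samples)]
        x = min(samples, key=f)
        fx = f(x)
        # Stage 2: intensive local exploitation around the promising point.
        step = step0
        for _ in range(local_steps):
            y = clip([v + rng.gauss(0.0, step) for v in x])
            fy = f(y)
            if fy < fx:
                x, fx = y, fy
            else:
                step *= 0.95  # shrink the step after an unsuccessful move
        if fx < best_fit:
            best, best_fit = x, fx
    return best, best_fit


def sphere(x):
    return sum(v * v for v in x)


best, best_fit = eagle_strategy(sphere, [-5.0] * 3, [5.0] * 3)
print(best_fit)
```

In a hybrid AOA built on this scheme, AOA would typically take one of the two stage roles; the sketch only shows the alternation itself.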

4.3 Lévy Flight-Based Strategies

Lévy flight (LF), a search mechanism in metaheuristic optimization algorithms, has become increasingly popular in recent years as a means of solving challenging real-world problems. In an LF-based strategy, the search process is modeled as a random walk whose step lengths follow a Lévy probability distribution. This distribution has "heavy tails", which means that large steps occur with a higher probability than in a Gaussian random walk. This property can be useful for escaping local optima and exploring different areas of the search space. LF-based algorithms have been found to produce better or equivalent results compared with their non-LF counterparts, and they are therefore expected to be more useful in scenarios where no prior knowledge is available, the targets are difficult to determine, and the targets are sparsely distributed. LF has been used as a substitute for the Gaussian distribution (GD) to introduce randomization into metaheuristic optimization algorithms, and it also appears in a wide range of biological, chemical, and physical processes. Azizi et al. [86] presented a technique in which AOA was used as the primary optimization algorithm to enhance fuzzy controllers for steel structures with nonlinear characteristics. An enhanced variant, IAOA, was also proposed to increase the performance of AOA; LF is integrated into the main loop of IAOA as part of a new parameter identification. IAOA and AOA were used to optimize the membership functions and rule base of the fuzzy controllers employed in a large structural framework, and the results show that the improved method can produce highly competitive solutions. Zhang et al. [87] proposed an enhanced AOA, EAOA, based on Lévy variation and differential sorting variation to increase the overall optimization capability and address AOA's limitations.
The Lévy variation increases population diversity, broadens the search space, strengthens global search, and improves computational accuracy. The differential sorting variation eliminates the best search agent, prevents search stagnation, improves the effectiveness of local search, and accelerates convergence. The experimental findings demonstrate that, compared with other algorithms, EAOA has superior exploration and exploitation capability for obtaining the global optimal solution. Abualigah et al. [79] presented an Augmented AOA (AAOA) based on OBL and the LF distribution to improve AOA's exploration and exploitation. The results of this study show that adding OBL and LF enhances the performance of conventional AOA and yields notable results compared with other metaheuristic algorithms. The same technique was also used in [80] for efficient text document clustering; it was tested on several UCI datasets for text clustering and evaluated on the CEC 2019 benchmark functions, and the findings demonstrate that it yields better outcomes than the other examined procedures. To improve the exploitation capability of AOA throughout the search process, Abualigah et al. [58] proposed the AOASC technique, which uses the operators of the sine–cosine algorithm and the LF distribution. As a result, the convergence rate of the proposed method toward the optimal solution increased, and it outperformed other state-of-the-art metaheuristic algorithms in terms of the performance metrics.
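Lévy-distributed step lengths are commonly generated with Mantegna's algorithm; the Python sketch below uses it with the usual index \(\beta = 1.5\). The perturbation rule in `levy_move` and its scale factor `alpha` are hypothetical illustrations, not the update used by any specific AOA variant surveyed here. The final lines compare how often a Lévy step and a standard Gaussian step exceed a magnitude of 3, which illustrates the heavy tail.

```python
import math
import random


def levy_step(beta=1.5, rng=random):
    """One Lévy-distributed step via Mantegna's algorithm (0 < beta < 2)."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)   # numerator ~ N(0, sigma_u^2)
    v = rng.gauss(0.0, 1.0)       # denominator ~ N(0, 1)
    return u / abs(v) ** (1 / beta)


def levy_move(x, best, alpha=0.01, beta=1.5, rng=random):
    """Hypothetical LF perturbation of x relative to the best solution."""
    return [xi + alpha * levy_step(beta, rng) * (xi - bi) for xi, bi in zip(x, best)]


rng = random.Random(0)
N = 10_000
levy_big = sum(abs(levy_step(rng=rng)) > 3 for _ in range(N)) / N
gauss_big = sum(abs(rng.gauss(0.0, 1.0)) > 3 for _ in range(N)) / N
print(f"P(|step| > 3): Levy ~ {levy_big:.3f}, Gaussian ~ {gauss_big:.4f}")
```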

4.4 Chaotic Random Number-Based Strategies

Chaotic random number-based strategies for metaheuristic optimization algorithms use chaotic systems, which exhibit seemingly random behavior but are actually deterministic. These systems are characterized by sensitivity to initial conditions, also known as the butterfly effect: small changes in the starting conditions can cause large changes in the system's behaviour over time. The idea behind using chaotic systems in optimization is that their chaotic behaviour can supply pseudo-random numbers that explore the search space more effectively. This is done by iterating a chaotic system to generate a sequence of numbers, which are then used in place of random numbers in the optimization algorithm. Because chaotic sequences depend strongly on the initial conditions, they can help prevent the algorithm from falling into local optima and can accelerate convergence. Owing to these advantages, chaotic maps are useful in the development of evolutionary algorithms. Xu et al. [88] proposed an enhanced variant of the Extreme Learning Machine (ELM) paradigm by developing a novel enhanced metaheuristic, the developed AOA (dAOA), for the detection of proton exchange membrane fuel cells. Chaos theory is employed to improve AOA's efficiency and overcome the local optima trapping problem, helping the algorithm escape local optima and converge faster. The chaotic arithmetic optimization algorithm (CAOA) was presented by Li et al. [89] to improve the balance between exploration and exploitation during the optimization process and to increase convergence accuracy. In CAOA, ten chaotic maps are embedded independently into the two parameters MOA and math optimizer probability (MoPr), which control AOA's exploration and its exploration–exploitation balance. Twenty-six benchmark functions from CEC-BC-2017 are used to evaluate the efficiency of CAOA.
The experimental findings showed that CAOA can effectively address engineering optimization and function optimization problems. A similar method was proposed by Aydemir [90] to improve AOA's effectiveness and help overcome the problem of local optima trapping; the results reveal that the resulting CAOA outperforms the original AOA in solving optimization problems. To improve AOA's capability for both global search and local exploitation, Li et al. [91] proposed a Lorentz triangular search variable step coefficient based on broad-spectrum trigonometric functions combined with the Lorentz chaotic mapping approach, which includes a total of twenty-four search operations in four different categories. The resulting position update improved the accuracy and convergence speed of the algorithm, and experiments on benchmark functions showed that the method outperforms other well-known metaheuristic methods.
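A minimal sketch of a chaotic number source is given below, using the logistic map \(x_{k+1} = 4x_k(1 - x_k)\), one of the simplest chaotic maps. Feeding its output to the algorithm in place of uniform draws (for example, for \(r_1\), \(r_2\), \(r_3\)) is one way such maps are plugged in; the ten maps of [89] and the exact way they are embedded into MOA and MoPr are not reproduced here, and the generator name is hypothetical. The last lines show the sensitivity to initial conditions: two seeds differing by \(10^{-10}\) soon produce completely different sequences.

```python
from itertools import islice


def logistic_stream(x0=0.7, r=4.0):
    """Yield an endless logistic-map sequence x_{k+1} = r * x_k * (1 - x_k).

    With r = 4 and a generic seed in (0, 1) the sequence is chaotic and stays
    in [0, 1]; seeds such as 0, 0.25, 0.5, 0.75 or 1 end on fixed points.
    """
    x = x0
    while True:
        x = r * x * (1.0 - x)
        yield x


# Use chaotic values where the algorithm would otherwise call a uniform RNG.
chaos = logistic_stream(0.7)
r1, r2, r3 = next(chaos), next(chaos), next(chaos)

# Sensitivity to initial conditions (the "butterfly effect").
a = list(islice(logistic_stream(0.7), 60))
b = list(islice(logistic_stream(0.7 + 1e-10), 60))
print(max(abs(p - q) for p, q in zip(a, b)))
```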

4.5 Other Probability Density Functions-Based Strategies

The combined economic emission dispatch (CEED) problem was addressed by Hao et al. [92] using a probability distribution AOA based on variable order penalty functions. The variation trend of the MOA in the original AOA was suggested to be replaced with the energy attenuation technique, which more evenly balances the AOA's exploitation and exploration. The control parameter was changed to five different probability distribution functions in order to improve the system's exploratory capacity, rate of convergence, and capability of eluding the local optimal. Through test experiments on CEC-BC-2017 benchmark functions, experimental findings demonstrate that the probability distribution-based AOA solves CEED problems superior than other algorithms, and the variable order penalty approach increases search efficiency and convergence speed compared to the fixed penalty approach. Influenced by various initialization techniques, Agushaka et al. [93] presented an enhanced variant of AOA, namely nAOA, based on a probability distribution function. The initialization circumstances addressed include population sizes, population variability, and the number of iterations. Twenty-three different probability distributions with varying degrees of diversity were utilised in order to investigate the impact of initialization techniques on the efficiency and precision of the nAOA. Performance of the suggested method was compared to that of seven other well-known metaheuristic methods, and the findings indicate that it can show promise in resolving all issue scenarios while utilizing the best initialization procedures. Abualigah et al. [78] suggested a method called GNDAOA by fusing three elements: AOA, generalised normal distribution optimization, and OBL in order to address the large and difficult issues. 
In order to solve the main drawbacks of the original AOA approach, these features are used based on a new transitions technique to organize the executions of the applicable methods throughout the optimization procedure. The presented method outperformed other widely used approaches, and it got the finest outcome in 93% of the tested cases of the 23 benchmark functions. Being a metaheuristic algorithm, AOA’s performance is influenced by the size of population and the iterations number. In order to handle this issue, Agushaka et al. [37] presented various initialization methods to enhance the effectiveness of AOA. The numerical results showed that AOA is susceptible to size of the population and requires a large size of the population for optimum solution. In addition to that, various distribution functions were incorporated to initialize the AOA, and the result showed that, out of the various distribution functions, beta distribution leads the AOA's performance to find the global optima. Emre Celik [55] presented an enhanced version of AOA based on information exchange, GD, and quasi-opposition learning to address issues like local optima trapping and slow convergence rates. At first, information sharing was introduced among the search agents. Then, a reasonable method based on the GD was used to explore promising options in the vicinity of the optimal and current solutions. Finally, quasi-opposition learning was employed to boost the possibility of getting close to the global solution. The efficacy of the proposed method was evaluated using twenty-three standard benchmark functions, ten CEC2020 test functions, and one real-life engineering design. The result demonstrates that the proposed technique achieved a higher accuracy and convergence speed in comparison to the original AOA. Ates et al. [94] presented a distribution function-based AOA for global optimization. 
Instead of the uniform distribution, the random coefficients in their study are drawn from various other distribution functions. The method's efficiency was assessed on a set of global optimization benchmarks and real-world engineering applications, and the half-normal and exponential distribution functions were observed to yield higher efficiency.

4.6 Other Arithmetic Operators

To improve convergence during both the exploration and exploitation phases, Panga et al. [95] presented an enhanced AOA that incorporates square, cube, sine, and cosine functions into the algorithm's stochastic scaling coefficients. The method was compared with the conventional AOA on several benchmark functions, and the numerical findings showed that the sine and cosine variants achieved the best mean, best, and standard deviation values. Agushaka et al. [96] proposed the nAOA algorithm, which exploits the high-density values that the natural logarithm and exponential operators can provide to improve AOA's exploration capability. Exploitation is performed by the addition and subtraction operators, and the beta distribution is used to initialize the candidate solutions. The technique was assessed using thirty benchmark functions and three engineering design benchmarks; nAOA performed better on the benchmark functions than AOA and nine other popular methods and was second only to the grey wolf optimizer (GWO). Hao et al. [97] presented an enhanced variant of AOA based on elementary function disturbances to solve economic load dispatch (ELD) problems. Six elementary functions were adopted, and adding the disturbance produced by each of them to the MOA and MOP parameters balances AOA's exploration and exploitation more effectively, improves its global search capability, prevents it from being trapped in local optima, and boosts the convergence rate. Comparing the original AOA with the six variants on 23 benchmark functions from CEC-2005, the improved AOA was found to provide the best outcome for solving ELD problems. By combining the essential arithmetic operators with the fundamental trigonometric functions, Selvam et al. [98] proposed a new arithmetic-trigonometric optimization algorithm (ATOA). The operators are integrated in several ways using combined permutations of the sin, cos, and tan functions. Experiments on 33 benchmark functions showed that the resulting techniques perform better than traditional algorithms. Devan et al. [99] proposed a hybrid ATOA for complicated and continually growing real-time problems using several trigonometric functions. By combining SCA and AOA with the trigonometric functions sin, cos, and tan, the method increases convergence speed and widens the optimum search area during the exploration and exploitation phases. Thirty-three benchmark functions were used to assess the hybrid ATOA, and the findings demonstrated that it reached the global minima faster, in fewer iterations.

4.7 Population Based Strategies

Although AOA has performed well compared with other cutting-edge metaheuristic algorithms, it still suffers from drawbacks including poor exploitation capability, a propensity to become trapped in local optima, and a slow convergence rate in a wide range of applications. Initial population control is vital for the efficacy of optimization algorithms and assists in identifying the global optimum. As a metaheuristic, AOA's performance is influenced by the population size and the number of iterations. To handle this issue, Agushaka et al. [37] presented various initialization methods to improve the performance of AOA. Experiments were performed under three conditions: a large population with a small number of iterations, a small population with a large number of iterations, and a large population with a large number of iterations. The numerical findings demonstrated that AOA is sensitive to population size and needs a large population for optimum efficiency. In addition, various distribution functions were used to initialize AOA, and among them the beta distribution led AOA to the global optimum most effectively. To address the drawbacks of the original AOA, Agushaka et al. [96] introduced an enhanced variant, nAOA, which initializes the candidate solutions with the beta distribution. The method was evaluated on 30 benchmark functions, and the results demonstrate that generating the initial population with the beta distribution greatly enhances performance compared with the other tested algorithms. To examine how initialization methods affect the convergence rate and accuracy of nAOA, the same authors used 23 distinct probability density functions (PDFs) with varying degrees of diversity in [93].
The findings demonstrated that nAOA is sensitive to both population size and iteration count, both of which must be high for best performance. It was also noted that, for the majority of test functions, the various initialization techniques did not significantly affect the efficiency of nAOA. However, for most functions, the beta distribution variant Beta(3, 2) gives the optimum solution. Hu et al. [48] proposed an improved hybrid AOA called CSOAOA that incorporates a point-set strategy, an optimal neighbourhood learning approach, and the crisscross method. First, a good point set initialization technique improves convergence by creating a better initial population. Then, the optimal neighbourhood learning technique guides the algorithm, increasing search efficiency and computation accuracy by preventing it from settling into the current local optimum. Finally, merging the crisscross optimization method with AOA boosts the method's exploration and exploitation capabilities. The experimental findings demonstrated that the method performed better than the compared methods in terms of precision, convergence rate, and solution quality. To solve numerical optimization problems, Chen et al. [100] presented an improved AOA (IAOA) based on a population control mechanism. By categorizing the population and dynamically regulating the number of individuals in each subpopulation, the information of every individual can be used efficiently. This accelerates the search for the optimal value, prevents the algorithm from becoming trapped in a local optimum, and increases solution accuracy. Six systems of nonlinear equations, 10 integration problems, and several engineering problems were used to assess IAOA.
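The beta initialization reported in [93, 96] can be illustrated with a minimal Python sketch. It assumes that each coordinate is obtained by linearly scaling a Beta(a, b) sample into the variable bounds; the function name `init_population` and this scaling are illustrative assumptions, not the authors' code.

```python
import random

def init_population(n_pop, lb, ub, a=3.0, b=2.0, seed=None):
    """Create n_pop candidate solutions inside [lb, ub] from Beta(a, b) samples.

    Hypothetical helper: the linear map lb + s * (ub - lb) is an assumption.
    """
    rng = random.Random(seed)
    population = []
    for _ in range(n_pop):
        # One Beta(a, b) draw per dimension, rescaled from [0, 1] to [lb_j, ub_j]
        population.append([l + rng.betavariate(a, b) * (u - l) for l, u in zip(lb, ub)])
    return population

pop = init_population(50, [-100.0] * 30, [100.0] * 30, seed=1)
```

With Beta(3, 2) the samples have mean 3/5, so the initial population is skewed toward the upper part of each interval; Beta(1, 1) recovers the uniform initializer of the classical AOA.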
The findings of this study reveal that the suggested approach outperforms other algorithms in terms of accuracy, convergence rate, consistency, and robustness.

4.8 Information Sharing

One of the most significant issues with AOA is becoming stuck in a local optimum. This limitation has been addressed by incorporating a variety of other strategies, one of which is information sharing among the search agents, which has proven to be an excellent option in the search for the global optimum. Because AOA's search agents adjust their positions only relative to the best solution obtained so far, the search is highly likely to stagnate at a local optimum. Emre Celik [55] presented an enhanced version of AOA based on information exchange, GD, and quasi-opposition learning to overcome local optima trapping. To promote diversity within the swarm and alleviate premature convergence, an information-sharing mechanism was enabled among all individuals. The method was assessed using 23 standard benchmark functions, ten CEC2020 test functions, and one real-life engineering design problem. The results demonstrate that it outperforms the original AOA and the other tested algorithms in terms of accuracy and convergence rate and helps to find the global optimum. An improved hybrid AOA, CSOAOA, was proposed by Hu et al. [48], which combines a point set approach with an optimal neighbourhood learning strategy and a crisscross technique. First, a high-quality initial population is obtained through a good point set initialization technique, which increases the convergence speed of the algorithm. To further enhance search efficiency and calculation accuracy, the optimal neighbourhood learning approach directs each individual's search behaviour and prevents the algorithm from settling into the present local optimum. Finally, the exploration and exploitation capabilities of the crisscross algorithm were integrated to boost the algorithm's search efficacy.
In terms of accuracy, convergence time, and solution quality, the experiment's findings demonstrate that CSOAOA performs better than the comparative algorithms.

4.9 Other Utilized Strategies to Improve AOA

In addition to the aforementioned methods, several other strategies have been proposed to enhance AOA. Abualigah et al. [54] proposed an improved AOA called MCAOA by combining the marine predators algorithm (MPA) and an ensemble mutation strategy (EMS). MPA and EMS improve AOA's convergence speed and the balance between its exploration and exploitation. The approach was examined using seven typical engineering design cases, 16 feature selection problems, and 23 benchmark functions. The findings demonstrate that it achieved the best solutions for numerous challenging problems compared with other cutting-edge methods.

Fang et al. [101] presented an enhanced variant of AOA to optimize the parameters of a support vector machine (SVM). First, dynamic inertia weights are applied to improve the algorithm's exploration and exploitation and to speed up convergence. Second, dynamic mutation probability coefficients and a triangular mutation strategy are introduced to improve the algorithm's ability to avoid local optima. The technique was assessed on six benchmark functions, and the experimental results revealed strong global search capability, considerably improved optimization ability, and excellent performance in SVM parameter optimization.

Liu et al. [102] presented an enhanced AOA that employs a multi-strategy approach to address the shortcomings of AOA. First, circle chaotic mapping is employed to increase population diversity. To improve convergence, the MOA function is redefined using a composite cycloid, whose symmetry is also used to balance the global search capability between early and late iterations. Finally, an optimal mutation strategy that combines a sparrow elite mutation technique with Cauchy disturbances increases individuals' ability to escape local optima. Test experiments on 20 benchmark functions demonstrate that the algorithm has better global search ability and converges faster than the other tested algorithms.
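Circle chaotic mapping, as used for initialization in [102], can be sketched as follows. The circle-map form and the values a = 0.5, b = 0.2, and x0 = 0.7 are common choices in the metaheuristics literature and are assumptions here; [102] may use different settings, and both helper names are hypothetical.

```python
import math

def circle_map_sequence(n, x0=0.7, a=0.5, b=0.2):
    """Return n iterates of x_{k+1} = (x_k + b - a / (2*pi) * sin(2*pi*x_k)) mod 1."""
    seq, x = [], x0
    for _ in range(n):
        x = (x + b - (a / (2.0 * math.pi)) * math.sin(2.0 * math.pi * x)) % 1.0
        seq.append(x)
    return seq

def chaotic_population(n_pop, lb, ub, x0=0.7):
    """Fill an n_pop x dim population by scaling consecutive circle-map values into [lb, ub]."""
    dim = len(lb)
    values = circle_map_sequence(n_pop * dim, x0)
    population = []
    for i in range(n_pop):
        chunk = values[i * dim:(i + 1) * dim]
        population.append([l + c * (u - l) for c, l, u in zip(chunk, lb, ub)])
    return population
```

Unlike a seeded pseudo-random generator, the sequence is fully determined by x0, so the same starting value always reproduces the same initial population.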

Zheng et al. [103] introduced an enhanced AOA incorporating a forced switching mechanism (FSM) to improve the search ability of the original AOA. To increase population diversity and improve global search, the modified method uses a random math optimizer probability (RMOP). The FSM is then added to help the search agents leave local optima: it forces search agents to engage in exploratory behaviour when they repeatedly fail to find better positions, so that cases of becoming trapped in local optima can be avoided. The method was evaluated using 23 benchmark functions and 10 test functions from CEC2020 and compared with AOA and various well-known optimization methods. The experimental findings demonstrate that it outperforms the comparison algorithms on most test functions.

Peng et al. [104] developed an enhanced AOA named PSAOA that uses a probabilistic search strategy to improve the search quality of the original AOA. In addition, an adjustable parameter is designed to regulate the exploration and exploitation operations, and a jump strategy is built into PSAOA to help individuals escape local optima. PSAOA was assessed on 29 benchmark functions and compared with other algorithms, and the experimental results demonstrated that it outperforms the comparison algorithms on most test functions.

Wang et al. [105] introduced an adaptive parallel arithmetic optimization algorithm (APAOA) with a novel parallel communication strategy for robot path planning, aiming to find a collision-free optimal path in an environment containing obstacles. The proposed adaptive equation is based on the fitness values of the particles. Owing to this adaptive equation, the algorithm can better balance the search abilities of the exploration and exploitation phases and avoid settling on a local optimum. More generally, population-based optimization algorithms can combine numerous techniques to modify their primary procedure and enhance optimization efficiency.

Liu et al. [106] proposed an enhanced AOA using a hybrid elite pool strategy to address the drawbacks of AOA. The MOA function is redesigned to balance global exploration and local exploitation. To improve search efficiency, the hybrid elite pool combines search approaches with different strengths and allows them to support one another. The algorithm was evaluated using 28 benchmark functions and two engineering problems, and the results demonstrate that AOA with the redesigned MOA converges faster and escapes local optima more readily.

Hu et al. [48] developed CSOAOA, an upgraded hybrid AOA with a point set strategy, an optimal neighbourhood learning approach, and a crisscross strategy. First, a good point set initialization technique improves convergence by creating a better initial population. The optimal neighbourhood learning technique guides each individual's search behaviour and prevents the algorithm from getting stuck in the current local optimum, enhancing search efficacy and computation accuracy. Finally, CSOAOA improves its exploration and exploitation capabilities by combining AOA with the crisscross optimization method.

Masdari et al. [107] presented a binary quantum-inspired AOA (BQAOA) for computational task offloading decisions on mobile devices (MDs), with low computational cost and assured convergence. Because task offloading is an NP-hard problem, it is essential to choose strategies that are most likely to produce good outcomes for a range of quality criteria, including energy consumption and response time. The benefits of AOA and quantum computing were combined to enhance MD performance, and the results demonstrate that BQAOA performed well compared with other cutting-edge heuristic and metaheuristic algorithms.

4.10 Utilization of AOA’s Operators

In addition, AOA's operators have been used by other NIOAs, such as the flow direction algorithm (FDA) [115] and the moth flame optimizer (MFO) [116], to improve their performance. FDAOA enhances the original FDA by employing the arithmetic operators used in AOA. Its primary goal is to overcome the shortcomings of the original technique, such as being stuck in local optima, premature convergence, and a weak balance between exploration and exploitation. FDAOA was assessed on 23 benchmark functions, and the findings indicated noteworthy outcomes compared with the other techniques on the tested problems. For optimal data clustering, MFOAOA combines MFO and AOA to determine the optimal centroids. Optimal clustering of data in a dynamic system produces the most relevant and actionable insights for decision-making. The method was assessed using Friedman ranking and Wilcoxon rank tests, and the results demonstrated that combining these two techniques greatly enhances overall performance.

5 Binary Variants of AOA

Discrete problems are a vital area of study in optimization. Unlike continuous problems, the decision space of discrete problems is not continuous, and a small shift in a single dimension of the solution can have a large effect in the objective space. AOA's algorithmic base and encoding techniques must therefore be adapted for discrete problems, and researchers have produced several discrete variants of AOA. While the core mathematical principles of AOA have remained constant, researchers have mostly modified the encoding, translating or interpreting every dimension of the candidate solution as a binary value. For instance, binary encoding binarizes the candidate solutions while maintaining the algorithmic foundation of the original AOA and modifies the operator formulas to support binary operations.

Dahou et al. [117] and Bansal et al. [118] developed binary versions of AOA and employed them for feature selection. In [117], a convolutional neural network (CNN) was used to learn and extract features from the input data, and the binary AOA was used to select the best features for human activity recognition. In [118], binary AOA variants with S-shaped (sigmoid) and V-shaped (hyperbolic tangent) transfer functions were designed and named BAOA-S and BAOA-V; both were used to select the optimal feature set from traditional handcrafted features and CNN-based features. BAOA was also applied to uncapacitated facility location problems [119]. A binary quantum AOA was developed in [107] and applied to mobile edge computing.
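The S-shaped and V-shaped binarization behind BAOA-S and BAOA-V [118] can be illustrated with the sketch below. It assumes the common transfer-function conventions from the binary metaheuristics literature: with the S-shaped rule a bit is set to 1 with probability sigmoid(x), and with the V-shaped rule the current bit is flipped with probability |tanh(x)|. Whether [118] applies exactly these rules is an assumption, and the function names are hypothetical.

```python
import math
import random

def s_shaped(x):
    # Sigmoid transfer function; x is clamped so math.exp cannot overflow
    x = max(-50.0, min(50.0, x))
    return 1.0 / (1.0 + math.exp(-x))

def v_shaped(x):
    # |tanh(x)|: the probability depends on the magnitude of x, not its sign
    return abs(math.tanh(x))

def binarize_s(position, rng):
    # S-shaped rule: each bit becomes 1 with probability S(x_j)
    return [1 if rng.random() < s_shaped(x) else 0 for x in position]

def binarize_v(position, current_bits, rng):
    # V-shaped rule: flip the current bit with probability V(x_j), otherwise keep it
    return [1 - bit if rng.random() < v_shaped(x) else bit
            for x, bit in zip(position, current_bits)]

rng = random.Random(42)
mask = binarize_s([0.3, -2.0, 1.5, 0.0], rng)
selected_features = [j for j, bit in enumerate(mask) if bit == 1]
```

In a feature selection setting, the indices of the 1-bits form the candidate feature subset that the fitness function (for example, classifier accuracy) evaluates.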

Binary AOA still has numerous issues. First, rather than discretizing the AOA formulas, most studies simply convert continuous AOA to binary AOA by mapping the continuous values of the feasible solutions to binary values with a conversion (transfer) function. Since continuous and discrete spaces are essentially different, this approach is simple but ineffective: in continuous space, a local region can be formed by making minor adjustments to the candidate solution over several dimensions, whereas in discrete space the local region is difficult to describe, and a tiny change in one dimension of a candidate solution can cause a large shift in the objective value.

6 Multi-Objective AOA

In contrast to typical single-objective optimization, multi-objective optimization requires several objectives to be met simultaneously. A single optimal solution is difficult to find because the objectives are fundamentally conflicting: improving one objective degrades others. Instead, the objectives must be traded off against each other to reach the best overall compromise. As a result, multi-objective optimization is a difficult undertaking in the optimization discipline. Because of AOA's exceptional performance on single-objective problems, numerous studies have attempted to extend it to multi-objective problems.

Premkumar et al. [120] designed a multi-objective AOA (MOAOA) based on elitist non-dominated sorting and a crowding distance mechanism and found promising results on the CEC-2021 constrained optimization problems. Khodadadi et al. [121] developed an archive-based MOAOA to find non-dominated Pareto-optimal solutions; the experimental results showed that it produced competitive outcomes compared with MOPSO, MSSA, MOALO, NSGA2, and MOGWO on CEC-2020. To improve the configuration of a hybrid system under various strategies, Li et al. [122] created an MOAOA employing non-dominated sorting, mutation procedures, and an external archive mechanism. Bahmanyar et al. [123] used an MOAOA to determine the optimal scheduling of household appliances, reducing daily electricity bills and the peak-to-average ratio (PAR) while accounting for user comfort (UC). MOAOA's results were competitive with those of MOPSO, MOGWO, and MOALO.
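Elitist non-dominated sorting with crowding distance, the mechanism mentioned for [120], is the NSGA-II-style machinery sketched below for minimization. The sketch peels off successive Pareto fronts with a simple quadratic dominance check rather than the bookkeeping of the fast non-dominated sort; it illustrates the general technique and is not the MOAOA implementation.

```python
def dominates(a, b):
    # a dominates b (minimization): no worse in every objective, strictly better in one
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def non_dominated_fronts(objs):
    """Split solution indices into successive Pareto fronts (front 0 = non-dominated)."""
    remaining = list(range(len(objs)))
    fronts = []
    while remaining:
        front = [i for i in remaining
                 if not any(dominates(objs[j], objs[i]) for j in remaining if j != i)]
        fronts.append(front)
        remaining = [i for i in remaining if i not in front]
    return fronts

def crowding_distance(objs, front):
    """Crowding distance of each index in one front; boundary points get infinity."""
    dist = {i: 0.0 for i in front}
    for m in range(len(objs[front[0]])):
        ordered = sorted(front, key=lambda i: objs[i][m])
        lo, hi = objs[ordered[0]][m], objs[ordered[-1]][m]
        dist[ordered[0]] = dist[ordered[-1]] = float("inf")
        if hi == lo:
            continue  # all equal in this objective: no spread information
        for k in range(1, len(ordered) - 1):
            gap = objs[ordered[k + 1]][m] - objs[ordered[k - 1]][m]
            dist[ordered[k]] += gap / (hi - lo)
    return dist
```

In elitist selection, the next population is filled front by front, and ties within the last admitted front are broken in favour of larger crowding distance, which preserves spread along the Pareto front.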

Although MOAOA has produced exceptional outcomes, only a few works and applications have been reported, so more researchers should apply AOA to multi-objective optimization problems. Experiments show that AOA outperforms similar techniques in terms of search multi-directionality, exploration, exploitation, and convergence rate; applying AOA to multi-objective optimization problems is therefore a promising research topic. Research on MOAOA is still scarce, and more is required.

7 Application Areas of AOA and Its Variants

Since its introduction in 2021, AOA and its variants have been employed to solve various problems in different application areas. The application areas are presented in Table 3.

8 Evaluation of AOA in Image Clustering Field

Table 3 shows that AOA and its improved variants have been applied in several domains, including data and text clustering. Although AOA and its variants have been used to solve multi-level thresholding-based image segmentation problems, AOA has not been applied to image clustering, which is an efficient approach to image segmentation. Therefore, this study performs image segmentation experiments using AOA-based fast fuzzy clustering, in which the image histogram rather than every pixel of the image is clustered.

8.1 Histogram Based Fast Fuzzy Clustering

Histogram-based fast fuzzy clustering was developed in [171, 172] and provides excellent results on different kinds of images. FCM has an enormous time complexity (TC) because it considers every pixel of the image, and modern images are large. The TC of FCM is \(O(N\times c\times d\times t)\), where \(N\), \(c\), \(t\), and \(d\) are the number of pixels, clusters, iterations, and dimensions, respectively. To overcome this issue, segmentation in this study is performed on the gray-level histogram rather than on the image pixels. The objective function of histogram-based FCM (HBFCM) may then be written as:

\[J_{m}=\sum_{k=1}^{c}\sum_{i=1}^{L}\gamma_{i}\,u_{ki}^{m}\,\Vert g_{i}-z_{k}\Vert^{2},\]

where \(\gamma_{i}\) is the number of pixels with gray level \(g_{i}\).

Here, \(L\) and \(c\) are the number of gray levels and clusters, respectively; \(u_{ki}\) is the membership of gray level \(g_{i}\) in the \(k\)th cluster, and \(m\) is the fuzziness exponent. \(\Vert\cdot\Vert\) is an inner-product-induced norm in \(d\) dimensions that measures the distance between gray level \(g_{i}\) and the \(k\)th cluster center \(z_{k}\).

After the gray levels are clustered, the membership matrix \(U=[u_{ki}]^{c\times L}\) is obtained. Finally, the membership matrix \(U'=[u'_{kj}]^{c\times N}\) for all pixels of the image \(f\) is obtained from \(U\) by the following expression:

\[u'_{kj}=u_{ki}\quad\text{if } f(x,y)=g_{i},\]

where \(j\) denotes the pixel located at \((x,y)\).

Here, \(f(x,y)\) is the intensity at \((x,y)\), so a pixel with intensity \(g_{i}\) inherits the memberships of gray level \(g_{i}\). The TC of HBFCM is \(O(L\times c\times d\times t)\); since \(L\ll N\), the TC is greatly reduced. The procedure of AOA-based fast fuzzy clustering is shown in Algorithm 2.
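To make the histogram-based evaluation concrete, the sketch below computes HBFCM memberships and the histogram-weighted objective for a given set of cluster centers, which is the quantity an AOA candidate solution (a vector of c gray-level centers) could be scored on. The standard FCM membership update, m = 2, 8-bit gray levels, and the use of this objective as the AOA fitness are assumptions; Algorithm 2 itself is not reproduced, and all function names are hypothetical.

```python
def histogram(pixels, levels=256):
    # gamma_i: number of pixels having gray level i
    h = [0] * levels
    for p in pixels:
        h[p] += 1
    return h

def memberships(centers, levels=256, m=2.0):
    """u[k][i]: membership of gray level i in cluster k (standard FCM update)."""
    c = len(centers)
    u = [[0.0] * levels for _ in range(c)]
    for g in range(levels):
        d = [abs(g - z) for z in centers]
        zeros = [k for k in range(c) if d[k] == 0]
        if zeros:
            # Gray level coincides with a center: share full membership among those centers
            for k in zeros:
                u[k][g] = 1.0 / len(zeros)
            continue
        for k in range(c):
            u[k][g] = 1.0 / sum((d[k] / d[j]) ** (2.0 / (m - 1.0)) for j in range(c))
    return u

def hbfcm_objective(centers, hist, m=2.0):
    # Sum over L gray levels instead of N pixels, weighted by the histogram counts
    u = memberships(centers, len(hist), m)
    return sum(hist[g] * u[k][g] ** m * (g - z) ** 2
               for k, z in enumerate(centers) for g in range(len(hist)))

def label_pixels(pixels, centers, levels=256, m=2.0):
    # Each pixel inherits its gray level's memberships; hard label = most likely cluster
    u = memberships(centers, levels, m)
    best = [max(range(len(centers)), key=lambda k: u[k][g]) for g in range(levels)]
    return [best[p] for p in pixels]
```

Every fitness evaluation touches only the L histogram bins, regardless of how many pixels the image has; the pixel-level mapping in `label_pixels` is performed once, after the optimizer returns its best centers.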

8.2 Experimental Results

This section presents the experimental findings obtained on 50 real gray-scale images using MATLAB R2018b under Windows 7 on an x64-based PC with an Intel Core i5 CPU and 8 GB RAM. These gray-scale images were obtained from the following website: http://www.imageprocessingplace.com/rootfilesV3.htm. The results of AOA are compared with those of PSO [11], Dragonfly Algorithm (DA) [173], SCA [29], Ant Lion Optimizer (ALO) [174], GWO [175], and Whale Optimization Algorithm (WOA) [176].

The number of clusters \(nc\) is set to 6 and 10 for all clustering algorithms. The parameter settings of the tested NIOAs are given in Table 4. For a fair comparison, the population size and maximum number of iterations are set to 50 and 500, respectively, for all NIOAs. The parameters were set based on experience, exhaustive experiments, and the source papers. The optimization capability of the NIOAs is compared by computing the mean fitness (MF) and standard deviation (STD) values. Every NIOA-based clustering model was run 30 times for every image and cluster number. The efficacy of the NIOA-based fast fuzzy clustering models is evaluated using three well-known segmentation quality metrics, namely the Feature Similarity Index (FSIM), Peak Signal-to-Noise Ratio (PSNR), and Structural Similarity Index (SSIM) [177].
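Of the three metrics above, PSNR is simple enough to state directly. The minimal sketch below assumes 8-bit images stored as flat lists of equal length; representing the segmented image by replacing each pixel with its cluster center's intensity is a common practice and an assumption here. FSIM and SSIM are omitted because they require considerably more machinery.

```python
import math

def psnr(original, segmented, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    if len(original) != len(segmented):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(original, segmented)) / len(original)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)
```

A higher PSNR means the segmented image stays closer to the original intensities; for example, an MSE of 25 on an 8-bit image gives about 34.15 dB.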

Figure 8 shows the segmented outcomes of the tested NIOA-based fast fuzzy clustering models for Fig. 9 with \(nc\) = 6 and 10. The MF and STD values, along with the segmentation quality metrics, are reported in Tables 5 and 6 for \(nc\) = 6 and 10, respectively. The MF and STD values demonstrate that AOA's optimization capability is better than or competitive with that of the other tested NIOAs regardless of \(nc\). The MF values are plotted in Fig. 10, which clearly shows the following order of optimization ability in terms of MF for \(nc\) = 6 and 10:

AOA > ALO > GWO > WOA > DA > PSO > SCA.

Segmented output of NIOA based fast fuzzy clustering models over Fig. 7

Average values of the three segmentation quality metrics, namely PSNR, FSIM, and SSIM, are also reported in Tables 5 and 6 for \(nc\) = 6 and 10, respectively, to measure the segmentation capability of the NIOA-based fast fuzzy clustering models. Here too, the AOA-based clustering model provides the best outcomes. Bold values in the tables indicate the best outcomes.

Wilcoxon's rank test is also used to compare the fitness values of AOA with those of the other NIOAs; this test compares the results of two different strategies. A p-value below 0.05 (5% significance level) rejects the null hypothesis, indicating that the results of the algorithm differ significantly from those of its peers and that the difference is not due to chance. The p-values of the pairwise Wilcoxon tests on the fitness values for cluster numbers 6, 8, and 10 are given in Table 7. Table 7 shows that p ≤ 0.05 and h = 1 in all cases, which demonstrates that the differences between AOA's fitness values and those of the other algorithms are statistically significant and did not occur by chance.
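The pairwise test can be sketched in pure Python as follows. The sketch assumes the rank-sum (Mann–Whitney) form of the Wilcoxon test with a two-sided normal approximation, average ranks for ties, and no tie or continuity correction; some statistical packages apply such corrections, so their p-values can differ slightly. The `(p, h)` return pair mirrors the notation used above, and the function name is hypothetical.

```python
import math

def ranksum(x, y, alpha=0.05):
    """Two-sided Wilcoxon rank-sum test (normal approximation). Returns (p, h)."""
    pooled = sorted((v, g) for g, sample in enumerate((x, y)) for v in sample)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2.0 + 1.0  # average 1-based rank of a tie group
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)  # rank sum of sample x
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return p, int(p <= alpha)
```

With 30 runs per algorithm, `x` and `y` would hold the final fitness values of AOA and one competitor; h = 1 means the null hypothesis of equal distributions is rejected at the 5% level.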

9 Conclusion and Future Directions

This study presents an up-to-date survey of AOA, its variants, and their applications in different optimization fields. Research on AOA has progressed rapidly and continues to attract researchers. In this paper, we presented the paper collection procedure and an overview of AOA, including its time complexity and parameter sensitivity. We then discussed the strategies used to improve AOA, as well as binary variants of AOA for solving discrete problems. AOA and its improved variants produced excellent results in multi-objective optimization domains. The applications of AOA variants to single-objective, multi-objective, and discrete problems are outstanding and encouraging. We also evaluated AOA in the clustering-based image segmentation domain because, to the best of our knowledge, AOA had not been applied there. The results demonstrate its efficacy in image clustering as well, for both small and large numbers of clusters. The key benefits of AOA are its simple structure, good performance on complex and high-dimensional problems, and scalability. However, AOA's major limitations arising from its structure are as follows:

(1) The exploration–exploitation ability of AOA depends on two parameters, \(\mu\) and \(\theta\), which are fixed in AOA.

(2) Information sharing and guidance by the global best solution, which are major mechanisms in NIOAs, are not included in the classical AOA.

(3) AOA uses only the division, multiplication, subtraction, and addition operators. Only the uniform distribution is used for randomization, and only the best solution is modified by the arithmetic operators in both the exploration and exploitation steps of AOA.
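These structural limitations are easiest to see in the update rule itself. The following minimal sketch of the classical AOA loop shows the fixed parameters \(\mu\) and \(\theta\) (we assume \(\theta\) denotes the sensitive exponent of the math optimizer probability, MOP), the MOA-controlled switch between the division/multiplication (exploration) and subtraction/addition (exploitation) operators, uniform random numbers throughout, and the fact that every new position is built from the best solution only. The defaults \(\mu\) = 0.499, \(\theta\) = 5, the MOA range [0.2, 1.0], greedy replacement, and bound clipping follow common descriptions of AOA but should be treated as assumptions.

```python
import random

def aoa(f, lb, ub, n_pop=30, max_iter=200, mu=0.499, theta=5.0,
        moa_min=0.2, moa_max=1.0, seed=None):
    """Minimize f over the box [lb, ub] with a sketch of the classical AOA."""
    rng = random.Random(seed)
    dim, eps = len(lb), 1e-12
    X = [[l + rng.random() * (u - l) for l, u in zip(lb, ub)] for _ in range(n_pop)]
    fit = [f(x) for x in X]
    b = min(range(n_pop), key=lambda i: fit[i])
    best, best_fit = X[b][:], fit[b]
    for t in range(1, max_iter + 1):
        # Math optimizer accelerated (MOA) grows linearly; MOP shrinks with exponent 1/theta
        moa = moa_min + t * (moa_max - moa_min) / max_iter
        mop = 1.0 - (t ** (1.0 / theta)) / (max_iter ** (1.0 / theta))
        for i in range(n_pop):
            new = []
            for j in range(dim):
                scale = (ub[j] - lb[j]) * mu + lb[j]
                if rng.random() > moa:            # exploration: division or multiplication
                    if rng.random() > 0.5:
                        v = best[j] / (mop + eps) * scale
                    else:
                        v = best[j] * mop * scale
                else:                              # exploitation: subtraction or addition
                    if rng.random() > 0.5:
                        v = best[j] - mop * scale
                    else:
                        v = best[j] + mop * scale
                new.append(min(max(v, lb[j]), ub[j]))  # keep inside the bounds
            fn = f(new)
            if fn < fit[i]:                        # greedy replacement of individual i
                X[i], fit[i] = new, fn
                if fn < best_fit:
                    best, best_fit = new[:], fn
    return best, best_fit

sphere = lambda x: sum(v * v for v in x)
best, value = aoa(sphere, [-10.0] * 5, [10.0] * 5, seed=0)
```

Note that `mu`, `theta`, and the MOA range stay constant for the whole run and no position depends on any solution other than `best`, which is exactly what limitations (1) to (3) describe.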

In addition, the surveyed research reveals the following limitations:

(1) Applications of AOA and its variants to discrete and multi-objective problems are limited; AOA has mostly been employed in power and control engineering.

(2) Hybridization and opposition-based learning are the most frequently used techniques for enhancing AOA.

(3) Improvement strategies based on Lévy flight, chaotic sequences, and other efficient random number generators have been used, but not extensively.

Based on the above discussion, the following future directions can be identified:

(1) More research should be performed on parameter adaptivity, including the population size of AOA, to make it versatile across different problems and problem dimensions.

(2) Co-evolution, communication, and information sharing among solutions should be incorporated to improve AOA. Multi-swarm or multi-population strategies should be considered in future work. Other novel and well-established improvement strategies, especially those based on popular random number generators, which have not been widely applied to AOA in the literature, should also be explored.

(3) Applying AOA's variants to multi-objective and discrete problems is a promising research direction. In addition, applications in machine learning and computer vision should be explored.

(4) Prior information or expertise is always a great benefit when developing an effective optimization algorithm, but incorporating domain-specific knowledge into optimization algorithms remains difficult.

(5) AOA is usually analysed qualitatively. Mathematical analyses of AOA can be carried out in a variety of ways, but they all rely on strict premises that are unworkable in some circumstances. Creating a suitable mathematical framework for NIOAs such as AOA may give rise to a brand-new field of study.

Data Availability

The authors do not have permission to share the data.

References

Dhal KG, Ray S, Das A, Das S (2019) A survey on nature-inspired optimization algorithms and their application in image enhancement domain. Arch Comput Methods Eng 26(5):1607–1638

Dhal KG, Das A, Gálvez J, Ray S, Das S (2020) An overview on nature-inspired optimization algorithms and their possible application in image processing domain. Pattern Recognit Image Anal 30(4):614–631

Dhal KG, Das A, Ray S, Gálvez J, Das S (2020) Nature-inspired optimization algorithms and their application in multi-thresholding image segmentation. Arch Comput Methods Eng 27(3):855–888

Rai R, Das A, Dhal KG (2022) Nature-inspired optimization algorithms and their significance in multi-thresholding image segmentation: an inclusive review. Evol Syst 2022:1–57. https://doi.org/10.1007/s12530-022-09425-5

Dhal KG, Ray S, Rai R, Das A (2023) Archimedes optimizer: theory, analysis, improvements, and applications. Arch Comput Methods Eng 2023:1–36. https://doi.org/10.1007/s11831-022-09876-8

Rai R, Das A, Ray S, Dhal KG (2022) Human-inspired optimization algorithms: theoretical foundations, algorithms, open-research issues and application for multi-level thresholding. Arch Comput Methods Eng 29:5313–5352. https://doi.org/10.1007/s11831-022-09766-z

Holland JH (1992) Genetic algorithms. Sci Am 267(1):66–73

Beyer HG, Schwefel HP (2002) Evolution strategies–a comprehensive introduction. Nat Comput 1:3–52

Storn R, Price K (1997) Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341

Koza JR (1994) Genetic programming as a means for programming computers by natural selection. Stat Comput 4:87–112

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proceedings of ICNN'95-international conference on neural networks vol 4. IEEE, pp 1942–1948

Yang XS, He X (2013) Firefly algorithm: recent advances and applications. Int J Swarm Intell 1(1):36–50

Yang XS, Deb S (2010) Engineering optimisation by cuckoo search. Int J Math Modell Numer Optim 1(4):330–343

Dorigo M, Di Caro G (1999) Ant colony optimization: a new meta-heuristic. In: Proceedings of the 1999 congress on evolutionary computation-CEC99 (Cat. No. 99TH8406), vol 2. IEEE, pp 1470–1477

Karaboga D (2010) Artificial bee colony algorithm. Scholarpedia 5(3):6915

Yang XS (2012) Flower pollination algorithm for global optimization. In: Unconventional computation and natural computation: Proceedings of the 11th international conference, UCNC 2012, Orléans, France, September 3–7, 2012. Springer, Berlin, Heidelberg, pp 240–249

Kirkpatrick S, Gelatt CD Jr, Vecchi MP (1983) Optimization by simulated annealing. Science 220(4598):671–680

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179(13):2232–2248

Hashim FA, Hussain K, Houssein EH, Mabrouk MS, Al-Atabany W (2021) Archimedes optimization algorithm: a new metaheuristic algorithm for solving optimization problems. Appl Intell 51:1531–1551

Azizi M (2021) Atomic orbital search: a novel metaheuristic algorithm. Appl Math Model 93:657–683

Karami H, Anaraki MV, Farzin S, Mirjalili S (2021) Flow direction algorithm (FDA): a novel optimization approach for solving optimization problems. Comput Ind Eng 156:107224

Faramarzi A, Heidarinejad M, Stephens B, Mirjalili S (2020) Equilibrium optimizer: a novel optimization algorithm. Knowl-Based Syst 191:105190

Zhao W, Wang L, Zhang Z (2019) Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl-Based Syst 163:283–304

Wei Z, Huang C, Wang X, Han T, Li Y (2019) Nuclear reaction optimization: a novel and powerful physics-based algorithm for global optimization. IEEE Access 7:66084–66109

Hashim FA, Houssein EH, Mabrouk MS, Al-Atabany W, Mirjalili S (2019) Henry gas solubility optimization: a novel physics-based algorithm. Future Gener Comput Syst 101:646–667

Lam AY, Li VO (2012) Chemical reaction optimization: a tutorial. Memet Comput 4:3–17

Alatas B (2011) ACROA: artificial chemical reaction optimization algorithm for global optimization. Expert Syst Appl 38(10):13170–13180

Kaveh A, Bakhshpoori T (2016) Water evaporation optimization: a novel physically inspired optimization algorithm. Comput Struct 167:69–85

Mirjalili S (2016) SCA: a sine cosine algorithm for solving optimization problems. Knowl-Based Syst 96:120–133

Abualigah L, Diabat A, Mirjalili S, Abd Elaziz M, Gandomi AH (2021) The arithmetic optimization algorithm. Comput Methods Appl Mech Eng 376:113609

Nematollahi AF, Rahiminejad A, Vahidi B (2020) A novel meta-heuristic optimization method based on golden ratio in nature. Soft Comput 24:1117–1151

Rao RV, Savsani VJ, Vakharia DP (2011) Teaching–learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput Aided Des 43(3):303–315

Shi Y (2011) Brain storm optimization algorithm. In: Proceedings of advances in swarm intelligence: second international conference, ICSI 2011, Chongqing, China, June 12–15, 2011, Part I. Springer, Berlin, Heidelberg, pp 303–309

Ahmadi SA (2017) Human behavior-based optimization: a novel metaheuristic approach to solve complex optimization problems. Neural Comput Appl 28(Suppl 1):233–244

Dhal KG, Das A, Sahoo S, Das R, Das S (2021) Measuring the curse of population size over swarm intelligence based algorithms. Evol Syst 12(3):779–826. https://doi.org/10.1007/s12530-019-09318-0

Dhal KG, Sahoo S, Das A, Das S (2019) Effect of population size over parameter-less firefly algorithm. Applications of firefly algorithm and its variants: case studies and new developments. Springer Singapore, Singapore, pp 237–266. https://doi.org/10.1007/978-981-15-0306-1_11

Agushaka JO, Ezugwu AE (2022) Evaluation of several initialization methods on arithmetic optimization algorithm performance. J Intell Syst 31(1):70–94

Adam SP, Alexandropoulos SAN, Pardalos PM, Vrahatis MN (2019) No free lunch theorem: a review. In: Approximation and optimization, pp 57–82

Abd Elaziz M, Abualigah L, Ibrahim RA, Attiya I (2021) IoT workflow scheduling using intelligent arithmetic optimization algorithm in fog computing. Comput Intell Neurosci 2021:1–14

Ibrahim RA, Abualigah L, Ewees AA, Al-Qaness MA, Yousri D, Alshathri S, Abd Elaziz M (2021) An electric fish-based arithmetic optimization algorithm for feature selection. Entropy 23(9):1189

Baş E (2021) Hybrid the arithmetic optimization algorithm for constrained optimization problems. Konya Mühendislik Bilimleri Dergisi 9(3):713–734

Ewees AA, Al-qaness MA, Abualigah L, Oliva D, Algamal ZY, Anter AM, Abd Elaziz M (2021) Boosting arithmetic optimization algorithm with genetic algorithm operators for feature selection: case study on Cox proportional hazards model. Mathematics 9(18):2321

Abualigah L, Diabat A, Sumari P, Gandomi AH (2021) A novel evolutionary arithmetic optimization algorithm for multilevel thresholding segmentation of COVID-19 CT images. Processes 9(7):1155

Zheng R, Jia H, Abualigah L, Liu Q, Wang S (2021) Deep ensemble of slime mold algorithm and arithmetic optimization algorithm for global optimization. Processes 9(10):1774

Issa M (2022) Enhanced arithmetic optimization algorithm for parameter estimation of PID controller. Arab J Sci Eng 2022:1–15

Liu X, Zhou Z (2022) Illumination estimation via random vector functional link based on improved arithmetic optimization algorithm. Color Res Appl 47(3):644–656

Shi X, Yu X, Esmaeili-Falak M (2023) Improved arithmetic optimization algorithm and its application to carbon fiber reinforced polymer-steel bond strength estimation. Compos Struct 306:116599

Hu G, Zhong J, Du B, Wei G (2022) An enhanced hybrid arithmetic optimization algorithm for engineering applications. Comput Methods Appl Mech Eng 394:114901

Çetinbaş İ, Tamyürek B, Demirtaş M (2022) The hybrid Harris hawks optimizer-arithmetic optimization algorithm: a new hybrid algorithm for sizing optimization and design of microgrids. IEEE Access 10:19254–19283

Zhang YJ, Yan YX, Zhao J, Gao ZM (2022) AOAAO: the hybrid algorithm of arithmetic optimization algorithm with Aquila optimizer. IEEE Access 10:10907–10933

Alweshah M (2022) Hybridization of arithmetic optimization with great deluge algorithms for feature selection problems in medical diagnosis. Jordan J Comput Inf Technol 8(2):1

Abdel-Mawgoud H, Fathy A, Kamel S (2022) An effective hybrid approach based on arithmetic optimization algorithm and sine cosine algorithm for integrating battery energy storage system into distribution networks. J Energy Storage 49:104154

Kharrich M, Abualigah L, Kamel S, AbdEl-Sattar H, Tostado-Véliz M (2022) An improved arithmetic optimization algorithm for design of a microgrid with energy storage system: case study of El Kharga Oasis, Egypt. J Energy Storage 51:104343

Abualigah L, Diabat A (2022) Improved multi-core arithmetic optimization algorithm-based ensemble mutation for multidisciplinary applications. J Intell Manuf 2022:1–42

Çelik E (2023) IEGQO-AOA: information-exchanged Gaussian arithmetic optimization algorithm with quasi-opposition learning. Knowl-Based Syst 260:110169

Liu Q, Li N, Jia H, Qi Q, Abualigah L, Liu Y (2022) A hybrid arithmetic optimization and golden sine algorithm for solving industrial engineering design problems. Mathematics 10(9):1567

Mahajan S, Abualigah L, Pandit AK (2022) Hybrid arithmetic optimization algorithm with hunger games search for global optimization. Multimed Tools Appl 81(20):28755–28778

Abualigah L, Ewees AA, Al-qaness MA, Elaziz MA, Yousri D, Ibrahim RA, Altalhi M (2022) Boosting arithmetic optimization algorithm by sine cosine algorithm and levy flight distribution for solving engineering optimization problems. Neural Comput Appl 34(11):8823–8852

Karthik E, Sethukarasi T (2022) A centered convolutional restricted Boltzmann machine optimized by hybrid atom search arithmetic optimization algorithm for sentimental analysis. Neural Process Lett 54(5):4123–4151

Izci D (2022) A novel modified arithmetic optimization algorithm for power system stabilizer design. Sigma J Eng Nat Sci 40(3):529–541

Mahdad B (2022) Novel adaptive sine cosine arithmetic optimization algorithm for optimal automation control of DG units and STATCOM devices. Smart Sci 2022:1–22

Ekinci S, Izci D, Al Nasar MR, Abu Zitar R, Abualigah L (2022) Logarithmic spiral search based arithmetic optimization algorithm with selective mechanism and its application to functional electrical stimulation system control. Soft Comput 26(22):12257–12269

Mahajan S, Abualigah L, Pandit AK, Altalhi M (2022) Hybrid Aquila optimizer with arithmetic optimization algorithm for global optimization tasks. Soft Comput 26(10):4863–4881

Mahajan S, Abualigah L, Pandit AK, Al Nasar MR, Alkhazaleh HA, Altalhi M (2022) Fusion of modern meta-heuristic optimization methods using arithmetic optimization algorithm for global optimization tasks. Soft Comput 26(14):6749–6763

Chauhan S, Vashishtha G, Kumar A (2022) A symbiosis of arithmetic optimizer with slime mould algorithm for improving global optimization and conventional design problem. J Supercomput 78(5):6234–6274

Pashaei E, Pashaei E (2022) Hybrid binary arithmetic optimization algorithm with simulated annealing for feature selection in high-dimensional biomedical data. J Supercomput 78(13):15598–15637

Tahiri MA, Karmouni H, Bencherqui A, Daoui A, Sayyouri M, Qjidaa H, Hosny KM (2022) New color image encryption using hybrid optimization algorithm and Krawtchouk fractional transformations. Vis Comput 2022:1–26

Mohamed AA, Abdellatif AD, Alburaikan A, Khalifa HAEW, Elaziz MA, Abualigah L, AbdelMouty AM (2023) A novel hybrid arithmetic optimization algorithm and salp swarm algorithm for data placement in cloud computing. Soft Comput 2023:1–12

Krishna MM, Majhi SK, Panda N (2022) Hybrid arithmetic-rider optimization algorithm as new intelligent model for travelling salesman problem

Qiao L, Liu K, Xue Y, Tang W, Salehnia T. A multi-level thresholding image segmentation method using hybrid arithmetic optimization and Harris hawks optimizer algorithms. Available at SSRN 4188471

Izci D, Ekinci S, Eker E, Dündar A (2021) Improving arithmetic optimization algorithm through modified opposition-based learning mechanism. In: 2021 5th international symposium on multidisciplinary studies and innovative technologies (ISMSIT). IEEE, pp 1–5

Lin X, Li H, Jiang X, Gao Y, Wu J, Yang Y (2021) Improve exploration of arithmetic optimization algorithm by opposition-based learning. In: 2021 IEEE international conference on progress in informatics and computing (PIC). IEEE, pp 265–269

Izci D, Ekinci S, Kayri M, Eker E (2021) A novel enhanced metaheuristic algorithm for automobile cruise control system. Electrica 21(3):283–297

Sharma A, Khan RA, Sharma A, Kashyap D, Rajput S (2021) A Novel opposition-based arithmetic optimization algorithm for parameter extraction of PEM fuel cell. Electronics 10(22):2834

Zhang YJ, Wang YF, Yan YX, Zhao J, Gao ZM (2022) LMRAOA: an improved arithmetic optimization algorithm with multi-leader and high-speed jumping based on opposition-based learning solving engineering and numerical problems. Alex Eng J 61(12):12367–12403

Zivkovic M, Bacanin N, Antonijevic M, Nikolic B, Kvascev G, Marjanovic M, Savanovic N (2022) Hybrid CNN and XGBoost model tuned by modified arithmetic optimization algorithm for COVID-19 early diagnostics from X-ray images. Electronics 11(22):3798

Yang Y, Gao Y, Tan S, Zhao S, Wu J, Gao S, Wang YG (2022) An opposition learning and spiral modelling based arithmetic optimization algorithm for global continuous optimization problems. Eng Appl Artif Intell 113:104981

Abualigah L, Altalhi M (2022) A novel generalized normal distribution arithmetic optimization algorithm for global optimization and data clustering problems. J Ambient Intell Hum Comput 2022:1–29

Abualigah L, Elaziz MA, Yousri D, Al-qaness MA, Ewees AA, Zitar RA (2022) Augmented arithmetic optimization algorithm using opposite-based learning and Lévy flight distribution for global optimization and data clustering. J Intell Manuf 2022:1–39

Abualigah L, Almotairi KH, Al-qaness MA, Ewees AA, Yousri D, Abd Elaziz M, Nadimi-Shahraki MH (2022) Efficient text document clustering approach using multi-search Arithmetic Optimization Algorithm. Knowl-Based Syst 248:108833

Turgut MS, Turgut OE, Abualigah L (2022) Chaotic quasi-oppositional arithmetic optimization algorithm for thermo-economic design of a shell and tube condenser running with different refrigerant mixture pairs. Neural Comput Appl 34(10):8103–8135

Izci D, Ekinci S, Eker E, Abualigah L (2022) Opposition-based arithmetic optimization algorithm with varying acceleration coefficient for function optimization and control of FES system. In: Proceedings of international joint conference on advances in computational intelligence: IJCACI 2021. Springer Nature, Singapore, pp 283–293