Abstract

Introduction Resilient provision of Position, Navigation and Timing (PNT) data can be considered as a key element of the e-Navigation strategy developed by the International Maritime Organization (IMO). An indication of reliability has been identified as a high level user need with respect to PNT data to be supplied by electronic navigation means. The paper concentrates on the Fault Detection and Exclusion (FDE) component of the Integrity Monitoring (IM) for navigation systems based both on pure GNSS (Global Navigation Satellite Systems) as well as on hybrid GNSS/inertial measurements. Here a PNT-data processing Unit will be responsible for both the integration of data provided by all available on-board sensors as well as for the IM functionality. The IM mechanism can be seen as an instantaneous decision criterion for using or not using the system and, therefore, constitutes a key component within a process of provision of reliable navigational data in future navigation systems. Methods The performance of the FDE functionality is demonstrated for a pure GNSS-based snapshot weighted iterative least-square (WLS) solution, a GNSS-based Extended Kalman Filter (EKF) as well as for a classical error-state tightly-coupled EKF for the hybrid GNSS/inertial system. Pure GNSS approaches are evaluated by combining true measurement data collected in port operation scenario with artificially induced measurement faults, while for the hybrid navigation system the measurement data in an open sea scenario with native GNSS measurement faults have been employed. Results First, the performance of the proposed FDE schemes in terms of the horizontal error is evaluated for both weighted and unweighted approaches in GNSS-based snapshot and KF-based schemes. Here, mainly due to availability of the process model, the KF approaches have demonstrated smaller sensitivity to the injected GNSS faults, while the methods with C N o weighting schemes have resulted in reduced spread of the obtained position solutions. The statistical evaluation of the proposed FDE schemes have been performed for pure GNSS schemes by considering the fault detection rate as a function of the amplitude for the randomly injected GNSS faults. Although the KF-based approaches have clearly outperformed the memoryless schemes, lower detection rates for weighted schemes could be clearly seen due to inability of the FDE to detect faults of fixed amplitude for satellites with lower C N o values. Moreover, the evaluation of the FDE schemes in terms of maximum horizontal position error have indicated bounded response of the FDE schemes when compared to that of non-FDE methods. Finally, the superiority of the FDE-enabled tightly-coupled GNSS/inertial EKF over the non-FDE solution have been demonstrated using a scenario with native GNSS faults. Conclusions The work had successfully demonstrated an applicability of the developed FDE schemes in snapshot and RBE-based algorithms for maritime applications using both non-inertial GNSS-based positioning and a hybrid IMU/GNSS EKF-based approach. The proposed methods form a solid foundation for construction of a more reliable and robust PNT-Unit, where state-of-the-art hybrid navigation algorithms are augmented with integrity monitoring functionality to ensure the system performance in the presence of GNSS faults. The FDE mechanism provides consistent improvements in terms of the horizontal accuracy both in LS and KF-based methods. Although only port operation case and one example of a true GNSS fault in open sea was considered, the presented results are believed to be general enough and the scheme could be adopted for other applications in future.

Similar content being viewed by others

1 Introduction

The last decades had witnessed a rapid development of new technologies for nautical applications both in order to support the constantly increasing marine traffic and the requirements to improve the safety of navigation. Here the process of vessel navigation is supported by a variety of independent sources of navigational information (sensors or sensor systems), where GNSS (Global Navigation Satellite Systems), in particular the Global Positioning System (GPS) is often adopted as main source for provision of absolute Position, Velocity and precise Time information (PVT). Nevertheless, GNSS sensors are often not integrated with other on-board sensors and sensor systems such as speed log, gyro compass, RADAR, etc., and, therefore, are mostly used as standalone sensors. However, with numerous independent and decoupled sources of navigational information available (e.g. non-hybrid systems, where the information from different sensors is not fused), the process of navigation can be formulated as a real-time decision making process that requires an extreme focus and constant attention from the navigator. In spite of all the efforts to improve the quality and reliability of different sensors, 43 % of the total number of accidents in the Baltic Sea during 2012 were actually caused by human factors such as mistakes in the planning process or skill-based errors, such as slip and lapse [9].

In order to improve the overall safety and efficiency of berth-to-berth navigation, the International Maritime Organization (IMO) has started the so-called e-Navigation initiative. Here resilient on-board provision of Position, Navigation, and Timing (PNT) data is recognized as a core functionality to improve the reliability, resilience and integrity of the navigation information provided by the bridge equipment [1]. Based on the concept of Maritime PNT System, the PNT data processing Unit will use all available on-board sensors and employ methods of sensor fusion in order to provide both the PNT data and the associated integrity information to the user. In a modular approach of a future Integrated Navigation System (INS) this PNT-Unit will serve as a module responsible for the on-board PNT data provision.

Moreover, it is rather well-known that an integration of multiple complementary positioning sensors could highly improve the overall system resilience against Radio Frequency (RF) channel contamination and is crucial in achieving a reliable provision of PNT data even for challenging scenarios with severe RF signal multipath and Non-Line-Of-Sight (NLOS) effects (especially important for inland waterways or port operations), jamming or effects caused by ionosphere weather conditions. Therefore, it is considered to be advantageous to augment the GNSS system with yet another sensor or sensor system with different error and/or failure patterns such as inertial sensors or Doppler Velocity Log (DVL). These sensors are able to provide a position with slowly degrading level of accuracy for a specified period of time while the GNSS information is either not available or is considered to be unreliable. The inertial navigation systems are able to overcome the GNSS vulnerability due to their inherent independence from the surroundings and, therefore, are often integrated with GNSS information so that the short term performance of the Inertial Measurement Unit (IMU) and long term stability of GNSS are incorporated optimally within the final hybrid navigation system.

Although the integrity algorithms are rather well-known in aviation applications, few works have reported on applying these or similar techniques to other scenarios such as terrestrial or nautical navigation under real operational conditions. The presented work tries to close this gap by introducing the discussion on performance of Fault Detection and Exclusion (FDE) methods for both snapshot and Recursive Bayesian Estimation (RBE) positioning algorithms in maritime applications and concentrates on the performance analysis of both the least-squares residuals (LSR) and Kalman Filter Innovation (KFI)-based FDE algorithms.

The presented work starts with the discussion on integrity algorithms for pure GNSS (GPS) systems. As the GNSS output in the form of position and velocity solutions is often employed by hybrid navigation systems in loosely-coupled configurations, it becomes crucial to understand the performance of FDE mechanisms when GNSS data are used in snapshot techniques. The discussed FDE approach is compared against the corresponding FDE extension for a RBE scheme. The obtained results confirm that even for a pure GNSS-based system the RBE methods with FDE functionality clearly outperform the non-RBE methods due to presence of an additional source of information in the form of the assumed process model. The performance of the developed techniques is assessed in terms of horizontal positioning accuracy using real data with artificially introduced faults and the results are evaluated for weighted and non-weighted GNSS measurement noise models. For this purpose a GNSS fault simulator based on Monte Carlo methods was developed, which is capable adding in a controlled manner faults to raw measurements recorded previously during typical maritime operational scenarios (e.g. port operation or coastal approach).

Within the second part of the work the FDE scheme is evaluated within a real hybrid navigation solution using both GNSS and inertial sensor data. As the hybrid navigation solutions are becoming more and more popular for non-aviation applications, mainly due to appearance of relatively cheap inertial sensors of tactical grade, odometer or Doppler velocity measurements, more advanced techniques for Integrity Monitoring (IM) in RBE methods are becoming necessary. In order to assess the performance of the proposed techniques for hybrid navigation, we employ a classical hybrid inertial/GNSS system. This allows the results to be easily extrapolated to other applications such as automotive and outdoor robotics scenarios. Furthermore, the obtained results are based on real operational conditions including the unmodelled GNSS effects and errors in inertial sensors such as misalignment. The performance of the hybrid navigation system with FDE functionality is compared to that of non-FDE loosely-coupled EKF using real measurement data collected during a coastal approach operation with native GNSS faults.

The rest of the paper is organized as follows. In Section 2 a brief discussion is provided on state-of-the-art FDE methods in IM both for snapshot and RBE positioning algorithms. The details on relevant mathematical methods are given in Section 3 with the description of the system setup presented in Section 4. The experimental results are shown in Section 5 with the summary and the outlook for future research provided in Section 6.

2 Current research status

The Snapshot LSR Receiver Autonomous Integrity Monitoring (RAIM) algorithms developed by the civil aviation community [16] or the statistical reliability testing adopted by the geodetic community [19] are the classic references for non-augmented (i.e. autonomous and completely self-contained) GPS-based LSR algorithms. All these approaches make use of the redundancy within the available measurements to check, on a measurement-by-measurement basis, the relative consistency among estimated residuals, and, correspondingly, to detect the most likely measurement fault. Most of the known approaches are based on the comparison between a test statistic depending on the estimated least-squares (LS) residuals and a given threshold under Gaussian noise assumption. The decision threshold is set considering a priori knowledge of the statistical distribution of the test residuals in the fault-free case and a given false detection rate. Although the classical methods mainly use snapshot techniques, some works have been reported on introducing the FDE algorithms for RBE techniques [17]. These techniques are usually formulated in a well-known form of a Kalman filter (KF), where it has been proven that the KF innovations follow a similar statistical distribution to that of the LS residuals under equal noise assumptions [23].

The trivial assumption that all GNSS code measurements are contaminated with noise of constant and known variance is often violated in real scenarios and several approaches have been reported on increasing the robustness of integrated solutions by using more advanced GNSS noise models, where the measurement covariance is not fixed and constant in time, but instead depends on the measurement signal quality, elevation angle, etc. [6, 7, 22]. Although it has been widely agreed that the number and the impact of possible error sources is strongly related with the satellite elevation, the elevation angle itself is not necessarily the best indicator of the actual signal quality [7] and C N o (received carrier (i.e. the signal) power (watts) divided by the measurement noise power density) is often considered as a fairly good alternative. Hence the measurements with higher C N o values are good indicators of less noisy range measurements and, therefore, should be weighted more to provide an improved precision of the positioning solution [6, 7, 24].

The augmentation of GNSS systems with inertial sensors in order to mitigate intentional or unintentional RF signal interference has a fairly long history. The work of [12] addressed both the issues of IM in a tightly-coupled (TC) IMU/GNSS system and the availability of hybrid navigation solutions. The latter one is defined as the ability of the system to coast upon the loss of all GNSS signals while still maintaining a certain accuracy. The authors in [12] used a GNSS ramp error model (GNSS pseudorange error, which constantly grows at a particular rate starting from zero error) and evaluated two IM strategies: solution separation method and extrapolation method. Within the first approach the test statistic is the horizontal separation between the full-set and subset solutions where the failure is claimed if the test statistic exceeds the associated decision threshold. The second approach detects the slowly growing (ramp) errors by considering the GPS measurements over a relatively long period (up to 30 minutes) and using KF innovations averaged over 2.5, 10 and 30 minutes. Nevertheless, both methods may not detect measurement failures during periods of low (less than four) satellite visibility. Note that GNSS ramp errors are often considered to be far more challenging compared to step errors as the latter generate instantaneous jumps in the measurement biases and can be relatively easy detected both via consistency check or by comparison to the actual output of inertial integration. The authors conclude that innovations can be only used to detect the failures caused by relatively fast growing errors, while the statistic for the extrapolation method, which averages the innovation vector elements over time, can be used to detect slower error ramps.

Although the Microelectromechanical Systems (MEMS) sensors have attracted an increasing attention for the pedestrian localization [4], robotics, automotive applications or low-cost Unmanned Aerial Vehicle (UAV) design, their applicability to Safety Critical Applications (SCA) such as maritime navigation has been until recently limited by their relatively high noise and bias instability, causing a rapid drift of the standalone inertial solution when reference positioning information is not available. Some recent works [14] have also assessed the possibility of replacing Fiber Optic Gyroscopes (FOG) with higher performance MEMS IMUs and have confirmed that a combined IMU/GNSS system is able to deliver position and velocity information at high update rate while preserving a low noise content due to smoothing performance of the inertial integration. Still, the performance of the hybrid system was not assessed under the presence of measurement faults and no IM algorithms were evaluated in that study.

3 Mathematical development

Obviously, the algorithms employed in maritime SCA must meet stringent reliability requirements. Here, for simplicity, we adopt an integrity concept from the aviation sector, where one of these reliability requirements is the so-called integrity risk. The integrity risk is the likelihood of an undetected navigation state error, that results in Hazardously Misleading Information (HMI). In practice, it is defined as a confidence bound for the navigation system state, which confines all state output errors with a confidence equal or higher than 1- α, where α is the integrity risk (in general, the value has to be adjusted according to the requirements of the target application). There is a case of loss of integrity when the navigation system state error exceeds the confidence bound without warning the system user. The probability of loss of integrity is also called probability of HMI. This probability can be mapped onto the state space and, in the case of KF-based navigation systems, it can be interpreted as the protection level (in physical units) of the state uncertainty (covariance) ellipsoid. SCA IM algorithms must provide functionality for real-time detection of navigation system state integrity loss and optionally, fault identification and exclusion mechanisms (so-called FDE) in order to guarantee service continuity.

The algorithms for positioning and hybrid navigation are usually formulated as state estimation problems using a combination of measurements from multiple sensors with complementary noise properties. A desired set of the parameters to be estimated from noisy measurements usually includes the object’s position, velocity and attitude as well as some of the sensor errors. Here one can utilize a well-established estimation strategy based on the RBE framework [5, 20], while a classical LS solution can be considered as a non-recursive memory-less (snapshot) approach. The classical RBE cycle is performed in two steps:

Prediction

The a priori probability is calculated from the last a posteriori probability using a probabilistic process (state transition) model f(⋅).

Correction

The a posteriori probability is calculated from the a priori probability using a probabilistic measurement model h(⋅) and the current measurement z k .

In practice, however, the theoretical methods formulated with probability densities do not scale up very well and can quickly become intractable even for estimation problems of reasonable dimensionality. Various implementations of RBE algorithms differ in the way the probabilities are represented and transformed in the process and measurement models [5, 20]. If the models are linear and the probabilities are Gaussian, the linear KF is an efficient and optimal solution of the estimation problem. Unfortunately, most of the useful real-world navigation systems are nonlinear and modifications to the linear KF have been developed to deal with nonlinear models. The Extended Kalman Filter (EKF) is one of the most popular nonlinear estimators and is historically considered as a standard tool within the engineering community. In the EKF the nonlinear models are linearized about the current estimate using Taylor series expansion, where model f and observation model h are replaced by the corresponding Jacobians. The system at every time t k is represented by the state x k and an associated covariance P k with the rest of the filtering scheme being essentially identical to that of the classical linear KF. Although the EKF inherits many advantages of the KF such as limited computational costs and a clear filtering structure, it still suffers from two main problems. Firstly, the performance of the estimator strongly depends on the validity of the linearized model assumption and can become inaccurate and lead to filter instabilities if these assumptions are violated. Secondly, the required Jacobians can be potentially difficult or even impossible to derive if the dynamical models involve complex approximation coefficients and/or discontinuities, or generative sensor models are used (e.g. 2D planar laser).

We start the discussion on the mathematical background by considering the non-recursive FDE method, where the corresponding FDE RBE scheme can be seen as an extension of this well-known strategy. The usual snapshot GNSS-based position determination involves four unknowns: receiver coordinates (X,Y,Z) and the GNSS receiver clock offset δ t with the number of unknowns n=4. For the memoryless LS estimation we follow a classical approach based on the linearization of the measurement function at each epoch t k around a point x 0 and finding the correction factor \(\delta \hat {x}\) using [2]:

and the iterative update of the initial estimates \(\hat {x}_{i}={\hat {x}_{i-1}}+ \delta \hat {x}_{i}\), where δ z is the misclosure vector and R is the measurement noise covariance. In the expression above the matrix H is the corresponding Jacobian of the measurement model, which, in this case, corresponds to the linearized GNSS pseudorange measurement model. If there are five or more observations z available (i.e. m>n), the redundant measurements could be used to check the consistency among the full set of measurements. This forms a fundamental principle for the fault detection using the LS method, where the measurement space with dimensionality m is separated into two subspaces: the state space and the parity space with the dimensionality n and m−n respectively [10]. The LSR methods are based on a detection test derived from the measurement residual norm \(\left \|\hat {e}\right \|\):

The test statistic is based on the estimated residual vector \(\hat {e}\) normalized by the standard deviation of the measurement errors \(\left \|\hat {e}\right \|^{2} = \hat {e}^{T} R^{-1} \hat {e}\). The probability density function of the normalized LS estimation residuals is shown in Fig. 1 for a fault-free scenario (real measurements passing global consistency check with conservative confidence level). The plot compares the theoretical distribution (assumed to be Gaussian with zero mean and unit standard deviation) and the experimental results for both classical equally weighted LS snapshot GNSS solution (e-LS) and a GNSS solution with C N o weighted measurement model (e-WLS, see below). One clearly sees that in both cases the residuals e approximately follow the assumed Gaussian distribution as the theory predicts.

Here one should notice that the test statistic is observable while the positioning error of the LS solution is not. In the fault-free case (the individual residuals follow \(\mathcal {N}\left (0,1\right )\), see Fig. 1), the test statistics value follows a central Chi-Square distribution with m−n degrees of freedom (see Fig. 2). Here the classical LS detection method is based on the hypothesis testing where the test statistic with the given threshold is compared to the LSR statistic defined as [21]:

For the fault-free case the test threshold T h for a given probability of false alarm (P f a ) and redundancy (or equivalently, degrees of freedom) is found by inverting the incomplete gamma function [21]. A common procedure consists of fixing P f a according to the application requirements and letting the threshold vary with the availability of the measurements. A typical value for P f a in maritime applications is 0.1 % [18]. The hypothesis test is given by the following condition:

This test can be seen as a global one as it checks the consistency of a full measurement set. The threshold determines whether the null-hypothesis H 0 of the global test should be accepted or rejected (H 1). If it is rejected, an inconsistency in the tested measurements is assumed and the fault source should be identified and further excluded using, e.g, the local tests [11, 17]. These tests assess the standardized residuals defined as follows:

where U is the covariance matrix for the residuals U = R−H(H T R −1 H)−1 H T.

In order to detect a fault, each standardized residual r i is tested using the quantile of a normal distribution corresponding to the P f a . In the local test, the residual under test is excluded if the respective standardized residual exceeds the test threshold. Similarly to the global test, the local test assumes the residuals to follow \(\mathcal {N}\left (0,1\right )\). The local hypothesis test is given by the following condition:

where a p is the quantile of the probability p of the standard normal distribution. Each measurement r i is tested against the H 0,i , as the measurement fault affects multiple standardized residuals. The measurement i is selected as a candidate to be excluded if and only if both of the following conditions are fulfilled:

The method as described above is based on an extension of the standard FDE methodology as suggested in [11], where some minor modifications are introduced in terms of forward-backward propagation in the process of measurement subset selection. Although further modifications of this standard scheme are still possible, we do not believe that significant improvement can be achieved for non-augmented GNSS snapshot positioning and assume this basic implementation to be sufficient for the purpose of the presented comparative study. Moreover, more advanced schemes, which could mitigate the problem of the algorithm to converge to a local minimum could require corresponding modification of the RBE-based techniques for the fairness of the comparison and, therefore, are beyond the scope of the presented work.

The corresponding RBE FDE algorithms are implemented either in the form of non-inertial position/velocity (constant velocity - CV model) filter (Scenario 1: non-inertial applications) or IMU/GNSS EKF-based fusion filter (Scenario 2: inertial filter). In the former case, the estimated state consists of 3D position and 3D velocity as well as the GNSS receiver clock offset and clock offset rate. The latter filter is far more advanced, where the estimated state includes the 3D attitude, 3D position and 3D velocity as well as the accelerometer and gyroscope offsets for each sensor axis. For the TC EKF architectures one also includes the receiver clock offset and offset rate to the filter state to be estimated. As the measurements we employ C/A L1 code and Doppler shift, where for loosely-coupled approaches, the solutions from external to KF snapshot solvers (position or velocity) are used as a direct observation of the state. The presented IMU/GNSS filter is a classical implementation of IMU/GNSS fusion mechanism with direct strapdown inertial mechanization model and the relevant mathematical details can be found elsewhere (e.g. see [8]).

The FDE approach for RBE algorithm (in our case represented by EKF, although the strategy can be easily extended for other filters such as Unscented KF [20]) can be derived from the one used in snapshot GNSS positioning as described above. The predicted residual vector (often called innovation vector) is given as follows:

where h(x k ) is a non-linear function relating the estimated state to the observations. The innovation vector can be considered as an indicator of the amount of information introduced in the system by the actual measurements and the respective normalized norm can be used again as the measurement quality indicator. For a fault-free situation, this norm follows a central Chi-Squared distribution but in this case not with m−n, but with m degrees of freedom and the global test statistic given as \(ts_{KF}=\sqrt {\hat {d}_{k}^{T} S_{k}^{-1} \hat {d}_{k}}\). Here S k is the innovation vector covariance matrix defined as \(S_{k}= H_{k}P^{-}_{k}{H^{T}_{k}} + R_{k}\), where \(P_{k}^{-}\) is the predicted error covariance of the state estimate. The global test and the local tests are performed following essentially the same procedure as for the LS methods described before. Again, it is assumed that under fault-free conditions the innovations have to follow a zero mean Gaussian distribution.

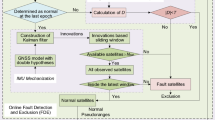

The RBE-based FDE scheme implemented in this work also consists of a two-step procedure as shown in Fig. 3. Firstly, the global test, as described before, checks the consistency among the full set of measurements. If an inconsistency is detected, the scheme performs a local test. The local test is recursively applied whenever a fault is detected until no more faults are found [11]. According to [3], the innovation property of the KF makes it also possible to detect even very slowly changing errors (e.g. drift) by estimating the mean of the residuals over a longer time interval, where in order to avoid contamination of the KF estimated state, the measurements and residuals are stored in buffers for periods up to 30 minutes. However, the performance of the methods under slowly changing errors is beyond the scope of the presented work. Note that both described procedures (snapshot RAIM-like and KF-based) are only some of the possible approaches and more advanced schemes can be implemented. Still, these algorithms are simple enough and, therefore, provide a fair ground for the method comparison.

Many practical applications address the problem of varying GNSS link quality by assuming non-constant measurement noise variance as a function of the elevation angle or measured signal quality indicators like, for instance, C N o. In order to evaluate the impact of similar methods on the FDE functionality, a realistic GPS L1 pseudorange noise model was extracted (further referred as weighted scheme), where the pseudorange measurement covariance depends on the actual measurement of C N o reported by the receiver. As the basis for the adaptive pseudorange measurement noise covariance model σ 2 we have adopted the following general expression [11]:

with three model parameters a,b and c, where the parameter a can be roughly mapped to the receiver correlator noise baseline. In the expression above the C N o is the measured carrier to noise density ratio for a particular pseudorange observation. The experimental data for model extraction have been obtained from a reference receiver of known position (previously surveyed) over 24 hours using broadcast ionospheric and tropospheric corrections (the same corrections were adopted in the presented filters) with the error statistic computed by analyzing the differences between the expected and the observed ranges. The obtained data have been binned according to the associated C N o values and for each bin a variance was estimated. Note that in this simplified approach only variance was modeled as a function of the signal quality and the non-zero mean offset was ignored. Figure 4 shows the experimental results and the extracted model using a nonlinear least squares fit. The points with lower C N o values have been manually excluded as having insufficient statistic and fit was found only to the C N o values larger than 40 dBHz, where the values between 45-55 dBHz are considered typical for the GPS L1 C/A signal. Moreover, the performance of the Delay Locked Loop (DLL) correlator in the GNSS receiver to track the satellite pseudoranges is often poor for low C N o values and the obtained values are simply not representative and should not be used for a model fit. The extracted model was used in weighted methods for both adaptive snapshot and RBE-based schemes within the first evaluation scenario.

Of course, one has to ensure that the Gaussian noise assumption is still valid as the performance of both LS and KF methods can be compromised or become far from optimal for other noise models. To verify the assumption from [11] that for the given C N o value the satellite noise can be well approximated by zero-mean Gaussian and covariance from Eq. 9, we have explicitly checked the Gaussianity of the residual distributions. For C N o>40 dB-Hz the distributions were passing classical Gaussianity test, while for smaller C N o values heavier tails in distributions have been observed and some of the tests failed.

Note that the experimental data show also a small noise for C N o larger than 55 dBHz. The observed values are close or even smaller than the correlator base noise level and are probably caused by the insufficient sample size for higher C N o values. Still this effect has been effectively eliminated from the fit model as the parameter a is almost 60 cm 2, which is close to the rough theoretical calculations for the associated hardware. This also allows us to not exclude these points manually as both LS and KF algorithms have shown relatively low sensitivity to small variations in variance models. As the equivalent constant noise model, the additive zero-mean Gaussian noise with σ=2.3 meters was considered. This value was obtained from the same 24 hours data set by taking the mean of all residuals irrespectively whether C N o values were large or not. Although this value can be considered as over-pessimistic for modern higher performance GNSS receivers, we believe that it is representative if one addressed challenging GNSS scenarios with significant NLOS, multipath etc. For simplicity we have not employed an adaptive noise model for Doppler shift measurements and used instead a constant noise model consisting of equivalent 5 cm/s range rate where applicable.

4 System setup

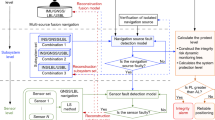

With the purpose to overcome the previously identified issues and to commit with the IMO recommendations for future developments, we have developed a PNT-Unit concept and an operational prototype in order to confirm the the performance under real operational conditions. Here the core goals are the provision of redundancy by support of all on-board PNT relevant sensor data including Differential GNSS (DGNSS) and future backup systems (e.g., eLoran, R-Mode), the design and implementation of parallel processing chains (single-sensor and multi-sensor architectures) for robust PNT data provision and the development of the IM algorithms in order to evaluate the events or conditions that have the potential to cause or contribute to HMI and could, therefore, compromise safety.

The experimental sensor setup for the PNT-Unit development is shown in Fig. 5. For the first scenario (pure GNSS-based positioning) the original sensor measurements were recorded using the multipurpose research and diving vessel Baltic Diver II (length 29 m, beam 6.7 m, draught 2.8 m, GT 146 t) as a base platform. The vessel was equipped with three dual frequency GNSS antennas and receivers (Javad Delta), tactical grade FOG (type IMAR FCAI) and MEMS IMUs, gyrocompass, DVL and echo sounder (see Fig. 6). The IALA (International Association of Marine Aids to Navigation and Lighthouse Authorities) beacon antenna and receiver were employed for the reception of DGNSS code-based corrections. The VHF modem was configured for the reception of RTK (Real-Time Kinematics) phase correction data from the Maritime Ground Based Augmentation System (MGBAS) station located in the port of Rostock [13, 15]. All relevant sensor measurements are provided either directly via Ethernet or via serial to Ethernet adapter to a Box PC, where the measurements are processed in real-time and stored in a SQlite3 database along with the corresponding time stamps. The described setup enables record and replay functionality for further processing of the original sensor data. The system consists of a highly modular hardware platform and a Real-Time software Framework (RTF) implemented in ANSI-C++.

For the first scenario (GNSS-based positioning only) the data have been recorded on the 1st. of September 2014 in a quasi-static scenario, where the vessel was moored at its home port “Alter Fischereihafen” on the river Warnow close to the Rostock port. At this time there was only a weak wind and little waves, so that only minor vessel motion could be observed. The evaluation is based on data (GPS L1 pseudorange and Doppler shift measurements) from the mid ship antenna, which is located besides the main mast of the ship and, therefore, some shadowing effects due to mast can be expected. The chosen environment represents a typical maritime port application. The recorded measurement data for the first scenario constitute the input data for the corresponding Monte Carlo GNSS fault simulator (or, in other words, the software based fault emulator). The general configuration of the simulator allows the user to select either static or dynamic fault profiles including both step-wise (instantaneous) and ramp errors. Step-wise faults simulate measurement additive faults (e.g. signal multipath) while the ramp faults correspond to slowly-varying cumulative errors (e.g. satellite clock drift), although the ramp errors are not addressed within the present work due to requirement of equivalent modifications of RBE-based FDE approaches. The simulated fault profile consisting of amplitude range and fault duration time is configurable for single satellites separately. As the fault impact on the estimated state is strongly influenced by the satellite constellation geometry, the fault onset time is randomly selected within the period the satellite is visible.

The simulation approach consists of adding a step of required amplitude to a particular pseudorange measurement a pre-defined number of times. For each amplitude simulation run the respective pseudorange satellite ID and fault start time are selected randomly while the respective fault duration is kept constant. Once the fault start/end point and satellite ID are defined, the fault of a given amplitude is injected. A particular constraint was imposed in the case of RBE algorithms to ensure that the fault was injected after the filter had converged. The simulated amplitude step ranged between negative and positive values in order to ensure a fair comparison for both snapshot and RBE algorithms. During the Scenario 1 the number of LOS satellites is always higher than seven and the satellite constellation geometry is assumed to be approximately constant during the simulation time with Dilution of Precision (DOP) lower than 3. Although the evaluation time for Scenario 1 can be considered relatively short, it was required to avoid possible variations in DOP. As the recorded GNSS data have been proved (both manually and with corresponding global tests) to be free of significant native GNSS faults, we were able to add in a controlled manner faults to raw code measurements and to count exactly the number of detected errors. The duration of the Scenario 1 with injected GNSS faults is 10 minutes with an output GNSS data rate of 1 Hz. The simulator was configured for 10 simulations per amplitude step of 1 meter with amplitude ranging from -30 to +30 meters.

The sensor data for the Scenario 2 (dynamic scenario used to test the IMU/GNSS integrated system) were recorded using the ferry vessel Mecklenburg-Vorpommern from Stena Lines, which is plying continuously between the ports of Rostock and Trelleborg. The vessel was equipped with essentially the same setup as the one described above with the GNSS antennas placed on the compass deck. Due to the computational complexity of the EKF filter no Monte Carlo simulation has been implemented for the hybrid scenario and the performance of the filters with and without FDE functionality is demonstrated using a single set of data. The advantage of the approach is that the presented data contain true significant GNSS faults and the performance of the EKF with FDE functionality is demonstrated using real (non-simulated) data recorded in open sea with the GNSS faults probably caused by multipath or similar effects.

5 Results

5.1 Scenario 1

Let us start the discussion on FDE performance by considering the accuracy of the implemented techniques for the case of single simulated GNSS faults in a pure GNSS-based approach. The provided figures are generated by converting the solver solution (X,Y,Z) coordinates from ECEF (Earth-Centered-Earth-Fixed) frame to ENU (East-North-Up) coordinate frame and centering them with respect to the RTK estimated mean position, which corresponds to the coordinates (0,0,0). In all non-weighted approaches the measurement noise standard deviation σ=2 meters was used, which is reasonably close to the average GPS L1 noise value of 2.3 meters extracted from reference data employed for adaptive model calculation as described previously in Section 3. The corresponding weighted schemes use the C N o weighting model as described in Section 3, where the noise assumed for each GPS L1 pseudorange is calculated with respect to the C N o value obtained for that range from the receiver. The same holds for RBE-based methods in Scenario 1 and Scenario 2, where the measurement noise covariance matrix R of the EKF is either populated with constant values (i.e. corresponding to σ=2 meters) or is also calculated dynamically depending on reported C N o using Eq. 9.

The top-left plot in Fig. 7 demonstrates the performance of the snapshot LS (non-RBE) algorithm with the measurement noise model assuming fixed measurement noise covariance for all available range measurements (non-weighted scheme). The main position cloud corresponds to the non-contaminated GNSS solutions, while a smaller cloud corresponds to the contaminated solutions, where the pseudorange measurement of one of the available satellites was combined with a step fault of 15 meters (usually, between 7 and 9 satellites are available for the presented scenarios). One can clearly see that the suggested LS FDE mechanism is able to completely eliminate the GNSS fault as the LS algorithm with the FDE functionality never results in the position within the second cloud and, furthermore, the solution spread is slightly improved as some faulty solutions close to the lower-right boundary of the main cloud are also eliminated. The performance of the corresponding LS solution for the case of weighted GNSS measurement model is shown in the top-right plot in Fig. 7. Similarly as in the case of the unweighted approach one can clearly see two distinct clouds of position solutions, where the smaller one corresponds to the time instances, when the satellite fault of 15 meters was added. First of all, one clearly sees the benefit of the adaptive noise model itself as it significantly reduces the spread of the position solutions by downweighting the satellites with low values of C N o (often corresponding to the satellites with lower elevation). Moreover, the FDE mechanism for the weighted approach works fine as well by completely eliminating the second cloud. In this case it is important to remember that due to adaptive noise model the test statistic is, in general, different for non-weighted and weighted LS methods as we will see later.

The results of the corresponding pure GNSS-based EKF are shown in the bottom-left and bottom-right plots in Fig. 7. Both plots demonstrate the impact of the process model as the position solution is far less random compared to the memory-less LS solution. Obviously, when the GNSS fault is applied, it takes a while for the position error to develop towards the offset position due to inertia imposed by the process model. Similarly, as in the case of LS methods, the spread of the position solutions is improved in weighted EKF (WEKF) when compared to non-weighted (EKF) approach, although a slight shift in the mean position solution seems to be preserved. The latter effect can be explained by a slight mismatch of the constant and adaptive noise models in terms of an overall impact with respect to the assumed noise dynamics as well as to the particular satellite geometry. Here again, the proposed FDE mechanism is able to detect and eliminate the corresponding measurement faults. In all cases the adaptive noise approach seems to significantly improve the spread of the solutions around the mean (although the effect of a slight shift of the mean position needs some further investigations) and the proposed FDE schemes were able to eliminate the imposed GNSS fault of 15 meters both in RBE and non-RBE approaches supported with constant and adaptive noise models.

Note that in all cases the solution clouds are not centered around the origin. Here one should recall that the test data have been obtained using both broadcast troposphere and ionosphere models and only code data have been used within the LS solver. Obviously, the real range errors are not Gaussian and non-random errors can be introduced to some of the links depending on the satellite elevation, actual ionosphere state, etc. All these effects can result in significant position offset with respect to the reference position. Obviously, an improved barycentric mean could be expected for solutions supported with ground augmentation services.

Although the performance as shown in Fig. 7 confirms similar functionality of all the approaches, the imposed GNSS fault can be considered relatively large. Although the RBE approaches seem to be far more conservative in taking into account the applied GNSS faults, the general behavior of the approaches can be hardly deduced from a single case. In order to address this issue the FDE exclusion rate statistic (number of detected GNSS faults relative to the total number of injected GNSS faults for a given fault amplitude) is shown in Fig. 8 both for weighted and non-weighted LS and KF methods using the Monte Carlo simulation with induced GNSS faults as discussed before. Although for reasonably large fault amplitudes all the methods converge to a detection rate of 100 %, the performance for moderate fault amplitudes is different. The KF-based FDE schemes are superior to the equivalent snapshot LS methods due to their inherent reliance on the available process model. Still, the provided results represent an averaged behavior of the methods and the impact of each satellite is not clearly visible as the performance statistic could be different from the shown averaged behavior depending on the actual geometry and associated C N o values. Due to the short duration of the test scenario we were not able to sample different satellite geometries and the impact of the geometry is left for future investigation.

The results confirm that FDE KF-based techniques constantly outperform the snapshot techniques when an equivalent measurement noise model is used. This, however, should come at no surprise as the KF has an explicit dynamics model (e.g. static position model for non-inertial approach) which fits nicely to the scenario and the results could be worse when the KF process model does not match the true object dynamics. This is, fortunately, not a problem for the second system (see Scenario 2 below) where the inertial sensors are employed within the prediction step using the methods of strapdown inertial mechanization. In this case the process dynamics is based not on the assumptions on expected motion models (as in Scenario 1), but on a true dynamics provided by a direct integration using the inertial sensors and, therefore, is able to capture all the details of the corresponding motion. The inertial unit provides a true short-term stable dynamics and the FDE mechanism benefits from this information as we can see at the end of this section.

Note that Fig. 8 shows the fault exclusion rate to become rather noisy for both the LS and EKF using the weighted observation model as the rates converge to 100 % only for the fault amplitudes close to 30 meters, whereas the methods using non-adaptive models demonstrate 100 % already at the fault amplitudes of 10 meters. We believe that this is a direct result of the nature of the adaptive noise model which assigns increased measurement noise variance for satellite signals with low C N o values and could lead to situations where even the faults of significant amplitudes can be still considered ’within’ the measurement statistic and, therefore not excluded from the final solution. Note that this does not imply that the position solution is unacceptable as the selected satellite is strongly down-weighted within this solution due to its low C N o value, but the performance figures in terms of the detection rates become significantly lower when compared to non-weighted schemes. Here comes an important conclusion that a direct adoption of the weighted measurement model, although resulting in precision improvement, could also lead to the failure of the FDE (although, probably, not failure in IM itself) or similar mechanisms even for relatively large GNSS faults. Thus, both adaptive noise model and FDE scheme seem to be mutually exclusive strategies: while the FDE scheme tries to check the measurement consistency using a given measurement statistic and eliminates the measurements which violate correct measurement assumption, the weighted approach tries to adjust the statistic to fit the measurements and simply down-weights the measurements of possibly poor quality. Still, this complementary behavior of the two strategies (higher detection rate of non-weighted method and lower spread of weighted solution) could form a basis for a hybrid positioning system, where the FDE mechanism on a non-weighted method is used to detect the GNSS faults, and the weighted measurements of the remaining satellites are used to calculate the solution. Although such algorithm configuration could potentially combine the benefits of both schemes, further research is necessary, especially, with respect to the lower availability of the corresponding position solution.

Obviously, a constant amplitude step can hardly be considered as the most representative sensor failure approach. For example, the performance of the KF-based techniques can become much worse for the ramp-like scenarios, where small amplitude and prolonged duration offsets in one of the measurements, when initially undetected, could force the filter to drift significantly from the true estimate. On the other hand, the step-like faults form a fairly representative error model when one considers multipath effects in maritime environments [18].

In Fig. 9 (left) we can see the average impact of the amplitudes of injected faults (artificial faults introduced in Monte Carlo GNSS fault simulator to the random satellite) on the estimated state (horizontal position) in both LS solver and non-inertial EKF for Scenario 1. For comparison, the impact on the system augmented with FDE functionality is also shown for both strategies. As expected, the RBE methods achieve an improved error performance compared to memory-less LS approaches due to the availability of explicit dynamic models. Consistently, the performance of the KF with FDE is also better than the performance of the snapshot algorithms with FDE as the KF approach results in almost two times smaller maximum position error (note that the exact gain is a trade-off of both process and measurement noises and, therefore, could be different for alternative filter configurations). Moreover, the fault amplitudes which corresponds to the inflection point is also smaller for the KF method compared to the LS techniques and this is consistent with the earlier rise of the exclusion rate curve as shown in Fig. 8 (left). Those values can be also interpreted as HMI for the system user as they represent the maximum impact in the estimated position caused by the undetected measurements faults.

5.2 Scenario 2

All results above have been demonstrated for pure GNSS-based approaches for a static scenario, where the FDE functionality has been embedded into either the snapshot LS or the corresponding EKF with explicit assumptions regarding the process dynamics (e.g. constant velocity model). Although the obtained results demonstrated a consistent performance improvement of FDE schemes over the methods without FDE functionality, the question remains on how the FDE methods would perform in more advanced hybrid (sensor fusion) solutions (Scenario 2) and dynamic scenarios (the vessel is moving). In order to address this problem we evaluate the FDE performance of the TC IMU/GNSS architecture (see [8] for mathematical details) and assess, how the presence of relatively high performance IMU affects the final accuracy of the integrated navigation solution during the coastal operation of the vessel Mecklenburg- Vorpommern. Note that the availability of the FOG IMU changes only the process model of the corresponding EKF as well as the numerical values of the association estimation uncertainty (covariance), while the measurement model for the tightly-coupled architecture remains identical to that of non-inertial EKF and all the discussion on FDE functionality from Section 3 is also valid for the presented algorithm.

Figure 9 (right) shows the horizontal position error (HPE) in the case of a real (non-simulated) fault in measurement data during a coastal approach. In order to distinguish between the effect of inertial smoothing and FDE, additionally the HPE of a loosely-coupled (LC) EKF (EKF fed with the position solution from LS solver without FDE mechanism) and a LS solution without FDE (referred as SPP in Fig. 9 (right)) are shown. Within the loosely-coupled architecture the SPP position and velocity solutions serve as direct observations instead of using separate measurement fusion as in the TC architecture, and, therefore, a FDE scheme based on single satellite signals is not applicable. One sees that the smoothing behavior of both TC and LC EKF is comparable, but during the measurement fault, the LC solution starts to slowly follow the wrong position observation from the non-FDE SPP, whereas the FDE in the TC EKF ensures that the faulty measurements are removed from the estimated navigation solution. A manual inspection of the faults during that 24h time span (not shown) leads to the conclusion, that all significant faulty measurements are detected and removed by the TC EKF augmented with the FOG IMU. One also expects the quality of the inertial sensors not to play a significant role for the FDE algorithm performance, at least for the step GNSS errors, although the performance could be different for the cases of ramp errors. We believe that the inertial sensor quality (in terms of additive noise and bias stability) is only important for bridging GNSS outages (standalone strapdown inertial mechanization) - i.e. for contingency functionality and reasonable FDE performance for step GNSS faults can be essentially achieved even with modern MEMS sensors of consumer grade, establishing the path for the FDE methods to be adopted even in lower-cost applications. Clearly, an in-depth statistical analysis is still necessary in order to confirm that also smaller GNSS fault amplitudes can be also detected using, for example, lower cost MEMS IMUs.

6 Summary and outlook

Within this preliminary work we have successfully demonstrated an application of the proposed FDE mechanisms in snapshot and RBE-based positioning algorithms for maritime applications using both non-inertial GNSS-based positioning (Scenario 1) and hybrid inertial/GNSS EKF-based approach (Scenario 2). The proposed methods form a solid foundation for the construction of a more reliable and robust PNT-Unit, where state-of-the-art hybrid navigation algorithms are augmented with integrity monitoring functionality to ensure the system performance in the presence of GNSS faults. The presented work is implemented within the framework of an integrated PNT-Unit with an additional integrity monitoring functionality, where the FDE mechanism provides consistent improvements in terms of the horizontal accuracy both in LS and RBE methods. An interesting behavior of the proposed FDE mechanism has been noticed for the methods with C N o-dependent measurement noise models as the fault detection rate had shown worse performance compared to that of the non-adaptive methods. The proposed methods were evaluated using either real measurements combined with Monte-Carlo simulation of GNSS faults (Scenario 1) or employing true GNSS fault in hybrid approach (Scenario 2). Although only a port operation scenario and one example of a true GNSS fault in open sea was considered, we believe the presented results to be general enough and the scheme could be adopted for other applications.

Further work is planned in extending the presented concepts for the GNSS Doppler shift measurements both in snapshot and RBE algorithms and more detailed analysis is required to assess the fault impact not only on the position, but also on velocity and the attitude (in IMU/GNSS integrated approaches) solutions. Correspondingly, the proposed techniques have to be systematically verified for hybrid inertial and GNSS navigation systems using presented Monte Carlo or similar approaches including DVL sensors. Moreover, while implementing the proposed schemes, special attention has to be paid to the challenging problem of the memory effects in RBE schemes as the typical KF-based implementations are, in principle, infinite memory filters. Clearly, the associated upper error bounds in estimated position, velocity and attitude have to be taken into account for the analysis to be considered as complete. Finally, robust schemes should be developed, in order to enable the system real-time channel selection functionality, where the PNT-Unit would switch between different channels (algorithms with different sensor combinations or sensor/filter configurations) according to the available FDE results and corresponding channel integrity information.

References

(2014) Development of an e-navigation strategy implementation plan

Borre K, Strang G (2010) Algorithms for global positioning Wellesley-Cambridge press

Diesel J, Luu S (1995) GPS/IRS AIME: Calculation of thresholds and protection radius using chi-square methods. In: Proceedings of the 8th international technical meeting of the satellite division of the institute of navigation (ION GPS 1995). CA, Palm Springs, pp 1959–1964

Foxlin E (2005) Pedestrian tracking with shoe-mounted inertial sensors. IEEE Comput. Graph. Appl. 25 (6):38– 46

Grewal MS, Andrews AP (2001) Kalman Filtering. Theory and Practice using MATLAB, 2nd edition. John Wiley & Sons, New York

Groves P, Jiang Z (2013) Height aiding, C/N0 weighting and consistency checking for GNSS NLOS and multipath mitigation in urban areas. J Navig 66(5):653–669

Groves P, Jiang Z, Rudi M, Strode P (2013) A portfolio approach to NLOS and multipath mitigation in dense urban areas. In: Proceedings of the 26th international technical meeting of the satellite division of the institute of navigation (ION GNSS+ 2013). Nashville Convention Center, Nashville, Tennessee, pp 3231–3247

Groves PD (2007) Principles of GNSS, Inertial and Multisensor IIntegrated Navigation Systems (GNSS Technology and Applications) Artech House Publishers

HELCOM (2014) Annual report on shipping accidents in the baltic sea area during 2012

Joerger M, Pervan B (2010) Sequential residual-based RAIM. In: Proceedings of the 23rd international technical meeting of the satellite division of the institute of navigation (ION GNSS 2010), pp. 3167–3180. OR

Kuusniemi H (2005) User-level reliability and quality monitoring in satellite-based personal navigation. Ph.D. thesis, Tampere University of Technology, Tampere

Lee YC, O’Laughlin DG (2000) A performance analysis of a tightly coupled GPS/inertial system for two integrity monitoring method. Navig 47(3):175–189

Minkwitz D, Schlüter S., Beckheinrich J (2010) Integrity assessment of a maritime carrier phase based GNSS augmentation system. In: ION GNSS. Institute of Navigation, Portland, USA, p 2010

Moore T, Hill C, Norris A, Hide C, Park D, Ward N (2008) The potential impact of GNSS/INS integration on maritime navigation. J Navig 61:221–237

Noack T, Engler E, Klisch A, Minkwitz D (2009) Integrity concepts for future maritime ground based augmentation systems. In: Proceedings of the 2nd GNSS vulnerabilities and solutions conference. baska, Croatia

Parkinson BW, Axelrad P (1988) Autonomous GPS integrity monitoring using the pseudorange residual. Navigation: J Inst Navig 35(2):255–274

Petovello MG (2002) Real-time integration of a tactical-grade imu and gps for high-accuracy positioning and navigation. Ph.D. thesis, University of Calgary, Calgary, Alberta

Ryan SJ (2002) Augmentation of DGPS for marine navigation. Ph.D. thesis, Department of Geomatics Engineering, University of Calgary, Calgary, Alberta, Canada

Teunissen P (1998) GPS for Geodesy, chap. Quality control and GPS Springer

Thrun S, Burgard W, Fox D (2005) Probabilistic robotics (intelligent robotics and autonomous agents) the MIT press

Walter T, Walter T, Enge P (1995) Weighted RAIM for precision approach

Wang J, Stewart M, Tsakiri M (1998) Stochastic modeling for static GPS baseline data processing. J Surv Eng 124(4):171–181

Wang JG (2008) Test statistics in Kalman filtering. J of Global Positioning Syst 7(1):81–90

Wieser A, Brunner F (2000) An extended weight model for GPS phase observations. Earth Planet Space 52:777–782

Acknowledgments

The authors would like to thank Baltic Taucher GmbH and Stena Lines and especially the crews of the vessels BALTIC DIVER II and MECKLENBURG VORPOMMERN for their support during the measurement activities. The authors are also grateful for the assistance of their colleagues Stefan Gewies, Carsten Becker, Anja Hesselbarth and Uwe Netzband. The authors would like to thank the reviewers for their valuable comments and suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Open Access

This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

This article is part of Topical collection on Young Researchers Seminar ECTRI-FERSI-FEHRL 2015

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ziebold, R., Lanca, L. & Romanovas, M. On fault detection and exclusion in snapshot and recursive positioning algorithms for maritime applications. Eur. Transp. Res. Rev. 9, 1 (2017). https://doi.org/10.1007/s12544-016-0217-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12544-016-0217-5