Abstract

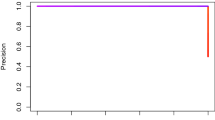

Binary classification on imbalanced data, i.e., data with a large skew in the class distribution, is a challenging problem. Classifiers are commonly evaluated via the receiver operating characteristic (ROC) curve, and techniques for developing classifiers that optimize the area under the ROC curve have been proposed. However, for imbalanced data, the ROC curve tends to give an overly optimistic view. To address this disadvantage, we propose an approach based on the Precision–Recall (PR) curve under the binormal assumption: we choose the classifier that maximizes the area under the binormal PR curve. The asymptotic distribution of the resulting estimator is derived. Simulations, as well as real data results, indicate that the binormal Precision–Recall method outperforms approaches based on the area under the ROC curve.
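To make the contrast between the two curves concrete, the following is a minimal sketch of the area under a binormal PR curve, assuming that classifier scores are normally distributed within each class. The function names, the threshold grid, and the trapezoidal integration are illustrative choices and are not taken from the paper.

```python
import math


def norm_sf(x):
    """Upper tail probability 1 - Phi(x) of the standard normal distribution."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def binormal_auc_roc(mu_p, sigma_p, mu_n, sigma_n):
    """Area under the binormal ROC curve: Phi((mu_p - mu_n) / sqrt(sigma_p^2 + sigma_n^2))."""
    return 1.0 - norm_sf((mu_p - mu_n) / math.sqrt(sigma_p ** 2 + sigma_n ** 2))


def binormal_auc_pr(mu_p, sigma_p, mu_n, sigma_n, prevalence, n_grid=2001):
    """Numerically integrate precision over recall for a binormal score model.

    prevalence is the proportion of positives (an assumed input here).
    """
    lo = min(mu_p - 8 * sigma_p, mu_n - 8 * sigma_n)
    hi = max(mu_p + 8 * sigma_p, mu_n + 8 * sigma_n)
    recalls, precisions = [], []
    for k in range(n_grid):
        # Sweep the threshold from high to low so that recall increases.
        c = hi - (hi - lo) * k / (n_grid - 1)
        tpr = norm_sf((c - mu_p) / sigma_p)  # recall
        fpr = norm_sf((c - mu_n) / sigma_n)
        denom = prevalence * tpr + (1.0 - prevalence) * fpr
        if denom <= 0.0:
            continue  # precision is undefined when nothing is predicted positive
        recalls.append(tpr)
        precisions.append(prevalence * tpr / denom)
    # Trapezoidal rule over the recall axis.
    area = 0.0
    for i in range(1, len(recalls)):
        area += (recalls[i] - recalls[i - 1]) * (precisions[i] + precisions[i - 1]) / 2.0
    return area


if __name__ == "__main__":
    for prev in (0.5, 0.1, 0.01):
        print(prev, binormal_auc_roc(1, 1, 0, 1), binormal_auc_pr(1, 1, 0, 1, prev))
```

In this sketch the area under the ROC curve does not depend on the prevalence, while the area under the PR curve shrinks as positives become rarer, which is the behaviour that motivates working with the PR curve on imbalanced data.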

7 Appendix

7.1 Appendix A: Proof of Proposition 2

The population version of the problem can be described as

We solve the optimization problem (7.15) separately for each \(b\in \{-1,1\}\) to find the overall maximum. We use the method of Lagrange multipliers to find the maxima of the function subject to the four equality constraints, with the objective function f written as

Setting the gradient \(\nabla _{{\varvec{\lambda }}} f(\mu _p,\mu _n,\sigma _p,\sigma _n,\beta ,{\varvec{\lambda }})=0\) yields the system of constraint equations listed in (7.15), and setting the gradient \(\nabla _{\mu _p,\mu _n,\sigma _p,\sigma _n}f=0\) gives the Lagrange multipliers \({\varvec{\lambda }}\) as functions \(\lambda _j=\lambda _j(b,\beta ,\mu _p,\mu _n,\sigma _p,\sigma _n)\) for \(j=1,\ldots ,4\), as follows.

where \(\phi (x)\) denotes the probability density function of the standard normal distribution, i.e., \(\phi (x)=\frac{1}{\sqrt{2\pi }} e^{-x^2/2}\).

With the gradient \(\nabla _{\beta }f=0\), we have

7.2 Appendix B: Proof of Theorem 1

Let \({\bar{X}}_{11}=\sum \limits _{i=1}^{n_1}X_{11i}/n_1\), \({\bar{X}}_{12}=\sum \limits _{i=1}^{n_1}X_{12i}/n_1\), \({\bar{X}}_{01}=\sum \limits _{i=1}^{n_0}X_{01i}/n_0\), \({\bar{X}}_{02}=\sum \limits _{i=1}^{n_0}X_{02i}/n_0\), \({\hat{\sigma }}_{11}^2=\frac{1}{n_1}\sum \limits _{i=1}^{n_1}\left( X_{11i}-{\bar{X}}_{11}\right) ^2\), \({\hat{\sigma }}_{12}^2=\frac{1}{n_1}\sum \limits _{i=1}^{n_1}\left( X_{12i}-{\bar{X}}_{12}\right) ^2\), \({\hat{\sigma }}_{01}^2=\frac{1}{n_0}\sum \limits _{i=1}^{n_0}\left( X_{01i}-{\bar{X}}_{01}\right) ^2\), \({\hat{\sigma }}_{02}^2=\frac{1}{n_0}\sum \limits _{i=1}^{n_0}\left( X_{02i}-{\bar{X}}_{02}\right) ^2\), \({\hat{c}}_1=\frac{1}{n_1}\sum \limits _{i=1}^{n_1}\left( X_{12i}-{\bar{X}}_{12}\right) \left( X_{11i}-{\bar{X}}_{11}\right) \), and \({\hat{c}}_0=\frac{1}{n_0}\sum \limits _{i=1}^{n_0}\left( X_{02i}-{\bar{X}}_{02}\right) \left( X_{01i}-{\bar{X}}_{01}\right) \). We can replace the means and variances in (7.15) by the sample versions, and let \(({\hat{b}},{\hat{\beta }})\) be the corresponding solution. Then \({\hat{\mu }}_p={\hat{b}}{\bar{X}}_{11}+{\hat{\beta }}{\bar{X}}_{12}\), \({\hat{\mu }}_n={\hat{b}}{\bar{X}}_{01}+{\hat{\beta }}{\bar{X}}_{02}\), \({\hat{\sigma }}_p^2=\sum \limits _{i=1}^{n_1}\left( {\hat{b}}X_{11i}-{\hat{b}}{\bar{X}}_{11}+{\hat{\beta }}\left( X_{12i}-{\bar{X}}_{12}\right) \right) ^2/n_1\), and \({\hat{\sigma }}_n^2=\sum \limits _{i=1}^{n_0}\left( {\hat{b}}X_{01i}-{\hat{b}}{\bar{X}}_{01}+{\hat{\beta }}\left( X_{02i}-{\bar{X}}_{02}\right) \right) ^2/n_0\). Consequently, we can get the estimating equation \({\hat{g}}\) as follows, i.e., the sample version of Equation (7.18).
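The plug-in quantities defined above can be sketched in a few lines. The snippet below is only an illustration for a single class with two predictors. The helper names are hypothetical, and the variances use the divisor \(n\) (not \(n-1\)), matching the definitions of \({\hat{\sigma }}^2\) and \({\hat{c}}\) above.

```python
def mean(xs):
    """Sample mean."""
    return sum(xs) / len(xs)


def cov_mle(xs, ys):
    """Sample covariance with divisor n, as in the definitions of c-hat and sigma-hat^2."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


def plug_in_score_moments(x1, x2, b, beta):
    """Mean and variance of the linear score b * X1 + beta * X2 within one class.

    x1, x2: observations of the first and second predictor for that class.
    b: sign in {-1, 1}; beta: coefficient of the second predictor.
    """
    scores = [b * u + beta * v for u, v in zip(x1, x2)]
    mu_hat = b * mean(x1) + beta * mean(x2)
    var_hat = cov_mle(scores, scores)
    return mu_hat, var_hat


if __name__ == "__main__":
    # Made-up numbers, used only to exercise the functions.
    x1 = [1.0, 2.0, 4.0, 7.0]
    x2 = [0.5, -1.0, 3.0, 2.0]
    print(plug_in_score_moments(x1, x2, b=1, beta=0.7))
```

Expanding the square shows that the score variance equals \({\hat{b}}^2{\hat{\sigma }}_{11}^2+2{\hat{b}}{\hat{\beta }}{\hat{c}}_1+{\hat{\beta }}^2{\hat{\sigma }}_{12}^2\) for the positive class (and analogously for the negative class), so only the sample means, variances, and covariances are needed.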

where \({\hat{\lambda }}_j=\lambda _j({\hat{b}},{\hat{\beta }},{\hat{\mu }}_p,{\hat{\mu }}_n,{\hat{\sigma }}_p,{\hat{\sigma }}_n)\) for \(j=1,\ldots ,4\).

Expand the estimating equation \({\hat{g}}\) in a first-order Taylor series around \(\beta _0\) as

where \(g'\) denotes the first derivative of g with respect to \(\beta \) and \(g(\beta )\) is expressed in Eq. (7.18).

As a result,

By the weak law of large numbers (WLLN) and the sample version of Eq. (7.17), obtained by plugging in the sample means and variances as estimates of their population counterparts, we have

where \(\lambda _{j0}^{'}=\frac{\partial {\lambda _{j0}}}{\partial {\beta _0}}\) for \(j=1,\ldots ,4\).

Using the independence structure among the predictors and taking \(\frac{n_1}{n} \rightarrow \pi ,~\frac{n_0}{n} \rightarrow 1-\pi \) into account, the Central Limit Theorem (CLT) gives

where \(\Sigma \) is a diagonal matrix with the elements of vector \(\Big (\frac{\sigma _{11}^2}{\pi }, \frac{\sigma _{12}^2}{\pi }, \frac{\sigma _{01}^2}{1-\pi }, \frac{\sigma _{02}^2}{1-\pi }, \frac{\mu _{114}-\sigma _{11}^4}{\pi }, \frac{\mu _{124}-\sigma _{12}^4}{\pi }, \frac{\mu _{014}-\sigma _{01}^4}{1-\pi }\), \(\frac{\mu _{024}-\sigma _{02}^4}{1-\pi }, \frac{\sigma _{11}^2\sigma _{12}^2}{\pi }, \frac{\sigma _{01}^2\sigma _{02}^2}{1-\pi }\Big )^T\) on the main diagonal, and \(\mu _{ij4}=\text {E}\left[ (X_{ij}-\mu _{ij})^4\right] \) with \(i \in \{0,1\}\) and \(j \in \{1,2\}\).
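As a small illustration, the ten diagonal entries of \(\Sigma \) can be assembled directly from the class proportion, the variances, and the fourth central moments. The function name and the dictionary layout keyed by \((i,j)\) are hypothetical conveniences. The example values additionally assume Gaussian predictors with unit variance, for which \(\mu _{ij4}=3\sigma _{ij}^4\).

```python
def sigma_diagonal(pi, var, mu4):
    """Diagonal of Sigma in the order given in the text.

    pi:  limiting proportion of positives, 0 < pi < 1.
    var: dict mapping (i, j) to sigma_ij^2, for i in {0, 1} and j in {1, 2}.
    mu4: dict mapping (i, j) to the fourth central moment mu_ij4.
    """
    return [
        # Entries for the four sample means.
        var[(1, 1)] / pi,
        var[(1, 2)] / pi,
        var[(0, 1)] / (1 - pi),
        var[(0, 2)] / (1 - pi),
        # Entries for the four sample variances.
        (mu4[(1, 1)] - var[(1, 1)] ** 2) / pi,
        (mu4[(1, 2)] - var[(1, 2)] ** 2) / pi,
        (mu4[(0, 1)] - var[(0, 1)] ** 2) / (1 - pi),
        (mu4[(0, 2)] - var[(0, 2)] ** 2) / (1 - pi),
        # Entries for the two sample covariances.
        var[(1, 1)] * var[(1, 2)] / pi,
        var[(0, 1)] * var[(0, 2)] / (1 - pi),
    ]


if __name__ == "__main__":
    keys = [(1, 1), (1, 2), (0, 1), (0, 2)]
    var = {k: 1.0 for k in keys}  # assumed unit variances
    mu4 = {k: 3.0 for k in keys}  # Gaussian assumption: mu4 = 3 * sigma^4
    print(sigma_diagonal(0.2, var, mu4))
```

Because every entry for the positive class is divided by \(\pi \), a small proportion of positives inflates the corresponding variances, which reflects how little information the minority class contributes.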

Consequently, by the multivariate delta method, we have

Finally, by Eq. (7.21), the asymptotic distribution of \({\hat{\beta }}\) is

where \(V=D/B^2\) with B and D defined in Eqs. (7.22) and (7.24), respectively.
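One practical use of the variance \(V=D/B^2\) is an approximate confidence interval for the coefficient. The sketch below assumes that the limiting result is stated on the \(\sqrt{n}\) scale, i.e., that \(\sqrt{n}({\hat{\beta }}-\beta _0)\) is asymptotically normal with variance V, which is consistent with the \(1/\pi \) and \(1/(1-\pi )\) scaling of \(\Sigma \). The function name and the numerical inputs are hypothetical, and in practice B and D would be replaced by plug-in estimates.

```python
import math


def wald_interval(beta_hat, B, D, n, z=1.959963984540054):
    """Approximate 95% Wald interval for beta based on V = D / B^2.

    Assumes sqrt(n) * (beta_hat - beta_0) is approximately N(0, V),
    so the standard error of beta_hat is sqrt(V / n).
    """
    V = D / B ** 2
    se = math.sqrt(V / n)
    return beta_hat - z * se, beta_hat + z * se


if __name__ == "__main__":
    # Made-up values for illustration only.
    print(wald_interval(beta_hat=0.5, B=2.0, D=4.0, n=100))
```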

Cite this article

Liu, Z., Bondell, H.D. Binormal Precision–Recall Curves for Optimal Classification of Imbalanced Data. Stat Biosci 11, 141–161 (2019). https://doi.org/10.1007/s12561-019-09231-9

DOI: https://doi.org/10.1007/s12561-019-09231-9