Abstract

The recent financial and sovereign debt crises highlighted the interdependence between bank and sovereign default risk and showed that major shocks may lead to a self-reinforcing negative spiral. In this paper, we analyse the pattern of interaction between bank and sovereign default risk by endogenously estimating the timing of structural breaks. The endogenous approach avoids the problem of choosing the number and location of important turning points, which arises with the exogenous selection of break dates commonly applied in the literature. In addition, it shows which (if any) of the many exogenously proposed breaks are of particular importance to a specific economy, which can help policy makers structure their actions accordingly. Using Spain during the 2008–2012 period as an illustrative example, we find evidence of three distinct phases, separated by breaks in early January and mid-May 2010. The three phases differ markedly in the bank–sovereign interaction, and we detect a bi-directional relationship only during the interim phase, i.e. at the very peak of the European sovereign debt crisis. We show that the endogenously identified turning points coincide with important public events that affect investors’ perception of the government’s capacity and willingness to repay debt and support distressed banks. Finally, we provide evidence that the structural dependence in the system extends to the interaction between bank and sovereign default risk volatility.

1 Introduction

The global financial crisis, which started in the US in mid-2007 and reached its critical point in September 2008 with the collapse of Lehman Brothers, rapidly spread to Europe, strongly affecting a number of financial institutions. The financial sector downturn was immediately followed by massive government interventions to avoid the collapse of the entire financial system. In the absence of a common European policy to save the banking sector, European governments adopted a variety of national rescue measures (Petrovic and Tutsch 2009), many of which were rooted in the popular belief that government guarantees are a largely costless way to prevent bank defaults (König et al. 2014). However, government guarantees to the banking sector and bank bailout programmes caused a deterioration of public finances and fostered the rise of the sovereign debt crisis in Europe.Footnote 1

The perceived deterioration in sovereign creditworthiness further destabilized the banking sector through guarantee, asset and collateral channels (De Bruyckere et al. 2013). First, increased sovereign credit risk reduced both the quality of banks’ assets, through their large holdings of domestic sovereign debt, and the value of the collateral banks can pledge to obtain funding. The asset channel was particularly pronounced in developed economies, which generally show a strong home bias in their sovereign portfolios (Davies and Ng 2011). Second, the increase in sovereign credit risk worsened investors’ perceptions of the value and credibility of the implicit and explicit government guarantees that benefit banks. König et al. (2014), for example, note that sizable guarantee schemes eroded the credibility of government guarantees and induced a functional interdependence between the likelihood of government default and bank runs. Leonello (2018) provides a model showing that, even in the absence of banks’ holdings of sovereign debt, government guarantees play a key role in the bank-sovereign nexus. Consequently, the sizable rescue schemes, initially aimed at stabilizing domestic financial systems, increased the interdependence of banks and sovereigns. Since then, given its direct consequences for overall financial stability, the relationship between the financial and public sectors has become a primary concern of policymakers and supervisory authorities. Nevertheless, as Merler and Pisani-Ferry (2012) emphasize, few policy actions have sought to break this negative feedback loop.

The emergence of the bank-sovereign loop is documented in the academic literature from both theoretical (Acharya et al. 2014; Adler and Lizarazo 2015; Bocola 2016; Brunnermeier et al. 2016; Cooper and Nikolov 2018; Leonello 2018; Farhi and Tirole 2018; Abad 2019) and empirical (Mody and Sandri 2012; Alter and Schüler 2012; Acharya et al. 2014) perspectives. The general conclusion is that before government interventions, risk was transferred mainly from banks to sovereigns, whereas after the interventions, sovereign credit spreads became an important determinant of banking sector credit spreads. To draw inferences about the dynamics of bank-sovereign default risk interaction, a common approach in the academic literature is to divide the overall sample period into several stages and then analyse differences in the risk transfer across them. However, the literature offers no consensus on either the number of phases or the policy events that should serve as the relevant break points. In addition, in international samples, the same exogenously defined break dates are commonly imposed on all countries considered. Mody and Sandri (2012), for example, distinguish three phases of the eurozone sovereign and banking crisis and take as the crucial turning points the rescue of Bear Stearns in March 2008 and the nationalization of Anglo Irish in January 2009. Similarly, Acharya et al. (2014) use the collapse of Lehman Brothers in September 2008, the first bailout in Ireland in late September 2008, and the bailout in Sweden at the end of October 2008 to divide their sample into three stages. Alter and Schüler (2012), on the other hand, follow BIS (2009) and consider six stages for the 2007–2010 sample period. Finally, De Bruyckere et al. (2013) conduct their analysis on a year-by-year basis.

Empirical research on changes in the dynamics of bank-sovereign default risk interaction has, in principle, two options: either to exogenously impose break dates that might be expected to alter the linkages between the two sectors, or to search for the break dates endogenously. While the first option has been commonly applied in the previous literature, research based on endogenously identified break points is lacking. In this paper, we contribute to the existing literature by estimating important turning points in the bank-sovereign nexus within a formal econometric framework. Specifically, we apply the procedures of Bai et al. (1998) and Bai (1997) to test for structural change of unknown timing in the short-run dynamic relation between sovereign and banking sector default risk. That is, unlike previous studies, we do not impose any specific event (i.e. break date) ex ante; rather, we search for potential structural breaks endogenously. Once the turning points are estimated, we search for public events at the national and supranational level around the estimated break points. We also contribute to the existing literature by examining not only mean but also volatility transmission in returns within the VAR-BEKK-GARCH framework.Footnote 2 We focus on credit default swap (CDS) spreads as a directly observable market indicator of default risk and use Spain during the 2008–2012 period as an illustrative example.

The Bai et al. (1998) approach offers several advantages over ex-ante sample splitting. First, the methodology makes effective use of the information contained in the data and therefore does not depend on an a priori decision about the exact break date (which is normally not known with certainty). Second, the endogenous detection of the relevant turning points can take into account different crisis scenarios: out of the plethora of breaks proposed in the literature, the procedure allows us to pinpoint those that are of particular importance for a specific country. Indeed, the literature has shown that there are important differences in the causes and development of the sovereign debt crisis across countries (Fernández-Villaverde et al. 2013; Quaglia and Royo 2015). Moreover, Leonello (2018) shows in a theoretical model that the effect of government guarantees on the bank-sovereign nexus could be positive or negative depending on the specific characteristics of the economy and the nature of the banking crisis. Therefore, an approach based on country-specific information provides additional insight for the design of an adequate national policy response to the negative bank-sovereign loop. Third, the Bai et al. (1998) procedure is flexible enough to allow all or only some of the model parameters to change. From the policy perspective, breaks in the slope coefficients are of particular interest, as they reflect shifts in the pattern of interaction between sovereign and banking sector default risk.

Our main results are as follows. First, we find evidence of structural change in the short-run dynamic relation between sovereign and banking sector default risk during the period analysed. The endogenous search for break dates yields two significant break points, which mark three distinct phases in the Spanish bank-sovereign system: November 2008 to December 2009, January 2010 to May 2010, and June 2010 to July 2012. A closer inspection of the changes in the model parameters shows that the main source of system instability is the change in the slope coefficients rather than in the intercept, which indicates a clear shift in the interaction between bank and sovereign default risk.

Second, we provide empirical evidence that the selection of the break date matters for drawing inferences about the dynamic relationship between bank and sovereign credit risk. In particular, we find significant differences in the lead–lag relationship between banking and sovereign CDS spread changes from one phase to another. The first phase is characterized by a unidirectional bank-to-sovereign relationship. In contrast, the last phase is characterized by a unidirectional sovereign-to-bank relationship. Evidence of a two-way bank-sovereign influence, however, is found only for the interim period between January 2010 and May 2010.

Third, we find that the endogenously identified break dates coincide with identifiable public events that affect investors’ perception of the government’s capacity and willingness to repay debt and support distressed banks. Specifically, the first break detected for the full model, in which all the parameters are allowed to change, corresponds to January 6, 2010. This date falls 2 days before the release of the official report on irregularities in Greek government deficit reporting and could be associated with the “wake-up call” effect of the Greek crisis. The first structural break for the partial structural change model, in which only the slope coefficients are allowed to change, corresponds to January 28, 2010. This is exactly the day on which the European Commission approved bank recapitalization measures in Spain, an event that increased investors’ expectations of government support to distressed banks. The second break, for both the full and the partial structural change model, corresponds to May 12, 2010. It coincides exactly with the public announcement of austerity measures by the Spanish government, and closely follows the creation of the temporary rescue fund for eurozone countries and the first EU-IMF bailout of Greece.

Finally, we find evidence that the relationship between banks and sovereigns extends to default risk volatility. Specifically, the conditional volatility of sovereign CDS returns is significantly affected by the lagged volatility of banking sector CDS returns, and vice versa. We further provide preliminary evidence that volatility transmission is also subject to structural breaks, and that the endogenous break dates coincide with those previously detected for the returns.

The remainder of the paper is organized as follows. Section 2 discusses our choice of Spain as an illustrative example. Section 3 describes the data. Section 4 describes econometric tests for structural change and reports the main empirical findings. Section 5 analyses volatility transmission through the BEKK-GARCH framework. Section 6 presents the study’s conclusions.

2 Spain as an illustrative example

In the context of the European financial and sovereign debt crisis, Spain provides a suitable setting to analyse the dynamic relationship between bank and sovereign credit risk. To be precise, the initial trigger (the banking sector downturn, followed by government interventions) was magnified in Spain by two potential drivers of the feedback loop: the large financial sector and high exposure to domestic sovereign debt. Quaglia and Royo (2015) argue that Spain experienced a full-fledged sovereign debt crisis during the period 2009–2012. We therefore expect bank-sovereign feedback effects to have been important in Spain during the period we examine. Moreover, given the size of its economy, Spain has received attention in recent years as one of the countries with the greatest impact on the European CDS market (Kalbaska and Gątkowski 2012; Alter and Beyer 2014).

The Spanish economy entered the global financial crisis with large accumulated imbalances and a huge real estate bubble. The housing boom, which lasted from the mid-1990s to 2007, originated in demographic trends (the entry into the market of the baby boom generation and high immigration), legal developments (taxation and regulation) and favourable interest rates following euro membership (Santos 2017). The economic expansion, lower mortgage fees and favourable tax treatment of homeownership encouraged households to demand mortgages. Household debt as a percentage of disposable income rose from 61% in 1997 to 139% in 2007, while nominal property prices more than tripled (IMF 2012). In parallel, banks were extending massive credit to the construction and real estate sectors which, at the same time, were the main drivers of Spanish economic activity. The overall result was an excessive exposure of the banking sector to real estate. At the peak, household mortgages and loans to the construction and real estate sectors amounted to 65% and 45% of GDP, respectively, and represented 62% of the banking sector’s loan portfolio (Jimeno and Santos 2014; Akin et al. 2014; Santos 2017). The increasing exposure to real estate was financed mainly through wholesale markets and by borrowing from external sources. As a consequence, the banking sector was severely affected once access to financing in international markets was interrupted.

In the pre-crisis period, the Spanish banking sector was characterized by the coexistence of two types of entities: traditional banks and Cajas, the unlisted regional savings and loans institutions with a particular governance structure, which accounted for around 50% of the sector (Santos 2017). The accumulated imbalances were particularly pronounced in the Cajas. At the peak, their exposure to real estate amounted to 70% of the total loan portfolio, whereas for the traditional banks the share of real estate loans was lower, at 55% (Jimeno and Santos 2014; Santos 2017). A further aggravating factor is that the credit boom was characterized by overly lax lending standards and excessive risk-taking, and it was precisely the Cajas that granted mortgages with the highest loan-to-value ratios (Akin et al. 2014). This particular structure of the Spanish credit market contributed substantially to the banking sector’s sensitivity to major economic shocks.

The imbalances accumulated during the pre-crisis boom aggravated the effects of the global financial crisis by uncovering the vulnerabilities of the Spanish financial system. Jimeno and Santos (2014) emphasize three key characteristics that made the Spanish economy particularly fertile ground for further crisis development: the high dependence of economic activity on the construction and real estate sectors, the structure and characteristics of the banking system, and the excessive dependence on external financing. Consequently, after the real estate bubble burst in 2008, Spain entered a recession, and credit institutions experienced substantial increases in non-performing loans as well as wholesale funding runs. The banking sector was consolidated through interventions, mergers and takeovers. For example, the number of Cajas fell from 45 (operating in June 2010) to only 11 by March 2011. The Spanish government took measures to build confidence in the banking system and stabilize financial markets. In October 2008, the government announced the provision of guarantees and liquidity to eligible financial institutions, and in June 2009 it created a bank bailout fund, the Fund for Orderly Bank Restructuring (FROB), to assist with the consolidation and restructuring of the domestic banking system. These measures strengthened the state’s linkages with the banking sector and increased the probability of state intervention as perceived by market participants.

Another factor magnifying the feedback effects between the banking and sovereign sectors is the size of the Spanish financial system, which is dominated by banks that are large relative to the size of the economy. In such a setting, investors may anticipate sizable budgetary costs of maintaining financial stability and doubt the fiscal capacity of the country. Merler and Pisani-Ferry (2012) argue that the perceived cost of bank rescues is precisely the channel through which credit risk can spill over from banks to the sovereign. Gerlach et al. (2010) show that the size of a country’s banking sector affects sovereign CDS spreads: the larger the sector, the higher the probability that the state will rescue banks in times of crisis. Along the same lines, Dieckmann and Plank (2012) document that the magnitude of the private-to-public risk transfer depends on the relative importance of a country’s financial system, and that the sensitivity of CDS spreads to the health of the financial system is further magnified for EMU countries.

Finally, financial institutions in Spain are heavily exposed to home sovereign debt: their exposure to the home sovereign amounts to 113% of Tier 1 capital (Blundell-Wignall and Slovik 2010). In such a setting, the interconnectedness between the state and the banking sector is expected to be stronger. De Bruyckere et al. (2013) show that higher sovereign debt exposures lead to more contagion between bank and sovereign default risk. In addition, Adler and Lizarazo (2015) provide a theoretical model in which banks’ high exposure to domestic sovereign debt gives rise to a fragile interdependence between fiscal and bank solvency that can lead to a self-fulfilling crisis.

All these conditions made investors more concerned about the sustainability of Spanish government finances and made Spain more vulnerable to spillovers from the European sovereign debt crisis. For example, overall market confidence rapidly collapsed in November 2009 after the newly appointed Greek government announced a much larger than expected budget deficit (revised from the originally stated 5% to 12.7% of GDP). This event made investors more sensitive to underlying country-specific fundamentals (the “wake-up call”) and led to a substantial increase in sovereign credit spreads across peripheral eurozone countries (Beirne and Fratzscher 2013; Giordano et al. 2013). In particular, Mink and de Haan (2013) provide empirical evidence that Spain’s sovereign debt responds to news about the economic situation of Greece. We could therefore expect new information that induces fundamentals-based contagion to affect investors’ perception of sovereign creditworthiness and, in turn, the bank-sovereign nexus.

3 Data

We focus on CDS spreads as a directly observable market indicator of default risk. A CDS represents a type of bilateral insurance contract that transfers the credit risk of an underlying reference entity (company or sovereign) from the buyer to the seller. The buyer of the protection pays the seller a periodic premium, called a CDS spread, as compensation for protection against the default of the particular reference entity. In the case of default, the contract terminates, and the seller of the protection pays the difference between the par value and the recovery value of the reference obligation to the buyer. Therefore, the CDS spread is a directly observable market measure of credit spread.
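The two legs of the contract described above can be made concrete with a little arithmetic. The sketch below is illustrative only: the EUR 10 million notional, the 40% recovery rate and the simplification to a single annual premium payment (standard contracts typically pay quarterly and settle accrued premium at default) are assumptions, not values taken from the paper; the 193 bp spread is the sample-mean sovereign level reported in the descriptive statistics.

```python
def annual_premium(notional: float, spread_bp: float) -> float:
    """Premium paid per year by the protection buyer; the spread is quoted in basis points."""
    return notional * spread_bp / 10_000

def protection_payment(notional: float, recovery_rate: float) -> float:
    """Payment from the protection seller at default: par value minus recovery value."""
    if not 0.0 <= recovery_rate <= 1.0:
        raise ValueError("recovery_rate must lie in [0, 1]")
    return notional * (1.0 - recovery_rate)

# Hypothetical contract on EUR 10 million at a spread of 193 bp and an assumed 40% recovery.
notional = 10_000_000
print(annual_premium(notional, 193))        # yearly premium leg
print(protection_payment(notional, 0.40))   # payout if the reference entity defaults
```

Read this way, a spread of 193 bp means the buyer pays EUR 193,000 a year for the right to receive par minus recovery if the reference entity defaults.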

The analysis in this paper could also be conducted using the bond market instead of the CDS market. We refrain from this approach for two reasons. First, the CDS market is in principle more liquid than the bond market, and CDS contracts are typically traded on standardized terms (Acharya et al. 2014; Oehmke and Zawadowski 2017). As a result, the CDS market tends to be less influenced by non-default factors (e.g. taxes, illiquidity and market microstructure effects), which substantially affect bond prices (Longstaff et al. 2005). Specifically, Ang and Longstaff (2013) argue that sovereign bond spreads are affected by, among other factors, movements in interest rates, demand–supply imbalances and illiquidity. Second, a number of studies have shown that CDS spreads reflect changes in credit risk more accurately and quickly than corporate bond yield spreads (Blanco et al. 2005; Forte and Peña 2009; Norden and Weber 2009, among others). Consequently, CDS spreads are commonly used as the preferred market benchmark for credit risk in the analysis of the bank-sovereign nexus (Longstaff et al. 2011; Ejsing and Lemke 2011; Eichengreen et al. 2012; Dieckmann and Plank 2012; Acharya et al. 2014).

The analysis is performed using daily data on sovereign and bank CDS spreads. The data were downloaded from Thomson Reuters and span November 2008 to July 2012. The period of analysis is limited to the crisis period, which encompasses both the European financial crisis and the sovereign debt crisis. Indeed, Brunnermeier et al. (2016) argue that the nexus between bank and sovereign default risk was particularly pronounced during the 2009–2012 period. Although it would be preferable to start the sample before the collapse of Lehman Brothers in September 2008, we use November 2008 because data are available for all banks from that point onwards. In the Thomson Reuters CDS Database, CDS spreads are available from December 2007 only for the two largest banks (Banco Santander and BBVA); the data for the remaining Spanish banks become available during 2008. Still, the chosen period covers different phases of the European financial and sovereign debt crisis, and it allows us to capture the initial effect of the rescue packages announced by the majority of euro-area governments, which started in October 2008.Footnote 3 The sample period ends in 2012, which is commonly considered the end of the European sovereign debt crisis (Horta et al. 2014; Brunnermeier et al. 2016; Hassan et al. 2017). For example, Horta et al. (2014) use April 2012, whereas Hassan et al. (2017) consider August 2012 and the announcement by the European Central Bank (ECB) of the Outright Monetary Transactions (OMT) programme as the end of the sovereign debt crisis. In our case, the sample period ends with the so-called bailout of Spain, just after the Spanish government requested external assistance from eurozone policymakers to rescue its ailing banks. In July 2012, the Eurogroup officially approved up to €100 billion in financial assistance to Spain for the recapitalisation and restructuring of its banking sector. Furthermore, Alter and Beyer (2014), in their analysis of spillover effects among sovereigns and banks in the euro area, also end their sample in July 2012. The chosen period is therefore in line with the current literature and seems suitable for analysing structural breaks in the private-to-public feedback mechanism.

We use only the most liquid 5-year euro-denominated CDS contracts on senior unsecured debt and consider the following banks in Spain for which CDS spread data are available: Banco Santander, BBVA, La Caixa, Banco Popular, Banco Pastor, Banco Sabadell and Bankinter.Footnote 4 In October 2011, Banco Pastor was acquired by Banco Popular but continued to operate as a separate entity; the results of the paper remain unchanged when Banco Pastor is excluded from the analysis. Together, these financial institutions hold approximately 60% of the total assets of Spanish banks and almost 70% of the total domestic exposure to sovereign debt.Footnote 5 More importantly, most of these banks are considered to be of local systemic importance and “too big to fail”. Banco Santander, BBVA, La Caixa, Banco Popular and Banco Sabadell have been classified as D-SIBs (domestic systemically important banks) by the European Banking Authority (EBA).Footnote 6 Market participants are therefore particularly concerned with the repercussions that an eventual bailout of these institutions might have for the economy, which makes this sample of banks particularly suitable for the analysis.

General descriptive statistics of the data set are presented in Table 1. Panel A reports the main summary statistics for CDS spread levels. The mean sovereign CDS spread was around 193 basis points (bp) over the entire sample period, whereas the mean CDS spread for the banking sector was substantially higher, at 329.51 bp on average. Panel B of Table 1 reports the main summary statistics for CDS returns, calculated as log-differences (Alter and Schüler 2012). The highest mean return, 0.191%, was recorded for BBVA, followed by the sovereign CDS (0.169%) and Banco Santander (0.153%). However, the sovereign CDS returns had the highest standard deviation.
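The return definition used in Panel B can be written as \( r_t = \ln s_t - \ln s_{t-1} \), where \( s_t \) is the CDS spread level. A minimal NumPy sketch of this transformation and of the two summary statistics quoted above follows; the four spread values are made up purely for illustration and are not taken from the data set.

```python
import numpy as np

def log_returns(spreads) -> np.ndarray:
    """CDS 'returns' as log-differences of spread levels: r_t = ln(s_t) - ln(s_{t-1})."""
    spreads = np.asarray(spreads, dtype=float)
    if np.any(spreads <= 0):
        raise ValueError("CDS spreads must be strictly positive")
    return np.diff(np.log(spreads))

def summarize(returns: np.ndarray) -> dict:
    """Mean and sample standard deviation of the returns, both expressed in percent."""
    return {
        "mean_pct": 100 * returns.mean(),
        "std_pct": 100 * returns.std(ddof=1),
    }

# Hypothetical spreads (basis points) over four trading days.
s = np.array([190.0, 193.0, 191.5, 196.0])
r = log_returns(s)
print(r.round(5))
print(summarize(r))
```

Working with log-differences makes the series unit-free, so sovereign and bank spreads with very different levels (about 193 bp versus about 330 bp) can be compared directly.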

To proxy for the risk inherent in the banking system, we analyse the systematic variation in bank-level default risk using principal component analysis (PCA). We perform PCA on daily log-differences of CDS spreads, after verifying that these series are stationary. The Augmented Dickey-Fuller (ADF) test shows that the entity-specific CDS spread levels are non-stationary: the unit-root null cannot be rejected at the 5% significance level. In contrast, the null hypothesis of non-stationarity is rejected for the log first-differences of the CDS spread series of all entities considered (see Table 1). PCA is commonly used to extract a common component in variations of CDS spreads. For example, Longstaff et al. (2011), Dieckmann and Plank (2012) and Hassan et al. (2017) use PCA to study the commonality in sovereign CDS spreads; Eichengreen et al. (2012) use PCA to study common factors in individual banks’ CDS spreads; and Chen and Härdle (2015) use PCA to extract common factors in CDS index data.
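The stationarity check can be illustrated with a simplified unit-root test. The sketch below is the plain Dickey-Fuller regression \( \Delta y_t = \alpha + \rho y_{t-1} + e_t \), i.e. the ADF test without lagged-difference terms, so it is a simplification of the test used in the paper. The critical value of -2.86 is the commonly tabulated asymptotic 5% value for the constant-only case, and the simulated log-spread series is hypothetical.

```python
import numpy as np

# Asymptotic 5% critical value of the Dickey-Fuller t-statistic (constant, no trend).
DF_CRIT_5PCT = -2.86

def dickey_fuller_stat(y) -> float:
    """t-statistic on rho in dy_t = alpha + rho * y_{t-1} + e_t (no lagged differences)."""
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)
    X = np.column_stack([np.ones(len(dy)), y[:-1]])
    coef, *_ = np.linalg.lstsq(X, dy, rcond=None)
    resid = dy - X @ coef
    sigma2 = resid @ resid / (len(dy) - X.shape[1])
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return coef[1] / np.sqrt(cov[1, 1])

def is_stationary(y, crit: float = DF_CRIT_5PCT) -> bool:
    """Reject the unit-root null when the statistic falls below the critical value."""
    return dickey_fuller_stat(y) < crit

# Hypothetical log-spread series that follows a random walk.
rng = np.random.default_rng(0)
shocks = rng.normal(size=1000)
log_spread = np.log(200) + np.cumsum(0.02 * shocks)
print(is_stationary(log_spread))           # levels: the unit-root null is usually not rejected
print(is_stationary(np.diff(log_spread)))  # log-differences: the null is rejected
```

In practice the augmented version adds lagged differences \( \Delta y_{t-j} \) to absorb serial correlation in the errors. Library implementations also select that lag length automatically and report MacKinnon p-values.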

The PCA shows a significant amount of commonality in the variation of banking sector CDS spreads: a single common factor (the first principal component) explains about 50.1% of the sample variation. The loadings on the first eigenvector, presented in Table 2, are not equally distributed among the banks, however. The loadings for CDS spread changes lie in the range 0.23–0.43: BBVA, Banco Santander and Banco Sabadell have the highest loadings (around 0.43), and Banco Pastor the lowest (0.23). In the subsequent analysis, we focus only on the systematic component and use the first principal component (PC1) as a variable that captures the common trend in banking sector default risk. In this way, we mitigate spurious results that may arise from noise in the data at the individual bank level.
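Extracting PC1 amounts to an eigen-decomposition of the covariance (here: correlation) matrix of the bank return panel. The sketch below standardizes the returns first, so the PCA runs on the correlation matrix; that choice, the sign normalization and the simulated seven-bank panel are assumptions made for illustration, since the text does not specify these details.

```python
import numpy as np

def first_principal_component(returns):
    """PCA on a T x N panel of standardized returns.

    Returns (PC1 scores, PC1 loadings, share of variance explained by PC1).
    Standardizing first means the PCA is run on the correlation matrix (an assumption here).
    """
    X = np.asarray(returns, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    corr = np.cov(Z, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)   # eigenvalues in ascending order
    loadings = eigvecs[:, -1]                 # eigenvector of the largest eigenvalue
    if loadings.sum() < 0:                    # eigenvector sign is arbitrary; make loadings mostly positive
        loadings = -loadings
    share = eigvals[-1] / eigvals.sum()
    return Z @ loadings, loadings, share

# Simulated panel: 7 hypothetical banks driven by one common factor plus idiosyncratic noise.
rng = np.random.default_rng(42)
common = rng.normal(size=(900, 1))
returns = common @ np.full((1, 7), 0.8) + 0.6 * rng.normal(size=(900, 7))
pc1, loadings, share = first_principal_component(returns)
print(loadings.round(2), round(share, 3))
```

The loadings form a unit-length vector, which is why, with seven banks, values between roughly 0.2 and 0.45 (as in Table 2) are the natural scale.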

4 Tests for structural change

The vector autoregressive (VAR) framework is commonly used to study short-run dynamic linkages among financial markets. We therefore first set up a benchmark VAR model and then analyse its stability using tests for structural change of unknown timing. Finally, we apply Granger-causality tests to illustrate the implications of parameter instability for inference about the linkages between bank and sovereign default risk.
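A Granger-causality test in one VAR equation compares the fit with and without the lags of the other variable. The sketch below uses the standard restricted-versus-unrestricted F-test by OLS. The simulated data, in which bank risk leads sovereign risk, are hypothetical, and the 3.84 threshold is the large-sample 5% critical value of an F(1, ∞) distribution, used here instead of an exact p-value.

```python
import numpy as np

def rss(y, X) -> float:
    """Residual sum of squares from an OLS regression of y on X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return float(resid @ resid)

def granger_f(target, source, p: int = 1) -> float:
    """F-statistic for H0: the p lags of `source` do not help predict `target`
    once a constant and p lags of `target` are included (one VAR equation)."""
    T = len(target)
    y = target[p:]
    own = np.column_stack([target[p - j:T - j] for j in range(1, p + 1)])
    other = np.column_stack([source[p - j:T - j] for j in range(1, p + 1)])
    const = np.ones((T - p, 1))
    X_u = np.hstack([const, own, other])   # unrestricted equation
    X_r = np.hstack([const, own])          # restricted: lags of `source` dropped
    rss_u, rss_r = rss(y, X_u), rss(y, X_r)
    q, k = p, X_u.shape[1]
    return ((rss_r - rss_u) / q) / (rss_u / (len(y) - k))

# Hypothetical data in which bank risk leads sovereign risk but not vice versa.
rng = np.random.default_rng(7)
T = 1500
bank, sov = np.zeros(T), np.zeros(T)
for t in range(1, T):
    bank[t] = 0.2 * bank[t - 1] + rng.normal()
    sov[t] = 0.1 * sov[t - 1] + 0.4 * bank[t - 1] + rng.normal()

# Compare each statistic with the F(1, T - 4) critical value (about 3.84 at 5% for large T).
print("bank -> sov:", round(granger_f(sov, bank), 2))
print("sov -> bank:", round(granger_f(bank, sov), 2))
```

Because the test is run on the estimated coefficients, a break in those coefficients can change the conclusion from one subsample to another. This is why the phase-by-phase results in the paper differ.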

The benchmark VAR model takes the following form:

\[ \varvec{y}_{t} = \varvec{\mu} + \sum_{j = 1}^{p} \varvec{\Gamma}_{j} \varvec{y}_{t - j} + \varvec{\varepsilon}_{t} \tag{1} \]

where \( \varvec{y}_{t} = \left( y_{1,t}, y_{2,t} \right)^{'} \), \( y_{1,t} \) are daily log-changes of sovereign CDS spreads (\( y_{SOV,t} \)) and \( y_{2,t} \) are daily log-changes of banking sector CDS spreads (\( y_{BK,t} \)), proxied by PC1; \( \varvec{\mu} \) and \( \varvec{\varepsilon}_{t} \) are \( 2 \times 1 \) vectors, each \( {\varvec{\Gamma}}_{j} \) is a \( 2 \times 2 \) matrix, and \( p \) is the number of lags, determined by the Schwarz Information Criterion (SIC). The lag order selected by the SIC is \( p = 1 \).
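Estimating Eq. (1) equation by equation with OLS and choosing \( p \) by the SIC can be sketched as follows. The criterion is written as \( \ln|\hat{\varvec{\Sigma}}(p)| + (\ln T / T) \times \) (number of estimated coefficients), a common textbook form. The maximum lag of 5, the common estimation sample and the simulated VAR(1) coefficients are illustrative assumptions; the paper does not report these settings.

```python
import numpy as np

def lagged_design(y, p, p_max):
    """Targets Y and regressors X = [1, y_{t-1}', ..., y_{t-p}'] on a common sample
    starting at t = p_max, so every lag order is fitted to the same observations."""
    T = y.shape[0]
    X = [np.ones((T - p_max, 1))]
    X += [y[p_max - j:T - j] for j in range(1, p + 1)]
    return y[p_max:], np.hstack(X)

def fit_var(y, p, p_max):
    """OLS estimates of a VAR(p): (1 + Kp) x K coefficient matrix and residuals."""
    Y, X = lagged_design(y, p, p_max)
    B, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return B, Y - X @ B

def sic(y, p, p_max):
    """Schwarz criterion: ln|Sigma_ML| + (ln T / T) * number of estimated coefficients."""
    _, U = fit_var(y, p, p_max)
    T_eff, K = U.shape
    sigma_ml = U.T @ U / T_eff
    n_coef = K * (1 + K * p)
    return np.log(np.linalg.det(sigma_ml)) + np.log(T_eff) / T_eff * n_coef

def select_lag(y, p_max=5):
    """Lag order in 0..p_max that minimizes the SIC."""
    return min(range(p_max + 1), key=lambda p: sic(y, p, p_max))

# Simulated stand-in for (y_SOV, y_BK): a stable VAR(1) with hypothetical coefficients.
rng = np.random.default_rng(3)
Gamma1 = np.array([[0.3, 0.2], [0.1, 0.4]])
y = np.zeros((2000, 2))
for t in range(1, 2000):
    y[t] = Gamma1 @ y[t - 1] + rng.normal(size=2)

p_hat = select_lag(y)
B, _ = fit_var(y, p_hat, p_hat)
print(p_hat)          # the SIC should recover p = 1 for this design
print(B.T.round(2))   # each row is one equation: intercept, then the row of Gamma_1
```

Fitting every candidate order on the same observations matters: otherwise larger \( p \) would be scored on a shorter sample and the criteria would not be comparable.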

Following Bai et al. (1998), we augment the benchmark VAR model and allow its parameters to change at \( \tau \) (i.e. the break date):

\[ \varvec{y}_{t} = \varvec{\mu} + \sum_{j = 1}^{p} \varvec{\Gamma}_{j} \varvec{y}_{t - j} + d_{t} \left( \tau \right)\left( \varvec{\lambda} + \sum_{j = 1}^{p} \varvec{\Pi}_{j} \varvec{y}_{t - j} \right) + \varvec{\varepsilon}_{t} \tag{2} \]

where \( \varvec{y}_{t} \), \( \varvec{\mu} \), \( \varvec{\lambda} \) and \( \varvec{\varepsilon}_{t} \) are \( 2 \times 1 \); \( \left\{ {{\varvec{\Gamma}}_{j} } \right\} \) and \( \left\{ {{\varvec{\Pi}}_{j} } \right\} \) are \( 2 \times 2 \); and \( d_{t} \left( \tau \right) = 0 \) for \( t \le \tau \) and \( d_{t} \left( \tau \right) = 1 \) for \( t > \tau \). The break point \( \tau \) is explicitly treated as unknown and is estimated over the interval \( \tau \in \left[ {hT,\left( {1 - h} \right)T} \right] \), where \( T \) is the sample size and \( h \) is the trimming fraction.Footnote 7

We consider both a pure and a partial structural change model, allowing either all or only a subset of the parameters to change. The general framework of partial structural change in stacked form is given by:

\[ \varvec{y}_{t} = \left( \varvec{V}_{t}^{'} \otimes \varvec{I} \right)\varvec{\theta} + d_{t} \left( \tau \right)\left( \varvec{V}_{t}^{'} \otimes \varvec{I} \right)\varvec{S}^{\prime}\varvec{S\delta } + \varvec{\varepsilon}_{t} \tag{3} \]

where \( \varvec{V}_{t}^{'} = \left( {1,\varvec{y}_{t - 1}^{'} , \ldots ,\varvec{y}_{t - p}^{'} } \right) \), \( \varvec{\theta}= Vec\left( {\varvec{\mu},{\varvec{\Gamma}}_{1} , \ldots ,{\varvec{\Gamma}}_{p} } \right) \), \( \varvec{\delta}= Vec\left( {\varvec{\lambda},{\varvec{\Pi}}_{1} , \ldots ,{\varvec{\Pi}}_{p} } \right) \), \( \varvec{I} \) is the \( 2 \times 2 \) identity matrix and \( \varvec{S} \) is a selection matrix of 0’s and 1’s with full row rank. The selection matrix \( \varvec{S} \) specifies which coefficients are allowed to change at the break date. For example, setting \( \varvec{S} \) equal to the identity matrix (of the dimension of \( \varvec{\delta} \)) gives the pure structural change model, in which all the coefficients are allowed to change.
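For the bivariate VAR(1) used in this paper (\( p = 1 \)), the selection matrix can be written out explicitly. With \( \varvec{V}_{t}^{'} = \left( 1, y_{1,t-1}, y_{2,t-1} \right) \) and the column-stacking convention of \( Vec \), the shift vector is \( \varvec{\delta} = \left( \lambda_{1}, \lambda_{2}, \pi_{11}, \pi_{21}, \pi_{12}, \pi_{22} \right)^{'} \), where \( \pi_{ij} \) is the \( (i,j) \) element of \( \varvec{\Pi}_{1} \). The pure model uses the \( 6 \times 6 \) identity. The partial model, in which only the slope coefficients change, can be written with the \( 4 \times 6 \) matrix below; this explicit form is our illustration rather than a matrix reported in the paper.

\[ \varvec{S}_{slope} = \begin{pmatrix} 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \end{pmatrix}, \qquad \varvec{S}_{slope}\varvec{\delta} = \begin{pmatrix} \pi_{11} \\ \pi_{21} \\ \pi_{12} \\ \pi_{22} \end{pmatrix}, \qquad \varvec{S}_{slope}^{\prime}\varvec{S}_{slope}\varvec{\delta} = \begin{pmatrix} 0 \\ 0 \\ \pi_{11} \\ \pi_{21} \\ \pi_{12} \\ \pi_{22} \end{pmatrix} \]

The intercept shift \( \varvec{\lambda} \) therefore drops out of Eq. (3), and only the lead–lag coefficients are allowed to differ after \( \tau \). The matrix has full row rank (4), as required.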

More compactly, Eq. (3) can be rewritten as:

\[ \varvec{y}_{t} = \varvec{Z}_{t}^{'} \left( \tau \right)\varvec{\beta} + \varvec{\varepsilon}_{t} \tag{4} \]

where \( \varvec{Z}_{t}^{'} \left( \tau \right) = \left( {\left( {\varvec{V}_{t}^{'} \otimes \varvec{I}} \right),d_{t} \left( \tau \right)\left( {\varvec{V}_{t}^{'} \otimes \varvec{I}} \right)\varvec{S}^{\prime}} \right) \) and \( \varvec{\beta}= \left( {\varvec{\theta^{\prime}},\left( {\varvec{S\delta }} \right)^{'} } \right)^{'} . \)
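The compact regressor \( \varvec{Z}_{t}^{'}(\tau) \) can be built directly with Kronecker products. The sketch below constructs it for one observation of a bivariate VAR(1) and checks that \( \varvec{Z}_{t}^{'}(\tau)\varvec{\beta} \) reproduces the two-regime form of the model for both the pure and the slope-only selection matrix. The coefficient values are random draws used only for the check, and `z_prime` is a hypothetical helper name.

```python
import numpy as np

def z_prime(v_t, d_t, S, K=2):
    """Z_t'(tau) = ((V_t' kron I), d_t (V_t' kron I) S') for one observation.

    v_t : V_t = (1, y_{t-1}', ..., y_{t-p}')'
    d_t : break dummy, 0 for t <= tau and 1 for t > tau
    S   : 0/1 selection matrix (full row rank) picking the coefficients that may change
    K   : number of variables in the VAR (2 here: sovereign returns and bank PC1)
    """
    A = np.kron(np.asarray(v_t, dtype=float).reshape(1, -1), np.eye(K))  # K x K(1 + Kp)
    return np.hstack([A, d_t * A @ S.T])

# Hypothetical bivariate VAR(1) coefficients, drawn at random for the check.
rng = np.random.default_rng(0)
mu, lam = rng.normal(size=2), rng.normal(size=2)
Gamma, Pi = rng.normal(size=(2, 2)), rng.normal(size=(2, 2))
theta = np.column_stack([mu, Gamma]).flatten(order="F")   # Vec(mu, Gamma_1)
delta = np.column_stack([lam, Pi]).flatten(order="F")     # Vec(lambda, Pi_1)
y_lag = rng.normal(size=2)
v_t = np.concatenate([[1.0], y_lag])

S_pure = np.eye(6)       # every coefficient may change
S_slope = np.eye(6)[2:]  # only the four slope coefficients may change

for name, S, expected in [
    ("pure", S_pure, mu + lam + (Gamma + Pi) @ y_lag),
    ("slope-only", S_slope, mu + (Gamma + Pi) @ y_lag),
]:
    beta = np.concatenate([theta, S @ delta])  # beta = (theta', (S delta)')'
    print(name, np.allclose(z_prime(v_t, 1, S) @ beta, expected))
```

The check relies on the identity \( (\varvec{V}_{t}^{'} \otimes \varvec{I})Vec(\varvec{B}) = \varvec{B}\varvec{V}_{t} \), which is what turns the stacked form back into the equation-by-equation VAR.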

4.1 Preliminary break-point tests

First, we perform preliminary break-point tests on the individual time series and on the individual equations of the benchmark VAR using the Quandt-Andrews SupF test (Quandt 1960; Andrews 1993). The Quandt-Andrews SupF test is based on a sequence of Wald statistics, computed for every possible break date between \( hT \) and \( \left( {1 - h} \right)T \), for the null hypothesis that no break exists, i.e. \( \varvec{S\delta } = 0. \)

For a single equation \( i \) \( \left( {i = 1,2} \right) \) and a given \( \tau \), the OLS estimator \( \hat{\varvec{\beta }}_{i} \left( \tau \right) \) and the corresponding F-statistic are given by

where \( \varvec{Z}_{i,t}^{'} \left( \tau \right) = \left( {\varvec{V}_{t}^{'} ,d_{t} \left( \tau \right)\varvec{V}_{t}^{'} \varvec{S^{\prime}}} \right) \) and \( \varvec{\beta}_{i} = \left( {\varvec{\theta}_{i}^{'} ,\left( {\varvec{S\delta }_{i} } \right)^{'} } \right)^{'} . \)

where \( \varvec{R} = \left( {0,\varvec{I}} \right) \) so that \( \varvec{R\beta }_{i} = \varvec{S\delta }_{i} \), \( \hat{\sigma }_{\tau }^{2} = \hat{\varvec{\varepsilon}}_{i}^{'}\hat{\varvec{\varepsilon}}_{i} /\left( {T - k} \right) \), \( \hat{\varvec{\varepsilon}}_{i} \) is the vector of OLS residuals of equation \( i \) for the given \( \tau \), and \( k \) is the total number of parameters.
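The F-statistic itself is not reproduced here. The sketch below therefore assumes the usual Wald form \( F_{T} \left( \tau \right) = \left( {\varvec{R}\hat{\varvec{\beta }}_{i} } \right)^{'} \left[ {\varvec{R}\,\hat{\sigma }_{\tau }^{2} \left( {\sum\nolimits_{t} {\varvec{Z}_{i,t} \varvec{Z}_{i,t}^{'} } } \right)^{ - 1} \varvec{R}^{'} } \right]^{ - 1} \varvec{R}\hat{\varvec{\beta }}_{i} \), without division by the number of restrictions. Whether the authors rescale the statistic is not recoverable from the text. The function name and its arguments are hypothetical.

```python
import numpy as np


def break_f_stat(y_i, V, tau, S=None):
    """Wald-type statistic for H0: S delta_i = 0 in a single equation, for a given tau.

    y_i : (T,) dependent variable of equation i
    V   : (T, m) regressor rows V_t' = (1, y_{t-1}', ..., y_{t-p}')
    tau : candidate break index; d_t(tau) = 1 for t > tau
    S   : (q, m) selection matrix; None means every coefficient may break
    Assumes the form (R b)' [R sigma^2 (Z'Z)^{-1} R']^{-1} (R b), not divided by q.
    """
    T, m = V.shape
    S = np.eye(m) if S is None else S
    d = (np.arange(T) > tau).astype(float)[:, None]
    Z = np.hstack([V, d * (V @ S.T)])                     # rows Z_{i,t}'(tau)
    k = Z.shape[1]
    ZZ_inv = np.linalg.inv(Z.T @ Z)
    beta = ZZ_inv @ (Z.T @ y_i)                           # OLS estimate of beta_i(tau)
    resid = y_i - Z @ beta
    sigma2 = resid @ resid / (T - k)                      # e'e / (T - k)
    R = np.hstack([np.zeros((k - m, m)), np.eye(k - m)])  # R = (0, I), so R beta = S delta_i
    Rb = R @ beta
    return float(Rb @ np.linalg.solve(sigma2 * (R @ ZZ_inv @ R.T), Rb))
```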

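The SupF statistic is defined as the largest of the F-statistics of the individual break-point tests performed over all possible break dates:

\[ \text{SupF} = \sup_{\tau \in \left[ {hT,\left( {1 - h} \right)T} \right]} F_{T} \left( \tau \right) \]
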
The \( \hat{\tau } \) that maximizes \( F_{T} \left( \tau \right) \) is the estimated break date. Candelon and Lütkepohl (2001) show that, in dynamic models, the distributions of the test statistics under the stability hypothesis may differ from the assumed χ² and F distributions, and that bootstrapped p values are therefore more reliable. To ensure the robustness of the results, we calculate p values using the replacement bootstrap developed in Candelon and Lütkepohl (2001), the fixed-regressor bootstrap developed in Hansen (2000), and the robust “wild” bootstrap that accounts for heteroskedasticity in residuals. The number of bootstrap replications is set to 1000.
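A compact sketch of the SupF scan follows, together with a bootstrap p value in the spirit of Hansen's (2000) fixed-regressor bootstrap. It rests on the following assumptions:

- the break is pure (S = I);
- the F-statistics inside the scan are homoskedastic;
- the bootstrap sample is built from N(0, 1) draws scaled by the null residuals, or by their standard deviation when `hetero=False`.

All names are illustrative. The Candelon and Lütkepohl (2001) bootstrap, which regenerates the series recursively from resampled residuals, is not shown.

```python
import numpy as np


def sup_f(y, V, h=0.15):
    """Quandt-Andrews SupF for a break in all coefficients of y_t = V_t' b + e_t.

    Scans every tau in [hT, (1-h)T] and returns the largest Wald statistic and its tau.
    """
    T, m = V.shape
    best_stat, best_tau = -np.inf, None
    for tau in range(int(np.ceil(h * T)), int(np.floor((1 - h) * T)) + 1):
        d = (np.arange(T) > tau).astype(float)[:, None]
        Z = np.hstack([V, d * V])                   # pure structural change (S = I)
        ZZ_inv = np.linalg.inv(Z.T @ Z)
        beta = ZZ_inv @ (Z.T @ y)
        e = y - Z @ beta
        s2 = e @ e / (T - 2 * m)
        delta = beta[m:]                            # break coefficients, R beta
        stat = delta @ np.linalg.solve(s2 * ZZ_inv[m:, m:], delta)
        if stat > best_stat:
            best_stat, best_tau = stat, tau
    return float(best_stat), best_tau


def fixed_regressor_pvalue(y, V, h=0.15, reps=1000, hetero=True, seed=0):
    """Bootstrap p value of SupF in the spirit of Hansen's (2000) fixed-regressor bootstrap.

    The regressors (including lagged dependent variables) are held fixed. The bootstrap
    dependent variable is pure noise, because SupF does not depend on the null coefficients.
    hetero=True scales N(0,1) draws by the null residuals (heteroskedasticity-robust).
    """
    rng = np.random.default_rng(seed)
    stat, tau = sup_f(y, V, h)
    e0 = y - V @ np.linalg.lstsq(V, y, rcond=None)[0]     # residuals under H0: no break
    scale = e0 if hetero else np.sqrt(e0 @ e0 / (len(y) - V.shape[1]))
    exceed = 0
    for _ in range(reps):
        y_star = scale * rng.standard_normal(len(y))
        exceed += sup_f(y_star, V, h)[0] >= stat
    return stat, tau, exceed / reps
```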

The results of the Quandt-Andrews SupF test for structural breaks on the individual series of CDS returns and on the individual equations of the VAR system are reported in Table 3.Footnote 8 We find no evidence of a discrete shift in either series over the period considered (see Table 3, Panel A). By contrast, the null hypothesis of no structural break is rejected in most cases for the individual equations of the VAR model (see Table 3, Panel B). The maximum sup-Wald statistic is attained on January 22, 2010, for the first equation and on January 28, 2010, for the second, suggesting that the VAR system is likely to have a common break. In addition, break-point tests performed separately on the constant and on the slope coefficients reveal that the system’s instability comes precisely from changes in the return transmission mechanism.

To detect possible shifts in the variance, we work with the VAR residuals before accounting for breaks in the mean equation. We find no evidence of a shift in variance for the banking sector component return equation, whereas the residuals of the sovereign CDS return equation indicate a structural break in variance on December 13, 2011. However, once the data are GARCH-filtered and the GARCH-filtered residuals are used, the evidence of this break disappears. These results indicate that there is no firm evidence of an abrupt structural change in the level of residual variance.

4.2 Tests for structural change in the VAR system

The equation-by-equation procedure clearly rejects the null hypothesis of no structural breaks in the data. However, Bai et al. (1998) show that the change point is estimated more precisely when series with a common break are analysed jointly. Breaks specific to each equation do not necessarily reflect breaks in the system approximated by the VAR, since the system approach assumes that the change in the transmission mechanism occurs at the same point in time in both equations.

Building on the preliminary evidence that the mean transmission process has changed over time, and without imposing any specific date ex ante, we start the endogenous search for possible break dates in the transmission channel following Bai et al. (1998). For any given \( \tau \), the estimator \( \hat{\varvec{\beta }}\left( \tau \right) \) and the statistic \( \hat{F}\left( \tau \right) \) for testing the null hypothesis of no break in the VAR, taken as a system of equations, are calculated as follows:

where \( \varvec{R} = \left( {0,\varvec{I}} \right) \) so that \( \varvec{R\beta } = \varvec{S\delta } \) and \( {\hat{\varvec{\Sigma }}}_{\tau } \) is the estimator of \( {\varvec{\Sigma}} \) obtained from OLS residuals under the alternative hypothesis, for a given \( \tau \). As before, we calculate p values using the replacement bootstrap of Candelon and Lütkepohl (2001), the fixed-regressor bootstrap of Hansen (2000) and the heteroskedasticity-robust “wild” bootstrap, each with 1000 replications.
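For the system version, here is a minimal NumPy sketch under two assumptions:

- the break is pure, affecting all coefficients (S = I);
- \( {\hat{\varvec{\Sigma }}}_{\tau } \) is the residual cross-product divided by the number of observations.

Under these assumptions the same regressors appear in both equations. System estimation then reduces to equation-by-equation OLS, and the covariance of the stacked estimator is a Kronecker product. The function names are hypothetical. Bootstrap p values would be obtained as in the single-equation case.

```python
import numpy as np


def system_wald(y, p, tau):
    """Wald statistic for a common break in every VAR(p) coefficient (pure change, S = I).

    With identical regressors in all equations, system GLS equals equation-by-equation
    OLS, and Cov(beta_hat) = (W'W)^{-1} kron Sigma_hat_tau for beta ordered as in Z_t'(tau).
    """
    T, n = y.shape
    X = np.column_stack([np.ones(T - p)] + [y[p - j:T - j] for j in range(1, p + 1)])
    d = (np.arange(T - p) > tau).astype(float)[:, None]
    W = np.hstack([X, d * X])                       # rows (V_t', d_t(tau) V_t')
    Y = y[p:]
    B = np.linalg.lstsq(W, Y, rcond=None)[0]        # (2m, n): row j = coefficients on regressor j
    E = Y - W @ B
    Sigma = E.T @ E / len(Y)                        # Sigma_hat_tau from residuals under H1
    beta = B.reshape(-1)                            # regressor by regressor, matching V_t' kron I
    cov = np.kron(np.linalg.inv(W.T @ W), Sigma)
    j = n * X.shape[1]                              # first index of the break block (R beta)
    rb = beta[j:]
    return float(rb @ np.linalg.solve(cov[j:, j:], rb))


def system_sup_wald(y, p, h=0.15):
    """Largest system Wald statistic over tau in [hT, (1-h)T] and the corresponding tau."""
    T_eff = y.shape[0] - p
    taus = list(range(int(np.ceil(h * T_eff)), int(np.floor((1 - h) * T_eff)) + 1))
    stats = [system_wald(y, p, tau) for tau in taus]
    i = int(np.argmax(stats))
    return stats[i], taus[i]
```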

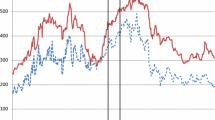

The sequence of Wald statistics for the VAR system is presented in Fig. 1. The sup-Wald statistic is statistically significant and corresponds to January 6, 2010, 2 days before the European Commission published its official report on irregularities in Greek government deficit reporting. This finding is in line with Mink and de Haan (2013), who provide evidence that, owing to a “wake-up call”, the price of Spanish sovereign debt responds to news about the economic situation of Greece. The partial structural change model, in which only a subset of coefficients is subject to change, reveals that the instability of the system comes precisely from changes in the return transmission mechanism: the constant coefficients appear stable over time, whereas the slope coefficients exhibit structural breaks (see Table 4).Footnote 9 The sup-Wald statistic for the partial structural change model corresponds to January 28, 2010. Interestingly, this break date coincides exactly with the European Commission’s approval of the Spanish recapitalization scheme for credit institutions, which allowed the bank bailout fund (FROB) to acquire convertible preference shares to be issued by credit institutions. This event sharply increased investors’ expectations of government support for distressed banks. Finally, we calculate confidence intervals for the estimated break date using the procedure proposed by Bai et al. (1998). The 90% confidence interval ranges from November 6, 2009 to March 8, 2010.

To check for the possibility of multiple structural breaks, we follow the sequential procedure described in Bai (1997). We split the overall sample into two subsamples at the estimated break point (January 6, 2010) and reapply the unknown break-point test to each subsample. We find no evidence of an additional break in the first sub-period (see Table 5, Panel A), but we do find a statistically significant break point in the second sub-period, on May 12, 2010 (see Table 5, Panel B).Footnote 10 This date coincides exactly with the Spanish government’s public announcement of severe austerity measures, primarily designed to restore confidence in the Spanish economy, calm investors and suppress fears of a Greek-style bailout. On May 12, 2010, the government announced cuts to civil service salaries and public sector investment, a freeze in pension payments and the abolition of the childbirth allowance, with the aim of reducing the public deficit from 11.2% to 6% of GDP by 2011. This austerity package, worth €15 billion, was subsequently approved by the Spanish parliament by a one-vote margin on May 27, 2010. This finding is consistent with the theoretical model of Leonello (2018), who shows that austerity measures have an important effect on the bank-sovereign nexus. At the same time, the estimated break date in the second sub-period closely follows two events: the creation of the temporary rescue fund for eurozone countries, the European Financial Stability Facility (EFSF), on May 9, 2010, and the first EU-IMF bailout of Greece on May 2, 2010. These measures, aimed at restoring market confidence, were seen as an important signal of the willingness of European governments to protect private investors (Mink and de Haan 2013). The 90% confidence interval ranges from April 1, 2010 to June 22, 2010.

When we allow only a subset of parameters to change within the partial structural change model, the evidence of a structural break appears to be driven primarily by the break in the slope coefficients (see Table 5, Panel B). The sup-Wald statistic for the partial structural change model, in which only a break in the slope coefficients is considered under the alternative hypothesis, again identifies May 12, 2010, as an important turning point.

The sequences of Wald statistics for the two sub-periods are presented in Fig. 2a, b. For subsequent divisions of the sample, we fail to reject the null hypothesis of parameter constancy. The refinement procedure confirms the existence of the first break.
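The logic of the one-at-a-time procedure can be sketched as a recursion: test the current segment, split it at the estimated break if the test rejects, and repeat on each piece. To keep the example self-contained, it uses a hypothetical SupF test for a shift in the mean of a single series and a user-supplied critical value. In the application above, the system sup-Wald test with bootstrap p values would take its place. The minimum segment length is likewise an assumption.

```python
import numpy as np


def mean_shift_supf(x, h=0.15):
    """SupF for one shift in the mean of x; d_t = 1 for t > tau, tau in [hT, (1-h)T]."""
    T = len(x)
    ssr0 = np.sum((x - x.mean()) ** 2)
    best_stat, best_tau = -np.inf, None
    for tau in range(int(np.ceil(h * T)), int(np.floor((1 - h) * T)) + 1):
        a, b = x[:tau + 1], x[tau + 1:]
        ssr1 = np.sum((a - a.mean()) ** 2) + np.sum((b - b.mean()) ** 2)
        stat = (ssr0 - ssr1) / (ssr1 / (T - 2))      # F statistic with one restriction
        if stat > best_stat:
            best_stat, best_tau = stat, tau
    return best_stat, best_tau


def sequential_breaks(x, crit, h=0.15, min_size=40, offset=0):
    """One-at-a-time search in the spirit of Bai (1997): test, split at the estimate, recurse.

    crit is the critical value of the sup test (an input here; bootstrap p values could be
    used instead). Returns break indices in the coordinates of the original sample.
    """
    if len(x) < min_size:
        return []
    stat, tau = mean_shift_supf(x, h)
    if stat < crit:
        return []                                     # parameter constancy not rejected
    left = sequential_breaks(x[:tau + 1], crit, h, min_size, offset)
    right = sequential_breaks(x[tau + 1:], crit, h, min_size, offset + tau + 1)
    return left + [offset + tau] + right
```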

4.3 Granger-causality tests

We apply Granger-causality tests to illustrate the implications of parameter instability for inference about the linkages between bank and sovereign default risk. Granger-causality tests are commonly used in the literature to study the pattern of interaction between markets (Granger et al. 2000; Sander and Kleimeier 2003; Norden and Weber 2009). For example, Sander and Kleimeier (2003) use Granger causality to study shifts in sovereign debt market interdependencies during the Asian crisis. In particular, Alter and Schüler (2012) use Granger causality to study the interdependencies between daily changes in bank and sovereign CDS spreads.

The Granger-causality test does not reveal causality in a structural sense, but it does yield useful information about the lead-lag relationship between the series. Following the definition of Granger causality in Geweke et al. (1983), the test in our case reveals whether the coefficients of the lagged banking sector (sovereign) CDS returns are statistically significant and help to predict sovereign (banking sector) CDS returns. In other words, it tests whether banking sector CDS returns contain important information for determining sovereign CDS returns (and vice versa). Based on the structural breaks detected above, we divide the data into three sub-periods and conduct Granger-causality tests on each subsample. The results, reported in Table 6, show significant differences in the bank-sovereign interdependencies from one phase to another:

- The first sub-period (November 2008 to December 2009) is characterized by one-way Granger causality from the banking sector to the government; that is, banking sector CDS returns lead sovereign CDS returns.
- The second, interim period (January 2010 to May 2010) is characterized by a two-way feedback effect.
- The third sub-period (June 2010 to July 2012) is characterized by clear one-way Granger causality from sovereign CDS spreads to banking sector CDS spreads; that is, sovereign CDS returns lead banking sector CDS returns.
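Here is a minimal sketch of the Granger non-causality F-test applied regime by regime. It assumes one lag by default, and it rebuilds the lags within each regime, so the first p observations of each sub-period are lost. `scipy.stats.f.sf` supplies the F tail probability. The function names and dictionary labels are illustrative.

```python
import numpy as np
from scipy import stats


def _lags(x, p):
    """Columns x_{t-1}, ..., x_{t-p} for t = p, ..., T-1."""
    T = len(x)
    return np.column_stack([x[p - j:T - j] for j in range(1, p + 1)])


def granger_f_test(target, source, p=1):
    """F test of H0: lags of `source` do not Granger-cause `target` (returns F, p value)."""
    y = target[p:]
    X_r = np.column_stack([np.ones(len(y)), _lags(target, p)])   # restricted: own lags only
    X_u = np.column_stack([X_r, _lags(source, p)])              # unrestricted: add cross lags
    ssr_r = np.sum((y - X_r @ np.linalg.lstsq(X_r, y, rcond=None)[0]) ** 2)
    ssr_u = np.sum((y - X_u @ np.linalg.lstsq(X_u, y, rcond=None)[0]) ** 2)
    df_den = len(y) - X_u.shape[1]
    F = ((ssr_r - ssr_u) / p) / (ssr_u / df_den)
    return F, stats.f.sf(F, p, df_den)


def granger_by_regime(sov, bank, breaks, p=1):
    """p values in both directions within each regime; a break at tau ends a regime at tau."""
    edges = [0] + [b + 1 for b in breaks] + [len(sov)]
    results = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        s, b = sov[lo:hi], bank[lo:hi]
        results.append({"bank -> sov": granger_f_test(s, b, p)[1],
                        "sov -> bank": granger_f_test(b, s, p)[1]})
    return results
```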

The Granger-causality results obtained when all statistically significant turning points are accounted for differ clearly from those obtained for the complete data set or with a single break date. For example, without accounting for the relevant structural breaks, we would wrongly conclude that the overall sample period is characterized by one-way Granger causality from sovereign CDS spreads to banking sector CDS spreads. Similarly, accounting only for the first break, we would report a two-way feedback effect from January 2010 onwards. Our results can also be contrasted with some of the findings of Alter and Schüler (2012). For example, the period from November 2008 until the second break in May 2010 would in principle correspond to the “during and after” government interventions phase of Alter and Schüler (2012), which they define as running from late October 2008 until the end of May 2010. These authors consider only the two largest banks in Spain (i.e. Banco Santander and BBVA), on an individual basis, and report two-way Granger causality for that period for both banks. In contrast, for roughly the same period we report two distinct phases and find two-way Granger causality only for January 2010 to May 2010.

To reinforce the structural break analysis, we perform recursive Granger-causality tests, adding one observation at a time to the sample. Figure 3 plots the p values of the recursive Granger-causality tests for the sovereign and banking sector components. We observe a clear one-way influence of banking sector CDS spreads on sovereign CDS spreads before the first break in January 2010. This initial period is followed by a short transition period that ends with the structural change in May 2010. From May 2010 onwards, the null hypothesis that log-changes in sovereign CDS spreads do not Granger-cause log-changes in banking sector CDS spreads is rejected at the 1% significance level.
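The recursive version recomputes the same F-test on an expanding sample. The sketch below assumes one lag by default and an initial window of 60 observations; both are arbitrary choices, not values taken from the paper. The function name is hypothetical.

```python
import numpy as np
from scipy import stats


def recursive_granger_pvalues(target, source, p=1, start=60):
    """Granger non-causality p values on expanding samples: the first `start` observations,
    then one more observation at a time up to the full sample."""
    pvals = []
    for n in range(start, len(target) + 1):
        y = target[p:n]
        own = np.column_stack([target[p - j:n - j] for j in range(1, p + 1)])
        cross = np.column_stack([source[p - j:n - j] for j in range(1, p + 1)])
        X_r = np.column_stack([np.ones(n - p), own])        # constant and own lags
        X_u = np.column_stack([X_r, cross])                 # plus lags of the other series
        ssr_r = np.sum((y - X_r @ np.linalg.lstsq(X_r, y, rcond=None)[0]) ** 2)
        ssr_u = np.sum((y - X_u @ np.linalg.lstsq(X_u, y, rcond=None)[0]) ** 2)
        df_den = len(y) - X_u.shape[1]
        F = ((ssr_r - ssr_u) / p) / (ssr_u / df_den)
        pvals.append(stats.f.sf(F, p, df_den))
    return np.array(pvals)
```

Plotting the returned array against the end date of each window gives a chart of the kind shown in Fig. 3.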

5 Volatility transmission

Taken together, all the tests conducted show strong evidence of structural changes in the system. Based on the patterns revealed above, we divide the data into three sub-periods: November 2008 to December 2009, January 2010 to May 2010 and June 2010 to July 2012. We augment each equation of the baseline VAR system with break dummy variables that interact with the intercept and the slope coefficients (i.e. the lags of the endogenous variables), thus preserving the symmetry of the VAR system. The mean return generating process is defined as follows:

where \( \varvec{y}_{t} \) is a \( 2 \times 1 \) vector of the daily sovereign (\( y_{1,t} = y_{{{\text{SOV}},t}} \)) and banking sector (\( y_{2,t} = y_{{{\text{BK}},t}} \)) CDS spread log-differences; \( d_{t}^{1} \) is a dummy variable that takes the value 1 if the observation belongs to the first sub-period (November 2008–December 2009) and 0 otherwise; and \( d_{t}^{2} \) is a transition-period dummy variable that takes the value 1 if the observation belongs to the second sub-period (January 2010–May 2010) and 0 otherwise. In this way we consider a VAR model with three regimes, separated by two structural shifts, that reflect the changes in the dynamics of the mean transmission. Own-segment and cross-segment mean spillovers are measured by the estimates of the matrices \( {\varvec{\Gamma}} \), \( {\mathbf{D}}^{1} \) and \( {\mathbf{D}}^{2} \). The diagonal elements of these matrices measure the effect of own lagged returns, and the off-diagonal elements the effect of the other segment’s lagged returns; thus, the off-diagonal elements capture return spillovers.
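The mean equation itself is not reproduced in the text. Suppose it takes the form \( \varvec{y}_{t} = \varvec{\mu} + \varvec{\kappa}^{1} d_{t}^{1} + \varvec{\kappa}^{2} d_{t}^{2} + {\varvec{\Gamma}}\varvec{y}_{t - 1} + {\mathbf{D}}^{1} d_{t}^{1} \varvec{y}_{t - 1} + {\mathbf{D}}^{2} d_{t}^{2} \varvec{y}_{t - 1} + \varvec{\varepsilon}_{t} \). This is a VAR(1), as in the BEKK specification; the intercept-shift vectors \( \varvec{\kappa}^{1} \), \( \varvec{\kappa}^{2} \) are hypothetical labels. Under that assumption, the sketch below estimates the equation by OLS and returns the slope matrix implied in each regime. The function name is illustrative.

```python
import numpy as np


def regime_var1(y, d1, d2):
    """OLS for a bivariate VAR(1) whose intercept and slopes shift with regime dummies.

    y      : (T, 2) array with columns (sovereign, bank) CDS log-differences
    d1, d2 : (T,) 0/1 dummies for the first and second sub-periods
    Regime 3 (d1 = d2 = 0) is the baseline: Gamma is its slope matrix and
    Gamma + D^k is the slope matrix in regime k = 1, 2.
    """
    Y, L = y[1:], y[:-1]                                  # y_t and y_{t-1}
    a, b = d1[1:, None], d2[1:, None]                     # dummies dated t
    W = np.hstack([np.ones_like(a), a, b, L, a * L, b * L])
    coef = np.linalg.lstsq(W, Y, rcond=None)[0]           # (9, 2): one column per equation
    # Transpose so that entry (i, j) is the effect of lagged variable j on equation i.
    Gamma, D1, D2 = coef[3:5].T, coef[5:7].T, coef[7:9].T
    return {"regime 1": Gamma + D1, "regime 2": Gamma + D2, "regime 3": Gamma}
```

Under this parametrization, the (1, 2) entry of each returned matrix plays the role of \( \gamma_{12} \) (bank to sovereign) in that regime, and the (2, 1) entry the role of \( \gamma_{21} \) (sovereign to bank).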

To extract conditional variances and covariances, we estimate a bivariate VAR-BEKK-GARCH model proposed by Engle and Kroner (1995). We consider the full BEKK representation of Baba et al. (1990), which allows for a time-varying variance–covariance matrix and, by construction, guarantees that the estimated conditional covariance matrix is positive definite. Specifically, to capture conditional heteroskedasticity and estimate volatility transmission throughout the financial system, we estimate a regime-switching VAR(1)-GARCH(1,1) model within the BEKK framework of the following form:

where

The vector \( \varvec{\varepsilon}_{t} \) (\( 2 \times 1 \)) contains the residuals, i.e. the innovations for each market segment at time t, with conditional covariance matrix \( \varvec{H}_{t} \). The market information available at time t − 1 is represented by the information set \( {\varvec{\Omega}}_{t - 1} \).

Equation (11) defines the variance generating process. \( \varvec{H}_{t} \) is the \( 2 \times 2 \) conditional variance–covariance matrix of residuals, which in the bivariate case requires 11 parameters to be estimated: 3 in the upper triangular matrix C and 4 each in A and B. The matrix C contains the constants of the variance equation, the ARCH coefficients are derived from the A matrix, and the GARCH coefficients from the B matrix. We use GARCH(1,1), as this specification is rarely outperformed by more complex volatility models in forecast comparisons (Hansen and Lunde 2005). The model is estimated by maximum likelihood. The log-likelihood function is given by

The results from estimating the bivariate VAR-BEKK-GARCH model described by Eqs. (10) and (11) are provided in Table 7. We check the adequacy of the VAR-BEKK-GARCH specification using the Ljung-Box Q-statistic and the Lagrange multiplier (LM) test. We report Ljung-Box statistics for the squared standardized residuals up to lags 12 and 24. The results show no serial dependence in the squared standardized residuals, which indicates that the model is appropriate and captures the dynamics of the conditional volatility well.
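The variance recursion and the likelihood evaluated during estimation can be sketched as a filter. The sketch assumes three things:

- the standard BEKK(1,1) form \( \varvec{H}_{t} = \varvec{C}^{\prime}\varvec{C} + \varvec{A}^{\prime}\varvec{\varepsilon}_{t - 1}\varvec{\varepsilon}_{t - 1}^{\prime}\varvec{A} + \varvec{B}^{\prime}\varvec{H}_{t - 1}\varvec{B} \);
- a Gaussian likelihood;
- the sample covariance as the start-up value.

The same recursion yields the conditional correlations \( h_{12,t}/\sqrt{h_{11,t}h_{22,t}} \) used in this section. Maximum likelihood estimation would pass the negative of the returned log-likelihood to a numerical optimizer over the 11 free parameters; that step is omitted. The function name is hypothetical.

```python
import numpy as np


def bekk_filter(eps, C, A, B):
    """Bivariate BEKK(1,1) filter: H_t = C'C + A' e_{t-1} e_{t-1}' A + B' H_{t-1} B.

    eps     : (T, 2) mean-equation residuals
    C       : upper triangular constant matrix; A, B : 2 x 2 ARCH and GARCH matrices
    Returns conditional covariances H (T, 2, 2), the Gaussian log-likelihood and the
    conditional correlations h_12 / sqrt(h_11 h_22).
    """
    T, n = eps.shape
    CC = C.T @ C
    H = np.empty((T, n, n))
    H[0] = np.cov(eps.T)                       # start-up value: sample covariance (assumption)
    loglik = 0.0
    for t in range(T):
        if t > 0:
            e = eps[t - 1][:, None]
            H[t] = CC + A.T @ e @ e.T @ A + B.T @ H[t - 1] @ B
        _, logdet = np.linalg.slogdet(H[t])
        quad = eps[t] @ np.linalg.solve(H[t], eps[t])
        loglik -= 0.5 * (n * np.log(2.0 * np.pi) + logdet + quad)
    corr = H[:, 0, 1] / np.sqrt(H[:, 0, 0] * H[:, 1, 1])
    return H, loglik, corr
```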

Mean spillovers are measured by the parameters of the \( {\varvec{\Gamma}} \) matrix. Specifically, the diagonal elements of \( {\varvec{\Gamma}} \) measure the effect of each segment’s own lagged return on its current return, and the off-diagonal elements measure the effect of the other segment’s lagged return. For example, \( \gamma_{12} \) measures the effect of the previous day’s banking sector CDS return on the current sovereign CDS return. Because we consider a regime-switching model, these coefficients have to be interpreted together with the corresponding elements of the matrices \( {\mathbf{D}}^{1} \) and \( {\mathbf{D}}^{2} \). The mean transmission from the banking sector to the government was strongest in the first sub-period, weakened in the second and completely reversed in the third, in which we observe a highly pronounced mean transmission from the government to the banking sector. Specifically, the \( \gamma_{21} \) coefficient is positive and statistically significant at the 1% level.

The ARCH parameters are collected in matrix A, whereas the coefficients of matrix B measure the influence of lagged conditional variances and covariances on the current conditional variance. These coefficients capture volatility clustering: the larger the coefficient, the more persistent the effect of shocks. The diagonal elements of the A and B matrices measure the effect of own past shocks (\( a_{ii} \)) and own past volatility (\( b_{ii} \)). The coefficient a11 measures the dependence of conditional sovereign CDS return volatility on its own past shocks, and a22 the dependence of conditional banking sector CDS return volatility on its own past shocks. The a11 and a22 coefficients are statistically significant at the 1% level, indicating strong ARCH effects. The coefficient b11 measures the dependence of conditional sovereign CDS return volatility on its own lagged volatility, and b22 the dependence of conditional banking sector CDS return volatility on its own lagged volatility. The b11 and b22 coefficients are statistically significant at the 1% level, indicating strong GARCH effects.

We are particularly interested in the off-diagonal elements, as they reveal volatility spillovers across the market segments under consideration. The coefficient \( a_{ij} \) measures the spillover of squared shocks in the ith market in the previous period to the current volatility of the jth market. The a21 coefficient measures the dependence of conditional sovereign CDS return volatility on lagged shocks to bank CDS returns and is statistically significant at the 1% level. The opposite effect, the dependence of conditional banking sector CDS return volatility on lagged shocks to sovereign CDS returns, is measured by the a12 coefficient, which is not statistically significant. Similarly, \( b_{ij} \) measures the spillover of the conditional volatility of the ith market in the previous period to the jth market in the current period. The b21 coefficient measures the dependence of conditional sovereign CDS return volatility on the lagged volatility of bank CDS returns, and b12 the dependence of conditional banking sector CDS return volatility on the lagged volatility of sovereign CDS returns. Both coefficients are statistically significant at the 1% level.
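Assume the standard BEKK(1,1) variance equation \( \varvec{H}_{t} = \varvec{C}^{\prime}\varvec{C} + \varvec{A}^{\prime}\varvec{\varepsilon}_{t - 1}\varvec{\varepsilon}_{t - 1}^{\prime}\varvec{A} + \varvec{B}^{\prime}\varvec{H}_{t - 1}\varvec{B} \), with index 1 for the sovereign and index 2 for the banking sector. Expanding the two conditional variances then shows where the off-diagonal coefficients enter:

```latex
\begin{aligned}
h_{11,t} &= \left( \varvec{C}^{\prime}\varvec{C} \right)_{11}
  + a_{11}^{2}\varepsilon_{1,t-1}^{2} + 2a_{11}a_{21}\varepsilon_{1,t-1}\varepsilon_{2,t-1} + a_{21}^{2}\varepsilon_{2,t-1}^{2}
  + b_{11}^{2}h_{11,t-1} + 2b_{11}b_{21}h_{12,t-1} + b_{21}^{2}h_{22,t-1} \\
h_{22,t} &= \left( \varvec{C}^{\prime}\varvec{C} \right)_{22}
  + a_{12}^{2}\varepsilon_{1,t-1}^{2} + 2a_{12}a_{22}\varepsilon_{1,t-1}\varepsilon_{2,t-1} + a_{22}^{2}\varepsilon_{2,t-1}^{2}
  + b_{12}^{2}h_{11,t-1} + 2b_{12}b_{22}h_{12,t-1} + b_{22}^{2}h_{22,t-1}
\end{aligned}
```

The terms \( a_{21}^{2}\varepsilon_{2,t-1}^{2} \) and \( b_{21}^{2}h_{22,t-1} \) carry bank shocks and bank volatility into sovereign volatility. The coefficients \( a_{12} \) and \( b_{12} \) play the mirror role in \( h_{22,t} \).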

In conclusion, we find empirical evidence of two-way volatility transmission between sovereign and banking sector CDS returns. The time-varying conditional correlations between sovereign CDS returns and the first principal component of banking sector CDS returns are positive throughout the sample period, ranging from 0.21 to 0.95, with a mean of 0.56. The period of almost perfect correlation coincides with the abrupt break detected in the mean transmission: the conditional correlation peaks on May 11, 2010, 1 day before the government announced severe austerity measures.

5.1 Structural changes in the volatility transmission

To detect possible changes in the volatility transmission, a Granger-causality test can be applied directly to the time-varying variances in a VAR framework of the following form:

where \( \sigma_{11,t} \) is the time-varying sovereign CDS return volatility, \( \sigma_{22,t} \) is the time-varying banking sector CDS return volatility, ε1 and ε2 are i.i.d. error terms and p is the number of lags, selected by the Schwarz information criterion (SIC). The selected number of lags is one. In line with the previous analysis, we test for structural breaks in the VAR system and find a significant break point that, as before, coincides with May 12, 2010. When the overall sample is divided into two subsamples and the break-point tests are reapplied to each, an additional break point is detected only in the first sub-period, on January 28, 2010. These results, presented in Table 8, are consistent with the breaks detected in the mean transmission. They hold regardless of whether sovereign and banking sector volatility is calculated before or after accounting for the changes in the mean transmission. Finally, the results of the pairwise Granger-causality tests are presented in Table 9.Footnote 11 These results should, however, be interpreted with caution because of the measurement error inherent in the variables employed, and should therefore be regarded only as preliminary evidence on the changes in volatility transmission.
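Lag selection by the SIC can be sketched as follows, assuming the standard multivariate form \( \ln \left| {\hat{\varvec{\Sigma }}_{p} } \right| + k\ln N/N \). Here k is the number of estimated coefficients and N is the common effective sample size. Fitting every candidate order on the same observations keeps the criteria comparable. The function name and the default maximum lag are illustrative.

```python
import numpy as np


def select_var_lag_sic(x, max_p=8):
    """Choose the VAR lag order p that minimises the Schwarz information criterion.

    x : (T, n) array, e.g. columns (sigma_11,t, sigma_22,t).
    All orders are fitted on the same effective sample t = max_p, ..., T-1.
    """
    T, n = x.shape
    Y = x[max_p:]
    N = len(Y)
    best_p, best_sic = None, np.inf
    for p in range(1, max_p + 1):
        X = np.column_stack([np.ones(N)] + [x[max_p - j:T - j] for j in range(1, p + 1)])
        E = Y - X @ np.linalg.lstsq(X, Y, rcond=None)[0]
        _, logdet = np.linalg.slogdet(E.T @ E / N)   # ln |Sigma_hat_p|
        k = n * X.shape[1]                           # number of estimated coefficients
        sic = logdet + k * np.log(N) / N
        if sic < best_sic:
            best_p, best_sic = p, sic
    return best_p
```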

6 Conclusions

The dynamics of the interaction between the sovereign and the banking sector have direct implications for overall financial stability, but these interactions are not well understood. A common approach in the academic literature is to divide the sample period under consideration into several stages and then analyse possible differences in the risk transfer between them. However, in the context of the recent European financial and sovereign debt crisis, the literature provides no consensus on either the number of phases or the relevant turning points. In addition, when international samples are considered, the specific characteristics of each country are usually ignored and the same a priori selected break dates are imposed on the whole sample. In this paper, we shed light on this issue by endogenously searching for potential structural breaks in the bank-sovereign nexus within a formal econometric framework.

We provide empirical evidence that the choice of break dates is not a trivial question. Using Spain during the 2008–2012 period as an illustrative example, we find evidence of three distinct phases and of a clear change in the pattern of interaction between banking and sovereign CDS spreads. The pattern moves from a pronounced one-way bank-to-sovereign Granger causality (November 2008 to December 2009) to a pronounced one-way sovereign-to-bank Granger causality from May 2010 onwards. A bi-directional relationship between bank and sovereign CDS spreads is detected only during the interim period (January–May 2010), at the very peak of the European sovereign debt crisis. In addition, we provide evidence of volatility transmission between sovereign and banking sector CDS returns. Preliminary evidence on breaks in volatility transmission points to the same break dates as those detected in the mean return transmission. Taken together, all the tests conducted show strong evidence of structural changes precisely in the slope parameters, suggesting that the detected breaks reflect major shocks to the negative bank-sovereign feedback loop.

We observe that endogenously identified break dates coincide with identifiable public events. These events affect investors’ perceptions of, on the one hand, the government’s capacity and willingness to repay debt and, on the other hand, the government’s implicit and explicit support for distressed banks. More precisely, the major breaks are detected in January and May 2010. For the pure structural change model, the first break falls on January 6, 2010, 2 days before the European Commission published an official report on irregularities in Greek government deficit reporting. For the partial structural change model, the first break falls on January 28, 2010, which coincides exactly with the European Commission’s approval of bank recapitalization measures in Spain. For both the pure and the partial structural change models, the second break falls on May 12, 2010, the date on which the Spanish government publicly announced severe austerity measures. These results suggest that strong public finances might be one of the key factors in circumventing the bank-sovereign feedback loop. We believe that the methodology applied in this paper, which underscores the importance of accounting for all relevant structural breaks, can provide useful insights into the effect of major shocks on the pattern of bank-sovereign interdependence.

Notes

Attinasi et al. (2010), Kallestrup et al. (2016) and Merler and Pisani-Ferry (2012) provide empirical evidence for the bank-to-sovereign credit risk transfer induced by the perceived cost of bank rescue. Similarly, Ejsing and Lemke (2011) show that the sensitivity of sovereign risk premia to further crisis aggravations increased after the introduction of rescue packages by eurozone governments.

The acronym BEKK refers to Baba, Engle, Kraft and Kroner.

If only the two largest banks are considered (i.e. Banco Santander and BBVA) and the sample starts in December 2007, the first endogenous break corresponds to October 14, 2008, just before the start of the sample period that we consider in our analysis. Intuitively, this suggests that our sample does not cover the "before" phase of Alter and Schüler (2012), that is, the phase before the collapse of Lehman Brothers and the announcements of rescue packages by most euro-area governments. It does, however, cover the phase "during and after" the implementation of bank aid schemes.

The database applied in this paper is aligned with other published research in this field. Acharya et al. (2014), in line with our approach, consider only banks with publicly traded CDS and only banks with more than $10 billion in assets. Alter and Schüler (2012) and Ejsing and Lemke (2011), on the other hand, use the two biggest banks, Banco Santander and BBVA, to represent the banking sector in Spain.

Data on banks’ sovereign exposures were obtained from the CEBS EU-wide stress tests as of late March 2010.

The EBA’s list of D-SIBs additionally includes only Bankia.

We examine different values for h ranging from 10% to 15%.

Reported results are for h = 15%.

For h = 15%, the second break date falls outside the break search interval. Reported results are for h = 13%.

References

Abad J (2019) Breaking the sovereign-bank nexus. Working paper, CEMFI

Acharya V, Drechsler I, Schnabl P (2014) A pyrrhic victory? Bank bailouts and sovereign credit risk. J Finance 69(6):2689–2739

Adler G, Lizarazo S (2015) Intertwined sovereign and bank solvencies in a simple model of self-fulfilling crisis. Int Rev Econ Finance 39(C):428–448

Akin O, García Montalvo J, García Villar J, Peydró J-L, Raya JM (2014) The real estate and credit bubble: evidence from Spain. SERIEs 5:223–243

Alter A, Beyer A (2014) The dynamics of spillover effects during the European sovereign debt turmoil. J Bank Finance 42:134–153

Alter A, Schüler YS (2012) Credit spread interdependencies of European states and banks during the financial crisis. J Bank Finance 36(12):3444–3468

Andrews DWK (1993) Tests for parameter instability and structural change with unknown change point. Econometrica 61(4):821–856

Ang A, Longstaff FA (2013) Systemic sovereign credit risk: lessons from the U.S. and Europe. J Monet Econ 60(5):493–510

Attinasi M, Checherita C, Nickel C (2010) What explains the surge in Euro area sovereign spreads during the financial crisis of 2007–09? Public Finance Manag 10(4):595–645

Baba Y, Engle RF, Kraft D, Kroner KF (1990) Multivariate simultaneous generalized ARCH. Mimeo, Department of Economics, University of California, San Diego

Bai J (1997) Estimating multiple breaks one at a time. Econ Theory 13(3):315–352

Bai J, Lumsdaine RL, Stock JH (1998) Testing for and dating common breaks in multivariate time series. Rev Econ Stud 65(3):395–432

Beirne J, Fratzscher M (2013) The pricing of sovereign risk and contagion during the European sovereign debt crisis. J Int Money Finance 34:60–82

BIS (2009) 79th Annual report. Bank for International Settlements, Basel

Blanco R, Brennan S, Marsh IW (2005) An empirical analysis of the dynamic relationship between investment grade bonds and credit default swaps. J Finance 60(5):2255–2281

Blundell-Wignall A, Slovik P (2010) The EU stress test and sovereign debt exposures. OECD Working Papers on Finance, Insurance and Private Pensions, No. 4, OECD Publishing

Bocola L (2016) The pass-through of sovereign risk. J Polit Econ 124(4):879–926

Brunnermeier MK, Garicano L, Lane PR, Pagano M, Reis R, Santos T, Thesmar D, Van Nieuwerburgh S, Vayanos D (2016) The sovereign-bank diabolic loop and ESBies. Am Econ Rev 106(5):508–512

Candelon B, Lütkepohl H (2001) On the reliability of Chow-type tests for parameter constancy in multivariate dynamic models. Econ Lett 73(2):155–160

Chen CYH, Härdle WK (2015) Common factors in credit defaults swap markets. Comput Stat 30:845–863

Cooper R, Nikolov K (2018) Government debt and banking fragility: the spreading of strategic uncertainty. Int Econ Rev 59(4):1905–1925

Davies M, Ng T (2011) The rise of sovereign credit risk: implications for financial stability. BIS Q Rev 59–70

De Bruyckere V, Gerhardt M, Schepens G, Vander Vennet R (2013) Bank/sovereign risk spillovers in the European debt crisis. J Bank Finance 37(12):4793–4809

Dieckmann S, Plank T (2012) Default risk of advanced economies: an empirical analysis of credit default swaps during the financial Crisis. Rev Finance 16(4):903–934

Eichengreen B, Mody A, Nedeljkovic M, Sarno L (2012) How the subprime crisis went global: evidence from bank credit default swap spreads. J Int Money Finance 31(5):1299–1318

Ejsing J, Lemke W (2011) The Janus-headed salvation: sovereign and bank credit risk premia during 2008–2009. Econ Lett 110(1):28–31

Engle RF, Kroner KF (1995) Multivariate simultaneous generalized ARCH. Econ Theory 11(1):122–150

Farhi E, Tirole J (2018) Deadly embrace: sovereign and financial balance sheets doom loops. Rev Econ Stud 85(3):1781–1823

Fernández-Villaverde J, Garicano L, Santos T (2013) Political credit cycles: the case of the Eurozone. J Econ Perspect 27(3):145–166

Forte S, Peña JI (2009) Credit spreads: an empirical analysis on the informational content of stocks, bonds, and CDS. J Bank Finance 33(11):2013–2025

Gerlach S, Schulz A, Wolff GB (2010) Banking and sovereign risk in the Euro area. Center for Economic Policy Research Discussion Paper DP7833

Geweke J, Meese R, Dent W (1983) Comparing alternative tests of causality in temporal systems: analytic results and experimental evidence. J Econom 21(2):161–194

Giordano R, Pericoli M, Tommasino P (2013) Pure or wake-up-call contagion? Another look at the EMU sovereign debt crisis. Int Finance 16(2):131–160

Granger CWJ, Huang BN, Yang CW (2000) A bivariate causality between stock prices and exchange rates: evidence from recent Asian flu. Q Rev Econ Finance 40(3):337–354

Hansen BE (2000) Testing for structural change in conditional models. J Econom 97(1):93–115

Hansen PR, Lunde A (2005) A forecast comparison of volatility models: does anything beat a GARCH(1,1)? J Appl Econom 20(7):873–889

Hassan K, Hoque A, Gasbarro D (2017) Sovereign default risk linkage: implication for portfolio diversification. Pac Basin Finance J 41:1–16

Horta P, Lagoa S, Martins L (2014) The impact of the 2008 and 2010 financial crises on the Hurst exponents of international stock markets: implications for efficiency and contagion. Int Rev Financ Anal 35:140–153

International Monetary Fund (2012) Spain: financial stability assessment. IMF Country Report No. 12/137

Jimeno JF, Santos T (2014) The crisis of the Spanish economy. SERIEs 5:125–141

Kalbaska A, Gątkowski M (2012) Eurozone sovereign contagion: evidence from the CDS market (2005–2010). J Econ Behav Organ 83(3):657–673

Kallestrup R, Lando D, Murgoci A (2016) Financial sector linkages and the dynamics of bank and sovereign credit spreads. J Empir Finance 38(Part A):374–393

Kawaller IG, Koch PD, Koch TW (1990) Intraday relationships between volatility in S&P 500 futures prices and volatility in the S&P 500 index. J Bank Finance 14(2–3):373–397

König P, Anand K, Heinemann F (2014) Guarantees, transparency and the interdependency between sovereign and bank default risk. J Bank Finance 45:321–337

Leonello A (2018) Government guarantees and the two-way feedback between banking and sovereign debt crises. J Financ Econ 130(3):592–619

Longstaff FA, Mithal S, Neis E (2005) Corporate yield spreads: default risk or liquidity? New evidence from the credit default swap market. J Finance 60(5):2213–2253

Longstaff FA, Pan J, Pedersen LH, Singleton KJ (2011) How sovereign is sovereign credit risk? Am Econ J Macroecon 3(2):75–103

Merler S, Pisani-Ferry J (2012) Hazardous tango: sovereign-bank interdependence and financial stability in the Euro area. Banque de France Financ Stab Rev 16:201–210

Mink M, de Haan J (2013) Contagion during the Greek sovereign debt crisis. J Int Money Finance 34:102–113

Mody A, Sandri D (2012) The eurozone crisis: how banks and sovereigns came to be joined at the hip. Econ Policy 27(70):199–230

Nikkinen J, Sahlström P, Vähämaa S (2006) Implied volatility linkages among major European currencies. J Int Financ Mark Inst Money 16(2):87–103

Norden L, Weber M (2009) The co-movement of credit default swap, bond and stock markets: an empirical analysis. Eur Financ Manag 15(3):529–562

Oehmke M, Zawadowski A (2017) The anatomy of the CDS market. Rev Financ Stud 30(1):80–119

Petrovic A, Tutsch R (2009) National rescue measures in response to the current financial crisis. ECB Legal Working Paper No. 8

Quaglia L, Royo S (2015) Banks and the political economy of the sovereign debt crisis in Italy and Spain. Rev Int Polit Econ 22(3):485–507

Quandt RE (1960) Tests of the hypothesis that a linear regression system obeys two separate regimes. J Am Stat Assoc 55(290):324–330

Remolona EM, Scatigna M, Wu E (2008) A ratings-based approach to measuring sovereign risk. Int J Finance Econ 13(1):26–39

Sander H, Kleimeier S (2003) Contagion and causality: an empirical investigation of four Asian crisis episodes. J Int Financ Mark Inst Money 13(2):171–186

Santos T (2017) Antes del Diluvio: the Spanish banking system during the first decade of the euro. In: Glaeser EL, Santos T, Weyl EG (eds) After the flood: how the great recession changed economic thought. University of Chicago Press, Chicago

Acknowledgements

We thank Nezih Guner (Co-Editor), two anonymous referees, Eric Duca, Santiago Forte, and Ranko Jelić for valuable comments and suggestions. Lidija Lovreta acknowledges financial support from the Spanish Ministry of Economy and Competitiveness (Grant ECO2012-32554). The usual disclaimers apply.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lovreta, L., López Pascual, J. Structural breaks in the interaction between bank and sovereign default risk. SERIEs 11, 531–559 (2020). https://doi.org/10.1007/s13209-020-00219-z
