Abstract

Background

Monitoring resistance training presents unique difficulties because of differences in physical characteristics and capacity between athletes and the indoor environment in which it often occurs. Traditionally, methods such as volume load have been used, but these have inherent flaws. In recent times, numerous portable and affordable devices have been made available that purport to accurately and reliably measure kinetic and kinematic outputs, potentially offering practitioners a means of measuring resistance training loads with confidence. However, a thorough and systematic review of the literature describing the reliability and validity of these devices has yet to be undertaken, which may leave practitioners uncertain about the utility of these devices.

Objective

A systematic review of studies that investigate the validity and/or reliability of commercially available devices that quantify kinetic and kinematic outputs during resistance training.

Methods

Following PRISMA guidelines, a systematic search of SPORTDiscus, Web of Science, and Medline was performed; studies were included if they (1) were original research investigations; (2) were full-text articles written in English; (3) were published in a peer-reviewed academic journal; and (4) assessed the validity and/or reliability of commercially available portable devices that quantify kinetic and/or kinematic outputs during resistance training exercises.

Results

A total of 129 studies were retrieved, of which 47 were duplicates. The titles and abstracts of 82 studies were screened and the full texts of 40 manuscripts were assessed. A total of 31 studies met the inclusion criteria. An additional 13 studies, identified via reference list assessment, were included. Therefore, a total of 44 studies were included in this review.

Conclusion

Most of the studies within this review did not utilise a gold-standard criterion measure when assessing validity. This has likely led to under- or over-reporting of error for certain devices. Furthermore, studies that have quantified intra-device reliability have often failed to distinguish between technological and biological variability, which has likely distorted the reported precision of each device. However, it appears that linear transducers have greater accuracy and reliability than other forms of device. Future research should endeavour to utilise gold-standard criterion measures across a broader range of exercises (including weightlifting movements) and relative loads.


For the accurate measurement of kinetic and kinematic outputs during resistance training, it is advised that linear transducers are utilised. These devices have demonstrated greater accuracy and reproducibility when compared to other technologies.

It is strongly advised that future validity studies utilise gold-standard criterion measures across a range of relative intensities and exercises.

For the assessment of reliability, technological and biological error must be acknowledged and separated, so that the precision of each device during exercise can be accurately reported.

1 Introduction

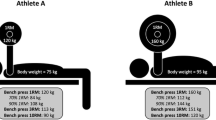

Resistance training is commonly used to improve strength, power, and lean body mass [1, 2], and is a fundamental part of athlete physical preparation. Traditionally, methods such as the number of repetitions or overall volume load (i.e., the product of external mass, the number of repetitions, and the number of sets) have been used to quantify training loads [3,4,5]. However, these methods have fundamental flaws that limit their application. For example, depending on whether an athlete uses maximal intent or a pacing strategy, internal fatigue and adaptive responses can differ vastly [6, 7]. Furthermore, differences in exercise prescription, athlete physical capacity, and range of motion mean simple volume load equations can be misleading. This can be observed when completing differing repetition and set structures (e.g., three sets of 10 repetitions vs. 10 sets of three repetitions with the same external load) or when stronger athletes are compared against weaker counterparts [3, 4]. To circumvent these issues and support the accurate quantification of resistance training loads, a range of tools that assess kinetic and kinematic outputs have been developed [8,9,10,11]. By monitoring these outputs, changes in fatigue and proximity to concentric muscle failure can be closely tracked [6, 12, 13]. Furthermore, these devices have been used for a number of training purposes, ranging from the immediate feedback of velocity and power outputs [14,15,16,17] to supporting full autoregulatory prescriptive methods [18, 19].
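To make the volume load problem concrete, the sketch below computes volume load as defined above for the two set structures mentioned, and the relative intensity for two athletes lifting the same mass. The function name and all numbers are illustrative assumptions, not data from the cited studies.

```python
def volume_load(sets: int, reps: int, mass_kg: float) -> float:
    """Volume load: external mass x repetitions per set x number of sets (kg)."""
    return sets * reps * mass_kg


# Hypothetical prescriptions using the same 100 kg external load.
three_by_ten = volume_load(sets=3, reps=10, mass_kg=100.0)
ten_by_three = volume_load(sets=10, reps=3, mass_kg=100.0)
print(three_by_ten, ten_by_three)  # 3000.0 3000.0

# Hypothetical athletes with different one-repetition maximums (1RM) lifting
# the same 100 kg: identical volume load, very different relative intensity.
for name, one_rm_kg in (("stronger", 200.0), ("weaker", 125.0)):
    print(name, f"{100 * 100.0 / one_rm_kg:.0f}% of 1RM")
```

Both set structures return the same volume load, even though sets of 10 are likely to end much closer to concentric failure than sets of three.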

Linear position transducers (LPTs) and accelerometers are two commonly utilised tools that support the monitoring of training loads during resistance training [13, 20, 21]. While LPTs directly measure displacement and time, accelerometers are used to estimate kinetic and kinematic outputs by determining the time integral of the acceleration data. With respect to LPTs and accelerometers, there is an array of different brands, and these have been found to demonstrate varying levels of accuracy and reproducibility [9, 10]. It should be noted that LPTs should not be confused with linear velocity transducers (LVTs), which determine kinetic and kinematic outputs through the direct measurement of instantaneous velocity. Furthermore, in recent times, there have been a range of new devices that monitor resistance training outputs, with these being made possible through advancements in technology [22]. Examples of these include optic laser devices and the cameras within smartphones [22, 23]. While validity and reliability data have been published on these new devices, they have sparingly been compared to linear transducer (i.e., either LPTs or LVTs) and accelerometer data [24]. Furthermore, the literature has not been synthesised to inform practical use and help guide future research.
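The difference between the two measurement principles can be sketched numerically. This is a simplified illustration under stated assumptions: a noise-free hypothetical lift with constant acceleration, a purely vertical bar path, and gravity already removed from the accelerometer signal. Displacement is differentiated (LPT-style) and acceleration is integrated (accelerometer-style); the function names are hypothetical and do not reproduce any manufacturer's processing.

```python
def velocity_from_displacement(disp_m, dt_s):
    """LPT-style estimate: differentiate displacement (m) sampled every dt_s seconds."""
    n = len(disp_m)
    vel = [0.0] * n
    vel[0] = (disp_m[1] - disp_m[0]) / dt_s            # forward difference at the start
    vel[-1] = (disp_m[-1] - disp_m[-2]) / dt_s         # backward difference at the end
    for i in range(1, n - 1):
        vel[i] = (disp_m[i + 1] - disp_m[i - 1]) / (2 * dt_s)  # central difference
    return vel


def velocity_from_acceleration(acc_ms2, dt_s, v0=0.0):
    """Accelerometer-style estimate: cumulative trapezoidal integration of acceleration."""
    vel = [v0]
    for a_prev, a_next in zip(acc_ms2[:-1], acc_ms2[1:]):
        vel.append(vel[-1] + 0.5 * (a_prev + a_next) * dt_s)
    return vel


# Hypothetical lift: constant 2 m/s^2 upward acceleration for 0.5 s, sampled at 100 Hz.
dt, a = 0.01, 2.0
times = [i * dt for i in range(51)]
disp = [0.5 * a * t ** 2 for t in times]

v_lpt = velocity_from_displacement(disp, dt)
v_acc = velocity_from_acceleration([a] * len(times), dt)
# A small constant sensor offset (0.05 m/s^2) integrates into a growing velocity error.
v_acc_offset = velocity_from_acceleration([a + 0.05] * len(times), dt)

print(round(v_lpt[25], 3), round(v_acc[-1], 3), round(v_acc_offset[-1] - v_acc[-1], 3))  # 0.5 1.0 0.025
```

The last value shows why integration-based estimates are sensitive to small sensor errors: the offset accumulates over the duration of the repetition rather than staying constant.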

To support the accurate quantification of training loads, it is important that the technology used is both valid and reliable. This is particularly important for practitioners who utilise this information to make decisions regarding subsequent training sessions. The validity of an instrument often refers to its ability to measure what it is intended to measure with accuracy and precision [25,26,27]. This is typically quantified by comparing the output of the respective instrument to a ‘gold-standard’ or criterion measure. An example of a gold-standard measure would be the use of 3D high-speed motion capture when assessing velocity. Typical measures of validity include systematic and random bias, coefficient of variation (CV), and standard error of the estimate (SEE) [1, 28, 29]. Because many resistance training methods now apply velocity loss thresholds to help mitigate fatigue responses [30, 31], or make programming decisions based on the force–velocity–power characteristics of an exercise [32], it is essential that the outputs produced are accurate. Otherwise, athletes may complete inappropriate training volumes or select exercises that induce undue fatigue or generate a sub-optimal training stimulus.
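As a rough guide to how these validity statistics are commonly derived, the sketch below computes systematic bias (mean difference), random error as 95% limits of agreement, the SEE from an ordinary least-squares regression of the criterion on the device, and a CV expressed as the SEE relative to the criterion mean. These are common conventions, but the cited studies do not all define CV or SEE identically; the function name and data are hypothetical.

```python
import math
import statistics


def validity_summary(device, criterion):
    """Agreement between paired device and criterion readings (same units)."""
    diffs = [d - c for d, c in zip(device, criterion)]
    bias = statistics.mean(diffs)                              # systematic bias
    sd_diff = statistics.stdev(diffs)                          # random error
    limits = (bias - 1.96 * sd_diff, bias + 1.96 * sd_diff)   # 95% limits of agreement

    # Ordinary least-squares regression predicting the criterion from the device.
    mx, my = statistics.mean(device), statistics.mean(criterion)
    sxx = sum((x - mx) ** 2 for x in device)
    sxy = sum((x - mx) * (y - my) for x, y in zip(device, criterion))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(device, criterion)]
    see = math.sqrt(sum(r * r for r in residuals) / (len(device) - 2))

    return {"bias": bias, "limits": limits, "see": see, "cv_pct": 100 * see / my}


# Hypothetical paired mean velocities (m/s): device vs. 3D motion capture.
device = [0.48, 0.66, 0.81, 0.97, 1.18]
criterion = [0.45, 0.62, 0.80, 0.93, 1.12]
print(validity_summary(device, criterion))
```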

The reliability of an instrument denotes its ability to reproduce measures on separate occasions when it is known that the measure of interest should not fluctuate [33]. When assessing devices that measure kinetic and kinematic outputs, both ‘intra-device’ (i.e., comparing outputs from the same device) and ‘inter-device’ (i.e., comparing outputs from two devices of the same make during the same trial) reliability are important. Intra-device reliability is essential to consider when tracking and identifying ‘meaningful’ changes over a specified period [34]. However, when assessing the reliability of an instrument, it is important to separate biological (i.e., human) and technological variation [22]. This is particularly pertinent during resistance training, where fluctuations in strength and readiness to train can cause substantial alterations in velocity and power outputs despite the same relative load being used [31, 35]. Therefore, research assessing reliability of devices needs to account for, and preferably remove, biological variation to gain a true insight into a device’s reproducibility. Inter-device reliability is important to consider when several devices of the same brand are being used in practice (e.g., two devices are being used to monitor two separate barbells when multiple athletes are training) [36, 37]. To ensure a true representation of each athlete’s capacity, the reproducibility of each device needs to be considered. Typical measures of reliability include typical/standard error of measurement (TEM/SEM), CV, and intra-class correlations (ICC) [25, 36, 38].
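Similarly, the reliability statistics listed above can be sketched for two trials of the same set of repetitions. This assumes common definitions: typical error as the SD of the trial-to-trial differences divided by √2, CV as the typical error relative to the grand mean, and ICC(3,1) from a two-way ANOVA. Individual studies may use other ICC forms; function names and data are hypothetical.

```python
import math
import statistics


def typical_error(trial1, trial2):
    """Typical error: SD of the trial-to-trial differences divided by sqrt(2)."""
    diffs = [b - a for a, b in zip(trial1, trial2)]
    return statistics.stdev(diffs) / math.sqrt(2)


def cv_percent(trial1, trial2):
    """Typical error expressed as a percentage of the grand mean of both trials."""
    grand_mean = statistics.mean(list(trial1) + list(trial2))
    return 100 * typical_error(trial1, trial2) / grand_mean


def icc_3_1(rows):
    """ICC(3,1), consistency, from a two-way ANOVA.
    rows: one sequence per repetition/participant, each holding k repeated measurements."""
    n, k = len(rows), len(rows[0])
    grand = sum(sum(r) for r in rows) / (n * k)
    row_means = [sum(r) / k for r in rows]
    col_means = [sum(r[j] for r in rows) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    ms_rows = ss_rows / (n - 1)
    ms_error = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


# Hypothetical mean velocities (m/s) recorded on two occasions.
trial1 = [0.50, 0.70, 0.90, 1.10]
trial2 = [0.52, 0.69, 0.93, 1.08]
print(f"TE = {typical_error(trial1, trial2):.4f} m/s, "
      f"CV = {cv_percent(trial1, trial2):.1f}%, "
      f"ICC(3,1) = {icc_3_1(list(zip(trial1, trial2))):.3f}")
```

Note that a high ICC here coexists with a non-zero typical error; the two statistics answer different questions, which is one reason several are usually reported together.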

While there is an abundance of research that assesses the kinetic and kinematic outputs of commercially available devices during resistance training [1, 39, 40], there has not been a review assessing the validity and reliability of these different forms of technology. Due to the growing use of this equipment during resistance training, it is appropriate that a systematic review is completed to guide practitioners and researchers. Therefore, the aim of this review is to establish the level of evidence for: (1) the validity of all commercially available portable resistance training devices that monitor force, velocity, and power outputs; and, (2) the intra-device and inter-device reliability of these devices.

2 Methods

2.1 Search Strategy

Following PRISMA guidelines for systematic reviews [41], the academic databases SPORTDiscus, Web of Science, and Medline were systematically searched in August 2020 using titles, abstracts, keywords, and Boolean operators (AND/OR) to identify English-language peer-reviewed original research studies that investigated the validity and/or reliability of commercially available, portable devices that quantify kinetic and/or kinematic variables during resistance training. Studies were identified by searching abstracts, titles, and keywords for pre-determined terms relevant to the scope of this review (Table 1). All search results were extracted and imported into a reference manager (EndNote X9, Thomson Reuters, Philadelphia, PA, USA).

2.2 Selection Criteria

All duplicate studies were removed, and the titles and abstracts of all remaining studies were scanned for relevance by two authors (JW and MM). Studies that were deemed beyond the scope of the review were removed. The full text of the remaining studies was then assessed for eligibility. To be eligible for inclusion, studies were required to be (1) original research investigations; (2) full-text articles written in English; (3) published in a peer-reviewed academic journal before the 3rd of August, 2020; and (4) concerned with the validity and/or reliability of commercially available, portable resistance training devices that monitor force, velocity, and power outputs during resistance training (i.e., exercise that consisted of applying an external load to the participant). Studies that did not meet the inclusion criteria were excluded from the analysis, as were studies concerning a device that was no longer commercially available. The reference lists of all eligible studies were then manually searched for any studies that were not retrieved in the initial search, and any studies identified in this way were subjected to the same assessment as previously described. Where necessary, means and measures of dispersion were extracted from figures in the manuscripts using WebPlotDigitizer v4.0 [42].

2.3 Assessment of Reporting Quality

The reporting quality of the research was assessed using a modified version of the Downs and Black checklist [43] (Table 2). This method is valid for assessing the methodological reporting quality of observational study designs and has previously been utilised by systematic reviews pertaining to sport science [44]. Not all of the assessment criteria were applicable to the studies used in this review; thus, only 9 of the 27 criteria were used. These questions can be found in Electronic Supplementary Material Table S1. Each item was scored as ‘0’ (no, or unable to determine) or ‘1’ (yes). A total score of ‘9’ indicated the highest reporting quality, with scores above 6 considered ‘good’, scores of 4–6 considered ‘moderate’, and scores below 4 considered ‘poor’ methodological reporting quality.
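The scoring bands above map onto a simple rule. This sketch assumes the nine applicable items are each scored 0 or 1; the function name is hypothetical.

```python
def reporting_quality(item_scores):
    """Total the nine modified Downs and Black items and assign a quality band."""
    if len(item_scores) != 9 or any(s not in (0, 1) for s in item_scores):
        raise ValueError("expected nine items, each scored 0 or 1")
    total = sum(item_scores)
    if total > 6:
        band = "good"        # 7-9
    elif total >= 4:
        band = "moderate"    # 4-6
    else:
        band = "poor"        # 0-3
    return total, band


print(reporting_quality([1, 1, 1, 1, 1, 1, 1, 0, 1]))  # (8, 'good')
```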

2.4 Criteria for ‘Acceptable’ Validity and Reliability

Devices were deemed to have demonstrated ‘acceptable’ validity if the literature reported a very high correlation (> 0.70), moderate CV (< 10%), and a trivial or small ES (< 0.60) based on a modified effect size scale [45]. This is consistent with previous resistance training literature which has assessed the validity of resistance training devices [8, 22, 46]. Devices were said to demonstrate acceptable reliability if a device had an intra-class correlation coefficient ≥ 0.90, CV < 10%, and a standardised mean bias < 0.60. This is consistent with previous resistance training literature which has assessed the reliability of resistance training devices [22, 47, 48].
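The acceptability thresholds can be expressed as simple checks. In this hedged sketch the standardised mean difference is computed as the mean difference divided by the pooled SD of the two data sets, which is one common choice but not necessarily the one used in every cited study; function names are hypothetical.

```python
import statistics


def standardised_mean_difference(device, criterion):
    """Mean difference divided by the pooled SD of both sets (assumed definition)."""
    sd_pooled = ((statistics.variance(device) + statistics.variance(criterion)) / 2) ** 0.5
    return (statistics.mean(device) - statistics.mean(criterion)) / sd_pooled


def acceptable_validity(r, cv_pct, es):
    """Correlation > 0.70, CV < 10%, and trivial or small ES (< 0.60)."""
    return r > 0.70 and cv_pct < 10.0 and abs(es) < 0.60


def acceptable_reliability(icc, cv_pct, es):
    """ICC >= 0.90, CV < 10%, and standardised mean bias < 0.60."""
    return icc >= 0.90 and cv_pct < 10.0 and abs(es) < 0.60


print(acceptable_validity(r=0.95, cv_pct=6.5, es=0.20))       # True
print(acceptable_reliability(icc=0.89, cv_pct=3.0, es=0.10))  # False
```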

3 Results

3.1 Identification of Studies

The systematic search retrieved a total of 129 studies, with 47 of these being removed as duplicates. The titles and abstracts of the remaining 82 studies were screened, with 38 being deemed to be outside the scope of the review and a further 4 being excluded as they were not written in English. The full-text manuscripts of the remaining 40 studies were reviewed, resulting in the identification of 31 studies that met the inclusion criteria. The reference lists of these 31 manuscripts were subsequently assessed, which led to an additional 13 studies being identified and a total of 44 studies included in this review. The identification process is outlined in Fig. 1.
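The study counts reported above can be checked with simple arithmetic. The number excluded at the full-text stage (9) is derived here rather than stated in the text.

```python
retrieved = 129
duplicates = 47
screened = retrieved - duplicates                     # titles/abstracts screened
out_of_scope, not_english = 38, 4
full_text = screened - out_of_scope - not_english     # full texts reviewed
met_criteria = 31
excluded_full_text = full_text - met_criteria         # derived: excluded at full-text stage
from_reference_lists = 13
included = met_criteria + from_reference_lists        # studies in the review

print(screened, full_text, excluded_full_text, included)  # 82 40 9 44
```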

3.2 Research Reporting Quality

The methodological reporting quality of the research investigating the validity and/or reliability was relatively high [mean ± standard deviation 8.0 ± 1.3; median (interquartile range) 8 (1.25)] when appraised using the modified Downs and Black checklist [43] (Table 2). Items that were consistently not achieved included question 3 (full device details reported, n = 26 studies), 10 (actual statistics reported, n = 32 studies), and 18 (appropriate statistical analysis, n = 26 studies). To improve the quality of future research, authors should ensure that all statistics are reported and that the model and specifications of the device being used are included within the manuscript. Additionally, pooling of repeated measures must be accounted for with an appropriate statistical approach, while future research should seek to delineate the influence of technological variation from biological variation on reliability measures.

3.3 Study Characteristics

All devices that were included within this review can be found within Table 3. Seven accelerometers (Push Band, Push Band 2.0, Beast Sensor, Bar Sensei, MyoTest, Wimu System, and RehaGait), 10 linear transducers [GymAware, SmartCoach, 1080Q, T-Force, Chronojump, Tendo, Speed4Lifts, FitroDyne (Fitronic), Open Barbell System, and Musclelab (Ergotest)], three mobile applications (PowerLift/MyLift, iLoad, and Kinovea), and two optic devices (Velowin and Flex) were included. Thirty-six studies assessed validity (Tables 4, 5, 6, 7), while 28 studies investigated reliability (Tables 8, 9, 10, 11). The most common exercises assessed were the squat and bench press, performed either in the Smith machine or with free weights, while velocity was the most commonly assessed kinetic or kinematic variable.

Of the 19 studies that assessed the validity of linear transducer devices, 11 used free-weight equipment, six used a Smith machine, and one used both free-weight and Smith machine exercises. Relative loads from 20 to 100% of 1RM were used, while absolute loads were used within seven studies (refer to Table 4). Of the 23 studies that assessed the validity of accelerometer devices, 14 used free-weight equipment, eight used a Smith machine, and one used both free-weight and Smith machine exercises. Relative loads from 10 to 100% of 1RM were assessed, while absolute loads were used within six studies (refer to Table 5). In the 10 studies that assessed the validity of mobile phone and tablet applications, three used free-weight equipment and seven used a Smith machine. Relative loads ranging from 40 to 100% of 1RM were used, while six studies used either repetitions above or below a given speed (i.e., 0.80 m·s⁻¹), absolute, or maximal (i.e., 10RM) prescriptive methods (refer to Table 6). Finally, in the eight studies that quantified the validity of optic devices, four used free-weight equipment and four used a Smith machine. Relative loads from 20 to 100% of 1RM were assessed, and one study prescribed loads at or above a given speed, while absolute loads were used within five studies (refer to Table 7).

Of the 19 studies that quantified the reliability of linear transducer devices, 10 used free-weight equipment, eight used a Smith machine, and one used both free-weight and Smith machine exercises. Relative loads from 0 to 100% of 1RM were assessed, while absolute loads were used within seven studies (refer to Table 8). Of the 14 studies that quantified the reliability of accelerometer devices, eight used free-weight equipment, five used a Smith machine, and one used both free-weight and Smith machine exercises. Relative loads from 10 to 100% of 1RM were assessed, while absolute loads were used within five studies (refer to Table 9). In the six studies that quantified the reliability of mobile phone and tablet applications, three used free-weight equipment and three used a Smith machine. Relative loads ranging from 45 to 95% of 1RM were assessed, one study used repetitions above or below a given speed, while absolute loads were used within one study (refer to Table 10). Finally, in the six studies that quantified the reliability of optic devices, two used free-weight equipment and four used a Smith machine. Relative loads from 20 to 100% of 1RM were assessed, while absolute loads were used within four studies (refer to Table 11).

4 Discussion

The aims of this review were to (1) establish the level of evidence for the validity of all commercially available portable resistance training devices that monitor force, velocity, and power outputs; and (2) determine the intra-device and inter-device reliability of these devices. Velocity was the most investigated output, with all but two studies investigating this outcome measure [49, 50]. Furthermore, most studies within this review did not utilise a gold-standard criterion measure (e.g., a high-speed motion-capture set-up for measuring velocity) when assessing the validity of devices. This has likely led to under- or over-reporting of error for certain devices and may explain (at least in part) the inconsistent findings of different studies that have assessed the same device. However, when compared with a gold-standard criterion, linear transducers appear to demonstrate greater accuracy and precision than other devices when measuring kinetic and kinematic outputs. That said, future research must utilise a broader range of exercises (e.g., Olympic weightlifting exercises and their derivatives) and loads before the reliability and validity of these devices can be stated with confidence. For the assessment of reliability, only three studies have assessed the agreement between two different devices of the same brand (i.e., inter-device) [22, 36, 51]. In contrast, there has been a substantial amount of research concerning intra-device reliability [28, 48, 52]; however, all but one of these studies [22] failed to differentiate technological from biological variation to establish their respective influence on the unit’s reliability. Therefore, future research must attempt to separate these different forms of error to provide a fair reflection of intra-device reliability and the variation that can be expected.

4.1 Validity

Of the 19 studies that have assessed the validity of linear transducers, 10 utilised a gold-standard criterion of high-speed 3D motion capture when assessing velocity [10, 24,25,26,27, 51, 53, 54] or a force plate when assessing force [8, 25, 26, 50] (Table 4). From the evidence provided, these types of devices tend to demonstrate greater accuracy than accelerometers [8, 9, 26]. Of all linear transducer devices, the GymAware PowerTool [8, 9, 24,25,26,27, 50, 54, 55] and Tendo Fitrodyne [36, 53, 54, 56,57,58] have been the most investigated, with nine and six independent validity studies, respectively. Additionally, the Fitrodyne (Fitronic) [9, 55] and Open Barbell System [53, 57] have each had two studies assessing their validity. However, when the agreement between the GymAware PowerTool and the Fitrodyne (Fitronic) has been examined [55], slight discrepancies have emerged. Mitter et al. [9] demonstrated greater accuracy of the GymAware PowerTool compared to the Fitrodyne (Fitronic), while Fernandes et al. [55] warned practitioners against interchanging these devices due to systematic differences (refer to Table 4), particularly when peak velocity is used [55]. Differences between devices may be due to different sampling methods (e.g., displacement-based or variable-rate sampling) and/or the way in which the raw data signals are treated within the software (e.g., manufacturer-defined filtering routines). Thus, while linear transducers consistently demonstrate superior accuracy compared to other forms of velocity-measuring devices [8,9,10, 53, 54], practitioners should avoid using different devices interchangeably during the long-term monitoring of athletes.
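To illustrate why peak velocity can be particularly sensitive to device-specific processing, the sketch below applies a generic centred moving-average filter to a hypothetical velocity trace containing small alternating measurement noise. The filter is an assumption chosen for simplicity; the manufacturers' actual filtering routines are not described in the cited studies.

```python
import math


def moving_average(signal, window):
    """Centred moving average; the window is truncated at the ends of the signal."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        segment = signal[max(0, i - half): i + half + 1]
        out.append(sum(segment) / len(segment))
    return out


# Hypothetical concentric velocity profile (m/s): a half-sine peaking at 1.0 m/s,
# sampled 101 times, plus alternating +/-0.05 m/s measurement noise.
raw = [math.sin(math.pi * i / 100) + (0.05 if i % 2 == 0 else -0.05) for i in range(101)]
filtered = moving_average(raw, window=11)

for label, v in (("raw", raw), ("filtered", filtered)):
    print(f"{label:>8}: mean = {sum(v) / len(v):.3f} m/s, peak = {max(v):.3f} m/s")
```

In relative terms, filtering changes the peak far more than the mean, so two devices that process the same movement differently can disagree more on peak than on mean velocity.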

Ten studies have directly compared accelerometer-based devices (i.e., Push Band versions 1.0 and 2.0, Beast Sensor, Bar Sensei, and MyoTest) with gold-standard 3D motion capture [9, 10, 24, 29, 37, 54, 58, 59] or a force plate (when assessing force variables) [8, 49], with the power clean [24], bench press [9, 10, 29, 58], back squat [9, 24, 59], deadlift [9], ballistic squat [54], shoulder press [37], and biceps curl [37] being assessed (Table 5). Across these studies, all outputs, except peak velocity at 60 and 90% of 1RM in the bench press for the Push Band 2.0 [29], have demonstrated questionable validity. Furthermore, an additional 13 studies have assessed accelerometer-based devices against other devices, predominantly the GymAware PowerTool [28, 47, 52, 60] or T-Force [36, 38, 61, 62] linear transducers. Among these, mean and peak velocity are the most investigated outputs. The CV of these accelerometer devices tends to range from 10 to 20% across exercises, with lighter relative loads (i.e., faster velocities) tending to have less error [8, 47]. Furthermore, mean velocity monitored at heavy loads (i.e., > 90% of 1RM) may be extremely inaccurate (i.e., CV = 27–35%), which may be related to the detection of different phases of each movement [8, 47, 60, 63]. This may be an issue for practitioners, as mean concentric velocity is often advised for monitoring resistance training adaptations in non-ballistic exercises (e.g., squats, bench press) [64,65,66]. Considering these findings, accelerometers may best be used to provide feedback that enhances motivation and competitiveness during ballistic, high-velocity exercises [67]. However, accelerometers should not be used to track changes in performance (e.g., assessment of velocity against a fixed absolute load) or to prescribe loads or set volumes when using velocity loss thresholds.
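The practical consequence of measurement error for velocity loss thresholds can be sketched as follows. The example assumes velocity loss is calculated against the fastest repetition so far and uses hypothetical criterion and device readings (not data from any cited study) for the same set; the function name is hypothetical.

```python
def reps_until_threshold(velocities, threshold_pct):
    """Return the repetition (1-indexed) at which velocity loss, measured against the
    fastest repetition so far, first reaches the threshold; None if never reached."""
    best = velocities[0]
    for rep, v in enumerate(velocities, start=1):
        best = max(best, v)
        loss_pct = 100 * (best - v) / best
        if loss_pct >= threshold_pct:
            return rep
    return None


# Hypothetical mean concentric velocities (m/s) for one set.
criterion = [0.80, 0.78, 0.76, 0.73, 0.70, 0.67, 0.63, 0.60]
# Hypothetical readings from a less accurate device for the same repetitions.
device = [0.72, 0.86, 0.70, 0.80, 0.66, 0.74, 0.62, 0.66]

print(reps_until_threshold(criterion, 20), reps_until_threshold(device, 20))  # 7 5
```

With a 20% threshold, the noisier readings would end the set two repetitions earlier than the criterion values, changing the prescribed training volume.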

Of the studies that involved devices that were not accelerometer or linear transducers and assessed validity (Tables 6, 7), only three utilised a true gold-standard criterion [22, 24, 68]. When compared against a high-speed 3D motion-capture set-up, the Velowin opto-electronic device has demonstrated acceptable validity for both mean and peak velocity (CV = 6.5–7.5%); however, proportional bias in peak velocity may be present [68]. The optic laser Flex device has demonstrated acceptable validity for mean velocity during both free-weight squat and bench-press exercises across a range of loads (i.e., 20–100% 1RM) [22]. While there are small increases in variability at the lightest loads (i.e., 20% 1RM), between 40 and 100% of 1RM the typical error is approximately 0.02 m·s⁻¹. It should be noted, though, that currently only mean velocity has been validated for the Flex, and other variables (e.g., peak velocity) still need to be compared against a gold-standard measure as these outputs may be most relevant to the lightest loads (e.g., 20% 1RM). Finally, with the increasing interest in monitoring resistance training performance, mobile phone apps have also become available [10, 24, 36, 69, 70]. While there is conflicting evidence [24, 36, 51, 70], it appears that substantial bias and error can be introduced when different devices and/or users implement these measuring tools [23]. Thus, practitioners should ensure thorough familiarisation and standardised protocols when using these applications.

4.2 Reliability

A number of studies have investigated the reliability of linear transducers [10, 26, 36, 52] (Table 8). To date, six separate studies have investigated some aspect of the reliability of the T-Force [10, 36, 54, 61, 71, 72]. Specifically, Courel-Ibañez et al. [36] recently demonstrated the inter-device (i.e., two devices of the same manufacturer) reliability of mean, mean propulsive, and peak velocity, and showed extremely low error (e.g., mean velocity CV = 1.0–2.1%) during the Smith machine bench press, squat, and prone row. With respect to intra-device reliability (i.e., the same unit assessed across multiple repetitions), the same study demonstrated slightly greater, but still relatively small, variability (i.e., mean velocity CV = 1.9–3.0%) within the same exercises [36]. Furthermore, the authors presented findings suggesting that the Chronojump LPT exhibited slightly greater inter-device and intra-device error than the T-Force, with mean velocity variability ranging from 3.3 to 4.7% and 3.9 to 5.2%, respectively [36]. It should be acknowledged that the slightly greater intra-device variability may be due to the introduction of biological variation across repetitions (i.e., the inability of a human to perfectly replicate two repetitions with exactly the same physical output). Furthermore, it is reasonable to suggest that these reliability outcomes may be negatively affected when exercises are performed outside the fixed bar path of a Smith machine. During free-weight exercises, the within-device reliability of the GymAware PowerTool has been shown to be of a high standard [48, 52]. During the free-weight back squat, typical errors of 0.03–0.05 m·s⁻¹ across loads of 20–90% of 1RM have been shown. However, this variability may be artificially inflated by the time between testing occasions (i.e., 7 days) and the normal fluctuations in human performance [48]. Future research is still required to assess the inter-device reliability of this device.
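One way to reason about separating these sources of error is to assume that technological and biological errors are independent, so that their variances add. Under that assumption, a technological typical error measured on a mechanical rig (with no human variation) can be removed from the typical error observed across human repetitions. This is a generic variance-partitioning illustration, not the procedure used in any cited study; the function name and values are hypothetical.

```python
import math


def partition_typical_error(observed_te, technological_te):
    """Estimate the biological component of an observed typical error (TE), assuming
    independent error sources whose variances add:
    observed^2 = technological^2 + biological^2."""
    if technological_te > observed_te:
        raise ValueError("technological TE cannot exceed the observed TE")
    return math.sqrt(observed_te ** 2 - technological_te ** 2)


# Hypothetical values (m/s): TE across repeated human lifts vs. TE on a calibrated rig.
biological_te = partition_typical_error(observed_te=0.05, technological_te=0.03)
print(round(biological_te, 3))  # 0.04
```

In this hypothetical case, most of the observed error would be attributable to the lifter rather than to the device.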

While the accuracy of accelerometers during resistance training appears to be questionable, some accelerometer devices may demonstrate better intra-device reproducibility [29] (Table 9). When placed on the bar, the Push 2.0 has demonstrated acceptable reliability of both mean and peak velocity in the bench press at 60% and 90% of 1RM [29]. However, Hughes et al. [52] have shown conflicting reliability for this device during the Smith machine and free-weight squat, bench press, overhead press, and prone row when placed on either the bar or the arm. In contrast, Beckham et al. [28] demonstrated that the Bar Sensei had both poor accuracy and poor reliability for mean and peak velocity during the free-weight barbell back squat. However, these values may have been inflated by the period of time between testing occasions (i.e., 3–7 days) and the potential for biological variation to influence reliability outcomes [22]. Finally, the Beast Sensor has demonstrated extremely large variability (CV = 24–55%) and systematic error at intensities of 45–85% of 1RM in a Smith machine back squat [10]. While a previous study has suggested that it demonstrates satisfactory reliability [69], the statistical approach has recently been questioned because repeated measures from a range of different intensities were pooled, violating the assumption of independence [28]. This may help to explain the contrasting results for this device and the high reliability correlation values previously reported [69].
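The concern about pooling repeated measures can be illustrated with hypothetical data. Within each load below, device and criterion readings are negatively correlated, yet pooling all loads produces a near-perfect correlation simply because velocity differs so much between loads. The data and helper function are illustrative assumptions.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical mean velocities (m/s): three repetitions at each of three loads.
criterion = {"light": [1.00, 1.02, 0.98], "moderate": [0.70, 0.72, 0.68], "heavy": [0.40, 0.42, 0.38]}
device = {"light": [1.02, 0.97, 1.01], "moderate": [0.72, 0.67, 0.71], "heavy": [0.42, 0.37, 0.41]}

within = {load: pearson_r(criterion[load], device[load]) for load in criterion}
pooled = pearson_r(sum(criterion.values(), []), sum(device.values(), []))
print({load: round(r, 2) for load, r in within.items()}, round(pooled, 2))
```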

Recent work by Perez-Castilla et al. [10] compared seven commercially available devices in the Smith machine bench press across a range of loads (i.e., 45–85% of 1RM). Of these, the Speed4Lifts linear position transducer demonstrated the greatest intra-device reliability (CV = 2.39–3.92%). This was closely followed by the Velowin, PowerLift, T-Force, and Chronojump, which all demonstrated similar levels of reliability (CV = ~ 3–6%) [10]. The authors reported that, apart from the Speed4Lifts linear position transducer, all devices demonstrated substantial heteroscedasticity when compared with a high-speed 3D motion-capture system. However, caution is required when interpreting these outcomes, as the influence of between-day biological variation was not separated from the true technological error of the devices. Nevertheless, similar within-device reproducibility (CV = ~ 4–8%) was observed for the Velowin and PowerLift when procedures were completed within a single day [36] (Tables 10, 11). To the authors’ knowledge, the only study to separate these forms of variation when assessing within-device reliability is the recent work by Weakley et al. [22] on the optic laser FLEX device. This study investigated reliability across a prolonged period (i.e., 21 days between testing occasions) with the use of a purpose-built calibrated rig. Mean velocity demonstrated an overall within-device typical error of ~ 4% at velocities ranging from 0.09 to 0.99 m·s⁻¹. Additionally, this study quantified inter-device variance with both technological and biological variation accounted for. The authors concluded that the optic laser FLEX device exhibited acceptable inter-device reliability, suggesting that these devices can be used interchangeably (e.g., within a team environment where multiple barbells are set up). However, additional metrics (e.g., peak velocity) have recently been released by the manufacturer, and future research should be completed to assess these outputs.

While this review has considered a range of commercially available devices for the monitoring of resistance training, there are still several aspects that need further investigation. First, it should be acknowledged that the accuracy of these devices has been tested within a limited number of exercises (e.g., squat, bench press). Furthermore, a number of these studies have been done within a Smith machine which is expected to increase the reliability of the outputs. However, strength and conditioning practitioners often utilise a wide range of exercises and these are often done with free weights [73,74,75,76]. Additionally, some exercises that have greater horizontal displacement (e.g., Olympic weightlifting movements) have had minimal investigation. Therefore, future research is required for the validation of current technology using a wider range of exercises that include weightlifting movements and their derivates. Second, future research must consider the influence of biological variation when assessing the reliability of measurement devices. To date, all but one reliability study [22] have disregarded this consideration during within-device analysis, despite it being widely acknowledged that human performances fluctuate within-session and between-days. Thus, most of the within-device reliability research may mistakenly report reproducibility errors that are unrelated to the device. Finally, it is important to acknowledge the wide range of statistical approaches that have been used within the literature and that erroneous conclusions of validity and reliability may be drawn from an individual statistical value. For example, alone, correlations characterise the relationship between two outcomes, but they are incapable of describing any systematic bias that may exist. This has implications for concluding whether a device is truly accurate or reliable. 
Additionally, the error of individual devices should be interpreted in the context of practical criteria or acceptable levels of disagreement. Thus, when quantifying the validity and reliability of these technologies, researchers are strongly advised to use several analyses that describe the level of agreement and the magnitude of error associated with each device, and to compare these against appropriate criteria.
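The point that a correlation alone cannot reveal systematic bias can be shown with a short Python sketch. The data are hypothetical: a device that reads about 0.06 m/s higher than the criterion on every repetition. The function reports Pearson's r alongside the mean bias and 95% limits of agreement (bias ± 1.96 × SD of the differences, in the style of Bland and Altman), and checks both limits against a practical threshold; the 0.05 m/s threshold is an assumption for illustration, not a criterion proposed in this review.

```python
import numpy as np


def agreement_summary(device, criterion, threshold):
    """Pearson r, mean bias and 95% limits of agreement, plus whether
    both limits fall within +/- threshold."""
    device = np.asarray(device, dtype=float)
    criterion = np.asarray(criterion, dtype=float)
    r = np.corrcoef(device, criterion)[0, 1]
    diff = device - criterion
    bias = diff.mean()
    half_width = 1.96 * diff.std(ddof=1)
    lower, upper = bias - half_width, bias + half_width
    acceptable = bool(max(abs(lower), abs(upper)) <= threshold)
    return r, bias, (lower, upper), acceptable


# Hypothetical data: the device reads ~0.06 m/s high on every repetition.
criterion = np.array([0.30, 0.45, 0.60, 0.75, 0.90, 1.05])  # m/s
noise = np.array([0.005, -0.004, 0.003, -0.002, 0.004, -0.006])
device = criterion + 0.06 + noise

r, bias, (lower, upper), ok = agreement_summary(device, criterion, threshold=0.05)
print(f"r = {r:.3f}, bias = {bias:.3f} m/s, "
      f"95% LoA = [{lower:.3f}, {upper:.3f}] m/s, acceptable: {ok}")
```

With these numbers r exceeds 0.99, yet both limits of agreement lie outside ±0.05 m/s, so the device would fail the (hypothetical) criterion despite its near-perfect correlation.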

5 Conclusions

The current review summarises the reliability and validity of a range of commercially available devices for the monitoring and prescription of resistance training. Generally, linear transducers have shown the greatest accuracy, with mean concentric velocity the most frequently assessed outcome. However, to date, only the GymAware [9, 25, 26, 54], T-Force [54], Open Barbell System [53], Tendo Fitrodyne [53, 54, 58], and Fitrodyne (Fitronic) [9] have been directly compared with a ‘true’ gold-standard criterion (a three-dimensional high-speed motion-capture system) during free-weight resistance training. When these devices have been compared with each other during free-weight exercises [9, 53, 54], the GymAware appears to provide the greatest accuracy. This may be due to the device’s ability to account for horizontal displacement and its variable-rate sampling, which is unique to this device. Additionally, the T-Force demonstrates acceptable accuracy when exercises are performed in a Smith machine.
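Why horizontal bar displacement matters for tether-based devices can be sketched geometrically. The Python example below is a hypothetical illustration, not a description of how the GymAware or any other device works: it assumes a unit fixed on the floor whose cable runs straight to the bar, a bar path with made-up dimensions, and unevenly spaced sample times. Mean concentric velocity is computed as net displacement divided by concentric duration, and peak velocity as the largest finite-difference velocity (NumPy's `np.gradient` accepts uneven sample coordinates). Treating the change in cable length as vertical displacement is compared with using the true vertical position.

```python
import numpy as np


def mean_and_peak_velocity(position, time):
    """Mean concentric velocity (net displacement / duration) and peak
    velocity (largest finite-difference velocity); time may be uneven."""
    position = np.asarray(position, dtype=float)
    time = np.asarray(time, dtype=float)
    mean_v = (position[-1] - position[0]) / (time[-1] - time[0])
    peak_v = np.max(np.gradient(position, time))
    return mean_v, peak_v


# Hypothetical concentric phase: 0.8 s, bar rises 0.55 m while drifting
# 0.12 m horizontally until it finishes directly above the unit.
duration, rise, drift = 0.8, 0.55, 0.12
t = duration * np.linspace(0.0, 1.0, 101) ** 1.5           # uneven sample times (s)
y = 0.45 + rise * (1 - np.cos(np.pi * t / duration)) / 2   # vertical bar position (m)
x = drift * (1 - t / duration)                             # horizontal offset (m)

# Naive approach: treat the change in cable length as vertical displacement.
tether_length = np.hypot(x, y)

mcv_vertical, pv_vertical = mean_and_peak_velocity(y, t)
mcv_tether, pv_tether = mean_and_peak_velocity(tether_length, t)

print(f"MCV vertical: {mcv_vertical:.3f} m/s, from cable length: {mcv_tether:.3f} m/s")
print(f"Peak vertical: {pv_vertical:.3f} m/s, from cable length: {pv_tether:.3f} m/s")
```

In this made-up path the cable-length approach underestimates mean concentric velocity (about 0.668 vs 0.688 m/s); a path drifting away from the unit would instead inflate it, which is one reason exercises with substantial horizontal displacement warrant separate validation.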

Accelerometer devices have shown promise, but their accuracy remains questionable [29, 37, 69]. Additionally, these devices are often validated against linear transducers, which may introduce additional error into the assessment of their accuracy [36, 38, 52, 61, 70]. Of the accelerometer devices, only the Push versions 1.0 and 2.0 [29, 37] and the Beast Sensor [9] have been directly compared with a gold-standard criterion during free-weight exercises. Of these three devices, the Push 2.0 may have the greatest accuracy. However, the mean velocity reported by this device has been questioned [29], which limits its application to resistance training, as this metric is widely recommended for use during non-ballistic exercises [12, 64, 65]. Finally, among devices that are neither linear transducers nor accelerometers, smartphone and tablet apps may offer an alternative for a quick ‘snap-shot’ of training intensity, but substantial inter-device error may exist. Therefore, unless monitoring is done by a single individual with the same device, accurate tracking of performance may be limited [23, 36]. Nevertheless, optical laser devices are a promising alternative that can provide accurate, real-time feedback [22]. While further research is still warranted on additional variables (e.g., peak velocity), these devices offer an additional cost-effective method for monitoring resistance training.
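One mechanism that may contribute to accelerometer error is that velocity is not measured directly but obtained by integrating acceleration, so a small acceleration error accumulates over the repetition. The Python sketch below assumes a smooth, made-up concentric phase sampled at 1000 Hz and a constant 0.1 m/s² offset (e.g., from imperfect removal of gravity); neither value is taken from any device reviewed here. It also applies a simple linear drift correction that assumes the bar is stationary at the start and end of the concentric phase; this correction is illustrative and is not claimed to be what any commercial device does.

```python
import numpy as np


def cumulative_trapezoid(y, t):
    """Running integral of y over t using the trapezoid rule, starting at 0."""
    increments = (y[1:] + y[:-1]) / 2 * np.diff(t)
    return np.concatenate(([0.0], np.cumsum(increments)))


def time_average(y, t):
    """Time-weighted mean of y over the sampled interval."""
    return cumulative_trapezoid(y, t)[-1] / (t[-1] - t[0])


duration, peak_v, accel_offset = 0.8, 1.0, 0.1  # s, m/s, m/s^2 (hypothetical)
t = np.linspace(0.0, duration, 801)             # 1000 Hz sampling (hypothetical)

true_velocity = peak_v * np.sin(np.pi * t / duration)
true_accel = peak_v * np.pi / duration * np.cos(np.pi * t / duration)
measured_accel = true_accel + accel_offset      # constant sensor/orientation error

estimated_velocity = cumulative_trapezoid(measured_accel, t)

# Linear drift removal, assuming zero velocity at both ends of the concentric phase.
corrected_velocity = estimated_velocity - estimated_velocity[-1] * (t - t[0]) / (t[-1] - t[0])

mcv_true = time_average(true_velocity, t)
mcv_estimated = time_average(estimated_velocity, t)
mcv_corrected = time_average(corrected_velocity, t)

print(f"MCV true: {mcv_true:.3f}, integrated: {mcv_estimated:.3f}, "
      f"drift-corrected: {mcv_corrected:.3f} m/s")
```

Under these assumptions the uncorrected estimate overstates mean concentric velocity by roughly 0.04 m/s (offset × duration / 2), while the corrected estimate recovers the true value; real sensor errors need not be constant, so a correction of this kind may only partly remove them.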

References

García-Ramos A, Barboza-González P, Ulloa-Díaz D, Rodriguez-Perea A, Martinez-Garcia D, Guede-Rojas F, et al. Reliability and validity of different methods of estimating the one-repetition maximum during the free-weight prone bench pull exercise. J Sports Sci. 2019;37(19):2205–12. https://doi.org/10.1080/02640414.2019.1626071.

Suchomel TJ, Nimphius S, Bellon CR, Stone MH. The importance of muscular strength: training considerations. Sports Med. 2018;48(4):765–85. https://doi.org/10.1007/s40279-018-0862-z.

Weakley JJS, Till K, Read DB, Roe GAB, Darrall-Jones J, Phibbs PJ, et al. The effects of traditional, superset, and tri-set resistance training structures on perceived intensity and physiological responses. Eur J Appl Physiol. 2017;117(9):1877–89. https://doi.org/10.1007/s00421-017-3680-3.

Scott BR, Duthie GM, Thornton HR, Dascombe BJ. Training monitoring for resistance exercise: theory and applications. Sports Med. 2016;46(5):687–98. https://doi.org/10.1007/s40279-015-0454-0.

McBride JM, McCaulley GO, Cormie P, Nuzzo JL, Cavill MJ, Triplett NT. Comparison of methods to quantify volume during resistance exercise. J Strength Cond Res. 2009;23(1):106–10. https://doi.org/10.1519/jsc.0b013e31818efdfe.

Pareja-Blanco F, Rodríguez-Rosell D, Sánchez-Medina L, Gorostiaga EM, González-Badillo JJ. Effect of movement velocity during resistance training on neuromuscular performance. Int J Sports Med. 2014;35(11):916–24. https://doi.org/10.1055/s-0033-1363985.

Gonzalez-Badillo JJ, Rodriguez-Rosell D, Sanchez-Medina L, Gorostiaga EM, Pareja-Blanco F. Maximal intended velocity training induces greater gains in bench press performance than deliberately slower half-velocity training. Eur J Sport Sci. 2014;14(8):772–81. https://doi.org/10.1080/17461391.2014.905987.

Banyard HG, Nosaka K, Sato K, Haff GG. Validity of various methods for determining velocity, force, and power in the back squat. Int J Sports Physiol Perform. 2017;12(9):1170–6. https://doi.org/10.1123/ijspp.2016-0627.

Mitter B, Holbling D, Bauer P, Stockl M, Baca A, Tschan H. Concurrent validity of field-based diagnostic technology monitoring movement velocity in powerlifting exercises. J Strength Cond Res. 2019. https://doi.org/10.1519/jsc.0000000000003143.

Perez-Castilla A, Piepoli A, Delgado-Garcia G, Garrido-Blanca G, Garcia-Ramos A. Reliability and concurrent validity of seven commercially available devices for the assessment of movement velocity at different intensities during the bench press. J Strength Cond Res. 2019;33(5):1258–65. https://doi.org/10.1519/jsc.0000000000003118.

van den Tillaar R, Ball N. Validity and reliability of kinematics measured with push band vs. linear encoder in bench press and push-ups. Sports. 2019. https://doi.org/10.3390/sports7090207.

García-Ramos A, Janicijevic D, González-Hernández JM, Keogh JWL, Weakley J. Reliability of the velocity achieved during the last repetition of sets to failure and its association with the velocity of the 1-repetition maximum. PeerJ. 2020;8:e8760. https://doi.org/10.7717/peerj.8760.

Weakley J, McLaren S, Ramirez-Lopez C, García-Ramos A, Dalton-Barron N, Banyard H, et al. Application of velocity loss thresholds during free-weight resistance training: responses and reproducibility of perceptual, metabolic, and neuromuscular outcomes. J Sports Sci. 2020;38(5):477–85. https://doi.org/10.1080/02640414.2019.1706831.

Weakley J, Till K, Sampson J, Banyard H, Leduc C, Wilson K, et al. The effects of augmented feedback on sprint, jump, and strength adaptations in rugby union players after a 4-week training program. Int J Sports Physiol Perform. 2019;14(9):1205–11. https://doi.org/10.1123/ijspp.2018-0523.

Weakley JJS, Wilson KM, Till K, Read DB, Darrall-Jones J, Roe GAB, et al. Visual feedback attenuates mean concentric barbell velocity loss and improves motivation, competitiveness, and perceived workload in male adolescent athletes. J Strength Cond Res. 2019;33(9):2420–5. https://doi.org/10.1519/jsc.0000000000002133.

Argus CK, Gill ND, Keogh JW, Hopkins WG. Acute effects of verbal feedback on upper-body performance in elite athletes. J Strength Cond Res. 2011;25(12):3282–7. https://doi.org/10.1519/JSC.0b013e3182133b8c.

Wilson KM, Helton WS, de Joux NR, Head JR, Weakley JJS. Real-time quantitative performance feedback during strength exercise improves motivation, competitiveness, mood, and performance. Proc Hum Factors Ergon Soc Annu Meet. 2017;61(1):1546–50. https://doi.org/10.1177/1541931213601750.

Banyard H, Tufano J, Weakley J, Wu S, Jukic I, Nosaka K. Superior change in jump, sprint, and change of direction performance but not maximal strength following six weeks of velocity-based training compared to 1RM-percent-based training. Int J Sports Physiol Perform. 2020. https://doi.org/10.1123/ijspp.2019-0999.

Dorrell HF, Smith MF, Gee TI. Comparison of velocity-based and traditional percentage-based loading methods on maximal strength and power adaptations. J Strength Cond Res. 2020;34(1):46–53. https://doi.org/10.1519/jsc.0000000000003089.

Shattock K, Tee JC. Autoregulation in resistance training: a comparison of subjective versus objective methods. J Strength Cond Res. 2020. https://doi.org/10.1519/jsc.0000000000003530.

Pareja-Blanco F, Rodriguez-Rosell D, Sanchez-Medina L, Sanchis-Moysi J, Dorado C, Mora-Custodio R, et al. Effects of velocity loss during resistance training on athletic performance, strength gains and muscle adaptations. Scand J Med Sci Sports. 2017;27(7):724–35. https://doi.org/10.1111/sms.12678.

Weakley J, Chalkley D, Johnston R, García-Ramos A, Townshend A, Dorrell H, et al. Criterion validity, and interunit and between-day reliability of the flex for measuring barbell velocity during commonly used resistance training exercises. J Strength Cond Res. 2020;34(6):1519–24. https://doi.org/10.1519/jsc.0000000000003592.

Sánchez-Pay A, Courel-Ibáñez J, Martínez-Cava A, Conesa-Ros E, Morán-Navarro R, Pallarés JG. Is the high-speed camera-based method a plausible option for bar velocity assessment during resistance training? Measurement. 2019;137:355–61. https://doi.org/10.1016/j.measurement.2019.01.006.

Thompson SW, Rogerson D, Dorrell HF, Ruddock A, Barnes A. The reliability and validity of current technologies for measuring barbell velocity in the free-weight back squat and power clean. Sports. 2020;8(7):94. https://doi.org/10.3390/sports8070094.

Askow AT, Stone JD, Arndts DJ, King AC, Goto S, Hannon JP, et al. Validity and reliability of a commercially available velocity and power testing device. Sports. 2018;6(4):170. https://doi.org/10.3390/sports6040170.

Dorrell HF, Moore JM, Smith MF, Gee TI. Validity and reliability of a linear positional transducer across commonly practised resistance training exercises. J Sports Sci. 2019;37(1):67–73. https://doi.org/10.1080/02640414.2018.1482588.

Drinkwater EJ, Galna B, McKenna MJ, Hunt PH, Pyne DB. Validation of an optical encoder during free weight resistance movements and analysis of bench press sticking point power during fatigue. J Strength Cond Res. 2007;21(2):510–7.

Beckham GK, Layne DK, Kim SB, Martin EA, Perez BG, Adams KJ. Reliability and criterion validity of the assess2perform bar sensei. Sports. 2019;7(11):230. https://doi.org/10.3390/sports7110230.

Lake J, Augustus S, Austin K, Comfort P, McMahon J, Mundy P, et al. The reliability and validity of the bar-mounted PUSH Band™ 2.0 during bench press with moderate and heavy loads. J Sports Sci. 2019;37(23):2685–90. https://doi.org/10.1080/02640414.2019.1656703.

Sanchez-Moreno M, Cornejo-Daza PJ, Gonzalez-Badillo JJ, Pareja-Blanco F. Effects of velocity loss during body mass prone-grip pull-up training on strength and endurance performance. J Strength Cond Res. 2020;34(4):911–7. https://doi.org/10.1519/jsc.0000000000003500.

Weakley J, Ramirez-Lopez C, McLaren S, Dalton-Barron N, Weaving D, Jones B, et al. The effects of 10%, 20%, and 30% velocity loss thresholds on kinetic, kinematic, and repetition characteristics during the barbell back squat. Int J Sports Physiol Perform. 2020;15(2):180–8. https://doi.org/10.1123/ijspp.2018-1008.

Suchomel TJ, Comfort P, Lake JP. Enhancing the force-velocity profile of athletes using weightlifting derivatives. Strength Cond J. 2017;39(1):10–20. https://doi.org/10.1519/SSC.0000000000000275.

Hopkins WG. Measures of reliability in sports medicine and science. Sports Med. 2000;30(1):1–15. https://doi.org/10.2165/00007256-200030010-00001.

Banyard HG, Nosaka K, Vernon AD, Haff GG. The reliability of individualized load-velocity profiles. Int J Sports Physiol Perform. 2018;13(6):763–9. https://doi.org/10.1123/ijspp.2017-0610.

Vernon A, Joyce C, Banyard HG. Readiness to train: return to baseline strength and velocity following strength or power training. Int J Sports Sci Coach. 2020;15(2):204–11. https://doi.org/10.1177/1747954119900120.

Courel-Ibanez J, Martinez-Cava A, Moran-Navarro R, Escribano-Penas P, Chavarren-Cabrero J, Gonzalez-Badillo JJ, et al. Reproducibility and repeatability of five different technologies for bar velocity measurement in resistance training. Ann Biomed Eng. 2019;47(7):1523–38. https://doi.org/10.1007/s10439-019-02265-6.

Sato K, Beckham GK, Carroll K, Bazyler C, Sha Z. Validity of wireless device measuring velocity of resistance exercises. J Trainol. 2015;4(1):15–8. https://doi.org/10.17338/trainology.4.1_15.

Muyor JM, Granero-Gil P, Pino-Ortega J. Reliability and validity of a new accelerometer (Wimu®) system for measuring velocity during resistance exercises. Proc Inst Mech Eng Part P J Sports Eng Technol. 2018;232(3):218–24. https://doi.org/10.1177/1754337117731700.

García-Ramos A, Ulloa-Díaz D, Barboza-González P, Rodríguez-Perea Á, Martínez-García D, Quidel-Catrilelbún M, et al. Assessment of the load-velocity profile in the free-weight prone bench pull exercise through different velocity variables and regression models. PLoS ONE. 2019;14(2):e0212085. https://doi.org/10.1371/journal.pone.0212085.

Wilson KM, de Joux NR, Head JR, Helton WS, Dang JS, Weakley JJS. Presenting objective visual performance feedback over multiple sets of resistance exercise improves motivation, competitiveness, and performance. Proc Hum Factors Ergon Soc Annu Meet. 2018;62(1):1306–10. https://doi.org/10.1177/1541931218621299.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLOS Med. 2009;6(7):e1000097. https://doi.org/10.1371/journal.pmed.1000097.

Rohatgi A. WebPlotDigitizer. Version 4.2. 2019.

Downs SH, Black N. The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Community Health. 1998;52(6):377–84. https://doi.org/10.1136/jech.52.6.377.

Johnston RD, Black GM, Harrison PW, Murray NB, Austin DJ. Applied sport science of Australian football: a systematic review. Sports Med. 2018;48(7):1673–94. https://doi.org/10.1007/s40279-018-0919-z.

Batterham AM, Hopkins WG. Making meaningful inferences about magnitudes. Int J Sports Physiol Perform. 2006;1(1):50–7. https://doi.org/10.1123/ijspp.1.1.50.

Weakley J, Fernández-Valdés B, Thomas L, Ramirez-Lopez C, Jones B. Criterion validity of force and power outputs for a commonly used flywheel resistance training device and bluetooth app. J Strength Cond Res. 2019;33(5):1180–4. https://doi.org/10.1519/jsc.0000000000003132.

Orange ST, Metcalfe JW, Liefeith A, Marshall P, Madden LA, Fewster CR, et al. Validity and reliability of a wearable inertial sensor to measure velocity and power in the back squat and bench press. J Strength Cond Res. 2019;33(9):2398–408. https://doi.org/10.1519/jsc.0000000000002574.

Orange ST, Metcalfe JW, Marshall P, Vince RV, Madden LA, Liefeith A. Test-retest reliability of a commercial linear position transducer (Gymaware powertool) to measure velocity and power in the back squat and bench press. J Strength Cond Res. 2020;34(3):728–37. https://doi.org/10.1519/jsc.0000000000002715.

Comstock BA, Solomon-Hill G, Flanagan SD, Earp JE, Luk HY, Dobbins KA, et al. Validity of the Myotest® in measuring force and power production in the squat and bench press. J Strength Cond Res. 2011;25(8):2293–7. https://doi.org/10.1519/JSC.0b013e318200b78c.

Crewther BT, Kilduff LP, Cunningham DJ, Cook C, Owen N, Yang GZ. Validating two systems for estimating force and power. Int J Sports Med. 2011;32(4):254–8. https://doi.org/10.1055/s-0030-1270487.

Martínez-Cava A, Hernández-Belmonte A, Courel-Ibáñez J, Morán-Navarro R, González-Badillo JJ, Pallarés JG. Reliability of technologies to measure the barbell velocity: implications for monitoring resistance training. PLoS ONE. 2020;15(6):e0232465. https://doi.org/10.1371/journal.pone.0232465.

Hughes LJ, Peiffer JJ, Scott BR. Reliability and validity of using the push band v2.0 to measure repetition velocity in free-weight and smith machine exercises. J Strength Cond Res. 2019. https://doi.org/10.1519/jsc.0000000000003436.

Goldsmith JA, Trepeck C, Halle JL, Mendez KM, Klemp A, Cooke DM, et al. Validity of the Open Barbell and Tendo weightlifting analyzer systems versus the Optotrak Certus 3D motion-capture system for barbell velocity. Int J Sports Physiol Perform. 2019;14(4):540–3. https://doi.org/10.1123/ijspp.2018-0684.

Lorenzetti S, Lamparter T, Luthy F. Validity and reliability of simple measurement device to assess the velocity of the barbell during squats. BMC Res Notes. 2017;10(1):707. https://doi.org/10.1186/s13104-017-3012-z.

Fernandes JFT, Lamb KL, Clark CCT, Moran J, Drury B, Garcia-Ramos A, et al. Comparison of the FitroDyne and GymAware Rotary Encoders for quantifying peak and mean velocity during traditional multijointed exercises. J Strength Cond Res. 2018. https://doi.org/10.1519/jsc.0000000000002952.

Garnacho-Castaño MV, López-Lastra S, Maté-Muñoz JL. Reliability and validity assessment of a linear position transducer. J Sports Sci Med. 2015;14(1):128–36.

Gonzalez AM, Mangine GT, Spitz RW, Ghigiarelli JJ, Sell KM. Agreement between the Open Barbell and Tendo linear position transducers for monitoring barbell velocity during resistance exercise. Sports. 2019;7(5):125. https://doi.org/10.3390/sports7050125.

McGrath G, Flanagan E, O’Donovan P, Collins D, Kenny I. Velocity based training: validity of monitoring devices to assess mean concentric velocity in the bench press exercise. J Aust Strength Cond. 2018;26(1):23–30.

Abbott JC, Wagle JP, Sato K, Painter K, Light TJ, Stone MH. Validation of inertial sensor to measure barbell kinematics across a spectrum of loading conditions. Sports. 2020;8(7):93. https://doi.org/10.3390/sports8070093.

Chéry C, Ruf L. Reliability of the load-velocity relationship and validity of the PUSH to measure velocity in the deadlift. J Strength Cond Res. 2019;33(9):2370–80. https://doi.org/10.1519/jsc.0000000000002663.

García-Pinillos F, Latorre-Román PA, Valdivieso-Ruano F, Balsalobre-Fernández C, Párraga-Montilla JA. Validity and reliability of the Wimu® system to measure barbell velocity during the half-squat exercise. Proc Inst Mech Eng Part P J Sports Eng Technol. 2019;233(3):408–15. https://doi.org/10.1177/1754337119831890.

Balsalobre-Fernández C, Kuzdub M, Poveda-Ortiz P, Campo-Vecino J. Validity and reliability of the push wearable device to measure movement velocity during the back squat exercise. J Strength Cond Res. 2016;30(7):1968–74. https://doi.org/10.1519/jsc.0000000000001284.

Garcia-Mateo P. Measurement of a squat movement velocity: comparison between a RehaGait accelerometer and the high-speed video recording method called MyLift. J Phys Ed Sport. 2020;20(3):1343–53. https://doi.org/10.7752/jpes.2020.03186.

Mann JB, Ivey PA, Sayers SP. Velocity-based training in football. Strength Cond J. 2015;37(6):52–7. https://doi.org/10.1519/SSC.0000000000000177.

Weakley J, Mann J, Banyard HG, McLaren S, Scott T, García-Ramos A. Velocity-based training: from theory to application. Strength Cond J. 2020. https://doi.org/10.1519/SSC.0000000000000560.

Pino-Ortega J, Bastida-Castillo A, Hernández-Belmonte A, Gomez-Carmona CD. Validity of an inertial device for measuring linear and angular velocity in a leg extension exercise. Proc Inst Mech Eng Part P J Sports Eng Technol. 2020;234(1):30–6. https://doi.org/10.1177/1754337119878296.

Weakley J, Wilson K, Till K, Banyard H, Dyson J, Phibbs P, et al. Show me, tell me, encourage me: the effect of different forms of feedback on resistance training performance. J Strength Cond Res. 2020;34(11):3157–63. https://doi.org/10.1519/jsc.0000000000002887.

Laza-Cagigas R, Goss-Sampson M, Larumbe-Zabala E, Termkolli L, Naclerio F. Validity and reliability of a novel opto-electronic device to measure movement velocity, force and power during the back squat exercise. J Sports Sci. 2019;37(7):795–802. https://doi.org/10.1080/02640414.2018.1527673.

Balsalobre-Fernández C, Marchante D, Baz-Valle E, Alonso-Molero I, Jiménez SL, Muñoz-López M. Analysis of wearable and smartphone-based technologies for the measurement of barbell velocity in different resistance training exercises. Front Physiol. 2017;8:649. https://doi.org/10.3389/fphys.2017.00649.

Balsalobre-Fernandez C, Marchante D, Munoz-Lopez M, Jimenez SL. Validity and reliability of a novel iPhone app for the measurement of barbell velocity and 1RM on the bench-press exercise. J Sports Sci. 2018;36(1):64–70. https://doi.org/10.1080/02640414.2017.1280610.

García-Ramos A, Pérez-Castilla A, Martín F. Reliability and concurrent validity of the Velowin opto-electronic system to measure movement velocity during the free-weight back squat. Int J Sports Sci Coach. 2018;13(5):737–42. https://doi.org/10.1177/1747954118791525.

Peña García-Orea G, Belando-Pedreño N, Merino-Barrero JA, Jiménez-Ruiz A, Heredia-Elvar JR. Validation of an opto-electronic instrument for the measurement of weighted countermovement jump execution velocity. Sports Biomech. 2018. https://doi.org/10.1080/14763141.2018.1526316.

Weakley J, Till K, Read DB, Leduc C, Roe GAB, Phibbs PJ, et al. Jump training in rugby union players: barbell or hexagonal bar? J Strength Cond Res. 2018. https://doi.org/10.1519/jsc.0000000000002742.

Weakley J, Till K, Read DB, Phibbs PJ, Roe G, Darrall-Jones J, et al. The effects of superset configuration on kinetic, kinematic, and perceived exertion in the barbell bench press. J Strength Cond Res. 2020;34(1):65–72. https://doi.org/10.1519/jsc.0000000000002179.

Jones B, Weaving D, Tee J, Darrall-Jones J, Weakley J, Phibbs P, et al. Bigger, stronger, faster, fitter: the differences in physical qualities of school and academy rugby union players. J Sports Sci. 2018;36(21):2399–404. https://doi.org/10.1080/02640414.2018.1458589.

Appleby BB, Cormack SJ, Newton RU. Reliability of squat kinetics in well-trained rugby players: implications for monitoring training. J Strength Cond Res. 2019;33(10):2635–40. https://doi.org/10.1519/jsc.0000000000003289.

Boehringer S, Whyte DG. Validity and test–retest reliability of the 1080 quantum system for bench press exercise. J Strength Cond Res. 2019;33(12):3242–51. https://doi.org/10.1519/jsc.0000000000003184.

Stock MS, Beck TW, DeFreitas JM, Dillon MA. Test–retest reliability of barbell velocity during the free-weight bench-press exercise. J Strength Cond Res. 2011;25(1):171–7. https://doi.org/10.1519/JSC.0b013e318201bdf9.

Jennings CL, Viljoen W, Durandt J, Lambert MI. The reliability of the FitroDyne as a measure of muscle power. J Strength Cond Res. 2005;19(4):859–63. https://doi.org/10.1519/r-15984.1.

de Sá EC, Ricarte Medeiros A, Santana Ferreira A, García Ramos A, Janicijevic D, Boullosa D. Validity of the iLOAD® app for resistance training monitoring. PeerJ. 2019;7:e7372. https://doi.org/10.7717/peerj.7372.

Sañudo B, Rueda D, Pozo-Cruz BD, de Hoyo M, Carrasco L. Validation of a video analysis software package for quantifying movement velocity in resistance exercises. J Strength Cond Res. 2016;30(10):2934–41. https://doi.org/10.1519/JSC.0000000000000563.

Peña García-Orea G, Belando-Pedreño N, Merino-Barrero JA, Heredia-Elvar JR. Validation of an opto-electronic instrument for the measurement of execution velocity in squat exercise. Sports Biomech. 2019. https://doi.org/10.1080/14763141.2019.1597156.

Ethics declarations

Funding

No funding was received by any of the authors for the writing of this manuscript. Open-access publication of this article was made possible by the UK Read and Publish (Springer Compact) agreement.

Conflict of interest

Jonathon Weakley, Matthew Morrison, Amador García-Ramos, Rich Johnston, Lachlan James, and Michael Cole declare that they have no conflicts of interest relevant to the content of this review.

Availability of data and materials

All data and materials reported in this systematic review are from peer-reviewed publications. All of the extracted data are included in the manuscript and supplementary files.

Authors' contributions

JW, MC, and MM conceptualized the review and criteria. JW, MM, and RJ completed the screening and data extraction. AGR, LJ, and MM created the tables. JW, MC, AGR, LJ, and RJ completed the writing of the manuscript. All authors reviewed and refined the manuscript.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Weakley, J., Morrison, M., García-Ramos, A. et al. The Validity and Reliability of Commercially Available Resistance Training Monitoring Devices: A Systematic Review. Sports Med 51, 443–502 (2021). https://doi.org/10.1007/s40279-020-01382-w

DOI: https://doi.org/10.1007/s40279-020-01382-w