Abstract

As the broader field of individual differences in second/foreign language learning has grown tremendously over the past few decades, its subfields have expanded with a similar intensity. Language learning strategies (LLS) is one such area, with notable developments in the scope and methodology of its research. While there have been a number of reviews of the field’s output, few have targeted research in a specific context. With this in mind, the current study offers a situated view of LLS research in Taiwan. It focuses on three core components that are essential to empirical research: (a) contexts and participant characteristics; (b) theoretical-conceptual aspects; and (c) methodological characteristics. Drawing on journal articles systematically collected from major databases and reviews conducted by multiple researchers to ensure reliability and to minimize bias, we provide an overview of the field as it has manifested in Taiwan. Findings from select studies are also discussed. In doing so, this article makes connections between LLS research in Taiwan and the larger, global context, with implications for "the road ahead." We hope it will be a valuable resource for anyone interested in reading about and/or conducting LLS research in this setting and others.

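Abstract (Chinese version)

Over the past few decades, research on individual differences among second/foreign language learners has visibly flourished, and its subfields have developed rapidly alongside it. Language learning strategies (LLS) is one such subfield, and the scope and methods of its research in particular have advanced considerably. However, although earlier work has produced many reviews and studies on the topic, only a few have targeted a specific context. In view of this, we take LLS research in Taiwan as our starting point and focus on three core elements without which empirical research would be difficult to carry out: (a) contexts and participant characteristics; (b) theoretical-conceptual aspects; and (c) methodological characteristics. Using journal articles systematically collected from major databases and reviewed by multiple researchers to ensure reliability and to minimize bias, we survey how the field has manifested in Taiwan and discuss the findings of selected studies. Through this article, LLS research in Taiwan can be connected to the global context and can inform future research. We hope this study offers a valuable reference and useful suggestions for readers interested in reading about or conducting LLS research.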

Language Learning Strategies as Individual Differences

The study of individual differences has been the purview of a number of great thinkers long before it became a formal area of scholarship [20]. In providing a broad, historical account, Revelle et al. [51] described individual differences as “the study of affect, behavior, cognition, and motivation as they are affected by biological causes and environmental events. That is, it includes all of psychology” (p. 3)—and all of the dissimilarities in how we think and feel, what we want and need, what we do, and how and why people differ.

Research on individual differences in language learning and use has proliferated in major domains such as reading [1], writing [33], and at the intersection of speaking and listening development [26]. Within these domains, the strategies that learners use to develop their language proficiency have continued to be a prominent component of individual differences research, especially in the field of second/foreign language (L2) learning [see 17–19, 25].

Language Learning Strategies: Early Work

Rubin [54] defined strategies as “the techniques or devices which a learner may use to acquire knowledge” (p. 43). Her early work marked the beginning of the field of language learning strategies (LLS). She proposed a list of strategies that “good” language learners used. The aim was to identify and then teach those strategies to less successful learners. This initial list prompted further refinement [see 44, 55] and spurred early taxonomies that accounted for a range of cognitive, metacognitive, and social/affective strategies [45]. However, it was Oxford’s [46] categorization of strategies as direct (those contributing directly to learning, i.e., memory, cognitive, and compensation strategies) and indirect (those contributing indirectly to learning, i.e., metacognitive, affective, and social strategies) that formed the basis of her Strategy Inventory for Language Learning (SILL). Oxford’s SILL made researching LLS simple, perhaps too simple, as her questionnaire could be administered to learners quickly and easily, with little time spent on preparation or analysis. It became the most widely used instrument for data collection in the field. Furthermore, Oxford’s [46] definition of LLS became one of the most widely cited: “specific actions taken by the learner to make learning easier, faster, more enjoyable, more self-directed, more effective, and more transferable to new situations” (p. 8). Alongside the work of Cohen [13] and Wenden [65], this early work contributed greatly to taking LLS research from a niche area to a mainstream endeavor within applied linguistics.

As time went on, LLS researchers continued to advance the field and engage in critical discussions over its trajectory. However, in the LLS chapter in his volume on individual differences in L2 learning, Dörnyei [17] argued that due to unresolved issues regarding definitions, categorizations, and underlying theory, LLS research should be replaced by research on self-regulation and self-regulatory capacity [see also 19]. He claimed that the shift to self-regulation does not solve the theoretical dilemma but affords researchers leeway when discussing learning processes. In one seminal example, Tseng et al. [63] demonstrated how a shift in research focus from strategies to underlying processes could be realized in the development of their Self-Regulating Capacity in Vocabulary Learning scale (SRCvoc). The items on this scale tap into the general trends and inclinations of learners rather than their specific strategies.

Unwilling to leave strategies behind, Macaro [38] offered his own theoretical framework that drew on existing theory from cognitive psychology while still remaining independent of a view of strategic learning subsumed by self-regulation. Some LLS researchers embraced a complementary view of strategies and self-regulation [see 22, 24], while others sidestepped critical issues by focusing on equally complementary concepts such as metacognition [e.g., 70]. Further still, a small number of researchers embraced a sociocultural view of strategic learning that emphasized the mediation of others and artefacts [e.g., 23]. However, perhaps most notably, Oxford [47], a name synonymous with the explosion of LLS research in the late 1980s and 1990s, adopted self-regulation as a central feature of her Strategic Self-Regulation (S2R) model of language learning.

Recent Research

In a systematic review of LLS research from 2010 to 2016, Rose et al. [52] identified three broad types of studies, based on their theoretical and methodological orientations:

1. Strategy research that embraced self-regulation theory as central to the research framework;
2. Strategy research that utilized traditional LLS constructs, while acknowledging contributions from self-regulation;
3. Strategy research that moved the field into novel territory, via means of developing new instruments, exploring new structures, or examining relationships between strategic learning and other theories.

[Adapted from 52, p. 155]

Methodologically, the authors found that quantitative research still dominated the LLS landscape (often using Oxford’s [46] SILL), despite numerous, earlier calls for more qualitative approaches [see 21,63].

Since Rose et al.’s [52] review, researchers have continued to publish studies that largely fit within the three types above. Examples include (a) Tseng et al.’s [64] scale validation study, which focused on L2 learners’ self-regulatory capacity; (b) Amerstorfer’s [2] repurposing of the SILL as a tool for mixed methods research; and (c) Teng and Zhang’s [59] continued work developing and evaluating new models of self-regulated learning strategies. Of note methodologically, Cohen and Wang [15] developed micro-level methods to trace the functions of LLS and the fluctuations of those functions when LLS are in use. Meanwhile, researchers such as Hajar [27] and Hu and Gao [31] have leveraged other in-depth, qualitative approaches that have proven fruitful in exploring nuances in self-/co-/other-regulated strategic behavior, an orientation supported by arguments in other recent work [4, 60–62].

Overall, however, the field is still very much dominated by rudimentary questionnaire research that is often situated in Oxford’s [46] categorization of strategies, even though that categorization has endured its fair share of criticism over the years and Oxford herself has moved on to more innovative theoretical-conceptual work [see 48]. Nevertheless, the field is active, pedagogically promising, and continues to benefit from “well-intentioned scrutiny” [71, p. 91]. Thus, it remains strategically poised for further theoretical and methodological advancement.

Research Questions and Rationale

With the above developments in mind, the following review seeks to understand three core components of empirical research as they pertain to LLS research in Taiwan: context and participant characteristics, theoretical-conceptual aspects, and methodological characteristics. As there have been a number of general overviews of the field worldwide [e.g., 25, 27, 61], upon receiving the invitation to write an LLS article for this special issue, we wanted to home in on English Teaching & Learning’s position as the first and highest ranked academic journal in Taiwan dedicated to research on the teaching and learning of L2 English.

Moreover, Taiwan is an interesting context because it is at an intersection of influences in terms of traditional Confucian education and a relatively recent push for increased internationalization through English education. In 2002, Chern [8] noted that English language education is “a whole-nation movement” towards multilingualism for its citizens (p. 105). This sentiment was later echoed by other researchers [e.g., 7, 37], signaling to us that a focus on LLS in this context would be a worthwhile endeavor.

We use the following research questions to guide our discussion:

1. What research contexts and participant characteristics have been investigated?
2. What conceptual definitions and theoretical frameworks have been operationalized?
3. What are the methodological characteristics of the research that has been conducted?

Methodology

Following the methodology of Rose et al. [52] and the synthetic reporting style of similar reviews [see 3, 30, 42], we carried out a systematic review of what we saw as the core components of LLS research in Taiwan (see previous section).

We consider our review systematic because we used: (a) transparent, systematic procedures from the initial literature search to final conclusions; (b) exhaustive coverage of content as it pertained to our research questions; and (c) multiple reviewers to ensure reliability of the analysis and to minimize bias. However, we did not attempt to synthesize research findings or critique the quality of individual studies beyond the systematic mapping process (see below). Consequently, to some academics, this review may be considered “semi-systematic” [as per 52], despite our adherence to strict reviewing protocols from start to finish. Nevertheless, our aims align with Snyder’s [57] description of semi-systematic reviews as attempts to synthesize research within a selected field using meta-narratives, whereby the “analysis can be useful for detecting themes, theoretical perspectives, or common issues” and “a potential contribution could be, for example, the ability to map a field of research, synthesize the state of knowledge, and create an agenda for further research” [57, p. 335].

Regarding systematic reviews in applied linguistics, Macaro [39] recommends building a team of relevant stakeholders—researchers and practitioners—to enable a collection of different perspectives on the given topic. Five members of our team have extensive experience conducting research and teaching in East/Southeast Asia (generalists in research and practice in this region). Four members have published strategy-related research (content specialists). One member completed a PhD in Taiwan and spent the majority of his professional career working there (context specialist). Another member is multilingual—academically proficient in English and Chinese—which enabled us to search for LLS research published in both languages (local language specialist). And one member was added to provide an objective, outsider (non-strategy researcher) perspective on research methods and measures (methods specialist).

Searching and Selecting

In order to build our collection of studies (i.e., article pool), we searched the following major databases in August 2020: Web of Science Core Collection, Scopus, British Education Index, ERIC, Linguistics Abstracts Online, Linguistics and Language Behavior Abstracts, and MLA International Bibliography. To ensure we did not miss important work published in national or regional journals, we also searched Airiti Library, which includes major indexes in Taiwan and mainland China. We searched for journal articles that included ["language learn* strateg*" AND "Taiwan*"] in the title, abstract, and/or keywords. We also searched for articles that included ["reading" OR "writing" OR "listening" OR "speaking" OR "grammar" OR "vocabulary" AND "strateg*"]—in the title—[AND "Taiwan*"] in the abstract and/or keywords. We then translated the search strings into Chinese and used them for another round of searching in Airiti Library. This produced a list of 237 articles. Then, we manually removed 119 articles because they were either duplicates that had not been automatically removed or did not fit the following inclusion criteria:

- empirical research studies
- published in an academic journal
- related to L2 learning
- situated in Taiwan and/or including Taiwanese learners in the sample

This left us with 118 unique studies. During the downloading stage, we removed 19 additional studies because they were either unavailable or written in a language other than English or Chinese (a noted limitation). Before removing a study whose full text was unavailable, we searched online using Google Scholar and ResearchGate and emailed the corresponding author (when an email address was available). We also added one article [63] that our searches did not retrieve: because the paper was framed as more than a study of Taiwanese learners’ LLS, it was not identified in our initial keyword searches (it is considered a seminal study, so we were familiar with its existence). The end result was an article pool of 100 studies, which we organized chronologically and stored in a shared folder (the full list of articles can be found in Supplementary Material).
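The selection flow described above can be checked with simple arithmetic. The following is a minimal sketch that uses only the counts reported in the text; the variable names are illustrative and are not taken from the review itself.

```python
# Counts reported in the text; variable names are illustrative assumptions.
retrieved = 237          # articles returned by all database searches
removed_screening = 119  # duplicates or articles failing the inclusion criteria
removed_download = 19    # unavailable, or not written in English or Chinese
added_manually = 1       # seminal study [63] missed by the keyword searches

after_screening = retrieved - removed_screening
after_download = after_screening - removed_download
article_pool = after_download + added_manually

print(after_screening, after_download, article_pool)
```

The intermediate value of 99 is the number of studies that came through the database searches, before the one manual addition brought the pool to 100.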

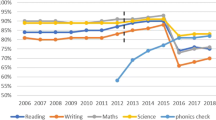

Figure 1 shows the breakdown of studies in two-year clusters in order “to produce robust time cells that reflect temporal trajectories in a more reliable manner than that of a single-year categorization” [3, p. 148]. Although 27 unique articles were identified via Chinese indexes, all of the full texts were written in English.
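As a rough illustration of the two-year clustering, the sketch below groups publication years into two-year cells and counts the studies in each. The publication years and the `start` year that aligns the cells are hypothetical; the text does not state where the first cluster begins.

```python
from collections import Counter


def two_year_cluster(year, start=1995):
    """Label the two-year cell containing `year`, counting cells from `start`.

    `start` is an assumed alignment, not a value reported in the review.
    """
    first = start + 2 * ((year - start) // 2)
    return f"{first}-{first + 1}"


# Hypothetical publication years, for illustration only.
years = [2003, 2004, 2004, 2007, 2008, 2008, 2019]
counts = Counter(two_year_cluster(y) for y in years)

for cell in sorted(counts):
    print(cell, counts[cell])
```

Pooling adjacent years in this way smooths out single-year spikes, which is the rationale given in the quotation from [3].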

Data Collection and Analysis

Each researcher was allotted a set of articles to review with overlaps in each set to ensure all 100 articles would be reviewed by at least two members of the team. Replicating the procedures of Rose et al. [52], all 100 articles were reviewed using an extraction grid purpose-built for our research questions. The extraction grid included space for: (a) bibliographic and author information; (b) a definition of LLS (if any) provided by the author(s); (c) theoretical framework (if any); (d) strategy focus of the study; (e) context and participant information; (f) topic under investigation; (g) research methods; (h) measures; and (i) reviewers’ comments regarding key findings, study quality/trustworthiness, and a recommendation as to whether the study should be discussed in our findings section. Recommendations were accompanied by a brief rationale. Positive recommendations generally highlighted contextual novelty or some form of methodological rigor or innovation. Disagreements among reviewers were rare and were discussed until consensus was reached.
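One way to picture the extraction grid and the overlapping allocation is sketched below. The field names paraphrase items (a) to (i); the reviewer labels and the pair-rotation scheme are assumptions made for illustration, since the text does not describe how the sets were actually drawn up.

```python
from dataclasses import dataclass
from itertools import combinations, cycle
from typing import Optional


@dataclass
class ExtractionGrid:
    """One reviewer's record for one article; fields follow items (a) to (i)."""
    bibliographic_info: str                # (a) bibliographic and author information
    lls_definition: Optional[str]          # (b) None when no definition is given
    theoretical_framework: Optional[str]   # (c) None when no framework is stated
    strategy_focus: str                    # (d)
    context_and_participants: str          # (e)
    topic: str                             # (f)
    research_methods: str                  # (g)
    measures: str                          # (h)
    reviewer_comments: str                 # (i) key findings, quality/trustworthiness
    recommend_for_discussion: bool         # (i) recommendation
    rationale: str                         # (i) brief rationale for the recommendation


def assign_reviewers(article_ids, reviewers, per_article=2):
    """Rotate through all reviewer pairs so every article gets distinct readers.

    This rotation is a hypothetical scheme, not the procedure used in the review.
    """
    pairs = cycle(combinations(reviewers, per_article))
    return {article: next(pairs) for article in article_ids}


reviewers = ["R1", "R2", "R3", "R4"]  # hypothetical reviewer labels
allocation = assign_reviewers(range(1, 101), reviewers)
print(allocation[1], allocation[2])
```

Rotating through reviewer pairs guarantees the overlap the procedure calls for, because no article is ever read by a single reviewer.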

Data from all extraction grids were aggregated and organized into a single systematic map. A condensed version of the systematic map can be found in Supplementary Material. Some specific aspects of the coding process will be discussed as the relevant topics are introduced. Due to space restrictions, only a small number of reviewed studies are cited below. We encourage interested readers to review the supplementary material for further information.

Findings and Discussion

Context and Participant Characteristics

Of the 100 studies included in our systematic map (see Supplementary Material), the vast majority were conducted at the tertiary level (k = 79), with LLS research at the secondary (k = 18) and primary (k = 3) levels lagging behind (two studies were coded as other; see below). Figure 2 highlights this discrepancy. However, this is not surprising, since it is consistent with other reviews in applied linguistics. For example, in Mahmoodi and Yousefi’s [42] recent synthesis of articles on L2 motivation, the authors identified a similar trend: 66 empirical studies at the tertiary level, 27 at the secondary level, and just one at the primary level. This is likely due to more relaxed ethical considerations when researching adults versus children, and the ease of accessibility for graduate students and staff working in universities who conduct the lion’s share of academic research (see a similar discussion in [3]).

A small number of studies (k = 11) used terms such as college, junior college, technical college, and technical university. However, it was often unclear as to the differences between these contexts when compared to the majority of studies that used the term university. Some authors used terms such as college students to describe participants and university to describe the institution. Therefore, we coded all post-secondary education as tertiary in our final systematic map. This was also the case for studies that used terms such as elementary, high school, junior high school, and senior high school. In those instances, we coded broadly, using the terms primary and secondary.
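The broad coding rule described above amounts to a lookup from the authors' own terms to three levels. The sketch below is one possible rendering; the substring matching and the fallback to "other" are assumptions, since the coding in the review was carried out by human reviewers rather than by a script.

```python
# Terms named in the text, mapped to the three broad codes.
LEVEL_CODES = {
    "elementary": "primary",
    "junior high school": "secondary",
    "senior high school": "secondary",
    "high school": "secondary",
    "junior college": "tertiary",
    "technical college": "tertiary",
    "technical university": "tertiary",
    "college": "tertiary",
    "university": "tertiary",
}


def code_level(description):
    """Return the broad level for a free-text context description."""
    text = description.lower()
    # Check longer terms first so that more specific labels are matched
    # before the shorter terms they contain.
    for term in sorted(LEVEL_CODES, key=len, reverse=True):
        if term in text:
            return LEVEL_CODES[term]
    return "other"  # assumed fallback for contexts outside the three levels


print(code_level("college students enrolled at a technical university"))
```

Under this rule, a study that describes its participants as college students and its institution as a university receives a single tertiary code, which mirrors the decision described above.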

Two studies did not fit easily into our three main groupings, so we created an other category. The first article in this category [49], which is also the earliest in our article pool, included factor analyses from various contexts around the world in order to demonstrate the validity and reliability of Oxford’s [46] SILL. Although technically not an original empirical study, data from five unpublished studies at the Taiwanese tertiary level were discussed. Thus, it seemed remiss to exclude the article, as it shed light on early LLS research in Taiwan. Su and Weng’s [58] article was also included in this other category. It focused on older adults (N = 212) attending English-language classes at five institutions dedicated to teaching learners no younger than 55 years of age (up to 80 years of age in the study). To us, this qualified as a unique research context worth separating from other studies at the tertiary level.

At the youngest end of the spectrum, we were surprised to see only three studies conducted at the primary level, all with learners in grades five and/or six. Moreover, all three studies were conducted with relatively large groups of participants (ranging from N = 212 to N = 932). For instance, Lan and Oxford [35] used an adapted version of the SILL to determine the broad profile of self-reported LLS use for sixth grade students (N = 379). The authors then compared the findings to studies in other contexts and in relation to variables such as gender, proficiency level, and the students’ affinity for English. While interesting—and consistent with early LLS research more broadly—these types of studies tell us very little about the nuances of students’ strategy use (discussed in more detail below). Lan and Oxford stated that the questionnaires also featured open-ended items and that interviews were conducted with a small (sub)sample of students. However, the findings from these additional data collection methods were not reported, and we were unable to identify a follow-up article.

By coding the studies into one of six sample-size categories, we see a common trend for similarly designed research with medium-to-large sample sizes, as illustrated in Fig. 3. Three studies included a sample of five or fewer participants. Other samples ranged from 6–20 participants (k = 4), 21–50 participants (k = 15), 51–100 participants (k = 17), 101–250 participants (k = 32), 251–500 participants (k = 16), and 501+ participants (k = 12). Within this final category, five studies included a sample of more than 1,000 participants.
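The sample-size coding can be sketched as a simple binning function, with the reported counts tallied alongside it. The bin boundaries and counts come from the paragraph above; the labels and the function name are illustrative.

```python
# (label, lower bound, upper bound); None marks an open-ended top bin.
SIZE_BINS = [
    ("1-5", 1, 5),
    ("6-20", 6, 20),
    ("21-50", 21, 50),
    ("51-100", 51, 100),
    ("101-250", 101, 250),
    ("251-500", 251, 500),
    ("501+", 501, None),
]

# Number of studies (k) reported for each range.
REPORTED_K = {
    "1-5": 3, "6-20": 4, "21-50": 15, "51-100": 17,
    "101-250": 32, "251-500": 16, "501+": 12,
}


def size_bin(n):
    """Return the label of the range that contains sample size `n`."""
    for label, low, high in SIZE_BINS:
        if n >= low and (high is None or n <= high):
            return label
    raise ValueError("sample size must be at least 1")


print(size_bin(379), sum(REPORTED_K.values()))
```

The reported counts sum to 99, which agrees with the 99 primary studies referred to in the discussion of methodological characteristics.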

Unsurprisingly, most studies were carried out in Taiwan. Nevertheless, there were two studies conducted in Taiwan and other macro contexts (Hong Kong [67]; the Philippines [41]), and one study of Taiwanese participants in a macro context outside Taiwan (North America [50]). In most studies, the context was made clear in the title, abstract, and/or methods section, as one would expect. However, two studies did not reveal this information until the conclusion, and in five studies, it had to be inferred; those articles included vague descriptors such as Taiwanese college students or Taiwanese English learners, without specifying the institution where learners were enrolled.

The importance of a well-defined context is further highlighted by Magno et al.’s [41] article on Taiwanese university students (N = 146) in Taiwan (n = 66) and in the Philippines (n = 80). The authors found that the participants in the Philippines—an English as a second language (ESL) context—had more exposure to English in everyday life and experienced an increased need for English proficiency. Therefore, they used more LLS than the cohort in Taiwan. Magno et al. argued that taken together, these factors predicted increased oral proficiency for the Taiwanese ESL learners in the Philippines when compared to the home-based English as a foreign language (EFL) group.

Such findings highlight the importance of differentiating between ESL (learning English in contexts where English is recognized as an official language) and EFL (learning English in contexts where English is not recognized as an official language). Taiwan is an EFL context, which means there are likely constraints on access to English in everyday life and to proficient interlocutors. However, this was rarely mentioned in the articles we surveyed. There was also little exploration of individual differences in terms of exposure to English outside the immediate context of the study. For instance, a number of studies used demographic questionnaires but only elicited basic information like gender and age. Overall, due to broad definitions of research contexts and participant demographics, the samples in nearly all of the studies were portrayed as homogeneous.

In one of the outlier studies that did not rely solely on Taiwanese learners, Yang [67] compared Taiwanese university students to Hong Kong university students, each in their home contexts. He found that the Taiwanese students used more indirect LLS, while the Hong Kong students used more direct LLS (see Oxford’s [46] categorization above). There was also more intra-group variation regarding common variables such as gender, academic discipline, and English proficiency level among the Taiwanese group than the Hong Kong group. In the other outlier study—and the only study in the sample to research LLS for a language other than English—Chu et al. [12] explored the relationships between ambiguity tolerance, LLS, and Chinese as a second language learners’ L2 proficiency. The participants (N = 60) were international students from fifteen different countries.

Theoretical-Conceptual Aspects

Before researchers can begin an empirical study, it is important that they define the key constructs to be examined. Researchers’ definitions of key constructs can provide insights into their theoretical and conceptual orientations, although this does not always occur. For example, Rubin’s [54] definition of strategies at the start of this article has to be read alongside the rest of Rubin’s publication to ascertain additional clues that inform readers of how she conceptualized LLS as “cognitive processes that seem to be going on in good language learners” (p. 48, emphasis added).

Theoretically, cognitive perspectives are considered mainstream approaches to conceptualizing LLS [e.g., 38,45], while socially oriented perspectives are generally seen as alternative approaches [see 23, 27, 31]. Both perspectives influence the nature of the research to be conducted and its implications. Therefore, it is important for authors to think carefully about the definitions they subscribe to and to state them explicitly. This is especially true in the field of LLS, since definitions have long been a topic of debate [see 48,60,61].

In our article pool, only 58 studies included an explicit definition of LLS and/or a definition for the specific types of strategies the researcher(s) investigated. Even so, this number is generous; there were some studies that included multiple, sometimes conflicting definitions, and yet the author(s) did not disclose which definition they intended to operationalize. The most popular definition cited was Oxford’s [46] (see above) or a variation of it (k = 24). Other popular definitions include those from Cohen [13, 14] and O’Malley and Chamot [45]. These findings are in line with a recent study of the field worldwide, which found a similar percentage of articles that included conceptual definitions and identified the same publications as influential in providing often-cited definitions [61].

In terms of theory, only 37 studies made explicit reference to a theoretical framework. As with our coding of definitions, this number is generous. Some studies described various theories but were unclear in terms of how they were integrated into the current study. On the whole, most theoretical frameworks were inherently cognitive, especially with regard to strategy-based instruction, with a handful of articles reporting through sociocultural lenses. The extent to which authors engaged with theory varied, and it did not appear to play a major role in most of the research conducted. Therefore, we have not provided a detailed treatment of theory here.

Methodological Characteristics

Although terms such as approach, design, and method(s) are used differently among researchers, following McKinley [43], we define approach as a “generic term given to the manner in which a researcher engages with a study as a whole … a macro-perspective of research methodology” (p. 3). The approach typically informs the research design as well as the methods for data collection and analysis. In reality, however, complex designs may necessitate blurring the lines between what would otherwise be a conventionalized, cascading framework (approach → design → method[s]).

De Vaus [16], like McKinley [43], described the research design as the structure, dictating the logical flow of the study. He recommended describing the design separately from the research methods, since “there is nothing intrinsic about any research design that requires a particular method of data collection” (p. 9). Thus, we coded approaches as quantitative, qualitative, or mixed methods [as per 40], designs as experimental, longitudinal, cross-sectional, and case study [as per 16], and methods—both for data collection and analysis—using an open-coding system [as per 52].

Unfortunately, not all studies were explicit about important methodological aspects. In this regard, we support the authors of other systematic reviews in creating codes (e.g., “Unclear”) for research designs and/or methods in studies that include descriptions with “inadequate detail about the overall approach or study setup” [30, p. 15]. Nevertheless, we used information reported throughout the text to make inferences, reporting in as much detail as could be ascertained from the original articles. We realize that there may be some disagreements regarding our coding decisions and those of other researchers and the studies’ original authors. We do not feel as though those potential disagreements qualitatively affect the review or our interpretations as a whole. If anything, they signal a need for more explicit reporting practices. Figure 4 shows a breakdown of the research approaches and designs. Table 1 provides a breakdown of the data collection methods.

Out of the 99 primary studies in our sample, the majority (k = 67) were exclusively quantitative, yet there were also seven qualitative studies and 25 that took a mixed methods approach. Studies coded as mixed methods involved the “combination of qualitative and quantitative approaches at the design, data collection, or data analysis level” [53, p. 262]. Most commonly, this materialized as sequential (QUAN → QUAL) and, to a much lesser extent, concurrent (QUAN + QUAL) combinations, typified by questionnaires and semi-structured interviews. Sometimes, it was unclear as to how the approaches worked together to afford triangulation.

Though possessing different limitations, Kung’s [34] study, which explored the effects of reading strategy instruction on reading comprehension and learning experience, is an example of a study that used a typical mixed methods approach (with pre/post-tests, questionnaires, and interviews) and clearly demonstrated triangulation. The findings and discussion are organized in subsections, one for each research question, with both quantitative and qualitative findings presented together as support for the author’s claims.

Regarding research designs, over half of the studies (k = 68) were cross-sectional. Fifty-two of those studies reported on the use of at least one questionnaire as a method of data collection. Some studies relied on multiple questionnaires [e.g., 32] or a single questionnaire that combined items from various existing questionnaires [e.g., 11]. In our analyses, we observed that although a questionnaire of some sort was used in approximately 90% of the papers in our sample, basic, questionnaire-based cross-sectional designs, such as those which dominated early LLS research worldwide, lessened dramatically in recent years in Taiwan (although they were still an issue in less reputable journals). As we will discuss below, employing multiple measures and more advanced methods of data analysis played a part in this positive evolution.

Experimental (including quasi-experimental) designs (k = 27) were the second most common type in the article pool. The (quasi-)experimental studies used either a quantitative (k = 17) or mixed methods (k = 10) approach. The majority of these studies investigated the effects of strategy-based instruction interventions. For example, Yeldham [68] compared two EFL classes at a Taiwanese university. One class received listening strategy instruction (strategy group), while the other received strategy instruction and bottom-up skill-based instruction (interactive group). Repeated-measures ANOVA showed significant improvement for the strategy group, F(1, 32) = 10.05, p = 0.003, with a medium effect size (d = 0.587), but not for the interactive group (among other pedagogically relevant findings).
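For readers who wish to see how the reported p value relates to the F statistic, the sketch below recomputes it from F(1, 32) = 10.05. It relies on the standard identity that an F statistic with one numerator degree of freedom equals the square of a t statistic with the same error degrees of freedom, and it integrates the t density numerically with Simpson's rule. The function names are illustrative, and the calculation is a consistency check rather than a reanalysis of the original data.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with `df` degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def p_value_f_1_df(f_stat, df_error, steps=2000):
    """Two-tailed p for F(1, df_error), using F = t**2 and Simpson's rule.

    `steps` must be even for Simpson's rule.
    """
    t = math.sqrt(f_stat)
    h = t / steps
    total = t_pdf(0.0, df_error) + t_pdf(t, df_error)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df_error)
    area = total * h / 3      # P(0 <= T <= t)
    return 1.0 - 2.0 * area   # probability mass in both tails


p = p_value_f_1_df(10.05, 32)
print(round(p, 3))
```

The recomputed value rounds to the reported p = 0.003, so the statistic, the degrees of freedom, and the p value are mutually consistent.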

With a focus on reading instead of listening, Shih and Reynolds [56] conducted a quasi-experimental study investigating the effectiveness of goal setting integrated with reading strategy instruction on increasing reading proficiency for secondary school students. This study took place over a 36-week academic year, and thus, as with other studies we coded as experimental, included a longitudinal component. However, the focus was on pre/post-intervention outcomes, as opposed to tracking students over time with repeated instances of data collection (see below). We used this distinction to differentiate between experimental and longitudinal designs so as to avoid placing studies in both categories based on what aspects researchers emphasized. This was not always easy, especially when study procedures were not reported clearly. On the whole, we found it promising that at least 25% of the studies involved some kind of intervention, exploring the efficacy of different approaches to strategy instruction, and thus illustrating that teachers still have an important role to play in developing successful strategy users [60–62].

Based on the explanation above, there were just three longitudinal studies in the article pool. Yet, each study featured elements similar to those coded as experimental. For example, Chen [5] implemented strategy-based instruction over 14 weeks in an English listening course; however, “rather than examining a cause-effect-relationship, this study focused in particular on exploring learners’ listening strategy development over the course of SI [(strategy instruction) via reflective journals]” (p. 54). Yeldham and Gruba [69] made a similar distinction, focusing on the idiosyncratic development of four Taiwanese EFL learners at a local university. The researchers used a battery of data collection methods, including vocabulary, listening, and cognitive style tests; verbal reports, semi-structured interviews, and questionnaires; and observations, informal interviews, and artefact inspections in what they described as “longitudinal multi-case studies” [69, p. 12]. Their article bridged the gap between experimental, longitudinal, and case study designs, while also drawing on what is the most diverse array of data collection methods of any study in the article pool.

Aside from the fact that Yeldham and Gruba’s [69] study could have also been coded as a case study design, whereby each individual represented a unique case, there was only one study coded as such. As Rose et al. [53] note, “in social science research, cases are primarily people, but in applied linguistics, a case can also be positioned as a class, a curriculum, [or] an institution” (p. 7), inter alia. In our article pool, Huang [29] established her case boundaries as the contexts in which her student-participants (N = 12) from two college EFL classes situated their learning. She used student interviews, teacher interviews, classroom observations, and document analyses to describe how strategic learning for her participants was influenced by changing contextual factors and students’ needs. Although not fleshed out to the extent we would expect to see from such a case, Huang’s study, as with others mentioned above, is a step in the right direction in its use of varied data collection methods within an ethnographically informed design. In essence, such studies can account for the holistic experience of strategic learning in specific contexts.

Unsurprisingly, the most commonly used data analysis technique was descriptive statistics (k = 84). Typically, this involved examining means and standard deviations of students’ LLS use collected via questionnaires. It also included frequency counts of strategies and/or tallied codes in qualitative or mixed methods studies. Boo et al.’s [3] differentiation between studies using (a) standard inferential statistics and (b) structural equation modelling (SEM) as a more complex statistical approach is helpful here [see also 42]. As seen in Table 2, the vast majority of tests used standard inferential statistics, while there were only six studies that employed SEM (including all SEM-related tests, e.g., CFI [comparative fit index], TLI [Tucker-Lewis index], etc.).

Moreover, Table 2 shows that most quantitative data analysis involved the use of parametric tests, namely t-tests (k = 48), analysis of variance (k = 34) (all types, including one- and two-way ANOVA, MANOVA, etc.), and Pearson’s correlation coefficient (k = 20). Unfortunately, most researchers did not report on checking for assumptions to justify the use of parametric tests. Therefore, little can be said about the robustness of the findings.
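As an illustration of the kind of assumption screening that could be reported before a t-test or ANOVA, the sketch below computes two informal descriptive checks using only the Python standard library: the skewness of each group (a rough indicator of departure from normality) and the ratio of the largest to the smallest group variance (a rough indicator of heterogeneity of variance). The function names and data are hypothetical, and these are descriptive heuristics rather than formal tests; procedures such as the Shapiro-Wilk and Levene tests, available in most statistical packages, would normally be reported instead or in addition.

```python
from statistics import mean, pstdev, variance


def skewness(scores):
    """Population skewness: the mean cubed z-score (0 for symmetric data)."""
    m, s = mean(scores), pstdev(scores)
    return sum(((x - m) / s) ** 3 for x in scores) / len(scores)


def variance_ratio(*groups):
    """Largest sample variance divided by the smallest across groups."""
    variances = [variance(g) for g in groups]
    return max(variances) / min(variances)


# Hypothetical questionnaire scores for two groups (not real data).
group_a = [2, 4, 4, 4, 5, 5, 7, 9]
group_b = [1, 2, 3, 4, 5, 6, 7, 8]

skew_a = skewness(group_a)
skew_b = skewness(group_b)
ratio = variance_ratio(group_a, group_b)

print(f"skewness A = {skew_a:.3f}, skewness B = {skew_b:.3f}")
print(f"variance ratio = {ratio:.3f}")
```

Values of skewness far from zero or a variance ratio well above 1 would suggest looking more closely at the data, for example with a formal test or a non-parametric alternative. Any cut-off used for that decision is a judgment call that should itself be reported.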

Similarly, regarding the various types of content/thematic analyses that were used, more detailed reporting is needed in most of the studies we reviewed. In many instances, for example, it was impossible to tell what the researchers actually did during the coding process. When unsure, we labeled those analysis techniques generically as content analysis (including its quantitative and qualitative forms).

Overall, standard statistical procedures such as t-tests have been used to a large extent in our article pool, though it is promising to see that interest in more complex statistical procedures has emerged in recent years. For instance, following the first paper using SEM in 2014, we see an increase in the development of sophisticated models relating LLS (along with other factors) to writing performance [66], writing motivation [36], speaking assessment [28], reading performance [9], and self-regulation [64]. Moreover, there has been a recent increase in the number of papers that report on the results of regression analyses examining predictive relationships between variables. This research has explored LLS alongside predictors of students’ self-rated writing ability [32], factors influencing English speaking anxiety [10], the effects of reading strategies on reading comprehension [6], and various factors predicting self-efficacy in test preparation [11], among others.

Conclusions and Limitations

This paper aimed to explore three core components of LLS research in Taiwan. While the review highlighted that much early research in this context continued the tradition of an “over-dependence on survey tools” [21, p. 4] and perpetuated inconsistencies in core components such as those highlighted by Dörnyei [17] (namely, definitions, theory, and methodology), there are movements toward more rigorous, nuanced work. This trend is positive, though we have several recommendations for future research in this context and in others more broadly.

First, we see a need for smaller, more in-depth studies that provide detailed information about the participants’ backgrounds and contextual factors. Since LLS use has been shown to be idiographic [see 69] and influenced by sociocultural factors [see 23, 27, 31], studies would benefit from discussing these aspects in relation to their participants and contexts. In a similar vein, the primary and secondary levels are woefully underrepresented in studies as a whole, with insufficient attention paid to age effects, institutional practices, and early learner development. While we understand the convenience of sampling older students in many instances, we share the belief of Boo et al. [3] that it is perhaps more fruitful to investigate language learning at these younger age ranges, especially when dealing with formal education settings. Doing so will enable researchers to capture the foundations of L2 development and LLS use. Interestingly, no studies discussed grammar learning strategies, even though grammar is a major part of primary/secondary education in Taiwan.

Second, research would benefit from defining key constructs, adhering to these definitions, and linking those definitions to theoretical-conceptual frameworks. Given the broad scope of our review, we are hesitant to delve into the issue of underpowered theoretical frameworks. Nevertheless, we feel confident in stating that the practical nature of the studies we reviewed was clearly prioritized over theoretical engagement. Having pragmatic aims is important for applied research but need not result in an absence of theory. This message is not limited to Taiwan/LLS, however, as research in applied linguistics is severely undertheorized, especially when compared to psychology, for example [see 43]. We hope that studies continue to offer pedagogically relevant findings but engage more with an explicit theoretical framework.

Third, despite description and speculation being abundant in early studies, recent quantitative work has tried to explain, empirically, what variables affect each other, in what ways, and to what extent. While this is a step in the right direction, we see potential for further innovative research designs, where mixed methods research goes beyond a relatively superficial mixing of quantitative and qualitative approaches. Specifically, there is room to go beyond general, trait-based questionnaires and integrate more longitudinal, ethnographically informed designs, which can make better use of triangulation. Moreover, in conjunction with the increase in more sophisticated statistical testing measures, we would like to see the same rigor applied to qualitative measures, such as the application of grounded theory, process tracing, or increased observation of LLS use in situ. Most importantly, regardless of the design, all studies need clearly articulated reporting practices for both data collection and analysis.

While we believe this review will be beneficial to LLS researchers in Taiwan—especially our systematic map (see Supplementary Material)—there are limitations. In mapping the totality of published LLS research in this context, we left ourselves little room to expand in detail on exemplary studies. Within the article pool, there were studies that truly impressed us with their methodological rigor and novel findings, although only a few were given explicit attention here. Furthermore, despite our best efforts in casting a wide net, we cannot claim that we have been fully comprehensive in identifying all relevant studies. To this end, we encourage researchers to use our systematic map as a starting point for their own reviews. Finally, given the synthetic scope of our review, we did not eliminate studies based on their quality/weight of evidence, as in other, more focused systematic reviews, which typically report on a smaller sample of studies. We hope that after identifying studies via our systematic map, other researchers will be able to make those decisions if necessary.

Notes

We use “LLS” to refer to both singular and plural constructions (i.e., language learning strategy and language learning strategies).

Two studies took place at both the secondary and tertiary level, which is why the total is 102.

We have not included Oxford and Burry-Stock (1995) in further analyses because we were unable to review the original studies they discussed.

References

Afflerbach, P. (Ed.). (2016). Handbook of individual differences in reading: Reader, text, and context. Routledge. https://doi.org/10.4324/9780203075562

Amerstorfer, C. M. (2018). Past its expiry date? The SILL in modern mixed-methods strategy research. Studies in Second Language Learning and Teaching, 8(2), 497–523. https://doi.org/10.14746/ssllt.2018.8.2.14

Boo, Z., Dörnyei, Z., & Ryan, S. (2015). L2 motivation research 2005–2014: Understanding a publication surge and a changing landscape. System, 55, 145–157. https://doi.org/10.1016/j.system.2015.10.006

Bowen, N. E. J. A., Thomas, N., & Vandermeulen, N. (in press). Exploring feedback and regulation in writing classes with keystroke logging. Computers and Composition.

Chen, A. H. (2009). Listening strategy instruction: Exploring Taiwanese college students’ strategy development. Asian EFL Journal, 11(2), 54–85.

Chen, P. H. (2019). The individual and joint effects of bottom-up and top-down reading strategy use on Taiwanese EFL learners’ English reading comprehension. Languages and International Studies, 21, 123–157. https://doi.org/10.3966/181147172019060021006

Chen, S., & Tsai, Y. (2012). Research on English teaching and learning: Taiwan (2004–2009). Language Teaching, 45(2), 180–201. https://doi.org/10.1017/S0261444811000577

Chern, C.-L. (2002). English language teaching in Taiwan today. Asia Pacific Journal of Education, 22(2), 97–105. https://doi.org/10.1080/0218879020220209

Chou, M. H. (2017). Modelling the relationship among prior English level, self-efficacy, critical thinking, and strategies in reading performance. Journal of Asia TEFL, 14(3), 380–397. https://doi.org/10.18823/asiatefl.2017.14.3.1.380

Chou, M. H. (2018). Speaking anxiety and strategy use for learning English as a foreign language in full and partial English-medium instruction contexts. TESOL Quarterly, 52(3), 611–633. https://doi.org/10.1002/tesq.455

Chou, M. H. (2019). Predicting self-efficacy in test preparation: Gender, value, anxiety, test performance, and strategies. The Journal of Educational Research, 112(1), 61–71. https://doi.org/10.1080/00220671.2018.1437530

Chu, W. H., Lin, D. Y., Chen, T. Y., Tsai, P. S., & Wang, C. H. (2015). The relationships between ambiguity tolerance, learning strategies, and learning Chinese as a second language. System, 49, 1–16. https://doi.org/10.1016/j.system.2014.10.015

Cohen, A. D. (1990). Language learning: Insights for learners, teachers, and researchers. Newbury House/Harper Collins.

Cohen, A. D. (1998). Strategies in learning and using a second language (1st ed.). Longman.

Cohen, A. D., & Wang, I. K. H. (2018). Fluctuation in the functions of language learner strategies. System, 74, 169–182. https://doi.org/10.1016/j.system.2018.03.011

De Vaus, D. (2001). Research design in social research. Sage. https://doi.org/10.4135/9781446263495

Dörnyei, Z. (2005). The psychology of the language learner: Individual differences in second language acquisition. Lawrence Erlbaum Associates.

Dörnyei, Z., & Ryan, S. (2015). The psychology of the language learner revisited. Routledge. https://doi.org/10.4324/9781315779553

Dörnyei, Z., & Skehan, P. (2003). Individual differences in second language learning. In C. J. Doughty & M. H. Long (Eds.), The handbook of second language acquisition (pp. 589–630). Blackwell. https://doi.org/10.1002/9780470756492.ch18

Forsythe, A. (2019). Key thinkers in individual differences: Ideas on personality and intelligence. Routledge. https://doi.org/10.4324/9781351026505

Gao, X. (2004). A critical review of questionnaire use in learner strategy research. Prospect: An Australian Journal of TESOL, 19(3), 3–14.

Gao, X. (2007). Has language learning strategy research come to an end? A response to Tseng et al. (2006). Applied Linguistics, 28, 615–620. https://doi.org/10.1093/applin/amm034

Gao, X. (2010). Strategic language learning: The roles of agency and context. Multilingual Matters. https://doi.org/10.21832/9781847692450

Grenfell, M., & Macaro, E. (2007). Language learner strategies: Claims and critiques. In A. D. Cohen & E. Macaro (Eds.), Language learner strategies: 30 years of research and practice (pp. 9–28). Oxford University Press.

Griffiths, C., & Soruç, A. (2020). Individual differences in language learning: A complex systems theory perspective. Palgrave Macmillan. https://doi.org/10.1007/978-3-030-52900-0

Gurzynski-Weiss, L. (Ed.). (2020). Cross-theoretical explorations of interlocutors and their individual differences. John Benjamins. https://doi.org/10.1075/lllt.53

Hajar, A. (2019). International students’ challenges, strategies and future vision: A socio-dynamic perspective. Multilingual Matters. https://doi.org/10.21832/9781788922241

Huang, H. T. D. (2016). Exploring strategy use in L2 speaking assessment. System, 63, 13–27. https://doi.org/10.1016/j.system.2016.08.009

Huang, S. C. (2018). Language learning strategies in context. The Language Learning Journal, 46(5), 647–659. https://doi.org/10.1080/09571736.2016.1186723

Hiver, P., Al-Hoorie, A. H., Vitta, J. P., & Wu, J. (2021). Engagement in language learning: A systematic review of 20 years of research methods and definitions. Language Teaching Research. https://doi.org/10.1177/13621688211001289

Hu, J., & Gao, X. (2020). Appropriation of resources by bilingual students for self-regulated learning of science. International Journal of Bilingual Education and Bilingualism, 23(5), 567–583. https://doi.org/10.1080/13670050.2017.1386615

Kao, C. W., & Reynolds, B. L. (2017). A study on the relationship among Taiwanese college students’ EFL writing strategy use, writing ability and writing difficulty. English Teaching & Learning, 41(4), 31–67. https://doi.org/10.6330/ETL.2017.41.4.02

Kormos, J. (2012). The role of individual differences in L2 writing. Journal of Second Language Writing, 21, 390–403. https://doi.org/10.1016/j.jslw.2012.09.003

Kung, F. W. (2019). Teaching second language reading comprehension: The effects of classroom materials and reading strategy use. Innovation in Language Learning and Teaching, 13(1), 93–104. https://doi.org/10.1080/17501229.2017.1364252

Lan, R., & Oxford, R. L. (2003). Language learning strategy profiles of elementary school students in Taiwan. International Review of Applied Linguistics in Language Teaching, 41(4), 339–379. https://doi.org/10.1515/iral.2003.016

Lin, M. C., Cheng, Y. S., Lin, S. H., & Hsieh, P. J. (2015). The role of research-article writing motivation and self-regulatory strategies in explaining research-article abstract writing ability. Perceptual and Motor Skills, 120(2), 397–415. https://doi.org/10.2466/50.PMS.120v17x9

Lin, W.-C., & Byram, M. (2016). New approaches to English language and education in Taiwan—Cultural and intercultural perspectives. Tung Hua Book Company.

Macaro, E. (2006). Strategies for language learning and for language use: Revising the theoretical framework. The Modern Language Journal, 90(3), 320–337. https://doi.org/10.1111/j.1540-4781.2006.00425.x

Macaro, E. (2020). Systematic review in applied linguistics. In J. McKinley & H. Rose (Eds.), The Routledge handbook of research methods in applied linguistics (pp. 230–239). Routledge. https://doi.org/10.4324/9780367824471-20

Macaro, E., Curle, S., Pun, J., An, J., & Dearden, J. (2018). A systematic review of English medium instruction in higher education. Language Teaching, 51(1), 36–76. https://doi.org/10.1017/S0261444817000350

Magno, C., de Carvalho Filho, M. K., & Lajom, J. A. L. (2011). Factors involved in the use of language learning strategies and oral proficiency among Taiwanese students in Taiwan and in the Philippines. The Asia-Pacific Education Researcher, 20(3), 489–502.

Mahmoodi, M. H., & Yousefi, M. (2021). Second language motivation research 2010–2019: A synthetic exploration. The Language Learning Journal (Online First). https://doi.org/10.1080/09571736.2020.1869809

McKinley, J. (2020). Introduction: Theorizing research methods in the ‘golden age’ of applied linguistics research. In J. McKinley & H. Rose (Eds.), The Routledge handbook of research methods in applied linguistics (pp. 1–12). Routledge. https://doi.org/10.4324/9780367824471-1

Naiman, N., Frohlich, M., Stern, H., & Todesco, A. (1978). The good language learner. The Ontario Institute for Studies in Education.

O’Malley, J. M., & Chamot, A. U. (1990). Learning strategies in second language acquisition. Cambridge University Press. https://doi.org/10.1017/CBO9781139524490

Oxford, R. L. (1990). Language learning strategies: What every teacher should know. Newbury House.

Oxford, R. L. (2011). Teaching and researching language learning strategies. Longman.

Oxford, R. L. (2017). Teaching and researching language learning strategies: Self-regulation in context (2nd ed.). Routledge.

Oxford, R. L., & Burry-Stock, J. A. (1995). Assessing the use of language learning strategies worldwide with the ESL/EFL version of the Strategy Inventory for Language Learning (SILL). System, 23(1), 1–23. https://doi.org/10.1016/0346-251X(94)00047-A

Poole, A. (2011). The online reading strategies used by five successful Taiwanese ESL Learners. Asian Journal of English Language Teaching, 21, 65–87.

Revelle, W., Wilt, J., & Condon, D. M. (2011). Individual differences and differential psychology: A brief history and prospect. In T. Chamorro-Premuzic, S. von Stumm, & A. Furnham (Eds.), The Wiley-Blackwell handbook of individual differences (pp. 3–38). Wiley Blackwell. https://doi.org/10.1002/9781444343120.ch1

Rose, H., Briggs, J. G., Boggs, J. A., Sergio, L., & Ivanova-Slavianskaia, N. (2018). A systematic review of language learner strategy research in the face of self-regulation. System, 72, 151–163. https://doi.org/10.1016/j.system.2017.12.002

Rose, H., McKinley, J., & Briggs Baffoe-Djan, J. (2020). Data collection research methods in applied linguistics. Bloomsbury.

Rubin, J. (1975). What the ‘good language learner’ can teach us. TESOL Quarterly, 9, 41–51. https://doi.org/10.2307/3586011

Rubin, J. (1981). Study of cognitive processes in second language learning. Applied Linguistics, 2(2), 117–131. https://doi.org/10.1093/applin/II.2.117

Shih, Y. C., & Reynolds, B. L. (2018). The effects of integrating goal setting and reading strategy instruction on English reading proficiency and learning motivation: A quasi-experimental study. Applied Linguistics Review, 9(1), 35–62. https://doi.org/10.1515/applirev-2016-1022

Snyder, H. (2019). Literature review as a research methodology: An overview and guidelines. Journal of Business Research, 104, 333–339. https://doi.org/10.1016/j.jbusres.2019.07.039

Su, M. M. H., & Weng, K. J. K. (2014). English learning strategy use by older adult language learners at Evergreen Learning Institutes in Northern Taiwan. Journal of Applied English, 7, 133–146.

Teng, L. S., & Zhang, L. J. (2018). Effects of motivational regulation strategies on writing performance: A mediation model of self-regulated learning of writing in English as a second/foreign language. Metacognition and Learning, 13(2), 213–240. https://doi.org/10.1007/s11409-017-9171-4

Thomas, N., & Rose, H. (2019). Do language learning strategies need to be self-directed? Disentangling strategies from self-regulated learning. TESOL Quarterly, 53(1), 248–257. https://doi.org/10.1002/tesq.473

Thomas, N., Bowen, N. E. J. A., & Rose, H. (2021). A diachronic analysis of explicit definitions and implicit conceptualizations of language learning strategies. System, 103, 102619, 1–13. https://doi.org/10.1016/j.system.2021.102619

Thomas, N., Rose, H., & Pojanapunya, P. (2021). Conceptual issues in strategy research: Examining the roles of teachers and students in formal education settings. Applied Linguistics Review, 12(2), 353–369. https://doi.org/10.1515/applirev-2019-0033

Tseng, W.-T., Dörnyei, Z., & Schmitt, N. (2006). A new approach to assessing strategic learning: The case of self-regulation in vocabulary acquisition. Applied Linguistics, 27(1), 78–102. https://doi.org/10.1093/applin/ami046

Tseng, W.-T., Liu, H., & Nix, J.-M. L. (2017). Self-regulation in language learning: Scale validation and gender effects. Perceptual and Motor Skills, 124(2), 531–548. https://doi.org/10.1177/0031512516684293

Wenden, A. (1991). Learner strategies for learner autonomy. Prentice Hall.

Yang, H. C. (2014). Toward a model of strategies and summary writing performance. Language Assessment Quarterly, 11(4), 403–431. https://doi.org/10.1080/15434303.2014.957381

Yang, W. (2018). The deployment of English learning strategies in the CLIL approach: A comparison study of Taiwan and Hong Kong tertiary level contexts. ESP Today: Journal of English for Specific Purposes at Tertiary Level, 6(1), 44–64. https://doi.org/10.18485/esptoday.2018.6.1.3

Yeldham, M. (2016). Second language listening instruction: Comparing a strategies-based approach with an interactive, strategies/bottom-up skills approach. TESOL Quarterly, 50(2), 394–420. https://doi.org/10.1002/tesq.233

Yeldham, M., & Gruba, P. (2016). The development of individual learners in an L2 listening strategies course. Language Teaching Research, 20(1), 9–34. https://doi.org/10.1177/1362168814541723

Zhang, L. J. (2010). A dynamic metacognitive systems account of Chinese university students’ knowledge about EFL reading. TESOL Quarterly, 44(2), 320–353. https://doi.org/10.5054/tq.2010.223352

Zhang, L. J., Thomas, N., & Qin, T. L. (2019). Language learning strategy research in System: Looking back and looking forward. System, 84, 87–92. https://doi.org/10.1016/j.system.2019.06.002

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Thomas, N., Bowen, N.E.J.A., Reynolds, B.L. et al. A Systematic Review of the Core Components of Language Learning Strategy Research in Taiwan. English Teaching & Learning 45, 355–374 (2021). https://doi.org/10.1007/s42321-021-00095-1

DOI: https://doi.org/10.1007/s42321-021-00095-1