Abstract

The open science movement has developed out of growing concerns over the scientific standard of published academic research and a perception that science is in crisis (the “replication crisis”). Bullying research sits within this scientific family and without taking a full part in discussions risks falling behind. Open science practices can inform and support a range of research goals while increasing the transparency and trustworthiness of the research process. In this paper, we aim to explain the relevance of open science for bullying research and discuss some of the questionable research practices which challenge the replicability and integrity of research. We also consider how open science practices can be of benefit to research on school bullying. In doing so, we discuss how open science practices, such as pre-registration, can benefit a range of methodologies including quantitative and qualitative research and studies employing a participatory research methods approach. To support researchers in adopting more open practices, we also highlight a range of relevant resources and set out a series of recommendations to the bullying research community.


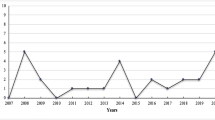

Bullying in school is a common experience for many children and adolescents. Such experiences relate to a range of adverse outcomes, including poor mental health, poorer academic achievement, and anti-social behaviour (Gini et al., 2018; Nakamoto & Schwartz, 2010; Valdebenito et al., 2017). Bullying research has increased substantially over the past 60 years, with over 5000 articles published between 2010 and 2016 alone (Volk et al., 2017). Much of this research focuses on the prevalence and antecedents of bullying, correlates of bullying, and the development and evaluation of anti-bullying interventions (Volk et al., 2017). The outcomes of this work for children and young people can therefore be life changing, and researchers should strive to ensure that their work is trustworthy, reliable, and accessible to a wide range of stakeholders both inside and outside of academia.

In recent years, the replication crisis has led to growing concern regarding the standard of research practices in the social sciences (Munafò et al., 2017). To address this, open science practices, such as openly sharing publications and data, conducting replication studies, and the pre-registration of research protocols, have provided the opportunity to increase the transparency and trustworthiness of the research process. In this paper, we aim to discuss the replication crisis and highlight the risks that questionable research practices pose for bullying research. We also aim to summarise open science practices and outline how these can benefit the broad spectrum of bullying research as well as researchers themselves. Specifically, we aim to highlight how such practices can benefit both quantitative and qualitative research and studies employing a participatory research methods approach.

The Replication Crisis

In 2015, the Open Science Collaboration (Open Science Collaboration, 2015) conducted a large-scale replication of 100 published studies from three journals. The results questioned the replicability of research findings in psychology. In the original 100 studies, 97 reported a significant effect compared to only 35 of the replications. Furthermore, the effect sizes reported in the original studies were typically much larger than those found in the replications. The findings of the Open Science Collaboration received significant academic and mainstream media attention, much of which concluded that psychological research is in crisis (Wiggins & Christopherson, 2019). While these findings are based on the analysis of psychological research, challenges in replicating research findings have been reported in a range of disciplines including sociology (Freese & Peterson, 2017) and education studies (Makel & Plucker, 2014). Shrout and Rodgers (2018) suggest that the notion that science is in crisis is further supported by (1) the number of serious cases of academic misconduct such as that of Diederik Stapel (Nelson et al., 2018) and (2) the prevalence of questionable research practices and misuse of inferential statistics and hypothesis testing (see Ioannidis, 2005). The replication crisis has called into question the degree to which research across the social sciences accurately describes the world that we live in or whether this literature is overwhelmingly populated by misleading claims based on weak and error-strewn findings.

The trustworthiness of research reflects the quality of the method, rigour of the design, and the extent to which results are reliable and valid (Cook et al., 2018). Research on school bullying has grown exponentially in recent years (Smith & Berkkun, 2020) and typically focuses on understanding the nature, prevalence, and consequences of bullying to inform prevention and intervention efforts. If our research is not trustworthy, this can impede theory development and call into question the reliability of our research and meta-analytic findings (Friese & Frankenbach, 2020). Ultimately, if our research findings are untrustworthy, this undermines our efforts to prevent bullying and help and support young people. Bullying research exists within a broader academic research culture, which facilitates and incentivises the ways that research is undertaken and shared. As such, the issues that have been identified have direct relevance to those working in bullying.

The Incentive Culture in Academia

“The relentless drive for research excellence has created a culture in modern science that cares exclusively about what is achieved and not about how it is achieved.”

Jeremy Farrar, Director of the Wellcome Trust (Farrar, 2019).

In academia, career progression is closely tied to publication record. As such, academics feel under considerable pressure to publish frequently in high-quality journals to advance their careers (Grimes et al., 2018; Munafò et al., 2017). Yet, the publication process itself is biased toward accepting novel or statistically significant findings for publication (Renkewitz & Heene, 2019). This bias fuels a perception that non-significant results will not be published (the “file drawer problem”: Rosenthal, 1979). This can result in researchers employing a range of questionable research practices to achieve a statistically significant finding in order to increase the likelihood that a study will be accepted for publication. Taken together, this can lead to a perverse “scientific process” where achieving statistical significance is more important than the quality of the research itself (Frankenhuis & Nettle, 2018).

Questionable Research Practices

Questionable research practices (QRPs) can occur at all stages of the research process (Munafò et al., 2017). These practices differ from research misconduct in that they do not typically involve the deliberate intent to deceive or engage in fraudulent research practices (Stricker & Günther, 2019). Instead, QRPs are characterised by misrepresentation, inaccuracy, and bias (Steneck, 2006). All are of direct relevance to the work of scholars in the bullying field since each weakens our ability to achieve meaningful change for children and young people. QRPs emerge directly from “researcher degrees of freedom” that occur at all stages of the research process and which simply reflect the many decisions that researchers make with regard to their hypotheses, methodological design, data analyses, and reporting of results (see Wicherts et al., 2016 for an extensive list of researcher degrees of freedom). These decisions pose fundamental threats to how robust a study is as each compromises the likelihood that findings accurately model a psychological or social process (Munafò et al., 2017). QRPs include p-hacking; hypothesising after the result is known (HARKing); conducting studies with low statistical power; and the misuse of p values (Chambers et al., 2014). Such QRPs may reflect a misunderstanding of inferential statistics (Sijtsma, 2016). A misunderstanding of statistical theory can also lead to a lack of awareness regarding the nature and impact of QRPs (Sijtsma, 2016). This includes the prevailing approach to quantitative data analysis, Null Hypothesis Significance Testing (NHST) (Lyu et al., 2018; Travers et al., 2017), which is overwhelmingly the approach used in the bullying field. QRPs can fundamentally threaten the degree to which research in bullying can be trusted, replicated, and effective in efforts to implement successful and impactful intervention or prevention programs.

P-Hacking

P-hacking (or data-dredging) reflects methods of re-analysing data in different ways to find a significant result (Raj et al., 2018). Such methods can include the selective deletion of outliers, selectively controlling for variables, recoding variables in different ways, or selectively reporting the results of structural equation models (Simonsohn et al., 2014). While there are various methods of p-hacking, the end goal is the same: to find a significant result in a data set, often when initial analyses fail to do so (Friese & Frankenbach, 2020).

There are no available data on the degree to which p-hacking is a problem in bullying research per se, but the nature of the methods commonly used means it is a clear and present danger. For example, the inclusion of multiple outcome measures (allowing those with the “best” results to be cherry-picked for publication), measures of involvement in bullying that can be scored or analysed in multiple ways (e.g. as a continuous measure or as a method to categorise participants as involved or not), and the presence of a diverse selection of demographic variables (which can be selectively included or excluded from analyses) all provide researchers with an array of possible analytic approaches. Such options pose a risk for p-hacking as decisions can be made on the results of statistical fishing (i.e. hunting to find significant effects) rather than on any underpinning theoretical rationale.
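To illustrate why multiple outcome measures create this risk, the following is a minimal sketch rather than an analysis drawn from any of the cited studies. It assumes that every null hypothesis is true, that the outcome measures are statistically independent, and that each is tested at the same alpha level; the function names are hypothetical. Outcome measures in real bullying data are usually correlated, so the actual inflation would be smaller than shown here, although still above the nominal alpha.

```python
import random


def familywise_error_rate(n_outcomes: int, alpha: float = 0.05) -> float:
    """Probability of at least one false positive across independent tests
    when every null hypothesis is true (illustrative assumption)."""
    return 1 - (1 - alpha) ** n_outcomes


def simulate_cherry_picking(n_outcomes: int, alpha: float = 0.05,
                            n_studies: int = 20000, seed: int = 1) -> float:
    """Share of simulated null studies in which at least one outcome
    reaches p < alpha and could therefore be cherry-picked."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        # Under a true null hypothesis, a p value is uniform on [0, 1].
        p_values = [rng.random() for _ in range(n_outcomes)]
        if min(p_values) < alpha:
            hits += 1
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 5, 10):
        print(k, round(familywise_error_rate(k), 3),
              round(simulate_cherry_picking(k), 3))
```

Under these assumptions, a study with five outcome measures has roughly a 23% chance of producing at least one "significant" result by chance alone, and a study with ten has roughly a 40% chance.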

P-hacking need not be driven by a desire to deceive; rather, it can arise from well-meaning researchers' honest wish to identify useful or interesting findings (Wicherts et al., 2016). Sadly, even in this case, the impact of p-hacking remains profoundly problematic for the field. The p-hacking process biases the literature towards erroneous significant results and inflated effect sizes, distorting our understanding of the issues we seek to understand better and biasing effect size estimates reported in meta-analyses (Friese & Frankenbach, 2020). While such effects may seem remote or of only academic interest, they compromise all that we in the bullying field seek to accomplish because they make it much less likely that effective, impactful, and meaningful intervention and prevention strategies can be identified and implemented.

HARKing

Typically, quantitative research follows the hypothetico-deductive model (Popper, 1959). From this perspective, hypotheses are formulated based on appropriate theory and previous research (Rubin, 2017). Once the hypotheses are written, the study is designed, and data are collected and analysed (Rubin, 2017). Hypothesising after the result is known, or HARKing (Kerr, 1998), occurs when researchers amend their hypotheses to reflect their completed data analysis. HARKing results in confusion between confirmatory and exploratory data analysis (Shrout & Rodgers, 2018), creating a literature where hypotheses are always confirmed and never falsified. This inhibits theory development (Rubin, 2017) in part because “progress” is, in fact, the accumulation of type I errors.

Low Statistical Power

Statistical power reflects the probability that a statistical test will detect an effect if there is one to find (Cohen, 2013). There are concerns regarding the sample sizes used in bullying research, as experiences of bullying are typically of a low frequency and positively skewed (Vessey et al., 2014; Volk et al., 2017). Low statistical power is problematic in two ways. First, it increases the type II error rate (the probability of failing to reject a false null hypothesis), meaning that researchers may fail to report important and meaningful effects. Second, although statistically significant effects can still be found under conditions of low statistical power, such findings have a lower positive predictive value (the probability of a statistically significant result being genuine), and the size of these effects is likely to be exaggerated (Button et al., 2013). In this case, researchers may find significant effects even in small samples, but those effects are at risk of being inflated.
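The link between power and positive predictive value can be made concrete with a short sketch. It uses the expression given by Button et al. (2013), PPV = ([1 − β] × R) / ([1 − β] × R + α), where 1 − β is power, α is the type I error rate, and R is the pre-study odds that a tested effect is real. The value R = 0.25 used below is a purely hypothetical assumption chosen for illustration, not an estimate for bullying research, and the function name is our own.

```python
def positive_predictive_value(power: float, prior_odds: float,
                              alpha: float = 0.05) -> float:
    """Probability that a statistically significant result reflects a true
    effect, following the expression in Button et al. (2013).

    power      -- 1 - beta, the chance of detecting a true effect
    prior_odds -- R, the pre-study odds that the tested effect is real
                  (a hypothetical input; rarely known in practice)
    alpha      -- type I error rate
    """
    if not (0 < power <= 1 and 0 < alpha < 1 and prior_odds > 0):
        raise ValueError("power and alpha must be probabilities; odds must be positive")
    true_positives = power * prior_odds
    return true_positives / (true_positives + alpha)


if __name__ == "__main__":
    # Hypothetical pre-study odds of 1 true effect for every 4 null effects.
    for power in (0.8, 0.5, 0.2):
        print(power, round(positive_predictive_value(power, prior_odds=0.25), 2))
```

With these assumed inputs, a significant result from a study with 80% power has a PPV of 0.80, whereas the same result from a study with 20% power has a PPV of only 0.50.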

QRPs in Qualitative Research

Apart from the previously discussed issues, there are also QRPs in qualitative work. Mainly, these involve issues pertaining to trustworthiness such as credibility, transferability, dependability, and confirmability (see Shenton, 2004). One factor that can influence perceptions about qualitative work is the possibility of subjectivity or different interpretations of the same data (Haven & Van Grootel, 2019). Additionally, the idea that the researcher will be biased and that their experiences, beliefs, and personal history will all influence how they both collect and interpret data has also been discussed (Berger, 2015). Clearly stating the positionality of the researcher and how their experiences informed their current research (the process of reflexivity) can help others better understand their interpretation of the data (Berger, 2015). Finally, one decision that qualitative researchers should consider when thinking about their designs is their stopping criteria. This might involve code or meaning saturation (see Hennink et al., 2017, for more detail on how these two types differ from one another). Thus, making it clear in the conceptualisation process when and how the data collection will stop is important to ensure transparency and high-quality research. This is not a complete list of QRPs in qualitative research, but these seem to be the most urgent for bullying research when thinking about open science.

The Prevalence and Impact of QRPs

Identifying the prevalence of QRPs and academic misconduct is challenging as this is reliant on self-reports. In their survey of 2155 psychologists, John et al. (2012) identified that 78% of participants had not reported all dependent measures, 72% had collected more data after finding their effects were not statistically significant, 67% had selectively reported studies that “worked” (yielded a significant effect), and 9% had falsified data. Such problematic practices have serious implications for the reliability of effects reported in the research literature (John et al., 2012), which can affect the interventions and treatments that such evidence informs. Furthermore, De Vries et al. (2018) have highlighted how biases in the publication process threaten the validity of treatment results reported in the literature. Although focused on the treatment of depression, their work has clear lessons for the bullying research community. They demonstrate how the bias towards reporting more positive, significant effects distorts a literature in favour of treatments that appear efficacious but are much less so in practice (Box 1).

Open Science

Confronting these challenges can be daunting, but open science offers several strategies that researchers in the bullying field can use to increase the transparency, reproducibility, and openness of their research. The most common practices include openly sharing publications and data, encouraging replication, pre-registration, and open peer-review. Below, we provide an overview of open science practices, with a particular focus on pre-registration and replication studies. We recommend that researchers begin by using those practices that they can most easily integrate into their work, building their repertoire of open science actions over time. We provide a series of recommendations for the school bullying research community alongside summaries of useful supporting resources (Box 2).

Open Publication, Open Data, and Reporting Standards

Open Publication

Ensuring research publications are openly available by providing access to pre-print versions of papers or paying for publishers to make articles openly available is now a widely adopted practice (Concannon et al., 2019; McKiernan et al., 2016). Articles can be hosted on websites such as ResearchGate and/or on institutional repositories, allowing a wider pool of potential stakeholders to access relevant bullying research and increasing the impact of research (Concannon et al., 2019). This process also supports access for the research and practice communities in low- and middle-income countries where even universities may be unable to pay journal subscriptions. Authors can also share pre-print versions of their papers for comment and review before submitting them to a journal, using an online digital repository such as PsyArXiv. Sharing publications in this way can encourage both early feedback on articles and the faster dissemination of research findings (Chiarelli et al., 2019).

Open Data

Making data and data analysis scripts openly available is also encouraged, can enable further data analysis (e.g. meta-analysis), and facilitates replication (Munafò et al., 2017; Nosek & Bar-Anan, 2012). It also enables the collation of larger data sets, and secondary data analyses to test different hypotheses. Several publications on bullying in school are based on the secondary analysis of openly shared data (e.g. Dantchev & Wolke, 2019; Przybylski & Bowes, 2017) and highlight the benefits of such analyses. Furthermore, although limited in number, examples of papers on school bullying where data, research materials, and data analysis scripts are openly shared are emerging (e.g. Przybylski, 2019).

Bullying data often include detailed personal accounts of experiences and the impact of bullying. Such data are highly sensitive, and there may be a risk that individuals can be identified. To address such sensitivities, Meyer (2018) (see Box 3) proposes a tiered approach to the consent process, where participants are actively involved in decisions about which parts of their data are shared and where. Meyer (2018) also highlights the importance of selecting the right repository for your data. Some repositories are entirely open, whereas others only provide access to suitably qualified researchers. While bullying data pose particular ethical challenges, the sharing of all data is encouraged (Bishop, 2009; McLeod & O’Connor, 2020).

Reporting Standards

Reporting standards provide guidance on what methodological and analytical information should be included in a research paper (Munafò et al., 2017). Such guidelines aim to ensure sufficient information is provided to enable replication and promote transparency (Munafò et al., 2017). Journal publishers are now beginning to outline what open science practices should be reported in articles. For example, from July 2021, when submitting a paper for review in one of the American Psychological Association journals, authors are required to state whether their data will be openly shared and whether or not their study was pre-registered. In a bullying context, Smith and Berkkun (2020) have highlighted that important contextual data are often missing from publications and recommend, for example, that the gender and age of participants alongside the country and date of data collection should be included as standard in papers on bullying in school.

Recommendations:

1. Researchers to start to share all research materials openly using an online repository. Box 3 provides some useful guidance on how to support the open sharing of research materials.

2. Journal editors and publishers to further promote the open sharing of research material.

3. Researchers to follow the recommendations set out by Smith and Berkkun (2020) and follow a set of reporting standards when reporting bullying studies.

4. Reviewers to be mindful of the Smith and Berkkun (2020) recommendations when reviewing bullying papers.

Replication Studies

Replicating a finding increases confidence in its reliability, ensuring research findings are robust and enabling science to self-correct (Cook et al., 2018; Drotar, 2010). Replication reflects the ability of a researcher to duplicate the results of a prior study with new data (Goodman et al., 2018). Replications can be broadly categorised into two forms: those that aim to recreate the exact conditions of an earlier study (exact/direct replication) and those that aim to test the same hypotheses again using a different method (conceptual replication) (Schmidt, 2009). Replication studies are considered fundamental in establishing whether study findings are consistent and trustworthy (Cook et al., 2018).

To date, few replication studies have been conducted on bullying in schools. A Web of Science search using the Boolean search term bully* alongside the search term “replication” identified two replication studies (Berdondini & Smith, 1996; Huitsing et al., 2020). Such a small number of replications may reflect concerns regarding the value of these and concerns about how to conduct such work when data collection is so time and resource-intensive. In addition, school gatekeepers are themselves interested in novelty and addressing their own problems and may be reluctant to participate in a study which has “already been done”. One possible solution to this challenge is to increase the number of large-scale collaborations among bullying researchers (e.g. multiple researchers across many sites collecting the same data). Munafò et al. (2017) highlight the benefits of collaboration and “team science” to build capacity in a research project. They argue that greater collaboration through team science would enable researchers to undertake higher-powered studies and relieve the pressure on single researchers. Such projects also have the benefit of increasing generalisability across settings and populations.

Recommendations:

1. Undertake direct replications or, as a more manageable first step, include aspects of replication within larger studies.

2. Journal editors to actively promote the submission of replication studies on school bullying.

3. Journal editors, editorial panels, and reviewers to recognise the value of replication studies rather than favouring new or novel findings (Box 4).

Pre-Registration

Pre-registration requires researchers to set out, in advance of any data collection, their hypotheses, research design, and planned data analysis (van’t Veer & Giner-Sorolla, 2016). Pre-registering a study reduces the number of researcher degrees of freedom as all decisions are outlined at the start of a project. However, to date, there have been few pre-registered studies in bullying. There are two forms of pre-registration: the pre-registration of analysis plans and registered reports. In a pre-registered analysis plan, the hypotheses, research design, and analysis plan are registered in advance. These plans are then stored in an online repository (e.g. the Open Science Framework (OSF) or AsPredicted website), where they are time-stamped as a record of the planned research project (van’t Veer & Giner-Sorolla, 2016). Registered reports, however, integrate the pre-registration of methods and analyses into the publication process (Chambers et al., 2014). With a registered report, researchers can submit their introduction and proposed methods and analyses to a journal for peer review. This creates a two-tier peer-review process, where the registered reports can be accepted in principle or rejected in the first stage of review, based on the rigour of the proposed methods and analysis plans rather than on the findings of the study (Hardwicke & Ioannidis, 2018). In the second stage of the review process, the authors then submit the complete paper (at a later date after data have been collected and analyses completed), and this is also reviewed. The decision to accept a study is therefore explicitly based on the quality of the research process rather than the outcome (Frankenhuis & Nettle, 2018) and in practice, almost no work is ever rejected following an in-principle acceptance at stage 1 (C. Chambers, personal communication, December 11, 2020). At the time of writing, over 270 journals accept registered reports, many of which are directly relevant to bullying researchers (e.g. Developmental Science, British Journal of Educational Psychology, Journal of Educational Psychology).

Pre-registration offers one approach for improving the validity of bullying research. Employing greater use of pre-registration would complement other recommendations on how to improve research practices in bullying research. For example, Volk et al. (2017) propose a “bullying research checklist” (see Box 5).

Pre-Registering Quantitative Studies

The pre-registration of quantitative studies requires researchers to state the hypotheses, method, and planned data analysis in advance of any data collection (van’t Veer & Giner-Sorolla, 2016). When outlining the hypotheses being tested, researchers are required to outline the background and theoretical underpinning of the study. This reflects the importance of theoretically led hypotheses (van’t Veer & Giner-Sorolla, 2016), which are more appropriately tested using NHST and inferential statistics in a confirmatory rather than exploratory design (Wagenmakers et al., 2012). Requiring researchers to state their hypotheses in advance of any data collection adheres to the confirmatory nature of inferential statistics and reduces the risk of HARKing (van’t Veer & Giner-Sorolla, 2016). Following a description of the hypotheses, researchers outline the details of the planned method, including the design of the study, the sample, the materials and measures, and the procedure. Information on the nature of the study and how materials and measures will be used and scored are outlined in full. Researchers are required to provide a justification for and an indication of the desired sample size.
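One common way to justify a desired sample size is an a priori power calculation. The sketch below is an illustrative assumption on our part rather than a procedure prescribed by the sources cited above: it uses the standard normal-approximation formula for comparing two independent group means, n per group = 2 × ((z₁₋α/₂ + z₁₋β) / d)², where d is the standardised effect size the study should be able to detect. The function name and the example effect sizes are hypothetical, and an exact calculation based on the t distribution gives slightly larger values.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size_d: float, alpha: float = 0.05,
                          power: float = 0.80) -> int:
    """Approximate participants needed per group for a two-sided comparison
    of two independent means (normal approximation, equal group sizes)."""
    if effect_size_d <= 0:
        raise ValueError("effect_size_d must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value of the test
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    n = 2 * ((z_alpha + z_power) / effect_size_d) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical smallest effect sizes of interest (Cohen's d).
    for d in (0.2, 0.5, 0.8):
        print(d, sample_size_per_group(d))
```

Recording the chosen effect size, alpha, and power in the pre-registration, together with the reasoning behind them, lets readers see that the sample size was fixed before the data were examined.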

The final stage of the pre-registration process requires researchers to consider and detail all steps of the data analysis process. The data analysis plan should be outlined in terms of what hypotheses are tested using what analyses and any plans for follow-up analysis (e.g. post hoc testing and any exploratory analyses). Despite concerns to the contrary (Banks et al., 2019; Gonzales & Cunningham, 2015), the aim of pre-registration is not to devalue exploratory research, but rather, to make more explicit what is exploratory and what is confirmatory (van’t Veer & Giner-Sorolla, 2016). While initially, the guidance on pre-registration focused more on confirmatory analyses, more recent guidance considers how researchers can pre-register exploratory studies (Dirnagl, 2020), and make a distinction between confirmatory versus exploratory research in the publication process (McIntosh, 2017). Irrespective of whether confirmatory or exploratory analyses are planned, pre-registering an analysis reduces the risk of p-hacking (van’t Veer & Giner-Sorolla, 2016). A final point, often a concern to those unfamiliar with open science practices, is that a pre-registration does not bind a researcher to a single way of analysing data. Changes to plans are entirely acceptable when they are deemed necessary and are described transparently.

Pre-Registering Qualitative Studies

Pre-registration of qualitative studies is still relatively new (e.g. Kern & Gleditsch, 2017a, b; Piñeiro & Rosenblatt, 2016). This is because most of the work uses inductive and hypothesis-generating approaches. Coffman and Niederle (2015) argue that this hypothesis-generation is one of the most important reasons why pre-registering qualitative work is so important. This could help distinguish between what hypotheses are generated from the data and which were hypotheses conceptualised from the start. Therefore, it could even be argued that pre-registering qualitative research encourages exploratory work. Using pre-registration prior to a hypothesis-generating study will also help with the internal validity of this same study, as it will be possible to have a sense of how the research evolved from before to post data collection.

Using investigator triangulation, where multiple researchers share and discuss conclusions and findings of the data, and reach a common understanding, could improve the trustworthiness of a qualitative study (Carter et al., 2014). Similarly, where establishing intercoder reliability is appropriate, the procedures demonstrating how this is achieved can be communicated and recorded in advance. One example of this would be the use of code books. When analysing qualitative data, developing a code book that could be used by all the coders could help with intercoder reliability and overall trustworthiness (Guest et al., 2012). These are elements that could be considered in the pre-registration process by clearly outlining if intercoder reliability is used and, if so, how this is done. To improve the transparency of pre-registered qualitative work, it has also been suggested that researchers should clearly state whether, if something outside the scope of the interview comes to light, such novel experiences will also be explored with the participant (Haven & Van Grootel, 2019; Kern & Gleditsch, 2017a, b). Issues of subjectivity, sometimes inherent to qualitative work, can be reduced as a result of pre-registering because it allows the researcher to clearly consider all the elements of the study and have a plan before data collection and analysis, which reduces levels of subjectivity.
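Where intercoder reliability is to be reported, the statistic and its calculation can be specified in the pre-registration. As a hedged illustration, the sketch below computes Cohen's kappa, one widely used chance-corrected agreement index for two coders. The choice of kappa is our assumption and is not a recommendation taken from the sources cited above; the code labels and data are invented for the example.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two coders who each assigned
    one code to the same set of data segments."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("both coders must code the same non-empty set of segments")
    n = len(coder_a)
    # Observed agreement: share of segments given the same code by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected agreement if each coder assigned codes independently
    # at their own observed rates.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Invented codes for six interview excerpts.
    coder_1 = ["verbal", "verbal", "physical", "online", "verbal", "physical"]
    coder_2 = ["verbal", "physical", "physical", "online", "verbal", "physical"]
    print(round(cohens_kappa(coder_1, coder_2), 3))
```

In this invented example the coders agree on five of six excerpts, giving a kappa of 17/23 (about 0.74). A pre-registration could state the statistic, the threshold regarded as acceptable, and how disagreements will be resolved.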

Kern and Gleditsch (2017a, b) provide some practical suggestions on how to use pre-registration with qualitative studies. For example, when using in-depth interviews, one should make the interview schedule and questions available to help others to comprehend what the participants were asked. Similarly, they suggest that all recruitment and sampling strategy plans should be included to improve transparency (Haven & Van Grootel, 2019; Kern & Gleditsch, 2017a, b). Piñeiro and Rosenblatt (2016) provide an overview of how these pre-registrations could be achieved. They suggested three main elements: conceptualisation of the study, theory (inductive or deductive in nature), and design (working hypothesis, sampling, tools for data collection). More recently, Haven and Van Grootel (2019) highlighted a lack of flexibility in the existing pre-registration templates to adapt to qualitative work; as such, they adapted an OSF template to a qualitative study.

Integrating Participatory Research Methods into Pre-Registration

Participatory research methods (PRMs) aim to address power imbalances within the research process and validate the local expertise and knowledge of marginalised groups (Morris, 2002). The key objective of PRM is to include individuals from the target population, also referred to as “local experts”, as meaningful partners and co-creators of knowledge. A scoping review of PRM in psychology recommends wider and more effective use (Levac et al., 2019). Researchers are calling specifically for youth involvement in bullying studies to offer their insight, avoid adult speculation, and assist in the development of appropriate support materials (O’Brien, 2019; O’Brien & Dadswell, 2020). PRM is particularly appropriate for research with children and young people who experience bullying behaviours given their explicit, defined powerlessness. Research has shown that engaging young people in bullying research, while relatively uncommon, provides lasting positive outcomes for both researchers and participants (Gibson et al., 2015; Lorion, 2004).

Pre-registration has rarely been used in research undertaking a PRM approach. It is a common misconception that pre-registration is inflexible and places constraints on the participant-driven nature of PRM (Frankenhuis & Nettle, 2018). However, pre-registration still allows for the exploratory and subjective nature of PRM but in a more transparent way, with clear rationale and reasoning. An appropriate pre-registration method for PRM can utilise a combination of both theoretical and iterative pre-registration. Using a pre-registration template, researchers should aim to document the research process highlighting the main contributing theoretical underpinnings of their research, with anticipatory hypotheses and complementary analyses (Haven & Van Grootel, 2019). This initial pre-registration can then be supported using iterative documentation detailing ongoing project development. This can include utilising workflow tools or online notebooks, which show insights into the procedure of co-researchers and collaborative decision making (Kern & Gleditsch, 2017a, b). This creates an evidence trail of how the research evolved, providing transparency, reflexivity, and credibility to the research process.

The Perceived Challenges of Pre-Registration.

To date, there have been few pre-registered studies in bullying. A Web of Science search using the Boolean search terms bully*, peer-vict*, pre-reg*, and preregist* identified four pre-registered studies on school bullying (Kaufman et al., 2022; Legate et al., 2019; Leung, 2021; Noret et al., 2021). The lack of pre-registrations may reflect concerns that it is a difficult, rigid, and time-consuming process. Reischer and Cowan (2020) note that pre-registration should not be seen as a singular, time-stamped, rigid plan but as an ongoing working model that can be modified. Change is possible so long as it is clearly and transparently articulated, for example, in an associated publication or in an open lab notebook (Schapira et al., 2019). Pre-registering a study requires a change in workflow rather than more work overall. Moreover, this early and detailed planning (especially concerning analytical procedures) can sharpen the focus on the quality of the research process (Ioannidis, 2008; Munafò et al., 2017).

The Impact of Pre-Registration.

The impact of pre-registration on reported effects can be extensive. The pre-registration of funded clinical trials in medicine has been a requirement since 2000. In an analysis of randomised controlled trials examining the role of drugs or supplements for intervening in or treating cardiovascular disease, Kaplan and Irvin (2015) identified a substantial change in the number of significant effects reported once pre-registration was introduced (57% reported significant effects prior to the requirement but only 8% after). More recently, Scheel et al. (2021) compared the results of 71 pre-registered studies in psychology with the results published in 152 studies that were not pre-registered. They found that only 44% of the pre-registered studies reported a significant effect, compared to 96% of studies that were not pre-registered. As a result, the introduction of pre-registration has increased the number of null effects reported in the literature and presents a more reliable picture of the effects of particular interventions.
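To make the scale of the Scheel et al. (2021) comparison concrete, the reported percentages can be turned back into approximate counts and compared directly. The following is a minimal Python sketch, not the authors' analysis: the counts are reconstructed from the rounded percentages quoted above (an assumption), the helper names are hypothetical, and the interval is a simple Wald approximation.

```python
import math


def approx_count(percent, n):
    """Recover an approximate count from a rounded percentage.

    Assumption: the true count is the integer nearest to percent/100 * n.
    """
    return round(percent / 100 * n)


def diff_in_proportions(k1, n1, k2, n2, z=1.96):
    """Difference p2 - p1 with an approximate 95% Wald confidence interval."""
    p1, p2 = k1 / n1, k2 / n2
    diff = p2 - p1
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return diff, (diff - z * se, diff + z * se)


# Sample sizes and percentages as quoted in the text above.
prereg_n, standard_n = 71, 152
prereg_k = approx_count(44, prereg_n)      # pre-registered studies with a significant effect
standard_k = approx_count(96, standard_n)  # non-pre-registered studies with a significant effect

diff, (low, high) = diff_in_proportions(prereg_k, prereg_n, standard_k, standard_n)
print(f"Approximate counts: {prereg_k}/{prereg_n} vs {standard_k}/{standard_n}")
print(f"Difference in proportions: {diff:.2f} (approx. 95% CI {low:.2f} to {high:.2f})")
```

Under these assumptions, the interval lies well above zero, which is consistent with the substantial gap in reported positive results described above. The Wald interval is used only for simplicity; other interval methods could be substituted.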

Recommendations:

1. When conducting your next research study on bullying, consider pre-registering the study.

2. Journal editors and publishers to actively encourage registered reports as a submission format.

The Benefits of Open Science for Researchers

Employing more open science practices can often be challenging, in part because they force us to reconsider methods that are already “successful” (often synonymous with “those which result in publication”). Based on our own experience, this takes time and is best approached by beginning small and building up to a wider application of the practices we have outlined in this article. Alongside increasing the reliability of research, open science practices are associated with several career benefits for the researcher. Articles which use open science practices are more likely to be accepted for publication, are more visible, and are cited more frequently (Allen & Mehler, 2019). Open science can also lead to the development of more supportive networks for collaboration (Allen & Mehler, 2019). In terms of career advancement, universities are beginning to reward engagement with open science principles in their promotion criteria. For example, the University of Bristol (UK) will consider open research practices such as data sharing and pre-registration in promotion cases in 2020–21. Given that formal recognition such as this has been recommended by the European Union for some time (O’Carroll et al., 2017), it is likely to be an increasingly important part of career progression in academia (Box 6).

Conclusion

This paper sought to clarify the ways in which bullying research is undermined by a failure to engage with open science practices. It highlighted the potential benefits of open science for the way we conduct research on bullying. In doing so, we aimed to encourage the greater use of open science practices in bullying research. Given the importance of this for the safety and wellbeing of children and young people, the transparency and reliability of this research is paramount and is enhanced via greater use of open science practices. Ultimately, researchers working in the field of bullying are seeking to accurately understand and describe the experiences of children and young people. Open science practices make it more likely that we will achieve this goal and, as a result, be well-placed to develop and implement successful evidence-based intervention and prevention programs.

References

Allen, C., & Mehler, D. (2019). Open science challenges, benefits and tips in early career and beyond. PLoS Biology, 17(5), 1–14. https://doi.org/10.1371/journal.pbio.3000246

Banks, G. C., Field, J. G., Oswald, F. L., O’Boyle, E. H., Landis, R. S., Rupp, D. E., & Rogelberg, S. G. (2019). Answers to 18 questions about open science practices. Journal of Business and Psychology, 34(3), 257–270. https://doi.org/10.1007/s10869-018-9547-8

Berdondini, L., & Smith, P. K. (1996). Cohesion and power in the families of children involved in bully/victim problems at school: An Italian replication. Journal of Family Therapy, 18, 99–102. https://doi.org/10.1111/j.1467-6427.1996.tb00036.x

Berger, R. (2015). Now I see it, now I don’t: Researcher’s position and reflexivity in qualitative research. Qualitative Research, 15(2), 219–234. https://doi.org/10.1177/1468794112468475

Bishop, L. (2009). Ethical sharing and reuse of qualitative data. Australian Journal of Social Issues, 44(3), 255–272. https://doi.org/10.1002/j.1839-4655.2009.tb00145.x

Brandt, M. J., IJzerman, H., Dijksterhuis, A., Farach, F. J., Geller, J., Giner-Sorolla, R., Grange, J. A., Perugini, M., Spies, J. R., & van ’t Veer, A. (2014). The replication recipe: What makes for a convincing replication? Journal of Experimental Social Psychology, 50(1), 217–224. https://doi.org/10.1016/j.jesp.2013.10.005

Button, K. S., Ioannidis, J. P. A., Mokrysz, C., Nosek, B. A., Flint, J., Robinson, E. S. J., & Munafò, M. R. (2013). Power failure: Why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience, 14(5), 365–376. https://doi.org/10.1038/nrn3475

Carter, N., Bryant-Lukosius, D., Dicenso, A., Blythe, J., & Neville, A. J. (2014). The use of triangulation in qualitative research. Oncology Nursing Forum, 41(5), 545–547. https://doi.org/10.1188/14.ONF.545-547

Chambers, C. D., Feredoes, E., Muthukumaraswamy, S. D., & Etchells, P. J. (2014). Instead of “playing the game” it is time to change the rules: Registered reports at AIMS neuroscience and beyond. AIMS Neuroscience, 1(1), 4–17. https://doi.org/10.3934/Neuroscience.2014.1.4

Chiarelli, A., Johnson, R., Richens, E., & Pinfield, S. (2019). Accelerating Scholarly Communication: the Transformative Role of Preprints. https://doi.org/10.5281/ZENODO.3357727

Coffman, L. C., & Niederle, M. (2015). Pre-analysis plans have limited upside, especially where replications are feasible. Journal of Economic Perspectives, 29(3), 81–98. https://doi.org/10.1257/jep.29.3.81

Cohen, J. (2013). Statistical power analysis for the behavioral sciences. Academic Press.

Concannon, F., Costello, E., & Farrelly, T. (2019). Open science and educational research: An editorial commentary. Irish Journal of Technology Enhanced Learning, 4(1), ii–v. https://doi.org/10.22554/ijtel.v4i1.61

Cook, B. G., Lloyd, J. W., Mellor, D., Nosek, B. A., & Therrien, W. J. (2018). Promoting open science to increase the trustworthiness of evidence in special education. Exceptional Children, 85(1), 104–118. https://doi.org/10.1177/0014402918793138

Coyne, M. D., Cook, B. G., & Therrien, W. J. (2016). Recommendations for replication research in special education: A framework of systematic, conceptual replications. Remedial and Special Education, 37(4), 244–253. https://doi.org/10.1177/0741932516648463

Crüwell, S., Doorn, J. Van, Etz, A., Makel, M. C., Niebaum, J. C., Orben, A., Parsons, S., & Schulte, M. (2019). Seven easy steps to open science. Zeitschrift für Psychologie, 227(4), 237–248. https://doi.org/10.1027/2151-2604/a000387

Dantchev, S., & Wolke, D. (2019). Trouble in the nest: Antecedents of sibling bullying victimization and perpetration. Developmental Psychology, 55(5), 1059–1071. https://doi.org/10.1037/dev0000700

De Vries, Y. A., Roest, A. M., De Jonge, P., Cuijpers, P., Munafò, M. R., & Bastiaansen, J. A. (2018). The cumulative effect of reporting and citation biases on the apparent efficacy of treatments: The case of depression. Psychological Medicine, 48(15), 2453–2455. https://doi.org/10.1017/S0033291718001873

Dirnagl, U. (2020). Preregistration of exploratory research: Learning from the golden age of discovery. PLoS Biology, 18(3), 1–6. https://doi.org/10.1371/journal.pbio.3000690

Drotar, D. (2010). Editorial: A call for replications of research in pediatric psychology and guidance for authors. Journal of Pediatric Psychology, 35(8), 801–805. https://doi.org/10.1093/jpepsy/jsq049

Duncan, G. J., Engel, M., Claessens, A., & Dowsett, C. J. (2014). Replication and robustness in developmental research. Developmental Psychology, 50(11), 2417–2425. https://doi.org/10.1037/a0037996

Farrar, J. (2019). Why we need to reimagine how we do research. https://wellcome.org/news/why-we-need-reimagine-how-we-do-research. Accessed 18 December 2020.

Frankenhuis, W. E., & Nettle, D. (2018). Open science is liberating and can foster creativity. Perspectives on Psychological Science, 13(4), 439–447. https://doi.org/10.1177/1745691618767878

Freese, J., & Peterson, D. (2017). Replication in social science. Annual Review of Sociology, 43, 147–165. https://doi.org/10.1146/annurev-soc-060116-053450

Friese, M., & Frankenbach, J. (2020). p-Hacking and publication bias interact to distort meta-analytic effect size estimates. Psychological Methods, 25(4), 456–471. https://doi.org/10.1037/met0000246

Gehlbach, H., & Robinson, C. D. (2021). From old school to open science: The implications of new research norms for educational psychology and beyond. Educational Psychologist, 56(2), 79–89. https://doi.org/10.1080/00461520.2021.1898961

Gibson, J., Flaspohler, P. D., & Watts, V. (2015). Engaging youth in bullying prevention through community-based participatory research. Family & Community Health, 38(1), 120–130. https://doi.org/10.1097/FCH.0000000000000048

Gini, G., Card, N. A., & Pozzoli, T. (2018). A meta-analysis of the differential relations of traditional and cyber-victimization with internalizing problems. Aggressive Behavior, 44(2), 185–198. https://doi.org/10.1002/ab.21742

Gonzales, J. E., & Cunningham, C. A. (2015). The promise of pre-registration in psychological research. Psychological Science Agenda, 29(8), 2014–2017. https://www.apa.org/science/about/psa/2015/08/pre-Registration. Accessed 18 December 2020.

Goodman, S. N., Fanelli, D., & Ioannidis, J. P. A. (2018). What does research reproducibility mean? Science Translational Medicine, 8(341), 96–102. https://doi.org/10.1126/scitranslmed.aaf5027

Grimes, D. R., Bauch, C. T., & Ioannidis, J. P. A. (2018). Modelling science trustworthiness under publish or perish pressure. Royal Society Open Science, 5(1), 171511. https://doi.org/10.1098/rsos.171511

Guest, G., MacQueen, K. M., & Namey, E. E. (2012). Applied thematic analysis. Sage.

Hardwicke, T. E., & Ioannidis, J. P. A. (2018). Mapping the universe of registered reports. Nature Human Behaviour, 2, 793–796. https://doi.org/10.1038/s41562-018-0444-y

Haven, L. T., & Van Grootel, D. L. (2019). Preregistering qualitative research. Accountability in Research, 26(3), 229–244. https://doi.org/10.1080/08989621.2019.1580147

Hennink, M. M., Kaiser, B. N., & Marconi, V. C. (2017). Code saturation versus meaning saturation: How many interviews are enough? Qualitative Health Research, 27(4), 591–608. https://doi.org/10.1177/1049732316665344

Huitsing, G., Lodder, M. A., Browne, W. J., Oldenburg, B., Van Der Ploeg, R., & Veenstra, R. (2020). A large-scale replication of the effectiveness of the KiVa antibullying program: A randomized controlled trial in the Netherlands. Prevention Science, 21, 627–638. https://doi.org/10.1007/s11121-020-01116-4

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Medicine, 2(8), 2–8. https://doi.org/10.1371/journal.pmed.0020124

Ioannidis, J. P. A. (2008). Why most discovered true associations are inflated. Epidemiology, 19(5), 640–648. https://doi.org/10.1097/EDE.0b013e31818131e7

John, L. K., Loewenstein, G., & Prelec, D. (2012). Measuring the prevalence of questionable research practices with incentives for truth telling. Psychological Science, 23(5), 524–532. https://doi.org/10.1177/0956797611430953

Kaplan, R. M., & Irvin, V. L. (2015). Likelihood of null effects of large NHLBI clinical trials has increased over time. PLoS ONE, 10(8), 1–12. https://doi.org/10.1371/journal.pone.0132382

Kaufman, T. M., Laninga-Wijnen, L., & Lodder, G. M. (2022). Are victims of bullying primarily social outcasts? Person-group dissimilarities in relational, socio-behavioral, and physical characteristics as predictors of victimization. Child Development, Early view. https://doi.org/10.1111/cdev.13772

Kern, F., & Gleditsch, K. S. (2017a). Exploring pre-registration and pre-analysis plans for qualitative inference. https://doi.org/10.13140/RG.2.2.14428.69769

Kern, F. G., & Gleditsch, K. S. (2017b). Exploring pre-registration and pre-analysis plans for qualitative inference. Pre-Print, 1–15. https://doi.org/10.13140/RG.2.2.14428.69769

Kerr, N. L. (1998). HARKing: Hypothesizing after the results are known. Personality and Social Psychology Review, 2(3), 196–217. https://doi.org/10.1207/s15327957pspr0203_4

Legate, N., Weinstein, N., & Przybylski, A. K. (2019). Parenting strategies and adolescents’ cyberbullying behaviors: Evidence from a preregistered study of parent–child dyads. Journal of Youth and Adolescence, 48(2), 399–409. https://doi.org/10.1007/s10964-018-0962-y

Leung, A. N. M. (2021). To help or not to help: intervening in cyberbullying among Chinese cyber-bystanders. Frontiers in Psychology, 2625. https://doi.org/10.3389/fpsyg.2021.483250

Levac, L., Ronis, S., Cowper-Smith, Y., & Vaccarino, O. (2019). A scoping review: The utility of participatory research approaches in psychology. Journal of Community Psychology, 47(8), 1865–1892. https://doi.org/10.1002/jcop.22231

Lindsay, D. S. (2020). Seven steps toward transparency and replicability in psychological science. Canadian Psychology/Psychologie canadienne, 61(4), 310–317. https://doi.org/10.1037/cap0000222

Lorion, R. P. (2004). The evolution of community-school bully prevention programs: Enabling participatory action research. Psykhe, 13(2), 73–83.

Lyu, Z., Peng, K., & Hu, C. P. (2018). P-Value, confidence intervals, and statistical inference: A new dataset of misinterpretation. Frontiers in Psychology, 9(JUN), 2016–2019. https://doi.org/10.3389/fpsyg.2018.00868

Makel, M. C., & Plucker, J. A. (2014). Facts are more important than novelty: Replication in the education sciences. Educational Researcher, 43(6), 304–316. https://doi.org/10.3102/0013189X14545513

McIntosh, R. D. (2017). Exploratory reports: A new article type for Cortex. Cortex, 96, A1–A4. https://doi.org/10.1016/j.cortex.2017.07.014

McKiernan, E. C., Bourne, P. E., Brown, C. T., Buck, S., Kenall, A., Lin, J., McDougall, D., Nosek, B. A., Ram, K., Soderberg, C. K., Spies, J. R., Thaney, K., Updegrove, A., Woo, K. H., & Yarkoni, T. (2016). How open science helps researchers succeed. ELife, 1–19. https://doi.org/10.7554/eLife.16800

McLeod, J., & O’Connor, K. (2020). Ethics, archives and data sharing in qualitative research. Educational Philosophy and Theory, 1–13. https://doi.org/10.1080/00131857.2020.1805310

Meyer, M. N. (2018). Practical tips for ethical data sharing. Advances in Methods and Practices in Psychological Science, 1(1), 131–144.

Morris, M. (2002). Participatory research and action: A guide to becoming a researcher for social change. In Canadian Research Institute for the Advancement of Women. https://doi.org/10.1177/0959353506067853

Munafò, M. R., Nosek, B. A., Bishop, D. V. M., Button, K. S., Chambers, C. D., Percie du Sert, N., Simonsohn, U., Wagenmakers, E. J., Ware, J. J., & Ioannidis, J. P. A. (2017). A manifesto for reproducible science. Nature Human Behaviour, 1(1), 1–9. https://doi.org/10.1038/s41562-016-0021

Nakamoto, J., & Schwartz, D. (2010). Is peer victimization associated with academic achievement? A meta-analytic review. Social Development, 19(2), 221–242. https://doi.org/10.1111/j.1467-9507.2009.00539.x

Nelson, L. D., Simmons, J., & Simonsohn, U. (2018). Psychology’s renaissance. Annual Review of Psychology, 69, 511–534. https://doi.org/10.1146/annurev-psych-122216-011836

Noret, N., Hunter, S. C., & Rasmussen, S. (2021). The role of cognitive appraisals in the relationship between peer-victimization and depressive symptomatology in adolescents: A longitudinal study. School Mental Health, 13(3), 548–560. https://doi.org/10.1007/s12310-021-09414-0

Nosek, B. A., & Bar-Anan, Y. (2012). Scientific Utopia: I. Opening Scientific Communication. Psychological Inquiry, 23(3), 217–243. https://doi.org/10.1080/1047840X.2012.692215

O’Brien, N. (2019). Understanding alternative bullying perspectives through research engagement with young people. Frontiers in Psychology, 10. https://doi.org/10.3389/fpsyg.2019.01984

O’Brien, N., & Dadswell, A. (2020). Reflections on a participatory research project exploring bullying and school self-exclusion: Power dynamics, practicalities and partnership working. Pastoral Care in Education, 38(3), 208–229. https://doi.org/10.1080/02643944.2020.1788126

O’Carroll, C., Rentier, B., Cabello Valdes, C., Esposito, F., Kaunismaa, E., Maas, K., Metcalfe, J., McAllister, D., & Vandevelde, K. (2017). Evaluation of Research Careers fully acknowledging Open Science Practices-Rewards, incentives and/or recognition for researchers practicing Open Science. Publication Office of the European Union. https://doi.org/10.2777/75255

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349. https://doi.org/10.1126/science.aac4716

Piñeiro, R., & Rosenblatt, F. (2016). Pre-analysis plans for qualitative research. Revista De Ciencia Política (santiago), 36(3), 785–796. https://doi.org/10.4067/s0718-090x2016000300009

Popper, K. (1959). The logic of scientific discovery. Hutchinson & Co.

Przybylski, A. K. (2019). Exploring adolescent cyber victimization in mobile games: Preliminary evidence from a British cohort. Cyberpsychology, Behavior, and Social Networking, 22(3), 227–231. https://doi.org/10.1089/cyber.2018.0318

Przybylski, A. K., & Bowes, L. (2017). Cyberbullying and adolescent well-being in England: A population-based cross-sectional study. The Lancet Child and Adolescent Health, 1(1), 19–26. https://doi.org/10.1016/S2352-4642(17)30011-1

Raj, A. T., Patil, S., Sarode, S., & Salameh, Z. (2018). P-Hacking: A wake-up call for the scientific community. Science and Engineering Ethics, 24(6), 1813–1814. https://doi.org/10.1007/s11948-017-9984-1

Reischer, H. N., & Cowan, H. R. (2020). Quantity over quality? Reproducible psychological science from a mixed methods perspective. Collabra: Psychology, 6(1), 1–19. https://doi.org/10.1525/collabra.284

Renkewitz, F., & Heene, M. (2019). The replication crisis and open science in psychology: Methodological challenges and developments. Zeitschrift für Psychologie, 227(4), 233–236. https://doi.org/10.1027/a000001

Rosenthal, R. (1979). The “File Drawer Problem” and tolerance for null results. Psychological Bulletin, 86(3), 638–641.

Rubin, M. (2017). When does HARKing hurt? Identifying when different types of undisclosed post hoc hypothesizing harm scientific progress. Review of General Psychology, 21(4), 308–320. https://doi.org/10.1037/gpr0000128

Scheel, A. M., Schijen, M. R., & Lakens, D. (2021). An excess of positive results: Comparing the standard psychology literature with registered reports. Advances in Methods and Practices in Psychological Science, 4(2), 1–12. https://doi.org/10.1177/25152459211007467

Schmidt, S. (2009). Shall we really do it again? The powerful concept of replication is neglected in the social sciences. Review of General Psychology, 13(2), 90–100. https://doi.org/10.1037/a0015108

Schapira, M., The Open Lab Notebook Consortium, & Harding, R. J. (2019). Open laboratory notebooks: Good for science, good for society, good for scientists. F1000Research, 8:87. https://doi.org/10.12688/f1000research.17710.2

Shenton, A. K. (2004). Strategies for ensuring trustworthiness in qualitative research projects. Education for Information, 22(2), 63–75. https://doi.org/10.3233/EFI-2004-22201

Shrout, P. E., & Rodgers, J. L. (2018). Psychology, science, and knowledge construction: Broadening perspectives from the replication crisis. Annual Review of Psychology, 69(1), 487–510. https://doi.org/10.1146/annurev-psych-122216-011845

Sijtsma, K. (2016). Playing with data—Or how to discourage questionable research practices and stimulate researchers to do things right. Psychometrika, 81(1), 1–15. https://doi.org/10.1007/s11336-015-9446-0

Simonsohn, U., Nelson, L. D., & Simmons, J. P. (2014). P-curve: A key to the file-drawer. Journal of Experimental Psychology: General, 143(2), 534–547. https://doi.org/10.1037/a0033242

Smith, P. K., & Berkkun, F. (2020). How prevalent is contextual information in research on school bullying? Scandinavian Journal of Psychology, 61(1), 17–21. https://doi.org/10.1111/sjop.12537

Steneck, N. (2006). Fostering integrity in research: Definitions, current knowledge, and future directions. Science and Engineering Ethics, 12(1), 53–74. https://doi.org/10.1007/s11948-006-0006-y

Stricker, J., & Günther, A. (2019). Scientific misconduct in psychology: A systematic review of prevalence estimates and new empirical data. Zeitschrift für Psychologie, 227(1), 53–63. https://doi.org/10.1027/2151-2604/a000356

Travers, J. C., Cook, B. G., & Cook, L. (2017). Null hypothesis significance testing and p values. Learning Disabilities Research and Practice, 32(4), 208–215. https://doi.org/10.1111/ldrp.12147

Valdebenito, S., Ttofi, M. M., Eisner, M., & Gaffney, H. (2017). Weapon carrying in and out of school among pure bullies, pure victims and bully-victims: A systematic review and meta-analysis of cross-sectional and longitudinal studies. Aggression and Violent Behavior, 33, 62–77. https://doi.org/10.1016/j.avb.2017.01.004

van ’t Veer, A. E., & Giner-Sorolla, R. (2016). Pre-registration in social psychology—A discussion and suggested template. Journal of Experimental Social Psychology, 67, 2–12. https://doi.org/10.1016/j.jesp.2016.03.004

Vessey, J., Strout, T. D., DiFazio, R. L., & Walker, A. (2014). Measuring the youth bullying experience: A systematic review of the psychometric. Journal of School Health, 84(12).

Volk, A. A., Veenstra, R., & Espelage, D. L. (2017). So you want to study bullying? Recommendations to enhance the validity, transparency, and compatibility of bullying research. Aggression and Violent Behavior, 36, 34–43. https://doi.org/10.1016/j.avb.2017.07.003

Wagenmakers, E. J., Wetzels, R., Borsboom, D., van der Maas, H. L. J., & Kievit, R. A. (2012). An agenda for purely confirmatory research. Perspectives on Psychological Science, 7(6), 632–638. https://doi.org/10.1177/1745691612463078

Wicherts, J. M., Veldkamp, C. L. S., Augusteijn, H. E. M., Bakker, M., van Aert, R. C. M., & van Assen, M. A. L. M. (2016). Degrees of freedom in planning, running, analyzing, and reporting psychological studies: A checklist to avoid P-hacking. Frontiers in Psychology, 7, 1–12. https://doi.org/10.3389/fpsyg.2016.01832

Wiggins, B. J., & Christopherson, C. D. (2019). The replication crisis in psychology: An overview for theoretical and philosophical psychology. Journal of Theoretical and Philosophical Psychology, 39(4), 202–217. https://doi.org/10.1037/teo0000137

Ethics declarations

Conflict of Interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Noret, N., Hunter, S.C., Pimenta, S. et al. Open Science: Recommendations for Research on School Bullying. Int Journal of Bullying Prevention 5, 319–330 (2023). https://doi.org/10.1007/s42380-022-00130-0

DOI: https://doi.org/10.1007/s42380-022-00130-0