1 Introduction

Comprehension syntax, together with simple appeals to library functions, usually provides clear, understandable, and short programs. Such a programming style for collection manipulation avoids loops and recursion as these are regarded as harder to understand and more error-prone. However, current collection-type function libraries appear lacking direct support that takes effective advantage of a linear ordering on collections for programming in the comprehension style, even when such an ordering can often be made available by sorting the collections at a linearithmic overhead. We do not argue that these libraries lack expressive power extensionally, as the functions that interest us on ordered collections are easily expressible in the comprehension style. However, they are expressed inefficiently in such a style. We give a practical example in Section 2. We sketch in Section 3 proofs that, under a suitable formal definition of the restriction that gives the comprehension style, efficient algorithms for low-selectivity database joins, for example, cannot be expressed. Moreover, in this setting, these algorithms remain inexpressible even when access is given to any single library function such as foldLeft, takeWhile, dropWhile, and zip.

We proceed to fill this gap through several results on the design of a suitable collection-type function. We notice that most functions in these libraries are defined on one collection. There is no notion of any form of general synchronized traversal of two or more collections other than zip-like mechanical lock-step traversal. This seems like a design gap: synchronized traversals are often needed in real-life applications and, for an average programmer, efficient synchronized traversals can be hard to implement correctly.

Intuitively, a “synchronized traversal” of two collections is an iteration on two collections where the “moves” on the two collections are coordinated, so that the current position in one collection is not too far from the current position in the other collection; that is, from the current position in one collection, one “can see” the current position in the other collection. However, defining the idea of “position” based on physical position, as in zip, seems restrictive. So, a more flexible notion of position is desirable. A natural and logical choice is that of a linear ordering relating items in the two collections, that is a linear ordering on the union of the two ordered collections. Also, given two collections which are sorted according to the linear orderings on their respective items, a reasonable new linear ordering on the union should respect the two linear orderings on the two original collections; that is, given two items in an original collection where the first “is before” the second in the original collection, then the first should be before the second in the linear ordering defined on the union of the two collections.

Combining the two motivations above, our main approach to reducing the complexity of the expressed algorithms is to traverse two or more sorted collections in a synchronized manner, taking advantage of relationships between the linear orders on these collections. The following summarizes our results.

An addition to the design of collection-type function libraries is proposed in Section 4. It is called Synchrony fold. Some theoretical conditions, viz. monotonicity and antimonotonicity, that characterize efficient synchronized iteration on a pair of ordered collections are presented. These conditions ensure the correct use of Synchrony fold. Synchrony fold is then shown to address the intensional expressive power gap articulated above.

Synchrony fold has the same extensional expressive power as foldLeft; it thus captures functions expressible by comprehension syntax augmented with typical collection-type function libraries. Because of this, Synchrony fold is not sufficiently precisely filling the intensional expressive power gap for comprehension syntax sans library function. A restriction to Synchrony fold is proposed in Section 5. This restricted form is called Synchrony generator. It has exactly the same extensional expressive power as comprehension syntax without any library function, but it has the intensional expressive power to express efficient algorithms for low-selectivity database joins. Synchrony generator is further shown to correspond to a rather natural generalization of the database merge join algorithm (Blasgen & Eswaran Reference Blasgen and Eswaran1977; Mishra & Eich Reference Mishra and Eich1992). The merge join was proposed half a century ago and has remained as a backbone algorithm in modern database systems for processing equijoin and some limited form of non-equijoin (Silberschatz et al. Reference Silberschatz, Korth and Sudarshan2016), especially when the result has to be outputted in a specified order. Synchrony generator generalizes it to the class of non-equijoin whose join predicate satisfies certain antimonotonicity conditions.

Previous works have proposed alternative ways for compiling comprehension syntax, to enrich the repertoire of algorithms expressible in the comprehension style. For example, Wadler & Peyton Jones (Reference Wadler and Peyton Jones2007) and Gibbons (Reference Gibbons2016) have enabled many relational database queries to be expressed efficiently under these refinements to comprehension syntax. However, these refinements only took equijoin into consideration; non-equijoin remains inefficient in comprehension syntax. In view of this and other issues, an iterator form—called Synchrony iterator—is derived from Synchrony generator in Section 6. While Synchrony iterator has the same extensional and intensional expressive power as Synchrony generator, it is more suitable for use in synergy with comprehension syntax. Specifically, Synchrony iterator makes efficient algorithms for simultaneous synchronized iteration on multiple ordered collections expressible in comprehension syntax.

Last but not least, Synchrony fold, Synchrony generator, and Synchrony iterator have an additional merit compared to other codes for synchronized traversal of multiple sorted collections. Specifically, they decompose such synchronized iterations into three orthogonal aspects, viz. relating the ordering on the two collections, identifying matching pairs, and acting on matching pairs. This orthogonality arguably makes for a more concise and precise understanding and specification of programs, hence improved reliability, as articulated by Schmidt (Reference Schmidt1986), Sebesta (Reference Sebesta2010) and Hunt & Thomas (Reference Hunt and Thomas2000).

2 Motivating example

Let us first define events as a data type.

Footnote 1

An event has a start and an end point, where start

![]() $<$

end, and typically has some additional attributes (e.g., an id) which do not concern us for now; cf. Figure 1. Events are ordered lexicographically by their start and end point: If an event y starts before an event x, then the event y is ordered before the event x; and when both events start together, the event which ends earlier is ordered before the event which ends later. Some predicates can be defined on events; for example, in Figure 1, isBefore(y, x) says event y is ordered before event x, and overlap(y, x) says events y and x overlap each other.

$<$

end, and typically has some additional attributes (e.g., an id) which do not concern us for now; cf. Figure 1. Events are ordered lexicographically by their start and end point: If an event y starts before an event x, then the event y is ordered before the event x; and when both events start together, the event which ends earlier is ordered before the event which ends later. Some predicates can be defined on events; for example, in Figure 1, isBefore(y, x) says event y is ordered before event x, and overlap(y, x) says events y and x overlap each other.

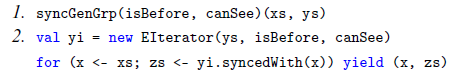

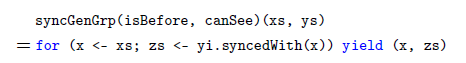

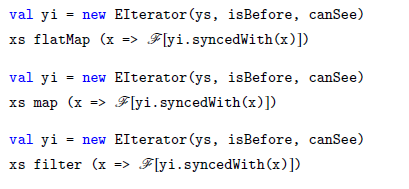

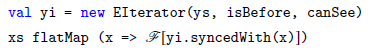

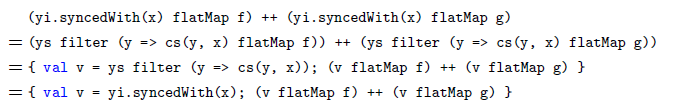

Fig. 1. A motivating example. The functions ov1(xs, ys) and ov2(xs, ys) are equal on inputs xs and ys which are sorted lexicographically by their start and end point. While ov1(xs, ys) has quadratic time complexity

![]() $O(|\mathtt{xs}| \cdot |\mathtt{ys}|)$

, ov2(xs, ys) has time complexity

$O(|\mathtt{xs}| \cdot |\mathtt{ys}|)$

, ov2(xs, ys) has time complexity

![]() $O(|\mathtt{xs}| + k |\mathtt{ys}|)$

when each event in ys overlaps fewer than k events in xs.

$O(|\mathtt{xs}| + k |\mathtt{ys}|)$

when each event in ys overlaps fewer than k events in xs.

Consider two collections of events, xs: Vec[Event] and ys: Vec[Event], where

![]() $\mathtt{Vec[\cdot]}$

denotes a generic collection type, for example, a vector.

Footnote 2

$\mathtt{Vec[\cdot]}$

denotes a generic collection type, for example, a vector.

Footnote 2

The function ov1(xs, ys), defined in Figure 1, retrieves the events in xs and ys that overlap each other. Although this comprehension syntax-based definition has the important virtue of being clear and succinct, it has quadratic time complexity

![]() $O(|\mathtt{xs}| \cdot |\mathtt{ys}|)$

.

$O(|\mathtt{xs}| \cdot |\mathtt{ys}|)$

.

An alternative implementation ov2(xs, ys) is given in Figure 1 as well. On xs and ys which are sorted lexicographically by (start, end), ov1(xs, ys) = ov2(xs, ys). Notably, the time complexity of ov2(xs, ys) is

![]() $O(|\mathtt{xs}| + k |\mathtt{ys}|)$

, provided each event in ys overlaps fewer than k events in xs. The proofs for these claims will become obvious later, from Theorem 4.4.

$O(|\mathtt{xs}| + k |\mathtt{ys}|)$

, provided each event in ys overlaps fewer than k events in xs. The proofs for these claims will become obvious later, from Theorem 4.4.

The function ov1(xs, ys) exemplifies a database join, and the join predicate is overlap(y, x). Joins are ubiquitous in database queries. Sometimes, a join predicate is a conjunction of equality tests; this is called an equijoin. However, when a join predicate comprises entirely of inequality tests, it is called a non-equijoin; overlap(y, x) is a special form of non-equijoin which is sometimes called an interval join. Non-equijoin is quite common in practical applications. For example, given a database of taxpayers, a query retrieving all pairs of taxpayers where the first earns more but pays less tax than the second is an interval join. As another example, given a database of mobile phones and their prices, a query retrieving all pairs of competing phone models (i.e., the two phone models in a pair are priced close to each other) is another special form of non-equijoin called a band join.

Returning to ov1(xs, ys) and ov2(xs, ys), the upper bound k on the number of events in xs that an event in ys can overlap with is called the selectivity of the join. Footnote 3 When restricted to xs and ys which are sorted by their start and end point, ov1(xs, ys) and ov2(xs, ys) define the same function. However, their time complexity is completely different. The time complexity of ov1(xs, ys) is quadratic. On the other hand, the time complexity of ov2(xs, ys) is a continuum from linear to quadratic, depending on the selectivity k. In a real-life database query, k is often a very small number, relative to the number of entries being processed. So, in practice, ov2(xs, ys) is linear.

The definition ov1(xs, ys) has the advantage of being obviously correct, due to its being expressed using easy-to-understand comprehension syntax, whereas ov2(xs, ys) is likely to take even a skilled programmer much more effort to get right. This example is one of many functions having the following two characteristics. Firstly, these functions are easily expressible in a modern programming language using only comprehension syntax. However, this usually results in a quadratic or higher time complexity. Secondly, there are linear-time algorithms for these functions. Yet, there is no straightforward way to provide linear-time implementation for these functions using comprehension syntax without using more sophisticated features of the programming language and its collection-type libraries.

The proof for this intensional expressiveness gap in a simplified theoretical setting is outlined in the next section and is shown in full in a companion paper (Wong Reference Wong2021). It is the main objective of this paper to fill this gap as simply as possible.

3 Intensional expressiveness gap

As alluded to earlier, what we call extensional expressive power in this paper refers to the class of mappings from input to output that can be expressed, as in Fortune et al. (Reference Fortune, Leivant and O’Donnell1983) and Felleisen (Reference Felleisen1991). In particular, so long as two programs in a language

![]() $\mathcal{L}$

produce the same output given the same input, even when these two programs differ greatly in terms of time complexity, they are regarded as expressing (implementing) the same function f, and are thus equivalent and mutually substitutable.

$\mathcal{L}$

produce the same output given the same input, even when these two programs differ greatly in terms of time complexity, they are regarded as expressing (implementing) the same function f, and are thus equivalent and mutually substitutable.

However, we focus here on improving the ability to express algorithms, that is, on intensional expressive power. Specifically, as in many past works (Abiteboul & Vianu Reference Abiteboul and Vianu1991; Colson Reference Colson1991; Suciu & Wong, Reference Suciu and Wong1995; Suciu & Paredaens Reference Suciu and Paredaens1997; Van den Bussche Reference Van den Bussche2001; Biskup et al. Reference Biskup, Paredaens, Schwentick and den Bussche2004; Wong Reference Wong2013), we approach this in a coarse-grained manner by considering the time complexity of programs. In particular, an algorithm which implements a function f in

![]() $\mathcal{L}$

is considered inexpressible in a specific setting if every program implementing f in

$\mathcal{L}$

is considered inexpressible in a specific setting if every program implementing f in

![]() $\mathcal{L}$

under that setting has a time complexity higher than this algorithm.

$\mathcal{L}$

under that setting has a time complexity higher than this algorithm.

Since Scala and other general programming languages are Turing complete, in order to capture the class of programs that we want to study with greater clarity, a restriction needs to be imposed. Informally, user-programmers Footnote 4 are allowed to use comprehension syntax, collections, and tuples, but they are not allowed to use while-loops, recursion, side effects, and nested collections, and they are not allowed to define new data types and new higher-order functions (a higher-order function is a function whose result is another function or is a nested collection.) They are also not allowed to call functions in the collection-type function libraries of these programming languages, unless specifically permitted.

This is called the “first-order restriction.” Under this restriction, following Suciu & Wong (Reference Suciu and Wong1995), when a user-programmer is allowed to use a higher-order function from a collection-type library, for example, foldleft(e)(f), the function f which is user-defined can only be a first-order function. A way to think about this restriction is to treat higher-order library functions as a part of the syntax of the language, rather than as higher-order functions.

Under such a restriction, some functions may become inexpressible, and even when a function is expressible, its expression may correspond to a drastically inefficient algorithm (Suciu & Paredaens Reference Suciu and Paredaens1997; Biskup et al. Reference Biskup, Paredaens, Schwentick and den Bussche2004; Wong Reference Wong2013). In terms of extensional expressive power, the first-order restriction of our ambient language, Scala, is easily seen to be complete with respect to flat relational queries (Buneman et al. Reference Buneman, Naqvi, Tannen and Wong1995; Libkin & Wong Reference Libkin and Wong1997), which are queries that a relational database system supports (such as joins). The situation is less clear from the perspective of intensional expressive power.

This section outlines results suggesting that Scala under the first-order restriction cannot express efficient algorithms for low-selectivity joins and that this remains so even when a programmer is permitted to access some functions in Scala’s collection-type libraries. Formal proofs are provided in a companion paper (Wong Reference Wong2021).

To capture the first-order restriction on Scala, consider the nested relational calculus

![]() $\mathcal{NRC}$

of Wong (Reference Wong1996).

$\mathcal{NRC}$

of Wong (Reference Wong1996).

![]() $\mathcal{NRC}$

is a simply-typed lambda calculus with Boolean and tuple types and their usual associated operations; set types, and primitives for empty set, forming singleton sets, union of sets, and flatMap for iterating on sets;

Footnote 5

and base types with equality tests and comparison tests. Replace its set type with linearly ordered set types and assume that ordered sets, for computational complexity purposes, are traversed in order in linear time; this way, ordered sets can be thought of as lists. The replacement of set types by linearly ordered set types does not change the nature of

$\mathcal{NRC}$

is a simply-typed lambda calculus with Boolean and tuple types and their usual associated operations; set types, and primitives for empty set, forming singleton sets, union of sets, and flatMap for iterating on sets;

Footnote 5

and base types with equality tests and comparison tests. Replace its set type with linearly ordered set types and assume that ordered sets, for computational complexity purposes, are traversed in order in linear time; this way, ordered sets can be thought of as lists. The replacement of set types by linearly ordered set types does not change the nature of

![]() $\mathcal{NRC}$

in any drastic way, because

$\mathcal{NRC}$

in any drastic way, because

![]() $\mathcal{NRC}$

can express a linear ordering on any arbitrarily deeply nested combinations of tuple and set types given any linear orderings on base types; cf. Libkin & Wong (Reference Libkin and Wong1994). Next, restrict the language to its flat fragment; that is, nested sets are not allowed. This restriction has no impact on the extensional expressive power of

$\mathcal{NRC}$

can express a linear ordering on any arbitrarily deeply nested combinations of tuple and set types given any linear orderings on base types; cf. Libkin & Wong (Reference Libkin and Wong1994). Next, restrict the language to its flat fragment; that is, nested sets are not allowed. This restriction has no impact on the extensional expressive power of

![]() $\mathcal{NRC}$

with respect to functions on non-nested sets, as shown by Wong (Reference Wong1996). Denote this language as

$\mathcal{NRC}$

with respect to functions on non-nested sets, as shown by Wong (Reference Wong1996). Denote this language as

![]() $\mathcal{NRC}_1(\leq)$

, where the permitted extra primitives are listed explicitly between the brackets.

$\mathcal{NRC}_1(\leq)$

, where the permitted extra primitives are listed explicitly between the brackets.

Some terminologies are needed for stating the results. To begin, by an object, we mean the value of any combination of base types, tuples, and sets that is constructible in

![]() $\mathcal{NRC}_1(\leq)$

.

$\mathcal{NRC}_1(\leq)$

.

A level-0 atom of an object C is a constant c which has at least one occurrence in C that is not inside any set in C. A level-1 atom of an object C is a constant c which has at least one occurrence in C that is inside a set. The notations

![]() $atom^0(C)$

,

$atom^0(C)$

,

![]() $atom^1(C)$

, and

$atom^1(C)$

, and

![]() $atom^{\leq 1}(C),$

respectively, denote the set of level-0 atoms of C, the set of level-1 atoms of C, and their union. The level-0 molecules of an object C are the sets in C. The notation

$atom^{\leq 1}(C),$

respectively, denote the set of level-0 atoms of C, the set of level-1 atoms of C, and their union. The level-0 molecules of an object C are the sets in C. The notation

![]() $molecule^0(C)$

denotes the set of level-0 molecules of C. For example, suppose

$molecule^0(C)$

denotes the set of level-0 molecules of C. For example, suppose

![]() $C = (c_1, c_2, \{ (c_3, c_4), (c_5, c_6) \})$

, then

$C = (c_1, c_2, \{ (c_3, c_4), (c_5, c_6) \})$

, then

![]() $atom^0(C) = \{c_1, c_2\}$

,

$atom^0(C) = \{c_1, c_2\}$

,

![]() $atom^1(C) = \{c_3, c_4, c_5, c_6\}$

,

$atom^1(C) = \{c_3, c_4, c_5, c_6\}$

,

![]() $atom^{\leq 1}(C)$

$atom^{\leq 1}(C)$

![]() $= \{c_1, c_2, c_3, c_4, c_5, c_6\}$

, and

$= \{c_1, c_2, c_3, c_4, c_5, c_6\}$

, and

![]() $molecule^0(C) = \{ \{ (c_3, c_4), (c_5, c_6) \}\}$

.

$molecule^0(C) = \{ \{ (c_3, c_4), (c_5, c_6) \}\}$

.

The level-0 Gaifman graph of an object C is defined as an undirected graph

![]() $gaifman^0(C)$

whose nodes are the level-0 atoms of C, and edges are all the pairs of level-0 atoms of C. The level-1 Gaifman graph of an object C is defined as an undirected graph

$gaifman^0(C)$

whose nodes are the level-0 atoms of C, and edges are all the pairs of level-0 atoms of C. The level-1 Gaifman graph of an object C is defined as an undirected graph

![]() $gaifman^1(C)$

whose nodes are the level-1 atoms of C, and the edges are defined as follow: If

$gaifman^1(C)$

whose nodes are the level-1 atoms of C, and the edges are defined as follow: If

![]() $C =\{C_1,...,C_n\}$

, the edges are pairs (x,y) such that x and y are in the same

$C =\{C_1,...,C_n\}$

, the edges are pairs (x,y) such that x and y are in the same

![]() $atom^0(C_i)$

for some

$atom^0(C_i)$

for some

![]() $1\leq i\leq n$

; if

$1\leq i\leq n$

; if

![]() $C = (C_1,..., C_n)$

, the edges are pairs

$C = (C_1,..., C_n)$

, the edges are pairs

![]() $(x,y) \in gaifman^1(C_i)$

for some

$(x,y) \in gaifman^1(C_i)$

for some

![]() $1\leq i\leq n$

, and there are no other edges. The Gaifman graph of an object C is defined as

$1\leq i\leq n$

, and there are no other edges. The Gaifman graph of an object C is defined as

![]() $gaifman(C) = gaifman^0(C) \cup\ gaifman^1(C)$

; cf. Gaifman (Reference Gaifman1982). An example of a Gaifman graph is provided in Figure 2.

$gaifman(C) = gaifman^0(C) \cup\ gaifman^1(C)$

; cf. Gaifman (Reference Gaifman1982). An example of a Gaifman graph is provided in Figure 2.

Fig. 2. The Gaifman graph for an object

![]() $C = (c_1, c_2,\{ (c_3, c_4), (c_5, c_6) \}, \{ (c_3, c_7, c_8), (c_4, c_8, c_6) \})$

. This object C has two relations or level-0 molecules, underlined respectively in red and green in the figure; and two level-0 atoms, underlined in blue in the figure. An edge connecting two nodes indicates the two nodes are in the same tuple. The color of an edge is not part of the definition of a Gaifman graph, but is used here to help visualize the relation or molecule containing the tuple contributing that edge.

$C = (c_1, c_2,\{ (c_3, c_4), (c_5, c_6) \}, \{ (c_3, c_7, c_8), (c_4, c_8, c_6) \})$

. This object C has two relations or level-0 molecules, underlined respectively in red and green in the figure; and two level-0 atoms, underlined in blue in the figure. An edge connecting two nodes indicates the two nodes are in the same tuple. The color of an edge is not part of the definition of a Gaifman graph, but is used here to help visualize the relation or molecule containing the tuple contributing that edge.

Let

![]() $e(\vec{X})$

be an expression e whose free variables are

$e(\vec{X})$

be an expression e whose free variables are

![]() $\vec{X}$

. Let

$\vec{X}$

. Let

![]() $e[\vec{C}/\vec{X}]$

denote the closed expression obtained by replacing the free variables

$e[\vec{C}/\vec{X}]$

denote the closed expression obtained by replacing the free variables

![]() $\vec{X}$

by the corresponding objects

$\vec{X}$

by the corresponding objects

![]() $\vec{C}$

. Let

$\vec{C}$

. Let

![]() $e[\vec{C}/\vec{X}] \Downarrow C'$

mean the closed expression

$e[\vec{C}/\vec{X}] \Downarrow C'$

mean the closed expression

![]() $e[\vec{C}/\vec{X}]$

evaluates to the object C’; the evaluation is performed according to a typical call-by-value operational semantics (Wong Reference Wong2021).

$e[\vec{C}/\vec{X}]$

evaluates to the object C’; the evaluation is performed according to a typical call-by-value operational semantics (Wong Reference Wong2021).

It is shown by Wong (Reference Wong2021) that

![]() $\mathcal{NRC}_1(\leq)$

expressions can only manipulate their input in highly restricted local manners. In particular, expressions which have at most linear-time complexity are able to mix level-0 atoms with level-0 and level-1 atoms, but are unable to mix level-1 atoms with themselves.

$\mathcal{NRC}_1(\leq)$

expressions can only manipulate their input in highly restricted local manners. In particular, expressions which have at most linear-time complexity are able to mix level-0 atoms with level-0 and level-1 atoms, but are unable to mix level-1 atoms with themselves.

Lemma 3.1 (Wong Reference Wong2021, Lemma 3.1) Let

![]() $e(\vec{X})$

be an expression in

$e(\vec{X})$

be an expression in

![]() $\mathcal{NRC}_1(\leq)$

. Let objects

$\mathcal{NRC}_1(\leq)$

. Let objects

![]() $\vec{C}$

have the same types as

$\vec{C}$

have the same types as

![]() $\vec{X}$

, and

$\vec{X}$

, and

![]() $e[\vec{C}/\vec{X}] \Downarrow C'$

. Suppose

$e[\vec{C}/\vec{X}] \Downarrow C'$

. Suppose

![]() $e(\vec{X})$

has at most linear-time complexity with respect to the size of

$e(\vec{X})$

has at most linear-time complexity with respect to the size of

![]() $\vec{X}$

.

Footnote 6

Then for each

$\vec{X}$

.

Footnote 6

Then for each

![]() $(u,v) \in gaifman(C')$

, either

$(u,v) \in gaifman(C')$

, either

![]() $(u,v) \in gaifman(\vec{C})$

, or

$(u,v) \in gaifman(\vec{C})$

, or

![]() $u \in atom^0(\vec{C})$

and

$u \in atom^0(\vec{C})$

and

![]() $v \in atom^1(\vec{C})$

, or

$v \in atom^1(\vec{C})$

, or

![]() $u \in atom^1(\vec{C})$

and

$u \in atom^1(\vec{C})$

and

![]() $v \in atom^0(\vec{C})$

.

$v \in atom^0(\vec{C})$

.

Here is a grossly simplified informal argument to provide some insight on this “limited-mixing” lemma. Consider an expression

![]() $X\ \mathtt{flatMap}\ f$

, where X is the variable representing the input collection and f is a function to be performed on each element of X in the usual manner of flatMap. Then, the time complexity of this expression is

$X\ \mathtt{flatMap}\ f$

, where X is the variable representing the input collection and f is a function to be performed on each element of X in the usual manner of flatMap. Then, the time complexity of this expression is

![]() $O(n \cdot \hat{f})$

, where n is the number of items in X and

$O(n \cdot \hat{f})$

, where n is the number of items in X and

![]() $O(\hat{f})$

is the time complexity of f. Clearly,

$O(\hat{f})$

is the time complexity of f. Clearly,

![]() $O(n \cdot \hat{f})$

can be linear only when

$O(n \cdot \hat{f})$

can be linear only when

![]() $O(\hat{f}) = O(1)$

. Intuitively, this means f cannot have a subexpression of the form

$O(\hat{f}) = O(1)$

. Intuitively, this means f cannot have a subexpression of the form

![]() $X\ \mathtt{flatMap}\ g$

. Since flatMap is the sole construct in

$X\ \mathtt{flatMap}\ g$

. Since flatMap is the sole construct in

![]() $\mathcal{NRC}_1(\leq)$

for accessing and manipulating the elements of a collection, when f is passed an element of X, there is no way for it to access a different element of X if f does not have a subexpression of the form

$\mathcal{NRC}_1(\leq)$

for accessing and manipulating the elements of a collection, when f is passed an element of X, there is no way for it to access a different element of X if f does not have a subexpression of the form

![]() $X\ \mathtt{flatMap}\ g$

. So, it is not possible for f to mix the components from two different elements of X.

$X\ \mathtt{flatMap}\ g$

. So, it is not possible for f to mix the components from two different elements of X.

Unavoidably, many details are swept under the carpet in this informal argument, but are taken care of by Wong (Reference Wong2021).

This limited-mixing handicap remains when the language is further augmented with typical functions—such as dropWhile, takeWhile, and foldLeft—in collection-type libraries of modern programming languages. Even the presence of a fictitious operator, sort, for instantaneous sorting, cannot rescue the language from this handicap.

Lemma 3.2 (Wong Reference Wong2021, Lemma 5.1) Let

![]() $e(\vec{X})$

be an expression in

$e(\vec{X})$

be an expression in

![]() $\mathcal{NRC}_1(\leq \mathtt{takeWhile}, \mathtt{dropWhile}, \mathtt{sort})$

. Let objects

$\mathcal{NRC}_1(\leq \mathtt{takeWhile}, \mathtt{dropWhile}, \mathtt{sort})$

. Let objects

![]() $\vec{C}$

have the same types as

$\vec{C}$

have the same types as

![]() $\vec{X}$

, and

$\vec{X}$

, and

![]() $e[\vec{C}/\vec{X}] \Downarrow C'$

. Suppose

$e[\vec{C}/\vec{X}] \Downarrow C'$

. Suppose

![]() $e(\vec{X})$

has at most linear-time complexity. Then, there is a number k that depends only on

$e(\vec{X})$

has at most linear-time complexity. Then, there is a number k that depends only on

![]() $e(\vec{X})$

but not on

$e(\vec{X})$

but not on

![]() $\vec{C}$

, and a set

$\vec{C}$

, and a set

![]() $A \subseteq atom^{\leq 1}(\vec{C})$

where

$A \subseteq atom^{\leq 1}(\vec{C})$

where

![]() $|A| \leq k$

, and for each

$|A| \leq k$

, and for each

![]() $(u,v) \in gaifman(C')$

, either

$(u,v) \in gaifman(C')$

, either

![]() $(u,v) \in gaifman(\vec{C})$

, or

$(u,v) \in gaifman(\vec{C})$

, or

![]() $u \in atom^0(\vec{C})$

and

$u \in atom^0(\vec{C})$

and

![]() $v \in atom^1(\vec{C})$

, or

$v \in atom^1(\vec{C})$

, or

![]() $u \in atom^1(\vec{C})$

and

$u \in atom^1(\vec{C})$

and

![]() $v \in atom^0(\vec{C})$

, or

$v \in atom^0(\vec{C})$

, or

![]() $u \in A$

and

$u \in A$

and

![]() $v \in atom^1(\vec{C})$

, or

$v \in atom^1(\vec{C})$

, or

![]() $u \in atom^1(\vec{C})$

and

$u \in atom^1(\vec{C})$

and

![]() $v \in A$

.

$v \in A$

.

Lemma 3.3 (Wong Reference Wong2021, Lemma 5.4) Let

![]() $e(\vec{X})$

be an expression in

$e(\vec{X})$

be an expression in

![]() $\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

. Let objects

$\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

. Let objects

![]() $\vec{C}$

have the same types as

$\vec{C}$

have the same types as

![]() $\vec{X}$

, and

$\vec{X}$

, and

![]() $e[\vec{C}/\vec{X}] \Downarrow C'$

. Suppose

$e[\vec{C}/\vec{X}] \Downarrow C'$

. Suppose

![]() $e(\vec{X})$

has at most linear-time complexity. Then there is a number k that depends only on

$e(\vec{X})$

has at most linear-time complexity. Then there is a number k that depends only on

![]() $e(\vec{X})$

but not on

$e(\vec{X})$

but not on

![]() $\vec{C}$

, and a set

$\vec{C}$

, and a set

![]() $A \subseteq atom^{\leq 1}(\vec{C})$

where

$A \subseteq atom^{\leq 1}(\vec{C})$

where

![]() $|A| \leq k$

, such that for each

$|A| \leq k$

, such that for each

![]() $(u,v) \in gaifman(C')$

, either

$(u,v) \in gaifman(C')$

, either

![]() $(u,v) \in gaifman(\vec{C})$

, or

$(u,v) \in gaifman(\vec{C})$

, or

![]() $u \in atom^0(\vec{C})$

and

$u \in atom^0(\vec{C})$

and

![]() $v \in atom^1(\vec{C})$

, or

$v \in atom^1(\vec{C})$

, or

![]() $u \in atom^1(\vec{C})$

and

$u \in atom^1(\vec{C})$

and

![]() $v \in atom^0(\vec{C})$

, or

$v \in atom^0(\vec{C})$

, or

![]() $u \in A$

and

$u \in A$

and

![]() $v \in atom^1(\vec{C})$

, or

$v \in atom^1(\vec{C})$

, or

![]() $v \in A$

and

$v \in A$

and

![]() $u \in atom^1(\vec{C})$

.

$u \in atom^1(\vec{C})$

.

The inexpressibility of efficient algorithms for low-selectivity joins in

![]() $\mathcal{NRC}_1(\leq)$

,

$\mathcal{NRC}_1(\leq)$

,

![]() $\mathcal{NRC}_1(\leq, \mathtt{takeWhile}, \mathtt{dropWhile}, \mathtt{sort})$

, and

$\mathcal{NRC}_1(\leq, \mathtt{takeWhile}, \mathtt{dropWhile}, \mathtt{sort})$

, and

![]() $\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

can be deduced from Lemmas 3.1, 3.2, and 3.3. The argument for

$\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

can be deduced from Lemmas 3.1, 3.2, and 3.3. The argument for

![]() $\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

is provided here as an illustration. Let zip(xs, ys) be the query that pairs the ith element in xs with the ith element in ys, assuming that the two input collections xs and ys are sorted and have the same length. Without loss of generality, suppose the ith element in xs has the form

$\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

is provided here as an illustration. Let zip(xs, ys) be the query that pairs the ith element in xs with the ith element in ys, assuming that the two input collections xs and ys are sorted and have the same length. Without loss of generality, suppose the ith element in xs has the form

![]() $(o_i, x_i)$

and that in ys has the form

$(o_i, x_i)$

and that in ys has the form

![]() $(o_i, y_i)$

; suppose also that each

$(o_i, y_i)$

; suppose also that each

![]() $o_i$

occurs only once in xs and once in ys, each

$o_i$

occurs only once in xs and once in ys, each

![]() $x_i$

does not appear in ys, and each

$x_i$

does not appear in ys, and each

![]() $y_i$

does not appear in xs. Clearly, zip(xs, ys) is a low-selectivity join; in fact, its selectivity is precisely one. Let

$y_i$

does not appear in xs. Clearly, zip(xs, ys) is a low-selectivity join; in fact, its selectivity is precisely one. Let

![]() $\mathtt{xs} = \{(o_1, u_1)...,(o_n, u_n)\}$

and

$\mathtt{xs} = \{(o_1, u_1)...,(o_n, u_n)\}$

and

![]() $\mathtt{ys} = \{(o_1, v_1)...,(o_n, v_n)\}$

. Let

$\mathtt{ys} = \{(o_1, v_1)...,(o_n, v_n)\}$

. Let

![]() $C' = \{(u_1, o_1, o_1, v_1)...,(u_n, o_n, o_n, v_n)\}$

. Then zip(xs, ys)

$C' = \{(u_1, o_1, o_1, v_1)...,(u_n, o_n, o_n, v_n)\}$

. Then zip(xs, ys)

![]() $= C'$

. Then,

$= C'$

. Then,

![]() $gaifman(C') = \{(u_1, v_1)...,(u_n, v_n)\}\ \cup\ \Delta$

where

$gaifman(C') = \{(u_1, v_1)...,(u_n, v_n)\}\ \cup\ \Delta$

where

![]() $\Delta$

are the edges involving the

$\Delta$

are the edges involving the

![]() $o_j$

’s in gaifman(C’). Clearly, for

$o_j$

’s in gaifman(C’). Clearly, for

![]() $1 \leq i \leq n$

,

$1 \leq i \leq n$

,

![]() $(u_i, v_i) \in gaifman(C')$

but

$(u_i, v_i) \in gaifman(C')$

but

![]() $(u_i, v_i) \not\in gaifman(\mathtt{xs}, \mathtt{ys}) = \mathtt{xs} \cup \mathtt{ys}$

. Now, for a contradiction, suppose

$(u_i, v_i) \not\in gaifman(\mathtt{xs}, \mathtt{ys}) = \mathtt{xs} \cup \mathtt{ys}$

. Now, for a contradiction, suppose

![]() $\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

has a linear-time implementation for zip. Then, by Lemma 3.3, either

$\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

has a linear-time implementation for zip. Then, by Lemma 3.3, either

![]() $u_i \in atom^0(\mathtt{xs}, \mathtt{ys})$

, or

$u_i \in atom^0(\mathtt{xs}, \mathtt{ys})$

, or

![]() $v_i \in atom^0(\mathtt{xs}, \mathtt{ys})$

, or

$v_i \in atom^0(\mathtt{xs}, \mathtt{ys})$

, or

![]() $u_i \in A$

, or

$u_i \in A$

, or

![]() $v_i \in A$

for some A whose size is independent of xs and ys. However, xs and ys are both sets; thus,

$v_i \in A$

for some A whose size is independent of xs and ys. However, xs and ys are both sets; thus,

![]() $atom^o(\mathtt{xs}, \mathtt{ys}) = \{\}$

. This means A has to contain every

$atom^o(\mathtt{xs}, \mathtt{ys}) = \{\}$

. This means A has to contain every

![]() $u_i$

or

$u_i$

or

![]() $v_i$

. So,

$v_i$

. So,

![]() $|A| \geq n = |\mathtt{xs}| = |\mathtt{ys}|$

cannot be independent of xs and ys. This contradiction implies there is no linear-time implementation of zip in

$|A| \geq n = |\mathtt{xs}| = |\mathtt{ys}|$

cannot be independent of xs and ys. This contradiction implies there is no linear-time implementation of zip in

![]() $\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

.

$\mathcal{NRC}_1(\leq, \mathtt{foldleft}, \mathtt{sort})$

.

A careful reader may realize that

![]() $\mathcal{NRC}_1(\leq)$

does not have the head and tail primitives commonly provided for collection types in programming languages. However, the absence of head and tail in

$\mathcal{NRC}_1(\leq)$

does not have the head and tail primitives commonly provided for collection types in programming languages. However, the absence of head and tail in

![]() $\mathcal{NRC}_1(\leq)$

is irrelevant in the context of this paper. To see this, consider these two functions: take

$\mathcal{NRC}_1(\leq)$

is irrelevant in the context of this paper. To see this, consider these two functions: take

![]() $_n$

(xs) which returns in O(n) time the first n elements of xs, and drop

$_n$

(xs) which returns in O(n) time the first n elements of xs, and drop

![]() $_n$

(xs) which drops in O(n) time the first n elements of xs, when xs is ordered. So, head(xs) = take

$_n$

(xs) which drops in O(n) time the first n elements of xs, when xs is ordered. So, head(xs) = take

![]() $_1$

(xs) and tail(xs) = drop

$_1$

(xs) and tail(xs) = drop

![]() $_1$

(xs). The proof given by Wong (Reference Wong2021) for Lemma 3.2 can be copied almost verbatim to obtain an analogous limited-mixing result for

$_1$

(xs). The proof given by Wong (Reference Wong2021) for Lemma 3.2 can be copied almost verbatim to obtain an analogous limited-mixing result for

![]() $\mathcal{NRC}_1(\leq, \mathtt{take_n}, \mathtt{drop_n}, \mathtt{sort})$

.

$\mathcal{NRC}_1(\leq, \mathtt{take_n}, \mathtt{drop_n}, \mathtt{sort})$

.

Since zip is a manifestation of the intensional expressive power gap of

![]() $\mathcal{NRC}_1(\leq)$

and its extensions above, one might try to augment the language with zip as a primitive. This makes it trivial to supply an efficient implementation of zip. Unfortunately, this does not escape the limited-mixing handicap either.

$\mathcal{NRC}_1(\leq)$

and its extensions above, one might try to augment the language with zip as a primitive. This makes it trivial to supply an efficient implementation of zip. Unfortunately, this does not escape the limited-mixing handicap either.

Lemma 3.4 (Wong Reference Wong2021, Lemma 5.7) Let

![]() $e(\vec{X})$

be an expression in

$e(\vec{X})$

be an expression in

![]() $\mathcal{NRC}_1(\leq, \mathtt{zip}, \mathtt{sort})$

. Let objects

$\mathcal{NRC}_1(\leq, \mathtt{zip}, \mathtt{sort})$

. Let objects

![]() $\vec{C}$

have the same types as

$\vec{C}$

have the same types as

![]() $\vec{X}$

, and

$\vec{X}$

, and

![]() $e[\vec{C}/\vec{X}] \Downarrow C'$

. Suppose

$e[\vec{C}/\vec{X}] \Downarrow C'$

. Suppose

![]() $e(\vec{X})$

has at most linear-time complexity. Then, there is a number k that depends only on

$e(\vec{X})$

has at most linear-time complexity. Then, there is a number k that depends only on

![]() $e(\vec{X})$

but not on

$e(\vec{X})$

but not on

![]() $\vec{C}$

, and an undirected graph K where the nodes are a subset of

$\vec{C}$

, and an undirected graph K where the nodes are a subset of

![]() $atom^{\leq 1}(\vec{C})$

and each node w of K has degree at most n k, n is the number of times w appears in

$atom^{\leq 1}(\vec{C})$

and each node w of K has degree at most n k, n is the number of times w appears in

![]() $\vec{C}$

, such that for each

$\vec{C}$

, such that for each

![]() $(u,v) \in gaifman(C')$

, either

$(u,v) \in gaifman(C')$

, either

![]() $(u,v) \in gaifman(\vec{C}) \cup\ K$

, or

$(u,v) \in gaifman(\vec{C}) \cup\ K$

, or

![]() $u \in atom^0(\vec{C})$

and

$u \in atom^0(\vec{C})$

and

![]() $v \in atom^1(\vec{C})$

, or

$v \in atom^1(\vec{C})$

, or

![]() $u \in atom^1(\vec{C})$

and

$u \in atom^1(\vec{C})$

and

![]() $v \in atom^0(\vec{C})$

.

$v \in atom^0(\vec{C})$

.

It follows from Lemma 3.4 that there is no linear-time implementation of ov1(xs, ys) in

![]() $\mathcal{NRC}_1(\leq, \mathtt{zip}, \mathtt{sort})$

. To see this, suppose for a contradiction that there is an expression

$\mathcal{NRC}_1(\leq, \mathtt{zip}, \mathtt{sort})$

. To see this, suppose for a contradiction that there is an expression

![]() $f(\mathtt{xs}, \mathtt{ys})$

in

$f(\mathtt{xs}, \mathtt{ys})$

in

![]() $\mathcal{NRC}_1(\leq, \mathtt{zip}, \mathtt{sort})$

that implements ov1(xs, ys) with time complexity

$\mathcal{NRC}_1(\leq, \mathtt{zip}, \mathtt{sort})$

that implements ov1(xs, ys) with time complexity

![]() $O(|\mathtt{xs}| + h |\mathtt{ys}|)$

when each event in ys overlaps fewer than h events in xs. Let

$O(|\mathtt{xs}| + h |\mathtt{ys}|)$

when each event in ys overlaps fewer than h events in xs. Let

![]() $k_0$

be the k induced by Lemma 3.4 on f. Suppose without loss of generality that no start and end points in xs appears in ys, and vice versa. Then setting

$k_0$

be the k induced by Lemma 3.4 on f. Suppose without loss of generality that no start and end points in xs appears in ys, and vice versa. Then setting

![]() $h > k_0$

produces the desired contradiction.

$h > k_0$

produces the desired contradiction.

![]() $\mathcal{NRC}_1(\leq)$

is designed to express the same functions and algorithms that first-order restricted Scala is able to express. A bare-bone fragment of Scala that corresponds to

$\mathcal{NRC}_1(\leq)$

is designed to express the same functions and algorithms that first-order restricted Scala is able to express. A bare-bone fragment of Scala that corresponds to

![]() $\mathcal{NRC}_1(\leq)$

can be described as follows. In terms of data types: Base types such as Boolean, Int, and String are included. The operators on base types are restricted to

$\mathcal{NRC}_1(\leq)$

can be described as follows. In terms of data types: Base types such as Boolean, Int, and String are included. The operators on base types are restricted to

![]() $=$

and

$=$

and

![]() $\leq$

tests. Other operators on base types (e.g., functions from base types to base types) can generally be included without affecting the limited-mixing lemmas. Tuple types over base types (i.e., all tuple components are base types) are included. The operators on tuple types are the tuple constructor and the tuple projection. A collection type is included, and the Scala

$\leq$

tests. Other operators on base types (e.g., functions from base types to base types) can generally be included without affecting the limited-mixing lemmas. Tuple types over base types (i.e., all tuple components are base types) are included. The operators on tuple types are the tuple constructor and the tuple projection. A collection type is included, and the Scala

![]() $\mathtt{Vector[\cdot]}$

is a convenient choice as a generic collection type; however, only vectors of base types and vectors of tuples of base types are included. The operators on vectors are the vector constructor, the flatMap on vectors, the vector append ++, and the vector emptiness test; when restricted to these operators, vectors essentially behave as sets. It is also possible to use other Scala collection types—for example,

$\mathtt{Vector[\cdot]}$

is a convenient choice as a generic collection type; however, only vectors of base types and vectors of tuples of base types are included. The operators on vectors are the vector constructor, the flatMap on vectors, the vector append ++, and the vector emptiness test; when restricted to these operators, vectors essentially behave as sets. It is also possible to use other Scala collection types—for example,

![]() $\mathtt{List[\cdot]}$

—instead of

$\mathtt{List[\cdot]}$

—instead of

![]() $\mathtt{Vector[\cdot]}$

, so long as the operators are restricted to a constructor, append ++, and emptiness test. Some other common operators on collection types, for example, head and tail, can also be included, though adding these would make the language correspond to

$\mathtt{Vector[\cdot]}$

, so long as the operators are restricted to a constructor, append ++, and emptiness test. Some other common operators on collection types, for example, head and tail, can also be included, though adding these would make the language correspond to

![]() $\mathcal{NRC}_1(\leq, \mathtt{take}_1, \mathtt{drop}_1)$

instead of

$\mathcal{NRC}_1(\leq, \mathtt{take}_1, \mathtt{drop}_1)$

instead of

![]() $\mathcal{NRC}_1(\leq),$

and, as explained earlier, this does not impact the limited-mixing lemmas. In terms of general programming constructs: Defining functions whose return types are any of the data types above (i.e., return types are not allowed to be function types and nested collection types), making function calls, and using comprehension syntax and if-then-else are all permitted. Although pared to such a bare bone, this highly restricted form of Scala retains sufficient expressive power; for example, all flat relational queries can be easily expressed using it. In the rest of this manuscript, we say “first-order restricted Scala” to refer to this bare-bone fragment.

$\mathcal{NRC}_1(\leq),$

and, as explained earlier, this does not impact the limited-mixing lemmas. In terms of general programming constructs: Defining functions whose return types are any of the data types above (i.e., return types are not allowed to be function types and nested collection types), making function calls, and using comprehension syntax and if-then-else are all permitted. Although pared to such a bare bone, this highly restricted form of Scala retains sufficient expressive power; for example, all flat relational queries can be easily expressed using it. In the rest of this manuscript, we say “first-order restricted Scala” to refer to this bare-bone fragment.

Thus, Lemma 3.1 implies there is no efficient implementation of low-selectivity joins, including ov1(xs, ys), in first-order restricted Scala. Lemma 3.2 implies there is no efficient implementation of low-selectivity joins in first-order restricted Scala even when the programmer is given access to takeWhile and dropWhile. Lemma 3.3 implies there is no efficient implementation of low-selectivity joins in first-order restricted Scala even when the programmer is given access to foldLeft. Lemma 3.4 implies there is no efficient implementation of low-selectivity joins in first-order restricted Scala even when the programmer is given access to zip. Moreover, these limitations remain even when the programmer is further given the magical ability to do sorting infinitely fast.

4 Synchrony fold

Comprehension syntax is typically translated into nested flatMap’s, each flatMap iterating independently on a single collection. Consequently, like any function defined using comprehension syntax, the function ov1(xs, ys) in Figure 1 is forced to use nested loops to process its input. While it is able to return correct results even for an unsorted input, it overkills and overpays a price in its quadratic time complexity when its input is already appropriately sorted. In fact, ov1(xs, ys) is still overpaying the quadratic-time complexity price when its input is unsorted, because sorting can always be performed when needed for a relatively affordable linearithmic overhead.

In contrast, the function ov2(xs, ys) in Figure 1 is linear in time complexity when selectivity is low, which is much more efficient than ov1(xs, ys). There is one fundamental explanation for this efficiency: The inputs xs and ys are sorted, and ov2(xs, ys) directly exploits this sortedness to iterate on xs and ys in “synchrony,” that is in a coordinated manner, akin to the merge step in a merge sort (Knuth Reference Knuth1973) or a merge join (Blasgen & Eswaran Reference Blasgen and Eswaran1977; Mishra & Eich Reference Mishra and Eich1992). However, its codes are harder to understand and to get right.It is desirable to have an easy-to-understand-and-check linear-time implementation that is as efficient as ov2(xs, ys) but using only comprehension syntax, without the acrobatics of recursive functions, while loops, etc. This leads us to the concepts of Synchrony fold, Synchrony generator, and Synchrony iterator. Synchrony fold is presented in this section. Synchrony generator and iterator are presented later in Sections 5 and 6, respectively.

4.1 Theory of Synchrony fold

The function ov2(xs, ys) exploits the sortedness and the relationship between the orderings of xs and ys. In Scala’s collection-type function libraries, functions such as foldLeft are also able to exploit the sortedness of their input. Yet there is no way of individually using foldLeft and other collection-type library functions mentioned earlier—as suggested by Lemmas 3.2, 3.3, and 3.4—to obtain linear-time implementation of low-selectivity joins, without defining recursive functions, while-loops, etc. The main reason is that these library functions are mostly defined on a single input collection. Hence, it is hard for them to exploit the relationship between the orderings on two collections. And there is no obvious way to process two collections using any one of these library functions alone, other than in a nested-loop manner, unless the ambient programming language has more sophisticated ways to compile comprehensions (Wadler & Peyton Jones Reference Wadler and Peyton Jones2007; Marlow et al. Reference Marlow, Peyton-Jones, Kmett and Mokhov2016), or unless multiple library functions are used together.

Scala’s collection-type libraries do provide the function zip which pairs up elements of two collections according to their physical position in the two collections, viz. first with first, second with second, and so on. However, by Lemma 3.4, this mechanical pairing by zip cannot be used to implement efficient low-selectivity joins, which require more general notions of pairing where pairs can form from different positions in the two collections.

So, we propose syncFold, a generalization of foldLeft that iterates on two collections in a more flexible and synchronized manner. For this, we need to relate positions in two collections by introducing two logical predicates isBefore(y, x) and canSee(y, x), which are supplied to syncFold as two of its arguments. Informally, isBefore(y, x) means that, when we are iterating on two collections xs and ys, in a synchronized manner, we should encounter the item y in ys before we encounter the item x in xs. And canSee(y, x) means that the item y in ys corresponds to or matches the item x in xs; in other words, x and y form a pair which is of interest. Note that an item y “corresponds to or matches” an item x does not necessarily mean the two items are the same. For example, when items are events as defined in Section 2, in the context of ov1 and ov2, an event y corresponds to or matches an event x means the two events overlap each other. Obviously, an item does not need to be an atomic object; it can be a tuple or an object having a more complex type.

The isBefore(y, x) and canSee(y, x) predicates are characterized by the monotonicity and antimonotonicity conditions defined below and depicted in Figure 3. To provide formal definitions, let the notation

![]() $(\mathtt{x} \ll \mathtt{y}\ |\ \mathtt{zs})$

mean “an occurrence of x appears physically before an occurrence of y in the collection zs.” That is,

$(\mathtt{x} \ll \mathtt{y}\ |\ \mathtt{zs})$

mean “an occurrence of x appears physically before an occurrence of y in the collection zs.” That is,

![]() $(\mathtt{x} \ll \mathtt{y}\ |\ \mathtt{zs})$

if and only if there are

$(\mathtt{x} \ll \mathtt{y}\ |\ \mathtt{zs})$

if and only if there are

![]() $i < j$

such that

$i < j$

such that

![]() $\mathtt{x} = \mathtt{z_i}$

and

$\mathtt{x} = \mathtt{z_i}$

and

![]() $\mathtt{y} = \mathtt{z_j}$

, where z

$\mathtt{y} = \mathtt{z_j}$

, where z

![]() $_1$

, z

$_1$

, z

![]() $_2$

, …, z

$_2$

, …, z

![]() $_n$

are the items in zs listed in their order of appearance in zs. Note that

$_n$

are the items in zs listed in their order of appearance in zs. Note that

![]() $(\mathtt{x} \ll \mathtt{x}\ |\ \mathtt{zs})$

if and only if x occurs at least twice in zs.

$(\mathtt{x} \ll \mathtt{x}\ |\ \mathtt{zs})$

if and only if x occurs at least twice in zs.

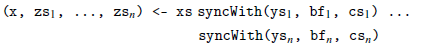

Fig. 3. Visualization of monotonicity and antimonotonicity. Two collections xs and ys are sorted according to some orderings, as denoted by the two arrows. The isBefore predicate is represented by the relative horizontal positions of items x

![]() $_i$

and y

$_i$

and y

![]() $_j$

; that is, if y

$_j$

; that is, if y

![]() $_j$

has a horizontal position to the left of x

$_j$

has a horizontal position to the left of x

![]() $_i$

, then y

$_i$

, then y

![]() $_j$

is before x

$_j$

is before x

![]() $_i$

. The canSee predicate is represented by the shaded green areas. (a) If y

$_i$

. The canSee predicate is represented by the shaded green areas. (a) If y

![]() $_1$

is before x

$_1$

is before x

![]() $_1$

and cannot see x

$_1$

and cannot see x

![]() $_1$

, then y

$_1$

, then y

![]() $_1$

is also before and cannot see any x

$_1$

is also before and cannot see any x

![]() $_2$

which comes after x

$_2$

which comes after x

![]() $_1$

. So, every x

$_1$

. So, every x

![]() $_i$

that matches y

$_i$

that matches y

![]() $_1$

has been seen; it is safe to move forward to y

$_1$

has been seen; it is safe to move forward to y

![]() $_2$

. (b) If y

$_2$

. (b) If y

![]() $_1$

is not before x

$_1$

is not before x

![]() $_1$

and cannot see x

$_1$

and cannot see x

![]() $_1$

, then any y

$_1$

, then any y

![]() $_2$

which comes after y

$_2$

which comes after y

![]() $_1$

is also not before and cannot see x

$_1$

is also not before and cannot see x

![]() $_1$

. So, every y

$_1$

. So, every y

![]() $_j$

that matches x

$_j$

that matches x

![]() $_1$

has been seen; it is safe to move forward to x

$_1$

has been seen; it is safe to move forward to x

![]() $_2$

.

$_2$

.

Also, a sorting key of a collection zs is a function

![]() $\phi(\cdot)$

with an associated linear ordering

$\phi(\cdot)$

with an associated linear ordering

![]() $<_{\phi}$

on its codomain such that, for every pair of items x and y in zs where

$<_{\phi}$

on its codomain such that, for every pair of items x and y in zs where

![]() $\phi(\mathtt{x}) \neq \phi(\mathtt{y})$

, it is the case that

$\phi(\mathtt{x}) \neq \phi(\mathtt{y})$

, it is the case that

![]() $(\mathtt{x} \ll \mathtt{y}\ |\ \mathtt{zs})$

if and only if

$(\mathtt{x} \ll \mathtt{y}\ |\ \mathtt{zs})$

if and only if

![]() $\phi(\mathtt{x}) <_{\phi} \phi(\mathtt{y})$

. Note that a collection may have zero, one, or more sorting keys. Two sorting keys

$\phi(\mathtt{x}) <_{\phi} \phi(\mathtt{y})$

. Note that a collection may have zero, one, or more sorting keys. Two sorting keys

![]() $\mathtt{\phi(\cdot)}$

and

$\mathtt{\phi(\cdot)}$

and

![]() $\mathtt{\psi(\cdot)}$

are said to have comparable codomains if their associated linear orderings are identical; that is for every zITB and z’,

$\mathtt{\psi(\cdot)}$

are said to have comparable codomains if their associated linear orderings are identical; that is for every zITB and z’,

![]() $\mathtt{z} <_{\phi} \mathtt{z}'$

if and only if

$\mathtt{z} <_{\phi} \mathtt{z}'$

if and only if

![]() $\mathtt{z} <_{\psi} \mathtt{z}'$

. For convenience, in this situation, we write

$\mathtt{z} <_{\psi} \mathtt{z}'$

. For convenience, in this situation, we write

![]() $<$

to refer to

$<$

to refer to

![]() $<_{\phi}$

and

$<_{\phi}$

and

![]() $<_{\psi}$

.

$<_{\psi}$

.

Definition 4.1 (Monotonicity of isBefore) An isBefore predicate is monotonic with respect to two collections

![]() $(\mathtt{xs}, \mathtt{ys})$

, which are not necessarily of the same type, if it satisfies the conditions below.

$(\mathtt{xs}, \mathtt{ys})$

, which are not necessarily of the same type, if it satisfies the conditions below.

-

1. If

$(\mathtt{x} \ll \mathtt{x}'\ |\ \mathtt{xs})$

, then for all y in ys: isBefore(y, x) implies isBefore(y, x’).

$(\mathtt{x} \ll \mathtt{x}'\ |\ \mathtt{xs})$

, then for all y in ys: isBefore(y, x) implies isBefore(y, x’). -

2. If

$(\mathtt{y}' \ll \mathtt{y}\ |\ \mathtt{ys})$

, then for all x in xs: isBefore(y, x) implies

$(\mathtt{y}' \ll \mathtt{y}\ |\ \mathtt{ys})$

, then for all x in xs: isBefore(y, x) implies

$\mathtt{isBefore(y', x)}$

.

$\mathtt{isBefore(y', x)}$

.

Definition 4.2 (Antimonotonicity of canSee) Let isBefore be monotonic with respect to

![]() $(\mathtt{xs}, \mathtt{ys})$

. A canSee predicate is antimonotonic with respect to isBefore if it satisfies the conditions below.

$(\mathtt{xs}, \mathtt{ys})$

. A canSee predicate is antimonotonic with respect to isBefore if it satisfies the conditions below.

-

1. If

$(\mathtt{x} \ll \mathtt{x}'\ |\ \mathtt{xs})$

, then for all y in ys: isBefore(y, x) and not canSee(y, x) implies not canSee(y, x’).

$(\mathtt{x} \ll \mathtt{x}'\ |\ \mathtt{xs})$

, then for all y in ys: isBefore(y, x) and not canSee(y, x) implies not canSee(y, x’). -

2. If

$(\mathtt{y} \ll \mathtt{y}'\ |\ \mathtt{ys})$

, then for all x in xs: not isBefore(y, x) and not canSee(y, x) implies not canSee(y’, x).

$(\mathtt{y} \ll \mathtt{y}'\ |\ \mathtt{ys})$

, then for all x in xs: not isBefore(y, x) and not canSee(y, x) implies not canSee(y’, x).

To appreciate the monotonicity conditions, imagine two collections xs and ys are being merged without duplicate elimination into a combined list zs, in a manner that is consistent with the isBefore predicate and the physical order of appearance in xs and ys. To do this, let xs comprises x

![]() $_1$

, x

$_1$

, x

![]() $_2$

, …, x

$_2$

, …, x

![]() $_m$

as its elements and

$_m$

as its elements and

![]() $(\mathtt{x_1} \ll \mathtt{x_2} \ll \cdots \ll \mathtt{x_m} | \mathtt{xs})$

; let ys comprises y

$(\mathtt{x_1} \ll \mathtt{x_2} \ll \cdots \ll \mathtt{x_m} | \mathtt{xs})$

; let ys comprises y

![]() $_1$

, y

$_1$

, y

![]() $_2$

, …, y

$_2$

, …, y

![]() $_n$

as its elements and

$_n$

as its elements and

![]() $(\mathtt{y_1} \ll \mathtt{y_2} \ll \cdots \ll \mathtt{y_n} | \mathtt{ys})$

; and let z

$(\mathtt{y_1} \ll \mathtt{y_2} \ll \cdots \ll \mathtt{y_n} | \mathtt{ys})$

; and let z

![]() $_i$

denote the ith element of zs. As there is no duplicate elimination, each z

$_i$

denote the ith element of zs. As there is no duplicate elimination, each z

![]() $_i$

is necessarily a choice between some element x

$_i$

is necessarily a choice between some element x

![]() $_j$

in xs and y

$_j$

in xs and y

![]() $_k$

in ys, and

$_k$

in ys, and

![]() $i = j + k - 1$

, unless all elements of xs or ys have already been chosen earlier. Let

$i = j + k - 1$

, unless all elements of xs or ys have already been chosen earlier. Let

![]() $\alpha(i)$

be the index of the element x

$\alpha(i)$

be the index of the element x

![]() $_j$

, that is j; and

$_j$

, that is j; and

![]() $\beta(i)$

be the index of the element y

$\beta(i)$

be the index of the element y

![]() $_k$

, that is k. Obviously,

$_k$

, that is k. Obviously,

![]() $\alpha(1) = \beta(1) = 1$

. And zs is necessarily constructed as follows: If

$\alpha(1) = \beta(1) = 1$

. And zs is necessarily constructed as follows: If

![]() $\alpha(i) > m$

or isBefore(

$\alpha(i) > m$

or isBefore(

![]() $\mathtt{y}_{\beta(i)}$

,

$\mathtt{y}_{\beta(i)}$

,

![]() $\mathtt{x}_{\alpha(i)}$

), then z

$\mathtt{x}_{\alpha(i)}$

), then z

![]() $_i$

=

$_i$

=

![]() $\mathtt{y}_{\beta(i)}$

,

$\mathtt{y}_{\beta(i)}$

,

![]() $\alpha(i+1) = \alpha(i)$

, and

$\alpha(i+1) = \alpha(i)$

, and

![]() $\beta(i+1) = \beta(i) + 1$

; otherwise, z

$\beta(i+1) = \beta(i) + 1$

; otherwise, z

![]() $_i$

= x

$_i$

= x

![]() $_{\alpha(i)}$

,

$_{\alpha(i)}$

,

![]() $\alpha(i+1) = \alpha(i) + 1$

, and

$\alpha(i+1) = \alpha(i) + 1$

, and

![]() $\beta(i+1) = \beta(i)$

.

$\beta(i+1) = \beta(i)$

.

Notice that in constructing zs above, only the isBefore predicate is used. The existence of a monotonic predicate isBefore with respect to

![]() $(\mathtt{xs}, \mathtt{ys})$

does not require xs and ys to be ordered by any sorting keys. For example, an “always true” isBefore predicate simply puts all elements of ys before all elements of xs when merging them into zs as described above. However, such trivial isBefore predicates have limited use.

$(\mathtt{xs}, \mathtt{ys})$

does not require xs and ys to be ordered by any sorting keys. For example, an “always true” isBefore predicate simply puts all elements of ys before all elements of xs when merging them into zs as described above. However, such trivial isBefore predicates have limited use.

When xs and ys are ordered by some sorting keys, more useful monotonic isBefore predicates are definable. For example, as an easy corollary of the construction of zs above, if xs and ys are ordered according to some sorting keys

![]() $\phi(\cdot)$

and

$\phi(\cdot)$

and

![]() $\psi(\cdot)$

with comparable codomains (i.e.,

$\psi(\cdot)$

with comparable codomains (i.e.,

![]() $<_{\phi}$

and

$<_{\phi}$

and

![]() $<_{\psi}$

are identical and thus can be denoted simply as

$<_{\psi}$

are identical and thus can be denoted simply as

![]() $<$

), then a predicate defined as

$<$

), then a predicate defined as

![]() $\mathtt{isBefore(y, x)} = \psi(\mathtt{y}) < \phi(\mathtt{x})$

is guaranteed monotonic with respect to

$\mathtt{isBefore(y, x)} = \psi(\mathtt{y}) < \phi(\mathtt{x})$

is guaranteed monotonic with respect to

![]() $(\mathtt{xs}, \mathtt{ys})$

. To see this, without loss of generality, suppose for a contradiction that

$(\mathtt{xs}, \mathtt{ys})$

. To see this, without loss of generality, suppose for a contradiction that

![]() $(\mathtt{x_i} \ll \mathtt{x_j} | \mathtt{xs})$

,

$(\mathtt{x_i} \ll \mathtt{x_j} | \mathtt{xs})$

,

![]() $\phi(\mathtt{x}_i) \neq \phi(\mathtt{x}_j)$

, and isBefore(y, x)

$\phi(\mathtt{x}_i) \neq \phi(\mathtt{x}_j)$

, and isBefore(y, x)

![]() $_i$

, but not isBefore(y, x)

$_i$

, but not isBefore(y, x)

![]() $_j$

. This means

$_j$

. This means

![]() $\phi(\mathtt{x}_i) < \phi(\mathtt{x}_j)$

,

$\phi(\mathtt{x}_i) < \phi(\mathtt{x}_j)$

,

![]() $\psi(\mathtt{y}) < \phi(\mathtt{x}_i)$

, but

$\psi(\mathtt{y}) < \phi(\mathtt{x}_i)$

, but

![]() $\psi(\mathtt{y}) \not< \phi(\mathtt{x}_j)$

. This gives the desired contradiction that

$\psi(\mathtt{y}) \not< \phi(\mathtt{x}_j)$

. This gives the desired contradiction that

![]() $\phi(\mathtt{x}_j) < \phi(\mathtt{x}_j)$

. This

$\phi(\mathtt{x}_j) < \phi(\mathtt{x}_j)$

. This

![]() $\mathtt{isBefore(y, x)} = \psi(\mathtt{y}) < \phi(\mathtt{x})$

is a natural bridge between the two sorted collections. Specifically, define

$\mathtt{isBefore(y, x)} = \psi(\mathtt{y}) < \phi(\mathtt{x})$

is a natural bridge between the two sorted collections. Specifically, define

![]() $\omega(i) = \phi(\mathtt{z}_i)$

if

$\omega(i) = \phi(\mathtt{z}_i)$

if

![]() $\mathtt{z}_i$

is from xs and

$\mathtt{z}_i$

is from xs and

![]() $\omega(i) = \psi(\mathtt{z}_i)$

if

$\omega(i) = \psi(\mathtt{z}_i)$

if

![]() $\mathtt{z}_i$

is from ys; and let

$\mathtt{z}_i$

is from ys; and let

![]() $\omega(\mathtt{zs})$

denote the collection comprising

$\omega(\mathtt{zs})$

denote the collection comprising

![]() $\omega(1)$

, …,

$\omega(1)$

, …,

![]() $\omega(n+m)$

in this order. Then,

$\omega(n+m)$

in this order. Then,

![]() $(\omega(i) \ll \omega(j)\ |\ \omega(\mathtt{zs}))$

implies

$(\omega(i) \ll \omega(j)\ |\ \omega(\mathtt{zs}))$

implies

![]() $\omega(i) \leq \omega(j)$

. That is,

$\omega(i) \leq \omega(j)$

. That is,

![]() $\omega(\mathtt{zs})$

is linearly ordered by

$\omega(\mathtt{zs})$

is linearly ordered by

![]() $<$

, the associated linear ordering shared by the two sorting keys

$<$

, the associated linear ordering shared by the two sorting keys

![]() $\phi(\cdot)$

and

$\phi(\cdot)$

and

![]() $\psi(\cdot)$

of xs and ys.

$\psi(\cdot)$

of xs and ys.

To appreciate the antimonotonicity conditions, one may eliminate the double negatives and read these antimonotonicity conditions as: (1) If isBefore(y, x) and

![]() $(\mathtt{x} \ll \mathtt{x}'\ |\ \mathtt{xs})$

, then canSee(y, x’) implies canSee(y, x); and (2) If not isBefore(y, x) and

$(\mathtt{x} \ll \mathtt{x}'\ |\ \mathtt{xs})$

, then canSee(y, x’) implies canSee(y, x); and (2) If not isBefore(y, x) and

![]() $(\mathtt{y} \ll \mathtt{y}'\ |\ \mathtt{ys})$

, then canSee(y’, x) implies canSee(y, x). Imagine that the x’s and y’s are placed on the same straight line, from left to right, in a manner consistent with isBefore. Then, if canSee is antimonotonic to isBefore, its antimonotonicity implies a “right-sided” convexity. That is, if y can see an item x of xs to its right, then it can see all xs items between itself and this x. Similarly, if x can be seen by an item y of ys to its right, then it can be seen by all ys items between itself and this y. No “left-sided” convexity is required or implied however.

$(\mathtt{y} \ll \mathtt{y}'\ |\ \mathtt{ys})$

, then canSee(y’, x) implies canSee(y, x). Imagine that the x’s and y’s are placed on the same straight line, from left to right, in a manner consistent with isBefore. Then, if canSee is antimonotonic to isBefore, its antimonotonicity implies a “right-sided” convexity. That is, if y can see an item x of xs to its right, then it can see all xs items between itself and this x. Similarly, if x can be seen by an item y of ys to its right, then it can be seen by all ys items between itself and this y. No “left-sided” convexity is required or implied however.

It follows that any canSee predicate which is reflexive and convex always satisfies the antimonotonicity conditions when isBefore satisfies the monotonicity conditions. So, we can try checking convexity and reflexivity of canSee first, which is a more intuitive task. Moreover, though this will not be discussed here, certain optimizations—which are useful in a parallel distributed setting—are enabled when canSee is reflexive and convex. Nonetheless, we must stress that the converse is not true. That is, an antimonotonic canSee predicate needs not be reflexive or convex; for example, the overlap(y, x) predicate from Figure 1 is an example of a nonconvex antimonotonic predicate, and the inequality

![]() $m < n$

of two integers is an example of a nonreflexive convex antimonotonic predicate.

$m < n$

of two integers is an example of a nonreflexive convex antimonotonic predicate.

Proposition 4.3 (Reflexivity and convexity imply antimonotonicity) Let xs and ys be two collections, which are not necessarily of the same type. Let zs be a collection of some arbitrary type. Let

![]() $\phi: \mathtt{xs} \to \mathtt{zs}$

be a sorting key of xs and

$\phi: \mathtt{xs} \to \mathtt{zs}$

be a sorting key of xs and

![]() $\psi: \mathtt{ys} \to \mathtt{zs}$

be a sorting key of ys. Then isBefore is monotonic with respect to

$\psi: \mathtt{ys} \to \mathtt{zs}$

be a sorting key of ys. Then isBefore is monotonic with respect to

![]() $(\mathtt{xs}, \mathtt{ys})$

, and canSee is antimonotonic with respect to isBefore, if there are predicates

$(\mathtt{xs}, \mathtt{ys})$

, and canSee is antimonotonic with respect to isBefore, if there are predicates

![]() $<_{\mathtt{zs}}$

and

$<_{\mathtt{zs}}$

and

![]() $\triangleleft_{\mathtt{zs}}$

such that all the conditions below are satisfied.

$\triangleleft_{\mathtt{zs}}$

such that all the conditions below are satisfied.

-

1.

$\phi$

preserves order:

$\phi$

preserves order:

$(\mathtt{x} \ll \mathtt{x}'\ |\ \mathtt{xs})$

implies

$(\mathtt{x} \ll \mathtt{x}'\ |\ \mathtt{xs})$

implies

$(\phi(\mathtt{x}) \ll \phi(\mathtt{x}')\ |\ \mathtt{zs})$

$(\phi(\mathtt{x}) \ll \phi(\mathtt{x}')\ |\ \mathtt{zs})$

-

2.

$\psi$

preserves order:

$\psi$

preserves order:

$(\mathtt{y} \ll \mathtt{y}'\ |\ \mathtt{ys})$

implies

$(\mathtt{y} \ll \mathtt{y}'\ |\ \mathtt{ys})$

implies

$(\psi(\mathtt{y}) \ll \psi(\mathtt{y}')\ |\ \mathtt{zs})$

$(\psi(\mathtt{y}) \ll \psi(\mathtt{y}')\ |\ \mathtt{zs})$

-

3.

$<_{\mathtt{zs}}$

preserves isBefore: isBefore(y, x) if and only if

$<_{\mathtt{zs}}$

preserves isBefore: isBefore(y, x) if and only if

$\psi(\mathtt{y}) <_{\mathtt{zs}} \phi(\mathtt{x})$

$\psi(\mathtt{y}) <_{\mathtt{zs}} \phi(\mathtt{x})$

-

4.

$<_{\mathtt{zs}}$

is monotonic with respect to

$<_{\mathtt{zs}}$

is monotonic with respect to

$(\mathtt{zs}, \mathtt{zs})$

$(\mathtt{zs}, \mathtt{zs})$

-

5.

$\triangleleft_{\mathtt{zs}}$

preserves canSee: canSee(y, x) if and only if

$\triangleleft_{\mathtt{zs}}$

preserves canSee: canSee(y, x) if and only if

$\psi(\mathtt{y}) \triangleleft_{\mathtt{zs}} \phi(\mathtt{x})$

$\psi(\mathtt{y}) \triangleleft_{\mathtt{zs}} \phi(\mathtt{x})$

-

6.

$\triangleleft_{\mathtt{zs}}$

is reflexive: for all z in zs,

$\triangleleft_{\mathtt{zs}}$

is reflexive: for all z in zs,

$\mathtt{z} \triangleleft_{\mathtt{zs}} \mathtt{z}'$

$\mathtt{z} \triangleleft_{\mathtt{zs}} \mathtt{z}'$

-

7.

$\triangleleft_{\mathtt{zs}}$

is convex: for all z

$\triangleleft_{\mathtt{zs}}$

is convex: for all z

$_0$

in zs and

$_0$

in zs and

$(\mathtt{z} \ll \mathtt{z}' \ll \mathtt{z}''\ |\ \mathtt{zs})$

,

$(\mathtt{z} \ll \mathtt{z}' \ll \mathtt{z}''\ |\ \mathtt{zs})$

,

$\mathtt{z} \triangleleft_{\mathtt{zs}} \mathtt{z}_0$

and

$\mathtt{z} \triangleleft_{\mathtt{zs}} \mathtt{z}_0$

and

$\mathtt{z}'' \triangleleft_{\mathtt{zs}} \mathtt{z}_0$

implies

$\mathtt{z}'' \triangleleft_{\mathtt{zs}} \mathtt{z}_0$

implies

$\mathtt{z}' \triangleleft_{\mathtt{zs}} \mathtt{z}_0$

; and

$\mathtt{z}' \triangleleft_{\mathtt{zs}} \mathtt{z}_0$

; and

$\mathtt{z}_0 \triangleleft_{\mathtt{zs}} \mathtt{z}$

and

$\mathtt{z}_0 \triangleleft_{\mathtt{zs}} \mathtt{z}$

and

$\mathtt{z}_0 \triangleleft_{\mathtt{zs}} \mathtt{z}''$

implies

$\mathtt{z}_0 \triangleleft_{\mathtt{zs}} \mathtt{z}''$

implies

$\mathtt{z}_0 \triangleleft_{\mathtt{zs}} \mathtt{z}'$

$\mathtt{z}_0 \triangleleft_{\mathtt{zs}} \mathtt{z}'$

In particular, when

![]() $\mathtt{xs} = \mathtt{ys} = \mathtt{zs}$

, and

$\mathtt{xs} = \mathtt{ys} = \mathtt{zs}$

, and

![]() $\mathtt{isBefore}$

is monotonic with respect to

$\mathtt{isBefore}$

is monotonic with respect to

![]() $(\mathtt{xs}, \mathtt{ys})$

and thus

$(\mathtt{xs}, \mathtt{ys})$

and thus

![]() $(\mathtt{zs}, \mathtt{zs})$

, conditions 1 to 5 above are trivially satisfied by setting the identity function as

$(\mathtt{zs}, \mathtt{zs})$

, conditions 1 to 5 above are trivially satisfied by setting the identity function as

![]() $\phi$

and

$\phi$

and

![]() $\psi$

, isBefore as

$\psi$

, isBefore as

![]() $<_{\mathtt{zs}}$

, and canSee as

$<_{\mathtt{zs}}$

, and canSee as

![]() $\triangleleft_{\mathtt{zs}}$

. Thus, a reflexive and convex canSee is also antimonotonic.

$\triangleleft_{\mathtt{zs}}$

. Thus, a reflexive and convex canSee is also antimonotonic.

The antimonotonicity conditions provide two rules for moving to the next x or the next y; cf. Figure 3. Specifically, by Antimonotonicity Condition 1, when the current y in ys is before the current x in xs, and this y cannot “see” (i.e., does not match) this x, then this y cannot see any of the following items in xs either. Therefore, it is not necessary to try matching the current y to the rest of the items in xs, and we can move on to the next item in ys. On the other hand, according to Antimonotonicity Condition 2, when the current y in ys is not before the current x in xs, and this y cannot see this x, then all subsequent items in ys cannot see this x either. Therefore, it is not necessary to try matching the current x to the rest of the items in ys, and we can safely move on to the next item in xs.