Abstract

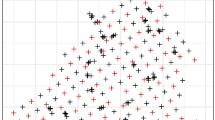

Euclidean distance k-nearest neighbor (k-NN) classifiers are simple nonparametric classification rules. Bootstrap methods, widely used for estimating the expected prediction error of classification rules, are motivated by the objective of calculating the ideal bootstrap estimate of expected prediction error. In practice, bootstrap methods use Monte Carlo resampling to estimate the ideal bootstrap estimate because exact calculation is generally intractable. In this article, we present analytical formulae for exact calculation of the ideal bootstrap estimate of expected prediction error for k-NN classifiers and propose a new weighted k-NN classifier based on resampling ideas. The resampling-weighted k-NN classifier replaces the k-NN posterior probability estimates by their expectations under resampling and predicts an unclassified covariate as belonging to the group with the largest resampling expectation. A simulation study and an application involving remotely sensed data show that the resampling-weighted k-NN classifier compares favorably to unweighted and distance-weighted k-NN classifiers.
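The resampling idea can be illustrated in the simplest case, k = 1. The sketch below is not the article's general formulae, which are not reproduced here. It relies on a standard result, assuming no ties among distances: when n training points are resampled with replacement, the j-th nearest training point to x is the nearest neighbor in the bootstrap sample with probability (1 - (j-1)/n)^n - (1 - j/n)^n. The function names `resampling_weights_1nn` and `resampling_weighted_1nn_predict` are hypothetical and chosen only for illustration.

```python
import numpy as np


def resampling_weights_1nn(n):
    """Probability that the j-th nearest training point (j = 1..n) is the
    nearest neighbor in a bootstrap sample of size n (assumes no distance ties)."""
    j = np.arange(1, n + 1)
    # P(none of the j-1 closest points is drawn) - P(none of the j closest is drawn)
    return (1 - (j - 1) / n) ** n - (1 - j / n) ** n


def resampling_weighted_1nn_predict(X_train, y_train, x):
    """Predict the group with the largest resampling expectation of the
    1-NN posterior estimate at x (illustrative sketch, k = 1 only)."""
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    n = len(y_train)

    # Rank training points by Euclidean distance to x.
    dist = np.linalg.norm(X_train - np.asarray(x, dtype=float), axis=1)
    order = np.argsort(dist, kind="stable")

    # The weight for rank j goes to the group of the j-th nearest point.
    w = resampling_weights_1nn(n)
    labels = np.unique(y_train)
    scores = np.array([w[y_train[order] == g].sum() for g in labels])
    return labels[np.argmax(scores)], dict(zip(labels.tolist(), scores.tolist()))


# Toy usage with made-up data: three one-dimensional training points.
pred, scores = resampling_weighted_1nn_predict([[0.0], [1.0], [2.0]], [0, 1, 1], [0.1])
```

The weights sum to one and decrease with rank, so each group's score is a weighted vote over all n training points rather than a vote from the single nearest one. This closed form is what makes Monte Carlo resampling unnecessary in the k = 1 case.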

Cite this article

Steele, B.M., Patterson, D.A. Ideal bootstrap estimation of expected prediction error for k-nearest neighbor classifiers: Applications for classification and error assessment. Statistics and Computing 10, 349–355 (2000). https://doi.org/10.1023/A:1008933626919
