Abstract

Imaging through a scattering medium is a challenging task. We propose and demonstrate an interferenceless incoherent opto-digital technique for 3D imaging through a scatterer with a single lens and a digital camera. The light diffracted from a point object is modulated by a scattering mask. The modulated wavefront is projected onto an image sensor by a spherical lens, and the impulse response is recorded. An object is placed at the same axial location as the point object, and another intensity pattern is recorded under identical experimental conditions and with the same scattering mask. The image of the object is reconstructed by a cross-correlation between a reconstructing function and the object hologram. For 3D imaging, a library of reconstructing functions is created, corresponding to different axial locations. The different planes of the object are reconstructed by cross-correlating the object hologram with the corresponding reconstructing functions.

Introduction

Light is scattered when it encounters an optically inhomogeneous medium, and it is often difficult to image objects through scattering media1,2,3. Nevertheless, this fundamental problem must be addressed in order to image through naturally occurring inhomogeneous scatterers such as biological tissues4, fog5 and other turbid media6. Various coherent digital holography techniques have been developed to image through a scattering medium7,8,9. While some techniques aim to nullify or minimize the effects of a scatterer in order to image objects through it, other studies have used scattering as a tool to improve imaging characteristics. For instance, a scattering medium has been employed to improve the lateral resolution of imaging10,11. Recently, digital scattering masks with controllable scattering ranks have been used to improve the axial12 and lateral resolution13 in imaging applications.

Laser-based imaging techniques have been the first choice for imaging through scattering media owing to their high optical power7,8,9,11. However, including a laser in the optical configuration increases the cost and introduces undesirable imaging effects such as edge ringing and speckle. In References12,13, an incoherent light source is used, which reduces the cost of the imaging system, makes it eye safe and also improves the lateral resolution. However, the use of one and two spatial light modulators (SLMs), respectively, increases the cost of the optical configuration. A simple, lensless, low-cost incoherent system has been proposed and demonstrated for imaging objects through a scattering medium14. However, that technique is limited to 2D imaging, and the image reconstruction relies on a time-consuming iterative Fienup-type algorithm15. Similarly, an incoherent super-resolution microscopy technique using a scattering mask16 has been shown, but it requires an iterative, time-consuming Richardson–Lucy deconvolution algorithm17 to reconstruct the object and lacks 3D imaging capability. A lensless 3D imager18 based on compressive sensing with incoherent light has also been shown recently.

Wavefront shaping techniques with dynamic feedback have been developed to image objects through turbid media. However, these techniques involve time-consuming dynamic corrections with complicated algorithms and also require an SLM19,20. Numerous other approaches exist for imaging objects through a scattering medium, such as Monte Carlo analysis in two-photon fluorescence21, confocal imaging with an annular pupil22 and absorption-based imaging23. Another coherent digital holography technique has been demonstrated for 3D imaging and phase retrieval, but its optical configuration is complex, with many optical components24.

An adaptive optics technique based on incoherent digital holography25,26, built on Fresnel incoherent correlation holography27, was demonstrated for imaging through turbid media, but it requires an interferometer to record the guide-star and object holograms. Another lensless incoherent 3D imaging technique28 for retrieving objects embedded between dynamic scatterers has been proposed and experimentally demonstrated, but it requires an off-axis reference point for calibration, which makes the setup difficult to align. Using a reference point together with the object also imposes limitations on the setup, such as a limited field of view. Recently, a scatter-plate microscope29, which uses the scatterer as a tunable objective lens, has been demonstrated to detect objects through scattering layers, but its reconstruction procedure has not shown 3D imaging capability. Similarly, another incoherent imaging technique30 that uses a known reference object to reconstruct the object of interest was recently demonstrated, but 3D imaging was not shown. A phase-diversity non-invasive speckle imaging method31 has been reported that can image through a thin scatterer; however, it requires multiple camera shots from different sensor positions, and 3D imaging was not demonstrated.

In this study, we propose an interferenceless, motionless, incoherent digital holography technique, requiring neither an SLM nor a laser, for imaging objects through a scattering sheet. Unlike the above-mentioned techniques, the method involves no iterative algorithm14,15,16,17, no dynamic corrections19,20 and no complicated experimental setup21,22,23,24,25,26. For 2D imaging, the technique makes it possible to observe an object hidden behind the scatterer. For 3D imaging, the scatterer itself becomes the tool that enables 3D imaging with a single camera shot, or with two shots when a higher signal-to-noise ratio is desired.

Methodology

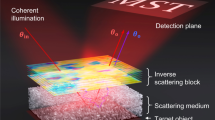

The optical configuration of the proposed technique is shown in Fig. 1. Light from an incoherent source critically illuminates a point object through a lens L0. The light diffracted from the point object is diffused by a thin scattering sheet located at a distance \(z_s\) from the object. The diffused light is collected and focused by a refractive lens L1 with a focal length \(f_1={(1/z_s+1/z_h)}^{-1}\), where \(z_h\) is the distance between L1 and the image sensor. In the absence of the scatterer, a focused image of the point object is therefore obtained on the sensor plane. Hence, the role of L1 is to project the entire optical energy into the camera aperture and thereby keep the optical efficiency as high as possible. It is well known that a positive lens illuminated by a quasi-monochromatic point source yields, at the image plane of that source, the 2D Fourier transform (multiplied by a quadratic phase function that vanishes when the intensity is recorded) of any aperture positioned between the source and the image point32. The center of this Fourier transform coincides with the image point. Therefore, if the source point is at \({\bar{r}}_{s}\), the intensity pattern on the sensor plane is centered at \({\bar{r}}_{s}{z}_{h}/{z}_{s}\).

For an object point at \({\bar{r}}_{s}\), the intensity pattern on the image sensor is given by

\[{I}_{PSH}({\bar{r}}_{0};{\bar{r}}_{s})={\left|C\,\nu\left[\frac{1}{\lambda {z}_{h}}\right]\mathcal{F}\left\{L\left(\frac{{\bar{r}}_{s}}{{z}_{s}}\right)\exp (i{\Phi }_{r})\right\}\right|}^{2}={\left|C\,\nu\left[\frac{1}{\lambda {z}_{h}}\right]\mathcal{F}\{\exp (i{\Phi }_{r})\}\ast \delta \left({\bar{r}}_{0}-\frac{{z}_{h}}{{z}_{s}}{\bar{r}}_{s}\right)\right|}^{2} \tag{1}\]

where ν is the scaling operator such that ν[α]f(x) = f(αx), λ is the wavelength, \(\ast\) denotes 2D convolution, \(\mathcal{F}\) denotes the 2D Fourier transform, C is a constant, \({\bar{r}}_{0}=({x}_{0},{y}_{0})\) is the transverse location vector on the sensor plane, L is a linear phase function given by \(L(\bar{s}/z)=\exp [i2\pi {(\lambda z)}^{-1}({s}_{x}x+{s}_{y}y)]\), and \(\Phi_r\) is the caustic phase profile of the scatterer. The intensity \(I_{PSH}\) is the impulse response of the system, termed the point spread hologram (PSH). For 3D imaging, the point object is shifted to different axial locations and, with the same scatterer, a library of PSHs is created.
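The shift property behind Eq. (1) can be checked numerically. The following is a minimal Python/NumPy sketch, not the authors' code, assuming a uniformly random phase screen for \(\Phi_r\), a square grid and an integer-pixel source offset; the function name `point_spread_hologram` and all numerical values are hypothetical. The lens is modelled as a discrete Fourier transform, and the linear phase L only translates the recorded intensity.

```python
import numpy as np

def point_spread_hologram(phase, shift=(0, 0)):
    """Sensor intensity for one point source seen through a thin phase scatterer.

    phase : hypothetical scatterer phase profile Phi_r sampled on a 2D grid
    shift : integer (dy, dx) pixel offset standing in for r_s * z_h / z_s
    """
    rows, cols = phase.shape
    y = np.arange(rows)[:, None]
    x = np.arange(cols)[None, :]
    dy, dx = shift
    # Linear phase L(r_s / z_s): tilt of the wave arriving from an off-axis point
    tilt = np.exp(2j * np.pi * (dy * y / rows + dx * x / cols))
    # The lens maps the field at the scatterer to its 2D Fourier transform
    field = np.fft.fft2(tilt * np.exp(1j * phase))
    # Only intensity is recorded, so quadratic phase factors are omitted
    return np.abs(field) ** 2

rng = np.random.default_rng(0)
phase = rng.uniform(0.0, 2.0 * np.pi, size=(64, 64))
psh_on_axis = point_spread_hologram(phase)
psh_shifted = point_spread_hologram(phase, shift=(5, -3))
# Moving the source only translates the pattern (circularly, on this grid)
print(np.allclose(psh_shifted, np.roll(psh_on_axis, (5, -3), axis=(0, 1))))  # True
```

The circular shift is an artifact of the discrete model; in the optical system the pattern is simply translated by \({\bar{r}}_{s}{z}_{h}/{z}_{s}\).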

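The intensity of a general 2D object can be considered as a collection of shifted, independent point sources and can be expressed as

\[O({\bar{r}}_{s})=\sum_{j}{a}_{j}\,\delta ({\bar{r}}_{s}-{\bar{r}}_{s,j}) \tag{2}\]

where each \(a_j\) is a positive real constant and \({\bar{r}}_{s,j}\) is the transverse location of the j-th point. The multi-point 2D object is placed at the same axial location as the point object, and an intensity pattern is recorded through the same scatterer. Since the system from the input object to the camera plane is linear and space invariant, the intensity on the image sensor is given by

\[{I}_{OBJ}({\bar{r}}_{0})=\sum_{j}{a}_{j}\,{I}_{PSH}\left({\bar{r}}_{0}-\frac{{z}_{h}}{{z}_{s}}{\bar{r}}_{s,j}\right) \tag{3}\]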

In other words, the intensity on the camera plane is the convolution between the object O and the PSH, i.e. \({I}_{OBJ}=O\ast {I}_{PSH}\). The goal is to reconstruct the object O from the camera intensity \(I_{OBJ}\). To find the best way to reconstruct the object, we consider the Fourier transform of the camera intensity:

\[{\tilde{I}}_{OBJ}=\tilde{O}\cdot {\tilde{I}}_{PSH} \tag{4}\]

where \({\tilde{I}}_{OBJ}\), \(\tilde{O}\) and \({\tilde{I}}_{PSH}\) are the 2D Fourier transforms of \(I_{OBJ}\), O and \(I_{PSH}\), respectively. In principle, to reconstruct the object from \({\tilde{I}}_{OBJ}\) of Eq. (4), one would multiply \({\tilde{I}}_{OBJ}\) by the inverse filter \({|{\tilde{I}}_{PSH}|}^{-1}\exp (-i\,\text{arg}\{{\tilde{I}}_{PSH}\})\) and inverse Fourier transform the product. However, such an operation amplifies the noise on the spectral plane to an unacceptable level. A more practical strategy is therefore to multiply \({\tilde{I}}_{OBJ}\) by the filter \({|{\tilde{I}}_{PSH}|}^{\gamma }\exp (-i\,\text{arg}\{{\tilde{I}}_{PSH}\})\), where γ is a real number chosen to yield the minimum error between the reconstructed and the ideal objects. With this filter, the images are reconstructed with a transverse magnification \(M_T=z_h/z_s\). The reconstructed image of the object of Eq. (2) can be expressed as

\[{I}_{IMG}({\bar{r}}_{R})={\mathcal{F}}^{-1}\left\{{\tilde{I}}_{OBJ}\,{|{\tilde{I}}_{PSH}|}^{\gamma }\exp (-i\,\text{arg}\{{\tilde{I}}_{PSH}\})\right\}\approx \sum_{j}{a}_{j}\,\Lambda \left({\bar{r}}_{R}-{M}_{T}{\bar{r}}_{s,j}\right) \tag{5}\]

where Λ is a delta-like function and \({\bar{r}}_{R}=({x}_{R},{y}_{R})\) is the transverse location vector on the reconstruction plane.
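The forward model of Eqs. (3) and (4) and the γ-filter of Eq. (5) can be sketched in a few lines. This is an illustrative Python/NumPy model under stated assumptions: a random-phase speckle pattern stands in for the measured PSH, the convolution is circular (FFT-based), and the names `object_hologram`, `reconstruct` and the small guard `eps` are hypothetical additions, not part of the published method.

```python
import numpy as np

def object_hologram(obj, i_psh):
    """I_OBJ = O * I_PSH (Eq. (3)), computed as a circular convolution via Eq. (4)."""
    return np.real(np.fft.ifft2(np.fft.fft2(obj) * np.fft.fft2(i_psh)))

def reconstruct(i_obj, i_psh, gamma, eps=1e-12):
    """Apply |FT{I_PSH}|^gamma * exp(-i arg FT{I_PSH}) and return |I_IMG|.

    gamma = -1: inverse filter, 0: phase-only filter, 1: matched filter.
    eps is an added guard that keeps the magnitude finite when gamma < 0.
    """
    spec_psh = np.fft.fft2(i_psh)
    filt = np.maximum(np.abs(spec_psh), eps) ** gamma * np.exp(-1j * np.angle(spec_psh))
    return np.abs(np.fft.ifft2(np.fft.fft2(i_obj) * filt))

rng = np.random.default_rng(1)
n = 64
# Hypothetical on-axis PSH from a random phase screen
i_psh = np.abs(np.fft.fft2(np.exp(1j * rng.uniform(0, 2 * np.pi, (n, n))))) ** 2

# Toy object: weighted point sources a_j at pixel positions r_j
points = {(10, 12): 1.0, (30, 40): 0.6, (50, 20): 0.8}
obj = np.zeros((n, n))
for (r, c), a in points.items():
    obj[r, c] = a

i_obj = object_hologram(obj, i_psh)
# Linearity and shift invariance: identical to summing shifted, weighted PSHs
i_sum = sum(a * np.roll(i_psh, (r, c), axis=(0, 1)) for (r, c), a in points.items())
print(np.allclose(i_obj, i_sum, atol=1e-9 * i_sum.max()))  # True

# Reconstruct a single point with different filter exponents
single = np.roll(i_psh, (20, 33), axis=(0, 1))
for gamma in (-1.0, -0.1, 0.0, 1.0):
    rec = reconstruct(single, i_psh, gamma)
    print(gamma, tuple(int(i) for i in np.unravel_index(np.argmax(rec), rec.shape)))
```

For every γ the peak falls at (20, 33), because the filtered spectrum of a single point is \({|{\tilde{I}}_{PSH}|}^{1+\gamma}\) times a linear phase; in this noiseless toy, γ = −1 returns an exact delta, whereas with real camera noise it would amplify the noise as described above.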

The reconstructed image is usually accompanied by relatively strong background noise12,13. The SNR can be improved by synthesizing a bipolar hologram for both the point and the object, recording two intensity patterns with two different scattering sheets. The intensity patterns \(I_{PSH1}\) and \(I_{OBJ1}\) are recorded with a first scattering sheet; similarly, \(I_{PSH2}\) and \(I_{OBJ2}\) are recorded with a second, independent scattering sheet. The bipolar holograms for the point object (\(H_{PSH}=I_{PSH1}-I_{PSH2}\)) and for the object (\(H_{OBJ}=I_{OBJ1}-I_{OBJ2}\)) are synthesized by subtracting the respective intensity patterns. Consequently, in terms of the spatial spectrum of Eq. (4), the zeroth order of the spectrum is minimized, which makes the magnitudes of \({\tilde{I}}_{PSH}\) and \({\tilde{I}}_{OBJ}\) (now computed from the bipolar holograms) more uniform than in the single-shot case. Each reconstructed point therefore becomes sharper, with less background noise. The SNR can be improved further under the assumption that any two different scatterers have a negligible cross-correlation compared with their autocorrelation: averaging the complex reconstructions obtained with several pairs of scatterers then suppresses the background even more.
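The bipolar synthesis and the averaging of complex reconstructions can be illustrated with the same toy model. This is a hedged sketch, assuming random phase screens as stand-ins for the two scattering sheets and a single-point object; `psh_from_scatterer`, `complex_reconstruction` and `eps` are hypothetical names. It shows that subtracting two equal-energy speckle intensities cancels the zeroth order, which is why a small floor is needed before raising the spectrum magnitude to a negative γ.

```python
import numpy as np

N = 64
rng = np.random.default_rng(2)

def psh_from_scatterer(phase):
    """Hypothetical on-axis PSH: intensity of the Fourier transform of a phase screen."""
    return np.abs(np.fft.fft2(np.exp(1j * phase))) ** 2

def complex_reconstruction(h_obj, h_psh, gamma, eps=1e-9):
    """Complex output of the |FT{H_PSH}|^gamma exp(-i arg FT{H_PSH}) filter."""
    spec = np.fft.fft2(h_psh)
    # The bipolar PSH has an almost-zero DC term, so eps keeps |spec|**gamma finite
    filt = np.maximum(np.abs(spec), eps) ** gamma * np.exp(-1j * np.angle(spec))
    return np.fft.ifft2(np.fft.fft2(h_obj) * filt)

point = (12, 40)  # hypothetical single-point object, in sensor pixels
recs = []
for _ in range(3):  # three scatterer pairs, as in the averaging experiment
    i_psh1 = psh_from_scatterer(rng.uniform(0, 2 * np.pi, (N, N)))
    i_psh2 = psh_from_scatterer(rng.uniform(0, 2 * np.pi, (N, N)))
    h_psh = i_psh1 - i_psh2                     # bipolar PSH
    h_obj = np.roll(h_psh, point, axis=(0, 1))  # bipolar hologram of the point object
    recs.append(complex_reconstruction(h_obj, h_psh, gamma=-0.1))

averaged = np.abs(np.mean(recs, axis=0))  # average the complex fields, then take magnitude
# Relative size of the zeroth order left in the last bipolar PSH (close to zero)
print(abs(h_psh.sum()) / i_psh1.sum())
```

Averaging is done on the complex reconstructions, as in the text, so that the uncorrelated backgrounds partially cancel before the magnitude is taken.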

The optimal value of γ was searched in the range from −1 to 1, where γ = −1 corresponds to an inverse filter, γ = 0 to a phase-only filter and γ = 1 to a matched filter. Over this range, we calculated the mean square error (MSE) given by

\[\text{MSE}=\frac{1}{MN}\sum_{m=1}^{M}\sum_{n=1}^{N}{\left[\hat{O}(m,n)-\mu \,O(m,n)\right]}^{2} \tag{6}\]

where \(\hat{O}\) denotes the image matrix on the image sensor of the system of Fig. 1 without the scatterer, and O is the reconstructed image with the scatterer. M and N are the numbers of rows and columns of the matrices, respectively. The reconstructed object has been normalized by a constant μ33,34. As shown in Fig. 2, the minimum MSE is obtained when averaging over 3 sets of scatterer pairs, for γ = −0.1. Note that although a single bipolar hologram yields its minimal MSE for γ = −0.2, the minimum MSE of the averaged reconstructions is obtained with γ = −0.1 applied to each bipolar hologram.
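The γ search can be sketched as a sweep over the interval [−1, 1] that evaluates Eq. (6) at each step. This is an illustrative model only: the text does not specify how μ is chosen, so the sketch assumes the least-squares scale factor; the toy point image `ideal`, the additive Gaussian "camera noise" and the helper names are all hypothetical. The γ it returns depends on this toy data and is not expected to reproduce the values of Fig. 2.

```python
import numpy as np

def mse(ideal, recon):
    """MSE of Eq. (6); mu is taken as the least-squares scale factor (an assumption)."""
    mu = np.sum(ideal * recon) / np.sum(recon * recon)
    return float(np.mean((ideal - mu * recon) ** 2))

def reconstruct(i_obj, i_psh, gamma, eps=1e-12):
    spec = np.fft.fft2(i_psh)
    filt = np.maximum(np.abs(spec), eps) ** gamma * np.exp(-1j * np.angle(spec))
    return np.abs(np.fft.ifft2(np.fft.fft2(i_obj) * filt))

rng = np.random.default_rng(3)
n = 64
i_psh = np.abs(np.fft.fft2(np.exp(1j * rng.uniform(0, 2 * np.pi, (n, n))))) ** 2
ideal = np.zeros((n, n))  # toy stand-in for the scatter-free image O-hat
ideal[20, 20], ideal[20, 44], ideal[40, 32] = 1.0, 0.8, 0.6
i_obj = np.real(np.fft.ifft2(np.fft.fft2(ideal) * np.fft.fft2(i_psh)))
i_obj = i_obj + rng.normal(0.0, 0.05 * i_obj.std(), i_obj.shape)  # hypothetical noise

gammas = np.round(np.linspace(-1.0, 1.0, 21), 2)  # step of 0.1
errors = [mse(ideal, reconstruct(i_obj, i_psh, g)) for g in gammas]
best_gamma = float(gammas[int(np.argmin(errors))])
print(best_gamma)
```

Because μ absorbs any global scale, the comparison in Eq. (6) measures only the shape of the reconstruction, not its overall brightness.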

Experiments

The schematic of the experimental setup is shown in Fig. 3. The setup consists of three illumination channels with identical high-power LEDs (Thorlabs LED635L, 170 mW, λ = 635 nm, Δλ = 15 nm). The three channels were adjusted so that a pinhole and two objects could be illuminated simultaneously, allowing the axial locations of the pinhole and the objects to be verified accurately. Three identical refractive lenses L0, L0’ and L0” were used to critically illuminate three objects: (1) element 6 of group 2 in the United States Air Force (USAF) resolution chart, with 7.13 lp/mm, (2) the numeric digit 6 corresponding to element 6 of group 2 in the USAF resolution chart, and (3) a pinhole with a diameter of approximately 100 μm. In the single-plane experiment, the distance between the pinhole and the scatterer is \(z_s\) = 11.7 cm. The distance from lens L1 (focal length \(f_1\) = 5 cm) to the image sensor (GigE Vision GT Prosilica, 2750 × 2200 pixels, 4.54 μm pixel pitch) is approximately 9 cm, calculated from \(z_h=z_s f_1/(z_s-f_1)\). The diameter D of lens L1 is 5 cm, giving a numerical aperture (NA) of approximately 0.2 (\(D/2z_s\)). The corresponding lateral resolution is ≈ 2 μm (0.61λ/NA). However, the relatively large diameter of the pinhole prevents reaching the highest lateral resolution allowed by the NA; the lateral resolution of the optical system is therefore dictated by the pinhole diameter. A smaller pinhole could yield a higher lateral resolution, but it would reduce the light power and consequently the signal-to-noise ratio (SNR). In the present setup, the power constraints of the LED make it impossible to use a smaller pinhole. The scatterer is a low-cost scattering sheet, shown as an inset in Fig. 3. Note that, in the absence of the scatterer, the optical setup is a three-channel, single-lens imaging system.
The pinhole and the two objects in the three channels are aligned in the absence of the scatterer such that the pinhole lies between the centers of the two objects and at the center of the image sensor.
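The geometric quantities quoted above follow from a few lines of arithmetic. The sketch below simply evaluates the stated formulas with the listed parameters; the variable names are our own, and the pinhole-image size in pixels is an extra derived quantity not given in the text.

```python
import math

wavelength = 635e-9    # m, LED centre wavelength
z_s = 0.117            # m, pinhole to scatterer/L1 distance
f1 = 0.05              # m, focal length of L1
aperture = 0.05        # m, diameter D of L1
pixel_pitch = 4.54e-6  # m, camera pixel pitch
pinhole = 100e-6       # m, pinhole diameter

z_h = z_s * f1 / (z_s - f1)          # imaging equation
na = aperture / (2 * z_s)            # paraxial NA, D / (2 z_s)
resolution = 0.61 * wavelength / na  # lateral resolution, 0.61 lambda / NA
m_t = z_h / z_s                      # transverse magnification M_T
pinhole_px = pinhole * m_t / pixel_pitch  # pinhole image size on the sensor

print(f"z_h = {z_h * 100:.2f} cm, NA = {na:.3f}, resolution = {resolution * 1e6:.2f} um")
print(f"M_T = {m_t:.3f}, pinhole image = {pinhole_px:.1f} pixels")
```

This prints z_h = 8.73 cm (the ≈ 9 cm quoted), NA = 0.214, resolution = 1.81 μm, M_T = 0.746 and a pinhole image of about 16.4 pixels, which illustrates why the 100 μm pinhole, rather than the NA, limits the resolution.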

Experimental Results

The experiment is carried out by attaching the scatterer close to lens L1 and recording the intensity patterns for the pinhole, \(I_{PSH}\), and for the USAF objects, \(I_{OBJ}\), while blocking the other channels. The images of \(I_{PSH}\) and \(I_{OBJ}\) are shown in Fig. 4(a) and (b), respectively. Note that the image in Fig. 4(b) is the raw image of the objects through the scattering sheet, without any processing; as expected, the objects cannot be recognized directly from it. The reconstructing filter is \({|{\tilde{I}}_{PSH}|}^{\gamma }\exp (-i\,\text{arg}\{{\tilde{I}}_{PSH}\})\), where the optimal γ according to Fig. 2 is γ = −0.3. The magnitude of the filter is shown in Fig. 4(c). The reconstruction result for the two objects located at the same distance is shown in Fig. 4(d), and a regular image of the two objects recorded without any scattering medium is shown in Fig. 4(e).

Intensity patterns at the image sensor for (a) \(I_{PSH}\), with the pinhole in the input, and (b) \(I_{OBJ}\), with the objects in the input. (c) Magnitude of the filter with γ = −0.3. (d) Single-shot reconstruction of the objects located in the same plane, using a filter with γ = −0.3. (e) Regular imaging without any scattering medium. Scale bar: 350 μm.

The bipolar holograms of the pinhole, \(H_{PSH}\), and of the objects, \(H_{OBJ}\), are shown in Fig. 5(a) and (b), respectively. The reconstructed images without and with averaging, obtained with the filter parameters γ = −0.2 and γ = −0.1, are shown in Fig. 5(c) and (d), respectively.

Bipolar holograms of (a) the pinhole (\(H_{PSH}\)) and (b) the objects (\(H_{OBJ}\)) in the system input, (c) image reconstructed from a single bipolar hologram with γ = −0.2, (d) average of the complex reconstructions of the bipolar holograms, and (e) regular imaging of the two objects without any scattering medium. Scale bar: 350 μm.

In the next experiment, the 3D imaging capability of the technique is studied. Two elements of the USAF chart are used as object 1 and object 2. Two bipolar holograms of the pinhole are synthesized from four intensity recordings, two at each of two axial locations (Z1 and Z2) separated by ΔZ = 3 mm. A single bipolar object hologram is also synthesized from two intensity recordings, with element 6 of group 2 and the numeric digit 6 of group 2 separated by the same distance of 3 mm. The object hologram \(H_{OBJ}\) is filtered by two filters synthesized from the point-object holograms \(H_{PSH1}\) and \(H_{PSH2}\), respectively. When \(H_{OBJ}\) is filtered by the filter of \(H_{PSH1}\), the image of object 1 is reconstructed while the image of object 2 appears blurred; when \(H_{OBJ}\) is filtered by the filter of \(H_{PSH2}\), object 2 is reconstructed while object 1 is blurred, as shown in Fig. 6. Figure 6 also compares the reconstructions obtained from a single shot and from a bipolar hologram without and with averaging, for the different cross-correlations between the object and point-object holograms. The bipolar hologram improves the SNR, and averaging three complex reconstructions increases the SNR further35. These results confirm the 3D imaging capability of the imaging system with a scatterer. It should be noted that the Fourier relation of Eq. (1) is not valid for objects outside the single plane that satisfies the imaging equation \([z_s=z_h f_1/(z_h-f_1)]\). However, it can be shown that the system properties of linearity and space invariance still hold for the other axial planes. Consequently, reconstruction by cross-correlation with a reconstructing function calculated from the impulse response is also valid for all other axial planes.
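The plane-by-plane reconstruction from a PSH library can be sketched as follows. This is a simplified model under a loudly stated assumption: the real PSHs at Z1 and Z2 are related by scaling and are not fully independent, but here each plane's bipolar PSH is modelled as an independent random speckle difference. The dictionary `library`, its depth keys and all helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 64

def random_bipolar_psh():
    """Hypothetical bipolar PSH for one axial plane: difference of two speckle intensities."""
    def speckle():
        return np.abs(np.fft.fft2(np.exp(1j * rng.uniform(0, 2 * np.pi, (n, n))))) ** 2
    return speckle() - speckle()

def reconstruct(h_obj, h_psh, gamma=-0.1, eps=1e-9):
    spec = np.fft.fft2(h_psh)
    filt = np.maximum(np.abs(spec), eps) ** gamma * np.exp(-1j * np.angle(spec))
    return np.abs(np.fft.ifft2(np.fft.fft2(h_obj) * filt))

# Training phase: one PSH per axial plane (keys are hypothetical depths in mm)
library = {z: random_bipolar_psh() for z in (0.0, 3.0)}

# One point object per plane, at different transverse positions
positions = {0.0: (16, 16), 3.0: (44, 40)}
h_obj = sum(np.roll(library[z], p, axis=(0, 1)) for z, p in positions.items())

# Each plane is recovered by filtering the same hologram with that plane's PSH
for z, h_psh in library.items():
    rec = reconstruct(h_obj, h_psh)
    peak = tuple(int(i) for i in np.unravel_index(np.argmax(rec), rec.shape))
    print(z, peak)
```

In this toy model the out-of-plane object contributes only a weak, diffuse background, which plays the role of the blurred object in Fig. 6; with a filter from an unrelated scatterer both objects would appear as background, mirroring the concealment behavior described next.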

The technique also exhibits an information-concealment capability: the object image is reconstructed only when the object hologram is processed with a filter derived from an \(H_{PSH}\) recorded with the same scatterer and under identical experimental conditions. When a filter obtained with another scatterer was used, only a noise profile was reconstructed, as shown in Fig. 7.

The scattering properties of the scatterer were characterized using a coherent light source (He-Ne laser, λ = 632.8 nm). The scatterer was illuminated by a plane wave, and its spatial spectrum was recorded in the back focal plane of a lens with a focal length of 2.5 cm. The cross-section of the spatial spectrum of the scatterer is shown in Fig. 8. The FWHM of the pattern was found to be \(0.752\times 10^{6}\) cycles/m, which corresponds to an average scattering cone angle of about 26.8°.
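The quoted cone angle can be checked with a short calculation. We assume, since the text does not say so explicitly, that the FWHM spatial frequency \(\nu\) maps to a width \(\lambda f \nu\) in the Fourier plane and that the full cone angle is \(2\arctan(\lambda\nu/2)\); under this interpretation the focal length cancels and the quoted 26.8° is reproduced.

```python
import math

wavelength = 632.8e-9  # m, He-Ne laser
fwhm_freq = 0.752e6    # cycles/m, FWHM of the measured spatial spectrum
focal = 0.025          # m, focal length of the transform lens

# Width of the spectrum in the Fourier plane: x = lambda * f * nu
width_in_focal_plane = wavelength * focal * fwhm_freq
# Full cone angle subtended by that width at distance f (assumed interpretation)
full_angle = 2 * math.degrees(math.atan(width_in_focal_plane / (2 * focal)))
print(f"{width_in_focal_plane * 1e3:.2f} mm, {full_angle:.1f} deg")  # 11.90 mm, 26.8 deg
```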

Conclusion

In conclusion, we have proposed and demonstrated a simple, incoherent, interferenceless technique for imaging through a scattering mask with a regular lens and a digital camera. In this technique, the scatterer is first characterized with a point object, and the intensity impulse response is recorded. The point object is then shifted to different axial locations, and a library of impulse responses is created. This entire process is the training phase of the method; it is performed only once and then serves for imaging an arbitrary number of objects. Following the training phase, an object is placed within the axial limits of the impulse-response library, and another intensity pattern is recorded with the same scatterer and under identical experimental conditions. The different planes of the object are reconstructed using the intensity impulse responses recorded at the corresponding axial locations. The method has been demonstrated with a single camera shot, with two camera shots and with averaging over multiple camera shots; averaging yielded the lowest MSE. The SNR can be improved further by averaging a larger number of complex reconstructions obtained with different scatterers.

Using a point source to characterize the scatterer has the disadvantage that imaging through a scattering medium can succeed only after the medium has been characterized by the system. On the other hand, this training stage enables 3D imaging and allows the system to work with various types of scatterers, because the scatterer is first characterized and its impulse response is processed in order to optimize the subsequent imaging.

From one perspective, the proposed technique enables imaging through a scattering medium. It could therefore be useful for seeing through natural scatterers such as human skin, tissues, fog and other turbid media. Because the technique uses an incoherent source, it could readily be applied to fluorescence imaging and to imaging self-luminous objects such as stars. From another perspective, the technique can convert any regular imaging system into a 3D imager by means of an easily available paper scatterer. Although it has been demonstrated with only one lens, it can be extended to imaging systems with multiple optical components. The lateral resolution is limited by the 100 μm pinhole; it can be improved with a smaller pinhole and a more powerful light source. However, the reconstruction was not successful for objects larger than element 4 of group 2 of the USAF chart. Further studies are therefore necessary to characterize the different aspects of the imaging system with a scatterer.

References

Maradudin, A. & Mills, D. Scattering and absorption of electromagnetic radiation by a semi-infinite medium in the presence of surface roughness. Phys. Rev. B 11, 1392–1415 (1975).

Agarwal, G. S. Integral equation treatment of scattering from rough surfaces. Phys. Rev. B 14, 846–848 (1976).

Martin, O. J. F., Girard, C. & Dereux, A. Generalized field propagator for electromagnetic scattering and light confinement. Phys. Rev. Lett. 74, 526–529 (1995).

Cruz, J. M. D., Pastirk, I., Comstock, M., Lozovoy, V. V. & Dantus, M. Use of coherent control methods through scattering biological tissue to achieve functional imaging. PNAS 101, 16996–17001 (2004).

Psaltis, D. & Papadopoulos, I. N. Imaging: The fog clears. Nature 491, 197–198 (2012).

Sudarsanam, S. et al. Real-time imaging through strongly scattering media: seeing through turbid media, instantly. Sci. Rep. 6, 25033, https://doi.org/10.1038/srep25033 (2016).

Leith, E. et al. Imaging through scattering media with holography. JOSA A 9, 1148–1153 (1992).

Bashkansky, M. & Reintjes, J. Imaging through a strong scattering medium with nonlinear optical field cross-correlation techniques. Opt. Lett. 18, 2132–2134 (1993).

Arons, E. & Dilworth, D. Analysis of Fourier synthesis holography for imaging through scattering materials. Appl. Opt. 34, 1841–1847 (1995).

Charnotskii, M. I., Myakinin, V. A. & Zavorotnyy, V. U. Observation of superresolution in nonisoplanatic imaging through turbulence. JOSA A 7, 1345–1350 (1990).

Choi, Y. et al. Optical Imaging with the use of a scattering lens. IEEE Journal of Selected Topics in Quantum Electronics 20, 61–73 (2014).

Vijayakumar, A., Kashter, Y., Kelner, R. & Rosen, J. Coded aperture correlation holography–a new type of incoherent digital holograms. Opt. Exp. 24, 12430–12441 (2016).

Kashter, Y., Vijayakumar, A. & Rosen, J. Resolving images by blurring - a new superresolution method using a scattering mask between the observed objects and the hologram recorder. Optica 4, 932–939 (2017).

Katz, O., Heidmann, P., Fink, M. & Gigan, S. Non-invasive single-shot imaging through scattering layers and around corners via speckle correlations. Nature Photonics 8, 784–790 (2014).

Fienup, J. R. Phase retrieval algorithms: a comparison. Appl. Opt. 21, 2758–2769 (1982).

Edrei, E. & Scarcelli, G. Memory-effect based deconvolution microscopy for super-resolution imaging through scattering media. Sci. Rep. 6, 33558 (2016).

Lucy, L. B. An iterative technique for the rectification of observed distributions. Astron. J. 79, 745–754 (1974).

Antipa, N. et al. DiffuserCam: Lensless single-exposure 3D imaging. Preprint at https://arxiv.org/abs/1708.02410 (2017).

Nixon, M. et al. Real-time wavefront shaping through scattering media by all-optical feedback. Nature Photonics 7, 919–924 (2013).

Vellekoop, I. M. Feedback-based wavefront shaping. Opt. Exp. 23, 12189–12206 (2015).

Blanca, C. M. & Saloma, C. Monte Carlo analysis of two-photon fluorescence imaging through a scattering medium. Appl. Opt. 37, 8092–8102 (1998).

Gu, M., Tannous, T. & Sheppard, C. J. R. Effect of an annular pupil on confocal imaging through highly scattering media. Opt. Lett. 21, 312–314 (1996).

Yoo, K. M., Liu, F. & Alfano, R. R. Imaging through a scattering wall using absorption. Opt. Lett. 16, 1068–1070 (1991).

Vinu, R. V., Kim, K., Somkuwar, A. S., Park, Y. & Singh, R. K. Single-shot optical imaging through scattering medium using digital in-line holography. Preprint at https://arxiv.org/abs/1603.07430 (2016).

Kim, M. K. Adaptive optics by incoherent digital holography. Opt. Lett. 37, 2694–2696 (2012).

Kim, M. K. Incoherent digital holographic adaptive optics. Appl. Opt. 52, A117–A130 (2013).

Rosen, J. & Brooker, G. Digital spatially incoherent Fresnel holography. Opt. Lett. 32, 912–914 (2007).

Singh, A. K., Naik, D. N., Pedrini, G., Takeda, M. & Osten, W. Exploiting scattering media for exploring 3D objects. Light: Science & Applications 6, e16219 (2017).

Singh, A. K., Pedrini, G., Takeda, M. & Osten, W. Scatter-plate microscope for lensless microscopy with diffraction limited resolution. Sci. Rep. 7, 10687 (2017).

Xu, X. et al. Imaging objects through scattering layers and around corners by retrieval of the scattered point spread function. Preprint at https://arxiv.org/abs/1709.10175 (2017).

Wu, T., Dong, J., Shao, X. & Gigan, S. Imaging through a thin scattering layer and jointly retrieving the point-spread-function using phase-diversity. Preprint at https://arxiv.org/abs/1708.02410 (2017).

Goodman, J. W. Introduction to Fourier Optics (Roberts and Company Publishers, 2005).

Vijayakumar, A. & Rosen, J. Interferenceless Coded Aperture Correlation Holography – A new technique for recording incoherent digital holograms without two wave interference. Opt. Exp. 25, 13883–13896 (2017).

Jennison, B. K., Allebach, J. P. & Sweeney, D. W. Iterative approaches to computer-generated holography. Opt. Eng. 28, 629–637 (1989).

Vijayakumar, A., Kashter, Y., Kelner, R. & Rosen, J. Coded aperture correlation holography system with improved performance [Invited]. Appl. Opt. 56, F67–F77 (2017).

Acknowledgements

The work was supported by the Israel Science Foundation (ISF) (Grant No. 1669/16) and by the Israel Ministry of Science and Technology (MOST).

Author information

Contributions

J.R. carried out theoretical analysis for the research. S.M., A.V., and M.K. performed the experiments. The manuscript was written by S.M., A.V., M.K., and J.R. All authors discussed the results and contributed to the manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mukherjee, S., Vijayakumar, A., Kumar, M. et al. 3D Imaging through Scatterers with Interferenceless Optical System. Sci Rep 8, 1134 (2018). https://doi.org/10.1038/s41598-018-19344-8

DOI: https://doi.org/10.1038/s41598-018-19344-8
