Abstract

Useful materials must satisfy multiple objectives, where the optimization of one objective is often at the expense of another. The Pareto front reports the optimal trade-offs between these conflicting objectives. Here we use a self-driving laboratory, Ada, to define the Pareto front of conductivities and processing temperatures for palladium films formed by combustion synthesis. Ada discovers new synthesis conditions that yield metallic films at lower processing temperatures (below 200 °C) relative to the prior art for this technique (250 °C). This lower processing temperature makes it possible to coat a wider range of commodity plastic materials (e.g., Nafion, polyethersulfone). These combustion synthesis conditions enable us to spray coat uniform palladium films with moderate conductivity (1.1 × 10⁵ S m−1) at 191 °C. Spray coating at 226 °C yields films with conductivities (2.0 × 10⁶ S m−1) comparable to those of sputtered films (2.0 to 5.8 × 10⁶ S m−1). This work shows how a self-driving laboratory can discover materials that provide optimal trade-offs between conflicting objectives.


Introduction

Self-driving laboratories combine automation and artificial intelligence to accelerate the discovery and optimization of materials1,2,3. The increasing flexibility of laboratory automation is enabling self-driving laboratories to manipulate and measure a broader set of experimental variables4. Consequently, a growing number of self-driving laboratories are being developed across a range of fields5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28. While many self-driving laboratories are able to test multiple experimental variables, most optimize for only a single objective (e.g., process parameter, material property)7,8,9,10,11,12,13,14,15,16. This situation is not consistent with most practical applications, where multiple objectives need to be simultaneously optimized29,30,31,32,33,34,35. Consider, for example, how a solar cell must be optimized for voltage, current, and fill factor to yield a high power conversion efficiency36,37; how an electrolyzer must form products at low voltages and high reaction rates and selectivities38; and, how structural alloys are optimized for both strength and toughness31,35. These and other applications motivate the emerging use of self-driving laboratories for multiobjective optimization18,19,20,21,22,23,24,25,26,27,28.

The optimization of materials for multiple objectives can be challenging because improving one objective often compromises another (e.g., decreasing the bandgap of the light-absorbing material in a photovoltaic cell increases the photocurrent but decreases the voltage39). As a result, there is often no single champion material, but rather a set of materials exhibiting trade-offs between objectives (Fig. 1). The set of materials with the best possible trade-offs lies on the Pareto front. Materials on the Pareto front cannot be improved for one objective without compromising one or more other objectives. Most self-driving laboratories used for multiobjective optimization, however, identify only a single optimal material based on preferences specified in advance of the experiment18,19,20,21,22,23,24,25.

No single optimal material exists when searching for materials that satisfy two or more conflicting objectives (e.g., film conductivity and processing temperature). Rather, there is a set of materials that offer the best possible tradeoffs between the objectives (indicated by the blue curve). The state-of-the-art materials that offer the best known compromises between the two objectives form the experimentally observed Pareto front (black points).

Here, we use a self-driving laboratory to map out an entire Pareto front27,28. We apply this approach to thin-film materials for the first time by mapping out the trade-off between film conductivity and processing temperature. In doing so, our self-driving laboratory identifies previously untested conditions that decrease the temperature required for the combustion synthesis of palladium films from 250 to 190 °C40. This finding expands the range of polymeric substrates on which palladium can be deposited by combustion synthesis to include Nafion41, polyethersulfone42, and heat-stabilized polyethylene naphthalate42. Our self-driving laboratory also identifies conditions suitable for spray coating homogeneous films on larger substrates with conductivities approaching those of films made by vacuum deposition methods. The approach presented here is highly relevant to materials science because it identifies optimal materials for every preferred trade-off between objectives.

Results

Autonomously discovering a Pareto front

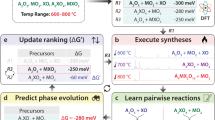

We upgraded the hardware and software of our existing self-driving laboratory, Ada8 (Fig. 2), to study the combustion synthesis of conducting palladium films. This upgraded self-driving laboratory was designed to map out a Pareto front that shows the trade-off between the temperature at which the films are processed and the film conductivity. We selected combustion synthesis as an optimization problem because it is a solution-based method for making functional metal coatings. This method, however, has not yet been scaled and has not been proven for making high-quality, conductive metal films40,43,44. Combustion synthesis can form coatings at lower temperatures, enabling the potential use of inexpensive polymeric substrates45,46, but film conductivity typically decreases as the processing temperature is lowered43. This situation presents a trade-off: to what extent can the conductivity be maximized while the processing temperature is minimized? The answer to this question would enable the researcher to determine, for example, what types of substrates could be coated with a metal film of a given conductivity. We therefore used Ada to efficiently study the numerous compositional47,48 and processing variables43,49 that influence processing temperatures and the corresponding conductivities.

a Schematic of the Ada self-driving laboratory. Ada consists of two robots (N9 and UR5e) with overlapping work envelopes. These robots work together to synthesize and characterize thin film samples. The N9 robot is a 4-axis arm equipped to mix, drop cast, and anneal precursors to create thin-film samples. The N9 also performs imaging and 4-point probe conductance measurements on the films it creates. The UR5e robot is a larger 6-axis arm equipped to transport samples to additional modules, including an XRF microscope. b Steps in the automated experimental workflow. Each iteration of the experiment produces a single, drop-cast, thin-film sample; images of the sample before and after annealing; an XRF map of the quantity of palladium in the film; and a map of the film conductance measured by the 4-point-probe at different locations on the sample. After the sample is characterized, the film conductivity is calculated and the qEHVI algorithm is used to autonomously plan the next experiment. All scale bars are 5 mm.

For this study we configured Ada to manipulate four variables: fuel identity, fuel-to-oxidizer ratio, precursor solution concentration, and annealing temperature (Fig. 3a). We confined the study to mixtures of two fuels, glycine and acetylacetone, that we independently identified to yield conductive films at temperatures below 300 °C. The fuel-to-oxidizer ratio was varied because it controls product oxidation in bulk combustion syntheses50,51. The precursor concentration influences the morphology of the drop-cast films. Finally, we varied the processing temperature, which may influence the conductivity through solvent removal, precursor decomposition, film densification, impurity removal, grain growth, oxidation, or cracking52,53,54.
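The fuel-to-oxidizer ratio φ is computed with Jain's method (Fig. 3 caption). As a hedged illustration, the sketch below assigns the oxidation states conventionally used in that method (C +4, H +1, O −2, N 0, and +2 for Pd) and converts φ into moles of blended fuel per mole of Pd(NO3)2. The parametrization of the blend by glycine mole fraction and the names `VALENCE`, `total_valence`, and `fuel_moles_per_pd` are our assumptions, not the authors' code.

```python
# Oxidation states conventionally used in Jain's method (assumption: N counted as 0).
VALENCE = {"C": 4, "H": 1, "N": 0, "O": -2, "Pd": 2}

# Element counts; water of hydration has zero net valence and is omitted.
PD_NITRATE = {"Pd": 1, "N": 2, "O": 6}        # Pd(NO3)2
GLYCINE = {"C": 2, "H": 5, "N": 1, "O": 2}    # C2H5NO2
ACETYLACETONE = {"C": 5, "H": 8, "O": 2}      # C5H8O2


def total_valence(formula: dict) -> int:
    """Sum of (oxidation state x atom count) over all elements in a formula."""
    return sum(VALENCE[element] * count for element, count in formula.items())


def fuel_moles_per_pd(phi: float, glycine_fraction: float) -> float:
    """Moles of blended fuel per mole of Pd(NO3)2 at equivalence ratio phi.

    phi = 1 balances total reducing (fuel) valence against oxidizing valence;
    phi > 1 is fuel-rich. glycine_fraction is a hypothetical mole fraction of
    glycine in a glycine/acetylacetone blend.
    """
    blend_valence = (glycine_fraction * total_valence(GLYCINE)
                     + (1 - glycine_fraction) * total_valence(ACETYLACETONE))
    oxidizer_valence = -total_valence(PD_NITRATE)  # +10 per Pd(NO3)2
    return phi * oxidizer_valence / blend_valence


print(total_valence(PD_NITRATE), total_valence(GLYCINE), total_valence(ACETYLACETONE))
# -10 9 24
print(round(fuel_moles_per_pd(1.0, 1.0), 3), round(fuel_moles_per_pd(1.0, 0.0), 3))
# 1.111 0.417
```

With this parametrization the blend valence is 24 − 15x = 3(8 − 5x) for glycine mole fraction x. The same (8 − 5x) factor appears in the scaling constant k in the Fig. 3 caption, which hints (but does not confirm) that x there is also a glycine fraction.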

a Maps of the combustion synthesis conditions required to obtain experimental outcomes on the Pareto front. Sampled points not on the Pareto front are shown in gray. The combustion synthesis reaction, with parameters manipulated during the optimization highlighted, is shown. In this reaction x controls the fuel blend and φ is the fuel-to-oxidizer ratio, as calculated using Jain’s method (see Supplementary Methods). The fuel-to-oxidizer ratio used is scaled by k = 36/5(8 - 5x) to account for the differing reducing valences of the acetylacetone and glycine fuels. b The empirical Pareto fronts from each of the four campaigns (solid lines) reveal the observed trade-off between temperature and conductivity. The experimental points which define the fronts are shown with open markers. Sampled points not on the Pareto front are shown in gray.

Flexible automation4 enabled us to upgrade Ada (Fig. 2a) by coupling a larger, 6-axis robot to the existing smaller, 4-axis robot. The smaller robot (Fig. 2b) deposited and characterized the thin films8, while the larger robot transported the samples to a commercial X-ray fluorescence (XRF) microscope for elemental analysis. These two robots jointly executed a 7-step experimental workflow (Fig. 2c, see “Methods” section). First, a combustion synthesis precursor solution was formulated from stock solutions and then drop-cast onto a glass microscope slide. The resulting precursor droplet was imaged and then annealed in a forced-convection oven to form a film. The film was subsequently characterized by XRF microscopy, imaging, and 4-point probe conductance mapping. The conductivity of each film was determined by combining the conductance with a film thickness estimated by XRF (see “Autonomous workflow step 7” in “Methods” section, Supplementary Fig. 2). Finally, the conductivity and processing temperature for each film were passed to a multiobjective Bayesian optimization algorithm55 to plan the next experiment based on all the available data (see “Autonomous workflow step 8” in “Methods” section). The algorithm we used is called q-expected hypervolume improvement (qEHVI)55.

All of the steps in the autonomous workflow were performed without human intervention at a typical rate of two samples per hour. Ada could run unattended for 40–60 experiments until the necessary consumables (e.g., pipette tips, mixing vials, glass substrates, and precursors; see “Methods” section) were exhausted. We used Ada to execute a total of 253 combustion synthesis experiments that explored a wide range of pertinent composition and processing variables.

The qEHVI algorithm is one of a number of a posteriori multiobjective optimization algorithms designed to identify the Pareto front55,56,57,58. These multiobjective optimization methods are known as a posteriori methods because preferred solutions are selected after the optimization. We chose to use an a posteriori method for this exploratory study because we sought to identify a range of Pareto-optimal outcomes rather than a single optimal point. We selected the qEHVI algorithm because previously reported benchmarks show that it often resolves the Pareto front in fewer experiments than other algorithms55.

The qEHVI algorithm directed our self-driving laboratory to quantify the trade-off between film conductivity and annealing temperature (Fig. 3). We manually selected eight synthesis conditions spanning most of the design space to provide initialization data for the qEHVI algorithm (Supplementary Table 1). After executing these initial experiments, Ada executed more than 50 iterative qEHVI-guided experiments to map the Pareto front of annealing temperature and conductivity. We performed this autonomous optimization campaign in quadruplicate. Each replicate generated a Pareto front showing a clear trade-off between temperature and conductivity (Fig. 3b).
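Each campaign's empirical Pareto front is the set of non-dominated (annealing temperature, conductivity) outcomes. A minimal, hypothetical helper for extracting it, treating lower temperature and higher conductivity as better, could look like the following; it is an O(n²) sketch adequate for a few hundred experiments, not the authors' analysis code, and the example values are made up.

```python
def pareto_front(points):
    """Non-dominated (temperature, conductivity) pairs, sorted by temperature.

    A point is dominated if another point is at least as good in both objectives
    (lower or equal temperature, higher or equal conductivity) and strictly
    better in at least one of them.
    """
    front = []
    for t_i, s_i in points:
        dominated = any(
            t_j <= t_i and s_j >= s_i and (t_j < t_i or s_j > s_i)
            for t_j, s_j in points
        )
        if not dominated:
            front.append((t_i, s_i))
    return sorted(front)


# Hypothetical outcomes: (annealing temperature in °C, conductivity in S/m)
campaign = [(200, 1.0e5), (220, 2.0e6), (210, 5.0e4), (250, 2.0e6), (180, 1.0e4)]
print(pareto_front(campaign))
# [(180, 10000.0), (200, 100000.0), (220, 2000000.0)]
```

A point never dominates itself because the strict-improvement condition fails, so no index bookkeeping is needed.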

The synthesis conditions tested during the optimization are shown in Fig. 3a; the conditions that created materials on the Pareto front are highlighted. The data revealed that the optimal precursors typically had concentrations near 6 mg mL−1, fuel-to-oxidizer ratios below 1, and fuel blends consisting primarily of acetylacetone. Notably, our experiments did not reveal a single optimal synthesis condition. The conditions required to obtain the maximum conductivity depended in part on the annealing temperature. Specifically, conductive films created below 200 °C required precursors with predominantly acetylacetone fuel. At higher temperatures, however, glycine-rich fuel blends also yielded samples on the Pareto front. For acetylacetone-rich fuels, the fuel-to-oxidizer ratio could vary widely while still yielding films on the Pareto front, whereas the Pareto-optimal samples made from glycine-rich fuel blends spanned only a narrow range of fuel-to-oxidizer ratios. These observations highlight the richness of the data generated by the self-driving laboratory.

Quantification of algorithm performance

We used computer simulations to quantify the benefit of the qEHVI algorithm relative to random search (an open-loop sampling technique that does not use feedback from the experiment to determine which experiment to do next). These simulations were performed by running both the random and qEHVI sampling techniques on a response surface fit to the experimental data (see “Methods” section). Scenarios with and without experimental noise were simulated by adding synthetic noise to the response surface as appropriate (see “Models of the experimental response surface and noise” in “Methods” section). The hypervolume (i.e., the area between the Pareto front and a reference point) was used to measure the progress of optimization (Fig. 4a). We used the acceleration factor (Fig. 4b) and the enhancement factor (Fig. 4c) to compare our closed-loop sampling using qEHVI to open-loop sampling using random search (see “Methods” section)59. In a noise-free scenario, qEHVI required fewer than 100 samples to outperform 10,000 random samples (Fig. 4a). The performance of the qEHVI algorithm degraded in the presence of simulated experimental noise (see “Methods” section) but still exceeded that of random search. This decrease in performance due to noise emphasizes the importance of minimizing experimental noise when developing a self-driving experiment. Benchmarks comparing other closed-loop and open-loop sampling techniques yielded similar results (see Supplementary Fig. 11). These findings highlight how self-driving laboratories can effectively search large materials design spaces without requiring extremely high throughput.

a The hypervolumes achieved by the simulated qEHVI and random searches. The median (solid line) and interquartile range (shaded bands) of the results are shown for simulations with and without simulated experimental noise. b The acceleration factors for the qEHVI algorithm relative to random search. The geometric mean is also shown (dashed line). c The enhancement factor for the qEHVI algorithm relative to random search.
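The exact definitions used here are given in the Methods and ref. 59. As a hedged sketch, the snippet below uses the definitions common in the sequential-learning benchmarking literature, applied to best-so-far hypervolume traces: the acceleration factor is the number of experiments random search needs to reach a target hypervolume divided by the number the algorithm needs, and the enhancement factor is the ratio of the hypervolumes the two methods reach after the same number of experiments. Function names and example traces are hypothetical.

```python
import numpy as np


def experiments_to_reach(trace, target):
    """1-based experiment count at which a best-so-far trace first reaches target (None if never)."""
    hits = np.flatnonzero(np.asarray(trace, dtype=float) >= target)
    return int(hits[0]) + 1 if hits.size else None


def acceleration_factor(algo_trace, random_trace, target):
    """How many times fewer experiments the algorithm needs than random search to reach target."""
    n_algo = experiments_to_reach(algo_trace, target)
    n_random = experiments_to_reach(random_trace, target)
    if n_algo is None or n_random is None:
        return None  # undefined if either search never reaches the target
    return n_random / n_algo


def enhancement_factor(algo_trace, random_trace):
    """Hypervolume ratio at equal experiment budgets (traces truncated to a common length)."""
    n = min(len(algo_trace), len(random_trace))
    return np.asarray(algo_trace[:n], dtype=float) / np.asarray(random_trace[:n], dtype=float)


# Hypothetical best-so-far hypervolume traces (arbitrary units)
algo = [1.0, 3.0, 5.0, 6.0]
rand = [1.0, 2.0, 3.0, 4.0, 5.0]
print(acceleration_factor(algo, rand, target=5.0))  # 5 random vs 3 guided experiments
print(enhancement_factor(algo, rand))
```

In the paper these quantities are summarized over many simulated runs (medians, interquartile ranges, and a geometric mean in Fig. 4), which this sketch does not attempt.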

Translation of discovery to a scalable manufacturing process

The practical application of combustion synthesis would require the deposition of uniform films over large areas that are inaccessible to drop casting. On this basis, we set out to combine palladium combustion synthesis with ultrasonic spray coating60. We sprayed precursors directly onto a preheated glass substrate60 (Fig. 5a, see “Methods” section). The precursors decomposed in less than five minutes to yield reflective, conductive palladium films (Fig. 5b). An XRF map of the films (Fig. 5c) showed improved homogeneity relative to the drop-cast films (Fig. 2b).

a Spray coating apparatus. An ultrasonic nozzle attached to an overhead XYZ stage (not visible) is used to spray palladium combustion synthesis precursors onto glass substrates placed on a hot plate with an aluminum fixture. The precursors decompose to yield palladium films. Scale bar is 3 cm. b Photograph of a typical resulting film on a 3″ × 1″ glass substrate. Sharp edges were produced by masking the substrate using Kapton tape. c XRF map of the sample pictured in b. To aid visualization, the photograph in panel a has been flipped horizontally. Scale bars in b, c are 1 cm.

We performed additional spray coating experiments to verify that the trends observed in the autonomous optimization translate to spray coating (i.e., that conductive palladium films can be obtained below 200 °C and that the film conductivity increases with temperature). Specifically, we spray coated palladium films using three recipes from the autonomously identified Pareto front, with temperatures of 191, 200, and 226 °C (Fig. 6 and Supplementary Table 2). Triplicate samples were spray coated using each recipe. All three recipes yielded films approximately 50–60 nm thick, as measured by XRF microscopy (see “Methods” section). All the films were relatively uniform, with spatial variations in the film thickness less than 5% of the mean within an 8 mm × 20 mm region at the center of each sample (see “Methods” section and Supplementary Table 3). Spatial variations in the conductivity within the same region were measured using the robot and were less than 18% of the mean for all samples (see “Methods” section and Supplementary Table 3). The film conductivity of the lowest-temperature recipe (T = 191 °C) was 1.1 × 10⁵ S m−1, which is approximately 1% of the bulk conductivity of palladium61. The film conductivity increased by more than an order of magnitude (about 18-fold) when the spray coating temperature was increased by 35 °C. The highest-temperature recipe tested (T = 226 °C) yielded palladium films with a conductivity of 2.0 × 10⁶ S m−1, which is comparable to the conductivities of sputtered palladium films reported in the literature (2.0–5.8 × 10⁶ S m−1; Fig. 6)62,63,64. These findings create new opportunities to deposit palladium films without vacuum onto large-area substrates, including an expanded range of temperature-sensitive polymers (e.g., Nafion41, polyethersulfone42, and heat-stabilized polyethylene naphthalate42).
One application of this deposition process could be the fabrication of large, supported palladium membranes for more cost-effective electrocatalytic palladium membrane reactors65.
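The ratios quoted above can be checked directly from the reported conductivities. The bulk value used below is our assumption (a room-temperature resistivity of about 105.4 nΩ m for palladium, i.e., roughly 9.5 × 10⁶ S m−1), not a number taken from ref. 61.

```python
# Spray-coated film conductivities reported above (S/m).
sigma_191C = 1.1e5
sigma_226C = 2.0e6

# Assumption: bulk Pd resistivity of ~105.4 nOhm*m at room temperature.
sigma_bulk = 1 / 105.4e-9

gain = sigma_226C / sigma_191C            # effect of the 35 °C temperature increase
frac_bulk_191 = sigma_191C / sigma_bulk   # fraction of bulk conductivity at 191 °C
frac_bulk_226 = sigma_226C / sigma_bulk   # fraction of bulk conductivity at 226 °C

print(f"gain from 191 to 226 °C: {gain:.1f}x")   # gain from 191 to 226 °C: 18.2x
print(f"191 °C: {frac_bulk_191:.1%} of bulk; 226 °C: {frac_bulk_226:.0%} of bulk")
# 191 °C: 1.2% of bulk; 226 °C: 21% of bulk
```

Under this assumption the numbers are consistent with the "approximately 1%" and "more than an order of magnitude" statements, and the 226 °C films reach roughly a fifth of the bulk value.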

The conductivity values for sputtered films62,63,64 and bulk palladium61 are from previous literature. The spray coating recipes are taken directly from the Pareto front and are given in Supplementary Table 2. For the spray combustion data (see also Supplementary Table 3), each point shows the conductivity of one of the three replicate samples for each recipe. The bars show the average conductivity across all three replicates for each recipe.

Discussion

Here, we mapped out a Pareto front between film processing temperature and conductivity using a self-driving laboratory guided by the qEHVI multiobjective optimization algorithm. This trade-off is just one example of the conflicting objectives routinely faced by materials scientists to which our method could be applied. Our approach eliminates the need for the researcher to specify preferences between competing objectives in advance of the experiment, and it also produces a richer, more valuable data set. In this case, the temperature–conductivity Pareto front is more useful than optimizing conductivity for a fixed temperature limit because processing temperature limits vary depending on the application. Our self-driving laboratory also identified synthesis conditions that translated to a scalable spray-coating method for depositing high-quality, high-conductivity palladium films at temperatures above 190 °C. This work shows how self-driving laboratories can potentially accelerate the translation of materials to industry, where satisfying multiple objectives is essential.

Methods

Materials

MeCN (CAS 75-05-8; high-performance liquid chromatography (HPLC) grade, ≥99.9% purity), glycine (CAS 56-40-6, ACS reagent grade, >98.5% purity) and acetylacetone (CAS 123-54-6; ≥99% purity) were purchased from Sigma-Aldrich. Urea (CAS 57-13-6, ultra-pure; heavy metal content 0.01 ppm) was purchased from Schwarz/Mann. Palladium(II) nitrate hydrate (Pd(NO3)2•H2O; Pd ~40% m/m; 99.9% Pd purity, CAS 10102-05-3) was purchased from Strem Chemicals, Inc. All chemicals were used as received without further purification.

Manual preparation of stock solutions

The self-driving laboratory is provided with starting materials in the form of stock solutions which are prepared manually and then placed in capped 2 mL HPLC vials in a tray where they can be accessed by the self-driving laboratory. All solutions were prepared at a concentration of 12 mg mL−1. The Pd(NO3)2•H2O solution was prepared using MeCN as a solvent while all other solutions were prepared using deionized H2O.

Preparation of glass substrates and other consumables

In addition to stock solutions, the self-driving laboratory uses consumable glass substrates (75 mm × 25 mm × 1 mm microscope slides; VWR catalog no. 16004-430), 2 mL HPLC vials (Canadian Life Science), and 200 µL pipette tips (Biotix, M-0200-BC). These are placed in appropriate racks and trays for access by the robots.

The HPLC vials and pipette tips were used as received, whereas the microscope slides were cleaned by sequential sonication in detergent, deionized water, acetone, and isopropanol for 10 min each8. Wells of 18 mm diameter were then created on the microscope slides using a sprayed enamel coating (DEM-KOTE enamel finish) and circular masks placed at the center of each slide (Supplementary Fig. 1). The wells serve to confine the precursor solution before it dries.

Self-driving laboratory

The self-driving laboratory consists of a precision 4-axis laboratory robot (N9, North Robotics) coupled with a 6-axis collaborative robot (UR5e, Universal Robots). The 4-axis robot is equipped to perform entire thin film deposition and characterization workflows and is described in our previous work8. The 6-axis robot enables samples to be transferred to a variety of additional modules, including the XRF microscope used here. Both robots are equipped with vacuum-based tools for substrate handling. All robots and instruments were controlled by a PC with software written in Python.

Overview of autonomous robotic workflow

The majority of operations in the autonomous robotic workflow are performed by the 4-axis laboratory robot. Samples are transported between the 4-axis robot and the XRF microscope by the 6-axis robot.

The 4-axis robot prepared each sample by combining stock solutions to form a precursor mixture, drop casting this precursor onto a glass slide, and then annealing the sample in a forced convection oven (Supplementary Fig. 1). The samples were characterized by white light photography before and after annealing, X-ray fluorescence microscopy, and 4-point-probe conductance measurements. The resulting data was then automatically analyzed using a custom data pipeline implemented in Python. Finally, the result of the experiment was fed to a Bayesian optimizer which used an expected hypervolume improvement acquisition function to select the next experiment to be performed. Each of these steps is described in further detail below.
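The workflow above forms a closed loop: seed experiments are run first, then planning and execution alternate. The skeleton below is a hypothetical sketch of that control flow only. `run_experiment` stands in for robot steps 1 to 7 and `plan_next` for the optimizer in step 8; neither name comes from Ada's software, and the stubs in the usage lines are placeholders.

```python
from typing import Callable, Dict, List, Tuple

Conditions = Dict[str, float]   # e.g. fuel blend, phi, concentration, temperature
Outcome = Tuple[float, float]   # (annealing temperature in °C, conductivity in S/m)
Record = Tuple[Conditions, Outcome]


def run_campaign(
    seed_conditions: List[Conditions],
    run_experiment: Callable[[Conditions], Outcome],
    plan_next: Callable[[List[Record]], Conditions],
    n_iterations: int,
) -> List[Record]:
    """Run seed experiments, then alternate planning and execution n_iterations times."""
    history: List[Record] = []
    for conditions in seed_conditions:      # manually chosen initialization experiments
        history.append((conditions, run_experiment(conditions)))
    for _ in range(n_iterations):           # closed loop: plan from ALL data so far
        conditions = plan_next(history)
        history.append((conditions, run_experiment(conditions)))
    return history


# Usage with placeholder stubs (no robots or optimizer involved).
fake_run = lambda c: (c["temperature"], 1e4 * (c["temperature"] - 170))
fake_plan = lambda hist: {"temperature": 180.0 + len(hist)}
log = run_campaign([{"temperature": 200.0}, {"temperature": 250.0}],
                   fake_run, fake_plan, n_iterations=3)
print(len(log))  # 5
```

Passing the full history to the planner mirrors the statement that each next experiment is planned from all available data.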

Autonomous workflow step 1: mix precursors

The 4-axis robot formulated each precursor by pipetting varying volumes of the stock solutions described above into a clean 2 mL HPLC vial. Gravimetric feedback from an analytical balance (ZSA120, Scientech) was used to minimize and record pipetting errors. The precursor was mixed by repeated aspiration and dispensing.
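Gravimetric feedback converts each weighed dispense into a volume. A minimal sketch, assuming each dilute stock solution's density can be approximated by its solvent's (about 0.786 g mL−1 for MeCN and 0.997 g mL−1 for water near room temperature; neither value is given in the paper), is shown below. The function names are hypothetical.

```python
# Assumed densities near room temperature; note 1 g/mL == 1 mg/µL.
DENSITY_MG_PER_UL = {"MeCN": 0.786, "H2O": 0.997}


def dispensed_volume_ul(mass_mg: float, solvent: str) -> float:
    """Volume actually dispensed, inferred from the balance reading."""
    return mass_mg / DENSITY_MG_PER_UL[solvent]


def relative_pipetting_error(target_ul: float, mass_mg: float, solvent: str) -> float:
    """Signed fractional error of one dispense (negative means under-delivery)."""
    return (dispensed_volume_ul(mass_mg, solvent) - target_ul) / target_ul


err = relative_pipetting_error(50.0, 38.9, "MeCN")
print(f"{err:+.1%}")  # -1.0%
```

Recording the inferred volumes alongside each sample allows the as-mixed composition to be reconstructed later, even when individual dispenses deviate from their targets.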

Autonomous workflow step 2: drop cast precursor

The 4-axis robot used a vacuum-based substrate handling tool to place a clean glass slide onto a tray. This robot then created a thin film sample by using a pipette to drop cast 98 µL of the precursor into a predefined well on the slide. The solution was ejected from the pipette at a rate of 5 µL s−1 from a height of approximately 1.5 mm above the top surface of the substrate.

Autonomous workflow step 3: image precursor droplet

The 4-axis robot acquired visible-light photographs of each sample before annealing. This robot positioned samples 90 mm below a camera (FLIR Blackfly S USB3; BFS-U3-120S4C-CS; Sony 12.00 MP IMX226 CMOS sensor) fitted with an Edmund Optics 25 mm C Series Fixed Focal Length Imaging Lens (#59–871). The C-mount lens was connected to the CS-mount camera using a Thorlabs CS- to C-Mount Extension Adapter, 1.00″-32 Threaded, 5 mm Length (CML05). The sample was illuminated from the direction of the camera using a MIC-209 3-W ring light. For imaging, the lens was opened to f/1.4, and black flocking paper (Thorlabs BFP1) was placed 10 cm behind the sample.

Autonomous workflow step 4: annealing

After drop casting, the 4-axis robot used the substrate handling tool to transport the precursor-coated slide into a purpose-built miniature convection oven for annealing at a variable temperature between 180 and 280 °C. The most important features of the oven are a low-thermal mass construction (lightweight aluminum frame with glass-fiber insulation) and internal and external fans. These features enable rapid heating and cooling of the sample (Supplementary Fig. 12). A pneumatically actuated lid enables robotic access to the sample. The oven employs a ceramic heating element (P/N 3559K23, McMaster Carr) controlled by a PID temperature controller (P/N CN7523, Omega Engineering). A type-K thermocouple located in the oven air space provides temperature feedback to the controller. In the experiments performed here, the sample was inserted into the oven which was then ramped at 40 °C per minute to the temperature set point, which was then held for 450 s. Upon completion of the hold, the oven lid was opened and a cooling fan turned on to blow ambient temperature air through the oven and over the sample. The sample was removed from the oven after the temperature dropped below 60 °C. The oven was further cooled to below 40 °C prior to loading of the next sample.
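The annealing program described above (a 40 °C per minute ramp from the loading temperature, then a 450 s hold) can be written as a setpoint schedule. This hypothetical sketch models only the programmed profile, not the PID controller or the actual air temperature, and it assumes the ramp starts from the sub-40 °C loading temperature mentioned above.

```python
def oven_setpoint_c(t_s, start_c, setpoint_c, ramp_c_per_min=40.0, hold_s=450.0):
    """Programmed setpoint (°C) t_s seconds after loading, or None once the hold has ended."""
    ramp_s = max(setpoint_c - start_c, 0.0) / ramp_c_per_min * 60.0
    if t_s < ramp_s:
        return start_c + ramp_c_per_min * t_s / 60.0   # linear ramp
    if t_s <= ramp_s + hold_s:
        return setpoint_c                               # isothermal hold
    return None                                         # lid opens, cooling fan on


def program_duration_s(start_c, setpoint_c, ramp_c_per_min=40.0, hold_s=450.0):
    """Ramp time plus hold time for one anneal."""
    return max(setpoint_c - start_c, 0.0) / ramp_c_per_min * 60.0 + hold_s


print(program_duration_s(40.0, 200.0))   # 690.0
print(program_duration_s(40.0, 280.0))   # 810.0
```

Starting from 40 °C, the programmed anneals over the 180 to 280 °C range last roughly 11 to 13.5 min before cooling begins.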

Autonomous workflow step 5: XRF imaging and data analysis

The self-driving laboratory acquired hyperspectral X-ray fluorescence (XRF) images of each sample using a Bruker M4 TORNADO X-ray fluorescence microscope equipped with a customized sample fixture. Samples were transported to the XRF microscope by the UR5e 6-axis robotic arm equipped with a vacuum-based substrate handling tool similar to the one used by the 4-axis N9 robot. A dedicated exchange tray accessible to both robots enabled samples to be passed from one robot to the other.

The XRF microscope has a rhodium X-ray source operated at 50 kV/600 µA/30 W and polycapillary X-ray optics yielding a 25 µm spot size on the sample. The instrument employs twin 30 mm2 silicon drift detectors and achieves an energy resolution of 10 eV. Hyperspectral images were taken over a 20 mm × 20 mm area at a resolution of 125 × 125 pixels. The XRF spectra obtained (reported in counts) were scaled by the integration time (50 ms) and the energy resolution (10 eV) to yield units of counts s−1 eV−1.

To quantify the relative amount of palladium in the film, the palladium Lα X-ray fluorescence line (2.837 keV) was integrated from 2.6 to 3.2 keV. The resulting counts were converted to film thickness estimates by applying a calibration factor obtained using reference samples (see below). Ninety-seven points of interest were defined within the XRF hypermap of the sample (Supplementary Fig. 3). For each point of interest, the average XRF counts per second were calculated over a 3 mm × 3 mm area.
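A hedged NumPy sketch of this reduction follows. It assumes the hypermap is stored as a (rows, columns, channels) array of raw counts, that the 10 eV figure quoted above is the channel width, and that the calibration is a single multiplicative factor. The point-of-interest centre used in the test is hypothetical, since Supplementary Fig. 3 is not reproduced here.

```python
import numpy as np

FIELD_MM = 20.0   # mapped area is 20 mm x 20 mm
N_PIXELS = 125    # 125 x 125 pixels, i.e. 0.16 mm per pixel


def pd_cps_map(hypercube, energies_kev, dwell_s=0.05, channel_ev=10.0, window_kev=(2.6, 3.2)):
    """Integrated Pd L-line intensity per pixel, in counts per second.

    hypercube: raw counts with shape (rows, cols, channels); energies_kev: channel energies.
    """
    spectra = hypercube / (dwell_s * channel_ev)                 # counts s^-1 eV^-1
    in_window = (energies_kev >= window_kev[0]) & (energies_kev <= window_kev[1])
    return spectra[..., in_window].sum(axis=-1) * channel_ev     # integrate over eV -> counts s^-1


def mean_over_square(cps_map, center_mm, side_mm=3.0):
    """Average a cps map over a side_mm x side_mm square centred at (x_mm, y_mm)."""
    px_mm = FIELD_MM / N_PIXELS
    half = side_mm / 2
    x0, x1 = int((center_mm[0] - half) / px_mm), int(np.ceil((center_mm[0] + half) / px_mm))
    y0, y1 = int((center_mm[1] - half) / px_mm), int(np.ceil((center_mm[1] + half) / px_mm))
    return float(cps_map[max(y0, 0):y1, max(x0, 0):x1].mean())


def thickness_nm(cps, nm_per_cps):
    """Apply a (hypothetical) single multiplicative calibration factor."""
    return cps * nm_per_cps
```

Restricting the channel axis to the 2.6 to 3.2 keV window before summing keeps memory use modest even for a full 125 × 125 map.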

Autonomous workflow step 6: image annealed film

The self-driving laboratory acquired visible light photographs (as described in step 3) of each sample after annealing.

Autonomous workflow step 7: film conductivity measurement

After hyperspectral XRF imaging, the sample was returned by the UR5e robot to the N9 robot for film conductance measurements. Four-point probe conductance measurements were performed with a Keithley Series K2636B System Source Meter instrument connected to a Signatone four-point probe head (part number SP4-40045TBN; 0.040-inch tip spacing, 45 g pressure, and tungsten carbide tips with 0.010-inch radii) by a Signatone triax to BNC feedthrough panel (part number TXBA-M160-M). The source current was stepped from 0 to 1 mA in 0.2 mA steps. After each current step, the source meter was stabilized for 0.1 s and the voltage across the inner probes was then averaged for three cycles of the 60 Hz power line (i.e., for 0.05 s) and recorded. Conductance measurements were made on the same 97 points of interest as analyzed in the XRF data, as defined in Supplementary Fig. 3.

The film conductivity was calculated using a custom data analysis pipeline implemented in Python using the open-source Luigi framework66. This pipeline combined conductance data and XRF data to estimate the film conductivity at each of the 97 points of interest on the sample.

For each set of current–voltage measurements at each position on each sample, the RANSAC robust linear fitting algorithm67 was used to extract the conductance (dI/dV). The voltage compliance limit of the K2636B was set to 10 V and voltage measurements greater than 10 V were therefore considered to have saturated the Source Meter instrument and automatically discarded by the data analysis pipeline.
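A minimal sketch of this step, assuming NumPy. Readings beyond the 10 V compliance limit are dropped first, then a RANSAC-style consensus fit of current against voltage is performed. With only six current steps (0 to 1 mA in 0.2 mA increments), this sketch tries every pair of points instead of sampling pairs at random. The default inlier tolerance mirrors scikit-learn's `RANSACRegressor` default (the median absolute deviation of the target); the function name and tolerance choice are our assumptions, not the paper's pipeline.

```python
from itertools import combinations

import numpy as np


def ransac_conductance(voltage_v, current_a, residual_tol_a=None, compliance_v=10.0):
    """Conductance dI/dV (S) from a consensus line fit of current vs. voltage, or None."""
    v = np.asarray(voltage_v, dtype=float)
    i = np.asarray(current_a, dtype=float)
    ok = np.abs(v) <= compliance_v                 # discard readings above the compliance limit
    v, i = v[ok], i[ok]
    if v.size < 2:
        return None
    if residual_tol_a is None:                     # MAD of the current values
        residual_tol_a = max(np.median(np.abs(i - np.median(i))), np.finfo(float).eps)
    best = None
    for a, b in combinations(range(v.size), 2):    # candidate line through each pair
        if v[a] == v[b]:
            continue
        slope = (i[b] - i[a]) / (v[b] - v[a])
        intercept = i[a] - slope * v[a]
        inliers = np.abs(i - (slope * v + intercept)) <= residual_tol_a
        if best is None or inliers.sum() > best.sum():
            best = inliers                         # keep the largest consensus set
    if best is None:
        return None
    slope, _ = np.polyfit(v[best], i[best], 1)     # least-squares refit on inliers only
    return float(slope)
```

For example, a 2 Ω film with one corrupted voltage reading still returns dI/dV ≈ 0.5 S, because the corrupted point falls outside the consensus set and is excluded from the final least-squares refit.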

The conductivity of the thin films was then calculated by combining the 4-point-probe conductance data with the film thicknesses estimated by XRF:

$$\sigma = \frac{\ln 2}{\pi t}\,\frac{dI}{dV}$$

where dI/dV is the conductance from the 4-point-probe measurement, t is estimated film thickness from the XRF measurements, and σ is conductivity.
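As a worked example, the sketch below assumes the standard collinear four-point-probe relation for a thin, laterally large film, σ = ln 2/(π t) · dI/dV. This is reasonable here because the 0.040-inch (1.016 mm) probe spacing is far larger than the ~50 nm film thickness. Finite-size corrections for the 18 mm well are not included, and the function names and inputs are illustrative.

```python
import math


def film_conductivity(conductance_s: float, thickness_m: float) -> float:
    """Thin-film conductivity (S/m) from collinear 4-point-probe conductance dI/dV (S).

    Assumes sigma = ln(2) / (pi * t) * dI/dV, valid for t << probe spacing on a
    laterally large film; no finite-size correction is applied.
    """
    return math.log(2) / (math.pi * thickness_m) * conductance_s


def required_conductance(sigma_s_per_m: float, thickness_m: float) -> float:
    """Inverse relation: the dI/dV a film of given conductivity and thickness would show."""
    return sigma_s_per_m * math.pi * thickness_m / math.log(2)


print(f"{film_conductivity(0.5, 55e-9):.3g} S/m")  # 2.01e+06 S/m
```

A 55 nm film reading dI/dV = 0.5 S (2 Ω) therefore corresponds to about 2.0 × 10⁶ S m−1, the same order as the best spray-coated films. These inputs are illustrative, not measured values.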

Due to the poor morphology of the drop-cast films, a robust conductivity estimation scheme was employed. First, conductance data was excluded for any measurement positions with zero conductance. Next, outliers were excluded from the remaining conductance data using a kernel density exclusion method (see below). Outliers were also excluded from the XRF film thickness estimates using the same exclusion method. Conductivities were calculated for each position on the sample for which neither conductance nor XRF data was excluded. The mean of these conductivities was returned to the optimizer (see below). In cases where all points were discarded, a mean conductivity of 0 was reported.

The outlier kernel density exclusion method was performed by calculating Gaussian kernel density estimates for the conductance and XRF data, normalizing the density between 0 and 1, and rejecting data points with a kernel density below 0.3. Bandwidths of 5 × 10⁻³ μΩ−1 m−1 and 5 × 10³ cps were used for the conductance and XRF data, respectively.

Autonomous workflow step 8: algorithmic experiment planning

The experiment parameters for each optimization experiment performed on the autonomous laboratory were determined by the qEHVI55 multiobjective Bayesian optimization algorithm. In brief, this algorithm proposes experiments expected to increase the area underneath the Pareto front by the largest amount. More formally, the algorithm proposes a batch of q experiments (q = 1 here, but q could be increased to exploit parallelized experimentation), which are collectively expected to increase the hypervolume between the Pareto front and a reference point by the largest amount. The hypervolume is a generalization of volume to an arbitrary number of dimensions; this generalization supports optimization with more than two objectives. The reference point must be specified prior to the optimization and specifies a minimum value of interest for each objective.
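For the two objectives here (minimize temperature, maximize conductivity), the hypervolume reduces to an area that can be computed by sweeping over points sorted by temperature. The following minimal sketch is our own helper, not BoTorch's implementation. The reference point (`ref_temp`, `ref_sigma`) bounds the region from above in temperature and from below in conductivity, and the example points are hypothetical.

```python
def hypervolume_2d(points, ref_temp, ref_sigma):
    """Area dominated by (temperature, conductivity) points relative to a reference point.

    Lower temperature and higher conductivity are better; only points with
    temperature < ref_temp and conductivity > ref_sigma contribute.
    """
    pts = sorted((t, s) for t, s in points if t < ref_temp and s > ref_sigma)
    area, best_sigma = 0.0, ref_sigma
    for k, (t, s) in enumerate(pts):
        best_sigma = max(best_sigma, s)  # best conductivity achievable at or below temperature t
        next_t = pts[k + 1][0] if k + 1 < len(pts) else ref_temp
        area += (next_t - t) * (best_sigma - ref_sigma)
    return area


front = [(200.0, 2.0e6), (250.0, 3.0e6)]
print(hypervolume_2d(front, ref_temp=280.0, ref_sigma=0.0))  # 190000000.0
```

Dominated points can be passed in without filtering because they never raise the running maximum conductivity.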

The algorithm involves two major conceptual steps: modeling the objectives from data and proposing the next experiment. In the configuration used here, each objective is assumed to be independent and is modeled with an independent Gaussian process (see Supplementary Figs. 5 and 6). Based on the models for each objective, the expected value of the hypervolume improvement associated with any candidate experiment can be computed; the candidate experiment with the largest expected hypervolume improvement is selected. We ran the qEHVI algorithm using the implementation available in the open-source BoTorch Bayesian optimization library68,69. We used a temperature reference point at the upper limit of the experiment (280 °C) so that any outcome with a processing temperature below this upper limit would be targeted. We used a dynamic conductivity reference point set to 5% of the running observed maximum conductivity. This dynamic reference point ensured that the optimization would identify Pareto-optimal outcomes over a wide range of conductivity values and did not require prior knowledge of the scale of conductivity values expected. We used heteroskedastic Gaussian processes to model both the conductivity and the temperature68. We assigned each conductivity point an uncertainty equal to 20% of its value, which is comparable to the repeatability of the experiment (Supplementary Fig. 13). Zero uncertainty was assigned to the temperature values, which were manipulated rather than responding variables and were trivial to model.
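The sketch below makes the acquisition step concrete by estimating the expected hypervolume improvement of a single candidate (q = 1) with Monte Carlo sampling. This is the same basic idea behind qEHVI, although BoTorch's implementation differs (for example, it partitions the non-dominated region into boxes and samples the posterior jointly). The sketch assumes a Gaussian posterior for the candidate's conductivity, with the mean and standard deviation supplied by a GP that is not shown. It treats the candidate's temperature as exact, as described above, and uses the 280 °C and 5%-of-running-maximum reference points. All names and example candidates are hypothetical.

```python
import numpy as np


def hypervolume_2d(points, ref_temp, ref_sigma):
    """Area dominated by (temperature, conductivity) points; low T and high sigma are better."""
    pts = sorted((t, s) for t, s in points if t < ref_temp and s > ref_sigma)
    area, best = 0.0, ref_sigma
    for k, (t, s) in enumerate(pts):
        best = max(best, s)
        next_t = pts[k + 1][0] if k + 1 < len(pts) else ref_temp
        area += (next_t - t) * (best - ref_sigma)
    return area


def expected_hv_improvement(observed, cand_temp, sigma_mean, sigma_std,
                            ref_temp=280.0, ref_fraction=0.05, n_samples=2000, seed=0):
    """Monte Carlo EHVI for one candidate (q = 1).

    observed: list of (temperature, conductivity) results so far. The candidate's
    temperature is exact; its conductivity is drawn from N(sigma_mean, sigma_std**2).
    """
    ref_sigma = ref_fraction * max(s for _, s in observed)   # dynamic reference point
    base = hypervolume_2d(observed, ref_temp, ref_sigma)
    draws = np.random.default_rng(seed).normal(sigma_mean, sigma_std, n_samples)
    gains = [hypervolume_2d(observed + [(cand_temp, d)], ref_temp, ref_sigma) - base
             for d in draws]
    return float(np.mean(gains))


observed = [(200.0, 2.0e6), (250.0, 3.0e6)]
# (temperature, posterior mean, posterior std) for hypothetical candidates
candidates = [(190.0, 1.5e6, 4e5), (230.0, 3.5e6, 7e5), (270.0, 2.5e6, 5e5)]
best = max(candidates, key=lambda c: expected_hv_improvement(observed, *c))
print(best)
```

Because improvements are clipped at zero by construction (a dominated draw adds no area), uncertain candidates near the front can score well even when their posterior mean is dominated.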

Calibration of XRF signal against reference samples

To enable palladium film thickness to be estimated from the XRF signal, a calibration procedure was performed on sputtered palladium reference samples having four different nominal thicknesses (10, 50, 100, and 250 nm). These samples were characterized by profilometry and XRF. A linear relationship between the film thickness and the XRF counts was observed (see Supplementary Fig. 2). This relationship was used to estimate the thickness of each sample from the XRF data.
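The sketch below shows the calibration step as an ordinary least-squares line of profilometry thickness against XRF counts. The counts are invented for illustration and chosen to lie exactly on a line. The measured calibration data are in Supplementary Fig. 2, and the text does not state whether thickness was regressed on counts or the reverse.

```python
from typing import List, Tuple


def fit_line(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical calibration set: XRF counts vs. profilometry thickness (nm).
xrf_counts = [500.0, 2100.0, 4100.0, 10100.0]
thickness_nm = [10.0, 50.0, 100.0, 250.0]

slope, intercept = fit_line(xrf_counts, thickness_nm)


def thickness_from_counts(counts: float) -> float:
    """Apply the calibration line to a new XRF measurement (nm)."""
    return slope * counts + intercept


print(thickness_from_counts(6100.0))  # about 150 nm for these made-up numbers
```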

The reference samples were sputtered onto clean glass microscope slides (see cleaning procedure above) using a Univex 250 sputter deposition system with a DC magnetron source at 100 W and an argon working pressure of 5 × 10⁻⁶ bar. The base pressure of the deposition chamber was 5 × 10⁻⁹ bar. Films were deposited after 1 min of pre-sputtering, with the substrate holder rotating at 10 rpm. Nominal film thickness was monitored using a quartz crystal microbalance mounted in the sputter chamber. A 2-inch-diameter palladium sputter target (99.99%, ACI Alloys) was used. Step edges for profilometry were created by placing strips of Kapton™ tape onto the substrates before sputtering and removing them afterwards. The substrates were rinsed with acetone and IPA to remove any Kapton™ tape residue before profilometry.

Profilometry was performed on the reference samples using a Bruker DektakXT stylus profilometer. XRF was performed on the reference samples using the same settings as for the drop-cast samples during the optimization campaigns (see above).

Deposition and characterization of spray-coated samples

The spray coater was built from an ultrasonic nozzle (Microspray, USA) mounted on a custom motorized XYZ gantry system (Zaber Technologies Inc., Canada) above a hotplate (PC-420D, Corning, USA). Precursor ink was fed to the nozzle by a syringe pump (Cavro Centris pump, PN 30098790-B, Tecan Trading AG, Switzerland). The ultrasonic spray nozzle was operated at 3 W and 120 kHz. For each recipe, a total of 700 µL of precursor was sprayed onto a glass substrate (75 mm × 25 mm × 1 mm microscope slide; VWR catalog no. 16004-430) placed on a custom aluminum fixture mounted to the hotplate. Approximate substrate temperatures were measured using a thermocouple attached to a glass substrate with thermal cement. This instrumented substrate was placed on the hotplate fixture at a position symmetrical to the position where the substrates to be coated were placed. Before each recipe reported here was spray coated, the hotplate power was adjusted until the steady-state temperature of the instrumented substrate was within 4 °C of the desired temperature. To achieve consistent thermal contact between the substrates and the hotplate fixture, both the instrumented substrate and the substrate to be coated were affixed to the hotplate with thermal paste (TG-7, Thermaltake Technology Co., Taiwan). The nozzle speed was 5.1 mm s−1, the nozzle-to-substrate distance was 15 mm, the spray flow rate was 2 µL s−1, and the carrier gas flow rate was 7 L min−1. During spraying, the nozzle moved in a serpentine pattern consisting of twelve 50 mm lines with 25 mm spacing (see an illustration of the pattern in Supplementary Fig. 14). The coating on each sample was produced by repeating this spray pattern three times with no delay between passes. After spray coating, the samples were left to anneal on the hotplate for 5 min.
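The spray parameters above can be checked for consistency with a quick calculation. The sketch compares the time needed to dispense 700 µL at 2 µL s⁻¹ with the time the nozzle spends on the 50 mm lines of the pattern at 5.1 mm s⁻¹. It counts only the twelve lines per pass and ignores moves between lines and any acceleration. It is therefore an estimate, not a description of the gantry's actual motion.

```python
# Parameters taken from the spray-coating description above.
VOLUME_UL = 700.0             # total precursor per recipe (µL)
FLOW_UL_PER_S = 2.0           # spray flow rate (µL/s)
LINES_PER_PASS = 12           # serpentine lines per pass
LINE_LENGTH_MM = 50.0         # length of each line (mm)
PASSES = 3                    # repeats of the pattern
NOZZLE_SPEED_MM_PER_S = 5.1   # nozzle traverse speed (mm/s)


def dispense_time_s() -> float:
    """Time needed to pump the full precursor volume."""
    return VOLUME_UL / FLOW_UL_PER_S


def line_travel_time_s() -> float:
    """Time spent traversing the lines only (transitions between lines ignored)."""
    path_mm = LINES_PER_PASS * LINE_LENGTH_MM * PASSES
    return path_mm / NOZZLE_SPEED_MM_PER_S


if __name__ == "__main__":
    print(f"dispense: {dispense_time_s():.0f} s")        # 350 s
    print(f"line travel: {line_travel_time_s():.0f} s")  # 353 s
```

Under this simplification, the two times agree to within about 1%. This is roughly what one would expect if spraying happens mainly along the line segments.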

The spray-coated palladium films were characterized at 26 locations on a 2 × 13 grid within an 8 × 20 mm region of interest at the center of the film (see Supplementary Fig. 14). The amount of palladium at each location was measured using the XRF microscope and converted to a film thickness estimate using the same calibration applied to the drop-cast films. The film conductance at each location was measured using the 4-point probe system on the robot described above. The film conductivity at each of the 26 measurement locations was calculated with Eq. (1) from the 4-point probe conductance and XRF film thickness at that location. The means and standard deviations of the resulting 26 thickness and 26 conductivity values are reported for each film in Supplementary Table 3.
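Eq. (1) appears earlier in the paper and is not reproduced in this section. The sketch below assumes the standard thin-film result for an in-line, equally spaced four-point probe, σ = (ln 2/π)·G/t. Here G = I/V is the measured conductance and t is the XRF-derived thickness. The geometric correction actually used may differ, and so may the choice of sample rather than population standard deviation. The values are illustrative, not the measurements behind Supplementary Table 3.

```python
import math
import statistics
from typing import List, Tuple


def conductivity_s_per_m(conductance_s: float, thickness_m: float) -> float:
    """Thin-film four-point-probe conductivity, assuming sigma = ln2/pi * G / t."""
    return (math.log(2) / math.pi) * conductance_s / thickness_m


def summarize(conductances_s: List[float], thicknesses_m: List[float]) -> Tuple[float, float, float, float]:
    """Per-location conductivity, then mean and sample standard deviation of each quantity."""
    sigmas = [conductivity_s_per_m(g, t) for g, t in zip(conductances_s, thicknesses_m)]
    return (
        statistics.mean(thicknesses_m),
        statistics.stdev(thicknesses_m),
        statistics.mean(sigmas),
        statistics.stdev(sigmas),
    )


if __name__ == "__main__":
    # Illustrative values for three of the 26 grid locations.
    g = [0.90, 1.00, 1.10]         # conductance (S)
    t = [95e-9, 100e-9, 105e-9]    # thickness (m)
    t_mean, t_std, s_mean, s_std = summarize(g, t)
    print(f"thickness {t_mean * 1e9:.0f} ± {t_std * 1e9:.0f} nm")
    print(f"conductivity {s_mean:.2e} ± {s_std:.1e} S/m")
```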

Computer simulations of optimization algorithm performance

Computer simulations were used to study the performance of the qEHVI algorithm for optimizing the combustion synthesis experiments. A model of the experimental response surface was built from the experimental data. Experimental optimizations were then simulated by sampling the model using grid search, random search, Sobol sampling, and the qEHVI and qParEGO algorithms. Optimization performance was quantified with and without simulated experimental noise. The performance of qEHVI relative to random sampling was quantified using the acceleration factor (AF) and enhancement factor (EF) metrics59. These simulation procedures are described in more detail below.

Models of the experimental response surface and noise

Gaussian process regression was used to create a model of the experimental response surface using the combined data from all four optimization campaigns. This model predicts the experimental outputs (i.e., annealing temperature and conductivity) from the experimental inputs (i.e., fuel-to-oxidizer ratio, fuel blend, total concentration, and annealing temperature). Our model is composed of two separate Gaussian processes, as implemented in the scikit-learn Python package67. Each Gaussian process is regressed on a single experimental output (i.e., either temperature or conductivity) and all four of the experimental inputs. The kernels used for the conductivity (\(k_{\mathrm{cond}}\)) and temperature (\(k_{\mathrm{temp}}\)) models are:

where \(k_{\mathrm{lin}}\) is a constant kernel, \(k_{\mathrm{SE}}\) is a squared exponential kernel, \(k_{\mathrm{noise}}\) is a white noise kernel, and * indicates that the lengthscale of the kernel is fixed to 1.
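The kernel expressions are not reproduced in this section, so the composition below is an assumption rather than the authors' exact kernels. It uses a constant kernel multiplying a squared exponential (scikit-learn's `RBF`), plus a `WhiteKernel`. An option fixes the lengthscale to 1, as the asterisk notation describes. The sketch shows how one such independent output model could be built and fitted with scikit-learn on four normalized inputs. Synthetic data stand in for the experimental data set.

```python
from typing import Optional

import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, ConstantKernel, WhiteKernel


def make_kernel(n_inputs: int, fix_lengthscale: bool):
    """Constant * squared-exponential + white noise (assumed composition)."""
    if fix_lengthscale:
        # Lengthscale held at 1 and excluded from hyperparameter optimization.
        se = RBF(length_scale=1.0, length_scale_bounds="fixed")
    else:
        # One learnable lengthscale per input dimension.
        se = RBF(length_scale=np.ones(n_inputs), length_scale_bounds=(1e-2, 1e2))
    return ConstantKernel(1.0) * se + WhiteKernel(noise_level=1e-2)


def fit_output_model(X: np.ndarray, y: np.ndarray, fix_lengthscale: bool) -> GaussianProcessRegressor:
    """Fit one independent GP to one normalized output."""
    gp = GaussianProcessRegressor(
        kernel=make_kernel(X.shape[1], fix_lengthscale),
        n_restarts_optimizer=3,
        random_state=0,
    )
    return gp.fit(X, y)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-in for the four normalized inputs and one output.
    X = rng.uniform(size=(40, 4))
    y = np.sin(3.0 * X[:, 0]) + 0.5 * X[:, 3] + 0.05 * rng.standard_normal(40)
    gp = fit_output_model(X, y, fix_lengthscale=False)
    mean, std = gp.predict(X[:5], return_std=True)
    print(gp.kernel_)                  # fitted hyperparameters
    print(gp.kernel_.k2.noise_level)   # learned white-noise level
```

The fitted white-noise level is the quantity that the noisy simulations below reuse as the target variance of the simulated experimental noise.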

The four types of input data and two types of output data were each normalized prior to training the model. To simplify the optimization to be strictly a maximization problem, the temperature values (which must be minimized) were multiplied by negative one. The leave-one-out cross-validation residuals (LOOCV; Supplementary Figs. 4, 9, and 10 and Supplementary Table 4) are comparable to the measured experimental uncertainties (Supplementary Table 1). We also plotted the LOOCV residuals as a function of each input (Supplementary Fig. 6), each modeled output (Supplementary Fig. 7), and sampling order (Supplementary Fig. 8) and observed that the distribution of the residuals was largely random.
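The preprocessing and cross-validation described above can be sketched as follows. The sketch assumes min-max scaling to [0, 1], since the text says only that the data were normalized. It uses scikit-learn's `LeaveOneOut` splitter with `cross_val_predict`. Temperature is negated before scaling so that both outputs are maximized. The data, the kernel, and the function names are placeholders, not the authors' settings.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, ConstantKernel, WhiteKernel
from sklearn.model_selection import LeaveOneOut, cross_val_predict


def minmax(a: np.ndarray) -> np.ndarray:
    """Scale a vector (or each column of a matrix) to [0, 1]."""
    lo, hi = a.min(axis=0), a.max(axis=0)
    return (a - lo) / (hi - lo)


def loocv_residuals(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Observed minus leave-one-out predicted values for one output."""
    kernel = ConstantKernel(1.0) * RBF(np.ones(X.shape[1])) + WhiteKernel(1e-2)
    gp = GaussianProcessRegressor(kernel=kernel, random_state=0)
    return y - cross_val_predict(gp, X, y, cv=LeaveOneOut())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X_raw = rng.uniform(size=(25, 4))             # stand-in for the four inputs
    temperature_c = 180.0 + 100.0 * X_raw[:, 3]   # illustrative annealing temperature
    X = minmax(X_raw)
    y_temp = minmax(-temperature_c)               # negate: lower T gives a higher score
    res = loocv_residuals(X, y_temp)
    print(np.abs(res).mean())
```

Plotting `res` against each input, each output, and the sampling order gives the kind of residual diagnostics described above.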

For the simulations with no noise, the model posterior means were used directly to represent the experiment. For simulations with experimental noise, noisy conductivity values were simulated by randomly sampling a modified Maxwell–Boltzmann distribution. First, the Maxwell–Boltzmann distribution was flipped across the y-axis by negating the x term. Second, the mean of this Maxwell–Boltzmann distribution was set to the noiseless model posterior mean. Finally, the variance of the Maxwell–Boltzmann distribution was set equal to the noise level (or variance) of the white noise kernel. We chose Maxwell–Boltzmann noise to model the experimental noise because drop-cast samples tend to exhibit a wide range of downward deviations in apparent conductivity due to their poor morphology.

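The Maxwell–Boltzmann probability density function is

\[ f(x; a) = \sqrt{\frac{2}{\pi}}\,\frac{x^{2}}{a^{3}}\exp\left(-\frac{x^{2}}{2a^{2}}\right), \quad x \ge 0, \]

where a is the distribution parameter. Since we set the variance of the Maxwell–Boltzmann distribution (\(\sigma_{\mathrm{B}}^{2}\)) equal to the white noise kernel noise level (\(\sigma_{\mathrm{noise}}^{2}\)),

\[ \sigma_{\mathrm{noise}}^{2} = \sigma_{\mathrm{B}}^{2} = \frac{a^{2}(3\pi - 8)}{\pi}. \]

We computed the distribution parameter for the Maxwell–Boltzmann-distributed simulated experimental noise as:

\[ a = \sqrt{\frac{\pi\,\sigma_{\mathrm{noise}}^{2}}{3\pi - 8}}. \]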
If subtracting the Maxwell–Boltzmann noise from the posterior mean resulted in a value less than zero, the noisy model value was set to zero.
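The noise model can be sketched with the Python standard library alone. A Maxwell–Boltzmann variate with parameter a is the Euclidean norm of three independent zero-mean normal variates with standard deviation a. Using this identity avoids depending on a particular statistics package. The parameter is obtained from the white-noise variance as a = sqrt(π σ²_noise / (3π − 8)). The sketch then subtracts the variate from the noiseless posterior mean and clips negative results to zero, following the subtraction rule stated above. The function names and example numbers are hypothetical.

```python
import math
import random


def maxwell_parameter(noise_variance: float) -> float:
    """Parameter a that gives a Maxwell-Boltzmann variance equal to noise_variance."""
    return math.sqrt(math.pi * noise_variance / (3.0 * math.pi - 8.0))


def sample_maxwell(a: float, rng: random.Random) -> float:
    """Norm of a 3-D isotropic Gaussian with per-axis std a is Maxwell-Boltzmann(a)."""
    return math.sqrt(sum(rng.gauss(0.0, a) ** 2 for _ in range(3)))


def noisy_value(posterior_mean: float, noise_variance: float, rng: random.Random) -> float:
    """Subtract Maxwell-Boltzmann noise from the noiseless mean and clip at zero."""
    a = maxwell_parameter(noise_variance)
    return max(posterior_mean - sample_maxwell(a, rng), 0.0)


if __name__ == "__main__":
    rng = random.Random(0)
    # Illustrative normalized conductivity and white-noise level.
    draws = [noisy_value(0.6, 0.01, rng) for _ in range(5)]
    print([round(d, 3) for d in draws])
```

Because the Maxwell–Boltzmann variate is never negative, every simulated value lies at or below the posterior mean. This reproduces the one-sided, downward scatter attributed to poor drop-cast morphology.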

Sampling strategies

To compare the performance of closed-loop and open-loop approaches, several sampling strategies (grid search, random search, Sobol sampling, and the qEHVI and qParEGO algorithms) were used to sample both the noise-free and noisy experimental models. Random sampling was performed by drawing samples from a uniform distribution across the entire normalized input space. Sobol sampling was performed with a scrambling technique so that each Sobol sequence was unique, yielding a statistically meaningful distribution of optimizations70. qEHVI and qParEGO were initialized with ten scrambled Sobol points. The qEHVI algorithm was configured as for the physical experiments detailed above. The qParEGO algorithm was configured using the default settings, except for the reference point, which was configured in the same way as for qEHVI. The complete benchmarking results are shown in Supplementary Fig. 11.
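Scrambled Sobol initialization and uniform random sampling over the normalized four-dimensional input space can be sketched with SciPy's `scipy.stats.qmc.Sobol` and NumPy. Creating a new scrambled `Sobol` engine without a fixed seed gives each replicate its own randomized sequence. The ten-point initialization mirrors the text, but the function names are hypothetical. When ten points are requested, SciPy may warn that Sobol balance properties require a power-of-two number of points.

```python
from typing import Optional

import numpy as np
from scipy.stats import qmc

N_INPUTS = 4      # fuel-to-oxidizer ratio, fuel blend, concentration, temperature
N_INITIAL = 10    # scrambled Sobol points used to start qEHVI / qParEGO


def sobol_initial_points(n: int = N_INITIAL, d: int = N_INPUTS) -> np.ndarray:
    """A freshly scrambled Sobol sequence in [0, 1)^d (a new scramble per call)."""
    engine = qmc.Sobol(d=d, scramble=True)
    return engine.random(n)


def random_points(n: int, d: int = N_INPUTS, rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Uniform random samples over the normalized input space."""
    rng = rng if rng is not None else np.random.default_rng()
    return rng.uniform(0.0, 1.0, size=(n, d))


if __name__ == "__main__":
    replicate_a = sobol_initial_points()
    replicate_b = sobol_initial_points()
    # Independent scrambles, so the two replicates start from different points.
    print(replicate_a.shape, np.allclose(replicate_a, replicate_b))
```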

Simulated optimization campaigns

The performance of each sampling strategy (grid, random, Sobol, qParEGO, and qEHVI) was determined both with and without experimental noise. Each simulated optimization campaign was performed for 1000 replicates of 100 experimental iterations, except for random sampling, which was performed for 100,000 iterations for use in the acceleration calculations (shown in Fig. 4b). If an optimization algorithm produced an error during a replicate, that replicate was discarded and repeated.

Pareto front and hypervolume

The Pareto front is defined by the set of samples for which no other sample simultaneously improves all the objectives. When assessing the performance of a simulation, the hypervolume was computed using the least desirable value of each objective function (the nadir objective vector) as the reference point (i.e., zero conductivity and a temperature of 280 °C). This reference point was held constant when evaluating all of the simulated optimization campaigns. We assessed the noisy optimizations such that the only difference between the noisy and noise-free optimizations was the information provided to the optimization algorithm. To do so, we followed the procedure described by Daulton and coworkers58, wherein the hypervolume of optimizations using the noisy experimental model was calculated from the corresponding points of the noiseless model. Since each objective of the model is normalized to the range [0, 1], the hypervolume of the model also lies in the range [0, 1]. For each simulated optimization campaign, the normalized hypervolume was calculated at each iteration. When calculating acceleration and enhancement factors, each of the 1000 simulated qEHVI campaigns was compared with each of the 1000 simulated random campaigns, resulting in 1,000,000 comparisons.
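The Pareto filter and the noisy-versus-noiseless bookkeeping can be sketched as below. The filter uses the usual dominance test: at least as good in every objective and strictly better in at least one, with all objectives maximized. Following the evaluation procedure described above, noisy observations drive which inputs get sampled. The resulting front is then scored with the noiseless model values at those same inputs. Names and numbers are illustrative.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dominates(a: Vector, b: Vector) -> bool:
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points: List[Vector]) -> List[Vector]:
    """Points that no other point dominates (all objectives maximized)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def scored_front(noiseless: List[Vector], sampled: List[int]) -> List[Vector]:
    """Pareto front of the noiseless values at the inputs an optimizer chose.

    `sampled` holds indices of candidate inputs selected using noisy observations;
    the front that is scored uses the noiseless model value at each of them.
    """
    return pareto_front([noiseless[i] for i in sampled])


if __name__ == "__main__":
    # (normalized conductivity, normalized negated temperature)
    noiseless = [(0.9, 0.2), (0.5, 0.6), (0.4, 0.5), (0.1, 0.9)]
    print(scored_front(noiseless, sampled=[0, 1, 2]))  # [(0.9, 0.2), (0.5, 0.6)]
```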

Calculation of acceleration and enhancement factor

The acceleration factor quantifies how much faster one sampling technique is than another (Eq. 5). For example, if sampling technique B requires 40 samples to reach the performance attained by technique A after 20 samples, the acceleration factor of A relative to B at 20 samples is 2.

\[ \mathrm{AF}_{\mathrm{A:B}}(n_{\mathrm{a}}) = \frac{n_{\mathrm{b}}}{n_{\mathrm{a}}}, \qquad n_{\mathrm{b}} = \min\{\, n : P_{\mathrm{B}}(n) \ge P_{\mathrm{A}}(n_{\mathrm{a}}) \,\} \tag{5} \]

where \(\mathrm{AF}_{\mathrm{A:B}}(n_{\mathrm{a}})\) is the acceleration of technique A with respect to B at \(n_{\mathrm{a}}\) samples, and \(P_{i}(n)\) is the performance of technique i at n samples. Note that technique A may outperform B to the extent that no value of \(n_{\mathrm{b}}\) exists for which \(P_{\mathrm{B}}(n_{\mathrm{b}}) \ge P_{\mathrm{A}}(n_{\mathrm{a}})\). In these cases, more samples with technique B are required to make the comparison; otherwise, \(\mathrm{AF}_{\mathrm{A:B}}\) is not calculable. If \(\mathrm{AF}_{\mathrm{A:B}}\) is not calculable, a lower-bound acceleration factor is calculated by assuming that the slower sampling technique would beat the faster one if it observed one more sample. The acceleration factor in Fig. 4b was reported until these lower-bound estimates made up more than 25% of all acceleration comparisons.
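The acceleration-factor definition and its lower-bound rule translate into a few lines of Python. This is a sketch, not the benchmarking code of ref. 59. Performance histories are plain lists indexed from the first sample, and B "reaching" A's performance is taken as P_B ≥ P_A. The 25% reporting cut-off is shown as a hypothetical helper.

```python
from typing import List, Tuple


def acceleration_factor(perf_a: List[float], perf_b: List[float], n_a: int) -> Tuple[float, bool]:
    """AF of technique A over B at n_a samples.

    perf_x[i] is the performance after i + 1 samples. Returns (AF, is_lower_bound).
    """
    target = perf_a[n_a - 1]
    for n_b, p in enumerate(perf_b, start=1):
        if p >= target:
            return n_b / n_a, False
    # B never reached A's performance: assume it would on its next sample.
    return (len(perf_b) + 1) / n_a, True


def lower_bound_fraction(results: List[Tuple[float, bool]]) -> float:
    """Share of comparisons that are only lower bounds (reporting stopped above 0.25)."""
    return sum(1 for _, is_lb in results if is_lb) / len(results)


if __name__ == "__main__":
    perf_a = [i / 20 for i in range(1, 21)]   # reaches 1.0 after 20 samples
    perf_b = [i / 40 for i in range(1, 41)]   # reaches 1.0 after 40 samples
    print(acceleration_factor(perf_a, perf_b, 20))  # (2.0, False)
```

The example reproduces the worked case in the text: B needs 40 samples to match what A achieves in 20, giving an acceleration factor of 2.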

The enhancement factor of one sampling technique with respect to another for a given number of samples is defined as the ratio of their performance values for the same number of observations (Eq. 6). For example, if sampling technique A reaches a performance value of 7 after 20 samples, and technique B reaches a performance value of 2 after 20 samples, the enhancement factor of technique A relative to B is 3.5 at 20 samples.

\[ \mathrm{EF}_{\mathrm{A:B}}(n) = \frac{P_{\mathrm{A}}(n)}{P_{\mathrm{B}}(n)} \tag{6} \]

where \(\mathrm{EF}_{\mathrm{A:B}}(n)\) is the enhancement factor of sampling technique A with respect to B after n samples. When \(P_{\mathrm{A}}(n)=0\) and \(P_{\mathrm{B}}(n)=0\), \(\mathrm{EF}_{\mathrm{A:B}}(n)=1\). When \(P_{\mathrm{A}}(n) > 0\) and \(P_{\mathrm{B}}(n) = 0\), \(\mathrm{EF}_{\mathrm{A:B}}(n)\) is not calculable. To compare the AF and EF values from the repeated simulations, the median, geometric mean, and interquartile range were calculated.
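The enhancement factor, its two edge cases, and the pairwise summary statistics can be sketched as follows. The paper compares 1000 × 1000 replicate pairs, while the example uses 3 × 3 invented hypervolumes. Non-calculable pairs are simply dropped here, which is an assumption about how they were handled. The function names are hypothetical.

```python
import math
import statistics
from typing import List, Optional


def enhancement_factor(p_a: float, p_b: float) -> Optional[float]:
    """EF of A over B at a fixed sample count; None when not calculable."""
    if p_a == 0 and p_b == 0:
        return 1.0
    if p_b == 0:
        return None  # P_A > 0 and P_B = 0: the ratio is undefined
    return p_a / p_b


def geometric_mean(values: List[float]) -> float:
    """Geometric mean of non-negative values; zero if any value is zero."""
    if any(v == 0 for v in values):
        return 0.0
    return math.exp(sum(math.log(v) for v in values) / len(values))


def summarize_pairwise(perf_a: List[float], perf_b: List[float]) -> dict:
    """Compare every A replicate with every B replicate at one sample count."""
    efs = [enhancement_factor(a, b) for a in perf_a for b in perf_b]
    efs = [e for e in efs if e is not None]  # assumed: drop non-calculable pairs
    q1, _, q3 = statistics.quantiles(efs, n=4)
    return {"median": statistics.median(efs), "geomean": geometric_mean(efs), "iqr": q3 - q1}


if __name__ == "__main__":
    print(enhancement_factor(7.0, 2.0))  # 3.5
    # Illustrative hypervolumes at one iteration for three replicates of each technique.
    print(summarize_pairwise([0.6, 0.7, 0.8], [0.4, 0.5, 0.6]))
```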

Data availability

The raw and processed data generated by the self-driving laboratory in this study is available at https://github.com/berlinguette/ada. All other data related to this paper is available from the corresponding author upon request.

Code availability

All code used in this study was based on open-source Python packages listed in the supplementary information.

References

Tabor, D. P. et al. Accelerating the discovery of materials for clean energy in the era of smart automation. Nat. Rev. Mater. 3, 5–20 (2018).

Stein, H. S. & Gregoire, J. M. Progress and prospects for accelerating materials science with automated and autonomous workflows. Chem. Sci. 10, 9640–9649 (2019).

Häse, F., Roch, L. M. & Aspuru-Guzik, A. Next-generation experimentation with self-driving laboratories. Trends Chem. 1, 282–291 (2019).

MacLeod, B. P., Parlane, F. G. L., Brown, A. K., Hein, J. E. & Berlinguette, C. P. Flexible automation accelerates materials discovery. Nat. Mater. https://doi.org/10.1038/s41563-021-01156-3 (2021).

Ament, S. et al. Autonomous materials synthesis via hierarchical active learning of nonequilibrium phase diagrams. Sci. Adv. 7, eabg4930 (2021).

Bash, D. et al. Multi‐fidelity high‐throughput optimization of electrical conductivity in P3HT‐CNT composites. Adv. Funct. Mater. 2102606 (2021).

Nikolaev, P. et al. Autonomy in materials research: a case study in carbon nanotube growth. npj Comput. Mater. 2, 16031 (2016).

MacLeod, B. P. et al. Self-driving laboratory for accelerated discovery of thin-film materials. Sci. Adv. 6, eaaz8867 (2020).

Langner, S. et al. Beyond ternary OPV: high-throughput experimentation and self-driving laboratories optimize multicomponent systems. Adv. Mater. 32, e1907801 (2020).

Li, J. et al. Autonomous discovery of optically active chiral inorganic perovskite nanocrystals through an intelligent cloud lab. Nat. Commun. 11, 2046 (2020).

Gongora, A. E. et al. A Bayesian experimental autonomous researcher for mechanical design. Sci. Adv. 6, eaaz1708 (2020).

Burger, B. et al. A mobile robotic chemist. Nature 583, 237–241 (2020).

Wang, L., Karadaghi, L. R., Brutchey, R. L. & Malmstadt, N. Self-optimizing parallel millifluidic reactor for scaling nanoparticle synthesis. Chem. Commun. 56, 3745–3748 (2020).

Shimizu, R., Kobayashi, S., Watanabe, Y., Ando, Y. & Hitosugi, T. Autonomous materials synthesis by machine learning and robotics. APL Mater. 8, 111110 (2020).

Dave, A. et al. Autonomous discovery of battery electrolytes with robotic experimentation and machine learning. Cell Rep. Phys. Sci. 1, 100264 (2020).

Deneault, J. R. et al. Toward autonomous additive manufacturing: Bayesian optimization on a 3D printer. MRS Bull. https://doi.org/10.1557/s43577-021-00051-1 (2021).

Hall, B. L. et al. Autonomous optimisation of a nanoparticle catalysed reduction reaction in continuous flow. Chem. Commun. https://doi.org/10.1039/d1cc00859e (2021).

Krishnadasan, S., Brown, R. J. C., deMello, A. J. & deMello, J. C. Intelligent routes to the controlled synthesis of nanoparticles. Lab Chip 7, 1434–1441 (2007).

Moore, J. S. & Jensen, K. F. Automated multitrajectory method for reaction optimization in a microfluidic system using online IR analysis. Org. Process Res. Dev. 16, 1409–1415 (2012).

Walker, B. E., Bannock, J. H., Nightingale, A. M. & deMello, J. C. Tuning reaction products by constrained optimisation. React. Chem. Eng. 2, 785–798 (2017).

Salley, D. et al. A nanomaterials discovery robot for the Darwinian evolution of shape programmable gold nanoparticles. Nat. Commun. 11, 2771 (2020).

Epps, R. W. et al. Artificial chemist: an autonomous quantum dot synthesis bot. Adv. Mater. 32, e2001626 (2020).

Christensen, M. et al. Data-science driven autonomous process optimization. Commun. Chem. 4, 1–12 (2021).

Mekki-Berrada, F. et al. Two-step machine learning enables optimized nanoparticle synthesis. npj Comput. Mater. 7, 1–10 (2021).

Abdel-Latif, K. et al. Self‐driven multistep quantum dot synthesis enabled by autonomous robotic experimentation in flow. Adv. Intell. Syst. 3, 2000245 (2021).

Grizou, J., Points, L. J., Sharma, A. & Cronin, L. A curious formulation robot enables the discovery of a novel protocell behavior. Sci. Adv. 6, eaay4237 (2020).

Schweidtmann, A. M. et al. Machine learning meets continuous flow chemistry: automated optimization towards the Pareto front of multiple objectives. Chem. Eng. J. 352, 277–282 (2018).

Cao, L. et al. Optimization of formulations using robotic experiments driven by machine learning DoE. Cell Rep. Phys. Sci. 2, 100295 (2021).

Maaliou, O. & McCoy, B. J. Optimization of thermal energy storage in packed columns. Sol. Energy 34, 35–41 (1985).

Ahmadi, M. H., Ahmadi, M. A., Bayat, R., Ashouri, M. & Feidt, M. Thermo-economic optimization of Stirling heat pump by using non-dominated sorting genetic algorithm. Energy Convers. Manag. 91, 315–322 (2015).

Li, Z., Pradeep, K. G., Deng, Y., Raabe, D. & Tasan, C. C. Metastable high-entropy dual-phase alloys overcome the strength-ductility trade-off. Nature 534, 227–230 (2016).

Zhang, L. et al. Correlated metals as transparent conductors. Nat. Mater. 15, 204–210 (2016).

Park, H. B., Kamcev, J., Robeson, L. M., Elimelech, M. & Freeman, B. D. Maximizing the right stuff: The trade-off between membrane permeability and selectivity. Science 356, eaab0530 (2017).

Oviedo, F. et al. Bridging the gap between photovoltaics R&D and manufacturing with data-driven optimization. Preprint at https://arxiv.org/abs/2004.13599v1 (2020).

Liu, L. et al. Making ultrastrong steel tough by grain-boundary delamination. Science 368, 1347–1352 (2020).

Ramirez, I., Causa’, M., Zhong, Y., Banerji, N. & Riede, M. Key tradeoffs limiting the performance of organic photovoltaics. Adv. Energy Mater. 8, 1703551 (2018).

Kirkey, A., Luber, E. J., Cao, B., Olsen, B. C. & Buriak, J. M. Optimization of the bulk heterojunction of all-small-molecule organic photovoltaics using design of experiment and machine learning approaches. ACS Appl. Mater. Interfaces 12, 54596–54607 (2020).

Ren, S. et al. Molecular electrocatalysts can mediate fast, selective CO2 reduction in a flow cell. Science 365, 367–369 (2019).

Baumeler, T. et al. Minimizing the trade-off between photocurrent and photovoltage in triple-cation mixed-halide perovskite solar cells. J. Phys. Chem. Lett. 11, 10188–10195 (2020).

Voskanyan, A. A., Li, C.-Y. V. & Chan, K.-Y. Catalytic palladium film deposited by scalable low-temperature aqueous combustion. ACS Appl. Mater. Interfaces 9, 33298–33307 (2017).

Mauritz, K. A. & Moore, R. B. State of understanding of Nafion. Chem. Rev. 104, 4535–4585 (2004).

MacDonald, W. A. et al. Latest advances in substrates for flexible electronics. J. Soc. Inf. Disp. 15, 1075 (2007).

Kim, M.-G., Kanatzidis, M. G., Facchetti, A. & Marks, T. J. Low-temperature fabrication of high-performance metal oxide thin-film electronics via combustion processing. Nat. Mater. 10, 382–388 (2011).

Hennek, J. W., Kim, M.-G., Kanatzidis, M. G., Facchetti, A. & Marks, T. J. Exploratory combustion synthesis: amorphous indium yttrium oxide for thin-film transistors. J. Am. Chem. Soc. 134, 9593–9596 (2012).

Perelaer, J. et al. Printed electronics: the challenges involved in printing devices, interconnects, and contacts based on inorganic materials. J. Mater. Chem. 20, 8446–8453 (2010).

Li, D., Lai, W.-Y., Zhang, Y.-Z. & Huang, W. Printable transparent conductive films for flexible electronics. Adv. Mater. 30, 1704738 (2018).

Cochran, E. A. et al. Role of combustion chemistry in low-temperature deposition of metal oxide thin films from solution. Chem. Mater. 29, 9480–9488 (2017).

Wang, B. et al. Marked cofuel tuning of combustion synthesis pathways for metal oxide semiconductor films. Adv. Electron. Mater. 5, 1900540 (2019).

Plassmeyer, P. N., Mitchson, G., Woods, K. N., Johnson, D. C. & Page, C. J. Impact of relative humidity during spin-deposition of metal oxide thin films from aqueous solution precursors. Chem. Mater. 29, 2921–2926 (2017).

Kumar, A., Wolf, E. E. & Mukasyan, A. S. Solution combustion synthesis of metal nanopowders: copper and copper/nickel alloys. AIChE J. 57, 3473–3479 (2011).

Manukyan, K. V. et al. Solution combustion synthesis of nano-crystalline metallic materials: mechanistic studies. J. Phys. Chem. C 117, 24417–24427 (2013).

Mitzi, D. Solution Processing of Inorganic Materials (Wiley, 2008).

Cochran, E. A., Woods, K. N., Johnson, D. W., Page, C. J. & Boettcher, S. W. Unique chemistries of metal-nitrate precursors to form metal-oxide thin films from solution: materials for electronic and energy applications. J. Mater. Chem. A 7, 24124–24149 (2019).

Pujar, P., Gandla, S., Gupta, D., Kim, S. & Kim, M. Trends in low‐temperature combustion derived thin films for solution‐processed electronics. Adv. Electron. Mater. 6, 2000464 (2020).

Daulton, S., Balandat, M. & Bakshy, E. Differentiable Expected Hypervolume Improvement for Parallel Multi-Objective Bayesian Optimization. Advances in Neural Information Processing Systems 33 (eds. Larochelle, H. et al.) 9851–9864 (Curran Associates, Inc., 2020).

Knowles, J. ParEGO: a hybrid algorithm with on-line landscape approximation for expensive multiobjective optimization problems. IEEE Trans. Evol. Comput. 10, 50–66 (2006).

Paria, B., Kandasamy, K. & Póczos, B. A Flexible Framework for Multi-Objective Bayesian Optimization using Random Scalarizations. In Proc. 35th Uncertainty in Artificial Intelligence Conference (eds. Adams, R. P. & Gogate, V.) Vol. 115, 766–776 (PMLR, 2020).

Daulton, S., Balandat, M. & Bakshy, E. Parallel Bayesian Optimization of Multiple Noisy Objectives with Expected Hypervolume Improvement. Advances in Neural Information Processing Systems 34 (eds. Ranzato, M. et al.) (Curran Associates, Inc., 2021).

Rohr, B. et al. Benchmarking the acceleration of materials discovery by sequential learning. Chem. Sci. 11, 2696–2706 (2020).

Yu, X. et al. Spray-combustion synthesis: efficient solution route to high-performance oxide transistors. Proc. Natl Acad. Sci. USA 112, 3217–3222 (2015).

Matula, R. A. Electrical resistivity of copper, gold, palladium, and silver. J. Phys. Chem. Ref. Data 8, 1147–1298 (1979).

Shi, Y. S. Electrical resistivity of RF sputtered Pd films. Phys. Lett. A 319, 555–559 (2003).

Hloch, H. & Wissmann, P. The electrical resistivity of thin Pd films grown on Si(111). Phys. Status Solidi A 145, 521–526 (1994).

Anton, R., Häupl, K., Rudolf, P. & Wißmann, P. Electrical and structural properties of thin palladium films. Z. Naturforsch. A 41, 665–670 (1986).

Delima, R. S., Sherbo, R. S., Dvorak, D. J., Kurimoto, A. & Berlinguette, C. P. Supported palladium membrane reactor architecture for electrocatalytic hydrogenation. J. Mater. Chem. A 7, 26586–26595 (2019).

Bernhardsson, E. & Freider, E. L. https://github.com/spotify/luigi.

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Balandat, M. et al. BoTorch: a framework for efficient Monte-Carlo Bayesian optimization. Advances in Neural Information Processing Systems 33 (eds. Larochelle, H. et al.) 21524–21538 (Curran Associates, Inc., 2020).

Bakshy, E. et al. Advances in Neural Information Processing Systems vol. 31 (The MIT Press, 2018).

Owen, A. B. Scrambling Sobol’ and Niederreiter–Xing Points. J. Complex. 14, 466–489 (1998).

Acknowledgements

The authors are grateful to Natural Resources Canada’s Energy Innovation Program (EIP2-MAT-001) for financial support. The authors are grateful to the Canadian Natural Science and Engineering Research Council (RGPIN-2018-06748), Canadian Foundation for Innovation (229288), Canadian Institute for Advanced Research (BSE-BERL-162173), and Canada Research Chairs for financial support. B.P.M., F.G.L.P., T.D.M. and C.P.B. acknowledge support from the SBQMI’s Quantum Electronic Science and Technology Initiative, the Canada First Research Excellence Fund, and the Quantum Materials and Future Technologies Program. We would like to acknowledge the many open-source software communities without whose efforts this project would not have been possible. Please see the supplementary information for further details.

Author information

Contributions

C.P.B. conceived and supervised the project. B.P.M., K.E.D. and F.G.L.P. designed and performed the autonomous optimization experiments. M.B.R., K.O., C.W., K.E.D., O.P., M.S.E., B.P.M. and F.G.L.P. developed the robotic hardware. M.S.E., O.P. and K.E.D. developed and configured the robotic control software. F.G.L.P. and T.H.H. developed the data analysis software with input from N.T., K.E.D. and B.P.M. F.G.L.P., M.M. and B.P.M. performed and analyzed the simulations. M.S.E. and B.P.M. configured the qEHVI optimization algorithm and interfaced it with the self-driving laboratory. K.O., H.N.C. and C.C.R. developed the spray coater hardware and software. C.C.R. performed the spray coating experiments. N.T. performed additional data analysis. D.J.D. performed additional experiments. All authors participated in the writing of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Nature Communications thanks the anonymous reviewers for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

MacLeod, B.P., Parlane, F.G.L., Rupnow, C.C. et al. A self-driving laboratory advances the Pareto front for material properties. Nat Commun 13, 995 (2022). https://doi.org/10.1038/s41467-022-28580-6
