Abstract

Machine vision technology has taken huge leaps in recent years, and is now becoming an integral part of various intelligent systems, including autonomous vehicles and robotics. Usually, visual information is captured by a frame-based camera, converted into a digital format and processed afterwards using a machine-learning algorithm such as an artificial neural network (ANN)¹. The large amount of (mostly redundant) data passed through the entire signal chain, however, results in low frame rates and high power consumption. Various visual data preprocessing techniques have thus been developed²⁻⁷ to increase the efficiency of the subsequent signal processing in an ANN. Here we demonstrate that an image sensor can itself constitute an ANN that can simultaneously sense and process optical images without latency. Our device is based on a reconfigurable two-dimensional (2D) semiconductor⁸,⁹ photodiode¹⁰⁻¹² array, and the synaptic weights of the network are stored in a continuously tunable photoresponsivity matrix. We demonstrate both supervised and unsupervised learning and train the sensor to classify and encode images that are optically projected onto the chip with a throughput of 20 million bins per second.
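Read as a network operation, the central claim can be summarized in one line. Assuming a linear photoresponse and that the photocurrents of corresponding subpixels of all \(N\) pixels are summed on a shared output line (Kirchhoff's current law), the \(m\)-th output current is a responsivity-weighted sum of the projected optical powers \(P_n\), so that the photoresponsivity matrix \(R\) plays the role of the synaptic weight matrix:

\[
I_m = \sum_{n=1}^{N} I_{mn} = \sum_{n=1}^{N} R_{mn} P_n , \qquad m = 1, \dots, M .
\]

Because each \(R_{mn}\) is set by gate voltages that can also reverse the photodiode polarity, the weights can presumably take either sign.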

Data availability

The data that support the findings of this study are available from the corresponding authors upon reasonable request.

References

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Mead, C. A. & Mahowald, M. A. A silicon model of early visual processing. Neural Netw. 1, 91–97 (1988).

Lichtsteiner, P., Posch, C. & Delbruck, T. A 128×128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 43, 566–576 (2008).

Cottini, N., Gottardi, M., Massari, N., Passerone, R. & Smilansky, Z. A 33 μW 64×64 pixel vision sensor embedding robust dynamic background subtraction for event detection and scene interpretation. IEEE J. Solid-State Circuits 48, 850–863 (2013).

Kyuma, K. et al. Artificial retinas—fast, versatile image processors. Nature 372, 197–198 (1994).

Posch, C., Serrano-Gotarredona, T., Linares-Barranco, B. & Delbruck, T. Retinomorphic event-based vision sensors: bioinspired cameras with spiking output. Proc. IEEE 102, 1470–1484 (2014).

Zhou, F. et al. Optoelectronic resistive random access memory for neuromorphic vision sensors. Nat. Nanotechnol. 14, 776–782 (2019).

Manzeli, S., Ovchinnikov, D., Pasquier, D., Yazyev, O. V. & Kis, A. 2D transition metal dichalcogenides. Nat. Rev. Mater. 2, 17033 (2017).

Mueller, T. & Malic, E. Exciton physics and device application of two-dimensional transition metal dichalcogenide semiconductors. npj 2D Mater. Appl. 2, 29 (2018).

Pospischil, A., Furchi, M. M. & Mueller, T. Solar-energy conversion and light emission in an atomic monolayer p–n diode. Nat. Nanotechnol. 9, 257–261 (2014).

Baugher, B. W., Churchill, H. O., Yang, Y. & Jarillo-Herrero, P. Optoelectronic devices based on electrically tunable p–n diodes in a monolayer dichalcogenide. Nat. Nanotechnol. 9, 262–267 (2014).

Ross, J. S. et al. Electrically tunable excitonic light-emitting diodes based on monolayer WSe2 p–n junctions. Nat. Nanotechnol. 9, 268–272 (2014).

Prezioso, M. et al. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64 (2015).

Sheridan, P. M. et al. Sparse coding with memristor networks. Nat. Nanotechnol. 12, 784–789 (2017).

Li, C. et al. Analogue signal and image processing with large memristor crossbars. Nat. Electron. 1, 52–59 (2018).

Kim, K. H. et al. A functional hybrid memristor crossbar-array/CMOS system for data storage and neuromorphic applications. Nano Lett. 12, 389–395 (2012).

Shen, Y. et al. Deep learning with coherent nanophotonic circuits. Nat. Photon. 11, 441–446 (2017).

Lin, X. et al. All-optical machine learning using diffractive deep neural networks. Science 361, 1004–1008 (2018).

Hamerly, R., Bernstein, L., Sludds, A., Soljačić, M. & Englund, D. Large-scale optical neural networks based on photoelectric multiplication. Phys. Rev. X 9, 021032 (2019).

Psaltis, D., Brady, D., Gu, X. G. & Lin, S. Holography in artificial neural networks. Nature 343, 325–330 (1990).

Kolb, H. How the retina works: much of the construction of an image takes place in the retina itself through the use of specialized neural circuits. Am. Sci. 91, 28–35 (2003).

Jeong, K.-H., Kim, J. & Lee, L. P. Biologically inspired artificial compound eyes. Science 312, 557–561 (2006).

Choi, C. et al. Human eye-inspired soft optoelectronic device using high-density MoS2-graphene curved image sensor array. Nat. Commun. 8, 1664 (2017).

Schwarte, R. et al. New electro-optical mixing and correlating sensor: facilities and applications of the photonic mixer device (PMD). In Proc. SPIE Sensors, Sensor Systems, and Sensor Data Processing Vol. 3100, 245–253 (SPIE, 1997).

Sugeta, T., Urisu, T., Sakata, S. & Mizushima, Y. Metal-semiconductor-metal photodetector for high-speed optoelectronic circuits. Jpn. J. Appl. Phys. 19, 459 (1980).

Wachter, S., Polyushkin, D. K., Bethge, O. & Mueller, T. A microprocessor based on a two-dimensional semiconductor. Nat. Commun. 8, 14948 (2017).

Goossens, S. et al. Broadband image sensor array based on graphene–CMOS integration. Nat. Photon. 11, 366–371 (2017).

Bishop, C. M. Training with noise is equivalent to Tikhonov regularization. Neural Comput. 7, 108–116 (1995).

Bengio, Y. in Neural Networks: Tricks of the Trade Vol. 7700 (eds Montavon, G. et al.) 437–478 (Springer, 2012).

Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back-propagating errors. Nature 323, 533–536 (1986).

Massicotte, M. et al. Dissociation of two-dimensional excitons in monolayer WSe2. Nat. Commun. 9, 1633 (2018).

Li, D. et al. Two-dimensional non-volatile programmable p–n junctions. Nat. Nanotechnol. 12, 901–906 (2017).

Lv, L. et al. Reconfigurable two-dimensional optoelectronic devices enabled by local ferroelectric polarization. Nat. Commun. 10, 3331 (2019).

Bertolazzi, S., Krasnozhon, D. & Kis, A. Nonvolatile memory cells based on MoS2/graphene heterostructures. ACS Nano 7, 3246–3252 (2013).

Salakhutdinov, R. & Hinton, G. Semantic hashing. Int. J. Approx. Reason. 50, 969–978 (2009).

Castellanos-Gomez, A. et al. Deterministic transfer of two-dimensional materials by all-dry viscoelastic stamping. 2D Mater. 1, 011002 (2014).

Gerchberg, R. W. & Saxton, W. O. A practical algorithm for the determination of the phase from image and diffraction plane pictures. Optik 35, 237–246 (1972).

Acknowledgements

We thank B. Limbacher for discussions and A. Kleinl, M. Paur and F. Dona for technical assistance. We acknowledge financial support by the Austrian Science Fund FWF (START Y 539-N16) and the European Union (grant agreement number 785219 Graphene Flagship and Marie Skłodowska-Curie Individual Fellowship OPTOvanderWAALS, grant ID 791536).

Author information

Contributions

T.M. conceived the experiment. L.M. designed and built the experimental setup, programmed the machine-learning algorithms, carried out the measurements and analysed the data. J.S. fabricated the ANN vision sensor. S.W. and D.K.P. contributed to the sample fabrication. A.J.M.-M. fabricated and characterized the floating-gate detector. L.M., J.S. and T.M. prepared the manuscript. All authors discussed the results and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature thanks Yang Chai, Frank Koppens and Sangyoun Lee for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 Photodiode array uniformity.

Gate tunability of the responsivities of all 27 photodetectors. One detector element (pixel 7, subpixel 2) showed no response to light because of a broken electrical wire; this had no significant influence on the overall system performance.

Extended Data Fig. 2 Photodiode characteristics.

a, Current–voltage characteristic curve under dark (blue) and illuminated (green) conditions. The series resistance \({R}_{{\rm{s}}}\) and shunt resistance \({R}_{{\rm{sh}}}\) are \(\sim {10}^{6}\) Ω and \({10}^{9}\) Ω, respectively. For zero-bias operation, we estimate a noise-equivalent power of \({\rm{NEP}}={I}_{{\rm{th}}}/R\approx {10}^{-13}\) W Hz\(^{-1/2}\), where \(R\approx 60\) mA W\(^{-1}\) is the (maximum) responsivity and \({I}_{{\rm{th}}}=\sqrt{4{k}_{{\rm{B}}}T\Delta f/{R}_{{\rm{sh}}}}\) the thermal noise, where \({k}_{{\rm{B}}}\) is the Boltzmann constant, Δf is the bandwidth and T is the temperature. b, Dependence of the short-circuit photocurrent on the light intensity for different split-gate voltages. Importantly, the response is linear (I ∝ P), as assumed in equation (1).
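As a quick numerical check of the NEP quoted in panel a, the sketch below evaluates \(I_{\rm th}/R\) with the caption's values (\(R_{\rm sh} = 10^{9}\) Ω, \(R = 60\) mA W\(^{-1}\)). The temperature (T = 300 K) and the unit bandwidth (Δf = 1 Hz, which turns the thermal noise current into a spectral density in A Hz\(^{-1/2}\)) are assumptions that the caption does not state.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def thermal_noise_current(r_shunt_ohm, temperature_k=300.0, bandwidth_hz=1.0):
    """Johnson (thermal) noise current of the shunt resistance, in A."""
    return math.sqrt(4.0 * K_B * temperature_k * bandwidth_hz / r_shunt_ohm)


def noise_equivalent_power(r_shunt_ohm, responsivity_a_per_w, temperature_k=300.0):
    """Zero-bias NEP per unit bandwidth (W/Hz^0.5): thermal noise at 1 Hz divided by responsivity."""
    i_th = thermal_noise_current(r_shunt_ohm, temperature_k, bandwidth_hz=1.0)
    return i_th / responsivity_a_per_w


# Assumed room temperature; R_sh and R taken from the caption.
nep = noise_equivalent_power(r_shunt_ohm=1e9, responsivity_a_per_w=60e-3)
print(f"NEP = {nep:.1e} W/Hz^0.5")
```

The result, about 6.8 × 10\(^{-14}\) W Hz\(^{-1/2}\), is consistent with the order-of-magnitude estimate of \(10^{-13}\) W Hz\(^{-1/2}\).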

Extended Data Fig. 3 Circuit of the ANN photodiode array.

Extended Data Fig. 4 Experimental setup.

a, Experimental setup for training the classifier and the autoencoder. CW, continuous wave. b, Experimental setup for time-resolved measurements. TIA, transimpedance amplifier. A pulse generator triggers the pulsed laser as well as the oscilloscope. c, Photograph of the optical setup (for schematic see Fig. 2d). d, Flow chart of the training algorithm. The blue shaded boxes are interactions with the ANN photodiode array.

Extended Data Fig. 5 Classifier training.

Photoresponsivity values of all 27 photodetectors for training data with noise level σ = 0.3. a, b, Initial (a) and epoch-30 (b) responsivity values. The weights obtained with σ = 0.2 and σ = 0.4 training data are similar. c, Measured currents over all epochs for a specific projected letter at all three noise levels. d, Histograms of the initial and final responsivity values for the three noise levels.

Extended Data Fig. 6 Comparison with computer simulation.

Classifier training of the analogue vision sensor (solid lines) and simulation of the system on a computer (dashed lines) for different data noise levels σ. The same ANN architecture, input data, effective learning rate and starting weights were used in both cases, and both eventually reach the same accuracy and loss. The slightly slower convergence of the analogue implementation compared with the simulation reflects its nonidealities (defective subpixel, device-to-device variations). The impact of nonidealities is discussed further in Extended Data Fig. 10.
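The computer simulation in this comparison can be approximated in a few lines. The following is a minimal pure-Python sketch, not the authors' implementation: a single-layer classifier whose 3 × 9 weight matrix stands in for the photoresponsivity matrix (27 entries, assuming nine pixels with three subpixels each, as the "pixel 7, subpixel 2" labelling suggests). Each output current is a responsivity-weighted sum of the projected pixel powers, followed by a softmax and a cross-entropy loss minimized by full-batch gradient descent. The letter patterns, the clipped Gaussian noise model, the learning rate and the initialization range are all hypothetical choices.

```python
import math
import random

# Hypothetical 3x3 binary letter patterns (1 = illuminated pixel), flattened row by row.
LETTERS = {
    "n": [1, 1, 1, 1, 0, 1, 1, 0, 1],
    "v": [1, 0, 1, 1, 0, 1, 0, 1, 0],
    "z": [1, 1, 0, 0, 1, 0, 0, 1, 1],
}
N_PIX, N_CLASS = 9, len(LETTERS)


def noisy_dataset(n_per_class, sigma, rng):
    """Gaussian-noise copies of each letter; negative optical powers are clipped to zero."""
    data = []
    for label, pattern in enumerate(LETTERS.values()):
        for _ in range(n_per_class):
            data.append(([max(0.0, p + rng.gauss(0.0, sigma)) for p in pattern], label))
    return data


def currents(R, P):
    """Summed output current of each subpixel line: I_m = sum_n R[m][n] * P[n]."""
    return [sum(r * p for r, p in zip(row, P)) for row in R]


def softmax(z):
    top = max(z)  # subtract the maximum for numerical stability
    e = [math.exp(v - top) for v in z]
    s = sum(e)
    return [v / s for v in e]


def train(data, epochs=30, lr=0.5, seed=0):
    """Full-batch gradient descent on softmax cross-entropy; returns weights and per-epoch loss."""
    rng = random.Random(seed)
    R = [[rng.uniform(-0.1, 0.1) for _ in range(N_PIX)] for _ in range(N_CLASS)]
    losses = []
    for _ in range(epochs):
        grad = [[0.0] * N_PIX for _ in range(N_CLASS)]
        loss = 0.0
        for P, label in data:
            probs = softmax(currents(R, P))
            loss -= math.log(probs[label])
            for m in range(N_CLASS):
                err = probs[m] - (1.0 if m == label else 0.0)  # dLoss/dI_m
                for n in range(N_PIX):
                    grad[m][n] += err * P[n]
        # One update of the (hypothetical) responsivity matrix per epoch.
        for m in range(N_CLASS):
            for n in range(N_PIX):
                R[m][n] -= lr * grad[m][n] / len(data)
        losses.append(loss / len(data))
    return R, losses


def predict(R, P):
    I = currents(R, P)
    return max(range(N_CLASS), key=I.__getitem__)


def accuracy(R, data):
    return sum(predict(R, P) == label for P, label in data) / len(data)


train_set = noisy_dataset(20, sigma=0.2, rng=random.Random(1))
R, losses = train(train_set)
clean = [(pattern, label) for label, pattern in enumerate(LETTERS.values())]
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, clean accuracy {accuracy(R, clean):.2f}")
```

Changing `sigma` in `noisy_dataset` to 0.2, 0.3 or 0.4 mirrors the three noise levels of the experiment. On the physical array, each weight update would presumably be applied by adjusting the split-gate voltages rather than by writing to memory.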

Extended Data Fig. 7 Training datasets.

a, b, Datasets for 30 epochs of classifier (a) and autoencoder (b) training, with test-data noise levels of σ = 0.4 and σ = 0.15, respectively.

Extended Data Fig. 8 Autoencoder photoresponsivities/weights.

a, b, Initial (a) and epoch 30 (b) encoder photoresponsivity values (left) and decoder weights (right).
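The split shown here, with encoder weights stored as on-chip photoresponsivities and decoder weights held separately, can be sketched as follows. This is an illustration under assumptions not taken from the paper: the encoder maps nine pixel powers linearly to three code currents, a hypothetical off-chip decoder applies a sigmoid layer, and both are trained by backpropagation on a binary cross-entropy loss to reconstruct the clean letter from a noisy projection (σ = 0.15). The code size, activation, loss, patterns and hyperparameters are all illustrative.

```python
import math
import random

# Hypothetical 3x3 letter patterns, flattened row by row (1 = illuminated pixel).
LETTERS = [
    [1, 1, 1, 1, 0, 1, 1, 0, 1],
    [1, 0, 1, 1, 0, 1, 0, 1, 0],
    [1, 1, 0, 0, 1, 0, 0, 1, 1],
]
N_PIX, N_CODE = 9, 3  # assumed: 9 pixels encoded into 3 subpixel currents


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(We, Wd, b, P):
    # On-chip encoder: code currents c_k = sum_n We[k][n] * P[n] (linear photoresponse).
    c = [sum(w * p for w, p in zip(row, P)) for row in We]
    # Off-chip decoder: y_j = sigmoid(sum_k Wd[j][k] * c_k + b_j).
    y = [sigmoid(sum(w * ck for w, ck in zip(Wd[j], c)) + b[j]) for j in range(N_PIX)]
    return c, y


def bce(y, t, eps=1e-12):
    """Binary cross-entropy between reconstruction y and target t."""
    return -sum(tj * math.log(yj + eps) + (1 - tj) * math.log(1 - yj + eps)
                for yj, tj in zip(y, t))


def train_autoencoder(samples, epochs=30, lr=0.05, seed=0):
    """Per-sample gradient descent on encoder and decoder; returns (We, Wd, b)."""
    rng = random.Random(seed)
    We = [[rng.uniform(-0.1, 0.1) for _ in range(N_PIX)] for _ in range(N_CODE)]
    Wd = [[rng.uniform(-0.1, 0.1) for _ in range(N_CODE)] for _ in range(N_PIX)]
    b = [0.0] * N_PIX
    for _ in range(epochs):
        for P, t in samples:
            c, y = forward(We, Wd, b, P)
            dz = [yj - tj for yj, tj in zip(y, t)]  # dL/dz for sigmoid + BCE
            # Backpropagate to the code layer before the decoder weights change.
            dc = [sum(dz[j] * Wd[j][k] for j in range(N_PIX)) for k in range(N_CODE)]
            for j in range(N_PIX):
                b[j] -= lr * dz[j]
                for k in range(N_CODE):
                    Wd[j][k] -= lr * dz[j] * c[k]
            for k in range(N_CODE):
                for n in range(N_PIX):
                    We[k][n] -= lr * dc[k] * P[n]
    return We, Wd, b


def mean_loss(params, samples):
    We, Wd, b = params
    return sum(bce(forward(We, Wd, b, P)[1], t) for P, t in samples) / len(samples)


rng = random.Random(1)
# Noisy inputs (sigma = 0.15, clipped at zero) paired with clean targets, classes interleaved.
samples = [([max(0.0, p + rng.gauss(0.0, 0.15)) for p in L], L)
           for _ in range(20) for L in LETTERS]
initial = train_autoencoder(samples, epochs=0)  # same seed, so same starting weights
trained = train_autoencoder(samples)
print(f"mean BCE {mean_loss(initial, samples):.3f} -> {mean_loss(trained, samples):.3f}")
```

Calling `train_autoencoder` with `epochs=0` returns the untrained starting point, which makes the before and after losses directly comparable.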

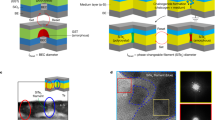

Extended Data Fig. 9 Floating-split-gate photodiode with memory.

a, Schematic of the floating-gate photodiode. The addition of 2-nm-thick Au layers, sandwiched between Al2O3 and hexagonal boron nitride (hBN), enables electric charge to be stored when a gate voltage is applied, so that the structure acts as a floating-gate memory. b, Electrical characteristics of the photodiode operated in p–n, n–p and p–p configurations. c, The ability of the device to ‘remember’ its previous configuration is verified by a time-resolved photocurrent measurement, performed as follows. The back-gate voltages are set to VG1 = +5 V and VG2 = −5 V and then disconnected; that is, no gate voltage is applied any longer, and the only electric field is that generated by the charge stored on the floating electrodes. The short-circuit photocurrent is then measured under optical illumination. At ~1,100 s the light is switched off, and the photocurrent drops to zero. After ~1,600 s the light is switched on again and the current returns to its initial value; it then decreases when the light intensity is reduced (~1,700 s). After ~2,300 s the opposite voltage configuration (VG1 = −5 V and VG2 = +5 V) is applied to the back gates, inducing a polarity inversion that is likewise retained, and a positive photocurrent (red line) is obtained.

Extended Data Fig. 10 Robustness of the network.

a, Detector uniformity, extracted from Extended Data Fig. 1. The fitted Gaussian probability distribution has a standard deviation of σ = 0.205 (40 mA W\(^{-1}\) V\(^{-1}\)). b, Monte Carlo simulation of a vision sensor whose detector responsivities vary with a given standard deviation (the photodetectors of the actual device have a measured photoresponsivity standard deviation of 0.205). The simulated classifier, trained on the MNIST database of handwritten digits, has 784 pixels with 10 subpixels each. For each data point, 50 random photoresponsivity variations were evaluated. c, Dependence of the accuracy on the number of (randomly chosen) defective subpixels, using the same ANN and Monte Carlo simulation scheme as in b. For each data point, 50 random sets of modified photoresponsivities were evaluated.
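The Monte Carlo procedure of panels b and c can be sketched in the same spirit. The version below is a scaled-down illustration rather than the authors' simulation: instead of an MNIST-trained 784 × 10 classifier, it uses three hypothetical 3 × 3 patterns with simple template weights (each pattern minus the mean pattern) as a stand-in for trained responsivities. It models device-to-device variation as a relative Gaussian spread of every weight, and a defective subpixel as a weight forced to zero; both are modelling assumptions. As in the caption, each data point averages 50 random realizations.

```python
import random

# Hypothetical 3x3 patterns standing in for the MNIST digits of the caption.
PATTERNS = [
    [1, 1, 1, 1, 0, 1, 1, 0, 1],
    [1, 0, 1, 1, 0, 1, 0, 1, 0],
    [1, 1, 0, 0, 1, 0, 0, 1, 1],
]


def template_weights(patterns):
    """Stand-in for trained weights: each class row is its pattern minus the mean pattern."""
    n = len(patterns[0])
    mean = [sum(p[i] for p in patterns) / len(patterns) for i in range(n)]
    return [[p[i] - mean[i] for i in range(n)] for p in patterns]


def predict(R, P):
    I = [sum(r * p for r, p in zip(row, P)) for row in R]
    return max(range(len(I)), key=I.__getitem__)


def perturb(R, sigma, n_defective, rng):
    """Relative Gaussian spread of every weight, then n_defective random weights set to zero."""
    out = [[w * (1.0 + rng.gauss(0.0, sigma)) for w in row] for row in R]
    cells = [(m, n) for m in range(len(R)) for n in range(len(R[0]))]
    for m, n in rng.sample(cells, n_defective):
        out[m][n] = 0.0
    return out


def monte_carlo_accuracy(R, test_set, sigma, n_defective=0, trials=50, seed=0):
    """Mean test accuracy over `trials` random realizations of the device nonidealities."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        Rp = perturb(R, sigma, n_defective, rng)
        total += sum(predict(Rp, P) == y for P, y in test_set) / len(test_set)
    return total / trials


R = template_weights(PATTERNS)
rng = random.Random(1)
test_set = [([max(0.0, v + rng.gauss(0.0, 0.2)) for v in p], y)
            for _ in range(50) for y, p in enumerate(PATTERNS)]
for sigma in (0.0, 0.205, 0.5):
    print(f"sigma={sigma}: accuracy {monte_carlo_accuracy(R, test_set, sigma):.3f}")
print(f"5 defective: accuracy {monte_carlo_accuracy(R, test_set, 0.205, n_defective=5):.3f}")
```

Sweeping `sigma` corresponds to the scan in panel b and sweeping `n_defective` to that in panel c, for this toy network only; the numbers it prints say nothing about the MNIST case.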

About this article

Cite this article

Mennel, L., Symonowicz, J., Wachter, S. et al. Ultrafast machine vision with 2D material neural network image sensors. Nature 579, 62–66 (2020). https://doi.org/10.1038/s41586-020-2038-x

DOI: https://doi.org/10.1038/s41586-020-2038-x
