Abstract

A major challenge of climate change adaptation is to assess the effect of changing weather on human health. In spite of an increasing literature on the weather-related health subject, many aspect of the relationship are not known, limiting the predictive power of epidemiologic models. The present paper proposes new models to improve the performances of the currently used ones. The proposed models are based on functional data analysis (FDA), a statistical framework dealing with continuous curves instead of scalar time series. The models are applied to the temperature-related cardiovascular mortality issue in Montreal. By making use of the whole information available, the proposed models improve the prediction of cardiovascular mortality according to temperature. In addition, results shed new lights on the relationship by quantifying physiological adaptation effects. These results, not found with classical model, illustrate the potential of FDA approaches.

Similar content being viewed by others

Introduction

Climate change adaptation is an important challenge for years to come. With the expected increasing number of heat wave events as well as other meteorological changes, human health could greatly be impacted by climate change1. As a consequence, the number of studies outlining the impacts of weather on human health has grown rapidly during the last few years2,3,4. Health issues of interest can either be general mortality or morbidity5,6,7, specific diseases such as respiratory illnesses8,9 or cardiovascular diseases (CVD)10,11,12,13.

In order to estimate the relationship between a meteorological exposure and a health issue, studies commonly use nonlinear models allowing for delayed effects between the exposure and the response. These models can either be generalized additive models (GAM) with lagged exposures14 or distributed lag nonlinear models (DLNM)15. These models have allowed, for instance, the estimation of the acute effect of heat as well as the more latent but very important effect of cold on mortality3. However, they are not able to estimate other important aspects of weather-related health, such as the impact of seasonal anomalies (e.g. early heat waves or late cold spells) or the potential impact of intra-day variations. Not accounting for these effects significantly hinders the predictive performances of classical models and thus the assessment of the impacts of climate change. As a consequence, there is a need for innovative statistical models.

The present study aims at modelling two little known aspects of temperature-related cardiovascular mortality in the Montreal’s metropolitan area (Canada): (i) the effect of the previous 24 hour temperatures on mortality during summer and (ii) the evolution of the relationship between temperature and mortality along the whole year. To study these aspects, it is hereby proposed to consider the recently developed functional regression models16. Functional regression is a subset of functional data analysis models (FDA)17 which represent a statistical framework to deal with continuous curves (rather called functions or functional data) instead of series of discrete data points. The difference between classical time series and functional data is illustrated in Fig. 1. The FDA framework is hereby summoned because of its capacity to represent natural processes such as the intrinsically continuous environmental ones like temperatures. By unveiling the continuous process generating the observed discrete data points, the FDA framework makes use of the whole information available. This leads to a better understanding and in fine, to better predictive performances of the phenomena of interest.

The first objective of the present study is to model the effect of the previous 24 hour temperature variations on summer cardiovascular mortality in the Montreal’s metropolitan area. For this, we utilise hourly measurements of temperature and daily cardiovascular mortality. Classical models are not able to integrate these data directly since the explanatory and response variables are on different time steps (there are 24 temperature values for each mortality observation). Using each hourly measurement as a different explanatory variable in a multiple regression model would lead to unstable estimates because of the high collinearity between them, as well as the high number of coefficients to estimate which increases the uncertainty18. These issues are addressed by the functional linear model for scalar response (SFLM, schematized in Fig. 2a) which is a linear model taking one or several functional variables as exposure to predict a scalar response. It could be seen as the most popular of all functional regression models19,20,21 since it extends this wide problem of predicting a value according to the temporal evolution of one or several predictors. In the SFLM, the successive hourly measurements of temperature during the previous day can be considered as a single functional observation. This extends the classical model since the whole temperature is used (the whole daily curve) instead of an aggregation of this information (e.g. mean or maximum temperature) where valuable information could be lost. Therefore, the first part of the study applies the SFLM with the daily mortality count as response and the previous 24 hour temperature curve as explanatory variable (see the Methods section). This first part of the study is thereafter referred to as ‘Application 1’.

The second objective of the study is to model the evolution of the relationship between temperature and cardiovascular mortality over the whole year. Epidemiologic studies usually only model the relationship along the temperature dimension, while it is sometimes suggested that the month of occurrence of temperature events also have its importance on mortality22. This gap can be filled with the fully functional linear model (FFLM, schematized in Fig. 2b), which is even more general than the SFLM since it expresses a functional response according to at least one functional predictor in the most exhaustive way. The second part of the study (‘Application 2’) uses the FFLM to predict the annual mortality curve using the same year temperature curve. It accounts for the seasonal changes of the relationship (being different between summer and winter for instance). Thus, the FFLM addresses the issue of seasonal anomalies exposed above. In the general model, each time step t of the response curve could depend on any time s of the exposure curve (see Fig. 2b), rather than the fixed time t only17. Since the annual sequence of mortality and temperature data points are considered as a single curve, the FFLM naturally takes into account their smooth variations16. Like the SFLM, the FFLM is especially well suited for time series with potential lag effects and, in this case, it is restricted to the influence of the past time period of the temperature on the time step t of cardiovascular mortality.

The next section presents the result of Applications 1 and 2 to show the effect of previous 24 hour temperature curve and the yearly evolution of the relationship. Their results are then compared to those of the classical models applied to the same issues to show the new insights and predictive improvements brought by functional models. These results are then the basis for the discussion about the advantageous properties of functional regression, both qualitatively and quantitatively.

Results

The data for each of the two following applications are summarized in Table 1. Details about their collection can be found in the Methods section at the end of the manuscript. The Method section also provides mathematical details about the two functional regression models considered in the study.

Application 1: daily mortality – hourly temperatures (with SFLM)

Application 1 is a short-term application using the SFLM in order to detail how cardiovascular mortality is impacted by the evolution of temperature during the previous day. Application 1 focuses on summer mortality (i.e. June to August) in order to increase the knowledge about the cardiovascular mortality response to summer heat. This period of the year is especially of major importance because of heat waves and their importance in climate change adaptation23,24,25. This is particularly true in southern Québec26.

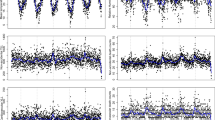

To be more accurate, the response yi of the model is the cardiovascular mortality count at day i, and does not need any preprocessing to be used in the SFLM. However, the exposure is the 24 hour temperature curve of day i − 1, xi−1(s) (\(s\in [0;24]\)). The functional observations xi−1(s) need to be constructed from hourly temperature measurements (see the Data section), through smoothing. We refer to the Methods section for mathematical details about constructing these functional observations. A representative subset of the resulting curves xi−1(s) is displayed in Fig. 3, along with the original hourly measurements. It shows that temperature measurements were already smooth, and that the obtained functional data xi−1(s) fit almost perfectly the measurements.

In the SFLM, since the exposure is functional and the response is scalar, the relationship between them (i.e. the regression coefficient) takes the form of a function β(s) depicting the particular effect of each hour s of xi−1(s) on yi.This functional coefficient β(s), estimated on the Montreal metropolitan area data, is shown in Fig. 4 along with its 95% confidence interval. In the present application, β(s) is interpreted as a lag-response relationship with an increased resolution to estimate the intraday lags. It explains why the ends of the curve do not coincide in Fig. 4.

Figure 4 shows a positive effect of the previous 24-hour temperatures for almost all day. The curve especially shows high values during the morning (5–12 AM) and the evening (4–10 PM). This result clearly shows the role of hot mornings and evenings in explaining the next day’s cardiovascular over-mortality. It suggests that the presence of periods of lower temperatures during the day is important to avoid over-mortality episodes during the day. Note that this phenomenon is usually acknowledged by the presence of minimum temperature in the definition of heat waves27. This is a result that is expected from reality, but which cannot be shown by commonly used regression models.

Application 2: annual mortality – annual temperatures (with FFLM)

Application 2 uses the FFLM to extend the relationship between temperature and cardiovascular mortality to a longer scale. The response in the present application is the annual curve of cardiovascular mortality (yi(t), \(t\in [0;\,365]\)) and the predictor is the curve of temperature during the same year (xi(s), \(s\in [0;\,365]\)). Therefore, Application 2 focuses more on the overall relationship between temperature and cardiovascular mortality. Its main interest is to transcribe the evolution of the relationship shape over the year, which is expected to be different according to the season.

One could have noted that the response is considered as a continuous function although mortality is discrete by nature. From a mathematical point of view, an underlying Poisson process actually generates death occurrences which are then counted each day to yield the classical mortality data28. Available daily data are in fact counts from occurrences happening continually which are generated from a continuous rate function (the expected number of occurrences)29. Therefore, constructing functional data by smoothing the daily count data consists in estimating the underlying rate function.

Application 2 uses the FFLM meaning that, for both the cardiovascular mortality response and the temperature exposure, functional observations need to be constructed from daily measurements. The resulting curves for year 1983 are displayed in Fig. 5 as a representative example. Note that the smoothness of the resulting curves is objectively determined through a cross-validation criterion (CV, see Methods section). Figure 5a shows that the smoothing of cardiovascular mortality considers high-frequency variations as noise, but that the curves represent the overall evolution over the year. This model complements Application 1 by focusing on the long term evolution of mortality. In contrast to mortality, constructed temperature series (Fig. 5b) match almost perfectly the measured data points.

Since both the response and the exposure are functional, the relationship between them takes the form of a bi-dimensional functional coefficient, i.e. a surface. In addition, this surface is constrained to be non-null only when \(s\in [t-60;\,t]\), which means that it represents the relationship between the mortality and the temperature curves for the preceding 60 days only.

The estimation of the surface obtained with Montreal’s data is shown in Fig. 6. It shows a very smooth and mostly negative surface, indicating increases in mortality for below average temperatures. Hence, overall cold weather induces more deaths than heat in Montreal. This effect of cold is especially strong during the fall season (September to November) as well as during the spring season (end of April to the beginning of June). Note also that the surface is close to zero in winter and shows even a positive relationship between January temperatures and February/March mortality. The latter suggests a mortality displacement effect following January cold spells, which is not usually observed during this period of the year30.

Estimated relationship between the annual mortality curve and the temperature of the same year. The color represents the value of the coefficient, red being positive, black negative and white null. Note that the seemingly low values of the coefficient are explained by its continuous nature (the relationship is spread across the whole surface).

The results shown here could suggest an adaptation of the population (either ‘sociological’ or ‘physiological’)31 by showing that below average temperatures cause more excess mortality during the fall season when the population is not yet well prepared, than during winter when it is common. This type of effect cannot be seen with classical methods such as DLNM, and has especially never been observed in the province of Quebec12,32.

Comparison with classical models

The use of functional regression models seems to unveil aspects of the relationship that were not detected by the models commonly used in the field. We now wonder if this is relevant, i.e. if these aspects help predicting yet unobserved mortality. First, Application 1 (based on the SFLM) concerns the relationship between mortality and the previous 24-hour temperature. For a fair comparison, classical models are applied with the same data as the SFLM, i.e. a GAM is performed with mortality count of day i as response and temperature with lag 1 as exposure. In order to cover different cases, several models are fit with different temperature variables, i.e. mean, maximum and minimum temperatures although no differences in predictive power have been observed33, as well as the diurnal range34.

Table 2 shows the prediction mean square error (RMSE) of each classical model as well as the SFLM. These RMSEs are computed through 5-fold blocked-CV, i.e. each data year corresponds to a fold. This particular version of CV is necessary in the case of time series data35. Results show that the SFLM do have a lower prediction error. Therefore, considering the whole curve of a day’s temperature instead of a single value not only brings new information but also improves predictive ability.

Application 2 (based on FFLM) uses classical daily data (see Table 1) and can be compared to the widely used DLNM15, which is fitted on the exact same data. The DLNM is fitted with the same lags than the FFLM, i.e. 60 days. Apart from the maximum lag, the DLNM specifications follow those used by a recent international study3, i.e. with internal B-spline nodes placed at the 10th, 75th and 90th percentiles along the temperature dimension and at equally spaced values on the log scale for the lag dimension.

Table 2 shows the RMSE of the DLNM and FFLM, obtained through 31-fold blocked CV (one fold per year). For the FFLM, the predicted mortality curves are discretized for each day to obtain a daily predicted mortality time series of the same size than the original data. Using this time series, the standard RMSE formula can be computed. It shows RMSE values of 4.45 for the DLNM and 4.31 for the FFLM, indicating hence a better predictive performance for the functional model. Note that, unlike the DLNM, the FFLM achieves these performances while being linear.

Conceptually, the FFLM includes a “time-of-year” aspect, while the DLNM only considers absolute temperatures and is blind to the period of occurrence of temperatures. This time of year aspect could nonetheless be included in a DLNM through an interaction term with a day-of-year variable36. Adding this interaction, the RMSE of the DLNM drops to 4.35, which is still slightly above the FFLM’s RMSE. This result is not presented here since it does not correspond to the current practice in the literature and has never been performed with the whole year (only summer season).

Discussion

The present paper intends to introduce functional regression as an alternative approach to classical models in weather-related health studies. Comparison results show that the predictive performance of functional regression is not vastly superior to those of GAMs and DLNMs. Indeed, the differences in RMSE are small compared to the magnitude of daily cardiovascular mortality in Montreal which presents a mean of 17 death and a standard deviation of 5 deaths. However, functional regression models present several conceptual and practical advantages over the classical models, as discussed below.

The first strength of the functional regression models proposed in the present paper is the natural addition of a time-of-day or time-of-year aspect. Indeed, the SFLM and FFLM acknowledge that temperature may not have the same influence on mortality according to the time in the period studied. This aspect of models allows shedding new knowledge about the relationship between temperature and mortality, both at the intra-day and yearly levels. Note that the two applications respectively consider daily and annual curves since these are the most obvious and frequently used time periods to consider. Other time periods (e.g. a week, a month or several years) can however be considered according to the objective of the study, prior physio-pathological knowledge and the available data.

Barnett et al.33 tried to identify the better measures of temperature for mortality prediction purposes. Evaluating different possibilities such as mean, minimum, maximum temperature as well as other indices based on temperature, the study concluded that none was absolutely better than the others. One of the most interesting properties of the functional framework is that it makes this question irrelevant since the majority of the measures usually considered are automatically included in a temperature curve. In addition, functional regression models address the issue of serial dependence between successive measurements since curves can usually be considered independent37.

All the strengths of functional regression models exposed above participate to the main interest of the functional framework: unveiling new aspects of the weather-health relationships. The SFLM indicates that temperature has an influence at particular periods during the day, i.e. during morning and evening. This latter result corresponds to an intuitive knowledge, since minimum temperatures are included in heat wave definitions to account for this phenomenon38, but do not usually appear in regression models’ results. Note that this knowledge could be further refined by considering temperature curves spanning several days as exposure.

Classical results of temperature-related mortality (usually obtained using a DLNM)15 mainly represent a dichotomy between extreme heat and cold. Mechanisms accounting for the evolution of the relationship are starting to be included in DLNMs36,39. However, this is usually carried out through interaction terms which are difficult to interpret. The FFLM includes these aspects in a straightforward manner and allows a complete and easy interpretation of results. In particular, the FFLM outlines that a cold spell may not have the same impact whether it happens during fall of winter seasons, i.e. it allows observing the population adaptation phenomenon (either physiological or sociological habits). These results are obtained by fitting a single model, and support previous evidence5, obtained through the fit of multiple models. Finally, note that studies on the extremes are also of interest and should complement studies similar to the present one40.

The functional regression models applied in the present paper are linear for clarity purposes. However, the true relationship might not be linear. Indeed, even when isolating specific periods such as morning and evening for Application 1, or summer and winter for Application 2, the effect could be present only above certain thresholds. A perspective is then to apply nonlinear functional models to similar applications to the ones performed in the present paper. Such models have recently been developed, with, for instance, the functional GAM41 or the recently developed general functional models42,43.

The main limitation of functional models lies in their gluttony of data. Indeed, considering long time periods (such as in Application 2 here) necessitates a large amount of data to obtain a meaningful set of curves. This limitation is exacerbated for nonlinear functional models, further justifying the use of linear ones in the present study. Note, however, that large databases are increasingly available with health and weather services, meaning that this limitation will no longer be relevant in a few years.

Introducing functional models in environmental epidemiology opens new potential directions, but research in this topic needs to be pursued. Indeed, the applications shown here are examples designed to introduce the reader to functional linear models, but merely touched the full range of possibilities offered by the FDA framework. Hence, these models may prove even more useful with more exhaustive applications including several predictors (e.g. atmospheric pollutants, precipitations, population demographic data, etc…) and eventually more data curves.

Methods

Data

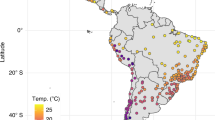

The data are those of the Montreal’s metropolitan area, i.e. the extended area of the city including all its suburbs. This is the most densely populated area of the province of Quebec and one of the most populous in Canada. The large population allows for enough daily CVD mortality to obtain a meaningful link between mortality and temperatures. Moreover, the area is small and flat enough to consider the weather to be homogeneous27.

The considered health data corresponds to the daily number of deaths associated to cardiovascular diseases as the primary cause from 1981 to 2011 (N = 31 years and n = 11322 days). Cardiovascular diseases include ischaemic heart diseases (I20-I25 in the tenth version of the international classification of diseases, ICD-10), heart failure (I50 in the ICD-10), cerebrovascular diseases and Transient cerebral ischaemic attacks (G45, H34.0, H34.1, I60, I61, I63 and 255 I64 in the ICD-10). Corresponding ICD-9 codes are used for the years they were in use during the period of interest (i.e. before 2000). The data were supplied by the Institut national de santé publique du Québec of the Quebec province.

Temperature data come from two different sources for the two applications (Table 1). For Application 1, data are provided by the Ministère du Développement durable, de l’Environnement et de la lutte contre les changements climatiques [Ministry of Sustainable Development, the Environment and Fight against Climate Change]. These data are available hourly from 2007 to 2015. In Application 1, only the hot summer season is considered (June, July and August) since heat waves are susceptible to happen in southern Quebec during these months44,45. The common window with mortality data includes the months of June to August of the years 2007 to 2011, which corresponds to N1 = 460 days.

Since Application 2 uses annual curves, temperature data are provided by Environment and Climate Change Canada in order to possess enough years to perform the FFLM analysis. Data corresponds to daily values from 1981 to 2011, which is the same period as the CVD mortality data described previously. Hence, we have N2 = 31 years of data containing 365 days each (note that 29th of February where removed to keep the same number of measurement each year).

Functional data analysis

Functional data analysis (FDA) has been introduced by Ramsay in 198246 and more recently popularized17. FDA is the subject of an increasing amount of research, both in the theoretical and applied sides. The former have led to the development of the functional counterparts of many traditional statistical techniques such as regression models47,48,49, principal component analysis17, canonical correlation analysis50, classification51 and spatial methods52. In the applied side, the functional framework has improved the modeling in a variety of domains such as ecology53, hydrology54,55,56, marketing57, medicine58,59,60 as well as atmospheric pollution modelling61. The analyses of the present paper are performed using the packages fda and FDboost of the R software62,63,64.

Smoothing functional data

Functional data yi(t), generated by a functional variable y(t), are continuous curves which can be seen as infinite dimensional vectors. Indeed a value yi(t) exists for every t in a continuous interval T. Functional observations yi(t) are constructed by smoothing splines from the observed measurements yi(tl). In the present study, the nodes of the smoothing splines are equally spaced along the interval T. To limit the number of subjective choices, the number of nodes (which controls the smoothness of resulting curves yi(t)) is chosen by leave-one-out cross-validation.

Functional linear model for scalar response

The functional linear model for scalar response (SFLM) used in Application 1 is mathematically expressed as

where yi is the total mortality count of day i, s(i) is a smooth function component designed to control for long-term trend (i.e. a natural spline with a knot every three months), β0 the traditional intercept, and xi−1(s) (\(s\in [0;24]\)) is the temperature curve of day i−1. The functional coefficient of interest is β1(s) which expresses the effect of each time s of the temperature curve on the mortality count yi. The estimation method of β1(s) (and its confidence intervals, CI) can be found in the technical appendix.

Note that FDA lacks asymptotic theory to compute parametric confidence intervals (CI) for \({\hat{\beta }}_{1}(t)\). Therefore, the CIs displayed in Fig. 4 are computed through the non-parametric method of wild bootstrap65.

Fully functional linear model

The fully functional linear model (FFLM) of Application 2 expresses the cardiovascular mortality function yi(t) of year i, at time \(t\in [0;\,365]\) as

where s(i) is a smooth function of the year (\(i\in \{1,\,\ldots ,\,31\}\)) to control for the overall cardiovascular mortality trend, β0(t) is the functional intercept, xi(s) is the temperature curve of year i and β1(s, t) is the surface indicating the effect of xi(s) on yi(t). In Application 2, the constraint is added that \(s\in [t-60;\,t]\) 43. The latter constraint indicates that the maximum lag chosen is 60 days, a value selected because it is slightly higher than the maximum lag found in the literature for mean daily temperature, which is 40 days66. As for the SFLM, the estimation details are deferred to the technical appendix.

References

Patz, J. A., Frumkin, H., Holloway, T., Vimont, D. J. & Haines, A. Climate change: challenges and opportunities for global health. JAMA (2014).

Dukić, V. et al. The Role of Weather in Meningitis Outbreaks in Navrongo, Ghana: A Generalized Additive Modeling Approach. JABES 17, 442–460 (2012).

Gasparrini, A. et al. Mortality risk attributable to high and low ambient temperature: a multicountry observational study. The Lancet 386, 369–375 (2015).

Davis, R. E., Dougherty, E., McArthur, C., Huang, Q. S. & Baker, M. G. Cold, dry air is associated with influenza and pneumonia mortality in Auckland, New Zealand. Influenza and other respiratory viruses 10, 310–3 (2016).

Lee, M. et al. Acclimatization across space and time in the effect of temperature on mortality: a time-series analysis. Environmental Health (2014).

Vanos, J. K., Cakmak, S., Kalkstein, L. S. & Yagouti, A. Association of weather and air pollution interactions on daily mortality in 12 Canadian cities. Air Qual. Atmos. Health 8, 307–320 (2015).

Sugg, M. M., Konrad, C. E. & Fuhrmann, C. M. Relationships between maximum temperature and heat-related illness across North Carolina, USA. Int. J. Biometeorol. 60, 663–675 (2016).

Soneja, S. et al. Exposure to extreme heat and precipitation events associated with increased risk of hospitalization for asthma in Maryland, USA. Environ. Health 15 (2016).

Qiu, H. et al. Effects of coarse particulate matter on emergency hospital admissions for respiratory diseases: a time-series analysis in Hong Kong. Environmental health perspectives 120, 572–576 (2012).

Yang, C. et al. Long-term variations in the association between ambient temperature and daily cardiovascular mortality in Shanghai, China. Science of The Total Environment 538, 524–530 (2015).

Phung, D. et al. The effects of high temperature on cardiovascular admissions in the most populous tropical city in Vietnam. Environmental Pollution 208(Part A), 33–39 (2016).

Bayentin, L. et al. Spatial variability of climate effects on ischemic heart disease hospitalization rates for the period 1989–2006 in Quebec, Canada. International Journal of Health Geographics 9, 5 (2010).

Masselot, P. et al. EMD-regression for modelling multi-scale relationships, and application to weather-related cardiovascular mortality. Science of The Total Environment 612, 1018–1029 (2018).

Dominici, F., McDermott, A., Zeger, S. L. & Samet, J. M. On the Use of Generalized Additive Models in Time-Series Studies of Air Pollution and Health. American Journal of Epidemiology 156, 193–203 (2002).

Gasparrini, A., Armstrong, B. & Kenward, M. G. Distributed lag non-linear models. Statistics in Medicine 29, 2224–2234 (2010).

Morris, J. S. Functional Regression. Annual Review of Statistics and its Applications 2 (2015).

Ramsay, J. O. & Silverman, B. W. Functional data analysis. (Wiley Online Library, 2005).

Marx, B. D. & Eilers, P. H. Generalized linear regression on sampled signals and curves: a P-spline approach. Technometrics 41, 1–13 (1999).

Cardot, H., Ferraty, F. & Sarda, P. Functional linear model. Statistics & Probability Letters 45, 11–22 (1999).

Cardot, H., Ferraty, F. & Sarda, P. Spline estimators for the functional linear model. Statistica Sinica 13, 571–592 (2003).

Goldsmith, J., Bobb, J., Crainiceanu, C. M., Caffo, B. & Reich, D. Penalized Functional Regression. Journal of Computational and Graphical Statistics 20, 830–851 (2011).

Hajat, S., Kovats, R. S., Atkinson, R. W. & Haines, A. Impact of hot temperatures on death in London: a time series approach. Journal of Epidemiology and Community Health 56, 367–372 (2002).

Li, T., Horton, R. M. & Kinney, P. L. Projections of seasonal patterns in temperature- related deaths for Manhattan, New York. Nature Clim. Change 3, 717–721 (2013).

Oliver, E. C. J. et al. The unprecedented 2015/16 Tasman Sea marine heatwave. Nat. Commun. 8 (2017).

Ballester, J., Robine, J.-M., Herrmann, F. R. & Rodó, X. Long-term projections and acclimatization scenarios of temperature-related mortality in Europe. Nature Communications 2, 358 (2011).

Khaliq, M. N., Ouarda, T., St-Hilaire, A. & Gachon, P. Bayesian change-point analysis of heat spell occurrences in Montreal, Canada. Int. J. Climatol. 27, 805–818 (2007).

Chebana, F., Martel, B., Gosselin, P., Giroux, J.-X. & Ouarda, T. B. A general and flexible methodology to define thresholds for heat health watch and warning systems, applied to the province of Québec (Canada). International journal of biometeorology 57, 631–644 (2012).

Schwartz, J. et al. Methodological issues in studies of air pollution and daily counts of deaths or hospital admissions. Journal of Epidemiology and Community Health 50, S3–11 (1996).

Kingman, J. F. C. Poisson Processes. In Encyclopedia of Biostatistics, https://doi.org/10.1002/0470011815.b2a07042 (John Wiley & Sons, Ltd, 2005).

Analitis, A. et al. Effects of Cold Weather on Mortality: Results From 15 European Cities Within the PHEWE Project. Am J Epidemiol 168, 1397–1408 (2008).

Robinson, P. J. On the Definition of a Heat Wave. Journal of Applied Meteorology 40, 762–775 (2001).

Doyon, B., Bélanger, D. & Gosselin, P. The potential impact of climate change on annual and seasonal mortality for three cities in Québec, Canada. International Journal of Health Geographics 7, 23 (2008).

Barnett, A. G., Tong, S. & Clements, A. C. A. What measure of temperature is the best predictor of mortality? Environmental Research 110, 604–611 (2010).

Vutcovici, M., Goldberg, M. & Valois, M. -F. Effects of diurnal variations in temperature on non-accidental mortality among the elderly population of Montreal, Québec, 1984–2007. Int J Biometeorol 1–10, https://doi.org/10.1007/s00484-013-0664-9 (2013).

Bergmeir, C. & Benítez, J. M. On the use of cross-validation for time series predictor evaluation. Information Sciences 191, 192–213 (2012).

Gasparrini, A. et al. Changes in Susceptibility to Heat During the Summer: A Multicountry Analysis. Am J Epidemiol 183, 1027–1036 (2016).

Cuevas, A., Febrero, M. & Fraiman, R. Linear functional regression: The case of fixed design and functional response. Canadian Journal of Statistics 30, 285–300 (2002).

Perkins, S. E. & Alexander, L. V. On the Measurement of Heat Waves. Journal of Climate 26, 4500–4517 (2013).

Gasparrini, A. et al. Temporal Variation in Heat–Mortality Associations: A Multicountry Study. Environ Health Perspect 123, 1200–1207 (2015).

Chiu, Y., Chebana, F., Abdous, B., Bélanger, D. & Gosselin, P. Mortality and morbidity peaks modeling: An extreme value theory approach. Statistical Methods in Medical Research 0962280216662494 (2016).

McLean, M. W., Hooker, G., Staicu, A.-M., Scheipl, F. & Ruppert, D. Functional Generalized Additive Models. Journal of Computational and Graphical Statistics 23, 249–269 (2014).

Brockhaus, S., Scheipl, F., Hothorn, T. & Greven, S. The functional linear array model. Statistical Modelling 15, 279–300 (2015).

Brockhaus, S., Melcher, M., Leisch, F. & Greven, S. Boosting flexible functional regression models with a high number of functional historical effects. Statistics and Computing 1–14, https://doi.org/10.1007/s11222-016-9662-1 (2016).

Khaliq, M. N., Ouarda, T., Gachon, P. & Sushama, L. Stochastic modeling of hot weather spells and their characteristics. Clim. Res. 47, 187–199 (2011).

Slonosky, V. C. Daily minimum and maximum temperature in the St-Lawrence Valley, Quebec: two centuries of climatic observations from Canada. Int. J. Climatol. 35, 1662–1681 (2015).

Ramsay, J. When the data are functions. Psychometrika 47, 379–396 (1982).

Ramsay, J. O. & Dalzell, C. Some tools for functional data analysis. Journal of the Royal Statistical Society. Series B (Methodological) 539–572 (1991).

Hastie, T. & Mallows, C. A Statistical View of Some Chemometrics Regression Tools: Discussion. Technometrics 35, 140–143 (1993).

Ferraty, F. & Vieu, P. Nonparametric models for functional data, with application in regression, time series prediction and curve discrimination. Nonparametric Statistics 16, 111–125 (2004).

He, G., Müller, H.-G. & Wang, J.-L. Functional canonical analysis for square integrable stochastic processes. Journal of Multivariate Analysis 85, 54–77 (2003).

Fischer, A. Quantization and clustering with Bregman divergences. Journal of Multivariate Analysis 101, 2207–2221 (2010).

Dabo-Niang, S. & Rhomari, N. Kernel regression estimation in a Banach space. Journal of Statistical Planning and Inference 139, 1421–1434 (2009).

Bel, L., Bar-Hen, A., Petit, R. & Cheddadi, R. Spatio-temporal functional regression on paleoecological data. Journal of Applied Statistics 38, 695–704 (2011).

Chebana, F., Dabo-Niang, S. & Ouarda, T. B. M. J. Exploratory functional flood frequency analysis and outlier detection. Water Resources Research 48, W04514 (2012).

Masselot, P., Dabo-Niang, S., Chebana, F. & Ouarda, T. B. M. J. Streamflow forecasting using functional regression. Journal of Hydrology 538, 754–766 (2016).

Ternynck, C., Ben Alaya, M. A., Chebana, F., Dabo-Niang, S. & Ouarda, T. B. M. J. Streamflow hydrograph classification using functional data analysis. Journal of Hydrometeorology (2016).

Sood, A., James, G. M. & Tellis, G. J. Functional regression: A new model for predicting market penetration of new products. Marketing Science 28, 36–51 (2009).

Ratcliffe, S. J., Leader, L. R. & Heller, G. Z. Functional data analysis with application to periodically stimulated foetal heart rate data. I: Functional regression. Statistics in Medicine 21, 1103–1114 (2002).

Ratcliffe, S. J., Heller, G. Z. & Leader, L. R. Functional data analysis with application to periodically stimulated foetal heart rate data. II: Functional logistic regression. Statistics in Medicine 21, 1115–1127 (2002).

Hosseini-Nasab, M. & Mirzaei, Z. Functional analysis of glaucoma data. Statistics in Medicine 33, 2077–2102 (2014).

Arisido, M. W. Functional measure of ozone exposure to model short-term health effects. Environmetrics 27, 306–317 (2016).

Ramsay, J., Wickham, H., Graves, S. & Hooker, G. fda: Functional data analysis. R package version 2 (2011).

Brockhaus, S. & Ruegamer, D. FDboost: Boosting Functional Regression Models. (2016).

R Core Team. R: A language and environment for statistical computing. (R Foundation for Statistical Computing, 2015).

Gonzalez-Manteiga, W. & Martinez-Calvo, A. Bootstrap in functional linear regression. J. Stat. Plan. Infer. 141, 453–461 (2011).

Zanobetti, A., Wand, M. P., Schwartz, J. & Ryan, L. M. Generalized additive distributed lag models: quantifying mortality displacement. Biostatistics 1, 279–292 (2000).

Acknowledgements

The authors wish to thank the Fonds vert du gouvernement du Québec for funding the study, and the Ministry of Sustainable Development, Environment and the Fight against Climate Change as well as Environment and Climate Change Canada for access to temperature data. Finally, the authors would like to thank Jean-Xavier Giroux and Yohann Chiu of INRS-ETE for their relevant comments throughout the study. The authors thank the Editor, Prof. Andy Morse, and two anonymous reviewers whose comments helped considerably improve the quality of the manuscript.

Author information

Authors and Affiliations

Contributions

P.M. performed the study, including the statistical analysis and writing the manuscript. F.C. gave the guidelines of the study, supervised the statistical analysis helped writing and editing the manuscript. T.O. helped writing and editing the manuscript. D.B. and P.G. gave access to data, directed the epidemiologic problematic and helped writing the manuscript. Finally, AS helped writing the manuscript.

Corresponding author

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Masselot, P., Chebana, F., Ouarda, T.B.M.J. et al. A new look at weather-related health impacts through functional regression. Sci Rep 8, 15241 (2018). https://doi.org/10.1038/s41598-018-33626-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-018-33626-1

Keywords

This article is cited by

-

A functional regression model for the retrospective assessment of asbestos exposure among Venetian dock workers

Environmental and Ecological Statistics (2024)

-

A quantitative method for estimating the adaptedness in a physiological study

Theoretical Biology and Medical Modelling (2019)

-

Nonstationary warm spell frequency analysis integrating climate variability and change with application to the Middle East

Climate Dynamics (2019)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.