Abstract

Age-related hearing loss typically affects the hearing of high frequencies in older adults. Such hearing loss influences the processing of spoken language, including higher-level processing such as that of complex sentences. Hearing aids may alleviate some of the speech processing disadvantages associated with hearing loss. However, little is known about the relation between hearing loss, hearing aid use, and their effects on higher-level language processes. This neuroimaging (fMRI) study examined these factors by measuring the comprehension and neural processing of simple and complex spoken sentences in hard-of-hearing older adults (n = 39). Neither hearing loss severity nor hearing aid experience influenced sentence comprehension at the behavioral level. In contrast, hearing loss severity was associated with increased activity in left superior frontal areas and the left anterior insula, but only when processing specific complex sentences (i.e. object-before-subject) compared to simple sentences. Longer hearing aid experience in a sub-set of participants (n = 19) was associated with recruitment of several areas outside of the core speech processing network in the right hemisphere, including the cerebellum, the precentral gyrus, and the cingulate cortex, but only when processing complex sentences. Overall, these results indicate that brain activation for language processing is affected by hearing loss as well as subsequent hearing aid use. Crucially, they show that these effects become apparent through investigation of complex but not simple sentences.

Introduction

Age-related hearing loss typically affects an older adult's ability to hear high frequencies. This type of hearing loss is often left untreated, with large numbers of hard-of-hearing older adults not using a hearing aid in their daily life. For example, only an estimated 14% of hard-of-hearing people over the age of 50 in the US use a hearing aid1 and only an estimated 9% of hard-of-hearing people between age 60 and 69 in Canada use a hearing aid2. The consequences of this are largely unknown but highly relevant, as many countries face an aging population and untreated hearing loss is associated with cognitive decline3,4,5 and incident dementia6.

Hearing loss also affects the processing of spoken language. While this may sound like a logical consequence of degraded auditory input, the effects seem to go beyond the auditory level, also affecting higher-level linguistic processing. For example, adults with mild-to-moderate hearing loss have problems with the comprehension of complex sentences (especially at higher speech rates7), respond slower to comprehension questions about complex sentences8, and show increased sentence processing times9 compared to normal-hearing peers.

The neural processing of speech typically relies on a network that includes the middle and superior temporal gyrus and the inferior frontal gyrus10. Speech processing predominantly takes place in the left hemisphere, which is the dominant hemisphere for language processing, although corresponding areas in the right hemisphere are also part of the network10. Neurophysiological research has shown that hearing loss is associated with decreased activity in cortical regions belonging to this speech processing network11,12 as well as in subcortical regions11 during auditory sentence processing. In contrast, hearing loss increases recruitment of regions beyond the traditional speech processing network when processing spoken language, specifically of frontal areas13,14. Such increased recruitment may be a compensatory mechanism, without which communicative abilities would suffer15,16. However, compensating for perceptual difficulties caused by degraded input in hard-of-hearing individuals is effortful17 and may therefore interfere with other taxing processes, such as higher-level language processing (cf.18,19).

Age-related hearing loss can be treated quite effectively through the fitting of hearing aids. Recent research has shown that 3 months after being fitted with a hearing aid, hard-of-hearing subjects report improved speech understanding and decreased listening effort in everyday life20. Moreover, hearing aids may alleviate some of the speech processing disadvantages associated with hearing loss. Specifically, hearing aid users compared to non-users show shorter processing times under similar hearing conditions9,21, which seem to level out when non-users are fitted with hearing aids for 6 months22. Similarly, improvements in speech identification have been found following hearing aid fitting23,24.

Regarding the neurophysiological effects of hearing aid use, findings are less clear. A recent study25 using EEG source reconstruction found increased activity in auditory, frontal, and pre-frontal areas for hard-of-hearing subjects without hearing aids compared to a control group when processing visual symbols. In addition, more severe hearing loss correlated with increased right auditory activation. The same hard-of-hearing subjects showed a reversal of these effects following the use of bilateral hearing aids for 6 months. In addition, subjects' speech perception in noise improved as a result of using hearing aids. In contrast, Dawes et al.26 found no neural effects of hearing aid fitting on the processing of simple tones using EEG measures. Hwang et al.27 provided subjects with one hearing aid in order to compare effects on the aided versus the unaided ear. Using fMRI measures, they found decreased activity—compared to a baseline measure before hearing aid fitting—in the superior temporal gyrus following the presentation of speech sounds to the unaided ear as well as the aided ear. Finally, Habicht et al.28 used fMRI measures and found decreased activity in superior and middle frontal regions outside of the speech processing network in hearing aid users compared to hard-of-hearing unaided listeners when processing speech in noise. In addition, they found increased activity in the lingual gyri and the left precuneus with longer hearing aid use when processing complex sentences. Since decreased activity in frontal regions has been found when hearing aid use was used as a grouping factor27,28, but increased activity in other regions has been found to correlate with length of hearing aid use28, both the location and the direction of the influence of hearing aid use on neural processing remain unclear. 
In addition, many of these studies had small sample sizes (e.g., groups of 8 participants27 or 13 participants28), and in Habicht et al.28 the significance thresholds were not corrected for multiple comparisons. As the authors28 acknowledge the accompanying higher risk of false positives and the need for follow-up studies, it is clear that more research on hearing aid use in relation to sentence processing is needed. Thus, even though there are some indications of behavioral as well as neural effects of hearing aid use, the relationship between hearing loss, the use of hearing aids, and their effects on higher-level language processes in relation to neural changes is not yet well understood. In addition, the influence of the duration of hearing aid use on linguistic processing has not yet been examined in a structured manner.

In this study, we investigate the relation between hearing loss severity and hearing aid use on the one hand and the comprehension and neural processing of simple and complex sentences on the other. We used an experimental paradigm in which participants were presented with auditory recordings of simple and complex sentences. Specifically, we manipulated word order to create sentences of different complexities in German. Simple sentences were as in (1), where the subject of the sentence precedes the object and the adjunct is in third position. Complex sentences were derived from this structure by two word order changes. These two changes are assumed to involve different types of syntactic processing29,30. The first change involved putting the object before the subject. This leads to increased processing cost18,31,32. The second change involved moving the adjunct from the third position (which is assumed to be the default, canonical position) to the first. Finally, these two word order changes were combined to create what is presumably the most complex structure, shown in (2). An overview of all sentence structures is shown in Table 1.

| (1) | DerNOM Hund | berührt | am Montag | denACC Igel |
|---|---|---|---|---|
| | TheNOM dog | touches | on Monday | theACC hedgehog |
| | [Subject] | [Verb] | [Adjunct] | [Object] |
| | The dog touches the hedgehog on Monday | | | |

| (2) | Am Montag | berührt | denACC Igel | derNOM Hund |
|---|---|---|---|---|
| | On Monday | touches | theACC hedgehog | theNOM dog |
| | [Adjunct] | [Verb] | [Object] | [Subject] |
| | The dog touches the hedgehog on Monday | | | |

Furthermore, by using different sentence structures, we could reduce the predictability of the upcoming sentence. The sentences therefore require continuous linguistic processing for correct interpretation. Also, some effects of hearing loss or hearing aid use might only emerge when a task is sufficiently difficult, such as the processing of complex sentences [e.g.,18,31,32]. Thus, effects of these hearing-related measures may be found during the processing of complex sentences but not during the processing of simple sentences (as in28). Individual measures of hearing loss and the duration of hearing aid use (hearing aid experience) were obtained from each participant, so that their associations with sentence processing could be investigated. In addition, a non-verbal secondary task (as part of a dual-task paradigm) was included to increase the load on participants and therefore to potentially elicit stronger effects of hearing loss and hearing aid experience. Dual-task situations are common in everyday life. Crucially, the secondary task (a fixation cross change detection task) was domain-unspecific (i.e. non-auditory, non-linguistic) and therefore increased load in a different domain than the increase caused by complex sentences. Specifically, the task was aimed at increasing demands of visual attention and executive control without taxing working memory or interfering with auditory processing. This way, it can be examined whether sources of processing load in different modalities [domain-specific (sentence complexity) vs. domain-general (secondary task)] are affected by hearing loss and hearing aid experience in different ways.

Based on the literature presented above, we formulated two main hypotheses. Firstly, we expected increased hearing loss to be associated with less successful comprehension of complex sentences, especially in dual task situations. At the neural level, we expected increased hearing loss to be associated with decreased activity in cortical regions related to the speech processing network11, and increased activity in frontal regions outside of this network13,14 (Hypothesis 1). Secondly, we expected increased hearing aid experience to alleviate some of the effects of hearing loss and to therefore show opposite effects. Based on this, we expected increased hearing aid experience to be associated with improved comprehension of complex sentences, increased activity in cortical regions related to the speech processing network, and decreased activity in frontal regions outside of this network (Hypothesis 2). Based on previous research28, we expected the strongest effects of hearing aid experience to emerge in the processing of complex sentences (compared to simple sentences). Such a pattern would reflect effects in higher-level language processing rather than auditory processing.

Methods

Participants

Thirty-nine older adults participated in the study (19 females; mean age 65.5 years; age range 54–73 years). All participants showed mild-to-moderate binaural sloping age-related hearing loss with a group-average Pure Tone Average (PTA)-high (the average hearing loss at 2, 4, 6, and 8 kHz) of 48.5 dB HL (see Fig. 1 for audiograms). An overview of the participants' demographic, audiological, and cognitive characteristics is given in Table 2. The group of participants included both hearing aid users who used their hearing aid for a minimum of 4 h daily (n = 19; mean age 65.5 ± 5.1) and non-users who had never used a hearing aid (n = 20; mean age 65.6 ± 4.1). The hearing aid users had been using a hearing aid for an average of 6.1 years (hearing aid experience). Our original idea was to compare groups of hearing aid users and non-users directly, but in Germany it is difficult to find elderly participants with more severe (i.e. moderate) hearing loss who do not already use hearing aids. As a consequence, we were not able to recruit a sufficient number of participants with similar hearing loss. The hearing aid users therefore had more severe hearing loss on average than the participants without hearing aids (PTA-high of 60.8 ± 6.1 and 36.8 ± 7.8 dB HL, respectively), rendering a group comparison inappropriate. We therefore decided to investigate the influence of hearing aid use within the group of hearing aid users as a function of the length of use. In addition, the influence of hearing loss severity was investigated in the combined sample. All participants were right-handed, had normal or corrected-to-normal vision, were native speakers of German, had no contraindications for MRI, and reported no language or neurological disorders. Participants showed normal cognitive functioning at a group level as determined by the Montreal Cognitive Assessment task33 (mean score of 27.2 out of 30).
Informed consent was obtained from all participants prior to the start of the study and all participants received a monetary compensation afterwards. The study was approved by the ethics committee of the University of Oldenburg (“Kommission für Forschungsfolgenabschätzung und Ethik”, approval number Drs. 28/2017) and carried out in accordance with the Declaration of Helsinki.
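The PTA-high measure defined above (the average hearing loss at 2, 4, 6, and 8 kHz) can be illustrated with a minimal sketch. The audiogram values are hypothetical and invented purely for illustration, and averaging the two ears to obtain a single binaural value is an assumption, since the text does not specify how the ears were combined.

```python
from statistics import mean

# Frequencies (Hz) that enter PTA-high according to the text.
PTA_HIGH_FREQS = (2000, 4000, 6000, 8000)


def pta_high(audiogram):
    """Mean threshold (dB HL) over 2, 4, 6 and 8 kHz for one ear."""
    return mean(audiogram[freq] for freq in PTA_HIGH_FREQS)


def pta_high_binaural(left, right):
    """Assumption: the binaural value is the mean of the two ears."""
    return mean([pta_high(left), pta_high(right)])


# Hypothetical thresholds in dB HL (not data from the study).
left_ear = {500: 15, 1000: 20, 2000: 35, 4000: 50, 6000: 60, 8000: 65}
right_ear = {500: 20, 1000: 20, 2000: 40, 4000: 50, 6000: 55, 8000: 65}

print(pta_high(left_ear), pta_high(right_ear),
      pta_high_binaural(left_ear, right_ear))
```

Because only the four high frequencies enter the average, the thresholds at 500 Hz and 1 kHz do not affect the measure, which matches the sloping, high-frequency profile of age-related hearing loss.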

Materials and design

Sentences were taken from a previous study on the processing of different word orders34, based on the German OLACS corpus35. All sentences contained a Subject (S), a transitive Verb (V), a temporal Adjunct (A), and an Object (O). The position of the adjunct (first or third) and the order of the subject compared to the object (subject-before-object or object-before-subject) were manipulated. This resulted in four sentence conditions (see Table 1), varying in word order and consequently in sentence complexity. SVAO is the canonical, and hence simple word order in German. OVAS, AVSO, and AVOS are non-canonical, more complex word orders in German, with AVOS arguably being the most complex (see34). Finally, a condition without sentences (i.e. a silent condition) was included to serve as a baseline of neural activity in the analyses. A fixation cross was displayed on the screen during sentence (or baseline) presentation.

After the sentences were presented to the participants auditorily over headphones, two pictures were presented for a picture selection task (pictures taken from34 based on9,32). Both pictures contained both characters, but differed in which character performed the action mentioned in the sentence. More specifically, one picture displayed the correct character performing the mentioned action (i.e. in the examples from Table 1: the dog) on the second character (i.e. the hedgehog). The other picture displayed the exact reversal, namely the second character performing the mentioned action (i.e. in the examples from Table 1: the hedgehog) on the other character (i.e. in this case, the dog). Participants could select the picture that matched the meaning of the sentence best with a button press; left button (right index finger) for the left picture and right button (right middle finger) for the right picture. In the silent baseline condition, participants could select a picture randomly. The experiment included a dual task condition in half of the trials in order to examine the effect of non-modality specific processing load. In this condition, a secondary task had to be performed alongside the primary sentence processing task. In this secondary task, the horizontal or the vertical bar of the fixation cross changed in size exactly once during the sentence presentation. Participants were instructed to press a button when they noticed this change (for more details on this paradigm see34).

Each trial consisted of the sentence presentation (3.5 s), the picture selection task (3.5 s), and a jitter of 0.3–0.7 s before and after the sentence presentation. The experiment used a within-subjects design with 240 trials (24 trials per condition with 10 conditions, namely 4 sentence conditions and one baseline condition in both single and dual task condition). These were distributed over two sessions (120 trials each). Each session was subsequently divided into six blocks (20 trials each), which alternatingly presented the single and the dual task condition. Instructions on the screen before the start of each block informed participants about which condition would come next. Two pseudo-randomized test-lists were created to counter any order effects.
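The trial counts and block structure described above can be cross-checked with a short sketch. The condition labels follow the text; which task condition opens a session is an assumption (the text only states that blocks alternated), and the trial duration treats the jitter as occurring once before and once after the sentence.

```python
from itertools import product

SENTENCE_CONDITIONS = ["SVAO", "OVAS", "AVSO", "AVOS", "baseline"]
TASK_CONDITIONS = ["single", "dual"]
TRIALS_PER_CONDITION = 24

# 5 sentence-level conditions x 2 task conditions = 10 conditions.
conditions = list(product(SENTENCE_CONDITIONS, TASK_CONDITIONS))
total_trials = len(conditions) * TRIALS_PER_CONDITION

SESSIONS, BLOCKS_PER_SESSION, TRIALS_PER_BLOCK = 2, 6, 20
trials_from_blocks = SESSIONS * BLOCKS_PER_SESSION * TRIALS_PER_BLOCK


def block_order(first_task="single"):
    """Alternate single and dual task blocks within one session.

    Assumption: the starting task is a free parameter here.
    """
    other = "dual" if first_task == "single" else "single"
    return [first_task if i % 2 == 0 else other
            for i in range(BLOCKS_PER_SESSION)]


# Trial duration: sentence + picture selection + two jitter intervals.
SENTENCE_S, PICTURE_S = 3.5, 3.5
JITTER_MIN_S, JITTER_MAX_S = 0.3, 0.7
shortest_trial = SENTENCE_S + PICTURE_S + 2 * JITTER_MIN_S
longest_trial = SENTENCE_S + PICTURE_S + 2 * JITTER_MAX_S

print(total_trials, trials_from_blocks, block_order())
print(shortest_trial, longest_trial)
```

Both ways of counting give the same total, and each task condition fills exactly half of the twelve blocks, which is consistent with the dual task being used in half of the trials.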

Visual stimuli were presented by a projector (DATAPixx2, VPixx Technologies Inc.) on a screen, which was positioned behind the MRI (distance of 50 cm from eye to screen). Stimulus presentation was controlled by Presentation software (version 18.3, NeuroBehavioral Systems, Inc., Berkeley, CA, www.neurobs.com).

Procedure

The study comprised the linguistic experiment described above as well as various cognitive measurements. Informed consent, questionnaires (asking for age, hearing aid use, listening effort), audiogram measurements, the Comprehensive Trail-Making Test (CTMT;36) as a measure of cognitive flexibility, the Digit Span task as a measure of working memory capacity, and a practice for the main experiment were performed outside the MRI scanner. Inside the MRI scanner, noise cancellation was applied via MR compatible headphones (Opto Active, Optoacoustics Ltd, Israel). Participants were tested without hearing aids. The sound intensity of the stimuli from the main linguistic experiment was adjusted to 80% intelligibility for each participant individually using the Oldenburg (Matrix) Sentence Test37,38,39 to correct for participants' hearing and for background noise inside the scanner. A second practice round for the main experiment, the main experiment (two sessions; approx. 18 min each), a structural scan (T1), and resting state and DTI measurements (not reported here) were performed inside the scanner. Between the two sessions of the main experiment, participants had a 10- to 15-min break outside the scanner, in which they completed a German vocabulary test40 as a measure of verbal intelligence. The complete study took around three hours.

Behavioral data analysis

The behavioral data analyses were performed in the statistical computing software R (version 3.6.2, www.r-project.org41). The rates of correct responses (dependent variable) were examined for each sentence condition in the single and the dual task. Binomial generalized linear mixed-effect-based models were used to investigate the influence of sentence condition, task condition, PTA-high, and hearing aid experience (hearing aid use in years, only for the 19 participants who were hearing aid users) on the rate of correct responses. The warranted inclusion of fixed factors, as well as that of several covariates (age, digit span, CTMT, vocabulary) was examined by means of model comparisons. A maximal converging random effects structure was used. The best model included sentence condition (with SVAO as the baseline), session (i.e. before vs. after the break), and vocabulary as fixed factors. Task condition, PTA-high, hearing aid experience, age, digit span, and CTMT did not improve the model. Post-hoc pairwise comparisons (Bonferroni corrected) were applied to examine the differences between each of the sentence conditions. The relation between age on the one hand and PTA-high and hearing aid experience on the other was examined to check whether higher age correlated with more hearing loss and/or longer hearing aid experience, but this was not the case.

MRI data acquisition

MRI data acquisition was performed with a 3 T Siemens Magnetom Prisma MRI scanner using a 20-channel head coil. T2*-weighted gradient echo planar imaging (EPI) with BOLD contrast was used (TR = 1800 ms, TE = 30 ms, flip angle = 75°, df = 20, slice thickness = 3 mm, FOV = 192 mm, 33 slices). In the first session, 600 whole-brain volumes were acquired; in the second session, 617 whole-brain volumes were acquired (the first 17 scans constituted 4 warm-up trials for participants and were removed to eliminate magnetic saturation effects). Anatomical images were recorded with a 3-D T1-weighted MP-RAGE sequence (TR = 2000 ms, TE = 2.07 ms, flip angle = 9°, slice thickness = 0.75 mm, 224 slices). The mean signal-to-noise ratio of the two experimental sessions was 2.9 (s.d. = 0.3; calculated using the MRIQC-tool42).

fMRI data analysis

(Pre)processing and analysis were performed in SPM12 (Statistical Parametric Mapping, Wellcome Department of Imaging Neuroscience, University College London, http://www.fil.ion.ucl.ac.uk/spm). Preprocessing steps included motion correction and realignment estimation, coregistration, segmentation, and normalization to the Montreal Neurological Institute (MNI) space using normalization parameters obtained from the segmentation of the anatomical T1-weighted image. In addition, the functional data were smoothed with a Gaussian filter (8 mm FWHM kernel; default smoothing kernel in SPM).

General linear models were used for first-level analyses per participant, applying a high-pass filter of 128 s, accounting for serial correlations with an AR(1) model, and using the six head movement parameters obtained in the realignment estimation as regressors. The four sentence types were modelled as boxcar functions (from sentence onset until the onset of the pictures; 3.5 s + jitter) and convolved with a canonical hemodynamic response function. Since no behavioral effects of task condition (i.e. single vs. dual task) were found, we first examined the differences between sentence processing (i.e. the contrast all sentence conditions > baseline condition without sound) in the two different task conditions in first-level and subsequently second-level comparisons. As the second-level comparison showed no differences between the processing of sentences in the single task condition compared to the dual task condition, we collapsed over the two task conditions in all subsequent analyses. This decreases the number of comparisons and increases their power. Next, planned first-level analyses were used to individually calculate estimates for the contrasts (1) SVAO > baseline (the effects of canonical sentence processing) (2) OVAS > SVAO (the effects of object-before-subject word order), (3) AVSO > SVAO (the effects of adjunct-first word order), and (4) AVOS > SVAO (the effects of adjunct-first, object-before-subject word order). After that, we calculated estimates for the contrast of all sentences together > baseline in order to examine whether sentence processing in general shows effects of hearing loss or hearing aid experience. Furthermore, we calculated estimates for each of the complex sentences compared to the baseline (i.e. OVAS > baseline, AVSO > baseline, and AVOS > baseline), to check for consistency of results across the different sentence types.
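How a boxcar function convolved with a hemodynamic response function yields a first-level regressor can be sketched as follows. This is not SPM12's implementation: the double-gamma shape parameters are commonly used defaults taken as an assumption, the fine time grid `DT` and the onsets are hypothetical, and the jitter is omitted from the boxcar duration for simplicity.

```python
import math

TR = 1.8   # repetition time in seconds (1800 ms)
DT = 0.1   # fine time grid in seconds (assumption)


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma response: an early peak minus a scaled late undershoot."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    under = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - ratio * under


def boxcar(onsets, duration, n_samples):
    """1 while a sentence is playing, 0 otherwise, on the fine grid."""
    series = [0.0] * n_samples
    for onset in onsets:
        start = int(round(onset / DT))
        stop = int(round((onset + duration) / DT))
        for i in range(start, min(stop, n_samples)):
            series[i] = 1.0
    return series


def convolve(signal, kernel):
    """Discrete causal convolution, truncated to the signal length."""
    out = [0.0] * len(signal)
    for i, value in enumerate(signal):
        if value == 0.0:
            continue
        for j, weight in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += value * weight * DT
    return out


def regressor(onsets, duration, n_volumes):
    """Predicted BOLD time course sampled once per volume."""
    n_samples = int(round(n_volumes * TR / DT))
    kernel = [double_gamma_hrf(i * DT) for i in range(int(32 / DT))]
    fine = convolve(boxcar(onsets, duration, n_samples), kernel)
    step = int(round(TR / DT))
    return [fine[v * step] for v in range(n_volumes)]


# Hypothetical onsets (s) for one sentence condition.
reg = regressor(onsets=[10.0, 40.0], duration=3.5, n_volumes=40)
print([round(value, 3) for value in reg])
```

The resulting time course is zero before the first sentence and rises to a delayed peak several seconds after sentence onset, which is why the regressor, rather than the raw boxcar, is fitted to the measured signal.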

To investigate the relation between the level of hearing loss and sentence processing, we ran F-contrasts at a group level using the first-level estimates, with PTA-high, age, cognitive measures (digit span, CTMT, vocabulary), and a binary dummy variable for hearing aid use entered as covariates. In addition, to investigate the relation between the hearing aid use and sentence processing, we ran separate F-contrasts for the hearing aid users only, with years of hearing aid experience, PTA-high, age, and cognitive measures (digit span, CTMT, vocabulary) entered as covariates. Effects are reported as significant when they exceed a cluster-level (Family-Wise Error) corrected threshold of p < 0.05 (with a p < 0.001 cluster-forming threshold). When the F-contrasts indicated significant effects of the covariate of interest (hearing loss or hearing aid experience), we followed up with simple t-contrasts to determine the direction of the covariate effect. In addition, a simple t-contrast was performed to examine the neural activity in simple sentence processing compared to the silent baseline (SVAO > baseline) as an overall check of auditory- and language-processing-related activity during canonical sentence processing. All peaks are reported in MNI-space. Localization of the corresponding brain regions was achieved with SPM12 and the Yale BioImage Suite Package (http://sprout022.sprout.yale.edu/mni2tal/mni2tal.html).

Finally, to make sure that the observed effects of hearing aid experience were not driven by extreme cases, we performed additional correlation analyses with Spearman's ρ. This correlation coefficient has low sensitivity to outliers as it limits data points to the value of their rank. These correlations were done with the parameter estimates for each participant extracted from SPM12 and performed within the statistical computing software R.
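The rank-based logic of Spearman's ρ can be made concrete with a minimal, self-contained sketch. The analyses in the study were run in R; the pure-Python version below is only an illustration, and the values for years of hearing aid experience and parameter estimates are hypothetical.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data; the last participant is an extreme case.
years = [1, 2, 3, 4, 5, 19]
betas = [0.2, 0.1, 0.4, 0.3, 0.5, 5.0]

print(pearson(years, betas), spearman(years, betas))
```

Replacing the extreme case (19 years, 5.0) with a value that is only slightly larger than the rest leaves ρ unchanged, because only the rank order enters the computation, whereas the Pearson coefficient changes with the magnitude of the extreme point.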

Results

Behavioral results

The results from the picture selection task, i.e. the sentence comprehension results, are presented in Fig. 2 and Table 3. The results showed effects of sentence condition, with participants performing better on the simple SVAO sentences than on all three types of complex sentences (OVAS: β = −1.48, z = −16.39, p < 0.001; AVSO: β = −0.25, z = −2.54, p < 0.05; AVOS: β = −1.72, z = −18.99, p < 0.001). This shows that the canonical SVAO sentences are easiest to interpret. Based on visual inspection of Fig. 2, the opposite condition, AVOS, with adjunct-first and object-before-subject word order, seems most difficult to interpret. This was confirmed by pairwise comparisons, which showed that the rate of correct responses differed between all complex sentence conditions (all p's < 0.05), with AVOS sentences having the lowest rate of correct responses. Task condition (single or dual), PTA-high, hearing aid experience, age, digit span, and CTMT did not improve the model and therefore we found no evidence of these factors influencing the rate of correct responses. Participants' score on the vocabulary task showed a positive relation with their rate of correct responses (β = 0.09, z = 10.82, p < 0.001).

Performance on the picture selection task. Boxplot showing the median and first and third quartile of the percentage of correct responses on the picture selection task as a function of sentence type and load. Statistically significant differences in correct responses were found between all sentence conditions, with performance being highest on canonical SVAO sentences and lowest on AVOS sentences. No significant effect of task condition (single or dual) was found. No significant correlations between performance and PTA-high (hearing loss) or hearing aid experience were found. This figure was created in R (version 3.6.2, www.r-project.org41).

Imaging results

The results of the overall check of auditory- and language-processing-related activity during canonical sentence processing in the experiment (main effect of the contrast SVAO > baseline) showed an increase in neural activity related to sentence processing, with a large activation cluster with its peak in the left superior temporal gyrus. These results show that evidence of sentence processing was robustly detected in the data; more detailed results of this comparison are presented in Table S1 and Fig. S1 in the Supplementary Information online.

Hearing loss

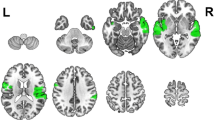

The potential relationship between level of hearing loss (as defined by PTA-high) and sentence processing was investigated in the contrast between the canonical SVAO sentence and the baseline condition without sound (SVAO > baseline), as well as in the contrasts between the three complex sentence conditions and the canonical sentence condition (i.e. OVAS > SVAO, AVSO > SVAO, and AVOS > SVAO). This enables examining the impact of hearing loss severity on simple sentence processing, as well as its impact on complex compared to simple sentence processing. Correlations between the level of hearing loss and the processing of object-before-subject word order (OVAS > SVAO) were found in the left anterior insula (BA13) and the left superior frontal gyrus (BA6; see Table 4, Fig. 3). Follow-up t-contrasts showed that these correlations were positive, thus showing increased activity for processing the more complex sentences in these regions with more severe hearing loss.

Neural activity associated with hearing loss. Increased neural activity for the processing of object-before-subject word order (OVAS > SVAO) with increasing hearing loss (PTA-high; Family-Wise Error corrected on the cluster level, threshold of p < 0.05). Inflated brain images were created with SPM12 (http://www.fil.ion.ucl.ac.uk/spm); the colors reflect the F values from the F-contrasts.

All other comparisons yielded no significant effects of hearing loss. This included the comparisons of all sentences together compared to the baseline and of each of the complex sentences compared to the baseline (i.e. OVAS > baseline, AVSO > baseline, and AVOS > baseline). Interestingly, this means that no effects of hearing loss were found in general sentence processing, simple sentence processing, or in complex sentence processing compared to silence. Rather, the effects only emerge when examining the difference between complex sentence processing (of object-before-subject sentences) and simple sentence processing. Importantly, the observed effects occur when hearing aid use, age, and cognitive measures are controlled for.

As an additional, exploratory, analysis, we examined whether similar patterns of association between hearing loss and complex sentence processing can be seen in other sentence types. We therefore extracted the parameter estimates (Beta values) for each participant at the two peaks from Table 4 for the contrasts of SVAO > baseline, AVSO > SVAO, and AVOS > SVAO. The significant correlations in the contrast of OVAS > SVAO were also included. The relation between the Beta values in these contrasts and hearing loss are presented in the Supplementary Information online. Supplementary Fig. S2 shows that the positive relation between neural activity and hearing loss as found in the OVAS > SVAO contrast does not appear to be present in the other contrasts.

Hearing aid experience

The relationship between years of hearing aid experience and sentence processing was investigated, for the 19 participants who were daily hearing aid users, in the same sentence contrasts as above. Correlations between years of hearing aid use and complex sentence processing were found for the adjunct-first, object-before-subject word order (AVOS > SVAO) in several areas outside of the core speech processing areas including the cerebellum, right precentral gyrus, and right central operculum (see Table 5, Fig. 4). Follow-up t-contrasts showed that these correlations were positive, thus showing increased activity with longer hearing aid use.

Neural activity associated with hearing aid experience. Increased neural activity for complex sentence processing (AVOS > SVAO) with longer hearing aid experience (i.e., years of hearing aid use) (Family-Wise Error corrected on the cluster level, threshold of p < 0.05). Inflated brain images and slice images were created with SPM12 (http://www.fil.ion.ucl.ac.uk/spm); the colors reflect the F values from the F-contrasts.

Scatter plots of the correlation between hearing aid experience and the parameter estimates (Beta values) for each participant at the five peaks from Table 5 are presented in Fig. S3 (top row) in the Supplementary Information. As can be seen in the scatter plots, two participants have considerably longer hearing aid experience (13 and 19 years) than the other participants and could potentially be outliers. To make sure that the observed correlations are not driven by these extreme cases, we additionally performed correlation analyses with Spearman's ρ, a rank-based measure that is less sensitive to extreme values. The results show that the correlations remain significant in the cerebellar vermal lobules I–V, the right precentral gyrus, the right central operculum, and the right posterior cingulate cortex (i.e. the first four peak regions from Table 5). The correlation coefficients at these peaks were 0.56, 0.50, 0.91, and 0.82, respectively (with respective p values of 0.01, 0.03, < 0.001, and < 0.001). At the last peak region from Table 5, the right middle occipital gyrus, the rank-based correlation (coefficient 0.41) did not reach significance (p = 0.08).
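The reasoning behind this robustness check can be sketched in a few lines. Spearman's ρ is the Pearson correlation computed on ranks, so a single extreme value contributes only its rank rather than its magnitude. The sketch below is a minimal pure-Python illustration; the function names and the toy numbers are hypothetical and are not the study's data, and no p values are computed.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Made-up toy values (NOT study data): five unremarkable cases plus one
# participant with much longer experience and a much larger estimate.
years = [1, 2, 3, 4, 5, 19]
betas = [0.5, 0.1, 0.4, 0.2, 0.3, 5.0]

print("Pearson r:   ", round(pearson_r(years, betas), 3))
print("Spearman rho:", round(spearman_rho(years, betas), 3))
```

In this toy example the single extreme case produces a very high Pearson coefficient, whereas the rank-based coefficient stays low. A correlation that remains significant under Spearman's ρ, as for the first four peaks above, is therefore unlikely to be carried by one or two extreme participants.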

All other relations between different sentence contrasts and hearing aid experience rendered no significant effects. This includes the contrast of the adjunct-first, object-before-subject word order (AVOS) compared to the baseline. As the AVOS > baseline contrast rendered no significant effects of hearing aid experience but the AVOS > SVAO contrast did, this suggests that effects of hearing aid experience can be found only when examining the specific processing differences between simple and complex sentences; that is, general sentence processing does not seem to change with hearing aid experience.

As an exploratory analysis, we additionally examined whether similar, although less salient, patterns of association between hearing aid experience and sentence processing can be seen in other comparisons. To this end, we extracted the parameter estimates (Beta values) for each participant in each contrast at the five peaks presented in Table 5 and present these graphically in relation to their years of hearing aid experience in the Supplementary Information online. As can be seen in Supplementary Fig. S3, there are clear positive relations between neural activity and years of hearing aid experience in the AVOS > SVAO contrast, which is the contrast that showed significant results in Table 5 and Fig. 4, in all five regions. In addition, the other two contrasts reflecting complex sentence processing, OVAS > SVAO and AVSO > SVAO, also show positive trends in all five regions. Thus, similar neural activation patterns emerge for the other complex sentences, although these did not reach significance in the whole-brain analyses. In contrast, canonical sentence processing (i.e. the SVAO > baseline contrast) shows no evidence of a positive relation between neural activity and years of hearing aid experience.

Taken together, these results show effects of hearing loss severity and of hearing aid experience on the neural processing of auditory complex sentences, with hearing loss severity modulating activity mostly in superior frontal areas and the insula and hearing aid experience modulating activity in various regions outside of the core speech processing areas.

Discussion

We investigated the effects of hearing loss and hearing aid use on higher-level language processes. We did not find any behavioral effects of hearing loss severity or hearing aid experience on the comprehension of simple or complex sentences. In addition, the dual-task paradigm did not influence behavioral or neural sentence processing. At a neural level, however, both hearing loss severity and hearing aid experience correlated with functional activity during sentence processing. More specifically, hearing loss severity was associated with increased activity in left superior frontal areas and the left insula, but only when processing complex object-before-subject sentences. Longer hearing aid experience in turn was associated with recruitment of several areas outside of the core speech processing network, including the cerebellum, right precentral gyrus, and right cingulate cortex, when processing the most complex sentences used in the experiment, adjunct-first object-before-subject sentences.

As stated above, the dual-task paradigm did not influence behavioral or neural sentence processing. Importantly, the secondary task was a perceptual task of fixation cross change detection, which was specifically designed to interact at a domain-unspecific (i.e. non-auditory, non-linguistic) level. Our findings for the dual-task paradigm are not directly in line with previous studies, which report frontal recruitment as reflecting the involvement of domain-general executive functions to compensate for hearing loss14,15,43. Notably, previous studies typically used stimuli that interfere or interact directly with the primary task (e.g., auditory noise—as in degraded speech—or a dual speaker). Thus, the frontal activations frequently observed with domain-specific stimuli may reflect domain-specific rather than general cognitive control. This should be taken into account in future research.

Effects of age-related hearing loss

We expected increased hearing loss to be associated with decreased activity in cortical regions related to the speech processing network, and increased activity in frontal regions outside of this network (Hypothesis 1). In line with the latter expectation, the neuroimaging results showed that individuals with higher levels of age-related hearing loss exhibited increased activity in the left superior frontal gyrus when processing complex object-before-subject sentences. These results provide evidence of functional changes in frontal regions related to hearing loss, in line with previous research13,14. These findings support the idea that frontal region recruitment is a cognitive compensatory process in hearing loss44,45 and that neural-functional changes in hard-of-hearing older adults go beyond the auditory cortex13,46.

In addition, effects of hearing loss were found in the left anterior insula. Although not part of the core language processing network10, the left anterior insula has previously been associated with speech processing47,48. It has been suggested that the insula may function as a hub connecting and mediating higher-order cognitive functions in speech and language processing47, and as such could play a compensatory role similar to that of the frontal regions. Furthermore, increased activity in the insula has been shown to reflect effortful listening14,49. Interestingly, as similar correlations between hearing loss and activity in the insula were absent in the other sentence contrasts in our study, these effects might only become apparent through investigation of specific—grammatically more complex—sentence structures.

Contrary to our expectations, we found no decrease in activity in cortical regions of the speech processing network in relation to hearing loss. Effects in temporal regions are frequently found to be associated with hearing loss, with decreased activity in these areas associated with poorer hearing11,13. A possible explanation for the missing decrease in activity may be that in the previously mentioned studies, sound intensity levels of the stimuli were constant across participants. In contrast, we individually determined sound intensity levels for each participant, which led to increased sound intensity levels with increasing hearing loss. Although sound levels are known to drive auditory activation (see Schreiner and Malone50 for an overview), a recent study shows that it is actually individuals' perceived loudness that drives this51. Specifically, a study in young normal-hearing participants demonstrated that the BOLD signal in several auditory cortex regions seems to be a linear reflection of perceived loudness but not sound intensity level51. The absence of effects in cortical regions of the speech processing network in our study therefore potentially suggests that the effects found in earlier studies could be explained by perceptual difficulties rather than linguistic processing. However, this is mere speculation and the question remains open for further investigation. Complicating this issue, hearing loss has been associated with reduced gray matter volume in the primary auditory cortex11,52, among other brain volume changes53,54 (although some studies found no effects, see55).

It should also be noted that in our study, hearing aid users and non-users were combined when investigating the effects of hearing loss, although the use of a hearing aid in daily life was corrected for in the analyses. While this is not uncommon in other research9,56, it presents a confound that might explain the absence of results in regions related to the speech network. In addition, larger sample sizes should be investigated in future work to increase statistical power. Overall, our findings reconfirm the compensatory role of the frontal lobe in speech processing for individuals with hearing loss. However, the precise nature of functional changes in the engagement of other regions, specifically the insula and temporal regions, remains to be investigated further, especially in relation to the processing of higher-level language.

Finally, our predictions included the expectation that hearing loss would lead to less successful comprehension of complex sentences based on previous research7,56,57. Yet, in our experiment, although sentence comprehension as such was relatively low for more complex sentence conditions, this did not correlate with severity of hearing loss. Potential explanations for this are similar to those discussed in relation to the neural findings: individual adjustments of sound intensity, limited sample size, or the true absence of an effect. Overall, the finding of modulated neural activity in relation to hearing loss severity in combination with the absence of comprehension difficulties suggests that compensatory mechanisms are being applied successfully, limiting the consequences of hearing loss for communication15,16.

Effects of hearing aid experience

Regarding the effects of hearing aid experience, we expected increased activity in cortical regions related to the speech processing network, and decreased activity in frontal regions outside of this network with longer hearing aid experience (Hypothesis 2). The neuroimaging results showed that individuals with longer hearing aid experience exhibited increased activity in several cortical regions outside of the temporal cortex for complex sentence processing. Thus, effects outside of the speech processing network were observed, but the direction of the effects differed from our hypothesis. Specifically, increased activity in relation to longer hearing aid experience was found in the cerebellum, right precentral gyrus, right central operculum, right posterior cingulate cortex, and right middle occipital gyrus. Whereas some of these regions are related to the speech processing network, specifically the central operculum in the inferior frontal gyrus10,58, the majority of these regions are not typically associated with speech processing, although connections to speech processing regions exist, for example by means of the fronto-occipital fasciculus10. The cerebellum has previously been associated with regulating language59, auditory processing60,61, and more specifically the processing of auditory complex sentences34,62. Similarly, occipital areas, while typically associated with visual processing, have previously been associated with auditory processing12,28,34,63,64, although most of these studies12,34,63,64 found peak activities located in the inferior rather than the middle occipital gyrus. Notably, the correlation of hearing aid experience with activity in the right middle occipital gyrus did not survive outlier correction, and should therefore be interpreted with caution. The right posterior cingulate cortex is part of the limbic system and has also previously been associated with language processing14,65,66.

Finally, the involvement of frontal regions was predicted based on previous work13,14,44,45 and by findings of Habicht et al.28. Precentral activity, as found in our study, is traditionally associated with motor activity, but has commonly been found to play a role in language processing as well10. However, Habicht et al.28 found decreased rather than increased activity in right frontal areas and in left and right cerebellar areas for hearing aided compared to unaided listeners. The same study found increased activity in several brain regions with longer hearing aid experience, but these regions—lingual gyri and the left precuneus—show little overlap with the regions identified in the current experiment. Thus, we could not replicate the findings of Habicht et al.28. However, similarly to the findings of Habicht et al.28 we found increased activity in several brain regions correlating with longer hearing aid experience. As increased activity is generally seen as a sign of processing effort, at first glance these findings seem surprising. One possible explanation could be that as hearing aid users were tested in the MRI scanner without their hearing aids, long-term users were affected by the absence of their hearing aid most, leading to more effortful processing of complex sentences. As this is just one possible explanation, it is clear that more research on (neural) speech processing in hearing aid users is needed. In light of this, the current study can be seen as providing a new list of potential brain regions to further investigate in relation to hearing aid use and experience.

Interestingly, the effect of hearing aid experience in our experiment was only observed in the most complex sentence condition. Similar but less salient patterns (i.e. not significant in whole-brain analyses) were observed for the other complex sentences, but not for the canonical sentences. This has important consequences for future research on the influence of hearing aid experience, which often does not include complex sentences (but see28). This finding is likely related to the more taxing nature of more complex sentences18,31,32, which are therefore more likely to invoke compensatory mechanisms. Overall, this research gives one of the first indications of changes in cortical recruitment associated with longer hearing aid experience, but explicitly calls for further research to confirm its findings. These neural adaptations illustrate that the brain adapts to the use of hearing aids and that this influences higher-level language processing (i.e. the processing of complex sentences).

Lastly, we expected improved comprehension of complex sentences, following previous findings of improvements in speech identification after hearing aid fitting (e.g.,23). No behavioral effects were found in the current study, although we do not interpret this as evidence of an absence of effects of hearing aid experience on sentence processing, especially as effects were found at a neural level. In our view, these results merely signal that evaluating processing at a neural level is a more sensitive measure than comprehension at a behavioral level; the latter might be indistinguishable in different individuals even though compensatory cortical neuroplasticity is involved. Neural measures, in contrast, can pick up changes in processing that are not necessarily reflected in performance.

Conclusion

In the current study, we investigated the influence of hearing loss and hearing aid experience on sentence processing. To this end, we ran an fMRI study in which participants processed simple and complex auditory sentences. At the behavioral level, we found no influence of hearing loss severity or hearing aid experience on sentence comprehension. In neural processing, however, hearing loss severity was associated with increased activity in left superior frontal and left anterior insular areas, but only when processing complex object-before-subject sentences. Longer hearing aid experience was associated with the recruitment of several areas outside of the core speech processing network, including the cerebellum, right precentral gyrus, and right cingulate cortex, when processing the most complex sentences used in the experiment. We interpret these findings as illustrating that the brain adapts to the use of hearing aids and that this influences language processing. It is therefore important to investigate the adaptive capacity of the brain with regard to hearing aid use and language processing.

Data availability

The datasets analyzed during the current study are available from the corresponding author on reasonable request.

References

Chien, W. & Lin, F. R. Prevalence of hearing aid use among older adults in the United States. Arch. Intern. Med. 172(3), 292–293. https://doi.org/10.1001/archinternmed.2011.1408 (2012).

Feder, K., Michaud, D., Ramage-Morin, P., McNamee, J. & Beauregard, Y. Prevalence of hearing loss among Canadians aged 20 to 79: Audiometric results from the 2012/2013 Canadian health measures survey. Health Rep. 26(7), 18–25 (2015).

Armstrong, N. M. et al. Temporal sequence of hearing impairment and cognition in the Baltimore Longitudinal Study of Aging. J. Gerontol. Ser. A Biol. Sci. Med. Sci. 75(3), 574–580. https://doi.org/10.1093/gerona/gly268 (2020).

Lin, F. R. et al. Hearing loss and cognition in the Baltimore Longitudinal Study of Aging. Neuropsychology 25(6), 763–770. https://doi.org/10.1037/a0024238 (2011).

Lin, F. R. et al. Hearing loss and cognitive decline in older adults. JAMA Internal Med. 173(4), 293. https://doi.org/10.1001/jamainternmed.2013.1868 (2013).

Lin, F. R. et al. Hearing loss and incident dementia. Arch. Neurol. 68(2), 214–220. https://doi.org/10.1001/archneurol.2010.362 (2011).

Wingfield, A., McCoy, S. L., Peelle, J. E., Tun, P. A. & Cox, C. L. Effects of adult aging and hearing loss on comprehension of rapid speech varying in syntactic complexity. J. Am. Acad. Audiol. 17(7), 487–497. https://doi.org/10.3766/jaaa.17.7.4 (2006).

Tun, P. A., Benichov, J. & Wingfield, A. Response latencies in auditory sentence comprehension: Effects of linguistic versus perceptual challenge. Psychol. Aging 25(3), 730–735. https://doi.org/10.1037/a0019300 (2010).

Wendt, D., Kollmeier, B. & Brand, T. How hearing impairment affects sentence comprehension: Using eye fixations to investigate the duration of speech processing. Trends Hear. 19, 1–18. https://doi.org/10.1177/2331216515584149 (2015).

Friederici, A. D. Language In Our Brain: The Origins Of A Uniquely Human Capacity (The MIT Press, 2017).

Peelle, J. E., Troiani, V., Grossman, M. & Wingfield, A. Hearing loss in older adults affects neural systems supporting speech comprehension. J. Neurosci. 31(35), 12638–12643. https://doi.org/10.1523/JNEUROSCI.2559-11.2011 (2011).

Vogelzang, M., Thiel, C. M., Rosemann, S., Rieger, J. W. & Ruigendijk, E. When hearing does not mean understanding: On the neural processing of syntactically complex sentences by listeners with hearing loss. J. Speech Lang. Hear. Res. 64(1), 250–262. https://doi.org/10.1044/2020_JSLHR-20-00262 (2021).

Campbell, J. & Sharma, A. Compensatory changes in cortical resource allocation in adults with hearing loss. Front. Syst. Neurosci. 7(OCT), 71. https://doi.org/10.3389/fnsys.2013.00071 (2013).

Erb, J. & Obleser, J. Upregulation of cognitive control networks in older adults’ speech comprehension. Front. Syst. Neurosci. 7(DEC), 116. https://doi.org/10.3389/fnsys.2013.00116 (2013).

Peelle, J. E. & Wingfield, A. The neural consequences of age-related hearing loss. Trends Neurosci. 39(7), 486–497. https://doi.org/10.1016/j.tins.2016.05.001 (2016).

Reuter-Lorenz, P. A. & Cappell, K. A. Neurocognitive aging and the compensation hypothesis. Curr. Dir. Psychol. Sci. 17(3), 177–182. https://doi.org/10.1111/j.1467-8721.2008.00570.x (2008).

Rönnberg, J. et al. The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Front. Syst. Neurosci. 7, 31. https://doi.org/10.3389/FNSYS.2013.00031 (2013).

Carroll, R. & Ruigendijk, E. The effects of syntactic complexity on processing sentences in noise. J. Psycholinguist. Res. 42(2), 139–159. https://doi.org/10.1007/s10936-012-9213-7 (2013).

Mattys, S. L., Brooks, J. & Cooke, M. Recognizing speech under a processing load: Dissociating energetic from informational factors. Cogn. Psychol. 59(3), 203–243. https://doi.org/10.1016/J.COGPSYCH.2009.04.001 (2009).

Holube, I., Von Gablenz, P., Kowalk, U. & Bitzer, J. Listening effort and hearing-aid benefit of older adults in everyday life in SPPL2020: 2nd Workshop On Speech Perception and Production Across The Lifespan (ed. Taschenberger, L.) 66–67. https://doi.org/10.5281/zenodo.3732382 (2020).

Habicht, J., Kollmeier, B. & Neher, T. Are experienced hearing aid users faster at grasping the meaning of a sentence than inexperienced users? An eye-tracking study. Trends Hear. 20, 1–13. https://doi.org/10.1177/2331216516660966 (2016).

Habicht, J., Finke, M. & Neher, T. Auditory acclimatization to bilateral hearing aids. Ear Hear. 39(1), 161–171. https://doi.org/10.1097/AUD.0000000000000476 (2018).

Gatehouse, S. The time course and magnitude of perceptual acclimatization to frequency responses: Evidence from monaural fitting of hearing aids. J. Acoust. Soc. Am. 92(3), 1258–1268. https://doi.org/10.1121/1.403921 (1992).

Munro, K. J. & Lutman, M. E. The effect of speech presentation level on measurement of auditory acclimatization to amplified speech. J. Acoust. Soc. Am. 114(1), 484–495. https://doi.org/10.1121/1.1577556 (2003).

Glick, H. A. & Sharma, A. Cortical neuroplasticity and cognitive function in early-stage, mild-moderate hearing loss: Evidence of neurocognitive benefit from hearing aid use. Front. Neurosci. 14, 93. https://doi.org/10.3389/fnins.2020.00093 (2020).

Dawes, P., Munro, K. J., Kalluri, S. & Edwards, B. Auditory acclimatization and hearing aids: Late auditory evoked potentials and speech recognition following unilateral and bilateral amplification. J. Acoust. Soc. Am. 135(6), 3560–3569. https://doi.org/10.1121/1.4874629 (2014).

Hwang, J. H., Wu, C. W., Chen, J. H. & Liu, T. C. Changes in activation of the auditory cortex following long-term amplification: An fMRI study. Acta Otolaryngol. 126(12), 1275–1280. https://doi.org/10.1080/00016480600794503 (2006).

Habicht, J., Behler, O., Kollmeier, B. & Neher, T. Exploring differences in speech processing among older hearing-impaired listeners with or without hearing aid experience: Eye-tracking and fMRI measurements. Front. Neurosci. 13, 1–14. https://doi.org/10.3389/fnins.2019.00420 (2019).

Cinque, G. Types of A’-Dependencies (MIT Press, 1990).

Rizzi, L. The fine structure of the left periphery. In Elements of Grammar: A Handbook of Generative Syntax (ed. Haegeman, L.) 281–337 (Kluwer, 1997). https://doi.org/10.1007/978-94-011-5420-8_7.

Bader, M. & Bayer, J. Case And Linking In Language Comprehension: Evidence From German (Springer, 2006).

Wendt, D., Brand, T. & Kollmeier, B. An eye-tracking paradigm for analyzing the processing time of sentences with different linguistic complexities. PLoS One 9(6), e100186. https://doi.org/10.1371/journal.pone.0100186 (2014).

Nasreddine, Z. S. et al. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 53(4), 695–699. https://doi.org/10.1111/j.1532-5415.2005.53221.x (2005).

Vogelzang, M., Thiel, C. M., Rosemann, S., Rieger, J. W. & Ruigendijk, E. Neural mechanisms underlying the processing of complex sentences: An fMRI study. Neurobiol. Lang. 1(2), 226–248. https://doi.org/10.1162/nol_a_00011 (2020).

Uslar, V. N. et al. Development and evaluation of a linguistically and audiologically controlled sentence intelligibility test. J. Acoust. Soc. Am. 134(4), 3039–3056. https://doi.org/10.1121/1.4818760 (2013).

Reynolds, C. R. Comprehensive Trail-Making Test (Pro-Ed, 2002).

Wagener, K. C., Kühnel, V. & Kollmeier, B. Entwicklung und Evaluation eines Satztests für die deutsche Sprache I: Design des Oldenburger Satztests [Development and evaluation of a German sentence test I: Design of the Oldenburg sentence test]. Z. Audiol. 38, 4–15 (1999).

Wagener, K. C., Brand, T. & Kollmeier, B. Entwicklung und Evaluation eines Satztests für die deutsche Sprache II: Optimierung des oldenburger satztests [Development and evaluation of a german sentence test part II: Optimization of the Oldenburg sentence test]. Z. Audiol. 38, 44–56 (1999).

Wagener, K. C., Brand, T. & Kollmeier, B. Entwicklung und Evaluation eines Satztests für die deutsche Sprache III: Evaluation des Oldenburger Satztests [Development and evaluation of a German sentence test Part III: Evaluation of the Oldenburg sentence test]. Z. Audiol. 38, 86–95 (1999).

Schmidt, K.-H. & Metzler, P. Wortschatztest [MultipleChoice Word Test] (Beltz Test GmbH, 1992).

R Core Team. R: A Language and Environment for Statistical Computing. https://www.R-project.org/ (R Foundation for Statistical Computing, Vienna, Austria, 2019).

Esteban, O. et al. MRIQC: Advancing the automatic prediction of image quality in MRI from unseen sites. PLoS One 12(9), e0184661. https://doi.org/10.1371/journal.pone.0184661 (2017).

Peelle, J. E. Listening effort: How the cognitive consequences of acoustic challenge are reflected in brain and behavior. Ear Hear. 39, 204–214. https://doi.org/10.1097/AUD.0000000000000494 (2018).

Glick, H. & Sharma, A. Cross-modal plasticity in developmental and age-related hearing loss: Clinical implications. Hear. Res. 343, 191–201. https://doi.org/10.1016/j.heares.2016.08.012 (2017).

Rosemann, S. & Thiel, C. M. Audio-visual speech processing in age-related hearing loss: Stronger integration and increased frontal lobe recruitment. Neuroimage 175, 425–437. https://doi.org/10.1016/J.NEUROIMAGE.2018.04.023 (2018).

Husain, F. T., Carpenter-Thompson, J. R. & Schmidt, S. A. The effect of mild-to-moderate hearing loss on auditory and emotion processing networks. Front. Syst. Neurosci. 8, 10. https://doi.org/10.3389/fnsys.2014.00010 (2014).

Oh, A., Duerden, E. G. & Pang, E. W. The role of the insula in speech and language processing. Brain Lang. 135, 96–103. https://doi.org/10.1016/j.bandl.2014.06.003 (2014).

Adank, P. The neural bases of difficult speech comprehension and speech production: Two Activation Likelihood Estimation (ALE) meta-analyses. Brain Lang. 122(1), 42–54. https://doi.org/10.1016/j.bandl.2012.04.014 (2012).

Erb, J. et al. The brain dynamics of rapid perceptual adaptation to adverse listening conditions. J. Neurosci. 33(26), 10688–10697. https://doi.org/10.1523/JNEUROSCI.4596-12.2013 (2013).

Schreiner, C. E. & Malone, B. J. Representation of loudness in the auditory cortex. In The Human Auditory System (eds Aminoff, M. J. et al.) 73–84 (Elsevier, 2015). https://doi.org/10.1016/B978-0-444-62630-1.00004-4.

Behler, O. & Uppenkamp, S. The representation of level and loudness in the central auditory system for unilateral stimulation. Neuroimage 139, 176–188. https://doi.org/10.1016/j.neuroimage.2016.06.025 (2016).

Husain, F. T. et al. Neuroanatomical changes due to hearing loss and chronic tinnitus: A combined VBM and DTI study. Brain Res. 1369, 74–88. https://doi.org/10.1016/j.brainres.2010.10.095 (2011).

Alfandari, D. et al. Brain volume differences associated with hearing impairment in adults. Trends Hear. https://doi.org/10.1177/2331216518763689 (2018).

Mudar, R. A. & Husain, F. T. Neural alterations in acquired age-related hearing loss. Front. Psychol. 7, 828. https://doi.org/10.3389/fpsyg.2016.00828 (2016).

Rosemann, S. & Thiel, C. M. Neuroanatomical changes associated with age-related hearing loss and listening effort. Brain Struct. Funct. 225(9), 2689–2700. https://doi.org/10.1007/s00429-020-02148-w (2020).

Carroll, R., Uslar, V., Brand, T. & Ruigendijk, E. Processing mechanisms in hearing-impaired listeners: Evidence from reaction times and sentence interpretation. Ear Hear. 37(6), e391–e401. https://doi.org/10.1097/AUD.0000000000000339 (2016).

McCoy, S. L. et al. Hearing loss and perceptual effort: Downstream effects on older adults’ memory for speech. Q. J. Exp. Psychol. Sect. A 58(1), 22–33. https://doi.org/10.1080/02724980443000151 (2005).

Friederici, A. D., Bahlmann, J., Heim, S., Schubotz, R. I. & Anwander, A. The brain differentiates human and non-human grammars: Functional localization and structural connectivity. Proc. Natl. Acad. Sci. USA 103(7), 2458–2463 (2006).

Starowicz-Filip, A. et al. The role of the cerebellum in the regulation of language functions. Psychiatr. Pol. 51(4), 661–671. https://doi.org/10.12740/PP/68547 (2017).

Baumann, O. et al. Consensus paper: The role of the cerebellum in perceptual processes. Cerebellum (Lond., Engl.) 14(2), 197–220. https://doi.org/10.1007/s12311-014-0627-7 (2015).

Schmahmann, J. D. Cerebellum in Alzheimer’s disease and frontotemporal dementia: Not a silent bystander. Brain 139, 1314–1318 (2016).

Fengler, A., Meyer, L. & Friederici, A. D. How the brain attunes to sentence processing: Relating behavior, structure, and function. Neuroimage 129, 268–278 (2016).

Shetreet, E. & Friedmann, N. The processing of different syntactic structures: fMRI investigation of the linguistic distinction between wh-movement and verb movement. J. Neurolinguist. 27(1), 1–17. https://doi.org/10.1016/j.jneuroling.2013.06.003 (2014).

Peelle, J. E., McMillan, C., Moore, P., Grossman, M. & Wingfield, A. Dissociable patterns of brain activity during comprehension of rapid and syntactically complex speech: Evidence from fMRI. Brain Lang. 91(3), 315–325. https://doi.org/10.1016/j.bandl.2004.05.007 (2004).

Xiao, Y., Friederici, A. D., Margulies, D. & Brauer, J. Longitudinal changes in resting-state fMRI from age 5 to age 6 years covary with language development. Neuroimage 128, 116–124 (2016).

Yeatman, J. D., Ben-Shachar, M., Glover, G. H. & Feldman, H. M. Individual differences in auditory sentence comprehension in children: An exploratory event-related functional magnetic resonance imaging investigation. Brain Lang. 114(2), 72–79. https://doi.org/10.1016/j.bandl.2009.11.006 (2010).

Acknowledgements

This work was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany's Excellence Strategy – EXC 2177/1—Project ID 390895286. This work was supported by the Neuroimaging Unit of the Carl von Ossietzky Universität Oldenburg funded by grants from the German Research Foundation (3T MRI INST 184/152-1 FUGG and MEG INST 184/148-1 FUGG). We would like to thank all the participants for their participation. We would additionally like to thank Jan Michalsky for his help with recording the stimuli, Rebecca Carroll for her help with recording and preparing the stimuli, our students and research assistants (Regina Hert, Laura Peters, Anne Lina Voß, and Charlotte Sielaff) for their help with the data collection, and Gülsen Yanç and Katharina Grote for their support during MRI data acquisition. We would like to thank Tina Schmitt for her help with the SNR analysis. We thank an anonymous reviewer for suggesting the analyses of each of the complex sentences compared to the baseline, which were not planned and were thus carried out post hoc.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

M.V., E.R., J.W.R. and C.M.T. designed the study. M.V. and S.R. conducted data acquisition and analysis. All authors interpreted the results and contributed to the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vogelzang, M., Thiel, C.M., Rosemann, S. et al. Effects of age-related hearing loss and hearing aid experience on sentence processing. Sci Rep 11, 5994 (2021). https://doi.org/10.1038/s41598-021-85349-5

DOI: https://doi.org/10.1038/s41598-021-85349-5
