Abstract

We combine after-action review and needs-assessment frameworks to describe the four most pervasive contemporary methodological challenges faced by international business (IB) researchers, as identified by authors of Journal of International Business Studies articles: Psychometrically deficient measures (mentioned in 73% of articles), idiosyncratic samples or contexts (mentioned in 62.2% of articles), less-than-ideal research designs (mentioned in 62.2% of articles), and insufficient evidence about causal relations (mentioned in 8.1% of articles). Then, we offer solutions to address these challenges: demonstrating why and how the conceptualization of a construct is accurate given a particular context, specifying whether constructs are reflective or formative, taking advantage of the existence of multiple indicators to measure multi-dimensional constructs, using particular samples and contexts as vehicles for theorizing and further theory development, seeking out particular samples or contexts where hypotheses are more or less likely to be supported empirically, using Big Data techniques to take advantage of untapped sources of information and to re-analyze currently available data, implementing quasi-experiments, and conducting necessary-condition analysis. Our article aims to advance IB theory by tackling the most typical methodological challenges and is intended for researchers, reviewers and editors, research consumers, and instructors who are training the next generation of scholars.

INTRODUCTION

Several recently published reviews and reflections have highlighted the increasing diversity of international business (IB) research in terms of its disciplinary bases, theoretical and conceptual underpinnings, topics, and methodologies (e.g., Aguinis, Cascio & Ramani, 2017; Cantwell & Brannen, 2016; Griffith, Cavusgil & Xu, 2008; Liesch, Hakanson, McGaughey, Middleton & Cretchley, 2011; Shenkar, 2004; Verbeke & Calma, 2017). Also, a recently published edited volume describes best practices in IB research methods (Eden, Nielsen & Verbeke, 2020). Our article complements and goes beyond these efforts by identifying contemporary methodological challenges faced by IB researchers, as described by Journal of International Business Studies (JIBS) authors themselves, and offering solutions to each of these challenges. Therefore, our article makes the following contributions. First, our proposed solutions are mostly based on innovations outside of IB (i.e., organizational behavior, human resource management, strategy, psychology, and entrepreneurship). Also, they are readily available and can be implemented without substantial effort or cost. Second, our proposed solutions extend current knowledge by identifying new insights and opportunities. As one example, in their chapters in Eden et al.’s (2020) edited volume, Doty & Astakhova (2020) and van Witteloostuijn, Eden & Chang (2020) referred to challenges posed by the use of psychometrically deficient constructs, and suggested traditional solutions such as the use of multisource data and observable constructs. We extend these suggestions by identifying additional solutions (i.e., using reflective versus formative indicators; using Big Data) that offer new avenues for IB researchers facing this challenge. Third, we provide examples of research that has implemented our proposed solutions to illustrate that our recommendations are realistic and not just wishful thinking. 
Overall, our goal is to help advance IB theory by addressing the most typical methodological challenges. Our article is intended for researchers with the typical methodological training offered by IB doctoral programs, journal reviewers and editors, research consumers, and instructors who are training the next generation of scholars.

Our approach is based on combining two inter-related frameworks: an after-action review (AAR) and a needs assessment. Popularized by the military, an AAR is a “continuous learning process reflecting the desire to sustain performance or the need to change behavior in order to effect more favorable outcomes” (Salter & Klein, 2007: 5). Importantly, AARs do not seek to assign blame. Instead, they adopt an objective, non-punitive stance that allows for a reflective examination of past performance, with the goal of improving future outcomes (Morrison & Meliza, 1999). We complement our AAR approach with a needs-assessment framework drawn from the training-and-development literature (Aguinis & Kraiger, 2009; Noe, 2017). In this approach, information about challenges forms the basis of future training and development efforts aimed at addressing gaps between current and desired levels of knowledge, skills, and performance (Cascio & Aguinis, 2019; Noe, 2017). Based on these two approaches, we conducted a review and content analysis to uncover the most pervasive contemporary methodological challenges, as identified by the authors of all empirical articles published in JIBS from January through December 2018. In addition, to make our article most useful and relevant for IB readers, we illustrate the methodological challenges by referring to variables used in IB research specifically. We then present solutions that can help IB researchers address these challenges.

We pause here to clarify two important issues. First, because we examined published JIBS articles, the methodological challenges we identify represent only those that survived the review process. In other words, each of the articles probably had other methodological challenges, but the authors addressed them as their manuscripts improved during the review process based on feedback provided by the reviewers and the editor. We discuss this issue in more detail in the section titled “Limitations and Suggestions for Future Research.” Second, our focus on recent JIBS articles is not intended to target this journal, or more broadly, the field of IB. For example, authors of articles published in Academy of Management Journal (AMJ), Strategic Management Journal (SMJ), Journal of Management (JOM), and Journal of Applied Psychology (JAP) have identified some of the challenges also referred to by JIBS authors.1 So, we focus on JIBS as a case study. Also, in implementing our combined AAR-needs-assessment approach, our intention is not to cast blame. Rather, our hope is that, over time, the potential solutions we outline will be used not only in JIBS but also in other IB journals, such as the Journal of World Business, Global Strategy Journal, Journal of International Management, and Management and Organization Review, among others.

Established theories of human performance provide an explanation for and help us understand the existence of the methodological challenges we identified. Specifically, these likely exist due to a combination of three factors: (a) researcher motivation; (b) researcher and reviewer knowledge, skills, and abilities (KSAs); and (c) context (Aguinis, 2019; Van Iddekinge, Aguinis, Mackey & DeOrtentiis, 2018). First, regarding motivation, researchers receive highly valued incentives for publishing in top journals, regardless of an article’s methodological limitations (Aguinis, Cummings, Ramani & Cummings, 2020b; Rasheed & Priem, 2020). Second, regarding KSAs, given the fast pace of methodological developments, many reviewers are unable to update their methodological repertoire and may not be as familiar with the latest methodological innovations (Aguinis, Hill & Bailey, 2020). Similarly, researcher KSAs are affected by financial constraints and the decreased number of opportunities for doctoral students and more seasoned researchers alike to receive university-sponsored state-of-the-science methodological training (Aguinis, Ramani & Alabduljader, 2018). Third, regarding context, there are established and consensually accepted methodological practices that are passed on from generation to generation of researchers – and these practices are difficult to change, even if novel and better approaches become available (Aguinis, Gottfredson & Joo, 2013; Nielsen, Eden & Verbeke, 2020). A human-performance theoretical framework that includes motivation, KSAs, and context allows us to understand why these methodological challenges exist and persist and also to offer suggestions for how to move forward in the future.

CONTEMPORARY METHODOLOGICAL CHALLENGES

We reviewed all 43 articles published in JIBS from January through December 2018, excluding editorials, reviews, and conceptual articles (the total number of all types of articles was 66). Following the procedure implemented by Brutus, Aguinis & Wassmer (2013) in their review of the management literature, we identified self-reported methodological challenges by examining the Limitations, Future Directions, Robustness Checks, and similar sections in each article’s Discussion section. We used dummy coding (1 = yes, 0 = no) to count how many articles mentioned each particular challenge.2
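The dummy-coding tally described above can be illustrated with a minimal sketch. The challenge labels and the four coded articles below are hypothetical toy values chosen only to show the mechanics; they are not the coding scheme or the data used in the review.

```python
# Hypothetical challenge labels (illustrative names, not the authors' scheme).
CHALLENGES = ["measures", "sample_context", "design", "causality"]

# Toy dummy-coded data: one dict per article,
# 1 = challenge mentioned, 0 = challenge not mentioned.
coded_articles = [
    {"measures": 1, "sample_context": 1, "design": 0, "causality": 0},
    {"measures": 1, "sample_context": 0, "design": 1, "causality": 0},
    {"measures": 0, "sample_context": 1, "design": 1, "causality": 1},
    {"measures": 1, "sample_context": 1, "design": 1, "causality": 0},
]


def tally(articles, challenges):
    """Return {challenge: (count, percent)} from dummy-coded articles."""
    n = len(articles)
    result = {}
    for challenge in challenges:
        # Summing the 0/1 codes gives the number of articles mentioning it.
        count = sum(article[challenge] for article in articles)
        result[challenge] = (count, round(100 * count / n, 1))
    return result


summary = tally(coded_articles, CHALLENGES)
for name, (count, pct) in summary.items():
    print(f"{name}: {count} of {len(coded_articles)} articles ({pct}%)")
```

Because each code is 0 or 1, the column sum is the frequency and the column mean is the proportion, which is why dummy coding makes this kind of count straightforward.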

A legitimate question is whether JIBS authors are sufficiently transparent in acknowledging methodological challenges (Aguinis et al., 2018). It was reassuring to find that most JIBS articles (approximately 72%) explicitly acknowledged methodological challenges that potentially influenced substantive results and conclusions. We suspect that many of these challenges are included in published articles as a result of the review process and at the request of reviewers or editors.

Table 1 presents the four most frequently mentioned methodological challenges: (i) psychometrically deficient measures, (ii) idiosyncratic samples or contexts, (iii) less-than-ideal research designs, and (iv) insufficient evidence about causal relations. Because these challenges are so pervasive, this list is likely to be familiar to many IB researchers, journal editors, and reviewers, as well as consumers of research.

Because we do not wish to identify authors, Table 1 shows aggregate results. For the same reason, the description of each of the four most frequently mentioned challenges does not refer to any specific articles. Rather, we provide generalized statements to show how authors typically refer to each of the challenges.3

The most frequently mentioned methodological challenge (73% of the articles) was that the measures used were psychometrically deficient. Authors typically reported this challenge using one of two formats:

- “Our study used [X] to measure construct [Z]. A challenge of this approach is that [X] does not fully capture the construct [Z].”

- “Our study used [X] as a measure of construct [Z]. However, others have noted that data regarding [X] is subject to manipulation or error and therefore may not be sufficiently reliable as a way to measure [Z].”

The methodological challenge that was mentioned second most frequently referred to the use of idiosyncratic samples or contexts (62.2%). Authors referred to this challenge using the following general phrasing:

- “Our study examined the relationship between [X] and [Y] in the context of or using a sample drawn from [Z]. A challenge of this approach is that results obtained and conclusions drawn may be altered significantly when examined in a different context (e.g., country, time-period) or when using a different sample (e.g., a more diverse group of firms, publicly listed versus non-public firms).”

The methodological challenge that was mentioned third most frequently was the use of a less-than-ideal research design (62.2%). This challenge referred to variables included, excluded, or not measured, and the manner in which the data were collected. Authors typically reported this challenge using one of these three formats:

- “We did not include/exclude/measure/control for [X] due to limitations in the dataset used.”

- “We examined the relationship between [X] and [Y] at the level of [Z] (e.g., firm, industry, country), but these relationships might differ based on additional levels of analysis such as [W] (e.g., industry, country, geographic cluster).”

- “We captured data regarding the relationship between [X] and [Y] during a particular period, but these relationships might change if we had more longitudinal data.”

The fourth methodological challenge, which was not nearly as frequent as the previous three (mentioned in 8.1% of the articles), involved insufficient evidence about causal relations. Authors generally described this challenge as follows:

- “Because we used method [Z] (e.g., archival data, cross-sectional survey) to study the relationship between [X] and [Y], our results and conclusions should not be interpreted as implying a causal link between the constructs.”

Interestingly, there are differences in the results of our analysis based on articles published in JIBS compared to those by Brutus et al. (2013) based on articles published in AMJ, Administrative Science Quarterly (ASQ), JAP, and JOM. For example, compared to Brutus et al. (2013; Table 5), issues of psychometrically deficient measures, idiosyncratic samples or contexts, and less-than-ideal research designs are much more prevalent in IB research, while issues of insufficient evidence about causal relations are much less frequent. Specifically, we found that 73% of JIBS articles reported issues related to psychometrically deficient measures. In contrast, the average across the four journals examined by Brutus et al. (2013) was 25%. Similarly, idiosyncratic samples or contexts are reported in 62.2% of JIBS articles versus 32%, as reported by Brutus et al. (2013). The values for less-than-ideal research designs are 62.2% for JIBS versus an average of 6% across AMJ, ASQ, JAP, and JOM. However, JIBS articles are much less likely (8%) to note issues of insufficient evidence about causal relations compared to the four journals examined by Brutus et al. (34%).

Based on the aforementioned results and comparisons, while some broad dimensions of methodological limitations seem to apply across fields, IB researchers face a unique combination of methodological challenges, and those are in many ways distinct from the concerns expressed by authors of articles in management journals. These differences are likely due to the fact that, as noted by Eden et al. (2020: 11), “IB refers to a complex set of phenomena, which require attention to both similarities and differences between domestic and foreign operations at multiple levels of analysis.” Thus, methodological challenges in IB result, at least in part, from substantive questions about processes that are locally embedded, relationally enacted, and iteratively unfolding (Poulis & Poulis, 2018) – what Norder, Sullivan, Emich & Sawhney (2020) recently called “local-seeking global.”

PROPOSED SOLUTIONS

As a preview of the material that follows, Table 2 provides a summary of our proposed solutions for each of the methodological challenges we described in the previous section, together with sources that describe each of the solutions in more detail. Furthermore, we include both ex-ante solutions (e.g., regarding measures and research design) and ex-post solutions (e.g., using Big Data and necessary-condition analysis). We do so because while ex-ante solutions are ideal (Aguinis & Vandenberg, 2014), they may not always be feasible. In such cases, ex-post solutions can be a satisfactory alternative.

Solutions for Challenge #1: Psychometrically Deficient Measures

A measure is considered psychometrically deficient if it fails to represent the desired construct in a comprehensive manner (Aguinis, Henle & Ostroff, 2001). Using such measures provides an incomplete (i.e., deficient) understanding of the construct. Consequently, relations between the focal and other constructs are usually underestimated (i.e., observed effect-size estimates are smaller than their true values). For example, measures of patent counts and patent citations are common in IB research. In our review, we found that these measures have been used in JIBS articles as proxies for constructs as diverse as knowledge sourcing and innovation performance. Furthermore, the articles using these measures mentioned that an important methodological challenge was the inability of patent-based measures to represent the intended construct comprehensively. A similar challenge involving psychometrically deficient measures applies to data used to assess other key IB constructs such as research and development (R&D) investment and R&D intensity.

Recent research in IB has identified some potential solutions. These include specifying decision rules used to select variables (Aguinis et al., 2017); calls for IB researchers to work together to identify clear standards (Delios, 2020; Peterson & Muratova, 2020); and an illustration and recommendation regarding the specific construct of cultural distance (Beugelsdijk, Ambos & Nell, 2018). We offer three additional solutions to address the challenge of psychometrically deficient measures.

First, consider that an ounce of prevention is worth a pound of cure (cf. Aguinis & Vandenberg, 2014). In other words, the first solution is for researchers to evaluate whether the measure they are considering might be psychometrically deficient before they collect and analyze data. Future IB research can begin by examining the literature to determine if the measure has been employed previously to represent more than one construct. If so, it is necessary to provide one or more theoretical arguments to explain why it is appropriate to use the measure to assess the focal construct. It is also necessary to demonstrate why and how the conceptualization of the construct is accurate, given the context of the study (Ketchen, Ireland & Baker, 2013). An example of a study that adopted such a solution is Banerjee, Venaik & Brewer’s (2019) article on understanding corporate political activity (CPA) using the integration-responsiveness framework. The authors briefly reviewed past approaches to studying CPA, and then outlined how their conceptualization aligned with the focus of their study.

Second, most constructs in IB research are multidimensional in nature (e.g., organizational innovation). So, another solution for the psychometric-deficiency challenge is to follow the three-step process outlined by Edwards (2001). The first step is to specify whether the construct is reflective (i.e., measures are indicators of a superordinate construct) or formative (i.e., measures are aggregated to form the construct). For example, consider the construct of organizational innovation, which has been examined in several JIBS articles using measures such as product innovation, total number of patent applications, number of patents per employee, process innovation, and administrative innovation, among others. A reflective conceptualization implies that there exists an unobserved latent variable (i.e., organizational innovation), and that these measures are observable embodiments of this latent variable (Edwards, 2011). In contrast, a formative conceptualization implies that these measures are building blocks of an underlying latent variable (i.e., organizational innovation), which is defined by some combination of these measures (Edwards, 2011).

The second step is to identify different dimensions of the construct and the implications of conceptualizing it in this manner. Because each measure in a reflective conceptualization captures all relevant information about the construct, different measures may be omitted, as they provide interchangeable information (Edwards, 2001, 2011). Therefore, researchers may use just one measure to fully describe organizational innovation. However, in a formative conceptualization, each measure captures a different aspect of the construct, and omitting a measure detracts from the overall understanding of the construct (Edwards, 2001, 2011). Therefore, researchers should use multiple measures and combine the scores to arrive at a composite value of organizational innovation.

The third and final step involves using analytical techniques based on the conceptualization of the construct as reflective or formative (Edwards, 2001). For example, while reflective measures are expected to have high inter-correlations, formative measures do not need to demonstrate high internal consistency (Edwards, 2011). Researchers can take similar steps regarding issues such as measurement equivalence, measurement error, model identification, and model fit (Diamantopoulos & Papadopoulos, 2010; Edwards, 2001, 2011; Vandenberg & Lance, 2000). Besides organizational innovation, some examples of IB constructs that may be conceptualized as reflective or formative include international business pressures (Coltman, Devinney, Midgley & Venaik, 2008), export coordination and export performance (Diamantopoulos, 1999; Diamantopoulos & Siguaw, 2006), and exploration and exploitation (Nielsen & Gudergan, 2012). Overall, following these three steps will provide IB researchers with evidence on whether psychometric deficiency is a concern, regardless of the manner in which the construct is conceptualized.
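To make the contrast in the third step concrete, the following is a minimal sketch under stated assumptions. Cronbach's alpha is used here as one common internal-consistency statistic for a reflective conceptualization, and an equal-weighted mean of standardized indicators is used as one simple way to form a composite under a formative conceptualization. Neither choice is prescribed by the sources cited above, and the indicator names and firm scores are hypothetical toy values.

```python
from statistics import mean, pvariance


def cronbach_alpha(items):
    """Internal consistency for indicator columns measured on the same units.

    items: list of columns, each a list of scores (one score per firm).
    """
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-firm sum score
    item_variance = sum(pvariance(col) for col in items)
    return (k / (k - 1)) * (1 - item_variance / pvariance(totals))


def zscores(col):
    """Standardize one indicator column to mean 0 and unit variance."""
    m, sd = mean(col), pvariance(col) ** 0.5
    return [(x - m) / sd for x in col]


def formative_composite(items):
    """Equal-weighted mean of standardized indicators (weights are an assumption)."""
    standardized = [zscores(col) for col in items]
    return [mean(values) for values in zip(*standardized)]


# Hypothetical scores for five firms on three innovation indicators.
new_products = [2, 4, 6, 8, 10]
patents_per_employee = [1, 3, 5, 7, 9]
process_improvements = [5, 3, 8, 6, 9]
indicators = [new_products, patents_per_employee, process_improvements]

alpha = cronbach_alpha(indicators)           # relevant if reflective
composite = formative_composite(indicators)  # relevant if formative
print(f"alpha = {alpha:.2f}")
print("composite =", [round(c, 2) for c in composite])
```

Under a reflective conceptualization, a low alpha would be a warning sign. Under a formative conceptualization it would not be, because the indicators are not expected to be interchangeable, and the substantive question becomes which indicators to include and how to weight them.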

Third, another solution when facing challenges regarding the choice and operationalization of measures is to use multiple rather than single indicators. This is possible even when a particular database includes a single-item measure. Specifically, it is possible to gain a more comprehensive understanding of the construct in question by using two or more measures that provide alternative information. In the case of organizational innovation, researchers may utilize multiple measures such as the number of new products introduced, degree of “newness” of the products, degree of technological advancement of products versus competitors' offerings, and process improvements to capture information about different facets of the construct. Another approach is to cross-reference measures of the construct across different databases (Boyd, Gove & Hitt, 2005; Cascio, 2012). For instance, returning to the example of patent-based measures, rather than relying only on patent counts or patent citations, future research can use both when examining constructs related to innovation, knowledge, or technology (Ketchen et al., 2013).

Solutions for Challenge #2: Idiosyncratic Samples or Contexts

The highly diverse nature of IB research and its local-seeking global theories (Norder et al., 2020) make the challenge of using idiosyncratic samples or contexts particularly salient. For example, Teagarden, Von Glinow & Mellahi (2018) noted that much of IB research is about contextualizing business. Our results showed that challenges mentioned in JIBS articles include testing theories (i) in a single country with a particular form of governance, (ii) during a particular time-period, (iii) using relations between two specific countries, and (iv) examining a particular product category in a particular market in a particular group of countries.

For researchers wrestling with methodological challenges stemming from the specific sample or context in which they test their theories, we offer two solutions. The first is to re-conceptualize this methodological challenge. Specifically, rather than treating it as a methodological limitation, a particular sample or context can be used as a vehicle for theorizing and further theoretical development. For example, using a specifically targeted sample or context can help expand the understanding of the focal theory’s boundary conditions, provide support for a theory or part of a theory that was previously lacking, or improve our understanding of practical implications of interventions based on the theory’s propositions (Aguinis, Villamor, Lazzarini, Vassolo, Amorós, & Allen, 2020c; Bamberger & Pratt, 2010; Makadok, Burton & Barney, 2018). Adopting this solution will also help future IB researchers answer numerous calls (e.g., Antonakis, 2017; Leavitt, Mitchell & Peterson, 2010) for greater clarity and precision in theories and theorizing.

The second solution is to embrace the uniqueness of the challenge posed by sample or context specificity. That is, future IB research can specifically seek out samples or contexts where hypotheses are more likely or less likely to be supported empirically, thereby putting focal theories at risk of falsification (Bamberger & Pratt, 2010; Leavitt et al., 2010). Adopting this solution is akin to the “case-study” approach (e.g., Eisenhardt, Graebner & Sonenshein, 2016; Gibbert & Ruigrok, 2010; Tsang, 2014). Future IB research can also use unique samples and contexts as individual settings for a multiple-case-design approach, thereby examining the explanatory power of the theory under similar, yet subtly different, conditions. For example, an examination of the role of historic relations between governments on multinational operations may include the same construct or outcome (e.g., foreign investment, knowledge transfer between subsidiaries) using two sets of countries that share similar historic ties of cooperation or competition, such as China and Japan, and Japan and South Korea. A good example of the potential application of this solution is the study by Ambos, Fuchs & Zimmermann (2020) examining how headquarters and subsidiaries manage tensions that arise from demands for global integration and local responsiveness.

Solutions for Challenge #3: Less-than-Ideal Research Design

Concerns regarding research-design issues are not new to IB research. For example, Peterson, Arregle & Martin (2012) and Martin (2020) discussed levels of analysis. Similarly, Chang, van Witteloostuijn & Eden (2010), Doty & Astakhova (2020), and van Witteloostuijn et al. (2020) addressed common method variance. We contribute to this discussion by identifying how challenges associated with the effects of less-than-ideal research design for theory building and testing – including those associated with levels of analysis and common method variance – can be addressed, at least in part, by relying on developments in the use of Big Data.

Big Data is often characterized by three V’s: volume, velocity, and variety (Laney, 2001). Volume refers to the sheer scale of the data, velocity is about the speed with which the data are generated as well as the speed of the analytics process required to meet those demands, and variety is about the many forms that Big Data can take – including structured numeric data, text documents, audio, video, and social media (Chen & Wojcik, 2016). It is not unusual to refer to Big Data using other terms, such as data mining, knowledge discovery in databases, data or predictive analytics, or data science (Harlow & Oswald, 2016). As such, Big Data differs from commonly used large datasets (e.g., Bloomberg Business, Compustat, ILOSTAT, Orbis, Osiris) that collect large quantities of data on a predefined list of measures over a specific period, as well as from researchers’ own data-collection efforts that seek information regarding a limited set of measures over a pre-determined period. It is important to note that Big Data is not the same as “more data,” particularly when they are of dubious quality. Indeed, ensuring the veracity and value of the data is an important consideration when using Big-Data approaches (Braun, Kuljanin & DeShon, 2018; Iafrate, 2015; IBM, 2020; Zhang, Yang, Chen & Li, 2018). Nor is Big Data the solution to fundamental challenges, such as how constructs are defined and operationalized in the first place (Podsakoff, MacKenzie & Podsakoff, 2016). Nevertheless, accurate Big Data – sometimes referred to as “Smart Data” – represents a unique opportunity to address some of the challenges posed by less-than-ideal research designs (Tonidandel, King & Cortina, 2018).

Big Data can provide unique insights by allowing researchers to examine previously untapped complementary sources of information (George, Haas & Pentland, 2014). For example, consider a study about the ownership or governance structures of firms in which traditional datasets do not provide sufficient information regarding these variables. A Big-Data approach can rely on examining articles in newspapers and other business and current-event outlets, publicly available financial and other company filings (e.g., Securities and Exchange Commission filings such as the 10-K, 10-Q, or 8-K reports), and reports and white papers issued by watchdog groups (e.g., Citizens for Responsibility and Ethics in Washington, D.C.). Adopting a Big-Data approach will allow future IB researchers to gain additional insights based on variables not included in public datasets or measured during their data collection, and also when the data included are limited or censored in terms of levels of analysis (e.g., only firm-level data measured) or period of data collection (e.g., data only collected for certain years).

Another way to leverage Big-Data techniques to address the methodological challenge of less-than-ideal research design is by re-analyzing currently available data. Returning to the example of patent-based measures, higher-quality research designs can result from using Big-Data techniques such as text mining (George, Osinga, Lavie & Scott, 2016). Text mining is a data-analytic technique that infers knowledge about desired constructs from unstructured data in the form of text, and is particularly useful when analyzing Big Data (O’Mara-Eves, Thomas, McNaught, Miwa & Ananiadou, 2015). In our example, future IB research can use text mining to analyze the co-occurrence of words within patents, or infer meaning from common terms used across patents, to gain a deeper and more comprehensive perspective about knowledge creation and transfer (George et al., 2016). Together, using Big-Data approaches to examine additional sources of information and re-analyze existing data can help IB researchers address previously identified challenges stemming from issues related to levels of analysis and common method variance.
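To make the idea of analyzing word co-occurrence within patents more concrete, the following minimal Python sketch counts how often pairs of terms appear together in the same document. It is an illustration under stated assumptions rather than a recommended pipeline: the three "patent abstracts," the short stop-word list, and the function names (`tokenize`, `cooccurrence_counts`) are all hypothetical and were created for this example. A real application would rely on actual patent text and a dedicated text-mining package.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical patent abstracts (illustrative only, not real data).
PATENT_ABSTRACTS = [
    "A battery electrode with a polymer coating for improved storage",
    "Polymer coating method for a solar cell electrode",
    "Solar cell module with battery storage controller",
]

# Small illustrative stop-word list; a real analysis would use a fuller one.
STOP_WORDS = {"a", "an", "the", "with", "for", "of", "and", "method", "improved"}


def tokenize(text):
    """Lowercase the text and return its set of distinct non-stop-word terms."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if w not in STOP_WORDS}


def cooccurrence_counts(documents):
    """Count, for every pair of terms, the number of documents containing both."""
    counts = Counter()
    for doc in documents:
        # Sorting makes each pair appear in a single canonical order.
        terms = sorted(tokenize(doc))
        counts.update(combinations(terms, 2))
    return counts


pair_counts = cooccurrence_counts(PATENT_ABSTRACTS)

# Show the five term pairs that co-occur in the most documents.
for pair, n in pair_counts.most_common(5):
    print(pair, n)
```

Pairs that co-occur across many documents could then be treated as candidate indicators of shared knowledge domains, subject to the same construct-validity considerations discussed earlier.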

We readily acknowledge that the prospect of gathering supplemental information using Big-Data methods such as web scraping and text mining may seem daunting. However, there is a growing number of resources, including packages for the free software R (e.g., Munzert, Rubba, Meißner & Nyhuis, 2014; Silge & Robinson, 2016), and tutorials and resource articles (e.g., Cheung & Jak, 2016; Kosinski, Wang, Lakkaraju & Leskovec, 2016; Landers, Brusso, Cavanaugh & Collmus, 2016; McKenny, Aguinis, Short & Anglin, 2018; Tonidandel et al., 2018). These are particularly useful for those with little or no background or experience with Big Data. Moreover, we see great potential in implementing Big-Data solutions in the specific IB domains of international environment and cross-country comparative studies. For example, IB researchers may use Big Data to expand their examinations of questions related to how business processes relate to organizational performance across different countries. These include, for example, marketing strategies and the use of consumer-behavior data (e.g., Hofacker, Malthouse & Sultan, 2016; Matz & Netzer, 2017), managerial metrics (e.g., Mintz, Currim, Steenkamp & de Jong, 2020), knowledge sharing (e.g., Haas, Criscuolo & George, 2015), and capital-allocation decisions (e.g., Sun, Zhao & Sun, 2020).

Solutions for Challenge #4: Insufficient Evidence About Causal Relations

Like other fields, IB research seeks to study and understand change; it is, therefore, inherently interested in the issue of causality. Indeed, many have noted the need for IB to address the issue of causality to enable greater theoretical progress. For example, Cuervo-Cazurra, Andersson, Brannen, Nielsen & Reuber (2016) and Reeb, Sakakibara & Mahmood (2012) noted the potential of using experimental designs to address causality and alternative explanations. Similarly, Reeb et al. (2012) and Meyer, van Witteloostuijn & Beugelsdijk (2017) encouraged researchers to be more careful in how they refer to the results of their own studies to avoid implying unwarranted causality. Finally, Shaver (2020) alluded to the potential of Big Data to help improve causal inferences.

Demonstrating causality is not possible in the absence of critical research-design features such as the existence of different levels of the independent variables (Eden, Stone-Romero & Rothstein, 2015; Lonati, Quiroga, Zehnder & Antonakis, 2018). In other words, “it is not possible to put right with statistics what has been done wrong by design” (Cook & Steiner, 2010: 57). This issue is especially challenging for IB research because it is typically conducted in settings that are not conducive to randomized experimental designs (Eden, 2017). We propose two solutions – one related to design and one related to analysis – that will help address this methodological challenge.

First, future IB research can make greater use of quasi-experimental designs (Eden, 2017; Lonati et al., 2018; Stone-Romero & Rosopa, 2008). Such designs allow for non-random assignment of units (e.g., employees, firms, industries, countries) to different conditions or for naturally occurring (as opposed to controlled) variation in the independent variable. Doing so combines elements from both non-experimental field studies and randomized experiments to improve confidence in causal inferences (Abadie, Diamond & Hainmueller, 2015; Antonakis, Bendahan, Jacquart & Lalive, 2010; Banerjee & Duflo, 2009; Cuervo-Cazurra, Mudambi, Pedersen & Piscitello, 2017). Quasi-experimental designs are particularly well suited for IB research that examines constructs such as changes in government policy, organizational expansion, innovation, and foreign direct investment decisions (e.g., Castellani, Mariotti & Piscitello, 2008; Grant & Wall, 2009; Kogut & Zander, 2000). They are also useful when, as happens often in IB research, a change in an outcome due to a change in an independent variable manifests itself only after a period of time. Examples of quasi-experiments in recent IB-related research include Buckley, Chen, Clegg & Voss’s (2018) study of risk propensity in foreign direct investment location; Huvaj & Johnson’s (2019) investigation of the effect of organizational complexity on innovation; and Vandor & Franke’s (2016) examination of the impact of cross-cultural experience on opportunity-recognition capabilities. Additional examples include how monthly and quarterly earnings estimates might influence a multinational corporation’s expansion plans (Irani & Oesch, 2016), how knowledge-sharing and innovation spread across local and foreign subsidiaries (Wang, Noe & Wang, 2014), and the impact of home-country corporate social responsibility efforts on foreign operations (Flammer & Luo, 2017).
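As a simple illustration of how data from such a design might be analyzed, the sketch below computes a difference-in-differences estimate. This estimator is not named in our discussion above; it is offered here only as one commonly used option for quasi-experiments with a pre- and post-change period, and it rests on the assumption that the treated and control groups would have followed parallel trends in the absence of the change. The observations, group labels, and function names are hypothetical.

```python
from statistics import mean

# Hypothetical firm-level observations (illustrative numbers only).
# Each record is (group, period, outcome). "treated" firms were exposed to a
# naturally occurring change (e.g., a policy change); "control" firms were not.
OBSERVATIONS = [
    ("treated", "before", 10.0), ("treated", "before", 12.0),
    ("treated", "after", 18.0), ("treated", "after", 20.0),
    ("control", "before", 9.0), ("control", "before", 11.0),
    ("control", "after", 12.0), ("control", "after", 14.0),
]


def group_mean(rows, group, period):
    """Mean outcome for one group in one period."""
    return mean(y for g, p, y in rows if g == group and p == period)


def difference_in_differences(rows):
    """Change in the treated group minus change in the control group."""
    treated_change = (group_mean(rows, "treated", "after")
                      - group_mean(rows, "treated", "before"))
    control_change = (group_mean(rows, "control", "after")
                      - group_mean(rows, "control", "before"))
    return treated_change - control_change


did_estimate = difference_in_differences(OBSERVATIONS)
print(did_estimate)
```

In this toy example, the treated group's outcome rises by 8 and the control group's by 3, so the estimate attributes the remaining 5 units to the change, conditional on the parallel-trends assumption holding.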

The second solution relates to data analysis. Specifically, future IB research can use necessary-condition analysis (NCA; Dul, 2016). NCA is a data-analytic technique that helps identify the variables that are essential for achieving a particular outcome (Dul, 2016). Most data-analytic techniques that are commonly used (e.g., OLS and other types of multiple and multivariate regression, multilevel modeling, and structural-equation modeling) generally operate on the basis of a compensatory or additive logic. In that approach, variables can compensate for each other in predicting an outcome, and certain variables may be completely absent (i.e., have a value of zero) without precluding the outcome. In contrast, NCA relies on a multiplicative logic in which the lack of a single variable eliminates the possibility of achieving an outcome (Dul, 2016; Dul, Hak, Goertz & Voss, 2010). By understanding which variables are critical to the presence of a desired outcome, NCA can help future IB researchers gain a better understanding of causal effects when examining a particular outcome.

For example, consider the goal of examining how firms differ in their approaches to entering foreign markets. A study addressing this issue may examine potential determinants such as overseas market similarity and potential, company internal competitiveness, company familiarity with entering overseas markets, and company internal cash reserves (Koch, 2001). Using the resulting estimates, such as regression coefficients from additive data-analytic techniques, provides information on how each of these variables differentially covaries with the outcome. In addition, these results may indicate that the absence of a particular variable – say, lack of familiarity with entering overseas markets – can be compensated for by higher levels of another – say, company internal cash reserves. While useful, these results do not provide information about whether the lack of familiarity causes firms to differ in how they approach foreign-market entry. In contrast, NCA relies on a multiplicative logic. Using NCA provides information not only on whether the lack of familiarity causes firms to choose different strategies but also the minimum level of familiarity needed for choosing one approach over another. Note that in both instances the data analyzed are the same (e.g., archival, cross-sectional survey), and it is only the data-analytical technique that differs. Therefore, combining NCA with additive data-analytical techniques can help future IB researchers improve knowledge about underlying causal structures and causal chains even when using data collected with non-experimental designs.
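The "minimum level needed" logic can be sketched in a few lines of Python. The code below is a simplified step-function ceiling written for illustration, in the spirit of the ceiling techniques discussed by Dul (2016). It is not the NCA software, and the firm scores, variable names, and function names are hypothetical assumptions.

```python
# Hypothetical (familiarity, entry_commitment) scores for eight firms.
# Both variables are on arbitrary illustrative scales.
FIRMS = [
    (1, 2), (2, 1), (3, 4), (4, 3),
    (5, 6), (6, 4), (7, 8), (8, 7),
]


def ceiling(data, x):
    """Highest outcome observed among cases whose X value is at most x.

    Returns None when no case has an X value at or below x.
    """
    ys = [yi for xi, yi in data if xi <= x]
    return max(ys) if ys else None


def necessary_level(data, target_y):
    """Smallest observed X among cases that reach target_y.

    Returns None when no case in the sample reaches target_y.
    """
    xs = [xi for xi, yi in data if yi >= target_y]
    return min(xs) if xs else None


# No firm with familiarity of 4 or less shows an outcome above this value.
print(ceiling(FIRMS, 4))

# Lowest familiarity observed among firms with an outcome of at least 6.
print(necessary_level(FIRMS, 6))
```

In this toy sample, no firm with familiarity below 5 reaches an outcome of 6 or more, which is the pattern a necessity hypothesis would predict. A regression fitted to the same data would instead summarize the average covariation between the two variables.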

Finally, we wish to clarify that NCA is not simply a new form of data mining in which theory does not play an important role. Instead, NCA is an analytical technique that can help IB researchers advance theory by applying the principles of strong inference (Leavitt et al., 2010) to examine competing theory-driven hypotheses. Because NCA operates on a “necessary but not sufficient” logic, it can only be used to identify determinants whose absence would prevent an outcome from being achieved, but not the determinants that will produce the outcome (Dul, 2016). For example, in the illustration in the previous paragraph, NCA can identify whether or not lack of familiarity causes differences in foreign-market entry strategies. However, it cannot provide insight into how other determinants influence the adoption of different foreign-market entry strategies. Accordingly, NCA represents a complement to, not a replacement for, current analytical approaches such as OLS regression. Examples of IB-related studies that have used NCA include the role of contracts and trust in driving innovation (Van der Valk, Sumo, Dul & Schroeder, 2016), and the link between specific firm capabilities and performance (Tho, 2018).

LIMITATIONS AND SUGGESTIONS FOR FUTURE RESEARCH

Our specific goal was to identify contemporary methodological challenges faced by IB researchers, as described by JIBS authors themselves, and to offer solutions to each of these challenges. In this section, we offer suggestions for future research that would go beyond and expand upon our article’s specific goals.

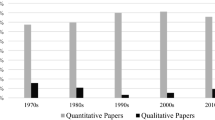

First, we focused on articles published in a single volume of JIBS. Although we have no reason to believe that the 2018 volume represents an outlier, future research would benefit from a longitudinal examination of trends to gain insights on possible changes in methodological challenges over time. For example, Cascio and Aguinis (2008) content-analyzed all articles published in the Journal of Applied Psychology and Personnel Psychology from January 1963 to May 2007 to identify the relative attention devoted to each of 15 broad topical areas and 50 more specific subareas in the field of industrial and organizational psychology. Regarding the evolution of research methodology, Aguinis, Pierce, Bosco & Muslin (2009) content analyzed the 193 articles published in the first ten volumes (1998 to 2007) of Organizational Research Methods to understand which research design, measurement, and data-analysis topics had become more or less popular over time. A similar longitudinal analysis based on JIBS and other IB journals focusing on methodological challenges would help us understand not only limitations over time, and what changes and improvements have taken place, but also provide insights into the importance of these limitations in relation to the cumulative nature of IB knowledge. For example, there is a possibility that certain limitations (e.g., insufficient evidence about causal relations) are directly linked to methodological improvements over time (e.g., the ability to conduct field quasi-experiments and true experiments). The introduction and popularization of methodological improvements (e.g., less frequent use of cross-sectional research designs) would then be linked to a concomitant decrease in particular methodological limitations (e.g., decreased concern about insufficient evidence about causal relations). 
Additional potential insights from future longitudinal research may show that definitions and measures of common IB constructs such as cultural distance have indeed improved over time (Beugelsdijk et al., 2018; Peterson & Muratova, 2020), thereby leading to less-frequent concerns about psychometric properties in certain substantive domains. Finally, improvements in measures over time could also have concomitant implications for the use of control variables, given that their psychometric properties have important implications for the validity and fit of models. Unfortunately, this information is seldom investigated or reported (Bernerth & Aguinis, 2016; Nielsen & Raswant, 2018). Overall, we see great value in future research adopting a longitudinal approach to examining methodological challenges and limitations.

Second, as noted earlier, we did not examine which challenges were added to or removed from an article through the peer-review process – and which ones authors chose to address. Another extension of our work, therefore, would be to access reviewer comments and possibly also contact authors to understand the reasons for the various methodological choices they made and which challenges and limitations they chose to disclose, or not disclose, and the reasons why. Relatedly, Green, Tonidandel & Cortina (2016) were able to access 304 editors’ and reviewers’ letters for 69 manuscripts submitted to Journal of Business and Psychology and coded the statistical and methodological issues raised in the reviewing process. As a result, they were able to draw conclusions about the comments and suggestions that reviewers and editors made about methodological issues when making recommendations and decisions to accept or reject manuscripts. This same approach could be used with IB manuscripts to learn about the process that resulted in specific methodological challenges and limitations being included in a published article – and why.

A third issue that we believe warrants future research is an investigation of the disclosure of methodological challenges and limitations in relation to researchers’ motivations and the prevailing reward systems in universities (Aguinis et al., 2020b; Rasheed & Priem, 2020). Disclosing a study’s limitations is clearly important for understanding the rigor, value, and usefulness of the knowledge that has been produced. Yet, as Nielsen et al. (2020: 7) noted, “scholars often view the benefits from research integrity as accruing only in the long term and primarily to society as a whole. In the short term, pressure to publish and the desire for tenure and promotion may be much more salient.” The pressure to publish in what are considered top journals has never been higher. The motto “a win is a win” used in sports is now often used to describe the publication of a paper in a prestigious journal (Aguinis et al., 2020b). Many of us have witnessed faculty-recruiting as well as promotion-and-tenure committees discuss how many A-level publications a candidate has produced and how many A-level publications are needed for a favorable decision, while the unique intellectual value and methodological rigor of a publication do not receive nearly the same amount of attention (Aguinis et al., 2020b). Given this situation, there are now calls to improve the transparency and disclosure of all methodological procedures (Aguinis et al., 2017; Aguinis & Solarino, 2019; Delios, 2020), including challenges and limitations. For these changes to happen, however, reward systems, including journal-submission policies, may need to change. To this point, Aguinis, Banks, Rogelberg & Cascio (2020a) offered ten actionable recommendations for reducing questionable research practices that do not require a substantial amount of time or resources on the part of journals, professional associations, and funding agencies. These include (1) updating the knowledge-production process (e.g., preregistration of quantitative and qualitative primary studies); (2) updating knowledge-transfer and knowledge-sharing processes (e.g., an online archive for each journal article where authors can voluntarily place any study materials they wish to share); (3) changing the incentive structure (e.g., best-paper award and acknowledgement based on open-science criteria); (4) improving access to training resources (i.e., open-access training for authors and reviewers); and (5) promoting shared values (i.e., editorial statements that null results, outliers, “messy” findings, and exploratory analyses can advance scientific knowledge if a study’s methodology is rigorous).

Finally, there are several additional methodological challenges, some of which are discussed in the chapters included in Eden et al. (2020), which we did not address in our article. Examples include the extent to which robustness checks and sensitivity tests are frequent and useful in IB research and the extent to which power analysis is conducted and reported in IB research. These are important methodological topics that certainly warrant future investigation, even though they were not mentioned frequently in the articles included in our review.

CONCLUSION

As IB research becomes more diverse and complex, there is an increased awareness that methodological approaches require attention to both similarities and differences between domestic and foreign operations at multiple levels of analysis (Eden et al., 2020). Such approaches must be able to provide answers to local-seeking global questions about processes that are locally embedded, relationally enacted, and iteratively unfolding. Careful attention to methodological challenges is critical for ensuring continued IB theoretical advancements and the relevance of practical contributions. Adopting a perspective that combined an AAR with a needs-assessment approach, and using JIBS as a case study, we described the four most frequently mentioned contemporary methodological challenges identified by JIBS authors themselves. These are: psychometrically deficient measures, idiosyncratic samples or contexts, less-than-ideal research designs, and insufficient evidence about causal relations. Building upon existing work, and particularly chapters included in the edited volume by Eden et al. (2020), we proposed solutions that rely mostly on methodological innovations from outside of IB that can be used to address each of these challenges. Our article can be used as a resource by journal editors and reviewers to anticipate which methodological challenges they are most likely to encounter in the manuscripts they consider for possible publication. IB researchers can use our article as a resource to anticipate and address these challenges before they collect and analyze data. Instructors can also use our article for training the next generation of IB scholars. Overall, we hope that it will serve as a catalyst for theory advancements in future empirical research not only in JIBS but also in other journals in IB and related fields.

Notes

1. For example, an article in JAP (Wolfson, Tannenbaum, Mathieu & Maynard, 2018) noted that the measures they used to assess performance might have been psychometrically deficient. Similarly, an article in SMJ (Furr & Kapoor, 2018) urged caution when interpreting their findings due to the use of an idiosyncratic sample or context. As another example, an article in AMJ (Miron-Spektor, Ingram, Keller, Smith & Lewis, 2018) noted that the study had a less-than-ideal research design. Finally, a JOM article (Kuypers, Guenter & van Emmerik, 2018) noted that a limitation of the study was insufficient evidence about causal relations. As we describe in the next section of our article, these are the four most pervasive methodological challenges mentioned by JIBS authors.

2. Similar to Brutus et al. (2013), we utilized a methodological lens to analyze limitations noted by authors in published research. However, a major difference is that Brutus et al.’s (2013) main goal was to improve the manner in which authors report the limitations of their research. That is, they attempted to influence ex-post decisions regarding the transparency and usefulness of author-disclosed limitations that might affect a study’s results and conclusions. In contrast, our goal is to help advance IB theory by addressing the most typical methodological challenges. That is, our goal is to offer ex-ante and ex-post solutions to help researchers make theoretical advancements by overcoming these methodological limitations.

3. In the interest of transparency, we make the complete list of articles (including which article referred to which challenge) available upon request.

REFERENCES

Abadie, A., Diamond, A., & Hainmueller, J. 2015. Comparative politics and the synthetic control method. American Journal of Political Science, 59(2): 495–510.

Aguinis, H. 2019. Performance management (4th ed.). Chicago, IL: Chicago Business Press.

Aguinis, H., & Solarino, A. M. 2019. Transparency and replicability in qualitative research: The case of interviews with elite informants. Strategic Management Journal, 40(8): 1291–1315.

Aguinis, H., Banks, G. C., Rogelberg, S., & Cascio, W. F. 2020a. Actionable recommendations for narrowing the science-practice gap in open science. Organizational Behavior and Human Decision Processes, 158: 27–35.

Aguinis, H., Cascio, W. F., & Ramani, R. S. 2017. Science’s reproducibility and replicability crisis: International business is not immune. Journal of International Business Studies, 48(6): 653–663.

Aguinis, H., Cummings, C., Ramani, R. S., & Cummings, T. G. 2020b. “An A is an A:” The new bottom line for valuing academic research. Academy of Management Perspectives, 34(1): 135–154.

Aguinis, H., Gottfredson, R. K., & Joo, H. 2013. Best-practice recommendations for defining, identifying, and handling outliers. Organizational Research Methods, 16(2): 270–301.

Aguinis, H., Henle, C. A., & Ostroff, C. 2001. Measurement in work and organizational psychology. In N. Anderson, D. S. Ones, H. K. Sinangil, & C. Viswesvaran (Eds.), Handbook of industrial, work and organizational psychology (Vol. 1, pp. 27–50). London: Sage.

Aguinis, H., Hill, N. S., & Bailey, J. R. 2020. Best practices in data collection and preparation: Recommendations for reviewers, editors, and authors. Organizational Research Methods. https://doi.org/10.1177/1094428119836485.

Aguinis, H., & Kraiger, K. 2009. Benefits of training and development for individuals and teams, organizations, and society. Annual Review of Psychology, 60: 451–474.

Aguinis, H., Pierce, C. A., Bosco, F. A., & Muslin, I. S. 2009. First decade of Organizational Research Methods: Trends in design, measurement, and data-analysis topics. Organizational Research Methods, 12(1): 69–112.

Aguinis, H., Ramani, R. S., & Alabduljader, N. 2018. What you see is what you get? Enhancing methodological transparency in management research. Academy of Management Annals, 12(1): 83–110.

Aguinis, H., & Vandenberg, R. J. 2014. An ounce of prevention is worth a pound of cure: Improving research quality before data collection. Annual Review of Organizational Psychology and Organizational Behavior, 1(1): 569–595.

Aguinis, H., Villamor, I., Lazzarini, S. G., Vassolo, R. S., Amorós, J. E., & Allen, D. G. 2020c. Conducting management research in Latin America: Why and what’s in it for you? Journal of Management, 46(5): 615–636.

Ambos, T. C., Fuchs, S. H., & Zimmermann, A. 2020. Managing interrelated tensions in headquarters–subsidiary relationships: The case of a multinational hybrid organization. Journal of International Business Studies, 51(6): 906–932.

Antonakis, J. 2017. On doing better science: From thrill of discovery to policy implications. The Leadership Quarterly, 28(1): 5–21.

Antonakis, J., Bendahan, S., Jacquart, P., & Lalive, R. 2010. On making causal claims: A review and recommendations. The Leadership Quarterly, 21(6): 1086–1120.

Bamberger, P. A., & Pratt, M. G. 2010. From the editors: Moving forward by looking back: Reclaiming unconventional research contexts and samples in organizational scholarship. Academy of Management Journal, 53(4): 665–671.

Banerjee, A. V., & Duflo, E. 2009. The experimental approach to development economics. Annual Review of Economics, 1(1): 151–178.

Banerjee, S., Venaik, S., & Brewer, P. 2019. Analyzing corporate political activity in MNC subsidiaries through the integration-responsiveness framework. International Business Review, 28(5): 101498.

Bernerth, J., & Aguinis, H. 2016. A critical review and best-practice recommendations for control variable usage. Personnel Psychology, 69(1): 229–283.

Beugelsdijk, S., Ambos, B., & Nell, P. C. 2018. Conceptualizing and measuring distance in international business research: Recurring questions and best practice guidelines. Journal of International Business Studies, 49(9): 1113–1137.

Boyd, B. K., Gove, S., & Hitt, M. A. 2005. Construct measurement in strategic management research: Illusion or reality? Strategic Management Journal, 26(3): 239–257.

Braun, M. T., Kuljanin, G., & DeShon, R. P. 2018. Special considerations for the acquisition and wrangling of Big Data. Organizational Research Methods, 21(3): 633–659.

Brutus, S., Aguinis, H., & Wassmer, U. 2013. Self-reported limitations and future directions in scholarly reports: Analysis and recommendations. Journal of Management, 39(1): 48–75.

Buckley, P. J., Chen, L., Clegg, L. J., & Voss, H. 2018. Risk propensity in the foreign direct investment location decision of emerging multinationals. Journal of International Business Studies, 49(2): 153–171.

Cantwell, J., & Brannen, M. Y. 2016. The changing nature of the international business field, and the progress of JIBS. Journal of International Business Studies, 47(9): 1023–1031.

Cascio, W. F. 2012. Methodological issues in international HR management research. The International Journal of Human Resource Management, 23(12): 2532–2545.

Cascio, W. F., & Aguinis, H. 2008. Research in industrial and organizational psychology from 1963 to 2007: Changes, choices, and trends. Journal of Applied Psychology, 93(5): 1062–1081.

Cascio, W. F., & Aguinis, H. 2019. Applied psychology in talent management (8th ed.). Thousand Oaks, CA: Sage.

Castellani, D., Mariotti, I., & Piscitello, L. 2008. The impact of outward investments on parent company’s employment and skill composition: Evidence from the Italian case. Structural Change and Economic Dynamics, 19(1): 81–94.

Chang, S. J., van Witteloostuijn, A., & Eden, L. 2010. From the Editors: Common method variance in international business research. Journal of International Business Studies, 41(2): 178–184.

Chen, E. E., & Wojcik, S. P. 2016. A practical guide to big data research in psychology. Psychological Methods, 21(4): 458–474.

Cheung, M. W. L., & Jak, S. 2016. Analyzing big data in psychology: A split/analyze/meta-analyze approach. Frontiers in Psychology, 7: 738.

Coltman, T., Devinney, T. M., Midgley, D. F., & Venaik, S. 2008. Formative versus reflective measurement models: Two applications of formative measurement. Journal of Business Research, 61(12): 1250–1262.

Cook, T. D., & Steiner, P. M. 2010. Case matching and the reduction of selection bias in quasi-experiments: The relative importance of pretest measures of outcome, of unreliable measurement, and of mode of data analysis. Psychological Methods, 15(1): 56–68.

Cuervo-Cazurra, A., Andersson, U., Brannen, M. Y., Nielsen, B. B., & Reuber, A. R. 2016. From the Editors: Can I trust your findings? Ruling out alternative explanations in international business research. Journal of International Business Studies, 47(8): 881–897.

Cuervo-Cazurra, A., Mudambi, R., Pedersen, T., & Piscitello, L. 2017. Research methodology in global strategy research. Global Strategy Journal, 7(3): 233–240.

Delios, A. 2020. Science’s reproducibility and replicability crisis: A commentary. In L. Eden, B. B. Nielsen, & A. Verbeke (Eds.), Research methods in international business (pp. 67–74). Cham: Springer.

Diamantopoulos, A. 1999. Export performance measurement: Reflective versus formative indicators. International Marketing Review, 16(6): 444–457.

Diamantopoulos, A., & Papadopoulos, N. 2010. Assessing the cross-national invariance of formative measures: Guidelines for international business researchers. Journal of International Business Studies, 41(2): 360–370.

Diamantopoulos, A., & Siguaw, J. A. 2006. Formative versus reflective indicators in organizational measure development: A comparison and empirical illustration. British Journal of Management, 17(4): 263–282.

Doty, D. H., & Astakhova, M. 2020. Common method variance in international business research: A commentary. In L. Eden, B. B. Nielsen, & A. Verbeke (Eds.), Research methods in international business (pp. 399–408). Cham: Springer.

Dul, J. 2016. Necessary condition analysis (NCA): Logic and methodology of “necessary but not sufficient” causality. Organizational Research Methods, 19(1): 10–52.

Dul, J., Hak, T., Goertz, G., & Voss, C. 2010. Necessary condition hypotheses in operations management. International Journal of Operations & Production Management, 30(11): 1170–1190.

Eden, D. 2017. Field experiments in organizations. Annual Review of Organizational Psychology and Organizational Behavior, 4(1): 91–122.

Eden, L., Nielsen, B. B., & Verbeke, A. (Eds.). 2020. Research methods in international business. Cham: Springer.

Eden, D., Stone-Romero, E. F., & Rothstein, H. R. 2015. Synthesizing results of multiple randomized experiments to establish causality in mediation testing. Human Resource Management Review, 25(4): 342–351.

Edwards, J. R. 2001. Multidimensional constructs in organizational behavior research: An integrative analytical framework. Organizational Research Methods, 4(2): 144–192.

Edwards, J. R. 2011. The fallacy of formative measurement. Organizational Research Methods, 14(2): 370–388.

Eisenhardt, K. M., Graebner, M. E., & Sonenshein, S. 2016. Grand challenges and inductive methods: Rigor without rigor mortis. Academy of Management Journal, 59(4): 1113–1123.

Flammer, C., & Luo, J. 2017. Corporate social responsibility as an employee governance tool: Evidence from a quasi-experiment. Strategic Management Journal, 38(2): 163–183.

Furr, N., & Kapoor, R. 2018. Capabilities, technologies, and firm exit during industry shakeout: Evidence from the global solar photovoltaic industry. Strategic Management Journal, 39(1): 33–61.

George, G., Haas, M. R., & Pentland, A. 2014. From the editors: Big data and management. Academy of Management Journal, 57(2): 321–326.

George, G., Osinga, E. C., Lavie, D., & Scott, B. A. 2016. From the editors: Big data and data science methods for management research. Academy of Management Journal, 59(5): 1493–1507.

Gibbert, M., & Ruigrok, W. 2010. The “what” and “how” of case study rigor: Three strategies based on published work. Organizational Research Methods, 13(4): 710–737.

Grant, A. M., & Wall, T. D. 2009. The neglected science and art of quasi-experimentation: Why-to, when-to, and how-to advice for organizational researchers. Organizational Research Methods, 12(4): 653–686.

Green, J. P., Tonidandel, S., & Cortina, J. M. 2016. Getting through the gate: Statistical and methodological issues raised in the reviewing process. Organizational Research Methods, 19(3): 402–432.

Griffith, D. A., Cavusgil, S. T., & Xu, S. 2008. Emerging themes in international business research. Journal of International Business Studies, 39(7): 1220–1235.

Haas, M. R., Criscuolo, P., & George, G. 2015. Which problems to solve? Online knowledge sharing and attention allocation in organizations. Academy of Management Journal, 58(3): 680–711.

Harlow, L. L., & Oswald, F. L. 2016. Big data in psychology: Introduction to the special issue. Psychological Methods, 21(4): 447–457.

Hofacker, C. F., Malthouse, E. C., & Sultan, F. 2016. Big Data and consumer behavior: Imminent opportunities. Journal of Consumer Marketing, 33(2): 89–97.

Huvaj, M. N., & Johnson, W. C. 2019. Organizational complexity and innovation portfolio decisions: Evidence from a quasi-natural experiment. Journal of Business Research, 98: 153–165.

Iafrate, F. 2015. From big data to smart data. Hoboken, NJ: Wiley.

IBM. 2020. Extracting business value from the 4V’s of big data. Retrieved August 4, 2020 from https://www.ibmbigdatahub.com/infographic/extracting-business-value-4-vs-big-data.

Irani, R. M., & Oesch, D. 2016. Analyst coverage and real earnings management: Quasi-experimental evidence. Journal of Financial and Quantitative Analysis, 51(2): 589–627.

Ketchen, D. J., Jr., Ireland, R. D., & Baker, L. T. 2013. The use of archival proxies in strategic management studies: Castles made of sand? Organizational Research Methods, 16(1): 32–42.

Koch, A. J. 2001. Factors influencing market and entry mode selection: Developing the MEMS model. Marketing Intelligence & Planning, 19(5): 351–361.

Kogut, B., & Zander, U. 2000. Did socialism fail to innovate? A natural experiment of the two Zeiss companies. American Sociological Review, 65(2): 169–190.

Kosinski, M., Wang, Y., Lakkaraju, H., & Leskovec, J. 2016. Mining big data to extract patterns and predict real-life outcomes. Psychological Methods, 21(4): 493–506.

Kuypers, T., Guenter, H., & van Emmerik, H. 2018. Team turnover and task conflict: A longitudinal study on the moderating effects of collective experience. Journal of Management, 44(4): 1287–1311.

Landers, R. N., Brusso, R. C., Cavanaugh, K. J., & Collmus, A. B. 2016. A primer on theory-driven web scraping: Automatic extraction of big data from the internet for use in psychological research. Psychological Methods, 21(4): 475–492.

Laney, D. 2001. 3D data management: Controlling data volume, velocity and variety. META Group Research Note, 6: 70–73.

Leavitt, K., Mitchell, T. R., & Peterson, J. 2010. Theory pruning: Strategies to reduce our dense theoretical landscape. Organizational Research Methods, 13(4): 644–667.

Liesch, P. W., Håkanson, L., McGaughey, S. L., Middleton, S., & Cretchley, J. 2011. The evolution of the international business field: A scientometric investigation of articles published in its premier journal. Scientometrics, 88(1): 17–42.

Lonati, S., Quiroga, B. F., Zehnder, C., & Antonakis, J. 2018. On doing relevant and rigorous experiments: Review and recommendations. Journal of Operations Management, 64(1): 19–40.

Makadok, R., Burton, R., & Barney, J. 2018. A practical guide for making theory contributions in strategic management. Strategic Management Journal, 39(6): 1530–1545.

Martin, X. 2020. Multilevel models in international business research: Broadening the scope of application, and further reflections. In L. Eden, B. B. Nielsen, & A. Verbeke (Eds.), Research methods in international business (pp. 439–446). Cham: Springer.

Matz, S. C., & Netzer, O. 2017. Using big data as a window into consumers’ psychology. Current Opinion in Behavioral Sciences, 18: 7–12.

McKenny, A. F., Aguinis, H., Short, J. C., & Anglin, A. H. 2018. What doesn’t get measured does exist: Improving the accuracy of computer-aided text analysis. Journal of Management, 44(7): 2909–2933.

Meyer, K. E., van Witteloostuijn, A., & Beugelsdijk, S. 2017. What’s in a p? Reassessing best practices for conducting and reporting hypothesis-testing research. Journal of International Business Studies, 48(5): 535–551.

Mintz, O., Currim, I. S., Steenkamp, J. B., & de Jong, M. 2020. Managerial metric use in marketing decisions across 16 countries: A cultural perspective. Journal of International Business Studies. https://doi.org/10.1057/s41267-019-00259-z.

Miron-Spektor, E., Ingram, A., Keller, J., Smith, W. K., & Lewis, M. W. 2018. Microfoundations of organizational paradox: The problem is how we think about the problem. Academy of Management Journal, 61(1): 26–45.

Morrison, J. E., & Meliza, L. L. 1999. Foundations of the after-action review process. Arlington, VA: United States Army Research Institute for the Behavioral and Social Sciences. Retrieved August 4, 2020 from https://apps.dtic.mil/dtic/tr/fulltext/u2/a368651.pdf.

Munzert, S., Rubba, C., Meißner, P., & Nyhuis, D. 2014. Automated data collection with R: A practical guide to web scraping and text mining. Hoboken, NJ: Wiley.

Nielsen, B. B., Eden, L., & Verbeke, A. 2020. Research methods in international business: Challenges and advances. In L. Eden, B. B. Nielsen, & A. Verbeke (Eds.), Research methods in international business (pp. 3–41). Cham: Springer.

Nielsen, B. B., & Gudergan, S. 2012. Exploration and exploitation fit and performance in international strategic alliances. International Business Review, 21(4): 558–574.

Nielsen, B. B., & Raswant, A. 2018. The selection, use, and reporting of control variables in international business research: A review and recommendations. Journal of World Business, 53(6): 958–968.

Noe, R. A. 2017. Employee training and development (7th ed.). New York, NY: McGraw-Hill Education.

Norder, K., Sullivan, D., Emich, K., & Sawhney, A. 2020. Re-anchoring the ontology of IB: A reply to Poulis & Poulis. Academy of Management Perspectives. https://doi.org/10.5465/amp.2019.0106.

O’Mara-Eves, A., Thomas, J., McNaught, J., Miwa, M., & Ananiadou, S. 2015. Using text mining for study identification in systematic reviews: A systematic review of current approaches. Systematic Reviews, 4(1): 5.

Peterson, M. F., Arregle, J. L., & Martin, X. 2012. Multilevel models in international business research. Journal of International Business Studies, 43(5): 451–457.

Peterson, M. F., & Muratova, Y. 2020. Distance in international business research: A commentary. In L. Eden, B. B. Nielsen, & A. Verbeke (Eds.), Research methods in international business (pp. 499–505). Cham: Springer.

Podsakoff, P. M., MacKenzie, S. B., & Podsakoff, N. P. 2016. Recommendations for creating better concept definitions in the organizational, behavioral, and social sciences. Organizational Research Methods, 19(2): 159–203.

Poulis, K., & Poulis, E. 2018. International business as disciplinary tautology: An ontological perspective. Academy of Management Perspectives, 32(4): 517–531.

Rasheed, A. A., & Priem, R. L. 2020. “An A is An A”: We have met the enemy, and he is us! Academy of Management Perspectives, 34(1): 155–163.

Reeb, D., Sakakibara, M., & Mahmood, I. P. 2012. From the Editors: Endogeneity in international business research. Journal of International Business Studies, 43(3): 211–218.

Salter, M. S., & Klein, G. E. 2007. After-action reviews: Current observations and recommendations. Vienna, VA: Wexford Group International Inc. Retrieved August 4, 2020 from https://apps.dtic.mil/dtic/tr/fulltext/u2/a463410.pdf.

Shaver, J. M. 2020. Endogeneity in international business research: A commentary. In L. Eden, B. B. Nielsen, & A. Verbeke (Eds.), Research methods in international business (pp. 377–382). Cham: Springer.

Sheng, J., Amankwah-Amoah, J., & Wang, X. 2017. A multidisciplinary perspective of big data in management research. International Journal of Production Economics, 191: 97–112.

Shenkar, O. 2004. One more time: International business in a global economy. Journal of International Business Studies, 35(2): 161–171.

Silge, J., & Robinson, D. 2016. tidytext: Text mining and analysis using tidy data principles in R. Journal of Open Source Software, 1(3): 37.

Stone-Romero, E. F., & Rosopa, P. J. 2008. The relative validity of inferences about mediation as a function of research design characteristics. Organizational Research Methods, 11(2): 326–352.

Sun, W., Zhao, Y., & Sun, L. 2020. Big data analytics for venture capital application: Towards innovation performance improvement. International Journal of Information Management, 50: 557–565.

Teagarden, M. B., Von Glinow, M. A., & Mellahi, K. 2018. Contextualizing international business research: Enhancing rigor and relevance. Journal of World Business, 53(3): 303–306.

Tho, N. D. 2018. Firm capabilities and performance: A necessary condition analysis. Journal of Management Development, 37(4): 322–332.

Tonidandel, S., King, E. B., & Cortina, J. M. 2018. Big data methods: Leveraging modern data analytic techniques to build organizational science. Organizational Research Methods, 21(3): 525–547.

Tsang, E. W. 2014. Case studies and generalization in information systems research: A critical realist perspective. The Journal of Strategic Information Systems, 23(2): 174–186.

Van der Valk, W., Sumo, R., Dul, J., & Schroeder, R. G. 2016. When are contracts and trust necessary for innovation in buyer–supplier relationships? A necessary condition analysis. Journal of Purchasing and Supply Management, 22(4): 266–277.

Van Iddekinge, C. H., Aguinis, H., Mackey, J. D., & DeOrtentiis, P. S. 2018. A meta-analysis of the interactive, additive, and relative effects of cognitive ability and motivation on performance. Journal of Management, 44(1): 249–279.

van Witteloostuijn, A., Eden, L., & Chang, S. J. 2020. Common method variance in international business research: Further reflections. In L. Eden, B. B. Nielsen, & A. Verbeke (Eds.), Research methods in international business (pp. 409–413). Cham: Springer.

Vandenberg, R. J., & Lance, C. E. 2000. A review and synthesis of the measurement invariance literature: Suggestions, practices, and recommendations for organizational research. Organizational Research Methods, 3(1): 4–70.

Vandor, P., & Franke, N. 2016. See Paris and… found a business? The impact of cross-cultural experience on opportunity recognition capabilities. Journal of Business Venturing, 31(4): 388–407.

Verbeke, A., & Calma, A. 2017. Footnotes on JIBS 1970–2016. Journal of International Business Studies, 48(9): 1037–1044.

Wang, S., Noe, R. A., & Wang, Z. M. 2014. Motivating knowledge sharing in knowledge management systems: A quasi-field experiment. Journal of Management, 40(4): 978–1009.

Wolfson, M. A., Tannenbaum, S. I., Mathieu, J. E., & Maynard, M. T. 2018. A cross-level investigation of informal field-based learning and performance improvements. Journal of Applied Psychology, 103(1): 14–36.

Zhang, Q., Yang, L. T., Chen, Z., & Li, P. 2018. A survey on deep learning for big data. Information Fusion, 42: 146–157.

Acknowledgements

We thank Alain Verbeke and four Journal of International Business Studies anonymous reviewers for highly constructive feedback on previous drafts.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Accepted by Alain Verbeke, Area Editor, 28 June 2020. This article has been with the authors for two revisions.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aguinis, H., Ramani, R.S. & Cascio, W.F. Methodological practices in international business research: An after-action review of challenges and solutions. J Int Bus Stud 51, 1593–1608 (2020). https://doi.org/10.1057/s41267-020-00353-7

DOI: https://doi.org/10.1057/s41267-020-00353-7