-

PDF

- Split View

-

Views

-

Cite

Cite

Qingyu Chen, Nagesh C Panyam, Aparna Elangovan, Karin Verspoor, BioCreative VI Precision Medicine Track system performance is constrained by entity recognition and variations in corpus characteristics, Database, Volume 2018, 2018, bay122, https://doi.org/10.1093/database/bay122

Close - Share Icon Share

Abstract

Precision medicine aims to provide personalized treatments based on individual patient profiles. One critical step towards precision medicine is leveraging knowledge derived from biomedical publications—a tremendous literature resource presenting the latest scientific discoveries on genes, mutations and diseases. Biomedical natural language processing (BioNLP) plays a vital role in supporting automation of this process. BioCreative VI Track 4 brings community effort to the task of automatically identifying and extracting protein–protein interactions (PPi) affected by mutations (PPIm), important in the precision medicine context for capturing individual genotype variation related to disease.

We present the READ-BioMed team’s approach to identifying PPIm-related publications and to extracting specific PPIm information from those publications in the context of the BioCreative VI PPIm track. We observe that current BioNLP tools are insufficient to recognise entities for these two tasks; the best existing mutation recognition tool achieves only 55% recall in the document triage training set, while relation extraction performance is limited by the low recall performance of gene entity recognition. We develop the models accordingly: for document triage, we develop term lists capturing interactions and mutations to complement BioNLP tools, and select effective features via a feature contribution study, whereas an ensemble of BioNLP tools is employed for relation extraction.

Our best document triage model achieves an F-score of 66.77% while our best model for relation extraction achieved an F-score of 35.09% over the final (updated post-task) test set. Impacting the document triage task, the characteristics of mutations are statistically different in the training and testing sets. While a vital new direction for biomedical text mining research, this early attempt to tackle the problem of identifying genetic variation of substantial biological significance highlights the importance of representative training data and the cascading impact of tool limitations in a modular system.

Introduction

Precision medicine is an emerging field (1), aiming to provide specialized medical treatments on the basis of individual patient characteristics, including their genotype, phenotype and other diagnostics (2). Primary biomedical databases represent an extraordinary collective volume of work, comprised of millions of contributions from the biomedical research community over decades (3). For instance, PubMed, the primary biomedical literature database, contains over 28 million biomedical publications (https://www.ncbi.nlm.nih.gov/pubmed/). This literature represents a critical information source for precision medicine, but the vast quantities of unstructured text make it challenging to identify and navigate relevant evidence. Biomedical Natural Language Processing (BioNLP) can be applied to address this problem, with the aim of automatically transforming publications into structured, searchable data. Two primary BioNLP tasks relevant to precision medicine are named entity recognition, e.g. as applied to recognize mentions of mutations in articles (4) and relation extraction, e.g. to identify interactions, such as protein–protein interactions (PPI), between biological entities described in papers (5). Few attempts have been made to closely integrate these tasks to understand higher-level interactions between them; recent efforts such as LitVar (6) emphasize sentence-level co-occurrence of entities but do not consider higher-order interactions between entities and relations. In the context of precision medicine, identification and extraction of PPI affected by mutations (PPIm) described in the literature (8) supports synthesis and, in turn, deeper understanding of the biological impacts of genetic variation.

The BioCreative VI Track 4 aimed to bring community effort to tackle this particular challenge (7). It established a gold standard dataset, consisting of 5509 biomedical articles that were manually annotated for PPIm statements (7). The track offered two related tasks for participation: (i) document triage, classifying whether or not a document is relevant to PPIm and returning the top-ranked relevant documents; and (ii) relation extraction, examining the document to identify specific protein pairs whose interaction is affected by a mutation. These tasks aim to support curation of the information relevant to precision medicine such that effort by human biocurators to catalogue PPIm facts is more effective; biocurators can focus on the top-ranked relevant documents without tedious manual examination of large quantities of irrelevant documents. This in turn facilitates precision medicine.

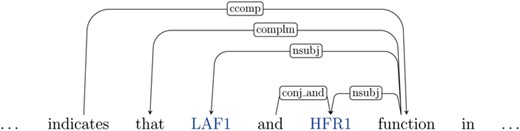

An example of a relevant PPIm relation that is expected to be identified in the relation extraction task appears in the sentence `LAF1, an R2R3-MYB factor, interacts with HFR1, a basic helix–loop–helix (bHLH) factor, and this interaction is abolished by the R97A mutation in the LAF1 R2R3 domain.’ [PMID:17699755], where the `LAF1–HFR1’ interaction is impacted by the R97A mutation in LAF1.

In the official results of this shared task presented at the BioCreative VI workshop, our best document triage model achieved over 88% recall, achieving the third highest recall amongst 22 submissions, while our best model for relation extraction achieved a Micro F1-score of 37.17%, ranking second amongst six submissions just behind the top team at 37.29%. Updated results provided post-workshop after correction of the test set lift our system to top rank across three out of four measures (described in detail below).

In this work, we present the READ-Biomed team system developed for BioCreative VI Track 4, for detection of documents relevant to PPIm and extraction of PPIm relations, substantially extending the original system description paper (9). Specifically, there are three main additional contributions beyond our original submission.

We provide an in-depth investigation of the original training set, by quantifying the effectiveness of a range of standard BioNLP tools for biological entity recognition. Application of these tools is a typical first important step in biocuration workflows and text mining pipelines. The analysis shows that existing BioNLP tools alone are not sufficient for recognising mutations or interactions. For example, the best mutation recognizer can achieve only ∼55% recall in the document triage data set; similarly, the maximum recall performance achievable by any relation extraction system is 56% on the provided data set when only using the standardly available tools. This suggests that the proposed models should leverage other techniques beyond standard BioNLP tools. It also shows that achieving high recall, especially for document triage task, is essential: low recall of relevant documents has propagated impacts on the later relation extraction step.

We study our models more comprehensively than in the original submission. This includes a detailed description of the feature engineering; providing a feature contribution study to quantify the effects of different sets of features in both tasks. For document triage, we leverage BioNLP tools and term lists to better capture mutations and interactions and experiment with several classification algorithms to detect relevant documents. The best model achieves ∼89% recall, significantly overcoming the limitations of the current BioNLP tools. For relation extraction, we study machine learning methods using term lists and dependency graph kernels and a heuristically designed co-occurrence based approach. Coupled with standard BioNLP tools for entity recognition, we achieve an F-score of 35.09 and 34.92% on the test using the machine learning and co-occurrence based approaches, respectively.

We provide an in-depth error analysis of our performance on both focus tasks, and a case study for a document triage model. The results collectively show that mutation characteristics have different impacts on the two tasks. For document triage, the model performance is dramatically decreased due to statistically significant differences in mutation characteristics between the training and testing set.

Analysis of the training set

Much work in BioNLP applies supervised machine learning to learn task models. Building supervised learning models, in general, consists of examining the characteristics of the training sets, developing features accordingly, choosing appropriate models, training and validating these models via standard evaluation metrics, e.g. precision and recall for classification tasks, and ultimately applying the developed models to the testing sets. Underlying this approach, there are two critical and implicit assumptions: (i) the characteristics of the testing set are similar to the provided training set (i.e. derived from similar distributions), such that the models capturing the important features and characteristics from the training set can be applied to the testing set and (ii) while the characteristics are similar, the testing set instances are novel; they are ‘hidden’ during the training process, and the evaluation over the testing set is a test of the generalisation of the trained model to unseen instances. Under these assumptions, analysis of the characteristics of the training set is the first step in developing supervised models. In this section, we investigate the BioCreative VI Track 4 training set and illustrate how it relates to the two focus tasks.

PPIm document triage

The document triage task is framed as a binary text classification task, i.e. classifying whether a document is relevant for PPIm or not. However, the task is related to a targeted information retrieval task, where the objective is to output documents ranked in order of relevance. The notion of relevance is context-dependent (10); a document in the context of this task is relevant if it describes PPI impacted by mutations. In other words, a relevant document must (i) mention at least one mutation, (ii) describe at least one protein interaction and (iii) indicate that there is some change in the interaction that can be considered to be caused by the mutation. To classify a document, standard text classification methods make use of tokens in the text; in the context of the PPIm task, terms corresponding to mutations, proteins and interactions may be pre-identified using entity recognition tools and given special status. A number of popular BioNLP entity recognition tools have been widely used for such entities; for instance, tmVar (4, 11), GNormPlus (12) and PIE the search (13) can be used to identify mutations, genes/proteins and possible interactions, respectively. PIE the search outputs the probability that a document contains an interaction, whereas tmVar and GNormPlus outputs individual recognized entities.

Thus, as the first step to examining the characteristics of the training set, we apply existing BioNLP entity recognition tools to recognize mutations and interactions. We quantify (i) how different the relevant and non-relevant documents are in terms of entities, e.g. if there are many more mutations identified in the relevant instances, it may be an important feature to distinguish the two classes and (ii) how well the tools identify mutations and interactions in the PPIm-relevant instances in the training set. Given the task definition, relevant documents would be expected to mention at least one mutation and some interaction; such annotations may be important features for supervised models learned for the task.

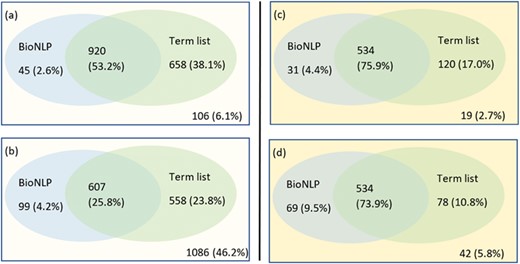

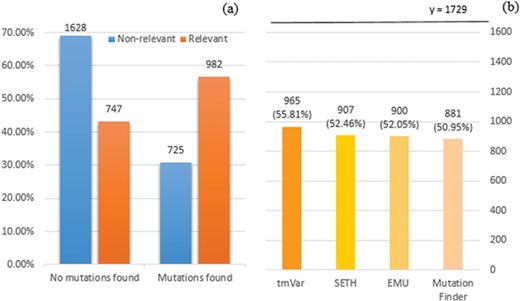

We employed tmVar (4), SETH (13), EMU (14) and MutationFinder (15) to recognize mutations in the training set. These tools have been used in a range of applications, such as evidence attribution for biological database curation (16) and genotype–phenotype extraction for precision medicine (17). The tools have been shown to have complementary coverage (18). Figure 1 presents the corresponding results: 1(a) shows the number of documents having mutations identified by at least one tool, whereas 1(b) specifically shows the number of relevant documents having mutations identified by individual tools. Overall it shows that relevant documents include identified mutation mentions more often than non-relevant documents, 56.8% vs 30.8% (and in fact, relevant documents could be expected to have 100% in reality), which shows that mutation related information from BioNLP tools can be potentially important to distinguish relevant from non-relevant documents. Nevertheless, the performance of the tools is relatively low; in this task the best tool identifies mutations in only 56% of relevant documents. The main reason for this gap is that many mutations or interactions are mentioned through general references rather than precise descriptions of individual mutations: as an example, `mutagenesis’ is the only term describing mutations in the paper (PMID:20485264 from the training set); this general mention is not detected by the tools. This suggests the necessity of alternative approaches to complement tools identifying specific mutations.

(a) Distribution of documents having a mutation identified by at least one of four mutation detection tools. Relevant: documents labelled as relevant for PPIm in the training set, otherwise non-relevant. Mutations found: documents have a mutation mention identified by at least one of the tools, otherwise no mutations found. The y-axis corresponds to the proportion relative to the relevant or non-relevant document collections, respectively. For instance, almost 70% of non-relevant documents (1628 out of 2353) have no detected mutation mentions. (b) Distribution of relevant documents having mutations identified by individual tools; y = 1729 is the total number of relevant documents, in which we would expect to have at least one mutation mentioned per task definition.

The exploration of protein interaction characteristics in the training set yields more consistent results. We show in Figure 2 the result of applying PIE `the search’ as the representative BioNLP tool for interaction extraction. Figure 2(a) and (b) represent the probability score distribution for non-relevant and relevant documents, respectively. Similarly to the mutation characteristics, relevant documents on average have higher probability score; however, still over 37% of relevant documents have a relatively low probability score, less than 0.7. Given that PIE `the search’ has demonstrated very high precision at high confidence, and overall good F1-score of ∼0.62 for PPI extraction in prior studies, it is surprising not to see a larger proportion of the PPIm-relevant documents with high-confidence extractions of PPIs.

Distribution of the probability of documents having interactions identified by PIE the search. The x-axis displays the probability score output by PIE the search (normalized to [0, 1]); a higher score indicates higher probability of having interaction. The y-axis reflects the number of documents. (a) and (b) represent the distribution for non-relevant (blue) and relevant documents (orange), respectively.

Relation extraction

In this task, the goal is to extract interacting gene pairs from a document, where that interaction is affected by a mutation. Towards this goal, the task offers a collection of PubMed documents with relation annotations and a limited set of entity annotations. We illustrate these details with a sample sentence taken from the document with PMID:10037723, with a given PPIm relation annotated for the protein pair (4292, 5395), where these numbers correspond to the normalized gene identifiers for the genes `hMLH1’ and `hPMS2’.

`Interestingly, two HNPCC missense alterations (Q542L and L582V) contained within the consensus interaction region displayed no effect on interaction with hPMS2, suggesting that they may affect other functions of hMLH1.'

The entities mentioned in the above text, such as the disease HNPCC, the mutation Q542L and the gene hPMS2, are shown highlighted. Note that the annotations of PPIm relations provided in the data are document-level rather than annotated to specific mentions of genes in the text. This means that there is no guarantee that the two protein/gene mentions for a PPIm relation co-occur in the same sentence, and we do not know precisely where in the text the PPIm relation is expressed.

Our approach to relation extraction is to cast it as a supervised classification task over pairs of entity mentions (full details of our approach will be provided below). From the positively classified set of entity mention pairs, we map each entity mention to its equivalent normalized entity identifier and output pairs of entity identifiers that are in a relation. Therefore, for the PPIm relation extraction task, we must first perform entity recognition of gene mentions in the text, along with normalisation to NCBI Gene identifiers. We use the relation annotations provided in the document for training our classifier, but we found that the entity annotations provided in the task dataset are not comprehensive. For example, the protein mention `hMLH1’ in the above sentence is not annotated in the dataset. Further, the gene-annotations provided in the PPIm dataset are limited, as only those genes that participate in a PPIm relation are annotated. Therefore, we used GNormPlus in our earlier work (1), to get a broader set of gene annotations. In this work, we extend our investigation to include another entity annotator, namely the Pubtator web API (21, 22) and explain in detail how the different entity annotators differ from each other.

Differences in entity annotators:

In our original work for the shared task in BioCreative-VI Track 4, we started with a clean slate approach by stripping all existing entity annotations from the training set. We then used GNormPlus (15) as sole entity annotator over the training and test datasets. In the current work, we investigate the impact of different annotation tools, starting with the default set of entity annotations provided in the task datasets (referred to as `Task annotations’ hereafter), GNormPlus and then Pubtator. The Task annotations are created manually and are of gold standard but do not represent a realistic entity annotation scheme. This is because they only contain annotations of those entities that participate directly in a PPIm relationship. In other words, the Task annotation scheme assumes knowledge of PPIm relations in the document and further encodes this knowledge by leaving out the annotations of entities that do not participate in any PPIm relation. In contrast, the external entity annotation tools, namely GNormPlus and Pubtator, represent more realistic annotation schemes, although they too are affected by errors. Our main motivation to include Pubtator in the current work is because it offers annotations for other event types such as mutations (incorporating the tmVar tool studied above), species names and chemicals, whose modeling can likely improve the relation extraction performance. We note that Pubtator too uses GNormPlus internally for gene annotations. However, there is a difference in the versions: Pubtator uses an older version of GNormPlus than is currently available for download. In this article, when we refer to GNormPlus, we mean the latest version of GNormPlus (15). An entity annotation can be considered as a triple (document id, character span, entity id). The output of applying the entity annotators is a set of entity annotations, which can be directly merged (union) when the underlying text span does not overlap. Therefore, integrating the non-gene annotations from Pubtator is straightforward, but it is not clear as to how to combine different entity annotations that overlap with each other. This is illustrated in this sample text from document PMID:17724026:

`Immunoprecipitations were performed in myocytes expressing PKCzeta using PKC phospho-motif antibodies to determine the phosphorylation of cTnI, cTnT, tropomyosin, myosin-binding protein C, and desmin.’

For the above sentence GNorm Plus annotates the substring `myosin-binding protein C, and desmin’ as a gene, but the corresponding Task annotation is just for the substring `desmin’. These differences can impact the relation extraction pipeline, such as entity masking steps, tokenisation, parsing and the overall feature representation of the sentence. Entity annotations can also differ on the entity ID, which is harder to resolve, as illustrated in this sample from the document PMID:10067897:

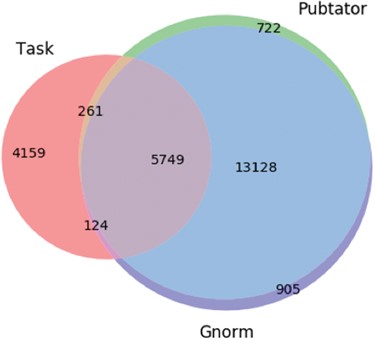

Here, the character span `Pbx1’ is annotated with id 32567 by Pubtator and with id 5087 by GNorm. Gene 32567 relates to an extra denticle in Drosophilia (see https://www.ncbi.nlm.nih.gov/gene/32567) and 5087 (see https://www.ncbi.nlm.nih.gov/gene/5087) is a gene that influences skeletal programming in mammals, but these two different genes share a common name `Pbx1’; this leads to the confusion. The extent of differences in the different annotation tools are illustrated in Figure 3.`Together, these protein-DNA and protein-protein interactions define the general principles by which homeotic proteins interact with Extradenticle (or Pbx1) to affect development along the anterior-posterior axis of animals.’

Venn diagram showing the differences in the gene entity annotations over the PPIm dataset. An entity annotation is regarded as a triple (document id, character span, entity id). The three sets are the set of annotations given in the task dataset (Task) and from the two entity annotators namely Pubtator and GNormPlus (GNorm).

Ensemble of entity annotators

We followed a simple heuristic approach to consolidate the entity annotations from the different sources. We include all non-gene entity annotations from Pubtator. When two entity annotations e1, e2 overlap, i.e. if they refer to common text segments in a document, they are combined into a single annotation, using the following guidelines:

Take the minimal superstring that encompasses the character spans of both e1 and e2 as the character span of the resultant entity annotation.

We prioritize the annotations in the following order: Task, GNorm Plus and Pubtator. For overlapping annotations, we take the gene id from the annotation source with higher priority.

A summary of the distribution of entity annotations in the Relation Extraction Dataset is presented in Table 1.

Distribution of entities as recognised by different entity annotators in the PPIm dataset

| Count . | Annotator . | Training set . | Test set . |

|---|---|---|---|

| Number of documents | - | 597 | 632 |

| Number of PPI relations | Task | 760 | 868 |

| Gene annotations | Task | 8832 | 1461 |

| GNorm | 8677 | 11229 | |

| Pubtator | 8801 | 11059 | |

| Annotator ensemble | 10897 | 11665 | |

| Mutations | Pubtator | 557 | 1722 |

| Species | Pubtator | 1102 | 948 |

| Count . | Annotator . | Training set . | Test set . |

|---|---|---|---|

| Number of documents | - | 597 | 632 |

| Number of PPI relations | Task | 760 | 868 |

| Gene annotations | Task | 8832 | 1461 |

| GNorm | 8677 | 11229 | |

| Pubtator | 8801 | 11059 | |

| Annotator ensemble | 10897 | 11665 | |

| Mutations | Pubtator | 557 | 1722 |

| Species | Pubtator | 1102 | 948 |

Distribution of entities as recognised by different entity annotators in the PPIm dataset

| Count . | Annotator . | Training set . | Test set . |

|---|---|---|---|

| Number of documents | - | 597 | 632 |

| Number of PPI relations | Task | 760 | 868 |

| Gene annotations | Task | 8832 | 1461 |

| GNorm | 8677 | 11229 | |

| Pubtator | 8801 | 11059 | |

| Annotator ensemble | 10897 | 11665 | |

| Mutations | Pubtator | 557 | 1722 |

| Species | Pubtator | 1102 | 948 |

| Count . | Annotator . | Training set . | Test set . |

|---|---|---|---|

| Number of documents | - | 597 | 632 |

| Number of PPI relations | Task | 760 | 868 |

| Gene annotations | Task | 8832 | 1461 |

| GNorm | 8677 | 11229 | |

| Pubtator | 8801 | 11059 | |

| Annotator ensemble | 10897 | 11665 | |

| Mutations | Pubtator | 557 | 1722 |

| Species | Pubtator | 1102 | 948 |

The limitation imposed by entity annotators on the maximum relation extraction recall achievable can be seen in Table 2.

Maximum recall achievable by our relation extraction system with different entity annotation schemes

| Entity annotation . | Maximum recall achievable by relation extraction . |

|---|---|

| GNormPlus | 55.95 |

| GNormPlus + Pubtator | 56.30 |

| Task annotations | 1.0 |

| Annotator ensemble | 1.0 |

| Entity annotation . | Maximum recall achievable by relation extraction . |

|---|---|

| GNormPlus | 55.95 |

| GNormPlus + Pubtator | 56.30 |

| Task annotations | 1.0 |

| Annotator ensemble | 1.0 |

Maximum recall achievable by our relation extraction system with different entity annotation schemes

| Entity annotation . | Maximum recall achievable by relation extraction . |

|---|---|

| GNormPlus | 55.95 |

| GNormPlus + Pubtator | 56.30 |

| Task annotations | 1.0 |

| Annotator ensemble | 1.0 |

| Entity annotation . | Maximum recall achievable by relation extraction . |

|---|---|

| GNormPlus | 55.95 |

| GNormPlus + Pubtator | 56.30 |

| Task annotations | 1.0 |

| Annotator ensemble | 1.0 |

Summary of the training set study

The examination of the training set characteristics gives three implications: (i) entity recognition is important for both tasks; for example, entities recognized by BioNLP tools can be important features to differentiate relevant and non-relevant documents; (ii) while entity recognition by BioNLP tools is important, complementary approaches are necessary given that many mutations and interactions of the relevant documents cannot be identified; and (iii) the proposed model should have a high recall for both tasks. Arguably, recall is not an effective evaluation metric in general information retrieval domains (23) and is not in bioinformatics domains either, such as biological sequence database retrieval (24). In this task, however, it is critical since the standard BioNLP tools have a relatively low recall in entity recognition as shown above. Arguably, recall is even more important for the document triage task; the relation extraction system is reliant on having previously identified relevant documents.

Methods

In this section, we describe the models we developed for the two tasks that form part of BioCreative VI Track 4.

Document triage models

For document triage, we develop a range of features and quantify the importance of the features via a feature contribution study using a simple logistic regression classifier. The above analysis on the training set shows that while mutations and interactions are important features to distinguish relevant and non-relevant documents, using BioNLP tools alone cannot find many relevant mutation and interaction mentions. We therefore use other complementary approaches to develop features, as described below.

Feature engineering

We develop a set of features based on a variety of characteristics of the text, according to four primary aspects. We calculate distinct features for both sentences and paragraphs (structure-based), considering both terms identified as key biological concepts and any word (perspective-based) and then considering either individual occurrence, a co-occurrence of two or three terms (occurrence-based). Each of these is represented in terms of various quantities (count-based).

The structure-based aspect defines features based on the structure of the document; in this task, there are two relevant structures: features developed from paragraphs (that is, the title and the abstract) and sentences respectively (that is, each sentence in the paragraph). For instance, the number of genes in total identified in a paragraph by BioNLP tools is a feature derived from paragraph-level analysis, whereas the number of sentences having mutations identified by BioNLP tools is a feature derived from sentences.

The perspective-based aspect defines features according to the perspective through which key terms in the texts are identified: based on BioNLP tools, matched to a pre-defined term list, or both.

In BioNLP systems, term lists that capture important entities are often used to complement automatic BioNLP tools (25–27). We chose three BioNLP tools: tmVar (4) to detect mutations (although Figure 1 shows the ensemble of four tools has the highest number of mutations detected, tmVar alone detects nearly all of them and others are almost strict subsets of mutations identified by tmVar), GNormPlus (12) to detect genes and interactions (if the number of genes detected is greater than or equal to two (2)) and PIE the search (13) to output the probability score. Given that PIE the search outputs the probability score rather than specific entities, we use the score as a separate feature.

Given that BioNLP tools cannot find most of the entities, as we showed above, term lists are particularly useful in this task. We develop three term lists for `mutations’, `interactions’ and `degrees’ (changes to interactions due to mutations), respectively.

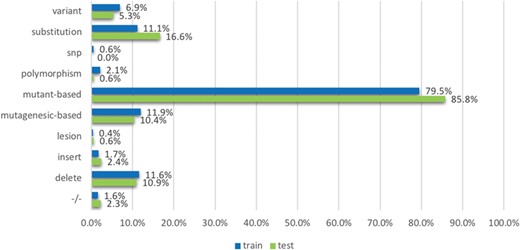

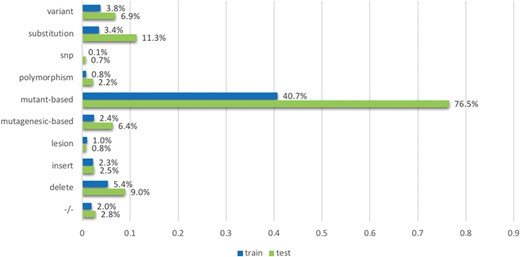

The `mutation’ term list contains terms which were based on the observations from existing mutation resources such as the Variome corpus (28). The list is split into `strong’ and `weak’ terms. `Strong’ terms are mostly unambiguously used to describe mutations in the literature: mutant-based terms (`mutation, mutant, and mutants/variant’), `delete’, `insertion’, `substitution’, −/−, +/−, the terms `polymorphism’, `SNP’, `lesion’, mutagenesis-based terms (`mutagenesis, mutagenic, mutagenetic and mutagenesis/delete’), `deleterious’ and `variant’. The `weak’ terms considered include `change’, ‘exchange’, ‘damage’, ‘remove’, ‘replace’, ‘disorder’, ‘deficiency’, ‘virulence’ and ‘truncation’. While they are used to describe mutations, they may also be used in other contexts; for instance, the term change can be used in `DNA base change’ but can also be used in ‘changes in the distribution’. We process strong and weak terms separately when developing features: ‘strong’ terms themselves can indicate the mentions of mutations directly; in contrast, ‘weak’ terms can indicate the mentions of mutations only if the amino acids (such as Alanine and Arginine) are co-mentioned in the text. We use the amino acid patterns provided in a previous study (29) to detect the amino acids.

The `interaction’ term list contains 30 terms describing interactions frequently in literature, such as ‘interact’, ‘complex’, ‘bound’, ‘bind’, ‘regulate’, ‘kinase’, ‘acetylation’, ‘phosphorylation’ and many others.

We further create a list of 23 `degree’ terms that are indicative of the impact a mutation may have on a protein interaction. For example, `increase’ is a term often used to describe that the presence of a mutation increases the level of interaction, including ‘degrade’, ‘decrease’, ‘strengthen’, ‘enhance’, ‘reduce’ and ‘impair’. These degree terms are derived from molecular interactions ontologies (from www.ebi.ac.uk/ols/ontologies/mi). All the term lists are available in the repository. For the terms mentioned above, we applied stemming to the original text and then did exact matching to identify them in the texts.

The occurrence-based aspect is based on whether interactions, mutations and degrees appear in three ways: `individual’, where interactions or mutations exist alone; `co-occurrence’, where interactions and mutations appear together within the relevant structural scope and `triplet’, whether a mutation–degree–interaction triplet appears. Since documents relevant for the PPIm task must have both interactions and mutations mentioned, features derived from this category can potentially distinguish positive documents from negatives.

Quantitative features are determined for each combination of the above aspect using a choice from the counting-based aspect. If an entity or a co-occurrence of two entities is detected from a single perspective, i.e. either based on BioNLP tools or term lists, we count the `total’ number of mentions or the number of `unique’ entities. In contrast, if an entity is detected through both perspectives, there are three possibilities: (i) `intersection’: for example, the number of entity or co-occurrence mentions identified by both BioNLP tools and the term lists; (ii) `union’: the count of entities or co-occurrences where either approach has identified them; and (iii) `complement’: for example, GNormPlus finds two genes and the mutation term lists finds a mutation in a sentence; in this case, BioNLP tools and term lists complement each other.

We incorporate two additional quantitative features: (i) the probability score from PIE the search is a separate feature as mentioned above and (ii) the `impact’ of a sentence based on the co-occurrence of interactions and mutations using the simple algorithm shown in Algorithm 1. In this case, the impact score is a real-valued number between 0 and 1.

The algorithm measures the 'impact' of a sentence. If there is a co-occurrence relationship, identified either using intersection, union or complement as mentioned in Counting-based category, it firstly calculates the mutation and interaction scores by summing the total mentions and normalising to [0, 1]. Then it calculates the impact score based on the weight of mutation and interaction scores (α and β). The default weights are 0.5, meaning that mutations and interactions are equally important.

Overall, we develop features by combining choices within these different aspects. For example, the number of sentences containing both interactions and mutations identified by BioNLP tools follows the `Sentence-BioConcept-Co-occurrence-Total’ aspect settings.

Feature contribution study results. Each set of features in a row is added to the existing feature set; for example, term paragraph individual features represent the new features added to the baseline features. The description of a set is consistent with the description of feature aspects. 2-gram: every two sentences. Fold 1 represents the first fold using 10-fold cross-validation, same for other folds. Table 4 provides the detailed descriptions of the best feature set found in the feature contribution study

| . | Fold 1 . | 2 . | 3 . | 4 . | 5 . | 6 . | 7 . | 8 . | 9 . | 10 . | F1 mean (±std) . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Baseline | 0.6390 | 0.6418 | 0.6457 | 0.6118 | 0.6435 | 0.6577 | 0.6611 | 0.6461 | 0.6286 | 0.5714 | 0.6347 (±0.0262) |

| Term paragraph individual features (S1) | 0.6796 | 0.6523 | 0.7120 | 0.6538 | 0.6832 | 0.7143 | 0.6610 | 0.7119 | 0.6991 | 0.6386 | 0.6806 (±0.0281) |

| Term paragraph co-occurrence features (S2) | 0.6839 | 0.6541 | 0.7065 | 0.6474 | 0.6814 | 0.7128 | 0.6629 | 0.7139 | 0.7012 | 0.6488 | 0.6813 (±0.0266) |

| BioNLP paragraph individual features (S3) | 0.6753 | 0.6542 | 0.7215 | 0.6538 | 0.6868 | 0.7363 | 0.6685 | 0.7155 | 0.7012 | 0.6667 | 0.6880 (±0.0292) |

| BioNLP paragraph co-occurrence features (S4) | 0.6753 | 0.6584 | 0.7215 | 0.6538 | 0.6923 | 0.7363 | 0.6722 | 0.7135 | 0.6972 | 0.6667 | 0.6887 (±0.0281) |

| Term sentence individual feature (S5) | 0.6753 | 0.6522 | 0.7139 | 0.6431 | 0.6904 | 0.7415 | 0.6685 | 0.7155 | 0.7012 | 0.6588 | 0.6860 (±0.0318) |

| Term sentence co-occurrence feature (S6) | 0.7386 | 0.6847 | 0.7538 | 0.6688 | 0.6885 | 0.7558 | 0.7193 | 0.7348 | 0.7169 | 0.6586 | 0.7120 (±0.0349) |

| BioNLP sentence individual feature (S7) | 0.7386 | 0.6828 | 0.7570 | 0.6731 | 0.6885 | 0.7609 | 0.7158 | 0.7348 | 0.7169 | 0.6606 | 0.7129 (±0.0353) |

| BioNLP sentence co-occurrence feature (S8) | 0.7320 | 0.6826 | 0.7468 | 0.6688 | 0.6921 | 0.7520 | 0.7123 | 0.7313 | 0.7169 | 0.6727 | 0.7108 (±0.0303) |

| Term & BioNLP sentence feature (S9) | 0.7284 | 0.6903 | 0.7558 | 0.6730 | 0.6957 | 0.7572 | 0.7033 | 0.7308 | 0.7234 | 0.6607 | 0.7118 (±0.0328) |

| Term & BioNLP sentence triplet feature (S10) | 0.7273 | 0.6825 | 0.7538 | 0.6730 | 0.6940 | 0.7461 | 0.7139 | 0.7534 | 0.7077 | 0.6786 | 0.7130 (±0.0311) |

| Term & BioNLP sentence triplet 2-gram feature (S11) | 0.7273 | 0.6807 | 0.7661 | 0.6752 | 0.6959 | 0.7532 | 0.7174 | 0.7520 | 0.7099 | 0.6806 | 0.7158 (±0.0332) |

| Full feature set plus boosting | 0.7571 | 0.7086 | 0.7646 | 0.7108 | 0.7059 | 0.7809 | 0.7500 | 0.7627 | 0.7251 | 0.6899 | 0.7355 (±0.0311) |

| . | Fold 1 . | 2 . | 3 . | 4 . | 5 . | 6 . | 7 . | 8 . | 9 . | 10 . | F1 mean (±std) . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Baseline | 0.6390 | 0.6418 | 0.6457 | 0.6118 | 0.6435 | 0.6577 | 0.6611 | 0.6461 | 0.6286 | 0.5714 | 0.6347 (±0.0262) |

| Term paragraph individual features (S1) | 0.6796 | 0.6523 | 0.7120 | 0.6538 | 0.6832 | 0.7143 | 0.6610 | 0.7119 | 0.6991 | 0.6386 | 0.6806 (±0.0281) |

| Term paragraph co-occurrence features (S2) | 0.6839 | 0.6541 | 0.7065 | 0.6474 | 0.6814 | 0.7128 | 0.6629 | 0.7139 | 0.7012 | 0.6488 | 0.6813 (±0.0266) |

| BioNLP paragraph individual features (S3) | 0.6753 | 0.6542 | 0.7215 | 0.6538 | 0.6868 | 0.7363 | 0.6685 | 0.7155 | 0.7012 | 0.6667 | 0.6880 (±0.0292) |

| BioNLP paragraph co-occurrence features (S4) | 0.6753 | 0.6584 | 0.7215 | 0.6538 | 0.6923 | 0.7363 | 0.6722 | 0.7135 | 0.6972 | 0.6667 | 0.6887 (±0.0281) |

| Term sentence individual feature (S5) | 0.6753 | 0.6522 | 0.7139 | 0.6431 | 0.6904 | 0.7415 | 0.6685 | 0.7155 | 0.7012 | 0.6588 | 0.6860 (±0.0318) |

| Term sentence co-occurrence feature (S6) | 0.7386 | 0.6847 | 0.7538 | 0.6688 | 0.6885 | 0.7558 | 0.7193 | 0.7348 | 0.7169 | 0.6586 | 0.7120 (±0.0349) |

| BioNLP sentence individual feature (S7) | 0.7386 | 0.6828 | 0.7570 | 0.6731 | 0.6885 | 0.7609 | 0.7158 | 0.7348 | 0.7169 | 0.6606 | 0.7129 (±0.0353) |

| BioNLP sentence co-occurrence feature (S8) | 0.7320 | 0.6826 | 0.7468 | 0.6688 | 0.6921 | 0.7520 | 0.7123 | 0.7313 | 0.7169 | 0.6727 | 0.7108 (±0.0303) |

| Term & BioNLP sentence feature (S9) | 0.7284 | 0.6903 | 0.7558 | 0.6730 | 0.6957 | 0.7572 | 0.7033 | 0.7308 | 0.7234 | 0.6607 | 0.7118 (±0.0328) |

| Term & BioNLP sentence triplet feature (S10) | 0.7273 | 0.6825 | 0.7538 | 0.6730 | 0.6940 | 0.7461 | 0.7139 | 0.7534 | 0.7077 | 0.6786 | 0.7130 (±0.0311) |

| Term & BioNLP sentence triplet 2-gram feature (S11) | 0.7273 | 0.6807 | 0.7661 | 0.6752 | 0.6959 | 0.7532 | 0.7174 | 0.7520 | 0.7099 | 0.6806 | 0.7158 (±0.0332) |

| Full feature set plus boosting | 0.7571 | 0.7086 | 0.7646 | 0.7108 | 0.7059 | 0.7809 | 0.7500 | 0.7627 | 0.7251 | 0.6899 | 0.7355 (±0.0311) |

Feature contribution study results. Each set of features in a row is added to the existing feature set; for example, term paragraph individual features represent the new features added to the baseline features. The description of a set is consistent with the description of feature aspects. 2-gram: every two sentences. Fold 1 represents the first fold using 10-fold cross-validation, same for other folds. Table 4 provides the detailed descriptions of the best feature set found in the feature contribution study

| . | Fold 1 . | 2 . | 3 . | 4 . | 5 . | 6 . | 7 . | 8 . | 9 . | 10 . | F1 mean (±std) . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Baseline | 0.6390 | 0.6418 | 0.6457 | 0.6118 | 0.6435 | 0.6577 | 0.6611 | 0.6461 | 0.6286 | 0.5714 | 0.6347 (±0.0262) |

| Term paragraph individual features (S1) | 0.6796 | 0.6523 | 0.7120 | 0.6538 | 0.6832 | 0.7143 | 0.6610 | 0.7119 | 0.6991 | 0.6386 | 0.6806 (±0.0281) |

| Term paragraph co-occurrence features (S2) | 0.6839 | 0.6541 | 0.7065 | 0.6474 | 0.6814 | 0.7128 | 0.6629 | 0.7139 | 0.7012 | 0.6488 | 0.6813 (±0.0266) |

| BioNLP paragraph individual features (S3) | 0.6753 | 0.6542 | 0.7215 | 0.6538 | 0.6868 | 0.7363 | 0.6685 | 0.7155 | 0.7012 | 0.6667 | 0.6880 (±0.0292) |

| BioNLP paragraph co-occurrence features (S4) | 0.6753 | 0.6584 | 0.7215 | 0.6538 | 0.6923 | 0.7363 | 0.6722 | 0.7135 | 0.6972 | 0.6667 | 0.6887 (±0.0281) |

| Term sentence individual feature (S5) | 0.6753 | 0.6522 | 0.7139 | 0.6431 | 0.6904 | 0.7415 | 0.6685 | 0.7155 | 0.7012 | 0.6588 | 0.6860 (±0.0318) |

| Term sentence co-occurrence feature (S6) | 0.7386 | 0.6847 | 0.7538 | 0.6688 | 0.6885 | 0.7558 | 0.7193 | 0.7348 | 0.7169 | 0.6586 | 0.7120 (±0.0349) |

| BioNLP sentence individual feature (S7) | 0.7386 | 0.6828 | 0.7570 | 0.6731 | 0.6885 | 0.7609 | 0.7158 | 0.7348 | 0.7169 | 0.6606 | 0.7129 (±0.0353) |

| BioNLP sentence co-occurrence feature (S8) | 0.7320 | 0.6826 | 0.7468 | 0.6688 | 0.6921 | 0.7520 | 0.7123 | 0.7313 | 0.7169 | 0.6727 | 0.7108 (±0.0303) |

| Term & BioNLP sentence feature (S9) | 0.7284 | 0.6903 | 0.7558 | 0.6730 | 0.6957 | 0.7572 | 0.7033 | 0.7308 | 0.7234 | 0.6607 | 0.7118 (±0.0328) |

| Term & BioNLP sentence triplet feature (S10) | 0.7273 | 0.6825 | 0.7538 | 0.6730 | 0.6940 | 0.7461 | 0.7139 | 0.7534 | 0.7077 | 0.6786 | 0.7130 (±0.0311) |

| Term & BioNLP sentence triplet 2-gram feature (S11) | 0.7273 | 0.6807 | 0.7661 | 0.6752 | 0.6959 | 0.7532 | 0.7174 | 0.7520 | 0.7099 | 0.6806 | 0.7158 (±0.0332) |

| Full feature set plus boosting | 0.7571 | 0.7086 | 0.7646 | 0.7108 | 0.7059 | 0.7809 | 0.7500 | 0.7627 | 0.7251 | 0.6899 | 0.7355 (±0.0311) |

| . | Fold 1 . | 2 . | 3 . | 4 . | 5 . | 6 . | 7 . | 8 . | 9 . | 10 . | F1 mean (±std) . |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Baseline | 0.6390 | 0.6418 | 0.6457 | 0.6118 | 0.6435 | 0.6577 | 0.6611 | 0.6461 | 0.6286 | 0.5714 | 0.6347 (±0.0262) |

| Term paragraph individual features (S1) | 0.6796 | 0.6523 | 0.7120 | 0.6538 | 0.6832 | 0.7143 | 0.6610 | 0.7119 | 0.6991 | 0.6386 | 0.6806 (±0.0281) |

| Term paragraph co-occurrence features (S2) | 0.6839 | 0.6541 | 0.7065 | 0.6474 | 0.6814 | 0.7128 | 0.6629 | 0.7139 | 0.7012 | 0.6488 | 0.6813 (±0.0266) |

| BioNLP paragraph individual features (S3) | 0.6753 | 0.6542 | 0.7215 | 0.6538 | 0.6868 | 0.7363 | 0.6685 | 0.7155 | 0.7012 | 0.6667 | 0.6880 (±0.0292) |

| BioNLP paragraph co-occurrence features (S4) | 0.6753 | 0.6584 | 0.7215 | 0.6538 | 0.6923 | 0.7363 | 0.6722 | 0.7135 | 0.6972 | 0.6667 | 0.6887 (±0.0281) |

| Term sentence individual feature (S5) | 0.6753 | 0.6522 | 0.7139 | 0.6431 | 0.6904 | 0.7415 | 0.6685 | 0.7155 | 0.7012 | 0.6588 | 0.6860 (±0.0318) |

| Term sentence co-occurrence feature (S6) | 0.7386 | 0.6847 | 0.7538 | 0.6688 | 0.6885 | 0.7558 | 0.7193 | 0.7348 | 0.7169 | 0.6586 | 0.7120 (±0.0349) |

| BioNLP sentence individual feature (S7) | 0.7386 | 0.6828 | 0.7570 | 0.6731 | 0.6885 | 0.7609 | 0.7158 | 0.7348 | 0.7169 | 0.6606 | 0.7129 (±0.0353) |

| BioNLP sentence co-occurrence feature (S8) | 0.7320 | 0.6826 | 0.7468 | 0.6688 | 0.6921 | 0.7520 | 0.7123 | 0.7313 | 0.7169 | 0.6727 | 0.7108 (±0.0303) |

| Term & BioNLP sentence feature (S9) | 0.7284 | 0.6903 | 0.7558 | 0.6730 | 0.6957 | 0.7572 | 0.7033 | 0.7308 | 0.7234 | 0.6607 | 0.7118 (±0.0328) |

| Term & BioNLP sentence triplet feature (S10) | 0.7273 | 0.6825 | 0.7538 | 0.6730 | 0.6940 | 0.7461 | 0.7139 | 0.7534 | 0.7077 | 0.6786 | 0.7130 (±0.0311) |

| Term & BioNLP sentence triplet 2-gram feature (S11) | 0.7273 | 0.6807 | 0.7661 | 0.6752 | 0.6959 | 0.7532 | 0.7174 | 0.7520 | 0.7099 | 0.6806 | 0.7158 (±0.0332) |

| Full feature set plus boosting | 0.7571 | 0.7086 | 0.7646 | 0.7108 | 0.7059 | 0.7809 | 0.7500 | 0.7627 | 0.7251 | 0.6899 | 0.7355 (±0.0311) |

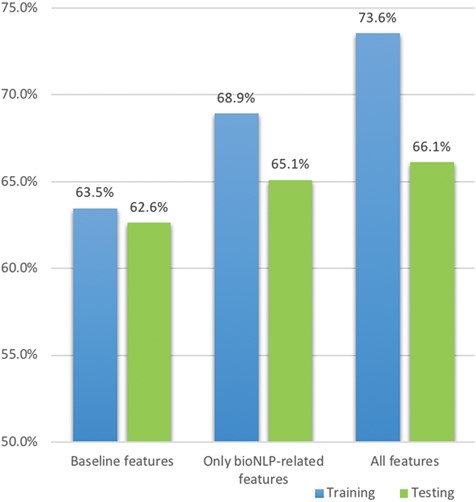

Feature importance

Since there are many features created in this approach, we perform a feature contribution study to find the best combination. To create a baseline model, we first performed simple text processing on the original document, including case-folding, tokenising, removing stop words and punctuation and stemming, using the NLTK package (30). We then create tf-idf matrices for processed tokens in each document. Finally, a logistic regression model is built using the tf-idf matrices as features with Scikit-learn (31). Tf-idf weighting, quantifying the importance of a term based on its frequency as well as penalising very frequently occurring terms across the documents, is widely used in text classification and information retrieval (32, 33); similarly, logistic regression is often used as a baseline model for text classification since it is robust to sparse matrices (tf-idf matrices are often sparse such that a small proportion of terms have very high frequency whereas other terms have negligible occurrences) and outputs the probability score of the classification (34, 35). This baseline achieves a 63.5% F1 score, which is consistent with the baseline results reported by the task organizers (8).

After creating the baseline model, we introduce additional features based on the feature aspects and measure the updated performance. The results in Table 3 show the importance of different feature sets and the best feature set using boosting logistic regression gives an ∼10% increase on the F1 score as well as only increases the standard deviation by ∼0.5%; the detailed description of these best features are shown in Table 4. We find the following:

Term lists are effective, giving an increase of ∼4% F1 score even when considering only paragraph level;

Co-occurrence features are more effective at sentence level; for example, both co-occurrence features from BioNLP tools and term lists at paragraph level only give ∼0.8% additional improvement, whereas the counterparts at sentence level increase the score by ∼3%;

Using term lists and BioNLP tools together can further improve the F1 score by 0.5%. Co-occurrence (mutations and interactions) often occur within one sentence, while triplets (mutation–degree–interaction) often occur in two sentences.

Detailed descriptions of the best feature sets found via the feature contribution study in Table 3

| Feature set . | Feature ID . | Description . | Aspects . |

|---|---|---|---|

| S1 | F1 | Number of interactions identified in total across the paragraph by term lists | Paragraph-Term-Individual-Total |

| F2 | Number of unique interactions identified across the paragraph by term lists | Paragraph-Term-Individual-Unique | |

| F3 | Number of mutations identified in total across the paragraph by term lists | Paragraph-Term-Individual-Total | |

| F4 | Number of unique mutations identified across the paragraph by term lists | Paragraph-Term-Individual-Unique | |

| S2 | F5 | Number of interactions and mutations in total across the paragraph by term lists if co-occurrence exists | Paragraph-Term-Occurrence-Total |

| F6 | Number of unique interactions and mutations across the paragraph by term lists if co-occurrence exists | Paragraph-Term-Occurrence-Unique | |

| S3 | F7 | Number of genes identified in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Total |

| F8 | Number of unique genes identified across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Unique | |

| F9 | Number of mutations identified in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Total | |

| F10 | Number of unique mutations identified across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Unique | |

| F11 | The probability of the paragraph containing interactions by BioNLP tools (using PIE the search) | Paragraph-BioConcept-Individual-Total (probability) | |

| S4 | F12 | Number of interactions and mutations in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Occurrence-Total |

| S5 | F13 | Number of sentences containing mutations by term lists | Sentence-Term-Individual-Total |

| F14 | Number of sentences containing interactions by term lists | Sentence-Term-Individual-Total | |

| S6 | F15 | Number of sentences containing both interactions and mutations by term lists | Sentence-Term-Occurrence-Total |

| S7 | F16 | Number of sentences containing mutations by BioNLP tools | Sentence-BioConcept-Individual-Total |

| F17 | Number of sentences containing genes by BioNLP tools | Sentence-BioConcept-Individual-Total | |

| S8 | F18 | Number of sentences containing both interactions and mutations by BioNLP tools | Sentence-BioConcept-Occurrence-Total |

| S9 | F19 | Number of sentences containing both interactions and mutations either by term lists or BioNLP tools | Sentence-Both-Occurrence-Union |

| F20 | Number of sentences containing both interactions and mutations either by term lists or BioNLP tools using complementary approach | Sentence-Both-Occurrence-Complement | |

| S10 | F21 | Number of sentences containing mutation-impact-interaction triplets by term lists | Sentence-Term-Triplet-Total |

| F22 | Number of sentences containing mutation-impact-interaction triplets by term lists or BioNLP tools | Sentence-Both-Triplet-Union | |

| F23 | Number of sentences containing mutation-impact-interaction triplets by term lists or BioNLP tools or one another | Sentence-Both-Triplet-Complement | |

| S11 | F24 | Same as F17, but on number of sentence 2-grams | Sentence-Term-Triplet-Total |

| F25 | Same as F18, but on number of sentence 2-grams | Sentence-Both-Triplet-Union | |

| F26 | Same as F19, but on number of sentence 2-grams | Sentence-Both-Triplet-Complement | |

| F27 | Same as F22, but using average probability of sentences instead of number of sentences | Sentence-Both-Triplet-Complement (probability) |

| Feature set . | Feature ID . | Description . | Aspects . |

|---|---|---|---|

| S1 | F1 | Number of interactions identified in total across the paragraph by term lists | Paragraph-Term-Individual-Total |

| F2 | Number of unique interactions identified across the paragraph by term lists | Paragraph-Term-Individual-Unique | |

| F3 | Number of mutations identified in total across the paragraph by term lists | Paragraph-Term-Individual-Total | |

| F4 | Number of unique mutations identified across the paragraph by term lists | Paragraph-Term-Individual-Unique | |

| S2 | F5 | Number of interactions and mutations in total across the paragraph by term lists if co-occurrence exists | Paragraph-Term-Occurrence-Total |

| F6 | Number of unique interactions and mutations across the paragraph by term lists if co-occurrence exists | Paragraph-Term-Occurrence-Unique | |

| S3 | F7 | Number of genes identified in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Total |

| F8 | Number of unique genes identified across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Unique | |

| F9 | Number of mutations identified in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Total | |

| F10 | Number of unique mutations identified across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Unique | |

| F11 | The probability of the paragraph containing interactions by BioNLP tools (using PIE the search) | Paragraph-BioConcept-Individual-Total (probability) | |

| S4 | F12 | Number of interactions and mutations in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Occurrence-Total |

| S5 | F13 | Number of sentences containing mutations by term lists | Sentence-Term-Individual-Total |

| F14 | Number of sentences containing interactions by term lists | Sentence-Term-Individual-Total | |

| S6 | F15 | Number of sentences containing both interactions and mutations by term lists | Sentence-Term-Occurrence-Total |

| S7 | F16 | Number of sentences containing mutations by BioNLP tools | Sentence-BioConcept-Individual-Total |

| F17 | Number of sentences containing genes by BioNLP tools | Sentence-BioConcept-Individual-Total | |

| S8 | F18 | Number of sentences containing both interactions and mutations by BioNLP tools | Sentence-BioConcept-Occurrence-Total |

| S9 | F19 | Number of sentences containing both interactions and mutations either by term lists or BioNLP tools | Sentence-Both-Occurrence-Union |

| F20 | Number of sentences containing both interactions and mutations either by term lists or BioNLP tools using complementary approach | Sentence-Both-Occurrence-Complement | |

| S10 | F21 | Number of sentences containing mutation-impact-interaction triplets by term lists | Sentence-Term-Triplet-Total |

| F22 | Number of sentences containing mutation-impact-interaction triplets by term lists or BioNLP tools | Sentence-Both-Triplet-Union | |

| F23 | Number of sentences containing mutation-impact-interaction triplets by term lists or BioNLP tools or one another | Sentence-Both-Triplet-Complement | |

| S11 | F24 | Same as F17, but on number of sentence 2-grams | Sentence-Term-Triplet-Total |

| F25 | Same as F18, but on number of sentence 2-grams | Sentence-Both-Triplet-Union | |

| F26 | Same as F19, but on number of sentence 2-grams | Sentence-Both-Triplet-Complement | |

| F27 | Same as F22, but using average probability of sentences instead of number of sentences | Sentence-Both-Triplet-Complement (probability) |

Detailed descriptions of the best feature sets found via the feature contribution study in Table 3

| Feature set . | Feature ID . | Description . | Aspects . |

|---|---|---|---|

| S1 | F1 | Number of interactions identified in total across the paragraph by term lists | Paragraph-Term-Individual-Total |

| F2 | Number of unique interactions identified across the paragraph by term lists | Paragraph-Term-Individual-Unique | |

| F3 | Number of mutations identified in total across the paragraph by term lists | Paragraph-Term-Individual-Total | |

| F4 | Number of unique mutations identified across the paragraph by term lists | Paragraph-Term-Individual-Unique | |

| S2 | F5 | Number of interactions and mutations in total across the paragraph by term lists if co-occurrence exists | Paragraph-Term-Occurrence-Total |

| F6 | Number of unique interactions and mutations across the paragraph by term lists if co-occurrence exists | Paragraph-Term-Occurrence-Unique | |

| S3 | F7 | Number of genes identified in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Total |

| F8 | Number of unique genes identified across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Unique | |

| F9 | Number of mutations identified in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Total | |

| F10 | Number of unique mutations identified across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Unique | |

| F11 | The probability of the paragraph containing interactions by BioNLP tools (using PIE the search) | Paragraph-BioConcept-Individual-Total (probability) | |

| S4 | F12 | Number of interactions and mutations in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Occurrence-Total |

| S5 | F13 | Number of sentences containing mutations by term lists | Sentence-Term-Individual-Total |

| F14 | Number of sentences containing interactions by term lists | Sentence-Term-Individual-Total | |

| S6 | F15 | Number of sentences containing both interactions and mutations by term lists | Sentence-Term-Occurrence-Total |

| S7 | F16 | Number of sentences containing mutations by BioNLP tools | Sentence-BioConcept-Individual-Total |

| F17 | Number of sentences containing genes by BioNLP tools | Sentence-BioConcept-Individual-Total | |

| S8 | F18 | Number of sentences containing both interactions and mutations by BioNLP tools | Sentence-BioConcept-Occurrence-Total |

| S9 | F19 | Number of sentences containing both interactions and mutations either by term lists or BioNLP tools | Sentence-Both-Occurrence-Union |

| F20 | Number of sentences containing both interactions and mutations either by term lists or BioNLP tools using complementary approach | Sentence-Both-Occurrence-Complement | |

| S10 | F21 | Number of sentences containing mutation-impact-interaction triplets by term lists | Sentence-Term-Triplet-Total |

| F22 | Number of sentences containing mutation-impact-interaction triplets by term lists or BioNLP tools | Sentence-Both-Triplet-Union | |

| F23 | Number of sentences containing mutation-impact-interaction triplets by term lists or BioNLP tools or one another | Sentence-Both-Triplet-Complement | |

| S11 | F24 | Same as F17, but on number of sentence 2-grams | Sentence-Term-Triplet-Total |

| F25 | Same as F18, but on number of sentence 2-grams | Sentence-Both-Triplet-Union | |

| F26 | Same as F19, but on number of sentence 2-grams | Sentence-Both-Triplet-Complement | |

| F27 | Same as F22, but using average probability of sentences instead of number of sentences | Sentence-Both-Triplet-Complement (probability) |

| Feature set . | Feature ID . | Description . | Aspects . |

|---|---|---|---|

| S1 | F1 | Number of interactions identified in total across the paragraph by term lists | Paragraph-Term-Individual-Total |

| F2 | Number of unique interactions identified across the paragraph by term lists | Paragraph-Term-Individual-Unique | |

| F3 | Number of mutations identified in total across the paragraph by term lists | Paragraph-Term-Individual-Total | |

| F4 | Number of unique mutations identified across the paragraph by term lists | Paragraph-Term-Individual-Unique | |

| S2 | F5 | Number of interactions and mutations in total across the paragraph by term lists if co-occurrence exists | Paragraph-Term-Occurrence-Total |

| F6 | Number of unique interactions and mutations across the paragraph by term lists if co-occurrence exists | Paragraph-Term-Occurrence-Unique | |

| S3 | F7 | Number of genes identified in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Total |

| F8 | Number of unique genes identified across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Unique | |

| F9 | Number of mutations identified in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Total | |

| F10 | Number of unique mutations identified across the paragraph by BioNLP tools | Paragraph-BioConcept-Individual-Unique | |

| F11 | The probability of the paragraph containing interactions by BioNLP tools (using PIE the search) | Paragraph-BioConcept-Individual-Total (probability) | |

| S4 | F12 | Number of interactions and mutations in total across the paragraph by BioNLP tools | Paragraph-BioConcept-Occurrence-Total |

| S5 | F13 | Number of sentences containing mutations by term lists | Sentence-Term-Individual-Total |

| F14 | Number of sentences containing interactions by term lists | Sentence-Term-Individual-Total | |

| S6 | F15 | Number of sentences containing both interactions and mutations by term lists | Sentence-Term-Occurrence-Total |

| S7 | F16 | Number of sentences containing mutations by BioNLP tools | Sentence-BioConcept-Individual-Total |

| F17 | Number of sentences containing genes by BioNLP tools | Sentence-BioConcept-Individual-Total | |

| S8 | F18 | Number of sentences containing both interactions and mutations by BioNLP tools | Sentence-BioConcept-Occurrence-Total |

| S9 | F19 | Number of sentences containing both interactions and mutations either by term lists or BioNLP tools | Sentence-Both-Occurrence-Union |

| F20 | Number of sentences containing both interactions and mutations either by term lists or BioNLP tools using complementary approach | Sentence-Both-Occurrence-Complement | |

| S10 | F21 | Number of sentences containing mutation-impact-interaction triplets by term lists | Sentence-Term-Triplet-Total |

| F22 | Number of sentences containing mutation-impact-interaction triplets by term lists or BioNLP tools | Sentence-Both-Triplet-Union | |

| F23 | Number of sentences containing mutation-impact-interaction triplets by term lists or BioNLP tools or one another | Sentence-Both-Triplet-Complement | |

| S11 | F24 | Same as F17, but on number of sentence 2-grams | Sentence-Term-Triplet-Total |

| F25 | Same as F18, but on number of sentence 2-grams | Sentence-Both-Triplet-Union | |

| F26 | Same as F19, but on number of sentence 2-grams | Sentence-Both-Triplet-Complement | |

| F27 | Same as F22, but using average probability of sentences instead of number of sentences | Sentence-Both-Triplet-Complement (probability) |

We use the best feature set determined through this study, together with tf-idf term weightings, to train boosting logistic regression, Support Vector Machine (SVM) and Random Forest models for classification.

Relation extraction models

For PPIm relation extraction in the shared task, we explored two methods: (i) graph kernels based on dependency parsing of sentences; and (ii) a co-occurrence based relation extraction system. In this work, we have extended our evaluation to include a feature-based classifier using word bigrams. These methods are described in detail in this section.

Graph kernel-based approach:

We used a two-stage approach of dataset preprocessing to generate candidate gene pairs, followed by a supervised classifier that detects PPIm relations amongst the candidate entity pairs. These steps are described below.

‘Preprocessing’: In the pre-processing stage, we use the Turku Event Extraction System (TEES) (36) to parse each document into a set of sentences and the dependency graph representation of these sentences. For example, considering the previous example again:

… LAF1, an R2R3-MYB factor, interacts with HFR1, a basic helix-loop-helix (bHLH) factor, and this interaction is abolished by the R97A mutation in the LAF1 R2R3 domain. ... This result indicates that LAF1 and HFR1 function in largely independent pathways. LAF1, an R2R3-MYB factor, interacts with HFR1 …

The dependency graph representation of a snippet from this above text is illustrated in Figure 4.

Illustration of the (partial) dependency graph for the sentence `This result indicates that LAF1 and HFR1 function in largely independent pathways’. The entities (genes) are shown in blue.

Next, we use the entity annotations corresponding to this document, to obtain a list of gene mentions in this document, such as LAF1 and HFR1. All possible pairs of genes are generated as candidate relations. Note that the two mentions in a pair may be separated by zero or more sentence boundaries. We refer to these as sentence-level relations and non-sentence relations, respectively. Sentence-level relations are processed using the dependency graph of the underlying sentence. For example, the entity pair (LAF1, HFR1) is represented by the dependency graph shown in Figure 4. For non-sentence relations, we pick the two dependency graphs of the sentences containing the relevant entity mentions. These two graphs are connected by inserting a special edge between their root nodes and the resultant graph is used to represent the cross-sentence entity pair, following the approach of our prior work (50).

‘Entity masking’: Given an entity mention pair and its dependency graph representation, we replace the labels of the nodes corresponding to the two Proteins with generic strings such as ‘Protein1’ and ‘Protein2’ in the dependency graph. These are the nodes whose corresponding tokens in the sentence overlaps with the character span of given entity mentions. Entity mentions in the sentence that are not the primary arguments are replaced with generic strings such as ‘Protein_Other’, ‘Mutation_Other’, ‘Species_Other’ and ‘Chemical_Other’, based on the entity type to which it belongs to. This step is shown to be effective in improving generalisation in prior event extraction studies (37). Also, these special strings serve the purpose of imparting the entity information to the dependency graph, by discriminating the main event arguments (gene pairs) from the rest of the tokens. These modified dependency graphs are used as examples for a graph kernel-based classifier that is described below. Including additional entity types such as mutations, was found to help improve the performance of the relation extractor. In the next step, we prune the candidate list of entity pairs as described below.

The candidate examples generated for relation classification with different entity annotation schemes

| Type . | Entity annotator . | Training set . | Testing set . | ||

|---|---|---|---|---|---|

| Positive examples | Negative examples | Positive examples | Negative examples | ||

| Sentence-level relations | Task | 4115 | 532 | 264 | 152 |

| GNormPlus | 1709 | 4697 | 2829 | 5780 | |

| GNormPlus + Pubtator | 1783 | 4822 | 2865 | 5835 | |

| GNormPlus + Pubtator + Task | 4058 | 4533 | 3097 | 6030 | |

| Non-sentence level relations | Task | 412 | 162 | 556 | 178 |

| GNormPlus | 89 | 4616 | 321 | 10105 | |

| GNormPlus + Pubtator | 89 | 4734 | 338 | 10190 | |

| GNormPlus + Pubtator + Task | 430 | 5317 | 872 | 11719 | |

| Type . | Entity annotator . | Training set . | Testing set . | ||

|---|---|---|---|---|---|

| Positive examples | Negative examples | Positive examples | Negative examples | ||

| Sentence-level relations | Task | 4115 | 532 | 264 | 152 |

| GNormPlus | 1709 | 4697 | 2829 | 5780 | |

| GNormPlus + Pubtator | 1783 | 4822 | 2865 | 5835 | |

| GNormPlus + Pubtator + Task | 4058 | 4533 | 3097 | 6030 | |

| Non-sentence level relations | Task | 412 | 162 | 556 | 178 |

| GNormPlus | 89 | 4616 | 321 | 10105 | |

| GNormPlus + Pubtator | 89 | 4734 | 338 | 10190 | |

| GNormPlus + Pubtator + Task | 430 | 5317 | 872 | 11719 | |

The candidate examples generated for relation classification with different entity annotation schemes

| Type . | Entity annotator . | Training set . | Testing set . | ||

|---|---|---|---|---|---|

| Positive examples | Negative examples | Positive examples | Negative examples | ||

| Sentence-level relations | Task | 4115 | 532 | 264 | 152 |

| GNormPlus | 1709 | 4697 | 2829 | 5780 | |

| GNormPlus + Pubtator | 1783 | 4822 | 2865 | 5835 | |

| GNormPlus + Pubtator + Task | 4058 | 4533 | 3097 | 6030 | |

| Non-sentence level relations | Task | 412 | 162 | 556 | 178 |

| GNormPlus | 89 | 4616 | 321 | 10105 | |

| GNormPlus + Pubtator | 89 | 4734 | 338 | 10190 | |

| GNormPlus + Pubtator + Task | 430 | 5317 | 872 | 11719 | |

| Type . | Entity annotator . | Training set . | Testing set . | ||

|---|---|---|---|---|---|

| Positive examples | Negative examples | Positive examples | Negative examples | ||

| Sentence-level relations | Task | 4115 | 532 | 264 | 152 |

| GNormPlus | 1709 | 4697 | 2829 | 5780 | |

| GNormPlus + Pubtator | 1783 | 4822 | 2865 | 5835 | |

| GNormPlus + Pubtator + Task | 4058 | 4533 | 3097 | 6030 | |

| Non-sentence level relations | Task | 412 | 162 | 556 | 178 |

| GNormPlus | 89 | 4616 | 321 | 10105 | |

| GNormPlus + Pubtator | 89 | 4734 | 338 | 10190 | |

| GNormPlus + Pubtator + Task | 430 | 5317 | 872 | 11719 | |

‘Filtering self-relations’: We used a heuristic rule of filtering out all self-relations, i.e. we disallow a PPIm relation between identical gene IDs. We were motivated by the intuition that a gene or protein typically does not interact with itself. We found that self-relation filtering improves the F-score substantially on both the training set (0.2554 to 0.2834) and test set (0.2960 to 0.3355). However, it also limits the recall performance of our relation extractor, as 6% of relations in the test set are self-relations. For example, in the document id 17074813, titled ‘Differential regulation of B-raf isoforms by phosphorylation and auto-inhibitory mechanisms’, the gene B-raf with NCBI ID 673, contains a valid PPIm self-relation annotation (673, 673).

In the final step, the resulting set of candidate entity pairs are input to a binary classifier for training the model and at test phase for identifying relations. The statistics of the examples generated for classification are listed in Table 5.

‘Relation classification’: Given entity mention pairs and the corresponding dependency graphs from the preprocessing step described above, we train a supervised classifier as follows. For every entity mention pair in the training set, we look up on their corresponding entity ids (NCBI gene id) in the relation annotations to determine if there is a PPIm relation. Such examples are labelled positive and the rest are labelled negative. We separated the sentence level relations and non-sentence level relations into two separate classification pipelines and trained two separate binary SVM classifiers for these pipelines. We used the Approximate Subgraph Matching (ASM) kernel with the SVM classifiers, as ASM kernel is designed primarily to work with edge labelled dependency graphs for relation classification. The ASM kernel translates an input graph into a high-dimensional feature representation. More details about the ASM kernel are available in (53). We implemented our classifier with the scikit-learn (31) library in Python, using the SGD classifier with hinge loss. The class weights were set to 2:1 for the sentence classifier and 6:1 for the non-sentence classifier. In the final stage, the entity mention pairs generated from the PPIm test set are classified and from the positively labelled entity mention pairs, we extract their corresponding entity ids (NCBI gene ids) and output the union of these as the document level relations.

`Document relevance score and mutation context’: The document triage task associates each document with a score that represents the probability of it holding PPIm relations. We experimented with using this relevance score as an additional feature for the relation extraction task. We also experimented with using a set of specialized terms to help recognize mutation mentions in a sentence with the hypothesis that it improves relation extraction performance. This is the same list of terms that was found to improve document triage classification performance and is described in detail in the feature engineering section of document triage classification above.

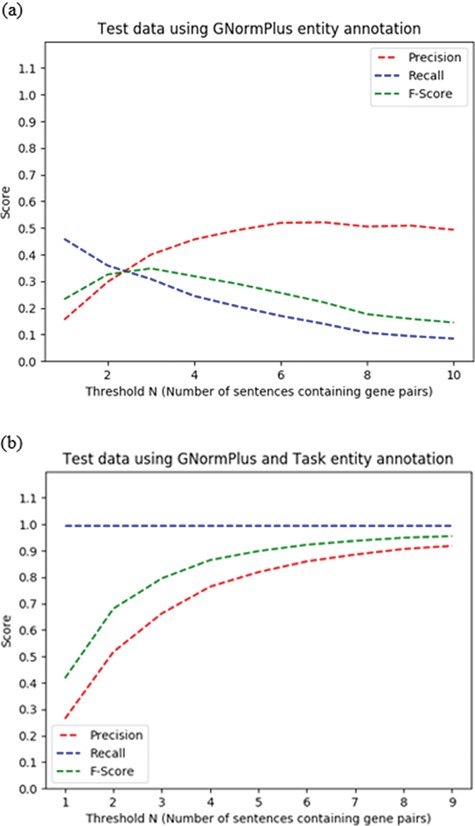

Co-occurrence-based PPIm extraction

In the co-occurrence based approach, we use GNormPlus (12) for protein entity recognition and LingPipe (http://alias-i.com/lingpipe/index.html) for delimiting sentences. We begin by identifying all pairs of protein mentions that occur within a single sentence.

Document triage task performance over the training set using 10-fold cross-validation

| Model . | Training time (sec) . | Prediction time (sec) . | Ranked precision . | Precision . | Recall . | F1 . |

|---|---|---|---|---|---|---|

| Baseline | 0.5885 (±0.0949) | 0.0006 (±0.0001) | 0.6839 (±0.0303) | 0.6485 (±0.0353) | 0.6229 (±0.0353) | 0.6347 (±0.0262) |

| LR (boosting) | 26.7562 (±4.0662) | 0.0500 (±0.0133) | 0.7580 (±0.0262) | 0.7058 (±0.0313) | 0.7684 (±0.0368) | 0.7355 (±0.0311) |

| SVM | 65.8464 (±0.8162) | 1.1460 (±0.0398) | 0.7479 (±0.0287) | 0.7097 (±0.0305) | 0.7102 (±0.0443) | 0.7095 (±0.0322) |

| RF | 829.0522 (±54.4509) | 8.4559 (±0.1722) | 0.7457 (±0.0370) | 0.6651 (±0.0322) | 0.7946 (±0.0243) | 0.7236 (±0.0227) |

| Model . | Training time (sec) . | Prediction time (sec) . | Ranked precision . | Precision . | Recall . | F1 . |

|---|---|---|---|---|---|---|

| Baseline | 0.5885 (±0.0949) | 0.0006 (±0.0001) | 0.6839 (±0.0303) | 0.6485 (±0.0353) | 0.6229 (±0.0353) | 0.6347 (±0.0262) |

| LR (boosting) | 26.7562 (±4.0662) | 0.0500 (±0.0133) | 0.7580 (±0.0262) | 0.7058 (±0.0313) | 0.7684 (±0.0368) | 0.7355 (±0.0311) |

| SVM | 65.8464 (±0.8162) | 1.1460 (±0.0398) | 0.7479 (±0.0287) | 0.7097 (±0.0305) | 0.7102 (±0.0443) | 0.7095 (±0.0322) |

| RF | 829.0522 (±54.4509) | 8.4559 (±0.1722) | 0.7457 (±0.0370) | 0.6651 (±0.0322) | 0.7946 (±0.0243) | 0.7236 (±0.0227) |

Document triage task performance over the training set using 10-fold cross-validation

| Model . | Training time (sec) . | Prediction time (sec) . | Ranked precision . | Precision . | Recall . | F1 . |

|---|---|---|---|---|---|---|

| Baseline | 0.5885 (±0.0949) | 0.0006 (±0.0001) | 0.6839 (±0.0303) | 0.6485 (±0.0353) | 0.6229 (±0.0353) | 0.6347 (±0.0262) |

| LR (boosting) | 26.7562 (±4.0662) | 0.0500 (±0.0133) | 0.7580 (±0.0262) | 0.7058 (±0.0313) | 0.7684 (±0.0368) | 0.7355 (±0.0311) |

| SVM | 65.8464 (±0.8162) | 1.1460 (±0.0398) | 0.7479 (±0.0287) | 0.7097 (±0.0305) | 0.7102 (±0.0443) | 0.7095 (±0.0322) |

| RF | 829.0522 (±54.4509) | 8.4559 (±0.1722) | 0.7457 (±0.0370) | 0.6651 (±0.0322) | 0.7946 (±0.0243) | 0.7236 (±0.0227) |

| Model . | Training time (sec) . | Prediction time (sec) . | Ranked precision . | Precision . | Recall . | F1 . |

|---|---|---|---|---|---|---|

| Baseline | 0.5885 (±0.0949) | 0.0006 (±0.0001) | 0.6839 (±0.0303) | 0.6485 (±0.0353) | 0.6229 (±0.0353) | 0.6347 (±0.0262) |

| LR (boosting) | 26.7562 (±4.0662) | 0.0500 (±0.0133) | 0.7580 (±0.0262) | 0.7058 (±0.0313) | 0.7684 (±0.0368) | 0.7355 (±0.0311) |

| SVM | 65.8464 (±0.8162) | 1.1460 (±0.0398) | 0.7479 (±0.0287) | 0.7097 (±0.0305) | 0.7102 (±0.0443) | 0.7095 (±0.0322) |

| RF | 829.0522 (±54.4509) | 8.4559 (±0.1722) | 0.7457 (±0.0370) | 0.6651 (±0.0322) | 0.7946 (±0.0243) | 0.7236 (±0.0227) |

Our approach then applies three heuristics to filter protein pairs:

Filter out any self-relationships (i.e. a protein cannot interact with itself), as for the graph-based approach.

Given that an abstract describes at least one PPIm relationship, the more mentions a protein pair has in such an abstract, the more likely it is that the pair participates in a PPIm relationship. We considered the number of sentences N containing a given protein pair to define a threshold to extract protein pairs.

A default rule that applies if a protein pair falls below the threshold but is the only pair mentioned in a sentence that also contains the word ‘interact’. The choice of ‘interact’ as the trigger word was based on it being the most frequent term used to express protein interaction relationships in the training set.

We set the co-mention sentence threshold N for heuristic H2 empirically, based on the best F-score on the training set for N ranging from 1–4, selecting N > = 3, requiring a co-occurring protein pair to appear in three or more sentences in the abstract.

Results

We present the results from cross-validation testing over the training set, as well as the results on the latest official test set for both tasks.

Document triage

The experimental results for document triage are collectively shown in Tables 6 and 7. We perform 10-fold cross-validation to evaluate the performance of the models. We report four evaluation metrics: mean average precision (the precision based on the rankings of returned relevant documents), precision (the proportion of classified relevant documents that are indeed relevant), recall (the proportion of correctly classified relevant documents over the total number of relevant documents) and F1 (the harmonic mean of precision and recall). The choices of these evaluation metrics are based on the use cases of the document triage task. The task needs to support retrieval of relevant documents for biocurators or biologists: the model ideally should return the highly relevant documents that it classifies at the top so that biocurators can examine the top documents more carefully without exhaustively looking for all the returned documents. Ranked precision is an important information retrieval metric that quantifies this criterion (38). Likewise, the model should also find most of the relevant documents over the entire search space in a precise manner, where precision and recall are used as the primary measures in classification tasks.

Document triage task performance on the test set

| Model . | Ranked precision . | Precision . | Recall . | F1 . |

|---|---|---|---|---|

| Baseline | 0.6329 | 0.5852 | 0.6733 | 0.6262 |

| LR (BOOSTING) | 0.6822 | 0.5783 | 0.7713 | 0.6610 |

| SVM | 0.6721 | 0.5936 | 0.7116 | 0.6473 |

| RF | 0.6744 | 0.5361 | 0.8849 | 0.6677 |

| Model . | Ranked precision . | Precision . | Recall . | F1 . |

|---|---|---|---|---|

| Baseline | 0.6329 | 0.5852 | 0.6733 | 0.6262 |

| LR (BOOSTING) | 0.6822 | 0.5783 | 0.7713 | 0.6610 |

| SVM | 0.6721 | 0.5936 | 0.7116 | 0.6473 |

| RF | 0.6744 | 0.5361 | 0.8849 | 0.6677 |

Document triage task performance on the test set

| Model . | Ranked precision . | Precision . | Recall . | F1 . |

|---|---|---|---|---|

| Baseline | 0.6329 | 0.5852 | 0.6733 | 0.6262 |

| LR (BOOSTING) | 0.6822 | 0.5783 | 0.7713 | 0.6610 |

| SVM | 0.6721 | 0.5936 | 0.7116 | 0.6473 |

| RF | 0.6744 | 0.5361 | 0.8849 | 0.6677 |

| Model . | Ranked precision . | Precision . | Recall . | F1 . |

|---|---|---|---|---|

| Baseline | 0.6329 | 0.5852 | 0.6733 | 0.6262 |

| LR (BOOSTING) | 0.6822 | 0.5783 | 0.7713 | 0.6610 |

| SVM | 0.6721 | 0.5936 | 0.7116 | 0.6473 |

| RF | 0.6744 | 0.5361 | 0.8849 | 0.6677 |

Relation Extraction Performance with different modes of entity annotation. Performance measurements for the training set are based on 10-fold cross validation. Best results for training and test sets are highlighted, for entity recognition with standard BioNLP tools

| Entity annotation . | Relation extraction method . | Training set . | Test set . | ||||

|---|---|---|---|---|---|---|---|

| Precision | Recall | F1 | Precision | Recall | F1 | ||

| Oracle | ASM with sentence level relations only | 0.8472 | 0.8112 | 0.8288 | 0.7605 | 0.2923 | 0.4223 |

| + Non-sentence relation extraction | 0.8373 | 0.8830 | 0.8595 | 0.7632 | 0.8826 | 0.8186 | |

| + Relevance info | 0.8308 | 0.8883 | 0.8586 | 0.7665 | 0.8803 | 0.8195 | |