Abstract

The IceCube Collaboration has observed a high-energy astrophysical neutrino flux and recently found evidence for neutrino emission from the blazar TXS 0506\(+\)056. These results open a new window into the high-energy universe. However, the source or sources of most of the observed flux of astrophysical neutrinos remains uncertain. Here, a search for steady point-like neutrino sources is performed using an unbinned likelihood analysis. The method searches for a spatial accumulation of muon-neutrino events using the very high-statistics sample of about 497,000 neutrinos recorded by IceCube between 2009 and 2017. The median angular resolution is \(\sim 1^\circ \) at 1 TeV and improves to \(\sim 0.3^\circ \) for neutrinos with an energy of 1 PeV. Compared to previous analyses, this search is optimized for point-like neutrino emission with the same flux-characteristics as the observed astrophysical muon-neutrino flux and introduces an improved event-reconstruction and parametrization of the background. The result is an improvement in sensitivity to the muon-neutrino flux compared to the previous analysis of \(\sim 35\%\) assuming an \(E^{-2}\) spectrum. The sensitivity on the muon-neutrino flux is at a level of \(E^2 \mathrm {d} N /\mathrm {d} E = 3\cdot 10^{-13}\,\mathrm {TeV}\,\mathrm {cm}^{-2}\,\mathrm {s}^{-1}\). No new evidence for neutrino sources is found in a full sky scan and in an a priori candidate source list that is motivated by gamma-ray observations. Furthermore, no significant excesses above background are found from populations of sub-threshold sources. The implications of the non-observation for potential source classes are discussed.

Similar content being viewed by others

1 Introduction

Astrophysical neutrinos are thought to be produced by hadronic interactions of cosmic-rays with matter or radiation fields in the vicinity of their acceleration sites [1]. Unlike cosmic-rays, neutrinos are not charged and are not deflected by magnetic fields and thus point back to their origin. Moreover, since neutrinos have a relatively small interaction cross section, they can escape from the sources and do not suffer absorption on their way to Earth. Hadronic interactions of high-energy cosmic rays may also result in high-energy or very-high-energy gamma-rays. Since gamma-rays can also arise from the interaction of relativistic leptons with low-energy photons, only neutrinos are directly linked to hadronic interactions. The most commonly assumed neutrino-flavor flux ratios in the sources result in equal or nearly equal flavor flux ratios at Earth [2]. Thus about 1/3 of the astrophysical neutrinos are expected to be muon neutrinos and muon anti-neutrinos.

In 2013, the IceCube Collaboration reported the observation of an unresolved, astrophysical, high-energy, all-flavor neutrino flux, consistent with isotropy, using a sample of events which begin inside the detector (‘starting events’) [3, 4]. This observation was confirmed by the measurement of an astrophysical high-energy muon-neutrino flux using the complementary detection channel of through-going muons, produced in neutrino interactions in the vicinity of the detector [5,6,7]. Track-like events from through-going muons are ideal to search for neutrino sources because of their relatively good angular resolution. However, to date, the sources of this flux have not been identified.

In 2018, first evidence of neutrino emission from an individual source was observed for the blazar TXS 0506\(+\)056 [8, 9]. Multi-messenger observations following up a high-energy muon neutrino event on September 22, 2017 resulted in the detection of this blazar being in flaring state. Furthermore, evidence was found for an earlier neutrino burst from the same direction between September 2014 and March 2015. However, the total neutrino flux from this source is less than 1% of the total observed astrophysical flux. Furthermore, the stacking of the directions of known blazars has revealed no significant excess of astrophysical neutrinos at the locations of known blazars. This indicates that blazars from the 2nd Fermi-LAT AGN catalogue contribute less than about 30% to the total observed neutrino flux assuming an unbroken power-law spectrum with spectral index of \(-2.5\) [10]. The constraint weakens to about 40–80% of the total observed neutrino flux assuming a spectral index of \(-2\) [8]. Note that these results are model dependent and an extrapolation beyond the catalog is uncertain. No other previous searches have revealed a significant source or source class of astrophysical neutrinos [11,12,13,14,15,16,17,18,19,20,21].

Here, a search for point-like sources is presented that takes advantage of the improved event selection and reconstruction of a muon-neutrino sample developed in [6] and the increased livetime of eight years [7] between 2009 and 2017. The best description of the sample includes a high-energy astrophysical neutrino flux given by a single power-law with a spectral index of \(2.19\pm 0.10\) and a flux normalization, at \(100\,\mathrm {TeV}\), of \(\Phi _{100\,\mathrm {TeV}} = 1.01^{+0.26}_{-0.23}\times 10^{-18}\,\mathrm {GeV}^{-1}\,\mathrm {cm}^{-2}\,\mathrm {s}^{-1}\,\mathrm {sr}^{-1}\), resulting in 190–2145 astrophysical neutrinos in the event sample. Compared to the previous time-integrated point source publication by IceCube [14, 16, 22,23,24], this analysis is optimized for sources that show similar energy spectra as the measured astrophysical muon-neutrino spectrum. Furthermore, a high-statistics Monte Carlo parametrization of the measured data, consisting of astrophysical and atmospherical neutrinos and including systematic uncertainties, is used to model the background expectation and thus increases the sensitivity.

Within this paper, the following tests are discussed: (1) a full sky scan for the most significant source in the Northern hemisphere, (2) a test for a population of sub-threshold sources based on the result of the full sky scan, (3) a search based on an a priori defined catalog of candidate objects motivated by gamma-ray observations [16], (4) a test for a population of sub-threshold sources based on the result of the a priori defined catalog search, and (5) a test of the recently observed blazar TXS 0506\(+\)056. The tests are described in Sect. 3.4 and their results are given in Sect. 4.

2 Data sample

Top: Muon neutrino and anti-neutrino effective area averaged over the Northern hemisphere as function of \(\log _{10}\) of neutrino energy. Middle: Median neutrino angular resolution as function of \(\log _{10}\) of neutrino energy. Bottom: Central 90% neutrino energy range for atmospheric (astrophysical) neutrinos as solid (dashed) line for each declination. Lines show the livetime weighted averaged of all sub-samples. Plots for individual seasons can be found in the supplemental material

IceCube is a cubic-kilometer neutrino detector with 5160 digital optical modules installed on 86 cable strings in the clear ice at the geographic South Pole between depths of 1450 m and 2450 m [25, 26]. The neutrino energy and directional reconstruction relies on the optical detection of Cherenkov radiation emitted by secondary particles produced in neutrino interactions in the surrounding ice or the nearby bedrock. The produced Cherenkov light is detected by digital optical modules (DOMs) each consisting of a 10 inch photomultiplier tube [27], on-board read-out electronics [28] and a high-voltage board, all contained in a spherical glass pressure vessel. Light propagation within the ice can be parametrized by the scattering and absorption behavior of the antarctic ice at the South Pole [29]. The detector construction finished in 2010. During construction, data was taken in partial detector configurations with 59 strings (IC59) from May 2009 to May 2010 and with 79 strings (IC79) from May 2010 to May 2011 before IceCube became fully operational.

For events arriving from the Southern hemisphere, the trigger rate in IceCube is dominated by atmospheric muons produced in cosmic-ray air showers. The event selection is restricted to the Northern hemisphere where these muons are shielded by the Earth. Additionally, events are considered down to \(-5^\circ \) declination, where the effective overburden of ice is sufficient to strongly attenuate the flux of atmospheric muons. Even after requiring reconstructed tracks from the Northern hemisphere, the event rate is dominated by mis-reconstructed atmospheric muons. However, these mis-reconstructed events can be reduced to less than 0.3% of the background using a careful event selection [6, 7]. As the data were taken with different partial configurations of IceCube, the details of the event selections are different for each season. At final selection level, the sample is dominated by atmospheric muon neutrinos from cosmic-ray air showers [6]. These atmospheric neutrinos form an irreducible background to astrophysical neutrino searches and can be separated from astrophysical neutrinos on a statistical basis only.

In total, data with a livetime of 2780.85 days are analyzed containing about 497, 000 events at the final selection level. A summary of the different sub-samples is shown in Table 1.

The performance of the event selection can be characterized by the effective area of muon-neutrino and anti-neutrino detection, the point spread function and the central 90% energy range of the resulting event sample. The performance is evaluated with a full detector Monte Carlo simulation [26].

The effective area \(A_\mathrm {eff}^{\nu +\bar{\nu }}\) quantifies the relation between neutrino and anti-neutrino fluxes \(\phi _{\nu +\bar{\nu }}\) with respect to the observed rate of events \(\frac{\mathrm {d}N_{\nu +\bar{\nu }}}{\mathrm {d}t}\):

where \(\varOmega \) is the solid angle, \(\theta ,\phi \) are the detector zenith and azimuth angle and \(E_\nu \) is the neutrino energy. The effective area for muon neutrinos and muon anti-neutrinos averaged over the Northern hemisphere down to \(-5^\circ \) declination is shown in Fig. 1 (top).

At high energies, the muon direction is well correlated with the muon-neutrino direction (\(< 0.1^\circ \) deviation above 10 TeV) and the muon is reconstructed with a median angular uncertainty \(\varDelta \Psi _\nu \) of about \(0.6^\circ \) at \(10\,\mathrm {TeV}\). All events have been reconstructed with an improved reconstruction based on the techniques described in [30, 31]. The median angular resolution is shown in Fig. 1 (middle). The median angular resolution at a neutrino energy of \(1\,\mathrm {TeV}\) is about \(1^\circ \) and decreases for higher energies to about \(0.3^\circ \) at \(1\,\mathrm {PeV}\).

The central 90% energy range is shown in Fig. 1 (bottom) as a function of \(\sin \delta \), with declination \(\delta \). Energy ranges are calculated using the precise best-fit parametrization of the experimental sample. The energy range stays mostly constant as function of declination but shifts to slightly higher energies near the horizon. The central 90% energy range of atmospheric neutrinos is about \(200\,\mathrm {GeV}\)–\(10\,\mathrm {TeV}\).

In Fig. 2, the ratio of effective area (top) and median angular resolution (bottom) of the sub-sample IC86 2012–2016 and the sample labeled 2012–2015 from previous time-integrated point source publication by IceCube is shown [16]. The differences in effective area are declination dependent. When averaged over the full Northern hemisphere, the effective area produced by this event selection is smaller than that in [16] at low neutrino energies but is larger above \(100\,\mathrm {TeV}\). The median neutrino angular resolution \(\varDelta \Psi _\nu \) improves at \(10\,\mathrm {TeV}\) by about 10% compared to the reconstruction used in [16] and improves up to 20% at higher energies. The event sample for the season from May 2011 to May 2012 has an overlap of about 80% with the selection presented in Ref. [16] using the same time range.

Ratio of effective area (top) and median angular resolution (bottom) of the sub-sample IC86 2012–2016 and the sample labeled 2012–2015 from previous publication of time-integrated point source searches by IceCube is shown [16]

3 Unbinned likelihood method

3.1 Likelihood & test statistics

The data sample is tested for a spatial clustering of events with an unbinned likelihood method described in [32] and used in the previous time-integrated point source publications by IceCube [14, 16, 22,23,24]. At a given position \(\mathbf {x}_s\) in the sky, the likelihood function for a source at this position, assuming a power law energy spectrum with spectral index \(\gamma \), is given by

where i is an index of the observed neutrino events, N is the total number of events, \(n_s\) is the number of signal events and \(P(\gamma )\) is a prior term. \(S_{i}\) and \(B_{i}\) are the signal and background probability densities evaluated for event i. The likelihood is maximized with respect to the source parameters \(n_s\ge 0\) and \(1 \le \gamma \le 4\) at each tested source position in the sky given by its right ascension and declination \(\mathbf {x}_s=(\alpha _s, \delta _s)\).

The signal and background probability density functions (PDF) \(S_i\) and \(B_i\) factorize into a spatial and an energy factor

where \(\mathbf {x}_i=(\alpha _i, \delta _i)\) is the reconstructed right ascension \(\alpha _i\) and declination \(\delta _i\), \(E_i\) is the reconstructed energy [33] and \(\sigma _i\) is the event-by-event based estimated angular uncertainty of the reconstruction of event i [22, 34].

A likelihood ratio test is performed to compare the best-fit likelihood to the null hypothesis of no significant clustering \(\mathscr {L}_0 = \prod _i B_{i}\). The likelihood ratio is given by

with best-fit values \(\hat{n}_s\) and \(\hat{\gamma }\), which is used as a test statistic.

The sensitivity of the analysis is defined as the median expected 90% CL upper limit on the flux normalization in case of pure background. In addition, the discovery potential is defined as the signal strength that leads to a \(5\,\sigma \) deviation from background in 50% of all cases.

In previous point source publications by IceCube [14, 16, 22,23,24], the spatial background PDF \(B^\mathrm {spat}\) and the energy background PDF \(B^\mathrm {ener}\) were estimated from the data. Given the best-fit parameters obtained from [6] and good data/Monte Carlo agreement, it is, however, possible to get a precise parametrization of the atmospheric and diffuse astrophysical components, including systematic uncertainties. By doing this, it is possible to take advantage of the high statistics of the full detector simulation data sets which can be used to generate smooth PDFs optimized for the sample used in this work. Thus this parametrization of the experimental data allows us to obtain a better extrapolation to sparsely populated regions in the energy-declination plane than by using only the statistically limited experimental data. This comes with the drawback that the analysis can only be applied to the Northern hemisphere since no precise parametrization is available for the Southern hemisphere. Generating PDFs from full detector simulations has already been done in previous publications for the energy signal PDF \(S^\mathrm {ener}\), as it is not possible to estimate this PDF from data itself. The spatial signal PDF \(S^\mathrm {spat}\) is still assumed to be Gaussian with an event individual uncertainty of \(\sigma _i\).

It is known from the best-fit parametrization of the sample that the data contain astrophysical events. The astrophysical component has been parametrized by an unbroken power-law with best-fit spectral index of \(2.19\pm 0.10\) [7]. In contrast to the previous publication of time-integrated point source searches by IceCube [16], which uses a flat prior on the spectral index in the range \(1 \le \gamma \le 4\), this analysis focuses on those sources that produce the observed spectrum of astrophysical events by adding a Gaussian prior \(P(\gamma )\) on the spectral index in Eq. (2) with mean 2.19 and width 0.10. As the individual source spectra are not strongly constrained by the few events that contribute to a source, the prior dominates the fit of \(\gamma \) and thus the spectral index is effectively fixed allowing only for small variations. Due to the prior, the likelihood has reduced effective degrees of freedom to model fluctuations. As a result, the distribution of the test statistic in the case of only background becomes steeper which results in an improvement of the discovery potential assuming an \(E^{-2}\) source spectrum.

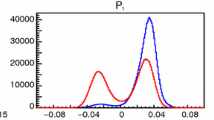

However, due to the reduced freedom of the likelihood by the prior on the spectral index about 80% of background trials yield \(\hat{n}_s = 0\) and thus \(TS=0\). This pile-up leads to an over-estimation of the median 90% upper limit as the median is degenerate and the flux sensitivity is artificially over-estimated. Thus a different definition for the TS is introduced for \(\hat{n}_s=0\). Allowing for negative \(\hat{n}_s\) can lead to convergence problems due to the second free parameter of \(\gamma \). Assuming \(\hat{n}_s=0\) is already close to the minimum of \(\log \mathscr {L}\), \(\log \mathscr {L}\) can be approximated as a parabola. The likelihood is extended in a Taylor series up to second order around \(n_s=0\). The Taylor series gives a parabola for which the value of the extremum can be calculated from the first and second order derivative of the likelihood at \(n_s=0\). This value is used as test statistic

for likelihood fits that yield \(\hat{n}_s=0\). With this definition, the pile-up of \(\hat{n}_s\) is spread towards negative values of TS and the median of the test statistic is no longer degenerate. Using this method, the sensitivity which had been overestimated due to the pile-up at \(n_s=0\) can be recovered.

3.2 Pseudo-experiments

To calculate the performance of the analysis, pseudo-experiments containing only background and pseudo-experiments with injected signal have been generated.

In this search for astrophysical point sources, atmospheric neutrinos and astrophysical neutrinos from unresolved sources make up the background. Using the precise parametrization of the reconstructed declination and energy distributionFootnote 1 from Ref. [7], pseudo-experiments are generated using full detector simulation events. Due to IceCube’s position at the South Pole and the high duty cycle of > 99% [26], the background PDF is uniform in right ascension.

As a cross check, background samples are generated by scrambling experimental data uniformly in right ascension. The declination and energy of the events are kept fixed. This results in a smaller sampled range of event energy and declination compared to the Monte Carlo-based pseudo-experiments. In the Monte Carlo-based pseudo-experiments, events are sampled from the simulated background distributions, and thus are not limited to the values of energy and declination present in the data when scrambling. P-values for tests presented in Sect. 4 are calculated using the Monte Carlo method and are compared to the data scrambling method for verification (values in brackets).

Signal is injected according to a full simulation of the detector. Events are generated at a simulated source position assuming a power law energy distribution. The number of injected signal events is calculated from the assumed flux and the effective area for a small declination band around the source position. In this analysis, the declination band was reduced compared to previous publication of time-integrated point source searches by IceCube [16], resulting in a more accurate modelling of the effective area. This change in signal modeling has a visible effect on the sensitivity and discovery potential, especially at the horizon and at the celestial pole. The effect can be seen in Fig. 3 by comparing the solid (small bandwidth) and dotted (large bandwidth) lines. The bandwidth is optimized by taking into account the effect of averaging over small declination bands and limited simulation statistics to calculate the effective area. As the bandwidth cannot be made too narrow, an uncertainty of about 8% on the flux limit calculation arises and is included in the systematic error.

3.3 Sensitivity & discovery potential

The sensitivity and discovery potential for a single point source is calculated for an unbroken power law flux according to

In Fig. 3, the sensitivity and discovery potential as function of \(\sin \delta \) is shown. Note that Fig. 3 shows \(E_\nu ^2 \frac{\mathrm {d}N_{\nu _\mu +\bar{\nu }_\mu }}{\mathrm {d}E_\nu } = \phi _0 E_0^2\) which is constant in neutrino energy for an \(E^{-2}\) flux. The sensitivity corresponds to a 90% CL averaged upper limit and the discovery potential gives the median source flux for which a \(5\sigma \) discovery would be expected. The flux is given as a muon neutrino plus muon anti-neutrino flux. For comparison, the sensitivity and discovery potential from the previous publication of time-integrated point source searches by IceCube [16] are shown. Despite only a moderate increase of livetime, this analysis outperforms the analysis in [16] by about 35% for multiple reasons: (1) the use of an improved angular reconstruction, (2) a slightly better optimized event selection near the horizon, (3) the use of background PDFs in the likelihood that are optimized on the parametrization from [6, 7] which improves sensitivity especially for higher energies, (4) the fact that due to the prior on the spectral index the number of source hypotheses is reduced which results in a steeper falling background TS distribution, and (5) the use of negative TS values which avoids overestimating the sensitivity, especially in the celestial pole region (\(\sin \delta \sim 1\)), where the background changes rapidly in \(\sin \delta \). In Fig. 4, the differential discovery potentials for three different declination bands are shown.

3.4 Tested hypothesis

3.4.1 Full sky scan

A scan of the full Northern hemisphere from \(90^\circ \) down to \(-3^\circ \) declination has been performed. The edge at \(-3^\circ \) has been chosen to avoid computational problems due to fast changing PDFs at the boundary of the sample at \(-5^\circ \). The scan is performed on a grid with a resolution of about \(0.1^\circ \). The grid was generated using the HEALPix pixelization schemeFootnote 2 [35]. For each grid point, the pre-trial p-value is calculated. As the test statistic shows a slight declination dependence, the declination dependent TS is used to calculate local p-values. TS distributions have been generated for 100 declinations equally distributed in \(\sin \delta \). \(10^6\) trials have been generated for each declination. Below a TS value of 5, the p-value is determined directly from trials. Above \(TS=5\), an exponential function is fitted to the tail of the distribution which is used to calculate p-values above \(TS=5\). A Kolmogorov–Smirnov test [36, 37] and a \(\chi ^2\) test are used to verify the agreement of the fitted function and the distribution.

The most significant point on the sky produced by the scan is selected using the pre-trial p-value. Since many points are tested in this scan, a trial correction has to be applied. Therefore, the procedure is repeated with background pseudo-experiments as described in Sect. 3.2. By comparing the local p-values from the most significant points in the background sample to the experimental pre-trial p-value, the post-trial p-value is calculated. The final p-value is calculated directly from \(\sim 3500\) trials.Footnote 3

3.4.2 Population test in the full sky scan

Upper Panel: Number of local warm spots with p-values smaller that \(p_\mathrm {thres}\) as function of \(p_\mathrm {thres}\). The observed number of local spots are shown as solid black line. The background expectation is shown as dashed line with \(1\sigma \), \(2\sigma \) and \(3\sigma \) intervals corresponding to Poisson statistics. Lower Panel: Local Poisson p-value for given \(p_\mathrm {thres}\). The most significant point is indicated by a dotted vertical line

Due to the large number of trials, only very strong sources would be identified in a full sky scan, which attempts to quantify only the most significant source. However, the obtained TS values can be tested also for a significant excess of events from multiple weaker sources without any bias towards source positions. This is done by counting p-values of local warm spots where the p-values are smaller than a preset threshold. An excess of counts with respect to the expectation from pure background sky maps can indicate the presence of multiple weak sources.

From the full sky scan, local spots with \(p_\mathrm {local} < 10^{-2} \) and a minimal separation of \(1^\circ \) are selected. The number of expected local spots \(\lambda \) with a p-value smaller than \(p_\mathrm {thres}\) is estimated from background pseudo-experiments and shown in Fig. 5 as dashed line. The background expectation was found to be Poisson distributed. The threshold value is optimized to give the most significant excess above background expectation using the Poisson probability

to find an excess of at least n spots. Due to the optimization of the threshold in the range on \(2< -\log _{10}p_\mathrm {thres} < \infty \), the result has to be corrected for trials as well. To include this correction, the full sky scan population test is performed on background pseudo-experiments to calculate the post-trial p-value.

3.4.3 A priori source list

The detectability of sources suffers from the large number of trials within the full sky scan and thus individual significant source directions may become insignificant after the trial correction. However, gamma-ray data can help to preselect interesting neutrino source candidates. A standard IceCube and ANTARES a priori source list, containing 34 prominent candidate sources for high-energy neutrino emission on the Northern hemisphere has been tested [16], reducing the trial factor to about the number of sources in the catalog. The source catalog is summarized in Table 2. The sources were selected mainly based on observations in gamma rays and belong to various object classes. The sources from this list are tested individually with the unbinned likelihood from Eq. (2). For this test, p-values are calculated from \(10^6\) background trials without using any extrapolation. Then the most significant source is selected and a trial-correction, derived from background pseudo-experiments, is applied. Note that some sources such as MGRO J1908\(+\)06, SS 433, and Geminga are spatially extended with an apparent angular size of up to several degrees, which is larger than IceCube’s point spread function. In such cases, the sensitivity of the analysis presented in this paper is reduced. E.g., for an extension of 1\(^\circ \), the sensitivity on the neutrino flux decreases by \(\sim 20\%\) [24].

3.4.4 Population test in the a priori source list

Similar to the population test in the full sky scan, an excess of several sources with small but not significant p-values in the a priori source list can indicate a population of weak sources. Therefore, the k most significant p-values of the source list are combined using a binomial distribution

of p-values that are larger than a threshold \(p_k\). Here, \(N=34\) is the total number of sources in the source list. The most significant combination is used as a test statistic and assessed against background using pseudo-experiments.

3.4.5 Monitored source list

IceCube and ANTARES have tested the a priori source list for several years with increasingly sensitive analyses [16, 22,23,24]. Changing the source list posterior may lead to a bias on the result. However, not reporting on recently seen, interesting sources would also ignore progress in the field. A definition of an unbiased p-value is not possible as these were added later. Therefore, a second list with sources is tested to report on an updated source catalog. In this work, this second catalog so far comprises only TXS 0506+056, for which evidence for neutrino emission has been observed.

3.5 Systematic uncertainties

The p-values for the tested hypotheses are determined with simulated pseudo-experiments assuming only background (see also Sect. 3.2). These experiments are generated using the full detector Monte Carlo simulation, weighted to the best-fit parametrization from Ref. [7]. This parametrization includes the optimization of nuisance parameters accounting for systematic uncertainties resulting in very good agreement between experimental data and Monte Carlo. Because of this procedure, the p-values are less affected by statistical fluctuations that would occur when estimating p-values from scrambled experimental data as well as the effect of fixed event energies during scrambling. However, a good agreement of the parametrization with experimental data is a prerequisite of this method. As a cross check, p-values are also calculated using scrambled experimental data. These p-values are given for comparison in brackets in Sect. 4. We find that the two methods show very similar results confirming the absence of systematic biases.

The calculation of the absolute neutrino flux normalization based on Monte Carlo simulations is affected by systematic uncertainties. These uncertainties influence the reconstruction performance and the determination of the effective area. Here, the dominant uncertainties are found to be the absolute optical efficiency of the Cherenkov light production and detection in the DOMs [27], the optical properties (absorption, scattering) of the South Pole ice [38], and the photo-nuclear interaction cross sections of high energy muons [39,40,41,42,43,44,45].

The systematic uncertainties on the sensitivity flux normalization is evaluated by propagating changed input values on the optical efficiency, ice properties and cross section values through the entire likelihood analysis for a signal energy spectrum of \(\mathrm {d}N/\mathrm {d}E_\nu \propto E_\nu ^{-2}\). Changing the optical efficiencies by \(\pm 10\%\) results in a change of the flux normalization by \(\pm 7.5\%\). The ice properties have been varied by (+10%, 0%), (0%, +10%) and (\(-7.1\)%, \(-7.1\)%) in the values of absorption and scattering length. The resulting uncertainty of the flux normalization is \(\pm 5.3\%\). To study the effect of the photo-nuclear interactions of high energy muons, the models in Refs. [39,40,41,42,43,44,45] have been used, which give a flux normalization variation of \(\pm 5.1\%\). Note, that these models are outdated and represent the extreme cases from common literature. Thus, the systematic uncertainty is estimated conservatively. The systematic uncertainties are assumed to be independent and are added in quadrature, yielding a total systematic uncertainty of \(\pm 10.5\%\) for the \(\nu _\mu + \bar{\nu }_\mu \) flux normalization. One should note that additionally, the modeling of point-like sources yields an uncertainty of about \(\pm 8\%\) as discussed in Sect. 3.2.

Since the sample is assumed to be purely muon neutrino and muon anti-neutrino events, only \(\nu _\mu + \bar{\nu }_\mu \) fluxes are considered. However, \(\nu _\tau \) and \(\bar{\nu }_\tau \) may also contribute to the observed astrophysical neutrinos in the data sample. Taking \(\nu _\tau \) and \(\bar{\nu }_\tau \) fluxes into account and assuming an equal flavor ratio at Earth, the sensitivity of the per-flavor flux normalization improves, depending on the declination, by 2.6–4.3%. The expected contamination from \(\nu _e\) and \(\bar{\nu }_e\) is negligible.

The relative systematic uncertainty is comparable with the systematic uncertainties quoted in previous publications of time integrated point source searches by IceCube [16]. In addition, the systematic effect due to the chosen finite bandwidth is included in this analysis.

4 Results

No significant clustering was found in any of the hypotheses tests beyond the expectation from background. Both the full-sky scan of the Northern hemisphere and the p-values from the source list are compatible with pure background. The p-values given in this section are calculated by pseudo-experiments based on Monte Carlo simulation weighted to the best-fit parametrization of the sample (see Sect. 3.2). For verification, p-values calculated by pseudo-experiments from scrambled experimental data are given in brackets.

4.1 Sky scan

The pre-trial p-value map of the Northern hemisphere scan is shown in Fig. 6. The hottest spot in the scan is indicated by a black circle and is located at \(\alpha = 177.89^\circ \) and \(\delta =23.23^\circ \) (J2000) with the Galactic coordinates \(b_\mathrm {gal} = 75.92^\circ \), \(l_\mathrm {gal}=-134.33^\circ \). The best-fit signal strength is \(\hat{n}_s = 21.32\) (\(\Phi ^{\nu _\mu +\bar{\nu }_\mu }_{100\,\mathrm {TeV}}=1.4\cdot 10^{-19}\,\mathrm {GeV}^{-1}\mathrm {cm}^{-2}\mathrm {s}^{-1}\) assuming \(\hat{\gamma }=2.20\)) with a fitted spectral index of \(\hat{\gamma } = 2.20\) close to the prior of 2.19. The TS-value is 21.63 which corresponds to \(p_\mathrm {local}=10^{-5.97}\). The post-trial corrected p-value is 26.5% (29.9%) and is thus compatible with background. A zoom into the local p-value landscape around the hottest spot position and the observed events is shown in Fig. 7. Events are shown as small circles where the area of the circle is proportional to the median \(\log _{10}\) of neutrino energy assuming the diffuse best-fit spectrum. The closest gamma-ray source from the Fermi 3FGL and Fermi 3FHL catalogs [46, 47] is 3FHL J1150.3\(+\)2418 which is about 1.1\(^\circ \) away from the hottest spot. The chance probability to find a 3FGL or 3FHL source within 1.1\(^\circ \) is 25%, which is estimated from all-sky pseudo-experiments. At the source location of 3FHL J1150.3\(+\)2418, the TS value is 8.02 which is inconsistent with the best-fit point at the \(3.6\,\sigma \) level, if assuming Wilks theorem with one degree of freedom [48].

Local p-value landscape around the source position of the most significant spot in the sky scan in equatorial coordinates (J2000). Neutrino event arrival directions are indicated by small circles where the area of the circles is proportional to the median \(\log _{10}\) of neutrino energy assuming the diffuse best-fit spectrum. The p-value is evaluated at the point where the black lines cross

4.2 Population test in the sky scan

In Fig. 5, the number of spots with p-values below \(p_\mathrm {thres}\) are shown together with the expectation from background. The most significant deviation was found for \(p_\mathrm {thres} = 0.5\%\) where 454.3 spots were expected and 492 were observed with a p-value of \(p_\mathrm {poisson}=4.17\%\). Correcting the result for trials gives a p-value of 42.0% (54.3%) and thus the result is compatible with background.

Single-flavor neutrino and anti-neutrino flux per source vs number of sources. An unbroken \(E^{-2}\) power law and equal fluxes of the sources at Earth are assumed. Solid lines show 90% CL upper limits and dashed lines indicate the sensitivity. Upper limits and sensitivity are calculated assuming that background consists of atmospheric neutrinos only and exclude an astrophysical component. Thus the limits are conservative, especially for small number of sources. For comparison, the results from [16, 49] are given. The dotted line gives the flux per source that saturates the diffuse flux from Ref. [7]

As no significant deviation from the background hypothesis has been observed, exclusion limits are calculated as 90% CL upper limits with Neyman’s method [50] for the benchmark scenario of a fixed number of sources \(N_\mathrm {sources}\), all producing the same flux at Earth. Upper limits are calculated assuming that background consists of atmospheric neutrinos only, excluding an astrophysical component from background pseudo-experiment generation. Excluding the astrophysical component from background is necessary as the summed injected flux makes up a substantial part of the astrophysical flux in case of large \(N_\mathrm {sources}\). However, this will over-estimate the flux sensitivity for small \(N_\mathrm {sources}\). More realistic source scenarios are discussed in Sect. 5. This rather unrealistic scenario does not depend on astrophysical and cosmological assumptions about source populations and allows for a comparison between the analysis power of different analyses directly. The sensitivity and upper limits for \(N_\mathrm {source}\) sources is shown in Fig. 8 together with the analyses from [16, 49].Footnote 4 This analysis finds the most stringent exclusion limits for small number of sources to date. The gain in sensitivity compared to Ref. [16] is consistent with the gain in the sensitivity to a single point source.

4.3 A priori source list

Sensitivity (dashed) and \(5\sigma \) discovery potential (solid) of the flux normalization for an \(E^{-2}\) source spectrum as function of the \(\sin \delta \). For comparison, the lines from [16] are shown as well. 90% CL Neyman upper limits on the flux normalization for sources in the a priori and monitored source list are shown as circles and squares, respectively

Local p-value landscapes around the source position of 4C 38.41 (left) and MGRO J1908\(+\)06 (right) in equatorial coordinates (J2000). Neutrino event arrival directions are indicated by small circles where the area of the circle is proportional to the median \(\log _{10}\) of neutrino energy assuming the diffuse best-fit spectrum. The p-value is evaluated at the point where the black lines cross

Local p-value landscapes around the source position of Cyg A (left) and TXS 0506\(+\)056 (right) in equatorial coordinates (J2000). Neutrino event arrival directions are indicated by small circles where the area of the circle is proportional to the median \(\log _{10}\) of neutrino energy assuming the diffuse best-fit spectrum. The p-value is evaluated at the point where the black lines cross

The fit results of sources in the a priori source list are given in Table 2. The most significant source with a local p-value of 0.8% is 4C 38.41, which is a flat spectrum radio quasar (FSRQ) at a redshift of \(z=1.8\). Taking into account that 34 sources have been tested, a post-trial p-value of 23.7% (20.3%) is calculated from background pseudo-experiments which is compatible with background.

As no significant source has been found, 90% CL upper limits are calculated assuming an unbroken power law with spectral index of \(-2\) using Neyman’s method [50]. The 90% CL upper limit flux is summarized in Table 2 and shown in Fig. 9. In case of under-fluctuations, the limit was set to the sensitivity level of the analysis. Note that 90% upper limits can exceed the discovery potential as long as the best-fit flux is below the discovery potential.

Interestingly, a total of three sources, 4C 38.41, MGRO J1908\(+\)06 and Cyg A, have a local p-value below or close to 1%. The p-value landscapes and observed events around these three sources are shown in Figs. 10 and 11.

4.4 Population test in the a priori source list

The most significant combination of p-values from the a priori source list is given when combining the three most significant p-values, i.e. \(k=3\), with \(2.59\sigma \) as shown in Fig. 12. The comparison with background pseudo-experiments yields a trial-corrected p-value of 6.6% (4.1%) which is not significant.

4.5 Monitored source list

The best-fit results for TXS 0506\(+\)056 in the monitored source list are given in Table 3. Note that the event selection ends in May 2017 and thus does not include the time of the alert ICECUBE-170922A [51] that led to follow-up observations and the discovery of \(\gamma \)-ray emission from that blazar up to 400 GeV. The data, however, include the earlier time-period of the observed neutrino flare. The local p-value here is found to be 2.93%. This is less significant than the reported significance of the time-dependent flare in [8] but is consistent with the reported time-integrated significances in [8], when taking into account that this analysis has a prior on the spectral index of the source flux and does not cover the same time-range as in [8].

The local p-value landscape around TXS 0506\(+\)056 is shown in Fig. 11 together with the observed event directions of this sample.

5 Implications on source populations

The non-detection of a significant point-like source and the non-detection of a population of sources within the sky scan is used to put constrains on realistic source populations. In the following calculation, source populations are characterized by their effective \(\nu _\mu + \bar{\nu }_\mu \) single-source luminosity \(L_{\nu _\mu + \bar{\nu }_\mu }^\mathrm {eff}\) and their local source density \(\rho _0^\mathrm {eff}\). Using the software tool FIRESONGFootnote 5 [52], the resulting source count distribution \(\frac{\mathrm {d}N}{\mathrm {d}\Phi }\) as a function of the flux \(\Phi \) for source populations are calculated for sources within \(z<10\) and representations of this population are simulated. To calculate the source count distribution, FIRESONG takes the source density \(\rho \), luminosity distribution, source evolution, cosmological parameters, the energy range of the flux and the spectral index into account. Following Ref. [53], sources are simulated with a log-normal distribution with median \(L_{\nu _\mu + \bar{\nu }_\mu }^\mathrm {eff}\) and a width of 0.01 in \(\log _{10}(L_{\nu _\mu + \bar{\nu }_\mu }^\mathrm {eff})\) which corresponds to a standard candle luminosity. The evolution of the sources was chosen to follow the parametrization of star formation rate from Hopkins and Beacom [54] assuming a flat universe with \(\varOmega _{M,0} =0.308\), \(\varOmega _{\lambda ,0} = 0.692\) and \(h = 0.678\) [55]. The energy range of the flux at Earth was chosen as \(10^4\)–\(10^7\,\mathrm {GeV}\) to calculate the effective muon neutrino luminosities of sources.

Generating pseudo-experiments with signal components corresponding to the flux distribution obtained from FIRESONG, 90% CL upper limits are calculated in the \(\rho _0^\mathrm {eff}\)–\(L_{\nu _\mu + \bar{\nu }_\mu }^\mathrm {eff}\) plane for various spectral indices assuming that background consists of atmospheric neutrinos only, as described in Sect. 4.2. The 90% CL upper limit is calculated based on the fact that the strongest source of a population does not give a p-value in the sky scan that is larger than the observed one. The 90% upper limits are shown as dashed lines in Fig. 13. In addition, 90% CL upper limits are calculated by comparing the largest excess measured with the population test in the sky scan. These 90% upper limits are shown as solid lines in Fig. 13. Populations that are compatible at the \(1\sigma \) and \(3\sigma \) level with the diffuse flux measured in [7] are shown as blue shaded band. 90% CL upper limits have been calculated assuming an \(E^{-2}\) power-law flux. The same has been performed for an \(E^{-2.19}\) power-law flux, which is the diffuse best-fit for this sample (this result can be found in the supplementary material). The computation of upper limits becomes very computing-intensive for large source densities. Therefore, the computation of the upper limits, resulting from the sky scan, are extrapolated to larger source densities (indicated by dotted line in Fig. 13). It can be seen that for large effective source densities and small effective luminosities, the limit resulting from the population analysis goes \(\propto 1 / L_{\nu _\mu + \bar{\nu }_\mu }^\mathrm {eff}\) which is the same scaling as one would expect from a diffuse flux. Indeed it is found that an excess of diffuse high-energy events, i.e. sources from which only one neutrino are detected, leads to a p-value excess in the population analysis. This is a result of taking the energy of the event into account in the likelihood. Limits from the hottest spot in the sky scan are a bit stronger for large effective luminosities while upper limits from the population test become stronger at about \(L_{\nu _\mu + \bar{\nu }_\mu }^\mathrm {eff} \sim 10^{52} \frac{\mathrm {erg}}{\mathrm {yr}}\).

6 Implications for individual source models

In Sect. 4.3, constraints on source fluxes assuming \(\mathrm {d}N / \mathrm {d}E_\nu \propto E_\nu ^{-2}\) have been calculated. However, more specific neutrino flux models can be obtained using \(\gamma \)-ray data. In pion decays, both neutrinos and \(\gamma \)-rays are produced. Thus \(\gamma \)-ray data can be used to construct models for neutrino emission under certain assumptions. Here, models for sources of the a priori source list are tested. For each model, the Model Rejection Factor (MRF) is calculated which is the ratio between the predicted flux and the 90% CL upper limit. In addition, the expected experimental result in the case of pure background is also calculated giving the MRF sensitivity. The energy range that contributes 90% to the sensitivity has been calculated by folding the differential discovery potential at the source position (similar to Fig. 4) with the flux prediction. Models for which the MRF sensitivity is larger than 10 are not discussed here.

The first source tested is the Crab Nebula, which is a Pulsar Wind Nebula (PWN) and the brightest source in TeV \(\gamma \)-rays. Despite the common understanding that the emission from PWNe is of leptonic nature, see e.g. [61], neutrinos can be produced by subdominant hadronic emission. Predictions for neutrino fluxes from the Crab Nebula are proposed, e.g. by Amato et al. [56] and Kappes et al. [57]. The prediction by Amato et al. assumes pion production is dominated by p–p interactions and the target density is given by \(n_t = 10\,\mu M_{N_\odot } R_\mathrm {pc}^{-3}\, \mathrm {cm}^{-3}\) with \(M_{N_\odot }\) the mass of the supernova ejecta in units of solar masses. Moreover, \(R_\mathrm {pc}\) is the radius of the supernova in units of \(\mathrm {pc}\) and \(\mu \) is an unknown factor of the order of \(1\le \mu \le 20\) that takes into account e.g. the intensity and structures of magnetic fields within the PWN. Here \(\mu =20\) and a proton luminosity of 60% of the total PWN luminosity for Lorentz factors of \(\varGamma = 10^4,\, 10^5,\,10^6,\,10^7\) are used to provide a result that is model-independent and complementary to [56]. The model prediction by Kappes et al., assumes a dominant production of \(\gamma \)-rays of the HESS \(\gamma \)-ray spectrum [62] by p–p interactions.

The model predictions, sensitivity and 90% CL upper limit are shown in Fig. 14 and are listed in Table 4. Sensitivity and upper limits are shown for the central energy range that contributes 90% to the sensitivity.

For the model of Kappes et al., the sensitivity is very close to the model prediction while for Amato et al. with \(\varGamma =10^7\), the sensitivity is a factor of three lower than the prediction. The 90% CL upper limits are listed in Table 4. They are slightly higher but still constrain the models by Amato et al.

Another very interesting class of sources are active galactic nuclei (AGN). Here, the models being tested come from Ref. [59] for Mrk 421, a BL Lacertae object (BL Lac) that was found in spatial and energetic agreement with a high-energy starting event and from Ref. [58] for 3C273 and 3C454.3 which are flat spectrum radio quasars (FSRQ). The models, sensitivities and 90% CL upper limits are shown in Fig. 15 and the MRF are listed in Table 4.

The sensitivities for 3C273 and Mrk 421 are well below the model prediction and the 90% CL upper limits are at about 40% of the model flux. For 3C454.3, the sensitivity is a factor 2.8 above the model prediction. Since 3C454.3 is one of the few sources with a local p-value below \(\sim 2.5\%\), the 90% CL upper limit is much larger.

Another tested model was derived for the source G40.5-0.5 which is a galactic supernova remnant [60]. This supernova remnant can be associated with the TeV source MGRO J1908\(+\)06 which is the second most significant source in the a priori source catalog, although the association of G40.5-0.5 with MGRO J1908\(+\)06 is not distinct [63]. In addition, the pulsar wind nebula powered by PSR J1907\(+\)0602 may contribute to the TeV emission of the MGRO J1908\(+\)06 region. However, here the tested model for the SNR G40.5-0.5 is adapted from Ref. [60]. The model, sensitivity and 90% CL upper limit are shown in Fig. 16 and are listed in Table 4.

Differential source flux for SNR G40.5-0.5. The solid line gives the model prediction, the thick line gives the 90% CL upper limit and the dashed line indicates the sensitivity flux. The 90% CL upper limit and sensitivity are shown in the energy range that contributes 90% to the sensitivity. G40.5-0.5 is associated with MGRO J1908\(+\)06

The sensitivity of this analysis is a factor 1.4 above the model prediction and not yet sensitive to this model. As MGRO J1908+06 is the second most significant source in the catalog, with a local p-value of \(<\,1\%\), the upper limit lies nearly a factor of five above the model prediction.

7 Conclusions

Eight years of IceCube data have been analyzed for a time-independent clustering of through-going muon neutrinos using an unbinned likelihood method. The analysis includes a full sky search of the Northern hemisphere down to a declination of \(-3^\circ \) for a significant hot spot as well as an analysis of a possible cumulative excess of a population of weak sources. Furthermore, source-candidates from an a priori catalog and a catalog of monitored sources are tested individually and again for a cumulative excess.

The analysis method has been optimized for the observed energy spectrum of high-energy astro-physical muon neutrinos [6] and a number of improvements with respect to the previously published search [16] have been incorporated. By implementing these improvements, a sensitivity increase of about 35% has been achieved.

No significant source was found in the full-sky scan of the Northern hemisphere and the search for significant neutrino emission from objects on a a priori source list results in a post-trial p-value of 23.7% (20.3%), compatible with background. Also the tests for populations of sub-threshold sources revealed no significant excess.

Three sources on the a priori source-list, 4C 38.41, MGRO J1908\(+\)06 and Cyg A, have pre-trial p-values of only about 1%. However, these excesses are not significant. The source TXS 0506\(+\)056 in the catalog of monitored sources has a p-value of 2.9 %. This is consistent with the time-integrated p-value in [8] for the assumed prior on the spectral index.

Based on these results, the most stringent limits on high-energy neutrino emission from point-like sources are obtained. In addition, models for neutrino emission from specific sources are tested. The model [56] for the Crab Nebula is excluded for \(\varGamma \ge 10^6 \) as well as the predictions for 3C273 [58] and Mrk 421 [59]. In addition to these specific models, an exclusion of source populations as a function of local source density and single-source luminosity are derived by calculating the source count distribution for a realistic cosmological evolution model.

Data Availability Statement

This manuscript has associated data in a data repository. [Authors’ comment: Data are publicly released on a regular basis by IceCube at https://icecube.wisc.edu/science/data/access/. The data used in this publication will be made available at this URL.]

Notes

In Ref. [7], the reconstructed zenith-energy distribution has been parametrized, although, due to IceCube’s unique position at the geographic South Pole the zenith can be directly converted to declination.

Hierarchical Equal Area isoLatitude Pixelation of a sphere (HEALPix), http://healpix.sourceforge.net/.

The background distribution of the local p-value \(p_\mathrm {local}\) for the most significant point is described by \(d\mathscr {P} = N (1-p_\mathrm {local})^{N-1} dp_\mathrm {local}\), with an effective number of trials N that is fitted to \(241,000\pm 9000\). A rough approximation of this trial factor can be calculated by dividing the solid angle of the Northern hemisphere \(\sim 2\pi \) by the squared median angular resolution. Considering that highest energy events dominate the sensitivity, we use \(0.3^\circ \) for the median angular resolution. Thus we get \(2\pi /(0.3^\circ )^2 \approx 229000\) effective trials, which is in the same order of magnitude as the determined value.

The 90% CL upper limit from Ref. [16] has been recalculated to account for an incorrect treatment of signal acceptance in the original publication.

FIRst Extragalactic Simulation Of Neutrinos and Gamma-rays (FIRESONG), https://github.com/ChrisCFTung/FIRESONG.

References

T.K. Gaisser, F. Halzen, T. Stanev, Phys. Rep. 258, 173 (1995) (erratum: Phys. Rep. 271, 355, 1996). arXiv:hep-ph/9410384

H. Athar, C.S. Kim, J. Lee, Mod. Phys. Lett. A 21, 1049 (2006). arXiv:hep-ph/0505017

M.G. Aartsen et al., (IceCube Collaboration). Science 342, 1242856 (2013). arXiv:1311.5238

C. Kopper (IceCube Collaboration), PoS ICRC2017, 981 (2017). arXiv:1710.01191

M.G. Aartsen et al., (IceCube Collaboration). Phys. Rev. Lett. 115, 081102 (2015). arXiv:1507.04005

M.G. Aartsen et al., (IceCube Collaboration). Astrophys. J. 833, 3 (2016). arXiv:1607.08006

C. Haack (IceCube Collaboration), PoS ICRC2017, 1005 (2017). arXiv:1710.01191

M.G. Aartsen et al., (IceCube Collaboration). Science 361, 147 (2018). arXiv:1807.08794

M. G. Aartsen et al., (Liverpool Telescope, MAGIC, H.E.S.S., AGILE, Kiso, VLA/17B-403, INTEGRAL, Kapteyn, Subaru, HAWC, Fermi-LAT, ASAS-SN, VERITAS, Kanata, IceCube, Swift NuSTAR), Science 361, eaat1378 (2018). arXiv:1807.08816

M.G. Aartsen et al., (IceCube Collaboration). Astrophys. J. 835, 45 (2017). arXiv:1611.03874

M.G. Aartsen et al., (IceCube Collaboration). Astrophys. J. 805, L5 (2015). arXiv:1412.6510

M. G. Aartsen et al., (IceCube Collaboration). Astrophys. J. 807, 46 (2015), arXiv:1503.00598

S. Adrián-Martínez et al., (ANTARES Collaboration & IceCube Collaboration). Astrophys. J. 823, 65 (2016). arXiv:1511.02149

M.G. Aartsen et al., (IceCube Collaboration). Astrophys. J. 824, L28 (2016). arXiv:1605.00163

M.G. Aartsen et al., (IceCube Collaboration), J. Inst. 11, P11009 (2016). arXiv:1610.01814

M.G. Aartsen et al., (IceCube Collaboration). Astrophys. J. 835, 151 (2017). arXiv:1609.04981

M.G. Aartsen et al., (IceCube Collaboration), Astrophys. J. 843, 112 (2017). arXiv:1702.06868

M.G. Aartsen et al., (IceCube Collaboration). A&A 607, A115 (2017). arXiv:1702.06131

A. Albert et al., (ANTARES Collaboration & IceCube Collaboration & LIGO Scientific Collaboration & Virgo Collaboration), Phys. Rev. D 96, 022005 (2017). arXiv:1703.06298

M.G. Aartsen et al., (IceCube Collaboration), Astrophys. J. 846, 136 (2017). arXiv:1705.02383

M.G. Aartsen et al., (IceCube Collaboration), Astrophys. J. 849, 67 (2017). arXiv:1707.03416

R. Abbasi et al., (IceCube Collaboration), Astrophys. J. 732, 18 (2011). arXiv:1012.2137

M.G. Aartsen et al., (IceCube Collaboration), Astrophys. J. 779, 132 (2013). arXiv:1307.6669

M.G. Aartsen et al., (IceCube Collaboration), Astrophys. J. 796, 109 (2014). arXiv:1406.6757

A. Achterberg et al., (IceCube Collaboration), Astropart. Phys. 26, 155 (2006). arXiv:astro-ph/0604450

M.G. Aartsen et al., (IceCube Collaboration), J. Inst. 12, P03012 (2017). arXiv:1612.05093

R. Abbasi et al., (IceCube Collaboration), Nucl. Instrum. Methods A 618, 139 (2010). arXiv:1002.2442

R. Abbasi et al., (IceCube Collaboration), Nucl. Instrum. Methods A 601, 294 (2009). arXiv:0810.4930

M.G. Aartsen et al., (IceCube Collaboration), Nucl. Instrum. Methods A 711, 73 (2013). arXiv:1301.5361

J. Ahrens et al., (AMANDA Collaboration), Nucl. Instrum. Methods A 524, 169 (2004). arXiv:astroph/0407044

K. Schatto, Stacked searches for high-energy neutrinos from blazars with IceCube. Ph.D. thesis, Johannes Gutenberg-Universität (2014)

J. Braun et al., Astropart. Phys. 29, 299 (2008). arXiv:0801.1604

R. Abbasi et al., (IceCube Collaboration), Nucl. Instrum. Methods A 703, 190 (2013). arXiv:1208.3430

T. Neunhöffer, Astropart. Phys. 25, 220 (2006). arXiv:astro-ph/0403367

K.M. Górski et al., Astrophys. J. 622, 759 (2005). arXiv:astro-ph/0409513

A. Kolmogorov, Inst. Ital. Attuari Giorn. 4, 83 (1933)

N.V. Smirnov, Bull. Math. Univ. Moscou 2, 3 (1939)

M.G. Aartsen et al., (IceCube Collaboration), J. Inst. 9, P03009 (2014). arXiv:1311.4767

L.B. Bezrukov, E.V. Bugaev, Sov. J. Nucl. Phys. 33, 1195 (1981)

E.V. Bugaev, Y.V. Shlepin, Nucl. Phys. B 122, 341 (2003)

E.V. Bugaev, Y.V. Shlepin, Phys. Rev. D 67, 034027 (2003). arXiv:hep-ph/0203096

E. Bugaev, T. Montaruli, Y. Shlepin, I. Sokalski, Astropart. Phys. 21, 491 (2004). arXiv:hep-ph/0312295

H. Abramowicz, E.M. Levin, A. Levy, U. Maor, Phys. Lett. B 269, 465 (1991)

H. Abramowicz , A. Levy, DESY 97-251. arXiv:hepph/9712415

J.-H. Koehne et al., Comput. Phys. Commun. 184, 2070 (2013)

F. Acero et al., (Fermi-LAT Collaboration), Astrophys. J. Suppl. 218, 23 (2015). arXiv:1501.02003

M. Ajello et al., (Fermi-LAT Collaboration), Astrophys. J. Suppl. 232, 18 (2017). arXiv:1702.00664

S.S. Wilks, Ann. Math. Stat. 9, 60 (1938)

T. Glauch, A. Turcati (IceCube Collaboration), PoS ICRC2017, 1014 (2017). arXiv:1710.01179

J. Neyman, Philos. Trans. R. Soc. A 236, 333 (1937)

IceCube Collaboration, GRB coordinates network. Circ. Serv. 21916 (2017)

I. Taboada, C.F. Tung, J. Wood (HAWC Collaboration), PoS ICRC2017, 663 (2017). arXiv:1801.09545

K. Murase, E. Waxman, Phys. Rev. D 94, 103006 (2016). arXiv:1607.01601

A.M. Hopkins, J.F. Beacom, Astrophys. J. 651, 142 (2006). arXiv:astro-ph/0601463

P.A.R. Ade et al., (Planck Collaboration), A&A 594, A13 (2016). arXiv:1502.01589

E. Amato, D. Guetta, P. Blasi, A&A 402, 827 (2003)

A. Kappes, J. Hinton, C. Stegmann, F.A. Aharonian, Astrophys. J. 661, 1348 (2007). arXiv:astroph/0607286

A. Reimer, PoS ICRC2015, 1123 (2015)

M. Petropoulou, S. Dimitrakoudis, P. Padovani, A. Mastichiadis, E. Resconi, Mon. Not. R. Astron. Soc. 448, 2412 (2015). arXiv:1501.07115

M. Mandelartz, J. Becker Tjus, Astropart. Phys. 65, 80 (2015). arXiv:1301.2437

J. Aleksić et al., (MAGIC Collaboration), JHEAp 5–6, 30 (2015). arXiv:1406.6892

F. Aharonian et al., (HESS Collaboration), A&A 457, 899 (2006). arXiv:astro-ph/0607333

E. Aliu et al., (VERITAS Collaboration), Astrophys. J. 787, 166 (2014). arXiv:1404.7185

Acknowledgements

The IceCube collaboration acknowledges the significant contributions to this manuscript from René Reimann. The authors gratefully acknowledge the support from the following agencies and institutions: USA – U.S. National Science Foundation-Office of Polar Programs, U.S. National Science Foundation-Physics Division, Wisconsin Alumni Research Foundation, Center for High Throughput Computing (CHTC) at the University of Wisconsin-Madison, Open Science Grid (OSG), Extreme Science and Engineering Discovery Environment (XSEDE), U.S. Department of Energy-National Energy Research Scientific Computing Center, Particle astrophysics research computing center at the University of Maryland, Institute for Cyber-Enabled Research at Michigan State University, and Astroparticle physics computational facility at Marquette University; Belgium – Funds for Scientific Research (FRS-FNRS and FWO), FWO Odysseus and Big Science programmes, and Belgian Federal Science Policy Office (Belspo); Germany – Bundesministerium für Bildung und Forschung (BMBF), Deutsche Forschungsgemeinschaft (DFG), Helmholtz Alliance for Astroparticle Physics (HAP), Initiative and Networking Fund of the Helmholtz Association, Deutsches Elektronen Synchrotron (DESY), and High Performance Computing cluster of the RWTH Aachen; Sweden – Swedish Research Council, Swedish Polar Research Secretariat, Swedish National Infrastructure for Computing (SNIC), and Knut and Alice Wallenberg Foundation; Australia – Australian Research Council; Canada – Natural Sciences and Engineering Research Council of Canada, Calcul Québec, Compute Ontario, Canada Foundation for Innovation, WestGrid, and Compute Canada; Denmark – Villum Fonden, Danish National Research Foundation (DNRF), Carlsberg Foundation; New Zealand – Marsden Japan – Japan Society for Promotion of Science (JSPS) and Institute for Global Prominent Research (IGPR) of Chiba University; Korea – National Research Foundation of Korea (NRF); Switzerland – Swiss National Science Foundation (SNSF).

Author information

Authors and Affiliations

Consortia

Appendices

Appendix A: Performance of individual sub-samples

The quality and statistical power of a sample, w.r.t. a search for point-like sources, can be characterized by the effective area of muon-neutrino and anti-neutrino detection, the point spread function and the central 90% energy range (see Sect. 2). As the data were taken with different partial configurations of IceCube, the details of the event selections are different for each season. In Fig. 1 the livetime average of all sub-samples is shown. In Fig. 17 the effective area, point spread function and central 90% energy range are shown for each sub-sample individually. The plot shows that – despite of different detector configurations and event selections – the characteristics of the event samples are similar.

Top: Muon neutrino and anti-neutrino effective area averaged over the Northern hemisphere as function of \(\log _{10}\) of neutrino energy. Middle: Median neutrino angular resolution as function of \(\log _{10}\) of neutrino energy. Bottom: Central 90% neutrino energy range for atmospheric (astrophysical) neutrinos as solid (dashed) line for each declination. Lines are labeled by there sub-season

Appendix B: Results for diffuse best-fit spectral index

An \(E^{-2}\) power-law is often used as benchmark model and for a comparison between publications. However, the diffuse best-fit spectral index is \(\gamma =2.19\), which is, given the uncertainties is not consistent with \(\gamma =2\). Therefore, the sensitivity and discovery potential for single point sources are recalculated using this spectral index. In Fig. 18, the sensitivity and discovery potential for an \(E^{-\gamma }\) spectrum are shown with \(\gamma =2.0\) and \(\gamma =2.19\). The flux normalization is evaluated at a pivot energy of \(100\,\mathrm {TeV}\). The sensitivity and discovery potential for the assumed spectral indices turn out to be very similar.

Sensitivity and discovery potential on the flux normalization at \(100\,\mathrm {TeV}\) for an \(E^{-\gamma }\) power-law spectrum. Lines are given for \(\gamma =2.0\) as in Fig. 3 and the diffuse best-fit spectral index of \(\gamma =2.19\)

Same as Fig. 13 but for \(\gamma =2.19\)

In addition also the 90% CL upper limit on source populations as described in Sect. 5 are recalculated for a spectral index of \(\gamma =2.19\). The upper limit are shown in Fig. 19. Comparing with Fig. 13, there is no strong indication of a dependence on the spectral index.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

IceCube Collaboration., Aartsen, M.G., Ackermann, M. et al. Search for steady point-like sources in the astrophysical muon neutrino flux with 8 years of IceCube data. Eur. Phys. J. C 79, 234 (2019). https://doi.org/10.1140/epjc/s10052-019-6680-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-019-6680-0