Abstract

We investigate the performance of a jet identification algorithm based on interaction networks (JEDI-net) to identify all-hadronic decays of high-momentum heavy particles produced at the LHC and distinguish them from ordinary jets originating from the hadronization of quarks and gluons. The jet dynamics are described as a set of one-to-one interactions between the jet constituents. Based on a representation learned from these interactions, the jet is associated to one of the considered categories. Unlike other architectures, the JEDI-net models achieve their performance without special handling of the sparse input jet representation, extensive pre-processing, particle ordering, or specific assumptions regarding the underlying detector geometry. The presented models give better results with less model parameters, offering interesting prospects for LHC applications.

Similar content being viewed by others

1 Introduction

Jets are collimated cascades of particles produced at particle accelerators. Quarks and gluons originating from hadron collisions, such as the proton-proton collisions at the CERN Large Hadron Collider (LHC), generate a cascade of other particles (mainly other quarks or gluons) that then arrange themselves into hadrons. The stable and unstable hadrons’ decay products are observed by large particle detectors, reconstructed by algorithms that combine the information from different detector components, and then clustered into jets, using physics-motivated sequential recombination algorithms such as those described in Refs. [1,2,3]. Jet identification, or tagging, algorithms are designed to identify the nature of the particle that initiated a given cascade, inferring it from the collective features of the particles generated in the cascade.

Pictorial representations of the different jet categories considered in this paper. Left: jets originating from quarks or gluons produce one cluster of particles, approximately cone-shaped, developing along the flight direction of the quark or gluon that started the cascade. Center: when produced with large momentum, a heavy boson decaying to quarks would result in a single jet, made of 2 particle clusters (usually referred to as prongs). Right: a high-momentum \(\mathrm {t} \rightarrow \mathrm {W} \mathrm {b} \rightarrow \mathrm {q} \overline{\mathrm {q}} ^{\prime }\mathrm {b} \) decay chain results in a jet composed of three prongs

Traditionally, jet tagging was meant to distinguish three classes of jets: light flavor quarks \(\mathrm {q} =\mathrm {u},\mathrm {d},\mathrm {s},\mathrm {c} \), gluons \(\mathrm {g} \), or bottom quarks (\(\mathrm {b} \)). At the LHC, due to the large collision energy, new jet topologies emerge. When heavy particles, e.g. \(\mathrm {W} \), \(\mathrm {Z} \), or Higgs (\(\mathrm {H} \)) bosons or the top quark, are produced with large momentum and decay to all-quark final states, the resulting jets are contained in a small solid angle. A single jet emerges from the overlap of two (for bosons) or three (for the top quark) jets, as illustrated in Fig. 1. These jets are characterized by a large invariant mass (computed from the sum of the four-momenta of their constituents) and they differ from ordinary quark and gluon jets, due to their peculiar momentum flow around the jet axis.

Several techniques have been proposed to identify these jets by using physics-motivated quantities, collectively referred to as “jet substructure” variables. A review of the different techniques can be found in Ref. [4]. As discussed in the review, approaches based on deep learning (DL) have been extensively investigated (see also Sect. 2), processing sets of physics-motivated quantities with dense layers or raw data representations (e.g. jet images or particle feature lists) with more complex architectures (e.g. convolutional or recurrent networks).

In this work, we compare the typical performance of some of these approaches to what is achievable with a novel jet identification algorithm based on an interaction network (JEDI-net). Interaction networks [5] (INs) were designed to decompose complex systems into distinct objects and relations, and reason about their interactions and dynamics. One of the first uses of INs was to predict the evolution of physical systems under the influence of internal and external forces, for example, to emulate the effect of gravitational interactions in n-body systems. The n-body system is represented as a set of objects subject to one-on-one interactions. The n bodies are embedded in a graph and these one-on-one interaction functions, expressed as trainable neural networks, are used to predict the post-interaction status of the n-body system. We study whether this type of network generalizes to a novel context in high energy physics. In particular, we represent a jet as a set of particles, each of which is represented by its momentum and embedded as a vertex in a fully-connected graph. We use neural networks to learn a representation of each one-on-one particle interactionFootnote 1 in the jet, which we then use to define jet-related high-level features (HLFs). Based on these features, a classifier associates each jet to one of the five categories shown in Fig. 1.

For comparison, we consider other classifiers based on different architectures: a dense neural network (DNN) [6] receiving a set of jet-substructure quantities, a convolutional neural network (CNN) [7,8,9] receiving an image representation of the transverse momentum (\(p_{\mathrm {T}} \)) flow in the jet,Footnote 2 and a recurrent neural network (RNN) with gated recurrent units [10] (GRUs), which process a list of particle features. These models can achieve state-of-the-art performance although they require additional ingredients: the DNN model requires processing the constituent particles to pre-compute HLFs, the GRU model assumes an ordering criterion for the input particle feature list, and the CNN model requires representing the jet as a rectangular, regular, pixelated image. Any of these aspects can be handled in a reasonable way (e.g. one can use a jet clustering metric to order the particles), sometimes sacrificing some detector performance (e.g., with coarser image pixels than realistic tracking angular resolution, in the case of many models based on CNN). It is then worth exploring alternative solutions that could reach state-of-the-art performance without making these assumptions. In particular, it is interesting to consider architectures that directly takes as input jet constituents and are invariant for their permutation. This motivated the study of jet taggers based on recursive [11], graph networks [12, 13], and energy flow networks [14]. In this context, we aim to investigate the potential of INs.

This paper is structured as follows: we provide a list of related works in Sect. 2. In Sect. 3, we describe the utilized data set. The structure of the JEDI-net model is discussed in Sect. 4 together with the alternative architectures considered for comparison. Results are shown in Sect. 5. Sections 6 and 7 discuss what the JEDI-net learns when processing the graph and quantify the amount of resources needed by the tagger, respectively. We conclude with a discussion and outlook for this work in Sect. 8. “Appendix A” describes the design and optimization of the alternative models.

2 Related work

Jet tagging is one of the most popular LHC-related tasks to which DL solutions have been applied. Several classification algorithms have been studied in the context of jet tagging at the LHC [15,16,17,18,19,20,21,22] using DNNs, CNNs, or physics-inspired architectures. Recurrent and recursive layers have been used to construct jet classifiers starting from a list of reconstructed particle momenta [11,12,13]. Recently, these different approaches, applied to the specific case of top quark jet identification, have been compared in Ref. [23]. While many of these studies focus on data analysis, work is underway to apply these algorithms in the early stages of LHC real-time event processing, i.e. the trigger system. For example, Ref. [24] focuses on converting these models into firmware for field programmable gate arrays (FPGAs) optimized for low latency (less than 1 \(\upmu \)s). If successful, such a program could allow for a more resource-efficient and effective event selection for future LHC runs.

Graph neural networks have also been considered as jet tagging algorithms [25, 26] as a way to circumvent the sparsity of image-based representations of jets. These approaches demonstrate remarkable categorization performance. Motivated by the early results of Ref. [25], graph networks have been also applied to other high energy physics tasks, such as event topology classification [27, 28], particle tracking in a collider detector [29], pileup subtraction at the LHC [30], and particle reconstruction in irregular calorimeters [31].

3 Data set description

This study is based on a data set consisting of simulated jets with an energy of \(p_{\mathrm {T}} \approx 1\) TeV, originating from light quarks \(\mathrm {q} \), gluons \(\mathrm {g} \), \(\mathrm {W} \) and \(\mathrm {Z} \) bosons, and top quarks produced in \(\sqrt{s} = 13\,\text {TeV} \) proton-proton collisions. The data set was created using the configuration and parametric description of an LHC detector described in Refs. [24, 32], and is available on the Zenodo platform [33,34,35,36].

Distributions of the 16 high-level features used in this study, described in Ref. [24]

Average \(100\times 100\) images for the five jet classes considered in this study: \(\mathrm {q} \) (top left), \(\mathrm {g} \) (top center), \(\mathrm {W} \) (top right), \(\mathrm {Z} \) (bottom left), and top jets (bottom right). The temperature map represents the amount of \(p_{\mathrm {T}} \) collected in each cell of the image, measured in GeV and computed from the scalar sum of the \(p_{\mathrm {T}} \) of the particles pointing to each cell

Example of \(100\times 100\) images for the five jet classes considered in this study: \(\mathrm {q} \) (top-left), \(\mathrm {g} \) (top-right), \(\mathrm {W} \) (center-left), \(\mathrm {Z} \) (center-right), and top jets (bottom). The temperature map represents the amount of \(p_{\mathrm {T}} \) collected in each cell of the image, measured in GeV and computed from the scalar sum of the \(p_{\mathrm {T}} \) of the particles pointing to each cell

Jets are clustered from individual reconstructed particles, using the anti-\(k_{\mathrm {T}} \) algorithm [3, 37] with jet-size parameter \(R=0.8\). Three different jet representations are considered:

A list of 16 HLFs, described in Ref. [24], given as input to a DNN. The 16 distributions are shown in Fig. 2 for the five jet classes.

An image representation of the jet, derived by considering a square with pseudorapidity and azimut distances \(\varDelta \eta =\varDelta \phi =2R\), centered along the jet axis. The image is binned into \(100\times 100\) pixels. Such a pixel size is comparable to the cell of a typical LHC electromagnetic calorimeter, but much coarser than the typical angular resolution of a tracking device for the \(p_{\mathrm {T}} \) values relevant to this task. Each pixel is filled with the scalar sum of the \(p_{\mathrm {T}} \) of the particles in that region. These images are obtained by considering the 150 highest-\(p_{\mathrm {T}} \) constituents for each jet. This jet representation is used to train a CNN classifier. The average jet images for the five jet classes are shown in Fig. 3. For comparison, a randomly chosen set of images is shown in Fig. 4.

A constituent list for up to 150 particles, in which each particle is represented by 16 features, computed from the particle four-momenta: the three Cartesian coordinates of the momentum (\(p_x\), \(p_y\), and \(p_z\)), the absolute energy E, \(p_{\mathrm {T}} \), the pseudorapidity \(\eta \), the azimuthal angle \(\phi \), the distance \(\varDelta R = \sqrt{\varDelta \eta ^2 + \varDelta \phi ^2}\) from the jet center, the relative energy \(E^{\mathrm {rel}} = E^\mathrm {particle}/E^\mathrm {jet}\) and relative transverse momentum \(p_{\mathrm {T}} ^{\mathrm {rel}} = p_{\mathrm {T}} ^\mathrm {particle}/p_{\mathrm {T}} ^\mathrm {jet}\) defined as the ratio of the particle quantity and the jet quantity, the relative coordinates \(\eta ^{\mathrm {rel}}=\eta ^\mathrm {particle} - \eta ^\mathrm {jet}\) and \(\phi ^{\mathrm {rel}}=\phi ^\mathrm {particle} - \phi ^\mathrm {jet}\) defined with respect to the jet axis, \(\cos {\theta }\) and \(\cos {\theta ^{\mathrm {rel}}}\) where \(\theta ^\mathrm {rel} = \theta ^\mathrm {particle} - \theta ^\mathrm {jet}\) is defined with respect to the jet axis, and the relative \(\eta \) and \(\phi \) coordinates of the particle after applying a proper Lorentz transformation (rotation) as described in Ref. [38]. Whenever less than 150 particles are reconstructed, the list is filled with zeros. The distributions of these features considering the 150 highest-\(p_{\mathrm {T}} \) particles in the jet are shown in Fig. 5 for the five jet categories. This jet representation is used for a RNN with a GRU layer and for JEDI-net.

4 JEDI-net

In this work, we apply an IN [5] architecture to learn a representation of a given input graph (the set of constituents in a jet) and use it to accomplish a classification task (tagging the jet). One can see the IN architecture as a processing algorithm to learn a new representation of the initial input. This is done replacing a set of input features, describing each individual vertex of the graph, with a set of engineered features, specific of each vertex but whose values depend on the connection between the vertices in the graph.

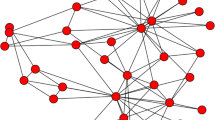

The starting point consists of building a graph for each input jet. The \(N_O\) particles in the jet are represented by the vertices of the graph, fully interconnected through directional edges, for a total of \(N_E = N_O \times (N_O-1)\) edges. An example is shown in Fig. 6 for the case of a three-vertex graph. The vertices and edges are labeled for practical reasons, but the network architecture ensures that the labeling convention plays no role in creating the new representation.

Once the graph is built, a receiving matrix (\(R_R\)) and a sending matrix (\(R_S\)) are defined. Both matrices have dimensions \(N_O \times N_E\). The element \((R_R)_{ij}\) is set to 1 when the \(i^{\mathrm {th}}\) vertex receives the \(j^{\mathrm {th}}\) edge and is 0 otherwise. Similarly, the element \((R_S)_{ij}\) is set to 1 when the \(i^{\mathrm {th}}\) vertex sends the \(j^{\mathrm {th}}\) edge and is 0 otherwise. In the case of the graph of Fig. 6, the two matrices take the form:

The input particle features are represented by an input matrix I. Each column of the matrix corresponds to one of the graph vertices, while the rows correspond to the P features used to represent each vertex. In our case, the vertices are the particles inside the jet, each represented by its array of features (i.e., the 16 features shown in Fig. 5). Therefore, the I matrix has dimensions \(P \times N_O\).

The I matrix is processed by the IN in a series of steps, represented in Fig. 7. The I matrix is multiplied by the \(R_R\) and \(R_S\) matrices and the two resulting matrices are then concatenated to form the B matrix, having dimension \(2P \times N_E\):

Each column of the B matrix represents an edge, i.e. a particle-to-particle interaction. The 2P elements of each column are the features of the sending and receiving vertices for that edge. Using this information, a \(D_E\)-dimensional hidden representation of the interaction edge is created through a trainable function \(f_R: \mathbb {R}^{2P} \mapsto \mathbb {R}^{D_E}\). This gives a matrix E with dimensions \(D_E \times N_E\). The cumulative effects of the interactions received by a given vertex are gathered by summing the \(D_E\) hidden features over the edges arriving to it. This is done by computing \(\overline{E} = E R_R^\top \) with dimensions \(D_E \times N_O\), which is then appended to the initial input matrix I:

At this stage, each column of the C matrix represents a constituent in the jet, expressed as a \((P+D_E)\)-dimensional feature vector, containing the P input features and the \(D_E\) hidden features representing the combined effect of the interactions with all the connected particles. A trainable function \(f_O: \mathbb {R}^{P + D_E} \mapsto \mathbb {R}^{D_O}\) is used to build a post-interaction representation of each jet constituent. The function \(f_O\) is applied to each column of C to build the post-interaction matrix O with dimensions \(D_O \times N_O\).

A final classifier \(\phi _C\) takes as input the elements of the O matrix and returns the probability for that jet to belong to each of the five categories. This is done in two ways: (i) in one case, we define the quantities \(\overline{O}_i = \sum _j O_{ij}\), where j is the index of the vertex in the graph (the particle, in our case), and the \(i\in [0, D_E]\) index runs across the \(D_E\) outputs of the \(f_O\) function. The \(\overline{O}\) quantities are used as input to \(\phi _C: \mathbb {R}^{D_O} \mapsto \mathbb {R}^{N}\). This choice allows to preserve the independence of the architecture on the labeling convention adopted to build the I, \(R_R\), and \(R_S\) matrices, at the cost of losing some discriminating information in the summation. (ii) Alternatively, the \(\phi _C\) matrix is defined directly from the \(D_O \times N_O\) elements of the O matrix, flattened into a one-dimensional array. The full information from O is preserved, but \(\phi _C\) assumes an ordering of the \(N_O\) input objects. In our case, we rank the input particles in descending order by \(p_{\mathrm {T}} \).

The trainable functions \(f_O\), \(f_R\), and \(\phi _C\) consist of three DNNs. Each of them has two hidden layers, the first (second) having \(N_n^1\) (\(N_n^2 = \lfloor N_n^1/2 \rfloor \)) neurons. The model is implemented in PyTorch [39] and trained using an NVIDIA GTX1080 GPU. The training (validation) data set consists of 630,000 (240,000) examples, while 10,000 events are used for testing purposes.

The architecture of the three trainable functions is determined by minimizing the loss function through a Bayesian optimization, using the GpyOpt library [40], based on Gpy [41]. We consider the following hyperparameters:

The number of output neurons of the \(f_R\) network, \(D_E\) (between 4 and 14).

The number of output neurons of the \(f_O\) network, \(D_O\) (between 4 and 14).

The number of neurons \(N_n^1\) in the first hidden layer of the \(f_O\), \(f_R\), and \(\phi _C\) network (between 5 and 50).

The activation function for the hidden and output layers of the \(f_R\) network: ReLU [42], ELU [43], or SELU [44] functions.

The activation function for the hidden and output layers of the \(f_O\) network: ReLU, ELU, or SELU.

The activation function for the hidden layers of the \(\phi _C\) network: ReLU, ELU, or SELU.

In addition, the output neurons of the \(\phi _C\) network are activated by a softmax function. A learning rate of \(10^{-4}\) is used. For a given network architecture, the network parameters are optimized by minimizing the categorical cross entropy. The Bayesian optimization is repeated four times. In each case, the input particles are ordered by descending \(p_{\mathrm {T}} \) value and the first 30, 50, 100, or 150 particles are considered. The parameter optimization is performed on the training data set, while the loss for the Bayesian optimization is estimated on the validation data set.

Tables 1 and 2 summarize the result of the Bayesian optimization for the JEDI-net architecture with and without the sum over the columns of the O matrix, respectively. The best result of each case, highlighted in bold, is used as a reference for the rest of the paper.

For comparison, three alternative models are trained on the three different representations of the same data set described in Sect. 3: a DNN model taking as input a list of HLFs, a CNN model processing jet images, and a recurrent model applying GRUs on the same input list used for JEDI-net. The three benchmark models are optimized through a Bayesian optimization procedure, as done for the INs. Details of these optimizations and the resulting best models are discussed in “Appendix A”.

5 Results

Figure 8 shows the receiver operating characteristic (ROC) curves obtained for the optimized JEDI-net tagger in each of the five jet categories, compared to the corresponding curves for the DNN, CNN, and GRU alternative models. The curves are derived by fixing the network architectures to the optimal values based on Table 2 and “Appendix A” and performing a k-fold cross-validation training, with \(k=10\). The solid lines represent the average ROC curve, while the shaded bands quantify the \(\pm 1\) RMS dispersion. The area under the curve (AUC) values, reported in the figure, allow for a comparison of the performance of the different taggers.

The algorithm’s tagging performance is quantified computing the true positive rate (TPR) values for two given reference false positive rate (FPR) values (10% and 1%). The comparison of the TPR values gives an assessment of the tagging performance in a realistic use case, typical of an LHC analysis. Table 3 shows the corresponding FPR values for the optimized JEDI-net taggers, compared to the corresponding values for the benchmark models. The largest TPR value for each class is highlighted in bold. As shown in Fig. 8 and Table 3, the two JEDI-net models outperform the other architectures in almost all cases. The only notable exception is the tight working point of the top-jet tagger, for which the DNN model gives a TPR higher by about \(2\%\), while the CNN and GRU models give much worse performance.

The TPR values for the two JEDI-net models are within \(1\%\). The only exception is observed for the tight working points of the \(\mathrm {W} \) and \(\mathrm {Z} \) taggers, for which the model using the \(\overline{O}\) sums shows a drop in TPR of \(\sim 4\%\). In this respect, the model using summed \(\overline{O}\) features is preferable (despite this small TPR loss), given the reduced model complexity (see Sect. 7) and its independence on the labeling convention for the particles embedded in the graph and for the edges connecting them.

ROC curves for JEDI-net and the three alternative models, computed for gluons (top-left), light quarks (top-right), \(\mathrm {W} \) (center-left) and \(\mathrm {Z} \) (center-right) bosons, and top quarks (bottom). The solid lines represent the average ROC curves derived from 10 k-fold trainings of each model. The shaded bands around the average lines are represent one standard deviation, computed with the same 10 k-fold trainings

Two-dimensional distributions between (top to bottom) \(\overline{O}_1\) and constituents multiplicty, \(\overline{O}_4\) and \(\tau _1^{(\beta =2)}\), \(\overline{O}_2\) and \(\tau _3^{(\beta =1)}\), \(\overline{O}_9\) and \(\tau _3^{(\beta =2)}\), for jets originating from (right to left) gluons, light flavor quarks, \(\mathrm {W} \) bosons, \(\mathrm {Z} \) bosons, and top quarks. For each distribution, the linear correlation coefficient \(\rho \) is reported

6 What did JEDI-net learn?

In order to characterize the information learned by JEDI-net, we consider the \(\overline{O}\) sums across the \(N_O\) vertices of the graph (see Sect. 4) and we study their correlations to physics motivated quantities, typically used when exploiting jet substructure in a search. We consider the HLF quantities used for the DNN model and the N-subjettiness variables \(\tau _N^{(\beta )}\) [47], computed with angular exponent \(\beta =1,2\).

Not all the \(\overline{O}\) sums exhibit an obvious correlation with the considered quantities, i.e., the network engineers high-level features that encode other information than what is used, for instance, in the DNN model.

Nevertheless, some interesting correlation pattern between the physics motivated quantities and the \(\overline{O}_i\) sums is observed. The most relevant examples are given in Fig. 9, where the 2D histograms and the corresponding linear correlation coefficient (\(\rho \)) are shown. The correlation between \(\overline{O}_1\) and the particle multiplicity in the jet is not completely unexpected. As long as the O quantities aggregated across the graph have the same order of magnitude, the corresponding sum \(\overline{O}\) would be proportional to jet-constituent multiplicity.

The strong correlation between the \(\overline{O}_4\) and \(\tau ^{(\beta =2)}_{1}\) (with \(\rho \) values between 0.69 and 0.97, depending on the jet class) is much less expected. The \(\tau _1^\beta \) quantities assume small values when the jet constituents can be arranged into a single sub-jet inside the jet. Aggregating information from the constituent momenta across the jet, the JEDI-net model based on the \(\overline{O}\) quantities learns to build a quantity very close to \(\tau ^{(\beta =2)}_1\). The last two rows of Fig. 9 show two intermediate cases: the correlation between \(\overline{O}_2\) and \(\tau ^{(\beta =1)}_3\) and between \(\overline{O}_9\) and \(\tau ^{(\beta =2)}_3\). The two \(\overline{O}\) sums considered are correlated to the corresponding substructure quantities, but with smaller (within 0.48 and 0.77) correlation coefficients.

7 Resource comparison

Table 4 shows a comparison of the computational resources needed by the different models discussed in this paper. The best-performing JEDI-net model has more than twice the number of trainable parameters than the DNN and GRU model, but approximately a factor of 6 less parameters than the CNN model. The JEDI-net model based on the summed \(\overline{O}\) features achieves comparable performance with about a factor of 4 less parameters, less than the DNN and GRU models. While being far from expensive in terms of number of parameters, the JEDI-net models are expensive in terms of the number of floating point operations (FLOP). The simple model based on \(\overline{O}\) sums, using as input a sequence of 150 particles, uses 458 MFLOP. The increase is mainly due to the scaling with the number of vertices in the graph. Many of these operations are the \(0\times \) and \(1\times \) products involving the elements of the \(R_R\) and \(R_S\) matrices. The cost of these operations could be reduced with an IN implementation optimized for inference, e.g., through an efficient sparse-matrix representation.

In addition, we quote in Table 4 the average inference time on a GPU. The inference time is measured applying the model to 1000 events, as part of a Python application based on TensorFlow [48]. To this end, the JEDI-net models, implemented and trained in PyTorch, are exported to ONNX [49] and then loaded as TensorFlow graph. The quoted time includes loading the data, which occurs for the first inference and is different for different event representations, that is smaller for the JEDI-net models than for the CNN models. The GPU used is an NVIDIA GTX 1080 with 8 GB memory, mounted on a commercial desktop with an Intel Xeon CPU, operating at a frequency of 2.60 GHz. The tests were executed in Python 3.7, with no other concurrent process running on the machine. Given the larger number of operations, the GPU inference time for the two IN models is much larger than for the other models.

The current IN algorithm is costly to deploy in the online selection environment of a typical LHC experiment. A dedicated R&D effort is needed to reduce the resource consumption in a realistic environment in order to benefit from the improved accuracy that INs can achieve. For example, one could trade model accuracy for reduced resource needs by applying neural network pruning [50, 51], reducing the numerical precision [52, 53], and limiting the maximum number of particles in each jet representation.

8 Conclusions

This paper presents JEDI-net, a jet tagging algorithm based on interaction networks. Applied to a data set of jets from light-flavor quarks, gluons, vector bosons, and top quarks, this algorithm achieves better performance than models based on dense, convolutional, and recurrent neural networks, trained and optimized with the same procedure on the same data set. As other graph networks, JEDI-net offers several practical advantages that make it particularly suitable for deployment in the data-processing workflows of LHC experiments: it can directly process the list of jet constituent features (e.g. particle four-momenta), it does not assume specific properties of the underlying detector geometry, and it is insensitive to any ordering principle applied to the input jet constituents. For these reasons, the implementation of this and other graph networks is an interesting prospect for future runs of the LHC. On the other hand, the current implementation of this model demands large computational resources and a large inference time, which make the use of these models problematic for real-time selection and calls for a dedicated program to optimize the model deployment on typical L1 and HLT environments.

The quantities engineered by one of the trained IN models exhibit interesting correlation patterns with some of the jet substructure quantities proposed in literature, showing that the model is capable of learning some of the relevant physics in the problem. On the other hand, some of the engineered quantities do not exhibit striking correlation patterns, implying the possibility of a non trivial insight to be gained by studying these quantities.

Notes

Here, we refer to the abstract message-passing interaction represented by the edges of the graph and not the physical interactions due to quantum chromodynamics, which occur before the jet constituents emerge from the hadronization process.

We use a Cartesian coordinate system with the z axis oriented along the beam axis, the x axis on the horizontal plane, and the y axis oriented upward. The x and y axes define the transverse plane, while the z axis identifies the longitudinal direction. The azimuthal angle \(\phi \) is computed from the x axis. The polar angle \(\theta \) is used to compute the pseudorapidity \(\eta = -\log (\tan (\theta /2))\). We use natural units such that \(c=\hbar =1\) and we express energy in units of electronVolt (eV) and its prefix multipliers.

References

Y.L. Dokshitzer, G.D. Leder, S. Moretti, B.R. Webber, JHEP 08, 001 (1997). https://doi.org/10.1088/1126-6708/1997/08/001

S. Catani, Y.L. Dokshitzer, M.H. Seymour, B.R. Webber, Nucl. Phys. B 406, 187 (1993). https://doi.org/10.1016/0550-3213(93)90166-M

M. Cacciari, G.P. Salam, G. Soyez, JHEP 04, 063 (2008). https://doi.org/10.1088/1126-6708/2008/04/063

A.J. Larkoski, I. Moult, B. Nachman, (2017). arXiv:1709.04464

P.W. Battaglia, R. Pascanu, M. Lai, D. Rezende, K. Kavukcuoglu, (2016). arXiv:1612.00222

J. Orbach, Arch. Gen. Psychiatry 7(3), 218 (1962). https://doi.org/10.1001/archpsyc.1962.01720030064010

K. Fukushima, Biol. Cybern. 36, 193 (1980)

Y.L. Cun, B. Boser, J.S. Denker, R.E. Howard, W. Habbard, L.D. Jackel, D. Henderson, Chap. Handwritten Digit Recognition with a Back-propagation Network (Morgan Kaufmann Publishers Inc., San Francisco, 1990), pp. 396–404

A. Waibel, T. Hanazawa, G. Hinton, K. Shikano, K.J. Lang, Chap. Phoneme Recognition Using Time-delay Neural Networks (Morgan Kaufmann Publishers Inc., San Francisco, 1990), pp. 393–404

K. Cho, B. van Merrienboer, D. Bahdanau, Y. Bengio, CoRR (2014). arXiv:1409.1259

G. Louppe, K. Cho, C. Becot, K. Cranmer, JHEP 01, 057 (2019). https://doi.org/10.1007/JHEP01(2019)057

S. Egan, W. Fedorko, A. Lister, J. Pearkes, C. Gay (2017). arXiv:1711.09059

T. Cheng, Comput. Softw. Big Sci. 2(1), 3 (2018). https://doi.org/10.1007/s41781-018-0007-y

P.T. Komiske, E.M. Metodiev, J. Thaler, JHEP 01, 121 (2019). https://doi.org/10.1007/JHEP01(2019)121

L. de Oliveira, M. Kagan, L. Mackey, B. Nachman, A. Schwartzman, JHEP 07, 069 (2016). https://doi.org/10.1007/JHEP07(2016)069

D. Guest, J. Collado, P. Baldi, S.C. Hsu, G. Urban, D. Whiteson, Phys. Rev. D 94(11), 112002 (2016). https://doi.org/10.1103/PhysRevD.94.112002

S. Macaluso, D. Shih, JHEP 10, 121 (2018). https://doi.org/10.1007/JHEP10(2018)121

K. Datta, A.J. Larkoski, JHEP 03, 086 (2018). https://doi.org/10.1007/JHEP03(2018)086

A. Butter, G. Kasieczka, T. Plehn, M. Russell, SciPost Phys. 5(3), 028 (2018). https://doi.org/10.21468/SciPostPhys.5.3.028

G. Kasieczka, T. Plehn, M. Russell, T. Schell, JHEP 05, 006 (2017). https://doi.org/10.1007/JHEP05(2017)006

P.T. Komiske, E.M. Metodiev, M.D. Schwartz, JHEP 01, 110 (2017). https://doi.org/10.1007/JHEP01(2017)110

A. Schwartzman, M. Kagan, L. Mackey, B. Nachman, L. De Oliveira, J. Phys. Conf. Ser. 762(1), 012035 (2016). https://doi.org/10.1088/1742-6596/762/1/012035

A. Butter et al., SciPost Phys. 7, 014 (2019). https://doi.org/10.21468/SciPostPhys.7.1.014

J. Duarte et al., JINST 13(07), P07027 (2018). https://doi.org/10.1088/1748-0221/13/07/P07027

I. Henrion, et al., In Proceedings of the Deep Learning for Physical Sciences Workshop at NIPS (2017). https://dl4physicalsciences.github.io/files/nips_dlps_2017_29.pdf

H. Qu, L. Gouskos, (2019). arXiv:1902.08570

M. Abdughani, J. Ren, L. Wu, J.M. Yang, J. High Energy Phys. 2019, (2019). https://doi.org/10.1007/JHEP08(2019)055

N. Choma, F. Monti, L. Gerhardt, T. Palczewski, Z. Ronaghi, P. Prabhat, W. Bhimji, M. Bronstein, S. Klein, J. Bruna, 386–391 (2018). https://doi.org/10.1109/ICMLA.2018.00064

S. Farrell, et al., In 4th International Workshop Connecting The Dots 2018 (CTD2018) (Seattle, Washington, 2018(20-22 Mar 2018)

J. Arjona Martínez, O. Cerri, M. Pierini, M. Spiropulu, J.R. Vlimant, Eur. Phys. J. Plus 134(7), 333 (2019). https://doi.org/10.1140/epjp/i2019-12710-3

S.R. Qasim, J. Kieseler, Y. Iiyama, M. Pierini, Eur. Phys. J. C 79(7), 608 (2019). https://doi.org/10.1140/epjc/s10052-019-7113-9

E. Coleman, M. Freytsis, A. Hinzmann, M. Narain, J. Thaler, N. Tran, C. Vernieri, JINST 13(01), T01003 (2018). https://doi.org/10.1088/1748-0221/13/01/T01003

J.M. Duarte, et al., HLS4ML LHC Jet dataset (30 particles) (Zenodo, 2020). https://doi.org/10.5281/zenodo.3601436

J.M. Duarte, et al., HLS4ML LHC Jet dataset (50 particles) (Zenodo, 2020). https://doi.org/10.5281/zenodo.3601443

J.M. Duarte, et al., HLS4ML LHC Jet dataset (100 particles) (Zenodo, 2020). https://doi.org/10.5281/zenodo.3602254

J.M. Duarte, et al., HLS4ML LHC Jet dataset (150 particles) (Zenodo, 2020). https://doi.org/10.5281/zenodo.3602260

M. Cacciari, G.P. Salam, G. Soyez, Eur. Phys. J. C 72, 1896 (2012). https://doi.org/10.1140/epjc/s10052-012-1896-2

J. Pearkes, W. Fedorko, A. Lister, C. Gay, (2017). arXiv:1704.02124

A. Paszke, et al., In NIPS-W (2017). https://openreview.net/pdf?id=BJJsrmfCZ

The GPyOpt authors. GPyOpt: A bayesian optimization framework in python (2016). http://github.com/SheffieldML/GPyOpt

GPy. GPy: A gaussian process framework in python (2012). http://github.com/SheffieldML/GPy

V. Nair, G.E. Hinton, In Proceedings of ICML, vol. 27 (2010), pp. 807–814

D. Clevert, T. Unterthiner, S. Hochreiter, CoRR (2015). arXiv:1511.07289

G. Klambauer, T. Unterthiner, A. Mayr, S. Hochreiter, CoRR (2017). arXiv:1706.02515

D.P. Kingma, J. Ba, CoRR (2014). arXiv:1412.6980

M.D. Zeiler, CoRR (2012). arXiv:1212.5701

J. Thaler, K. Van Tilburg, JHEP 03, 015 (2011). https://doi.org/10.1007/JHEP03(2011)015

M. Abadi et al., TensorFlow: Large-scale machine learning on heterogeneous systems (2015). Software available from https://tensorflow.org

J. Bai, F. Lu, K. Zhang et al., Onnx: Open neural network exchange (2019). https://github.com/onnx/onnx

Y. LeCun, J.S. Denker, S.A. Solla, In Advances in Neural Information Processing Systems 2, ed. by D.S. Touretzky (Morgan-Kaufmann, 1990), pp. 598–605. https://openreview.net/pdf?id=OM0jvwB8jIp57ZJjtNEZ

S. Han, H. Mao, W.J. Dally, CoRR (2015). arXiv:1510.00149

Y. Cheng, D. Wang, P. Zhou, T. Zhang, CoRR (2017). arXiv:1710.09282

S. Gupta, A. Agrawal, K. Gopalakrishnan, P. Narayanan, CoRR (2015). arXiv:1502.02551

T. Dozat, In ICLR Workshop (2016)

Acknowledgements

We are grateful to Caltech and the Kavli Foundation for their support of undergraduate student research in cross-cutting areas of machine learning and domain sciences. We would also like to thank the Taylor W. Lawrence Research Fellowship and Mellon Mays Fellowship for supporting E. A. M. and making this research effort possible. This work was conducted at “iBanks,” the AI GPU cluster at Caltech. We acknowledge NVIDIA, SuperMicro and the Kavli Foundation for their support of “iBanks”. This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement \(\hbox {n}^o\) 772369) and is partially supported by the U.S. Department of Energy, Office of High Energy Physics Research under Caltech Contract No. DE-SC0011925. J. M. D. is supported by Fermi Research Alliance, LLC under Contract No. DE-AC02-07CH11359 with the U.S. Department of Energy, Office of Science, Office of High Energy Physics.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix

Alternative models

The three benchmark models considered in this work are derived through a Bayesian optimization of their hyperparameters, performed using the GpyOpt library [40], based on Gpy [41]. For each iteration, the training is performed using early stopping to prevent over-fitting and to allow a fair comparison between different configurations. The data set for training (validation) consists of 630,000 (240,000) jets, with 10,000 jets used for testing purposes. The loss for the Bayesian optimization is estimated on the validation data set. The CNN and GRU networks are trained on four different input data sets, obtained considering the first 30, 50, 100, or 150 highest-\(p_{\mathrm {T}} \) jet constituents. The DNN model is trained on quantities computed from the full list of particles.

The DNN model consists on a multilayer perceptron, alternating dense layers to dropout layers. The optimal architecture is determined optimizing the following hyperparameters:

Number of dense layers (\(N_{DL}\)) between 1 and 3.

Number of neurons per dense layer (\(n_{n}\)): \(10, 20, \ldots , 100\).

Activation functions for the dense layers: ReLU, ELU, or SELU.

Dropout rate: Between 0.1 and 0.4.

Batch size: 50, 100, 200, or 500.

Optimization algorithm: Adam, Nadam [54], or AdaDelta.

The optimization process gives as output an optimal architecture with three hidden layers of 80 neurons each, activated by ELU functions. The best dropout rate is found to be 0.11, when a batch size of 50 and the Adam optimizer are used. This optimized network gives a loss of 0.66 and an accuracy of 0.76.

The CNN model consists of two-dimensional convolutional layers with batch normalization, followed by a set of dense layers. A \(2\times 2\) max pooling layer is applied after the fist convolutional layer. The optimal architecture is derived optimizing the following hyperparameters:

Number of convolutional layers \(N_{CL}\) between 1 and 3.

Number of convolutional filters \(n_{f}\) in each layer (10, 15, 20, 25, or 30).

Convolutional filter size: \(3\times 3\), \(5\times 5\), \(7\times 7\), or \(9\times 9\).

Max pooling filter size: \(2\times 2\), \(3\times 3\), or \(5\times 5\).

Activation functions for the convolutional layers (ReLU, ELU, or SELU).

Number of dense layers \(N_{DL}\) between 1 and 3.

Number of neurons \(n_{n}\) per dense layer: \(10, 20, \ldots , 60\).

Activation functions for the dense layers: ReLU, ELU, or SELU.

Dropout rate: Between 0.1 and 0.4.

Batch size: 50, 100, 200, or 500.

Optimization algorithm: Adam, Nadam, or AdaDelta.

The stride of the convolutional filters is fixed to 1 and “same” padding is used. Table 5 shows the optimal sets of hyperparameter values, obtained for the four different data set representations. While the optimal networks are equivalent in performance, we select the network obtained for \(\le 50\) constituents, because it has the smallest number of parameters.

The recurrent model consists of a GRU layer feeding a set of dense layers. The following hyperparameters are considered:

Number of GRU units: 50, 100, 200, 300, 400, or 500.

Activation functions for the GRU layers: ReLU, ELU, or SELU.

Number of dense layers: Between 1 and 4.

Number of neurons per dense layer: 10, 20, \(\ldots \), 100.

Activation functions for the dense layers: ReLU, ELU, or SELU.

Dropout rate: between 0.1 and 0.4.

Batch size: 50, 100, 200, or 500.

Optimization algorithm: Adam, Nadam, or AdaDelta.

The best hyperparameter values are listed in Table 6. As for the CNN model, the best performance is obtained when the list of input particles is truncated at 50 elements.

Performance on public top tagging data set

In this appendix, we retrain and evaluate the performance of JEDI-net on a public top tagging data set [19, 23] used to benchmark many neural networks architectures for the task of differentiating top quark jets from light quark jets. To select the hyperparameters of the model (with and without the sum over particles), we performed a Bayesian optimization. We scan \(N_n^1\) from 16 to 256, \(D_E\) from 4 to 64, \(D_O\) from 4 to 64, ReLU, ELU, or SELU activation functions for \(f_R\), \(f_O\), and \(\phi _C\), and either the Adam or Adadelta optimizers with an initial learning rate of \(10^{-3}\). We report three metrics for the performance of the network on the top tagging data set: model accuracy, area under the ROC curve (AUC), and background rejection power at a fixed signal efficiency of 30%, \(1/\epsilon _B (\epsilon _S=30\%)\). In Table 7, the accuracy, AUC, and \(1/\epsilon _B (\epsilon _S=30\%)\) values are listed for each model considered. The performance of JEDI-net compared to other models developed for this data set is approaching state-of-the-art [23].

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Moreno, E.A., Cerri, O., Duarte, J.M. et al. JEDI-net: a jet identification algorithm based on interaction networks. Eur. Phys. J. C 80, 58 (2020). https://doi.org/10.1140/epjc/s10052-020-7608-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-020-7608-4