Abstract

Additional jet activity in dijet events is measured using \({pp}\) collisions at ATLAS at a centre-of-mass energy of \(7\,\mathrm{TeV}\), for jets reconstructed using the \({\mathrm{anti}\hbox {-}{k_t}}\) algorithm with radius parameter \({R=0.6}\). This is done using variables such as the fraction of dijet events without an additional jet in the rapidity interval bounded by the dijet subsystem and correlations between the azimuthal angles of the dijets. They are presented, both with and without a veto on additional jet activity in the rapidity interval, as a function of the scalar average of the transverse momenta of the dijets and of the rapidity interval size. The double-differential dijet cross section is also measured as a function of the interval size and the azimuthal angle between the dijets. These variables probe differences in the approach to resummation of large logarithms when performing QCD calculations. The data are compared to powheg, interfaced to the pythia 8 and herwig parton shower generators, as well as to hej with and without interfacing it to the ariadne parton shower generator. None of the theoretical predictions agree with the data across the full phase-space considered; however, powheg+pythia 8 and hej+ariadne are found to provide the best agreement with the data. These measurements use the full data sample collected with the ATLAS detector in \(7\,\mathrm{TeV}\) \({pp}\) collisions at the LHC and correspond to integrated luminosities of \(36.1\,\mathrm{pb}^{-1}\) and \(4.5\,\mathrm{fb}^{-1}\) for data collected during 2010 and 2011, respectively.

1 Introduction

The Large Hadron Collider (LHC) has opened up a new kinematic regime in which to test perturbative QCD (pQCD) using measurements of jet production. Next-to-leading-order QCD predictions for inclusive jet and dijet cross sections have been found to describe the data at the highest measured energies [1–7]. However, purely fixed-order calculations are expected to describe the data poorly wherever higher-order corrections to a given observable are important. In such cases, higher orders in perturbation theory must be resummed. This resummation is typically performed either in terms of \({\ln (1/ x)}\), where \({x}\) is Bjorken-\(x\) (the Balitsky–Fadin–Kuraev–Lipatov (BFKL) approach [8–11]), or in terms of \({\ln (Q^2)}\), where \({Q^2}\) is the virtuality of the interaction (the Dokshitzer–Gribov–Lipatov–Altarelli–Parisi (DGLAP) approach [12–14]). These resummations provide approximations that are most valid in phase-space regions for which the resummed terms provide a dominant contribution to the observable. Such a situation exists in dijet topologies when the two jets have a large rapidity separation or when a veto is applied to additional jet activity in the rapidity interval bounded by the dijet system [15]. In these regions of phase-space, higher-order corrections proportional to the rapidity separation and to the logarithm of the scalar average of the transverse momenta of the dijets become increasingly important: these must be summed to all orders to obtain accurate theoretical predictions.

When studying these phase-space regions, a particularly interesting observable is the gap fraction, \({f\left( {Q_{0}} \right) }\), defined as \({f\left( {Q_{0}} \right) = \sigma _{\mathrm {jj}}\left( {Q_{0}} \right) /\sigma _{\mathrm {jj}}}\), where \({\sigma _{\mathrm {jj}}}\) is the inclusive dijet cross section and \({\sigma _{\mathrm {jj}}\left( {Q_{0}} \right) }\) is the cross section for dijet production in the absence of jets with transverse momentum greater than \({Q_{0}}\) in the rapidity interval bounded by the dijet system. The variable \({Q_{0}}\) is referred to as the veto scale. In the limit of large rapidity separation, \({\Delta y}\), between the jet centroids, the gap fraction is expected to be sensitive to BFKL dynamics [16, 17]. Alternatively, when the scalar average of the transverse momenta of the dijets, \({\overline{p_{\mathrm{T}}}}\), is much larger than the veto scale, the effects of wide-angle soft gluon radiation may become important [18–20]. Finally, dijet production via \({t}\)-channel colour-singlet exchange [21] is expected to provide an increasingly important contribution to the total dijet cross section when both of these limits are approached simultaneously. The mean number of jets above the veto scale in the rapidity interval between the dijets is presented as an alternative measurement of hard jet emissions in the rapidity interval.
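The two observables defined above can be illustrated with a short Python sketch. It assumes each event is a plain list of jets (the hypothetical `Jet` record holds only `pt` in GeV and rapidity `y`), takes the two highest-\(p_{\mathrm{T}}\) jets as the dijet system, and counts a jet as lying in the interval if its rapidity is strictly between those of the two leading jets. Events are unweighted here, so the ratio of event counts stands in for the ratio of cross sections.

```python
from dataclasses import dataclass


@dataclass
class Jet:
    pt: float  # transverse momentum in GeV
    y: float   # rapidity


def jets_in_interval(jets, q0):
    """Jets other than the two leading ones with pT > q0 and rapidity strictly
    between the rapidities of the two leading jets."""
    ordered = sorted(jets, key=lambda j: j.pt, reverse=True)
    lead, sub = ordered[0], ordered[1]
    y_lo, y_hi = min(lead.y, sub.y), max(lead.y, sub.y)
    return [j for j in ordered[2:] if j.pt > q0 and y_lo < j.y < y_hi]


def gap_fraction_and_mean_njets(events, q0):
    """Return (f(Q0), mean number of jets above Q0 in the rapidity interval)."""
    n_dijet = n_gap = n_extra = 0
    for jets in events:
        if len(jets) < 2:
            continue  # not a dijet event
        n_dijet += 1
        extra = jets_in_interval(jets, q0)
        n_extra += len(extra)
        if not extra:
            n_gap += 1  # no jet above the veto scale in the interval
    return n_gap / n_dijet, n_extra / n_dijet


events = [
    [Jet(100.0, -2.0), Jet(80.0, 2.0)],                                   # gap event
    [Jet(100.0, -2.0), Jet(80.0, 2.0), Jet(40.0, 0.5)],                   # one jet in the interval
    [Jet(100.0, -1.0), Jet(80.0, 1.0), Jet(40.0, 3.0)],                   # extra jet outside: still a gap event
    [Jet(100.0, -3.0), Jet(80.0, 3.0), Jet(25.0, 0.0), Jet(35.0, 1.0)],  # two jets in the interval
]
print(gap_fraction_and_mean_njets(events, q0=20.0))  # (0.5, 0.75)
```

Binning either quantity in \({\Delta y}\) or \({\overline{p_{\mathrm{T}}}}\) only requires grouping the events before calling the function.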

A complementary probe of higher-order QCD effects can be made by studying the azimuthal angle between the jets in the dijet system, \({\Delta \phi }\). A purely \({2\rightarrow 2}\) partonic scatter produces final-state partons back-to-back in azimuthal angle. Any additional quark or gluon emission alters the balance between the partons and produces an azimuthal decorrelation, the predicted magnitude of which differs between fixed-order calculations, BFKL-inspired resummations and DGLAP-inspired resummations [22]. In particular, the azimuthal decorrelation is expected to increase with increasing rapidity separation if BFKL effects are present [23, 24]. To discriminate between DGLAP-like and BFKL-like behaviour, the azimuthal angular moments \({\langle \cos \left( n \left( \pi - \Delta \phi \right) \right) \rangle }\), where \({n}\) is an integer and the angle brackets indicate the profiled mean over all events, have been proposed [23–25]. In addition, taking the ratio of different angular moments is predicted to enhance BFKL effects [24, 26, 27].
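As a minimal sketch, assuming unit event weights and a list of \({\Delta \phi }\) values already selected in one bin, the moments and their ratio can be computed as below. Note that \(\cos(2(\pi - \Delta\phi)) = \cos(2\Delta\phi)\), so the second moment is the same quantity as the \({\cos \left( 2 \Delta \phi \right) }\) variable used later in the unfolding. The function names are illustrative only.

```python
import math


def delta_phi(phi1, phi2):
    """Azimuthal separation of two jets, folded into [0, pi]."""
    d = abs(phi1 - phi2) % (2.0 * math.pi)
    return 2.0 * math.pi - d if d > math.pi else d


def angular_moment(dphis, n):
    """<cos(n (pi - dphi))>: mean over the events in one bin (unit weights)."""
    return sum(math.cos(n * (math.pi - d)) for d in dphis) / len(dphis)


def moment_ratio(dphis):
    """Ratio of the second to the first azimuthal angular moment."""
    return angular_moment(dphis, 2) / angular_moment(dphis, 1)


back_to_back = [math.pi] * 4             # pure 2 -> 2 kinematics
decorrelated = [math.pi, math.pi / 2.0]  # one event with a large decorrelation
# Both moments equal 1 for perfectly back-to-back jets
print(angular_moment(back_to_back, 1), moment_ratio(back_to_back))
print(angular_moment(decorrelated, 1), angular_moment(decorrelated, 2))
```

Decorrelation pulls every moment below unity, and higher moments fall faster, which is why ratios of moments are sensitive to the shape of the \({\Delta \phi }\) distribution.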

Previous measurements have been made of dijet production for which a strict veto, of the order of \({\Lambda _\text {QCD}}\), was imposed on the emission of additional radiation in the inter-jet region, so-called “rapidity gap” events, at HERA [28–30] and at the Tevatron [31–35]. At the LHC, measurements of forward rapidity gaps and dijet production have been made by ATLAS [36, 37], while ratios of exclusive-to-inclusive dijet cross sections have been measured at CMS [38]. Azimuthal decorrelations for central dijets have also been measured at the LHC by ATLAS [39] and CMS [40], and before that by D0 at the Tevatron [41]. Finally, a previous study of azimuthal angular decorrelations for widely separated dijets was made by D0 at the Tevatron [42].

This paper presents measurements of the gap fraction and the mean number of jets in the rapidity interval as functions of both the dijet rapidity separation and the scalar average of the transverse momenta of the dijets. Measurements of the first azimuthal angular moment, the ratio of the first two moments and the double-differential cross sections as functions of \({\Delta \phi }\) and \({\Delta y}\) are also presented, both for an inclusive dijet sample and for events where a jet veto is imposed. Previous results are extended out to a dijet rapidity separation of \({{\Delta y} = 8}\) as well as to dijet transverse momenta up to \({{\overline{p_{\mathrm{T}}}} = 1.5\,\mathrm{TeV}}\), the effective kinematic limits of the ATLAS detector for \({pp}\) collisions at a centre-of-mass energy, \({\sqrt{s}=7\,\mathrm{TeV}}\). The measurements are obtained using the full \({pp}\) collision datasets recorded during 2010 and 2011, corresponding to integrated luminosities of \({36.1\pm 1.3}\,\mathrm{pb}^{-1}\) and \({4.5\pm 0.1}\,\mathrm{fb}^{-1}\), respectively [43]. The two datasets are used in complementary areas of phase-space: the data collected during 2010 are used in the full rapidity range covered by the detector, probing large rapidity separations, with a veto scale of \(20\,\mathrm{GeV}\), while the data collected during 2011 are used in a restricted rapidity range with a veto scale of \(30\,\mathrm{GeV}\) but can access higher values of \({\overline{p_{\mathrm{T}}}}\).

The content of the paper is as follows. Section 2 describes the ATLAS detector, followed by Sect. 3, which details the Monte Carlo simulation samples used. Jet reconstruction and event selection are presented in Sects. 4 and 5, respectively. The correction for detector effects is described in Sect. 6 and the systematic uncertainties on the measurement are discussed in Sect. 7. Section 8 discusses the theoretical predictions before the results are presented in Sect. 9. Finally, the conclusions are given in Sect. 10.

2 The ATLAS detector

ATLAS [44] is a general-purpose detector surrounding one of the interaction points of the LHC. The main detector components relevant to this analysis are the inner tracking detector and the calorimeters; in addition, the minimum bias trigger scintillators (MBTS) are used for selecting events during early data taking. The inner tracking detector covers the pseudorapidity range \({{|{\eta } |} <2.5}\) and has full coverage in azimuthal angle. There are three main components to the inner tracker. In order, moving outwards from the beam-pipe, these are the silicon pixel detector, the silicon microstrip detector and the straw-tube transition-radiation tracker. These components are arranged in concentric layers and immersed in a \(2\,\mathrm{T}\) magnetic field provided by a superconducting solenoid magnet.

The calorimeter is also divided into sub-detectors, providing overall coverage for \({{|{\eta } |} <4.9}\). The electromagnetic calorimeter, covering the region \({{|{\eta } |} <3.2}\), is a high-granularity sampling detector in which the active medium is liquid argon (LAr) interleaved with layers of lead absorber. The hadronic calorimeters are divided into three sections: a tile scintillator/steel calorimeter is used in both the barrel (\({{|{\eta } |} <1.0}\)) and extended barrel cylinders (\({0.8<{|{\eta } |} <1.7}\)) while the hadronic endcap (\({1.5<{|{\eta } |} <3.2}\)) consists of LAr/copper calorimeter modules. The forward calorimeter measures both electromagnetic and hadronic energy in the range \({3.2<{|{\eta } |} <4.9}\) using LAr/copper and LAr/tungsten modules.

The MBTS system consists of 32 scintillator counters, organised into two disks, one on each side of the detector. They are located in front of the endcap calorimeter cryostats and cover the region \({2.1<{|{\eta } |} <3.8}\).

The online trigger selection used in this analysis employs the minimum bias and calorimeter jet triggers [45]. The minimum bias triggers are only available at the hardware level, while the calorimeter triggers have both hardware and software levels. The Level-1 (L1) hardware-based trigger provides a fast, but low-granularity, reconstruction of energy deposited in towers in the calorimeter; the Level-2 (L2) software trigger implements a simple jet reconstruction algorithm in a window around the region triggered at L1; and finally the Event Filter (EF) performs a more detailed jet reconstruction procedure using information from the full detector. The efficiency of jet triggers is determined using a bootstrap method, starting from the fully efficient MBTS trigger [45]. Between March and August of 2010, only L1 information was used to select events; both the L1 and L2 stages were used for the remainder of the 2010 data-taking period and all three levels were required for data taken during 2011.
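The bootstrap idea can be sketched as follows: the efficiency of a probe trigger is measured as a function of jet \({p_{\mathrm{T}}}\) in events recorded by a reference trigger that is already known to be fully efficient in that range, starting from the MBTS trigger and moving up the chain one trigger at a time. The function name, the simple binomial uncertainty and the toy inputs are illustrative assumptions, not the ATLAS trigger software.

```python
import numpy as np


def trigger_efficiency(pt, passed_ref, passed_probe, bin_edges):
    """Per-bin efficiency of a probe trigger, measured in events that passed a
    reference trigger assumed to be fully efficient in this pT range."""
    pt = np.asarray(pt, dtype=float)
    ref = np.asarray(passed_ref, dtype=bool)
    probe = np.asarray(passed_probe, dtype=bool)
    n_ref, _ = np.histogram(pt[ref], bins=bin_edges)
    n_both, _ = np.histogram(pt[ref & probe], bins=bin_edges)
    with np.errstate(divide="ignore", invalid="ignore"):
        eff = np.where(n_ref > 0, n_both / n_ref, np.nan)
        err = np.sqrt(eff * (1.0 - eff) / n_ref)  # simple binomial uncertainty
    return eff, err


# Toy input: jet pT (GeV), reference-trigger and probe-trigger decisions
pt = [10.0, 15.0, 30.0, 35.0, 50.0, 55.0]
passed_ref = [True] * 6
passed_probe = [False, False, True, False, True, True]
eff, err = trigger_efficiency(pt, passed_ref, passed_probe, [0.0, 20.0, 40.0, 60.0])
```

Once the probe trigger is shown to be fully efficient above some \({p_{\mathrm{T}}}\), it can serve as the reference for the next trigger in the chain.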

3 Monte Carlo event simulation

Simulated proton–proton collisions at \({\sqrt{s} = 7\,\mathrm{TeV}}\) were generated using the pythia 6.4 [46] program. These were used only to derive systematic uncertainties and to correct for detector effects; for this purpose they are compared against uncorrected data. Additional samples used to compare theoretical predictions to the data are described in Sect. 8.

The pythia program implements leading-order (LO) QCD matrix elements for \({2\rightarrow 2}\) processes followed by \({p_{\mathrm{T}}}\)-ordered parton showers and the Lund string hadronisation model. The underlying event in pythia is modelled by multiple-parton interactions interleaved with the initial-state parton shower.

The events were generated using the MRST LO* parton distribution functions (PDFs) [47, 48]. Samples which simulated the data-taking conditions during 2010 (2011) used version 6.423 (6.425) of the generator, together with the ATLAS AMBT1 [49] (Perugia 2011 [50]) underlying event tune. For the samples simulating the data-taking conditions from 2011, additional \(pp\) collisions were overlaid onto the hard scatter in the correct proportions to replicate the additional interactions (pileup) present in the data. The final-state particles were passed through a detailed geant4 [51] simulation of the ATLAS detector [52] before being reconstructed using the same software used to process data.

4 Jet reconstruction

The collision events selected by the ATLAS trigger system were fully reconstructed offline. Energy deposits in the calorimeter left by electromagnetic and hadronic showers were calibrated to the electromagnetic (EM) scale. Three-dimensional topological clusters (“topoclusters”) [53] were constructed from seed calorimeter cells according to an iterative procedure designed to suppress electronic noise [54]. Each of these was then treated as a massless particle with direction given by its energy-weighted barycentre. The topoclusters were then passed as input to the FastJet [55] implementation of the \({\mathrm{anti}\hbox {-}{k_t}}\) jet algorithm [56] with distance parameter \({R=0.6}\) and full four-momentum recombination.
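For readers unfamiliar with the algorithm, the following naive Python sketch shows anti-\(k_t\) clustering with the distance measures \(d_{ij} = \min(p_{\mathrm{T},i}^{-2}, p_{\mathrm{T},j}^{-2})\,\Delta R_{ij}^2/R^2\), where \(\Delta R_{ij}^2 = (y_i - y_j)^2 + (\phi_i - \phi_j)^2\), and \(d_{iB} = p_{\mathrm{T},i}^{-2}\), applied to topoclusters treated as massless four-vectors. It is an \(\mathcal{O}(N^3)\) illustration written for clarity, not the FastJet implementation used in the analysis, and all helper names are hypothetical.

```python
import math


def to_four_vector(energy, eta, phi):
    """Massless (px, py, pz, E) for a cluster with energy E at barycentre (eta, phi)."""
    pt = energy / math.cosh(eta)
    return (pt * math.cos(phi), pt * math.sin(phi), pt * math.sinh(eta), energy)


def pt2(p):
    return p[0] ** 2 + p[1] ** 2


def rapidity(p):
    return 0.5 * math.log((p[3] + p[2]) / (p[3] - p[2]))


def azimuth(p):
    return math.atan2(p[1], p[0])


def delta_r2(a, b):
    dphi = abs(azimuth(a) - azimuth(b))
    if dphi > math.pi:
        dphi = 2.0 * math.pi - dphi
    return (rapidity(a) - rapidity(b)) ** 2 + dphi ** 2


def anti_kt(particles, R=0.6):
    """Naive anti-kt clustering with four-momentum (E-scheme) recombination."""
    objs = list(particles)
    jets = []
    while objs:
        # Start from the beam distance of the first object: d_iB = 1 / pT^2
        best_d, best_i, best_j = 1.0 / pt2(objs[0]), 0, None
        for i, a in enumerate(objs):
            d_ib = 1.0 / pt2(a)
            if d_ib < best_d:
                best_d, best_i, best_j = d_ib, i, None
            for j in range(i + 1, len(objs)):
                b = objs[j]
                d_ij = min(1.0 / pt2(a), 1.0 / pt2(b)) * delta_r2(a, b) / R ** 2
                if d_ij < best_d:
                    best_d, best_i, best_j = d_ij, i, j
        if best_j is None:
            jets.append(objs.pop(best_i))  # smallest distance is to the beam: final jet
        else:
            merged = tuple(x + y for x, y in zip(objs[best_i], objs[best_j]))
            objs.pop(best_j)  # best_j > best_i, so best_i remains valid
            objs[best_i] = merged
    return sorted(jets, key=pt2, reverse=True)


clusters = [to_four_vector(100.0, 0.0, 0.0),  # two nearby clusters ...
            to_four_vector(50.0, 0.1, 0.1),   # ... that should merge
            to_four_vector(30.0, 2.0, 3.0)]   # an isolated cluster
jets = anti_kt(clusters, R=0.6)
```

Because the distance is weighted by the inverse squared transverse momentum of the harder object, soft clusters are absorbed into nearby hard ones first, which gives anti-\(k_t\) jets their regular, roughly conical shape.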

The jets built by the \({\mathrm{anti}\hbox {-}{k_t}}\) algorithm were then calibrated in a multi-step procedure. Additional energy arising from “in-time pileup” (simultaneous \(pp\) collisions within a single bunch crossing) was subtracted using a correction derived from data. Each event was required to have at least one primary vertex reconstructed from two or more tracks, each with \({{p_{\mathrm{T}}} > 400\,\mathrm{MeV}}\). The primary vertex with the highest \({\sum {p_{\mathrm{T}}^2}}\) of associated tracks was identified as the origin of the hard scatter, and the jet position was recalibrated to point to this vertex rather than to the geometric centre of the detector. A series of \({p_{\mathrm{T}}}\)- and \({\eta }\)-dependent energy correction factors derived from simulated events were used to correct for the response of the detector to jets. For the data collected during 2011, additional calibration steps were applied. Energy contributions, which were usually negative, coming from “out-of-time pileup” (residual electronic effects from previous \(pp\) collisions) were corrected for using an offset correction derived using simulation.

A final in situ calibration, using \({Z}\)+jet balance, \({\gamma }\)+jet balance and multi-jet balance, was then applied to correct for residual differences in jet response between the simulation and data. The calibration procedure is described in more detail elsewhere [57, 58].

5 Event selection

The measurements were performed using only the data from specific runs and run periods in which the detector, trigger and reconstructed physics objects satisfied data-quality selection criteria. Beam background was rejected by requiring at least one primary vertex in each event while selection requirements were applied to the hard-scatter vertex to minimise contamination from pileup. For data collected during 2010, the event was required to have only one primary vertex with five or more associated tracks; the proportion of such events was 93 % in the early low-luminosity runs, falling to 21 % in the high-luminosity runs at the end of the year. For data collected during 2011, the hard-scatter vertex was required to have at least three associated tracks. Jets arising from pileup were rejected using the jet vertex fraction (JVF). The JVF takes all tracks matched to the jet of interest and measures the ratio of \({\sum {p_{\mathrm{T}}}}\) from tracks which originated in the hard-scatter vertex to the \({\sum {p_{\mathrm{T}}}}\) of all tracks matched to the jet. For this analysis only jets with \({\text {JVF}>0.75}\) were used; all other jets were considered to have arisen from pileup and were therefore ignored. Due to the limited coverage of the tracking detectors, the JVF is only available for jets satisfying \({{|y|} < 2.4}\), which limits the acceptance in rapidity for 2011 data.
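The hard-scatter vertex choice described in Sect. 4 and the JVF defined above can be sketched in a few lines of Python. The `Track` record, the association of each track to exactly one vertex, and the treatment of jets without matched tracks (returned as `None` here) are assumptions for illustration; the text does not specify how such jets were handled.

```python
from dataclasses import dataclass


@dataclass
class Track:
    pt: float    # GeV
    vertex: int  # index of the vertex the track is associated with


def hard_scatter_vertex(tracks, min_tracks=2, min_pt=0.4):
    """Vertex with the largest sum of track pT^2, among vertices with at least
    `min_tracks` associated tracks above `min_pt` GeV."""
    sum_pt2, n_tracks = {}, {}
    for t in tracks:
        if t.pt > min_pt:
            sum_pt2[t.vertex] = sum_pt2.get(t.vertex, 0.0) + t.pt ** 2
            n_tracks[t.vertex] = n_tracks.get(t.vertex, 0) + 1
    candidates = [v for v in sum_pt2 if n_tracks[v] >= min_tracks]
    return max(candidates, key=lambda v: sum_pt2[v]) if candidates else None


def jet_vertex_fraction(jet_tracks, hs_vertex):
    """Scalar pT sum of matched tracks from the hard-scatter vertex divided by
    the scalar pT sum of all tracks matched to the jet."""
    total = sum(t.pt for t in jet_tracks)
    if total == 0.0:
        return None  # undefined without matched tracks
    return sum(t.pt for t in jet_tracks if t.vertex == hs_vertex) / total


JVF_CUT = 0.75
event_tracks = [Track(10.0, 0), Track(5.0, 0), Track(20.0, 1), Track(1.0, 1),
                Track(0.3, 2), Track(0.5, 2)]
hs = hard_scatter_vertex(event_tracks)
jvf = jet_vertex_fraction([Track(20.0, 1), Track(5.0, 0)], hs)
keep_jet = jvf is not None and jvf > JVF_CUT
```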

Due to the high instantaneous luminosity reached by the LHC, only high-threshold jet triggers remained unprescaled throughout the data-taking period in question. Jets with transverse momentum below the lowest-threshold unprescaled trigger were therefore only recorded using prescaled triggers. For data collected during 2011, \({\overline{p_{\mathrm{T}}}}\) was used to determine the most appropriate trigger to use for each event. Among all of the triggers determined to be fully efficient at the particular \({\overline{p_{\mathrm{T}}}}\) in question, the one which had been least prescaled was selected: only events passing this trigger were considered.

For the data collected during 2010, it was necessary to combine triggers from the central region (\({{|{\eta } |} \le 3.2}\)) and the forward region (\({3.1 < {|{\eta } |} \le 4.9}\)) of the detector, due to the large \({\Delta y}\) span under consideration. In each of these regions, efficiency curves as a function of jet transverse momentum were calculated for each trigger on a per-jet basis, rather than the per-event basis described above. The triggers were ordered according to their prescales and a lookup table was created, showing the point at which each trigger reached a plateau of 99 % efficiency.

An appropriate trigger was chosen for each of the two leading (highest transverse momentum) jets in each event; this was the lowest prescale trigger to have reached its efficiency plateau at the relevant \({p_{\mathrm{T}}}\) and \({|y|}\). The dijet event was then accepted if the event satisfied the trigger appropriate to the leading jet, the subleading jet or both. This procedure maximised event acceptance, since the random factor inherent in the trigger prescale meant that some events could be accepted based on the properties of the subleading jet even when the appropriate trigger for the leading jet had not fired. In order to combine overlapping triggers with different prescales, the procedure detailed in Ref. [59] was followed. In some less well-instrumented or malfunctioning regions of the detector, the per-jet trigger efficiency plateau occurred at less than the usual 99 % point. This introduced a measurable trigger inefficiency, which was corrected for by weighting events containing jets in these regions by the inverse of the efficiency.
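The per-jet trigger logic of the last two paragraphs can be summarised in a short sketch: a 99 % plateau point is read off each efficiency curve, the lowest-prescale trigger on its plateau is chosen for each of the two leading jets, and the event is kept if either chosen trigger fired. Trigger names, prescales and plateau values are invented for illustration, a single trigger list stands in for the separate central and forward tables, and the prescale-combination procedure of Ref. [59] is not reproduced.

```python
from dataclasses import dataclass


@dataclass
class Trigger:
    name: str
    prescale: float
    plateau_pt: float  # jet pT (GeV) from which the per-jet efficiency stays >= 99 %


def plateau_point(bin_centres, efficiency, threshold=0.99):
    """First bin centre from which the efficiency stays at or above threshold."""
    for k in range(len(efficiency)):
        if all(e >= threshold for e in efficiency[k:]):
            return bin_centres[k]
    return None


def choose_trigger(triggers, jet_pt):
    """Lowest-prescale trigger that is on its efficiency plateau at jet_pt."""
    usable = [t for t in triggers if t.plateau_pt <= jet_pt]
    return min(usable, key=lambda t: t.prescale) if usable else None


def accept_event(fired, leading_pt, subleading_pt, triggers):
    """Keep the event if the trigger chosen for either leading jet fired."""
    chosen = (choose_trigger(triggers, leading_pt), choose_trigger(triggers, subleading_pt))
    return any(t is not None and t.name in fired for t in chosen)


def inefficiency_weight(plateau_efficiency):
    """Event weight for jets in regions whose plateau lies below 99 %."""
    return 1.0 / plateau_efficiency


triggers = [Trigger("J10", 1000.0, 40.0), Trigger("J30", 50.0, 70.0), Trigger("J75", 1.0, 120.0)]
print(choose_trigger(triggers, 80.0).name)          # J30
print(accept_event({"J10"}, 80.0, 50.0, triggers))  # True: fired via the subleading jet
```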

Jets were required to have transverse momentum \({p_{\mathrm{T}}} > 20 (30)\,\mathrm{GeV}\) for data collected during 2010 (2011), thus ensuring that they remained in a region for which the jet energy scale had been evaluated (see Sect. 4). Jets were restricted in rapidity to \({{|y|} < 4.4}\) for data collected in 2010, with a stricter requirement of \({{|y|} < 2.4}\) applied in 2011 to ensure that the JVF could be determined for all jets. The two leading jets satisfying these criteria were then identified as the dijet system of interest. The event was rejected if the transverse momentum of the leading jet was below \(60\,\mathrm{GeV}\) or if that of the subleading jet was below \(50\,\mathrm{GeV}\). For the data collected during 2011, a minimum rapidity separation, \({{\Delta y} \ge 1}\), was required to enhance the sensitivity to the physics of interest. The veto scale, \({Q_{0}}\), was set to \(20\,(30)\,\mathrm{GeV}\) for data collected in 2010 (2011), equal to the jet transverse momentum threshold.
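The kinematic selection in this paragraph can be written as a small function. It is a sketch that assumes jets have already passed calibration, the JVF requirement (2011) and the cleaning requirements; the `Jet` record and the `SELECTION` table are hypothetical names, and the returned gap flag uses a veto scale equal to the jet threshold, as stated above.

```python
from dataclasses import dataclass


@dataclass
class Jet:
    pt: float  # GeV
    y: float   # rapidity


# Per-year jet threshold (= veto scale Q0), rapidity acceptance and minimum Delta y
SELECTION = {
    2010: {"min_pt": 20.0, "max_abs_y": 4.4, "min_dy": 0.0},
    2011: {"min_pt": 30.0, "max_abs_y": 2.4, "min_dy": 1.0},
}


def select_dijet(jets, year):
    """Return a dict describing the selected dijet system, or None if rejected."""
    cuts = SELECTION[year]
    good = sorted((j for j in jets if j.pt > cuts["min_pt"] and abs(j.y) < cuts["max_abs_y"]),
                  key=lambda j: j.pt, reverse=True)
    if len(good) < 2:
        return None
    lead, sub = good[0], good[1]
    if lead.pt < 60.0 or sub.pt < 50.0:
        return None
    dy = abs(lead.y - sub.y)
    if dy < cuts["min_dy"]:
        return None
    y_lo, y_hi = min(lead.y, sub.y), max(lead.y, sub.y)
    # Every remaining jet is already above Q0, so only its rapidity matters here
    vetoed = any(y_lo < j.y < y_hi for j in good[2:])
    return {"dy": dy, "pt_bar": 0.5 * (lead.pt + sub.pt), "q0": cuts["min_pt"], "gap": not vetoed}
```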

Jet cleaning criteria [60, 61] were developed in order to reject fake jets, i.e. those arising from cosmic rays, beam halo or detector noise. These criteria also removed jets which were badly measured because they fell into poorly instrumented regions. Events collected in 2010 (2011) were rejected if they contained any jet with transverse momentum \({{p_{\mathrm{T}}} > 20 (30)\,\mathrm{GeV}}\) that failed these cleaning cuts. This requirement was also applied to the simulated samples where appropriate.

Additionally, a problem developed in the LAr calorimeter during 2011 running, resulting in a region in which energies were not properly recorded. As a result, a veto was applied to events that had at least one jet with \({{p_{\mathrm{T}}} > 30\,\mathrm{GeV}}\) falling in the vicinity of this region during the affected data-taking periods. This effect was replicated in the relevant simulation samples, which were reweighted to the data to ensure that an identical proportion of such events was included.

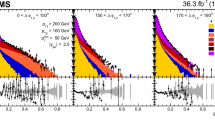

In total, 1188583 events were accepted from the data collected in 2010, with 852030 of these being gap events: those with no additional jets above the veto scale in the rapidity interval between the dijets. For data collected during 2011, 1411676 events were accepted, with 938086 of these being gap events. Data from 2010 and from 2011 were analysed separately and then compared, and were found to agree to within the experimental uncertainties in a kinematic region accessible with both datasets. Figure 1 shows the comparison between detector-level data and pythia 6.4 simulation in dijet events. The normalised number of events is presented as a function of \({\Delta y}\) in Fig. 1a and of \({\overline{p_{\mathrm{T}}}}\) in Fig. 1b. In both cases, and in all similar distributions, the pythia 6.4 event generator and geant4 detector simulation give a fair description of the uncorrected data.
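For orientation, the quoted event counts imply the following inclusive fractions of gap events at detector level. These are uncorrected numbers integrated over all \({\Delta y}\) and \({\overline{p_{\mathrm{T}}}}\), with different veto scales and rapidity acceptances in the two years, so they are not directly comparable with each other or with the unfolded gap fractions:

\[
\frac{852030}{1188583} \approx 0.717 \;\;(2010), \qquad \frac{938086}{1411676} \approx 0.665 \;\;(2011).
\]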

Fig. 1 Comparison between uncorrected data (black points) and detector-level pythia 6.4 Monte Carlo events (solid line). Statistical uncertainties on the data are shown. The normalised distribution of events is presented as a function of (a) \({\Delta y}\) and (b) \({\overline{p_{\mathrm{T}}}}\). The ratio of the pythia 6.4 prediction to the data is shown in the bottom panel.

6 Correction for detector effects

Before comparing to theoretical predictions, the data are corrected for all experimental effects so that they correspond to the particle-level final state. This comprises all stable particles, defined as those with a proper lifetime longer than \(10\,\mathrm{ps}\), including muons and neutrinos from decaying hadrons [62]. The correction for detector resolutions and inefficiencies is made by unfolding the measured distributions using the Bayesian procedure [63] implemented in the RooUnfold framework [64].

Bayesian unfolding entails using simulated events to calculate a transfer matrix which encodes bin-to-bin migrations between particle-level distributions and the equivalent reconstructed distributions at detector level. A set of bin transition probabilities is obtained from the matrix, and Bayes’ theorem is used to calculate the corresponding inverse probabilities; the process is then repeated iteratively, with the unfolded result of each iteration used as the prior for the next. In this paper, the unfolding is performed using the pythia 6.4 samples described in Sect. 3 and the number of iterations is set to two throughout, as this was found to be sufficient to achieve convergence.
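A bare-bones NumPy sketch of iterative Bayesian unfolding, in the spirit of Ref. [63], is given below; it is not the RooUnfold implementation and omits its uncertainty propagation. The convention `response[j, i]` = P(reconstructed in bin j | generated in bin i), whose column sums give the reconstruction efficiency, and the use of the simulated particle-level spectrum as the starting prior are assumptions of the sketch.

```python
import numpy as np


def bayesian_unfold(data, response, prior, n_iter=2):
    """Iterative Bayesian unfolding.

    data:     measured counts per detector-level bin, shape (n_reco,)
    response: response[j, i] = P(reco bin j | truth bin i), shape (n_reco, n_truth)
    prior:    starting particle-level spectrum, shape (n_truth,)
    """
    data = np.asarray(data, dtype=float)
    response = np.asarray(response, dtype=float)
    efficiency = response.sum(axis=0)  # probability that truth bin i is reconstructed at all
    p = np.asarray(prior, dtype=float) / np.sum(prior)
    unfolded = p * data.sum()
    for _ in range(n_iter):
        folded = response @ p  # expected detector-level shape given the current prior
        with np.errstate(divide="ignore", invalid="ignore"):
            # Bayes' theorem: P(truth i | reco j) = P(reco j | truth i) P(truth i) / P(reco j)
            posterior = np.where(folded[:, None] > 0, response * p[None, :] / folded[:, None], 0.0)
            unfolded = np.where(efficiency > 0,
                                (posterior * data[:, None]).sum(axis=0) / efficiency, 0.0)
        p = unfolded / unfolded.sum()  # the result becomes the prior of the next iteration
    return unfolded


response = np.array([[0.8, 0.1],
                     [0.1, 0.7]])
truth = np.array([100.0, 50.0])
measured = response @ truth
print(bayesian_unfold(measured, response, prior=truth))
```

When the prior already equals the true spectrum, the procedure returns it unchanged; with a different prior, each iteration moves the result closer to the data, and the number of iterations acts as a regularisation parameter.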

As the results shown here are constructed from multi-dimensional distributions, this must also be taken into account when unfolding. Each distribution is unfolded in two or three dimensions, with these dimensions being relevant combinations of \({\Delta \phi }\), \({\Delta y}\), \({\overline{p_{\mathrm{T}}}}\), \({\cos \left( \pi - \Delta \phi \right) }\), \({\cos \left( 2 \Delta \phi \right) }\) and the classification of the event as gap or non-gap. This means that the transfer matrices are four-dimensional or six-dimensional, rather than the usual two-dimensional case. This allows the effect of all possible bin migrations to be evaluated.
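One common way to handle such multi-dimensional transfer matrices is to map each combination of bins onto a single global index, so that a six-dimensional matrix becomes an ordinary two-dimensional one over flattened bins. The sketch below assumes this flattening (the text does not say how the unfolding framework was configured), would treat the gap/non-gap classification as an integer axis with edges `[0, 1, 2]`, and ignores events that have no in-range particle-level bin. All names are hypothetical.

```python
import numpy as np


def flat_index(values, edges_per_dim):
    """Map a tuple of observables to a single global bin index, or None if out of range."""
    idx = []
    for v, edges in zip(values, edges_per_dim):
        k = np.searchsorted(edges, v, side="right") - 1
        if k < 0 or k >= len(edges) - 1:
            return None
        idx.append(int(k))
    shape = tuple(len(e) - 1 for e in edges_per_dim)
    return int(np.ravel_multi_index(tuple(idx), shape))


def response_matrix(pairs, edges_per_dim):
    """pairs: iterable of (truth_values, reco_values or None if not reconstructed).
    Returns R[j, i] = P(reco bin j | truth bin i) over flattened bins."""
    n = int(np.prod([len(e) - 1 for e in edges_per_dim]))
    counts = np.zeros((n, n))
    truth_totals = np.zeros(n)
    for truth, reco in pairs:
        i = flat_index(truth, edges_per_dim)
        if i is None:
            continue  # events without an in-range truth bin are ignored in this sketch
        truth_totals[i] += 1
        j = flat_index(reco, edges_per_dim) if reco is not None else None
        if j is not None:
            counts[j, i] += 1
    return np.divide(counts, truth_totals, out=np.zeros_like(counts), where=truth_totals > 0)


edges = [np.array([0.0, 1.0, 2.0]), np.array([0.0, 10.0, 20.0])]  # two toy observables
events = [((0.5, 5.0), (0.5, 5.0)), ((0.5, 5.0), (1.5, 5.0)), ((1.5, 15.0), None)]
R = response_matrix(events, edges)
```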

The statistical uncertainties are estimated by performing pseudo-experiments [65]. Each event in data is assigned a weight drawn from a Poisson distribution with unit mean for each pseudo-experiment and these weighted events are used to build a series of one thousand replicas for each distribution. Each of these replicas is unfolded, and the root-mean-squared spread around the nominal value is used to measure the statistical error on the unfolded result.
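The pseudo-experiment procedure amounts to a Poisson bootstrap. In the sketch below each replica reweights every event by an independent Poisson(1) draw, rebuilds the histogram and passes it through an arbitrary `unfold` callable; the identity function is used as a stand-in unfolding purely for illustration. Reusing the same per-event weights for every distribution within a replica is what allows correlations between bins and distributions to be evaluated.

```python
import numpy as np


def poisson_bootstrap(values, bin_edges, unfold, n_replicas=1000, seed=1):
    """Return the nominal unfolded histogram and the RMS spread of the replicas around it."""
    rng = np.random.default_rng(seed)
    values = np.asarray(values, dtype=float)
    nominal_hist, _ = np.histogram(values, bins=bin_edges)
    nominal = unfold(nominal_hist.astype(float))
    replicas = np.empty((n_replicas, nominal.size))
    for r in range(n_replicas):
        weights = rng.poisson(1.0, size=values.size)  # one weight per event
        hist, _ = np.histogram(values, bins=bin_edges, weights=weights)
        replicas[r] = unfold(hist)
    rms = np.sqrt(np.mean((replicas - nominal) ** 2, axis=0))
    return nominal, rms


def identity_unfold(hist):
    # Stand-in for a real unfolding step
    return np.asarray(hist, dtype=float)


values = np.repeat([0.5, 1.5], [400, 100])  # 400 events in bin 0, 100 in bin 1
nominal, stat_err = poisson_bootstrap(values, [0.0, 1.0, 2.0], identity_unfold)
```

For this toy the spread recovers the familiar \(\sqrt{N}\) per bin, about 20 and 10 events respectively.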

Possible bias arising from mismodelling of the distributions considered here is evaluated by performing a self-consistency check using pythia 6.4 events. The pythia 6.4 simulation is reweighted on an event-by-event basis using a three-dimensional function which is chosen in such a way as to ensure that the output of this reweighting step will approximate the uncorrected detector level data. For the pythia sample simulated to replicate 2010 data-taking conditions, the reweighting is carried out as a function of \({\Delta y}\), \({\Delta \phi }\) and the highest \({p_{\mathrm{T}}}\) among jets in the rapidity interval; for the sample simulated to replicate 2011 data-taking conditions, \({\overline{p_{\mathrm{T}}}}\) is used instead of \({\Delta y}\). This reweighted detector level pythia 6.4 sample is then unfolded using the original transfer matrix and the result is compared with the particle-level spectrum, which was itself implicitly modified through the event-by-event reweighting. Any remaining difference between these distributions is then taken as a systematic uncertainty associated with the unfolding procedure.
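The reweighting step of this closure test can be sketched with binned ratios. The text describes a three-dimensional reweighting function without specifying its form; the sketch below assumes it is the ratio of normalised data and simulated detector-level histograms (in any number of dimensions, shown in one for brevity), looked up per event. The same per-event weights would then also be applied to the particle-level record of each simulated event before unfolding and comparison.

```python
import numpy as np


def reweighting_function(mc_values, data_values, edges):
    """Binned ratio of normalised detector-level data and simulation.

    mc_values, data_values: arrays of shape (n_events, n_dims); edges: list of bin-edge arrays.
    """
    h_mc, _ = np.histogramdd(mc_values, bins=edges)
    h_data, _ = np.histogramdd(data_values, bins=edges)
    h_mc /= h_mc.sum()
    h_data /= h_data.sum()
    # Bins with no simulated events keep unit weight
    return np.divide(h_data, h_mc, out=np.ones_like(h_mc), where=h_mc > 0)


def event_weights(values, ratio, edges):
    """Look up the reweighting factor for each simulated event (clipped to the edge bins)."""
    values = np.asarray(values, dtype=float)
    idx = tuple(np.clip(np.searchsorted(e, values[:, d], side="right") - 1, 0, len(e) - 2)
                for d, e in enumerate(edges))
    return ratio[idx]


edges = [np.array([0.0, 1.0, 2.0])]
mc = np.array([[0.5], [0.5], [1.5], [1.5]])
data = np.array([[0.5], [1.5], [1.5], [1.5]])
ratio = reweighting_function(mc, data, edges)
weights = event_weights(mc, ratio, edges)
```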

7 Systematic uncertainties

For the most part, the dominant systematic uncertainty on these measurements is the one coming from the jet energy scale (JES) calibration. This uncertainty was determined using a combination of in situ calibration techniques, as detailed earlier, test-beam data and Monte Carlo modelling [57, 58]. It comprises 13 independent components for data taken in 2010 and 64 components for the 2011 data. The uncertainty components fully account for the differences in jet calibration discussed in Sect. 4, thus ensuring that data collected in the two years are fully compatible within their uncertainties.

For each component, all jet energies and transverse momenta are shifted up or down by one standard deviation of the uncertainty and the shifted jets are then passed through the full analysis chain. The measured distributions are unfolded and compared to the nominal distribution; the difference between these is taken as the uncertainty for the component in question. These fractional differences are then combined in quadrature, since the components are uncorrelated, to compute the jet energy scale uncertainty.
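The combination step can be sketched as follows. The text does not say how upward and downward shifts are combined, so the sketch assumes they are kept separate, giving an asymmetric band. Each row of the shifted arrays would be the unfolded result for one of the 13 (2010) or 64 (2011) components; the toy numbers are invented.

```python
import numpy as np


def jes_uncertainty(nominal, shifted_up, shifted_down):
    """Fractional JES uncertainty per bin from independent components.

    nominal: unfolded result, shape (n_bins,)
    shifted_up, shifted_down: unfolded results with each component shifted by
        +1 or -1 standard deviation, shape (n_components, n_bins)
    """
    nominal = np.asarray(nominal, dtype=float)
    frac_up = (np.asarray(shifted_up, dtype=float) - nominal) / nominal
    frac_down = (np.asarray(shifted_down, dtype=float) - nominal) / nominal
    # Uncorrelated components: add the fractional differences in quadrature
    return np.sqrt(np.sum(frac_up ** 2, axis=0)), np.sqrt(np.sum(frac_down ** 2, axis=0))


nominal = [100.0, 200.0]
up = [[103.0, 204.0], [104.0, 200.0]]
down = [[97.0, 198.0], [100.0, 200.0]]
sigma_up, sigma_down = jes_uncertainty(nominal, up, down)
```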

The uncertainty on the energy resolution of jets (JER) is derived in situ, using dijet balance techniques and the bisector method; it is then cross-checked through comparison with simulation [66]. Jet angular resolutions are estimated using simulated events and cross-checked using in situ techniques, where good agreement is observed with the simulation. These resolution uncertainties are propagated through the unfolding procedure by smearing the energy or angle of each reconstructed jet in each simulated event by a Gaussian function, with its width given by the quadratic difference between the nominal resolution and the resolution after shifting by the resolution uncertainty. This procedure is repeated one thousand times for each jet, to reduce the effect of statistical fluctuations. The resulting smeared events are used to calculate a modified transfer matrix incorporating the resolution uncertainty; this matrix is then used to unfold the data. The ratio of this distribution to the distribution unfolded using the nominal transfer matrix is taken as a systematic uncertainty.
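The width of the extra smearing follows from adding Gaussian resolutions in quadrature: smearing a jet already measured with resolution \(\sigma\) by a further Gaussian of width \(\sqrt{(\sigma+\delta\sigma)^2-\sigma^2}\) yields an overall resolution \(\sigma+\delta\sigma\). The sketch below assumes a relative \({p_{\mathrm{T}}}\) resolution with invented values (10 % nominal, 2 % uncertainty) and draws the thousand smeared copies of a jet in one call; the weighting of copies and the angular case (an absolute offset rather than a scale factor) are assumptions not spelled out in the text.

```python
import numpy as np


def extra_smearing_width(sigma_nominal, sigma_shift):
    """Width of the additional Gaussian smearing: quadratic difference between the
    resolution shifted by its uncertainty and the nominal resolution."""
    return np.sqrt((sigma_nominal + sigma_shift) ** 2 - sigma_nominal ** 2)


def smear_jet_pt(pt, rel_sigma_nominal, rel_sigma_shift, n_copies, rng):
    """Return n_copies smeared versions of one jet pT (relative resolution model)."""
    width = extra_smearing_width(rel_sigma_nominal, rel_sigma_shift)
    return pt * (1.0 + rng.normal(0.0, width, size=n_copies))


rng = np.random.default_rng(7)
copies = smear_jet_pt(100.0, 0.10, 0.02, n_copies=1000, rng=rng)
# Assumption: each copy would enter the modified transfer matrix with weight 1 / n_copies
```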

The trigger efficiency correction which is applied to events with jets falling into poorly measured detector regions also has an associated systematic uncertainty. This is determined by increasing or decreasing the measured inefficiencies by an absolute shift of 10 %, with a maximum efficiency of 100 %. The full correction procedure is then carried out using these new correction factors and the difference between this and the nominal distribution is taken as a systematic uncertainty on the measurement.
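The variation amounts to shifting the measured plateau efficiency by 10 % in absolute terms, capping it at 100 %, and recomputing the inverse-efficiency event weight. A minimal sketch with an invented 95 % plateau efficiency follows; the guard against non-positive shifted efficiencies is an added assumption.

```python
def trigger_weight_variations(efficiency, shift=0.10):
    """Nominal, reduced-inefficiency and increased-inefficiency event weights."""
    eff_high = min(efficiency + shift, 1.0)  # inefficiency reduced; efficiency capped at 100 %
    eff_low = efficiency - shift             # inefficiency increased
    if eff_low <= 0.0:
        raise ValueError("shifted efficiency must remain positive")
    return 1.0 / efficiency, 1.0 / eff_high, 1.0 / eff_low


w_nominal, w_eff_up, w_eff_down = trigger_weight_variations(0.95)
```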

The effect of statistical fluctuations in the samples used to derive these uncertainties is also estimated by performing pseudo-experiments. Each event in the sample is assigned a weight drawn from a Poisson distribution with unit mean for each pseudo-experiment and these weighted events are used to build a series of one thousand replicas of the transfer matrices. These replicas are then used to unfold the nominal data sample; the root-mean-squared spread around the nominal value provides the systematic error due to the limited statistical precision of the Monte Carlo samples used.

For each of the systematic variations considered, the pseudo-experiment approach applied to the data is used to determine statistical uncertainties on each distribution and correlations between bins. Each fractional uncertainty was smoothed to remove these statistical fluctuations before the uncertainties were combined. To do this, each systematic component was rebinned until each bin showed a statistically significant deviation from the nominal value. This rebinned distribution was then smoothed using a Gaussian kernel and the smoothed function was evaluated at each of the original set of bin centres. The overall fractional uncertainty was then obtained by summing the individual sources in quadrature.
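The smoothing procedure can be illustrated as below. Several details are not given in the text and are assumptions of this sketch: adjacent bins are merged from left to right using inverse-variance weights, a deviation counts as significant when it exceeds twice its statistical uncertainty, any trailing bins that never become significant form one final group, and the Gaussian kernel is a Nadaraya–Watson weighted average with a fixed width.

```python
import numpy as np


def rebin_until_significant(centres, shift, stat_err, n_sigma=2.0):
    """Merge adjacent bins until the weighted mean shift is significant."""
    xs, ys = [], []
    group_x, sum_wy, sum_w = [], 0.0, 0.0
    for x, y, e in zip(centres, shift, stat_err):
        w = 1.0 / e ** 2
        group_x.append(x)
        sum_wy += w * y
        sum_w += w
        mean = sum_wy / sum_w
        if abs(mean) > n_sigma / np.sqrt(sum_w):  # 1/sqrt(sum_w) is the error on the mean
            xs.append(float(np.mean(group_x)))
            ys.append(mean)
            group_x, sum_wy, sum_w = [], 0.0, 0.0
    if group_x:  # trailing bins that never reached significance
        xs.append(float(np.mean(group_x)))
        ys.append(sum_wy / sum_w)
    return np.array(xs), np.array(ys)


def gaussian_kernel_smooth(x_points, y_points, x_eval, width):
    """Gaussian-kernel weighted average of (x_points, y_points) evaluated at x_eval."""
    x_points = np.asarray(x_points, dtype=float)
    y_points = np.asarray(y_points, dtype=float)
    x_eval = np.asarray(x_eval, dtype=float)
    k = np.exp(-0.5 * ((x_eval[:, None] - x_points[None, :]) / width) ** 2)
    return (k * y_points[None, :]).sum(axis=1) / k.sum(axis=1)


centres = np.array([1.0, 2.0, 3.0, 4.0])  # original bin centres
xs, ys = rebin_until_significant(centres, [0.05] * 4, [0.01] * 4)
smoothed = gaussian_kernel_smooth(xs, ys, centres, width=1.0)
```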

The uncertainty on the unfolding procedure, estimated as described in Sect. 6, is also important in some distributions. There is an additional uncertainty on the luminosity calibration which is not included here: for the cross sections it amounts to \(3.5\,\%\), while it cancels for all other distributions.

Other sources of uncertainty were examined, found to be negligible and therefore ignored. Specifically, residual pileup contributions from soft-scatter vertices were studied by dividing the data into two subsamples coming from high-luminosity and low-luminosity runs. The disagreement between these subsamples was found to be negligible and hence no separate systematic uncertainty was assigned. The effect of varying the cut applied on the JVF was also studied and was found to produce differences in the detector-level distributions. However, such differences were well-reproduced by the pythia 6.4 sample and the resulting deviations after unfolding were much smaller than other uncertainties. Accordingly, no systematic uncertainty was assigned here either.

Figure 2 shows the summary of systematic uncertainties for two sample distributions: Fig. 2a for the gap fraction as a function of \({\Delta y}\) and Fig. 2b for the \({\langle \cos \left( \pi - \Delta \phi \right) \rangle }\) distribution as a function of \({\overline{p_{\mathrm{T}}}}\).

Fig. 2 Summary of systematic uncertainties on (a) the gap fraction as a function of \({\Delta y}\) and (b) the \({\langle \cos \left( \pi - \Delta \phi \right) \rangle }\) distribution as a function of \({\overline{p_{\mathrm{T}}}}\). Here the systematic uncertainties from the jet energy scale (dark dashes), Monte Carlo statistical precision (dark dots), jet energy resolution (dark dashed-dots), unfolding (light dashes), jet \({\phi }\) resolution (light dots) and from residual trigger inefficiencies (light dashed-dots) are shown. The total systematic uncertainty (light cross-hatched area) is also shown.

8 Theoretical predictions

Two state-of-the-art theoretical predictions, namely High Energy Jets (HEJ) [16, 67] and the powheg Box [68–70], are considered in this paper.

hej provides a leading-logarithmic (LL) calculation of the perturbative terms that dominate the production of multi-jet events when the jets span a large range in rapidity [16, 67, 71]. This formalism resums logarithms relevant in the Mueller–Navelet [72] limit, and incorporates a contribution from all final states with at least two hard jets. The purely partonic multi-jet output from hej can also be interfaced to the ariadne parton shower framework [73] to evolve the prediction to the hadron-level final state [74]. The ariadne program is based on the colour-dipole cascade model [75] in which gluon emissions are modelled as radiation from colour-connected partons, and provides soft and collinear radiation down to the hadronic scale, using pythia 6.4 for hadronisation. This accounts for radiation in the rapidity region outside the multi-jet system modelled by hej and represents a contribution from small-\(x\), BFKL-like, logarithmic terms.

The powheg Box (version r2169) provides a full next-to-leading-order dijet calculation and is interfaced to either pythia 8 [76] (AU2 tune with \(\alpha _\text {s}\) matching for the ISR [77]) or herwig [78] (AUET1 tune [79]) to provide all-order resummation of soft and collinear emissions using the parton shower approximation. The advantage over the simple \({2\rightarrow 2}\) matrix elements is that the emission of an additional third hard parton is calculated exactly in pQCD, allowing observables that depend on the third jet to be calculated with good accuracy [80].

The prediction provided by powheg uses the DGLAP formalism, while that provided by hej is based on BFKL. For all theoretical predictions, events were generated using the CT10 PDF set [81]. The orthogonal error sets provided as part of the CT10 PDF set were used in order to evaluate the uncertainty inherent in the PDF, at the 68 % confidence level, following the CTEQ prescription [82]. The default choice for the renormalisation and factorisation scales was the transverse momentum of the leading parton in each event. The uncertainty due to higher-order corrections was estimated for the powheg prediction by increasing and decreasing the scale by a factor of two and taking the envelope of these variations. For the hej predictions, an envelope of nineteen scale variations was considered. These were constructed by varying each scale upwards and downwards by factors of 2 and \(\sqrt{2}\), but excluding those cases where the ratio between the two scale factors was greater than two.
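The count of nineteen hej scale choices can be checked with a short sketch. It assumes that the renormalisation and factorisation scales are varied independently by the multiplicative factors 1/2, \(1/\sqrt{2}\), 1, \(\sqrt{2}\) and 2 relative to the default scale, and that only pairs whose ratio does not exceed two are kept. The function name `hej_scale_grid` is illustrative and is not part of the hej code.

```python
import itertools
import math

# Multiplicative factors applied to the default scale (the leading-parton pT).
FACTORS = [0.5, 1 / math.sqrt(2), 1.0, math.sqrt(2), 2.0]


def hej_scale_grid(max_ratio=2.0):
    """Return the (k_R, k_F) factor pairs whose mutual ratio is at most max_ratio."""
    grid = []
    for k_r, k_f in itertools.product(FACTORS, repeat=2):
        ratio = max(k_r / k_f, k_f / k_r)
        # A small tolerance keeps pairs such as (1/sqrt(2), sqrt(2)), whose
        # ratio is exactly 2 but may evaluate to slightly above 2 in floating point.
        if ratio <= max_ratio * (1 + 1e-9):
            grid.append((k_r, k_f))
    return grid


print(len(hej_scale_grid()))  # prints 19
```

Of the 25 possible pairs, the six with a ratio of \(2\sqrt{2}\) or 4 are discarded, which leaves the nineteen variations (including the central choice) quoted above.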

The scale and PDF uncertainties were combined in quadrature to construct an overall uncertainty for each prediction. The PDF uncertainties are small across all of the phase-space regions considered in this paper and hence the predominant contribution to the uncertainty comes from the scale uncertainty. Theoretical uncertainties on the hej+ariadne prediction are not currently calculable and are not shown here. The range covered by the central values of the powheg+pythia 8 and powheg+herwig predictions gives an estimate of the uncertainty inherent in the parton shower matching procedure. This range, together with the uncertainty band on the powheg+pythia 8 prediction, can be considered as a total theoretical uncertainty on the NLO+DGLAP prediction, which can be compared to the predictions given by hej and hej+ariadne.
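A minimal sketch of how an asymmetric scale envelope and a Hessian PDF uncertainty might be combined in quadrature, bin by bin, is given below. It assumes paired up/down eigenvector predictions and the symmetric master formula commonly used with CTEQ-style error sets; since the text does not specify how the 68 % confidence level is obtained, the rescaling is left as a user-supplied factor. All function names are hypothetical.

```python
import math


def scale_envelope(central, variations):
    """Asymmetric (up, down) scale uncertainty from the envelope of variations."""
    values = list(variations) + [central]
    return max(values) - central, central - min(values)


def hessian_pdf_uncertainty(plus, minus):
    """Symmetric Hessian PDF uncertainty from paired eigenvector predictions.

    Master formula: 0.5 * sqrt(sum_i (X_i^+ - X_i^-)^2).
    """
    if len(plus) != len(minus):
        raise ValueError("eigenvector predictions must come in +/- pairs")
    return 0.5 * math.sqrt(sum((p - m) ** 2 for p, m in zip(plus, minus)))


def total_uncertainty(central, scale_variations, pdf_plus, pdf_minus, cl_factor=1.0):
    """Combine the scale envelope and the PDF uncertainty in quadrature.

    cl_factor is a hypothetical knob for rescaling the PDF uncertainty to the
    desired confidence level (for Gaussian errors, 1/1.645 maps 90% to 68%).
    """
    up, down = scale_envelope(central, scale_variations)
    pdf = cl_factor * hessian_pdf_uncertainty(pdf_plus, pdf_minus)
    return math.hypot(up, pdf), math.hypot(down, pdf)
```

For powheg, `scale_variations` would hold the two predictions with the scale doubled and halved; for hej, the predictions at the non-central scale choices.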

Finally, in order to allow comparisons against the fully corrected data distributions presented in this paper, the partons from hej or the final-state particles from hej+ariadne, powheg+pythia 8 and powheg+herwig were clustered together using the same jet algorithm and parameters as for the data.
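The clustering step can be illustrated with a naive \(O(N^3)\) implementation of the \({\mathrm{anti}\hbox {-}{k_t}}\) algorithm with \({R=0.6}\), the jet definition used for the data. This is a didactic sketch rather than an optimised production implementation; it assumes E-scheme (four-vector) recombination and input particles or partons with non-zero transverse momentum and \(E > |p_z|\). The class and function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class FourVector:
    px: float
    py: float
    pz: float
    e: float

    def __add__(self, other):
        # E-scheme recombination: four-momenta are simply added.
        return FourVector(self.px + other.px, self.py + other.py,
                          self.pz + other.pz, self.e + other.e)

    @property
    def pt(self):
        return math.hypot(self.px, self.py)

    @property
    def rapidity(self):
        return 0.5 * math.log((self.e + self.pz) / (self.e - self.pz))

    @property
    def phi(self):
        return math.atan2(self.py, self.px)


def delta_r2(a, b):
    """Squared distance in the (rapidity, azimuth) plane, with phi wrapped."""
    dphi = abs(a.phi - b.phi)
    if dphi > math.pi:
        dphi = 2 * math.pi - dphi
    return (a.rapidity - b.rapidity) ** 2 + dphi ** 2


def anti_kt(particles, radius=0.6):
    """Naive O(N^3) anti-kt clustering; returns jets ordered by decreasing pT."""
    pseudojets = list(particles)
    jets = []
    while pseudojets:
        # Smallest beam distance d_iB = 1 / pT_i^2 belongs to the hardest pseudojet.
        i_beam = max(range(len(pseudojets)), key=lambda i: pseudojets[i].pt)
        d_min = pseudojets[i_beam].pt ** -2
        pair = None
        # Pairwise distance d_ij = min(pT_i^-2, pT_j^-2) * dR_ij^2 / R^2.
        for i in range(len(pseudojets)):
            for j in range(i + 1, len(pseudojets)):
                a, b = pseudojets[i], pseudojets[j]
                d_ij = min(a.pt ** -2, b.pt ** -2) * delta_r2(a, b) / radius ** 2
                if d_ij < d_min:
                    d_min, pair = d_ij, (i, j)
        if pair is None:
            # The beam distance is smallest: promote the pseudojet to a final jet.
            jets.append(pseudojets.pop(i_beam))
        else:
            i, j = pair
            merged = pseudojets[i] + pseudojets[j]
            pseudojets.pop(j)  # j > i, so removing j first keeps index i valid
            pseudojets.pop(i)
            pseudojets.append(merged)
    return sorted(jets, key=lambda jet: jet.pt, reverse=True)
```

Because the distance measure is weighted by the inverse squared transverse momentum of the harder object, soft particles are absorbed into nearby hard ones first, which gives anti-\(k_t\) jets their regular, cone-like shape. Any jet transverse-momentum threshold would be applied to the returned list afterwards.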

9 Results and discussion

The fully corrected data are compared to the theoretical predictions from powheg and hej described in Sect. 8. The powheg prediction is presented after parton showering, hadronisation and underlying-event simulation with either pythia 8 or herwig. As the uncertainties on these two powheg predictions are highly correlated, uncertainties are shown only on the powheg+pythia 8 prediction; for powheg+herwig only the central value is presented. Two hej curves are presented: a pure parton-level prediction and a prediction after interfacing with the ariadne parton shower. For hej+ariadne, the central value is shown together with its statistical uncertainty.

9.1 Gap fraction and mean jet multiplicity

Figures 3 and 4 show the gap fraction and the number of jets in the rapidity gap, respectively, as functions of \({\Delta y}\) and \({\overline{p_{\mathrm{T}}}}\). Naïvely, it is expected from pQCD that the number of events passing the jet veto should be exponentially suppressed as a function of \({\Delta y}\) and \({\ln {\left( \overline{p_{\mathrm{T}}}/Q_{0}\right) }}\) due to the exchange of colour in the \({t}\)-channel [19]. However, non-exponential behaviour may become apparent in the tails of these distributions, as the steeply falling parton distribution functions can reduce the probability of additional quark and gluon radiation from the dijet system and hence increase the gap fraction [15]. This can be understood by considering extreme values of \({\Delta y}\) or \({\overline{p_{\mathrm{T}}}}\): in the kinematic limit, all of the collision energy is used to create the dijet pair and none is available for additional radiation, so the gap fraction must be unity there. As the gap fraction is expected to be smooth, it must therefore begin to increase at some point as this limit is approached. Furthermore, since the cross section for QCD colour-singlet exchange increases with jet separation [21], any contribution from such processes would also lead to an increase in the gap fraction at large \({\Delta y}\).
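The gap fraction and the mean number of jets in the rapidity interval can be made concrete with a short sketch. For illustration only, the dijet system is taken to be the two highest-\(p_{\mathrm{T}}\) jets, and a gap jet is any other jet with transverse momentum above the veto scale \({Q_{0}}\) whose rapidity lies strictly between those of the dijet; these choices, and all names, are assumptions rather than the exact selection used in the analysis. In practice the events would first be binned in \({\Delta y}\) or \({\overline{p_{\mathrm{T}}}}\), for which the helper `dijet_variables` is provided.

```python
from dataclasses import dataclass


@dataclass
class Jet:
    pt: float  # transverse momentum in GeV
    y: float   # rapidity


def dijet_variables(jets):
    """Return (Delta y, mean pT) of the two highest-pT jets (assumed dijet)."""
    first, second = sorted(jets, key=lambda j: j.pt, reverse=True)[:2]
    return abs(first.y - second.y), 0.5 * (first.pt + second.pt)


def jets_in_gap(jets, q0):
    """Count jets above q0 strictly inside the rapidity interval of the dijet."""
    ordered = sorted(jets, key=lambda j: j.pt, reverse=True)
    y_low, y_high = sorted((ordered[0].y, ordered[1].y))
    return sum(1 for j in ordered[2:] if j.pt > q0 and y_low < j.y < y_high)


def gap_fraction_and_multiplicity(events, q0=20.0):
    """Gap fraction and mean number of gap jets for a list of dijet events."""
    counts = [jets_in_gap(event, q0) for event in events]
    n_events = len(counts)
    gap_fraction = sum(1 for n in counts if n == 0) / n_events
    mean_multiplicity = sum(counts) / n_events
    return gap_fraction, mean_multiplicity
```

The two observables are complementary: the gap fraction only records whether any jet passes the veto, while the mean multiplicity is sensitive to how many jets are emitted into the interval.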

The measured gap fraction (black dots) as a function of a \({\Delta y}\) and b \({\overline{p_{\mathrm{T}}}}\). The inner error bars represent statistical uncertainty while the outer error bars represent the quadrature sum of the systematic and statistical uncertainties. For comparison, the predictions from parton-level hej (light-shaded cross-hatched band), hej+ariadne (mid-shaded dotted band), powheg+pythia 8 (dark-shaded hatched band) and powheg+herwig (dotted line) are also included. The ratio of the theory predictions to the data is shown in the bottom panel

The mean number of jets above the veto threshold in the rapidity interval bounded by the dijet system measured in data as a function of a \({\Delta y}\) and b \({\overline{p_{\mathrm{T}}}}\). For comparison, the hej, hej+ariadne, powheg+pythia 8 and powheg+herwig predictions are presented in the same way as Fig. 3

The data do indeed show exponential behaviour in Fig. 3 at low values of \({\Delta y}\) and \({\overline{p_{\mathrm{T}}}}\), but deviate from purely exponential behaviour at the highest values of \({\Delta y}\) and \({\overline{p_{\mathrm{T}}}}\), with the gap fraction reaching a plateau in both distributions. For the \({\overline{p_{\mathrm{T}}}}\) distribution, this plateau is qualitatively reproduced by all the predictions considered here, even those which do not provide good overall agreement with the data. The plateau observed in data for the \({\Delta y}\) distribution is not, however, as prominent in any of the theoretical predictions, which all continue to fall as \({\Delta y}\) increases. A similar excess was observed in previous experiments [28–35] and was attributed to colour-singlet exchange effects. However, here the spread of theoretical predictions is too large to allow definite conclusions to be drawn and improved calculations are needed before a quantitative statement can be made.

In the high-\({\Delta y}\) region, both powheg predictions slightly underestimate the gap fraction and hence overestimate the mean jet multiplicity in the rapidity interval. Partonic hej slightly overestimates the gap fraction for intermediate values of \({\Delta y}\). Interfacing hej to ariadne improves the description of the data across the \({\Delta y}\) spectrum.

powheg+pythia 8, which resums soft and collinear emissions through the parton shower approximation, provides a good description of the gap fraction and the mean jet multiplicity distributions as a function of \({\overline{p_{\mathrm{T}}}}\). On the other hand, the powheg+herwig model, which also provides a similar resummation, consistently predicts too much jet activity across the \({\overline{p_{\mathrm{T}}}}\) range. Conversely, hej, which does not attempt to resum these soft and collinear terms, provides a poor description of the data in the large \({\ln {\left( \overline{p_{\mathrm{T}}}/Q_{0}\right) }}\) limit. Significantly improved agreement with the data is seen when interfacing hej to the ariadne parton shower model, which performs a resummation of these terms. In fact, the prediction from hej+ariadne is similar to that from powheg+pythia 8 for most values of \({\Delta y}\) and \({\overline{p_{\mathrm{T}}}}\).

9.2 Azimuthal decorrelations

Figure 5 shows the \({\langle \cos \left( \pi - \Delta \phi \right) \rangle }\) and \({{\langle \cos \left( 2 \Delta \phi \right) \rangle }/ {\langle \cos \left( \pi - \Delta \phi \right) \rangle }}\) distributions, as functions of \({\Delta y}\) and \({\overline{p_{\mathrm{T}}}}\), for inclusive dijet events. For the azimuthal moments, \({\langle \cos \left( n \left( \pi - \Delta \phi \right) \right) \rangle }\), a decrease (increase) in azimuthal correlation manifests as a decrease (increase) in the azimuthal moment. As the dijets deviate from a back-to-back topology, the second azimuthal moment falls more rapidly than the first (in the region \({{\Delta \phi } > \pi /2}\) where the majority of events lie). The ratio \({{\langle \cos \left( 2 \Delta \phi \right) \rangle }/ {\langle \cos \left( \pi - \Delta \phi \right) \rangle }}\) is, therefore, expected to show a similar, but more pronounced, dependence on azimuthal correlation to that seen in the moments.
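The faster fall of the second moment follows from a small-angle expansion in \(\delta = \pi - \Delta \phi\): \(\cos \delta \approx 1 - \delta^2/2\), whereas \(\cos 2\delta \approx 1 - 2\delta^2\), so the \(n = 2\) moment moves four times as far from unity for small deviations from back-to-back. Note also that \(\cos \left( 2 \left( \pi - \Delta \phi \right) \right) = \cos \left( 2 \Delta \phi \right)\). The sketch below computes both moments and their ratio from a list of \({\Delta \phi }\) values; the optional per-event weights and the function name are illustrative assumptions.

```python
import math


def azimuthal_moments(delta_phis, weights=None):
    """Return <cos(pi - dphi)>, <cos(2 dphi)> and their ratio.

    cos(2 (pi - dphi)) equals cos(2 dphi), so the second value is the n = 2 moment.
    Optional weights allow, e.g., weighted Monte Carlo events.
    """
    if weights is None:
        weights = [1.0] * len(delta_phis)
    total = sum(weights)
    m1 = sum(w * math.cos(math.pi - d) for w, d in zip(weights, delta_phis)) / total
    m2 = sum(w * math.cos(2 * d) for w, d in zip(weights, delta_phis)) / total
    return m1, m2, m2 / m1
```

For perfectly back-to-back dijets all three quantities equal one; any decorrelation lowers the ratio, because the numerator responds more strongly than the denominator.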

The measured a, b \({\langle \cos \left( \pi - \Delta \phi \right) \rangle }\) and c, d \({{\langle \cos \left( 2 \Delta \phi \right) \rangle }/ {\langle \cos \left( \pi - \Delta \phi \right) \rangle }}\) distributions as a function of a, c \({\Delta y}\) and b, d \({\overline{p_{\mathrm{T}}}}\). For comparison, the hej, hej+ariadne, powheg+pythia 8 and powheg+herwig predictions are presented in the same way as Fig. 3

The data show the expected qualitative behaviour of a decrease in azimuthal correlation with increasing \({\Delta y}\) and increase in azimuthal correlation with increasing \({\overline{p_{\mathrm{T}}}}\). Both powheg predictions underestimate the degree of azimuthal correlation except in the high-\({\overline{p_{\mathrm{T}}}}\) region, while hej predicts too much azimuthal correlation. In both cases, the changing degree of correlation with \({\Delta y}\) and \({\overline{p_{\mathrm{T}}}}\) is, for the most part, well described by the predictions. The largest differences between the predictions and the data are seen at high \({\Delta y}\) and low \({\overline{p_{\mathrm{T}}}}\).

Additionally, taking into account the uncertainties of the predictions, the separation between the theoretical predictions is significantly greater for the ratio \({{\langle \cos \left( 2 \Delta \phi \right) \rangle }/ {\langle \cos \left( \pi - \Delta \phi \right) \rangle }}\) than for the \({\langle \cos \left( \pi - \Delta \phi \right) \rangle }\) distribution alone. The ratio therefore gives enhanced discrimination between the DGLAP-like powheg and BFKL-like hej predictions, as anticipated by theoretical calculations [83]. Here, neither hej nor powheg provides good agreement with the data. However, the hej+ariadne prediction gives a good description of the data at low \({\overline{p_{\mathrm{T}}}}\) and at large \({\Delta y}\).

Figure 6 shows the corresponding \({\langle \cos \left( \pi - \Delta \phi \right) \rangle }\) and \({{\langle \cos \left( 2 \Delta \phi \right) \rangle }/ {\langle \cos \left( \pi - \Delta \phi \right) \rangle }}\) distributions for events that pass the veto requirement on additional jet activity in the rapidity interval bounded by the dijet system. In this case, with the jet veto suppressing additional quark and gluon radiation, the spectra show the opposite behaviour, namely a slight increase in correlation with \({\Delta y}\), which now agrees with the rise seen in the \({\overline{p_{\mathrm{T}}}}\) distribution. This can be explained by considering that as \({\Delta y}\) or \({\overline{p_{\mathrm{T}}}}\) increases, the veto requirement imposes an increasingly back-to-back topology on the dijet system. The spread of theoretical predictions is again large in each distribution, with the powheg predictions having too much decorrelation and hej predicting too little decorrelation.

The measured a, b \({\langle \cos \left( \pi - \Delta \phi \right) \rangle }\) and c, d \({{\langle \cos \left( 2 \Delta \phi \right) \rangle }/ {\langle \cos \left( \pi - \Delta \phi \right) \rangle }}\) distributions, for gap events, as a function of a, c \({\Delta y}\) and b, d \({\overline{p_{\mathrm{T}}}}\). The veto scale is \({Q_{0}}\) = 20 \((30)\,\mathrm{GeV}\) for data collected during 2010 (2011). For comparison, the hej, hej+ariadne, powheg+pythia 8 and powheg+herwig predictions are presented in the same way as Fig. 3

The use of the ariadne parton shower again brings hej into better agreement with the data, although the agreement is not as good as in the inclusive case. The best agreement is given by powheg+pythia 8, especially in the highest \({\overline{p_{\mathrm{T}}}}\) bins. hej agrees less well with the data than in the inclusive case, showing that this region of widely separated hard jets without additional radiation is not well reproduced by the hej calculation. A quantitative statement about the degree of agreement between hej+ariadne and the data cannot, however, be made in the absence of theoretical uncertainties on this calculation.

Figures 7 and 8 show the double-differential dijet cross sections as functions of \({\Delta \phi }\) and \({\Delta y}\) for inclusive and gap events, respectively. The predictions from powheg+pythia 8 and powheg+herwig provide a good overall description of the measured cross sections, within the experimental and theoretical uncertainties, with the only notable deviations occurring at high \({\Delta \phi }\) in the lowest \({\Delta y}\) bins. This is consistent with the observations in previous ATLAS studies [1]. hej underestimates the cross section seen in data throughout the \({\Delta y}\) range, although it provides a good description of the overall shape. This underestimate is noticeably enhanced when only gap events are considered, a regime far from the wide-angle, hard-emission limit in which the underlying resummation procedure is valid.

The measured double-differential cross sections (black points) as a function of \({\Delta \phi }\) for eight slices in \({\Delta y}\). For comparison, the hej, hej+ariadne, powheg+pythia 8 and powheg+herwig predictions are presented in the same way as Fig. 3. In a the absolute comparison is shown, while in b the ratios of the predictions to the data are shown

The measured double-differential cross sections as a function of \({\Delta \phi }\) for eight slices in \({\Delta y}\), for gap events. The veto scale is \({{Q_{0}} = 20\,\mathrm{GeV}}\). For comparison, the hej, hej+ariadne, powheg+pythia 8 and powheg+herwig predictions are presented in the same way as Fig. 3. In a the absolute comparison is shown, while in b the ratios of the predictions to the data are shown

10 Summary

Theoretical predictions based on perturbative QCD are tested by studying dijet events in extreme regions of phase space. Measurements of the gap fraction and of the azimuthal decorrelation are presented as functions of both the rapidity separation, \({\Delta y}\), and the average dijet transverse momentum, \({\overline{p_{\mathrm{T}}}}\), extending previous studies up to eight rapidity units in \({\Delta y}\) and \(1.5\,\mathrm{TeV}\) in \({\overline{p_{\mathrm{T}}}}\). The measurements are used to investigate the predicted breakdown of DGLAP evolution and the appearance of BFKL effects by comparing the data to the all-order resummed leading-logarithmic calculations of hej and the full next-to-leading-order calculations of powheg. The full data sample collected with the ATLAS detector in \(7\,\mathrm{TeV}\) \(pp\) collisions at the LHC is used, corresponding to integrated luminosities of \({36.1}\,\mathrm{pb}^{-1}\) and \({4.5}\,\mathrm{fb}^{-1}\) for data collected during 2010 and 2011, respectively.

The data show the expected behaviour of a reduction of gap events, or equivalently, an increase in jet activity, for large values of \({\overline{p_{\mathrm{T}}}}\) and \({\Delta y}\), together with an associated rise in the number of jets in the rapidity interval. The azimuthal moments show an increase in correlation with increasing \({\overline{p_{\mathrm{T}}}}\) and an increase in (de)correlation with increasing \({\Delta y}\) for gap (all) events. The expected increase in cross section with \({\Delta \phi }\) is also seen.

The powheg+pythia 8 prediction provides a reasonable description of the data in most distributions, but shows disagreement in some areas of phase-space, particularly for the inclusive azimuthal distributions in the limit of large \({\Delta y}\) or small \({\overline{p_{\mathrm{T}}}/Q_{0}}\). When the herwig parton shower is used instead of pythia 8, the agreement with data worsens as herwig predicts too many jets above the veto scale. The partonic hej prediction provides a poor description of the data in most of the distributions presented, with the exception of the gap fraction and jet multiplicity distributions as a function of \({\Delta y}\). The addition of the ariadne parton shower, which accounts for some of the soft and collinear terms ignored in the hej approximation, brings the prediction closer to powheg+pythia 8.

No single theoretical prediction is able to describe the data simultaneously over the full phase-space region considered here; in general, however, the best agreement is given by powheg+pythia 8 and hej+ariadne. The variable best able to discriminate between the DGLAP-like prediction from powheg+pythia 8 and the BFKL-like prediction from hej is \({{\langle \cos \left( 2 \Delta \phi \right) \rangle }/ {\langle \cos \left( \pi - \Delta \phi \right) \rangle }}\). Here, it can clearly be seen that neither of these predictions describes the data accurately as a function of either \({\Delta y}\) or \({\overline{p_{\mathrm{T}}}}\). It should be noted, however, that when the inclusive event sample is considered, the hej+ariadne model, a combination of BFKL-like parton dynamics with the colour-dipole cascade model, provides a good description of \({{\langle \cos \left( 2 \Delta \phi \right) \rangle }/ {\langle \cos \left( \pi - \Delta \phi \right) \rangle }}\) in the large \({\Delta y}\) and small \({\overline{p_{\mathrm{T}}}}\) regions, where the powheg models show some divergence from the data.

In most of the phase-space regions presented, the experimental uncertainty is smaller than the spread of theoretical predictions. These disparities between predictions represent a genuine difference in the modelling of the underlying physics, and the data can, therefore, provide a crucial input for constraining parton-shower models in the future, particularly in the case of QCD radiation between widely separated or high transverse momentum dijets. Improved theoretical predictions are essential before any conclusions can be drawn about the presence or otherwise of BFKL effects or colour-singlet exchange in these data.

Notes

ATLAS uses a right-handed coordinate system with its origin at the nominal interaction point (IP) in the centre of the detector and the \({z}\)-axis along the beam pipe. The \({x}\)-axis points from the IP to the centre of the LHC ring, and the \({y}\)-axis points upward. Cylindrical coordinates \({(r,\phi )}\) are used in the transverse plane, \({\phi }\) being the azimuthal angle around the beam pipe. The pseudorapidity is defined in terms of the polar angle \({\theta }\) as \({\eta =-\ln \tan (\theta /2)}\). The rapidity of a particle with respect to the beam axis is defined as \({y = (1/2)\ln \left[ (E+p_z)/(E-p_z)\right] }\).
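The rapidity and pseudorapidity defined above coincide only for massless particles. A short sketch computing both from a particle's momentum components illustrates this; the function names are illustrative.

```python
import math


def pseudorapidity(px, py, pz):
    """eta = -ln tan(theta / 2), with theta the polar angle to the z-axis."""
    theta = math.atan2(math.hypot(px, py), pz)
    return -math.log(math.tan(theta / 2))


def rapidity(e, pz):
    """y = (1/2) ln[(E + p_z) / (E - p_z)]."""
    return 0.5 * math.log((e + pz) / (e - pz))
```

For a massless particle with \(p_x = 1\), \(p_z = 2\) and \(E = \sqrt{5}\) (in arbitrary units), both functions give the same value; giving the same momentum a larger energy (a non-zero mass) reduces \(|y|\) below \(|\eta|\).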

The electromagnetic scale is the basic calorimeter signal scale for the ATLAS calorimeters. It gives the correct response for the energy deposited in electromagnetic showers, but it does not correct for the different response for hadrons.

References

ATLAS Collaboration, Eur. Phys. J. C 71, 1512 (2011). arXiv:1009.5908 [hep-ph]

CMS Collaboration, Phys. Lett. B 700, 187–206 (2011). arXiv:1104.1693 [hep-ex]

CMS Collaboration, Phys. Rev. Lett. 107, 132001 (2011). arXiv:1106.0208 [hep-ex]

ATLAS Collaboration, Phys. Rev. D 86, 014022 (2012). arXiv:1112.6297 [hep-ex]

CMS Collaboration, Phys. Rev. D 87(11), 112002 (2013). arXiv:1212.6660 [hep-ex]

ATLAS Collaboration, Eur. Phys. J. C 73, 2509 (2013). arXiv:1304.4739 [hep-ex]

ATLAS Collaboration, arXiv:1312.3524 [hep-ex]

L. Lipatov, Sov. J. Nucl. Phys. 23, 338–345 (1976)

E. Kuraev, L. Lipatov, V.S. Fadin, Sov. Phys. JETP 44, 443–450 (1976)

E. Kuraev, L. Lipatov, V.S. Fadin, Sov. Phys. JETP 45, 199–204 (1977)

I. Balitsky, L. Lipatov, Sov. J. Nucl. Phys. 28, 822–829 (1978)

V. Gribov, L. Lipatov, Sov. J. Nucl. Phys. 15, 438–450 (1972)

G. Altarelli, G. Parisi, Nucl. Phys. B 126, 298 (1977)

Y.L. Dokshitzer, Sov. Phys. JETP 46, 641–653 (1977)

R.M. Duran Delgado, J.R. Forshaw, S. Marzani, M.H. Seymour, JHEP 1108, 157 (2011). arXiv:1107.2084 [hep-ph]

J.R. Andersen, J.M. Smillie, JHEP 1001, 039 (2010). arXiv:0908.2786 [hep-ph]

J.R. Forshaw, A. Kyrieleis, M. Seymour, JHEP 0506, 034 (2005). arXiv:hep-ph/0502086

J.R. Forshaw, A. Kyrieleis, M. Seymour, JHEP 0608, 059 (2006). arXiv:hep-ph/0604094

J. Forshaw, J. Keates, S. Marzani, JHEP 0907, 023 (2009). arXiv:0905.1350 [hep-ph]

J.R. Forshaw, M.H. Seymour, A. Siodmok, JHEP 1211, 066 (2012). arXiv:1206.6363 [hep-ph]

A.H. Mueller, W.-K. Tang, Phys. Lett. B 284, 123–126 (1992)

C. Marquet, C. Royon, Phys. Rev. D 79, 034028 (2009). arXiv:0704.3409 [hep-ph]

A. Sabio Vera, F. Schwennsen, Nucl. Phys. B 776, 170–186 (2007). arXiv:hep-ph/0702158

M. Angioni, G. Chachamis, J. Madrigal, A. Sabio Vera, Phys. Rev. Lett. 107, 191601 (2011). arXiv:1106.6172 [hep-th]

A. Sabio Vera, Nucl. Phys. B 746, 1–14 (2006). arXiv:hep-ph/0602250

B. Ducloué, L. Szymanowski, S. Wallon, PoS QNP 2012, 165 (2012). arXiv:1208.6111 [hep-ph]

B. Ducloue, L. Szymanowski, S. Wallon, JHEP 1305, 096 (2013). arXiv:1302.7012 [hep-ph]

ZEUS Collaboration, M. Derrick et al., Phys. Lett. B 369, 55–68 (1996). arXiv:hep-ex/9510012

H1 Collaboration, C. Adloff et al., Eur. Phys. J. C 24, 517–527 (2002). arXiv:hep-ex/0203011

ZEUS Collaboration, S. Chekanov et al., Eur. Phys. J. C 50, 283–297 (2007). arXiv:hep-ex/0612008

D0 Collaboration, S. Abachi et al., Phys. Rev. Lett. 72, 2332–2336 (1994)

CDF Collaboration, F. Abe et al., Phys. Rev. Lett. 74, 855–859 (1995)

CDF Collaboration, F. Abe et al., Phys. Rev. Lett. 80, 1156–1161 (1998)

CDF Collaboration, F. Abe et al., Phys. Rev. Lett. 81, 5278–5283 (1998)

D0 Collaboration, B. Abbott et al., Phys. Lett. B 440, 189–202 (1998). arXiv:hep-ex/9809016

ATLAS Collaboration, JHEP 1109, 053 (2011). arXiv:1107.1641 [hep-ex]

ATLAS Collaboration, Eur. Phys. J. C 72, 1926 (2012). arXiv:1201.2808 [hep-ex]

CMS Collaboration, Eur. Phys. J. C 72, 2216 (2012). arXiv:1204.0696 [hep-ex]

ATLAS Collaboration, Phys. Rev. Lett. 106, 172002 (2011). arXiv:1102.2696 [hep-ex]

CMS Collaboration, Phys. Rev. Lett. 106, 122003 (2011). arXiv:1101.5029 [hep-ex]

D0 Collaboration, V. Abazov et al., Phys. Rev. Lett. 94, 221801 (2005). arXiv:hep-ex/0409040

D0 Collaboration, S. Abachi et al., Phys. Rev. Lett. 77, 595–600 (1996). arXiv:hep-ex/9603010

ATLAS Collaboration, Eur. Phys. J. C 73, 2518 (2013). arXiv:1302.4393 [hep-ex]

ATLAS Collaboration, JINST 3, S08003 (2008)

ATLAS Collaboration, Eur. Phys. J. C 72, 1849 (2012). arXiv:1110.1530 [hep-ex]

T. Sjostrand, S. Mrenna, P.Z. Skands, JHEP 0605, 026 (2006). arXiv:hep-ph/0603175

A. Martin, W. Stirling, R. Thorne, G. Watt, Eur. Phys. J. C 63, 189–285 (2009). arXiv:0901.0002 [hep-ph]

A. Sherstnev, R. Thorne, arXiv:0807.2132 [hep-ph]

ATLAS Collaboration, New J. Phys. 13, 053033 (2011). arXiv:1012.5104 [hep-ex]

P.Z. Skands, Phys. Rev. D 82, 074018 (2010). arXiv:1005.3457 [hep-ph]

GEANT4 Collaboration, S. Agostinelli et al., Nucl. Instrum. Meth. A 506, 250–303 (2003)

ATLAS Collaboration, Eur. Phys. J. C 70, 823–874 (2010). arXiv:1005.4568 [physics.ins-det]

M. Aharrouche et al., Nucl. Instrum. Meth. A 614, 400–432 (2010)

ATLAS Collaboration, ATL-LARG-PUB-2009-001-2. http://cds.cern.ch/record/1112035

M. Cacciari, G.P. Salam, Phys. Lett. B 641, 57–61 (2006). arXiv:hep-ph/0512210

M. Cacciari, G.P. Salam, G. Soyez, JHEP 0804, 063 (2008). arXiv:0802.1189 [hep-ph]

ATLAS Collaboration, Eur. Phys. J. C 73, 2304 (2013). arXiv:1112.6426 [hep-ex]

ATLAS Collaboration, arXiv:1406.0076 [hep-ex]

V. Lendermann et al., Nucl. Instrum. Meth. A 604, 707–718 (2009). arXiv:0901.4118 [hep-ex]

ATLAS Collaboration, ATLAS-CONF-2010-038. http://cds.cern.ch/record/1277678

ATLAS Collaboration, ATLAS-CONF-2012-020. http://cds.cern.ch/record/1430034

C. Buttar et al., arXiv:0803.0678 [hep-ph]

G. D’Agostini, arXiv:1010.0632 [physics.data-an]

T. Adye, arXiv:1105.1160 [physics.data-an]

B. Efron, Ann. Stat. 7, 1–26 (1979)

ATLAS Collaboration, Eur. Phys. J. C 73, 2306 (2013). arXiv:1210.6210 [hep-ex]

J.R. Andersen, J.M. Smillie, Phys. Rev. D 81, 114021 (2010). arXiv:0910.5113 [hep-ph]

P. Nason, JHEP 0411, 040 (2004). arXiv:hep-ph/0409146

S. Frixione, P. Nason, C. Oleari, JHEP 0711, 070 (2007). arXiv:0709.2092 [hep-ph]

S. Alioli, P. Nason, C. Oleari, E. Re, JHEP 1006, 043 (2010). arXiv:1002.2581 [hep-ph]

J.R. Andersen, J.M. Smillie, JHEP 1106, 010 (2011). arXiv:1101.5394 [hep-ph]

A.H. Mueller, H. Navelet, Nucl. Phys. B 282, 727 (1987)

L. Lonnblad, Comput. Phys. Commun. 71, 15–31 (1992)

J.R. Andersen, L. Lonnblad, J.M. Smillie, JHEP 1107, 110 (2011). arXiv:1104.1316 [hep-ph]

L. Lonnblad, Z. Phys. C 65, 285–292 (1995)

T. Sjostrand, S. Mrenna, P.Z. Skands, Comput. Phys. Commun. 178, 852–867 (2008). arXiv:0710.3820 [hep-ph]

ATLAS Collaboration, ATL-PHYS-PUB-2012-003. http://cds.cern.ch/record/1474107

G. Corcella et al., JHEP 0101, 010 (2001). arXiv:hep-ph/0011363

ATLAS Collaboration, ATL-PHYS-PUB-2010-014. http://cds.cern.ch/record/1303025

S. Alioli, K. Hamilton, P. Nason, C. Oleari, E. Re, JHEP 1104, 081 (2011). arXiv:1012.3380 [hep-ph]

J. Pumplin et al., JHEP 0207, 012 (2002). arXiv:hep-ph/0201195

J.M. Campbell, J. Huston, W. Stirling, Rept. Prog. Phys. 70, 89 (2007). arXiv:hep-ph/0611148

D. Colferai, F. Schwennsen, L. Szymanowski, S. Wallon, JHEP 1012, 026 (2010). arXiv:1002.1365 [hep-ph]

Acknowledgments

The hej and hej+ariadne predictions used in this paper were produced by Jeppe Andersen, Jack Medley and Jennifer Smillie. We thank CERN for the very successful operation of the LHC, as well as the support staff from our institutions without whom ATLAS could not be operated efficiently. We acknowledge the support of ANPCyT, Argentina; YerPhI, Armenia; ARC, Australia; BMWF and FWF, Austria; ANAS, Azerbaijan; SSTC, Belarus; CNPq and FAPESP, Brazil; NSERC, NRC and CFI, Canada; CERN; CONICYT, Chile; CAS, MOST and NSFC, China; COLCIENCIAS, Colombia; MSMT CR, MPO CR and VSC CR, Czech Republic; DNRF, DNSRC and Lundbeck Foundation, Denmark; EPLANET, ERC and NSRF, European Union; IN2P3-CNRS, CEA-DSM/IRFU, France; GNSF, Georgia; BMBF, DFG, HGF, MPG and AvH Foundation, Germany; GSRT and NSRF, Greece; ISF, MINERVA, GIF, I-CORE and Benoziyo Center, Israel; INFN, Italy; MEXT and JSPS, Japan; CNRST, Morocco; FOM and NWO, Netherlands; BRF and RCN, Norway; MNiSW and NCN, Poland; GRICES and FCT, Portugal; MNE/IFA, Romania; MES of Russia and ROSATOM, Russian Federation; JINR; MSTD, Serbia; MSSR, Slovakia; ARRS and MIZŠ, Slovenia; DST/NRF, South Africa; MINECO, Spain; SRC and Wallenberg Foundation, Sweden; SER, SNSF and Cantons of Bern and Geneva, Switzerland; NSC, Taiwan; TAEK, Turkey; STFC, the Royal Society and Leverhulme Trust, United Kingdom; DOE and NSF, United States of America. The crucial computing support from all WLCG partners is acknowledged gratefully, in particular from CERN and the ATLAS Tier-1 facilities at TRIUMF (Canada), NDGF (Denmark, Norway, Sweden), CC-IN2P3 (France), KIT/GridKA (Germany), INFN-CNAF (Italy), NL-T1 (Netherlands), PIC (Spain), ASGC (Taiwan), RAL (UK) and BNL (USA) and in the Tier-2 facilities worldwide.

Author information

Authors and Affiliations

Department of Physics, University of Adelaide, Adelaide, Australia

P. Jackson, L. Lee, N. Soni & M. J. White

Physics Department, SUNY Albany, Albany, NY, USA

J. Bouffard, W. Edson, J. Ernst, A. Fischer, S. Guindon & V. Jain

Department of Physics, University of Alberta, Edmonton, AB, Canada

A. I. Butt, P. Czodrowski, D. M. Gingrich, R. W. Moore, J. L. Pinfold, A. Saddique, A. Sbrizzi & F. Vives Vaque

Department of Physics, Ankara University, Ankara, Turkey; Department of Physics, Gazi University, Ankara, Turkey; Division of Physics, TOBB University of Economics and Technology, Ankara, Turkey; Turkish Atomic Energy Authority, Ankara, Turkey

O. Cakir, A. K. Ciftci, R. Ciftci, H. Duran Yildiz, S. Kuday, S. Sultansoy, I. Turk Cakir & M. Yilmaz

LAPP, CNRS/IN2P3 and Université de Savoie, Annecy-le-Vieux, France

Z. Barnovska, N. Berger, M. Delmastro, L. Di Ciaccio, T. K. O. Doan, S. Elles, C. Goy, T. Hryn’ova, S. Jézéquel, H. Keoshkerian, I. Koletsou, R. Lafaye, J. Levêque, V. P. Lombardo, N. Massol, H. Przysiezniak, G. Sauvage, E. Sauvan, M. Schwoerer, O. Simard, T. Todorov & I. Wingerter-Seez

High Energy Physics Division, Argonne National Laboratory, Argonne, IL, USA

L. Asquith, B. Auerbach, R. E. Blair, S. Chekanov, J. T. Childers, E. J. Feng, A. T. Goshaw, T. LeCompte, J. Love, D. Malon, D. H. Nguyen, L. Nodulman, A. Paramonov, L. E. Price, J. Proudfoot, R. W. Stanek, P. van Gemmeren, A. Vaniachine, R. Yoshida & J. Zhang

Department of Physics, University of Arizona, Tucson, AZ, USA

E. Cheu, K. A. Johns, V. Kaushik, C. L. Lampen, W. Lampl, X. Lei, R. Leone, P. Loch, R. Nayyar, F. O’grady, J. P. Rutherfoord, M. A. Shupe, B. Toggerson, E. W. Varnes & J. Veatch

Department of Physics, The University of Texas at Arlington, Arlington, TX, USA

A. Brandt, D. Côté, S. Darmora, K. De, A. Farbin, J. Griffiths, H. K. Hadavand, L. Heelan, H. Y. Kim, M. Maeno, P. Nilsson, N. Ozturk, R. Pravahan, M. Sosebee, B. Spurlock, A. R. Stradling, G. Usai, A. Vartapetian, A. White & J. Yu

Physics Department, University of Athens, Athens, Greece

S. Angelidakis, A. Antonaki, S. Chouridou, D. Fassouliotis, N. Giokaris, P. Ioannou, K. Iordanidou, C. Kourkoumelis, A. Manousakis-Katsikakis & N. Tsirintanis

Physics Department, National Technical University of Athens, Zografou, Greece

T. Alexopoulos, M. Byszewski, M. Dris, E. N. Gazis, G. Iakovidis, K. Karakostas, N. Karastathis, S. Leontsinis, S. Maltezos, K. Ntekas, E. Panagiotopoulou, Th. D. Papadopoulou, G. Tsipolitis & S. Vlachos

Institute of Physics, Azerbaijan Academy of Sciences, Baku, Azerbaijan

O. Abdinov & F. Khalil-zada

Institut de Física d’Altes Energies and Departament de Física de la Universitat Autònoma de Barcelona, Barcelona, Spain

M. Bosman, R. Caminal Armadans, M. P. Casado, M. Casolino, M. Cavalli-Sforza, M. C. Conidi, A. Cortes-Gonzalez, T. Farooque, S. Fracchia, V. Giangiobbe, G. Gonzalez Parra, S. Grinstein, A. Juste Rozas, I. Korolkov, E. Le Menedeu, I. Lopez Paz, M. Martinez, L. M. Mir, J. Montejo Berlingen, A. Pacheco Pages, C. Padilla Aranda, X. Portell Bueso, I. Riu, F. Rubbo, V. Sorin, A. Succurro, M. F. Tripiana & S. Tsiskaridze

Institute of Physics, University of Belgrade, Belgrade, Serbia; Vinca Institute of Nuclear Sciences, University of Belgrade, Belgrade, Serbia

T. Agatonovic-Jovin, P. Cirkovic, A. Dimitrievska, J. Krstic, J. Mamuzic, M. Marjanovic, D. S. Popovic, Dj. Sijacki, Lj. Simic & M. Vranjes Milosavljevic

Department for Physics and Technology, University of Bergen, Bergen, Norway

T. Buanes, O. Dale, G. Eigen, A. Kastanas, W. Liebig, A. Lipniacka, B. Martin dit Latour, P. L. Rosendahl, H. Sandaker, T. B. Sjursen, L. Smestad, B. Stugu & M. Ugland

Physics Division, Lawrence Berkeley National Laboratory and University of California, Berkeley, CA, USA

R. M. Barnett, J. Beringer, J. Biesiada, G. Brandt, J. Brosamer, P. Calafiura, L. M. Caminada, F. Cerutti, A. Ciocio, R. N. Clarke, M. Cooke, K. Copic, S. Dube, K. Einsweiler, S. Farrell, M. Garcia-Sciveres, M. Gilchriese, C. Haber, M. Hance, B. Heinemann, I. Hinchliffe, T. R. Holmes, M. Hurwitz, L. Jeanty, W. Lavrijsen, C. Leggett, P. Loscutoff, Z. Marshall, C. C. Ohm, A. Ovcharova, S. Pagan Griso, K. Potamianos, A. Pranko, D. R. Quarrie, M. Shapiro, A. Sood, M. J. Tibbetts, V. Tsulaia, J. Virzi, H. Wang, W.-M. Yao & D. R. Yu

Department of Physics, Humboldt University, Berlin, Germany

E. Bergeaas Kuutmann, F. M. Giorgi, S. Grancagnolo, G. H. Herbert, R. Herrberg-Schubert, I. Hristova, O. Kind, H. Kolanoski, H. Lacker, T. Lohse, A. Nikiforov, L. Rehnisch, P. Rieck, H. Schulz, S. Stamm, D. Wendland & M. zur Nedden

Albert Einstein Center for Fundamental Physics and Laboratory for High Energy Physics, University of Bern, Bern, Switzerland

M. Agustoni, H. P. Beck, A. Cervelli, A. Ereditato, V. Gallo, S. Haug, T. Kruker, L. F. Marti, F. Meloni, B. Schneider, F. G. Sciacca, M. E. Stramaglia, S. A. Stucci & M. S. Weber

School of Physics and Astronomy, University of Birmingham, Birmingham, UK

B. M. M. Allbrooke, L. Aperio Bella, H. S. Bansil, J. Bracinik, D. G. Charlton, A. S. Chisholm, A. C. Daniells, C. M. Hawkes, S. J. Head, S. J. Hillier, M. Levy, R. D. Mudd, J. A. Murillo Quijada, P. R. Newman, K. Nikolopoulos, J. D. Palmer, M. Slater, J. P. Thomas, P. D. Thompson, P. M. Watkins, A. T. Watson, M. F. Watson & J. A. Wilson

Department of Physics, Bogazici University, Istanbul, Turkey; Department of Physics, Dogus University, Istanbul, Turkey; Department of Physics Engineering, Gaziantep University, Gaziantep, Turkey

M. Arik, A. J. Beddall, A. Beddall, A. Bingul, S. A. Cetin, S. Istin & V. E. Ozcan

INFN Sezione di Bologna, Bologna, Italy; Dipartimento di Fisica e Astronomia, Università di Bologna, Bologna, Italy

G. L. Alberghi, L. Bellagamba, D. Boscherini, A. Bruni, G. Bruni, M. Bruschi, D. Caforio, M. Corradi, S. De Castro, R. Di Sipio, L. Fabbri, M. Franchini, A. Gabrielli, B. Giacobbe, F. M. Giorgi, P. Grafström, I. Massa, L. Massa, A. Mengarelli, M. Negrini, M. Piccinini, A. Polini, L. Rinaldi, M. Romano, C. Sbarra, N. Semprini-Cesari, R. Spighi, S. A. Tupputi, S. Valentinetti, M. Villa & A. Zoccoli

Physikalisches Institut, University of Bonn, Bonn, Germany

O. Arslan, P. Bechtle, I. Brock, M. Cristinziani, W. Davey, K. Desch, J. Dingfelder, W. Ehrenfeld, G. Gaycken, Ch. Geich-Gimbel, L. Gonella, P. Haefner, S. Hageböeck, D. Hellmich, S. Hillert, F. Huegging, J. Janssen, G. Khoriauli, P. Koevesarki, V. V. Kostyukhin, J. K. Kraus, J. Kroseberg, H. Krüger, C. Lapoire, M. Lehmacher, T. Lenz, A. M. Leyko, J. Liebal, C. Limbach, T. Loddenkoetter, S. Mergelmeyer, L. Mijović, K. Mueller, G. Nanava, T. Nattermann, T. Obermann, D. Pohl, B. Sarrazin, S. Schaepe, M. J. Schultens, T. Schwindt, F. Scutti, J. A. Stillings, N. Tannoury, J. Therhaag, K. Uchida, M. Uhlenbrock, A. Vogel, E. von Toerne, P. Wagner, T. Wang, N. Wermes, P. Wienemann, L. A. M. Wiik-Fuchs, B. T. Winter, K. H. Yau Wong, R. Zimmermann & S. Zimmermann

Department of Physics, Boston University, Boston, MA, USA

S. P. Ahlen, C. Bernard, K. M. Black, J. M. Butler, L. Dell’Asta, L. Helary, M. Kruskal, B. A. Long, J. T. Shank, Z. Yan & S. Youssef

Department of Physics, Brandeis University, Waltham, MA, USA

C. Amelung, G. Amundsen, G. Artoni, J. R. Bensinger, L. Bianchini, C. Blocker, L. Coffey, E. A. Fitzgerald, S. Gozpinar, G. Sciolla, A. Venturini, S. Zambito & K. Zengel

Universidade Federal do Rio De Janeiro COPPE/EE/IF, Rio de Janeiro, Brazil; Federal University of Juiz de Fora (UFJF), Juiz de Fora, Brazil; Federal University of Sao Joao del Rei (UFSJ), Sao Joao del Rei, Brazil; Instituto de Fisica, Universidade de Sao Paulo, São Paulo, Brazil

Y. Amaral Coutinho, L. P. Caloba, A. S. Cerqueira, M. A. B. do Vale, M. Donadelli, M. A. L. Leite, C. Maidantchik, L. Manhaes de Andrade Filho, F. Marroquim, A. A. Nepomuceno & J. M. Seixas

Physics Department, Brookhaven National Laboratory, Upton, NY, USA

D. L. Adams, K. Assamagan, M. Begel, H. Chen, V. Chernyatin, R. Debbe, M. Ernst, B. Gibbard, H. A. Gordon, X. Hu, A. Klimentov, A. Kravchenko, F. Lanni, D. Lissauer, D. Lynn, H. Ma, T. Maeno, J. Metcalfe, E. Mountricha, P. Nevski, H. Okawa, D. Oliveira Damazio, F. Paige, S. Panitkin, D. V. Perepelitsa, M.-A. Pleier, V. Polychronakos, S. Protopopescu, M. Purohit, V. Radeka, S. Rajagopalan, G. Redlinger, J. Schovancova, S. Snyder, P. Steinberg, H. Takai, A. Undrus, T. Wenaus & S. Ye

National Institute of Physics and Nuclear Engineering, Bucharest, Romania; Physics Department, National Institute for Research and Development of Isotopic and Molecular Technologies, Cluj Napoca, Romania; University Politehnica Bucharest, Bucharest, Romania; West University in Timisoara, Timisoara, Romania

C. Alexa, E. Badescu, V. Boldea, S. I. Buda, I. Caprini, M. Caprini, A. Chitan, M. Ciubancan, S. Constantinescu, C.-M. Cuciuc, P. Dita, S. Dita, O. A. Ducu, A. Jinaru, J. Maurer, A. Olariu, D. Pantea, G. A. Popeneciu, M. Rotaru, G. Stoicea, A. Tudorache & V. Tudorache

Departamento de Física, Universidad de Buenos Aires, Buenos Aires, Argentina

G. Otero y Garzon, R. Piegaia, H. Reisin & S. Sacerdoti

Cavendish Laboratory, University of Cambridge, Cambridge, UK

M. Arratia, N. Barlow, J. R. Batley, F. M. Brochu, W. Buttinger, J. R. Carter, J. D. Chapman, G. Cottin, S. T. French, J. A. Frost, T. P. S. Gillam, J. C. Hill, S. Kaneti, T. J. Khoo, C. G. Lester, T. Mueller, M. A. Parker, D. Robinson, T. Sandoval, M. Thomson, C. P. Ward, S. Williams & I. Yusuff

Department of Physics, Carleton University, Ottawa, ON, Canada

A. Bellerive, G. Cree, D. Di Valentino, T. Koffas, J. Lacey, W. A. Leight, J. F. Marchand, T. G. McCarthy, I. Nomidis, F. G. Oakham, G. Pásztor, F. Tarrade, R. Ueno, M. G. Vincter & K. Whalen

CERN, Geneva, Switzerland

R. Abreu, M. Aleksa, N. Andari, G. Anders, F. Anghinolfi, A. J. Armbruster, O. Arnaez, G. Avolio, M. A. Baak, M. Backes, M. Backhaus, M. Battistin, O. Beltramello, M. Bianco, J. A. Bogaerts, J. Boyd, H. Burckhart, S. Campana, M. D. M. Capeans Garrido, T. Carli, A. Catinaccio, A. Cattai, M. Cerv, D. Chromek-Burckhart, A. Dell’Acqua, A. Di Girolamo, B. Di Girolamo, F. Dittus, D. Dobos, A. Dudarev, M. Dührssen, N. Ellis, M. Elsing, P. Farthouat, P. Fassnacht, S. Feigl, S. Fernandez Perez, S. Franchino, D. Francis, D. Froidevaux, V. Garonne, F. Gianotti, D. Gillberg, J. Glatzer, J. Godlewski, L. Goossens, B. Gorini, H. M. Gray, M. Hauschild, R. J. Hawkings, M. Heller, C. Helsens, A. M. Henriques Correia, L. Hervas, A. Hoecker, Z. Hubacek, M. Huhtinen, M. R. Jaekel, S. Jakobsen, H. Jansen, R. M. Jungst, M. Kaneda, T. Klioutchnikova, A. Krasznahorkay, K. Lantzsch, M. Lassnig, G. Lehmann Miotto, B. Lenzi, P. Lichard, D. Macina, S. Malyukov, B. Mandelli, L. Mapelli, B. Martin, A. Marzin, A. Messina, J. Meyer, A. Milic, G. Mornacchi, A. M. Nairz, Y. Nakahama, G. Negri, M. Nessi, B. Nicquevert, M. Nordberg, S. Palestini, T. Pauly, H. Pernegger, K. Peters, B. A. Petersen, K. Pommès, A. Poppleton, G. Poulard, S. Prasad, M. Rammensee, M. Raymond, C. Rembser, L. Rodrigues, S. Roe, A. Ruiz-Martinez, A. Salzburger, D. O. Savu, D. Schaefer, S. Schlenker, K. Schmieden, C. Serfon, A. Sfyrla, C. A. Solans, G. Spigo, H. J. Stelzer, F. A. Teischinger, H. Ten Kate, L. Tremblet, A. Tricoli, C. Tsarouchas, G. Unal, D. van der Ster, N. van Eldik, M. C. van Woerden, W. Vandelli, R. Vigne, R. Voss, R. Vuillermet, P. S. Wells, T. Wengler, S. Wenig, P. Werner, H. G. Wilkens, J. Wotschack, C. J. S. Young & L. Zwalinski

Enrico Fermi Institute, University of Chicago, Chicago, IL, USA

J. Alison, K. J. Anderson, A. Boveia, Y. Cheng, G. Facini, M. Fiascaris, R. W. Gardner, Y. Ilchenko, A. Kapliy, H. L. Li, S. Meehan, C. Melachrinos, F. S. Merritt, D. W. Miller, Y. Okumura, P. U. E. Onyisi, M. J. Oreglia, B. Penning, J. E. Pilcher, M. J. Shochet, L. Tompkins, I. Vukotic & J. S. Webster

Departamento de Física, Pontificia Universidad Católica de Chile, Santiago, Chile; Departamento de Física, Universidad Técnica Federico Santa María, Valparaiso, Chile

W. K. Brooks, E. Carquin, M. A. Diaz, S. Kuleshov, R. Pezoa, F. Prokoshin, M. Vogel & R. White

Institute of High Energy Physics, Chinese Academy of Sciences, Beijing, China; Department of Modern Physics, University of Science and Technology of China, Hefei, Anhui, China; Department of Physics, Nanjing University, Nanjing, Jiangsu, China; School of Physics, Shandong University, Jinan, Shandong, China; Physics Department, Shanghai Jiao Tong University, Shanghai, China

Y. Bai, L. Chen, S. Chen, Y. Fang, C. Feng, J. Gao, P. Ge, L. Guan, L. Han, Y. Jiang, S. Jin, B. Li, L. Li, Y. Li, J. B. Liu, K. Liu, M. Liu, Y. Liu, F. Lu, L. L. Ma, Q. Ouyang, H. Peng, H. Ren, L. Y. Shan, H. Y. Song, X. Sun, J. Wang, D. Xu, L. Xu, H. Yang, L. Yao, X. Zhang, Z. Zhao, C. G. Zhu, H. Zhu, Y. Zhu & X. Zhuang

Laboratoire de Physique Corpusculaire, Clermont Université and Université Blaise Pascal and CNRS/IN2P3, Clermont-Ferrand, France

D. Boumediene, E. Busato, D. Calvet, S. Calvet, J. Donini, E. Dubreuil, N. Ghodbane, G. Gilles, Ph. Gris, C. Guicheney, H. Liao, D. Pallin, D. Paredes Hernandez, F. Podlyski, C. Santoni, T. Theveneaux-Pelzer, L. Valery & F. Vazeille

Nevis Laboratory, Columbia University, Irvington, NY, USA

A. Altheimer, T. Andeen, A. Angerami, T. Bain, G. Brooijmans, Y. Chen, B. Cole, J. Guo, D. Hu, E. W. Hughes, S. Mohapatra, N. Nikiforou, J. A. Parsons, V. Perez Reale, M. I. Scherzer, E. N. Thompson, F. Tian, P. M. Tuts, D. Urbaniec, E. Wulf & L. Zhou

Niels Bohr Institute, University of Copenhagen, Copenhagen, Denmark

A. Alonso, M. Dam, G. Galster, J. B. Hansen, J. D. Hansen, P. H. Hansen, M. D. Joergensen, A. E. Loevschall-Jensen, J. Monk, L. E. Pedersen, T. C. Petersen, A. Pingel, M. Simonyan, L. A. Thomsen, C. Wiglesworth & S. Xella

INFN Gruppo Collegato di Cosenza, Laboratori Nazionali di Frascati, Frascati, Italy; Dipartimento di Fisica, Università della Calabria, Rende, Italy

M. Capua, G. Crosetti, L. La Rotonda, A. Mastroberardino, A. Policicchio, D. Salvatore, V. Scarfone, M. Schioppa, G. Susinno & E. Tassi

Faculty of Physics and Applied Computer Science, AGH University of Science and Technology, Kraków, Poland; Marian Smoluchowski Institute of Physics, Jagiellonian University, Kraków, Poland

L. Adamczyk, T. Bold, W. Dabrowski, M. Dwuznik, M. Dyndal, I. Grabowska-Bold, D. Kisielewska, S. Koperny, T. Z. Kowalski, B. Mindur, M. Palka, M. Przybycien & A. Zemla

The Henryk Niewodniczanski Institute of Nuclear Physics, Polish Academy of Sciences, Kraków, Poland

E. Banas, P. A. Bruckman de Renstrom, J. J. Chwastowski, D. Derendarz, E. Gornicki, Z. Hajduk, W. Iwanski, A. Kaczmarska, K. Korcyl, Pa. Malecki, A. Olszewski, J. Olszowska, E. Stanecka, R. Staszewski, M. Trzebinski, A. Trzupek, M. W. Wolter, B. K. Wosiek, K. W. Wozniak & B. Zabinski

Physics Department, Southern Methodist University, Dallas, TX, USA

T. Cao, A. Firan, J. Hoffman, S. Kama, R. Kehoe, A. S. Randle-Conde, S. J. Sekula, R. Stroynowski, H. Wang & J. Ye

Physics Department, University of Texas at Dallas, Richardson, TX, USA

J. M. Izen, M. Leyton, X. Lou, H. Namasivayam & K. Reeves

DESY, Hamburg and Zeuthen, Germany

S. Argyropoulos, N. Asbah, M. F. Bessner, I. Bloch, S. Borroni, S. Camarda, J. A. Dassoulas, C. Deterre, J. Dietrich, M. Filipuzzi, C. Friedrich, A. Glazov, L. S. Gomez Fajardo, K.-J. Grahn, I. M. Gregor, A. Grohsjean, M. Haleem, P. G. Hamnett, C. Hengler, K. H. Hiller, J. Howarth, Y. Huang, M. Jimenez Belenguer, J. Katzy, J. S. Keller, N. Kondrashova, T. Kuhl, M. Lisovyi, E. Lobodzinska, K. Lohwasser, M. Medinnis, K. Mönig, T. Naumann, R. Peschke, E. Petit, V. Radescu, I. Rubinskiy, R. Schaefer, G. Sedov, S. Shushkevich, D. South, M. Stanescu-Bellu, M. M. Stanitzki, P. Starovoitov, N. A. Styles, K. Tackmann, P. Vankov, J. Wang, C. Wasicki, M. A. Wildt, E. Yatsenko & E. Yildirim

Institut für Experimentelle Physik IV, Technische Universität Dortmund, Dortmund, Germany

I. Burmeister, H. Esch, C. Gössling, J. Jentzsch, C. A. Jung, R. Klingenberg & T. Wittig

Institut für Kern- und Teilchenphysik, Technische Universität Dresden, Dresden, Germany

P. Anger, F. Friedrich, J. P. Grohs, C. Gumpert, M. Kobel, K. Leonhardt, W. F. Mader, M. Morgenstern, O. Novgorodova, C. Rudolph, U. Schnoor, F. Siegert, F. Socher, S. Staerz, A. Straessner, A. Vest & S. Wahrmund

Department of Physics, Duke University, Durham, NC, USA

A. T. H. Arce, D. P. Benjamin, A. Bocci, B. Cerio, E. Kajomovitz, A. Kotwal, M. C. Kruse, L. Li, S. Li, M. Liu, S. H. Oh, C. S. Pollard & C. Wang

SUPA-School of Physics and Astronomy, University of Edinburgh, Edinburgh, UK

W. Bhimji, T. M. Bristow, P. J. Clark, F. A. Dias, N. C. Edwards, F. M. Garay Walls, P. C. F. Glaysher, R. D. Harrington, C. Leonidopoulos, V. J. Martin, C. Mills, B. J. O’Brien, S. A. Olivares Pino, M. Proissl, K. E. Selbach, B. H. Smart, A. Washbrook & B. M. Wynne

INFN Laboratori Nazionali di Frascati, Frascati, Italy

A. Annovi, M. Antonelli, H. Bilokon, V. Chiarella, M. Curatolo, R. Di Nardo, B. Esposito, C. Gatti, P. Laurelli, G. Maccarrone, K. Prokofiev, A. Sansoni, M. Testa & E. Vilucchi

Fakultät für Mathematik und Physik, Albert-Ludwigs-Universität, Freiburg, Germany

S. Amoroso, H. Arnold, C. Betancourt, M. Boehler, R. Bruneliere, F. Buehrer, D. Büscher, E. Coniavitis, V. Consorti, V. Dao, A. Di Simone, M. Fehling-Kaschek, M. Flechl, C. Giuliani, G. Herten, K. Jakobs, T. Javůrek, P. Jenni, F. Kiss, K. Köneke, A. K. Kopp, S. Kuehn, S. Lai, U. Landgraf, R. Madar, K. Mahboubi, W. Mohr, M. Pagáčová, U. Parzefall, T. C. Rave, M. Ronzani, F. Rühr, Z. Rurikova, N. Ruthmann, C. Schillo, E. Schmidt, M. Schumacher, P. Sommer, J. E. Sundermann, K. K. Temming, V. Tsiskaridze, F. C. Ungaro, H. von Radziewski, T. Vu Anh, M. Warsinsky, C. Weiser, M. Werner & S. Zimmermann

Section de Physique, Université de Genève, Geneva, Switzerland