Abstract

The large rate of multiple simultaneous proton–proton interactions, or pile-up, generated by the Large Hadron Collider in Run 1 required the development of many new techniques to mitigate the adverse effects of these conditions. This paper describes the methods employed in the ATLAS experiment to correct for the impact of pile-up on jet energy and jet shapes, and for the presence of spurious additional jets, with a primary focus on the large 20.3 \(\mathrm{fb}^{-1}\) data sample collected at a centre-of-mass energy of \(\sqrt{s} = 8~\mathrm {TeV} \). The energy correction techniques that incorporate sophisticated estimates of the average pile-up energy density and tracking information are presented. Jet-to-vertex association techniques are discussed and projections of performance for the future are considered. Lastly, the extension of these techniques to mitigate the effect of pile-up on jet shapes using subtraction and grooming procedures is presented.

1 Introduction

The success of the proton–proton (\(pp\)) operation of the Large Hadron Collider (LHC) at \(\sqrt{s} = 8~\mathrm {TeV} \) led to instantaneous luminosities of up to \(7.7\times 10^{33}\) cm\(^{-2}\) s\(^{-1}\) at the beginning of a fill. Consequently, multiple \(pp\) interactions occur within each bunch crossing. Averaged over the full data sample, the mean number of such simultaneous interactions (pile-up) is approximately 21. These additional collisions are uncorrelated with the hard-scattering process that typically triggers the event and can be approximated as contributing a background of soft energy depositions that have particularly adverse and complex effects on jet reconstruction. Hadronic jets are observed as groups of topologically related energy deposits in the ATLAS calorimeters, and therefore pile-up affects the measured jet energy and jet structure observables. Pile-up interactions can also directly generate additional jets. The production of such pile-up jets can occur from additional \(2\rightarrow 2\) interactions that are independent of the hard-scattering and from contributions due to soft energy deposits that would not otherwise exceed the threshold to be considered a jet. An understanding of all of these effects is therefore critical for precision measurements as well as searches for new physics.

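The expected number of pile-up interactions (\(\mu \)) in each bunch crossing is related to the instantaneous luminosity (\(\mathcal L _0\)) by the following relationship:

\[ \mu = \frac{\mathcal L _0 \times \sigma _{\mathrm {inelastic}}}{n_\mathrm{c} \times f_\mathrm {rev}}, \qquad (1) \]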

where \(n_\mathrm{c}\) is the number of colliding bunch pairs in the LHC, \(f_\mathrm {rev} = 11.245\) kHz is the revolution frequency [1], and \(\sigma _{\mathrm {inelastic}}\) is the \(pp\) inelastic cross section. When the instantaneous luminosity is measured by integrating over many bunch crossings, Eq. (1) yields the average number of interactions per crossing, or \(\langle \mu \rangle \). The so-called in-time pile-up due to additional \(pp\) collisions within a single bunch crossing can also be accompanied by out-of-time pile-up due to signals from collisions in other bunch crossings. This occurs when the detector and/or electronics integration time is significantly larger than the time between crossings, as is the case for the liquid-argon (LAr) calorimeters in the ATLAS detector. The measured detector response as a function of \(\langle \mu \rangle \) in such cases is sensitive to the level of out-of-time pile-up. The distributions of \(\langle \mu \rangle \) for both the \(\sqrt{s} = 7~\mathrm {TeV} \) and \(\sqrt{s} = 8~\mathrm {TeV} \) runs (collectively referred to as Run 1) are shown in Fig. 1. The spacing between successive proton bunches was 50 ns for the majority of data collected during Run 1. This bunch spacing is decreased to 25 ns for LHC Run 2. Out-of-time pile-up contributions are likely to increase with this change. However, the LAr calorimeter readout electronics are also designed to provide an optimal detector response for a 25 ns bunch spacing scenario, and thus the relative impact of the change to 25 ns may be mitigated, particularly in the case of the calorimeter response (see Sect. 2).
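As a worked example of Eq. (1), \(\mu = \mathcal L _0 \sigma _{\mathrm {inelastic}}/(n_\mathrm{c} f_\mathrm {rev})\), the following Python sketch converts an instantaneous luminosity into an expected number of interactions per crossing. The peak luminosity and \(f_\mathrm {rev}\) are the values quoted above. The number of colliding bunch pairs (1380) and the inelastic cross section (73 mb) are illustrative assumptions chosen for this example, not values taken from this paper.

```python
F_REV_HZ = 11.245e3  # LHC revolution frequency f_rev [Hz], as quoted in the text
MB_TO_CM2 = 1e-27    # 1 mb = 1e-27 cm^2


def expected_pileup(lumi_cm2_s, sigma_inel_mb, n_colliding):
    """Eq. (1): mu = L0 * sigma_inelastic / (n_c * f_rev)."""
    sigma_cm2 = sigma_inel_mb * MB_TO_CM2
    return lumi_cm2_s * sigma_cm2 / (n_colliding * F_REV_HZ)


# Peak 2012 luminosity from the text; n_c and sigma_inelastic are assumed inputs.
mu_peak = expected_pileup(7.7e33, sigma_inel_mb=73.0, n_colliding=1380)
print(f"mu at the start of a fill ~ {mu_peak:.1f}")
```

Because \(\mu \) is linear in \(\mathcal L _0\), halving the luminosity at fixed \(n_\mathrm{c}\) halves the expected pile-up.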

The different responses of the individual ATLAS subdetector systems to pile-up influence the methods used to mitigate its effects. The sensitivity of the calorimeter energy measurements to multiple bunch crossings, particularly in the LAr EM calorimeter, necessitates correction techniques that account for both in-time and out-of-time pile-up. These techniques use the average energy density deposited by pile-up as well as track-based quantities from the inner tracking detector (ID), such as the number of reconstructed primary vertices (\(N_{\mathrm{PV}}\)) in an event. Owing to the fast response of the silicon tracking detectors, \(N_{\mathrm{PV}}\) is, to a very good approximation, unaffected by out-of-time pile-up.

Resolving individual vertices using the ATLAS ID is a critical task in accurately determining the origin of charged-particle tracks that point to energy deposits in the calorimeter. By identifying tracks that originate from the hard-scatter primary vertex, jets that contain significant contamination from pile-up interactions can be rejected. These approaches provide tools for reducing or even obviating the effects of pile-up on the measurements from individual subdetector systems used in various stages of the jet reconstruction. The result is a robust, stable jet definition, even at very high luminosities.

The first part of this paper describes the implementation of methods to partially suppress the impact of signals from pile-up interactions on jet reconstruction and to directly estimate event-by-event pile-up activity and jet-by-jet pile-up sensitivity, originally proposed in Ref. [2]. These estimates allow for a sophisticated pile-up subtraction technique in which the four-momentum of the jet and the jet shape are corrected event-by-event for fluctuations due to pile-up, and whereby jet-by-jet variations in pile-up sensitivity are automatically accommodated. The performance of these new pile-up correction methods is assessed and compared to previous pile-up corrections based on the number of reconstructed primary vertices and the instantaneous luminosity [3, 4]. Since the pile-up subtraction is the first step of the jet energy scale (JES) correction in ATLAS, these techniques play a crucial role in establishing the overall systematic uncertainty of the jet energy scale. Nearly all ATLAS measurements and searches for physics beyond the Standard Model published since the end of the 2012 data-taking period utilise these methods, including the majority of the final Run 1 Higgs cross section and coupling measurements [5–9].

The second part of this paper describes the use of tracks to assign jets to the hard-scatter interaction. By matching tracks to jets, one obtains a measure of the fraction of the jet energy associated with a particular primary vertex. Several track-based methods allow the rejection of spurious calorimeter jets resulting from local fluctuations in pile-up activity, as well as real jets originating from single pile-up interactions, resulting in improved stability of the reconstructed jet multiplicity against pile-up. Track-based methods to reject pile-up jets are applied after the full chain of JES corrections, as pile-up jet tagging algorithms.

The discussion of these approaches proceeds as follows. The ATLAS detector is described in Sect. 2 and the data and Monte Carlo simulation samples are described in Sect. 3. Section 4 describes how the inputs to jet reconstruction are optimised to reduce the effects of pile-up on jet constituents. Methods for subtracting pile-up from jets, primarily focusing on the impacts on calorimeter-based measurements of jet kinematics and jet shapes, are discussed in Sect. 5. Approaches to suppressing the effects of pile-up using both the subtraction techniques and charged-particle tracking information are then presented in Sect. 6. Lastly, techniques that aim to correct jets by actively removing specific energy deposits that are due to pile-up are discussed in Sect. 7.

2 The ATLAS detector

The ATLAS detector [10, 11] provides nearly full solid angle coverage around the collision point with an inner tracking system covering the pseudorapidity range \(|\eta |<2.5\), electromagnetic and hadronic calorimeters covering \(|\eta |<4.9\), and a muon spectrometer covering \(|\eta |<2.7\).

The ID comprises a silicon pixel tracker closest to the beamline, a microstrip silicon tracker, and a straw-tube transition radiation tracker at radii up to 108 cm. These detectors are layered radially around each other in the central region. A thin superconducting solenoid surrounding the tracker provides an axial 2 T field enabling the measurement of charged-particle momenta. The overall ID acceptance spans the full azimuthal range in \(\phi \) for particles originating near the nominal LHC interaction region [12–14]. Due to the fast readout design of the silicon pixel and microstrip trackers, the track reconstruction is only affected by in-time pile-up. The efficiency to reconstruct charged hadrons ranges from 78 % at \(p_{\text {T}} ^{\mathrm {track}}=500\) MeV to more than 85 % above \(10~\mathrm {GeV}\), with a transverse impact parameter (\(d_0\)) resolution of 10 \(\upmu \)m for high-momentum particles in the central region. For jets with \(p_{\text {T}}\) above approximately \(500~\mathrm {GeV}\), the reconstruction efficiency for tracks in the core of the jet starts to degrade because these tracks share many clusters in the pixel tracker, creating ambiguities when matching the clusters with track candidates, and leading to lost tracks.

The high-granularity EM and hadronic calorimeters are composed of multiple subdetectors spanning \(|\eta |\le 4.9\). The EM barrel calorimeter uses a LAr active medium and lead absorbers. In the region \(|\eta | < 1.7\), the hadronic (Tile) calorimeter is constructed from steel absorber and scintillator tiles and is separated into barrel (\(|\eta |<1.0\)) and extended barrel (\(0.8<|\eta |<1.7\)) sections. The calorimeter end-cap (\(1.375<|\eta |<3.2\)) and forward (\(3.1<|\eta |<4.9\)) regions are instrumented with LAr calorimeters for EM and hadronic energy measurements. The response of the calorimeters to single charged hadrons, defined as the ratio \(E/p\) of the reconstructed energy \(E\) to the charged-hadron momentum \(p\), ranges from 20 % to 80 % for momenta between 1 and 30 GeV and is well described by Monte Carlo (MC) simulation [15]. In contrast to the pixel and microstrip tracking detectors, the LAr calorimeter readout is sensitive to signals from the preceding 12 bunch crossings during 50 ns bunch spacing operation [16, 17]. For the 25 ns bunch spacing scenario expected during Run 2 of the LHC, this increases to 24 bunch crossings. The LAr calorimeter uses bipolar signal shaping, with a positive and a negative lobe, which ensures that the signal induced by pile-up averages to zero for the nominal 25 ns bunch spacing. Consequently, although the LAr detector will be exposed to more out-of-time pile-up in Run 2, the signal shaping of the front-end electronics is optimised for this shorter spacing [16, 18] and is expected to cope well with the change. The fast readout of the Tile calorimeter, in contrast, makes it relatively insensitive to out-of-time pile-up [19].

The LAr barrel has three EM layers longitudinal in shower depth (EM1, EM2, EM3), whereas the LAr end-cap has three EM layers (EMEC1, EMEC2, EMEC3) in the range \(1.5<|\eta |<2.5\), two layers in the range \(2.5<|\eta |<3.2\), and four hadronic layers (HEC1, HEC2, HEC3, HEC4). In addition, there is a pre-sampler layer (PS) in front of the LAr barrel and end-cap EM calorimeters. The transverse segmentation of both the EM and hadronic LAr end-caps is coarser in the region \(2.5<|\eta |<3.2\) than in the barrel layers. The forward LAr calorimeter has one EM layer (FCal1) and two hadronic layers (FCal2, FCal3), with transverse segmentation similar to that of the more forward HEC region. The Tile calorimeter has three layers longitudinal in shower depth (Tile1, Tile2, Tile3), as well as scintillators in the gap region \(0.85<|\eta |<1.51\) between the barrel and extended barrel sections.
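The mechanism behind the bipolar shaping can be illustrated with a toy model. The signal at a given sampling time is the superposition of pulses from earlier bunch crossings; if every crossing deposits the same average energy, the out-of-time contribution is that energy times the sum of the pulse samples that line up with filled crossings. The Python sketch below uses a hypothetical eight-sample pulse whose 25 ns samples sum to exactly zero. It is not the real LAr pulse shape, and the non-zero residual it shows for 50 ns spacing is a property of this toy pulse only.

```python
def steady_state_pileup_signal(pulse, deposit_per_crossing, crossings_per_sample):
    """Toy out-of-time pile-up pedestal for a shaped calorimeter signal.

    pulse: pulse shape sampled every 25 ns (hypothetical values).
    A constant deposit arrives in every filled bunch crossing. With a bunch
    spacing of crossings_per_sample * 25 ns, only every n-th pulse sample
    overlaps with the current sampling time.
    """
    return deposit_per_crossing * sum(pulse[::crossings_per_sample])


# Hypothetical bipolar pulse: positive lobe followed by a negative tail,
# normalised so that its 25 ns samples sum to exactly zero.
toy_pulse = [1.0, 0.5] + [-0.25] * 6

print(steady_state_pileup_signal(toy_pulse, 1.0, crossings_per_sample=1))  # 25 ns spacing
print(steady_state_pileup_signal(toy_pulse, 1.0, crossings_per_sample=2))  # 50 ns spacing
```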

3 Data and Monte Carlo samples

This section provides a description of the data selection and definitions of objects used in the analysis (Sect. 3.1) as well as of the simulated event samples to which the data are compared (Sect. 3.2).

3.1 Object definitions and event selection

The full 2012 \(pp\) data-taking period at a centre-of-mass energy of \(\sqrt{s} = 8~\mathrm {TeV} \) is used for the measurements presented here. Events are required to meet baseline quality criteria during stable LHC running periods. The ATLAS data quality (DQ) criteria reject data with significant contamination from detector noise or read-out problems [20], based on individual assessments for each subdetector. These criteria are established separately for the barrel, end-cap and forward regions, and they differ depending on the trigger conditions and on the reconstruction of each type of physics object (for example jets, electrons and muons). The resulting dataset corresponds to an integrated luminosity of \(20.3 \pm 0.6\) \(\mathrm{fb}^{-1}\), determined following the methodology described in Ref. [21].

To reject non-collision backgrounds [22], events are required to contain at least one primary vertex consistent with the LHC beam spot, reconstructed from at least two tracks each with \(p_{\text {T}} ^{\mathrm {track}}>400\) MeV. The primary hard-scatter vertex is defined as the vertex with the highest \(\sum (p_{\text {T}} ^{\mathrm {track}})^2\). To reject rare events contaminated by spurious signals in the detector, all anti-\(k_{t}\) [23, 24] jets with radius parameter \(R=0.4\) and \(p_{\text {T}} ^\mathrm {jet} >20 \mathrm {GeV}\) (see below) are required to satisfy the jet quality requirements that are discussed in detail in Ref. [22] (and therein referred to as the “looser” selection). These criteria are designed to reject non-collision backgrounds and significant transient noise in the calorimeters while maintaining an efficiency for good-quality events greater than 99.8 % with as high a rejection of contaminated events as possible. In particular, this selection is very efficient in rejecting events that contain fake jets due to calorimeter noise.
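The vertex requirements above can be sketched in Python as follows. The `Vertex` container and the function name are hypothetical; track \(p_{\text {T}}\) values are in GeV, and only tracks above the 400 MeV threshold are counted and summed, which is an assumption about how the two requirements combine.

```python
from dataclasses import dataclass


@dataclass
class Vertex:
    """Hypothetical container: a reconstructed vertex and its track pT values [GeV]."""
    track_pts: list


def hard_scatter_vertex(vertices, min_tracks=2, min_track_pt=0.4):
    """Return the vertex with the largest sum of squared track pT, or None.

    Only vertices with at least min_tracks tracks above min_track_pt
    (400 MeV) are considered; an event without any fails the selection.
    """
    candidates = []
    for vertex in vertices:
        pts = [pt for pt in vertex.track_pts if pt > min_track_pt]
        if len(pts) >= min_tracks:
            candidates.append((sum(pt * pt for pt in pts), vertex))
    if not candidates:
        return None
    return max(candidates, key=lambda c: c[0])[1]


# Example: a soft pile-up vertex, a hard-scatter vertex and a single-track vertex.
pile_up = Vertex([0.5, 0.6, 0.7])
hard = Vertex([25.0, 3.0])
single = Vertex([40.0])  # one track only: not a valid primary vertex
chosen = hard_scatter_vertex([pile_up, hard, single])
```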

Hadronic jets are reconstructed from calibrated three-dimensional topo-clusters [25]. Clusters are constructed from calorimeter cells that are grouped together using a topological clustering algorithm. These objects provide a three-dimensional representation of energy depositions in the calorimeter and implement a nearest-neighbour noise suppression algorithm. The resulting topo-clusters are classified as either electromagnetic or hadronic based on their shape, depth and energy density. Energy corrections are then applied to the clusters in order to calibrate them to the appropriate energy scale for their classification. These corrections are collectively referred to as local cluster weighting, or LCW, and jets that are calibrated using this procedure are referred to as LCW jets [4].

Jets can also be built from charged-particle tracks (track-jets) using the same anti-\(k_{t}\) algorithm as for jets built from calorimeter clusters. Tracks used to construct track-jets must satisfy minimal quality criteria and are required to be associated with the hard-scatter vertex.

The jets used for the analyses presented here are primarily found and reconstructed using the anti-\(k_{t}\) algorithm with radius parameters \(R = 0.4, 0.6\) and 1.0. In some cases, studies of groomed jets are also performed, for which algorithms are used to selectively remove constituents from a jet. Groomed jets are often used in searches involving highly Lorentz-boosted massive objects such as W / Z bosons [26] or top quarks [27]. Unless noted otherwise, the jet trimming algorithm [28] is used for groomed jet studies in this paper. The procedure implements a \(k_{t}\) algorithm [29, 30] to create small subjets with a radius \(R_\mathrm{sub} =0.3\). The ratio of the \(p_{\text {T}}\) of these subjets to that of the jet is used to remove constituents from the jet. Any subjets with \(p_{\mathrm{T}i}/p_{\text {T}} ^\mathrm {jet} < f_\mathrm{cut} \) are removed, where \(p_{\mathrm{T}i}\) is the transverse momentum of the ith subjet, and \(f_\mathrm{cut} =0.05\) is determined to be an optimal setting for improving mass resolution, mitigating the effects of pile-up, and retaining substructure information [31]. The remaining constituents form the trimmed jet.
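The trimming criterion can be sketched in Python once the \(R_\mathrm{sub} =0.3\) \(k_{t}\) subjets are available. The sketch assumes the subjets have already been built (the \(k_{t}\) reclustering itself is not shown) and represents each as a four-vector (px, py, pz, E) in GeV; the helper names are hypothetical. Note that the \(p_{\text {T}}\) fraction is computed relative to the original, untrimmed jet.

```python
import math


def pt(p):
    """Transverse momentum of a four-vector (px, py, pz, E)."""
    return math.hypot(p[0], p[1])


def four_sum(vectors):
    """Component-wise sum of four-vectors."""
    return tuple(sum(c) for c in zip(*vectors)) if vectors else (0.0, 0.0, 0.0, 0.0)


def mass(p):
    """Invariant mass, clipped at zero against rounding."""
    m2 = p[3] ** 2 - p[0] ** 2 - p[1] ** 2 - p[2] ** 2
    return math.sqrt(max(m2, 0.0))


def trim(subjets, f_cut=0.05):
    """Drop subjets with pT_i / pT_jet < f_cut; return (trimmed jet, kept subjets)."""
    jet_pt = pt(four_sum(subjets))
    if jet_pt == 0.0:
        return four_sum([]), []
    kept = [s for s in subjets if pt(s) / jet_pt >= f_cut]
    return four_sum(kept), kept


# Toy example (GeV): two hard subjets and one soft subjet from pile-up.
hard1 = (300.0, 0.0, 0.0, 300.0)
hard2 = (100.0, 20.0, 0.0, math.hypot(100.0, 20.0))
soft = (5.0, 0.0, 3.0, math.hypot(5.0, 3.0))  # carries ~1 % of the jet pT
trimmed, kept = trim([hard1, hard2, soft])
```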

The energy of the reconstructed jet may be further corrected using subtraction techniques and multiplicative jet energy scale correction factors that are derived from MC simulation and validated with the data [3, 4]. As discussed extensively in Sect. 5, subtraction procedures are critical to mitigating the jet energy scale dependence on pile-up. Specific jet energy scale correction factors are then applied after the subtraction is performed. The same corrections are applied to calorimeter jets in MC simulation and data to ensure consistency when direct comparisons are made between them.

Comparisons are also made to jets built from particles in the MC generator’s event record (“truth particles”). In such cases, the inputs to jet reconstruction are stable particles with a lifetime of at least 10 ps (excluding muons and neutrinos). Such jets are referred to as generator-level jets or truth-particle jets and are to be distinguished from parton-level jets. Truth-particle jets represent the measurement for a hermetic detector with perfect resolution and scale, without pile-up, but including the underlying event.

Trigger decisions in ATLAS are made in three stages: Level-1, Level-2 and the Event Filter. The Level-1 trigger is implemented in hardware and uses a subset of detector information to reduce the event rate to a design value of at most 75 kHz. This is followed by two software-based triggers, Level-2 and the Event Filter, which together reduce the event rate to a few hundred Hz. The measurements presented in this paper primarily use single-jet triggers. The rate of events in which the highest transverse momentum jet is less than about \(400~\mathrm {GeV}\) is too high to record more than a small fraction of them. The triggers for such events are therefore pre-scaled to reduce the rates to an acceptable level in an unbiased manner. Where necessary, analyses compensate for the pre-scales by using weighted events based upon the pre-scale setting that was active at the time of the collision.
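A trigger with pre-scale \(N\) keeps on average one event in \(N\), so each recorded event stands for \(N\) produced events. The Python sketch below fills a histogram with such weights. The (observable, pre-scale) input format and the function name are hypothetical, and the sketch assumes each event is taken from a single trigger; combining several pre-scaled triggers requires more care.

```python
import bisect


def fill_prescale_weighted(events, bin_edges):
    """Histogram events, weighting each by the pre-scale active when it was recorded.

    events: iterable of (observable, prescale) pairs.
    bin_edges: increasing bin edges; values outside [first, last) are dropped.
    """
    counts = [0.0] * (len(bin_edges) - 1)
    for value, prescale in events:
        i = bisect.bisect_right(bin_edges, value) - 1
        if 0 <= i < len(counts):
            counts[i] += prescale
    return counts


# Example: leading-jet pT [GeV] with the pre-scale of the trigger that fired.
hist = fill_prescale_weighted(
    [(150.0, 100), (250.0, 10), (450.0, 1), (50.0, 5)],
    [100, 200, 300, 400, 500],
)
```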

3.2 Monte Carlo simulation

Two primary MC event generator programs are used for comparison to the data. PYTHIA 8.160 [32] with the ATLAS A2 tunable parameter set (tune) [33] and the CT10 NLO parton distribution function (PDF) set [34] is used for the majority of comparisons. Comparisons are also made to the HERWIG++ 2.5.2 [35] program using the CTEQ6L1 [36] PDF set along with the UE7-2 tune [37], which is tuned to reproduce underlying-event data from the LHC experiments. MC events are passed through the full GEANT4 [38] detector simulation of ATLAS [39] after the simulation of the parton shower and hadronisation processes. Identical reconstruction and trigger, event, quality, jet and track selection criteria are then applied to both the MC simulation and to the data.

In some cases, additional processes are used for comparison to data. The \(Z\) boson samples used for the validation studies are produced with the POWHEG-BOX v1.0 generator [40–42] and the SHERPA 1.4.0 [43] generator, both of which provide NLO matrix elements for inclusive \(Z\) boson production. The CT10 NLO PDF set is also used in the matrix-element calculation for these samples. The modelling of the parton shower, multi-parton interactions and hadronisation for events generated using POWHEG-BOX is provided by PYTHIA 8.163 with the AU2 underlying-event tune [33] and the CT10 NLO PDF set. These MC samples are thus referred to as POWHEG+PYTHIA 8 samples. PYTHIA is in turn interfaced with PHOTOS [44] for the modelling of QED final-state radiation.

Pile-up is simulated for all samples by overlaying additional soft \(pp\) collisions which are also generated with PYTHIA 8.160 using the ATLAS A2 tune and the MSTW2008LO PDF set [45]. These additional events are overlaid onto the hard-scattering events according to the measured distribution of the average number \(\langle \mu \rangle \) of \(pp\) interactions per bunch crossing from the luminosity detectors in ATLAS [21, 46] using the full 8 TeV data sample, as shown in Fig. 1. The proton bunches were organised in four trains of 36 bunches with a 50 ns spacing between the bunches. Therefore, the simulation also contains effects from out-of-time pile-up. The effect of this pile-up history for a given detector system is then determined by the size of the readout time window for the relevant electronics. As an example, for the central LAr calorimeter barrel region, which is sensitive to signals from the preceding 12 bunch crossings during 50 ns bunch spacing operation, the digitization window is set to 751 ns before and 101 ns after the simulated hard-scattering interaction.
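The overlay bookkeeping can be sketched in Python. For each bunch crossing inside the digitisation window quoted above (751 ns before to 101 ns after the hard scatter, 50 ns spacing), the sketch draws a number of minimum-bias interactions. Drawing this number from a Poisson distribution with mean \(\langle \mu \rangle \), and treating every crossing in the window as filled (ignoring the gaps between bunch trains), are assumptions of this sketch rather than details given in the text; the function names are hypothetical.

```python
import math
import random


def poisson(rng, mean):
    """Knuth's method for a Poisson-distributed integer (adequate for mean ~ 20-40)."""
    limit = math.exp(-mean)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def pileup_overlay(mean_mu, spacing_ns=50, before_ns=751, after_ns=101, seed=1):
    """Number of overlaid interactions per crossing in the digitisation window.

    Returns {crossing time offset [ns]: n_interactions}; t = 0 is the crossing
    of the hard scatter (in-time pile-up), all others are out-of-time.
    """
    rng = random.Random(seed)
    first = -(before_ns // spacing_ns) * spacing_ns
    last = (after_ns // spacing_ns) * spacing_ns
    return {t: poisson(rng, mean_mu) for t in range(first, last + 1, spacing_ns)}


overlay = pileup_overlay(21.0)  # 18 crossings, from -750 ns to +100 ns
```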

4 Topological clustering and cluster-level pile-up suppression

The first step for pile-up mitigation in ATLAS is at the level of the constituents used to reconstruct jets. The topological clustering algorithm incorporates a built-in pile-up suppression scheme to limit the formation of clusters produced by pile-up depositions as well as to limit the growth of clusters around highly energetic cells from hard-scatter signals. The key concept that allows this suppression is the treatment of pile-up as noise, and the use of cell energy thresholds based on their energy significance relative to the total noise.

Topological clusters are built using a three-dimensional nearest-neighbour algorithm. A cluster is seeded by a calorimeter cell with energy significance \(|E_\mathrm{cell}|/\sigma ^\mathrm{noise}>4\); it then grows iteratively by adding all neighbouring cells with \(|E_\mathrm{cell}|/\sigma ^\mathrm{noise}>2\); finally, once no further nearest neighbours at the boundary exceed the \(2\sigma \) threshold, one additional layer of cells with \(|E_\mathrm{cell}|/\sigma ^\mathrm{noise}>0\) is added (this last step does not extend to next-to-nearest neighbours). The total cell noise, \(\sigma ^\mathrm{noise}\), is the sum in quadrature of the cell noise due to the readout electronics and the cell noise due to pile-up (\(\sigma _\mathrm{pile-up}^\mathrm{noise}\)). The pile-up noise for a given cell is evaluated from Monte Carlo simulation and is defined to be the RMS of the energy distribution resulting from pile-up particles for a given number of \(pp\) collisions per bunch crossing (determined by \(\langle \mu \rangle \)) and a given bunch spacing \(\Delta t\). It is technically possible to adjust the pile-up noise for specific data-taking periods depending on \(\langle \mu \rangle \), but it was kept fixed for the entire Run 1 \(8 \mathrm {TeV}\) dataset.

By adjusting the pile-up noise value, topological clustering partially suppresses the formation of clusters created by pile-up fluctuations, and it reduces the number of cells included in jets. Raising the pile-up noise value effectively increases the threshold for cluster formation and growth, significantly reducing the effects of pile-up on the input signals to jet reconstruction.

Fig. 2 (a) Per-cell electronic noise (\(\langle \mu \rangle =0\)) and (b) total noise per cell at high luminosity, corresponding to \(\langle \mu \rangle =30\) interactions per bunch crossing with a bunch spacing of \(\Delta t = 50\) ns, in MeV, for each calorimeter layer. The different colours indicate the noise in the pre-sampler (PS), up to three layers of the LAr calorimeter (EM), up to three layers of the Tile calorimeter (Tile), the four layers of the hadronic end-cap calorimeter (HEC), and the three layers of the forward calorimeter (FCal). The total noise, \(\sigma ^\mathrm{noise}\), is the sum in quadrature of the electronic noise and the expected RMS of the pile-up energy distribution in a single cell.

Figure 2 shows the electronic and pile-up noise contributions to cells that are used to define the thresholds for the topological clustering algorithm. In events with an average of 30 additional pile-up interactions (\(\langle \mu \rangle =30\)), the noise from pile-up depositions is approximately twice as large as the electronic noise for cells in the central electromagnetic calorimeter, and it reaches \(10~\mathrm {GeV}\) in FCal1 and FCal2. In the forward region, this high threshold combines with the coarser segmentation of the forward calorimeter: fewer cells mean a smaller probability that any cell in a given event fluctuates beyond \(4\sigma \), and hence a reduced topo-cluster occupancy. The implications of this behaviour for the pile-up \(p_{\text {T}}\) density estimation are discussed in Sect. 5.1.

The value of \(\langle \mu \rangle \) at which \(\sigma _\mathrm{pile-up}^\mathrm{noise}\) is evaluated for a given data-taking period is chosen to be high enough that the number of clusters does not grow too large due to pile-up, and at the same time low enough to retain as much signal as possible. For a Gaussian noise distribution, the \(4\sigma \) seed threshold leads to an increase in the number of clusters by a factor of 5 if the noise is underestimated by 10 %. Therefore \(\sigma _\mathrm{pile-up}^\mathrm{noise}\) was set to the pile-up noise corresponding to the largest expected \(\langle \mu \rangle \) rather than to the average or the lowest expected value. For 2012 (2011) pile-up conditions, \(\sigma _\mathrm{pile-up}^\mathrm{noise}\) was set to the value corresponding to \(\langle \mu \rangle =30\) (\(\langle \mu \rangle =8\)).
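The factor of 5 can be checked with a short Gaussian-tail calculation. The Python sketch below reads "underestimated by 10 %" as an assumed noise equal to 0.9 times the true noise, so that the nominal \(4\sigma \) seed cut corresponds to \(3.6\sigma \) in units of the true noise. It only counts noise-only seed cells in independent Gaussian cells, which is a simplification of the full cluster count.

```python
import math


def gaussian_tail(x):
    """Two-sided probability |z| > x for a standard normal variable."""
    return math.erfc(x / math.sqrt(2.0))


def seed_rate_increase(threshold=4.0, assumed_over_true=0.9):
    """Growth in noise-only seed cells when the noise is underestimated.

    A cell seeds a cluster if |E| > threshold * sigma_assumed. With
    sigma_assumed = assumed_over_true * sigma_true, the effective threshold
    in units of the true noise becomes threshold * assumed_over_true.
    """
    return gaussian_tail(threshold * assumed_over_true) / gaussian_tail(threshold)


print(f"{seed_rate_increase():.2f}")  # close to 5 for a 10 % underestimate
```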

The local hadron calibration procedure for clusters depends on the value of \(\sigma ^\mathrm{noise}\) since this choice influences the cluster size and thus the shape variables used in the calibration. Therefore, the calibration constants are re-computed for each \(\sigma ^\mathrm{noise}\) configuration. For this reason, a single, fixed value of \(\sigma ^\mathrm{noise}\) is used for entire data set periods in order to maintain consistent conditions.

5 Pile-up subtraction techniques and results

The independence of the hard-scattering process from additional pile-up interactions in a given event results in positive or negative shifts to the reconstructed jet kinematics and to the jet shape. This motivates the use of subtraction procedures to remove these contributions from the jet. Early subtraction methods [3, 4] for mitigating the effects of pile-up on the jet transverse momentum in ATLAS relied on an average offset correction (\(\langle \mathcal {O} ^\mathrm{jet} \rangle \)),

\[ p_{\text {T}} ^\mathrm{corr} = p_{\text {T}} ^\mathrm{jet} - \langle \mathcal {O} ^\mathrm{jet} \rangle . \qquad (2) \]

In these early approaches, \(\langle \mathcal {O} ^\mathrm{jet} \rangle \) is determined from in-situ studies or MC simulation and represents an average offset applied to the jet \(p_{\text {T}}\). This offset is parametrised as a function of \(\eta \), \(N_{\mathrm{PV}}\) and \(\langle \mu \rangle \). Such methods do not fully capture the fluctuations of the pile-up energy added to the calorimeter on an event-by-event basis; that component is only indirectly estimated from its implicit dependence on \(N_{\mathrm{PV}}\). Moreover, no individual jet's information enters into this correction, and thus jet-by-jet fluctuations in the actual offset of that particular jet \(p_{\text {T}}\), \(\mathcal {O} ^\mathrm{jet}\), or in the jet shape, cannot be taken into account. Similar methods have also been pursued by the CMS collaboration [47], as well as much more complex approaches that attempt to mitigate the effects of pile-up prior to jet reconstruction [48, 49].

The approach adopted for the final Run 1 ATLAS jet energy scale [4] is to estimate \(\mathcal {O} ^\mathrm{jet}\) on an event-by-event basis. To accomplish this, a measure of the jet's susceptibility to soft energy depositions is needed in conjunction with a method to estimate the magnitude of the effect on a jet-by-jet and event-by-event basis. A natural approach is to define a jet area (\(A^{\mathrm {jet}}\)) [50] in \(\eta \)–\(\phi \) space along with a pile-up \(p_{\text {T}}\) density, \(\rho \). The offset can then be determined dynamically for each jet [2] using

\[ \mathcal {O} ^\mathrm{jet} = \rho \times A^{\mathrm {jet}} . \qquad (3) \]

Nearly all results published by ATLAS since 2012 have adopted this technique for correcting the jet kinematics for pile-up effects. The performance of this approach, as applied to both the jet kinematics and the jet shape, is discussed below.

5.1 Pile-up event \(p_{\text {T}}\) density \(\rho \)

One of the key parameters in the pile-up subtraction methods presented in this paper is the estimated pile-up \(p_{\text {T}}\) density characterised by the observable \(\rho \). The pile-up \(p_{\text {T}}\) density of an event can be estimated as the median of the \(p_{\text {T}}\) densities of the many \(k_{t}\) jets [29, 30] in the event, constructed with no minimum \(p_{\text {T}}\) threshold. Explicitly, this is defined as

\[ \rho = \mathrm{median}\left\{ \frac{p_{\mathrm {T}, i}^\mathrm {jet}}{A^{\mathrm {jet}} _{i}} \right\}, \qquad (4) \]

where each \(k_{t}\) jet i has transverse momentum \(p_{\mathrm {T}, i}^\mathrm {jet}\) and area \(A^{\mathrm {jet}} _{i}\), and it is defined with a nominal radius parameter \(R_{k_{t}} =0.4\). The chosen radius parameter value is the result of a dedicated optimisation study, balancing two competing effects: the sensitivity to biases from hard-jet contamination in the \(\rho \) calculation when \(R_{k_{t}} \) is large, and statistical fluctuations when \(R_{k_{t}} \) is small. The sensitivity to the chosen radius value is not large, but measurably worse performance was observed for radius parameters larger than 0.5 and smaller than 0.3.
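The median-based estimate \(\rho = \mathrm{median}\{p_{\mathrm {T}, i}^\mathrm {jet}/A^{\mathrm {jet}} _{i}\}\) and the resulting jet-by-jet offset \(\mathcal {O} ^\mathrm{jet} = \rho \times A^{\mathrm {jet}}\) can be sketched in a few lines of Python. The sketch assumes the \(k_{t}\) jets and their areas have already been computed, for example with a jet-clustering library; the input format and function names are hypothetical. The toy event shows why the median is used: a single hard jet barely moves \(\rho \), whereas it would dominate a mean.

```python
import statistics


def pileup_density(kt_jets):
    """rho = median of pT_i / A_i over the kt jets of one event.

    kt_jets: list of (pt [GeV], area) pairs, assumed to come from R = 0.4 kt
    jets built without a minimum pT from positive-energy topo-clusters
    with |eta| <= 2.0.
    """
    densities = [pt / area for pt, area in kt_jets if area > 0.0]
    return statistics.median(densities) if densities else 0.0


def pileup_offset(rho, jet_area):
    """Jet-by-jet offset O_jet = rho * A_jet, in the same units as rho (GeV)."""
    return rho * jet_area


# Toy event: four soft jets and one hard jet with density 120 GeV per unit area.
event = [(0.5, 0.5), (1.2, 0.6), (0.8, 0.4), (60.0, 0.5), (3.0, 0.5)]
rho = pileup_density(event)       # median of [1, 2, 2, 120, 6] -> 2.0
offset = pileup_offset(rho, 0.5)  # jet of area 0.5 -> 1.0 GeV
```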

The use of the \(k_{t}\) algorithm in Eq. (4) is motivated by its sensitivity to soft radiation, and for this reason no minimum \(p_{\text {T}} \) selection is applied to the \(k_{t}\) jets that are used. In ATLAS, the inputs to the \(k_{t}\) jets used in the \(\rho \) calculation are positive-energy calorimeter topo-clusters within \(|\eta | \le 2.0\). This \(\eta \) range is motivated by the calorimeter occupancy, which is low in the forward region relative to the central region. The cause of the low forward occupancy is complex and intrinsically related to the calorimeter segmentation and response. The coarser calorimeter cell size at higher \(|\eta |\) [10], coupled with the noise suppression inherent in topological clustering, plays a large role. Since topo-clusters are seeded according to their significance relative to (electronic and pile-up) noise rather than an absolute threshold, a larger number of cells (finer segmentation) increases the probability that the energy of one cell fluctuates up to a significant value due to (electronic or pile-up) noise. With the coarser segmentation in the end-cap and forward regions beginning near \(|\eta |= 2.5\) (see Fig. 2), this probability becomes smaller, and clusters are predominantly seeded only by the hard-scatter signal. In addition, larger cells increase the likelihood that several hadronic showers overlap in a single cell, and with it the probability that fluctuations in the calorimeter response cancel, which affects the energy recorded in that cell.

The mean \(\rho \) measured as a function of \(\eta \) is shown in Fig. 3. The measurements are made in narrow \(\eta \) strips, each \(\Delta \eta = 0.7\) wide, shifted in steps of \(\delta \eta = 0.1\) from \(\eta = - 4.9\) to 4.9; the \(\eta \) reported in Fig. 3 is the central value of each strip. The measured \(\rho \) quickly drops to nearly zero beyond \(|\eta |\simeq 2\). Because of this effectively stricter suppression in the forward region, calculating \(\rho \) in the central region gives a more meaningful measure of the pile-up activity than either the median over the entire \(\eta \) range or an \(\eta \)-dependent \(\rho \) calculated in slices across the calorimeter.
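The median of Eq. (4), together with the central \(|\eta | \le 2.0\) restriction and the strip-based scan of Fig. 3, can be illustrated with a short sketch. It assumes the \(k_{t}\) jets have already been built from positive-energy topo-clusters (for example with FastJet) and are passed in as plain (pT, area, η) tuples; the function names and the fallback value of zero for an empty selection are illustrative choices, not part of the ATLAS software.

```python
import numpy as np


def rho_median(kt_jets, eta_max=2.0):
    """Median pT density, median(pT_i / A_i), over kt jets with |eta| <= eta_max.

    kt_jets: iterable of (pt_gev, area, eta) tuples. No minimum-pT cut is
    applied, as in the text. Returning 0.0 when no jet is in range is an
    assumption of this sketch.
    """
    densities = [pt / area for pt, area, eta in kt_jets
                 if abs(eta) <= eta_max and area > 0]
    return float(np.median(densities)) if densities else 0.0


def rho_in_strip(kt_jets, eta_centre, width=0.7):
    """Median pT density in one eta strip of the given width, as in the rho(eta) scan."""
    half = width / 2.0
    densities = [pt / area for pt, area, eta in kt_jets
                 if abs(eta - eta_centre) <= half and area > 0]
    return float(np.median(densities)) if densities else 0.0


# Toy event: (pT [GeV], area, eta)
jets = [(10.0, 0.5, 0.1), (4.0, 0.5, 1.0), (2.0, 0.5, -1.5), (100.0, 0.5, 3.0)]
print(rho_median(jets))        # 8.0  (median of 20, 8 and 4)
print(rho_in_strip(jets, 3.0)) # 200.0 (only the forward jet lies in this strip)
```

Using the median rather than the mean keeps an occasional hard jet inside the acceptance from dominating the event-wide estimate.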

Distributions of \(\rho \) in both data and MC simulation are presented in Fig. 4 for SHERPA and POWHEG+PYTHIA 8. Both MC generators use the same pile-up simulation model. The event selection used for these distributions corresponds to \(Z (\rightarrow \mu \mu )\)+jets events where a \(Z\) boson (\(p_{\text {T}} ^{Z} >30\) GeV) and a jet (\(|\eta |<2.5\) and \(p_{\text {T}} >20\) GeV) are produced back-to-back (\(\Delta \phi (Z,\mathrm{leading~jet})>2.9\)). Both MC simulations slightly overestimate \(\rho \), but agree well with each other. Small differences between the MC simulations can be caused by different modelling of the soft jet spectrum associated with the hard-scattering and the underlying event.

Fig. 4 The distribution of estimated pile-up \(p_{\text {T}}\) density, \(\rho \), in \(Z (\rightarrow \mu \mu )\)+jets events using data and two independent MC simulation samples (SHERPA and POWHEG+PYTHIA 8). Both MC generators use the same pile-up simulation model (PYTHIA 8.160), and this model uses the \(\langle \mu \rangle \) distribution for \(8 \mathrm {TeV}\) data shown in Fig. 1. \(\rho \) is calculated in the central region using topo-clusters with positive energy within \(|\eta | \le 2.0\).

Since \(\rho \) is computed event-by-event, separately in data and in MC simulation, a key advantage of the jet-area subtraction is that it reduces the pile-up uncertainty arising from detector mismodelling. In each case, \(\rho \) is determined from the measured pile-up activity rather than taken from a value predicted by MC simulation.

5.2 Pile-up energy subtraction

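The median \(p_{\text {T}}\) density \(\rho \) provides a direct estimate of the global pile-up activity in each event, whereas the jet area provides an estimate of an individual jet’s susceptibility to pile-up. Equation (2) can thus be expressed on a jet-by-jet basis using Eq. (3) instead of requiring an average calculation of the offset, \(\langle \mathcal {O} \rangle \). This yields the following pile-up subtraction scheme:

\[ p_{\text {T}}^{\mathrm {corr}} = p_{\text {T}}^{\mathrm {reco}} - \rho \times A^{\mathrm {jet}} - \alpha \times \left( N_{\mathrm{PV}} - 1 \right) - \beta \times \langle \mu \rangle \tag{5} \]

where \(\alpha \) and \(\beta \) are the coefficients of the residual \(N_{\mathrm{PV}}\)- and \(\langle \mu \rangle \)-dependent corrections discussed below.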

There are two ways in which pile-up can contribute energy to an event: either by forming new clusters, or by overlapping with signals from the hard-scattering event. Because of the noise suppression inherent in topological clustering, only pile-up signals above a certain threshold can form separate clusters. Low-energy pile-up deposits can thus only contribute measurable energy to the event if they overlap with other deposits that survive noise suppression. The probability of overlap is dependent on the transverse size of EM and hadronic showers in the calorimeter, relative to the size of the calorimeter cells. Due to fine segmentation, pile-up mainly contributes extra clusters in the central regions of the calorimeter where \(\rho \) is calculated (\(|\eta |\lesssim 2\)).

As discussed in Sect. 2, the details of the readout electronics for the LAr calorimeter can result in signals associated with out-of-time pile-up activity. If out-of-time signals from earlier bunch crossings are isolated from in-time signals, they may form negative energy clusters, which are excluded from jet reconstruction and the calculation of \(\rho \). However, overlap between the positive jet signals and out-of-time activity results in both positive and negative modulation of the jet energy. Due to the long negative component of the LAr pulse shape, the probability is higher for an earlier bunch crossing to negatively contribute to signals from the triggered event than a later bunch crossing to contribute positively. This feature results in a negative dependence of the jet \(p_{\text {T}}\) on out-of-time pile-up. Such overlap is more probable at higher \(|\eta |\), due to coarser segmentation relative to the transverse shower size. In addition, the length of the bipolar pulse is shorter in the forward calorimeters, which results in larger fluctuations in the out-of-time energy contributions to jets in the triggered event since the area of the pulse shape must remain constant. As a result, forward jets have enhanced sensitivity to out-of-time pile-up due to the larger impact of fluctuations of pile-up energy depositions in immediately neighbouring bunch crossings.

Since the \(\rho \) calculation is dominated by lower-occupancy regions in the calorimeter, the sensitivity of \(\rho \) to pile-up does not fully describe the pile-up sensitivity of the high-occupancy region at the core of a high-\(p_{\text {T}}\) jet. The noise suppression provided by the topological clustering procedure has a smaller impact in the dense core of a jet where significant nearby energy deposition causes a larger number of small signals to be included in the final clusters than would otherwise be possible. Furthermore, the effects of pile-up in the forward region are not well described by the median \(p_{\text {T}}\) density as obtained from positive clusters in the central region. A residual correction is therefore necessary to obtain an average jet response that is insensitive to pile-up across the full \(p_{\text {T}}\) range.

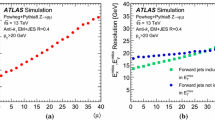

Figure 5 shows, as a function of \(\eta \), the dependence of the transverse momentum of anti-\(k_{t}\) \(R = 0.4\) jets on \(N_{\mathrm{PV}}\) (at fixed \(\langle \mu \rangle \)) and on \(\langle \mu \rangle \) (at fixed \(N_{\mathrm{PV}}\)). Separating these dependencies probes the effects of in-time and out-of-time pile-up, respectively. These results were obtained from linear fits to the difference between the reconstructed and the true jet \(p_{\text {T}}\) (written as \(p_{\text {T}} ^{\mathrm {reco}}- p_\mathrm{T}^\mathrm{true} \)) as a function of both \(N_{\mathrm{PV}}\) and \(\langle \mu \rangle \). The subtraction of \(\rho \times A^{\mathrm {jet}} \) removes a significant fraction of the sensitivity to in-time pile-up: the dependence decreases from nearly \(0.5~\mathrm {GeV}\) per additional vertex to \(\lesssim 0.2~\mathrm {GeV}\) per vertex, a factor of 3–5 reduction in pile-up sensitivity. This reduction in the pile-up dependence of the \(p_{\text {T}} \) does not necessarily translate into a reduction of the pile-up dependence of other jet observables. Moreover, some residual dependence on \(N_{\mathrm{PV}}\) remains. Figure 5b shows that \(\rho \times A^{\mathrm {jet}} \) subtraction has very little effect on the sensitivity to out-of-time pile-up, which is particularly significant in the forward region. The dependence on \(N_{\mathrm{PV}}\) is evaluated in bins of \(\langle \mu \rangle \), and vice versa, and both dependencies are also evaluated in bins of \(p_\mathrm{T}^\mathrm{true}\) and \(\eta \). Within each \((p_\mathrm{T}^\mathrm{true}, \eta )\) bin, the slope of the linear fit as a function of \(N_{\mathrm{PV}}\) does not depend significantly on \(\langle \mu \rangle \), or vice versa. 
In other words, there is no statistically significant evidence for non-linearity or cross-terms in the sensitivity of the jet \(p_{\text {T}}\) to in-time or out-of-time pile-up for the values of \(\langle \mu \rangle \) seen in 2012 data. Such non-linearities may become measurable with the shorter bunch spacing, and the correspondingly larger out-of-time pile-up effects, expected during Run 2 of the LHC. Measurements and validations of this sort are therefore important for establishing how sensitive this correction technique is to such changes in the operating conditions of the accelerator.
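The idea of separating the two dependencies by fitting in bins of the other variable can be sketched on toy data. The toy below is purely illustrative: it generates \(p_{\text {T}} ^{\mathrm {reco}}- p_\mathrm{T}^\mathrm{true}\) with an invented in-time slope of 0.2 GeV per vertex and an invented out-of-time slope of −0.1 GeV per unit \(\langle \mu \rangle \), then recovers each slope from linear fits in bins of the other variable. The additional binning in \(p_\mathrm{T}^\mathrm{true}\) and \(\eta \) used in the text is omitted, and the helper name and minimum-entries requirement are hypothetical.

```python
import numpy as np


def binned_slopes(x, y, bin_var, min_entries=50):
    """Fit y = a + b*x separately for each distinct value of bin_var.

    Returns {bin value: slope b}. Bins with too few entries, or with no
    spread in x, are skipped because the fit is then ill-defined.
    """
    slopes = {}
    for b in np.unique(bin_var):
        sel = bin_var == b
        xs, ys = x[sel], y[sel]
        if xs.size < min_entries or xs.min() == xs.max():
            continue
        slopes[b] = np.polyfit(xs, ys, 1)[0]  # polyfit returns [slope, intercept]
    return slopes


rng = np.random.default_rng(1)
n = 20000
mu = rng.integers(10, 30, size=n)           # toy <mu>, integer-valued
npv = 1 + rng.poisson(0.6 * mu)             # N_PV correlated with <mu>
delta_pt = 0.2 * (npv - 1) - 0.1 * mu + rng.normal(0.0, 1.0, size=n)

# In-time slope from fits in bins of <mu>; out-of-time slope from fits in bins of N_PV.
slope_npv = np.mean(list(binned_slopes(npv, delta_pt, mu).values()))
slope_mu = np.mean(list(binned_slopes(mu, delta_pt, npv).values()))
print(f"d(pT)/d(NPV) = {slope_npv:.3f} GeV, d(pT)/d<mu> = {slope_mu:.3f} GeV")
```

Because \(N_{\mathrm{PV}}\) and \(\langle \mu \rangle \) are strongly correlated, a single inclusive fit against either variable alone would mix the two effects; fitting within bins of the other variable is what isolates each slope.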

Fig. 5 Dependence of the reconstructed jet \(p_{\text {T}}\) (anti-\(k_{t}\), \(R = 0.4\), LCW scale) on a in-time pile-up measured using \(N_{\mathrm{PV}}\) and b out-of-time pile-up measured using \(\langle \mu \rangle \). In each case, the dependence of the jet \(p_{\text {T}}\) is shown for three correction stages: before any correction, after the \(\rho \times A^{\mathrm {jet}} \) subtraction, and after the residual correction. The error bands show the 68 % confidence intervals of the fits. The dependence was obtained by comparison with truth-particle jets in simulated dijet events, and corresponds to a truth-jet \(p_{\text {T}}\) range of 20–30 GeV.

After subtracting \(\rho \times A^{\mathrm {jet}} \) from the jet \(p_{\text {T}}\), there is an additional subtraction of a residual term proportional to the number (\(N_{\mathrm{PV}}- 1\)) of reconstructed pile-up vertices, as well as a residual term proportional to \(\langle \mu \rangle \) (to account for out-of-time pile-up). This residual correction is derived by comparison to truth particle jets in simulated dijet events, and it is completely analogous to the average pile-up offset correction used previously in ATLAS [4]. Due to the preceding \(\rho \times A^{\mathrm {jet}} \) subtraction, the residual correction is generally quite small for jets with \(|\eta | < 2.1\). In the forward region, the negative dependence of jets on out-of-time pile-up results in a significantly larger residual correction. The \(\langle \mu \rangle \)-dependent term of the residual correction is approximately the same size as the corresponding term in the average offset correction of Eq. (2), but the \(N_{\mathrm{PV}}\)-dependent term is significantly smaller. This is true even in the forward region, which shows that \(\rho \) is a useful estimate of in-time pile-up activity even beyond the region in which it is calculated.
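A minimal sketch of the full per-jet correction, \(p_{\text {T}}^{\mathrm {corr}} = p_{\text {T}}^{\mathrm {reco}} - \rho \times A^{\mathrm {jet}} - \alpha (N_{\mathrm{PV}} - 1) - \beta \langle \mu \rangle \), where \(\alpha \) and \(\beta \) stand for the residual coefficients described above. In ATLAS these coefficients are derived from simulated dijet events and depend on the jet kinematics; here they are simply passed in as numbers, and the values in the example are invented for illustration. The 20 GeV threshold matches the jet selection used in Sect. 6.1.

```python
def corrected_jet_pt(pt_reco, area, rho, npv, mu, alpha, beta):
    """Area-based pile-up subtraction followed by the residual correction.

    alpha: residual slope per additional reconstructed vertex (GeV)
    beta:  residual slope per unit <mu> (GeV), for out-of-time pile-up
    Both are hypothetical inputs here; in practice they are binned in eta and pT.
    """
    pt = pt_reco - rho * area   # remove the event-wide pile-up pT density
    pt -= alpha * (npv - 1)     # residual in-time term: N_PV - 1 pile-up vertices
    pt -= beta * mu             # residual out-of-time term
    return pt


def passes_pt_threshold(pt_corr, threshold=20.0):
    """Jets pushed below the threshold by the correction are dropped."""
    return pt_corr > threshold


# Invented numbers: a 50 GeV jet of area 0.5, rho = 10 GeV per unit area,
# 11 vertices, <mu> = 20, and a negative beta as for forward jets.
pt = corrected_jet_pt(50.0, 0.5, 10.0, 11, 20.0, alpha=0.1, beta=-0.05)
print(pt, passes_pt_threshold(pt))  # 45.0 True
```

With a negative \(\beta \), the out-of-time term adds \(p_{\text {T}}\) back, compensating the negative response to earlier bunch crossings described for the forward region.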

Several additional jet definitions are also studied, including larger nominal jet radii and alternative jet algorithms. Prior to the jet-area subtraction, a larger sensitivity to in-time pile-up is observed for larger-area jets, as expected. After the subtraction procedure in Eq. (5), similar results are obtained even for larger-area jet definitions. These results demonstrate that \(\rho \times A^{\mathrm {jet}} \) subtraction effectively reduces the impact of in-time pile-up regardless of the jet definition, although a residual correction is required to remove the dependence on \(N_{\mathrm{PV}}\) and \(\langle \mu \rangle \) completely.

In addition to the slope of the \(p_{\text {T}}\) dependence on \(N_{\mathrm{PV}}\), the RMS of the \(p_{\text {T}} ^{\mathrm {reco}}- p_\mathrm{T}^\mathrm{true} \) distribution is studied as a function of \(\langle \mu \rangle \) and \(\eta \) in Fig. 6. For this result, anti-\(k_{t}\) \(R=0.6\) jets are chosen because their greater susceptibility to pile-up makes them a more demanding test of pile-up correction algorithms. The RMS width of this distribution is an approximate measure of the jet \(p_{\text {T}}\) resolution for the narrow truth-particle jet \(p_{\text {T}}\) ranges used in Fig. 6. These results show that the area subtraction procedure reduces the magnitude of the jet-by-jet fluctuations introduced by pile-up by approximately 20 % relative to uncorrected jets, and by approximately 10 % relative to the simple offset correction. Smaller-radius \(R=0.4\) jets exhibit a similar relative improvement compared to the simple offset correction. It should be noted that the pile-up activity in any given event may have significant local fluctuations similar in angular size to jets, which a global correction such as the area subtraction procedure defined in Eq. (3) cannot account for. Variables such as the jet vertex fraction (\(\mathrm{JVF}\)), the corrected \(\mathrm{JVF}\) (\(\mathrm{corrJVF}\)), or the jet vertex tagger (\(\mathrm{JVT}\)) may be used to reject jets that result from such fluctuations in the pile-up \(p_{\text {T}}\) density, as described in Sect. 6.

Fig. 6 a RMS width of the \(p_{\text {T}} ^{\mathrm {reco}}- p_\mathrm{T}^\mathrm{true} \) distribution versus \(\langle \mu \rangle \) and b versus pseudorapidity \(\eta \), for anti-\(k_{t}\) \(R=0.6\) jets at the LCW scale matched to truth-particle jets satisfying \(20< p_\mathrm{T}^\mathrm{true} < 30 \mathrm {GeV}\), in simulated dijet events. A significant improvement is observed compared to the previous subtraction method (shown in red) [4].

Two methods of in-situ validation of the pile-up correction are employed to study the dependence of jet \(p_{\text {T}}\) on \(N_{\mathrm{PV}}\) and \(\langle \mu \rangle \). The first method uses track-jets to provide a pile-up-independent measure of the jet \(p_{\text {T}}\). Because it requires track-jets, this method can only be used in the central region of the detector, \(|\eta | < 2.1\), but it is not statistically limited. The second method exploits the \(p_{\text {T}}\) balance between a reconstructed jet and a \(Z\) boson, using \(p_{\text {T}} ^{Z}\) as a measure of the jet \(p_{\text {T}}\). This enables an analysis over the full range of the detector (\(|\eta |<4.9\)), but the additional selections applied to the jet and \(Z\) boson reduce the available statistics. The \(N_{\mathrm{PV}}\) dependence must therefore be evaluated inclusively in \(\langle \mu \rangle \) and vice versa, which introduces a degree of correlation between the measured \(N_{\mathrm{PV}}\) and \(\langle \mu \rangle \) dependences.

While the pile-up residual correction is derived from simulated dijet events, the in-situ validation is done entirely using \(Z \)+jets events. In the track-jet validation, although the kinematics of the \(Z\) boson candidate are not used directly, the dilepton system is relied upon for triggering, thus avoiding any potential bias from jet triggers.

Figure 7a shows the results obtained when matching reconstructed anti-\(k_{t}\) \(R=0.4\) LCW jets to anti-\(k_{t}\) \(R=0.4\) track-jets. No selection is applied to the calorimeter-jet \(p_{\text {T}}\). Good agreement is observed between data and MC simulation; however, a small overcorrection of the \(N_{\mathrm{PV}}\) dependence is observed in both. This non-closure of the correction is taken as an uncertainty in the jet \(p_{\text {T}}\) dependence on \(N_{\mathrm{PV}}\).

In events where a \(Z\) boson is produced in association with one jet, momentum conservation ensures balance between the \(Z\) boson and the jet in the transverse plane. In the direct \(p_{\text {T}}\) balance method, this principle is exploited by using \(p_{\text {T}} ^{Z}\) as a proxy for the true jet \(p_{\text {T}}\). In the case of a perfect measurement of lepton energies and provided that all particles recoiling against the \(Z\) boson are included in the jet cone, the jet is expected to balance the \(Z\) boson. Therefore the estimated \(Z\) boson \(p_{\text {T}}\) is used as the reference scale, denoted by \(p_{\text {T}} ^{\mathrm {ref}}\).

Taking the mean, \(\langle \Delta p_{\text {T}} \rangle \), of the \(\Delta p_{\text {T}} = p_{\text {T}}- p_{\text {T}} ^{\mathrm {ref}} \) distribution, the slope \(\partial \langle \Delta p_{\text {T}} \rangle /\partial \langle \mu \rangle \) is extracted and plotted as a function of \(p_{\text {T}} ^{\mathrm {ref}}\), as shown in Fig. 7b. A small residual slope remains after the jet-area correction, and it is well modelled by the MC simulation. The remaining mismodelling is quantified by the maximum differences between data and MC simulation for both \(\partial \langle \Delta p_{\text {T}} \rangle /\partial N_{\mathrm{PV}} \) and \(\partial \langle \Delta p_{\text {T}} \rangle /\partial \langle \mu \rangle \). These differences (denoted by \(\Delta \left( \partial \langle \Delta p_{\text {T}} \rangle /\partial N_{\mathrm{PV}} \right) \) and \(\Delta \left( \partial \langle \Delta p_{\text {T}} \rangle /\partial \langle \mu \rangle \right) \)) are included in the total systematic uncertainty.
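The extraction of the data/MC slope difference can be sketched as follows. The sketch fits \(\Delta p_{\text {T}} = p_{\text {T}} - p_{\text {T}}^{\mathrm {ref}}\) directly against the pile-up variable in each \(p_{\text {T}}^{\mathrm {ref}}\) bin; for a straight-line least-squares fit this is equivalent to fitting the per-\(\langle \mu \rangle \) means weighted by their event counts. Taking the maximum absolute difference over \(p_{\text {T}}^{\mathrm {ref}}\) bins is our reading of "maximum differences" and is an assumption, as are the dictionary layout, key names and bin edges.

```python
import numpy as np


def max_slope_difference(data, mc, ptref_edges, pileup_var="mu"):
    """Largest |slope_data - slope_MC| of Delta pT versus a pile-up variable.

    data, mc: dicts of equal-length arrays with keys 'delta_pt', 'pt_ref'
    and the pile-up variable ('mu' or 'npv'); these names are hypothetical.
    Slopes are fitted separately in each [lo, hi) bin of pt_ref.
    """
    diffs = []
    for lo, hi in zip(ptref_edges[:-1], ptref_edges[1:]):
        slopes = []
        for sample in (data, mc):
            sel = (sample["pt_ref"] >= lo) & (sample["pt_ref"] < hi)
            slope, _ = np.polyfit(sample[pileup_var][sel], sample["delta_pt"][sel], 1)
            slopes.append(slope)
        diffs.append(abs(slopes[0] - slopes[1]))
    return max(diffs)


# Noise-free toy: two pT^ref bins; the slopes differ by 0.02 GeV in the first one.
mu = np.tile(np.arange(10.0, 30.0), 2)
pt_ref = np.repeat([25.0, 45.0], 20)
data = {"mu": mu, "pt_ref": pt_ref, "delta_pt": np.where(pt_ref < 40, 0.10, 0.05) * mu}
mc = {"mu": mu, "pt_ref": pt_ref, "delta_pt": np.where(pt_ref < 40, 0.08, 0.05) * mu}
print(max_slope_difference(data, mc, [20.0, 40.0, 60.0]))  # approximately 0.02
```

The same function gives \(\Delta \left( \partial \langle \Delta p_{\text {T}} \rangle /\partial N_{\mathrm{PV}} \right)\) when called with `pileup_var="npv"` on samples that carry an `npv` array.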

Fig. 7 a \(N_{\mathrm{PV}}\) dependence of the reconstructed \(p_{\text {T}}\) of anti-\(k_{t}\) \(R=0.4\) LCW jets after the area subtraction as a function of track-jet \(p_{\text {T}}\). b Validation results from \(Z \)+jets events showing the \(\langle \mu \rangle \) dependence as a function of the \(Z\) boson \(p_{\text {T}}\), denoted by \(p_{\text {T}} ^{\mathrm {ref}}\), for anti-\(k_{t}\) \(R=0.4\) LCW jets in the central region after the area subtraction. The points represent central values of the linear fit to \(\partial \langle \Delta p_{\text {T}} \rangle /\partial \langle \mu \rangle \) and the error bars correspond to the associated fitting error.

The systematic uncertainties are obtained by combining the measurements from the \(Z\)–jet balance and track-jet in-situ validation studies. In the central region (\(|\eta | < 2.1\)) only the track-jet measurements are used, whereas the \(Z\)–jet balance is used for \(2.1< |\eta | < 4.5\). In the \(Z\)–jet balance in the forward region, the effects of in-time and out-of-time pile-up cannot be fully decoupled. The \(N_{\mathrm{PV}}\) uncertainty is therefore assumed to be \(\eta \)-independent and is extrapolated from the central region. In the forward region, the uncertainty on the \(\langle \mu \rangle \) dependence, \(\Delta \left( \partial \langle \Delta p_{\text {T}} \rangle /\partial \langle \mu \rangle \right) \), is taken to be the maximum difference between \(\partial \langle \Delta p_{\text {T}} \rangle /\partial \langle \mu \rangle \) in the central region and \(\partial \langle \Delta p_{\text {T}} \rangle /\partial \langle \mu \rangle \) in the forward region. In this way, the forward-region \(\Delta \left( \partial \langle \Delta p_{\text {T}} \rangle /\partial \langle \mu \rangle \right) \) uncertainty implicitly includes any \(\eta \) dependence.

5.3 Pile-up shape subtraction

The jet shape subtraction method [51] determines the sensitivity of jet shape observables, such as the jet width or substructure shapes, to pile-up by evaluating how the shape responds to the addition of infinitesimally soft energy depositions. This response is evaluated numerically for each jet in each event and then extrapolated to zero pile-up to derive the correction.

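The procedure uses a uniform distribution of infinitesimally soft particles, or ghosts, that are added to the event. These ghost particles are distributed with a number density \(\nu _{g}\) per unit area in the y–\(\phi \) plane, yielding an individual ghost area \(A_{g} =1/\nu _{g} \). The four-momentum \((E, p_{x}, p_{y}, p_{z})\) of ghost i, located at rapidity \(y_{i}\) and azimuth \(\phi _{i}\), is defined as

\[ g_{i} = g_{t} \left( \cosh y_{i},\ \cos \phi _{i},\ \sin \phi _{i},\ \sinh y_{i} \right) \tag{6} \]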

where \(g_{t}\) is the ghost transverse momentum (initially set to \(10^{-30} \mathrm {GeV}\)), and the ghosts are defined to have zero mass. This creates a uniform ghost density given by \(g_{t}/A_{g} \), which is used as a proxy for the estimated pile-up contribution described by Eq. (4). These ghosts are then incorporated into the jet finding and participate in the jet clustering. By varying the ghost \(p_{\text {T}}\) density incorporated into the jet finding and determining the sensitivity of a given jet’s shape to that variation, a numerical correction can be derived. A given jet shape variable \(\mathcal{V} \) is assumed to be a function of the ghost \(p_{\text {T}}\), \(\mathcal{V} (g_{t})\). The reconstructed (uncorrected) jet shape is then \(\mathcal{V} (g_{t} =0)\). The corrected jet shape can be obtained by extrapolating to the value of \(g_{t}\) that cancels the effect of the pile-up \(p_{\text {T}}\) density, namely \(g_{t} = -\rho \cdot A_{g} \), so that \(\mathcal{V} _{\mathrm {corr}} = \mathcal{V} (g_{t} = -\rho \cdot A_{g})\). This value can be obtained from the Taylor expansion

\[ \mathcal{V} _{\mathrm {corr}} = \mathcal{V} (0) - \rho \cdot A_{g} \left. \frac{\mathrm{d}\mathcal{V}}{\mathrm{d}g_{t}} \right|_{g_{t}=0} + \frac{1}{2} \left( \rho \cdot A_{g} \right)^{2} \left. \frac{\mathrm{d}^{2}\mathcal{V}}{\mathrm{d}g_{t}^{2}} \right|_{g_{t}=0} + \cdots \tag{7} \]

The derivatives are obtained numerically by evaluating several values of \(\mathcal{V} (g_{t})\) for \(g_{t} \ge 0\). Only the first three terms in Eq. (7) are used for the studies presented here.
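The extrapolation in Eq. (7) can be sketched with finite differences. In the real procedure each evaluation of \(\mathcal{V} (g_{t})\) requires re-clustering the jet with ghosts of transverse momentum \(g_{t}\); here that step is abstracted into a hypothetical callable `shape_at`, and the step size `h` is an illustrative choice. The one-sided three-point formulas use only \(g_{t} \ge 0\), as in the text, and are exact for a quadratic \(\mathcal{V}\), which makes the toy check easy to verify by hand.

```python
def shape_subtracted(shape_at, rho, ghost_area, h):
    """Second-order extrapolation of V(g_t) to g_t = -rho * A_g.

    shape_at:   callable returning the jet shape for ghost pT g_t
                (stands in for re-clustering the jet with ghosts of pT g_t)
    rho:        event pile-up pT density
    ghost_area: A_g = 1 / nu_g
    h:          ghost-pT step for the numerical derivatives (only g_t >= 0 used)
    """
    v0, v1, v2 = shape_at(0.0), shape_at(h), shape_at(2.0 * h)
    d1 = (-3.0 * v0 + 4.0 * v1 - v2) / (2.0 * h)  # one-sided estimate of dV/dg_t at 0
    d2 = (v0 - 2.0 * v1 + v2) / (h * h)           # one-sided estimate of d2V/dg_t2 at 0
    shift = rho * ghost_area                      # g_t = -shift cancels the pile-up density
    return v0 - shift * d1 + 0.5 * shift ** 2 * d2  # first three terms of the expansion


# Toy shape V(g) = 1 + 2g + 3g^2, so V(-0.5) = 0.75 exactly.
def toy_shape(g):
    return 1.0 + 2.0 * g + 3.0 * g ** 2


print(shape_subtracted(toy_shape, rho=5.0, ghost_area=0.1, h=0.1))  # close to 0.75
```

For shapes that are not exactly quadratic in \(g_{t}\), the result carries the truncation error of keeping only the first three terms, which is the approximation stated in the text.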

One set of shape variables which has been shown to significantly benefit from the correction defined by the expansion in Eq. (7) is the set of N-subjettiness observables \(\tau _N\) [52, 53]. These observables measure the extent to which the constituents of a jet are clustered around a given number of axes denoted by N (typically with \(N=1,2,3\)) and are related to the corresponding subjet multiplicity of a jet. The ratios \(\tau _2/\tau _1\) (\(\tau _{21}\)) and \(\tau _3/\tau _2\) (\(\tau _{32}\)) can be used to provide discrimination between Standard Model jet backgrounds and boosted \(W /Z \) bosons [31, 52, 54], top quarks [31, 52, 54, 55], or even gluinos [56]. For example, \(\tau _{21} \simeq 1\) corresponds to a jet that is very well described by a single subjet whereas a lower value implies a jet that is much better described by two subjets rather than one.
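For reference, a sketch of the standard N-subjettiness definition, \(\tau _N = \sum _k p_{\text {T},k} \min (\Delta R_{1k}, \ldots , \Delta R_{Nk}) / \sum _k p_{\text {T},k} R_0\), where k runs over the jet constituents, \(\Delta R_{jk}\) is the distance of constituent k to axis j, and \(R_0\) is the jet radius. The axes are taken as input (in practice they are usually found from exclusive \(k_{t}\) subjets), and the array layout is an assumption of the sketch. The normalisation cancels in the ratios \(\tau _{21}\) and \(\tau _{32}\).

```python
import numpy as np


def tau_n(constituents, axes, r0=1.0):
    """N-subjettiness of one jet.

    constituents: array of shape (n, 3) with columns (pT, y, phi)
    axes:         array of shape (N, 2) with columns (y, phi); finding the
                  axes is assumed to happen elsewhere
    r0:           jet radius used in the normalisation d0 = sum(pT) * r0
    """
    c = np.asarray(constituents, dtype=float)
    a = np.asarray(axes, dtype=float)
    dy = c[:, 1, None] - a[None, :, 0]
    dphi = np.abs(c[:, 2, None] - a[None, :, 1])
    dphi = np.where(dphi > np.pi, 2.0 * np.pi - dphi, dphi)  # wrap to [0, pi]
    dr_min = np.hypot(dy, dphi).min(axis=1)                  # distance to the nearest axis
    return float(np.sum(c[:, 0] * dr_min) / (np.sum(c[:, 0]) * r0))


# Two equal-pT constituents separated by 0.4 in rapidity.
jet = [(1.0, 0.0, 0.0), (1.0, 0.4, 0.0)]
tau1 = tau_n(jet, [(0.2, 0.0)])              # one axis midway between them
tau2 = tau_n(jet, [(0.0, 0.0), (0.4, 0.0)])  # one axis on each constituent
print(tau1, tau2)
```

Here \(\tau _1 \approx 0.2\) and \(\tau _2 = 0\), so \(\tau _{21} = 0\): the toy jet is perfectly described by two subjets, the opposite limit to \(\tau _{21} \simeq 1\).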

Two approaches are tested for correcting the N-subjettiness ratios \(\tau _{21}\) and \(\tau _{32}\). The first approach is to use the individually corrected \(\tau _N\) for the calculation of the numerators and denominators of the ratios. A second approach is also tested in which the full ratio is treated as a single observable and corrected directly. The resulting agreement between data and MC simulation is very similar in the two cases. However, for very high \(p_{\text {T}}\) jets (\(600~\mathrm {GeV}\le p_{\text {T}} ^\mathrm {jet} <800~\mathrm {GeV}\)) the first approach is preferable since it yields final ratios that are closer to the values obtained for truth-particle jets and a mean \(\langle \tau _{32} \rangle \) that is more stable against \(\langle \mu \rangle \). On the other hand, at lower jet \(p_{\text {T}}\) (\(200~\mathrm {GeV}\le p_{\text {T}} ^\mathrm {jet} <300~\mathrm {GeV}\)), applying the jet shape subtraction to the ratio itself performs better than the individual \(\tau _N\) corrections according to the same figures of merit. Since substructure studies and the analysis of boosted hadronic objects typically focus on the high jet \(p_{\text {T}}\) regime, all results shown here use the individual corrections for \(\tau _N\) in order to compute the corrected \(\tau _{21}\) and \(\tau _{32}\).

Figure 8 presents the uncorrected and corrected distributions of \(\tau _{32}\), in both the observed data and MC simulation, as well as the truth-particle jet distributions. In Fig. 8b, the mean value of \(\tau _{32}\) is also presented for trimmed jets, using both the reconstructed and truth-particle jets. This allows the shape subtraction method and trimming to be compared directly in terms of their effectiveness in reducing the pile-up dependence of the jet shape. Additional selections are applied to the jets used to study \(\tau _{32}\) in this case: \(\tau _{21} >0.1\) (after correction) and jet mass \(m^\mathrm{jet} >30 \mathrm {GeV}\) (after correction). These selections protect against the case where \(\tau _2\) becomes very small, so that small variations in \(\tau _3\) can lead to large changes in the ratio. The requirement on \(\tau _{21}\) rejects approximately 1 % of jets, whereas the mass requirement removes approximately 9 % of jets. As discussed above, the default procedure adopted here is to correct the ratio \(\tau _{21}\) by correcting \(\tau _1\) and \(\tau _2\) separately. In cases where both the corrected \(\tau _1\) and \(\tau _2\) are negative, the sign of the corrected \(\tau _{21}\) is set to negative.
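The ratio convention and selection described above can be written compactly. This sketch assumes the individually corrected \(\tau _N\) values are already available (for instance from the extrapolation of Eq. (7)); returning NaN for a vanishing denominator is an assumption of the sketch, not a documented ATLAS choice.

```python
import math


def corrected_ratio(tau_num, tau_den):
    """Ratio of individually corrected tau_N values, e.g. tau32 = tau3 / tau2.

    If both corrected inputs are negative, the ratio is set negative, as
    described in the text, rather than the positive value a plain division gives.
    """
    if tau_den == 0.0:
        return math.nan
    ratio = tau_num / tau_den
    if tau_num < 0.0 and tau_den < 0.0:
        ratio = -abs(ratio)
    return ratio


def passes_tau32_selection(tau21_corr, mass_corr_gev):
    """Extra selection for the tau32 study: tau21 > 0.1 and m_jet > 30 GeV, both after correction."""
    return tau21_corr > 0.1 and mass_corr_gev > 30.0


print(corrected_ratio(-0.2, -0.4), corrected_ratio(0.3, 0.6))  # -0.5 0.5
print(passes_tau32_selection(0.05, 80.0))                      # False
```

Flagging the doubly negative case with a negative ratio keeps such jets distinguishable from well-behaved jets, which a plain division would silently map to a positive value.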

The corrected N-subjettiness ratio \(\tau _{32}\) shows a significant reduction in pile-up dependence, as well as much closer agreement with the distribution expected from truth-particle jets. Figure 8b compares the shape subtraction procedure with jet trimming. Trimming is very effective in removing the pile-up dependence of jet substructure variables (see Ref. [31] and Sect. 7). However, jet shape variables computed after trimming are considerably modified by the removal of soft subjets and must be compared directly to truth-level jet shape variables constructed with trimming at the truth level as well. Comparing the mean trimmed-jet \(\tau _{32}\) at truth level with the reconstructed quantity in Fig. 8b (open black triangles and open purple squares, respectively), and likewise for the shape correction method (filled green triangles and filled red squares, respectively), shows that the shape expansion correction yields a mean value closer to the truth.

Fig. 8 a Comparisons of the uncorrected (filled blue circles) and corrected (red) distributions of the ratio of 3-subjettiness to 2-subjettiness (\(\tau _{32}\)) for data (points) and for MC simulation (solid histogram) for leading jets in the range \(600 \le p_{\text {T}} < 800~\mathrm {GeV}\). The distribution of \(\tau _{32}\) computed using stable truth particles (filled green triangles) is also included. The lower panel displays the ratio of the data to the MC simulation. b Dependence of \(\tau _{32}\) on \(\langle \mu \rangle \) for the uncorrected (filled blue circles), corrected (filled red squares) and trimmed (open purple squares) distributions for reconstructed jets in MC simulation for leading jets in the range \(600 \le p_{\text {T}} < 800~\mathrm {GeV}\). The mean value of \(\tau _{32}\) computed using stable truth particles (green) is also included.

6 Pile-up jet suppression techniques and results

The suppression of pile-up jets is a crucial component of many physics analyses in ATLAS. Pile-up jets arise from two sources: hard QCD jets originating from a pile-up vertex, and local fluctuations of pile-up activity. The pile-up QCD jets are genuine jets and must be tagged and rejected using the vertex-pointing information of charged-particle tracks (out-of-time QCD jets have very few or no associated tracks since the ID reconstructs tracks only from the in-time events). Pile-up jets originating from local fluctuations are a superposition of random combinations of particles from multiple pile-up vertices, and they are generically referred to here as stochastic jets. Stochastic jets are preferentially produced in regions of the calorimeter where the global \(\rho \) estimate is smaller than the actual pile-up activity. Tracking information also plays a key role in tagging and rejecting stochastic jets. Since tracks can be precisely associated with specific vertices, track-based observables can provide information about the pile-up structure and vertex composition of jets within the tracking detector acceptance (\(|\eta |<2.5\)) that can be used for discrimination. The composition of pile-up jets depends on both \(\langle \mu \rangle \) and \(p_{\text {T}}\). Stochastic jets have a much steeper \(p_{\text {T}}\) spectrum than pile-up QCD jets. Therefore, higher-\(p_{\text {T}}\) jets that are associated with a primary vertex which is not the hard-scatter vertex are more likely to be pile-up QCD jets, not stochastic jets. On the other hand, while the number of QCD pile-up jets increases linearly with \(\langle \mu \rangle \), the rate of stochastic jets increases more rapidly such that at high luminosity the majority of pile-up jets at low \(p_{\text {T}}\) are expected to be stochastic in nature [57].

6.1 Pile-up jet suppression from subtraction

The number of reconstructed jets increases with the average number of pile-up interactions, as shown in Fig. 9 using the \(Z \)+jets event sample described in Sect. 5.1. Event-by-event pile-up subtraction based on jet areas, as described in Sect. 5.2, removes the majority of pile-up jets by shifting their \(p_{\text {T}}\) below the \(20~\mathrm {GeV}\) threshold. Since pile-up jets are generally not well modelled, as shown in the ratio plot of Fig. 9, removing them also improves the agreement between data and MC simulation.

Fig. 9 The mean anti-\(k_{t}\) \(R=0.4\) LCW jet multiplicity as a function of \(\langle \mu \rangle \) in \(Z \)+jets events for jets with \(p_{\text {T}} > 20 \mathrm {GeV}\) and \(|\eta | < 2.1\). Events in this plot are required to have at least one jet both before and after the application of the jet-area-based pile-up correction.

6.2 Pile-up jet suppression from tracking

Some pile-up jets remain even after pile-up subtraction, mainly due to localised fluctuations in pile-up activity that are not fully corrected by \(\rho \) in Eq. (5). Information from the tracks matched to each jet may be used to further reject jets not originating from the hard-scatter interaction. ATLAS has developed three different track-based tagging approaches for the identification of pile-up jets: the jet vertex fraction (\(\mathrm{JVF}\)) algorithm, used in almost all physics analyses in Run 1; a set of two new variables (\(\mathrm{corrJVF}\) and \(R_\mathrm {pT}\)) for improved performance; and a new combined discriminant, the jet vertex tagger (\(\mathrm{JVT}\)), for optimal performance. While the last two approaches were developed using Run 1 data, most analyses based on Run 1 data were completed before these new pile-up suppression algorithms became available. Their utility is already being demonstrated in high-luminosity LHC upgrade studies, and they will be available to all ATLAS analyses at the start of Run 2.

6.2.1 Jet vertex fraction

The jet vertex fraction (\(\mathrm{JVF}\)) is a variable used in ATLAS to identify the primary vertex from which the jet originated. A cut on the \(\mathrm{JVF}\) variable can help to remove jets which are not associated with the hard-scatter primary vertex. Using tracks reconstructed from the ID information, the \(\mathrm{JVF}\) variable can be defined for each jet with respect to each identified primary vertex (PV) in the event, by identifying the PV associated with each of the charged-particle tracks pointing towards the given jet. Once the hard-scatter PV is identified, the \(\mathrm{JVF}\) variable can be used to select jets having a high likelihood of originating from that vertex. Tracks are assigned to calorimeter jets following the ghost-association procedure [50], which consists of assigning tracks to jets by adding tracks with infinitesimal \(p_{\text {T}}\) to the jet clustering process. Then, the \(\mathrm{JVF}\) is calculated as the ratio of the scalar sum of the \(p_{\text {T}}\) of matched tracks that originate from a given PV to the scalar sum of \(p_{\text {T}}\) of all matched tracks in the jet, independently of their origin.

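\(\mathrm{JVF}\) is defined for each jet with respect to each PV. For a given jet\(_{i}\), its \(\mathrm{JVF}\) with respect to the primary vertex PV\(_{j}\) is given by:

\[ \mathrm{JVF}(\mathrm{jet}_{i}, \mathrm{PV}_{j}) = \frac{\sum _{m} p_{\text {T}}\left( \mathrm{track}_{m}^{\mathrm{jet}_{i}}, \mathrm{PV}_{j} \right) }{\sum _{n} \sum _{l} p_{\text {T}}\left( \mathrm{track}_{l}^{\mathrm{jet}_{i}}, \mathrm{PV}_{n} \right) } \]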

where m runs over all tracks originating from PV\(_{j}\) (see footnote 2) that are matched to jet\(_{i}\), n runs over all primary vertices in the event, and l runs over all tracks originating from PV\(_{n}\) that are matched to jet\(_{i}\). Only tracks with \(p_{\text {T}} > 500~\mathrm {MeV}\) are considered in the \(\mathrm{JVF}\) calculation. \(\mathrm{JVF}\) is bounded by 0 and 1, but a value of \(-1\) is assigned to jets with no associated tracks.
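The \(\mathrm{JVF}\) definition translates directly into code. In this sketch each ghost-associated track is represented as a (pT, vertex index) pair; that data layout is an assumption, and the tracks are taken to have already been matched to the jet and assigned to primary vertices.

```python
def jet_vertex_fraction(tracks, pv_index, min_track_pt=0.5):
    """JVF of one jet with respect to primary vertex pv_index.

    tracks: list of (pt_gev, vertex_index) for tracks ghost-associated to the jet;
            vertex_index is None for a track not assigned to any primary vertex,
            and such tracks are excluded, following the sums over PV_n.
    Returns -1.0 when no track passes the pT threshold (jet has no associated tracks).
    """
    selected = [(pt, vtx) for pt, vtx in tracks
                if pt > min_track_pt and vtx is not None]
    if not selected:
        return -1.0
    total = sum(pt for pt, _ in selected)
    from_pv = sum(pt for pt, vtx in selected if vtx == pv_index)
    return from_pv / total


# In this toy, vertex 0 plays the role of the hard-scatter vertex.
tracks = [(2.0, 0), (1.0, 1), (0.3, 0)]  # the 0.3 GeV track fails the 500 MeV cut
print(jet_vertex_fraction(tracks, 0))     # 2/3
print(jet_vertex_fraction([], 0))         # -1.0
```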

For the purposes of this paper, \(\mathrm{JVF}\) is from now on defined with respect to the hard-scatter primary vertex. In the \(Z \)+jets events used for these studies of pile-up suppression, the hard-scatter primary vertex is found to be correctly identified in at least 98 % of events. \(\mathrm{JVF}\) may then be interpreted as an estimate of the fraction of \(p_{\text {T}}\) in the jet that can be associated with the hard-scatter interaction. The principle of the \(\mathrm{JVF}\) variable is shown schematically in Fig. 10a. Figure 10b shows the \(\mathrm{JVF}\) distribution in MC simulation for hard-scatter jets and for pile-up jets with \(p_{\text {T}} > 20 \mathrm {GeV}\) after pile-up subtraction and jet energy scale correction in a \(Z(\rightarrow ee)+\)jets sample with the \(\langle \mu \rangle \) distribution shown in Fig. 1. Hard-scatter jets are calorimeter jets that have been matched to truth-particle jets from the hard-scatter with an angular separation of \(\Delta R\le 0.4\), whereas pile-up jets are defined as calorimeter jets with an angular separation to the nearest truth-particle jet of \(\Delta R>0.4\). The thresholds for truth-particle jets are \(p_{\text {T}} >10~\mathrm {GeV}\) for those originating from the hard-scatter, and \(p_{\text {T}} >4~\mathrm {GeV}\) for those originating in pile-up interactions. This comparison demonstrates the discriminating power of the \(\mathrm{JVF}\) variable.

a Schematic representation of the jet vertex fraction \(\mathrm{JVF}\) principle where f denotes the fraction of track \(p_{\text {T}}\) contributed to jet 1 due to the second vertex (PV2). b \(\mathrm{JVF}\) distribution for hard-scatter (blue) and pile-up (red) jets with \(20< p_{\text {T}} < 50 \mathrm {GeV}\) and \(|\eta |<2.4\) after pile-up subtraction and jet energy scale correction in simulated \(Z+\)jets events

While \(\mathrm{JVF}\) is highly correlated with the actual fraction of hard-scatter activity in a reconstructed calorimeter jet, it is important to note that the correspondence is imperfect. For example, a jet with significant neutral pile-up contributions may receive \(\mathrm{JVF} = 1\), while \(\mathrm{JVF} = 0\) may result from a fluctuation in the fragmentation of a hard-scatter jet such that its charged constituents all fall below the track \(p_{\text {T}}\) threshold. \(\mathrm{JVF}\) also relies on the hard-scatter vertex being well separated along the beam axis from all of the pile-up vertices. In some events, a pile-up jet may receive a high value of \(\mathrm{JVF}\) because its associated primary vertex is very close to the hard-scatter primary vertex. While this effect is very small for 2012 pile-up conditions, it will become more important at higher luminosities, as the average distance between interactions decreases as \(1/\langle \mu \rangle \). For these reasons, as well as the lower probability for producing a pile-up QCD jet at high \(p_{\text {T}}\), \(\mathrm{JVF}\) selections are only applied to jets with \(p_{\text {T}} \le 50~\mathrm {GeV}\).
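The restriction of \(\mathrm{JVF}\) selections to low-\(p_{\text {T}}\) jets can be sketched as a simple jet-level veto. This is an illustrative assumption rather than the ATLAS implementation: it uses the \(|\mathrm{JVF}|\) form and the 0.25 and 0.5 cut values that appear in the figure captions, with a strict inequality chosen arbitrarily. A consequence of the absolute value is that jets without tracks (\(\mathrm{JVF} = -1\)) are kept.

```python
JVF_PT_MAX_GEV = 50.0  # JVF selections are applied only to jets with pT <= 50 GeV


def passes_jvf_selection(jet_pt_gev, jvf_value, jvf_cut=0.5):
    """Hypothetical pile-up jet veto based on |JVF|.

    Jets above 50 GeV are always kept. Below that, a jet is kept if
    |JVF| > jvf_cut, so jets without tracks (JVF = -1) are also kept.
    """
    if jet_pt_gev > JVF_PT_MAX_GEV:
        return True
    return abs(jvf_value) > jvf_cut
```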

The modelling of \(\mathrm{JVF}\) is investigated in \(Z (\rightarrow \mu \mu )\)+jets events using the same selection as discussed in Sect. 5.1, which yields a nearly pure sample of hard-scatter jets. By comparison to truth-particle jets in MC simulation, it was found that the level of pile-up jet contamination in this sample is close to 2 % near \(20~\mathrm {GeV}\) and almost zero at the higher end of the range near \(50~\mathrm {GeV}\). The \(\mathrm{JVF}\) distribution for the jet balanced against the Z boson in these events is well modelled for hard-scatter jets. However, the total jet multiplicity in these events is overestimated in simulated events, due to mismodelling of pile-up jets. This is shown in Fig. 11, for several different choices of the minimum \(p_{\text {T}}\) cut applied at the fully calibrated jet energy scale (including jet-area-based pile-up subtraction). The application of a \(\mathrm{JVF}\) cut significantly improves the data/MC agreement because the majority of pile-up jets fail the \(\mathrm{JVF}\) cut: across all \(p_{\text {T}}\) bins, data and MC simulation are seen to agree within 1 % following the application of a \(\mathrm{JVF}\) cut. It is also observed that the application of a \(\mathrm{JVF}\) cut results in stable values for the mean jet multiplicity as a function of \(\langle \mu \rangle \).

Figure 11 also shows the systematic uncertainty bands, which are only visible for the lowest \(p_{\text {T}}\) selection of \(20~\mathrm {GeV}\). These uncertainties are estimated by comparing the \(\mathrm{JVF}\) distributions for hard-scatter jets in data and MC simulation. The efficiency of a nominal \(\mathrm{JVF}\) cut of X is defined as the fraction of jets, well balanced against the Z boson, passing the cut, denoted by \(\mathcal {E}_\mathrm{MC}^\mathrm{nom}\) and \(\mathcal {E}_\mathrm{data}^\mathrm{nom}\) for MC events and data, respectively. The systematic uncertainty is derived by finding two \(\mathrm{JVF}\) cuts with \(\mathcal {E}_\mathrm{MC}\) differing from \(\mathcal {E}_\mathrm{MC}^\mathrm{nom}\) by \(\pm (\mathcal {E}_\mathrm{MC}^\mathrm{nom}-\mathcal {E}_\mathrm{data}^\mathrm{nom})\). The \(\mathrm{JVF}\) uncertainty band is then formed by re-running the analysis with these up and down variations in the \(\mathrm{JVF}\) cut value. Systematic uncertainties vary between 2 and 6 % depending on jet \(p_{\text {T}}\) and \(\eta \).
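The cut-variation procedure can be sketched as follows. The bisection search, the convention that a jet passes when its \(\mathrm{JVF}\) is at least the cut value, and all function names are assumptions made for illustration. The inputs are the \(\mathrm{JVF}\) values of hard-scatter jets in MC simulation, the nominal cut X and the measured data efficiency \(\mathcal {E}_\mathrm{data}^\mathrm{nom}\).

```python
def efficiency(jvf_values, cut):
    """Fraction of hard-scatter jets whose JVF is at least `cut`."""
    return sum(v >= cut for v in jvf_values) / len(jvf_values)


def cut_for_efficiency(jvf_values, target, lo=-1.0, hi=1.01, n_iter=60):
    """Bisect on the cut value until the efficiency falls to `target`.

    The efficiency is a non-increasing step function of the cut, so the
    result is (to bisection precision) the loosest cut whose efficiency
    does not exceed `target`. hi starts just above the maximum JVF of 1.
    """
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if efficiency(jvf_values, mid) > target:
            lo = mid  # still too efficient: tighten the cut
        else:
            hi = mid  # at or below target: try a looser cut
    return hi


def jvf_systematic_cuts(mc_jvf, nominal_cut, eff_data_nom):
    """Return the (looser, tighter) JVF cuts for the up/down variations."""
    eff_mc_nom = efficiency(mc_jvf, nominal_cut)
    delta = eff_mc_nom - eff_data_nom
    cut_a = cut_for_efficiency(mc_jvf, eff_mc_nom + delta)
    cut_b = cut_for_efficiency(mc_jvf, eff_mc_nom - delta)
    return min(cut_a, cut_b), max(cut_a, cut_b)
```

Because the efficiency is a step function of the cut, the varied cuts are only defined up to the spacing of the input \(\mathrm{JVF}\) values.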

The mean anti-\(k_{t}\) \(R=0.4\) LCW+JES jet multiplicity as a function of \(\langle \mu \rangle \) in \(Z \)+jets events for jets with \(|\eta | < 2.1\), back-to-back with the \(Z\) boson, before and after several \(|\mathrm{JVF} |\) cuts were applied to jets with \(p_{\text {T}} < 50~\mathrm {GeV}\). Results for jets with a \(p_{\text {T}} > 20~\mathrm {GeV}\), b \(p_{\text {T}} > 30~\mathrm {GeV}\) and c \(p_{\text {T}} > 40~\mathrm {GeV}\) are shown requiring at least one jet of that \(p_{\text {T}}\). To remove effects of hard-scatter modelling, the dependence on \(\langle \mu \rangle \) was fitted and the MC simulation shifted so that data and simulation agree at zero pile-up, \(\langle \mu \rangle = 0\). The upper ratio plots show results before and after applying a \(|\mathrm{JVF} |\) cut of 0.25 and the lower ratio plots show the same for a cut of 0.50. The \(\mathrm{JVF}\) uncertainty is very small when counting jets with \(p_{\text {T}} > 40~\mathrm {GeV}\)
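The shift described in the caption of Fig. 11 can be sketched as below. The caption does not state the functional form of the fit; this sketch assumes that the \(\langle \mu \rangle \) dependence of the mean jet multiplicity is fitted with a straight line by ordinary least squares, and the function names are hypothetical.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def shift_mc_to_data_at_zero_pileup(mu, data_mult, mc_mult):
    """Shift MC mean jet multiplicities so that the data and MC fits agree
    at <mu> = 0; the MC <mu> dependence (slope) is left unchanged."""
    data_intercept, _ = linear_fit(mu, data_mult)
    mc_intercept, _ = linear_fit(mu, mc_mult)
    offset = data_intercept - mc_intercept
    return [m + offset for m in mc_mult]
```

Only the intercept is matched, so any remaining data/MC difference in the slope reflects the pile-up modelling that the figure is meant to probe.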

6.2.2 Improved variables for pile-up jet vertex identification

While a \(\mathrm{JVF}\) selection is very effective in rejecting pile-up jets, it has limitations when used in higher (or varying) luminosity conditions. As the denominator of \(\mathrm{JVF}\) increases with the number of reconstructed primary vertices in the event, the mean \(\mathrm{JVF}\) for signal jets is shifted to smaller values. This explicit pile-up dependence of \(\mathrm{JVF}\) results in an \(N_{\mathrm{PV}}\)-dependent jet efficiency when a minimum \(\mathrm{JVF}\) criterion is imposed to reject pile-up jets. This pile-up sensitivity is addressed in two ways: first, by correcting \(\mathrm{JVF}\) for the explicit pile-up dependence in its denominator (\(\mathrm{corrJVF}\)), and second, by introducing a new variable defined entirely from hard-scatter observables (\(R_\mathrm {pT}\)).

The quantity \(\mathrm{corrJVF}\) is a variable similar to \(\mathrm{JVF}\), but corrected for the \(N_{\mathrm{PV}}\) dependence. It is defined as

\[ \mathrm{corrJVF} = \frac{\sum _m p_{\mathrm{T}, m}^{\mathrm{track}}(\mathrm{PV}_0)}{\sum _l p_{\mathrm{T}, l}^{\mathrm{track}}(\mathrm{PV}_0) + \frac{\sum _{n\ge 1}\sum _l p_{\mathrm{T}, l}^{\mathrm{track}}(\mathrm{PV}_n)}{(k \cdot n_\mathrm {track}^\mathrm {PU})}}, \]

where \(\sum _m{p_{\mathrm{T}, m}^{\mathrm{track}}(\mathrm{PV}_0)}\) is the scalar sum of the \(p_{\text {T}}\) of the tracks that are associated with the jet and originate from the hard-scatter vertex. The term \(\sum _{n\ge 1}\sum _l p_{\mathrm{T}, l}^{\mathrm{track}}(\mathrm{PV}_n)=p_{\text {T}} ^{\mathrm {PU}}\) denotes the scalar sum of the \(p_{\text {T}}\) of the associated tracks that originate from any of the pile-up interactions.

The \(\mathrm{corrJVF}\) variable uses a modified track-to-vertex association method that is different from the one used for \(\mathrm{JVF}\). The new selection aims to improve the efficiency for b-quark jets and consists of two steps. In the first step, the vertex reconstruction is used to assign tracks to vertices. If a track is attached to more than one vertex, priority is given to the vertex with higher \(\sum (p_{\text {T}} ^{\mathrm {track}})^2\). In the second step, if a track is not associated with any primary vertex after the first step but satisfies \(|\Delta z|<3\) mm with respect to the hard-scatter primary vertex, it is assigned to the hard-scatter primary vertex. The second step targets tracks from decays in flight of hadrons that originate from the hard-scatter but are not likely to be attached to any vertex. The \(|\Delta z|<3\) mm criterion was chosen based on the longitudinal impact parameter distribution of tracks from b-hadron decays, but no strong dependence of the performance on this particular criterion was observed when the cut value was varied by up to 1 mm. The new two-step track-to-vertex association method results in a significant increase in the hard-scatter jet efficiency at a fixed rate of fake pile-up jets, with a large performance gain for jets initiated by b-quarks.
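The two-step association can be sketched as a per-track decision. The `Track` fields, in particular `z0_mm` as the longitudinal track position compared with the vertex position, and the dictionaries of vertex properties are hypothetical inputs chosen for illustration; the \(\sum (p_{\text {T}} ^{\mathrm {track}})^2\) priority and the 3 mm window follow the text.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Track:
    z0_mm: float  # longitudinal track position along the beam axis (hypothetical field)
    fitted_vertices: List[int] = field(default_factory=list)  # PVs the vertex fit attached it to


def assign_vertex(track: Track,
                  sum_pt2: Dict[int, float],
                  vertex_z_mm: Dict[int, float],
                  hs_vertex: int,
                  dz_max_mm: float = 3.0) -> Optional[int]:
    """Return the index of the vertex the track is assigned to, or None."""
    # Step 1: use the vertex reconstruction; if the track is attached to
    # several vertices, prefer the one with the largest sum of track pT^2.
    if track.fitted_vertices:
        return max(track.fitted_vertices, key=lambda v: sum_pt2[v])
    # Step 2: an unattached track within |dz| < 3 mm of the hard-scatter
    # vertex is assigned to it (targets hadron decays in flight).
    if abs(track.z0_mm - vertex_z_mm[hs_vertex]) < dz_max_mm:
        return hs_vertex
    return None  # left unassociated
```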

To correct for the linear increase of \(\langle p_{\text {T}} ^{\mathrm {PU}}\rangle \) with the total number of pile-up tracks per event (\(n_\mathrm {track}^\mathrm {PU}\)), \(p_{\text {T}} ^{\mathrm {PU}}\) is divided by \((k \cdot n_\mathrm {track}^\mathrm {PU})\), with \(k=0.01\), in the \(\mathrm{corrJVF}\) definition. The total number of pile-up tracks per event is computed from all tracks associated with vertices other than the hard-scatter vertex. The scaling factor k is approximated by the slope of \(\langle p_{\text {T}} ^{\mathrm {PU}}\rangle \) with \(n_\mathrm {track}^\mathrm {PU} \), but the resulting discrimination between hard-scatter and pile-up jets is insensitive to the choice of k.
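Putting the pieces together, a minimal sketch of \(\mathrm{corrJVF}\) for one jet is shown below. It takes the hard-scatter and pile-up track \(p_{\text {T}}\) sums and the event-level \(n_\mathrm {track}^\mathrm {PU}\) as inputs (a hypothetical interface) and uses \(k = 0.01\). Returning \(-1\) for jets without associated tracks follows the convention stated for \(\mathrm{corrJVF}\); dropping the pile-up term when \(n_\mathrm {track}^\mathrm {PU} = 0\) is an assumption.

```python
def corr_jvf(pt_hs, pt_pu, n_track_pu, k=0.01):
    """corrJVF for one jet.

    pt_hs: scalar pT sum (GeV) of jet tracks from the hard-scatter vertex
    pt_pu: scalar pT sum (GeV) of jet tracks from any pile-up vertex
    n_track_pu: total number of pile-up tracks in the event
    Returns -1.0 for jets with no associated tracks.
    """
    if pt_hs + pt_pu == 0.0:
        return -1.0
    # Scale the pile-up contribution by k * n_track_pu to remove the
    # explicit dependence on the amount of pile-up in the event.
    pu_term = pt_pu / (k * n_track_pu) if n_track_pu > 0 else 0.0
    return pt_hs / (pt_hs + pu_term)
```

For example, a jet with 10 GeV of hard-scatter and 5 GeV of pile-up track \(p_{\text {T}}\) in an event with 1000 pile-up tracks has \(\mathrm{JVF} \approx 0.67\) but \(\mathrm{corrJVF} = 10/10.5 \approx 0.95\); doubling both the pile-up track \(p_{\text {T}}\) in the jet and \(n_\mathrm {track}^\mathrm {PU}\) leaves \(\mathrm{corrJVF}\) unchanged.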

a Distribution of \(\mathrm{corrJVF}\) for pile-up (PU) and hard-scatter (HS) jets with \(20< p_{\text {T}} < 30~\mathrm {GeV}\). b Primary-vertex dependence of the hard-scatter jet efficiency for \(20< p_{\text {T}} < 30~\mathrm {GeV}\) (solid markers) and \(30< p_{\text {T}} < 40~\mathrm {GeV}\) (open markers) jets for fixed cuts of \(\mathrm{corrJVF}\) (blue square) and \(\mathrm{JVF}\) (violet circle) such that the inclusive efficiency is \(90~\%\). The selections placed on \(\mathrm{corrJVF}\) and \(\mathrm{JVF}\), which depend on the \(p_{\text {T}}\) bin, are specified in the legend

Figure 12a shows the \(\mathrm{corrJVF}\) distribution for pile-up and hard-scatter jets in simulated dijet events. A value \(\mathrm{corrJVF} =-1\) is assigned to jets with no associated tracks. Jets with \(\mathrm{corrJVF} = -1\) are not included in the studies that follow because signed \(\mathrm{corrJVF}\) selections are used. About \(1~\%\) of hard-scatter jets with \(20< p_{\text {T}} < 30~\mathrm {GeV}\) have no associated hard-scatter tracks and thus \(\mathrm{corrJVF} =0\).

Figure 12b shows the hard-scatter jet efficiency as a function of the number of reconstructed primary vertices in the event when imposing a minimum \(\mathrm{corrJVF}\) or \(\mathrm{JVF}\) requirement such that the efficiency measured across the full range of \(N_{\mathrm{PV}}\) is 90 %. For the full range of \(N_{\mathrm{PV}}\) considered, the hard-scatter jet efficiency after a selection based on \(\mathrm{corrJVF}\) is stable at \(90\%\pm 1\%\), whereas for \(\mathrm{JVF}\) the efficiency degrades by about 20 percentage points, from 97 to 75 %. The choice of the scaling factor k in the \(\mathrm{corrJVF}\) definition does not affect the stability of the hard-scatter jet efficiency with \(N_{\mathrm{PV}}\).
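The procedure behind Fig. 12b, a single cut fixed by the \(N_{\mathrm{PV}}\)-inclusive efficiency and then applied in bins of \(N_{\mathrm{PV}}\), can be sketched as follows. The inputs are (\(N_{\mathrm{PV}}\), discriminant value) pairs for hard-scatter jets; the pass condition (value at least the cut) and the function names are assumptions.

```python
import math
from collections import defaultdict


def cut_for_inclusive_efficiency(values, eff=0.90):
    """Tightest cut that keeps at least a fraction `eff` of all jets.

    A jet passes if its value is >= the cut (assumed convention).
    """
    ordered = sorted(values, reverse=True)
    n_pass = math.ceil(eff * len(ordered) - 1e-9)  # guard against float round-up
    return ordered[n_pass - 1]


def efficiency_vs_npv(jets, cut):
    """jets: iterable of (n_pv, value) pairs for hard-scatter jets.

    Returns {n_pv: fraction of jets in that N_PV bin with value >= cut}.
    """
    n_total = defaultdict(int)
    n_pass = defaultdict(int)
    for n_pv, value in jets:
        n_total[n_pv] += 1
        n_pass[n_pv] += value >= cut
    return {n_pv: n_pass[n_pv] / n_total[n_pv] for n_pv in sorted(n_total)}
```

A discriminant whose hard-scatter distribution shrinks towards zero as \(N_{\mathrm{PV}}\) grows, as that of \(\mathrm{JVF}\) does, yields an efficiency that falls with \(N_{\mathrm{PV}}\) in this sketch, whereas one whose distribution is independent of \(N_{\mathrm{PV}}\) yields a flat efficiency.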

The variable \(R_\mathrm {pT}\) is defined as the scalar sum of the \(p_{\text {T}}\) of the tracks that are associated with the jet and originate from the hard-scatter vertex divided by the fully calibrated jet \(p_{\text {T}}\), which includes pile-up subtraction:

\[ R_\mathrm {pT} = \frac{\sum _m p_{\mathrm{T}, m}^{\mathrm{track}}(\mathrm{PV}_0)}{p_{\text {T}}^{\mathrm{jet}}}. \]

The \(R_\mathrm {pT}\) distributions for pile-up and hard-scatter jets are shown in Fig. 13a. \(R_\mathrm {pT}\) is peaked at 0 and is steeply falling for pile-up jets, since tracks from the hard-scatter vertex rarely contribute. For hard-scatter jets, however, \(R_\mathrm {pT}\) has the meaning of a charged \(p_{\text {T}}\) fraction and its mean value and spread are larger than for pile-up jets. Since \(R_\mathrm {pT}\) involves only tracks that are associated with the hard-scatter vertex, its definition is to first order independent of \(N_{\mathrm{PV}}\). Figure 13b shows the hard-scatter jet efficiency as a function of \(N_{\mathrm{PV}}\) when imposing a minimum \(R_\mathrm {pT}\) and \(\mathrm{JVF}\) requirement such that the \(N_{\mathrm{PV}}\)-inclusive efficiency is \(90~\%\). For the full range of \(N_{\mathrm{PV}}\) considered, the hard-scatter jet efficiency after a selection based on \(R_\mathrm {pT}\) is stable at \(90~\%\pm 1~\%\).
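A minimal sketch of \(R_\mathrm {pT}\) for one jet makes the absence of an explicit \(N_{\mathrm{PV}}\) dependence visible: tracks from pile-up vertices never enter. The input format (a hypothetical list of (track \(p_{\text {T}}\), vertex index) pairs, with index 0 for the hard-scatter vertex) and the function name are assumptions.

```python
def r_pt(jet_tracks, jet_pt_calibrated_gev, hs_vertex=0):
    """R_pT: scalar pT sum of the jet's hard-scatter tracks divided by the
    fully calibrated (pile-up subtracted) jet pT.

    jet_tracks: list of (pt_gev, vertex_index) pairs (hypothetical format).
    Tracks from pile-up vertices are ignored.
    """
    hs_sum = sum(pt for pt, vtx in jet_tracks if vtx == hs_vertex)
    return hs_sum / jet_pt_calibrated_gev
```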

a Distribution of \(R_\mathrm {pT}\) for pile-up (PU) and hard-scatter (HS) jets with \(20< p_{\text {T}} < 30~\mathrm {GeV}\). b Primary-vertex dependence of the hard-scatter jet efficiency for \(20< p_{\text {T}} < 30~\mathrm {GeV}\) (solid markers) and \(30< p_{\text {T}} < 40~\mathrm {GeV}\) (open markers) jets for fixed cuts of \(R_\mathrm {pT}\) (blue square) and \(\mathrm{JVF}\) (violet circle) such that the inclusive efficiency is \(90~\%\). The cut values imposed on \(R_\mathrm {pT}\) and \(\mathrm{JVF}\), which depend on the \(p_{\text {T}}\) bin, are specified in the legend

6.2.3 Jet vertex tagger

A new discriminant called the jet vertex tagger (\(\mathrm{JVT}\)) is constructed using \(R_\mathrm {pT}\) and \(\mathrm{corrJVF}\) as a two-dimensional likelihood derived using simulated dijet events and based on a k-nearest neighbour (kNN) algorithm [58]. For each point in the two-dimensional \(\mathrm{corrJVF}-R_\mathrm {pT} \) plane, the relative probability for a jet at that point to be of signal type is computed as the ratio of the number of hard-scatter jets to the number of hard-scatter plus pile-up jets found in a local neighbourhood around the point using a training sample of signal and pile-up jets with \(20< p_{\text {T}} < 50~\mathrm {GeV}\) and \(|\eta | < 2.4\). The local neighbourhood is defined dynamically as the 100 nearest neighbours around the test point using a Euclidean metric in the \(R_\mathrm {pT} \)–\(\mathrm{corrJVF} \) space, where \(\mathrm{corrJVF}\) and \(R_\mathrm {pT}\) are rescaled so that the variables have the same range.
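The neighbour-counting idea behind \(\mathrm{JVT}\) can be sketched in plain Python. This does not reproduce the kNN implementation of [58]: the min-max rescaling of both variables to [0, 1] over the training sample is one assumed way of giving them the same range, and the input format is hypothetical. The default of 100 neighbours follows the text; training jets with \(\mathrm{corrJVF} = -1\) are assumed to have been removed beforehand.

```python
import heapq
import math


def jvt_knn(corr_jvf, r_pt, training, k=100):
    """kNN estimate of the hard-scatter probability at (corr_jvf, r_pt).

    training: list of (corr_jvf, r_pt, is_hard_scatter) tuples.
    Both variables are min-max rescaled to [0, 1] using the training
    sample, so that they share the same range in the Euclidean metric.
    """
    cj_vals = [t[0] for t in training]
    rp_vals = [t[1] for t in training]
    cj_lo, cj_span = min(cj_vals), (max(cj_vals) - min(cj_vals)) or 1.0
    rp_lo, rp_span = min(rp_vals), (max(rp_vals) - min(rp_vals)) or 1.0

    def scaled(cj, rp):
        return (cj - cj_lo) / cj_span, (rp - rp_lo) / rp_span

    x0, y0 = scaled(corr_jvf, r_pt)

    def distance(sample):
        x, y = scaled(sample[0], sample[1])
        return math.hypot(x - x0, y - y0)

    # The k closest training jets define the local neighbourhood.
    neighbours = heapq.nsmallest(min(k, len(training)), training, key=distance)
    n_hs = sum(1 for s in neighbours if s[2])
    return n_hs / len(neighbours)  # hard-scatter / (hard-scatter + pile-up)
```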

Figure 14a shows the fake rate versus efficiency curves comparing the performance of the four variables \(\mathrm{JVF}\), \(\mathrm{corrJVF}\), \(R_\mathrm {pT}\) and \(\mathrm{JVT}\) when selecting a sample of jets with \(20< p_{\text {T}} < 50~\mathrm {GeV}\), \(|\eta |<2.4\) in simulated dijet events.

a Fake rate from pile-up jets versus hard-scatter jet efficiency curves for \(\mathrm{JVF}\), \(\mathrm{corrJVF}\), \(R_\mathrm {pT}\) and \(\mathrm{JVT}\). The widely used \(\mathrm{JVF}\) working points with cut values 0.25 and 0.5 are indicated with gold and green stars. b Primary vertex dependence of the hard-scatter jet efficiency for \(20< p_{\text {T}} < 30~\mathrm {GeV}\) (solid markers) and \(30< p_{\text {T}} < 40~\mathrm {GeV}\) (open markers) jets for fixed cuts of \(\mathrm{JVT}\) (blue square) and \(\mathrm{JVF}\) (violet circle) such that the inclusive efficiency is \(90~\%\)

The figure shows the fraction of pile-up jets passing a minimum \(\mathrm{JVF}\), \(\mathrm{corrJVF}\), \(R_\mathrm {pT}\) or \(\mathrm{JVT}\) requirement as a function of the signal-jet efficiency resulting from the same requirement. The \(\mathrm{JVT}\) performance is driven by \(\mathrm{corrJVF}\) (\(R_\mathrm {pT}\)) in the region of high signal-jet efficiency (high pile-up rejection). Using \(\mathrm{JVT}\), signal-jet efficiencies of 80, 90 and \(95~\%\) are achieved for pile-up fake rates of 0.4, 1.0 and \(3~\%\), respectively. When imposing cuts on \(\mathrm{JVF}\) that result in the same jet efficiencies, the pile-up fake rates are 1.3, 2.2 and \(4~\%\).
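Each point on a fake-rate versus efficiency curve corresponds to one cut value. The following minimal sketch assumes lists of discriminant values for hard-scatter and pile-up jets and a pass condition of "value at least the cut"; both are hypothetical choices, and the function names are illustrative.

```python
import math


def fake_rate_at_efficiency(hs_values, pu_values, target_eff):
    """Return (cut, pile-up fake rate) for the tightest cut that keeps at
    least `target_eff` of hard-scatter jets. A jet passes if value >= cut."""
    ordered = sorted(hs_values, reverse=True)
    n_pass = math.ceil(target_eff * len(ordered) - 1e-9)  # guard against float round-up
    cut = ordered[n_pass - 1]
    fake_rate = sum(v >= cut for v in pu_values) / len(pu_values)
    return cut, fake_rate


def fake_rate_curve(hs_values, pu_values, efficiencies):
    """Fake rate versus efficiency, one point per requested efficiency."""
    return [(eff, fake_rate_at_efficiency(hs_values, pu_values, eff)[1])
            for eff in efficiencies]
```

Comparing discriminants at equal efficiency, as done above for \(\mathrm{JVT}\) and \(\mathrm{JVF}\), amounts to calling `fake_rate_at_efficiency` with each variable's own hard-scatter and pile-up values at the same `target_eff`.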

The dependence of the hard-scatter jet efficiencies on \(N_{\mathrm{PV}}\) is shown in Fig. 14b. For the full range of \(N_{\mathrm{PV}}\) considered, the hard-scatter jet efficiencies after a selection based on \(\mathrm{JVT}\) are stable within \(1~\%\).