Abstract

This article presents measurements of \(t\bar{t}\) differential cross-sections in a fiducial phase-space region, using an integrated luminosity of 3.2 fb\(^{-1}\) of proton–proton data at a centre-of-mass energy of \(\sqrt{s} = 13\) TeV recorded by the ATLAS experiment at the LHC in 2015. Differential cross-sections are measured as a function of the transverse momentum and absolute rapidity of the top quark, and of the transverse momentum, absolute rapidity and invariant mass of the \(t\bar{t}\) system. The \(t\bar{t}\) events are selected by requiring one electron and one muon of opposite electric charge, and at least two jets, one of which must be tagged as containing a b-hadron. The measured differential cross-sections are compared to predictions of next-to-leading order generators matched to parton showers and the measurements are found to be consistent with all models within the experimental uncertainties with the exception of the Powheg-Box \(+\) Herwig++ predictions, which differ significantly from the data in both the transverse momentum of the top quark and the mass of the \(t\bar{t}\) system.

1 Introduction

The top quark is the heaviest fundamental particle in the standard model (SM) of particle physics. Understanding the production cross-section and kinematics of \(t\bar{t}\) pairs is an important test of SM predictions. Furthermore, \(t\bar{t}\) production is often an important background in searches for new physics and a detailed understanding of this process is therefore crucial.

At the large hadron collider (LHC), \(t\bar{t}\) pair production in proton–proton (pp) collisions at a centre-of-mass energy of \(\sqrt{s}=13\) TeV occurs predominantly via gluon fusion (90%) with small contributions from \(q\bar{q}\) annihilation (10%). Significant progress has been made in the precision of the calculations of the cross-section of this process, both inclusive and differential. Currently, calculations are available at next-to-next-to-leading order (NNLO) in perturbative QCD, including the resummation of next-to-next-to-leading logarithmic (NNLL) soft gluon terms [1,2,3,4,5,6,7,8,9,10,11].

Differential cross-sections for \(t\bar{t}\) production have been measured by the ATLAS [12,13,14] and CMS [15, 16] experiments, in events containing either one or two charged leptons, at \(\sqrt{s}=7\) TeV and \(\sqrt{s}=8\) TeV. Measurements of \(t\bar{t}\) differential cross-sections at \(\sqrt{s}=13\) TeV have also been made at the CMS experiment [17] in events containing one charged lepton. The integrated luminosity of 3.2 fb\(^{-1}\) of pp collision data collected by the ATLAS experiment at \(\sqrt{s}=13\) TeV allows the measurement of the differential cross-section as a function of the kinematic variables of the \(t\bar{t}\) system in a different kinematic regime compared to the previous LHC measurements. The inclusive cross-section has been measured at \(\sqrt{s}=13\) TeV by both the ATLAS [18] and CMS [19, 20] experiments and was found to be in agreement with the theoretical predictions. This article presents measurements of \(t\bar{t}\) differential cross-sections in terms of five different kinematic observables, both absolute and normalised to the fiducial cross-section. These observables are the transverse momentum of the top quark (\(p_{\text{ T }}(t)\)), the absolute rapidity of the top quark (|y(t)|), the transverse momentum of the \(t\bar{t}\) system (\(p_{\text{ T }}(t\bar{t})\)), the absolute rapidity of the \(t\bar{t}\) system (\(|y(t\bar{t})|\)), and the invariant mass of the \(t\bar{t}\) system (\(m(t\bar{t})\)). The distributions of these variables are unfolded to the particle level in a fiducial volume. The \(p_{\text{ T }}(t)\) and \(m(t\bar{t})\) observables are expected to be sensitive to the modelling of higher-order corrections in QCD, whereas the rapidity of the top quark and \(t\bar{t}\) system are expected to have sensitivity to the parton distribution functions (PDF) used in the simulations. 
The \(p_{\text{ T }}(t\bar{t})\) observable is sensitive to the amount of gluon radiation in the event and can be useful for the tuning of Monte Carlo (MC) generators. Top quarks and anti-top quarks are measured in one combined distribution for the \(p_{\text{ T }}(t)\) and |y(t)| observables, rather than studying them separately. The \(t\bar{t}\) system is reconstructed in events containing exactly one electron and one muon. Events in which a \(\tau \) lepton decays to an electron or muon are also included.

2 ATLAS detector

The ATLAS detector [21] at the LHC covers nearly the entire solid angle around the interaction point. It consists of an inner tracking detector surrounded by a thin superconducting solenoid, electromagnetic and hadronic calorimeters, and a muon spectrometer incorporating three large superconducting toroidal magnet systems. The inner-detector system is immersed in a 2 T axial magnetic field and provides charged-particle tracking in the range \(|\eta | < 2.5\) (see Footnote 1).

The high-granularity silicon pixel detector surrounds the collision region and provides four measurements per track. The closest layer, known as the Insertable B-Layer [22, 23], was added in 2014 and provides high-resolution hits at small radius to improve the tracking performance. The pixel detector is followed by the silicon microstrip tracker, which provides four three-dimensional measurement points per track. These silicon detectors are complemented by the transition radiation tracker, which enables radially extended track reconstruction up to \(|\eta | = 2.0\). The transition radiation tracker also provides electron identification information based on the fraction of hits (typically 30 in total) passing a higher charge threshold indicative of transition radiation.

The calorimeter system covers the pseudorapidity range \(|\eta | < 4.9\). Within the region \(|\eta |< 3.2\), electromagnetic calorimetry is provided by barrel and endcap high-granularity lead/liquid-argon (LAr) electromagnetic calorimeters, with an additional thin LAr presampler covering \(|\eta | < 1.8\) to correct for energy loss in material upstream of the calorimeters. Hadronic calorimetry is provided by the steel/scintillator-tile calorimeter, segmented into three barrel structures within \(|\eta | < 1.7\), and two copper/LAr hadronic endcap calorimeters that cover \(1.5< |\eta | < 3.2\). The solid angle coverage is completed with forward copper/LAr and tungsten/LAr calorimeter modules optimised for electromagnetic and hadronic measurements respectively, in the region \(3.1< |\eta | < 4.9\).

The muon spectrometer comprises separate trigger and high-precision tracking chambers measuring the deflection of muons in a magnetic field generated by superconducting air-core toroids. The precision chamber system covers the region \(|\eta | < 2.7\) with three layers of monitored drift tubes, complemented by cathode strip chambers in the forward region, where the background is highest. The muon trigger system covers the range \(|\eta | < 2.4\) with resistive-plate chambers in the barrel, and thin-gap chambers in the endcap regions.

A two-level trigger system is used to select interesting events [24, 25]. The Level-1 trigger is implemented in hardware and uses a subset of detector information to reduce the event rate to a design value of at most 100 kHz. This is followed by the software-based high-level trigger, which reduces the event rate to 1 kHz.

3 Data and simulation samples

The pp collision data used in this analysis were collected during 2015 by ATLAS and correspond to an integrated luminosity of 3.2 fb\(^{-1}\) at \(\sqrt{s} = 13\) TeV. The data were collected under stable beam conditions, with all subdetectors required to be operational. Each selected event includes additional interactions from, on average, 14 inelastic pp collisions in the same proton bunch crossing, as well as residual detector signals from previous bunch crossings with a 25 ns bunch spacing, collectively referred to as “pile-up”. Events are required to pass a single-lepton trigger, either electron or muon. Multiple triggers are used to select events: either triggers with low \(p_{\text {T}}\) thresholds of 24 GeV that utilise isolation requirements to reduce the trigger rate, or triggers with higher \(p_{\text {T}}\) thresholds of 50 GeV for muons or 60 and 120 GeV for electrons, with no isolation requirements, to increase event acceptance.

MC simulations are used to model background processes and to correct the data for detector acceptance and resolution effects. The ATLAS detector is simulated [26] using Geant 4 [27]. A “fast simulation” [28], utilising parameterised showers in the calorimeter, but with full simulation of the inner detector and muon spectrometer, is used in the samples generated to estimate \(t\bar{t}\) modelling uncertainties. Additional pp interactions are generated using Pythia 8 (v8.186) [29] and overlaid on signal and background processes in order to simulate the effect of pile-up. The MC simulations are reweighted to match the distribution of the average number of interactions per bunch crossing that are observed in data. This process is referred to as “pile-up reweighting”. The same reconstruction algorithms and analysis procedures are applied to both data and MC simulation. Corrections derived from dedicated data samples are applied to the MC simulation in order to improve agreement with data.
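The pile-up reweighting step can be illustrated with a minimal sketch. It assumes binned distributions of the average number of interactions per bunch crossing for data and MC; the function name and the toy histograms are hypothetical and are not taken from the ATLAS software.

```python
def pileup_weights(data_hist, mc_hist):
    """Per-bin weights that make the normalised MC shape match the data shape.

    Both arguments are lists of bin contents with identical binning
    (an assumption of this sketch).
    """
    data_total = sum(data_hist)
    mc_total = sum(mc_hist)
    weights = []
    for n_data, n_mc in zip(data_hist, mc_hist):
        if n_mc == 0:
            # No simulated events in this bin, so there is nothing to reweight.
            weights.append(0.0)
        else:
            weights.append((n_data / data_total) / (n_mc / mc_total))
    return weights


# Toy histograms (hypothetical numbers, for illustration only).
toy_weights = pileup_weights([10, 40, 30, 20], [25, 25, 25, 25])
```

Each simulated event would then be weighted by the entry for the bin that contains its number of interactions.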

The nominal \(t\bar{t}\) sample is simulated using the next-to-leading order (NLO) Powheg-Box (v2) matrix-element event generator [30,31,32] using Pythia 6 (v6.427) [33] for the parton shower (PS). Powheg-Box is interfaced to the CT10 [34] NLO PDF set while Pythia 6 uses the CTEQ6L1 PDF set [35]. A set of tuned parameters called the Perugia 2012 tune [36] is used in the simulation of the underlying event. The “\(h_{\mathrm {damp}}\)” parameter, which controls the \(p_{\text {T}}\) of the first additional gluon emission beyond the Born configuration, is set to the mass of the top quark (\(m_{t}\)). The main effect of this is to regulate the high-\(p_{\text {T}}\) emission against which the \(t\bar{t}\) system recoils. The choice of this \(h_{\mathrm {damp}}\) value was found to improve the modelling of the \(t\bar{t}\) system kinematics with respect to data in previous analyses [37]. In order to investigate the effects of initial- and final-state radiation, alternative Powheg-Box \(+\) Pythia 6 samples are generated with the renormalisation and factorisation scales varied by a factor of 2 (0.5) and using low (high) radiation variations of the Perugia 2012 tune and an \(h_{\mathrm {damp}}\) value of \(m_{t}\) (\(2m_{t}\)), corresponding to less (more) parton-shower radiation [37], referred to as “radLo” (“radHi”). These variations were selected to cover the uncertainties in the measurements of differential distributions in \(\sqrt{s}=7\) TeV data [12]. The \(h_{\mathrm {damp}}\) value for the low radiation sample is not decreased as it was found to disagree with previously published data. Alternative samples are generated using Powheg-Box (v2) and MadGraph5_aMC@NLO (v2.2.1) [38], referred to as MG5_aMC@NLO hereafter, both interfaced to Herwig++ (v2.7.1) [39], in order to estimate the effects of the choice of matrix-element event generator and parton-shower algorithm. 
Additional \(t\bar{t}\) samples are generated for comparisons with unfolded data using Sherpa (v2.2.0) [40], Powheg-Box (v2) \(+\) Pythia 8 as well as Powheg-Box (v2) and MG5_aMC@NLO interfaced to Herwig 7 [39, 41]. In all \(t\bar{t}\) samples, the mass of the top quark is set to 172.5 GeV. These \(t\bar{t}\) samples are described in further detail in Ref. [37].

Background processes are simulated using a variety of MC event generators. Single-top quark production in association with a W boson (Wt) is simulated using Powheg-Box v1 \(+\) Pythia 6 with the same parameters and PDF sets as those used for the nominal \(t\bar{t}\) sample and is normalised to the theoretical cross-section [42]. The higher-order overlap with \(t\bar{t}\) production is addressed using the “diagram removal” (DR) generation scheme [43]. A sample generated using an alternative “diagram subtraction” (DS) method is used to evaluate systematic uncertainties [43].

Sherpa (v2.1.1), interfaced to the CT10 PDF set, is used to model Drell–Yan production, where the dominant contribution is from \(Z/\gamma ^*\rightarrow \tau ^+\tau ^-\). For this process, Sherpa calculates matrix elements at NLO for up to two partons and at leading order (LO) for up to four partons using the OpenLoops [44] and Comix [45] matrix-element event generators. The matrix elements are merged with the Sherpa parton shower [46] using the ME \(+\) PS@NLO prescription [47]. The total cross-section is normalised to the NNLO predictions [48]. Sherpa (v2.1.1) with the CT10 PDF set is also used to simulate electroweak diboson production [49] (WW, WZ, ZZ), where both bosons decay leptonically. For these samples, Sherpa calculates matrix elements at NLO for zero additional partons, at LO for one to three additional partons (with the exception of ZZ production, for which the one additional parton is also at NLO), and using PS for all parton multiplicities of four or more. All samples are normalised using the cross-section computed by the event generator.

Events with \(t\bar{t}\) production in association with a vector boson are simulated using MG5_aMC@NLO \(+\) Pythia 8 [50], using the NNPDF2.3 PDF set and the A14 tune, as described in Ref. [51].

Background contributions containing one prompt lepton and one misidentified (“fake”) lepton, arising from either a heavy-flavour hadron decay, photon conversion, jet misidentification or light-meson decay, are estimated using samples from MC simulation. The history of the stable particles in the generator-level record is used to identify fake leptons from these processes by identifying leptons that originated from hadrons. The majority (\(\mathtt {\sim }\)90%) of fake-lepton events originate from the single-lepton \(t\bar{t}\) process, with smaller contributions arising from W + jets and \(t\bar{t}\) + vector-boson events. W + jets events are simulated using Powheg-Box + Pythia 8 with the CT10 PDF set and the AZNLO tune [52]. The t-channel single-top quark process is generated using Powheg-Box v1 + Pythia 6 with the same parameters and PDF sets as those used for the nominal \(t\bar{t}\) sample. EvtGen (v1.2.0) [53] is used for the heavy-flavour hadron decays in all samples. Other possible processes with fake leptons, such as multi-jet and Drell–Yan production, are negligible for the event selection used in this analysis.

4 Object and event selection

This analysis utilises reconstructed electrons, muons, jets and missing transverse momentum (with magnitude \(E_{\text {T}}^{\text {miss}}\)). Electron candidates are identified by matching an inner-detector track to an isolated energy deposit in the electromagnetic calorimeter, within the fiducial region of transverse momentum \(p_{\text {T}} > 25\) GeV and pseudorapidity \(|\eta | < 2.47\). Electron candidates are excluded if the calorimeter cluster is within the transition region between the barrel and the endcap of the electromagnetic calorimeter, \(1.37< |\eta | < 1.52\). Electrons are selected using a multivariate algorithm and are required to satisfy a likelihood-based quality criterion, in order to provide high efficiency and good rejection of fake electrons [54, 55]. Electron candidates must have tracks that pass the requirements of transverse impact parameter significance (see Footnote 2) \(|d_0^\text {sig}|<5\) and longitudinal impact parameter \(|z_0 \sin \theta | < 0.5\) mm. Electrons must pass isolation requirements based on inner-detector tracks and topological clusters in the calorimeter which depend on \(\eta \) and \(p_{\text {T}}\). These requirements result in an isolation efficiency of 95% for an electron \(p_{\text {T}}\) of 25 GeV and 99% for an electron \(p_{\text {T}}\) above 60 GeV when determined in simulated \(Z\rightarrow e^{+}e^{-}\) events. The fake-electron rate determined in simulated \(t\bar{t}\) events is 2%. Electrons that share a track with a muon are discarded. Double counting of electron energy deposits as jets is prevented by removing the closest jet within \(\Delta R = 0.2\) of a reconstructed electron. Following this, the electron is discarded if a jet exists within \(\Delta R = 0.4\) of the electron to ensure sufficient separation from nearby jet activity.
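The two-step electron–jet overlap removal described above can be sketched as follows. This is an illustration only: it assumes \(\Delta R = \sqrt{(\Delta \eta )^2 + (\Delta \phi )^2}\), represents objects as dictionaries with hypothetical `eta` and `phi` keys, and does not reproduce the ATLAS implementation.

```python
import math


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance, assuming dR = sqrt(d_eta^2 + d_phi^2)."""
    # Wrap the azimuthal difference into [-pi, pi).
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def _dr(a, b):
    return delta_r(a["eta"], a["phi"], b["eta"], b["phi"])


def electron_jet_overlap_removal(electrons, jets):
    """Return (kept_electrons, kept_jets) for lists of dicts with 'eta', 'phi'."""
    kept_jets = list(jets)
    # Step 1: for each electron, remove the closest jet within dR < 0.2.
    for el in electrons:
        close = [j for j in kept_jets if _dr(el, j) < 0.2]
        if close:
            nearest = min(close, key=lambda j: _dr(el, j))
            kept_jets = [j for j in kept_jets if j is not nearest]
    # Step 2: discard electrons that still have a surviving jet within dR < 0.4.
    kept_electrons = [
        el for el in electrons if all(_dr(el, j) >= 0.4 for j in kept_jets)
    ]
    return kept_electrons, kept_jets
```

The order matters: a jet removed in the first step can no longer cause its electron to be discarded in the second.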

Muon candidates are identified from muon-spectrometer tracks that match tracks in the inner detector, with \(p_{\text {T}} > 25\) GeV and \(|\eta | < 2.5\) [56]. The tracks of muon candidates are required to have a transverse impact parameter significance \(|d_0^\text {sig}|<3\) and longitudinal impact parameter \(|z_0 \sin \theta | < 0.5\) mm. Muons must satisfy quality criteria and isolation requirements based on inner-detector tracks and topological clusters in the calorimeter which depend on \(\eta \) and \(p_{\text {T}}\). These requirements reduce the contributions from fake muons and provide the same efficiency as for electrons when determined in simulated \(t\bar{t}\) events. Muons may leave energy deposits in the calorimeter that could be misidentified as a jet, so jets with fewer than three associated tracks are removed if they are within \(\Delta R = 0.4\) of a muon. Muons are discarded if they are separated from the nearest jet by \(\Delta R < 0.4\) to reduce the background from muons from heavy-flavour hadron decays inside jets.

Jets are reconstructed with the anti-\(k_t\) algorithm [57, 58], using a radius parameter of \(R = 0.4\), from topological clusters of energy deposits in the calorimeters. Jets are accepted within the range \(p_{\text {T}} > 25\) GeV and \(|\eta | < 2.5\), and are calibrated using simulation with corrections derived from data [59]. Jets likely to originate from pile-up are suppressed using a multivariate jet-vertex-tagger (JVT) [60, 61] for candidates with \(p_{\text {T}} < 60\) GeV and \(|\eta | < 2.4\). Jets are identified as candidates for containing b-hadrons using a multivariate discriminant [62], which uses track impact parameters, track invariant mass, track multiplicity and secondary vertex information to discriminate b-jets from light-quark or gluon jets (light jets). The average b-tagging efficiency is 76%, with a purity of 90%, for b-jets in simulated dileptonic \(t\bar{t}\) events.

\(E_{\text {T}}^{\text {miss}}\) is reconstructed using calibrated electrons, muons and jets [63], where the electrons and muons are required to satisfy the selection criteria above. Tracks associated with the primary vertex are used for the computation of \(E_{\text {T}}^{\text {miss}}\) from energy not associated with electrons, muons or jets. The primary vertex is defined as the vertex with the highest sum of \(p_{\text {T}}^2\) of tracks associated with it.

Signal events are selected by requiring exactly one electron and one muon of opposite electric charge, and at least two jets, at least one of which must be b-tagged. No requirements are made on the \(E_{\text {T}}^{\text {miss}}\) in the event. Using this selection, 85% of events are expected to be \(t\bar{t}\) events. The other processes that pass the signal selection are Drell–Yan (\(Z/\gamma ^{*}\rightarrow \tau ^{+}\tau ^{-}\)), diboson and single-top quark (Wt) production and fake-lepton events.
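As a compact restatement, the signal selection can be written as a single predicate. The dictionary keys `charge` and `btag` are hypothetical names chosen for this sketch, and the inputs are assumed to have already passed the object selection and overlap removal.

```python
def passes_signal_selection(electrons, muons, jets):
    """True for events with exactly one electron and one muon of opposite
    charge, at least two jets, and at least one b-tagged jet."""
    if len(electrons) != 1 or len(muons) != 1:
        return False
    if electrons[0]["charge"] * muons[0]["charge"] >= 0:
        return False  # same-sign pair
    if len(jets) < 2:
        return False
    return any(jet["btag"] for jet in jets)
```

No requirement on the missing transverse momentum appears in the function, in line with the selection described in the text.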

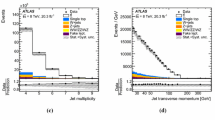

The event yields after the signal selection are listed in Table 1. The number of events observed in the signal region exceeds the prediction, but the excess is within the uncertainties. Distributions of lepton and jet \(p_{\text {T}}\) and \(E_{\text {T}}^{\text {miss}}\) are shown in Fig. 1. The \(t\bar{t}\) contribution is normalised using the predicted cross-section, calculated with the Top++2.0 program at next-to-next-to-leading order in perturbative QCD, including soft-gluon resummation to next-to-next-to-leading-logarithm order [6] and assuming a top-quark mass of 172.5 GeV. The data and prediction agree within the total uncertainty for all distributions. The \(p_{\text {T}}\) observables show a small deficit in the simulation prediction at low \(p_{\text {T}}\) which was found to be correlated with the modelling of the top-quark \(p_{\text {T}}\).

Fig. 1 Kinematic distributions for the electron \(p_{\text {T}}\) (a), muon \(p_{\text {T}}\) (b), b-jet \(p_{\text {T}}\) (c), and \(E_{\text {T}}^{\text {miss}}\) (d) for the \(e^{{\pm }}\mu ^{\mp }\) signal selection. In all figures, the rightmost bin also contains events that are above the x-axis range. The dark uncertainty bands in the ratio plots represent the statistical uncertainties while the light uncertainty bands represent the statistical, systematic and luminosity uncertainties added in quadrature. The uncertainties quoted include uncertainties from leptons, jets, missing transverse momentum, background modelling and pile-up modelling. They do not include uncertainties from PDF or signal \(t\bar{t}\) modelling.

Particle-level objects are constructed using generator-level information in the MC simulation, using a procedure intended to correspond as closely as possible to the reconstructed object and event selection. Only objects in the MC simulation with a lifetime longer than \(3 \times 10^{-11}\) s (stable) in the generator-level information are used. Particle-level electrons and muons are identified as those originating from a W-boson decay, including those via intermediate \(\tau \) leptons. The four-momentum of each electron or muon is summed with the four-momenta of all radiated photons, excluding those from hadron decays, within a cone of size \(\Delta R = 0.1\), and the resulting objects are required to have \(p_{\text {T}} > 25\) GeV and \(|\eta | < 2.5\). Particle-level jets are constructed using stable particles, with the exception of selected particle-level electrons and muons and particle-level neutrinos originating from W-boson decays, using the anti-\(k_t\) algorithm with a radius parameter of \(R = 0.4\), in the region \(p_{\text {T}} > 25\) GeV and \(|\eta | < 2.5\). Intermediate b-hadrons in the MC decay chain history are clustered in the stable-particle jets with their energies set to zero. If, after clustering, a particle-level jet contains one or more of these “ghost” b-hadrons, the jet is said to have originated from a b-quark. This technique is referred to as “ghost matching” [64]. Particle-level \(E_{\text {T}}^{\text {miss}}\) is calculated using the vector transverse-momentum sum of all neutrinos in the event, excluding those originating from hadron decays, either directly or via a \(\tau \) lepton.

Events are selected at the particle level in a fiducial phase space region with similar requirements to the phase space region at reconstruction level. Events are selected by requiring exactly one particle-level electron and one particle-level muon of opposite electric charge, and at least two particle-level jets, at least one of which must originate from a b-quark.

5 Reconstruction

The t, \(\bar{t}\), and \(t\bar{t}\) are reconstructed using both the particle-level objects and the reconstructed objects in order to measure their kinematic distributions. The reconstructed system is built using the neutrino weighting (NW) method [65].

Whereas the individual four-momenta of the two neutrinos in the final state are not directly measured in the detector, the sum of their transverse momenta is measured as \(E_{\text {T}}^{\text {miss}}\). The absence of the measured four-momenta of the two neutrinos leads to an under-constrained system that cannot be solved analytically. However, if additional constraints are placed on the mass of the top quark, the mass of the W boson, and on the pseudorapidities of the two neutrinos, the system can be solved using the following equations:

\[
\begin{aligned}
(\ell _{1,2} + \nu _{1,2})^2 &= m_W^2, \\
(\ell _{1,2} + \nu _{1,2} + b_{1,2})^2 &= m_t^2, \\
\eta (\nu ) = \eta _1, \quad \eta (\bar{\nu }) &= \eta _2,
\end{aligned}
\tag{1}
\]

where \(\ell _{1,2}\) are the charged leptons, \(\nu _{1,2}\) are the neutrinos, and \(b_{1,2}\) are the b-jets (or jets), representing four-momentum vectors, and \(\eta _{1},~\eta _{2}\) are the assumed \(\eta \) values of the two neutrinos. Since the neutrino \(\eta \)’s are unknown, many different assumptions of their values are tested. The possible values for \(\eta (\nu )\) and \(\eta (\bar{\nu })\) are scanned between \(-5\) and 5 in steps of 0.2.
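The size of this scan can be made concrete with a short sketch. It assumes that both endpoints of the range are included, which gives 51 values per neutrino and \(51 \times 51 = 2601\) combinations per event; the function name is hypothetical.

```python
from itertools import product


def eta_grid(lo=-5.0, hi=5.0, step=0.2):
    """Pseudorapidity hypotheses from lo to hi inclusive (assumed) in fixed steps."""
    n_points = int(round((hi - lo) / step)) + 1
    # Rounding suppresses floating-point noise such as 0.6000000000000001.
    return [round(lo + i * step, 10) for i in range(n_points)]


# Every (eta(nu), eta(nubar)) pair that would be tested in one event.
eta_hypotheses = list(product(eta_grid(), repeat=2))
```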

With the assumptions about \(m_t\), \(m_W\), and values for \(\eta (\nu )\) and \(\eta (\bar{\nu })\), Eq. (1) can now be solved, leading to two possible solutions for each assumption of \(\eta (\nu )\) and \(\eta (\bar{\nu })\). Only real solutions are considered. The observed \(E_{\text {T}}^{\text {miss}}\) value in each event is used to determine which solutions are more likely to be correct. A “reconstructed” \(E_{\text {T}}^{\text {miss}}\) value resulting from the neutrinos for each solution is compared to the \(E_{\text {T}}^{\text {miss}}\) observed in the event. If this reconstructed \(E_{\text {T}}^{\text {miss}}\) value matches the observed \(E_{\text {T}}^{\text {miss}}\) value in the event, then the solution with those values for \(\eta (\nu )\) and \(\eta (\bar{\nu })\) is likely to be the correct one. A weight is introduced in order to quantify this agreement:

\[
w = \exp \left( \frac{-\Delta E_x^2}{2\sigma _x^2}\right) \cdot \exp \left( \frac{-\Delta E_y^2}{2\sigma _y^2}\right) , \tag{2}
\]

where \(\Delta E_{x,y}\) is the difference between the missing transverse momentum computed from Eq. (1) and the observed missing transverse momentum in the x–y plane and \(\sigma _{x,y}\) is the resolution of the observed \(E_{\text {T}}^{\text {miss}}\) in the detector in the x–y plane. The assumption for \(\eta (\nu )\) and \(\eta (\bar{\nu })\) that gives the highest weight is used to reconstruct the t and \(\bar{t}\) for that event. The \(E_{\text {T}}^{\text {miss}}\) resolution is taken to be 15 GeV for both the x and y directions [63]. This choice has little effect on which solution is picked in each event. The highest-weight solution remains the same regardless of the choice of \(\sigma _{x,y}\).
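The following sketch illustrates why the highest-weight solution does not depend on the resolution when \(\sigma _x = \sigma _y\). It assumes the Gaussian weight commonly used in neutrino weighting, \(w = \exp (-\Delta E_x^2/2\sigma _x^2)\cdot \exp (-\Delta E_y^2/2\sigma _y^2)\); the function names and the candidate values are hypothetical.

```python
import math


def nw_weight(nu_px_sum, nu_py_sum, met_x, met_y, sigma_x=15.0, sigma_y=15.0):
    """Gaussian agreement between the summed neutrino pT of one solution
    and the observed missing transverse momentum (all in GeV)."""
    dx = nu_px_sum - met_x
    dy = nu_py_sum - met_y
    return math.exp(-dx * dx / (2.0 * sigma_x**2)) * math.exp(
        -dy * dy / (2.0 * sigma_y**2)
    )


def best_solution(solutions, met_x, met_y, sigma=15.0):
    """Pick the (px_sum, py_sum) candidate with the highest weight."""
    return max(
        solutions, key=lambda s: nw_weight(s[0], s[1], met_x, met_y, sigma, sigma)
    )


# Hypothetical candidates: summed neutrino (px, py) for three solutions.
candidates = [(30.0, -10.0), (50.0, 5.0), (42.0, -3.0)]
picked = [best_solution(candidates, 40.0, 0.0, sigma=s) for s in (5.0, 15.0, 50.0)]
```

With equal resolutions the weight is a monotonically decreasing function of \(\Delta E_x^2 + \Delta E_y^2\), so changing \(\sigma \) rescales the weights without reordering them.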

In each event, there may be more than two jets and therefore many possible combinations of jets to use in the kinematic reconstruction. In addition, there is an ambiguity in assigning a jet to the t or to the \(\bar{t}\) candidate. In events with only one b-tagged jet, the b-tagged jet and the highest-\(p_{\text {T}}\) non-b-tagged jet are used to reconstruct the t and \(\bar{t}\), whereas in events with two or more b-tagged jets, the two b-tagged jets with the highest weight from the b-tagging algorithm are used.
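The jet choice described above can be sketched as a small helper. The keys `pt`, `btag` and `btag_weight` are hypothetical names for this illustration, and the event is assumed to have already passed the signal selection (at least two jets, at least one of them b-tagged).

```python
def choose_reconstruction_jets(jets):
    """Return the two jets used as b-jet candidates in the reconstruction."""
    btagged = [j for j in jets if j["btag"]]
    if len(btagged) >= 2:
        # Two or more b-tags: take the two with the highest b-tagging weight.
        return sorted(btagged, key=lambda j: j["btag_weight"], reverse=True)[:2]
    if len(btagged) == 1:
        # One b-tag: add the highest-pT jet among the untagged ones.
        untagged = [j for j in jets if not j["btag"]]
        return [btagged[0], max(untagged, key=lambda j: j["pt"])]
    raise ValueError("event has no b-tagged jet")
```

The remaining ambiguity, which of the two jets belongs to the t and which to the \(\bar{t}\), is not resolved by this helper.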

Equation (1) cannot always be solved for a particular assumption of \(\eta (\nu )\) and \(\eta (\bar{\nu })\). This can be caused by misassignment of the input objects or through mismeasurement of the input object four-momenta. It is also possible that the assumed \(m_t\) is sufficiently different from the true value to prevent a valid solution for that event. To mitigate these effects, the assumed value of \(m_t\) is varied between the values of 168 and 178 GeV, in steps of 1 GeV, and the \(p_{\text {T}}\) of each measured jet is smeared using a Gaussian function with a width of 10% of its measured \(p_{\text {T}}\). This smearing is repeated 20 times. This allows the NW algorithm to shift the four-momenta (of the electron, muon and the two jets) and \(m_t\) assumption to see if a solution can be found. The solution which produces the highest w is taken as the reconstructed system.
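The extra hypotheses introduced by the mass scan and the jet smearing can be sketched as follows. The function names and the fixed seed are assumptions of this illustration, and the sketch only generates the inputs; solving Eq. (1) for each of them is not shown.

```python
import random


def top_mass_hypotheses(lo=168, hi=178, step=1):
    """Assumed top-quark masses in GeV, endpoints included: 11 values."""
    return list(range(lo, hi + 1, step))


def smeared_jet_pts(jet_pts, n_smear=20, rel_width=0.10, seed=12345):
    """n_smear Gaussian-smeared copies of the jet pT values (GeV).

    Each pT is drawn from a Gaussian centred on the measured value with a
    width of rel_width times that value.
    """
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    return [
        [rng.gauss(pt, rel_width * pt) for pt in jet_pts] for _ in range(n_smear)
    ]
```

Combined with the pseudorapidity scan, every smeared configuration and mass hypothesis would be passed to the solver, and the solution with the highest weight kept.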

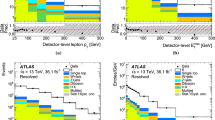

For a fraction of events, even smearing does not help to find a solution. Such events are not included in the signal selection and are counted as an inefficiency of the reconstruction. For the signal \(t\bar{t}\) MC samples, the inefficiency is \(\mathtt {\sim }\)20%. Due to the implicit assumptions about the \(m_t\) and \(m_W\), the reconstruction inefficiency found in simulated background samples is much higher (\(\mathtt {\sim }\)40% for Wt and Drell–Yan processes) and leads to a suppression of background events. Table 1 shows the event yields before and after reconstruction in the signal region. The purity of \(t\bar{t}\) events increases after reconstruction. The distributions of the experimental observables after reconstruction are shown in Fig. 2.

Particle-level t, \(\bar{t}\), and \(t\bar{t}\) objects are reconstructed following the prescriptions from the LHCTopWG, with the exception that only events with at least one b-tagged jet are allowed. Events are required to have exactly two leptons of opposite-sign electric charge (one electron and one muon), and at least two jets. The t and \(\bar{t}\) are reconstructed by considering the two particle-level neutrinos with the highest \(p_{\text {T}}\) and the two particle-level charged leptons. The charged leptons and the neutrinos are paired such that \(|m_{\nu _1,\ell _1} - m_W| + |m_{\nu _2,\ell _2} - m_W|\) is minimised. These pairs are then used as pseudo W bosons and are paired with particle-level jets such that \(|m_{W_1,j_1} - m_t| + |m_{W_2,j_2} - m_t|\) is minimised, where at least one of the jets must be b-tagged. In cases where only one particle-level b-jet is present, the particle-level jet with the highest \(p_{\text {T}}\) among the non-b-tagged jets is used as the second jet. In cases with two particle-level b-jets, both are taken. In the rare case of events with more than two particle-level b-jets, the two highest-\(p_{\text {T}}\) particle-level b-jets are used. The particle-level \(t\bar{t}\) object is constructed using the sum of the four-momenta of the particle-level t and \(\bar{t}\).
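The lepton–neutrino pairing step can be sketched as below. Four-momenta are represented as `(E, px, py, pz)` tuples in GeV, and the value used for \(m_W\) is an assumption of this illustration rather than a number taken from the analysis.

```python
import math

M_W = 80.4  # GeV; assumed value, for illustration only


def inv_mass(*vectors):
    """Invariant mass of the summed (E, px, py, pz) four-momenta."""
    e, px, py, pz = (sum(v[k] for v in vectors) for k in range(4))
    return math.sqrt(max(e * e - px * px - py * py - pz * pz, 0.0))


def pair_leptons_neutrinos(leptons, neutrinos, m_w=M_W):
    """Return the pairing ((l, nu), (l, nu)) that minimises
    |m(nu1, l1) - m_W| + |m(nu2, l2) - m_W|."""
    l1, l2 = leptons
    n1, n2 = neutrinos
    options = [((l1, n1), (l2, n2)), ((l1, n2), (l2, n1))]
    return min(
        options, key=lambda opt: sum(abs(inv_mass(l, n) - m_w) for l, n in opt)
    )
```

The same pattern, with the pseudo W bosons and the two selected jets in place of the leptons and neutrinos and \(m_t\) in place of \(m_W\), covers the second minimisation.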

Fig. 2 Kinematic distributions for the \(p_{\text {T}} (t)\) (a), |y(t)| (b), \(p_{\text {T}} (t\bar{t})\) (c), \(|y(t\bar{t})|\) (d), and \(m(t\bar{t})\) (e) after reconstruction of the \(t\bar{t}\) system. In all figures, the rightmost bin also contains events that are above the x-axis range. The uncertainty bands represent the statistical uncertainties (dark) and the statistical, systematic and luminosity uncertainties added in quadrature (light). The uncertainties quoted include uncertainties on leptons, jets, \(E_{\text {T}}^{\text {miss}}\), background and pile-up modelling, and luminosity. They do not include uncertainties on PDF or signal \(t\bar{t}\) modelling.

6 Unfolding

To obtain the absolute and normalised differential cross-sections in the fiducial phase space region (see Sect. 4) with respect to the \(t\bar{t}\) system variables, the distributions are unfolded to particle level using an iterative Bayesian method [66] implemented in the RooUnfold package [67]. In the unfolding, background-subtracted data are corrected for detector acceptance and resolution effects as well as for the efficiency to pass the event selection requirements in order to obtain the absolute differential cross-sections. The fiducial differential cross-sections are divided by the measured total cross-section, obtained by integrating over all bins in the differential distribution, in order to obtain the normalised differential cross-sections.
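The normalisation step can be sketched as follows, assuming the absolute result is stored as one value of \(\text {d}\sigma /\text {d}X\) per bin together with the bin widths; the function name and the numbers in the usage note are hypothetical.

```python
def normalise_differential(dsigma_dx, bin_widths):
    """Return (normalised values, total cross-section).

    The total is the integral over all bins, i.e. the sum of
    dsigma/dX times the bin width.
    """
    total = sum(value * width for value, width in zip(dsigma_dx, bin_widths))
    return [value / total for value in dsigma_dx], total
```

For example, values of `[2.0, 1.0, 0.5]` in bins of width `[10, 20, 40]` integrate to a total of 60, and the normalised distribution integrates to one by construction.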

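The differential cross-sections are calculated using the equation:

\[
\frac{\text {d}\sigma _{t\bar{t}}}{\text {d}X_i} = \frac{1}{\mathcal {L} \cdot \mathcal {B} \cdot \Delta X_i \cdot \epsilon _i} \cdot \sum _j R^{-1}_{ij} \cdot \epsilon _j^{\text {fid}} \cdot \left( N_j^\text {obs} - N_j^\text {bkg}\right) , \tag{3}
\]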

where i indicates the bin for the observable X, \(\Delta X_i\) is the width of bin i, \(\mathcal {L}\) is the integrated luminosity, \(\mathcal {B}\) is the branching ratio of the process (\(t\bar{t} \rightarrow b \bar{b} e^{{\pm }} \nu _e \mu ^{\mp } \nu _{\mu }\)), R is the response matrix, \(N_j^\text {obs}\) is the number of observed events in data in bin j, and \(N_j^\text {bkg}\) is the estimated number of background events in bin j. The efficiency parameter \(\epsilon _i\), indexed by the particle-level bin, corrects for events that pass the fiducial selection but not the reconstructed selection, while \(\epsilon _j^{\text {fid}}\), indexed by the reconstruction-level bin, corrects for events that pass the reconstructed selection but not the fiducial selection.

The response matrix, R, describes the detector response, and is determined by mapping the bin-to-bin migration of events from particle level to reconstruction level in the nominal \(t\bar{t}\) MC simulation. Figure 3 shows the response matrices that are used for each experimental observable, normalised such that the sum of entries in each row is equal to one. The values represent the fraction of events at particle level in bin i that are reconstructed in bin j at reconstruction level.
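A row-normalised response matrix of this kind can be built from matched simulated events as in the sketch below. It assumes each event is given as a hypothetical `(particle_bin, reco_bin)` pair and that only events passing both selections enter.

```python
def response_matrix(bin_pairs, n_bins):
    """Row-normalised migration matrix.

    Entry [i][j] is the fraction of events in particle-level bin i
    that are reconstructed in reconstruction-level bin j.
    """
    counts = [[0] * n_bins for _ in range(n_bins)]
    for particle_bin, reco_bin in bin_pairs:
        counts[particle_bin][reco_bin] += 1
    matrix = []
    for row in counts:
        row_total = sum(row)
        # Leave empty particle-level bins as rows of zeros.
        matrix.append([c / row_total if row_total else 0.0 for c in row])
    return matrix
```

The diagonal entries of the returned matrix are the quantities that the binning optimisation keeps close to 0.5.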

The binning for the observables is chosen such that approximately half of the events are reconstructed in the same bin at reconstruction level as at the particle level (corresponding to a value of approximately 0.5 in the diagonal elements of the migration matrix). Pseudo-data are constructed by randomly sampling events from the nominal \(t\bar{t}\) MC sample, to provide a number of events similar to the number expected from data. These pseudo-data are used to establish the stability of unfolding with respect to the choice of binning with pull tests. The binning choice must result in pulls consistent with a mean of zero and a standard deviation of one, within uncertainties. The choice of binning does not introduce any bias or underestimation of the statistical uncertainties. The number of iterations used in the iterative Bayesian unfolding is also optimised using pseudo-experiments. Iterations are performed until the \(\chi ^2\) per degree of freedom, calculated by comparing the unfolded pseudo-data to the corresponding generator-level distribution for that pseudo-data set, is less than unity. The optimum number of iterations is determined to be six. Tests are performed to establish that the unfolding procedure is able to successfully unfold distributions other than those predicted by the nominal MC simulation.
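The pull test mentioned above can be illustrated with a short sketch. It assumes that, for a given bin, each pseudo-experiment provides an unfolded value, its estimated statistical uncertainty and the corresponding generator-level value. The function names are hypothetical, and the uncertainties on the pull mean and width use the standard Gaussian approximations.

```python
import math
import statistics


def pulls(unfolded, errors, truth):
    """Pull of each pseudo-experiment: (unfolded - truth) / uncertainty."""
    return [(u - t) / e for u, e, t in zip(unfolded, errors, truth)]


def pull_summary(pull_values):
    """Mean and width of the pull distribution with approximate errors."""
    n = len(pull_values)
    mean = statistics.fmean(pull_values)
    width = statistics.stdev(pull_values)
    mean_err = width / math.sqrt(n)
    width_err = width / math.sqrt(2 * (n - 1))
    return mean, mean_err, width, width_err


def is_unbiased(pull_values, n_sigma=2.0):
    """Check mean ~ 0 and width ~ 1 within n_sigma of their errors."""
    mean, mean_err, width, width_err = pull_summary(pull_values)
    return (abs(mean) <= n_sigma * mean_err
            and abs(width - 1.0) <= n_sigma * width_err)
```

The tolerance `n_sigma` is an illustrative choice. A pull mean away from zero would indicate a bias, and a width away from one would indicate misestimated statistical uncertainties.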

The response matrices for the observables obtained from the nominal \(t\bar{t}\) MC, normalised by row to unity. Each bin shows the probability for a particle-level event in bin i to be observed in reconstruction-level bin j. White corresponds to a probability of zero and the darkest green to a probability of one, with the other probabilities shown in intermediate shades.

7 Systematic uncertainties

The measured differential cross-sections are affected by systematic uncertainties arising from detector response, signal modelling, and background modelling. The contributions from various sources of uncertainty are described in this section. Summaries of the sources of uncertainty for the absolute and normalised differential cross-sections for the \(p_{\text {T}}(t)\) are presented in Tables 2 and 3. The total systematic uncertainties are calculated by summing all of the individual systematic uncertainties in quadrature and the total uncertainty is calculated by summing the systematic and statistical uncertainties in quadrature. The effect of different groups of systematic uncertainties is shown graphically for \(p_{\text {T}}(t)\) in Fig. 4.
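The combination in quadrature can be written out as a small sketch. The function names are hypothetical and the inputs are per-bin fractional uncertainties.

```python
import math


def add_in_quadrature(components):
    """Square root of the sum of squares of the given components."""
    return math.sqrt(sum(c * c for c in components))


def total_uncertainty(systematics, statistical):
    """Return (total systematic, total) for one bin.

    systematics maps a source name to its uncertainty in that bin.
    """
    total_syst = add_in_quadrature(systematics.values())
    return total_syst, add_in_quadrature([total_syst, statistical])
```

Summing in quadrature treats the individual sources as uncorrelated with one another.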

7.1 Signal modelling uncertainties

The following systematic uncertainties related to the modelling of the \(t\bar{t}\) system in the MC generators are considered: the choice of matrix-element generator, the hadronisation model, the choice of PDF, and the amount of initial- and final-state radiation.

Each source is estimated by using a different MC sample in the unfolding procedure. In particular, a chosen baseline MC sample is unfolded using response matrices and corrections derived from an alternative sample. The difference between the unfolded distribution in the baseline sample and the true distribution in the baseline sample is taken as the systematic uncertainty due to the signal modelling.

The choice of NLO generator (MC generator) affects the kinematic properties of the simulated \(t\bar{t}\) events and the reconstruction efficiencies. To estimate this uncertainty, a comparison between Powheg-Box and MG5_aMC@NLO (both using Herwig++ for the parton-shower simulation) is performed, with the Powheg-Box sample used as the baseline. The resulting systematic shift is used to define a symmetric uncertainty, where deviations from the nominal sample are also considered to be mirrored in the opposite direction, resulting in equal and opposite symmetric uncertainties (called symmetrising).
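The symmetrisation step can be sketched as below. The baseline particle-level distribution and the baseline sample unfolded with the alternative response are assumed to be given as per-bin lists, and the function name is hypothetical.

```python
def symmetrise(baseline_truth, unfolded_with_alternative):
    """Mirror the observed shift in each bin around the baseline.

    Returns one (down, up) pair per bin with equal magnitude
    and opposite sign.
    """
    shifts = [abs(u - t)
              for t, u in zip(baseline_truth, unfolded_with_alternative)]
    return [(-s, s) for s in shifts]
```

For example, a shift of +2 in one bin and -3 in another becomes uncertainties of ±2 and ±3 respectively.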

To evaluate the uncertainty arising from the choice of parton-shower algorithm, a sample generated using Powheg-Box + Pythia 6 is compared to the alternative sample generated with Powheg-Box + Herwig++, where both samples use “fast simulation”. The resulting uncertainty is symmetrised. The choices of NLO generator and parton-shower algorithm are dominant sources of systematic uncertainty in all observables.

The uncertainty due to the choice of PDF is evaluated using the PDF4LHC15 prescription [68]. The prescription utilises 100 eigenvector shifts derived from fits to the CT14 [69], MMHT [69] and NNPDF3.0 [70] PDF sets (PDF4LHC 100). The nominal MC sample used in the analysis is generated using the CT10 PDF set. Therefore, the uncertainty is taken to be the standard deviation of all eigenvector variations summed in quadrature with the difference between the central values of the CT14 and CT10 PDF sets (PDF extrapolation). The resulting uncertainty is symmetrised. Taken together, the two PDF-based uncertainties are one of the dominant sources of systematic uncertainty.
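A minimal sketch of this combination for a single bin is given below. It assumes that the spread of the eigenvector variations is computed in the Hessian style, as the quadrature sum of the shifts from the central value. This is an interpretation of the wording above and not necessarily the exact procedure used in the analysis. The function name and arguments are hypothetical.

```python
import math


def pdf_uncertainty(central, eigen_variations, ct14_value, ct10_value):
    """Combine the eigenvector spread with the PDF extrapolation term.

    central          -- result with the central PDF4LHC member
    eigen_variations -- results with each eigenvector member
    ct14_value       -- result with the CT14 central PDF
    ct10_value       -- result with the CT10 central PDF
    """
    # Assumed Hessian-style spread of the eigenvector variations.
    spread = math.sqrt(sum((v - central) ** 2 for v in eigen_variations))
    # Extrapolation term: difference between the two central values.
    extrapolation = abs(ct14_value - ct10_value)
    return math.sqrt(spread ** 2 + extrapolation ** 2)
```

The returned value would then be applied symmetrically, as an equal shift up and down.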

Uncertainties arising from varying the amount of initial- and final-state radiation (radiation scale), which alters the jet multiplicity in events and the transverse momentum of the \(t\bar{t}\) system, are estimated by comparing the nominal Powheg-Box + Pythia 6 sample to samples generated with high and low radiation settings, as discussed in Sect. 3. The uncertainty is taken as the difference between the nominal and the increased radiation sample, and the nominal and the decreased radiation sample. The initial- and final-state radiation is a significant source of uncertainty in the absolute cross-section measurements but only a moderate source of uncertainty in the normalised cross-sections.

7.2 Background modelling uncertainties

The uncertainties in the background processes are assessed by repeating the full analysis using pseudo-data sets and by varying the background predictions by one standard deviation of their nominal values. The difference between the nominal pseudo-data set result and the shifted result is taken as the systematic uncertainty.

Each background prediction has an uncertainty associated with its theoretical cross-section. The cross-section for the Wt process is varied by \({\pm }\)5.3% [42], the diboson cross-section is varied by \({\pm }\)6%, and the Drell–Yan \(Z/\gamma ^*\rightarrow \tau ^+\tau ^-\) background is varied by \({\pm }\)5% based on studies of different MC generators. A 30% uncertainty is assigned to the normalisation of the fake-lepton background based on comparisons between data and MC simulation in a fake-dominated control region, which is selected in the same way as the \(t\bar{t}\) signal region but the leptons are required to have same-sign electric charges.

An additional uncertainty is evaluated for the Wt process by replacing the nominal DR sample with a DS sample, as discussed in Sect. 3, and taking the difference between the two as the systematic uncertainty.

7.3 Detector modelling uncertainties

Systematic uncertainties due to the modelling of the detector response affect the signal reconstruction efficiency, the unfolding procedure, and the background estimation. In order to evaluate their impact, the full analysis is repeated with variations of the detector modelling and the difference between the nominal and the shifted results is taken as the systematic uncertainty.

The uncertainties due to lepton isolation, trigger, identification, and reconstruction requirements are evaluated in 2015 data using a tag-and-probe method in leptonically decaying Z-boson events [56]. These uncertainties are summarised as “Lepton” in Tables 2 and 3.

The uncertainties due to the jet energy scale and resolution are extrapolated to \(\sqrt{s} = 13\) TeV using a combination of test beam data, simulation and \(\sqrt{s} = 8\) TeV dijet data [59]. To account for potential mismodelling of the JVT distribution in simulation, a 2% systematic uncertainty is applied to the jet efficiency. These uncertainties are summarised as “Jet” in Tables 2 and 3. Uncertainties due to b-tagging, summarised under “b-tagging”, are determined using \(\sqrt{s}=8\) TeV data as described in Ref. [71] for b-jets and Ref. [72] for c- and light-jets, with additional uncertainties to account for the presence of the new Insertable B-Layer detector and the extrapolation from \(\sqrt{s}=8\) TeV to \(\sqrt{s}=13\) TeV [62].

The systematic uncertainty due to the track-based terms (i.e. those tracks not associated with other reconstructed objects such as leptons and jets) used in the calculation of \(E_{\text {T}}^{\text {miss}}\) is evaluated by comparing the \(E_{\text {T}}^{\text {miss}}\) in \(Z\rightarrow \mu \mu \) events, which do not contain prompt neutrinos from the hard process, using different generators. Uncertainties associated with energy scales and resolutions of leptons and jets are propagated to the \(E_{\text {T}}^{\text {miss}}\) calculation.

The uncertainty due to the integrated luminosity is \({\pm } 2.1\)%. It is derived, following a methodology similar to that detailed in Ref. [73], from a calibration of the luminosity scale using x–y beam-separation scans performed in August 2015. The uncertainty in the pile-up reweighting is evaluated by varying the scale factors by \({\pm }1\sigma \) based on the reweighting of the average number of interactions per bunch crossing.

The uncertainties due to lepton and \(E_{\text {T}}^{\text {miss}}\) modelling are not large for any observable. For the absolute cross-sections, the uncertainty due to luminosity is not a dominant systematic uncertainty, and this uncertainty mainly cancels in the normalised cross-sections. The luminosity uncertainty does not cancel fully since it affects the background subtraction. The uncertainty due to jet energy scale and JVT is a significant source of uncertainty in the absolute cross-sections and in some of the normalised cross-sections such as for \(p_{\text {T}}(t\bar{t})\). The uncertainties due to the limited number of MC events are evaluated using pseudo-experiments. The data statistical uncertainty is evaluated using the full covariance matrix from the unfolding.

Summary of the fractional size of the uncertainties in the absolute (a) and normalised (b) fiducial differential cross-sections as a function of \(p_{\text {T}}(t)\). Systematic uncertainties which are symmetric are represented by solid lines and asymmetric uncertainties are represented by dashed or dot–dashed lines. Systematic uncertainties from common sources, such as modelling of the \(t\bar{t}\) production, have been grouped together. Uncertainties due to luminosity or background modelling are not included. The statistical and total uncertainty sizes are indicated by the shaded bands.

8 Results

The unfolded particle-level distributions for the absolute and normalised fiducial differential cross-sections are presented in Table 4. The total systematic uncertainties include all sources discussed in Sect. 7.

The unfolded normalised data are compared with different generator predictions. The significance of the differences between each generator and the data in each observable is evaluated by calculating the \(\chi ^2\) and determining p-values using the number of degrees of freedom (NDF). The \(\chi ^2\) is determined using:

\[
\chi ^2 = S^{\text {T}}_{(N-1)} \cdot \text {Cov}^{-1}_{(N-1)} \cdot S_{(N-1)}
\]

where \(\text {Cov}^{-1}\) is the inverse of the full bin-to-bin covariance matrix, including all statistical and systematic uncertainties, N is the number of bins, and S is a column vector of the differences between the unfolded data and the prediction. The NDF is equal to the number of bins minus one in the observable for the normalised cross-sections. In \(\text {Cov}\) and S, a single bin is removed from the calculation to account for the normalisation of the observable, signified by the \((N - 1)\) subscript. The \(\chi ^2\), NDF, and associated p-values are presented in Table 5 for the normalised cross-sections. Most generators studied agree with the unfolded data in each observable within the experimental uncertainties, with the exception of the Powheg-Box + Herwig++ MC simulation, which differs significantly from the data in both \(p_{\text {T}}(t)\) and \(m(t\bar{t})\).
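The \(\chi ^2\) evaluation for a normalised spectrum can be sketched with the standard library alone. This is an illustration and not the analysis code: which bin is dropped is not stated in the text, so removing the last bin by default is an assumption, and all function names are hypothetical. The linear system is solved directly instead of forming the inverse covariance matrix, which gives the same \(\chi ^2\).

```python
import math


def solve(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with pivoting."""
    n = len(rhs)
    aug = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def chi2_sf(x, ndf):
    """Upper-tail probability of a chi-square with integer ndf >= 1."""
    z = x / 2.0
    if ndf % 2 == 0:
        a, q = 1.0, math.exp(-z)             # Q(1, z)
    else:
        a, q = 0.5, math.erfc(math.sqrt(z))  # Q(1/2, z)
    # Recurrence Q(a + 1, z) = Q(a, z) + z**a * exp(-z) / Gamma(a + 1).
    for _ in range((ndf - 1) // 2):
        q += z ** a * math.exp(-z) / math.gamma(a + 1.0)
        a += 1.0
    return q


def chi2_test(data, prediction, cov, drop=-1):
    """Return (chi2, NDF, p-value) with one bin removed."""
    n = len(data)
    drop = drop % n
    keep = [i for i in range(n) if i != drop]
    s = [data[i] - prediction[i] for i in keep]
    sub = [[cov[i][j] for j in keep] for i in keep]
    chi2 = sum(si * wi for si, wi in zip(s, solve(sub, s)))
    ndf = n - 1
    return chi2, ndf, chi2_sf(chi2, ndf)
```

For a diagonal covariance matrix the result reduces to the familiar sum of squared residuals divided by the variances, taken over the retained bins.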

The normalised differential cross-sections for all observables are compared to predictions of different MC generators in Fig. 5.

The Powheg-Box generator tends to predict a harder \(p_{\text {T}}(t)\) spectrum for the top quark than is observed in data, although the data are still consistent with the prediction within the experimental uncertainties. The MG5_aMC@NLO generator appears to agree better with the observed \(p_{\text {T}}(t)\) spectrum, particularly when interfaced to Herwig++. For the \(p_{\text {T}}(t\bar{t})\) spectrum, little difference is observed between Powheg-Box + Pythia 6 and Pythia 8, and both generally predict a softer spectrum than the data but are also consistent within the experimental uncertainties. The MG5_aMC@NLO generator, interfaced to Pythia 8 or Herwig++, seems to agree with the data at low to medium values of \(p_{\text {T}}\), but MG5_aMC@NLO + Herwig++ disagrees at higher values. For the \(m(t\bar{t})\) observable, although the uncertainties are quite large, predictions from Powheg-Box interfaced to Pythia 6 or Pythia 8 and the MG5_aMC@NLO + Pythia 8 prediction seem higher than the observed data around 600 GeV. For the rapidity observables, all MC predictions appear to agree with the observed data, except for the high \(|y(t\bar{t})|\) region, where some of the predictions are slightly higher than the data.

9 Conclusions

Absolute and normalised differential top-quark pair-production cross-sections in a fiducial phase-space region are measured using 3.2 fb\(^{-1}\) of \(\sqrt{s} = 13\) TeV proton–proton collisions recorded by the ATLAS detector at the LHC in 2015. The differential cross-sections are determined in the \(e^{{\pm }}\mu ^{{\mp }}\) channel, for the transverse momentum and the absolute rapidity of the top quark, as well as the transverse momentum, the absolute rapidity, and the invariant mass of the top-quark pair. The measured differential cross-sections are compared to predictions of NLO generators matched to parton showers and the results are found to be consistent with all models within the experimental uncertainties, with the exception of Powheg-Box + Herwig++, which deviates from the data in the \(p_{\text {T}}(t)\) and \(m(t\bar{t})\) observables.

Notes

ATLAS uses a right-handed coordinate system with its origin at the nominal interaction point (IP) in the centre of the detector and the z-axis along the beam pipe. The x-axis points from the IP to the centre of the LHC ring, and the y-axis points upwards. Cylindrical coordinates \((r, \phi )\) are used in the transverse plane, \(\phi \) being the azimuthal angle around the z-axis. The pseudorapidity is defined in terms of the polar angle \(\theta \) as \(\eta = -\ln \tan (\theta / 2)\). Angular distance is measured in units of \(\Delta R \equiv \sqrt{(\Delta \eta )^{2} + (\Delta \phi )^{2}}\).

The transverse impact parameter significance is defined as \(d_0^\text {sig} = d_0 / \sigma _{d_0}\), where \(\sigma _{d_0}\) is the uncertainty in the transverse impact parameter \(d_0\).

References

M. Cacciari, M. Czakon, M. Mangano, A. Mitov, P. Nason, Top-pair production at hadron colliders with next-to-next-to-leading logarithmic soft-gluon resummation. Phys. Lett. B 710, 612–622 (2012). doi:10.1016/j.physletb.2012.03.013. arXiv:1111.5869 [hep-ph]

P. Bärnreuther, M. Czakon, A. Mitov, Percent level precision physics at the Tevatron: first genuine NNLO QCD corrections to \(q \bar{q} \rightarrow t \bar{t} + X\). Phys. Rev. Lett. 109, 132001 (2012). doi:10.1103/PhysRevLett.109.132001. arXiv:1204.5201 [hep-ph]

M. Czakon, A. Mitov, NNLO corrections to top-pair production at hadron colliders: the all-fermionic scattering channels. JHEP 12, 054 (2012). doi:10.1007/JHEP12(2012)054. arXiv:1207.0236 [hep-ph]

M. Czakon, A. Mitov, NNLO corrections to top pair production at hadron colliders: the quark-gluon reaction. JHEP 01, 080 (2013). doi:10.1007/JHEP01(2013)080. arXiv:1210.6832 [hep-ph]

M. Czakon, P. Fiedler, A. Mitov, Total top-quark pair-production cross section at hadron colliders through \(O(\alpha _S^4)\). Phys. Rev. Lett. 110, 252004 (2013). doi:10.1103/PhysRevLett.110.252004. arXiv:1303.6254 [hep-ph]

M. Czakon, A. Mitov, Top++: a program for the calculation of the top-pair cross-section at hadron colliders. Comput. Phys. Commun. 185, 2930 (2014). doi:10.1016/j.cpc.2014.06.021. arXiv:1112.5675 [hep-ph]

M. Guzzi, K. Lipka, S.-O. Moch, Top-quark pair production at hadron colliders: differential cross section and phenomenological applications with DiffTop. JHEP 01, 082 (2015). doi:10.1007/JHEP01(2015)082. arXiv:1406.0386 [hep-ph]

N. Kidonakis, NNNLO soft-gluon corrections for the top-quark \(p_T\) and rapidity distributions. Phys. Rev. D 91, 031501 (2015). doi:10.1103/PhysRevD.91.031501. arXiv:1411.2633 [hep-ph]

V. Ahrens, A. Ferroglia, M. Neubert, B.D. Pecjak, L.L. Yang, Renormalization-group improved predictions for top-quark pair production at hadron colliders. JHEP 09, 097 (2010). doi:10.1007/JHEP09(2010)097. arXiv:1003.5827 [hep-ph]

M. Czakon, D. Heymes, A. Mitov, High-precision differential predictions for top-quark pairs at the LHC. Phys. Rev. Lett. 116, 082003 (2016). doi:10.1103/PhysRevLett.116.082003. arXiv:1511.00549 [hep-ph]

M. Czakon, D. Heymes, A. Mitov, Dynamical scales for multi-TeV top-pair production at the LHC (2016). arXiv:1606.03350 [hep-ph]

ATLAS Collaboration, Measurements of normalized differential cross sections for \(t\bar{t}\) production in pp collisions at \(\sqrt{s}=7\) TeV using the ATLAS detector. Phys. Rev. D 90, 072004 (2014). doi:10.1103/PhysRevD.90.072004. arXiv:1407.0371 [hep-ex]

ATLAS Collaboration, Measurements of top-quark pair differential cross-sections in the lepton+jets channel in \(pp\) collisions at \(\sqrt{s}=8\) TeV using the ATLAS detector. Eur. Phys. J. C 76, 538 (2016). doi:10.1140/epjc/s10052-016-4366-4. arXiv:1511.04716 [hep-ex]

ATLAS Collaboration, Differential top-antitop cross-section measurements as a function of observables constructed from final-state particles using pp collisions at \(\sqrt{s} = 7\) TeV in the ATLAS detector. JHEP 06, 100 (2015). doi:10.1007/JHEP06(2015)100. arXiv:1502.05923 [hep-ex]

CMS Collaboration, Measurement of differential top-quark pair production cross sections in \(pp\) colisions at \(\sqrt{s}=7\) TeV. Eur. Phys. J. C 73, 2339 (2013). doi:10.1140/epjc/s10052-013-2339-4. arXiv:1211.2220 [hep-ex]

CMS Collaboration, Measurement of the differential cross section for top quark pair production in pp collisions at \(\sqrt{s} = 8\,\text{TeV} \). Eur. Phys. J. C 75, 542 (2015). doi:10.1140/epjc/s10052-015-3709-x. arXiv:1505.04480 [hep-ex]

CMS Collaboration, Measurement of differential cross sections for top quark pair production using the lepton+jets final state in proton–proton collisions at 13 TeV. Submitted to Phys. Rev. D (2016). arXiv:1610.04191 [hep-ex]

ATLAS Collaboration, Measurement of the \(t\bar{t}\) production cross-section using \(e\mu \) events with b-tagged jets in pp collisions at \(\sqrt{s}=13\) TeV with the ATLAS detector. Phys. Lett. B 761, 136–157 (2016). doi:10.1016/j.physletb.2016.08.019. arXiv:1606.02699 [hep-ex]

CMS Collaboration, Measurement of the top quark pair production cross section in proton–proton collisions at \(\sqrt{s} = 13\) TeV. Phys. Rev. Lett. 116, 052002 (2016). doi:10.1103/PhysRevLett.116.052002

CMS Collaboration, Measurement of the \(t\bar{t}\) production cross section using events in the e\(\mu \) final state in pp collisions at \(\sqrt{s} = 13\) TeV. Accepted by: Eur. Phys. J. C (2016). arXiv:1611.04040 [hep-ex]

ATLAS Collaboration, The ATLAS experiment at the CERN large hadron collider. JINST 3, S08003 (2008). doi:10.1088/1748-0221/3/08/S08003

ATLAS Collaboration, ATLAS insertable B-layer technical design report. ATLAS-TDR-19 (2010). http://cds.cern.ch/record/1291633

ATLAS Collaboration, ATLAS insertable B-layer technical design report addendum. ATLAS-TDR-19-ADD-1 (2012). http://cds.cern.ch/record/1451888

ATLAS Collaboration, Performance of the ATLAS trigger system in 2010. Eur. Phys. J. C 72, 1849 (2012). doi:10.1140/epjc/s10052-011-1849-1. arXiv:1110.1530 [hep-ex]

ATLAS Collaboration, 2015 start-up trigger menu and initial performance assessment of the ATLAS trigger using Run-2 data. ATL-DAQ-PUB-2016-001 (2016). http://cds.cern.ch/record/2136007

ATLAS Collaboration, The ATLAS simulation infrastructure. Eur. Phys. J. C 70, 823–874 (2010). doi:10.1140/epjc/s10052-010-1429-9. arXiv:1005.4568 [physics.ins-det]

S. Agostinelli et al., GEANT4: a simulation toolkit. Nucl. Instrum. Methods A 506, 250–303 (2003). doi:10.1016/S0168-9002(03)01368-8

ATLAS Collaboration, The simulation principle and performance of the ATLAS fast calorimeter simulation FastCaloSim. ATL-PHYS-PUB-2010-013 (2010). http://cds.cern.ch/record/1300517

T. Sjöstrand, S. Mrenna, P.Z. Skands, A brief introduction to PYTHIA 8.1. Comput. Phys. Commun. 178, 852–867 (2008). doi:10.1016/j.cpc.2008.01.036. arXiv:0710.3820 [hep-ph]

P. Nason, A New method for combining NLO QCD with shower Monte Carlo algorithms. JHEP 11, 040 (2004). doi:10.1088/1126-6708/2004/11/040. arXiv:hep-ph/0409146

S. Frixione, P. Nason, C. Oleari, Matching NLO QCD computations with parton shower simulations: the POWHEG method. JHEP 11, 070 (2007). doi:10.1088/1126-6708/2007/11/070. arXiv:0709.2092 [hep-ph]

S. Alioli, P. Nason, C. Oleari, E. Re, A general framework for implementing NLO calculations in shower Monte Carlo programs: the POWHEG BOX. JHEP 06, 043 (2010). doi:10.1007/JHEP06(2010)043. arXiv:1002.2581 [hep-ph]

T. Sjöstrand, S. Mrenna, P.Z. Skands, PYTHIA 6.4 physics and manual. JHEP 0605, 026 (2006). doi:10.1088/1126-6708/2006/05/026. arXiv:hep-ph/0603175

H.-L. Lai, M. Guzzi, J. Huston, Z. Li, P.M. Nadolsky et al., New parton distributions for collider physics. Phys. Rev. D 82, 074024 (2010). doi:10.1103/PhysRevD.82.074024. arXiv:1007.2241 [hep-ph]

D. Stump et al., Inclusive jet production, parton distributions, and the search for new physics. JHEP 10, 046 (2003). doi:10.1088/1126-6708/2003/10/046. arXiv:hep-ph/0303013

P.Z. Skands, Tuning Monte Carlo generators: the Perugia tunes. Phys. Rev. D 82, 074018 (2010). doi:10.1103/PhysRevD.82.074018. arXiv:1005.3457 [hep-ph]

ATLAS Collaboration, Simulation of top quark production for the ATLAS experiment at \(\sqrt{s} = 13\) TeV. ATL-PHYS-PUB-2016-004 (2016). http://cds.cern.ch/record/2120417

J. Alwall et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). doi:10.1007/JHEP07(2014)079. arXiv:1405.0301 [hep-ph]

M. Bahr et al., Herwig++ physics and manual. Eur. Phys. J. C 58, 639–707 (2008). doi:10.1140/epjc/s10052-008-0798-9. arXiv:0803.0883 [hep-ph]

T. Gleisberg, S. Hoeche, F. Krauss, M. Schonherr, S. Schumann et al., Event generation with SHERPA 1.1. JHEP 02, 007 (2009). doi:10.1088/1126-6708/2009/02/007. arXiv:0811.4622 [hep-ph]

J. Bellm et al., Herwig 7.0/Herwig++ 3.0 release note. Eur. Phys. J. C 76, 196 (2016). doi:10.1140/epjc/s10052-016-4018-8. arXiv:1512.01178

N. Kidonakis, Two-loop soft anomalous dimensions for single top quark associated production with a \(W^-\) or \(H^-\). Phys. Rev. D 82, 054018 (2010). doi:10.1103/PhysRevD.82.054018. arXiv:1005.4451 [hep-ph]

S. Frixione, E. Laenen, P. Motylinski, B.R. Webber, C.D. White, Single-top hadroproduction in association with a W boson. JHEP 07, 029 (2008). doi:10.1088/1126-6708/2008/07/029. arXiv:0805.3067 [hep-ph]

F. Cascioli, P. Maierhofer, S. Pozzorini, Scattering amplitudes with open loops. Phys. Rev. Lett. 108, 111601 (2012). doi:10.1103/PhysRevLett.108.111601. arXiv:1111.5206 [hep-ph]

T. Gleisberg, S. Hoeche, Comix, a new matrix element generator. JHEP 12, 039 (2008). doi:10.1088/1126-6708/2008/12/039. arXiv:0808.3674 [hep-ph]

S. Schumann, F. Krauss, A parton shower algorithm based on Catani-Seymour dipole factorisation. JHEP 03, 038 (2008). doi:10.1088/1126-6708/2008/03/038. arXiv:0709.1027 [hep-ph]

S. Hoeche, F. Krauss, M. Schonherr, F. Siegert, QCD matrix elements \(+\) parton showers: the NLO case. JHEP 04, 027 (2013). doi:10.1007/JHEP04(2013)027. arXiv:1207.5030 [hep-ph]

ATLAS Collaboration, Monte Carlo generators for the production of a \(W\) or \(Z/\gamma ^*\) Boson in association with Jets at ATLAS in Run 2. ATL-PHYS-PUB-2016-003 (2016). http://cds.cern.ch/record/2120133

ATLAS Collaboration, Multi-boson simulation for 13 TeV ATLAS analyses. ATL-PHYS-PUB-2016-002 (2016). http://cds.cern.ch/record/2119986

J. Alwall, M. Herquet, F. Maltoni, O. Mattelaer, T. Stelzer, MadGraph 5: going beyond. JHEP 06, 128 (2011). doi:10.1007/JHEP06(2011)128. arXiv:1106.0522 [hep-ph]

ATLAS Collaboration, Modelling of the \(t\bar{t}H\) and \(t\bar{t}V\) \((V=W,Z)\) processes for \(\sqrt{s}=13\) TeV ATLAS analyses. ATL-PHYS-PUB-2016-005 (2016). http://cds.cern.ch/record/2120826

ATLAS Collaboration, Measurement of the \(Z/\gamma ^*\) boson transverse momentum distribution in \(pp\) collisions at \(\sqrt{s} = 7\) TeV with the ATLAS detector. JHEP 09, 145 (2014). doi:10.1007/JHEP09(2014)145. arXiv:1406.3660 [hep-ex]

D.J. Lange, The EvtGen particle decay simulation package. Nucl. Instrum. Methods A 462, 152–155 (2001). doi:10.1016/S0168-9002(01)00089-4

ATLAS Collaboration, Electron reconstruction and identification efficiency measurements with the ATLAS detector using the 2011 LHC proton–proton collision data. Eur. Phys. J. C 74, 2941 (2014). doi:10.1140/epjc/s10052-014-2941-0. arXiv:1404.2240 [hep-ex]

ATLAS Collaboration, Electron efficiency measurements with the ATLAS detector using the 2015 LHC proton–proton collision data. ATLAS-CONF-2016-024 (2016). http://cdsweb.cern.ch/record/2157687

ATLAS Collaboration, Muon reconstruction performance of the ATLAS detector in proton–proton collision data at \(\sqrt{s} =13\) TeV. Eur. Phys. J. C 76, 292 (2016). doi:10.1140/epjc/s10052-016-4120-y. arXiv:1603.05598 [hep-ex]

M. Cacciari, G.P. Salam, Dispelling the \(N^{3}\) myth for the \(k_t\) jet-finder. Phys. Lett. B 641, 57–61 (2006). doi:10.1016/j.physletb.2006.08.037. arXiv:hep-ph/0512210

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_t\) jet clustering algorithm. JHEP 04, 063 (2008). doi:10.1088/1126-6708/2008/04/063. arXiv:0802.1189 [hep-ph]

ATLAS Collaboration, Jet calibration and systematic uncertainties for jets reconstructed in the ATLAS detector at \(\sqrt{s}=13\) TeV. ATL-PHYS-PUB-2015-015 (2015). http://cds.cern.ch/record/2037613

ATLAS Collaboration, Tagging and suppression of pileup jets with the ATLAS detector. ATLAS-CONF-2014-018 (2014). http://cds.cern.ch/record/1700870

ATLAS Collaboration, Performance of pile-up mitigation techniques for jets in \(pp\) collisions at \(\sqrt{s} = 8\) TeV using the ATLAS detector. CERN-PH-EP-2015-20 (2015). arXiv:1510.03823 [hep-ex]

ATLAS Collaboration, Expected performance of the ATLAS \(b\)-tagging algorithms in Run-2. ATL-PHYS-PUB-2015-022 (2015). http://cds.cern.ch/record/2037697

ATLAS Collaboration, Performance of missing transverse momentum reconstruction for the ATLAS detector in the first proton–proton collisions at \(\sqrt{s}= 13\) TeV. ATL-PHYS-PUB-2015-027 (2015). http://cds.cern.ch/record/2037904

M. Cacciari, G.P. Salam, Pileup subtraction using jet areas. Phys. Lett. B 659, 119–126 (2008). doi:10.1016/j.physletb.2007.09.077. arXiv:0707.1378 [hep-ph]

D0 Collaboration, Measurement of the top quark mass using dilepton events. Phys. Rev. Lett. 80, 2063–2068 (1998). doi:10.1103/PhysRevLett.80.2063. arXiv:hep-ex/9706014

G. D’Agostini, A multidimensional unfolding method based on Bayes’ theorem. Nucl. Instrum. Methods A 362, 487–498 (1995). doi:10.1016/0168-9002(95)00274-X

T. Adye, Unfolding algorithms and tests using RooUnfold. In Proceedings of the PHYSTAT 2011 Workshop, CERN, Geneva, Switzerland, January 2011, CERN-2011-006, pp. 313–318. arXiv:1105.1160 [physics.data-an]

J. Butterworth et al., PDF4LHC recommendations for LHC Run II. J. Phys. G 43, 023001 (2016). doi:10.1088/0954-3899/43/2/023001. arXiv:1510.03865 [hep-ph]

S. Dulat et al., New parton distribution functions from a global analysis of quantum chromodynamics. Phys. Rev. D 93, 033006 (2016). doi:10.1103/PhysRevD.93.033006. arXiv:1506.07443 [hep-ph]

NNPDF Collaboration, R.D. Ball et al., Parton distributions for the LHC Run II. JHEP 04, 040 (2015). doi:10.1007/JHEP04(2015)040. arXiv:1410.8849 [hep-ph]

ATLAS Collaboration, Calibration of \(b\)-tagging using dileptonic top pair events in a combinatorial likelihood approach with the ATLAS experiment. ATLAS-CONF-2014-004 (2014). http://cds.cern.ch/record/1664335

ATLAS Collaboration, Calibration of the performance of \(b\)-tagging for \(c\) and light-flavour jets in the 2012 ATLAS data. ATLAS-CONF-2014-046 (2014). http://cds.cern.ch/record/1741020

ATLAS Collaboration, Luminosity determination in pp collisions at \(\sqrt{s} = 8\) TeV using the ATLAS detector at the LHC (2016). arXiv:1608.03953 [hep-ex]

ATLAS Collaboration, ATLAS computing acknowledgements 2016–2017. ATL-GEN-PUB-2016-002 (2016). http://cds.cern.ch/record/2202407

Acknowledgements

We thank CERN for the very successful operation of the LHC, as well as the support staff from our institutions without whom ATLAS could not be operated efficiently. We acknowledge the support of ANPCyT, Argentina; YerPhI, Armenia; ARC, Australia; BMWFW and FWF, Austria; ANAS, Azerbaijan; SSTC, Belarus; CNPq and FAPESP, Brazil; NSERC, NRC and CFI, Canada; CERN; CONICYT, Chile; CAS, MOST and NSFC, China; COLCIENCIAS, Colombia; MSMT CR, MPO CR and VSC CR, Czech Republic; DNRF and DNSRC, Denmark; IN2P3-CNRS, CEA-DSM/IRFU, France; SRNSF, Georgia; BMBF, HGF, and MPG, Germany; GSRT, Greece; RGC, Hong Kong SAR, China; ISF, I-CORE and Benoziyo Center, Israel; INFN, Italy; MEXT and JSPS, Japan; CNRST, Morocco; NWO, Netherlands; RCN, Norway; MNiSW and NCN, Poland; FCT, Portugal; MNE/IFA, Romania; MES of Russia and NRC KI, Russian Federation; JINR; MESTD, Serbia; MSSR, Slovakia; ARRS and MIZŠ, Slovenia; DST/NRF, South Africa; MINECO, Spain; SRC and Wallenberg Foundation, Sweden; SERI, SNSF and Cantons of Bern and Geneva, Switzerland; MOST, Taiwan; TAEK, Turkey; STFC, United Kingdom; DOE and NSF, United States of America. In addition, individual groups and members have received support from BCKDF, the Canada Council, CANARIE, CRC, Compute Canada, FQRNT, and the Ontario Innovation Trust, Canada; EPLANET, ERC, ERDF, FP7, Horizon 2020 and Marie Skłodowska-Curie Actions, European Union; Investissements d’Avenir Labex and Idex, ANR, Région Auvergne and Fondation Partager le Savoir, France; DFG and AvH Foundation, Germany; Herakleitos, Thales and Aristeia programmes co-financed by EU-ESF and the Greek NSRF; BSF, GIF and Minerva, Israel; BRF, Norway; CERCA Programme Generalitat de Catalunya, Generalitat Valenciana, Spain; the Royal Society and Leverhulme Trust, United Kingdom. 
The crucial computing support from all WLCG partners is acknowledged gratefully, in particular from CERN, the ATLAS Tier-1 facilities at TRIUMF (Canada), NDGF (Denmark, Norway, Sweden), CC-IN2P3 (France), KIT/GridKA (Germany), INFN-CNAF (Italy), NL-T1 (Netherlands), PIC (Spain), ASGC (Taiwan), RAL (UK) and BNL (USA), the Tier-2 facilities worldwide and large non-WLCG resource providers. Major contributors of computing resources are listed in Ref. [74].

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Aaboud, M., Aad, G., Abbott, B. et al. Measurements of top-quark pair differential cross-sections in the \(e\mu \) channel in pp collisions at \(\sqrt{s} = 13\) TeV using the ATLAS detector. Eur. Phys. J. C 77, 292 (2017). https://doi.org/10.1140/epjc/s10052-017-4821-x

DOI: https://doi.org/10.1140/epjc/s10052-017-4821-x