Abstract

A search for new phenomena in final states containing an \(e^+e^-\) or \(\mu ^+\mu ^-\) pair, jets, and large missing transverse momentum is presented. This analysis makes use of proton–proton collision data with an integrated luminosity of \(36.1~\mathrm {fb}^{-1}\), collected during 2015 and 2016 at a centre-of-mass energy \(\sqrt{s} = 13~\hbox {TeV}\) with the ATLAS detector at the Large Hadron Collider. The search targets the pair production of supersymmetric coloured particles (squarks or gluinos) and their decays into final states containing an \(e^+e^-\) or \(\mu ^+\mu ^-\) pair and the lightest neutralino (\(\tilde{\chi }_1^0\)) via one of two next-to-lightest neutralino (\(\tilde{\chi }_2^0\)) decay mechanisms: \(\tilde{\chi }_2^0 \rightarrow Z \tilde{\chi }_1^0\), where the Z boson decays leptonically leading to a peak in the dilepton invariant mass distribution around the Z boson mass; and \(\tilde{\chi }_2^0 \rightarrow \ell ^+\ell ^- \tilde{\chi }_1^0\) with no intermediate \(\ell ^+\ell ^-\) resonance, yielding a kinematic endpoint in the dilepton invariant mass spectrum. The data are found to be consistent with the Standard Model expectation. Results are interpreted using simplified models, and exclude gluinos and squarks with masses as large as 1.85 and 1.3 \(\text {Te}\text {V}\) at 95% confidence level, respectively.


1 Introduction

Supersymmetry (SUSY) [1,2,3,4,5,6] is an extension to the Standard Model (SM) that introduces partner particles (called sparticles), which differ by half a unit of spin from their SM counterparts. For models with R-parity conservation [7], strongly produced sparticles would be pair-produced and are expected to decay into quarks or gluons, sometimes leptons, and the lightest SUSY particle (LSP), which is stable. The LSP is assumed to be weakly interacting and thus is not detected, resulting in events with potentially large missing transverse momentum (\(\varvec{ p }_{\text {T}}^{\text {miss}}\), with magnitude \(E_{\text {T}}^{\text {miss}}\)). In such a scenario the LSP could be a dark-matter candidate [8, 9].

For SUSY models to present a solution to the SM hierarchy problem [10,11,12,13], the partners of the gluons (gluinos, \(\tilde{g}\)), top quarks (top squarks, \(\tilde{t}_{\mathrm {L}}\) and \(\tilde{t}_{\mathrm {R}}\)) and Higgs bosons (higgsinos, \(\tilde{h}\)) should be close to the \(\text {Te}\text {V}\) scale. In this case, strongly interacting sparticles could be produced at a high enough rate to be detected by the experiments at the Large Hadron Collider (LHC).

Final states containing same-flavour opposite-sign (SFOS) lepton pairs may arise from the cascade decays of squarks and gluinos via several mechanisms. Decays via intermediate neutralinos (\({\tilde{\chi }}_{i}^{0}\)), which are the mass eigenstates formed from the linear superpositions of higgsinos and the superpartners of the electroweak gauge bosons, can result in SFOS lepton pairs being produced in the decay \(\tilde{\chi }_{2}^{0} \rightarrow \ell ^{+}\ell ^{-} \tilde{\chi }_{1}^{0}\). The index \(i=1,\ldots ,4\) orders the neutralinos according to their mass from the lightest to the heaviest. In such a scenario the lightest neutralino, \(\tilde{\chi }_1^0\), is the LSP. The nature of the \(\tilde{\chi }_2^0\) decay depends on the mass difference \(\Delta m_\chi \equiv m_{\tilde{\chi }_{2}^{0}} - m_{\tilde{\chi }_{1}^{0}}\), the composition of the charginos and neutralinos, and on whether there are additional sparticles with masses less than \(m_{\tilde{\chi }_{2}^{0}}\) that could be produced in the decay. In the case where \(\Delta m_\chi >m_Z\), SFOS lepton pairs may be produced in the decay \(\tilde{\chi }_{2}^{0} \rightarrow Z \tilde{\chi }_{1}^{0} \rightarrow \ell ^{+}\ell ^{-} \tilde{\chi }_{1}^{0}\), resulting in a peak in the invariant mass distribution at \(m_{\ell \ell } \approx m_Z\). For \(\Delta m_\chi < m_Z\), the decay \(\tilde{\chi }_{2}^{0} \rightarrow Z^* \tilde{\chi }_{1}^{0} \rightarrow \ell ^{+}\ell ^{-} \tilde{\chi }_{1}^{0}\) leads to a rising \(m_{\ell \ell }\) distribution with a kinematic endpoint (a so-called “edge”), the position of which is given by \(m_{\ell \ell }^{\text {max}}=\Delta m_\chi < m_Z\), below the Z boson mass peak. 
In addition, if there are sleptons (\(\tilde{\ell }\), the partner particles of the SM leptons) with masses less than \(m_{\tilde{\chi }_{2}^{0}}\), the \(\tilde{\chi }_2^0\) could decay via \(\tilde{\chi }_{2}^{0} \rightarrow \tilde{\ell }^{\pm }\ell ^{\mp } \rightarrow \ell ^{+}\ell ^{-} \tilde{\chi }_{1}^{0}\), also leading to a kinematic endpoint, but with a different position given by \(m_{\ell \ell }^{\mathrm {max}} = \sqrt{ (m^2_{\tilde{\chi }_2^0}-m^2_{\tilde{\ell }})(m^2_{\tilde{\ell }}-m^2_{\tilde{\chi }_1^0}) / m^2_{\tilde{\ell }}}\). This endpoint may lie below, on, or above the Z boson mass peak, depending on the values of the relevant sparticle masses. In the two scenarios with a kinematic endpoint, if \(\Delta m_\chi \) is small, leptons with low transverse momentum (\(p_{\text {T}}\)) are expected, motivating a search that specifically targets low-\(p_{\text {T}}\) leptons. Section 3 and Fig. 1 provide details of the signal models considered.
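The endpoint expressions for the three-body and slepton-mediated \(\tilde{\chi }_2^0\) decays, together with the on-Z case, can be evaluated with the short Python sketch below. It is illustrative only: the function names are hypothetical, \(m_Z = 91.1876\) GeV is the PDG value assumed here rather than a number quoted in this paper, and for \(\Delta m_\chi > m_Z\) without light sleptons the sketch simply reports a Z peak.

```python
import math

M_Z = 91.1876  # GeV; PDG Z mass, assumed here (not quoted in the text)


def edge_three_body(m_chi2: float, m_chi1: float) -> float:
    """m_ll endpoint for chi2 -> Z* chi1 -> l+ l- chi1 (Delta m_chi < m_Z)."""
    return m_chi2 - m_chi1


def edge_via_slepton(m_chi2: float, m_slep: float, m_chi1: float) -> float:
    """m_ll endpoint for the two-step cascade chi2 -> slepton l -> l+ l- chi1."""
    if not m_chi2 > m_slep > m_chi1:
        raise ValueError("requires m_chi2 > m_slepton > m_chi1")
    return math.sqrt((m_chi2**2 - m_slep**2) * (m_slep**2 - m_chi1**2)) / m_slep


def dilepton_feature(m_chi2, m_chi1, m_slep=None):
    """Return ("edge", position) or ("Z peak", m_Z); all masses in GeV."""
    if m_slep is not None:
        return ("edge", edge_via_slepton(m_chi2, m_slep, m_chi1))
    if m_chi2 - m_chi1 > M_Z:
        return ("Z peak", M_Z)
    return ("edge", edge_three_body(m_chi2, m_chi1))


print(dilepton_feature(300.0, 250.0))         # ('edge', 50.0)
print(dilepton_feature(500.0, 100.0))         # ('Z peak', 91.1876)
print(dilepton_feature(500.0, 100.0, 300.0))  # edge at about 377.1 GeV
```

The last call shows that a slepton-mediated edge can sit far above \(m_Z\) even when the three-body case would give an on-Z peak, which is why the full \(m_{\ell \ell }\) range is searched.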

This paper reports on a search for SUSY, where either an on-Z mass peak or an edge occurs in the invariant mass distribution of SFOS ee and \(\mu \mu \) lepton pairs. The search is performed using \(36.1~\mathrm {fb}^{-1}\) of pp collision data at \(\sqrt{s}=13\) \(\text {Te}\text {V}\) recorded during 2015 and 2016 by the ATLAS detector at the LHC. In order to cover compressed scenarios, i.e. where \(\Delta m_\chi \) is small, a dedicated “low-\(p_{\text {T}}\) lepton search” is performed in addition to the relatively “high-\(p_{\text {T}}\) lepton searches” in this channel, which have been performed previously by the CMS [14] and ATLAS [15] collaborations. Compared to the \(14.7~{\hbox {fb}^{-1}}\) ATLAS search [15], this analysis extends the reach in \(m_{\tilde{g}/\tilde{q}}\) by several hundred \(\text {Ge}\text {V}\) and improves the sensitivity of the search into the compressed region. Improvements are due to the optimisations for \(\sqrt{s}=13\) \(\text {Te}\text {V}\) collisions and to the addition of the low-\(p_{\text {T}}\) search, which lowers the lepton \(p_{\text {T}}\) threshold from \(>25\) to \(>7~\text {Ge}\text {V}\).

2 ATLAS detector

The ATLAS detector [16] is a general-purpose detector with almost \(4\pi \) coverage in solid angle. The detector comprises an inner tracking detector, a system of calorimeters, and a muon spectrometer.

The inner tracking detector (ID) is immersed in a 2 T magnetic field provided by a superconducting solenoid and allows charged-particle tracking out to \(|\eta |=2.5\). It includes silicon pixel and silicon microstrip tracking detectors inside a straw-tube tracking detector. In 2015, a new innermost layer of silicon pixels was added to the detector, improving tracking and b-tagging performance [17].

High-granularity electromagnetic and hadronic calorimeters cover the region \(|\eta |<4.9\). All the electromagnetic calorimeters, as well as the endcap and forward hadronic calorimeters, are sampling calorimeters with liquid argon as the active medium and lead, copper, or tungsten as the absorber. The central hadronic calorimeter is a sampling calorimeter with scintillator tiles as the active medium and steel as the absorber.

The muon spectrometer uses several detector technologies to provide precision tracking out to \(|\eta |=2.7\) and triggering in \(|\eta |<2.4\), making use of a system of three toroidal magnets.

The ATLAS detector has a two-level trigger system, with the first level implemented in custom hardware and the second level implemented in software. This trigger system reduces the event rate from up to 40 MHz to about 1 kHz [18].

3 SUSY signal models

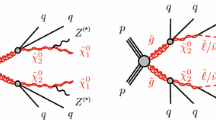

SUSY-inspired simplified models are considered as signal scenarios for this analysis. In all of these models, squarks or gluinos are directly pair-produced, decaying via an intermediate neutralino, \(\tilde{\chi }_2^0\), into the LSP (\(\tilde{\chi }_1^0\)). All sparticles not directly involved in the decay chains considered are assigned very high masses, such that they are decoupled. Three example decay topologies are shown in Fig. 1. For all models with gluino pair production, a three-body decay for \(\tilde{g}\rightarrow q \bar{q} \tilde{\chi }_2^0\) is assumed. Signal models are generated on a grid over a two-dimensional space, varying the gluino or squark mass and the mass of either the \(\tilde{\chi }_2^0\) or the \(\tilde{\chi }_1^0\).

The first model considered with gluino production, illustrated on the left of Fig. 1, is the so-called slepton model, which assumes that the sleptons are lighter than the \(\tilde{\chi }_{2}^{0}\). The \(\tilde{\chi }_{2}^{0}\) then decays either as \(\tilde{\chi }_{2}^{0} \rightarrow \tilde{\ell }^{\mp }\ell ^{\pm }; \tilde{\ell } \rightarrow \ell \tilde{\chi }_{1}^{0}\) or as \(\tilde{\chi }_{2}^{0} \rightarrow \tilde{\nu }\nu ; \tilde{\nu } \rightarrow \nu \tilde{\chi }_{1}^{0}\), the two decay channels having equal probability. In these decays, \(\tilde{\ell }\) can be \(\tilde{e}\), \(\tilde{\mu }\) or \(\tilde{\tau }\) and \(\tilde{\nu }\) can be \(\tilde{\nu }_e\), \(\tilde{\nu }_\mu \) or \(\tilde{\nu }_\tau \) with equal probability. The masses of the superpartners of the left-handed leptons are set to the average of the \(\tilde{\chi }_2^0\) and \(\tilde{\chi }_1^0\) masses, while the superpartners of the right-handed leptons are decoupled. The three slepton flavours are taken to be mass-degenerate. The kinematic endpoint in the invariant mass distribution of the two final-state leptons in this decay chain can occur at any mass, highlighting the need to search over the full dilepton mass distribution. The endpoint feature of this decay topology provides a generic signature for many models of beyond-the-SM (BSM) physics.
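The branching assumptions of the slepton model fix how often a single \(\tilde{\chi }_2^0\) decay yields an \(e^+e^-\) or \(\mu ^+\mu ^-\) pair directly. The exact-arithmetic sketch below works this out; it ignores electrons and muons from \(\tau \) decays, which is a simplification made here, not a statement from the analysis.

```python
from fractions import Fraction

# Assumptions taken from the slepton-model description: chi2 -> slepton + lepton
# and chi2 -> sneutrino + neutrino each have probability 1/2, and the slepton
# flavour is e, mu or tau with probability 1/3 each. Leptons from tau decays
# are ignored in this sketch.
P_CHARGED_SLEPTON = Fraction(1, 2)
P_FLAVOUR = Fraction(1, 3)

# Probability that one chi2 decay yields an e+e- or mu+mu- pair (e or mu flavour).
p_ee_or_mumu = P_CHARGED_SLEPTON * 2 * P_FLAVOUR

# Gluinos are pair-produced, so each event contains two chi2 decays.
p_at_least_one_pair = 1 - (1 - p_ee_or_mumu) ** 2
p_both_pairs = p_ee_or_mumu ** 2

print(p_ee_or_mumu, p_at_least_one_pair, p_both_pairs)  # 1/3 5/9 1/9
```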

In the \(Z^{(*)}\) model, shown in the centre of Fig. 1, the \(\tilde{\chi }_{2}^{0}\) from the gluino decay decays as \(\tilde{\chi }_{2}^{0} \rightarrow Z^{(*)}\tilde{\chi }_{1}^{0}\). In both the slepton and \(Z^{(*)}\) models, the \(\tilde{g}\) and \(\tilde{\chi }_1^0\) masses are free parameters that are varied to produce the two-dimensional grid of signal models. For the gluino decays, \(\tilde{g}\rightarrow q \bar{q} \tilde{\chi }_2^0\), both models have equal branching fractions for \(q=u,d,c,s,b\). The \(\tilde{\chi }_2^0\) mass is set to the average of the gluino and \(\tilde{\chi }_1^0\) masses. The mass splittings are chosen to enhance the topological differences between these simplified models and other models with only one intermediate particle between the gluino and the LSP [19].
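For the slepton and \(Z^{(*)}\) models, the mass relations in the text leave only \(m_{\tilde{g}}\) and \(m_{\tilde{\chi }_1^0}\) free. The minimal Python sketch below shows how one grid point fixes the remaining masses; the class name is hypothetical, \(m_Z = 91.1876\) GeV is an assumed PDG value, and the slepton mass follows the slepton-model rule (average of the \(\tilde{\chi }_2^0\) and \(\tilde{\chi }_1^0\) masses).

```python
from dataclasses import dataclass

M_Z = 91.1876  # GeV; PDG value, assumed (not quoted in the text)


@dataclass
class SpectrumPoint:
    """One (m_gluino, m_chi1) grid point of the slepton or Z(*) model, in GeV."""
    m_gluino: float
    m_chi1: float

    @property
    def m_chi2(self) -> float:
        # chi2 mass is the average of the gluino and chi1 masses
        return 0.5 * (self.m_gluino + self.m_chi1)

    @property
    def m_slepton(self) -> float:
        # slepton model only: left-handed slepton mass is the chi2/chi1 average
        return 0.5 * (self.m_chi2 + self.m_chi1)

    @property
    def delta_m_chi(self) -> float:
        return self.m_chi2 - self.m_chi1

    def z_is_on_shell(self) -> bool:
        # Z(*) model: the Z is on-shell when the chi2-chi1 splitting exceeds m_Z
        return self.delta_m_chi > M_Z


point = SpectrumPoint(m_gluino=1000.0, m_chi1=200.0)
print(point.m_chi2, point.m_slepton, point.delta_m_chi, point.z_is_on_shell())
# 600.0 400.0 400.0 True
```

Because \(\Delta m_\chi = (m_{\tilde{g}} - m_{\tilde{\chi }_1^0})/2\) under this rule, compressed points near the diagonal of the grid fall into the off-shell \(Z^*\) regime.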

Three additional models with decay topologies as illustrated in the middle and right diagrams of Fig. 1, but with exclusively on-shell Z bosons in the decay, are also considered. For two of these models, the LSP mass is set to 1 \(\text {Ge}\text {V}\), inspired by SUSY scenarios with a low-mass LSP (e.g. generalised gauge mediation [20,21,22]). Sparticle mass points are generated across the \(\tilde{g}-\tilde{\chi }_2^0\) (or \(\tilde{q}-\tilde{\chi }_2^0\)) plane. These two models are referred to here as the \(\tilde{g}-\tilde{\chi }_2^0\) on-shell and \(\tilde{q}-\tilde{\chi }_2^0\) on-shell models, respectively. The third model is based on topologies that could be realised in the 19-parameter phenomenological supersymmetric Standard Model (pMSSM) [23, 24] with potential LSP masses of 100 \(\text {Ge}\text {V}\) or more. In this case, the \(\tilde{\chi }_2^0\) mass is chosen to be 100 \(\text {Ge}\text {V}\) above the \(\tilde{\chi }_1^0\) mass, which can maximise the branching fraction to Z bosons. Sparticle mass points are generated across the \(\tilde{g}-\tilde{\chi }_1^0\) plane, and this model is thus referred to as the \(\tilde{g}-\tilde{\chi }_1^0\) on-shell model. For the two models with gluino pair production, the branching fractions for \(q=u,d,c,s\) are each 25%. For the model involving squark pair production, the superpartners of the u-, d-, c- and s-quarks have the same mass, with the superpartners of the b- and t-quarks being decoupled. A summary of all signal models considered in this analysis can be found in Table 1.

Fig. 1 Example decay topologies for three of the simplified models considered. The left two decay topologies involve gluino pair production, with the gluinos following an effective three-body decay for \(\tilde{g}\rightarrow q \bar{q} \tilde{\chi }_2^0\), with \(\tilde{\chi }_{2}^{0} \rightarrow \tilde{\ell }^{\mp }\ell ^{\pm } / \tilde{\nu }\nu \) for the “slepton model” (left) and \(\tilde{\chi }_2^0\rightarrow Z^{(*)} \tilde{\chi }_1^0\) in the \(Z^{(*)}\), \(\tilde{g}-\tilde{\chi }_2^0\) or \(\tilde{g}-\tilde{\chi }_1^0\) model (middle). The diagram on the right illustrates the \(\tilde{q}-\tilde{\chi }_2^0\) on-shell model, where squarks are pair-produced, followed by the decay \(\tilde{q}\rightarrow q \tilde{\chi }_2^0\), with \(\tilde{\chi }_2^0\rightarrow Z \tilde{\chi }_1^0\).

4 Data and simulated event samples

The data used in this analysis were collected by ATLAS during 2015 and 2016, with a mean number of additional pp interactions per bunch crossing (pile-up) of approximately 14 in 2015 and 25 in 2016, and a centre-of-mass collision energy of 13 \(\text {Te}\text {V}\). After imposing requirements based on beam and detector conditions and data quality, the data set corresponds to an integrated luminosity of \(36.1~\mathrm {fb}^{-1}\). The uncertainty in the combined 2015 and 2016 integrated luminosity is \(\pm 2.1\%\). Following a methodology similar to that detailed in Ref. [25], it is derived from a calibration of the luminosity scale using \(x-y\) beam-separation scans performed in August 2015 and May 2016.

For the high-\(p_{\text {T}}\) analysis, data events were collected using single-lepton and dilepton triggers [18]. The dielectron, dimuon, and electron–muon triggers have \(p_{\text {T}} \) thresholds in the range 12–24 \(\text {Ge}\text {V}\) for the higher-\(p_{\text {T}} \) lepton. Additional single-electron (single-muon) triggers are used, with \(p_{\text {T}} \) thresholds of 60 (50) \(\text {Ge}\text {V}\), to increase the trigger efficiency for events with high-\(p_{\text {T}}\) leptons. Events for the high-\(p_{\text {T}}\) selection are required to contain at least two selected leptons with \(p_{\text {T}} >25\) \(\text {Ge}\text {V}\). This selection is fully efficient relative to the lepton triggers with the \(p_{\text {T}}\) thresholds described above.

For the low-\(p_{\text {T}}\) analysis, triggers based on \(E_{\text {T}}^{\text {miss}}\) are used in order to increase efficiency for events where the \(p_{\text {T}}\) of the leptons is too low for the event to be selected by the single-lepton or dilepton triggers. The \(E_{\text {T}}^{\text {miss}}\) trigger thresholds varied throughout data-taking during 2015 and 2016, with the most stringent being 110 \(\text {Ge}\text {V}\). Events are required to have \(E_{\text {T}}^{\text {miss}} >200~\text {Ge}\text {V}\), making the selection fully efficient relative to the \(E_{\text {T}}^{\text {miss}}\) triggers with those thresholds.

An additional control sample of events containing photons was collected using a set of single-photon triggers with \(p_{\text {T}}\) thresholds in the range 45–140 \(\text {Ge}\text {V}\). All photon triggers were prescaled, except for the one with threshold \(p_{\text {T}} >120\) \(\text {Ge}\text {V}\) in 2015 and the one with \(p_{\text {T}} >140\) \(\text {Ge}\text {V}\) in 2016, meaning that only a subset of the events satisfying their requirements was retained. Selected events are further required to contain a selected photon with \(p_{\text {T}} >50\) \(\text {Ge}\text {V}\).

Simulated event samples are used to aid in the estimation of SM backgrounds, validate the analysis techniques, optimise the event selection, and provide predictions for SUSY signal processes. All SM background samples used are listed in Table 2, along with the parton distribution function (PDF) set, the configuration of underlying-event and hadronisation parameters (underlying-event tune) and the cross-section calculation order in \(\alpha _{\text {S}}\) used to normalise the event yields for these samples.

The \(t\bar{t} +W\), \(t\bar{t} +Z\), and \(t\bar{t} +WW\) processes were generated at leading order (LO) in \(\alpha _{\text {S}}\) with the NNPDF2.3LO PDF set [26] using MG5_aMC@NLO v2.2.2 [27], interfaced with Pythia 8.186 [28] with the A14 underlying-event tune [29] to simulate the parton shower and hadronisation. Single-top and \(t\bar{t}\) samples were generated using Powheg Box v2 [30,31,32] with Pythia 6.428 [33] used to simulate the parton shower, hadronisation, and the underlying event. The CT10 PDF set [34] was used for the matrix element, and the CTEQ6L1 PDF set with corresponding Perugia2012 [35] tune for the parton shower. In the case of both the MG5_aMC@NLO and Powheg samples, the EvtGen v1.2.0 program [36] was used for properties of the bottom and charm hadron decays. Diboson and \(Z/\gamma ^{*}+\text {jets}\) processes were simulated using the Sherpa 2.2.1 event generator. Matrix elements were calculated using Comix [37] and OpenLoops [38] and merged with Sherpa’s own internal parton shower [39] using the ME+PS@NLO prescription [40]. The NNPDF3.0nnlo [41] PDF set is used in conjunction with dedicated parton shower tuning developed by the Sherpa authors. For Monte Carlo (MC) closure studies of the data-driven \(Z/\gamma ^{*}+\text {jets}\) estimate (described in Sect. 7.2), \(\gamma +\text {jets}\) events were generated at LO with up to four additional partons using Sherpa 2.1, and are compared with a sample of \(Z/\gamma ^{*}+\text {jets}\) events with up to two additional partons at NLO (next-to-leading order) and up to four at LO generated using Sherpa 2.1. Additional MC simulation samples of events with a leptonically decaying vector boson and photon (\(V\gamma \), where \(V=W,Z\)) were generated at LO using Sherpa 2.2.1. Matrix elements including all diagrams with three electroweak couplings were calculated with up to three partons. 
These samples are used to estimate backgrounds with real \(E_{\text {T}}^{\text {miss}}\) in \(\gamma +\text {jets} \) data samples.

The SUSY signal samples were produced at LO using MG5_aMC@NLO with the NNPDF2.3LO PDF set, interfaced with Pythia 8.186. The scale parameter for CKKW-L matching [42, 43] was set at a quarter of the mass of the gluino. Up to one additional parton is included in the matrix element calculation. The underlying event was modelled using the A14 tune for all signal samples, and EvtGen was adopted to describe the properties of bottom and charm hadron decays. Signal cross-sections were calculated at NLO in \(\alpha _{\text {S}}\), including resummation of soft gluon emission at next-to-leading-logarithmic accuracy (NLO+NLL) [44,45,46,47,48].

All of the SM background MC samples were passed through a full ATLAS detector simulation [49] using \(\textsc {Geant}\)4 [50]. A fast simulation [49], in which a parameterisation of the response of the ATLAS electromagnetic and hadronic calorimeters is combined with \(\textsc {Geant}\)4 elsewhere, was used for the signal MC samples. This fast simulation was validated by comparing fast-simulated and fully simulated samples for a few signal points.

Minimum-bias interactions were generated and overlaid on top of the hard-scattering process to simulate the effect of multiple pp interactions occurring during the same (in-time) or a nearby (out-of-time) bunch-crossing. These were produced using Pythia 8.186 with the A2 tune [51] and MSTW 2008 PDF set [52]. The MC simulation samples were reweighted such that the distribution of the average number of interactions per bunch crossing matches the one observed in data.
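Reweighting the MC pile-up profile to data amounts to a bin-by-bin ratio of normalised histograms of the average number of interactions per bunch crossing. The Python sketch below illustrates that idea under simple assumptions (hypothetical binning and counts, zero weight for empty MC bins and outside the binned range); it is not the ATLAS tool used for the analysis.

```python
import bisect


def pileup_weights(data_counts, mc_counts):
    """Per-bin weight = (normalised data fraction) / (normalised MC fraction)."""
    n_data, n_mc = sum(data_counts), sum(mc_counts)
    weights = []
    for d, m in zip(data_counts, mc_counts):
        # Bins with no MC entries get weight 0 (a choice made for this sketch).
        weights.append((d / n_data) / (m / n_mc) if m > 0 else 0.0)
    return weights


def event_weight(mu, bin_edges, weights):
    """Weight for an MC event whose average number of interactions is mu."""
    i = bisect.bisect_right(bin_edges, mu) - 1
    if 0 <= i < len(weights):
        return weights[i]
    return 0.0  # outside the binned range (again a choice for this sketch)


edges = [0.0, 10.0, 20.0, 30.0]  # hypothetical binning in <mu>
w = pileup_weights(data_counts=[10, 30, 60], mc_counts=[20, 40, 40])
print(w)                          # approximately [0.5, 0.75, 1.5]
print(event_weight(25.0, edges, w))
```

After applying these weights, the weighted MC distribution has the same shape as the data distribution, which is the property the reweighting is meant to guarantee.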

5 Object identification and selection

Jets and leptons selected for analysis are categorised as either “baseline” or “signal” objects according to various quality and kinematic requirements. Baseline objects are used in the calculation of missing transverse momentum, and to resolve ambiguity between the analysis objects in the event, while the jets and leptons used to categorise the event in the final analysis selection must pass more stringent signal requirements.

Electron candidates are reconstructed using energy clusters in the electromagnetic calorimeter matched to ID tracks. Baseline electrons are required to have \(p_{\text {T}} >10\) \(\text {Ge}\text {V}\) (\(p_{\text {T}} >7\) \(\text {Ge}\text {V}\)) in the case of the high-\(p_{\text {T}}\) (low-\(p_{\text {T}}\)) lepton selection. These must also satisfy the “loose likelihood” criteria described in Ref. [68] and reside within the region \(|\eta |<2.47\). Signal electrons are required to satisfy the “medium likelihood” criteria of Ref. [68], and those entering the high-\(p_{\text {T}}\) selection are further required to have \(p_{\text {T}} >25\) \(\text {Ge}\text {V}\). Signal-electron tracks must pass within \(|z_0\sin \theta | < 0.5\) mm of the primary vertex, where \(z_0\) is the longitudinal impact parameter with respect to the primary vertex. The transverse-plane distance of closest approach of the electron to the beamline, divided by the corresponding uncertainty, must satisfy \(|d_0/\sigma _{d_0}|<5\). These electrons must also be isolated from other objects in the event, according to a \(p_{\text {T}}\)-dependent isolation requirement, which uses calorimeter- and track-based information to obtain 95% efficiency at \(p_{\text {T}} =25\) \(\text {Ge}\text {V}\) for \(Z\rightarrow ee\) events, rising to 99% efficiency at \(p_{\text {T}} =60\) \(\text {Ge}\text {V}\).

Baseline muons are reconstructed from either ID tracks matched to muon segments (collections of hits in a single layer of the muon spectrometer) or combined tracks formed in the ID and muon spectrometer [70]. They are required to satisfy the “medium” selection criteria described in Ref. [70], and for the high-\(p_{\text {T}}\) (low-\(p_{\text {T}}\)) analysis must satisfy \(p_{\text {T}}>10\) \(\text {Ge}\text {V}\) (\(p_{\text {T}}>7\) \(\text {Ge}\text {V}\)) and \(|\eta |<2.5\). Signal muon candidates are required to be isolated and have \(|z_0\sin \theta | < 0.5\) mm and \(|d_0/\sigma _{d_0}|<3\); those entering the high-\(p_{\text {T}}\) selection are further required to have \(p_{\text {T}} >25\) \(\text {Ge}\text {V}\). Calorimeter- and track-based isolation criteria are used to obtain 95% efficiency at \(p_{\text {T}} =25\) \(\text {Ge}\text {V}\) for \(Z\rightarrow \mu \mu \) events, rising to 99% efficiency at \(p_{\text {T}} =60\) \(\text {Ge}\text {V}\) [70].
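The electron and muon requirements described above can be collected into a single predicate. The Python sketch below is illustrative only: the identification and isolation decisions, which come from dedicated ATLAS tools, are represented by hypothetical boolean flags, and only the numerical cuts quoted in the text are applied.

```python
from dataclasses import dataclass

# Cut values quoted in the text; flavour is "e" or "mu".
ETA_MAX = {"e": 2.47, "mu": 2.5}
D0_SIG_MAX = {"e": 5.0, "mu": 3.0}


@dataclass
class Lepton:
    flavour: str
    pt: float               # GeV
    eta: float
    z0_sin_theta: float     # mm
    d0_significance: float  # d0 / sigma(d0)
    baseline_id: bool       # hypothetical flag: loose likelihood (e) / medium (mu)
    signal_id: bool         # hypothetical flag: medium likelihood (e) / medium (mu)
    isolated: bool          # hypothetical flag: pT-dependent isolation passed


def is_baseline(lep: Lepton, high_pt: bool) -> bool:
    pt_min = 10.0 if high_pt else 7.0
    return lep.baseline_id and lep.pt > pt_min and abs(lep.eta) < ETA_MAX[lep.flavour]


def is_signal(lep: Lepton, high_pt: bool) -> bool:
    if not is_baseline(lep, high_pt):
        return False
    if high_pt and lep.pt <= 25.0:
        return False  # the high-pT selection additionally requires pT > 25 GeV
    return (lep.signal_id and lep.isolated
            and abs(lep.z0_sin_theta) < 0.5
            and abs(lep.d0_significance) < D0_SIG_MAX[lep.flavour])


e = Lepton("e", 20.0, 1.0, 0.1, 4.0, True, True, True)
mu = Lepton("mu", 30.0, 1.0, 0.1, 4.0, True, True, True)
print(is_signal(e, high_pt=True), is_signal(e, high_pt=False))  # False True
print(is_signal(mu, high_pt=True))  # False: |d0/sigma| = 4 exceeds 3 for muons
```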

Jets are reconstructed from topological clusters of energy [71] in the calorimeter using the anti-\(k_{t}\) algorithm [72, 73] with a radius parameter of 0.4 by making use of utilities within the FastJet package [74]. The reconstructed jets are then calibrated to the particle level by the application of a jet energy scale (JES) derived from 13 \(\text {Te}\text {V}\) data and simulation [75]. A residual correction applied to jets in data is based on studies of the \(p_{\text {T}} \) balance between jets and well-calibrated objects in the MC simulation and data [76]. Baseline jet candidates are required to have \(p_{\text {T}} >20\) \(\text {Ge}\text {V}\) and reside within the region \(|\eta |<4.5\). Signal jets are further required to satisfy \(p_{\text {T}} >30\) \(\text {Ge}\text {V}\) and reside within the region \(|\eta |<2.5\). Additional track-based criteria designed to select jets from the hard scatter and reject those originating from pile-up are applied to signal jets with \(p_{\text {T}} <60\) \(\text {Ge}\text {V}\) and \(|\eta |<2.4\). These are imposed by using the jet vertex tagger described in Ref. [77]. Finally, events containing a baseline jet that does not pass jet quality requirements are vetoed in order to remove events impacted by detector noise and non-collision backgrounds [78, 79]. The MV2C10 boosted decision tree algorithm [80, 81] identifies jets containing b-hadrons (b-jets) by using quantities such as the impact parameters of associated tracks and the positions of any well-reconstructed secondary vertices. A selection that provides 77% efficiency for tagging b-jets in simulated \(t\bar{t}\) events is used. The corresponding rejection factors against jets originating from c-quarks, tau leptons, and light quarks and gluons in the same sample for this selection are 6, 22, and 134, respectively. Jets passing this selection are referred to as b-tagged jets.
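The jet kinematic requirements, the \(p_{\text {T}}\)- and \(\eta \)-dependent pile-up suppression, and the b-tagging rejection factors can be illustrated with the Python sketch below. The jet-vertex-tagger decision is a hypothetical boolean flag (its cut value is not quoted here), and the mis-tag rates simply invert the quoted rejection factors, using the usual convention that rejection is the inverse of the mis-tag efficiency.

```python
from dataclasses import dataclass


@dataclass
class Jet:
    pt: float         # GeV, after calibration
    eta: float
    passes_jvt: bool  # hypothetical flag: jet-vertex-tagger decision


def is_baseline_jet(jet: Jet) -> bool:
    return jet.pt > 20.0 and abs(jet.eta) < 4.5


def is_signal_jet(jet: Jet) -> bool:
    if not (is_baseline_jet(jet) and jet.pt > 30.0 and abs(jet.eta) < 2.5):
        return False
    # The pile-up (JVT) requirement applies only to pT < 60 GeV, |eta| < 2.4 jets.
    if jet.pt < 60.0 and abs(jet.eta) < 2.4:
        return jet.passes_jvt
    return True


def h_t(jets) -> float:
    """Scalar sum of signal-jet pT, i.e. the H_T variable used in the selection."""
    return sum(j.pt for j in jets if is_signal_jet(j))


# Rejection factor R = 1 / (mis-tag efficiency) at the 77% b-jet working point.
REJECTION = {"c": 6, "tau": 22, "light": 134}
MISTAG = {flavour: 1.0 / r for flavour, r in REJECTION.items()}

jets = [Jet(100.0, 0.5, False), Jet(40.0, 1.0, True), Jet(40.0, 1.0, False),
        Jet(50.0, 3.0, True), Jet(25.0, 0.1, True)]
print(h_t(jets))                  # 140.0
print(round(MISTAG["light"], 4))  # 0.0075
```

The 100 GeV jet counts towards \(H_{\text {T}}\) despite failing the hypothetical JVT flag, because the pile-up requirement is only applied below 60 GeV.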

Photon candidates are required to satisfy the “tight” selection criteria described in Ref. [82], have \(p_{\text {T}} >25\) \(\text {Ge}\text {V}\) and reside within the region \(|\eta |<2.37\), excluding the calorimeter transition region \(1.37<|\eta |<1.6\). Signal photons are further required to have \(p_{\text {T}} >50\) \(\text {Ge}\text {V}\) and to be isolated from other objects in the event, according to \(p_{\text {T}}\)-dependent requirements on both track-based and calorimeter-based isolation.

To avoid the duplication of analysis objects, an overlap removal procedure is applied using baseline objects. Electron candidates originating from photons radiated by muons are rejected if they are found to share an inner detector track with a muon. Any baseline jet within \(\Delta R<0.2\) of a baseline electron is removed, unless the jet is b-tagged. For this overlap removal, a looser 85% efficiency working point is used for tagging b-jets. Any electron that lies within \(\Delta R<\mathrm { min } (0.04+(10~\text {Ge}\text {V})/p_{\text {T}},0.4)\) of a remaining jet is discarded. If a baseline muon either resides within \(\Delta R<0.2\) of, or has a track associated with, a remaining baseline jet, that jet is removed unless it is b-tagged. Muons lying within the same \(p_{\text {T}}\)-dependent \(\Delta R\) of a remaining jet are then removed, as for electrons. Finally, photons are removed if they reside within \(\Delta R<0.4\) of a baseline electron or muon, and any jet within \(\Delta R<0.4\) of any remaining photon is discarded.
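The ordered overlap-removal steps can be sketched as a sequence of list filters. This is a simplified illustration, not the ATLAS implementation: track sharing and track-to-jet association are modelled by hypothetical sets of track identifiers, the 85% b-tagging decision is a hypothetical flag, and \(\Delta R\) is computed from \(\eta \) and \(\phi \) with \(\Delta \phi \) wrapped into \([-\pi ,\pi )\).

```python
import math
from dataclasses import dataclass


@dataclass
class Obj:
    pt: float                           # GeV
    eta: float
    phi: float
    b_tag_85: bool = False              # jets only: hypothetical 85% WP b-tag flag
    track_ids: frozenset = frozenset()  # hypothetical IDs of associated ID tracks


def delta_r(a: Obj, b: Obj) -> float:
    dphi = (a.phi - b.phi + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(a.eta - b.eta, dphi)


def sliding_cone(lep: Obj) -> float:
    return min(0.04 + 10.0 / lep.pt, 0.4)


def overlap_removal(electrons, muons, jets, photons):
    # 1. Electrons sharing an ID track with a muon.
    electrons = [e for e in electrons
                 if not any(e.track_ids & m.track_ids for m in muons)]
    # 2. Non-b-tagged jets within dR < 0.2 of an electron.
    jets = [j for j in jets
            if j.b_tag_85 or all(delta_r(j, e) >= 0.2 for e in electrons)]
    # 3. Electrons within the sliding cone of a remaining jet.
    electrons = [e for e in electrons
                 if all(delta_r(e, j) >= sliding_cone(e) for j in jets)]
    # 4. Non-b-tagged jets within dR < 0.2 of a muon, or sharing a track with it.
    jets = [j for j in jets
            if j.b_tag_85
            or all(delta_r(j, m) >= 0.2 and not (j.track_ids & m.track_ids)
                   for m in muons)]
    # 5. Muons within the sliding cone of a remaining jet.
    muons = [m for m in muons
             if all(delta_r(m, j) >= sliding_cone(m) for j in jets)]
    # 6. Photons near a lepton, then jets near a remaining photon.
    photons = [g for g in photons
               if all(delta_r(g, l) >= 0.4 for l in electrons + muons)]
    jets = [j for j in jets if all(delta_r(j, g) >= 0.4 for g in photons)]
    return electrons, muons, jets, photons


ele = Obj(50.0, 0.0, 0.0)
jets_in = [Obj(60.0, 0.1, 0.0), Obj(80.0, 1.0, 0.0)]
mu = Obj(20.0, 1.3, 0.0)
e_out, mu_out, j_out, g_out = overlap_removal([ele], [mu], jets_in, [])
print(len(e_out), len(mu_out), [j.pt for j in j_out])  # 1 0 [80.0]
```

In the example, the jet overlapping the electron is removed in step 2, while the 20 GeV muon is removed in step 5 because its sliding cone saturates at \(\Delta R = 0.4\).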

The missing transverse momentum \(\varvec{ p }_{\text {T}}^{\text {miss}}\) is defined as the negative vector sum of the transverse momenta of all baseline electrons, muons, jets, and photons [83]. Low-momentum contributions from particle tracks from the primary vertex that are not associated with reconstructed analysis objects are also included in the calculation of \(\varvec{ p }_{\text {T}}^{\text {miss}}\).
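In terms of the reconstructed objects, the \(\varvec{ p }_{\text {T}}^{\text {miss}}\) definition reduces to a negative vector sum. The Python sketch below assumes each baseline object and each unassociated soft track is supplied as a \((p_{\text {T}}, \phi )\) pair; object selection and calibration, on which the real calculation depends, are outside its scope, and the input values are hypothetical.

```python
import math


def missing_pt(objects, soft_tracks=()):
    """Negative vector sum of transverse momenta.

    objects, soft_tracks: iterables of (pt [GeV], phi [rad]) pairs.
    Returns (px, py, magnitude), i.e. the vector p_T^miss and E_T^miss.
    """
    terms = list(objects) + list(soft_tracks)
    px = -sum(pt * math.cos(phi) for pt, phi in terms)
    py = -sum(pt * math.sin(phi) for pt, phi in terms)
    return px, py, math.hypot(px, py)


# Two leptons and a jet (hypothetical values) plus one unassociated soft track.
visible = [(60.0, 0.0), (40.0, math.pi / 2), (80.0, math.pi)]
px, py, met = missing_pt(visible, soft_tracks=[(5.0, math.pi / 2)])
print(round(px, 3), round(py, 3), round(met, 3))  # 20.0 -45.0 49.244
```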

Signal models with large hadronic activity are targeted by placing additional requirements on the quantity \(H_{\text {T}}\), defined as the scalar sum of the \(p_{\text {T}} \) values of all signal jets. For the purposes of rejecting \(t\bar{t}\) background events, the \(m_{\mathrm {T2}} \) [84, 85] variable is used, defined as an extension of the transverse mass \(m_{\text {T}}\) for the case of two missing particles:

\[ m_{\mathrm {T2}} = \min _{\mathbf {x}_{\text {T,1}} + \mathbf {x}_{\text {T,2}} = \varvec{ p }_{\text {T}}^{\text {miss}}} \left\{ \max \left[ m_{\text {T}}\left( \varvec{ p }_{\text {T},\ell 1}, \mathbf {x}_{\text {T,1}}\right) , m_{\text {T}}\left( \varvec{ p }_{\text {T},\ell 2}, \mathbf {x}_{\text {T,2}}\right) \right] \right\} , \]

where \(\varvec{ p }_{\text {T},\ell a}\) is the transverse-momentum vector of the highest-\(p_{\text {T}}\) (\(a=1\)) or second-highest-\(p_{\text {T}}\) (\(a=2\)) lepton, and \(\mathbf {x}_{\text {T,b}}\) (\(b=1,2\)) are two vectors representing the possible momenta of the invisible particles, constrained to sum to \(\varvec{ p }_{\text {T}}^{\text {miss}}\), that minimise the \(m_{\mathrm {T2}}\) in the event. For typical \(t\bar{t}\) events, the value of \(m_{\mathrm {T2}}\) is small, while for signal events in some scenarios it can be relatively large.
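The \(m_{\mathrm {T2}}\) definition involves a minimisation over how the missing transverse momentum is shared between the two invisible particles. The Python sketch below evaluates it by brute force for massless leptons and massless invisible particles, which is an assumption of this sketch. It scans splittings \(\mathbf {x}_{\text {T,1}} + \mathbf {x}_{\text {T,2}} = \varvec{ p }_{\text {T}}^{\text {miss}}\) and minimises the larger of the two squared transverse masses, which is a convex function of \(\mathbf {x}_{\text {T,1}}\), using a grid that is recentred and shrunk at each iteration. Dedicated algorithms used in practice are faster and more robust; this is only an illustration.

```python
import math


def mt_squared(p, q):
    """Squared transverse mass of two massless transverse vectors (GeV^2)."""
    return 2.0 * (math.hypot(*p) * math.hypot(*q) - (p[0] * q[0] + p[1] * q[1]))


def mt2(lep1, lep2, met, n_grid=41, n_iter=40):
    """Brute-force m_T2 (GeV) for massless leptons and invisible particles."""
    def objective(x1):
        x2 = (met[0] - x1[0], met[1] - x1[1])  # enforce x1 + x2 = p_T^miss
        return max(mt_squared(lep1, x1), mt_squared(lep2, x2))

    centre = (0.5 * met[0], 0.5 * met[1])
    half_width = math.hypot(*met) + math.hypot(*lep1) + math.hypot(*lep2) + 1.0
    best, best_val = centre, objective(centre)
    for _ in range(n_iter):
        step = 2.0 * half_width / (n_grid - 1)
        for i in range(n_grid):
            for j in range(n_grid):
                x1 = (centre[0] - half_width + i * step,
                      centre[1] - half_width + j * step)
                val = objective(x1)
                if val < best_val:
                    best, best_val = x1, val
        centre, half_width = best, 0.5 * half_width  # zoom in on the best point
    return math.sqrt(max(best_val, 0.0))


# Two collinear 50 GeV leptons with 100 GeV of missing pT in the opposite direction.
print(round(mt2((50.0, 0.0), (50.0, 0.0), (-100.0, 0.0)), 3))  # 100.0
```

For that configuration the minimum is reached by splitting \(\varvec{ p }_{\text {T}}^{\text {miss}}\) equally, giving \(\sqrt{2 \times 50 \times 100}\) GeV \(= 100\) GeV; when \(\varvec{ p }_{\text {T}}^{\text {miss}}\) lies between the two lepton directions, the sketch returns a value close to zero.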

All MC samples have MC-to-data corrections applied to take into account small differences between data and MC simulation in identification, reconstruction and trigger efficiencies. The \(p_{\text {T}} \) values of leptons in MC samples are additionally smeared to match the momentum resolution in data.

6 Event selection

This search is carried out using signal regions (SRs) designed to select events where heavy new particles decay into an “invisible” LSP, with final-state signatures including either a Z boson mass peak or a kinematic endpoint in the dilepton invariant mass distribution. In order to estimate the expected contribution from SM backgrounds in these regions, control regions (CRs) are defined in such a way that they are enriched in the particular SM process of interest and have low expected contamination from events potentially arising from SUSY signals. For signal points not excluded by the previous iteration of this analysis [15], the signal contamination in the CRs is \(<5\%\), with the exception of models with \(m_{\tilde{g}}<600\) \(\text {Ge}\text {V}\) in the higher-\(E_{\text {T}}^{\text {miss}}\) CRs of the low-\(p_{\text {T}}\) search where it can reach 20%. To validate the background estimation procedures, various validation regions (VRs) are defined so as to be analogous but orthogonal to the CRs and SRs, by using less stringent requirements than the SRs on variables used to isolate the SUSY signal, such as \(m_{\mathrm {T2}}\), \(E_{\text {T}}^{\text {miss}}\) or \(H_{\text {T}}\). VRs with additional requirements on the number of leptons are used to validate the modelling of backgrounds in which more than two leptons are expected. The various methods used to perform the background prediction in the SRs are discussed in Sect. 7.

Events entering the SRs must have at least two signal leptons (electrons or muons), where the two highest-\(p_{\text {T}}\) leptons in the event are used when defining further event-level requirements. These two leptons must have the same flavour (SF) and opposite-sign charges (OS). For the high-\(p_{\text {T}}\) lepton analysis, in both the edge and on-Z searches, the events must pass at least one of the leptonic triggers, whereas \(E_{\text {T}}^{\text {miss}}\) triggers are used for the low-\(p_{\text {T}}\) analysis so as to select events containing softer leptons. In cases where a dilepton trigger is used to select an event, the two leading (highest-\(p_{\text {T}}\)) leptons must be matched to the objects that triggered the event. For events selected by a single-lepton trigger, at least one of the two leading leptons must be matched to the trigger object in the same way. The two leading leptons in the event must have \(p_{\text {T}} >\{50,25\}\) \(\text {Ge}\text {V}\) to pass the high-\(p_{\text {T}}\) event selection, and must have \(p_{\text {T}} >\{7,7\}\) \(\text {Ge}\text {V}\), while not satisfying \(p_{\text {T}} >\{50,25\}\) \(\text {Ge}\text {V}\), to be selected by the low-\(p_{\text {T}}\) analysis.
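The split between the high- and low-\(p_{\text {T}}\) selections depends only on the two leading leptons and, for the low-\(p_{\text {T}}\) case, on the \(E_{\text {T}}^{\text {miss}} >200\) GeV requirement described in Sect. 4. A Python sketch of that logic, which deliberately leaves out trigger decisions and trigger matching, is:

```python
from typing import NamedTuple


class Lep(NamedTuple):
    pt: float     # GeV
    flavour: str  # "e" or "mu"
    charge: int   # +1 or -1


def classify_event(leptons, met):
    """Assign an event with signal leptons to the high- or low-pT selection.

    Trigger decisions and trigger matching are not modelled (an assumption
    of this sketch); only the offline lepton and E_T^miss thresholds are used.
    Returns "high-pT", "low-pT" or None.
    """
    if len(leptons) < 2:
        return None
    l1, l2 = sorted(leptons, key=lambda l: l.pt, reverse=True)[:2]
    if l1.flavour != l2.flavour or l1.charge * l2.charge >= 0:
        return None  # the two leading leptons must be SFOS
    if l1.pt > 50.0 and l2.pt > 25.0:
        return "high-pT"
    if l2.pt > 7.0 and met > 200.0:  # l1.pt >= l2.pt, so both exceed 7 GeV
        return "low-pT"
    return None


print(classify_event([Lep(60.0, "e", 1), Lep(30.0, "e", -1)], met=0.0))    # high-pT
print(classify_event([Lep(60.0, "e", 1), Lep(20.0, "e", -1)], met=250.0))  # low-pT
```

Because the low-\(p_{\text {T}}\) branch is only reached when the high-\(p_{\text {T}}\) thresholds fail, the two selections are orthogonal by construction.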

Since at least two jets are expected in all signal models studied, selected events are further required to contain at least two signal jets. Furthermore, for events with an \(E_{\text {T}}^{\text {miss}}\) requirement applied, the smaller of the azimuthal opening angles between each of the two leading jets and \({\varvec{p}}_{\mathrm {T}}^{\mathrm {miss}}\), \(\Delta \phi (\text {jet}_{12},{\varvec{p}}_{\mathrm {T}}^{\mathrm {miss}})\), is required to be greater than 0.4 so as to remove events with \(E_{\text {T}}^{\text {miss}}\) arising from jet mismeasurements.
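Because azimuthal angles wrap around at \(\pm \pi \), the \(\Delta \phi (\text {jet}_{12},{\varvec{p}}_{\mathrm {T}}^{\mathrm {miss}})\) requirement needs a wrapped difference. A small Python sketch, assuming the jet azimuths are supplied in decreasing-\(p_{\text {T}}\) order and that at least two signal jets are present:

```python
import math


def delta_phi(phi1, phi2):
    """Absolute azimuthal separation, wrapped into [0, pi]."""
    d = abs(phi1 - phi2) % (2.0 * math.pi)
    return 2.0 * math.pi - d if d > math.pi else d


def passes_jet_met_dphi(jet_phis, met_phi, cut=0.4):
    """jet_phis: azimuths of signal jets ordered by decreasing pT (>= 2 jets)."""
    return min(delta_phi(phi, met_phi) for phi in jet_phis[:2]) > cut


print(round(delta_phi(3.0, -3.0), 4))             # 0.2832
print(passes_jet_met_dphi([3.0, 0.0], -3.0))      # False: leading jet close to MET
print(passes_jet_met_dphi([1.0, 2.0, 0.1], 0.0))  # True: only two leading jets used
```

The first line shows why the wrapping matters: jets at \(\phi = 3.0\) and \(\phi = -3.0\) are only about 0.28 rad apart, not 6 rad.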

The selection criteria for the CRs, VRs, and SRs are summarised in Tables 3 and 4, for the high- and low-\(p_{\text {T}}\) analyses respectively. The most important of these regions are shown graphically in Fig. 2.

Fig. 2 Schematic diagrams of the main validation and signal regions for the high-\(p_{\text {T}}\) (top) and low-\(p_{\text {T}}\) (bottom) searches. Overlapping hatched areas indicate where regions overlap. For each search (high-\(p_{\text {T}}\) or low-\(p_{\text {T}}\)), the SRs are not orthogonal; in the case of high-\(p_{\text {T}}\), the VRs also overlap. In both cases, as indicated in the diagrams, there is no overlap between SRs and VRs.

For the high-\(p_{\text {T}}\) search, the leading lepton’s \(p_{\text {T}}\) is required to be at least 50 \(\text {Ge}\text {V}\) to reject additional background events while retaining high efficiency for signal events. Here, a kinematic endpoint in the \(m_{\ell \ell }\) distribution is searched for in three signal regions, in each case across the full \(m_{\ell \ell }\) spectrum except for \(m_{\ell \ell }<12\) \(\text {Ge}\text {V}\), which is vetoed to reject low-mass Drell–Yan (DY) events, \(\Upsilon \) and other dilepton resonances. Models with low, medium and high values of \(\Delta m_{\tilde{g}} = m_{\tilde{g}} - m_{\tilde{\chi }^0_1}\) are targeted by selecting events with \(H_{\text {T}} >200, 400\) and 1200 \(\text {Ge}\text {V}\) to enter SR-low, SR-medium and SR-high, respectively. Requirements on \(E_{\text {T}}^{\text {miss}}\) are also used to select signal-like events, with higher \(E_{\text {T}}^{\text {miss}}\) thresholds probing models with higher LSP masses. To reduce backgrounds from top-quark production, a requirement of \(m_{\mathrm {T2}}>70\) \(\text {Ge}\text {V}\) (\(>25\) \(\text {Ge}\text {V}\)) is applied in SR-low (SR-medium). In order to make model-dependent interpretations using the signal models described in Sect. 3, a profile likelihood [86] fit to the \(m_{\ell \ell }\) shape is performed in each SR separately, with \(m_{\ell \ell }\) bin boundaries chosen both to ensure a sufficient number of events for a robust background estimate in each bin and to maximise sensitivity to the target signal models. The \(m_{\ell \ell }\) bins are also combined to form 29 non-orthogonal \(m_{\ell \ell }\) windows, which are used to search for BSM physics or to set model-independent upper limits on the number of possible signal events. These windows are chosen to be sensitive to a broad range of potential kinematic edge positions. They are particularly useful where a signal could extend over a large \(m_{\ell \ell }\) range, since the exclusive bins used in the shape fit can truncate the lower-\(m_{\ell \ell }\) tail of such a signal and are therefore less sensitive to it. Of these windows, ten are in SR-low, nine are in SR-medium and ten are in SR-high. A schematic diagram showing the \(m_{\ell \ell }\) bin edges in the SRs and the resulting \(m_{\ell \ell }\) windows is shown in Fig. 3. More details of the \(m_{\ell \ell }\) ranges of these windows are given along with the results in Sect. 9. Models without light sleptons are targeted by windows with \(m_{\ell \ell }<81\) \(\text {Ge}\text {V}\) for \(\Delta m_\chi < m_Z\), and by the window with \(81<m_{\ell \ell }<101\) \(\text {Ge}\text {V}\) for \(\Delta m_\chi > m_Z\). The on-Z bins of the SRs, with bin boundaries \(81<m_{\ell \ell }<101\) \(\text {Ge}\text {V}\), are each counted as one of the 29 \(m_{\ell \ell }\) windows and have good sensitivity to models with on-shell Z bosons in the final state.
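The relation between the exclusive \(m_{\ell \ell }\) bins and the overlapping windows can be sketched as follows. The bin edges below are hypothetical placeholders (the actual ones are shown in Fig. 3); only the 12 \(\text {Ge}\text {V}\) veto and the 81–101 \(\text {Ge}\text {V}\) on-Z bin are taken from the text. A window is a contiguous range of bins, and the last bin is open-ended so that it includes the overflow.

```python
import bisect

# Hypothetical m_ll bin edges in GeV for one SR (the real edges are in Fig. 3).
# The final edge is infinite so the last bin includes the overflow.
BIN_EDGES = [12.0, 41.0, 61.0, 81.0, 101.0, 201.0, float("inf")]


def fill_bins(mll_values, edges=BIN_EDGES):
    """Count events in exclusive m_ll bins; events below the first edge are vetoed."""
    counts = [0] * (len(edges) - 1)
    for mll in mll_values:
        if mll < edges[0]:
            continue  # low-mass veto (Drell-Yan and dilepton resonances)
        counts[bisect.bisect_right(edges, mll) - 1] += 1
    return counts


def window_yield(counts, first_bin, last_bin):
    """Yield of a window made of the contiguous bins first_bin..last_bin (inclusive)."""
    return sum(counts[first_bin:last_bin + 1])
```

With these placeholder edges, `window_yield(counts, 0, 2)` would give a below-Z window (\(12<m_{\ell \ell }<81\) \(\text {Ge}\text {V}\)) and `window_yield(counts, 3, 3)` the on-Z window; because windows share bins, their yields are not independent.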

For the low-\(p_{\text {T}}\) search, events are required to have at least two leptons with \(p_{\text {T}}\) \(>7\) \(\text {Ge}\text {V}\). Orthogonality with the high-\(p_{\text {T}}\) channel is imposed by rejecting events that satisfy the lepton \(p_{\text {T}}\) requirements of the high-\(p_{\text {T}}\) selection. In addition, events must have \(m_{\ell \ell }\) \(>4\) \(\text {Ge}\text {V}\), with the region between 8.4 and 11 \(\text {Ge}\text {V}\) excluded, in order to reject the \(J/\psi \) and \(\Upsilon \) resonances. To isolate signal models with small \(\Delta m_\chi \), the two low-\(p_{\text {T}}\) SRs, SRC and SRC-MET, place upper bounds on \(p_{\text {T}}^{\ell \ell }\), the \(p_{\text {T}}\) of the dilepton system. SRC requires \(p_{\text {T}}^{\ell \ell }\) \(<20\) \(\text {Ge}\text {V}\), while SRC-MET requires \(p_{\text {T}}^{\ell \ell }\) \(<75\) \(\text {Ge}\text {V}\) together with a higher \(E_{\text {T}}^{\text {miss}}\) threshold (500 \(\text {Ge}\text {V}\) compared with 250 \(\text {Ge}\text {V}\) in SRC), maximising sensitivity to very compressed models. In other respects the analysis strategy closely follows that of the high-\(p_{\text {T}}\) analysis, with a shape fit to the \(m_{\ell \ell }\) distribution performed independently in SRC and SRC-MET. The \(m_{\ell \ell }\) bins are used to construct \(m_{\ell \ell }\) windows from which model-independent results are derived. There are a total of 12 \(m_{\ell \ell }\) windows for the low-\(p_{\text {T}}\) analysis, six in each SR.
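The low-\(p_{\text {T}}\) SR requirements quoted here can be expressed as a small selection function. Only the cuts stated in this section, plus the 30 \(\text {Ge}\text {V}\) lower \(m_{\ell \ell }\) bound for SRC mentioned in Sect. 7.1, are included; the full definitions are in Table 4, the function names are hypothetical, and the inclusive or exclusive treatment of the veto boundaries is an assumption.

```python
def passes_low_pt_mll(mll):
    """m_ll > 4 GeV with 8.4-11 GeV (Upsilon region) vetoed; boundary handling assumed."""
    return mll > 4.0 and not (8.4 <= mll <= 11.0)


def low_pt_signal_regions(mll, ptll, met):
    """Return the low-pT SRs whose quoted m_ll, pT^ll and ETmiss cuts are passed.

    Hypothetical helper using only thresholds stated in the text; the other
    requirements in Table 4 are omitted. The SRs are not orthogonal, so an
    event may be returned in both.
    """
    if not passes_low_pt_mll(mll):
        return []
    regions = []
    # SRC: pT^ll < 20 GeV, ETmiss > 250 GeV, m_ll range starting at 30 GeV.
    if ptll < 20.0 and met > 250.0 and mll >= 30.0:
        regions.append("SRC")
    # SRC-MET: pT^ll < 75 GeV, ETmiss > 500 GeV.
    if ptll < 75.0 and met > 500.0:
        regions.append("SRC-MET")
    return regions
```

An event with \(p_{\text {T}}^{\ell \ell }<20\) \(\text {Ge}\text {V}\) and \(E_{\text {T}}^{\text {miss}}>500\) \(\text {Ge}\text {V}\) enters both SRs, which is why the shape fits in SRC and SRC-MET are performed independently rather than combined.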

Schematic diagrams showing the \(m_{\ell \ell }\) binning used in the various SRs alongside the overlapping \(m_{\ell \ell }\) windows used for model-independent interpretations. The unfilled boxes indicate the \(m_{\ell \ell }\) bin edges for the shape fits used in the model-dependent interpretations. Each filled region underneath indicates one of the \(m_{\ell \ell }\) windows, formed of one or more \(m_{\ell \ell }\) bins, used to derive model-independent results for the given SR. In each case, the last \(m_{\ell \ell }\) bin includes the overflow

7 Background estimation

In most SRs, the dominant background processes are “flavour-symmetric” (FS), where the ratio of ee, \(\mu \mu \) and \(e\mu \) dileptonic branching fractions is expected to be 1:1:2 because the two leptons originate from independent \(W\rightarrow \ell \nu \) decays. Dominated by \(t\bar{t}\), this background, described in Sect. 7.1, also includes WW, Wt, and \(Z\rightarrow \tau \tau \) processes, and typically makes up 50–95% of the total SM background in the SRs. The FS background is estimated using data control samples of different-flavour (DF) events for the high-\(p_{\text {T}}\) search, whereas the low-\(p_{\text {T}}\) search uses such samples to normalise the dominant top-quark (\(t\bar{t}\) and Wt) component of this background, with the shape taken from MC simulation.
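As a worked illustration of where the 1:1:2 ratio comes from, assume that each W boson decays to an electron or a muon with equal probability and that electrons and muons are selected with identical efficiency (simplifications that the data-driven correction factors of Sect. 7.1 are designed to remove). Since the two decays are independent,

\[ P(ee) = \tfrac{1}{2}\cdot \tfrac{1}{2} = \tfrac{1}{4}, \qquad P(\mu \mu ) = \tfrac{1}{2}\cdot \tfrac{1}{2} = \tfrac{1}{4}, \qquad P(e\mu ) = 2\cdot \tfrac{1}{2}\cdot \tfrac{1}{2} = \tfrac{1}{2}, \]

where the factor of two in \(P(e\mu )\) counts the two assignments (\(e\) from one W and \(\mu \) from the other, or vice versa). Each SF channel is therefore expected to contain about half as many FS events as the \(e\mu \) channel, \(N_{ee} \approx N_{\mu \mu } \approx N_{e\mu }/2\).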

As all the SRs have a high \(E_{\text {T}}^{\text {miss}}\) requirement, \(Z/\gamma ^{*}+\text {jets}\) events generally enter the SRs when there is large \(E_{\text {T}}^{\text {miss}}\) originating from instrumental effects or from neutrinos from the decays of hadrons produced in jet fragmentation. This background is always relatively small, contributing less than \(10\%\) of the total background in the SRs, but is difficult to model with MC simulation. A control sample of \(\gamma +\text {jets}\) events in data, which have similar kinematic properties to those of \(Z/\gamma ^{*}+\text {jets}\) and similar sources of \(E_{\text {T}}^{\text {miss}}\), is used to model this background for the high-\(p_{\text {T}}\) search by weighting the \(\gamma +\text {jets}\) events to match \(Z/\gamma ^{*}+\text {jets}\) in another control sample, described in Sect. 7.2. For the low-\(p_{\text {T}}\) analysis, where \(Z/\gamma ^{*}+\text {jets}\) processes make up at most \(8\%\) of the background in the SRs, MC simulation is used to estimate this background.

The contribution from events with fake or misidentified leptons in the low-\(p_{\text {T}}\) SRs is at most \(20\%\), and is estimated using a data-driven matrix method, described in Sect. 7.3. The contribution to the SRs from WZ / ZZ production, described in Sect. 7.4, while small for the most part (\(<5\%\)), can be up to \(70\%\) in the on-Z bins of the high-\(p_{\text {T}}\) analysis. These backgrounds are estimated from MC simulation and validated in dedicated \(3\ell \) (WZ) and \(4\ell \) (ZZ) VRs. “Rare top” backgrounds, also described in Sect. 7.4, which include \(t\bar{t}W\), \(t\bar{t}Z\) and \(t\bar{t}WW\) processes, constitute \(<10\%\) of the SM expectation in all SRs and are estimated from MC simulation.

7.1 Flavour-symmetric backgrounds

For the high-\(p_{\text {T}}\) analysis the so-called “flavour-symmetry” method is used to estimate the contribution of the background from flavour-symmetric processes to each SR. This method makes use of three \(e\mu \) control regions, CR-FS-low, CR-FS-medium and CR-FS-high, with the same \(m_{\ell \ell }\) binning as their corresponding SRs. For SR-low, SR-medium and SR-high, the flavour-symmetric contribution to each \(m_{\ell \ell }\) bin of the signal region is predicted using data from the corresponding bin of CR-FS-low, CR-FS-medium and CR-FS-high, respectively (precise region definitions can be found in Table 3). These CRs are \(>95\%\) pure in flavour-symmetric processes (estimated from MC simulation). Each of these regions has the same kinematic requirements as its respective SR, with the exception of CR-FS-high, in which the 1200 \(\text {Ge}\text {V}\) \(H_{\text {T}}\) and 200 \(\text {Ge}\text {V}\) \(E_{\text {T}}^{\text {miss}}\) thresholds of SR-high are loosened to 1100 and 100 \(\text {Ge}\text {V}\), respectively, in order to increase the number of \(e\mu \) events available to model the FS background.

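The data events in these regions are weighted by lepton \(p_{\text {T}} \)- and \(\eta \)-dependent correction factors determined in data. These factors are measured separately for 2015 and 2016 to take into account the differences between the triggers available in those years, and account for the different trigger efficiencies for the dielectron, dimuon and electron–muon selections, as well as the different identification and reconstruction efficiencies for electrons and muons. The estimated numbers of events in the SF channels, \(N^{\text {est}}\), are given by:

\[ \begin{aligned} N^{\text {est}}_{ee} &= \frac{1}{2}\sum _{i=1}^{N_{e\mu }^{\text {data}}} k_{e}(p_{\text {T}} ^i, \eta ^i)\,\alpha (p_{\text {T}} ^i, \eta ^i) - \frac{1}{2}\sum _{i=1}^{N_{e\mu }^{\text {MC}}} k_{e}(p_{\text {T}} ^i, \eta ^i)\,\alpha (p_{\text {T}} ^i, \eta ^i), \\ N^{\text {est}}_{\mu \mu } &= \frac{1}{2}\sum _{i=1}^{N_{e\mu }^{\text {data}}} k_{\mu }(p_{\text {T}} ^i, \eta ^i)\,\alpha (p_{\text {T}} ^i, \eta ^i) - \frac{1}{2}\sum _{i=1}^{N_{e\mu }^{\text {MC}}} k_{\mu }(p_{\text {T}} ^i, \eta ^i)\,\alpha (p_{\text {T}} ^i, \eta ^i), \end{aligned} \tag{1} \]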

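where \(N_{e\mu }^{\text {data}}\) is the number of data events observed in a given control region (CR-FS-low, CR-FS-medium or CR-FS-high). Events from non-FS processes are subtracted from the \(e\mu \) data events using MC simulation (the second term in Eq. 1), where \(N_{e\mu }^{\text {MC}}\) is the number of events from non-FS processes in MC simulation in the respective CRs. The factor \(\alpha (p_{\text {T}} ^i, \eta ^i)\) accounts for the different trigger efficiencies for SF and DF events, and \(k_{e}(p_{\text {T}} ^i, \eta ^i)\) and \(k_{\mu }(p_{\text {T}} ^i, \eta ^i)\) are the electron and muon selection efficiency factors for the kinematics of the lepton being replaced in event i. The trigger and selection efficiency correction factors are derived from the events in an inclusive on-Z selection (\(81<m_{\ell \ell }<101\, \text {GeV}\), \(\ge 2\) signal jets), according to:

\[ k_{e}(p_{\text {T}}, \eta ) = \sqrt{\frac{N_{ee}^{\text {meas}}(p_{\text {T}}, \eta )}{N_{\mu \mu }^{\text {meas}}(p_{\text {T}}, \eta )}}, \qquad k_{\mu }(p_{\text {T}}, \eta ) = \sqrt{\frac{N_{\mu \mu }^{\text {meas}}(p_{\text {T}}, \eta )}{N_{ee}^{\text {meas}}(p_{\text {T}}, \eta )}}, \qquad \alpha (p_{\text {T}}, \eta ) = \frac{\sqrt{\epsilon ^{\text {trig}}_{ee}(p_{\text {T}}^{\ell _1}, \eta ^{\ell _1})\,\epsilon ^{\text {trig}}_{\mu \mu }(p_{\text {T}}^{\ell _1}, \eta ^{\ell _1})}}{\epsilon ^{\text {trig}}_{e\mu }(p_{\text {T}}^{\ell _1}, \eta ^{\ell _1})}, \]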

where \(\epsilon ^{\text {trig}}_{ee/\mu \mu /e\mu }\) is the trigger efficiency as a function of the leading-lepton (\(\ell _1\)) kinematics and \(N_{ee}^{\text {meas}}\) \((N_{\mu \mu }^{\text {meas}})\) is the number of ee \((\mu \mu )\) data events in the inclusive on-Z region outlined above; \(\epsilon ^{\text {trig}}_{e\mu }\) is measured using the corresponding DF selection in the same mass window. Here \(k_{e}(p_{\text {T}}, \eta )\) and \(k_{\mu }(p_{\text {T}}, \eta )\) are calculated separately for leading and sub-leading leptons. The correction factors are typically within 10% of unity, except in the region \(|\eta |<0.1\), where, because of a lack of muon spectrometer coverage, they deviate by up to 50% from unity. To account for the extrapolation from \(H_{\text {T}} >1100\) \(\text {Ge}\text {V}\) and \(E_{\text {T}}^{\text {miss}} >100\) \(\text {Ge}\text {V}\) to \(H_{\text {T}} >1200\) \(\text {Ge}\text {V}\) and \(E_{\text {T}}^{\text {miss}} >200\) \(\text {Ge}\text {V}\) going from CR-FS-high to SR-high, an additional factor, \(f_{\text {SR}}\), derived from simulation, is applied as given in Eq. 2:

\[ N^{\text {est}}_{\text {SR-high}} = f_{\text {SR}}\, N^{\text {est}}_{\text {CR-FS-high}}. \tag{2} \]

In CR-FS-high this extrapolation factor is found to be constant over the full \(m_{\ell \ell }\) range.
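The flavour-symmetry estimate for a single \(m_{\ell \ell }\) bin can be sketched as follows. This is an illustrative Python sketch with hypothetical function names. It assumes the square-root forms \(k_e=\sqrt{N_{ee}^{\text {meas}}/N_{\mu \mu }^{\text {meas}}}\), \(k_\mu = 1/k_e\) and \(\alpha =\sqrt{\epsilon ^{\text {trig}}_{ee}\epsilon ^{\text {trig}}_{\mu \mu }}/\epsilon ^{\text {trig}}_{e\mu }\) for the correction factors, applies the factor 1/2 from the 1:1:2 ratio, subtracts non-FS MC events, and multiplies by \(f_{\text {SR}}\) (taken as 1 outside SR-high).

```python
import math


def k_factors(n_ee_meas, n_mumu_meas):
    """Assumed selection-efficiency factors (k_e, k_mu) for one (pT, eta) bin."""
    k_e = math.sqrt(n_ee_meas / n_mumu_meas)
    return k_e, 1.0 / k_e  # k_mu = sqrt(N_mumu / N_ee)


def alpha_factor(eff_ee, eff_mumu, eff_emu):
    """Assumed trigger factor alpha = sqrt(eps_ee * eps_mumu) / eps_emu."""
    return math.sqrt(eff_ee * eff_mumu) / eff_emu


def fs_estimate(data_weights, nonfs_mc_weights, f_sr=1.0):
    """Flavour-symmetric estimate for one SF channel in one m_ll bin.

    data_weights: k * alpha for each e-mu data event in the CR bin.
    nonfs_mc_weights: the same products for non-FS MC events (already
        multiplied by their MC event weights), which are subtracted.
    The factor 0.5 reflects the 1:1:2 ee:mumu:emu ratio.
    """
    return f_sr * 0.5 * (sum(data_weights) - sum(nonfs_mc_weights))
```

With these assumed forms, if the on-Z yields scale as \(N_{ee}^{\text {meas}}\propto \epsilon _e^2\,\epsilon ^{\text {trig}}_{ee}\) and \(N_{\mu \mu }^{\text {meas}}\propto \epsilon _\mu ^2\,\epsilon ^{\text {trig}}_{\mu \mu }\), the product \(k_e\alpha \) reduces to \((\epsilon _e/\epsilon _\mu )(\epsilon ^{\text {trig}}_{ee}/\epsilon ^{\text {trig}}_{e\mu })\): exactly the factor needed to turn an \(e\mu \) event into an ee one.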

The FS method is validated by performing a closure test using MC simulated events, with FS simulation in the \(e\mu \) channel being scaled accordingly to predict the expected contribution in the SRs. The results of this closure test can be seen on the left of Fig. 4, where the \(m_{\ell \ell }\) distribution is well modelled after applying the FS method to the \(e\mu \) simulation. This holds in particular in SR-high, where the \(E_{\text {T}}^{\text {miss}}\)- and \(H_{\text {T}}\)-based extrapolation is applied. The small differences between the predictions and the observed distributions are used to assign an MC non-closure uncertainty to the estimate. To further validate the FS method, the full procedure is applied to data in VR-low, VR-medium and VR-high (defined in Table 3), which probe lower \(E_{\text {T}}^{\text {miss}}\) but otherwise have identical kinematic requirements. The FS contribution in these three VRs is estimated using three analogous \(e\mu \) regions: VR-FS-low, VR-FS-med and VR-FS-high, also defined in Table 3. On the right of Fig. 4, the estimate taken from \(e\mu \) data is shown to model the SF data well.

Validation of the flavour-symmetry method using MC simulation (left) and data (right), in SR-low and VR-low (top), SR-medium and VR-medium (middle), and SR-high and VR-high (bottom). On the left the flavour-symmetry estimate from \(t\bar{t}\), Wt, WW and \(Z\rightarrow \tau \tau \) MC samples in the \(e\mu \) channel is compared with the SF distribution from these MC samples. The MC statistical uncertainty is indicated by the hatched band. In the data plots, all uncertainties in the background expectation are included in the hatched band. The bottom panel of each figure shows the ratio of the observation to the prediction. In cases where the data point is not accommodated by the scale of this panel, an arrow indicates the direction in which the point is out of range. The last bin always contains the overflow

For the low-\(p_{\text {T}}\) search, FS processes constitute the dominant background in SRC, comprising \(>90\%\) \(t\bar{t}\), \(\sim 8\%\) Wt, with a very small contribution from WW and \(Z\rightarrow \tau \tau \). These backgrounds are modelled using MC simulation, with the dominant \(t\bar{t}\) and Wt components being normalised to data in dedicated \(e\mu \) CRs. The top-quark background normalisation in SRC is taken from CRC, while CRC-MET is used to extract the top-quark background normalisation for SRC-MET. The modelling of these backgrounds is tested in four VRs: VRA, VRA2, VRB and VRC, where the normalisation for \(t\bar{t}\) and Wt is \(1.00\pm 0.22\), \(1.01\pm 0.13\), \(1.00\pm 0.21\) and \(0.86\pm 0.13\), respectively, calculated from identical regions in the \(e\mu \) channel. Figure 5 shows a comparison between data and prediction in these four VRs. VRA probes low \(p_{\text {T}}^{\ell \ell }\) in the range equivalent to that in SRC, but at lower \(E_{\text {T}}^{\text {miss}}\), while VRB and VRC are used to check the background modelling at \(p_{\text {T}}^{\ell \ell }\) \(>20\) \(\text {Ge}\text {V}\), but with \(E_{\text {T}}^{\text {miss}}\) between 250 and 500 \(\text {Ge}\text {V}\). Owing to poor background modelling at very low \(m_{\ell \ell }\) and \(p_{\text {T}}^{\ell \ell }\), the \(m_{\ell \ell }\) range in VRA and SRC does not go below 30 \(\text {Ge}\text {V}\).
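The normalisation of the top-quark background to an \(e\mu \) region can be illustrated with a simplified single-bin counting version. This is a sketch under stated assumptions: the function name is hypothetical, only the Poisson uncertainty of the data count is propagated, and in practice the normalisation may be determined within the likelihood fit together with systematic uncertainties, which this sketch ignores.

```python
import math


def top_normalisation(n_data, n_other_mc, n_top_mc):
    """Scale factor for ttbar + Wt MC from an e-mu control region.

    Simplified counting estimate: subtract the non-top MC prediction from the
    observed data and divide by the top MC prediction. Only the Poisson
    uncertainty of the data count is propagated.
    """
    mu = (n_data - n_other_mc) / n_top_mc
    err = math.sqrt(n_data) / n_top_mc
    return mu, err
```

The resulting factor would then scale the \(t\bar{t}\) and Wt MC predictions in the corresponding SF region, while the \(m_{\ell \ell }\) shape is still taken from simulation.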

Validation of the background modelling for the low-\(p_{\text {T}}\) analysis in VRA (top left), VRA2 (top right), VRB (bottom left) and VRC (bottom right) in the SF channels. The \(t\bar{t}\) and Wt backgrounds are normalised in \(e\mu \) data samples for which the requirements are otherwise the same as in the VR in question. All uncertainties in the background expectation are included in the hatched band. The last bin always contains the overflow

7.2 \(Z/\gamma ^{*}+\text {jets}\) background

The \(Z/\gamma ^{*}+\text {jets}\) processes make up as much as \(10\%\) of the background in the on-Z \(m_{\ell \ell }\) bins in SR-low, SR-medium and SR-high. For the high-\(p_{\text {T}}\) analysis this background is estimated using a data-driven method that takes \(\gamma +\text {jets}\) events in data to model the \(E_{\text {T}}^{\text {miss}}\) distribution of \(Z/\gamma ^{*}+\text {jets}\). These two processes have similar event topologies, with a well-measured object recoiling against a hadronic system, and both tend to have \(E_{\text {T}}^{\text {miss}}\) that stems from jet mismeasurements and neutrinos in hadron decays. In this method, three control regions (\(\hbox {CR}\gamma \hbox {-low}\), \(\hbox {CR}\gamma \hbox {-medium}\), \(\hbox {CR}\gamma \hbox {-high}\)) are constructed, which contain at least one photon and no leptons. They have the same kinematic selection as their corresponding SRs, with the exception of the \(E_{\text {T}}^{\text {miss}}\) and \(\Delta \phi (\text {jet}_{12},{\varvec{p}}_{\mathrm {T}}^\mathrm {miss})\) requirements. Detailed definitions of these regions are given in Table 3.

The \(\gamma +\text {jets}\) events in \(\hbox {CR}\gamma \hbox {-low}\), \(\hbox {CR}\gamma \hbox {-medium}\) and \(\hbox {CR}\gamma \hbox {-high}\) are reweighted such that the photon \(p_{\text {T}}\) distribution matches the \(Z/\gamma ^{*}+\text {jets}\) dilepton \(p_{\text {T}} \) distribution of events in CRZ-low, CRZ-medium and CRZ-high, respectively. This procedure accounts for small differences in event-level kinematics between the \(\gamma +\text {jets}\) events and \(Z/\gamma ^{*}+\text {jets}\) events, which arise mainly from the mass of the Z boson. Following this, to account for the difference in resolution between photons, electrons, and muons, which can be particularly significant at high boson \(p_{\text {T}}\), the photon \(p_{\text {T}}\) is smeared according to a \(Z\rightarrow ee\) or \(Z\rightarrow \mu \mu \) resolution function. The smearing function is derived by comparing the \({\varvec{p}}_{\mathrm {T}}^\mathrm {miss}\)-projection along the boson momentum in \(Z/\gamma ^{*}+\text {jets}\) and \(\gamma +\text {jets}\) MC events in a 1-jet control region with no other event-level kinematic requirements. A deconvolution procedure is used to avoid including the photon resolution in the Z boson’s \(p_{\text {T}}\) resolution function. For each event, a photon \(p_{\text {T}}\) smearing \(\Delta p_{\text {T}} \) is obtained by sampling the smearing function. The photon \(p_{\text {T}}\) is shifted by \(\Delta p_{\text {T}} \), and the component of the \({\varvec{p}}_{\mathrm {T}}^\mathrm {miss}\) vector parallel to the photon is correspondingly adjusted by \(-\Delta p_{\text {T}} \).
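The reweighting and smearing steps can be illustrated with a short Python sketch. All names are hypothetical, the two histograms are assumed to be pre-normalised with shared bin edges, and the Gaussian in `sample_delta_pt` is a placeholder for the deconvolved \(Z\rightarrow ee\) or \(Z\rightarrow \mu \mu \) resolution function, which is not given in the text. The shift \(\Delta p_{\text {T}}\) is applied along the photon direction and removed from \({\varvec{p}}_{\mathrm {T}}^\mathrm {miss}\) along the same axis, so the vector sum of the photon and \({\varvec{p}}_{\mathrm {T}}^\mathrm {miss}\) is unchanged.

```python
import bisect
import math
import random


def boson_pt_weight(photon_pt, edges, z_hist, gamma_hist):
    """Weight that makes the photon pT spectrum match the dilepton pT spectrum.

    z_hist and gamma_hist are normalised histograms sharing the bin `edges`;
    underflow and overflow are clamped to the first and last bins.
    """
    i = bisect.bisect_right(edges, photon_pt) - 1
    i = min(max(i, 0), len(z_hist) - 1)
    return z_hist[i] / gamma_hist[i] if gamma_hist[i] > 0 else 0.0


def sample_delta_pt(photon_pt, rng, rel_resolution=0.02):
    """Placeholder smearing: Gaussian with a hypothetical 2% relative width."""
    return rng.gauss(0.0, rel_resolution * photon_pt)


def smear_photon(photon_px, photon_py, met_px, met_py, delta_pt):
    """Shift the photon pT by delta_pt along its direction; shift pTmiss by -delta_pt."""
    pt = math.hypot(photon_px, photon_py)
    ux, uy = photon_px / pt, photon_py / pt  # unit vector along the photon
    new_photon = (photon_px + delta_pt * ux, photon_py + delta_pt * uy)
    new_met = (met_px - delta_pt * ux, met_py - delta_pt * uy)
    return new_photon, new_met
```

A typical per-event use would be `w = boson_pt_weight(...)`, then `smear_photon(..., sample_delta_pt(pt, rng))`, after which \(E_{\text {T}}^{\text {miss}}\) is recomputed from the updated vector.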

Following this smearing and reweighting procedure, the \(E_{\text {T}}^{\text {miss}}\) of each \(\gamma +\text {jets}\) event is recalculated, and the final \(E_{\text {T}}^{\text {miss}}\) distribution is obtained after applying the \(\Delta \phi (\text {jet}_{12},{\varvec{p}}_{\mathrm {T}}^\mathrm {miss} ) > 0.4\) requirement. For each SR, the resulting \(E_{\text {T}}^{\text {miss}}\) distribution is normalised to data in the corresponding CRZ before the SR \(E_{\text {T}}^{\text {miss}}\) selection is applied. The \(m_{\ell \ell }\) distribution is modelled by binning the \(m_{\ell \ell }\) in \(Z/\gamma ^{*}+\text {jets}\) MC events as a function of the \({\varvec{p}}_{\mathrm {T}}^\mathrm {miss}\)-projection along the boson momentum, with this being used to assign an \(m_{\ell \ell }\) value to each \(\gamma +\text {jets}\) event via a random sampling of the corresponding distribution. The \(m_{\mathrm {T2}}\) distribution is modelled by assigning leptons to the event, with the direction of the leptons drawn from a flat distribution in the Z boson rest frame. The process is repeated until both leptons fall into the detector acceptance after boosting to the lab frame.
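The \(m_{\ell \ell }\) assignment and the lepton generation used for \(m_{\mathrm {T2}}\) can be sketched as follows. Assumptions not stated in the text: the pseudo-Z boson takes the photon’s momentum and the sampled \(m_{\ell \ell }\) as its mass, the leptons are massless, the acceptance is approximated by hypothetical cuts \(p_{\text {T}}>10\) \(\text {Ge}\text {V}\) and \(|\eta |<2.5\), and a retry cap is added so that the loop always terminates. All function names are hypothetical.

```python
import math
import random


def sample_mll(mll_values, weights, rng):
    """Draw an m_ll value from a binned Z MC distribution (one pTmiss-projection bin)."""
    return rng.choices(mll_values, weights=weights, k=1)[0]


def boost(e, px, py, pz, bx, by, bz):
    """Boost the four-vector (e, px, py, pz) by the velocity (bx, by, bz), with c = 1."""
    b2 = bx * bx + by * by + bz * bz
    if b2 == 0.0:
        return e, px, py, pz
    gamma = 1.0 / math.sqrt(1.0 - b2)
    bp = bx * px + by * py + bz * pz
    coef = (gamma - 1.0) * bp / b2 + gamma * e
    return gamma * (e + bp), px + coef * bx, py + coef * by, pz + coef * bz


def pt_eta(px, py, pz):
    """Transverse momentum and pseudorapidity of a three-vector."""
    pt = math.hypot(px, py)
    if pt == 0.0:
        return 0.0, math.copysign(math.inf, pz)
    return pt, math.asinh(pz / pt)


def assign_leptons(z_pt, z_eta, z_phi, mll, rng,
                   min_pt=10.0, max_abs_eta=2.5, max_tries=1000):
    """Decay a pseudo-Z isotropically in its rest frame until both leptons are accepted.

    Returns two massless lab-frame four-vectors (e, px, py, pz), or None if no
    accepted configuration is found within max_tries.
    """
    px_z, py_z = z_pt * math.cos(z_phi), z_pt * math.sin(z_phi)
    pz_z = z_pt * math.sinh(z_eta)
    e_z = math.sqrt(mll ** 2 + z_pt ** 2 + pz_z ** 2)
    beta = (px_z / e_z, py_z / e_z, pz_z / e_z)
    p = mll / 2.0  # each massless lepton carries half the mass in the rest frame
    for _ in range(max_tries):
        cos_t = rng.uniform(-1.0, 1.0)  # flat in cos(theta) and phi -> isotropic
        sin_t = math.sqrt(1.0 - cos_t ** 2)
        phi = rng.uniform(0.0, 2.0 * math.pi)
        d = (p * sin_t * math.cos(phi), p * sin_t * math.sin(phi), p * cos_t)
        leptons = [boost(p, s * d[0], s * d[1], s * d[2], *beta) for s in (1.0, -1.0)]
        kinematics = [pt_eta(px, py, pz) for _, px, py, pz in leptons]
        if all(pt > min_pt and abs(eta) < max_abs_eta for pt, eta in kinematics):
            return leptons
    return None
```

Because the two leptons are back to back with energy \(m_{\ell \ell }/2\) in the rest frame, their lab-frame sum reproduces the pseudo-Z four-momentum exactly, so the assigned \(m_{\ell \ell }\) is preserved.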

The full smearing, reweighting, and \(m_{\ell \ell }\) assignment procedure is applied to both the \(V\gamma \) MC and the \(\gamma +\text {jets}\) data events. After applying all corrections to both samples, the \(V\gamma \) contribution to the \(\gamma +\text {jets}\) data sample is subtracted to remove contamination from the main backgrounds with real \(E_{\text {T}}^{\text {miss}}\) from neutrinos. Contamination by events with fake photons in these \(\gamma +\text {jets}\) data samples is small, and as such this contribution is neglected.

The procedure is validated using \(\gamma +\text {jets}\) and \(Z/\gamma ^{*}+\text {jets}\) MC events. For this validation, the \(\gamma +\text {jets}\) MC simulation is reweighted according to the \(p_{\text {T}}\) distribution given by the \(Z/\gamma ^{*}+\text {jets}\) MC simulation. The \(Z/\gamma ^{*}+\text {jets}\) \(E_{\text {T}}^{\text {miss}}\) distribution in MC events can be seen on the left of Fig. 6 and is found to be well reproduced by \(\gamma +\text {jets}\) MC events. In addition, three VRs, VR-\(\Delta \phi \)-low, VR-\(\Delta \phi \)-medium and VR-\(\Delta \phi \)-high, which are orthogonal to SR-low, SR-medium and SR-high owing to the inverted \(\Delta \phi (\text {jet}_{12},{\varvec{p}}_{\mathrm {T}}^\mathrm {miss})\) requirement, are used to validate the method with data. Here too, as shown on the right of Fig. 6, good agreement is seen between the \(Z/\gamma ^{*}+\text {jets}\) prediction from \(\gamma +\text {jets}\) data and the data in the three VRs. The systematic uncertainties associated with this method are described in Sect. 8.

Left, the \(E_{\text {T}}^{\text {miss}}\) spectrum in \(Z/\gamma ^{*}+\text {jets}\) MC simulation compared with that of the \(\gamma +\text {jets}\) method applied to \(\gamma +\text {jets}\) MC simulation in SR-low (top), SR-medium (middle) and SR-high (bottom). No selection on \(E_{\text {T}}^{\text {miss}}\) is applied. The error bars on the points indicate the statistical uncertainty of the \(Z/\gamma ^{*}+\text {jets}\) MC simulation, and the hatched uncertainty bands indicate the statistical and reweighting systematic uncertainties of the \(\gamma +\)jet background method. Right, the \(E_{\text {T}}^{\text {miss}}\) spectrum when the method is applied to data in VR-\(\Delta \phi \)-low (top), VR-\(\Delta \phi \)-medium (middle) and VR-\(\Delta \phi \)-high (bottom). The bottom panel of each figure shows the ratio of observation (left, in MC simulation; right, in data) to prediction. In cases where the data point is not accommodated by the scale of this panel, an arrow indicates the direction in which the point is out of range. The last bin always contains the overflow

While the \(\gamma +\text {jets}\) method is used in the high-\(p_{\text {T}}\) analysis, Sherpa \(Z/\gamma ^{*}+\text {jets}\) simulation is used to model this background in the low-\(p_{\text {T}}\) analysis. This background is negligible in the very low \(p_{\text {T}}^{\ell \ell }\) SRC, and while it can contribute up to \(\sim 30\%\) in some \(m_{\ell \ell }\) bins in SRC-MET, this is in general only a fraction of a small total number of expected events. In order to validate the \(Z/\gamma ^{*}+\text {jets}\) estimate in this low-\(p_{\text {T}}\) region, the data are compared to the MC prediction in VR-\(\Delta \phi \), where the addition of a b-tagged-jet veto is used to increase the \(Z/\gamma ^{*}+\text {jets}\) event fraction. The resulting background prediction in this region is consistent with the data.

7.3 Fake-lepton background

Events from semileptonic \(t\bar{t}\), \(W\rightarrow \ell \nu \) and single-top (s- and t-channel) processes enter the dilepton channels via lepton “fakes”. These can include misidentified hadrons, converted photons or non-prompt leptons from heavy-flavour decays. In the high-\(p_{\text {T}}\) SRs the contribution from fake leptons is negligible, but fakes can contribute up to \(\sim 12\%\) in SRC and SRC-MET. In the low-\(p_{\text {T}}\) analysis this background is estimated using the matrix method, detailed in Ref. [87]. In this method a control sample is constructed using baseline leptons, thereby enhancing the probability of selecting a fake lepton compared with the signal-lepton selection. For each relevant CR, VR or SR, the region-specific kinematic requirements are placed upon this sample of baseline leptons. The events in this sample in which the selected leptons subsequently pass (\(N_{\text {pass}}\)) or fail (\(N_{\text {fail}}\)) the signal-lepton requirements of Sect. 5 are then counted. In the case of a one-lepton selection, the number of fake-lepton events (\(N_{\text {pass}}^{\text {fake}}\)) in a given region is then estimated according to:

\[ N_{\text {pass}}^{\text {fake}} = \frac{\epsilon ^{\text {fake}}}{\epsilon ^{\text {real}} - \epsilon ^{\text {fake}}}\left[ \epsilon ^{\text {real}} N_{\text {fail}} - \left( 1-\epsilon ^{\text {real}}\right) N_{\text {pass}}\right] . \]

Here \(\epsilon ^{\text {real}}\) is the relative identification efficiency (from baseline to signal) for genuine, prompt (“real”) leptons and \(\epsilon ^{\text {fake}}\) is the relative identification efficiency (again from baseline to signal) with which non-prompt leptons or jets are misidentified as prompt leptons. This principle is then extended to a dilepton selection by using a four-by-four matrix to account for the various possible real–fake combinations of the two leading leptons in an event.
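The one-lepton formula and its dilepton extension can be sketched in pure Python. Assuming the two leptons’ efficiencies are independent, the four-by-four matrix that maps the true (real/fake) combinations to the observed (pass/fail) combinations is the Kronecker product of two two-by-two matrices, so its inverse is the Kronecker product of their inverses. Function names are hypothetical, and in practice the efficiencies would be looked up in \(p_{\text {T}}\) and \(\eta \) for each lepton.

```python
def fake_pass_single(n_pass, n_fail, eff_real, eff_fake):
    """One-lepton matrix method: fake-lepton yield in the pass (signal) sample."""
    return eff_fake * (eff_real * n_fail - (1.0 - eff_real) * n_pass) / (eff_real - eff_fake)


def inverse_2x2(eff_real, eff_fake):
    """Inverse of [[r, f], [1 - r, 1 - f]], which maps (N_real, N_fake) to (N_pass, N_fail)."""
    det = eff_real - eff_fake  # r(1 - f) - f(1 - r)
    return [[(1.0 - eff_fake) / det, -eff_fake / det],
            [-(1.0 - eff_real) / det, eff_real / det]]


def fake_pass_dilepton(counts, eff1, eff2):
    """Dilepton matrix method.

    counts: observed yields ordered (pass-pass, pass-fail, fail-pass, fail-fail),
        the first (second) label referring to the leading (sub-leading) lepton.
    eff1, eff2: (eff_real, eff_fake) for the leading and sub-leading lepton.
    Returns the number of pass-pass events containing at least one fake lepton.
    """
    a, b = inverse_2x2(*eff1), inverse_2x2(*eff2)
    # Inverse of a Kronecker product = Kronecker product of the inverses.
    # Rows are true states (RR, RF, FR, FF); columns are observed states.
    inv4 = [[a[i // 2][j // 2] * b[i % 2][j % 2] for j in range(4)] for i in range(4)]
    n_rr, n_rf, n_fr, n_ff = (sum(inv4[i][j] * counts[j] for j in range(4)) for i in range(4))
    (r1, f1), (r2, f2) = eff1, eff2
    return r1 * f2 * n_rf + f1 * r2 * n_fr + f1 * f2 * n_ff
```

The pass-pass yield of genuine real–real events, \(\epsilon ^{\text {real}}_1\epsilon ^{\text {real}}_2 N_{RR}\), is deliberately excluded, since that component is already covered by the prompt-lepton backgrounds modelled elsewhere.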

The real-lepton efficiency, \(\epsilon ^{\text {real}}\), is measured in \(Z\rightarrow \ell \ell \) data events using a tag-and-probe method in CR-real, defined in Table 4. In this region the \(p_{\text {T}}\) of the leading lepton is required to be \(>40\) \(\text {Ge}\text {V}\), and only events with exactly two SFOS leptons are selected. The efficiency for fake leptons, \(\epsilon ^{\text {fake}}\), is measured in CR-fake, a region enriched with fake leptons by requiring same-sign lepton pairs. The lepton \(p_{\text {T}}\) requirements are the same as those in CR-real, with the leading lepton being tagged as the “real” lepton and the fake-lepton efficiency being evaluated using the sub-leading lepton in the event. A requirement of \(E_{\text {T}}^{\text {miss}} <125~\text {Ge}\text {V}\) is used to reduce possible contamination from non-SM processes (e.g. SUSY). In this region, the background due to prompt-lepton production, estimated from MC simulation, is subtracted from the total data contribution. Prompt-lepton production makes up \(7\%\) (\(10\%\)) of the baseline electron (muon) sample and \(10\%\) (\(60\%\)) of the signal electron (muon) sample in CR-fake. From the resulting data sample the fraction of events in which the baseline leptons pass the signal selection requirements yields the fake-lepton efficiency. The \(p_{\text {T}}\) and \(\eta \) dependence of both fake- and real-lepton efficiencies is taken into account.
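The fake-efficiency measurement with prompt subtraction amounts to a ratio of background-subtracted counts in each \(p_{\text {T}}\) and \(\eta \) bin. In the sketch below the function name is hypothetical and the yields are invented for illustration, chosen only so that the prompt fractions match those quoted for muons (10% of baseline, 60% of signal).

```python
def fake_efficiency(n_signal_data, n_baseline_data, n_signal_prompt_mc, n_baseline_prompt_mc):
    """Baseline-to-signal efficiency for fake leptons in one (pT, eta) bin of CR-fake.

    The MC-predicted prompt-lepton contribution is subtracted from the data in
    both the numerator (signal leptons) and the denominator (baseline leptons).
    """
    numerator = n_signal_data - n_signal_prompt_mc
    denominator = n_baseline_data - n_baseline_prompt_mc
    if denominator <= 0:
        raise ValueError("no fake-lepton events left after prompt subtraction")
    return numerator / denominator


# Hypothetical muon yields in one bin.
raw = 100 / 1000
corrected = fake_efficiency(100, 1000, 0.60 * 100, 0.10 * 1000)
```

Here the uncorrected ratio is 0.10, whereas the prompt-subtracted efficiency is \(40/900\approx 0.044\): when prompt leptons dominate the signal sample, omitting the subtraction would overestimate \(\epsilon ^{\text {fake}}\) by more than a factor of two.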

This method is validated in an OS VR, VR-fakes, which covers a region of phase space similar to that of the low-\(p_{\text {T}}\) SRs, but with a DF selection. The left panel of Fig. 7 shows the level of agreement between data and prediction in this region. In the SF channels, an SS selection is used to obtain a VR, VR-SS in Table 4, dominated by fake leptons. The data-driven prediction is close to the data in this region, as shown on the right of Fig. 7. The large systematic uncertainty in this region is mainly from the flavour composition, as described in Sect. 8.

Validation of the data-driven fake-lepton background for the low-\(p_{\text {T}}\) analysis. The \(m_{\ell \ell }\) distribution in VR-fakes (left) and VR-SS (right). Processes with two prompt leptons are modelled using MC simulation. The hatched band indicates the total systematic and statistical uncertainty of the background prediction. The last bin always contains the overflow

7.4 Diboson and rare top processes

The remaining SM background contribution in the SRs is due to WZ / ZZ diboson production and rare top processes (\(t\bar{t} Z\), \(t\bar{t} W\) and \(t\bar{t} WW\)). The rare top processes contribute \(<10\%\) of the SM expectation in the SRs and are taken directly from MC simulation.

The contribution from the production of WZ / ZZ dibosons is generally small in the SRs, but in the on-Z bins in the high-\(p_{\text {T}}\) SRs it is up to \(70\%\) of the expected background, whereas in SRC-MET it is up to \(40\%\) of the expected background. These backgrounds are estimated from MC simulation, and are validated in VRs with three-lepton (VR-WZ) and four-lepton (VR-ZZ) requirements, as defined in Table 3. VR-WZ, with \(H_{\text {T}} >200\) \(\text {Ge}\text {V}\), forms a WZ-enriched region in a kinematic phase space as close as possible to the high-\(p_{\text {T}}\) SRs. In VR-ZZ an \(E_{\text {T}}^{\text {miss}} <100\) \(\text {Ge}\text {V}\) requirement is used to suppress WZ and top processes to form a region with high purity in ZZ production. The yields and kinematic distributions observed in these regions are well modelled by MC simulation. In particular, the \(E_{\text {T}}^{\text {miss}}\), \(H_{\text {T}}\), jet multiplicity, and dilepton \(p_{\text {T}} \) distributions show good agreement. For the low-\(p_{\text {T}}\) analysis, VR-WZ-low-\(p_{\text {T}}\) and VR-ZZ-low-\(p_{\text {T}}\), defined in Table 4, are used to check the modelling of these processes at low lepton \(p_{\text {T}}\), and good modelling is also observed. Figure 8 shows the level of agreement between data and prediction in these validation regions.

The observed and expected yields in the diboson VRs. The data are compared to the sum of the expected backgrounds. The observed deviation from the expected yield normalised to the total uncertainty is shown in the bottom panel. The hatched uncertainty band includes the statistical and systematic uncertainties of the background prediction

8 Systematic uncertainties

The data-driven background estimates are subject to uncertainties associated with the methods employed and the limited number of events used in their estimation. The dominant source of uncertainty for the flavour-symmetry-based background estimate in the high-\(p_{\text {T}}\) SRs is due to the limited statistics in the corresponding DF CRs, yielding an uncertainty of between 10 and \(90\%\) depending on the \(m_{\ell \ell }\) range in question. Other systematic uncertainties assigned to this background estimate include those due to MC closure, the measurement of the efficiency correction factors and the extrapolation in \(E_{\text {T}}^{\text {miss}}\) and \(H_{\text {T}}\) in the case of SR-high.
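To give a sense of scale, if the \(e\mu \) events are treated as an unweighted Poisson count \(N_{e\mu }\) (a simplification that ignores the correction factors and the MC subtraction), the relative statistical uncertainty of the flavour-symmetry estimate is approximately

\[ \frac{\sigma _{N^{\text {est}}}}{N^{\text {est}}} \approx \frac{\tfrac{1}{2}\sqrt{N_{e\mu }}}{\tfrac{1}{2}N_{e\mu }} = \frac{1}{\sqrt{N_{e\mu }}}, \]

so a 10% uncertainty corresponds to about \(N_{e\mu } \approx 100\) events, while an uncertainty of 90% corresponds to only one or two \(e\mu \) events (\(1/0.9^2 \approx 1.2\)).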

Several sources of systematic uncertainty are associated with the data-driven \(Z/\gamma ^{*}+\text {jets}\) background prediction for the high-\(p_{\text {T}}\) analysis. The boson \(p_{\text {T}} \) reweighting procedure is assigned an uncertainty based on a comparison of the nominal results with those obtained by reweighting events using the \(H_{\text {T}}\) distribution instead. For the smearing function an uncertainty is derived by comparing the results obtained using the nominal smearing function derived from MC simulation with those obtained using a smearing function derived from data in a 1-jet control region. The full reweighting and smearing procedure is carried out using \(\gamma +\text {jets}\) MC events such that an MC non-closure uncertainty can be derived by comparing the resulting \(\gamma +\text {jets}\) MC \(E_{\text {T}}^{\text {miss}}\) distribution to that in \(Z/\gamma ^{*}+\text {jets}\) MC events. An uncertainty of 10% is obtained for the \(V\gamma \) backgrounds, based on a data-to-MC comparison in a \(V\gamma \)-enriched control region where events are required to have a photon and one lepton. This uncertainty is propagated to the final \(Z/\gamma ^{*}+\text {jets}\) estimate following the subtraction of the \(V\gamma \) background. Finally, the statistical precision of the estimate also enters as a systematic uncertainty in the final background estimate. Depending on the \(m_{\ell \ell }\) range in question, the uncertainties in the \(Z/\gamma ^{*}+\text {jets}\) prediction can vary from \(\sim 10\%\) to \(>100\%\).

For the low-\(p_{\text {T}}\) analysis the uncertainties in the fake-lepton background stem from the number of events in the regions used to measure the real- and fake-lepton efficiencies, the limited size of the inclusive loose-lepton sample, variations of the prompt-lepton contamination in the region used to measure the fake-lepton efficiency, and variations of the region used to measure the fake-lepton efficiency. For the latter, the nominal fake-lepton efficiency is compared with those measured in regions where the presence of b-tagged jets is either required or explicitly vetoed. This variation of the sample composition via b-tagging gives the largest uncertainty.

Theoretical and experimental uncertainties are taken into account for the signal models, as well as background processes that rely on MC simulation. A \(2.1\%\) uncertainty is applied to the luminosity measurement [25]. The jet energy scale is subject to uncertainties associated with the jet flavour composition, the pile-up and the jet and event kinematics [88]. Uncertainties in the jet energy resolution are included to account for differences between data and MC simulation [88]. An uncertainty in the \(E_{\text {T}}^{\text {miss}}\) soft-term resolution and scale is taken into account [83], and uncertainties due to the lepton energy scales and resolutions, as well as trigger, reconstruction, and identification efficiencies, are also considered. The experimental uncertainties are generally \(<1\%\) in the SRs, with the exception of those associated with the jet energy scale, which can be up to \(14\%\) in the low-\(p_{\text {T}}\) SRs.

In the low-\(p_{\text {T}}\) analysis, theoretical uncertainties are assigned to the \(m_{\ell \ell }\) shape of the \(t\bar{t}\) and Wt backgrounds, which are taken from MC simulation. For these backgrounds, an uncertainty in the parton shower modelling is derived by comparing samples generated with Powheg+Pythia6 and Powheg+Herwig++ [89, 90]. For \(t\bar{t}\), an uncertainty in the hard-scatter process generation is assessed by comparing samples generated with Powheg+Pythia8 and MG5_aMC@NLO+Pythia8. For the Wt background, an interference uncertainty is assessed by comparing samples that use the diagram subtraction scheme with samples that use the diagram removal scheme to estimate interference effects in the single-top production diagrams [91]. Variations of the renormalisation and factorisation scales are taken into account for both \(t\bar{t}\) and Wt.

Also in the low-\(p_{\text {T}}\) analysis, theoretical uncertainties are assigned to the \(Z/\gamma ^{*}+\text {jets}\) background, which is likewise taken from MC simulation. Variations of the renormalisation, resummation and factorisation scales are taken into account, as are uncertainties in the parton shower matching scale. Since the \(Z/\gamma ^{*}+\text {jets}\) background is not normalised to data, a total cross-section uncertainty of 5% is also assigned [92].

The WZ / ZZ processes are assigned a cross-section uncertainty of \(6\%\) [93] and an additional uncertainty of up to \(30\%\) in the SRs, which is based on comparisons between Sherpa and Powheg MC samples. Uncertainties due to the choice of factorisation, resummation and renormalisation scales are calculated by varying the nominal values up and down by a factor of two. The parton shower scheme is assigned an uncertainty from a comparison of samples generated using the schemes proposed in Ref. [39] and Ref. [94]. These scale and parton shower uncertainties are generally \(<20\%\). For rare top processes, a total uncertainty of 26% is assigned to the cross-section [27, 54,55,56].

For signal models, the nominal cross-section and its uncertainty are taken from an envelope of cross-section predictions using different PDF sets and factorisation and renormalisation scales, as described in Ref. [95].
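Ref. [95] defines the exact prescription. As a hedged illustration only, the sketch below assumes the common convention in which each prediction (for example, one per PDF set) contributes a band from its scale variations, the envelope runs from the lowest lower edge to the highest upper edge, and the nominal cross-section and its uncertainty are the midpoint and half-width of that envelope. The function name and input format are hypothetical.

```python
def envelope(bands):
    """Combine per-prediction (lower, upper) cross-section bands into one envelope.

    Assumed convention: nominal = midpoint of the envelope, uncertainty = half-width.
    """
    if not bands:
        raise ValueError("need at least one prediction")
    lower = min(lo for lo, _ in bands)
    upper = max(hi for _, hi in bands)
    return 0.5 * (lower + upper), 0.5 * (upper - lower)
```

With this convention the nominal value need not coincide with the central value of any single prediction.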

The dominant uncertainties differ from one SR to another. For most of the high-\(p_{\text {T}}\) SRs the dominant uncertainty is that due to the limited number of events in the \(e\mu \) CRs used for the flavour-symmetric prediction. Other important uncertainties include the systematic uncertainties associated with this method and the uncertainties in the \(\gamma +\text {jets}\) method used for the \(Z/\gamma ^{*}+\text {jets}\) background prediction. In SRs that include the on-Z \(m_{\ell \ell }\) bin, diboson theory uncertainties also become important. The total uncertainty in the high-\(p_{\text {T}}\) SRs ranges from 12% in the most highly populated SRs to \(>100\%\) in regions where less than one background event is expected. The low-\(p_{\text {T}}\) SRs are generally affected by uncertainties due to the limited size of the MC samples used in the background estimation, which are dominant in SRC-MET. In SRC the theoretical uncertainties in the \(t\bar{t}\) background dominate, and they are also important in SRC-MET. The total background uncertainty in the low-\(p_{\text {T}}\) SRs is typically 10–20% in SRC and 25–35% in SRC-MET.

9 Results

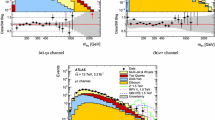

The integrated yields in the high- and low-\(p_{\text {T}}\) signal regions are compared to the expected background in Tables 5 and 6, respectively. The full \(m_{\ell \ell }\) distributions in each of these regions are compared to the expected background in Figs. 9 and 10.

As signal models may produce kinematic endpoints at any value of \(m_{\ell \ell }\), any excess must be searched for across the \(m_{\ell \ell }\) distribution. To do this a “sliding window” approach is used, as described in Sect. 6. The 41 \(m_{\ell \ell }\) windows (10 for SR-low, 9 for SR-medium, 10 for SR-high, 6 for SRC and 6 for SRC-MET) are chosen to make model-independent statements about the possible presence of new physics. The results in these \(m_{\ell \ell }\) windows are summarised in Fig. 11, with the observed and expected yields in the combined \(ee+\mu \mu \) channel for all 41 \(m_{\ell \ell }\) windows. In general the data are consistent with the expected background across the full \(m_{\ell \ell }\) range. The largest excess is observed in SR-medium with \(101<m_{\ell \ell }<201\) \(\text {Ge}\text {V}\), where a total of 18 events are observed in data, compared to an expected \(7.6 \pm 3.2\) events, corresponding to a local significance of \(2\sigma \).
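The quoted local significance comes from the full fit. As a rough cross-check only, the sketch below evaluates the asymptotic profile-likelihood significance for a single Poisson count whose background is constrained by an auxiliary Poisson measurement (an "on/off" model). This swapped-in model, and treating the quoted \(\pm 3.2\) as the only background uncertainty, are assumptions; the function name is hypothetical.

```python
import math


def significance_with_bkg_uncertainty(n, b, sigma_b):
    """Signed local significance for n observed events given b +/- sigma_b expected.

    Asymptotic profile-likelihood significance for a Poisson count whose background
    is constrained by an auxiliary Poisson measurement ("on/off" model).
    A deficit (n < b) is returned as a negative value.
    """
    s2 = sigma_b ** 2
    # First term: n * ln[n (b + s2) / (b^2 + n s2)], taken as 0 when n = 0
    term1 = n * math.log(n * (b + s2) / (b * b + n * s2)) if n > 0 else 0.0
    # Second term: (b^2 / s2) * ln[1 + s2 (n - b) / (b (b + s2))]
    term2 = (b * b / s2) * math.log1p(s2 * (n - b) / (b * (b + s2)))
    q0 = 2.0 * (term1 - term2)
    return math.copysign(math.sqrt(max(q0, 0.0)), n - b)
```

For the SR-medium window (18 observed, \(7.6 \pm 3.2\) expected) this gives roughly \(1.9\sigma \), close to the quoted value, whereas neglecting the background uncertainty would give about \(3.2\sigma \).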

Observed and expected dilepton mass distributions, with the bin boundaries considered for the interpretation, in (top left) SR-low, (top right) SR-medium, and (bottom) SR-high of the edge search. All statistical and systematic uncertainties of the expected background are included in the hatched band. The last bin contains the overflow. One (two) example signal model(s) are overlaid in the top left (top right and bottom) panel(s). For the slepton model, the numbers in parentheses in the legend indicate the gluino and \(\tilde{\chi }_1^0\) masses of the example model point. For the Z model shown, the numbers in parentheses indicate the gluino and \(\tilde{\chi }_2^0\) masses, with the \(\tilde{\chi }_1^0\) mass fixed at 1 \(\text {Ge}\text {V}\) in this model

Observed and expected dilepton mass distributions, with the bin boundaries considered for the interpretation, in (left) SRC and (right) SRC-MET of the low-\(p_{\text {T}}\) edge search. All statistical and systematic uncertainties of the expected background are included in the hatched band. An example signal from the \(Z^{(*)}\) model with \(m(\tilde{g})=1000~\text {Ge}\text {V}\) and \(m(\tilde{\chi }_1^0)=900~\text {Ge}\text {V}\) is overlaid

The observed and expected yields in the (overlapping) \(m_{\ell \ell }\) windows of SR-low, SR-medium, SR-high, SRC and SRC-MET. These are shown for the 29 \(m_{\ell \ell }\) windows for the high-\(p_{\text {T}}\) SRs (top) and the 12 \(m_{\ell \ell }\) windows for the low-\(p_{\text {T}}\) SRs (bottom). The data are compared to the sum of the expected backgrounds. The significance of the difference between the observed and expected yields is shown in the bottom plots. For cases where the p-value is greater than 0.5 (i.e. fewer events are observed than expected), a negative significance is shown. The hatched uncertainty band includes the statistical and systematic uncertainties of the background prediction

Model-independent upper limits at 95% confidence level (CL) on the number of events (\(S^{95}\)) that could be attributed to non-SM sources are derived using the \(\text {CL}_{\text {S}}\) prescription [96], implemented in the HistFitter program [97]. A Gaussian model for nuisance parameters is used for all but two of the uncertainties; the exceptions are the statistical uncertainties in the flavour-symmetry method and in the MC-based backgrounds, which are treated as Poissonian nuisance parameters. This procedure is carried out using the \(m_{\ell \ell }\) windows from the high-\(p_{\text {T}}\) and low-\(p_{\text {T}}\) analyses, neglecting possible signal contamination in the CRs. These upper limits are computed using pseudo-experiments. Upper limits on the visible BSM cross-section \(\langle A\epsilon \mathrm{\sigma }\rangle _\mathrm{obs}^{95}\) are obtained by dividing the observed upper limits on the number of BSM events by the integrated luminosity. Expected and observed upper limits are given in Tables 7 and 8 for the high-\(p_{\text {T}}\) and low-\(p_{\text {T}}\) SRs, respectively. The p-values, which represent the probability that the SM background alone fluctuates to the observed number of events or higher, are also provided, using the asymptotic approximation [86].
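The limits above come from HistFitter with pseudo-experiments and nuisance parameters. As a hedged illustration of the \(\text {CL}_{\text {S}}\) logic only, the sketch below treats a single \(m_{\ell \ell }\) window with a perfectly known background b, where \(\text {CL}_{\text {S}} = \text {CL}_{s+b}/\text {CL}_{b}\) can be evaluated exactly from Poisson probabilities, and then divides \(S^{95}\) by the integrated luminosity of 36.1 fb\(^{-1}\) to obtain a visible cross-section limit. All function names are hypothetical, and the absence of systematic uncertainties means the numbers will not match Tables 7 and 8.

```python
import math

LUMI_FB = 36.1  # integrated luminosity in fb^-1, from the text


def poisson_cdf(n, mu):
    """P(N <= n) for a Poisson mean mu >= 0 (adequate for the small yields of the SRs)."""
    term = math.exp(-mu)
    total = term
    for k in range(1, n + 1):
        term *= mu / k
        total += term
    return total


def cls(s, n_obs, b):
    # CLs = CL_{s+b} / CL_b, where fewer observed events are less signal-like
    return poisson_cdf(n_obs, s + b) / poisson_cdf(n_obs, b)


def s95(n_obs, b, alpha=0.05):
    """Signal yield excluded at 1 - alpha CL in a single-bin counting experiment."""
    lo, hi = 0.0, 1.0
    while cls(hi, n_obs, b) > alpha:  # bracket the root; CLs decreases with s
        hi *= 2.0
    for _ in range(100):  # bisection
        mid = 0.5 * (lo + hi)
        if cls(mid, n_obs, b) > alpha:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def visible_xsec_limit_fb(n_obs, b):
    # Visible cross-section limit <A eps sigma>^95 = S^95 / integrated luminosity
    return s95(n_obs, b) / LUMI_FB
```

For zero observed events the limit is \(\ln 20 \approx 3.0\) events regardless of b, a well-known property of \(\text {CL}_{\text {S}}\), which corresponds to about 0.083 fb here.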

10 Interpretation

In this section, exclusion limits are shown for the SUSY models detailed in Sect. 3. For these model-dependent exclusion limits a shape fit is performed on each of the binned \(m_{\ell \ell }\) distributions in Figs. 9 and 10. The \(\text {CL}_{\text {S}}\) prescription in the asymptotic approximation is used. Experimental uncertainties are treated as correlated between signal and background events. The theoretical uncertainty in the signal cross-section is not included in the limit-setting procedure. Instead, following the initial limit determination, the impact of varying the signal cross-section within its uncertainty is evaluated separately and indicated in the exclusion results. For the high-\(p_{\text {T}}\) analysis, possible signal contamination in the CRs is neglected in the limit-setting procedure; the contamination is found to be negligible for signal points near the exclusion boundaries. For the low-\(p_{\text {T}}\) analysis, signal contamination in the CRs is taken into account in the limit-setting procedure.
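The structure of an asymptotic \(\text {CL}_{\text {S}}\) shape fit can be sketched as follows. This is a simplified stand-in, not the HistFitter fit used here: it drops all nuisance parameters and keeps only a product of Poisson terms over the \(m_{\ell \ell }\) bins, with a signal strength \(\mu \) scaling a fixed signal shape \(s_i\) on top of a background \(b_i\), the one-sided test statistic \(q_\mu \), and \(\text {CL}_{\text {S}}\) from the standard asymptotic formulae using the background-only Asimov dataset \(n_i = b_i\). Names are hypothetical; every \(b_i\) is assumed positive and at least one \(s_i\) positive.

```python
import math


def _phi(x):
    # Standard normal CDF written with erfc so that tail values stay accurate
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def _nll(mu, n, s, b):
    # Binned Poisson negative log-likelihood with constant terms dropped
    return sum(mu * si + bi - ni * math.log(mu * si + bi) for ni, si, bi in zip(n, s, b))


def _mu_hat(n, s, b):
    # Best-fit signal strength restricted to mu >= 0; the score decreases monotonically
    def score(mu):
        return sum(ni * si / (mu * si + bi) - si for ni, si, bi in zip(n, s, b))

    if score(0.0) <= 0.0:
        return 0.0
    lo, hi = 0.0, 1.0
    while score(hi) > 0.0:
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if score(mid) > 0.0 else (lo, mid)
    return 0.5 * (lo + hi)


def q_mu(mu, n, s, b):
    # One-sided statistic for upper limits: zero when the best fit exceeds mu
    mu_hat = _mu_hat(n, s, b)
    if mu_hat > mu:
        return 0.0
    return max(0.0, 2.0 * (_nll(mu, n, s, b) - _nll(mu_hat, n, s, b)))


def cls_asymptotic(mu, n, s, b):
    sq_obs = math.sqrt(q_mu(mu, n, s, b))
    sq_asimov = math.sqrt(q_mu(mu, b, s, b))  # background-only Asimov data: n_i = b_i
    cl_sb = _phi(-sq_obs)  # 1 - Phi(sqrt(q_mu))
    cl_b = _phi(sq_asimov - sq_obs)
    return cl_sb / cl_b


def mu_upper_limit(n, s, b, alpha=0.05):
    lo, hi = 0.0, 1.0
    while cls_asymptotic(hi, n, s, b) > alpha:
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if cls_asymptotic(mid, n, s, b) > alpha:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

If \(s_i\) is the signal prediction at the nominal cross-section, a model point would be excluded at 95% CL when the returned limit on \(\mu \) is below 1.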

The top panel of Fig. 12 shows the exclusion contours in the \(m(\tilde{g})-m(\tilde{\chi }^{0}_{1})\) plane for a simplified model with gluino pair production, where the gluinos decay via sleptons. The exclusion contour shown is derived using a combination of results from the three high-\(p_{\text {T}}\) and two low-\(p_{\text {T}}\) SRs based on the best-expected sensitivity. The low-\(p_{\text {T}}\) SRs drive the limits close to the diagonal, with the high-\(p_{\text {T}}\) SRs taking over at high gluino masses. SR-low provides good sensitivity at high gluino and high LSP masses. Around a gluino mass of 1.8 \(\text {Te}\text {V}\), where the dilepton kinematic edge is expected to occur around 800 \(\text {Ge}\text {V}\), the observed limit falls 200 \(\text {Ge}\text {V}\) below the expected limit. This is explained by a mild excess in data in the highest \(m_{\ell \ell }\) bin of SR-low (\(m_{\ell \ell }>501\) \(\text {Ge}\text {V}\)), which is the bin driving the limit in this region. The region where the low-\(p_{\text {T}}\) search becomes the most sensitive can be seen close to the diagonal, where there is a kink in the contour at \(m(\tilde{g}) \sim 1400\) \(\text {Ge}\text {V}\). A zoomed-in view of the compressed region of phase space, i.e. the region close to the diagonal for this model, is provided in the \(m(\tilde{g})-(m(\tilde{g})-m(\tilde{\chi }^{0}_{1}))\) plane in the bottom panel of Fig. 12. Here the exclusion contour includes only the low-\(p_{\text {T}}\) regions. SRC-MET has the best sensitivity almost everywhere, except at low LSP masses (top left of the bottom panel of Fig. 12), where SRC drives the limit. An exclusion contour derived using a combination of results from the three high-\(p_{\text {T}}\) SRs alone is overlaid, demonstrating the increased sensitivity brought by the low-\(p_{\text {T}}\) analysis.
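The position of the kinematic edge quoted above follows from the sparticle masses in the decay chain. For the two-body cascade \(\tilde{\chi }_2^0 \rightarrow \tilde{\ell }\ell \rightarrow \ell ^+\ell ^- \tilde{\chi }_1^0\) with an on-shell slepton, the standard endpoint is \((m_{\ell \ell }^{\max })^2 = (m(\tilde{\chi }_2^0)^2 - m(\tilde{\ell })^2)(m(\tilde{\ell })^2 - m(\tilde{\chi }_1^0)^2)/m(\tilde{\ell })^2\). The sketch below evaluates it; the \(\tilde{\chi }_2^0\) and slepton masses for a given \((m(\tilde{g}), m(\tilde{\chi }_1^0))\) point are fixed by the model definition in Sect. 3, which is not reproduced here, so they must be supplied by the user. The function name is hypothetical.

```python
import math


def mll_edge(m_chi2, m_slepton, m_chi1):
    """Kinematic endpoint of m_ll for chi2 -> slepton + l -> l l chi1 (on-shell slepton)."""
    if not m_chi2 > m_slepton > m_chi1 >= 0.0:
        raise ValueError("requires m_chi2 > m_slepton > m_chi1 >= 0")
    return math.sqrt((m_chi2**2 - m_slepton**2) * (m_slepton**2 - m_chi1**2)) / m_slepton
```

When the slepton mass equals \(\sqrt{m(\tilde{\chi }_2^0)\,m(\tilde{\chi }_1^0)}\), the endpoint reaches its largest possible value, \(m(\tilde{\chi }_2^0)-m(\tilde{\chi }_1^0)\).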

Expected and observed exclusion contours derived from the combination of the results in the high-\(p_{\text {T}}\) and low-\(p_{\text {T}}\) edge SRs based on the best-expected sensitivity (top) and a zoomed-in view using only the low-\(p_{\text {T}}\) SRs (bottom) for the slepton signal model. The dashed line indicates the expected limits at \(95\%\) CL and the surrounding band shows the \(1\sigma \) variation of the expected limit as a consequence of the uncertainties in the background prediction and the experimental uncertainties in the signal (\(\pm 1\sigma _{\text {exp}}\)). The dotted lines surrounding the observed limit contours indicate the variation resulting from changing the signal cross-section within its uncertainty (\(\pm 1\sigma ^{\text {SUSY}}_{\text {theory}}\)). The shaded area on the upper plot indicates the observed limit on this model from Ref. [15]. In the lower plot the observed and expected contours derived from the high-\(p_{\text {T}}\) SRs alone are overlaid, illustrating the added sensitivity from the low-\(p_{\text {T}}\) SRs. Small differences between the contours in the compressed region are due to differences in interpolation between the top and bottom plots

The top panel of Fig. 13 shows the exclusion contours for the \(Z^{(*)}\) simplified model in the \(m(\tilde{g})-m(\tilde{\chi }^{0}_{1})\) plane, where on- or off-shell Z bosons are expected in the final state. Again, the low-\(p_{\text {T}}\) SRs provide good coverage near the diagonal. SR-medium drives the limits at high gluino mass, reaching beyond 1.6 \(\text {Te}\text {V}\). For this interpretation the contour is mostly driven by the on-Z bin of the three edge SRs. The kink in the exclusion contour at \(m(\tilde{g})=1200\) \(\text {Ge}\text {V}\) occurs where the low-\(p_{\text {T}}\) SRs begin to dominate the sensitivity. A zoomed-in view of the compressed region of phase space, where the low-\(p_{\text {T}}\) SRs dominate the sensitivity, is provided in the \(m(\tilde{g})-(m(\tilde{g})-m(\tilde{\chi }^{0}_{1}))\) plane in the bottom panel of Fig. 13. Here the exclusion contour includes only the low-\(p_{\text {T}}\) regions, and the exclusion contour derived using a combination of results from the three high-\(p_{\text {T}}\) SRs alone is overlaid.

Expected and observed exclusion contours derived from the combination of the results in the high-\(p_{\text {T}}\) and low-\(p_{\text {T}}\) edge SRs based on the best-expected sensitivity (top) and a zoomed-in view using only the low-\(p_{\text {T}}\) SRs (bottom) for the \(Z^{(*)}\) model. The dashed line indicates the expected limits at \(95\%\) CL and the surrounding band shows the \(1\sigma \) variation of the expected limit as a consequence of the uncertainties in the background prediction and the experimental uncertainties in the signal (\(\pm 1\sigma _{\text {exp}}\)). The dotted lines surrounding the observed limit contours indicate the variation resulting from changing the signal cross-section within its uncertainty (\(\pm 1\sigma ^{\text {SUSY}}_{\text {theory}}\)). The shaded area on the upper plot indicates the observed limit on this model from Ref. [15]. In the lower plot the observed and expected contours derived from the high-\(p_{\text {T}}\) SRs alone are overlaid, illustrating the added sensitivity from the low-\(p_{\text {T}}\) SRs. Small differences between the contours in the compressed region are due to differences in interpolation between the top and bottom plots