Abstract

We present an updated global analysis of collinearly factorized nuclear parton distribution functions (PDFs) at next-to-leading order in perturbative QCD. In comparison to our previous fit, EPPS16, the present analysis includes more data from proton-lead collisions at the Large Hadron Collider: 5 TeV double-differential CMS dijet and LHCb D-meson data, as well as 8 TeV CMS W\(^\pm \) data. These new data lead to significantly better-constrained gluon distributions at small and intermediate values of the momentum fraction x, confirming the presence of shadowing and antishadowing for gluons in large nuclei. In addition, we include Jefferson Lab measurements of deeply inelastic scattering which probe nuclear PDFs at large x and low virtualities. For the first time within the Hessian framework, we now also explore the uncertainties of nuclear PDFs due to the errors in the baseline proton PDFs. We release the results of our analysis as a new public parametrization of nuclear PDFs called EPPS21.

Similar content being viewed by others

1 Introduction

Within the past decade, the proton–nucleus (pA) program at the Large Hadron Collider (LHC) has evolved from the first exploratory steps to a level where the measurements are precise enough to constrain and thereby seriously test the universality of nuclear parton distributions (PDFs). While in our previous analysis, EPPS16 [1], the impact of the available LHC data was still somewhat limited (see also Ref. [2]) the situation has undergone a significant change: For example, it is already known that the double differential CMS dijet [3] and LHCb D-meson [4] data will both have a huge impact on the nuclear gluon PDFs [5, 6]. Other recent heavy-flavour measurements such as B-meson and vector-meson production have been identified as potential gluon constraints as well [7, 8]. The recent W\(^\pm \) measurements [9], in turn, have been argued to carry further sensitivity to the flavour separation [10] and strangeness [12] of the nuclear quark distributions. These new LHC pPb data are the main motivation for our updated global analysis of nuclear PDFs.

The fixed-target program at Jefferson Laboratory (JLab) has recently included new measurements of deeply inelastic scattering (DIS) [13,14,15]. These data probe nucleons at large momentum fractions \(x \gtrsim 0.1\), the interaction scale \(Q^2\) being typically lower than in the older fixed-target experiments. Out of these, the latest measurements by the JLab CLAS Collaboration [13] have been studied in the context of nuclear PDFs first in Ref. [16]. No visible evidence of large, nuclear-size dependent \(1/Q^2\)-type higher-twist corrections was found, which also justifies the inclusion of these data in global fits of nuclear PDFs. These data were found to be helpful in disentangling the flavour dependence of the valence-quark nuclear effects. For subsequent further studies on the JLab data, see Refs. [17, 18].

At large interaction scales \(Q \gg \Lambda _\mathrm{QCD}\), the nuclear effects in PDFs do not typically exceed some tens of percents and they are often regarded as “corrections” to the free-proton PDFs. Most of the free-proton fits indeed use data on nuclear targets with varying prescriptions in correcting for the nuclear effects. On the other hand, nuclear-PDF analyses also require a proton PDF as an input and the outcome is bound to carry some sensitivity on how the baseline is chosen. Such intertwining of the nuclear and free-proton PDFs has already been considered within the NNPDF framework: In the NNPDF4.0 [19] analysis of the free-proton PDFs the nuclear-PDF uncertainties were considered as correlated uncertainties following Ref. [20]. In the nNNPDF2.0 analysis [10], on the other hand, the uncertainties from the free-proton PDFs were propagated into nuclear PDFs.Footnote 1 In the present work, we will now carry out the latter within a Hessian prescription. Eventually, in a complete analysis, both the free- and bound-proton PDFs should be fitted simultaneously and the first steps towards this direction have also recently been taken [21, 22].

2 Nuclear PDFs and proton baseline

2.1 Parametrization of nuclear modifications

We write the bound-proton PDF \(f^{\mathrm{p}/A}_i(x,Q^2)\) as a product of the nuclear modification \(R^{\mathrm{p}/A}_i(x,Q^2)\) and the free proton PDF \(f^\mathrm{p}_i(x,Q^2)\),

Here A denotes the mass number of the nucleus and i indexes the parton flavour. Our proton baseline here is the recent set CT18ANLO [23]. The CT18A differs from the default CT18 in that it includes also the ATLAS \(7 \,\mathrm{TeV}\) data on W\(^\pm \)- and Z-boson production [24]. The inclusion of these data was found to impact primarily the strange-quark PDF and to worsen the description of the neutrino-iron dimuon data [25] in which the strange-quark PDF plays a central role. By adopting the version “A” our strange-quark baseline PDF is thus less sensitive to the data on heavy nuclei.

The PDFs of a bound neutron \(f_i^{\mathrm{n}/A}(x,Q^2)\) follow from the bound-proton PDFs by virtue of the approximate isospin symmetry,

The full nuclear PDFs that enter the cross-section calculations are always linear combinations that depend on the number of protons Z and number of neutrons \(N=A-Z\),

We define the nuclear modifications of the full nuclear PDFs by

As in our earlier fits, we prefer to parametrize the nuclear modifications \(R^{\mathrm{p}/A}_i(x,Q_0^2)\) instead of the absolute PDFs \(f^{\mathrm{p}/A}_i(x,Q_0^2)\). The two options are of course fully equivalent but since most of the observables in the analysis are normalized to measurements involving either the free proton or deuteron (whose nuclear effects we neglect – see the last paragraph of this subsection), the relative differences with respect to the free proton PDF are what truly matter.

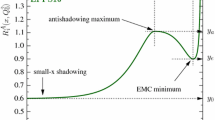

The nuclear modifications are parametrized at the charm pole-mass threshold \(Q_0=m_\mathrm{charm}=1.3 \, \mathrm{GeV}\). The value of \(m_\mathrm{charm}\) is set here by the value adopted in the CT18A analysis [23] to retain a full consistency with the baseline proton PDFs. Coming up with a decent functional form for the parametrization and deciding which parameters can be free is among the biggest challenges in the entire global analysis of nuclear PDFs. On one hand the parametrization should be flexible enough in regions where there are data constraints. On the other hand, the outcome of the fit should be physically feasible. For example, it is reasonable to expect that the nuclear effects are broadly larger in heavy nuclei like lead than what they are in a light nucleus like carbon. Coming up with the functional form finally used in the present analysis is a combination of experience from a entire chain of global fits we have performed in the past [1, 26,27,28,29], and trial and error. Our parametrization is a piecewise-smooth function defined as,

In comparison to EPPS16, we have made some adjustments to the parametrization. First, the small-x part involves the additional factor \(e^{-xa_2/x_a} - e^{-a_2}\), which increases the flexibility at small x [27]. Second, at intermediate values of x we use a functional form that is often used to parametrize the absolute PDF. The first derivatives are taken to be zero at the matching points \(x_a\) and \(x_e\) corresponding to the locations of the anticipated antishadowing maximum and EMC minimum. This fixes four parameters. Apart from the new small-x parameter \(a_2\) and the large-x parameter \(c_0\), the rest of the parameters \(a_i, b_i, c_i\) are expressed in terms of \(y_a\), \(y_e\) and \(y_0\) which correspond to the values of the function at \(x=x_a\), \(x=x_e\) and \(x=0\). The parametrization is illustrated in Fig. 1, where the variation induced by the parameter \(a_2\) is also demonstrated.

For the gluons and valence quarks the \(y_0\) parameters are determined separately for each nucleus by imposing the sum rules,

The rest of the A dependence is encoded into the height parameters \(y_i\) as,

where \(A_\mathrm{ref} = 12\), following our earlier analyses [1, 26,27,28,29]. In other words the nuclear effect – the distance from unity – is assumed to scale as a power law. For strange quarks the small-x exponent \(\gamma _{y_0}\) is modified by

so that the A dependence becomes weaker as \(y_0 \rightarrow 0\). This is to keep the strange-quark PDFs from becoming overly negative which easily leads to negative charm-production cross sections in neutrino–nucleus DIS.

The values of the strong coupling and heavy-quark pole masses are taken to be the same as in the CT18ANLO analysis [23]: the charm mass is set to \(m_c= 1.3\,\mathrm{GeV}\), the bottom-quark mass to \(m_b= 4.75\,\mathrm{GeV}\), and the strong coupling is fixed to \(\alpha _\mathrm{s}(M_\mathrm{Z}) = 0.118\), where \(M_\mathrm{Z}\) is the Z boson mass. At higher scales \(Q^2>Q^2_0\) the nuclear PDFs are obtained through solving the 2-loop [30, 31] DGLAP evolution equations [32,33,34,35] for which we use the method introduced in Ref. [36].

In the course of the analysis we also noticed that the DIS data for Li-6 and He-3 are not optimally reproduced by the monotonic power-law ansatz of Eq. (9). Therefore we have introduced extra parameters, \(f_3\) and \(f_6\), and replace the nuclear modifications \(R^{\mathrm{p}/3}_i(x,Q_0^2)\) and \(R^{\mathrm{p}/6}_i(x,Q_0^2)\) by

for all parton flavours i. The effect is larger for He-3 and keeping \(f_3=1\) would lead to a completely incorrect EMC slope in the case of JLab He-3 data. In total the EPPS21 fit involves \(N_\mathrm{param} = 24\) free parameters, see Table 1 ahead. Out of these 24 only 5 control the A dependence of the parametrization and freeing more – e.g. letting the A dependence of the gluon antishadowing peak to vary independently of the valence quarks – easily destabilizes the fit. Thus, there is more parametrization dependence e.g. in the gluon distributions of small nuclei in contrast to the case of heavy nuclei where the LHC data now provide strong constraints. To better control the A dependence, e.g. pO runs at the LHC would be most welcome [37].

As in EPPS16, the deuteron is still taken to be free from nuclear effects, \(R_i^A(x,Q^2) = 1\). In principle, as done e.g. in Ref. [21], one could include NMC data [38] on \(F_2^\mathrm{D}/F_2^\mathrm{p}\) to constrain the deuteron nuclear effects simultaneously with the other nuclear data. The nuclear effects in deuteron are expected to be below \(2\%\) [39]. However, these deuteron data are already included in the CT18 fit [23] of the free proton PDFs (our baseline) neglecting the deuteron nuclear corrections [40]. Using CT18 for deuteron (with no additional corrections) thus effectively accounts also for the deuteron nuclear effects. As a result, including these NMC data in our analysis here would thus be inconsistent, leading also to some double counting. The way the deuteron is now handled is admittedly a bit unsatisfactory and once more underscores the fact that the era of fitting the free-proton and nuclear PDFs separately starts to come to its end.

2.2 Negativity features

The parametrization of \(R_i^{\mathrm{p}/A}(x,Q^2)\) is not restricted to be strictly positive definite at the parametrization scale – whether or not the \(\overline{\mathrm{MS}}\) PDFs should be non-negative is still an open question [41, 42]. In our analysis, particularly \(R_g^{\mathrm{p}/A}\) at the parametrization scale gets easily negative at small x. However, since the central CT18 gluon distribution is valence-like – in practice zero at small x – this does not easily lead to negative cross sections even at \(Q^2 = Q^2_0\). The negativity problem of the gluon also goes away immediately above the parametrization scale. Despite the modified A dependence in Eq. (10), some error sets involve a negative strange-quark nuclear PDF at small x. Also this feature disappears rapidly with the scale evolution and negative cross sections can presumably be avoided by adopting a high-enough factorization scale. Finally, some bound-proton modifications \(R^{\mathrm{p}/A}_i\) for up and down quarks can display negative values at small x. However, this is irrelevant from the practical viewpoint as when combined to the full nucleus level in Eq. (3) such negativity features disappear due to the anticorrelation between up- and down-quark nuclear modifications in bound protons (see Fig. 10 of Ref. [1]). The negativity issues in our analysis are similar as met in other recent global analyses by other groups, see Fig. 12 ahead.

3 Observables

The observables we include in the analysis are chosen such that theoretical uncertainties from higher-order perturbative corrections, non-perturbative fragmentation functions (applied in the case of inclusive pion and D-meson production) and from the choice of the proton baseline PDFs are kept as small as possible. In practice, this means e.g. taking ratios of cross sections between different collision systems. Also, observables where final-state nuclear effects could be sizable – e.g. quarkonia hadroproduction – are not included. In Fig. 2 we sketch the x and \(Q^2\) regions probed by the data that are included in the present analysis.

3.1 Lepton–nucleus DIS

The foundations of the global fits of nuclear PDFs are built on the muon–nucleus and electron–nucleus neutral-current DIS data. There is a significant legacy of data available from SLAC [43], NMC [44, 44,45,46,47], and EMC [48] collaborations which we include in the analysis. As in EPPS16, we undo the isoscalar corrections that the experiments have in some cases imposed on the data, see Sect. 3.1 of the EPPS16 paper [1]. As a new ingredient, we now include also very recent electron–nucleus data from the JLab CLAS [14] and Hall-C [13] experiments. The CLAS data were already analyzed in Ref. [16] in the context of PDF reweighting and a good compatibility with EPPS16 was found.

The neutral-current DIS data mentioned here come either as ratios of \(F_2\) structure functions at fixed virtuality \(Q^2\) and Bjorken x,

or as ratios of cross sections,

We also include the CHORUS (anti)neutrino–nucleus charged-current DIS data [49] which help in constraining the strange-quark content of the nucleons as well as the up-quark vs. down-quark flavour separation. These neutrino data are available as absolute cross sections. To reduce the experimental and theoretical uncertainties we do as first proposed in Ref. [50] and then elaborated in the EPPS16 analysis, and normalize the data by the integrated cross section at the corresponding beam energy. For exact treatment, see Sect. 3.2 of the EPPS16 paper [1].

In the EPPS16 analysis we did not pay too much attention to the treatment of normalization errors of the neutral-current DIS data i.e. the normalization uncertainties were simply added in quadrature, point-by-point. We are now more careful in this respect and, when available, treat the normalization errors more consistently in the definition of \(\chi ^2_\mathrm{global}\), see Eq. (26) ahead.

All the DIS cross sections are computed using the SACOT-\(\chi \) (simplified Aivazis–Collins–Olness–Tung) versions of the general-mass variable flavour number scheme (GM-VFNS) [51,52,53]. This is the same scheme that is used in the CT18 analysis [23]. In the case of neutrino DIS, we include also the dominant electroweak [54] effects as well as target-mass corrections following Ref. [55]. For the neutral-current DIS there were no target-mass corrections in the EPPS16 analysis. However, they begin to play a role in the case of JLab kinematics and we thus now account for the dominant target-mass corrections also in neutral-current DIS. This is done as in Ref. [16] where the effects were studied in more detail – see also Ref. [17]. Only those DIS data points with the hadronic invariant mass \(W \ge 1.8\,\mathrm{GeV}\) are included in the analysis.

3.2 Proton–nucleus Drell–Yan

The low-mass Drell–Yan production in proton–nucleus collisions helps in disentangling between the nuclear effects in valence and sea quarks. Here, we include the E772 [56] and E866 [57] data sets in the form of nuclear ratios,

where M is the invariant mass of the produced lepton pair and \(x_{1,2} = (M/\sqrt{s})e^{\pm y}\), where y is the rapidity of the lepton pair. The differential cross sections are calculated “on fly” with no precomputed grids.

3.3 Dijet production

In the EPPS16 analysis, we used the first CMS \(5 \, \mathrm{TeV}\) single-differential dijet pPb data [58] in the form of a forward-to-backward ratio. Now, a double-differential analysis [3] of the same data sample has become available and this is what we use in the present analysis. We have already scrutinized these data in Ref. [5] where they were found to put dramatically strong constraints on the nuclear modification of the gluon PDFs in the shadowing and antishadowing regions. The observable we fit is a double ratio,

where \(\eta _\mathrm{dijet}\) and \(p_\mathrm{T}^\mathrm{ave}\) are the average pseudorapidity and average transverse momentum of the two jets that make up the dijet,

By self-normalizing the spectra separately in pp and pPb collisions, a major part of the experimental systematic uncertainties cancel and the measurement is therefore very precise. Without the self-normalization, the systematic uncertainties in typical jet measurement can reach tens of percents. In Ref. [5] the ratio of Eq. (16) was also found to be very insensitive to the choice of the baseline proton PDFs as well as to the factorization/renormalization scale variations around the central choice \(\mu = p_\mathrm{T}^\mathrm{ave}\). The NLO look-up tables (see Sect. 4.4) are constructed by using the public NLOjet++ [59] code. For more details on the implementation of the dijet cross sections, see Ref. [5].

3.4 W\(^\pm \) and Z production

In the EPPS16 fit, we already included the \(5\,\mathrm{TeV}\) W\(^\pm \) and Z production data from CMS and ATLAS [60,61,62]. These data were included as rapidity-binned forward-to-backward ratios,

where, depending on the case, the rapidity variable y refers either to the rapidity of the produced Z boson, or to the rapidity of the charged lepton resulting from the W\(^\pm \) decay. These data are included in the EPPS21 analysis in the same way. Also the CMS \(8.16\,\mathrm{TeV}\) W\(^\pm \) data from pPb collisions are now available [9] and they are significantly more precise than the \(5\,\mathrm{TeV}\) data. To make the most out these new data, we form a nuclear modification ratio by using the \(8\,\mathrm{TeV}\) W\(^\pm \) data from the p-p measurement [63],

In addition to the slightly different c.m. energies, the pp and pPb measurements do not share an exactly common rapidity binning and for some rapidity bins the ratio in Eq. (20) is taken between two nearby rapidity bins. The differences between the c.m. energies and the rapidity bins are, however, small enough that the uncertainties from the baseline proton PDFs still cancel rather well. This is demonstrated explicitly in Ref. [64], where also the rapidity binning of the mixed-energy ratio and the composition of the covariance matrix are explained. We acknowledge that there are also a few LHCb [65] and ALICE [66, 67] data points available on cross sections for producing electroweak bosons in pPb collisions. These data are not included in the current analysis as a longer lever arm in rapidity would be required to place significant constraints through nuclear modifications in the shape of the rapidity distributions. The NLO look-up tables (see Sect. 4.4) for W\(^\pm \) and Z production are constructed by using the public MCFM [68] program, taking the renormalization/factorization scale equal to the pole mass of the produced heavy boson.

3.5 D-meson production

The EPPS16 and CT14NLO uncertainties for D-meson production in forward (upper panel) and backward (lower panel) direction in p-Pb collisions. The data are from the LHCb collaboration [4]

Inclusive D-meson production in proton–nucleus collisions is known to carry a significant sensitivity on the gluon PDFs [6,7,8, 69,70,71,72,73,74]. Our EPPS21 analysis includes the double differential nuclear modification factors

measured by the LHCb collaboration at \(\sqrt{s}= 5 \, \mathrm{TeV}\) [4]. The calculations are performed in the SACOT-\(m_\mathrm{T}\) GM-VFNS scheme [75] which accounts for the finite masses of the charm quark and the produced D meson. We use the KKKS08 fragmentation functions [76]. At very low values of \(p_\mathrm{T}\), theoretical uncertainties from the QCD scale choices and treatment of the finite-mass effects become more important [6]. To reduce the impact of these uncertainties, a cut \(p_\mathrm{T} > 3 \, \mathrm{GeV}\) is applied in the present analysis. Also the uncertainties from the baseline proton PDFs turn out to be negligible in the fitted region. This is demonstrated in Fig. 3 where the CT14NLO [77] and EPPS16 uncertainties are shown for two rapidity intervals. For more details about the NLO setup, see Ref. [6].

Compared to the analysis in Ref. [6], we take here the luminosity uncertainties as correlated and not point-by-point also for this observable. Due to the separate (beam-direction reversed) data taking of the pPb forward and backward configurations, the normalization shifts can be different in the different configurations, as is allowed in our fit. The normalization uncertainties are computed as the quadratic sum of the given pPb and p-p uncertainties, separately for each beam direction.

3.6 Inclusive pion production

Historically, the inclusive pion production at RHIC was the first direct gluon constraint in the fits on nuclear PDFs [28, 78]. Until that point only weak gluon constraints were obtained from the \(Q^2\) slopes of \(R_{\mathrm{F}_2}\) at small x [79]. In the present analysis we still include the PHENIX 2007 \(\pi ^0\) data [80] taken in dAu collisions. However, due to including the D-meson and dijet data, the role of these pion data is no longer as prominent as it used to be e.g. in the EPS09 analysis [29]. They are still included to highlight the compatibility. Recently, the nCTEQ collaboration has comprehensively studied the inclusion of also other light-meson data from RHIC and LHC [81] including the uncertainties coming from the fragmentation functions. The very recent PHENIX data [82], however, seems to indicate a much stronger \(p_\mathrm{T}\) dependence of the nuclear modifications in comparison e.g. to the EPPS16 predictions, which puts a new question mark on whether these newer data can still be consistently included in the global fits. Exploring the full implications of these new data is left for future work. The look-up tables (see Sect. 4.4) for inclusive pion production are constructed by using the INCNLO [83] code interfaced with the KKP fragmentation functions [84], taking the factorization, renormalization and fragmentation scales equal to the \(p_\mathrm{T}\) of the produced pion. As with the D-mesons, we use a \(p_\mathrm{T} > 3 \, \mathrm{GeV}\) cut when fitting these data.

3.7 Pion–nucleus Drell–Yan

The pion–nucleus Drell–Yan process has potential to constrain the flavour decomposition of the valence quarks [85, 86]. Unfortunately, the precision of the data is not high enough to provide significant discrimination power on top of the DIS data. We nevertheless include the same pion-induced Drell–Yan data sets that were there in the EPPS16 fit: The E615 data [87] is for the ratios,

the NA3 data [88] for ratios,

and the NA10 data [89] for ratios,

The look-up tables (see Sect. 4.4) are constructed with MCFM [68] using the GRV pion PDFs [90]. The isospin correction applied by the NA10 collaboration is accounted for as explained in Sect. 3 of Ref. [85], and the data normalization is treated separately for the high and low energy data.

4 Analysis procedure

As our preceding fits, the core of EPPS21 is based on \(\chi ^2\) minimization followed by the Hessian uncertainty analysis. As a new ingredient we now also chart the uncertainties that originate from the uncertainties of the baseline free proton PDF. The flow of the analysis is illustrated in Fig. 4 whose content is explained below in more detail.

4.1 The global \(\chi ^2\) function

The analysis is based on minimizing the global \(\chi _\mathrm{global}^2\) figure-of-merit function. For each data set the individual \(\chi ^2\) is of the form,

where \(T_i\{a_k \}\) denote the theory values, \(D_i\) are the corresponding data values, and \(C_{ij}^{-1}\) is the inverse of the experimental covariance matrix. The global \(\chi _\mathrm{global}\) is then a sum of individual \(\chi ^2\)s. In many cases, the overall normalization uncertainty is given separately and in this case,

with

and the \(\chi ^2\) is minimized also with respect to f. When comparing the fit results with the theoretical values, we have multiplied the data values with the optimal normalization factor f, where appropriate. Effectively, we are thus neglecting here the possible D’Agostini bias [91]. The list of parameters that define our central fit is given in Table 1 and in Table 2 we list the individual data sets together with their central values of \(\chi ^2\).

4.2 Uncertainty analysis

Our uncertainty analysis leans on the standard Hessian method [92]. The global \(\chi ^2\) is expanded about the fitted minimum as,

where \(\delta a_j \equiv a_j-a_j^0\) are deviations from the best-fit values and \(\chi ^2_0\) is the fitted minimum \(\chi ^2\). The Hessian matrix has a complete set of positive-definite eigenvalues \(\epsilon _k\) and orthonormal eigenvectors \(v_j^{(k)}\),

These are used to introduce new coordinates,

In the new basis, the global \(\chi ^2\) function simplifies to

In Fig. 5 we plot the \(\chi ^2\) profiles along each eigenvector direction. In most of the cases the quadratic approximation seems to hold very well (in the plotted range) but in some cases its imperfections are also clearly visible.

Our evaluation of the Hessian matrix follows the iterative procedure discussed more detailedly in Sect. 4.1 of Ref. [1]. The best fit corresponds to the origin of the z space, \(z_i=0\), and the PDF error sets \(S_i^{\pm }\) are defined as those PDFs that correspond to definite points in the z space,

Since the Hessian matrix is diagonal in the z space, the error sets \(S_k^\pm \) can be seen to define the uncertainties due to uncorrelated sources of errors. As a result the total uncertainty for a given PDF-dependent quantity X(S) can be taken to be e.g. of the form,

Often, one defines the upward uncertainty \(\big ( \delta X \big )^+\) and the downward uncertainty \(\big ( \delta X \big )^-\) separately by the prescription [93],

i.e. the deviations above (below) the central value are added in quadrature. It is this latter definition of uncertainties that we adopt in the present paper.

The dynamically determined increases in the global \(\chi ^2\) corresponding to each eigenvector direction (see Ref. [1, Sect. 4.1]). The average of these, 50, and the EPPS21 tolerance \(\Delta \chi ^2 \approx 33\) are indicated as well

How to assign values for the parameters \(\delta z_i^\pm \) is not unique. In the present analysis we use for transparency the prescription in which we assume the probability distribution for our best-fit parameters to be Gaussian. In other words, we assume that if we would have several instances of the entire EPPS21 data collection, the obtained parameters would form a Gaussian distribution centered around our current best-fit parameters. It follows that the probability to observe a \(\chi ^2\) less than \(\chi ^2_0 + \Delta \chi ^2\) is given by

where \(N_\mathrm{param}\) is the number of fit parameters and

Requiring \(P \left( \Delta \chi ^2, N_\mathrm{param} \right) = 90\%\) results in \(\Delta \chi ^2 \approx 33\) for \(N_\mathrm{param} = 24\). This defines the tolerance of EPPS21 and the values for the parameters \(\delta z_i^\pm \) are then determined such that they increase the \(\chi ^2\) by this amount. The found values are listed in Table 3. We have also tested other ways to define the uncertainties: we have e.g. repeated the “dynamical-tolerance analysis” as done in EPPS16 (Ref. [1], Sect. 4.1). The resulting individual, dynamically set values of \(\chi ^2(S_k^\pm ) - \chi _0^2\) are shown in Fig. 6. In the big picture the resulting uncertainties are very similar as here: the resulting average dynamical tolerance is around 50, which means that the uncertainty bands differ roughly by a factor of \(\sqrt{50/33} \approx 1.2\). In other words, to obtain the uncertainties defined in the similar manner as in EPPS16, the errorbands would need to be inflated approximately by this factor. However, e.g. the implicit parametrization bias is likely to be more important than this difference.

4.3 Baseline proton uncertainties

Even though most of the observables in our analysis are ratios whose dependence on the baseline proton PDF is only weak, the cancellation is not perfect and some dependence on the chosen baseline PDF always persists. Also, the neutrino DIS cross sections that we include are normalized only to the integrated cross sections and one would expect a pronounced sensitivity on the baseline PDFs. In the present analysis, we will quantify the baseline dependence by using the CT18ANLO Hessian error sets. We do this by simply propagating the CT18ANLO uncertainty into nuclear quantities by using Eq. (36). The quantity X there could be, for example, a nuclear modification factor \(R_i^{\mathrm{p}/A}\) or an absolute nuclear PDF itself, \(f_i^{A}\), which both depend, in some complicated way through the \(\chi ^2\) minimization, on the free proton PDFs. Thus, to chart the sensitivity of nuclear PDFs on the baseline proton PDFs, we repeat the \(\chi ^2\) minimization by taking each free proton PDF error set \(S^\pm _i\) separately as our baseline in Eq. (1). By looping over all CT18ANLO error sets we can consequently propagate its uncertainties on any nuclear quantity that appears in our analysis. The full uncertainty is then formed by adding in quadrature the “nuclear errors” – uncertainties that stem from the parametrization of nuclear modifications – and the “proton errors.” The procedure here is on par with the method used in the nNNPDF fits [10]. However, here we will be able to also separate the uncertainties that stem from the nuclear parameters and those that originate from the free proton PDFs. In practice, the EPPS21 PDFs thus feature \(24(\mathrm{nuclear})+29(\mathrm{proton})=53\) error directions (i.e. 106 error sets) and the full uncertainty is obtained by extending the sum in Eq. (36) over all of these. In other words, the total uncertainty is built from two components,

For example, if the quantity X is a cross section \(\sigma \) in pPb collisions, the required cross sections are obtained schematically as

where \(f^\mathrm{p}_0\) and \(f^\mathrm{Pb}_0\) are the central free-proton and lead PDFs, and \(f^\mathrm{p}_{i, \pm }\) and \(f^\mathrm{Pb}_{i, \pm }\) are the corresponding error sets.

4.4 Look-up tables

To speed up the numerical analysis, the calculations of the LHC, RHIC, and pion–nucleus Drell–Yan processes are carried out by precomputing look-up tables. Following here the method used in the EPPS16 analysis (Sect. 3.3 of Ref. [1]), we precompute partial cross sections,

where \({\hat{\sigma }}_{j,n}\) are the partonic coefficient functions and

where the points \(x_0, x_1,\ldots x_N=1\) define a suitable grid in the x variable. The true cross sections are then obtained as simple sums,

The look-up tables are computed by using the central CT18A proton PDFs. For a complete consistency we should recompute the look-up tables also with each CT18A error set to be used when fitting the nuclear modifications with that particular CT18A error set. However, the most important observables we use in conjunction with the look-up tables turn out not very sensitive to the choice of the baseline proton PDF and we thus always use the tables computed with the central CT18A proton PDFs. The baseline dependence cancels out particularly well in the case of dijet and D-meson observables which are the most constraining data sets included via the look-up-table method. For W\(^\pm \) bosons the proton-PDF cancellation is less exact in the used ratios, but we have verified that the residual baseline uncertainties are small enough compared to the experimental uncertainties that they do not bias the nuclear-modification fitting [64], justifying the use of fixed look-up tables also in this case.

5 The EPPS21 PDFs

The EPPS21 nuclear modifications of bound protons in carbon (two leftmost columns) in lead (two rightmost columns) at the initial scale \(Q^2=1.69\,\mathrm{GeV}^2\) and at \(Q^2=10\,\mathrm{GeV}^2\). The central results are shown by thick black curves, and the nuclear error sets by green dotted curves. The blue bands correspond to the nuclear uncertainties and the purple ones to the full uncertainty (nuclear and baseline errors added in quadrature)

The EPPS21 nuclear modifications of average nucleons in carbon (two leftmost columns) in lead (two rightmost columns) at the initial scale \(Q^2=1.69\,\mathrm{GeV}^2\) and at \(Q^2=10\,\mathrm{GeV}^2\). The central results are shown by thick black curves, and the nuclear error sets by green dotted curves. The blue bands correspond to the nuclear uncertainties and the purple ones to the full uncertainty (nuclear and baseline errors added in quadrature)

The EPPS21 bound-proton nuclear modifications are shown in Fig. 7 for carbon and lead at the parametrization scale \(Q^2=1.69 \, \mathrm{GeV}^2\) as well as at \(Q^2=10 \, \mathrm{GeV}^2\). Here, as in most of the figures to follow, the blue bands denote the nuclear errors and in purple bands we indicate the full uncertainty in which the nuclear errors are added in quadrature with the uncertainties originating from the free proton PDFs. From the practical point of view, the bound-proton nuclear modifications \(R_i^\mathrm{p/A}\) are not, however, the most relevant quantities as the cross sections only see the full PDFs involving appropriate linear combinations of protons and neutrons. In Fig. 8 we therefore present the average-nucleon nuclear modifications \(R_i^{A}\) from Eq. (4) for carbon and lead at the parametrization scale \(Q^2=1.69 \, \mathrm{GeV}^2\) as well as at \(Q^2=10 \, \mathrm{GeV}^2\). Comparing Figs. 7 and 8 one clearly observes how the uncertainties in the nuclear modifications of average up- and down-quark PDFs are clearly smaller in comparison to the uncertainties in bound-proton nuclear modifications. This is to be expected as e.g. \(R_{u_\mathrm{V}}^\mathrm{p/A}\) and \(R_{d_\mathrm{V}}^\mathrm{p/A}\) are strongly anticorrelated as was demonstrated already in the context of EPPS16 analysis (Ref. [1, Fig. 10]). Since the average-nucleon modifications \(R_{u_\mathrm{V}}^\mathrm{A}\) and \(R_{d_\mathrm{V}}^\mathrm{A}\) are both linear combinations of \(R_{u_\mathrm{V}}^\mathrm{p/A}\) and \(R_{d_\mathrm{V}}^\mathrm{p/A}\), the uncertainties tend to diminish. Similar reasoning applies for the sea-quark nuclear modifications. From Fig. 8 we can see that at small-x the average up-sea modification for lead \(R_{{\overline{u}}}^\mathrm{Pb}\) seems to be clearly better constrained than the average down-sea modification \(R_{{\overline{d}}}^\mathrm{Pb}\). This is because of the factor of 4 difference between the electric charges of up and down quarks which makes the structure–function ratios four times more sensitive to \(R_{{\overline{u}}}^\mathrm{Pb}\) than to \(R_{{\overline{d}}}^\mathrm{Pb}\). For an isoscalar nucleus like carbon there is no such difference.

Towards smaller values of x the DGLAP evolution efficiently reduces the uncertainties in particular for gluons, but also for the sea quarks. This is actually one of the reasons we do not try to build in too much additional flexibility for the parametrization at small x – such variations would anyway be wiped out very quickly towards higher values of \(Q^2\) and thus be rather irrelevant for most of the applications. As we can see from Fig. 8, the strange-quark nuclear modifications are the ones that retain the largest overall uncertainties. They are indeed tricky to constrain: in principle the electroweak boson production at the LHC carries a significant contribution from the strange quarks [12]. However, these processes are sensitive to the PDFs at high interaction scales \(Q^2 \sim 10000 \, \mathrm{GeV}^2\) where the behaviour of the strange-quark PDF depends very strongly on the initial gluon distribution. As a result, even the rather precise CMS \(8 \, \mathrm{TeV}\) W\(^\pm \) data [9] are not able to restrict the strange-quark PDFs tightly. Unlike in our earlier analyses the central gluon nuclear modification does go negative for Pb and other large nuclei at the parametrization scale \(Q^2=1.69 \, \mathrm{GeV}^2\). However, the negativity disappears extremely fast – already at \(Q^2=1.80 \, \mathrm{GeV}^2\) the central gluon is entirely positive. As a result, the negativity should not be a problem in practical applications. It can be expected that the negativity of the gluon PDF will be even a bigger issue at next-to-NLO fits as the evolution at small x is faster than at NLO. It will eventually be interesting to see whether e.g. including the small x resummation [95] in the DGLAP evolution kernels will reduce the tendency of the gluon to go negative similarly as in the case of free-proton fits [96]. Likewise, including non-linear, \(1/Q^2\)-type corrections in the DGLAP evolution is expected to slow down the evolution at small x and possibly reduce the tendency of the gluon PDF to go negative [97].

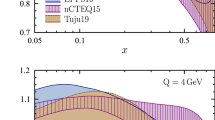

Comparisons of the EPPS21 average-nucleon nuclear modifications – i.e. those given by Eq. (4) – to the nCTEQ15WZ [94] and nNNPDF2.0 [10] nuclear PDFs are shown in Fig. 9 at \(Q^2=10\,\mathrm{GeV}^2\). The EPPS21 uncertainties correspond to the full uncertainty with the nuclear and free-proton uncertainties added in quadrature. Also the nNNPDF2.0 uncertainty includes the uncertainty that comes from the free proton. In the case of nCTEQ15WZ only the nuclear uncertainties are available. Within the plotted 90% confidence-level errors all three are observed to agree with each other. However, the widths of the uncertainty bands differ quite significantly in places and they do so for varying reasons. For example, since there was no flavour separation between \({{\overline{u}}}\) and \({{\overline{d}}}\) in nCTEQ15WZ, the corresponding uncertainty for \(R^\mathrm{Pb}_{{\overline{u}}}\) and \(R^\mathrm{Pb}_{{\overline{d}}}\) tends to be smaller than in EPPS21 or nNNPDF2.0. The nNNPDF2.0 uncertainties for \(R^\mathrm{Pb}_{{\overline{u}}}\) and \(R^\mathrm{Pb}_{{\overline{d}}}\), in turn, are larger than those of EPPS21 presumably because nNNPDF2.0 does not include the fixed-target Drell–Yan data. The strange-quark uncertainty is the largest in nCTEQ15WZ which probably follows from the exclusion of all the neutrino DIS data in the nCTEQ15WZ fit. Both EPPS21 and nNNPDF2.0 do include neutrino DIS data. A bit surprisingly, the nCTEQ15WZ has the smallest small-x gluon error even though the analysis does not involve any dijet or D-meson data. The reason for the smaller uncertainty is most likely in the more restrictive form of the adopted fit function. The largeness of the nNNPDF2.0 gluon uncertainty – particularly at small x – in comparison to EPPS21 reflects the effect of including (EPPS21) or not including (nNNPDF2.0) the dijet or D-meson data. In the case of valence-quark nuclear modifications there seems to be no clear systematics in the widths of the error bands. In principle, EPPS21 has the least-restricted W cut such that more large-x data are included in the analysis than e.g. in the nCTEQ15WZ fit. However, the nCTEQ15WZ valence-quark errors are still smaller in places. Also here, the form of the parametrization is likely to play a role.

Comparison between the EPPS21 (blue) and the EPPS16 (gray) [1] average-nucleon nuclear modifications at \(Q^2=10\,\mathrm{GeV}^2\). The EPPS21 uncertainties include the free-proton uncertainties but the EPPS16 error bands only include the nuclear uncertainty

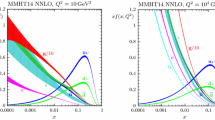

The 90% confidence-level EPPS21 PDFs in lead nucleus at the parametrization scale \(Q^2=1.69\,\mathrm{GeV}^2\) (upper panels) and at \(Q^2=10\,\mathrm{GeV}^2\) (lower panels). The central results are shown by thick black curves, the blue bands correspond to the nuclear uncertainties and the purple ones to the full uncertainty (nuclear and baseline errors added in quadrature)

In Fig. 10 we compare the average-nucleon nuclear modifications from EPPS21 and our previous analysis EPPS16. The largest differences are in the sectors of strange quarks and gluons. Thanks to the new D-meson and dijet data, the EPPS21 gluons are now much better constrained than what they are in EPPS16. The EPPS21 errors of the strange-quark nuclear modifications at small x also appear significantly smaller than those of EPPS16. In part, the smaller strange-quark errors must follow from the better constrained gluons as the two are intertwined through the DGLAP evolution.

In Fig. 11 we present the absolute EPPS21 PDFs for the full lead nucleus at \(Q^2=1.69\,\mathrm{GeV}^2\) and at \(Q^2=10\,\mathrm{GeV}^2\) together with the uncertainties broken down to the nuclear- and free-proton errors. The main conclusion to be made here is that, in most of the cases, the nuclear uncertainty is the dominant one also for the absolute PDFs. Only in \(f_u^\mathrm{Pb}\) and \(f_{{\overline{u}}}^\mathrm{Pb}\) the free-proton originating uncertainties can exceed the nuclear uncertainties in the plotted range. This is mainly so, as the uncertainty of \(R_{{\overline{u}}}^\mathrm{Pb}\) is clearly smaller than that of \(R_{{\overline{d}}}^\mathrm{Pb}\), as we saw in Fig. 8. Comparison to the two other contemporary nuclear PDFs, nCTEQ15WZ [94] and nNNPDF2.0 [10], are shown in Fig. 12. Again, all three can be observed to agree within the presented 90% confidence-level errors. The systematics between the sizes of the error bands follows the same pattern as was already seen in Fig. 9.

The JLab CLAS [14] and Hall-C data [13] compared with the EPPS21 and EPPS16 analyses. The solid blue lines show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty. The grey bands correspond to the EPPS16 results. The experimental data have been scaled with the normalization factors indicated in Table 2

The LHCb D-meson data [4] compared with the EPPS21 and EPPS16 analyses. The solid blue lines show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty. The grey bands correspond to the EPPS16 results. The points below \(p_\mathrm{T} = 3\,\mathrm{GeV}\) were not included in the analysis. The experimental data have been scaled with the normalization factors indicated in Table 2

The CMS dijet data [3] compared with the EPPS21 analysis. The solid blue lines show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty. The grey bands correspond to the EPPS16 results

The CMS \(8.16\,\mathrm{TeV}\) W\(^\pm \) data [9] for nuclear modification \(R_\mathrm{pPb}\) compared with the EPPS21 and EPPS16 fits. The solid blue lines show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty. The grey bands correspond to the EPPS16 results. The red dashed curve corresponds to the isospin effects only (only isospin effects in PDFs). The experimental data have been scaled with the normalization factors indicated in Table 2

6 Comparison with the new data

6.1 Comparison with the fitted data

In the following we demonstrate the compatibility of the EPPS21 nuclear PDFs with the experimental data. Here, we will discuss only the new data sets included in the EPPS21 analysis – comparisons of the EPPS21 results with the data that were already included in the EPPS16 analysis are provided in Appendix A. In all cases the plotted data have been multiplied by the optimal normalization factors indicated in Table 2.

The recent JLab CLAS [14] and Hall-C light-nucleus [13] DIS measurements are shown in Fig. 13. These data are new in comparison to the EPPS16 fit, and we therefore also show the EPPS16 errorbands for comparison. In the case of CLAS, the new EPPS21 results tend to lie below the EPPS16 ones but the x derivatives are almost equal. These CLAS data overlap with the older SLAC data [43] in x but probe the nuclear PDFs typically at lower \(Q^2\) than the SLAC measurements. The good simultaneous fit to both hints that no sizable A-dependent power corrections that would scale like \(Q^{-2n}\) emerge in the probed \(Q^2\) region. The Hall-C data are also new in comparison to the EPPS16 analysis and the EPPS21 errorbands are now visibly smaller for all other than the He-3 data. The larger uncertainty for He-3 follows from the new free parameter \(f_3\), using a fixed global tolerance, and that these 15 JLab data points constitute the only constraints for this new parameter. We note that only those Hall-C data points with \(W \ge 1.8\,\mathrm{GeV}\) are included in the \(\chi ^2\) analysis. However, the exact choice of the W cut is not critical here but we have checked that e.g. the first data points towards higher x (lower W) are still reproduced so that the fit is stable against small variations in the W cut.

The LHCb D-meson data shown in Fig. 14 provide stringent constraints for the nuclear gluons [6] for a wide range in x. The differences between the EPPS16 and the new EPPS21 uncertainty bands are large, the EPPS21 errors being now much smaller. Since the \(p_\mathrm{T}\) slopes of the data are rather mild in the fitted region \(p_\mathrm{T} > 3\,\mathrm{GeV}\), the normalization uncertainty can effectively compensate for variations in the gluon nuclear modifications and, as a result, the uncertainty bands appear larger than the data errors at low \(p_\mathrm{T}\). We note that even in the non-fitted region \(p_\mathrm{T} < 3\,\mathrm{GeV}\) the data are still well reproduced by the EPPS21 PDFs and in this sense we see no signs of novel small-x dynamics beyond the linear DGLAP. The data at negative rapidities rise above unity which is consistent with having a gluon antishadowing. At the very backward-most bin, the data are clearly above the predictions – we have no explanation for this behaviour and it cannot be explained by nuclear effects in PDFs.

In Fig. 15 we compare the CMS double-differential dijet measurements [3] with the EPPS21 and EPPS16 calculations. Similarly to the case of D mesons, the differences between the EPPS16 and EPPS21 uncertainty bands are significant, and the new data have squeezed the EPPS21 uncertainties considerably from EPPS16. This translates into much better constrained nuclear modifications of the gluons [5]. Towards negative values of \(\eta _\mathrm{dijet}\) one becomes increasingly sensitive to the valence quarks in the Pb nucleus which, in part, causes the downward trend towards negative \(\eta _\mathrm{dijet}\). In the bin with the largest transverse momentum \(p_\mathrm{T}^\mathrm{ave}\) the point near \(\eta _\mathrm{dijet} \approx -2\) is very near to the edge of the phase space i.e. the produced dijet carries nearly all the available energy. Effects like hadronization (one has to allow the nucleon remnants to have energy to hadronize), threshold logarithms, and target-mass corrections become arguably large in this particular bin and it is left out from the fit. Towards positive values of \(\eta _\mathrm{dijet}\) a clear downward trend is again observed which in the calculations originates from the gluon shadowing. However, the very forward data points would prefer a clearly stronger gluon shadowing than what the fit allows for. We have tested that gluon PDFs that can reproduce the most forward datapoints fail in reproducing the data at other values of \(\eta _\mathrm{dijet}\). We have also varied the small-x part of our gluon parametrization to see whether the difficulty would be associated with a too stringent form of the fit function. However, varying the parametrization does not lead to any better result than what is seen in Fig. 15. This can also be understood as follows: Estimating the probed value of nuclear x by \(x^\mathrm{Pb} \approx 2p_\mathrm{T}^\mathrm{ave}/\sqrt{s}e^{-\eta _\mathrm{dijet}+0.465}\), the values for the \(\eta _\mathrm{dijet} \approx 2.75\) bin with \(p_\mathrm{T}^\mathrm{ave} = 95\,\mathrm{GeV}\) and the \(\eta _\mathrm{dijet} \approx 2.25\) bin with \(p_\mathrm{T}^\mathrm{ave} = 55\,\mathrm{GeV}\) are approximately the same. Both thus probe the nuclear modification \(R^\mathrm{Pb}_g(x,Q^2)\) at the same values of x. As the scale dependence of \(R^\mathrm{Pb}_g(x,Q^2)\) is very weak at such large interaction scales it appears impossible to reproduce these to data points with a same set of PDFs. What causes the most forward data points to be so strongly suppressed remains to be understood. However, our fit results are not sensitive to whether we include (as we do) or exclude the 4 most forward data points in the 4 first \(p_\mathrm{T}\) bins.

Finally, the new CMS \(8.16\,\mathrm{TeV}\) data on W\(^\pm \) production [9] are shown in Fig. 16. In the plot, we also show the EPPS16 uncertainties to visualize the difference between EPPS21 and EPPS16. Particularly towards forward (positive) lepton rapidities the EPPS21 errorbands are clearly smaller than the EPPS16 bands. However, this is mainly due to the D-meson and dijet data which now set more stringent constraints for the gluons. While the W\(^\pm \) production at forward direction is directly sensitive to the small-x quark content of the nucleus, the interaction scale, set by the mass of the W\(^\pm \) boson, is very high and a significant part of the behaviour of the sea quarks is dictated by the gluons. Just by looking at the plots, the rapidity dependencies of \(R_\mathrm{pPb}\) appear not so perfect but EPPS21 tends to overshoot the data at negative rapidities. At negative rapidities the form of \(R_\mathrm{pPb}\) is largely set by the valence-quark nuclear modifications which are well constrained by the available DIS data – the backward part of \(R_\mathrm{pPb}\) cannot thus be easily changed without ruining the agreement with the DIS data. If the data were multiplied by a larger normalization factor that matches the negative-rapidity data with the central EPPS21 prediction, the forward part of \(R_\mathrm{pPb}\) would be underestimated by EPPS21. As a result, to better reproduce the overall rapidity dependence, the forward part of EPPS21 should be higher which could be attained by having less gluon shadowing. However, both the dijet and D-meson data prefer a stronger shadowing such that one could be tempted see some indications of a tension between these observables, perhaps due to a coherent energy loss [98] or equivalent. However, \(\chi ^2/N_\mathrm{data} \approx 0.94\) for the W\(^\pm \) data so statistics-wise these data are well reproduced. We note that the CMS collaboration provides the covariance matrix to be used in calculating the \(\chi ^2\) values, which implicitly involves solving for the optimal shifts associated with the systematic uncertainties of the data. As only the covariance matrix is given, we cannot solve for these optimal shifts without further input and the data points are plotted at their central values (the data are still multiplied with the optimal normalization factor) – it is conceivable that the apparent non-prefect reproduction of the rapidity dependence disappears once these shifts are implemented.

The CMS \(8.16\,\mathrm{TeV}\) Z-boson data [99, 100] for nuclear modification \(R_\mathrm{pPb}\) compared with the EPPS21 fit. The solid blue lines show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty. The data have been normalized by a factor of 0.951 which is the optimal normalization with EPPS21. The grey bands correspond to the EPPS16 results

6.2 Comparison with the CMS 8 TeV Z data

Also the new CMS \(8.16\,\mathrm{TeV}\) data for Z production in pPb collisions are available [99]. By using the \(8\,\mathrm{TeV}\) pp reference data [100] we have constructed the mixed-energy nuclear modification factor as in Eq. (20). A comparison of the experimental results with the EPPS21 predictions is shown in Fig. 17. Due to the different muon acceptances in pp and pPb collisions, the shape of \(R_\mathrm{pPb}\) differs significantly from unity. While the broad systematics of the data are reproduced by the EPPS21 PDFs, the 90% EPPS21 uncertainty bands hardly overlap with the data errors. The value of \(\chi ^2\) is also rather large, \(\chi ^2/N_\mathrm{data} \approx 2.1\) (accounting for the correlated normalization uncertainty but adding the other errors in quadrature). Similar difficulties are also visible in the original CMS data paper [99] for the absolute cross sections as well as for the forward-to-backward ratio. In particular, the data exhibit large fluctuations around the midrapidity which seem to be impossible to reproduce by any PDF-based calculation. For these reasons, these new Z-boson data are not included in EPPS21.

The \(Q^2\)-dependent NMC structure–function ratios [45] compared with the EPPS21 analysis. The solid blue curves show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty

7 Summary

In summary, we have presented a new global analysis of collinearly factorized nuclear PDFs, EPPS21. On the methodological front, we have now set up a framework to account for the free-proton uncertainties in a more consistent way than in previous fits of nuclear PDFs based of Hessian uncertainty analyses. The most significant new experimental ingredients are the LHC p-Pb data for dijets (Run-I), D-mesons (Run-I) and W\(^\pm \) bosons (Run-II), which reduce the uncertainty of the nuclear gluons significantly in comparison to our previous EPPS16 fit. Our analysis now confirms the presence of gluon shadowing and antishadowing for large nuclei. We have now also incorporated recent JLab DIS data which probe the valence quarks at low values of \(Q^2\), previously only reached in the older SLAC data. The good simultaneous fit to all large-x DIS data indicates that no significant A-dependent higher-twist contributions appear in the kinematic region included in the fit. Overall, the new LHC pPb data are also well described within EPPS21 but some discrepancies persist. In particular, it appears impossible to reproduce the strong suppression in the four most forward dijet data points with any set of PDFs. What causes such a strong effect remains an open question and calls for further investigation. In addition, there are some indications that the Run-II W\(^\pm \) data would be better reproduced with a slightly less shadowed gluons in comparison to the central EPPS21. However, this feature may be just a fictitious one as the systematic shifts due to the experimental correlations are not available. The new Run-II Z-boson data from CMS also disagree significantly with EPPS21 and it appears impossible to accurately accommodate the rapidity dependence of these data within a global PDF analysis. From all this we can conclude that the LHC p-Pb program has now reached a level of precision which begins to put the conjecture of the universality of nuclear PDFs into a real test.

Notes

After the preprint of the present article was submitted, also an updated nNNPDF analysis appeared [11].

References

K.J. Eskola, P. Paakkinen, H. Paukkunen, C.A. Salgado, Eur. Phys. J. C 77(3), 163 (2017). https://doi.org/10.1140/epjc/s10052-017-4725-9arXiv:1612.05741 [hep-ph]

N. Armesto, H. Paukkunen, J.M. Penín, C.A. Salgado, P. Zurita, Eur. Phys. J. C 76(4), 218 (2016). https://doi.org/10.1140/epjc/s10052-016-4078-9arXiv:1512.01528 [hep-ph]]

A.M. Sirunyan et al. [CMS], Phys. Rev. Lett. 121(6), 062002 (2018). https://doi.org/10.1103/PhysRevLett.121.062002. arXiv:1805.04736 [hep-ex]

R. Aaij et al. [LHCb], JHEP 10, 090 (2017). https://doi.org/10.1007/JHEP10(2017)090. arXiv:1707.02750 [hep-ex]

K.J. Eskola, P. Paakkinen, H. Paukkunen, Eur. Phys. J. C 79(6), 511 (2019). https://doi.org/10.1140/epjc/s10052-019-6982-2arXiv:1903.09832 [hep-ph]

K.J. Eskola, I. Helenius, P. Paakkinen, H. Paukkunen, JHEP 05, 037 (2020). https://doi.org/10.1007/JHEP05(2020)037arXiv:1906.02512 [hep-ph]

A. Kusina, J.P. Lansberg, I. Schienbein, H.S. Shao, Phys. Rev. Lett. 121(5), 052004 (2018). https://doi.org/10.1103/PhysRevLett.121.052004arXiv:1712.07024 [hep-ph]

A. Kusina, J.P. Lansberg, I. Schienbein, H.S. Shao, Phys. Rev. D 104(1), 014010 (2021). https://doi.org/10.1103/PhysRevD.104.014010arXiv:2012.11462 [hep-ph]

A.M. Sirunyan et al. [CMS], Phys. Lett. B 800, 135048 (2020). https://doi.org/10.1016/j.physletb.2019.135048. arXiv:1905.01486 [hep-ex]

R. AbdulKhalek, J.J. Ethier, J. Rojo, G. van Weelden, JHEP 09, 183 (2020). https://doi.org/10.1007/JHEP09(2020)183arXiv:2006.14629 [hep-ph]

R.A. Khalek, R. Gauld, T. Giani, E.R. Nocera, T.R. Rabemananjara, J. Rojo, arXiv:2201.12363 [hep-ph]

A. Kusina, T. Ježo, D.B. Clark, P. Duwentäster, E. Godat, T.J. Hobbs, J. Kent, M. Klasen, K. Kovařík, F. Lyonnet et al., Eur. Phys. J. C 80(10), 968 (2020). https://doi.org/10.1140/epjc/s10052-020-08532-4arXiv:2007.09100 [hep-ph]

J. Seely, A. Daniel, D. Gaskell, J. Arrington, N. Fomin, P. Solvignon, R. Asaturyan, F. Benmokhtar, W. Boeglin, B. Boillat et al., Phys. Rev. Lett. 103, 202301 (2009). https://doi.org/10.1103/PhysRevLett.103.202301arXiv:0904.4448 [nucl-ex]

B. Schmookler et al. [CLAS], Nature 566(7744), 354–358 (2019). https://doi.org/10.1038/s41586-019-0925-9. arXiv:2004.12065 [nucl-ex]

J. Arrington, J. Bane, A. Daniel, N. Fomin, D. Gaskell, J. Seely, R. Asaturyan, F. Benmokhtar, W. Boeglin, P. Bosted et al., arXiv:2110.08399 [nucl-ex]

H. Paukkunen, P. Zurita, Eur. Phys. J. C 80(5), 381 (2020). https://doi.org/10.1140/epjc/s10052-020-7971-1arXiv:2003.02195 [hep-ph]

E.P. Segarra, T. Ježo, A. Accardi, P. Duwentäster, O. Hen, T.J. Hobbs, C. Keppel, M. Klasen, K. Kovařík, A. Kusina et al., Phys. Rev. D 103(11), 114015 (2021). https://doi.org/10.1103/PhysRevD.103.114015arXiv:2012.11566 [hep-ph]

H. Khanpour, M. Soleymaninia, S. Atashbar Tehrani, H. Spiesberger, V. Guzey, Phys. Rev. D 104(3), 034010 (2021). https://doi.org/10.1103/PhysRevD.104.034010arXiv:2010.00555 [hep-ph]

R.D. Ball, S. Carrazza, J. Cruz-Martinez, L. Del Debbio, S. Forte, T. Giani, S. Iranipour, Z. Kassabov, J.I. Latorre, E.R. Nocera et al., arXiv:2109.02653 [hep-ph]

R.D. Ball et al. [NNPDF], Eur. Phys. J. C 79(3), 282 (2019). https://doi.org/10.1140/epjc/s10052-019-6793-5. arXiv:1812.09074 [hep-ph]

M. Walt, I. Helenius, W. Vogelsang, Phys. Rev. D 100(9), 096015 (2019). https://doi.org/10.1103/PhysRevD.100.096015. arXiv:1908.03355 [hep-ph]

I. Helenius, M. Walt, W. Vogelsang, arXiv:2112.11904 [hep-ph]

T.J. Hou, J. Gao, T.J. Hobbs, K. Xie, S. Dulat, M. Guzzi, J. Huston, P. Nadolsky, J. Pumplin, C. Schmidt et al., Phys. Rev. D 103(1), 014013 (2021). https://doi.org/10.1103/PhysRevD.103.014013arXiv:1912.10053 [hep-ph]

M. Aaboud et al. [ATLAS], Eur. Phys. J. C 77(6), 367 (2017). https://doi.org/10.1140/epjc/s10052-017-4911-9. arXiv:1612.03016 [hep-ex]

M. Goncharov et al. [NuTeV], Phys. Rev. D 64, 112006 (2001). https://doi.org/10.1103/PhysRevD.64.112006. arXiv:hep-ex/0102049 [hep-ex]

K.J. Eskola, V.J. Kolhinen, P.V. Ruuskanen, Nucl. Phys. B 535, 351–371 (1998). https://doi.org/10.1016/S0550-3213(98)00589-6arXiv:hep-ph/9802350

K.J. Eskola, V.J. Kolhinen, H. Paukkunen, C.A. Salgado, JHEP 05, 002 (2007). https://doi.org/10.1088/1126-6708/2007/05/002arXiv:hep-ph/0703104 [hep-ph]

K.J. Eskola, H. Paukkunen, C.A. Salgado, JHEP 07, 102 (2008). https://doi.org/10.1088/1126-6708/2008/07/102arXiv:0802.0139 [hep-ph]

K.J. Eskola, H. Paukkunen, C.A. Salgado, JHEP 04, 065 (2009). https://doi.org/10.1088/1126-6708/2009/04/065arXiv:0902.4154 [hep-ph]

W. Furmanski, R. Petronzio, Phys. Lett. B 97, 437–442 (1980). https://doi.org/10.1016/0370-2693(80)90636-X

G. Curci, W. Furmanski, R. Petronzio, Nucl. Phys. B 175, 27–92 (1980). https://doi.org/10.1016/0550-3213(80)90003-6

Y.L. Dokshitzer, Sov. Phys. JETP 46, 641–653 (1977)

V.N. Gribov, L.N. Lipatov, Sov. J. Nucl. Phys. 15, 438–450 IPTI-381-71 (1972)

V.N. Gribov, L.N. Lipatov, Sov. J. Nucl. Phys. 15, 675–684 (1972)

G. Altarelli, G. Parisi, Nucl. Phys. B 126, 298–318 (1977). https://doi.org/10.1016/0550-3213(77)90384-4

P. Santorelli, E. Scrimieri, Phys. Lett. B 459, 599–606 (1999). https://doi.org/10.1016/S0370-2693(99)00698-XarXiv:hep-ph/9807572

P. Paakkinen, Phys. Rev. D 105(3), L031504 (2022). https://doi.org/10.1103/PhysRevD.105.L031504arXiv:2111.05368 [hep-ph]

M. Arneodo et al. [New Muon], Nucl. Phys. B 483, 3–43 (1997). https://doi.org/10.1016/S0550-3213(96)00538-X. arXiv:hep-ph/9610231 [hep-ph]

A.D. Martin, A.J.T.M. Mathijssen, W.J. Stirling, R.S. Thorne, B.J.A. Watt, G. Watt, Eur. Phys. J. C 73(2), 2318 (2013). https://doi.org/10.1140/epjc/s10052-013-2318-9arXiv:1211.1215 [hep-ph]

A. Accardi, T.J. Hobbs, X. Jing, P.M. Nadolsky, Eur. Phys. J. C 81(7), 603 (2021). https://doi.org/10.1140/epjc/s10052-021-09318-y10.1140/epjc/s10052-021-09318-yarXiv:2102.01107 [hep-ph]

A. Candido, S. Forte, F. Hekhorn, JHEP 11, 129 (2020). https://doi.org/10.1007/JHEP11(2020)129arXiv:2006.07377 [hep-ph]

J. Collins, T.C. Rogers, N. Sato, arXiv:2111.01170 [hep-ph]

J. Gomez, R.G. Arnold, P.E. Bosted, C.C. Chang, A.T. Katramatou, G.G. Petratos, A.A. Rahbar, S.E. Rock, A.F. Sill, Z.M. Szalata et al., Phys. Rev. D 49, 4348–4372 (1994). https://doi.org/10.1103/PhysRevD.49.4348

P. Amaudruz et al. [New Muon], Nucl. Phys. B 441, 3–11 (1995). https://doi.org/10.1016/0550-3213(94)00023-9. arXiv:hep-ph/9503291

M. Arneodo et al. [New Muon], Nucl. Phys. B 441, 12–30 (1995). https://doi.org/10.1016/0550-3213(95)00023-2. arXiv:hep-ex/9504002

M. Arneodo et al. [New Muon], Nucl. Phys. B 481, 3–22 (1996). https://doi.org/10.1016/S0550-3213(96)90117-0

M. Arneodo et al. [New Muon], Nucl. Phys. B 481, 23–39 (1996). https://doi.org/10.1016/S0550-3213(96)90119-4

J. Ashman et al. [European Muon], Z. Phys. C 57, 211–218 (1993). https://doi.org/10.1007/BF01565050

G. Onengut et al. [CHORUS], Phys. Lett. B 632, 65–75 (2006). https://doi.org/10.1016/j.physletb.2005.10.062

H. Paukkunen, C.A. Salgado, Phys. Rev. Lett. 110(21), 212301 (2013). https://doi.org/10.1103/PhysRevLett.110.212301arXiv:1302.2001 [hep-ph]

M. Krämer, F.I. Olness, D.E. Soper, Phys. Rev. D 62, 096007 (2000). https://doi.org/10.1103/PhysRevD.62.096007arXiv:hep-ph/0003035

J.C. Collins, Phys. Rev. D 58, 094002 (1998). https://doi.org/10.1103/PhysRevD.58.094002arXiv:hep-ph/9806259

R.S. Thorne, W.K. Tung, arXiv:0809.0714 [hep-ph]

A.B. Arbuzov, D.Y. Bardin, L.V. Kalinovskaya, JHEP 06, 078 (2005). https://doi.org/10.1088/1126-6708/2005/06/078arXiv:hep-ph/0407203 [hep-ph]

A. Accardi, J.W. Qiu, JHEP 07, 090 (2008). https://doi.org/10.1088/1126-6708/2008/07/090arXiv:0805.1496 [hep-ph]

D.M. Alde, H.W. Baer, T.A. Carey, G.T. Garvey, A. Klein, C. Lee, M.J. Leitch, J.W. Lillberg, P.L. McGaughey, C.S. Mishra et al., Phys. Rev. Lett. 64, 2479–2482 (1990). https://doi.org/10.1103/PhysRevLett.64.2479

M.A. Vasilev et al. [NuSea], Phys. Rev. Lett. 83, 2304–2307 (1999). https://doi.org/10.1103/PhysRevLett.83.2304. arXiv:hep-ex/9906010 [hep-ex]

S. Chatrchyan et al. [CMS], Eur. Phys. J. C 74(7), 2951 (2014). https://doi.org/10.1140/epjc/s10052-014-2951-y. arXiv:1401.4433 [nucl-ex]

Z. Nagy, Phys. Rev. D 68, 094002 (2003). https://doi.org/10.1103/PhysRevD.68.094002arXiv:hep-ph/0307268

V. Khachatryan et al. [CMS], Phys. Lett. B 750, 565–586 (2015). https://doi.org/10.1016/j.physletb.2015.09.057. arXiv:1503.05825 [nucl-ex]

V. Khachatryan et al. [CMS], Phys. Lett. B 759, 36–57 (2016). https://doi.org/10.1016/j.physletb.2016.05.044. arXiv:1512.06461 [hep-ex]

G. Aad et al. [ATLAS], Phys. Rev. C 92(4), 044915 (2015). https://doi.org/10.1103/PhysRevC.92.044915. arXiv:1507.06232 [hep-ex]

V. Khachatryan et al. [CMS], Eur. Phys. J. C 76(8), 469 (2016). https://doi.org/10.1140/epjc/s10052-016-4293-4. arXiv:1603.01803 [hep-ex]

K.J. Eskola, P. Paakkinen, H. Paukkunen, C.A. Salgado, Eur. Phys. J. C 82(3), 271 (2022). https://doi.org/10.1140/epjc/s10052-022-10179-2arXiv:2202.01074 [hep-ph]

R. Aaij et al. [LHCb], JHEP 09, 030 (2014). https://doi.org/10.1007/JHEP09(2014)030. arXiv:1406.2885 [hep-ex]

J. Adam et al. [ALICE], JHEP 02, 077 (2017). https://doi.org/10.1007/JHEP02(2017)077. arXiv:1611.03002 [nucl-ex]

S. Acharya et al. [ALICE], JHEP 09, 076 (2020). https://doi.org/10.1007/JHEP09(2020)076. arXiv:2005.11126 [nucl-ex]

R. Boughezal, J.M. Campbell, R.K. Ellis, C. Focke, W. Giele, X. Liu, F. Petriello, C. Williams, Eur. Phys. J. C 77(1), 7 (2017). https://doi.org/10.1140/epjc/s10052-016-4558-yarXiv:1605.08011 [hep-ph]

M. Cacciari, M.L. Mangano, P. Nason, Eur. Phys. J. C 75(12), 610 (2015). https://doi.org/10.1140/epjc/s10052-015-3814-xarXiv:1507.06197 [hep-ph]

R. Gauld, J. Rojo, L. Rottoli, J. Talbert, JHEP 11, 009 (2015). https://doi.org/10.1007/JHEP11(2015)00910.1007/JHEP11(2015)009arXiv:1506.08025 [hep-ph]

R. Gauld, J. Rojo, Phys. Rev. Lett. 118(7), 072001 (2017). https://doi.org/10.1103/PhysRevLett.118.072001arXiv:1610.09373 [hep-ph]

O. Zenaiev et al. [PROSA], Eur. Phys. J. C 75(8), 396 (2015). https://doi.org/10.1140/epjc/s10052-015-3618-z. arXiv:1503.04581 [hep-ph]

R. Gauld, Phys. Rev. D 93(1), 014001 (2016). https://doi.org/10.1103/PhysRevD.93.014001arXiv:1508.07629 [hep-ph]

G. Kramer, H. Spiesberger, Nucl. Phys. B 925, 415–430 (2017). https://doi.org/10.1016/j.nuclphysb.2017.10.016arXiv:1703.04754 [hep-ph]

I. Helenius, H. Paukkunen, JHEP 05, 196 (2018). https://doi.org/10.1007/JHEP05(2018)196arXiv:1804.03557 [hep-ph]

T. Kneesch, B.A. Kniehl, G. Kramer, I. Schienbein, Nucl. Phys. B 799, 34–59 (2008). https://doi.org/10.1016/j.nuclphysb.2008.02.015arXiv:0712.0481 [hep-ph]

S. Dulat, T.J. Hou, J. Gao, M. Guzzi, J. Huston, P. Nadolsky, J. Pumplin, C. Schmidt, D. Stump, C.P. Yuan, Phys. Rev. D 93(3), 033006 (2016). https://doi.org/10.1103/PhysRevD.93.033006arXiv:1506.07443 [hep-ph]

D. de Florian, R. Sassot, Phys. Rev. D 69, 074028 (2004). https://doi.org/10.1103/PhysRevD.69.074028arXiv:hep-ph/0311227

K.J. Eskola, H. Honkanen, V.J. Kolhinen, C.A. Salgado, Phys. Lett. B 532, 222–230 (2002). https://doi.org/10.1016/S0370-2693(02)01592-7arXiv:hep-ph/0201256 [hep-ph]

S.S. Adler et al. [PHENIX], Phys. Rev. Lett. 98, 172302 (2007). https://doi.org/10.1103/PhysRevLett.98.172302. arXiv:nucl-ex/0610036

P. Duwentäster, L.A. Husová, T. Ježo, M. Klasen, K. Kovařík, A. Kusina, K.F. Muzakka, F.I. Olness, I. Schienbein, J.Y. Yu, Phys. Rev. D 104(9), 094005 (2021). https://doi.org/10.1103/PhysRevD.104.094005arXiv:2105.09873 [hep-ph]

U.A. Acharya et al. [PHENIX], arXiv:2111.05756 [nucl-ex]

P. Aurenche, M. Fontannaz, J.P. Guillet, B.A. Kniehl, M. Werlen, Eur. Phys. J. C 13, 347–355 (2000). https://doi.org/10.1007/s100520000309arXiv:hep-ph/9910252 [hep-ph]

B.A. Kniehl, G. Kramer, B. Potter, Nucl. Phys. B 582, 514–536 (2000). https://doi.org/10.1016/S0550-3213(00)00303-5arXiv:hep-ph/0010289

P. Paakkinen, K.J. Eskola, H. Paukkunen, Phys. Lett. B 768, 7–11 (2017). https://doi.org/10.1016/j.physletb.2017.02.009arXiv:1609.07262 [nucl-th]

P. Paakkinen, K.J. Eskola, H. Paukkunen, PoS DIS2017, 205 (2018). https://doi.org/10.22323/1.297.0205. arXiv:1710.05736 [hep-ph]

J.G. Heinrich, C.E. Adolphsen, J.P. Alexander, K.J. Anderson, J.S. Conway, J.E. Pilcher, A. Possoz, E.I. Rosenberg, C. Biino, J.F. Greenhalgh et al., Phys. Rev. Lett. 63, 356–359 (1989). https://doi.org/10.1103/PhysRevLett.63.356

J. Badier et al. [NA3], Phys. Lett. B 104, 335 (1981). https://doi.org/10.1016/0370-2693(81)90137-4

P. Bordalo et al. [NA10], Phys. Lett. B 193, 368 (1987). https://doi.org/10.1016/0370-2693(87)91253-6

M. Gluck, E. Reya, A. Vogt, Z. Phys. C 53, 651–656 (1992). https://doi.org/10.1007/BF01559743

G. D’Agostini, Nucl. Instrum. Meth. A 346, 306–311 (1994). https://doi.org/10.1016/0168-9002(94)90719-6

J. Pumplin, D. Stump, R. Brock, D. Casey, J. Huston, J. Kalk, H.L. Lai, W.K. Tung, Phys. Rev. D 65, 014013 (2001). https://doi.org/10.1103/PhysRevD.65.014013arXiv:hep-ph/0101032

P.M. Nadolsky, Z. Sullivan, eConf C010630, P510 (2001). arXiv:hep-ph/0110378

A. Kusina, F. Lyonnet, D.B. Clark, E. Godat, T. Jezo, K. Kovarik, F.I. Olness, I. Schienbein, J.Y. Yu, Eur. Phys. J. C 77(7), 488 (2017). https://doi.org/10.1140/epjc/s10052-017-5036-xarXiv:1610.02925 [nucl-th]

M. Bonvini, S. Marzani, T. Peraro, Eur. Phys. J. C 76(11), 597 (2016). https://doi.org/10.1140/epjc/s10052-016-4445-6. arXiv:1607.02153 [hep-ph]

R.D. Ball, V. Bertone, M. Bonvini, S. Marzani, J. Rojo, L. Rottoli, Eur. Phys. J. C 78(4), 321 (2018). https://doi.org/10.1140/epjc/s10052-018-5774-4arXiv:1710.05935 [hep-ph]

K.J. Eskola, H. Honkanen, V.J. Kolhinen, J.W. Qiu, C.A. Salgado, Nucl. Phys. B 660, 211–224 (2003). https://doi.org/10.1016/S0550-3213(03)00257-8. arXiv:hep-ph/0211239

F. Arleo, G. Jackson, S. Peigné, arXiv:2107.05871 [hep-ph]

A.M. Sirunyan et al. [CMS], JHEP 05, 182 (2021). https://doi.org/10.1007/JHEP05(2021)182. arXiv:2102.13648 [hep-ex]

V. Khachatryan et al. [CMS], Eur. Phys. J. C 75(4), 147 (2015). https://doi.org/10.1140/epjc/s10052-015-3364-2. arXiv:1412.1115 [hep-ex]

A. Buckley, J. Ferrando, S. Lloyd, K. Nordström, B. Page, M. Rüfenacht, M. Schönherr, G. Watt, Eur. Phys. J. C 75, 132 (2015). https://doi.org/10.1140/epjc/s10052-015-3318-8arXiv:1412.7420 [hep-ph]

https://www.jyu.fi/science/en/physics/research/highenergy/urhic/npdfs

Acknowledgements

We acknowledge financial support from the Academy of Finland Projects 297058 and 330448 (K.J.E.), and 308301 (H.P.), Xunta de Galicia (Centro singular de investigacián de Galicia accreditation 2019-2022), European Union ERDF, the María de Maeztu Units of Excellence program MDM-2016-0692 and the Spanish Research State Agency, European Research Council project ERC-2018-ADG-835105 YoctoLHC. The Finnish IT Center for Science (CSC) is acknowledged for the computing time through the Project jyy2580. This research was funded as a part of the Center of Excellence in Quark Matter of the Academy of Finland (project 346325).

Author information

Authors and Affiliations

Corresponding author

Appendix A: Comparisons with the older data

Appendix A: Comparisons with the older data

The following figures present the comparisons between the EPPS21 PDFs and the data already included in the EPPS16 fit [1] (Figs. 18, 19, 20, 21, 22, 23, 24, 24, 25, 26, 27).

The structure–function ratios \(F_2^\mathrm{Sn}/F_2^\mathrm{C}\) as a function of \(Q^2\) for several fixed values of x. The data is from the NMC experiment [47]. The solid blue points show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty

Left: The SLAC data [43] for DIS cross-section ratios compared with the EPPS21 analysis. To increase the visibility, the data and theory values have been multiplied by 1.2, 1.1, 1.0, 0.9 for \(Q^2=2\,\mathrm{GeV}^2\), \(Q^2=5\,\mathrm{GeV}^2\), \(Q^2=10\,\mathrm{GeV}^2\), \(Q^2=15\,\mathrm{GeV}^2\). Right: The NMC data [46] for structure–function ratios compared with the EPPS21 analysis. The solid blue points show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty

The charged-current neutrino–nucleus CHORUS data [49] compared with the EPPS21 fit. Blue bands show the nuclear uncertainties, and the purple bands the total uncertainty

As Fig. 22 but for antineutrino–nucleus interaction

Drell–Yan cross-section ratios \(d\sigma ^{\mathrm{p}A} / d\sigma ^\mathrm{pBe}\) measured by the E866 collaboration [57] compared with the EPPS21 analysis. The solid blue points show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty

Drell–Yan cross-section ratios of the E772 collaboration [56] compared with the EPPS21 analysis. The solid blue points show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty

The CMS and ATLAS \(5\,\mathrm{TeV}\) pPb data [60,61,62] for Z (upper panels) and \(\mathrm{W}^\pm \) (middle panels), as well as PHENIX dAu cross-section ratios for inclusive pion production [80] (lowest panel) compared with the EPPS21 analysis. The solid blue lines show our central results, inner blue bands the nuclear uncertainties, and the purple bands the total uncertainty

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Eskola, K.J., Paakkinen, P., Paukkunen, H. et al. EPPS21: a global QCD analysis of nuclear PDFs. Eur. Phys. J. C 82, 413 (2022). https://doi.org/10.1140/epjc/s10052-022-10359-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-022-10359-0