Abstract

Background: With growing emphasis on patient involvement in health technology assessment, there is a need for scientific methods that formally elicit patient preferences. Analytic hierarchy process (AHP) and conjoint analysis (CA) are two established scientific methods, albeit with very different objectives.

Objective: The objective of this study was to compare the performance of AHP and CA in eliciting patient preferences for treatment alternatives for stroke rehabilitation.

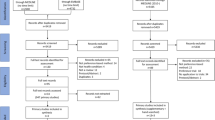

Methods: Five competing treatments for drop-foot impairment in stroke were identified. A single survey containing both the AHP and CA questions was sent to 142 patients, of whom 89 were included in the final analysis (response rate 63%). Standard software was used to calculate attribute weights from both AHP and CA. Performance weights for the treatments were obtained from an expert panel using AHP. Subsequently, the mean predicted preference for each of the five treatments was calculated using the AHP and CA weights. Differences were tested using non-parametric tests. Furthermore, all treatments were rank ordered for each individual patient using the AHP and CA weights.
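The Methods combine several computational steps: deriving attribute weights, combining them with expert performance weights, and ranking treatments. The Python sketch below illustrates those steps under stated assumptions; it is not the authors' implementation, since the study used standard software (Expert Choice and Sawtooth) whose internals are not described here. It assumes that (a) AHP priorities are the normalized principal eigenvector of a positive reciprocal pairwise-comparison matrix, approximated here by power iteration; (b) CA attribute importance is the range of an attribute's part-worth utilities divided by the sum of all ranges, a common conjoint convention; and (c) a treatment's predicted preference is the additive sum of attribute weight times performance weight. All function names, attribute names, and numbers are hypothetical.

```python
from typing import Dict, List, Sequence


def ahp_priorities(matrix: Sequence[Sequence[float]],
                   iters: int = 1000, tol: float = 1e-12) -> List[float]:
    """Normalized principal eigenvector of a positive reciprocal AHP matrix,
    approximated by power iteration (assumed weighting method)."""
    n = len(matrix)
    w = [1.0 / n] * n  # start from equal weights
    for _ in range(iters):
        # Multiply the pairwise-comparison matrix by the current weights.
        nxt = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(nxt)
        nxt = [x / total for x in nxt]  # rescale so weights sum to 1
        if max(abs(a - b) for a, b in zip(nxt, w)) < tol:
            return nxt
        w = nxt
    return w


def ca_importances(partworths: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Relative importance of each attribute: its part-worth range divided
    by the sum of ranges over all attributes (assumed convention)."""
    ranges = {attr: max(u) - min(u) for attr, u in partworths.items()}
    total = sum(ranges.values())
    return {attr: r / total for attr, r in ranges.items()}


def predicted_preference(weights: Dict[str, float],
                         performance: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Hypothetical additive model: sum over attributes of weight x performance."""
    return {t: sum(weights[a] * perf[a] for a in weights)
            for t, perf in performance.items()}


def rank_treatments(scores: Dict[str, float]) -> List[str]:
    """Treatments ordered from most to least preferred."""
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical inputs, not data from the study.
A = [[1.0, 5 / 3, 2.5],   # perfectly consistent 3x3 matrix: a_ij = w_i / w_j
     [0.6, 1.0, 1.5],
     [0.4, 2 / 3, 1.0]]
print(ahp_priorities(A))  # approximately [0.5, 0.3, 0.2]

weights = {"outcome": 0.6, "risk": 0.4}
performance = {"treatment_A": {"outcome": 0.5, "risk": 0.2},
               "treatment_B": {"outcome": 0.3, "risk": 0.9}}
scores = predicted_preference(weights, performance)
print(rank_treatments(scores))  # ['treatment_B', 'treatment_A']
```

Power iteration converges for positive matrices (Perron–Frobenius theorem). With inconsistent judgments, the eigenvector weights can differ from those of the row geometric mean method, which some tools use instead.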

Results: Important attributes in both AHP and CA were the clinical outcome (0.3 in AHP and 0.33 in CA) and the risk of complications (about 0.2 in both AHP and CA). The main differences between the methods were found for the attributes ‘impact of treatment’ (0.06 in AHP and 0.28 for two combined attributes in CA) and ‘cosmetics and comfort’ (0.28 for two combined attributes in AHP and 0.05 in CA). At the group level, the most preferred treatments were soft tissue surgery (STS) and orthopedic shoes (OS). However, STS was most preferred using AHP weights, whereas OS was most preferred using CA weights (p < 0.001). This difference was even more pronounced in the individual treatment ranks: according to the AHP predictions, nearly all patients preferred STS, whereas using CA weights, more than 50% of patients chose OS instead of STS as their most preferred treatment.
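The individual-level comparison can be illustrated with a short tally. The sketch below, a hypothetical illustration rather than the study's analysis, finds each patient's top-ranked treatment under two sets of weights and reports the share of patients per top choice and how often the two methods disagree. It assumes an additive model (attribute weight times performance weight, summed over attributes) and that the i-th AHP and i-th CA weight vectors belong to the same patient; ties go to the first treatment listed. All names and numbers are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Weights = Dict[str, float]                  # attribute -> weight (one patient)
Performance = Dict[str, Dict[str, float]]   # treatment -> attribute -> score


def top_choice(weights: Weights, performance: Performance) -> str:
    """Treatment with the highest additive score for one patient's weights."""
    scores = {t: sum(weights[a] * perf[a] for a in weights)
              for t, perf in performance.items()}
    return max(scores, key=scores.get)


def compare_top_choices(ahp_weights: List[Weights], ca_weights: List[Weights],
                        performance: Performance
                        ) -> Tuple[Dict[str, float], Dict[str, float], float]:
    """Share of patients per most preferred treatment under each method,
    plus the fraction of patients whose top choice differs between methods."""
    ahp_top = [top_choice(w, performance) for w in ahp_weights]
    ca_top = [top_choice(w, performance) for w in ca_weights]
    n = len(ahp_top)
    ahp_share = {t: c / n for t, c in Counter(ahp_top).items()}
    ca_share = {t: c / n for t, c in Counter(ca_top).items()}
    disagree = sum(a != c for a, c in zip(ahp_top, ca_top)) / n
    return ahp_share, ca_share, disagree


# Hypothetical example with two patients and two treatments.
performance = {"surgery": {"outcome": 0.6, "comfort": 0.2},
               "shoes": {"outcome": 0.3, "comfort": 0.8}}
ahp = [{"outcome": 0.7, "comfort": 0.3}, {"outcome": 0.8, "comfort": 0.2}]
ca = [{"outcome": 0.3, "comfort": 0.7}, {"outcome": 0.8, "comfort": 0.2}]
print(compare_top_choices(ahp, ca, performance))
# ({'surgery': 1.0}, {'shoes': 0.5, 'surgery': 0.5}, 0.5)
```

Pairing the two weight vectors per patient is what allows a disagreement rate to be computed at all; group means alone would hide how many individual top choices switch between methods.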

Conclusion: Although we found differences between AHP and CA, these were most likely caused by the labeling of the attributes and the elicitation of performance judgments. CA scenarios are built from the level descriptions and hence present realistic treatment scenarios. In AHP, patients compared only less concrete attributes, such as ‘impact of treatment.’ This led to less realistic choices and thus to an overestimation of the preference for the surgical scenarios. Several recommendations are given on how to use AHP and CA in assessing patient preferences.

Notes

These attribute weights were obtained to estimate overall treatment performance (not reported in this paper) and to become familiar with the weighting procedure.

Acknowledgments

This research was supported by The Netherlands Organization for Health Research and Development ZonMw (grant number 143.50.026). The authors have no conflicts of interest that are directly relevant to the content of this study. The opinions expressed in this manuscript are the authors’ own.

Additional information

Key points for decision makers

• Analytic hierarchy process (AHP) and conjoint analysis (CA) are two frequently used approaches for measuring patient preferences for treatment

• Previous studies comparing AHP and CA are not conclusive about the most appropriate method for measuring preferences

• The present study demonstrates differences in the weights obtained using AHP and CA, leading to a different rank order of the treatments considered in this study

• These differences were most likely caused by the framing of attributes and levels, and by differences in elicitation format, i.e. a choice set comparing two scenarios (CA) versus pairwise comparisons of separate attributes (AHP)

Cite this article

Ijzerman, M.J., van Til, J.A. & Bridges, J.F.P. A Comparison of Analytic Hierarchy Process and Conjoint Analysis Methods in Assessing Treatment Alternatives for Stroke Rehabilitation. Patient 5, 45–56 (2012). https://doi.org/10.2165/11587140-000000000-00000
