What Is My Next Step? School Students' Perceptions of Feedback

- 1School of Education, The University of Queensland, Brisbane, QLD, Australia

- 2Institute of Social Science Research, The University of Queensland, Brisbane, QLD, Australia

- 3Melbourne Graduate School of Education, The University of Melbourne, Melbourne, VIC, Australia

- 4Faculty of Humanities and Social Sciences, The University of Queensland, Brisbane, QLD, Australia

- 5School of Education, The University of Queensland, Brisbane, QLD, Australia

Feedback literature is dominated by claims of large effect sizes, yet there are remarkable levels of variability relating to the effects of feedback. The same feedback can be effective for one student but not another, and in one situation but not another. There is a need to better understand how students are receiving feedback and currently there is relatively little research on school students' perceptions of feedback. In contrast, current social constructivist and self-regulatory models of feedback see the learner as an active agent in receiving, interpreting, and applying feedback information. This paper aims to investigate school student perceptions of feedback through designing a student feedback perception questionnaire (SFPQ) based upon a conceptual model of feedback. The questionnaire was used to collect data about the helpfulness to learning of different feedback types and levels. Results demonstrate that the questionnaire partially affirms the conceptual model of feedback. Items pertaining to feed forward (improvement based feedback) were reported by students as most helpful to learning. Implications for teaching and learning are discussed, in regard to how students receive feedback.

Introduction

The role of feedback in learning has been researched since the early 1900s, with the vast majority of studies focusing upon measuring the effects of feedback. This appears logical given that early, behaviouristic models viewed feedback as a uni-directional transfer of information from the expert to the novice to enable the advancement or extinction of learning. For instance, Thorndike's (1933) law of effect was critical in developing understandings that feedback was a stimulus or message that could cause learners to adapt their initial response. Similarly, Skinner (1963) built upon this principle by using positive and negative feedback messages as a reinforcement to modify behavior to achieve desired outcomes. This use of feedback as an extrinsic motivator through punishment or reward has negative effects upon learning (Dweck, 2007). Instead, current social constructivist and self-regulatory models of feedback see the learner as an active agent in receiving, interpreting, and applying feedback information (Thurlings et al., 2013). The current view of feedback has shifted from information that has been given to the learner (teacher perspective) to how learners receive feedback (student perspective) (Hattie et al., 2016); further focus must be directed to how learners view and action feedback (Jonsson and Panadero, 2018). Student perceptions of feedback are an important part of the feedback process or cycle (Shute, 2008). Student engagement is crucial for feedback to be effective for learning. Student perceptions of feedback therefore must be positive so that feedback is interpreted and used to progress learning (Nicol and Macfarlane-Dick, 2006). Whilst feedback literature is dominated by claims of large effect sizes (Hattie, 2009b) and calls for pedagogical reform (Wiliam, 2016), there is relatively little research on school students' perceptions of feedback (Peterson and Irving, 2008; Hattie and Gan, 2011; Gamlem and Smith, 2013; Harris et al., 2014; Murtagh, 2014).

This study is part of a wider, 3-year Australian Research Council (ARC) funded study titled Improving Student Outcomes: Coaching teachers in the power of feedback. The aim of this particular study was to investigate school student perceptions of feedback and to validate a student feedback perception questionnaire (SFPQ) based upon Hattie and Timperley's (2007) conceptual model of feedback. The study is framed by the research question: Which types and levels of feedback are most helpful to students during the completion of an English writing task? In order to answer this question, an SFPQ was used to gather data about the helpfulness of different feedback types and levels. Results demonstrate that the questionnaire partially affirms the conceptual model of feedback and, notably, students report that items related to improvement-based feedback are the most helpful to learning. The paper begins by examining current views of feedback and then reviews the research on school students' perceptions of feedback.

Feedback Perspectives

Current views of feedback draw upon both self-regulatory and social constructivist perspectives. Self-regulatory feedback models are underpinned by the principle that students are central and active to the feedback process (Brookhart, 2017). Nicol and Macfarlane-Dick's (2006) model of feedback and self-regulated learning aptly explains this. The model begins with the teacher setting a task and then the student is foregrounded as they create paths of internal feedback by engaging with the task, setting goals, devising strategies, and monitoring their own progress and behavior. The model does not assume that students will be proficient with the skills of self-regulation; rather, the teacher is there to provide feedback and support. Boud and Molloy (2013) stressed that self-regulation goes beyond learners being givers and receivers of feedback; rather, self-regulation requires learners to interpret, evaluate, and use feedback for improvement-based actions. Wiliam (2011) argued that formative assessment provides the catalyst for evidence-based discussion about the next steps for improvement. He stated that formative processes about learning are more effective when the student is an active participant, such as through participating in peer feedback and self-assessment activities.

Similarly, within a social constructivist view, students receive feedback from peers and teachers, which, after processing, takes them to a new learning stage before learning outcomes are evidenced. The cycle of feedback is then repeated as students again receive feedback on their recent learning outcomes. In a review of feedback using social constructivist perspectives, Rust et al. (2005) highlighted many problems with feedback practice, including students perceiving feedback as: rarely helpful, difficult to understand, not directed to the task, and often arriving too late in the learning period. In response to such problems, Rust et al. advocated for a social constructivist perspective as a means of making feedback more effective in the classroom. They stated that learners needed to be active participants in the feedback process rather than passive recipients who wait for feedback that may never arrive or be unhelpful for their learning needs. More recently, Lipnevich et al. (2016) suggested a student interaction model of feedback that highlights how feedback is received by the learner and how subsequent action on the feedback is mediated by the learner's characteristics such as ability, emotional state, or expectations.

Seminal work in the field by Sadler (1989) conceptualized three conditions for effective feedback: (1) learners need to know the standards for assessment; (2) learners need to be provided with opportunities to compare their work to the standards; and (3) learners must take action to close the gap identified from analysis of the first two conditions. Building upon these conditions and positioning learners as active agents who co-construct knowledge and understanding within the feedback process, Hattie and Timperley (2007) proposed a model of feedback that is guided by three questions from the learner's perspective: Where am I going? How am I going? What is my next step? This paper argues that using a social constructivist perspective emphasizes that feedback is most powerful when it is viewed from the perspective of the person receiving the feedback.

Review of Student Perceptions of Feedback

It has been widely acknowledged that school students' perceptions of feedback are relatively under-researched (Peterson and Irving, 2008; Hattie and Gan, 2011; Gamlem and Smith, 2013; Harris et al., 2014; Murtagh, 2014), with the majority of studies conducted in the senior secondary or tertiary setting. This current review of student perceptions of feedback found that: (1) students perceive feedback to be unhelpful when it is vague, negative, or critical and is without guidance (Harris et al., 2014); (2) feedback must link students' work to the assessment criteria for it to be perceived as helpful (Brown et al., 2009); and (3) feedback following summative assessment is too late, needing instead to be provided formatively during the learning process (Smith and Lipnevich, 2009; Pokorny and Pickford, 2010). Due to the limited amount of research in this area, this paper uses themes from primary, secondary and tertiary students' perceptions of feedback to investigate research on middle school aged students' feedback perceptions.

Students perceive that feedback is often vague, unclear, or unhelpful to learning (Harris et al., 2014). Pajares and Graham (1998) researched Year 8 school students' perceptions of the purpose of feedback in poetry lessons. They sought essay responses from 216 Year 8 students. This study established that students sought constructive, purposeful feedback rather than praise or encouragement. Building on this finding, Peterson and Irving (2008) found that students reported receiving too many “warm fuzzies” rather than honest appraisal. Using focus group methods with 41 students in Years 8 and 9, Peterson and Irving reported that students desired feedback that told them how to improve, yet much of the feedback they received from teachers pointed to areas for improvement without actually showing them the steps to do this. Gamlem and Smith (2013) made similar findings when they interviewed 11 students aged 13–15 in Norway about the feedback practices that existed in their classes. These students reported positive feedback as confirmatory of performance, achievement and effort and, importantly, as pointing the learner toward the next steps for improvement. In this study, students reported negative feedback as outcome feedback, that is, feedback that was given at the conclusion of the learning period. Aligning with findings from Peterson and Irving (2008), students reported that this negative or evaluative feedback told them only that they needed to improve without telling them how to do this. Beaumont et al. (2011) similarly used both focus group and interview methods in a study with 37 senior school students. They found that students reported effective feedback as being (i) part of a dialogic guidance process between student and teacher, and (ii) improvement focused rather than a summative judgement given at the conclusion of the learning period. These findings were consistent with recent research conducted by Van der Kleij et al. (2017), who focused on six teacher-student feedback conversations between Year 9 students and teachers across a range of subject contexts. They found that providing the opportunity for video-recorded dialogic conversations enabled students to be given a voice in the feedback process and teachers the opportunity to adjust their feedback based upon the knowledge of their students as learners. These studies highlighted the need for guidance and support of feedback processes to be effective in promoting the next steps for student learning.

For feedback to be effective, it must match the criteria for assessment (Brown et al., 2009). Beaumont et al. (2011), in a study involving 145 students from higher and tertiary education, reported that students cite preparatory guidance (including the explanation of criteria) as a key stage of the dialogic feedback cycle, because it gives students clarity about teachers' expectations. Likewise, Gamlem and Smith (2013) found that students needed knowledge of assessment criteria to be able to implement feedback messages effectively. Murtagh (2014) focused upon feedback strategies using case study data from two experienced Year 6 teachers and their students. This study highlighted the issue of discrepancy between espoused practice and implemented practice. In this instance, teachers thought they were providing descriptive feedback related to the criteria for assessment, yet when given time for reflection, self-discovered that they were not providing such feedback. This led to students reporting that feedback that focused on the basics such as spelling, grammar, and punctuation was less helpful than feedback that was matched to the key assessment objectives.

Students perceived the timing of feedback as essential in feedback for learning (Pokorny and Pickford, 2010; West and Turner, 2015). Gamlem and Smith (2013) found that school students were seldom given time to implement feedback, and as a result, the feedback was often abandoned, generating affective emotions such as anger and frustration. Beaumont et al. (2011) similarly reported that students felt that for feedback to be effective, they needed to receive it in time to be able to implement the advice. This study therefore investigates school students' perceptions of the timing of feedback, particularly the implementation of “feed forward,” i.e., information that closes the gap between the student's current and future learning progress.

The role of feedback as a motivator for action was a prevalent concept within research regarding school student perceptions of feedback. Peterson and Irving (2008) reported that both the quantity and content of the feedback affected student motivation. Students reported that too little feedback or feedback that was perceived as inaccurate was demotivating. Similarly, too much corrective feedback resulted in students being overwhelmed and possibly ignoring the feedback message. The quandary for teachers to provide appropriate quantity and content of feedback is exacerbated by Peterson and Irving's finding that in most cases students in their sample did not act on the feedback. In Murtagh's (2014) aforementioned case studies, the content or focus of feedback that was received by the learner influenced the subsequent action taken to implement the feedback. Reflecting the idea of learning constancy (Weiner, 1984), Murtagh confirmed that the teachers' feedback on the basics of their students' work was at the expense of other key learning objectives. It was only in reflection that the teachers realized that their feedback was mainly phatic (affirming the exchange of information) and focused on areas of surface rather than deep learning. Murtagh's research highlighted the dilemma that teachers face as they try to mark as much work as possible to show value to their students, yet time constraints destine their marking to be often cursory in nature.

The review of literature demonstrated that students hold particular views of feedback. Students reported that effective feedback is timely, criterion referenced, and focused on improvement, yet they claim they rarely receive such feedback. Using a social constructivist view of learning, this research sought to explore which feedback was most helpful to the learner and this study was therefore designed to investigate student perceptions of feedback by positioning feedback as information that is received rather than given (Hattie et al., 2016).

Review of Measurement of Feedback

The present study proposed to utilize a questionnaire as a data collection tool, and accordingly, a further review was conducted on the use of questionnaires in this field. Further analysis of the two aforementioned studies regarding middle school students' perceptions of feedback (Peterson and Irving, 2008; Harris et al., 2014) revealed that items consistently referred to the term “feedback” in a general sense. This required students to have conceptual understanding of the term “feedback” and be able to relate this knowledge to learning experiences from the classroom. Peterson and Irving (2008) established that students held narrow views of what constituted assessment. Students typically perceived assessment to be formal summative assessments, whilst students did not recognize informal assessments such as self-assessment as assessment. Student perceptions of assessment may also be indicative of their perceptions of what constitutes feedback. In practice, the term feedback is not required to be explicitly stated for feedback practices to occur. This potentially results in a discrepancy between students' interpretation of feedback as stated in the data collection measures and the feedback practices that occur in the classroom. As such, an avenue exists to include explicit, practical examples of feedback in data collection instruments when investigating student perceptions of feedback.

A review of studies that used questionnaires to investigate school students' perceptions of feedback highlighted the limited research in this area and difficulties in validating instruments. Peterson and Irving (2007) developed a 55-item Student Conceptions of Feedback questionnaire (SCoF) for secondary school students. Exploratory and confirmatory factor analysis (CFA) identified six components: “feedback comes from teachers; feedback motivates me; feedback provides information; feedback is about standards; the qualities of good feedback; and the purpose of feedback to be help seeking” (Peterson and Irving, 2007, pp. 14–15). However, the authors reported the fit of the model was only marginally acceptable. In a study of 193 Year 5–10 students, Harris et al. (2014) used an updated 33-item version of the SCoF, but could not replicate the same factor structure, instead finding three factors: comments for improvement, interpersonal factors, and negative factors. The researchers consequently surmised that their sample size may have been too small, which could have led to variation in the results. Strijbos et al. (2010) used an 18-item questionnaire to measure secondary school students' perceptions of peer feedback and confirmed structural validity for five scales: fairness, usefulness, acceptance, willingness to improve, and affect. No other questionnaires were found that measured middle school students' perceptions of feedback type or feedback level. A questionnaire was therefore designed to specifically meet the needs of this research.

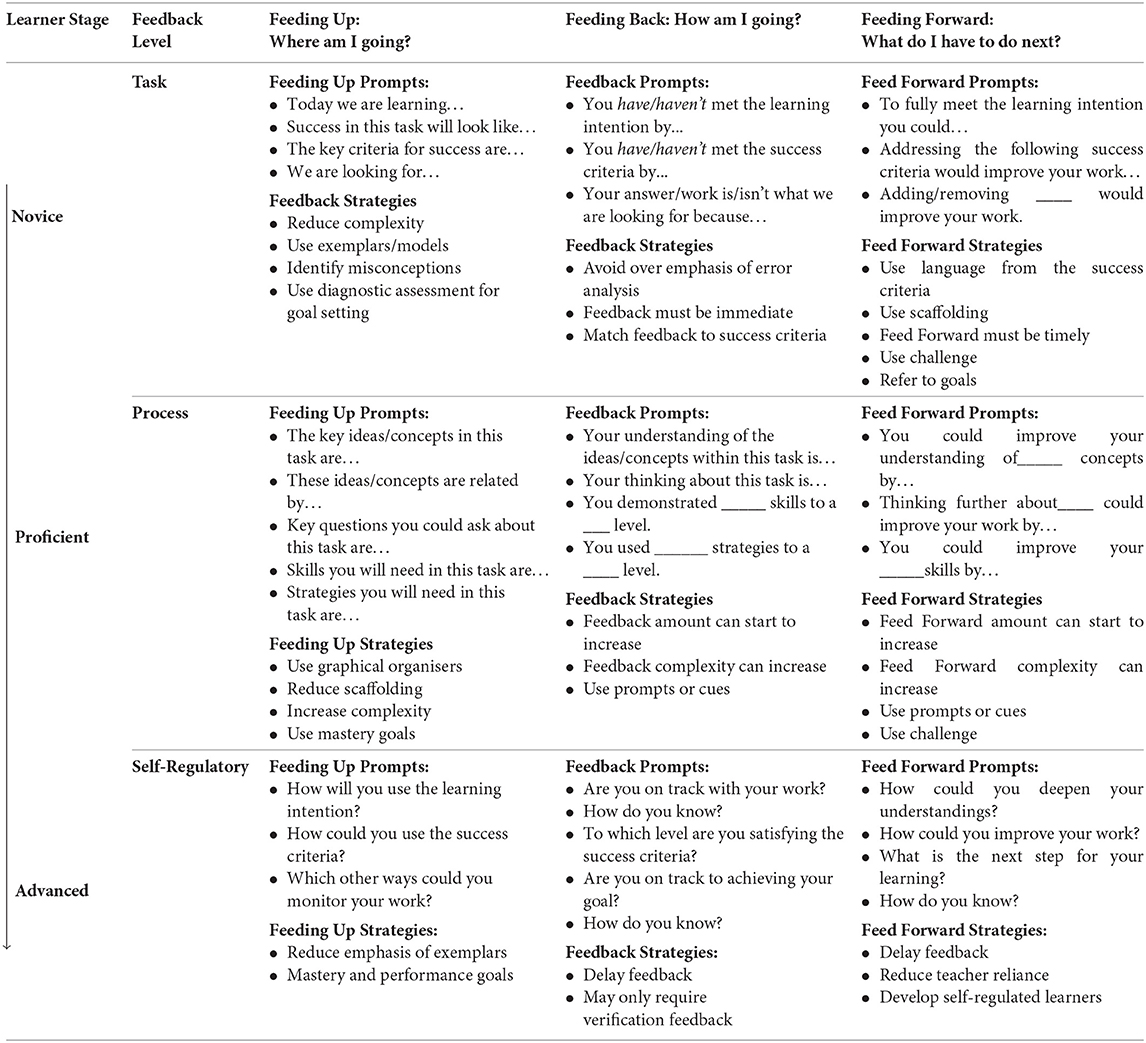

Hattie and Timperley's (2007) feedback types and levels were used as the conceptual framework for the investigation and for the questionnaire design. This conceptual framework delineates feedback first into three types: feeding up, feeding back, and feeding forward, and second into four levels: task, process, self-regulatory, and self. The three feedback types correspond to Hattie and Timperley's (2007) three feedback questions (Where am I going? How am I going? What is my next step?). These questions position the feedback types from the learner's perspective. This is imperative as research has shown that giving feedback provides no guarantee that it will be used, rather feedback needs to be viewed from how it is received (Hattie and Gan, 2011). Hattie and Timperley's four levels of feedback (task, process, self-regulation, and self) are designed to match the content and focus of feedback to the learning stage of individual students. Task level feedback provides information about the task requirements, process level feedback is aimed at the skills and strategies required to complete the task, and self-regulatory feedback is designed to facilitate learner self-improvement. Feedback to the self-level was not included in this study, as evidence would suggest it has negative effects on learning due to its common association with praise (Kluger and DeNisi, 1996; Dweck, 2007; Hattie, 2009a). Hattie (2012) further explains that task level feedback is most helpful for novice learners whilst process and self-regulatory feedback can be more helpful for proficient or expert learners. This investigation sought to answer the research question: which types and levels of feedback are most helpful to students?

Context of the Study

In this study, the Australian Curriculum Learning Area, English, and the Year 5 Achievement Standard for English were used as the context to explore feedback practices. The Australian Curriculum sets the content and the expected quality of learning for students through Learning Areas, General Capabilities, and Cross-curriculum Priorities (Australian Curriculum, Assessment and Reporting Authority, 2010). The English Curriculum focuses on language, literature, and literacy and aims to develop students' receptive and productive knowledge and skills through analyzing and creating imaginative, informative, and persuasive texts.

Methods

Participants and Setting

Participants in the study were drawn from 13 Government schools in Queensland, Australia. The student populations of the 13 schools represent a range of multi-cultural and socio-economic backgrounds. In Australia, schools are compared using the Index of Community Socio-Educational Advantage (ICSEA), which has a mean score of 1,000; scores below 1,000 indicate lower than average socio-educational advantage whilst scores above 1,000 indicate higher than average socio-educational advantage. Scores of the 13 schools in the study ranged from 964 to 1,171. The schools, together with The Queensland Department of Education, joined the universities as partners in this research. One thousand two hundred and twenty-five Year 5 students aged between 9 years and 6 months and 10 years and 11 months from 54 classes were initially invited to participate in the study. Written and informed parent consent and student assent to participate were received from 807 parents and students. After removing students with missing or incomplete data, 691 students remained in the study, with a sample consisting of male (n = 317) and female participants (n = 374). This project complies with the provisions contained in the National Statement on Ethical Conduct in Human Research and complies with the regulations governing experimentation on humans. The protocol was approved by The University of Queensland Human Research Ethics Committee.

Instrument Design

A questionnaire was selected as the data collection measure due to its potential to incorporate a wide range of response items and its ability to be administered to a student sample with relatively minimal disruption to learning. As a review of the literature failed to find a questionnaire that suitably measured feedback type and level, a questionnaire was developed to measure middle school aged students' responses about the helpfulness to learning of different feedback types and levels. The questionnaire was trialed within the context of the Year 5 English curriculum.

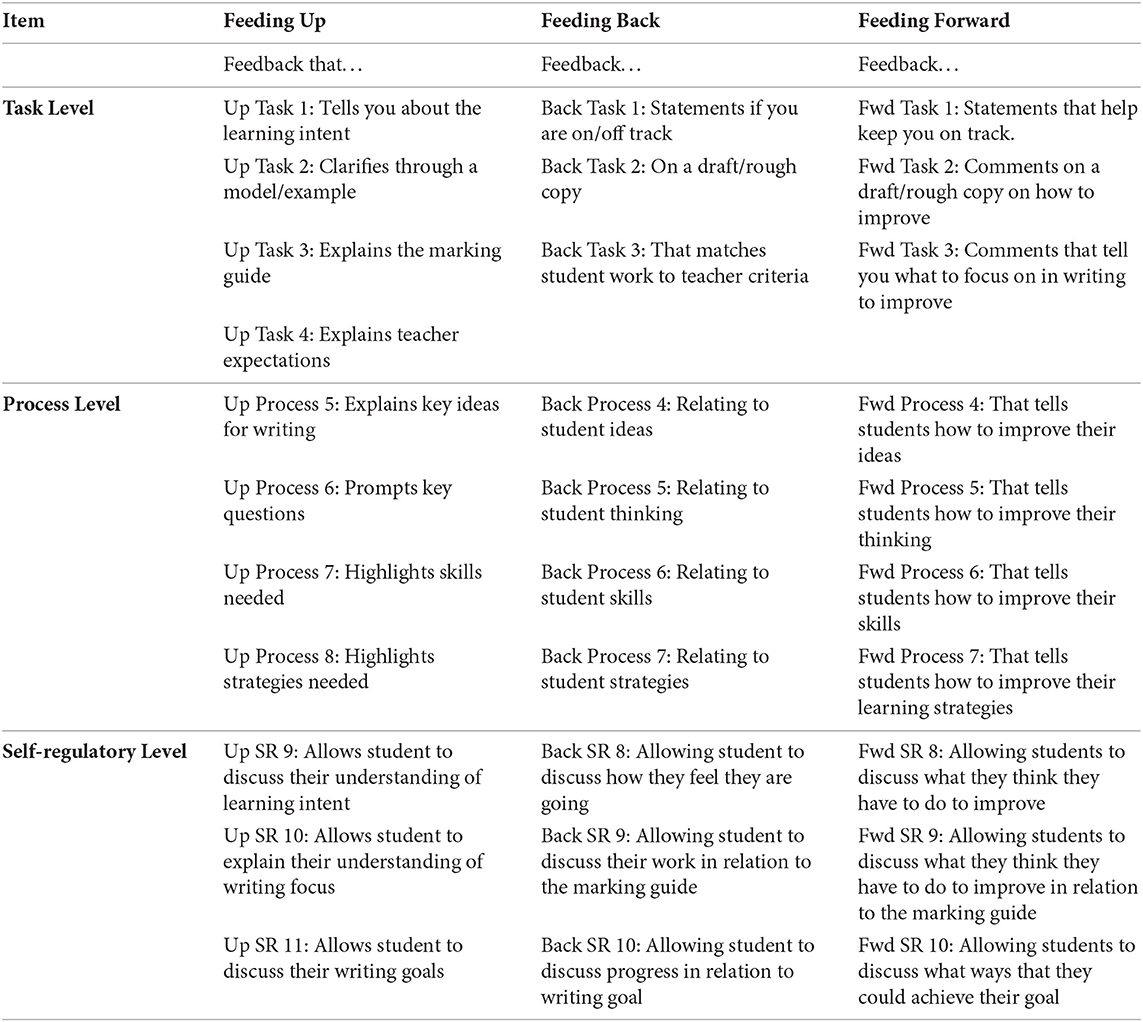

The questionnaire was organized into three sections according to feedback type and level as defined by Hattie and Timperley (2007). Three feedback types (feed up, feed back, and feed forward) were divided into three feedback levels (task, process, and self-regulation), thereby forming a three-by-three feedback matrix. As noted in the literature review, the self-level was not included in this research due to the established research on the detrimental effects of self-level feedback on learning (Kluger and DeNisi, 1996; Dweck, 2007; Hattie, 2009a). The feedback matrix and a description of the questionnaire items are found in Appendices 1 and 2. Student responses could then be measured according to the three feedback types, the three feedback levels, or the nine sub-sections formed by the intersection of feedback type and level. An example of such a sub-section would be feeding up at the task level. Items for the questionnaire were derived from a feedback for learning matrix (Brooks et al., 2019) that was designed upon Hattie and Timperley's model of feedback.
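The three-by-three structure can be made concrete with a short sketch. The type and level labels come from the description above; the variable names are illustrative only and are not taken from the SFPQ or its appendices.

```python
from itertools import product

# Feedback types and levels as named in the text (the self level is excluded).
FEEDBACK_TYPES = ("feed up", "feed back", "feed forward")
FEEDBACK_LEVELS = ("task", "process", "self-regulation")

# Each cell of the matrix is one (type, level) pair,
# for example feeding up at the task level.
feedback_matrix = list(product(FEEDBACK_TYPES, FEEDBACK_LEVELS))

for feedback_type, level in feedback_matrix:
    print(f"{feedback_type} / {level}")
```

Enumerating the cells this way makes it easy to see that responses can be grouped by type (rows), by level (columns), or by individual cell.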

Item Selection

The first section of the questionnaire categorized items according to feeding up, which was then further categorized into three feeding up subsections according to the three feedback levels (task, process, and self-regulation). Four question items proposed for feeding up at the task level were generated from concepts from the feedback matrix such as statements of learning intent, clarification of the success criteria, the use of models and exemplars, and specific statements of “what I'm looking for.” Similarly, four items were proposed for feeding up at the process level. These items pertained to ideas, questions, skills, and strategies. Finally, three items were proposed for feeding up at the self-regulatory level relating to the feedback matrix concepts of student understanding of the learning intent, students' use of the success criteria, and student goal setting. The same process as detailed above was repeated for feeding back and feeding forward.

In total, the SFPQ comprised 31 items. All response items required the students to give a 1–5 rating on a Likert scale of how helpful the particular feedback example was, with a rating of 1 being very unhelpful and 5 being very helpful.

Face Validity

Face validity was sought from four experienced primary school teachers. Face validity refers to the degree to which an instrument appears to measure what it claims to measure (Gay et al., 2011). The teachers reviewed the content, scale, length, and wording of the SFPQ to determine the appropriateness for Year 5 participants. As a result, items 1.2, 1.3, 2.9, and 3.9 required rewording, as they may have been confusing to students. In item 1.2, the term “example” was added to the term “model,” and in the other items, the term “GTMJ” was changed to “Marking guide.”

Procedure

Meetings were held to issue the questionnaires to teachers and explain the instructions for implementation. The classroom teacher administered the questionnaire to his/her students in order to make the students feel comfortable with the process. The instructions included the date of implementation, the use of a code to de-identify student responses and seating guidelines. Each teacher was directed to introduce the questionnaire, provide a reminder of ethical considerations, read aloud each item, and provide basic clarification to help student comprehension. The students were required to circle a number ranging from 1 to 5 to indicate their response for each item. Completed questionnaires were collected by the researchers for analysis.

Data Analysis

Quantitative data from the 31 items of the SFPQ were analyzed with exploratory factor analysis (EFA), Cronbach's alpha, structural equation models (SEM), and through the comparisons of means.

The Exploratory Factor Analysis of Feedback Variables

Exploratory factor analysis using maximum likelihood was conducted to uncover the underlying constructs using the individual items in the SFPQ. Exploratory factor analysis is an important statistical method to discover the factor structure of the latent constructs (Thompson, 2004). It does so by calculating the factor loadings using the correlation matrix of the input variables. Factor loadings measure the associations between the input variables and the resulting factors. Each input variable ideally loads highly onto one and only one factor (Fleming, 2003). Relatedly, another important purpose of using exploratory factor analysis is to examine the dimensionality of the scale (Conway and Huffcutt, 2003). In this research, the oblique rotation method was used to allow for correlations between the resulting factors. This method was used because it often yields more interpretable factors than specifying the factors to be uncorrelated.
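As noted above, factor loadings are calculated from the correlation matrix of the input variables. The sketch below illustrates only that first step, using made-up ratings rather than study data; the function names are hypothetical. Estimating the loadings themselves (maximum likelihood extraction followed by an oblique rotation) requires dedicated statistical software and is not shown.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length lists of ratings."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def correlation_matrix(items):
    """items: dict mapping an item label to its list of ratings."""
    labels = list(items)
    return {a: {b: pearson(items[a], items[b]) for b in labels} for a in labels}

# Hypothetical 1-5 ratings from five students on three items (not study data).
ratings = {
    "item_a": [4, 3, 5, 2, 4],
    "item_b": [5, 3, 5, 3, 4],
    "item_c": [4, 2, 5, 2, 5],
}
corr = correlation_matrix(ratings)
```

Items that correlate strongly with one another, as these hypothetical items do, are the ones a factor analysis would tend to group onto the same factor.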

Testing Reliability of Feedback Measures

The reliability of the three-factor structure identified in the exploratory factor analysis was assessed using Cronbach's alpha (Cronbach, 1951). Reliability refers to internal consistency or stability of the scale (Davidshofer and Murphy, 2005). Different items are different attempts to measure the same constructs. If the items are consistently answered, they are reliable measures of the constructs. Cronbach's alpha is the established measure of reliability. Values of alpha over 0.7 indicate a reliable scale (DeVellis, 2012). Cronbach's alpha was calculated for each of the three factors identified in the exploratory factor analysis.
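For readers unfamiliar with the statistic, the following is a minimal sketch of the standard Cronbach's alpha formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The ratings are hypothetical and the function name is illustrative; this is not the authors' analysis code.

```python
from statistics import variance

def cronbach_alpha(rows):
    """rows: one list of item ratings per student, all of the same length."""
    k = len(rows[0])                      # number of items in the scale
    item_columns = list(zip(*rows))       # regroup ratings by item
    sum_item_variances = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum_item_variances / total_variance)

# Hypothetical 1-5 ratings from five students on a three-item scale.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.2f}, above 0.7 threshold: {alpha > 0.7}")
```

In the study, the same calculation would be applied separately to the items belonging to each of the three factors.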

The Confirmatory Analysis of the Relationships Between Latent Feedback Variables: Structural Equation Modeling

Structural equation modeling (SEM) with maximum likelihood was used to examine the model fit of this factor structure to the data, obtain standardized factor loadings, and compute the weighted mean of the three factors. SEM is the analysis of causal structures of non-experimental data (Blunch, 2008). It has the advantage over other types of statistical techniques because it has a clear theoretical rationale, differentiates between what is known and unknown, and sets conditions for posing new questions (Kline, 2015). SEM models were built and estimation of these models was conducted on the existing data set using Stata version 14.2.

Descriptive Statistics

Quantitative results from the SFPQ were also analyzed through the comparison of means. Student responses to the helpfulness of feedback were grouped according to the factor structure identified in the exploratory factor analysis. Mean scores for each factor were found by calculating the weighted means of the individual items' 1–5 Likert scale responses on the SFPQ. Weights were calculated using the standardized factor loadings.
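The weighting step can be sketched as follows. This assumes that each item's weight is its standardized loading divided by the sum of loadings for that factor, so that the score stays on the 1–5 scale; the text does not state the exact normalization, and the loadings and ratings below are hypothetical.

```python
def weighted_factor_score(ratings, loadings):
    """Loading-weighted mean of one student's ratings on one factor's items."""
    if len(ratings) != len(loadings):
        raise ValueError("ratings and loadings must have the same length")
    # Assumption: weights are loadings normalized to sum to 1.
    return sum(w * r for w, r in zip(loadings, ratings)) / sum(loadings)

# Hypothetical standardized loadings for a three-item factor.
loadings = [0.6, 0.7, 0.5]
# Hypothetical 1-5 ratings from one student on those three items.
ratings = [4, 5, 3]

score = weighted_factor_score(ratings, loadings)
print(f"weighted factor score = {score:.2f}")
```

Averaging these per-student scores across the sample would then give a factor mean of the kind reported in the Results.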

Results

Exploratory Factor Analysis

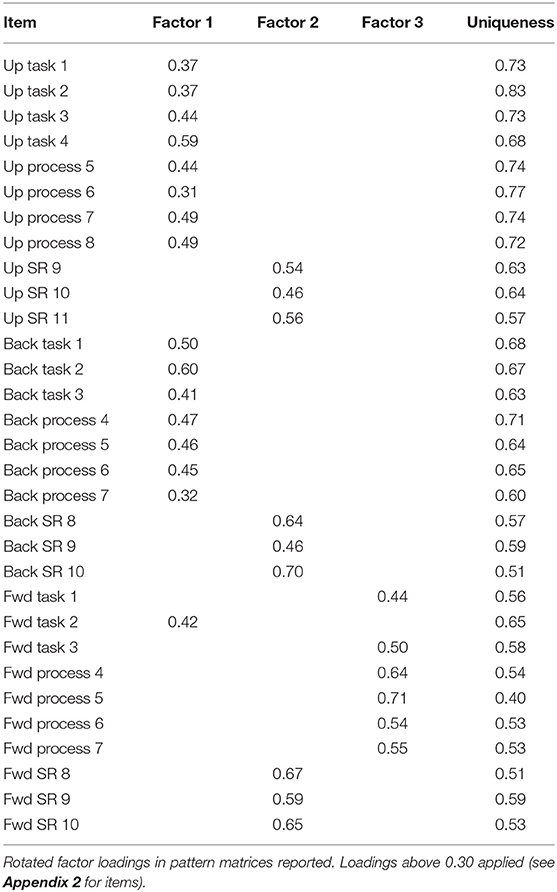

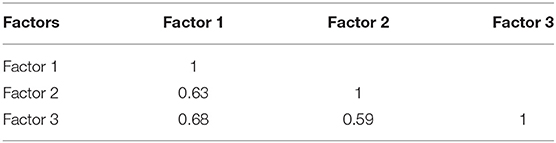

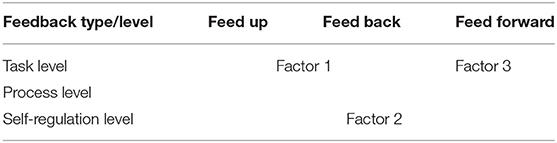

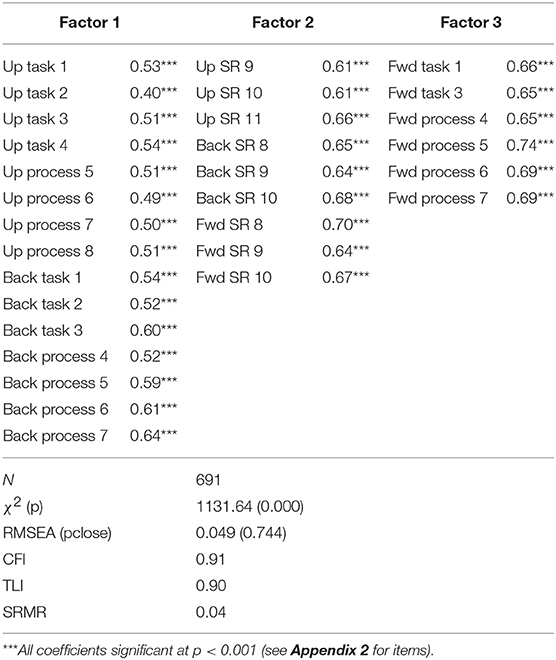

Maximum likelihood factor analysis was conducted to verify the unidimensionality of the factor constructs in preparation for causal analysis in SEM. This provides evidence that each feedback type is unidimensional (Conway and Huffcutt, 2003). All items loaded onto a unique factor with the exception of item 3.2, which cross-loaded onto factor 1 and factor 3. In Table 1, we report the rotated factor loadings in pattern matrices of the exploratory factor analysis output. Comparing the loading patterns with the conceptual model of feedback type and level as described in the feedback matrix (Brooks et al., 2019), we find that although these three factors do not strictly follow the three feedback types or feedback levels, they show a partially cross-classified structure of feedback types and levels. Factor 1 consists predominantly of items of feeding up and feeding back at both task and process levels, factor 2 consists of items of self-regulation level feedback, and factor 3 consists of items of feeding forward at both task and process levels. Table 2 illustrates how the 3-factor solution of the exploratory factor analysis compares to the 3 levels and 3 types of feedback from the conceptual model of feedback. The three factors are highly inter-correlated with all correlations close to or above 0.6 (see Table 3).

Table 2. The structure of the 3 factor exploratory factor analysis in relation to the conceptual model of feedback.

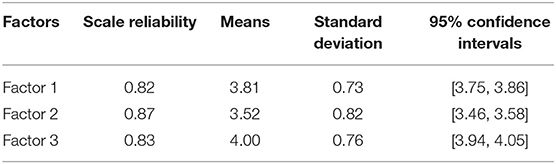

Reliability of Measures for Feedback Type

Cronbach's alpha is 0.86 for factor 1, 0.87 for factor 2, and 0.84 for factor 3. These results indicate high reliability of measures in each factor.

Structural Equation Modeling Results

Confirmatory factor analysis was conducted to examine the model fit of the exploratory factor structure to the data, obtain standardized factor loadings, and compute the weighted mean of the three factors. Table 4 reports standardized estimates and model fit statistics for the structural equation model for the factor structure. Results demonstrate that the factor structure in Table 1 fits the data well. All items contribute positively to measuring the factors. Most standardized factor loadings are above 0.5, indicating that items measure the factors properly. All estimates are statistically significant at the 0.1% level.

Table 4. Standardized factor loadings and model fit statistics for the confirmatory factor analysis.

Model fit statistics show that the SEM fits the data well. Although the likelihood-ratio chi-square test is statistically significant (p < 0.05), it is well-documented that the chi-square test statistic is very sensitive to sample size (Bollen, 1989; Schermelleh-Engel et al., 2003; Vandenberg, 2006; Barrett, 2007). As such, the degree of model fit should be based on other indices, such as the Standardized Root Mean Square Residual (SRMR, absolute fit index) and the Tucker-Lewis Index (TLI, relative fit index) (Vandenberg, 2006). The root mean square error of approximation (RMSEA) in Table 4 is 0.049 (below 0.08) and is not statistically significant (pclose > 0.05). The Comparative Fit Index (CFI) and TLI are both above 0.9. The SRMR is below 0.08. All these indices suggest good model fit (Hu and Bentler, 1999; Hooper et al., 2008; Kline, 2015).
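As an illustration of how these indices relate to the chi-square statistic, the sketch below computes RMSEA, CFI, and TLI from a model chi-square and a baseline (null model) chi-square using their textbook definitions. The function name and all input values are hypothetical and are not the values in Table 4; SEM software may differ in detail (for example, some packages divide by n rather than n - 1 in the RMSEA).

```python
import math


def fit_indices(chi2_m, df_m, chi2_b, df_b, n):
    """RMSEA, CFI and TLI from model (m) and baseline (b) chi-square values."""
    # RMSEA: excess misfit per degree of freedom, scaled by sample size.
    rmsea = math.sqrt(max(chi2_m - df_m, 0.0) / (df_m * (n - 1)))
    # CFI: relative reduction in non-centrality compared with the baseline model.
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)
    cfi = 1.0 - d_m / d_b if d_b > 0 else 1.0
    # TLI: compares chi-square/df ratios of the baseline and target models.
    ratio_b = chi2_b / df_b
    ratio_m = chi2_m / df_m
    tli = (ratio_b - ratio_m) / (ratio_b - 1.0)
    return {"rmsea": rmsea, "cfi": cfi, "tli": tli}


# Hypothetical inputs chosen only to show the arithmetic.
example = fit_indices(chi2_m=500.0, df_m=200, chi2_b=5000.0, df_b=231, n=600)
# Cut-offs as used in the text: RMSEA below 0.08, CFI and TLI above 0.9.
acceptable = example["rmsea"] < 0.08 and example["cfi"] > 0.9 and example["tli"] > 0.9
print(example, acceptable)
```

Because the chi-square value grows with sample size while these indices adjust for it, a significant chi-square test can coexist with acceptable values on all three.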

Descriptive Statistics

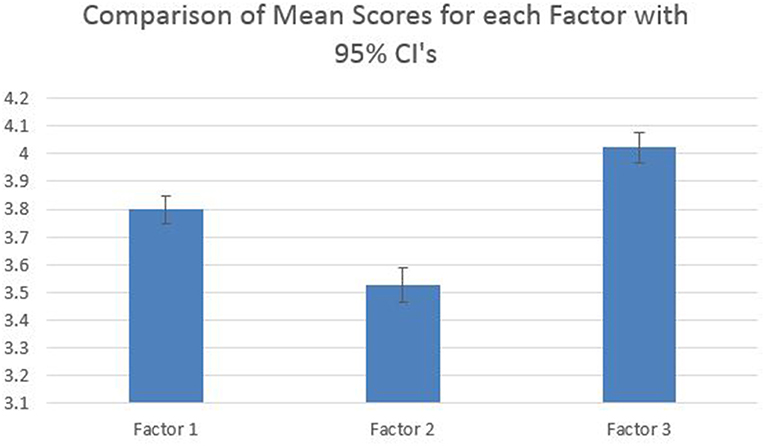

Weighted means for the three factors identified in the EFA were compared using 95% confidence intervals. Factor 3 was rated the most helpful feedback type on the 1–5 Likert scale (M = 4.02, SD = 0.73), 95% CI [3.97, 4.08]. Factor 1 was rated second in order of helpfulness (M = 3.80, SD = 0.69), 95% CI [3.75, 3.85], followed by Factor 2 (M = 3.53, SD = 0.82), 95% CI [3.47, 3.59]. These results are illustrated in Figure 1.

Figure 1. Mean scores for feedback helpfulness on a 1–5 scale according to factor with 95% confidence intervals.
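The comparison of means can be sketched as follows. The first function shows a normal-approximation 95% confidence interval for a mean (M ± 1.96 × SD / √n) on hypothetical scores; the second checks whether two intervals overlap, applied here to the three intervals reported above. The function names and toy scores are assumptions for illustration, and the exact interval method used in the analysis is not stated in the text.

```python
from math import sqrt
from statistics import mean, stdev


def mean_with_ci(scores, z=1.96):
    """Mean and normal-approximation confidence interval for a list of scores."""
    m = mean(scores)
    half_width = z * stdev(scores) / sqrt(len(scores))
    return m, (m - half_width, m + half_width)


def intervals_overlap(a, b):
    """True if closed intervals a = (low, high) and b = (low, high) overlap."""
    return a[0] <= b[1] and b[0] <= a[1]


# Hypothetical factor scores for eight students, for illustration only.
toy_scores = [4.2, 3.8, 4.5, 3.9, 4.1, 4.4, 3.6, 4.0]
toy_mean, toy_ci = mean_with_ci(toy_scores)

# Intervals as reported in the text for the three factors.
reported = {
    "factor 3": (3.97, 4.08),
    "factor 1": (3.75, 3.85),
    "factor 2": (3.47, 3.59),
}
any_overlap = any(
    intervals_overlap(reported[a], reported[b])
    for a, b in [("factor 3", "factor 1"), ("factor 1", "factor 2"), ("factor 3", "factor 2")]
)
print(toy_mean, toy_ci, any_overlap)
```

None of the three reported intervals overlap, which is consistent with the ordering of helpfulness described above.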

Discussion

The results from this research study were analyzed for the purpose of validating the SFPQ as a tool to measure student perceptions of feedback received whilst undertaking a writing task. First, the data collection instrument, the SFPQ, was analyzed for structural validity using both exploratory (factor analysis) and confirmatory (SEM) methods. Second, reliability tests were performed using Cronbach's alpha. Finally, descriptive statistics (analysis of means) were used to analyze the SFPQ data. Key findings include: (1) the SFPQ partially affirms the conceptual model of feedback type and level; (2) feeding forward is a distinct feedback type; (3) up and back feedback types are highly inter-related with task and process feedback levels; and (4) self-regulation is a unique feedback level.

The SFPQ Partially Affirms the Conceptual Model of Feedback Type and Level

The SFPQ was designed using the structure of Hattie and Timperley's (2007) conceptual model of feedback. Items were selected that represented feedback according to type and level. Exploratory factor analysis demonstrated that the survey items fit a three-factor structure with loadings above 0.3. Whilst the three-factor structure of the exploratory factor analysis does not strictly follow the three feedback types and three feedback levels, it does partially affirm the conceptual model of feedback, as illustrated in Table 2. Factor 1 consists of items of feeding up and feeding back at both the task and process levels. Factor 2 consists of items pertaining to self-regulation, and Factor 3 consists of items of feeding forward at both the task and process levels. Cronbach's alpha indicates high reliability of measures for the three factors. The structural equation model results demonstrate good model fit for the data.

Feeding Forward Is a Unique Feedback Type

Results from the exploratory factor analysis demonstrated that feeding forward was a distinct feedback type in comparison to feeding up and feeding back. This suggests that students in the study perceived feeding forward differently to feeding up and feeding back. Hattie and Timperley (2007) state that feeding forward provides information to students about their next steps for learning. Brookhart (2012) asserts that when students receive information that clarifies their next steps for improvement, they gain control of their learning, which can lead to enhanced motivation and cognition. Brookhart does, however, note that feedback from the teacher is an external regulation and it is only when the student acts on the feedback that internal regulation is used and learning occurs. As such, feed forward, information that is used by the learner to improve, has been linked to the development of self-regulatory learning (Nicol and Macfarlane-Dick, 2006; Sadler, 2010; Boud, 2013). The underlying principle is that the provision of feedback is no guarantee of it being used (Hattie and Gan, 2011). Furthermore, if students are positioned as passive in the learning process, then feed forward information may never arrive. Boud and Molloy (2013) argue that students should not have to wait to receive feed forward information; rather, sustainable, student-centered models of feedback should be used where students have agency to generate their own feed forward information. Such models require students to be cognisant of standards and quality, and the use of strategies where students are active in the feedback process, such as self-monitoring and self-evaluating (Boud and Molloy, 2013).

Analysis of student responses in this research demonstrates that students rated factor 3, characterized by items of feeding forward, the most helpful feedback type. Feeding forward is feedback information that is improvement focused and clarifies the next steps for learning (Hattie and Timperley, 2007). The finding that feed forward information is highly valued by students is supported by the findings of other research. Peterson and Irving (2008), Beaumont et al. (2011), and Gamlem and Smith (2013) used interview or focus group methods and similarly found that students reported they wanted constructive feedback that not only tells them what to improve but explains how to do it. In studies using questionnaires, Weaver (2006) and Ferguson (2011) highlighted the importance tertiary students place on improvement-based feedback. Students reported that feedback comments that did not contain improvement-based feedback were unhelpful, indicating that the feed forward component was critical for feedback to be effective.

These findings have implications for teaching and learning in schools. Feedback is tied closely to assessment (Hattie, 2012) and it is only after assessment that teachers know their students' performance (Wiliam, 2011) and thus can provide feed forward information about how to improve. In schools, feedback, and feed forward information in particular, is heavily mediated by decisions on assessment processes. Curricula that are weighted toward the use of summative assessments at the conclusion of learning units are less likely to create the conditions for students to receive feed forward about improvement. Indeed, the very purpose of summative assessment is to make evaluations and judgements at the end of learning (Rowntree, 2015). Formative assessment, however, gives both teachers and students multiple opportunities to provide, receive, and generate feed forward information that is improvement focused in time for learning (Wiliam, 2011; Rowntree, 2015). Formative assessment directs learners' and teachers' attention toward closing the gap between students' current and desired standards (Wiliam, 2011). Hence, formative assessment is likely to be a key driver in generating the improvement-focused feed forward that students value.

Up and Back Feedback Types Are Highly Interrelated With Task and Process Feedback Levels

Results from the exploratory factor analysis demonstrate that student perceptions of up and back feedback types are highly interrelated with task and process feedback levels. Hattie and Timperley (2007) define feeding up (where the learner is going) as information that clarifies success for students. In the classroom, feeding up is typically clarified for students through the explicit communication of success criteria and the use of models and exemplars (Price and O'Donovan, 2006; Schunk and Zimmerman, 2007; Clarke, 2014). Whilst Hattie and Timperley's (2007) conceptual model points to distinct levels of feedback, this research found that student perceptions of feeding up are highly interrelated at the task and process levels. Task level feeding up is aimed at clarifying the task requirements whilst process level feeding up is directed to the processes, skills, or strategies required to complete the task. An examination of the success criteria from the Australian Curriculum (Australian Curriculum, Assessment and Reporting Authority, 2017)1 used in the English Unit in this research demonstrates that task and process level feeding up are intertwined. An example of a key criterion used in the unit of work was, “Develops and explains a point of view about a text, selecting information, ideas and images from a range of resources.” This criterion clearly has both task and process level components. Teachers typically communicate these criteria as they are written and do not separate them into task (the what) and process (the how). Learning occurs in context (National Research Council, 2000) and students want to know both what they have to do and how to go about it.

Similarly, results from this research demonstrate that feeding back was also highly interrelated at both the task and process levels. Feeding back is information related to progress in relation to the success criteria (Hattie and Timperley, 2007). Findings from this study demonstrate that student perceptions of feeding back cannot be neatly categorized into task or process levels. Items pertaining to task level feeding back included comments about the criteria for success whilst process level feeding back items referenced ideas, thinking, skills, and strategies. As noted in the paragraph above, these two groups of items are not mutually exclusive. For instance, in the classroom students receive feedback about their application of processes in regard to satisfying the success criteria of a task. Hattie and Timperley (2007) argue that task level feedback is usually directed to develop surface thinking whilst process level feedback can be directed to developing deeper understanding. Biggs and Collis (2014) use the Structure of Observed Learning Outcomes taxonomy to highlight that surface and deep thinking are connected as the former builds to the latter.

Whilst the preceding discussion examines the interrelationship between task and process feedback levels, analysis of the structure of the survey instrument also indicates interconnectedness between feeding up and feeding back. This is not surprising as these feedback types are conceptually related: a learner must understand both the success criteria (where they are going) and their current learning state (how they are going) to understand their progress (Hattie and Timperley, 2007). Likewise, established feedback models claim that effective feedback clarifies success for learners, provides feedback on progress, and highlights the next steps for improvement (Nicol and Macfarlane-Dick, 2006; Hounsell et al., 2008; Boud and Molloy, 2013). Whilst findings from this study report that feed forward is perceived by students to be the most helpful feedback type, it must also be anticipated that information gained from feeding up and feeding back helps students to make sense of the feed forward information they receive. When students receive effective feedback information, they are better able to close the feedback loop, meaning that feedback information is received and acted upon (Boud and Molloy, 2013).

Self-Regulation Is a Unique Feedback Level

A key finding from this research was that students perceive self-regulation as a distinct feedback level as compared to task and process level feedback. Hattie and Timperley (2007) define self-regulatory feedback as the application of self-monitoring skills during a task. In contrast to the explicit provision of task feedback, process and self-regulatory feedback were described as being given through prompts and questions with the purpose of activating learners to engage, think, reflect, self-monitor, and act upon the feedback. Brooks et al. (2019) found self-regulatory feedback occurred infrequently in the classroom. Likewise, Brookhart (2017) argues that most feedback comes from the teacher, is directed to the student, and is delivered through a variety of modes. Winstone et al. (2017) found that processes aligned with self-regulation were important mediators for how students received and actioned feedback. The authors proposed four key recipience processes: self-appraisal; assessment literacy; goal setting and self-regulation; and engagement and motivation.

Interestingly, in this study students rated survey items analogous with self-regulatory level feedback as being less helpful than other feedback. This may be due in part to student expectations of types of feedback that are traditionally used in the classroom. Harris and Brown (2013) found that the implementation of endorsed but underused practices of peer and self-assessment was complex as such practices were largely unfamiliar to teachers and students. They reported the use of such practices challenged teacher and student beliefs of the role of assessment and feedback. In a study of 47 primary teachers, teachers also reported that whilst they desired self-regulation in their students they were unsure how to develop such behaviors (Dignath-van Ewijk and van der Werf, 2012). This suggests that students are likely to be less familiar with self-regulatory feedback and as such are likely to be more reliant upon the traditional process of the teacher being the giver and the student the receiver of feedback. Hence, students appear to value or prefer being explicitly told what or how to improve by the teacher, rather than be prompted to think and consider how they could use processes, skills or strategies for improvement.

Limitations

Despite the timeliness of this study and the application of the theory-based approach to survey construction, there are several limitations that must be acknowledged. First, although all reported factor loadings were above the conventional threshold of 0.3 and all items load uniquely onto one factor, some loadings were not particularly strong2. For example, if loadings above 0.4 are considered salient (Field, 2009; Stevens, 2009), then four loadings in our EFA results were not salient using this criterion. If loadings should be above 0.5 to be salient (Backhaus et al., 2006), then 16 loadings were below this threshold. In addition, if the cut-off point is 0.6 (Guadagnoli and Velicer, 1988; Bortz and Döring, 2006), then only seven loadings were considered salient by this standard. Therefore, there were some variations in the strength of our factor loadings depending on how a salient loading is defined.
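The dependence of these counts on the chosen cut-off can be sketched as a simple tally. The loadings below are hypothetical and are not the values in Table 1; the function name is an assumption for illustration.

```python
def count_salient(loadings, cutoffs=(0.3, 0.4, 0.5, 0.6)):
    """Number of loadings whose absolute value is above each cut-off."""
    return {c: sum(1 for x in loadings if abs(x) > c) for c in cutoffs}


# Hypothetical pattern loadings for eight items, for illustration only.
toy_loadings = [0.72, 0.65, 0.58, 0.51, 0.47, 0.44, 0.38, 0.33]
salient_counts = count_salient(toy_loadings)
print(salient_counts)
```

The same set of loadings is judged entirely salient at 0.3 and mostly non-salient at 0.6, which is the sensitivity to definition that this limitation describes.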

Second, the intercorrelations among factors were moderately high, with a correlation coefficient of 0.63 between factors 1 and 2, 0.68 between factors 1 and 3, and 0.59 between factors 2 and 3. Some correlations among these factors were expected (Costello and Osborne, 2005), because the feedback types and levels that underlie these factors are conceptually related. However, the intercorrelations among factors should not be “too high,” because “highly overlapping factors are not of great theoretical interest” (Gorsuch, 1983, p. 33) and may indicate a lack of discriminant validity (Henseler et al., 2015). Nevertheless, there is no consensus in the empirical literature on what level of factor intercorrelations should be considered “too high.” Some researchers suggest 0.7–0.8 as the cut-off point (Gorsuch, 1983; Cheung and Wang, 2017), other scholars recommend 0.85 (Clark and Watson, 1995; Kline, 2011), yet others propose a threshold of 0.90 (Gold et al., 2001; Teo et al., 2008). Although our factor correlations were below the most conservative threshold of 0.7 in the literature, they were all near this borderline.

Third, the use of scale scores based on weighted factor loadings capitalizes on chance variation in the data set. We undertook a sensitivity analysis by estimating and reporting in Table 5 scale reliability and means based on unit-weighted factor scores from the items with the most salient loadings (Gorsuch, 1983; Grice, 2001). We used the threshold of 0.4 to define salient loadings. This is because the number of items for factor 1 was reduced to only three when the cut-off point of 0.5 was used and to only one when the cut-off point of 0.6 was used. Many studies have demonstrated that factors with fewer than three items are generally unstable and that solid factors in general have five or more items with strong loadings (Costello and Osborne, 2005; Tabachnick and Fidell, 2007; Yong and Pearce, 2013). Table 5 shows that unit-weighted factor scores and loading-weighted factor scores produced very similar and highly consistent scale reliability and means. All factors based on unit-weighted factor scores showed high reliability; the unit-weighted means were very close to the loading-weighted means, showing the same pattern that factor 3 was rated the most helpful and was followed by factor 1 and factor 2. The differences in the unit-weighted means were also statistically significant at the 5% level.

Table 5. Scale reliability and means based on unit-weighted factor scores from the items with the most salient loadings (above 0.4).
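The difference between the two scoring approaches can be sketched as follows. This is a simplified illustration under stated assumptions: the loading-weighted score is taken here as a loading-weighted average of item responses, which may differ from the exact weighting used in the analysis, and the responses and loadings are hypothetical.

```python
def loading_weighted_score(scores, loadings):
    """Average of item scores weighted by their factor loadings."""
    return sum(s * w for s, w in zip(scores, loadings)) / sum(loadings)


def unit_weighted_score(scores, loadings, cutoff=0.4):
    """Plain average of the items whose loading is above the salience cut-off."""
    kept = [s for s, w in zip(scores, loadings) if w > cutoff]
    return sum(kept) / len(kept)


# One hypothetical student's responses to four items of a single factor.
toy_item_scores = [4, 5, 3, 4]
toy_item_loadings = [0.70, 0.60, 0.45, 0.35]   # the last item falls below 0.4

weighted = loading_weighted_score(toy_item_scores, toy_item_loadings)
unit = unit_weighted_score(toy_item_scores, toy_item_loadings)
print(round(weighted, 2), round(unit, 2))
```

Unit weighting gives every retained item the same influence, so it does not depend on the particular loading estimates obtained in one sample.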

The items in the student feedback perception questionnaire were specifically written for the context of a writing task within the Year 5 English curriculum. Whilst justifications were given in the method for the specificity of questionnaire items in order to measure students' perceptions of feedback type and level, further research would be required before using this tool in other settings. A final limitation is that the EFA and CFA were undertaken on the same data set. This is chiefly because of the large number of feedback items used in uncovering the factor structure relative to a moderate sample size. Future research should leverage large samples that enable splitting into sub-samples for EFA and CFA while maintaining sufficient statistical power.
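A random split of respondents into an exploratory and a confirmatory sub-sample, as recommended for future research, could be sketched as below. The function name, the fixed seed, and the respondent identifiers are assumptions for illustration only.

```python
import random


def split_sample(respondent_ids, seed=0):
    """Randomly split respondents into two halves (EFA half, CFA half)."""
    ids = list(respondent_ids)
    rng = random.Random(seed)       # fixed seed so the split is reproducible
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


# Hypothetical respondent identifiers.
efa_ids, cfa_ids = split_sample(range(1, 1001))
print(len(efa_ids), len(cfa_ids))
```

Each half would then need to be large enough on its own to support the number of items being analyzed.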

Summary

This research sought to add to the limited body of evidence regarding middle school students' perceptions of feedback. The review of the limited research in this area highlights that students seek feedback that is (i) specific, purposeful, and constructive (Pajares and Graham, 1998; Gamlem and Smith, 2013; Van der Kleij et al., 2017), (ii) matched to the criteria for assessment (Brown et al., 2009; Beaumont et al., 2011), and (iii) received in time for learning (Peterson and Irving, 2008). Yet too often the feedback message is lost because the feedback arrives too late (Gamlem and Smith, 2013) or, if it is received in time, its content and conceptual level are not matched to the task objectives or the student's current learning state (Murtagh, 2014).

Findings from this study demonstrate that the SFPQ tool partially affirms Hattie and Timperley's (2007) conceptual model of feedback. Feeding forward (information about improvement) was found to be a unique feedback type that was perceived by participants as being most helpful to learning compared to other feedback. Student responses about feeding up (clarifying success) and feeding back (information about progress) were found to be highly interrelated at both task and process levels. Self-regulatory feedback was perceived to be a distinct level of feedback and reported by students to be less helpful than other feedback. This may be due to student unfamiliarity with self-regulatory feedback and the cognitive effort required to process such information in comparison to receiving explicit feedback about the task and process from the teacher. This implies that students want and are reliant upon explicit feedback about the requirements of the task rather than feedback that encourages deeper thought processes or the generation of self-feedback through the monitoring of their own work.

These findings highlight important implications for teaching. First, how can formative assessment practices be used as evidence-based data to provide differentiated feedback at the task, process, and self-regulatory levels? It is only through the process of formative assessment that teachers know where students are in the learning cycle and which type and level of feedback will be the most effective for moving the learner forward. Second, how can strategies such as peer and self-assessment be used to make teachers and students more open to the benefits of self-regulatory feedback? A sustainable model of feedback needs to recognize that feedback cannot flow unidirectionally from teacher to student; rather, teachers need to develop capability in learners to regulate their own progress.

Data Availability

The data supporting the conclusions of this manuscript will be made available by the authors, without undue reservation, to any qualified researcher.

Ethics Statement

This project complies with the provisions contained in the National Statement on Ethical Conduct in Human Research and complies with the regulations governing experimentation on humans. The protocol was approved by The University of Queensland Human Research Ethics Committee.

Author Contributions

CB, YH, JH, AC, and RB contributed to the conception and design of the study and wrote sections of the manuscript. CB and RB collected data. YH performed the statistical analysis. CB and YH wrote the first draft of the manuscript. All authors contributed to manuscript revision, and read and approved the submitted version.

Funding

This research was funded by the Australian Government through the Australian Research Council (LP160101604).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank the reviewer for suggesting this course of action.

Footnotes

1. ^Australian Curriculum, Assessment and Reporting Authority (ACARA) (2017). https://www.australiancurriculum.edu.au/

2. ^It needs to be understood that the definition of a salient/large factor loading varies across studies. As Gorsuch (1983, p. 208) puts it, “Unfortunately, there is no exact way to determine salient loadings in any of the three matrices used for interpreting factors. Only rough guidelines can be given.” Some researchers argue that the definition of salient loadings depends on the sample size (Gorsuch, 1983; Hair et al., 1998), with smaller sample sizes requiring higher cut-offs for loadings to be salient. Other researchers, however, recommend different cut-off values for salient loadings irrespective of the sample size (Guadagnoli and Velicer, 1988; Field, 2009; Stevens, 2009).

References

Backhaus, K., Erichson, B., Plinke, W., and Weiber, R. (2006). Multivariate Analysis Methods: An Application Oriented Introduction. Berlin: Springer.

Barrett, P. (2007). Structural equation modeling: judging model fit. Pers. Indiv. Diff. 42, 815–824. doi: 10.1016/j.paid.2006.09.018

Beaumont, C., O'Doherty, M., and Shannon, L. (2011). Reconceptualising assessment feedback: a key to improving student learning? Stud. High. Educ. 36, 671–687. doi: 10.1080/03075071003731135

Biggs, J. B., and Collis, K. F. (2014). Evaluating the Quality of Learning: The SOLO Taxonomy (Structure of the Observed Learning Outcome). New York, NY: Academic Press.

Blunch, N. J. (2008). Introduction to Structural Equation Modelling Using SPSS and AMOS. London: SAGE. doi: 10.4135/9781446249345

Bollen, K. A. (1989). Structural Equations With Latent Variables. New York, NY: John Wiley. doi: 10.1002/9781118619179

Bortz, J., and Döring, N. (2006). Research Methods and Evaluation for the Human and Social Sciences, 4th Edn. Heidelberg: Springer. doi: 10.1007/978-3-540-33306-7

Boud, D. (2013). Enhancing Learning Through Self-Assessment. Abingdon: Routledge. doi: 10.4324/9781315041520

Boud, D., and Molloy, E. (2013). Rethinking models of feedback for learning: the challenge of design. Assess. Eval. High. Educ. 38, 698–712. doi: 10.1080/02602938.2012.691462

Brookhart, S. M. (2012). “Teacher feedback in formative classroom assessment,” in Leading Student Assessment, eds C. Webber and J. Lupart (Dordrecht: Springer), 225–239. doi: 10.1007/978-94-007-1727-5_11

Brooks, C., Carroll, A., Gillies, R., and Hattie, J. (2019). A matrix of feedback for learning. Austr. J. Teach. Educ. 44, 14–32. doi: 10.14221/ajte.2018v44n4.2

Brown, G. T. L., Peterson, E. R., and Irving, S. E. (2009). “Beliefs that make a difference: adaptive and maladaptive self-regulation in students' conceptions of assessment,” in Student Perspectives on Assessment: What Students Can Tell Us About Assessment for Learning, eds D. M. McInerney, G. T. L. Brown, and G. A. D. Liem (Charlotte, NC: Information Age), 159–186.

Cheung, G. W., and Wang, C. (2017). “Current approaches for assessing convergent and discriminant validity with SEM: issues and solutions,” in Academy of Management Proceedings, Vol. 2017 (Briarcliff Manor, NY: Academy of Management), 12706.

Clark, L. A., and Watson, D. (1995). Constructing validity: basic issues in objective scale development. Psychol. Assess. 7, 309–319. doi: 10.1037/1040-3590.7.3.309

Conway, J. M., and Huffcutt, A. I. (2003). A review and evaluation of exploratory factor analysis practices in organizational research. Organ. Res. Methods 6, 147–168. doi: 10.1177/1094428103251541

Costello, A. B., and Osborne, J. W. (2005). Best practices in exploratory factor analysis: four recommendations for getting the most from your analysis. Pract. Assess. Res. Eval. 10, 1–9.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika 16, 297–334. doi: 10.1007/BF02310555

Davidshofer, K. R., and Murphy, C. O. (2005). Psychological Testing: Principles and Applications, 6th Edn. Upper Saddle River, NJ: Pearson/Prentice Hall.

Dignath-van Ewijk, C., and van der Werf, G. (2012). What teachers think about self-regulated learning: investigating teacher beliefs and teacher behavior of enhancing students' self-regulation. Educ. Res. Int. 2012:741713. doi: 10.1155/2012/741713

Dweck, C. S. (2007). The secret to raising smart kids. Sci. Am. Mind 18, 36–43. doi: 10.1038/scientificamericanmind1207-36

Ferguson, P. (2011). Student perceptions of quality feedback in teacher education. Assess. Eval. High. Educ. 36, 51–62. doi: 10.1080/02602930903197883

Fleming, J. S. (2003). Computing measures of simplicity of fit for loadings in factor-analytically derived scales. Behav. Res. Methods Instr. Comp. 34, 520–524. doi: 10.3758/BF03195531

Gamlem, S. M., and Smith, K. (2013). Student perceptions of classroom feedback. Assess. Educ. Principles Policy Prac. 20, 150–169. doi: 10.1080/0969594X.2012.749212

Gay, L. R., Mills, G. E., and Airasian, P. W. (2011). Educational Research: Competencies for Analysis and Applications. Upper Saddle River, NJ: Pearson Higher Ed.

Gold, A. H., Malhotra, A., and Segars, A. H. (2001). Knowledge management: an organizational capabilities perspective. J. Manag. Inform. Syst. 18, 185–214. doi: 10.1080/07421222.2001.11045669

Grice, J. W. (2001). A comparison of factor scores under conditions of factor obliquity. Psychol. Methods 6, 67–83. doi: 10.1037/1082-989X.6.1.67

Guadagnoli, E., and Velicer, W. (1988). Relation of sample size to the stability of component patterns. Psychol. Bull. 103, 265–275. doi: 10.1037/0033-2909.103.2.265

Hair, J. F., Tatham, R. L., Anderson, R. E., and Black, W. (1998). Multivariate Data Analysis, 5th Edn. London: Prentice-Hall.

Harris, L. R., and Brown, G. T. (2013). Opportunities and obstacles to consider when using peer-and self-assessment to improve student learning: case studies into teachers' implementation. Teach. Teach. Educ. 36, 101–111. doi: 10.1016/j.tate.2013.07.008

Harris, L. R., Brown, G. T., and Harnett, J. A. (2014). Understanding classroom feedback practices: a study of New Zealand student experiences, perceptions, and emotional responses. Educ. Assess. Eval. Account. 26, 107–133. doi: 10.1007/s11092-013-9187-5

Hattie, J. (2009a). “The black box of tertiary assessment: an impending revolution,” in Tertiary Assessment and Higher Education Student Outcomes: Policy, Practice and Research, eds L. H. Meyer, S. Davidson, H. Anderson, R. Fletcher, P. M. Johnston, and M. Rees (Wellington: Ako Aotearoa), 259–275.

Hattie, J. (2009b). Visible Learning: A Synthesis of Over 800 Meta-Analyses Relating to Achievement. Abingdon: Routledge. doi: 10.4324/9780203887332

Hattie, J. (2012). Visible Learning for Teachers: Maximizing Impact on Learning. London; New York, NY: Routledge.

Hattie, J., and Gan, M. (2011). “Instruction based on feedback,” in Handbook of Research on Learning and Instruction, eds R. Mayer and P. Alexander (New York, NY: Routledge), 249–271.

Hattie, J., Gan, M., and Brooks, C. (2016). “Instruction based on feedback,” in Handbook of Research on Learning and Instruction, eds R. E. Mayer and P. A. Alexander (New York, NY; London: Taylor and Francis), 290–324.

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Henseler, J., Ringle, C. M., and Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Market. Sci. 43, 115–135. doi: 10.1007/s11747-014-0403-8

Hooper, D., Coughlan, J., and Mullen, M. R. (2008). Structural equation modelling: guidelines for determining model fit. Electron. J. Business Res. Methods 6, 53–60. doi: 10.21427/D7CF7R

Hounsell, D., McCune, V., Hounsell, J., and Litjens, J. (2008). The quality of guidance and feedback to students. High. Educ. Res. Dev. 27, 55–67. doi: 10.1080/07294360701658765

Hu, L., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. 6, 1–55. doi: 10.1080/10705519909540118

Jonsson, A., and Panadero, E. (2018). “Facilitating students' active engagement with feedback,” in The Cambridge Handbook of Instructional Feedback, eds A. A. Lipnevich and J. K. Smith (Cambridge: Cambridge University Press).

Kline, R. B. (2011). Principles and Practice of Structural Equation Modeling. New York, NY: Guilford Press.

Kline, R. B. (2015). Principles and Practice of Structural Equation Modeling, 4th Edn. New York, NY: The Guilford Press.

Kluger, A. N., and DeNisi, A. (1996). The effects of feedback interventions on performance: a historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol. Bull. 119, 254–284. doi: 10.1037/0033-2909.119.2.254

Lipnevich, A. A., Berg, D. A. G., and Smith, J. K. (2016). “Toward a model of student response to feedback,” in Handbook of Human and Social Conditions in Assessment, eds G. T. L. Brown and L. R. Harris (New York, NY: Routledge), 169–185.

Murtagh, L. (2014). The motivational paradox of feedback: teacher and student perceptions. Curric. J. 25, 516–541. doi: 10.1080/09585176.2014.944197

National Research Council (2000). How People Learn: Brain, Mind, Experience, and School, Expanded edition. Washington, DC: National Academies Press.

Nicol, D. J., and Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Stud. High. Educ. 31, 199–218. doi: 10.1080/03075070600572090

Pajares, F., and Graham, L. (1998). Formalist thinking and language arts instruction: teachers' and students' beliefs about truth and caring in the teaching conversation. Teach. Teach. Educ. 14, 855–870. doi: 10.1016/S0742-051X(98)80001-2

Peterson, E. R., and Irving, S. E. (2007). Conceptions of Assessment and Feedback. Retrieved from https://www.academia.edu/20018797/Conceptions_of_Assessment_and_Feedback_Project

Peterson, E. R., and Irving, S. E. (2008). Secondary school students' conceptions of assessment and feedback. Learn. Instruct. 18, 238–250. doi: 10.1016/j.learninstruc.2007.05.001

Pokorny, H., and Pickford, P. (2010). Complexity, cues and relationships: student perceptions of feedback. Active Learn. High. Educ. 11, 21–30. doi: 10.1177/1469787409355872

Price, M., and O'Donovan, B. (2006). “Improving performance through enhancing student understanding of criteria and feedback,” in Innovative Assessment in Higher Education, eds C. Bryan and K. Clegg (London; New York, NY: Routledge), 100–109.

Rust, C., O'Donovan, B., and Price, M. (2005). A social constructivist assessment process model: how the research literature shows us this could be best practice. Assess. Eval. High. Educ. 30, 231–240. doi: 10.1080/02602930500063819

Sadler, D. R. (1989). Formative assessment and the design of instructional systems. Instruct. Sci. 18, 119–144. doi: 10.1007/BF00117714

Sadler, D. R. (2010). Beyond feedback: developing student capability in complex appraisal. Assess. Eval. High. Educ. 35, 535–550. doi: 10.1080/02602930903541015

Schermelleh-Engel, K., Moosbrugger, H., and Müller, H. (2003). Evaluating the fit of structural equation models: tests of significance and descriptive goodness-of-fit measures. Methods Psychol. Res. 8, 23–74.

Schunk, D. H., and Zimmerman, B. J. (2007). Influencing children's self-efficacy and self-regulation of reading and writing through modeling. Read. Writ. Q. 23, 7–25. doi: 10.1080/10573560600837578

Shute, V. J. (2008). Focus on formative feedback. Rev. Educ. Res. 78, 153–189. doi: 10.3102/0034654307313795

Smith, J. K., and Lipnevich, A. A. (2009). “Formative assessment in higher education: frequency and consequence,” in Student Perspectives on Assessment: What Students Can Tell Us About Assessment for Learning, eds D. M. McInerney, G. T. L. Brown, and G. A. D. Liem (Charlotte, NC: Information Age), 279–295.

Stevens, J. P. (2009). Applied Multivariate Statistics for the Social Sciences. New York, NY: Routledge.

Strijbos, J. W., Pat-El, R. J., and Narciss, S. (2010). “Structural validation of a feedback perceptions questionnaire,” in Proceedings of the 9th International Conference of the Learning Sciences-Volume 2 (Chicago, IL: International Society of the Learning Sciences), 334–335. doi: 10.1037/t15429-000

Tabachnick, B. G., and Fidell, L. S. (2007). Using Multivariate Statistics, 5th Edn. Boston, MA: Allyn and Bacon.

Teo, T. S., Srivastava, S. C., and Jiang, L. (2008). Trust and electronic government success: an empirical study. J. Manag. Inform. Syst. 25, 99–132. doi: 10.2753/MIS0742-1222250303

Thompson, B. (2004). Exploratory and Confirmatory Factor Analysis: Understanding Concepts and Applications. Washington, DC: American Psychological Association. doi: 10.1037/10694-000

Thorndike, E. L. (1933). A proof of the law of effect. Science 77, 173–175. doi: 10.1126/science.77.1989.173-a

Thurlings, M., Vermeulen, M., Bastiaens, T., and Stijnen, S. (2013). Understanding feedback: a learning theory perspective. Educ. Res. Rev. 9, 1–15. doi: 10.1016/j.edurev.2012.11.004

Van der Kleij, F., Adie, L., and Cumming, J. (2017). Using video technology to enable student voice in assessment feedback. Br. J. Educ. Technol. 48, 1092–1105. doi: 10.1111/bjet.12536

Vandenberg, R. J. (2006). Statistical and methodological myths and urban legends. Organ. Res. Methods 9, 194–201. doi: 10.1177/1094428105285506

Weaver, M. R. (2006). Do students value feedback? Student perceptions of tutors' written responses. Assess. Eval. High. Educ. 31, 379–394. doi: 10.1080/02602930500353061

Weiner, B. (1984). “Principles for a theory of student motivation and their application within an attributional framework,” in Research on Motivation, Vol. 1, eds R. Ames and C. Ames (Orlando, FL: Academic Press), 15–38.

West, J., and Turner, W. (2015). Enhancing the assessment experience: improving student perceptions, engagement and understanding using online video feedback. Innov. Educ. Teach. Int. 53, 1–11. doi: 10.1080/14703297.2014.1003954

Wiliam, D. (2016). Leadership for Teacher Learning. West Palm Beach, FL: Learning Sciences International.

Winstone, N. E., Nash, R. A., Parker, M., and Rowntree, J. (2017). Supporting learners' agentic engagement with feedback: a systematic review and a taxonomy of recipience processes. Educ. Psychol. 52, 17–37. doi: 10.1080/00461520.2016.1207538

Yong, A. G., and Pearce, S. (2013). A beginner's guide to factor analysis: focusing on exploratory factor analysis. Tutor. Quant. Methods Psychol. 9, 79–94. doi: 10.20982/tqmp.09.2.p079

Appendices

Appendix 1: The Feedback Matrix

Appendix 2: Table of Items

Keywords: feedback, learning, self-regulation, perceptions, assessment, teachers

Citation: Brooks C, Huang Y, Hattie J, Carroll A and Burton R (2019) What Is My Next Step? School Students' Perceptions of Feedback. Front. Educ. 4:96. doi: 10.3389/feduc.2019.00096

Received: 26 April 2019; Accepted: 26 August 2019; Published: 18 September 2019.

Edited by:

Ernesto Panadero, Autonomous University of Madrid, Spain

Reviewed by:

Sarah M. Bonner, Hunter College (CUNY), United States

Lois Ruth Harris, Central Queensland University, Australia

Copyright © 2019 Brooks, Huang, Hattie, Carroll and Burton. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Cameron Brooks, c.brooks@uq.edu.au
