Gamification tailored for novelty effect in distance learning during COVID-19

- 1 Department of Psychology, Faculty of Social Studies, Masaryk University, Brno, Czechia

- 2 Department of Computer Systems and Communications, Faculty of Informatics, Masaryk University, Brno, Czechia

The pandemic led to increased use of online teaching tools. One such tool, which might have helped students stay engaged despite the distance, is gamification. However, gamification is often criticized because its effects may stem from a novelty effect, while others argue that novelty is a natural part of gamification. We therefore investigated whether the novelty effect of gamification brings incremental value compared with other novelties introduced in a course. We created achievement- and socialization-based gamification connected to coursework and practice tests. We then measured students' behavioral engagement and performance in a quasi-experiment. On the one hand, results show that students in an information and communication technologies (ICT) course engaged and performed moderately better in the gamified condition than in the control condition over time. On the other hand, results from business administration (BA) courses show no difference between the gamified and practice-test conditions or in their novelty effects. We conclude that an external gamification system yields better results than a classical course design but does not exceed the effect of practice tests.

1. Introduction

Gamification is an intentional or emergent transformation of a non-game environment into a more game-like state (Koivisto and Hamari, 2019). Over the last decade, gamification has been used in the workplace, in school education, in mobile apps for healthcare, fitness, and self-learning, and in many other contexts (Huotari and Hamari, 2017; Looyestyn et al., 2017; Sardi et al., 2017). Notably, implementing a gamified design in such contexts usually aims to change behavioral outcomes, performance, motivation, and attitudes (Treiblmaier and Putz, 2020). This can be done using game elements such as badges, leaderboards, and narratives; the most common combination is the PBL triad: points, badges, and leaderboards. Previous studies have shown that well-designed gamification has the potential to promote motivation (Hamari et al., 2014), increase behavioral task engagement (Looyestyn et al., 2017), and improve performance (Landers et al., 2017). However, many researchers acknowledge that the effect of gamification may instead be caused by a novelty effect (Koivisto and Hamari, 2019). A novelty effect means that when something new is added to an environment, people become curious and temporarily more engaged with it. Because gamification is itself something new in the environment, it may temporarily affect users' outcomes through such a novelty effect. More specifically, users' initial gains in engagement and performance have often declined over time in gamification studies (Farzan et al., 2008; Hamari, 2013). Raftopoulos (2020) even suggests that the novelty effect should be considered a systemic design feature of gamification. In other words, designers should strive to reap its benefits, for example, by irregularly adding something new to the design or by periodically redesigning the whole gamification.
However, even if we consider the novelty effect a feature of gamification rather than an intervening variable, we may ask whether implementing a gamified design has advantages over adding some other novel elements.

This study aims to examine how implementing an achievement- and socialization-based gamification design intended to enhance knowledge gain and retention in a university course differs, in the short term and the long term, from adding several practice tests with simple feedback. We thus address how effective gamification is and how useful it is to gamify compared with providing other novel catalysts of change in an educational context.

1.1. Gamification and its novelty effect in education

Over the last decade, many researchers have extensively examined the potential effects of various gamification designs in education, such as effects on motivation or learning performance (Koivisto and Hamari, 2019). However, the results appear somewhat mixed. In their review, Koivisto and Hamari (2019) found mostly positive effects of gamification in education/learning experiments (68%, 19 studies), but also a substantial share of null or ambiguous effects (25%, seven studies). Similarly, a review of gamified second-language acquisition in higher education (Boudadi and Gutiérrez-Colón, 2020) reports mainly positive (73%, 11 studies) and some ambiguous (20%, three studies) results. The authors of both reviews agree that the unclear effects may primarily be caused by the type of gamification (e.g., Duolingo is less suitable for more skilled language learners) or by small sample sizes. Other recent reviews likewise agree that negative results occur rarely and may be caused by incompatibility between the user, the gamification type, or the educational content type (Zainuddin et al., 2020a; Metwally et al., 2021). Thus, we may assume that gamification has a predominantly positive effect in educational contexts. This is also in line with a recent meta-analysis that found a medium positive effect of gamification in terms of performance feedback and enjoyment (Bai et al., 2020).

Recent large-sample studies further support this finding. For example, Legaki et al. (2021) showed that students using a gamified app in a forecasting course had better learning outcomes than students who only attended a lecture or read a book after the lecture. Legaki et al. (2020) also found a similar effect in a long-term experiment during a statistics course with 365 other students. Furthermore, El-Beheiry et al. (2017) concluded that gamifying a virtual reality surgical simulator through points and competition led to higher simulator use and improved performance compared with providing students with the simulator alone. This shows not only that the gamification effect may add to the experiential learning of a simulator (Kolb et al., 2001), but also that, if we consider novelty a feature, the novelty of gamification may add to the supposed novelty effect of a simulator. This is crucial to our research aim, as it means that the effect of gamification and its novelty may add to the effect of other new course features and their novelty. The importance of this generalization is underscored by the fact that simulations are often difficult to distinguish from gamification and other game-based learning (van Gaalen et al., 2020).

The idea that a novelty effect might play a role in learning behavior and outcomes is supported by evidence of this effect in multiple studies. Farzan et al. (2008) report that an initial spike in contributions to a gamified organizational network diminished, possibly because the system did not adapt to the users (i.e., it stopped being novel to them). Additionally, Koivisto and Hamari (2014) observed that the perceived enjoyment, playfulness, and usefulness of a gamified exercise application decreased over time, suggesting that a novelty effect was at play. Because this novelty effect on exercising was stronger for younger users, its role in educational gamification warrants examination. This is further supported by a long-term gamified quasi-experiment in which students with gamification outperformed the control group in Test 1 but did not differ from it in Tests 2 and 3 (Sanchez et al., 2020).

In contrast, van Roy and Zaman (2018) found no novelty effect in a course with long-term gamification. Instead, they found a curvilinear relationship (first negative, later positive) between time of use and autonomous and controlled motivation, as defined by self-determination theory (Deci and Ryan, 2015). This discrepancy in the occurrence of the novelty effect could be explained by the fact that their gamification introduced something new each week. This is consistent with the remarks of Raftopoulos (2020) and with Tsay et al. (2019), who stated that adding new interactions later, in the second year of a gamified two-term course, mitigated a drop in engagement after the novelty effect wore off.

However, one could ask whether regularly transforming the gamification is too costly compared with other methods that could be sustained more easily, and whether gamification should instead be used for short-term tasks, where even shallow gamification leads to positive results (Lieberoth, 2014). We therefore need to compare gamification not only with a control group, as Tsay et al. (2019) did, but also with other novel elements that may improve students' behavior and outcomes (such as quizzes or chatbots). The first semester of the COVID-19 pandemic was an ideal time for such experiments, as the sudden change to fully online teaching made it necessary to engage students in new ways.

1.2. Students’ engagement during the pandemic

The first studies on the pandemic report, unsurprisingly yet sadly, that this period was quite difficult for students. Although students usually had time to study, their well-being and health suffered, leading to, for instance, stress, anxiety, and depressive symptoms (Safa et al., 2021), deterioration of family and peer relations (Morris et al., 2021), and even post-traumatic stress disorder and suicidality (Czeisler et al., 2020). Due to these and other adverse effects, students' learning performance and engagement could suffer if left unaddressed, especially since the restrictions thwarted many common methods of teaching, learning, and socialization (Morris et al., 2021). The fact that the term "distance learning" explicitly places distance between students and their classes speaks for itself.

In pandemic-era distance learning, online tools and other technology-based methods are the key and most feasible approaches to teaching and studying. To narrow the distance, we need to identify methods that sustain students' engagement in courses. Previous studies have shown that methods such as online practice, online peer discussions, videos, and teleconferencing can maintain engagement and lead to good learning performance (Campillo-Ferrer and Miralles-Martínez, 2021). Consequently, we may ask whether gamification can maintain or even increase students' engagement, and which method is more cost-effective given possible novelty effects.

Although we could not have foreseen the COVID-19 pandemic, it was a blessing in disguise that we had decided to implement our gamification and practice tests (i.e., online practice) with simple feedback in the spring term in which the pandemic began and all universities in the Czech Republic closed. Accordingly, most courses became a combination of online lectures and self-study. This gave us a unique opportunity to examine, in a quasi-experiment, whether gamification helps students learn and remain engaged in a course compared with practice tests and with an unchanged course. Thus, we hypothesize the following:

H1: Students with gamification engage more in course materials than those without it both in the short-term and over time.

H2: Students perform better in practice tests with gamification than without it both in the short-term and over time.

H3: Students with gamification perform better in the course than those without gamification.

2. Materials and methods

2.1. Participants and study plan

The sample of our quasi-experiment included 278 Czech university students from three courses: two in business administration (BA; 120 and 65 students) and one in information and communication technologies (ICT; 93 students). We chose this sample because the course sizes allowed us to split the sample into two groups within a single year. Moreover, the same teachers taught both BA courses, and the courses had similar requirements, difficulty, and topics (psychology in HR). The courses differed only in the degree of study and were thus easily comparable. For one half of the students in the BA courses, we implemented only the practice tests; for the other half, we implemented the tests together with their gamification. In contrast, in the ICT course we redesigned the whole coursework, creating a gamified and a non-gamified version of the course. However, we built the gamification with the same system, game elements, and aesthetics as the BA gamification. In all courses, the gamified and non-gamified groups were distributed equally and randomly.

This study plan allowed us to compare the novelty effect of gamification with other novelty effects and with the original course design, which would not have been possible in the BA courses alone due to sample size. It also allowed us to examine the generalizability of gamification across different uses of one gamified system (i.e., adding something new with it vs. redesigning current coursework). Unfortunately, the pandemic weakened this comparability because it led to one unexpected difference between the BA and ICT courses and their gamification. Originally, all courses were supposed to include practice tests. However, the tests were canceled in the ICT course because they were not ready to be converted to online administration without the risk of flaws and cheating.

On the other hand, the shift to distance learning gave us the opportunity to tailor the gamification to the current situation. We decided to emphasize gamification features addressing distance learning problems: how to keep students focused during online seminars and lectures, how to help them be proactive in them, how to help them practice acquired knowledge between lectures, how to provide feedback without continuous direct contact with the lecturer, and how to help students communicate with one another.

2.2. Design

We prepared a quasi-experimental design to examine differences between the gamified and control conditions over 12 weeks. Examining these differences over time allowed us to observe how they changed and thus to draw inferences about novelty effects. First, however, we examined what the students would benefit from most.

In the BA courses, we first identified possible gaps in the course design by interviewing the lecturers, reviewing the syllabi, and examining yearly course outputs (grade points, students' feedback, etc.). Based on this, we concluded that students could benefit from opportunities to exercise their critical thinking on cases from a workbook. We also determined that students needed to solidify the knowledge gained after each lecture and seminar. Thus, we translated into Czech a suitable English workbook by Robbins and Judge (2017), which accompanies the textbook used in the class, and transferred it into online practice tests divided by lecture topic. Each test consisted of five random questions from a larger pool to keep the exercise brief and to motivate students to repeat it. After each test submission, students received simple feedback (the number of correct answers). We implemented these tests in the administrative system so that each became available only after its topic had been discussed with the students. For those participating in the gamification, we also linked these tests to the gamification system. We then consulted these systems with the course lecturers and discussed them in cognitive interviews with former students and IT experts. Based on these discussions, we finalized our instructions, practice tests, and gamification.
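The test mechanics described above (five random questions drawn from a larger topic pool, availability only after the topic has been discussed, and feedback limited to the number of correct answers) can be sketched as follows. This is a minimal illustration under stated assumptions, not the administrative system the courses actually used; the names `Question`, `draw_test`, and `score_feedback`, the week-based release rule, and the pool contents are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    """A multiple-choice item in a topic pool (hypothetical structure)."""
    qid: str
    topic_week: int   # semester week in which the topic is discussed
    correct: str      # key of the correct option


def draw_test(pool, topic_week, current_week, k=5, rng=None):
    """Draw k random questions on one topic, only once the topic was discussed."""
    if current_week < topic_week:
        raise ValueError("test not yet available: topic has not been discussed")
    topic_items = [q for q in pool if q.topic_week == topic_week]
    rng = rng or random.Random()
    # Sampling without replacement keeps each test short; repeats draw new subsets.
    return rng.sample(topic_items, k)


def score_feedback(test, answers):
    """Simple feedback: only the number of correct answers is returned."""
    return sum(1 for q in test if answers.get(q.qid) == q.correct)
```

For example, with a hypothetical pool of 20 week-3 questions, `draw_test(pool, topic_week=3, current_week=4)` returns five distinct items, while calling it in week 2 raises an error because the topic has not yet been covered.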

We based our gamification on the achievement, socialization, and immersion framework by Xi and Hamari (2019), focusing on the first two parts of the framework for several reasons. First, achievement-based gamification has repeatedly been found reliable and valid in academic environments (Koivisto and Hamari, 2019), especially when aiming for long-term effects on behavioral outcomes and performance (Kuo and Chuang, 2016). Second, such gamification can easily be grounded in self-determination theory: it promotes students' competence through challenges and feedback, their autonomy by making the tests voluntary and offering enough challenges to choose from, and their relatedness to others by letting them share their gamification successes, compare themselves, and discuss the test questions and answers (Treiblmaier et al., 2018). Such gamification should motivate intrinsically rather than extrinsically (Tsay et al., 2019). In fact, there is longstanding evidence for the positive effect of satisfying basic psychological needs outside of gamification. When teachers give feedback supporting competence and support autonomy, students perform better (Guay et al., 2008). In work settings, supportive leadership with an individualized approach (i.e., transformational leadership) and skill-based compensation also lead to better performance and employee health (Gagné and Forest, 2008). Similar outcomes have been found in many other contexts (Deci and Ryan, 2008). Third, such gamification can also be aligned with goal-setting theory, as it sets clear goals through challenges and relative-position leaderboards, that is, leaderboards showing only peers with similar scores (Landers et al., 2017; Koivisto and Hamari, 2019). Finally, achievement- and socialization-based gamification made it easy to add novel challenges and practice test types to sustain the positive outcomes of a novelty effect, as proposed in previous research (Tsay et al., 2019; Raftopoulos, 2020).

We designed our challenges and achievements to motivate students to engage in several activities. First, because we wanted students to try out and explore "the game," we created badges for logging in and opening the first test, among others. Second, we created test-completion badges of varying difficulty (e.g., one test with or without a full score, several tests with a certain number of points) to highlight the importance of attempting tests on various topics and achieving better scores. Third, we prepared badges for repeating the same tests to solidify knowledge. Fourth, we devised time-constraint badges to give students feedback on their ability to finish the final exam in time. Fifth, to increase relatedness, we created badges for answering other students' questions and reporting system bugs. Such a design should correspond to the needs identified in our gap analysis and to gamification design recommendations (Furdu et al., 2017; Mekler et al., 2017).
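To make the badge categories more concrete, the sketch below expresses several of them as predicates over one student's practice-test attempts. It is an illustration only: the thresholds (three distinct topics for a "several tests" badge, two attempts for a repetition badge, a 10-minute time limit), the badge names, and the `Attempt` record are hypothetical placeholders, not the rules used in the study. Socialization badges (answering other students' questions, reporting bugs) cannot be derived from a test log and are omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attempt:
    test_id: str      # which practice test (topic) was attempted
    score: int        # number of correct answers
    max_score: int    # number of questions in the test
    minutes: float    # time taken to submit the test


def earned_badges(attempts, several=3, repeats=2, time_limit=10.0):
    """Return the set of badges earned under hypothetical rules."""
    badges = set()
    if attempts:
        badges.add("first_test")          # exploration: opened and submitted a test
    if any(a.score == a.max_score for a in attempts):
        badges.add("full_score")          # one test with a full score
    if len({a.test_id for a in attempts}) >= several:
        badges.add("several_topics")      # breadth across topics
    counts = {}
    for a in attempts:
        counts[a.test_id] = counts.get(a.test_id, 0) + 1
    if any(n >= repeats for n in counts.values()):
        badges.add("repeat_practice")     # repeating a test to solidify knowledge
    if any(a.minutes <= time_limit for a in attempts):
        badges.add("beat_the_clock")      # time-constraint feedback
    return badges
```

For a hypothetical log with two attempts at one topic (one with a full score in 8 minutes) and one attempt at another topic, the student earns `first_test`, `full_score`, `repeat_practice`, and `beat_the_clock`, but not `several_topics`. Expressing each badge as an independent predicate makes it easy to add new badges during the semester, which matches the idea of regularly introducing novel challenges.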

In the ICT course, we examined design gaps by forming a focus group with the lecturers, reviewing the syllabus, and examining course outputs. We discovered that students had not been very active in the course (low attendance at non-mandatory seminars, little activity in seminar discussions). Furthermore, their continuous coursework (homework, presentations, and graphics) had not reached the expected quality. Thus, we focused on students' buy-in, course activity (attendance and activity in and across seminars), and selected coursework aspects (when students begin working on a task, how they work, and what outcomes they present). As in the BA courses, we also used badges for logging in and opening the forums for the first time. Moreover, we created badges of varying difficulty, time-constrained badges, and social interaction badges.

We also present the game elements, examples of their rules, and the objectives we strived for with these elements and rules in Appendix 2. This allows us to highlight the comparability and differences of the course objectives and the gamification design across them. Although the specific rules sometimes differ, the objectives and challenge types are very similar. Based on this, we assume the courses and their gamification are at least partially comparable.

2.3. Procedure

We introduced our research to students at the beginning of each course. In a randomly chosen half of the seminar groups, we presented our course addition with gamification, and in the other half without it. In this presentation, we described what the system looked like, how it functioned, the general purpose of the research, and the requirements for students who decided to participate. We also assured them of data anonymization and confidentiality. We then distributed informed consent forms and collected initial data. We collected data on students' performance and engagement in the tests, course materials, and course performance continuously throughout the 12-week semester, without interruptions or pauses. The semester schedule and the research plan can be found in Appendix 1.

2.4. Materials

2.4.1. Manipulation

We gamified half of the seminar groups in the BA courses, while the others received only practice tests. We gamified half of the seminar groups in the ICT course while leaving the other half unchanged. Thus, we have two types of control groups: practice tests only (BA courses) and the unchanged course design (ICT course).

2.4.2. Behavioral engagement

Following the recommendation of Tsay et al. (2019), we measured behavioral engagement in multiple ways connected to what we were trying to achieve with our design. We expected students to receive feedback from the practice tests (and gamification) and thus to consult the assigned literature more often and sooner. This aim should have helped students learn in the disorganized times of the first pandemic wave in spring 2020, when it was difficult for them to adjust to online education, stay motivated, and access learning materials (Kohli et al., 2021). Thus, we recorded how often students viewed each of the course learning materials after the introduction of our gamification (i.e., the number of views of each course material in the information system). We examined both the total number of views and the views per semester week (and lecture topic). We also examined how soon students opened their coursework. This variable was calculated as the number of days between the release of a weekly study material and the date on which the student first opened it. We consider this a valid measure of the success of our tailored design on the grounds of self-determination theory: if we succeeded in designing the gamification in accordance with this theory, students should have been more autonomously motivated. In previous research, this meant that participants stayed on task longer in a free-choice period (Ryan and Rigby, 2019) or reported more curiosity about the gamified activity (Treiblmaier and Putz, 2020). Thus, observing whether students used the materials more often and went through various topics allowed us to gather further evidence regarding our hypotheses.
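The two course-engagement measures (material opening time in days and views per semester week) can be computed from a simple access log, as in the minimal sketch below. It assumes a log of `(material_id, view_date)` pairs, a known release date per material, and weeks counted from a semester start date; these names, the log layout, the example dates, and the choice to return `None` for never-opened materials are illustrative assumptions, not the information system's actual export format.

```python
from collections import Counter
from datetime import date


def opening_time_days(release, access_log, material_id):
    """Days between a material's release and the student's first view of it.

    Returns None if the student never opened the material after its release.
    """
    views = [d for mid, d in access_log if mid == material_id and d >= release]
    if not views:
        return None
    return (min(views) - release).days


def views_per_week(access_log, semester_start):
    """Count all material views per semester week (week 1 starts on semester_start)."""
    return Counter((d - semester_start).days // 7 + 1 for _, d in access_log)
```

With a hypothetical log in which "lecture3" was released on 6 March 2020 and first viewed on 9 March, `opening_time_days` returns 3. How never-opened materials are treated (excluded, or assigned a ceiling value) noticeably changes the distribution of this measure, so it is worth fixing that rule before analysis.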

We also examined behavioral engagement with the practice tests themselves: the proportion of weekly course topics that students attempted to practice in the tests and the number of tests students completed regardless of topic. The validity of this measure rests on the same principle as that of the previous one.

2.4.3. Performance

We were interested in several types of performance. The first was course performance, i.e., the number of grade points (GP) gained in the course. These points were obtained similarly across all courses (a project assignment and a final exam). Although GP can be biased by subjective evaluation, it is the most common objective outcome measure in education (Canfield et al., 2015), including gamification research (Domínguez et al., 2013). Furthermore, GP evaluation is highly (and similarly) standardized at both the university and course levels. Moreover, the course instructors were blinded, as they did not know which students were in the experimental condition. Therefore, their evaluation could not be biased by an effort to help the experimenter or by attentional bias toward such students. Based on all of this, we assume GP is a valid measure of students' learning outcomes.

The second performance measure was practice test performance, measured as the total points earned in practice tests by answering multiple-choice questions correctly. Although multiple-choice questions are one of the easier testing formats, since students do not have to generate the answer themselves, they are a valid measure of performance and a valid tool for knowledge practice (Considine et al., 2005). However, because we were also interested in the breadth and precision of students' knowledge, we examined not only total points (whether students answered correctly more often across all the various questions) but also points per practice test (whether students answered more questions correctly within one test). Note that this second performance measure applies only to the BA courses, as there were no practice tests in the ICT course.
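The distinction between total points (breadth) and points per test (precision) is easy to see with two hypothetical students: one who takes many tests with modest scores and one who takes few tests with full scores. The sketch below assumes a plain list of per-test scores for one student; the function name is illustrative.

```python
def practice_performance(scores):
    """Return (total points, mean points per test) for one student's test scores."""
    total = sum(scores)
    per_test = total / len(scores) if scores else 0.0
    return total, per_test


many_modest = [3, 3, 3, 3, 3, 3]   # six tests, 3 of 5 correct each
few_perfect = [5, 5]               # two tests, full score each
print(practice_performance(many_modest))  # (18, 3.0)
print(practice_performance(few_perfect))  # (10, 5.0)
```

The first student leads on total points while the second leads on points per test, so a group difference in one measure need not appear in the other.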

3. Results

3.1. Descriptive statistics

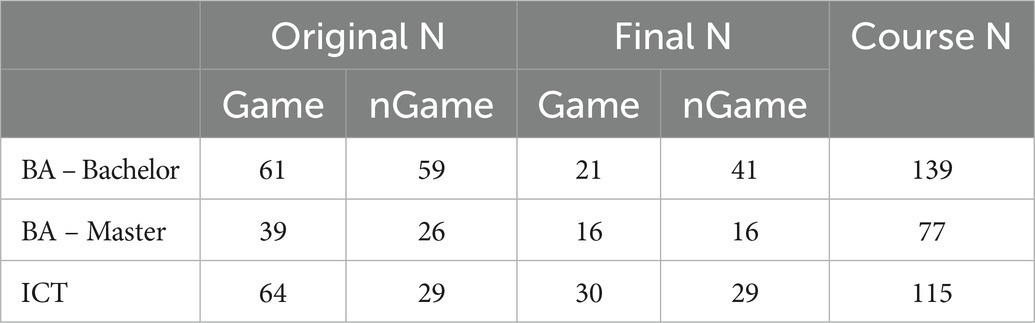

We started with 284 Czech university students; 10 participants dropped out, and 121 were excluded because they did not engage in the practice tests or the gamification system. We attribute this large sample loss (a limitation of our study) to the pandemic, as for some students doing anything beyond the most necessary work could have been too much, especially in the chaotic times of the first wave. Thus, the sample available for data analysis consisted of 274 students, but meaningful data were available for only 153 students, who were on average 21.69 years old (SD = 1.57). The majority were men (94, 61%). A total of 62 students came from the Bachelor BA course, 32 from the Master BA course, and 59 from the ICT course (see Table 1 for further stratification).

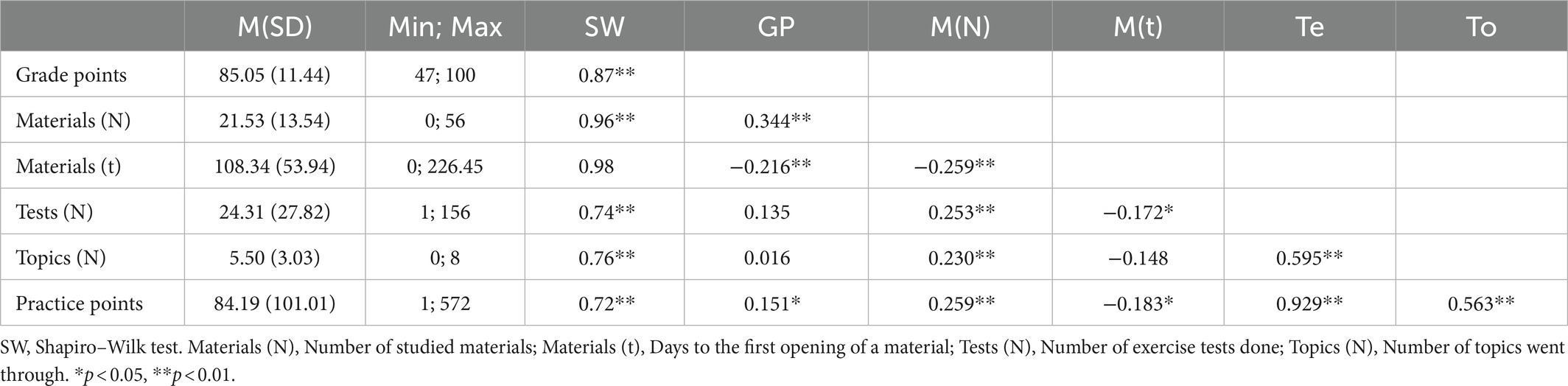

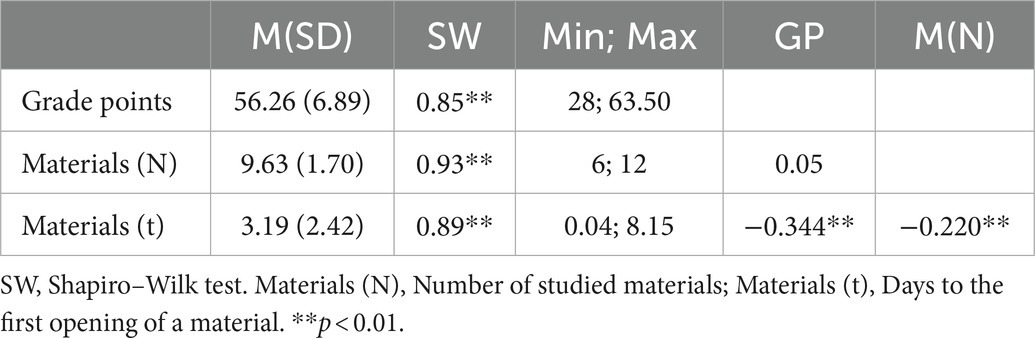

All variables except material opening time (one of the behavioral engagement measures) were non-normally distributed; thus, we used non-parametric tests. Specifically, points were negatively skewed due to low course difficulty, and the other behavioral engagement measures (for both the course and the practice tests) were positively skewed. We present the main descriptive statistics and correlations in Tables 2, 3.
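The direction of skew described above can be checked with the adjusted Fisher–Pearson sample skewness: scores bunched near a ceiling give a negative value, and counts with a long tail of heavy users give a positive value. This sketch only illustrates skew direction; it is not a normality test, and the section does not state which normality check was used. The example values are hypothetical.

```python
import math


def sample_skewness(xs):
    """Adjusted Fisher-Pearson sample skewness (G1); negative means a long left tail."""
    n = len(xs)
    if n < 3:
        raise ValueError("need at least three observations")
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n   # second central moment
    m3 = sum((x - mean) ** 3 for x in xs) / n   # third central moment
    g1 = m3 / m2 ** 1.5
    return math.sqrt(n * (n - 1)) / (n - 2) * g1   # small-sample adjustment


points_near_ceiling = [50, 55, 58, 59, 60, 60, 61]   # hypothetical grade points
views_long_tail = [0, 0, 1, 1, 2, 9]                 # hypothetical view counts
```

Here `sample_skewness(points_near_ceiling)` is negative and `sample_skewness(views_long_tail)` is positive, mirroring the pattern reported for points and engagement counts.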

As expected, we found a positive relationship between grade points and the number of course materials studied, supporting the idea that behavioral engagement in a course may lead to better outcomes. Similarly, grade points were higher when material opening time was shorter. Further, the weak correlation between the two behavioral engagement variables supports the statement of Tsay et al. (2019) that such engagement should be assessed from multiple points of view when gamifying. Interestingly, although the practice test measures are related to most other variables, only the points gained in them are related to grade points. This result may be explained by our large sample loss or by the low course difficulty.
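This section does not state which correlation coefficient Tables 2, 3 report. Given the non-normal distributions and the many tied values in engagement counts, a rank-based coefficient such as Spearman's rho would be a natural choice; the sketch below assumes that choice and computes rho as the Pearson correlation of average ranks, so ties are handled correctly.

```python
def average_ranks(xs):
    """1-based ranks in which tied values share the average of their positions."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    ranks = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1          # average of 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(xs, ys):
    """Spearman correlation: Pearson correlation computed on average ranks."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / (vx * vy) ** 0.5
```

For instance, `spearman_rho(materials_opened, grade_points)` on hypothetical per-student lists would give a positive value if students who opened more materials tended to earn more grade points, regardless of the exact spacing of the values.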

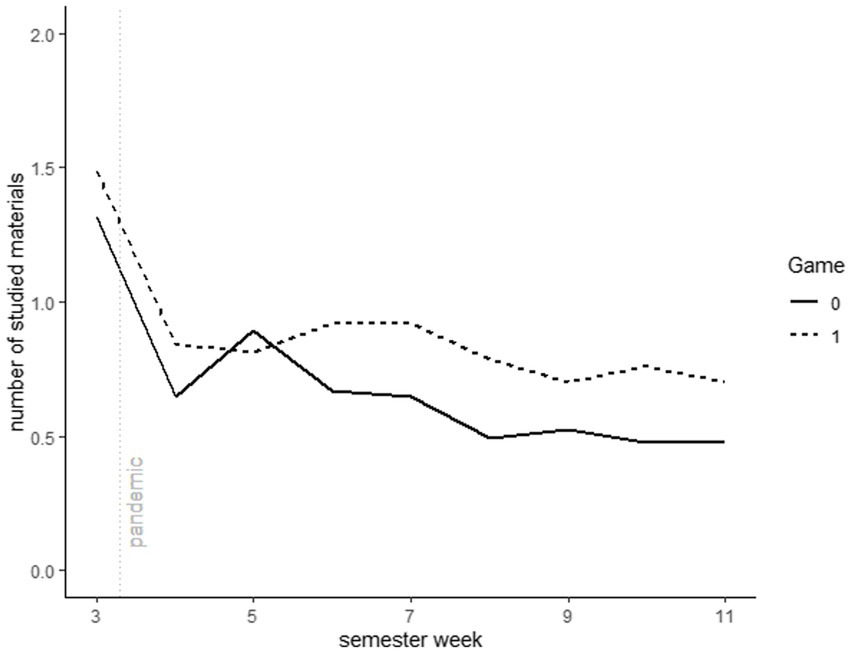

3.2. Hypotheses testing – BA courses

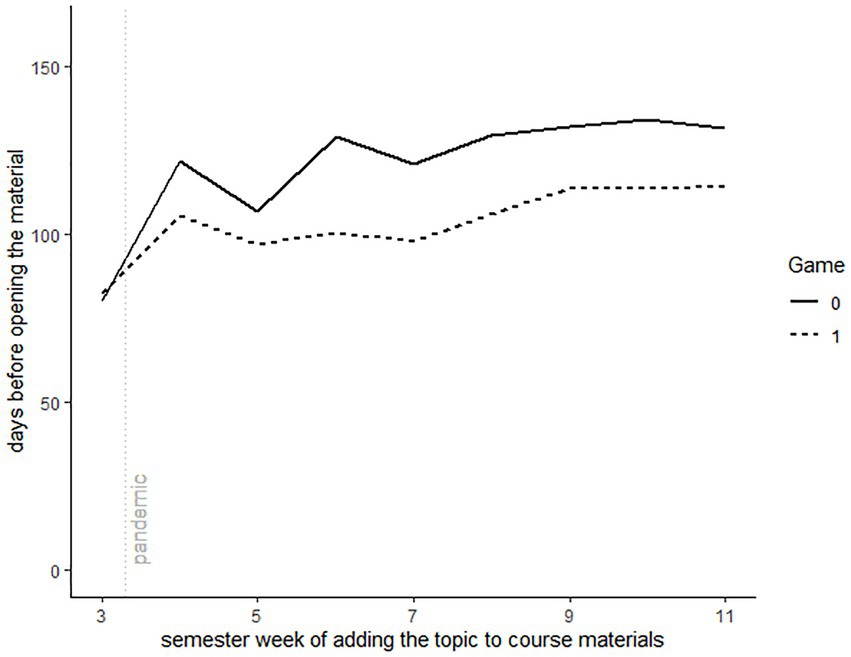

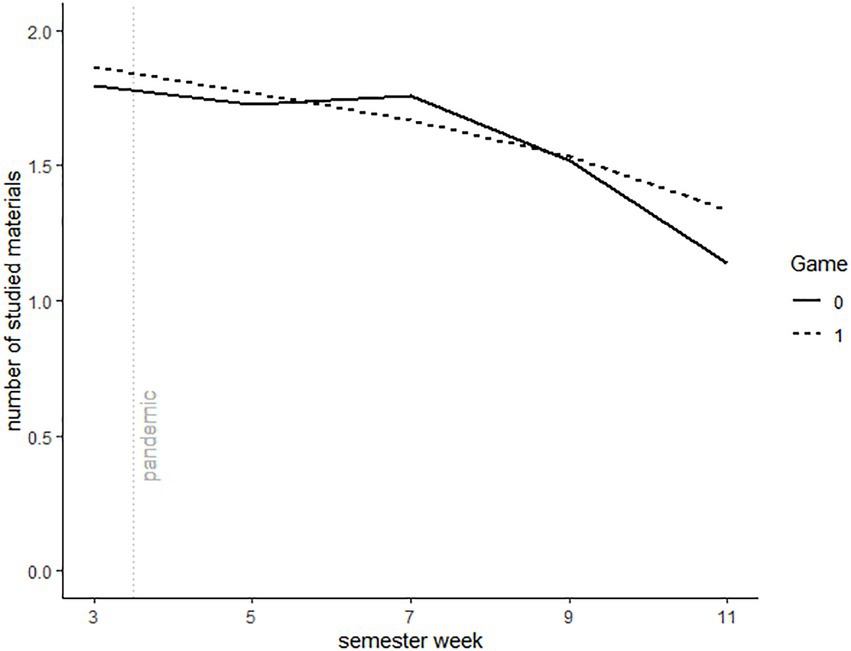

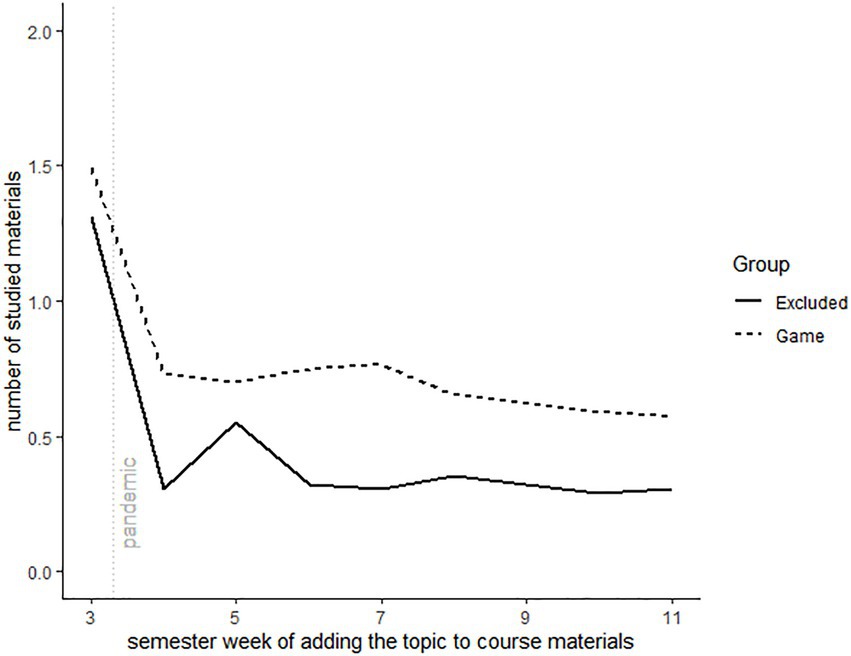

We first performed two Mann–Whitney U tests to test the hypothesis (H1) that behavioral engagement in the course differs across conditions. As we found no significant difference in either the number of course materials the students went through [U(NGame = 37, NnGame = 57) = 1273.5, z = 1.7, p = 0.09] or the material opening time [U(NGame = 37, NnGame = 57) = 864.5, z = −1.47, p = 0.14], H1 was not supported at the whole-course level. However, as we were interested in the novelty effect, we also examined whether gamification leads to decreasing engagement over time. Although a repeated-measures mixed model would be best suited for this, we first inspected the growth curves. The graphs for both the number of materials (Graph 1) and opening time (Graph 2) show that students in the gamified condition fare slightly better, as they studied more materials and opened them sooner. However, these differences are too small to justify further inferential analysis. At the same time, the drops in engagement in the gamified condition roughly mirror those in the control condition. Thus, we could not support the first hypothesis that students involved in gamification would engage more in the course.
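The Mann–Whitney U statistics and z values reported here rest on the standard large-sample normal approximation. The sketch below shows that computation: U for the first sample from average ranks, a tie-corrected variance, and a two-sided p from the standard normal distribution, without continuity correction. Whether the authors' software applied a tie or continuity correction is not stated, and packages differ in which group's U they report, so U and the sign of z depend on group order. The helper `z_from_reported_u` (ties ignored) is an illustrative addition for checking reported values.

```python
from statistics import NormalDist


def _average_ranks(values):
    """1-based ranks with ties sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U for sample x, z, two-sided p) via a tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = _average_ranks(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())   # sum over tie groups
    mu = n1 * n2 / 2
    sigma = (n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))) ** 0.5
    z = (u - mu) / sigma
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return u, z, p


def z_from_reported_u(u, n1, n2):
    """Normal-approximation z for a reported U, ignoring ties."""
    mu = n1 * n2 / 2
    sigma = (n1 * n2 * (n1 + n2 + 1) / 12) ** 0.5
    return (u - mu) / sigma
```

For example, `z_from_reported_u(1273.5, 37, 57)` returns about 1.69, with a two-sided p of about 0.09, in line with the values reported above for the number of course materials.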

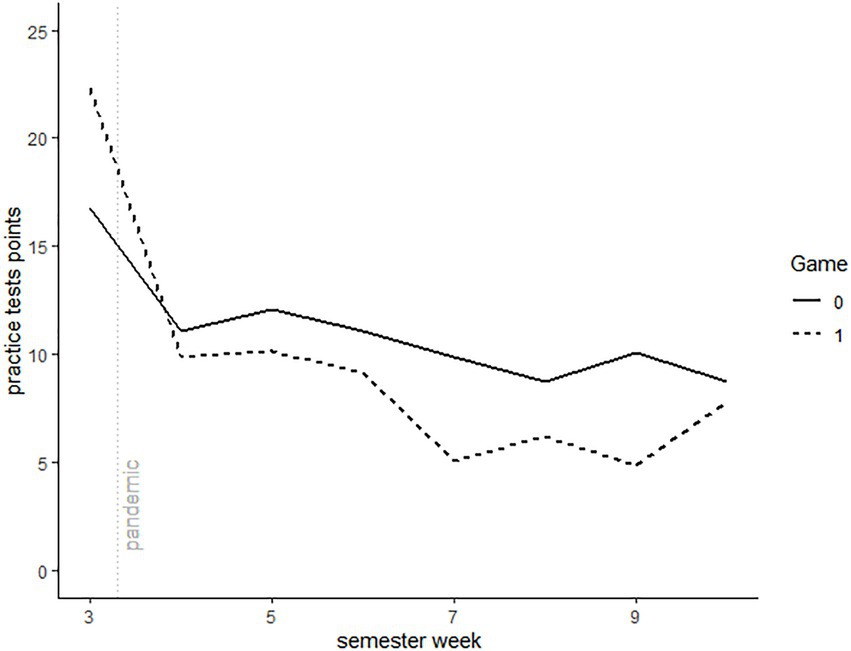

To test the hypothesis that students with gamification perform better in the practice tests (H2), we again performed Mann–Whitney U tests on the total sum of points [U(NGame = 37, NnGame = 57) = 1114.5, z = 0.62, p = 0.53] and on the sum of points per test [U(NGame = 37, NnGame = 57) = 1027.5, z = −0.07, p = 0.95], neither of which was significant. Regarding the growth curves (Graphs 3, 4), we found substantial differences in total points at the start of the semester, which diminished to an insubstantial difference. Thus, we did not proceed with a repeated-measures mixed model. Nevertheless, a positive gamification effect is initially noticeable in total points: students with gamification initially attempted more tests and gained more points overall, but were not significantly more successful per test than those with practice tests only. Thus, despite the reduction of the positive effect, or even its reversal, over time, we found weak partial support for H2, namely that practice test performance would be higher with gamification during buy-in (i.e., in the short term).
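Reading a growth curve for a diminishing novelty effect amounts to tracking the per-week gap between conditions. The sketch below assumes weekly medians and a hypothetical record layout of `(week, condition, value)` with conditions labeled "game" and "control"; whether Graphs 3, 4 plot medians, means, or cumulative totals is not stated in this section.

```python
from statistics import median


def weekly_gap(records):
    """Median weekly value per condition and the gamified-minus-control gap.

    records: iterable of (week, condition, value), condition in {"game", "control"}.
    Returns {week: (median_game, median_control, gap)} for weeks with both groups.
    """
    by_cell = {}
    for week, cond, value in records:
        by_cell.setdefault((week, cond), []).append(value)
    out = {}
    for week in sorted({w for w, _ in by_cell}):
        g = by_cell.get((week, "game"))
        c = by_cell.get((week, "control"))
        if g and c:   # skip weeks where one condition has no data
            mg, mc = median(g), median(c)
            out[week] = (mg, mc, mg - mc)
    return out
```

A gap that is large in the first weeks and shrinks toward zero later, as described for total points, is the pattern one would expect from a novelty effect that wears off.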

Finally, we also examined the gamification effect on course performance and found no significant result [U(NGame = 37, NnGame = 57) = 900.5, z = −1.07, p = 0.29]. Thus, we could not support the hypothesis (H3) that students would perform better in the course with the gamified design.

3.3. Hypotheses testing – ICT course

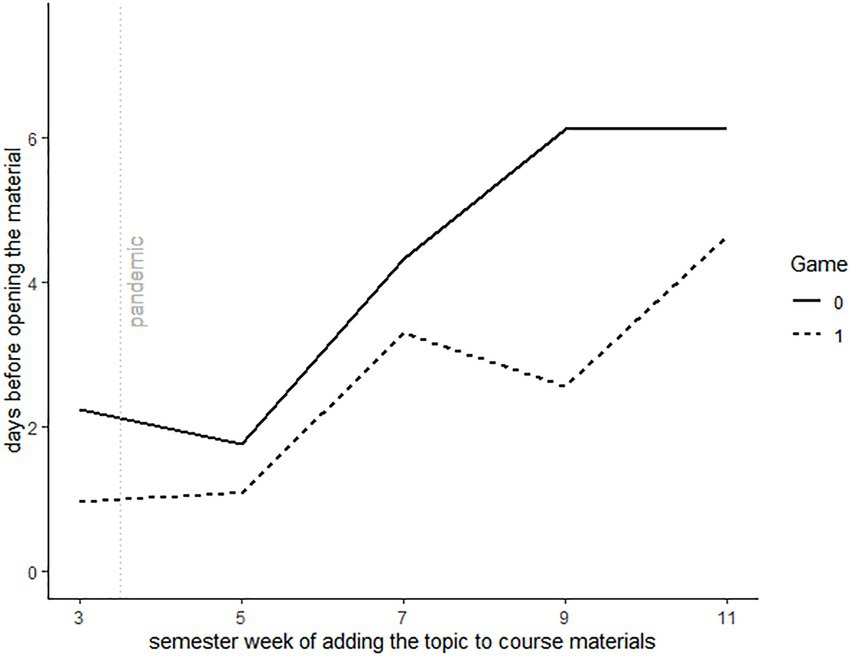

To test the hypothesis (H1) that behavioral engagement is higher in the gamified course, we first performed two Mann–Whitney U tests. Although we found no significant difference in the number of course materials the students went through [U(NGame = 29, NnGame = 28) = 444.5, z = 0.625, p = 0.53], there was a medium effect in the expected direction on material opening time, with gamified students opening materials sooner (MeGame = 1.71, MenGame = 4.18 days) [U(NGame = 29, NnGame = 28) = 265, z = −2.25, p < 0.05, d = 0.63]. Thus, we gained partial support for H1 at the whole-course level. We further examined whether gamification leads to decreasing engagement over time by inspecting the growth curves for the number of materials (Graph 5) and opening time (Graph 6). Students in the gamified condition fare better in one respect, as their material opening time is lower. However, these differences are too small to justify further inferential analysis. At the same time, the drops in engagement in the gamified condition roughly mirror those in the control condition. Thus, we found only partial support for the first hypothesis that students involved in gamification would engage more in the course: the difference in material opening time persists over time, but it does not widen.
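The section does not state how the d values were derived from the Mann–Whitney tests. One common approach, assumed here, first converts z to r = |z| / √N and then converts r to Cohen's d with d = 2r / √(1 − r²). The function name is illustrative.

```python
import math


def d_from_z(z, n_total):
    """Convert a Mann-Whitney z to r = |z| / sqrt(N), then to Cohen's d."""
    r = abs(z) / math.sqrt(n_total)
    if r >= 1:
        raise ValueError("r must be below 1 for the r-to-d conversion")
    return 2 * r / math.sqrt(1 - r ** 2)
```

For the material opening time test above (z = −2.25, N = 29 + 28 = 57), this conversion gives r ≈ 0.30 and d ≈ 0.62, close to the reported d = 0.63.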

Regarding the hypothesis that students with gamification will perform better in the course (H3), we found a moderate effect via a Mann–Whitney U test [U(NGame = 30, NnGame = 29) = 569, z = 2.03, p < 0.05, d = 0.73] with a higher score in the gamified condition (MeGame = 59.75, MenGame = 57). Our results for a course redesigned with gamification support this hypothesis.

4. Discussion

This study aimed to examine how behavioral engagement and knowledge gain in a university course differ during the pandemic if we redesign the whole course with gamification or if we extend the course with something novel, either gamified or not (i.e., practice tests), that is intended to help students with distance learning difficulties. Specifically, we hypothesized that both engagement (H1) and learning performance (H2, H3) would be higher with gamification focused on activity, feedback, and practice. Furthermore, we explored whether the novelty effect of such gamification wears off compared with the control condition. This allowed us to assess both the immediate and long-term effects of our gamified course redesign or extension when using an external gamification system (i.e., a system that is not part of what students usually use in the course or of the university administrative system) focused on achievements and socialization.

The study yielded several notable findings. First, we found partial support for the positive effect of our achievement- and socialization-based gamification delivered via an external system. Namely, in the course redesigned with our gamification focused on feedback, activity, socialization, and performance, students took moderately less time before opening the course materials for the first time. This initial difference persisted across the semester, although it did not widen over time. These results correspond with both review studies on the positive impact of gamification (Koivisto and Hamari, 2019) and previous studies on gamification and behavioral engagement (e.g., Çakıroğlu et al., 2017; Huang et al., 2018; Zainuddin et al., 2020b). However, the results differ from previous novelty effect studies: we did not find the U-shaped positive difference reported by Rodrigues et al. (2022). One possible downside of our study is therefore that, by consistently adding something new, we lost the possibility of a familiarization effect (a positive difference at the end of the study, following a period of no difference, caused by users knowing the game design better). On the other hand, the upside of our regular additions is that the significant engagement difference we found persisted over time, and where there was no difference, no negative difference emerged, unlike in previous studies (Koivisto and Hamari, 2014; Sanchez et al., 2020). Our results are also in line with Tsay et al. (2019), who overcame the novelty effect and sustained engagement by iterating their gamification based on student feedback and favored activities. Therefore, we recommend our design for distance or hybrid learning, and possibly even e-learning, where problems arise from students’ untimely or low commitment to course activities or from poor time management.
However, the success of such a design also depends heavily on a well-done gap analysis and on how well the design fits the students and lecturers, their needs, and the environment.

At the same time, students in the gamified course did not open more materials than those in the control group. Similarly, extending the course with gamified practice tests showed no difference in either measure of engagement compared with extending it with non-gamified practice tests. This suggests that when using an external gamification system for distance learning, redesigning the whole course may be better than expanding it with something novel, whether gamified or not. This interpretation is further backed by the mixed evidence we found for H3 (that students with gamification would perform better in the final exam), as only the ICT course data supported this hypothesis.

However, there are other explanations for this inconsistency. For instance, the BA courses may have differed from the ICT course. While the BA courses focused on both theory and practice, the ICT course was predominantly practical. Thus, working continuously may have seemed a more sensible goal in the ICT course. Furthermore, we may have designed gamification that was better suited to the ICT course students. Although the aesthetics and the gamification system were identical, and although we strove for similar types of challenges that would also suit each environment, the resulting design may have fit the ICT course better. Such an interpretation would also be in line with the work of Legaki et al. (2021), who found their gamification to be more suitable for engineering students than for BA students. Another reason may be course difficulty. If the BA courses were easy to pass, the gaps in those courses may have been too small for us to detect a meaningful difference. This is supported by the success rate in each course over the last 5 years and by the change in the success rate in 2020 (see Appendix 3). Moreover, the ICT success rate in 2020 was substantially higher than in 2015–2019, which provides further support for the effectiveness of our gamified redesign. However, this improvement may have been caused, wholly or partly, by the pandemic, as teachers were more lenient during the first wave, at least in terms of deadlines (e.g., Armstrong-Mensah et al., 2020; Gillis and Krull, 2020).

The assumption about BA course difficulty is also supported by the fact that the exam performance of students who used the practice tests did not differ from that of students we excluded because they did not take part in the extracurricular activities. In other words, students who did not take up the offer of extra course activities performed similarly to those who did, even though their behavioral engagement differed (see Appendix 3). It is also possible that the students prepared similarly well for the exam, but that their other outcomes (e.g., enjoyment, long-term retention) differed. At the end of the semester, we asked the students how engaged they felt in the practice tests, and participants with gamification felt more engaged in them than those without it (see Appendix 3). However, this difference in psychological engagement should be taken with a grain of salt, as it is based on only half of the sample. Moreover, the difference in behavioral engagement may be confounded. As we do not know why these participants chose not to use the tests (and gamification), whatever motivated this decision may also account for the difference in behavioral engagement. Furthermore, the initial gap analysis found more gaps in students’ proactivity in the ICT course than in the BA courses. Therefore, our gamification may be more suitable where activity needs more support. In sum, course or student differences may reduce comparability across courses. Future research should thus use a non-manipulated control group to properly assess whether practice tests and gamified designs work equally well, or no better than leaving the course unchanged, during distance learning. Similarly, researchers should examine other outcomes (e.g., enjoyment, long-term retention) in easy courses. Finally, we recommend examining students’ attitudes toward similar gamification designs across various fields.

Our second major finding is the partial support for H2. Students with gamification gained more points in the practice tests at the beginning of the semester, but not overall or per test. Moreover, the initial difference diminished over time and eventually even reversed. There are multiple possible reasons for this development. For instance, we may have been unsuccessful in sustaining the novelty effect of gamification on test performance. Thus, while students in the gamified condition started off better, their willingness to try new tests, and consequently their higher test performance, might have decreased once the novelty wore off. This is consistent with the results of Hanus and Fox (2015), who, like us, created gamification with achievements and leaderboards and found that intrinsic motivation dropped after a while.

However, this interpretation contradicts the positive trend in the behavioral engagement measures. As the practice test performance curves changed considerably after the outbreak of COVID-19, the pandemic may have caused this discrepancy. According to Nieto-Escamez and Roldán-Tapia (2021), students in gamified experiments often stopped taking part in the gamification during the pandemic to conserve energy, owing to inadequate physical and psychological conditions. In our case, students might likewise have stopped using the gamified system while still using the practice tests. If so, novel additions intended to sustain the novelty effect of the gamification would have had no impact. Such conditions would also explain our sample loss. This again suggests that in times of crisis, such as the pandemic, achievement- and socialization-based gamified designs in education (and possibly in other fields) should focus more on sustaining students’ activity in the current curriculum than on new extracurricular activities.

Finally, students with gamification might have focused on a different goal than those without gamification. Unlike students in the control condition, they repeated the same tests more often to obtain better results (see Appendix 3). Our design might therefore have prompted them to correct and learn from their mistakes. If so, students in the gamified condition may have opened fewer course materials because they learned through the practice tests and their repetition, and felt less need to revisit the materials than those in the control condition. This again suggests that researchers and practitioners should carefully choose the intended outcomes of gamified settings in each context. We also need to consider the circumstances of future users. This study and previous gamification studies conducted during the pandemic show that under high stress and other physical and mental difficulties, users may not be able to use some of the gamified designs at hand (e.g., Lelli et al., 2020; Liénardy and Donnet, 2020), as gamification tends to impose cognitive load on them (Suh, 2015). Given the differences between the BA and ICT courses, we extrapolate that this load might become heavier if new extracurricular activities are added to the course together with the gamified design. Therefore, redesigning the course without such activities may be a better solution in distance learning. This possibility should be examined further in future studies.

Altogether, we found some support for the assumption that our gamified design may be a suitable catalyst for change, even during a pandemic. Although our gamified design did not seem to work better than a more traditional and more easily developed teaching method (practice tests), it led to better outcomes than the most traditional methods of lectures, self-study, and homework without a gamified design. In the gamified course, students started working on their coursework earlier during the pandemic, consistent with the results of Pakinee and Puritat (2021). However, unlike these authors, whose gamification was more competitive than ours and whose students had no lectures with teachers, we also found a moderate effect on students’ final exam performance. This could mean, as those authors themselves suggest, that competitive gamification elements need to be chosen carefully with respect to the users and their personality. It may also suggest that when redesigning a course with gamification for distance learning, we should still use the traditional teaching methods available to us and possibly even intertwine them with the gamified design. This proposition should be examined further, as distance learning will likely remain necessary in the future because of its benefits (Goudeau et al., 2021), the ongoing energy crisis, or other crises.

4.1. Limitations and future work

Our study had several limitations. The main limitation is our sample loss, probably caused by the pandemic, as in other studies (Nieto-Escamez and Roldán-Tapia, 2021). The differences we found, or failed to find, may have been caused by the specific pattern of sample loss, as we lost more participants in the gamified BA courses. Further, although we could not foresee the pandemic, we might have shed some light on its role and on other determinants of sample loss through qualitative interviews with students who dropped out. While this was not feasible due to the pandemic, it again shows that gamification data are best collected in mixed-methods designs. This is especially so because the first pandemic wave was chaotic, even though our university started using Zoom, Teams, and e-learning very early on. Initially, many students and teachers were not used to relying on technology so heavily or in such ways. Students who had to get more used to being online might have benefited more from the gamification and practice tests than others, but, unlike those more used to online communication and work, they might also have lacked the capacity to take advantage of the opportunity.

The second limitation is the lack of a true control group in the BA courses, combined with the differences between the BA courses and the ICT course. Even though we strove for a similar design and system, the results may have been caused by course differences. Consequently, it is possible that gamified and non-gamified practice tests work similarly well, and better than no extension, in the BA courses, while some extensions to the ICT course work as well as redesigning it with gamification. Thus, to test our results further, we recommend using a very large course that would allow multiple groups, each with a reasonable sample size. However, finding such a course that could also be sensibly gamified may prove difficult.

Our results are also limited by possible individual differences in our sample. Previous research (e.g., Amo et al., 2020; Pakinee and Puritat, 2021) shows that extraversion and trait competitiveness may play a role in socialization-based gamification. Although our gamified designs were only partially socialization-based and competition was only a marginal part of them, individual differences may have played a role that we could not address. Therefore, future research on the gamification novelty effect should consider these possible differences when designing, providing, and analyzing gamification.

Lastly, our gamified designs were not originally intended to address the problems stemming from the pandemic and distance learning. Thus, our gamified BA design could have been ill-suited to such a situation, leading to no significant results in the BA courses. However, if so, this suggests that redesigning a whole course so that students attend course meetings, are active in them, and start working on their coursework sooner and more efficiently may be a viable solution in distance learning. Researchers should therefore focus on such goals in future gamification of distance learning, in order to further support these findings or to find out which goals are most relevant to making distance learning more accessible and efficient.

5. Conclusion

The aim of this study was to examine the effect of gamification and its novelty effect in comparison with another novelty in a course, as well as with a non-manipulated condition, during distance learning. We found that practice tests and gamified practice tests led to similar results in engagement and practice test performance over time. Although these results suggest that gamification is not always the better (nor the worse) solution in terms of objective outcomes, we conclude that a similar gamified design of practice tests may still be the better choice given the differences in subjective outcomes (e.g., enjoyment) found in previous studies (e.g., Lieberoth, 2014; Treiblmaier and Putz, 2020). Furthermore, our engagement and practice test performance results contrast with those of Sanchez et al. (2020), who found that gamified practice tests led to a significant decrease in performance over time, even in comparison with traditional practice tests. We ascribe this contrast to the fact that we regularly added something novel to the gamified environment, as proposed by Raftopoulos (2020) and Tsay et al. (2019). Therefore, our first main contribution to educational gamification is that the design should be extended regularly during distance learning to prevent the negative consequences of a novelty effect.

This is also in line with our results when comparing a course redesigned with gamification with its original form. Students in the redesigned course performed better in the final exam and maintained their higher engagement over time, in contrast with previous novelty effect studies in which this advantage was lost (e.g., Koivisto and Hamari, 2014; Rodrigues et al., 2022). Once again, this suggests that gamified designs should present something novel over time. Students in the redesigned course were also more proactive and started working on their tasks sooner, even in distance learning. Thus, our second contribution is that redesigning a course with such achievement- and socialization-based gamification is a viable way to close the distance in distance learning, especially if it focuses on coursework, proactivity, and attendance. Although we should be mindful of possible differences between the BA and ICT courses, redesigning the whole course with gamification also seems more viable than gamifying only a course extension.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

This study was supported by the project Factors Affecting Job Performance 2022 (MUNI/A/1168/2021) of Masaryk University Internal Grant Agency.

Acknowledgments

The authors would like to deeply thank Petra Zákoutská for helping us prepare the aesthetics of our gamification design.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2023.1051227/full#supplementary-material

References

Amo, L., Liao, R., Kishore, R., and Rao, H. R. (2020). Effects of structural and trait competitiveness stimulated by points and leaderboards on user engagement and performance growth: a natural experiment with gamification in an informal learning environment. Eur. J. Inf. Syst. 29, 704–730. doi: 10.1080/0960085X.2020.1808540

Armstrong-Mensah, E., Ramsey-White, K., Yankey, B., and Self-Brown, S. (2020). COVID-19 and distance learning: effects on Georgia State University School of public health students. Front. Public Health 8:576227. doi: 10.3389/fpubh.2020.576227

Bai, S., Hew, K. F., and Huang, B. (2020). Does gamification improve student learning outcome? Evidence from a meta-analysis and synthesis of qualitative data in educational contexts. Educ. Res. Rev. 30:e100322. doi: 10.1016/j.edurev.2020.100322

Boudadi, N. A., and Gutiérrez-Colón, M. (2020). Effect of gamification on students’ motivation and learning achievement in second language acquisition within higher education: a literature review 2011-2019. The EUROCALL Rev. 28, 57–69. doi: 10.4995/eurocall.2020.12974

Çakıroğlu, Ü., Başıbüyük, B., Güler, M., Atabay, M., and Yılmaz Memiş, B. (2017). Gamifying an ICT course: influences on engagement and academic performance. Comput. Hum. Behav. 69, 98–107. doi: 10.1016/j.chb.2016.12.018

Campillo-Ferrer, J. M., and Miralles-Martínez, P. (2021). Effectiveness of the flipped classroom model on students’ self-reported motivation and learning during the COVID-19 pandemic. Human. Soc. Sci. Commun. 8, 1–9. doi: 10.1057/s41599-021-00860-4

Considine, J., Botti, M., and Thomas, S. (2005). Design, format, validity and reliability of multiple choice questions for use in nursing research and education. Collegian 12, 19–24.

Czeisler, M. E., Lane, R. I., Petrosky, E., Wiley, J. F., Christensen, A., Njai, R., et al. (2020). Mental health, substance use, and suicidal ideation during the COVID-19 pandemic—United States. MMWR Morb. Mortal. Wkly. Rep. 69, 1049–1057. doi: 10.15585/mmwr.mm6932a1

Deci, E. L., and Ryan, R. M. (2008). Self-determination theory: a macrotheory of human motivation, development, and health. Can. Psychol. 49, 182–185. doi: 10.1037/a0012801

Deci, E. L., and Ryan, R. M. (2015). “Self-determination theory” in International Encyclopedia of the Social & Behavioral Sciences. ed. J. D. Wright, vol. 21 (Amsterdam, The Netherlands: Elsevier), 486–491.

Domínguez, A., Saenz-de-Navarrete, J., De-Marcos, L., Fernandez-Sanz, L., Pages, C., and Martínez-Herráiz, J. J. (2013). Gamifying learning experiences: practical implications and outcomes. Comput. Educ. 63, 380–392. doi: 10.1016/j.compedu.2012.12.020

El-Beheiry, M., McCreery, G., and Schlachta, C. M. (2017). A serious game skills competition increases voluntary usage and proficiency of a virtual reality laparoscopic simulator during first-year surgical residents’ simulation curriculum. Surg. Endosc. Other Interv. Tech. 31, 1643–1650. doi: 10.1007/s00464-016-5152-y

Farzan, R., DiMicco, J. M., Brownholtz, D. R. M. B., Geyer, W., and Dugan, C. (2008). “Results from deploying a participation incentive mechanism within the enterprise” in CHI 2008 Proceedings (Florence, Italy: ACM), 563–572.

Furdu, I., Tomozei, C., and Kose, U. (2017). Pros and cons gamification and gaming in classroom. Broad Res. Arti. Intell. Neurosci. 8, 56–62. doi: 10.48550/arXiv.1708.09337

Gagné, M., and Forest, J. (2008). The study of compensation systems through the lens of self-determination theory: reconciling 35 years of debates. Can. Psychol. 49, 225–232. doi: 10.1037/a0012757

Gillis, A., and Krull, L. M. (2020). COVID-19 remote learning transition in spring 2020: class structures, student perceptions, and inequality in college courses. Teach. Sociol. 48, 283–299. doi: 10.1177/0092055X20954263

Goudeau, S., Sanrey, C., Stanczak, A., Manstead, A., and Darnon, C. (2021). Why lockdown and distance learning during the COVID-19 pandemic are likely to increase the social class achievement gap. Nat. Hum. Behav. 5, 1273–1281. doi: 10.1038/s41562-021-01212-7

Guay, F., Ratelle, C. F., and Chanal, J. (2008). Optimal learning in optimal contexts: the role of self-determination in education. Can. Psychol. 49, 233–240. doi: 10.1037/a0012758

Hamari, J. (2013). Transforming homo Economicus into homo Ludens: a field experiment on gamification in a utilitarian peer-to-peer trading service. Electron. Commer. Res. Appl. 12, 236–245. doi: 10.1016/j.elerap.2013.01.004

Hamari, J., Koivisto, J., and Sarsa, H. (2014). “Does gamification work? – a literature review of empirical studies on gamification” in 47th Hawaii International Conference on System Sciences (HICSS) (Waikoloa, HI, USA: IEEE), 3025–3034.

Hanus, M. D., and Fox, J. (2015). Assessing the effects of gamification in the classroom: a longitudinal study on intrinsic motivation, social comparison, satisfaction, effort, and academic performance. Comput. Educ. 80, 152–161. doi: 10.1016/j.compedu.2014.08.019

Huang, B., Hew, K. F., and Lo, C. K. (2018). Investigating the effects of gamification-enhanced flipped learning on undergraduate students’ behavioral and cognitive engagement. Interact. Learn. Environ. 27, 1106–1126. doi: 10.1080/10494820.2018.1495653

Huotari, K., and Hamari, J. (2017). A definition for gamification: anchoring gamification in the service marketing literature. Electron. Mark. 27, 21–31. doi: 10.1007/s12525-015-0212-z

Kohli, H., Wampole, D., and Kohli, A. (2021). Impact of online education on student learning during the pandemic. Stud. Learn. Teach. 2, 1–11. doi: 10.46627/silet.v2i2.65

Koivisto, J., and Hamari, J. (2014). Demographic differences in perceived benefits from gamification. Comput. Hum. Behav. 35, 179–188. doi: 10.1016/j.chb.2014.03.007

Koivisto, J., and Hamari, J. (2019). The rise of the motivational information systems: a review of gamification research. Int. J. Inf. Manag. 45, 191–210. doi: 10.1016/j.ijinfomgt.2018.10.013

Kolb, D. A., Boyatzis, R. E., and Mainemelis, C. (2001). “Experiential learning theory: previous research and new directions” in Perspectives on Thinking, Learning, and Cognitive Styles. eds. R. J. Sternberg and L.-F. Zhang, vol. 1 (Mahwah, NJ: Lawrence Erlbaum Associates Publishers), 227–247.

Kuo, M.-S., and Chuang, T.-Y. (2016). How gamification motivates visits and engagement for online academic dissemination – an empirical study. Comput. Hum. Behav. 55, 16–27. doi: 10.1016/j.chb.2015.08.025

Landers, R. N., Bauer, K. N., and Callan, R. C. (2017). Gamification of task performance with leaderboards: a goal setting experiment. Comput. Hum. Behav. 71, 508–515. doi: 10.1016/j.chb.2015.08.008

Legaki, N.-Z., Karpouzis, K., Assimakopoulos, V., and Hamari, J. (2021). The effect of challenge-based gamification on learning: an experiment in the context of statistics education. Int. J. Hum. Comput. Stud. 144:102496. doi: 10.1016/j.ijhcs.2020.102496

Legaki, N.-Z., Xie, N., Hamari, J., Karpouzis, K., and Assimakopoulos, V. (2020). The effect of challenge-based gamification on learning: an experiment in the context of statistics education. Int. J. Hum. Comput. Stud. 144:102496. doi: 10.1016/j.ijhcs.2020.102496

Lelli, V., Andrade, R. M. C., Freitas, L. M., Silva, R. A. S., Gutenberg, F., Gomes, R. F., et al. (2020). “Gamification in remote teaching of SE courses: experience report” in Proceedings of the 34th Brazilian Symposium on Software Engineering (New York, NY: ACM).

Lieberoth, A. (2014). Shallow gamification: testing psychological effects of framing an activity as a game. Games Cult. 10, 229–248. doi: 10.1177/1555412014559978

Liénardy, S., and Donnet, B. (2020). “GameCode: choose your own problem solving path” in Proceedings of the 2020 ACM Conference on International Computing Education Research (ICER ’20), New Zealand.

Looyestyn, J., Kernot, J., Boshoff, K., Ryan, J., Edney, S., and Maher, C. (2017). Does gamification increase engagement with online programs? A systematic review. PLoS One 12:e0173403. doi: 10.1371/journal.pone.0173403

Mekler, E. D., Brühlmann, F., Tuch, A. N., and Opwis, K. (2017). Towards understanding the effects of individual gamification elements on intrinsic motivation and performance. Comput. Hum. Behav. 71, 525–534. doi: 10.1016/j.chb.2015.08.048

Metwally, A. H. S., Nacke, L. E., Chang, M., Wang, Y., and Yousef, A. M. F. (2021). Revealing the hotspots of educational gamification: an umbrella review. Int. J. Educ. Res. 109:e101832. doi: 10.1016/j.ijer.2021.101832

Morris, M. E., Kuehn, K. S., Brown, J., Nurius, P. S., Zhang, H., Sefidgar, Y. S., et al. (2021). College from home during COVID-19: a mixed-methods study of heterogeneous experiences. PLoS One 16:e0251580. doi: 10.1371/journal.pone.0251580

Nieto-Escamez, F. A., and Roldán-Tapia, M. D. (2021). Gamification as online teaching strategy during COVID-19: a mini-review. Front. Psychol. 12:648552. doi: 10.3389/fpsyg.2021.648552

Pakinee, A., and Puritat, K. (2021). Designing a gamified e-learning environment for teaching undergraduate ERP course based on big five personality traits. Educ. Inf. Technol. 26, 4049–4067. doi: 10.1007/s10639-021-10456-9

Raftopoulos, M. (2020). Has gamification failed, or failed to evolve? Lessons from the frontline in information systems application. GamiFIN Conference 2020, Levi, Finland.

Robbins, S. P., and Judge, T. A. (2017). Organizational Behavior (17th Global Edition). Boston, MA: Pearson.

Rodrigues, L., Pereira, F. D., Toda, A. M., Palomino, P. T., Pessoa, M., Carvalho, L. S. G., et al. (2022). Gamification suffers from the novelty effect but benefits from the familiarization effect: findings from a longitudinal study. Int. J. Educ. Technol. High. Educ. 19, 1–25. doi: 10.1186/s41239-021-00314-6

Ryan, R. M., and Rigby, C. S. (2019). “Motivational foundations of game-based learning” in Handbook of Game-Based Learning (MIT Press), 153–176.

Safa, F., Anjum, A., Hossain, S., Trisa, T. I., Alam, S. F., Abdur Rafi, M., et al. (2021). Immediate psychological responses during the initial period of the COVID-19 pandemic among Bangladeshi medical students. Child Youth Serv. Rev. 122:105912. doi: 10.1016/j.childyouth.2020.105912

Sanchez, D. R., Langer, M., and Kaur, R. (2020). Gamification in the classroom: examining the impact of gamified quizzes on student learning. Comput. Educ. 144:103666. doi: 10.1016/j.compedu.2019.103666

Sardi, L., Idri, A., and Fernández-Alemán, J. L. (2017). A systematic review of gamification in e-health. J. Biomed. Inform. 71, 31–48. doi: 10.1016/j.jbi.2017.05.011

Suh, C.-H. (2015). The effects of students’ motivation, cognitive load and learning anxiety in gamification software engineering education: a structural equation modeling study. Multimed. Tools Appl. 75, 10013–10036. doi: 10.1007/s11042-015-2799-7

Treiblmaier, H., and Putz, L.-M. (2020). Gamification as a moderator for the impact of intrinsic motivation: findings from a multigroup field experiment. Learn. Motiv. 71:101655. doi: 10.1016/j.lmot.2020.101655

Treiblmaier, H., Putz, L.-M., and Lowry, P. B. (2018). Research commentary: setting a definition, context, and theory-based research agenda for the gamification of non-gaming applications. AIS Trans. Hum. Comput. Interact. 10, 129–163. doi: 10.17705/1thci.00107

Tsay, C.-H., Kofinas, A. K., and Trivedi, S. K. (2019). Overcoming the novelty effect in online gamified learning systems: an empirical evaluation of student engagement and performance. J. Comput. Assist. Learn. 36, 128–146. doi: 10.1111/jcal.12385

van Gaalen, A. E. J., Brouwer, J., Schönrock-Adema, J., Bouwkamp-Timmer, T., Jaarsma, A. D. C., and Georgiadis, J. R. (2020). Gamification of health professions education: a systematic review. Adv. Health Sci. Educ. 26, 683–711. doi: 10.1007/s10459-020-10000-3

van Roy, R., and Zaman, B. (2018). Need-supporting gamification in education: an assessment of motivational effects over time. Comput. Educ. 127, 283–297. doi: 10.1016/j.compedu.2018.08.018

Xi, N., and Hamari, J. (2019). Does gamification satisfy needs? A study on the relationship between gamification features and intrinsic need satisfaction. Int. J. Inf. Manag. 46, 210–221. doi: 10.1016/j.ijinfomgt.2018.12.002

Zainuddin, Z., Chu, S. K. W., Shujahat, M., and Perera, C. J. (2020a). The impact of gamification on learning and instruction: a systematic review of empirical evidence. Educ. Res. Rev. 30:100326. doi: 10.1016/j.edurev.2020.100326

Keywords: gamification, novelty effect, pandemic (COVID-19), learning performance, behavioral engagement, tailored gamification

Citation: Kratochvil T, Vaculik M and Macak M (2023) Gamification tailored for novelty effect in distance learning during COVID-19. Front. Educ. 8:1051227. doi: 10.3389/feduc.2023.1051227

Edited by:

Ana Carolina Tome Klock, Tampere University, Finland

Reviewed by:

Sandra Gama, University of Lisbon, Portugal
Jakub Swacha, University of Szczecin, Poland

Copyright © 2023 Kratochvil, Vaculik and Macak. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tomas Kratochvil, ✉ kratochvilt@mail.muni.cz
