- 1Department of Computer Science and Engineering, Bangladesh University of Business and Technology, Dhaka, Bangladesh

- 2Department of Computer Science and Engineering, Jagannath University, Dhaka, Bangladesh

- 3Center for Health Informatics, Macquarie University, Sydney, NSW, Australia

- 4Amity International Business School, Amity University, Noida, India

- 5Department of Environmental Health, Harvard T H Chan School of Public Health, Boston, MA, United States

- 6Human Genetics Center, School of Public Health, The University of Texas Health Science Center at Houston, Houston, TX, United States

COVID-19 has caused over 528 million infections and over 6.25 million deaths since its outbreak in 2019. The uncontrolled transmission of the SARS-CoV-2 virus has caused immense human suffering and loss of life. Despite continuous efforts by researchers and laboratories, it has been difficult to develop reliable, efficient, and stable vaccines against the rapidly evolving virus strains. Therefore, effectively preventing transmission in the community and globally has remained an urgent task since the outbreak. To limit the rapid spread of infection, infected individuals must first be identified and isolated. Screening with computed tomography (CT) scans and X-rays can help separate COVID-19 patients from others. However, one of the main challenges is to accurately identify infection from a medical image, and even experienced radiologists often fail to do so. Deep learning algorithms, on the other hand, can tackle this task more easily, quickly, and accurately. In this research, we adopt transfer learning to distinguish COVID-19 patients from normal individuals when medical image data are scarce, saving time while producing reliable results promptly. Furthermore, our model works on both X-rays and CT scans. The experimental results show that the proposed model achieves 99.59% accuracy on X-rays and 99.95% on CT scan images. In summary, the proposed method can effectively identify COVID-19 patients, which could help classify them quickly and prevent viral transmission in the community.

1 Introduction

COVID-19 has caused one of the major pandemics in human history since the first cases were identified in late 2019. The causative virus, SARS-CoV-2, was initially discovered in Wuhan, China, in November 2019. The outbreak spread quickly across the world and has not been brought under control because new viral strains keep emerging. The World Health Organization (WHO) declared it a global epidemic due to its fast spread among humans Organization (2020).

COVID-19 is classified as a respiratory disease because it causes myofascial pain syndrome, sore throat, headache, fever, breathing difficulty, dry cough, and chest infection Huang et al. (2020). An infected individual might display full symptoms within around 14 days. As of May 2022, over 528 million COVID-19 cases had been recorded in over 200 countries and territories, resulting in over 6.25 million fatalities Organization et al. (2020). Consequently, the global community faces a severe public health threat. The WHO labeled the spread of the disease a public health emergency of international concern (PHEIC) on January 30, 2020, and acknowledged it as a pandemic on March 11, 2020 Organization (27 April 2020). Researchers have continued working to develop an effective vaccine to contain the virus Shah et al. (2021); Ahuja et al. (2021). Several vaccines, including Pfizer-BioNTech and Moderna, were quickly designed and proven effective. However, many people in developing countries still cannot be vaccinated because of economic constraints and a lack of modern technology to preserve the vaccine. Furthermore, the virus can mutate quickly and infect people with a new strain, and a vaccine designed for one strain has not been fully effective against a novel strain. Therefore, we need to identify infected individuals and isolate them to stop the rapid spread of this virus. However, identification is very challenging because people infected with this virus show symptoms similar to those of pneumonia, fever, and flu. Although the most reliable identification method at present is the reverse transcription-polymerase chain reaction (RT-PCR) test, this process is time-consuming and costly, and testing kits are in limited supply Wang et al. (2020). To address this issue, researchers have aimed to develop an effective alternative to RT-PCR for detecting infection in possible COVID-19 patients and thus controlling the spread of the virus.

COVID-19 primarily infects the lungs, and visually marking the affected area aids in rapidly screening infected individuals Chung et al. (2020). Chest radiography images (chest X-ray or computed tomography (CT) scans) are extensively used as a visual indicator of lung condition Chung et al. (2020). Screening with chest X-rays and CT scans is therefore a promising approach to detecting COVID-19 patients. Deep learning (DL) approaches primarily focus on automatically extracting features from images and classifying them. DL applications have been successfully implemented in medical image classification and clinical decision-making tasks Greenspan et al. (2016). AI in healthcare refers to the use of machine learning (ML) algorithms and software, or artificial intelligence (AI) Gaur et al. (2022); Biswas et al. (2021), to replicate human cognition in the analysis, display, and comprehension of complicated medical and health-care data Biswas Milon and Kawsher (2022). Deep transfer learning is also being successfully applied in many medical sectors. For example, PreRBP-TL is used for the reconstruction of gene regulatory networks, modeling of gene expression from single-cell data, and prediction of genomic properties such as accessible regions, chromatin connections, and TFBSs. Furthermore, deep learning techniques in bioinformatics offer a variety of uses, as well as tools and methodologies to quickly uncover the hidden information inside DNA sequences Biswas Milon (2022).

In COVID-19 research, experts are working around the clock to develop a reliable framework for diagnosing COVID-19 from medical imagery such as lung X-rays and CT scans using DL technologies. Numerous studies offer diagnostic techniques based on DL algorithms for the recognition of COVID-19 patients Loey et al. (2020); Horry et al. (2020); Lahsaini et al. (2021); Maghded et al. (2020); Apostolopoulos and Mpesiana (2020); Ghose et al. (2021); Nayak et al. (2021); Pham (2021). Lahsaini et al. (2021) introduced a transfer learning system based on DenseNet201 with a visual explanation and achieved an accuracy of 98.8% for identifying COVID-19 patients. Maghded et al. (2020) developed a smartphone-based architecture to identify COVID-19 in the general population; however, the accuracy and reliability of this approach are not acceptable. Apostolopoulos and Mpesiana (2020) introduced a MobileNetv2 model based on transfer learning for automatic COVID-19 identification. The study used 1427 X-rays and achieved 98.66% sensitivity, 96.78% accuracy, and 96.46% specificity. In another study, Ghose et al. (2021) proposed a novel convolutional neural network (CNN) based model to identify COVID-19 individuals with an accuracy of 96%. Panwar et al. (2020a) used chest X-ray and CT scan images with a DL strategy for color visualization and quick COVID-19 identification. Panwar et al. (2020b) applied the nCOVnet-based approach to X-ray images to detect COVID-19 cases. Furthermore, they demonstrated that the proposed technology is faster than a standard RT-PCR testing kit and can differentiate COVID-19 from other respiratory disorders such as pneumonia. Luján-García et al. (2020) used simple DL models with X-rays as input and built 36 convolutional layers to reach a precision score of 0.843 for identifying pneumonia. To improve accuracy, Bhattacharyya et al. (2022) devised a strategy to recognize COVID-19 patients from X-ray images efficiently. First, they merged DL-based image segmentation and classification models to improve results. Then, they classified the convolutional features by applying ML methods, including SoftMax, RF, SVM, and XGBoost.
The VGG19 architecture combined with the BRISK key-point extraction strategy and RF as the classification layer achieved an accuracy of 96.6%. Loey et al. (2020) suggested a deep transfer learning method for identifying COVID-19 using chest CT scan images. To make this process faster and more automated, Alshazly et al. (2021) presented a deep learning model trained on chest CT scans. Maghded et al. (2020) were the first to propose an IoT-based strategy for recognizing COVID-19, diagnosing the disease using a smartphone's built-in sensors together with CT scan images. They further claimed that their smartphone-based detection solution was not only the most cost-effective and user-friendly but also the fastest. Their approach, however, has a significant flaw in that they do not address the accuracy of COVID-19 recognition. Furthermore, relying on a smartphone's standard sensors may pose security issues by making individuals' health data public, which is not desirable, and the authors did not devote any attention to solving this problem. Other researchers have tried to make detection more reliable by combining multiple architectures. For example, Aslan et al. (2021) combined a pre-trained AlexNet architecture (transfer learning) with a BiLSTM layer for COVID-19 classification, claiming that the hybrid system could achieve better COVID-19 recognition than almost any single-model architecture. Furthermore, Ter-Sarkisov (2022) presented the COVID-CT-Mask-Net model to predict COVID-19 in chest CT scan images. On 21,192 test images, they obtained 90.80% sensitivity for COVID-19 cases, 91.62% sensitivity for pneumonia cases, a mean accuracy of 91.66%, and an F1-score of 91.50% by training only a tiny proportion of the model's parameters.

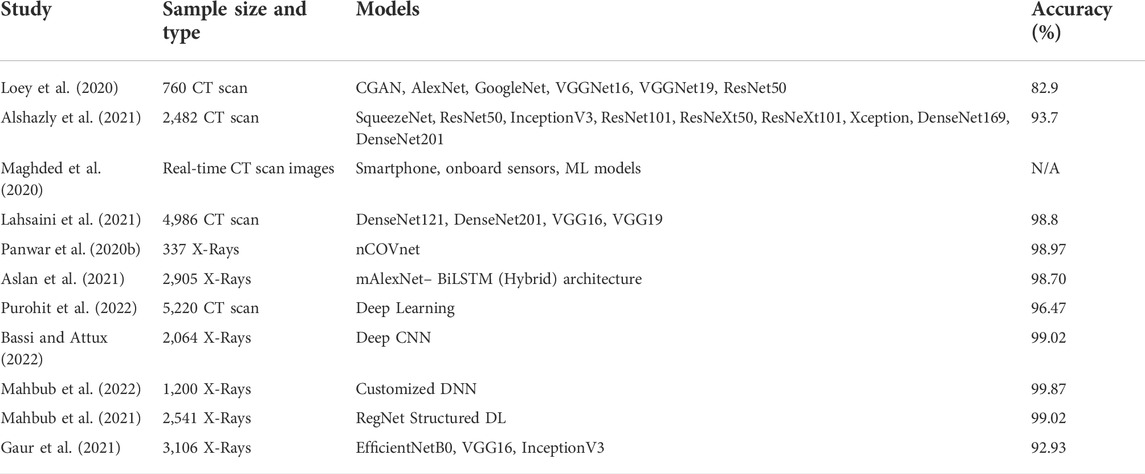

As reviewed above, approaches such as CNNs, DL, and transfer learning have all been employed to diagnose COVID-19. AI technology can open a new era in medical science by allowing rapid illness detection and classification, potentially slowing the transmission of the COVID-19 virus. Representative works are briefly summarized in Table 1.

We propose an enhanced DL architecture to distinguish COVID-19 patients from normal individuals using X-rays and CT scans, utilizing a transfer learning strategy based on the DenseNet169 model. The significant contributions are:

• We have proposed a customized deep network to assist in the fast diagnosis of COVID-19 patients precisely by using a single transfer learning technique.

• An in-depth experimental analysis is carried out in view of accuracy, precision, recall, F1-score, and confusion matrix to evaluate the suggested model’s performance. Utilizing our proposed model, we have identified CT scan images with an accuracy rate of 99.95% for two classes (normal and COVID-19 patients). Similarly, for X-rays, our proposed model has shown a remarkable performance with a detection accuracy of 99.59%.

2 Materials and methods

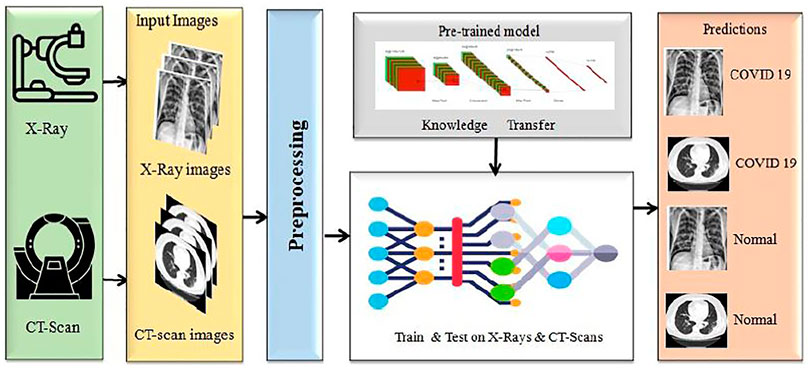

We propose a model to distinguish COVID-19 patients from non-COVID-19 individuals through chest X-rays and CT scans using a DL network based on DenseNet169, introduced by Huang et al. (2017). This task uses transfer learning, which is typically applied when only a small dataset is available and reuses a model pre-trained on a massive dataset such as ImageNet. Our paper uses a single transfer learning approach to reduce computational complexity. This shortens the model's total training time and allows a more complex architecture to be trained on a smaller dataset. Before training the model, we performed substantial pre-processing on the training data. The block diagram of this experiment is presented in Figure 1.

2.1 Materials

We propose a DL-based model for identifying COVID-19 patients using X-ray and CT scan data gathered from Siddhartha and Santra (2020) and ARIA (2021). The experiments were carried out on a local machine with an i5-8265U processor and 8 GB RAM, and on Google Colab for GPU access. Transfer learning, which is frequently used in applications with limited datasets, is applied to CNN models in this study.

2.1.1 Dataset collection

CT scan images for COVID-19 and normal individuals

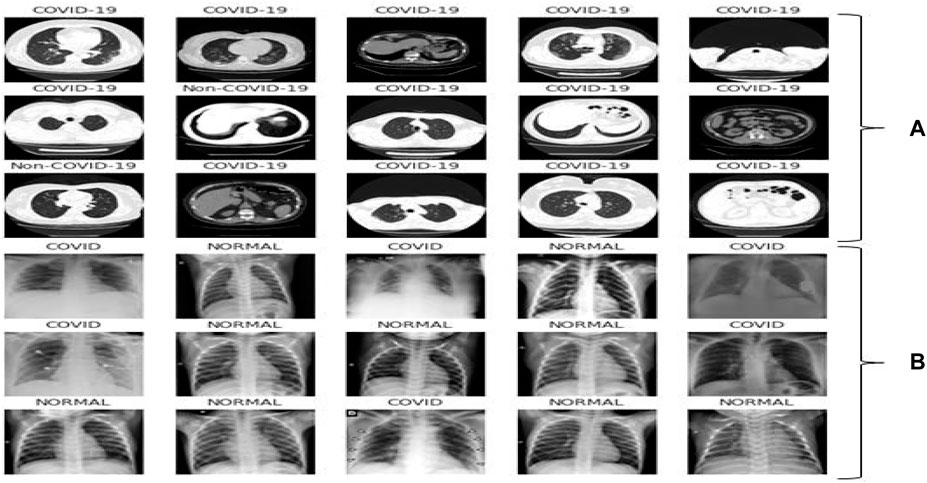

This dataset contains 8439 CT scans, of which 7495 are COVID-19 scans belonging to 190 people and 944 are CT scans of 59 people without COVID-19, including pneumonia patients and otherwise healthy individuals. The dataset was obtained from ARIA (2021). The disease status of the suspected patients in this group was confirmed using an RT-PCR test. Figure 2A shows some representative CT scans from this dataset for normal and COVID-19 individuals.

FIGURE 2. Representative samples: (A) CT scan samples from the dataset ARIA (2021), (B) X-ray samples from the dataset Siddhartha and Santra (2020).

X-rays for COVID-19 and normal individuals

The second dataset contains 1626 images of COVID-19 patients and 1802 images of normal individuals, obtained from Siddhartha and Santra (2020). The disease status of the suspected patients was confirmed using an RT-PCR test and annotated by a radiologist. Figure 2B shows a few X-ray images from this dataset.

2.2 Methods

The research aims to determine the optimal architecture for classifying individuals as COVID-19 positive or normal. For this purpose, we chose a few CNN architectures that have produced outstanding results on the ImageNet and CIFAR datasets.

2.2.1 Pre-processing

According to the research, the effectiveness of medical imaging computer vision tasks in DL is not mainly attributable to CNN models; instead, image pre-processing plays a significant role. As the primary step of pre-processing, data normalization is executed to preserve the quality of the images, which is critical in the analysis of X-ray and CT scan images according to Patro (2015). First, we computed the pixel-level global mean and standard deviation (SD) values over all of the images and then normalized the data using Eq. 1:

$$x_{\text{norm}} = \frac{x - \mu}{\sigma} \tag{1}$$

where x is a pixel value, μ is the global mean, and σ is the SD.
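The normalization step described above can be sketched in a few lines of NumPy. This is a minimal illustration that assumes Eq. 1 is the usual standardization (subtract the global mean, divide by the global SD); the function name `normalize_images` and the random stand-in batch are hypothetical and are not taken from the original experiments.

```python
import numpy as np


def normalize_images(images: np.ndarray) -> np.ndarray:
    """Standardize a batch of images with one global mean and one global SD."""
    images = images.astype(np.float64)
    mu = images.mean()     # pixel-level mean over every image in the batch
    sigma = images.std()   # pixel-level standard deviation over the batch
    if sigma == 0:
        raise ValueError("constant images cannot be standardized")
    return (images - mu) / sigma


# Hypothetical stand-in data: four grayscale 224 x 224 images in [0, 255].
rng = np.random.default_rng(0)
batch = rng.integers(0, 256, size=(4, 224, 224))
normalized = normalize_images(batch)
```

After this step the batch has approximately zero mean and unit variance, which keeps input magnitudes comparable across X-ray and CT images.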

2.2.2 DenseNet169

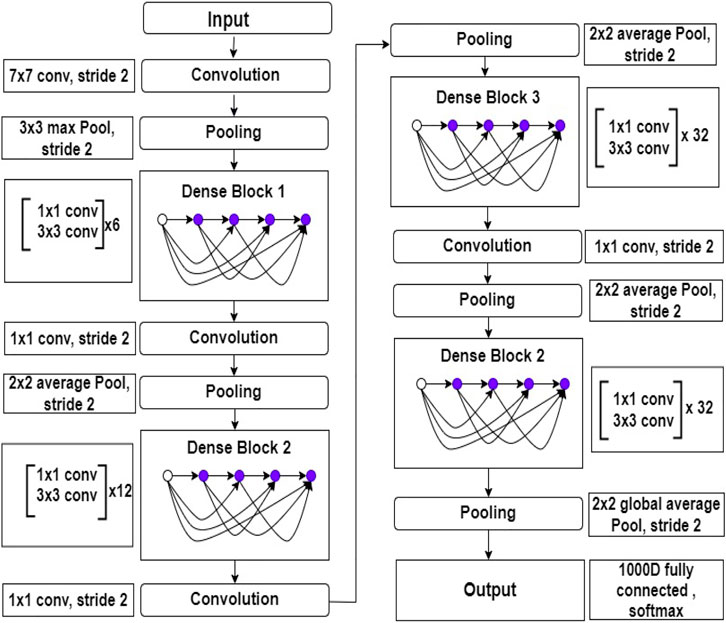

In classification tasks with limited data, transfer learning has proven to be reliable, so fine-tuning with deep transfer learning (DTL) can help us obtain better results. We adopt the DenseNet169 model, which uses transfer learning to extract features and reuses the weights learned on the ImageNet dataset to reduce computation time. Huang et al. (2017) introduced this type of model in 2017. It contains one convolution and pooling layer at the start, four dense blocks, three transition layers, and a classification layer at the end. The first convolutional layer performs 7 × 7 convolutions with stride 2, followed by 3 × 3 max-pooling with stride 2. After that, the network consists of a dense block, followed by three sets each consisting of a transition layer and a dense block. Huang et al. (2017) established dense connectivity by introducing direct connections from any layer to all subsequent layers in a block. As a result, the lth layer receives the feature maps of all preceding layers, improving gradient flow throughout the whole network. Because convolutional neural networks rely on down-sampling to reduce the size of feature maps, the DenseNet architecture is divided into the densely connected dense blocks mentioned above, and the layers between these blocks are known as transition layers. Each transition layer consists of a batch normalization layer, a 1 × 1 convolutional layer, and a 2 × 2 average pooling layer with a stride of 2. The detailed architecture of DenseNet169 is shown in Figure 3.
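To make the effect of dense connectivity concrete, the sketch below tracks how the number of feature-map channels grows through the four dense blocks and three transition layers. The block sizes (6, 12, 32, 32), growth rate of 32, 64 initial channels, and 0.5 compression in the transitions are the standard DenseNet-169 settings from Huang et al. (2017); they are not stated in this text and are therefore assumptions here, as are the function names.

```python
def densenet_channel_trace(block_config=(6, 12, 32, 32), growth_rate=32,
                           init_features=64, compression=0.5):
    """Return (stage, index, channels) after each dense block and transition."""
    channels = init_features
    trace = []
    for i, num_layers in enumerate(block_config):
        # Each layer in a dense block appends `growth_rate` new feature maps
        # to the concatenation of all preceding feature maps.
        channels += num_layers * growth_rate
        trace.append(("dense_block", i + 1, channels))
        if i != len(block_config) - 1:
            # The 1 x 1 convolution in a transition layer compresses channels.
            channels = int(channels * compression)
            trace.append(("transition", i + 1, channels))
    return trace


def count_weighted_layers(block_config=(6, 12, 32, 32)):
    """Count convolutional and fully connected layers in the network."""
    dense_convs = 2 * sum(block_config)   # 1 x 1 bottleneck + 3 x 3 conv per layer
    transitions = len(block_config) - 1   # one 1 x 1 conv per transition layer
    return 1 + dense_convs + transitions + 1  # stem conv + ... + classifier


trace = densenet_channel_trace()
for stage, index, channels in trace:
    print(f"{stage} {index}: {channels} channels")
print("weighted layers:", count_weighted_layers())
```

Under these assumptions the last dense block outputs 1664 channels and the layer count comes to 169, which is where the model name originates.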

FIGURE 3. Inside view of the DenseNet169 deep learning model. This figure shows the layer architecture with the number of layers.

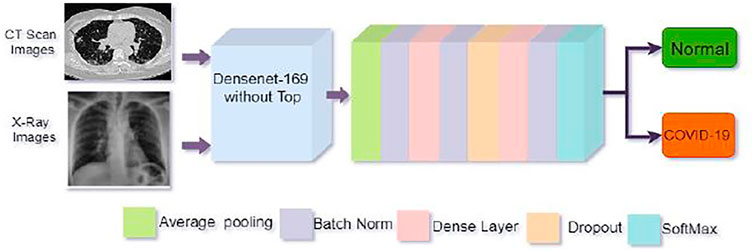

2.2.3 Proposed method

We propose a customized DenseNet169-based model for COVID-19 recognition. The feature extraction layers of the pre-trained DenseNet169 are kept, and a convolution layer, a global average pooling layer, and a batch normalization layer are added on top. Following batch normalization, we apply two dense layers with 512 and 256 neurons, with a 20% dropout layer placed before the second dense layer to avoid overfitting. The final classification (output) layer has two neurons, is fully connected to the preceding layer, and employs the SoftMax function. The two outputs correspond to our two classes: one for not infected (normal) patients and another for infected (COVID-19 positive) patients, computed using Eq. 2:

$$\sigma(\chi)_i = \frac{e^{\chi_i}}{\sum_{j=1}^{k} e^{\chi_j}}, \quad i = 1, \ldots, k \tag{2}$$

where χ is the input value and k is the number of classes to be predicted.
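As a small worked example of the SoftMax output layer, the sketch below converts two raw output values into class probabilities. It is illustrative only: the input values are made up, and subtracting the maximum before exponentiating is a common numerical-stability step that is an addition here, not something described in the text.

```python
import math


def softmax(logits):
    """Map raw output values to probabilities that sum to 1."""
    m = max(logits)  # shift by the maximum to avoid overflow in exp
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw outputs for the two classes (normal, COVID-19).
probs = softmax([1.2, -0.3])
predicted_class = probs.index(max(probs))  # 0 = normal, 1 = COVID-19
```

With two classes, the larger raw output always receives the larger probability, so the predicted class is simply the index of the maximum.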

The strategy applied in this study has been depicted in Figure 4.

FIGURE 4. Architecture of the proposed model. The top of the pre-trained DenseNet169 model was removed, and several layers were added as shown in the figure for better COVID-19 detection.

2.2.4 Feature extraction procedure

Throughout the feature extraction phase, convolutional layers are used. Batch normalization, weight regularization, and dropout are utilized to avoid model overfitting. The weights are regularized using the Euclidean norm (L2), with coefficient values ranging from 0.001 to 0.01, together with a 20% dropout. The early stages of the pre-trained DenseNet169 network, which has a scalable architecture for image classification, are employed for enhanced feature extraction through transfer learning. The convolutional cells of DenseNet169 are shown with their structure in Figure 4. The feature extraction blocks are frozen, so their parameters are non-trainable during training, and Adam is used as the optimizer. We use the cost-sensitive binary cross-entropy loss function presented in Eq. 3:

$$\mathcal{L} = -\sum_{k} \omega_k \, y_k \log(\varphi_k) \tag{3}$$

where k indexes the classes (in this work, COVID-19 and normal), φk is the SoftMax output, i.e., the predicted probability of class k, yk is a binary indicator (0 or 1) of whether class k is the true class, and ωk is the weight for class k.
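The cost-sensitive loss can be illustrated with a short sketch. The text does not say how the class weights were chosen, so the inverse-frequency weighting in `balanced_class_weights` is an assumption made purely for illustration; the class counts are those of the CT dataset reported above (7495 COVID-19 and 944 non-COVID-19 scans), and the probabilities are hypothetical.

```python
import math


def weighted_cross_entropy(y_true, probs, class_weights, eps=1e-12):
    """Class-weighted cross-entropy for one sample with a one-hot label."""
    return -sum(w * y * math.log(max(p, eps))
                for y, p, w in zip(y_true, probs, class_weights))


def balanced_class_weights(counts):
    """Inverse-frequency weights (an assumed scheme, not the paper's)."""
    total, k = sum(counts), len(counts)
    return [total / (k * c) for c in counts]


# Class order: (COVID-19, normal), with the CT dataset counts from the text.
weights = balanced_class_weights([7495, 944])

# The same confidence error costs more on the rarer (normal) class.
loss_covid = weighted_cross_entropy([1, 0], [0.6, 0.4], weights)
loss_normal = weighted_cross_entropy([0, 1], [0.4, 0.6], weights)
```

Weighting the rarer class more heavily keeps the model from favoring the majority class, which matters when one class outnumbers the other roughly eight to one.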

We set the initial learning rate to 1 × 10⁻⁶. If the accuracy did not improve and the validation loss remained steady for ten epochs, the learning rate was cut by 20%, down to a minimum of 1 × 10⁻⁶. Training ended if the validation loss did not change for at least 15 epochs, and the best weights were retained. The batch size is 32, and the number of epochs evaluated is 50.
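The training schedule described above (reduce the learning rate on a plateau, stop early, keep the best epoch) can be sketched as a small framework-independent simulation. The function `run_schedule` and the validation-loss sequence are hypothetical; the factor of 0.8, the patience values of 10 and 15 epochs, and the floor of 1 × 10⁻⁶ follow the text, while the initial rate of 1 × 10⁻⁴ in the usage lines is an illustrative assumption chosen so that the reduction is visible.

```python
def run_schedule(val_losses, initial_lr, factor=0.8, lr_patience=10,
                 min_lr=1e-6, stop_patience=15):
    """Simulate plateau-based learning-rate decay with early stopping."""
    lr = initial_lr
    best = float("inf")
    best_epoch = -1
    since_best = 0    # epochs since the last improvement (early stopping)
    since_cut = 0     # non-improving epochs since the last learning-rate cut
    history = []      # learning rate in effect after each epoch
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch = loss, epoch
            since_best = 0
            since_cut = 0
        else:
            since_best += 1
            since_cut += 1
        if since_cut >= lr_patience:
            lr = max(lr * factor, min_lr)  # cut by 20%, never below the floor
            since_cut = 0
        history.append(lr)
        if since_best >= stop_patience:
            break  # keep the weights from `best_epoch`
    return history, best_epoch


# Hypothetical validation losses: improvement stops after epoch 1.
losses = [1.0, 0.5] + [0.6] * 20
history, best_epoch = run_schedule(losses, initial_lr=1e-4)
```

In this run the rate is cut once, at epoch 11, and training stops at epoch 16 with epoch 1 kept as the best. If the initial rate already equals the floor, the clamp leaves it unchanged.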

3 Results and discussion

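3.1 Performance evaluation metrics

When evaluating classification models, the most frequently used metrics are Accuracy, Precision, Recall, and F1-score. The formulae for these metrics are as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

where TP, FP, TN, and FN denote the true positive, false positive, true negative, and false negative values, respectively.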
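These metrics follow directly from the four confusion-matrix counts, as the sketch below shows. The counts used are hypothetical and chosen only to make the arithmetic easy to follow; they are not results from this study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute accuracy, precision, recall, and F1-score from raw counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for a binary COVID-19 vs. normal classifier.
metrics = classification_metrics(tp=90, fp=10, tn=85, fn=15)
```

Precision corresponds to the PPV and recall to the SEN reported in the tables of this paper.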

3.2 Experimental results

We gathered the datasets from ARIA (2021) and Siddhartha and Santra (2020). We then pre-processed both datasets so that all images are 224 × 224 pixels. Next, we combined several data augmentation strategies to improve generalization.

Results for CT scan images

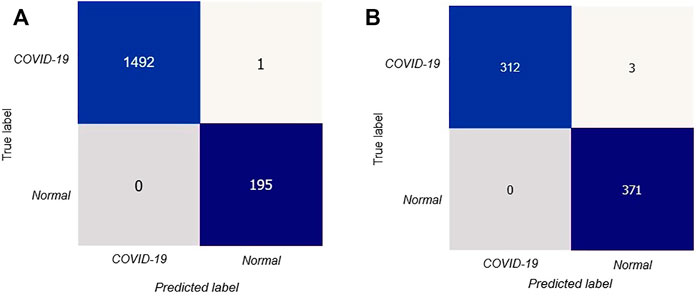

We then created a confusion matrix to evaluate the performance of our proposed design. Figure 5A depicts the confusion matrix of the model for the test cases on CT scan images.

FIGURE 5. Confusion matrix for the proposed model: (A) confusion matrix on CT scan, (B) confusion matrix on X-rays.

The confusion matrix shows that out of 1688 test images, only one was classified incorrectly by the model, with consistently high true negative and true positive values. Thus, our proposed deep learning technique can precisely classify COVID-19 patients.

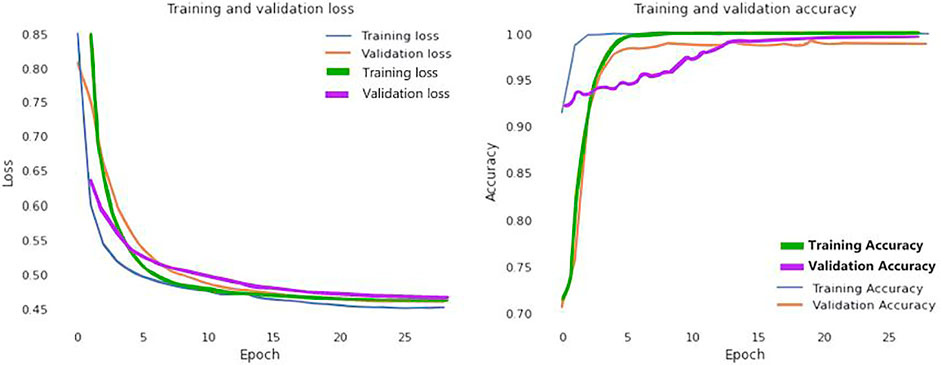

In addition, Figure 6 shows the accuracy and loss of the proposed DL model during training and validation (blue and yellow curves). The accuracy of our proposed technique rose to 92% after the 10th epoch, which indicates that the methodology could be deployed to detect COVID-19 quickly. Training and validation accuracies are 99.98 and 99.79%, respectively, at epoch 25. Furthermore, the training and validation losses are 0.12 and 0.15%, respectively.

FIGURE 6. Loss and accuracy curves of the proposed model (blue and yellow curves represent the CT scan images; green and purple curves represent the X-ray images).

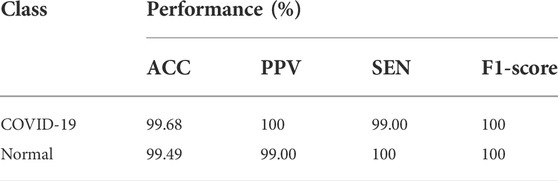

Table 2 summarizes the ACC, SEN, PPV, and F1-score for each class. For COVID-19 cases, the proposed DenseNet169 network achieves 99.96% ACC, 99.93% SEN, 99.98% F1-score, and 100% PPV. For normal cases, an ACC of 99.94%, PPV of 99.48%, SEN of 100%, and F1-score of 99.99% were obtained.

TABLE 2. Experimental results obtained by the proposed model for COVID-19 and normal cases on CT scan.

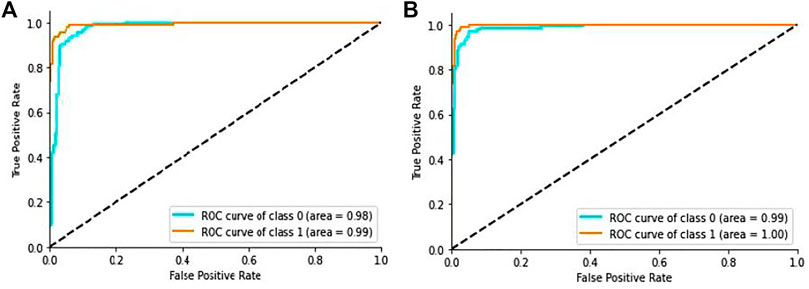

In addition, the ROC curve, which plots the true positive rate against the false positive rate, was generated for an overall evaluation, as shown in Figure 7A. The area under the ROC curve (AUC) was 99.9% for the proposed DL method.
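For readers unfamiliar with how an ROC curve and its AUC are obtained, the following sketch builds the curve by sweeping a decision threshold over predicted scores and integrates it with the trapezoidal rule. The labels and scores are hypothetical, and the helper names are illustrative; the original study may well have used a library routine instead.

```python
def roc_curve_points(labels, scores):
    """Return (FPR, TPR) points obtained by sweeping the decision threshold."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        # Everything scored at or above the threshold is predicted positive.
        tp = sum(1 for y, s in zip(labels, scores) if s >= thr and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= thr and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical labels (1 = COVID-19) and predicted COVID-19 probabilities.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.95, 0.85, 0.70, 0.60, 0.40, 0.20]
area = auc(roc_curve_points(labels, scores))
```

Here one normal case is scored above one COVID-19 case, so 8 of the 9 positive-negative pairs are ranked correctly and the AUC is 8/9; a classifier that ranks every positive above every negative reaches an AUC of 1.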

FIGURE 7. ROC curve of the proposed model: (A) ROC curve on CT scan images, (B) ROC curve on X-ray images.

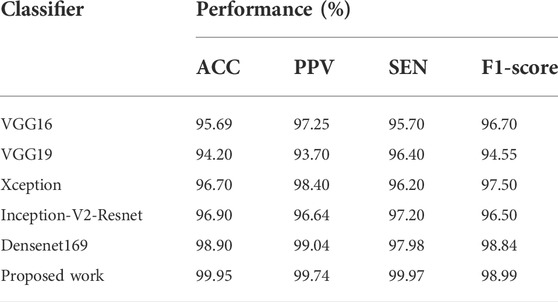

In this study, our proposed model was compared to four different DL models: VGG16, VGG19, Xception, and Inception-ResNet-V2. We trained all architectures with a batch size of 32 for 25 epochs on the same dataset ARIA (2021). The outcomes are compared using the assessment measures indicated before.

The performances of the various models are shown in Table 3, where the proposed DenseNet169 model outperforms the other architectures in terms of ACC, PPV, SEN, and F1-score. Furthermore, DenseNet169 has the best SEN of 100%, which is beneficial in medical diagnostic aid because this metric is vital for decision support in COVID-19 diagnosis. High sensitivity is, in fact, synonymous with a low false negative rate. False negatives are people infected with COVID-19 but diagnosed as COVID-19 negative by the model. Such an error can result in the patient's death. As demonstrated in Table 3, our model also performs well on all the other metrics.

TABLE 3. Experimental results obtained with proposed model and different DL models on CT scan images.

Results for X-Ray images

In Figure 5B, we show the confusion matrix generated on the X-ray image data Siddhartha and Santra (2020) to assess the performance of the proposed model.

The confusion matrix indicates that our proposed DL model performed very well in precisely classifying COVID-19 patients.

We further visualized the accuracy and loss curves for training and validation in Figure 6 to show the performance of the presented CNN model. These curves (green and purple) suggest that the proposed model performed well without overfitting or underfitting. For the X-ray dataset, we also set the number of epochs to 25. After 25 epochs, the training and validation accuracies were 99.96 and 99.83%, respectively.

In Table 4, we summarize the results obtained on the dataset Siddhartha and Santra (2020). Here, we also considered the evaluation metrics ACC, SEN, PPV, and F1-score for each class. On average, the proposed model achieved 99.59% ACC, 99.50% SEN, 100% F1-score, and 99.50% PPV on X-ray images.

TABLE 4. Experimental results obtained by the proposed model for COVID-19 and normal cases on X-rays.

Additionally, we generated the ROC curve, which plots the true positive rate against the false positive rate, to assess overall performance, as shown in Figure 7B. We obtained an area under the ROC curve (AUC) of 99.2% for the proposed architecture.

Keeping the parameters the same, we also trained VGG16, VGG19, Xception, and Inception-ResNet-V2 on the X-ray dataset Siddhartha and Santra (2020) to compare their outcomes with those of our model.

Table 5 presents the comparative performance of these models and our model in terms of ACC, PPV, SEN, and F1-score. As demonstrated in Table 5, the proposed model also performed well on all the evaluation metrics, with an accuracy of 99.56% on chest X-ray images.

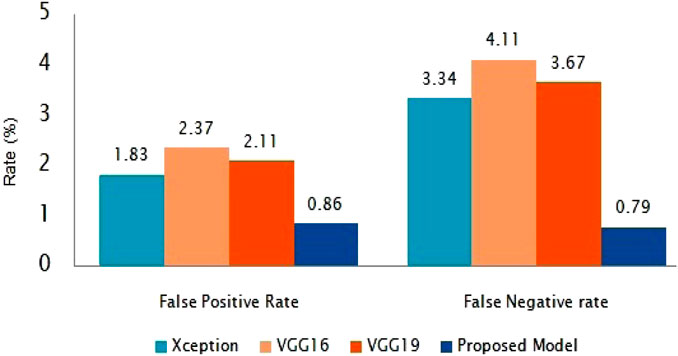

The classification performance of the proposed model was further examined using bar diagrams of the average false positive rate (FPR) and false negative rate (FNR), as shown in Figure 8. Lower FPR and FNR values indicate better classifier performance. Our method achieves the smallest FPR and FNR, 0.85 and 0.79%, respectively, as shown in Figure 8.
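The two error rates can be computed from the same confusion-matrix counts as the other metrics. The sketch below uses hypothetical counts (not results from this study) and also shows the identity FNR = 1 − sensitivity, which is why a high sensitivity implies a low false negative rate.

```python
def error_rates(tp, fp, tn, fn):
    """Return (FPR, FNR) from confusion-matrix counts."""
    fpr = fp / (fp + tn)  # share of normal cases flagged as COVID-19
    fnr = fn / (fn + tp)  # share of COVID-19 cases that were missed
    return fpr, fnr


# Hypothetical counts for illustration only.
tp, fp, tn, fn = 95, 2, 98, 5
fpr, fnr = error_rates(tp, fp, tn, fn)
sensitivity = tp / (tp + fn)
```

In a screening setting the FNR is usually the more critical of the two, because a missed COVID-19 case goes unisolated.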

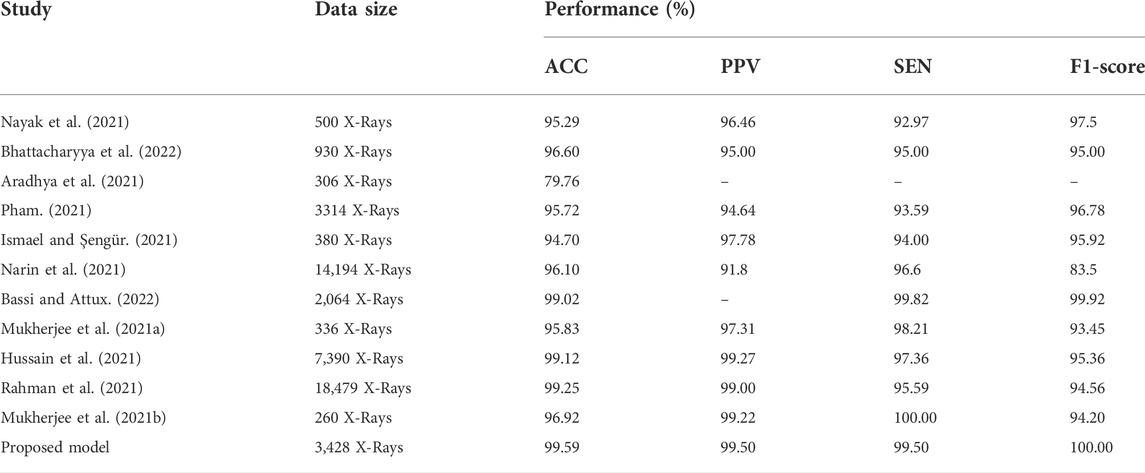

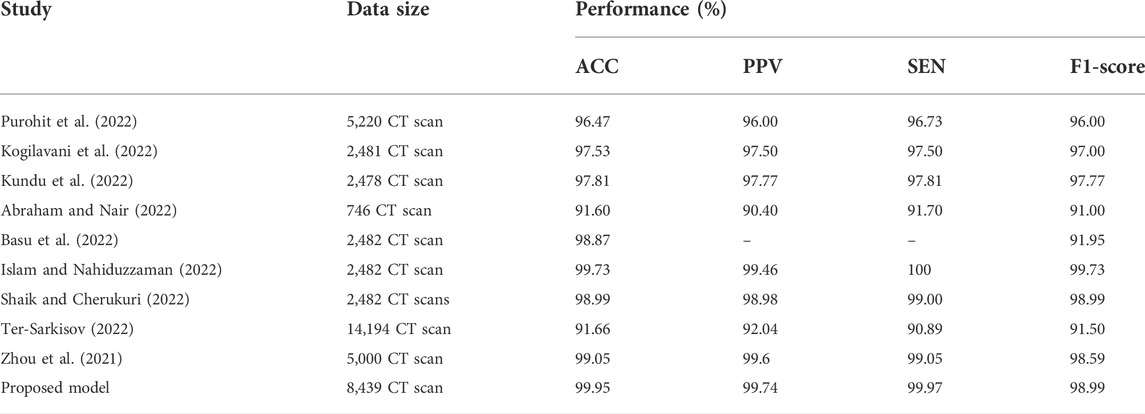

3.3 Comparison with existing methods

As shown in Tables 6 and 7, we compared our model with other state-of-the-art DL techniques for recognizing COVID-19 on chest X-ray (CXR) images and chest CT scan images. For the classification of COVID-19 and other individuals' images in our dataset, all of the algorithms in Tables 6 and 7 were re-implemented. To identify COVID-19, Bhattacharyya et al. (2022) employed VGG19 with BRISK to extract semantic features and used RF as the classifier in the output layer, reporting an average accuracy, sensitivity, precision, and F1-score of 96.6, 95.0, 95.0, and 95.0%, respectively. Hussain et al. (2021) employed a transfer learning technique with MobileNet-v2 as the base model and obtained an average classification accuracy, positive predictive value, and sensitivity of 99.12, 99.27, and 97.36%, respectively. Applying a transfer learning technique based on the ResNet-34 model, Nayak et al. (2021) obtained an accuracy, sensitivity, and precision of 95.29, 92.97, and 96.46%, respectively. Similarly, Pham (2021) reported an average classification accuracy, sensitivity, and F1-score of 95.72, 93.59, and 96.78% for a tuned AlexNet model. Rahman et al. (2021) proposed a novel DL model and obtained 99.65% accuracy, 100% precision, 95.59% recall, and a 94.56% F1-score. Table 6 shows that our proposed technique outperforms the recently developed COVID-19 identification techniques on chest X-rays.

Besides X-rays, CT scan images are also a valuable resource for COVID-19 detection. Using CT scans, Ter-Sarkisov (2022) designed the COVID-CT-Mask-Net model and obtained 90.80% sensitivity for COVID-19 cases, 91.62% sensitivity for pneumonia cases, a mean accuracy of 91.66%, and an F1-score of 91.50%. In another work, Purohit et al. (2022) used 5,220 CT scans, of which 2,760 were from COVID-19 patients, to differentiate COVID-19 from normal cases. Their model achieved 96.47% ACC, 96% PPV, and a 96% F1-score. Kogilavani et al. (2022), Kundu et al. (2022), Abraham and Nair (2022), Basu et al. (2022), Islam and Nahiduzzaman (2022), and Shaik and Cherukuri (2022) used the same dataset containing 2,482 CT scans, of which 1,252 were from COVID-19 patients. All of them achieved around 98–98.99%, which is good. From Table 7, we find that Zhou et al. (2021) obtained 99.05% ACC, 99.05% SEN, and a 98.59% F1-score, which are very close to those of our proposed model. From the results summarized in Tables 6 and 7, we find that our proposed model can differentiate COVID-19 and normal patients efficiently. Thus, the proposed model is comparable with recently published works and can be used for quick, preliminary identification of COVID-19 patients.

TABLE 6. Performance comparison of the proposed COVID-19 identification model with other works on X-rays.

TABLE 7. Performance comparison of the proposed COVID-19 identification model with other works on CT scans.

4 Conclusion and future direction

This work uses benchmarking approaches and alternative models to classify COVID-19 X-ray and CT scan images. As a COVID-19 test, this approach can save RT-PCR screening kits (and cost) and takes less time than the RT-PCR test. Our study uses a customized deep network based on DenseNet169 to support the fast diagnosis of COVID-19 patients using a precise transfer learning approach. As a result, the accuracy for identifying COVID-19 and normal cases is 99.95%. Given the severity and aggressive spread of COVID-19, we also evaluated the proposed method using the F1-score, sensitivity (SEN), and precision (PPV), achieving a 98.99% F1-score, 99.97% SEN, and 99.74% PPV, respectively, for CT scan images. In addition, it is worth mentioning that our method has the lowest FPR and FNR among the compared state-of-the-art algorithms, at 0.86 and 0.79%, respectively. We also used chest X-ray images to train and test our model and found that it performed well, with a detection accuracy of 99.59%. The proposed model is highly accurate and can quickly detect COVID-19 patients so that they can be isolated promptly, thereby helping to stop the spread of infection. However, we did not use specific parameters for the lung images of chain smokers or drug-affected patients. In the future, we will give special attention to classifying patients with other lung diseases.

Data availability statement

Publicly available datasets were used for method development and analysis in this study. These data can be found here: CT scans, https://www.kaggle.com/datasets/mehradaria/covid19-lung-ct-scans (2021); X-rays, https://data.mendeley.com/datasets/rscbjbr9sj/3 (2020).

Author contributions

Conceived and designed the experiments: PG, MA, and MB. Execution of the experiments: PG, and KM. Data analysis: PG, MT, MB, and LG. Manuscript writing: PG, MA, MB, LG, and MA. Final draft and revision are made by LG, SM, and ZZ. All authors have read and agreed to the final version of the manuscript.

Funding

ZZ was partially supported by the Cancer Prevention and Research Institute of Texas (CPRIT RP180734 and RP210045). The funders did not participate in the study design, data analysis, decision to publish, or preparation of the manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abraham, B., and Nair, M. S. (2022). Computer-aided detection of Covid-19 from ct scans using an ensemble of cnns and ksvm classifier. Signal Image Video process. 16, 587–594. doi:10.1007/s11760-021-01991-6

Ahuja, S., Panigrahi, B. K., Dey, N., Rajinikanth, V., and Gandhi, T. K. (2021). Deep transfer learning-based automated detection of Covid-19 from lung ct scan slices. Appl. Intell. 51, 571–585. doi:10.1007/s10489-020-01826-w

Alshazly, H., Linse, C., Barth, E., and Martinetz, T. (2021). Explainable Covid-19 detection using chest ct scans and deep learning. Sensors 21, 455. doi:10.3390/s21020455

Apostolopoulos, I. D., and Mpesiana, T. A. (2020). Covid-19: Automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 43, 635–640. doi:10.1007/s13246-020-00865-4

Aradhya, V., Mahmud, M., Guru, D., Agarwal, B., and Kaiser, M. S. (2021). One-shot cluster-based approach for the detection of Covid–19 from chest x–ray images. Cogn. Comput. 13, 873–881. doi:10.1007/s12559-020-09774-w

Aria, M. (2021). Covid-19 lung ct scans: A large dataset of lung ct scans for covid-19 (sars-cov-2) detection. (kaggle)Availbale at: https://www.kaggle.com/datasets/mehradaria/covid19-lung-ct-scans.

Aslan, M. F., Unlersen, M. F., Sabanci, K., and Durdu, A. (2021). Cnn-based transfer learning–bilstm network: A novel approach for Covid-19 infection detection. Appl. Soft Comput. 98, 106912. doi:10.1016/j.asoc.2020.106912

Bassi, P. R., and Attux, R. (2022). A deep convolutional neural network for Covid-19 detection using chest x-rays. Res. Biomed. Eng. 38, 139–148. doi:10.1007/s42600-021-00132-9

Basu, A., Sheikh, K. H., Cuevas, E., and Sarkar, R. (2022). Covid-19 detection from ct scans using a two-stage framework. Expert Syst. Appl. 193, 116377. doi:10.1016/j.eswa.2021.116377

Bhattacharyya, A., Bhaik, D., Kumar, S., Thakur, P., Sharma, R., and Pachori, R. B. (2022). A deep learning based approach for automatic detection of Covid-19 cases using chest x-ray images. Biomed. Signal Process. Control 71, 103182. doi:10.1016/j.bspc.2021.103182

Biswas Milon, M. M., and Kawsher, M. (2022). “An enhanced deep convolution neural network model to diagnose alzheimer’s disease using brain magnetic resonance imaging,” in RTIP2R 2021. Communications in Computer and Information Science (Cham: Springer), 42.

Biswas, M., Tania, M. H., Kaiser, M. S., Kabir, R., Mahmud, M., and Kemal, A. A. (2021). Accu3rate: A mobile health application rating scale based on user reviews. PloS one 16, e0258050. doi:10.1371/journal.pone.0258050

Chung, M., Bernheim, A., Mei, X., Zhang, N., Huang, M., Zeng, X., et al. (2020). Ct imaging features of 2019 novel coronavirus (2019-ncov). Radiology 295, 202–207. doi:10.1148/radiol.2020200230

Gaur, L., Bhandari, M., Razdan, T., Mallik, S., and Zhao, Z. (2022). Explanation-driven deep learning model for prediction of brain tumour status using mri image data. Front. Genet. 448, 822666. doi:10.3389/fgene.2022.822666

Gaur, L., Bhatia, U., Jhanjhi, N., Muhammad, G., and Masud, M. (2021). Medical image-based detection of Covid-19 using deep convolution neural networks. Multimed. Syst., 1–10. doi:10.1007/s00530-021-00794-6

Ghose, P., Acharjee, U. K., Islam, M. A., Sharmin, S., and Uddin, M. A. (2021). “Deep viewing for Covid-19 detection from x-ray using cnn based architecture,” in 2021 8th International Conference on Electrical Engineering, Indonesia, October 20–21, 2021. (Computer Science and Informatics (EECSI) IEEE), 283.

Greenspan, H., Van Ginneken, B., and Summers, R. M. (2016). Guest editorial deep learning in medical imaging: Overview and future promise of an exciting new technique. IEEE Trans. Med. Imaging 35, 1153–1159. doi:10.1109/tmi.2016.2553401

Horry, M. J., Chakraborty, S., Paul, M., Ulhaq, A., Pradhan, B., Saha, M., et al. (2020). Covid-19 detection through transfer learning using multimodal imaging data. IEEE Access. 8, 149808–149824. doi:10.1109/ACCESS.2020.3016780

Huang, C., Wang, Y., Li, X., Ren, L., Zhao, J., Hu, Y., et al. (2020). Clinical features of patients infected with 2019 novel coronavirus in wuhan, China. Lancet 395, 497–506. doi:10.1016/S0140-6736(20)30183-5

Huang, G., Liu, Z., Van Der Maaten, L., and Weinberger, K. Q. (2017). “Densely connected convolutional networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, Honolulu, Hawaii, July 21–26, 2017. 4700.

Hussain, E., Hasan, M., Rahman, M. A., Lee, I., Tamanna, T., and Parvez, M. Z. (2021). Corodet: A deep learning based classification for Covid-19 detection using chest x-ray images. Chaos Solit. Fractals 142, 110495. doi:10.1016/j.chaos.2020.110495

Islam, M. R., and Nahiduzzaman, M. (2022). Complex features extraction with deep learning model for the detection of covid19 from ct scan images using ensemble based machine learning approach. Expert Syst. Appl. 195, 116554. doi:10.1016/j.eswa.2022.116554

Ismael, A. M., and Şengür, A. (2021). Deep learning approaches for Covid-19 detection based on chest x-ray images. Expert Syst. Appl. 164, 114054. doi:10.1016/j.eswa.2020.114054

Kogilavani, S., Prabhu, J., Sandhiya, R., Kumar, M. S., Subramaniam, U., Karthick, A., et al. (2022). Covid-19 detection based on lung ct scan using deep learning techniques. Comput. Math. Methods Med. 2022, 7672196. doi:10.1155/2022/7672196

Kundu, R., Singh, P. K., Ferrara, M., Ahmadian, A., and Sarkar, R. (2022). Et-net: An ensemble of transfer learning models for prediction of Covid-19 infection through chest ct-scan images. Multimed. Tools Appl. 81, 31–50. doi:10.1007/s11042-021-11319-8

Lahsaini, I., Daho, M. E. H., and Chikh, M. A. (2021). Deep transfer learning based classification model for Covid-19 using chest ct-scans. Pattern Recognit. Lett. 152, 122–128. doi:10.1016/j.patrec.2021.08.035

Loey, M., Manogaran, G., and Khalifa, N. E. M. (2020). A deep transfer learning model with classical data augmentation and cgan to detect Covid-19 from chest ct radiography digital images. Neural comput. Appl., 1–13. doi:10.1007/s00521-020-05437-x

Luján-García, J. E., Yáñez-Márquez, C., Villuendas-Rey, Y., and Camacho-Nieto, O. (2020). A transfer learning method for pneumonia classification and visualization. Appl. Sci. 10, 2908. doi:10.3390/app10082908

Maghded, H. S., Ghafoor, K. Z., Sadiq, A. S., Curran, K., Rawat, D. B., and Rabie, K. (2020). “A novel ai-enabled framework to diagnose coronavirus Covid-19 using smartphone embedded sensors: Design study,” in 2020 IEEE 21st International Conference on Information Reuse and Integration for Data Science (IRI), August 11–13, 2020. (IEEE).

Mahbub, M., Biswas, M., Miah, A. M., Shahabaz, A., Kaiser, M. S., et al. (2021). “Covid-19 detection using chest x-ray images with a regnet structured deep learning model,” in International Conference on Applied Intelligence and Informatics, Cham, July 30–31, 2021 (Springer), 358.

Mahbub, M. K., Biswas, M., Gaur, L., Alenezi, F., and Santosh, K. (2022). Deep features to detect pulmonary abnormalities in chest x-rays due to infectious diseasex: Covid-19, pneumonia, and tuberculosis. Inf. Sci. 592, 389–401. doi:10.1016/j.ins.2022.01.062

Mukherjee, H., Ghosh, S., Dhar, A., Obaidullah, S. M., Santosh, K., and Roy, K. (2021a). Deep neural network to detect Covid-19: One architecture for both ct scans and chest x-rays. Appl. Intell. 51, 2777–2789. doi:10.1007/s10489-020-01943-6

Mukherjee, H., Ghosh, S., Dhar, A., Obaidullah, S. M., Santosh, K., and Roy, K. (2021b). Shallow convolutional neural network for covid-19 outbreak screening using chest x-rays, Cognitive Computation.

Narin, A., Kaya, C., and Pamuk, Z. (2021). Automatic detection of coronavirus disease (Covid-19) using x-ray images and deep convolutional neural networks. Pattern Anal. Appl. 24, 1207–1220. doi:10.1007/s10044-021-00984-y

Nayak, S. R., Nayak, D. R., Sinha, U., Arora, V., and Pachori, R. B. (2021). Application of deep learning techniques for detection of Covid-19 cases using chest x-ray images: A comprehensive study. Biomed. Signal Process. Control 64, 102365. doi:10.1016/j.bspc.2020.102365

Organization, W. H. (2020). Archived: Who timeline - covid-19. Available at: https://www.who.int/news/item/27-04-2020-who-timeline—covid-19.

Organization, W. H. (2020). Weekly operational update on Covid-19. Obtenido deAvailable at: https://www.who.int/publications/m/item/weekly-update-on-covid-19—23-october.

Panwar, H., Gupta, P., Siddiqui, M. K., Morales-Menendez, R., Bhardwaj, P., and Singh, V. (2020a). A deep learning and grad-cam based color visualization approach for fast detection of Covid-19 cases using chest x-ray and ct-scan images. Chaos Solit. Fractals 140, 110190. doi:10.1016/j.chaos.2020.110190

Panwar, H., Gupta, P., Siddiqui, M. K., Morales-Menendez, R., and Singh, V. (2020b). Application of deep learning for fast detection of Covid-19 in x-rays using ncovnet. Chaos Solit. Fractals 138, 109944. doi:10.1016/j.chaos.2020.109944

Pham, T. D. (2021). Classification of Covid-19 chest x-rays with deep learning: New models or fine tuning? Health Inf. Sci. Syst. 9, 2–11. doi:10.1007/s13755-020-00135-3

Purohit, K., Kesarwani, A., Ranjan Kisku, D., and Dalui, M. (2022). “Covid-19 detection on chest x-ray and ct scan images using multi-image augmented deep learning model,” in Proceedings of the Seventh International Conference on Mathematics and Computing, Singapore, March 6, 2022, (Springer), 395.

Rahman, T., Khandakar, A., Qiblawey, Y., Tahir, A., Kiranyaz, S., Kashem, S. B. A., et al. (2021). Exploring the effect of image enhancement techniques on Covid-19 detection using chest x-ray images. Comput. Biol. Med. 132, 104319. doi:10.1016/j.compbiomed.2021.104319

Shah, V., Keniya, R., Shridharani, A., Punjabi, M., Shah, J., and Mehendale, N. (2021). Diagnosis of Covid-19 using ct scan images and deep learning techniques. Emerg. Radiol. 28, 497–505. doi:10.1007/s10140-020-01886-y

Shaik, N. S., and Cherukuri, T. K. (2022). Transfer learning based novel ensemble classifier for Covid-19 detection from chest ct-scans. Comput. Biol. Med. 141, 105127. doi:10.1016/j.compbiomed.2021.105127

Shorten, C., and Khoshgoftaar, T. M. (2019). A survey on image data augmentation for deep learning. J. Big Data 6, 60–48. doi:10.1186/s40537-019-0197-0

Siddhartha, M., and Santra, A. (2020). Covidlite: A depth-wise separable deep neural network with white balance and clahe for detection of covid-19. arXiv preprint arXiv:2006.13873.

Ter-Sarkisov, A. (2022). Covid-ct-mask-net: Prediction of Covid-19 from ct scans using regional features. Appl. Intell. 1–12, 9664–9675. doi:10.1007/s10489-021-02731-6

Wang, W., Xu, Y., Gao, R., Lu, R., Han, K., Wu, G., et al. (2020). Detection of sars-cov-2 in different types of clinical specimens. Jama 323, 1843–1844. doi:10.1001/jama.2020.3786

Keywords: COVID-19, x-ray, CT scan, deep learning, transfer learning, classification

Citation: Ghose P, Alavi M, Tabassum M, Ashraf Uddin M, Biswas M, Mahbub K, Gaur L, Mallik S and Zhao Z (2022) Detecting COVID-19 infection status from chest X-ray and CT scan via single transfer learning-driven approach. Front. Genet. 13:980338. doi: 10.3389/fgene.2022.980338

Received: 28 June 2022; Accepted: 18 August 2022; Published: 21 September 2022.

Edited by:

Quan Zou, University of Electronic Science and Technology of China, China

Reviewed by:

Bin Liu, Beijing Institute of Technology, China

Balachandran Manavalan, Sungkyunkwan University, South Korea

Copyright © 2022 Ghose, Alavi, Tabassum, Ashraf Uddin, Biswas, Mahbub, Gaur, Mallik and Zhao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhongming Zhao, Zhongming.Zhao@uth.tmc.edu
