Technological Approach to Mind Everywhere: An Experimentally-Grounded Framework for Understanding Diverse Bodies and Minds

- ¹Allen Discovery Center at Tufts University, Medford, MA, United States

- ²Wyss Institute for Biologically Inspired Engineering at Harvard University, Cambridge, MA, United States

Synthetic biology and bioengineering provide the opportunity to create novel embodied cognitive systems (otherwise known as minds) in a very wide variety of chimeric architectures combining evolved and designed material and software. These advances are disrupting familiar concepts in the philosophy of mind, and require new ways of thinking about and comparing truly diverse intelligences, whose composition and origin are not like any of the available natural model species. In this Perspective, I introduce TAME (Technological Approach to Mind Everywhere), a framework for understanding and manipulating cognition in unconventional substrates. TAME formalizes a non-binary (continuous), empirically-based approach to strongly embodied agency. TAME provides a natural way to think about animal sentience as an instance of collective intelligence of cell groups, arising from dynamics that manifest in similar ways in numerous other substrates. When applied to regenerating/developmental systems, TAME suggests a perspective on morphogenesis as an example of basal cognition. The deep symmetry between problem-solving in anatomical, physiological, transcriptional, and 3D (traditional behavioral) spaces drives specific hypotheses by which cognitive capacities can increase during evolution. An important medium exploited by evolution for joining active subunits into greater agents is developmental bioelectricity, implemented by pre-neural use of ion channels and gap junctions to scale up cell-level feedback loops into anatomical homeostasis. This architecture of multi-scale competency of biological systems has important implications for plasticity of bodies and minds, greatly potentiating evolvability. Considering classical and recent data from the perspectives of computational science, evolutionary biology, and basal cognition reveals a rich research program with many implications for cognitive science, evolutionary biology, regenerative medicine, and artificial intelligence.

Introduction

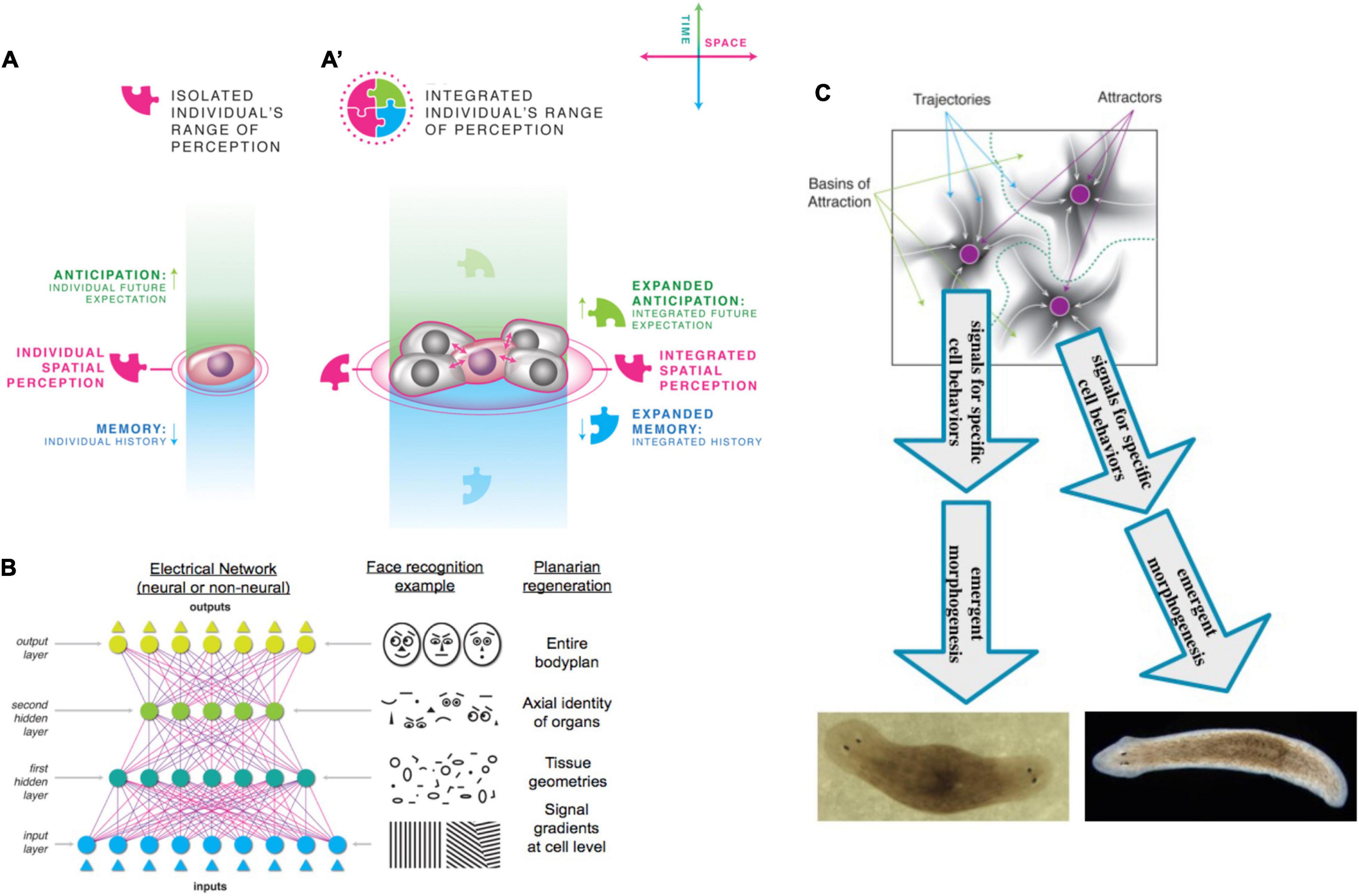

All known cognitive agents are collective intelligences, because we are all made of parts; biological agents in particular are not just structurally modular, but made of parts that are themselves agents in important ways. There is no truly monadic, indivisible yet cognitive being: all known minds reside in physical systems composed of components of various complexity and active behavior. However, as human adults, our primary experience is that of a centralized, coherent Self which controls events in a top-down manner. That is also how we formulate models of learning (“the rat learned X”), moral responsibility, decision-making, and valence: at the center is a subject which has agency, serves as the locus of rewards and punishments, possesses (as a single functional unit) memories, exhibits preferences, and takes actions. And yet, under the hood, we find collections of cells which follow low-level rules via distributed, parallel functionality and give rise to emergent system-level dynamics. Much as single-celled organisms transitioned to multicellularity during evolution, the single cells of an embryo construct de novo, and then operate, a unified Self during a single agent’s lifetime. The compound agent supports memories, goals, and cognition that belong to that Self and not to any of the parts alone. Thus, one of the most profound and far-reaching questions is that of scaling and unification: how do the activities of competent, lower-level agents give rise to a multiscale holobiont that is truly more than the sum of its parts? And, given the myriad ways that parts can be assembled and relate to each other, is it possible to define ways in which truly diverse intelligences can be recognized, compared, and understood?

Here, I develop a framework to drive new theory and experiment in biology, cognition, evolution, and biotechnology from a multi-scale perspective on the nature and scaling of the cognitive Self. An important part of this research program is the need to encompass beings beyond the familiar conventional, evolved, static model animals with brains. The gaps in existing frameworks, and thus opportunities for fundamental advances, are revealed by a focus on plasticity of existing forms, and the functional diversity enabled by chimeric bioengineering. To illustrate how this framework can be applied to unconventional substrates, I explore a deep symmetry between behavior and morphogenesis, deriving hypotheses for dynamics that up- and down-scale Selves within developmental and phylogenetic timeframes, and at the same time strongly impact the speed of the evolutionary process itself (Dukas, 1998). I attempt to show how anatomical homeostasis can be viewed as the result of the behavior of the swarm intelligence of cells, and how it provides a rich example of the way an inclusive, forward-looking technological framework can connect philosophical questions with specific empirical research programs.

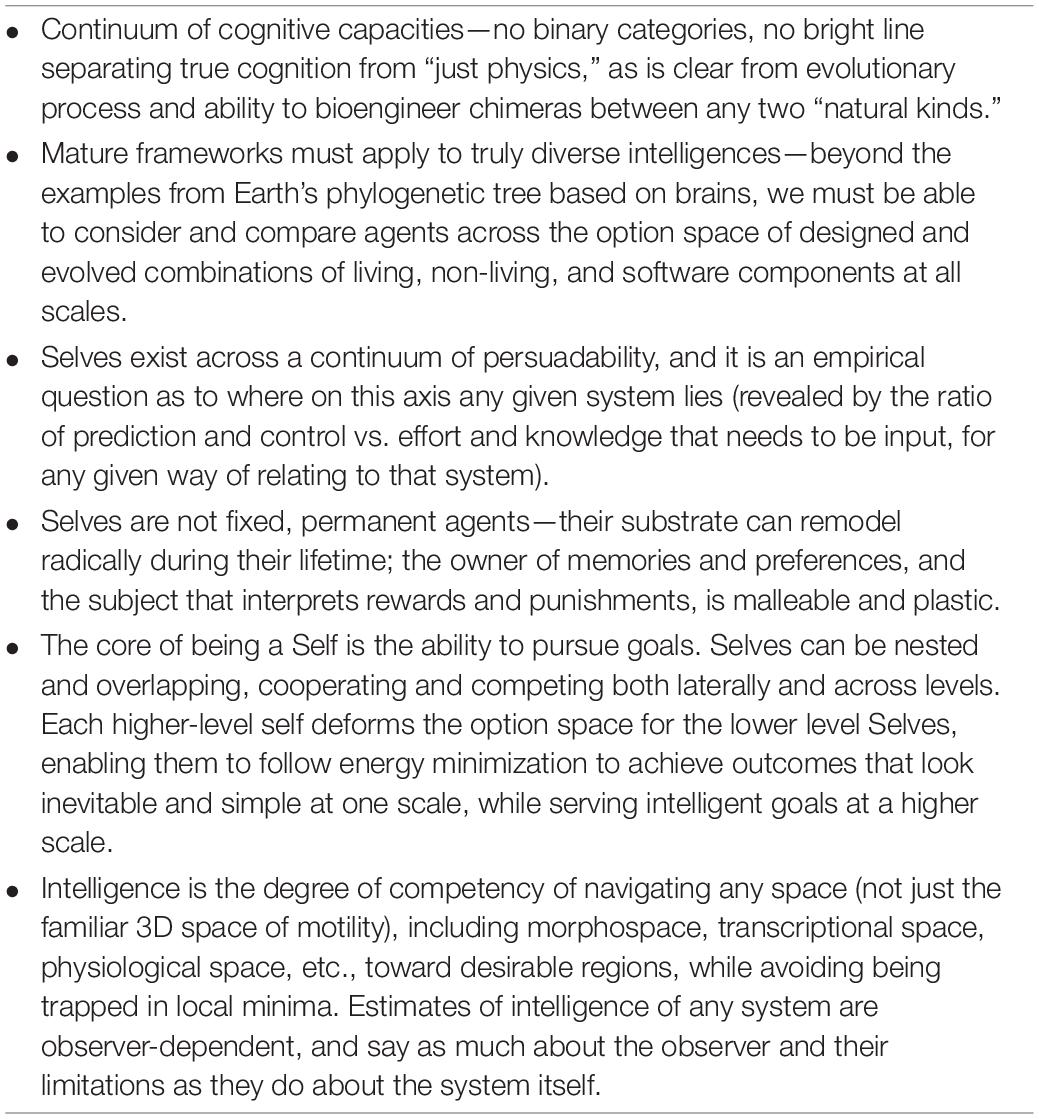

The philosophical context for the following perspective is summarized in Table 1 (see also Glossary), and links tightly to the field of basal cognition (Birch et al., 2020) via a fundamentally gradualist approach. It should be noted that the specific proposals for biological mechanisms that scale functional capacity are synergistic with, but not linearly dependent on, this conceptual basis. The hypotheses about how bioelectric networks scale cell computation into anatomical homeostasis, and the evolutionary dynamics of multi-scale competency, can be explored without accepting the “minds everywhere” commitments of the framework. However, together they form a coherent lens onto the life sciences which helps generate testable new hypotheses and integrate data from several subfields.

For the purposes of this paper, “cognition” refers not only to complex, self-reflexive advanced cognition or metacognition, but is used in the less conservative sense that recognizes many diverse capacities for learning from experience (Ginsburg and Jablonka, 2021), adaptive responsiveness, self-direction, decision-making in light of preferences, problem-solving, active probing of the environment, and action at different levels of sophistication in conventional (evolved) life forms as well as bioengineered ones (Rosenblueth et al., 1943; Lyon, 2006; Bayne et al., 2019; Levin et al., 2021; Lyon et al., 2021; Figure 1). For our purposes, cognition refers to the functional computations that take place between perception and action, which allow the agent to span a wider range of time (via memory and predictive capacity, however much it may have) than its immediate now, and which enable it to generalize and infer patterns from instances of stimuli: precursors to more advanced forms of recombining concepts, language, and logic.

Figure 1. Diverse, multiscale intelligence. (A) Biology is organized in a multi-scale, nested architecture of molecular pathways. (B) These are not merely structural, but also computational: each level of this holarchy contains subsystems which exhibit some degree of problem-solving (i.e., intelligent) activity, on a continuum such as the one proposed by Rosenblueth et al. (1943). (C) At each layer of a given biosystem, novel components can be introduced of either biological or engineered origin, resulting in chimeric forms that have novel bodies and novel cognitive systems distinct from the typical model species on the Earth’s phylogenetic lineage. Images in panels (A,C) by Jeremy Guay of Peregrine Creative. Image in panel (B) was created after Rosenblueth et al. (1943).

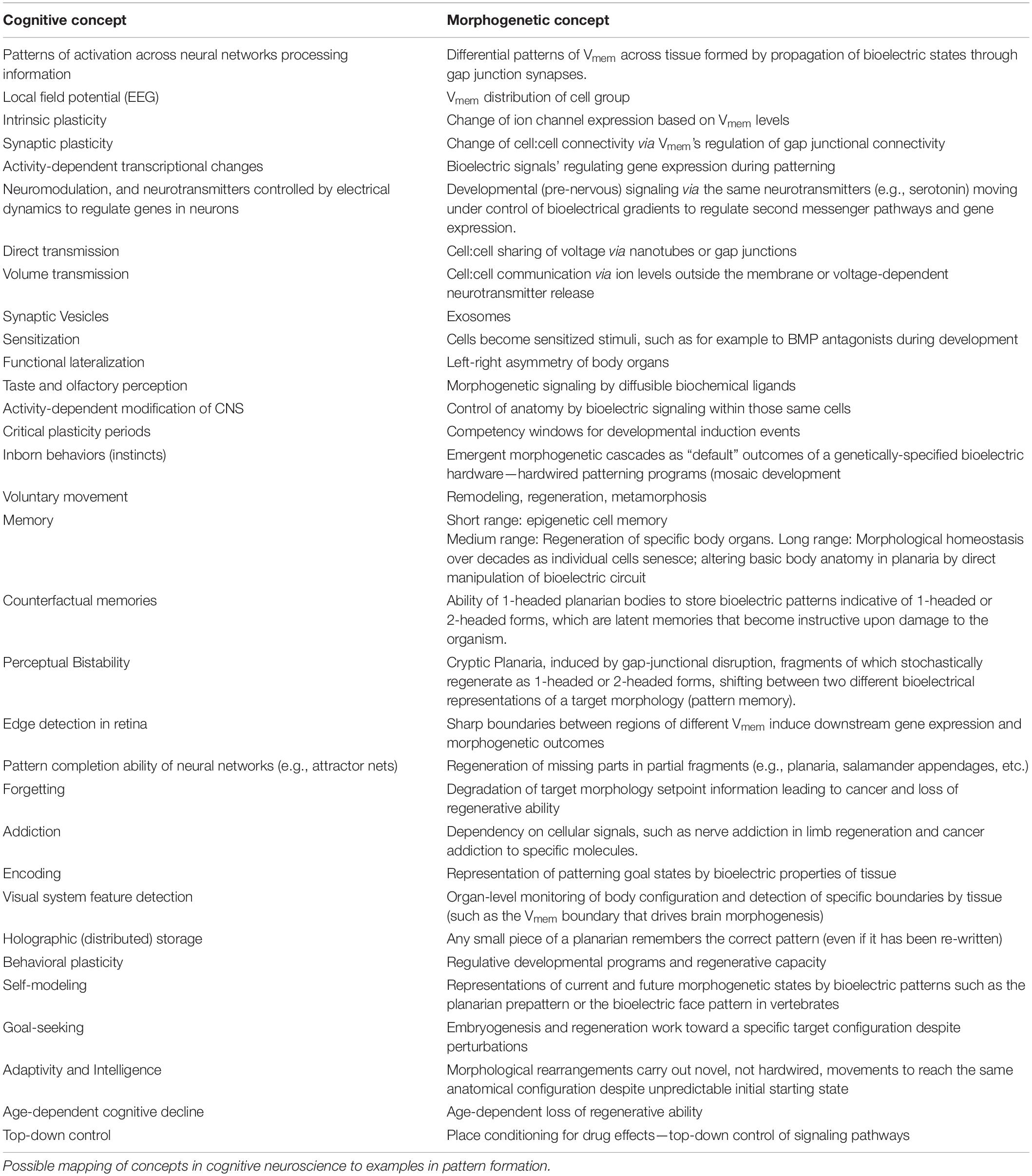

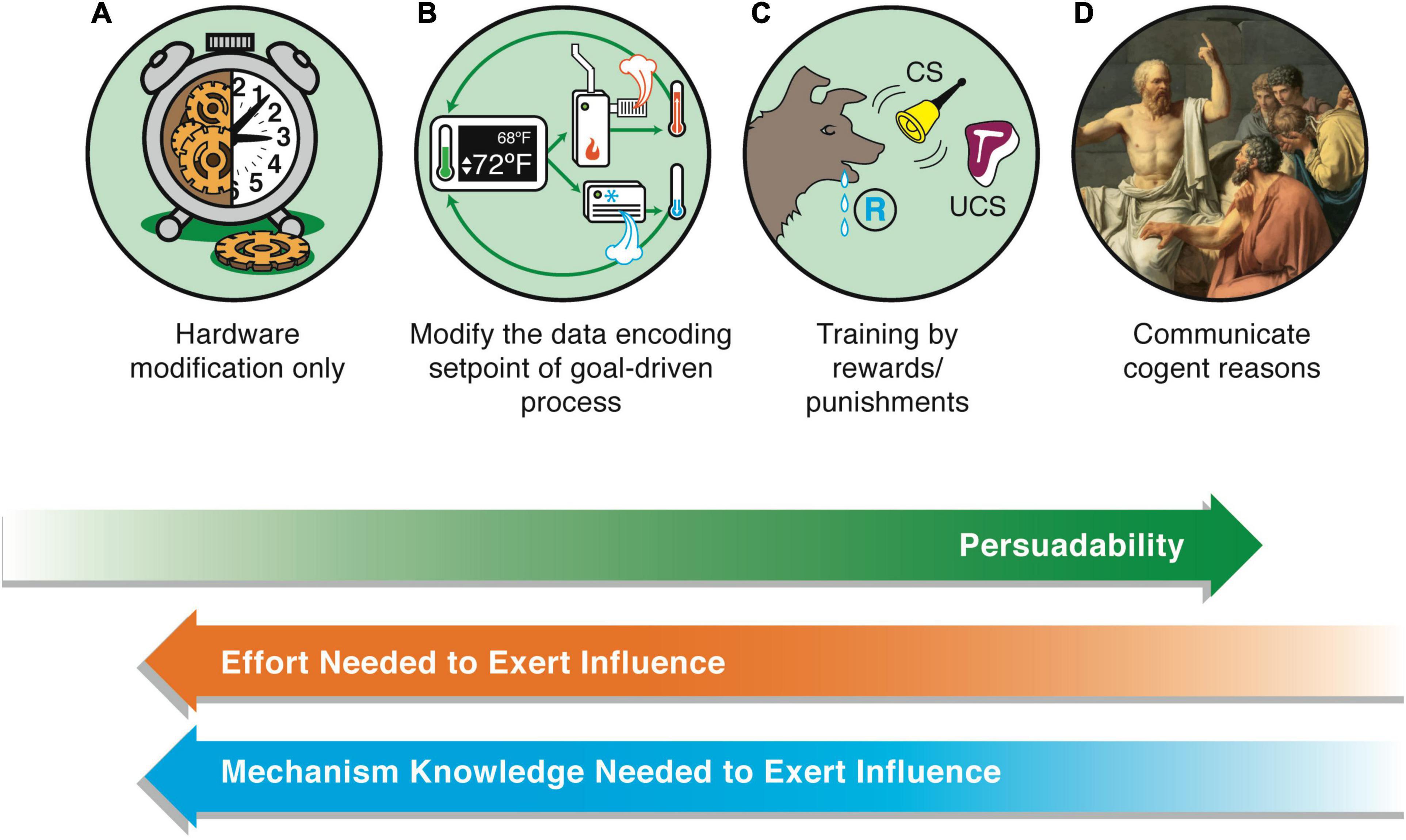

The framework, TAME—Technological Approach to Mind Everywhere—adopts a practical, constructive engineering perspective on the optimal place for a given system on the continuum of cognitive sophistication. This gives rise to an axis of persuadability (Figure 2), which is closely related to the Intentional Stance (Dennett, 1987) but made more explicit in terms of functional engineering approaches needed to implement prediction and control in practice. Persuadability refers to the type of conceptual and practical tools that are optimal to rationally modify a given system’s behavior. The origin story (designed vs. evolved), composition, and other aspects are not definitive guides to the correct level of agency for a living or non-living system. Instead, one must perform experiments to see which kind of intervention strategy provides the most efficient prediction and control (thus, one aim should be generalizing the human-focused Turing Test and other IQ metrics into a broader agency detection toolkit, which perhaps could itself be implemented by a useful algorithm).

Figure 2. The axis of persuadability. A proposed way to visualize a continuum of agency, which frames the problem in a way that is testable and drives empirical progress, is via an “axis of persuadability”: to what level of control (ranging from brute force micromanagement to persuasion by rational argument) is any given system amenable, given the sophistication of its cognitive apparatus? Here are shown only a few representative waypoints. On the far left are the simplest physical systems, e.g., mechanical clocks (A). These cannot be persuaded, argued with, or even rewarded/punished—only physical hardware-level “rewiring” is possible if one wants to change their behavior. On the far right (D) are human beings (and perhaps others to be discovered) whose behavior can be radically changed by a communication that encodes a rational argument that changes the motivation, planning, values, and commitment of the agent receiving this. Between these extremes lies a rich panoply of intermediate agents, such as simple homeostatic circuits (B) which have setpoints encoding goal states, and more complex systems such as animals which can be controlled by signals, stimuli, training, etc., (C). They can have some degree of plasticity, memory (change of future behavior caused by past events), various types of simple or complex learning, anticipation/prediction, etc. Modern “machines” are increasingly occupying right-ward positions on this continuum (Bongard and Levin, 2021). Some may have preferences, which avails the experimenter of the technique of rewards and punishments—a more sophisticated control method than rewiring, but not as sophisticated as persuasion (the latter requires the system to be a logical agent, able to comprehend and be moved by arguments, not merely triggered by signals). 
Examples of transitions include turning the sensors of state outward, to include others’ stress as part of one’s action policies, and eventually the meta-goal of committing to enhance one’s agency, intelligence, or compassion (increase the scope of goals one can pursue). A more negative example is becoming sophisticated enough to be susceptible to a “thought that breaks the thinker” (e.g., existential or skeptical arguments that can make one depressed or even suicidal, Gödel paradoxes, etc.)—massive changes can be made in those systems by a very low-energy signal because it is treated as information in the context of a complex host computational machinery. These agents exhibit a degree of multi-scale plasticity that enables informational input to make strong changes in the structure of the cognitive system itself. The positive flip side of this vulnerability is that it avails those kinds of minds with a long term version of free will: the ability through practice and repeated effort to change their own thinking patterns, responses to stimuli, and functional cognition. This continuum is not meant to be a linear scala naturae that aligns with any kind of “direction” of evolutionary progress—evolution is free to move in any direction in this option space of cognitive capacity; instead, this scheme provides a way to formalize (for a pragmatic, engineering approach) the major transitions in cognitive capacity that can be exploited for increased insight and control. The goal of the scientist is to find the optimal position for a given system. Too far to the right, and one ends up attributing hopes and dreams to thermostats or simple AIs in a way that does not advance prediction and control. Too far to the left, and one loses the benefits of top-down control in favor of intractable micromanagement. 
Note also that this forms a continuum with respect to how much knowledge one has to have about the system’s details in order to manipulate its function: for systems in class A, one has to know a lot about their workings to modify them. For class B, one has to know how to read-write the setpoint information, but does not need to know anything about how the system will implement those goals. For class C, one doesn’t have to know how the system modifies its goal encodings in light of experience, because the system does all of this on its own—one only has to provide rewards and punishments. Images by Jeremy Guay of Peregrine Creative.
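The distinction between class A and class B systems can be made concrete with a toy model. The following is a minimal sketch, not a model of any specific biological circuit: the `Homeostat` class, its proportional `gain`, and the numeric values are all illustrative assumptions. It shows that, for a setpoint-driven system, behavior can be redirected by rewriting the stored goal alone, without touching the mechanism that pursues it.

```python
from dataclasses import dataclass


@dataclass
class Homeostat:
    """Hypothetical class-B system: a feedback loop that pursues a setpoint."""
    setpoint: float      # the encoded goal state
    state: float         # the current value of the regulated variable
    gain: float = 0.5    # assumed fraction of the error corrected per step

    def step(self) -> float:
        # Reduce the gap between the current state and the goal.
        error = self.setpoint - self.state
        self.state += self.gain * error
        return self.state

    def run(self, steps: int) -> float:
        for _ in range(steps):
            self.step()
        return self.state


# The system settles at its goal without external micromanagement.
h = Homeostat(setpoint=37.0, state=20.0)
settled_first = h.run(50)

# Class-B control: rewrite only the setpoint, leave the mechanism untouched.
h.setpoint = 30.0
settled_second = h.run(50)
```

In this sketch the experimenter never needs to know how `step` works in order to move the system to a new stable state. A class A system, by contrast, would have no `setpoint` to rewrite, and changing its behavior would require editing the update rule itself.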

Our capacity to find new ways to understand and manipulate complex systems is strongly related to how we categorize agency in our world. Newton did not invent two terms, gravity (for terrestrial objects falling) and perhaps shmavity (for the moon), because doing so would have forfeited the much more powerful unification. TAME proposes a conceptual unification that would facilitate porting of tools across disciplines and model systems. We should avoid quotes around mental terms because there is no absolute, binary distinction between it knows and it “knows”; there is only a difference in the degree to which a model that incorporates such components will be useful.

Given this perspective, below I develop hypotheses about invariants that unify otherwise disparate-seeming problems, such as morphogenesis, behavior, and physiological allostasis. I take goals (in the cybernetic sense) and stressors (as a system-level result of distance from one’s goals) as key invariants which allow us to study and compare agents in truly diverse embodiments. The processes which scale goals and stressors form a positive feedback loop with modularity, thus both arising from, and potentiating the power of, evolution. These hypotheses suggest a specific way to understand the scaling of cognitive capacity through evolution, make interesting predictions, and suggest novel experimental work. They also provide ways to think about the impending expansion of the “space of possible bodies and minds” via the efforts of bioengineers, which is sure to disrupt categories and conclusions that have been formed in the context of today’s natural biosphere.
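One way to read the proposal that stress is a system-level result of distance from one's goals is as a distance function over whatever state space the agent operates in. The sketch below is only an illustration under stated assumptions: the Euclidean metric, the function names `stress` and `least_stressed`, and the toy coordinates are hypothetical and are not specified by the framework.

```python
import math
from typing import Iterable, Sequence, Tuple

State = Tuple[float, ...]


def stress(state: Sequence[float], goal: Sequence[float]) -> float:
    """Hypothetical stress measure: Euclidean distance from the goal state."""
    if len(state) != len(goal):
        raise ValueError("state and goal must live in the same space")
    return math.dist(state, goal)


def least_stressed(candidates: Iterable[State], goal: State) -> State:
    """Pick the reachable state that minimizes distance to the goal."""
    return min(candidates, key=lambda s: stress(s, goal))


# Toy two-dimensional "anatomical" space with the target form at the origin.
goal_anatomy = (0.0, 0.0)
current = (3.0, 4.0)
current_stress = stress(current, goal_anatomy)

# A goal-directed agent prefers the neighboring state with the lowest stress.
next_state = least_stressed([(3.0, 3.0), (2.0, 4.0), (4.0, 4.0)], goal_anatomy)
```

The same two functions apply unchanged if the coordinates are taken to be physiological or transcriptional variables rather than anatomical ones, which is the sense in which goals and stressors can serve as invariants across different embodiments.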

What of consciousness? It is likely impossible to understand sentience without understanding cognition, and the emphasis of this paper is on testable, empirical impacts of ways to understand cognition in all of its guises. By enabling the definition, detection, and comparison of cognition and intelligence, in diverse substrates beyond standard animals, we can enhance the range of embodiments in which sentience may result. In order to move the field forward via empirical progress, the focus of most of the discussion below is on ways to think about cognitive function, not on phenomenal or access consciousness [in the sense of the “Hard Problem” (Chalmers, 2013)]. However, I return to this issue at the end, discussing TAME’s view of sentience as fundamentally tied to goal-directed activity, only some aspects of which can be studied via third person approaches.

The main goal is to help advance and delineate an exciting emerging field at the intersection of biology, philosophy, and the information sciences. By proposing a new framework and examining it in a broad context of now physically realizable (not merely logically possible) living structures, it may be possible to bring conceptual, philosophical thought up to date with recent advances in science and technology. At stake are current knowledge gaps in evolutionary, developmental, and cell biology, a new roadmap for regenerative medicine, lessons that could be ported to artificial intelligence and robotics, and broader implications for ethics.

Cognition: Changing the Subject

Even advanced animals are really collective intelligences (Couzin, 2007, 2009; Valentini et al., 2018), exploiting still poorly-understood scaling and binding features of metazoan architectures that share a continuum with looser swarms that have been termed “liquid brains” (Sole et al., 2019). Studies of “centralized control” focus on a brain, which is in effect a network of cells performing functions that many cell types, including bacteria, can do (Koshland, 1983). The embodied nature of cognition means that the minds of Selves are dependent on a highly plastic material substrate which changes not only on evolutionary time scales but also during the lifetime of the agent itself.

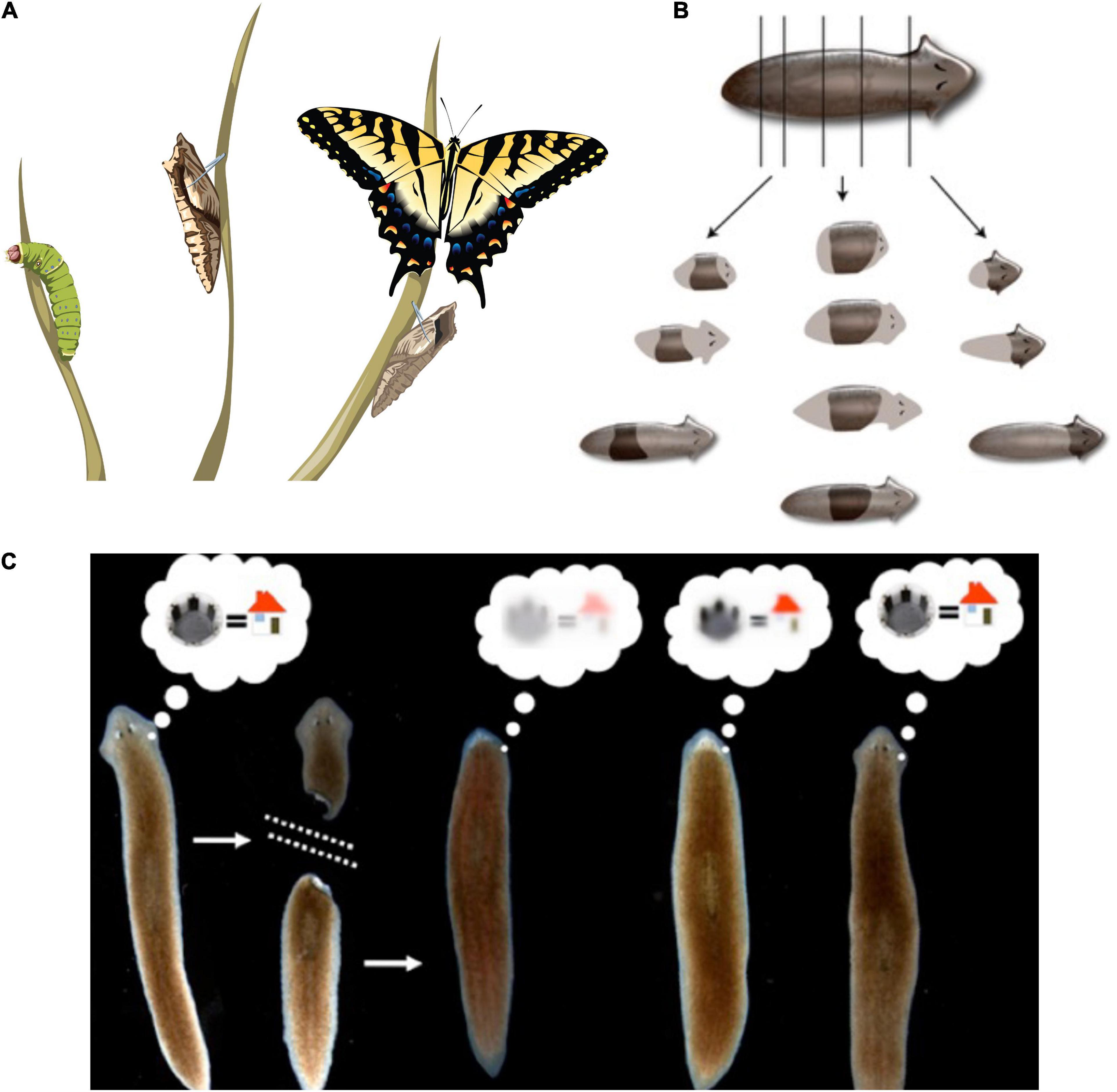

The central consequence of the composite nature of all intelligences is that the Self is subject to significant change in real-time (Figure 3). This means both slow maturation through experience (a kind of “software” change that doesn’t disrupt traditional ways of thinking about agency), as well as radical changes of the material in which a given mind is implemented (Levin, 2020). The owner, or subject of memories, preferences, and in more advanced cases, credit and blame, is very malleable. At the same time, fascinating mechanisms somehow ensure the persistence of Self (such as complex memories) despite drastic alterations of substrate. For example, the massive remodeling of the caterpillar brain, followed by the morphogenesis of an entirely different brain suitable for the moth or beetle, does not wipe all the memories of the larva but somehow maps them onto behavioral capacities in the post-metamorphosis host, despite its entirely different body (Alloway, 1972; Tully et al., 1994; Sheiman and Tiras, 1996; Armstrong et al., 1998; Ray, 1999; Blackiston et al., 2008). Not only that, but memories can apparently persist following the complete regeneration of brains in some organisms (McConnell et al., 1959; Corning, 1966; Shomrat and Levin, 2013) such as planaria, in which prior knowledge and behavioral tendencies are somehow transferred onto a newly-constructed brain. Even in vertebrates, such as fish (Versteeg et al., 2021) and mammals (von der Ohe et al., 2006), brain size and structure can change repeatedly during their lifespan. This is crucial to understanding agency and intelligence at multiple scales and in unfamiliar embodiments because observations like this begin to break down the notion of Selves as monadic, immutable objects with a privileged scale. 
Becoming comfortable with biological cognitive agents that are malleable in terms of form and function (change radically during the lifetime of an individual) makes it easier to understand the origins and changes of cognition during evolution or as the result of bioengineering effort.

Figure 3. Cognitive Selves can change in real-time. (A) Caterpillars metamorphose into butterflies, going through a process in which their body, brain, and cognitive systems are drastically remodeled during the lifetime of a single agent. Importantly, memories remain and persist through this process (Blackiston et al., 2015). (B) Planaria cut into pieces regenerate, with each piece re-growing and remodeling precisely what is needed to form an entire animal. (C) Planarians derived from tail fragments of trained worms still retain original information, illustrating the ability of memories to move across tissues and be reimprinted on newly-developing brains (Corning, 1966, 1967; Shomrat and Levin, 2013). Images by Jeremy Guay of Peregrine Creative.

This little-studied intersection between regeneration/remodeling and cognition highlights the fascinating plasticity of the body, brain, and mind; traditional model systems in which cognition is mapped onto a stable, discrete, mature brain are insufficient to fully understand the relationship between the Self and its material substrate. Many scientists study the behavioral properties of caterpillars, and of butterflies, but the transition zone in-between, from the perspective of philosophy of mind and cognitive science, provides an important opportunity to study the mind-body relationship by changing the body during the lifetime of the agent (not just during evolution). Note that continuity of being across drastic biological remodeling is not only relevant for unusual cases in the animal kingdom, but is a fundamental property of most life: even humans change from a collection of cells to a functional individual, via a gradual morphogenetic process that constructs an active Self in real time. This continuity has received little attention in biology, and likewise in computer science, where machine learning approaches largely use neural networks whose architecture is fixed during operation (there is no widely adopted formalism for altering an artificial neural network’s architecture on the fly).

What are the invariants that enable a Self to persist (and be recognizable by third-person investigations) despite such change? Memory is a good candidate (Shoemaker, 1959; Ameriks, 1976; Figure 3). However, at least certain kinds of memories can be transferred between individuals, by transplants of brain tissue or molecular engrams (Pietsch and Schneider, 1969; McConnell and Shelby, 1970; Bisping et al., 1971; Chen et al., 2014; Bedecarrats et al., 2018; Abraham et al., 2019). Importantly, the movement of memories across individual animals is only a special case of the movement of memory in biological tissue in general. Even when housed in the same “body,” memories must move between tissues—for example, in a trained planarian’s tail fragment re-imprinting its learned information onto the newly regenerated brain, or the movement of memories onto new brain tissue during metamorphosis. In addition to the spatial movement and re-mapping of memories onto new substrates, there is also a temporal component, as each memory is really an instance of communication between past and future Selves. The plasticity of biological bodies, made of cells that die, are born, and significantly rearrange their tissue architecture, suggests that the understanding of cognition is fundamentally a problem of collective intelligence: to understand how stable cognitive structures can persist and map onto swarm dynamics, with preferences and stressors that scale from those of their components.

This is applicable even to such a “stable” form as the human brain, which is often spoken of as a single Subject of experience and thought. First, the gulf between planarian regeneration/insect metamorphosis and human brains is going to be bridged by emerging therapeutics. It is inevitable that stem cell therapies for degenerative brain diseases (Forraz et al., 2013; Rosser and Svendsen, 2014; Tanna and Sachan, 2014) will confront us with humans whose brains are partially replaced by the naïve progeny of cells that were not present during the formation of memories and personality traits in the patient. Even prior to these advances, it was clear that phenomena such as dissociative identity disorder (Miller and Triggiano, 1992), communication with non-verbal brain hemispheres in commissurotomy patients (Nagel, 1971; Montgomery, 2003), conjoined twins with fused brains (Gazzaniga, 1970; Barilan, 2003), etc., place human cognition onto a continuous spectrum with respect to the plasticity of integrated Selves that reside within a particular biological tissue implementation.

Importantly, animal model systems are now providing the ability to harness that plasticity for functional investigations of the body-mind relationship. For example, it is now easy to radically modify bodies in a time-scale that is much faster than evolutionary change, to study the inherent plasticity of minds without eons of selection to shape them to fit specific body architectures. When tadpoles are created to have eyes on their tails, instead of their heads, they are still readily able to perform visual learning tasks (Blackiston and Levin, 2013; Blackiston et al., 2017). Planaria can readily be made with two (or more) brains in the same body (Morgan, 1904; Oviedo et al., 2010), and human patients are now routinely augmented with novel inputs [such as sensory substitution (Bach-y-Rita et al., 1969; Bach-y-Rita, 1981; Danilov and Tyler, 2005; Ptito et al., 2005)] or novel effectors, such as instrumentized interfaces allowing thought to control engineered devices such as wheelchairs in addition to the default muscle-driven peripherals of their own bodies (Green and Kalaska, 2011; Chamola et al., 2020; Belwafi et al., 2021). The central phenomenon here is plasticity: minds are not tightly bound to one specific underlying architecture (as most of our software is today), but readily mold to changes of genomic defaults. The logical extension of this progress is a focus on self-modifying living beings and the creation of new agents in which the mind:body system is simplified by entirely replacing one side of the equation with an engineered construct. The benefit would be that at least one half of the system is now well-understood.

For example, in hybrots, animal brains are functionally connected to robotics instead of their normal body (Reger et al., 2000; Potter et al., 2003; Tsuda et al., 2009; Ando and Kanzaki, 2020). It doesn’t even have to be an entire brain—a plate of neurons can learn to fly a flight simulator, and it lives in a new virtual world (DeMarse and Dockendorf, 2005; Manicka and Harvey, 2008; Beer, 2014), as seen from the development of closed-loop neurobiological platforms (Demarse et al., 2001; Potter et al., 2005; Bakkum et al., 2007b; Chao et al., 2008; Rolston et al., 2009a,b). These kinds of results are reminiscent of Philosophy 101’s “brain in a vat” experiment (Harman, 1973). Brains adjust to driving robots and other devices as easily as they adjust to controlling a typical, or highly altered, living body because minds are somehow adapted and prepared to deal with body alterations—throughout development, metamorphosis and regeneration, and evolutionary change.

The massive plasticity of bodies, brains, and minds means that a mature cognitive science cannot just concern itself with understanding standard “model animals” as they exist right now. The typical “subject,” such as a rat or fruit fly, which remains constant during the course of one’s studies and is conveniently abstracted as a singular Self or intelligence, obscures the bigger picture. The future of this field must expand to frameworks that can handle all of the possible minds across an immense option space of bodies. Advances in bioengineering and artificial intelligence suggest that we or our descendants will be living in a world in which Darwin’s “endless forms most beautiful” (this Earth’s N = 1 ecosystem outputs) are just a tiny sample of the true variety of possible beings. Biobots, hybrots, cyborgs, synthetic and chimeric animals, genetically and cellularly bioengineered living forms, humans instrumentized to knowledge platforms, devices, and each other—these technologies are going to generate beings whose body architectures are nothing like our familiar phylogeny. They will be a functional mix of evolved and designed components; at all levels, smart materials, software-level systems, and living tissue will be integrated into novel beings which function in their own exotic Umwelt. Importantly, the information that is used to specify such beings’ form and function is no longer only genetic—it is truly “epigenetic” because it comes not only from the creature’s own genome but also from human and non-human agents’ minds (and eventually, robotic machine-learning-driven platforms) that use cell-level bioengineering to generate novel bodies from genetically wild-type cells. In these cases, the genetics are no guide to the outcome (which highlights some of the profound reasons that genetics is hard to use to truly predict cognitive form and function even in traditional living species).

Now is the time to begin to develop ways of thinking about truly novel bodies and minds, because the technology is advancing more rapidly than philosophical progress. Many of the standard philosophical puzzles concerning brain hemisphere transplants, moving memories, replacing body/brain parts, etc. are now eminently doable in practice, while the theory of how to interpret the results lags. We now have the opportunity to begin to develop conceptual approaches to (1) understand beings without convenient evolutionary back-stories as explanations for their cognitive capacities (whose minds are created de novo, and not shaped by long selection pressures toward specific capabilities), and (2) develop ways to analyze novel Selves that are not amenable to simple comparisons with related beings, not informed by their phylogenetic position relative to known standard species, and not predictable from an analysis of their genetics. The implications range across insights into evolutionary developmental biology, advancing bioengineering and artificial life research, new roadmaps for regenerative medicine, ability to recognize exobiological life, and the development of ethics for relating to novel beings whose composition offers no familiar phylogenetic touchstone. Thus, here I propose the beginnings of a framework designed to drive empirical research and conceptual/philosophical analysis that will be broadly applicable to minds regardless of their origin story or internal architecture.

Technological Approach to Mind Everywhere: A Proposal for a Framework

The Technological Approach to Mind Everywhere (TAME) framework seeks to establish a way to recognize, study, and compare truly diverse intelligences in the space of possible agents. The goal of this project is to identify deep invariants between cognitive systems of very different types of agents, and abstract away from inessential features such as composition or origin, which were sufficient heuristics with which to recognize agency in prior decades but will surely be insufficient in the future (Bongard and Levin, 2021). To flesh out this approach, I first make explicit some of its philosophical foundations, and then discuss specific conceptual tools that have been developed to begin the task of understanding embodied cognition in the space of mind-as-it-can-be (a sister concept to Langton’s motto for the artificial life community—“life as it can be”) (Langton, 1995).

Philosophical Foundations of an Approach to Diverse Intelligences

One key pillar of this research program is the commitment to gradualism with respect to almost all important cognition-related properties: advanced minds are in important ways generated in a continuous manner from much more humble proto-cognitive systems. On this view, it is hopeless to look for a clear bright line that demarcates “true” cognition (such as that of humans, great apes, etc.) from metaphorical “as if cognition” or “just physics.” Taking evolutionary biology seriously means that there is a continuous series of forms that connect any cognitive system with much more humble ones. While phylogenetic history already refutes views of a magical arrival of “true cognition” in one generation, from parents that didn’t have it (instead stretching the process of cognitive expansion over long time scales and slow modification), recent advances in biotechnology make such views completely implausible. For any putative difference between a creature that is proposed to have true preferences, memories, and plans and one that supposedly has none, we can now construct in-between, hybrid forms which then make it impossible to say whether the resulting being is an Agent or not. Many pseudo-problems evaporate when a binary view of cognition is dissolved by an appreciation of the plasticity and interoperability of living material at all scales of organization. A definitive discussion of the engineering of preferences and goal-directedness, in terms of hierarchy requirements and upper-directedness, is given in McShea (2013, 2016).

For example, one view is that only biological, evolved forms have intrinsic motivation, while software AI agents are only faking it via functional performance [but don’t actually care (Oudeyer and Kaplan, 2007, 2013; Lyon and Kuchling, 2021)]. But which biological systems really care—fish? Single cells? Do mitochondria (which used to be independent organisms) have true preferences about their own or their host cells’ physiological states? The lack of consensus on this question in classical (natural) biological systems, and the absence of convincing criteria that can be used to sort all possible agents to one or the other side of a sharp line, highlight the futility of truly binary categories. Moreover, we can now readily construct hybrid systems that consist of any percentage of robotics tightly coupled to on-board living cells and tissues, which function together as one integrated being. How many living cells does a robot need to contain before the living system’s “true” cognition bleeds over into the whole? On the continuum between human brains (with electrodes and a machine learning converter chip) that drive assistive devices (e.g., 95% human, 5% robotics), and robots with on-board cultured human brain cells instrumentized to assist with performance (5% human, 95% robotics), where can one draw the line—given that any desired percent combination is possible to make? No quantitative answer is sufficient to push a system “over the line” because there is no such line (at least, no convincing line has been proposed). Interesting aspects of agency or cognition are rarely if ever Boolean values.

Instead of a binary dichotomy, which leads to impassable philosophical roadblocks, we envision a continuum of advancement and diversity in information-processing capacity. Progressively more complex capabilities [such as unlimited associative learning, counterfactual modeling, symbol manipulation, etc. (Ginsburg and Jablonka, 2021)] ramp up, but are nevertheless part of a continuous process that is not devoid of proto-cognitive capacity before complex brains appear. Specifically, while major differences in cognitive function of course exist among diverse intelligences, transitions between them have not been shown to be binary or rapid relative to the timescale of individual agents. There is no plausible reason to think that evolution produces parents that don’t have “true cognition” but give rise to offspring that suddenly do, or that development starts with an embryo that has no “true preferences” and sharply transitions into an animal that does, etc. Moreover, bioengineering and chimerization can produce a smooth series of transitional forms between any two forms that are proposed to have, or not have, any cognitive property. Thus, agents gradually shift (during their lifetime, as a result of development, metamorphosis, or interactions with other agents, or during evolutionary timescales) between great transitions in cognitive capacity, expressing and experiencing intermediate states of cognitive capacity that must be recognized by empirical approaches to study them.

A focus on the plasticity of the embodiments of mind strongly suggests this kind of gradualist view, which has been expounded in the context of evolutionary forces controlling individuality (Godfrey-Smith, 2009; Queller and Strassmann, 2009). Here the additional focus is on events taking place within the lifetime of individuals and driven by information and control dynamics. The TAME framework pushes experimenters to ask “how much” and “what kind of” cognition any given system might manifest if we interacted with it in the right way, at the right scale of observation. And of course, the degree of cognition is not a single parameter that gives rise to a scala naturae but a shorthand for the shape and size of its cognitive capacities in a rich space (discussed below).

The second pillar of TAME is that there is no privileged material substrate for Selves. Alongside familiar materials such as brains made of neurons, the field of basal cognition (Nicolis et al., 2011; Reid et al., 2012, 2013; Beekman and Latty, 2015; Baluška and Levin, 2016; Boussard et al., 2019; Dexter et al., 2019; Gershman et al., 2021; Levin et al., 2021; Lyon et al., 2021) has been identifying novel kinds of intelligences in single cells, plants, animal tissues, and swarms. The fields of active matter, intelligent materials, swarm robotics, machine learning, and someday, exobiology, suggest that we cannot rely on a familiar signature of “big vertebrate brain” as a necessary condition for mind. Molecular phylogeny shows that the specific components of brains pre-date the evolution of neurons per se, and life has been solving problems long before brains came onto the scene (Buznikov et al., 2005; Levin et al., 2006; Jekely et al., 2015; Liebeskind et al., 2015; Moran et al., 2015). Powerful unification and generalization of concepts from cognitive science and other fields can be achieved if we develop tools to characterize and relate to a wide diversity of minds in unconventional material implementations (Damasio, 2010; Damasio and Carvalho, 2013; Cook et al., 2014; Ford, 2017; Man and Damasio, 2019; Baluska et al., 2021; Reber and Baluska, 2021).

Closely related to that is the de-throning of natural evolution as the only acceptable origin story for a true Agent [many have proposed a distinction between evolved living forms vs. the somehow inadequate machines which were merely designed by man (Bongard and Levin, 2021)]. First, synthetic evolutionary processes are now being used in the lab to create “machines” and modify life (Kriegman et al., 2020a; Blackiston et al., 2021). Second, the whole process of evolution, basically a hill-climbing search algorithm, results in a set of frozen accidents and meandering selection among random tweaks to the micro-level hardware of cells, with impossible to predict large-scale consequences for the emergent system level structure and function. If this short-sighted process, constrained by many forces that have nothing to do with favoring complex cognition, can give rise to true minds, then so can a rational engineering approach. There is nothing magical about evolution (driven by randomizing processes) as a forge for cognition; surely we can eventually do at least as well, and likely much better, using rational construction principles and an even wider range of materials.

The third foundational aspect of TAME is that the correct answer to how much agency a system has cannot be settled by philosophy; it is an empirical question. The goal is to produce a framework that drives experimental research programs, not only philosophical debate about what should or should not be possible as a matter of definition. To this end, a productive way to think about this is a variant of Dennett’s Intentional Stance (Dennett, 1987; Mar et al., 2007), which frames properties such as cognition as observer-dependent, empirically testable, and defined by how much benefit their recognition offers to science (Figure 2). Thus, the correct level of agency with which to treat any system must be determined by experiments that reveal which kind of model and strategy provides the most efficient predictive and control capability over the system. In this engineering (understand, modify, build)-centered view, the optimal position of a system on the spectrum of agency is the one whose model affords the most efficient prediction and control. Such estimates are, by their empirical nature, subject to revision by future experimental data and conceptual frameworks, and are observer-dependent (not absolute).

A standard methodology in science is to avoid attributing agency to a given system unless absolutely necessary. The mainstream view (e.g., Morgan’s Canon) is that it’s too easy to fall into a trap of “anthropomorphizing” systems with only apparent cognitive powers, when one should only be looking for models focused on mechanistic, lower levels of description that eschew any kind of teleology or mental capacity (Morgan, 1903; Epstein, 1984). However, analysis shows that this view provides no useful parsimony (Cartmill, 2017). The rich history of debates on reductionism and mechanism needs to be complemented with an empirical, engineering approach that is not inappropriately slanted in one direction on this continuum. Teleophobia leads to Type 2 errors with respect to attribution of cognition that carry a huge opportunity cost for not only practical outcomes like regenerative medicine (Pezzulo and Levin, 2015) and engineering, but also ethics. Humans (and many other animals) readily attribute agency to systems in their environment; scientists should be comfortable with testing out a theory of mind regarding various complex systems for the exact same reason—it can often greatly enhance prediction and control, by recognizing the true features of the systems with which we interact. This perspective implies that there is no such thing as “anthropomorphizing” because human beings have no unique essential property which can be inappropriately attributed to agents that have none of it. Aside from the very rare trivial cases (misattributing human-level cognition to simpler systems), we must be careful to avoid the pervasive, implicit remnants of a human-centered pre-scientific worldview in which modern, standard humans are assumed to have some sort of irreducible quality that cannot be present in degrees in slightly (or greatly) different physical implementations (from early hominids to cyborgs etc.). 
Instead, we should seek ways to naturalize human capacities as elaborations of more fundamental principles that are widely present in complex systems, in very different types and degrees, and to identify the correct level for any given system. Of course, this is just one stance, emphasizing experimental, not philosophical, approaches that avoid defining impassable absolute differences that are not explainable by any known binary transition in body structure or function. Others can certainly drive empirical work focused specifically on what kind of human-level capacities do and do not exist in detectable quantity in other agents.

Avoiding philosophical wrangling over privileged levels of explanation (Ellis, 2008; Ellis et al., 2012; Noble, 2012), TAME takes an empirical approach to attributing agency, which increases the toolkit of ways to relate to complex systems, and also works to reduce profligate attributions of mental qualities. We do not say that a thermos knows whether to keep something hot or cold, because no model of thermos cognition does better than basic thermodynamics to explain its behavior or build better thermoses. At the same time, we know we cannot simply use Newton’s laws to predict the motion of a (living) mouse at the top of a hill, requiring us to construct models of navigation and goal-directed activity for the controller of the mouse’s behavior over time (Jennings, 1906).

Under-estimating the capacity of a system for plasticity, learning, having preferences, representation, and intelligent problem-solving greatly reduces the toolkit of techniques we can use to understand and control its behavior. Consider the task of getting a pigeon to correctly distinguish videos of dance vs. those of martial arts. If one approaches the system bottom-up, one has to implement ways to interface to individual neurons in the animal’s brain to read the visual input, distinguish the videos correctly, and then control other neurons to force the behavior of walking up to a button and pressing it. This may someday be possible, but not in our lifetimes. In contrast, one can simply train the pigeon (Qadri and Cook, 2017). Humanity has been training animals for millennia, without knowing anything about what is in their heads or how brains work. This highly efficient trick works because we correctly identified them as learning agents, which allows us to offload a lot of the computational complexity of any task onto the living system itself, without micromanaging its components.

What other systems might this remarkably powerful strategy apply to? For example, gene regulatory networks (GRNs) are a paradigmatic example of “genetic mechanism,” often assumed to be tractable only by hardware (requiring gene therapy approaches to alter promoter sequences that control network connectivity, or adding/removing gene nodes). However, being open to the possibility that GRNs might actually occupy a different place on this continuum suggests an experiment in which they are trained for new behaviors with specific combinations of stimuli (experiences). Indeed, recent analyses of biological GRN models reveal that they exhibit associative and several other kinds of learning capacity, as well as pattern completion and generalization (Watson et al., 2010, 2014; Szilagyi et al., 2020; Biswas et al., 2021). This is an example in which an empirical approach to the correct level of agency, even for simple systems not usually thought of as cognitive, suggests new hypotheses which in turn open a path to new practical applications (biomedical strategies using associative regimes of drug pulsing to exploit memory and address pharmacoresistance by abrogating habituation, etc.).

We next consider specific aspects of the framework, before diving into specific examples in which it drives novel empirical work.

Specific Conceptual Components of the Technological Approach to Mind Everywhere Framework

A useful framework in this emerging field should not only serve as a lens with which to view data and concepts (Manicka and Levin, 2019b), but also should drive research in several ways. It needs to first specify definitions for key terms such as a Self. These are not meant to be exclusively correct—the definitions can co-exist with others, but should identify a claim as to what is an essential invariant for Selves (and what other aspects can diverge), and how it intersects with experiment. The fundamental symmetry unifying all possible Selves should also facilitate direct comparison or even classification of truly diverse intelligences, sketching the markers of Selfhood and the topology of the option space within which possible agents exist. The framework should also help scientists derive testable claims about how borders of a given Self are determined, and how it interacts with the outside world (and other agents). Finally, the framework should provide actionable, semi-quantitative definitions that have strong implications and constrain theories about how Selves arise and change. All of this must facilitate experimental approaches to determine the empirical utility of this approach.

The TAME framework takes the following as the basic hallmarks of being a Self: the ability to pursue goals, to own compound (e.g., associative) memories, and to serve as the locus for credit assignment (be rewarded or punished), where all of these are at a scale larger than possible for any of its components alone. Given the gradualist nature of the framework, the key question for any agent is “how well,” “how much,” and “what kind” of capacity it has for each of those key aspects, which in turn allows agents to be directly compared in an option space. TAME emphasizes defining a higher scale at which the (possibly competent) activity of component parts gives rise to an emergent system. Like a valid mathematical theorem which has a unique structure and existence over and above any of its individual statements, a Self can own, for example, associative memories (that bind into new mental content experiences that occurred separately to its individual parts), be the subject of reward or punishment for complex states (as a consequence of highly diverse actions that its parts have taken), and be stressed by states of affairs (deviations from goals or setpoints) that are not definable at the level of any of its parts (which of course may have their own distinct types of stresses and goals). These are practical aspects that suggest ways to recognize, create, and modify Selves.

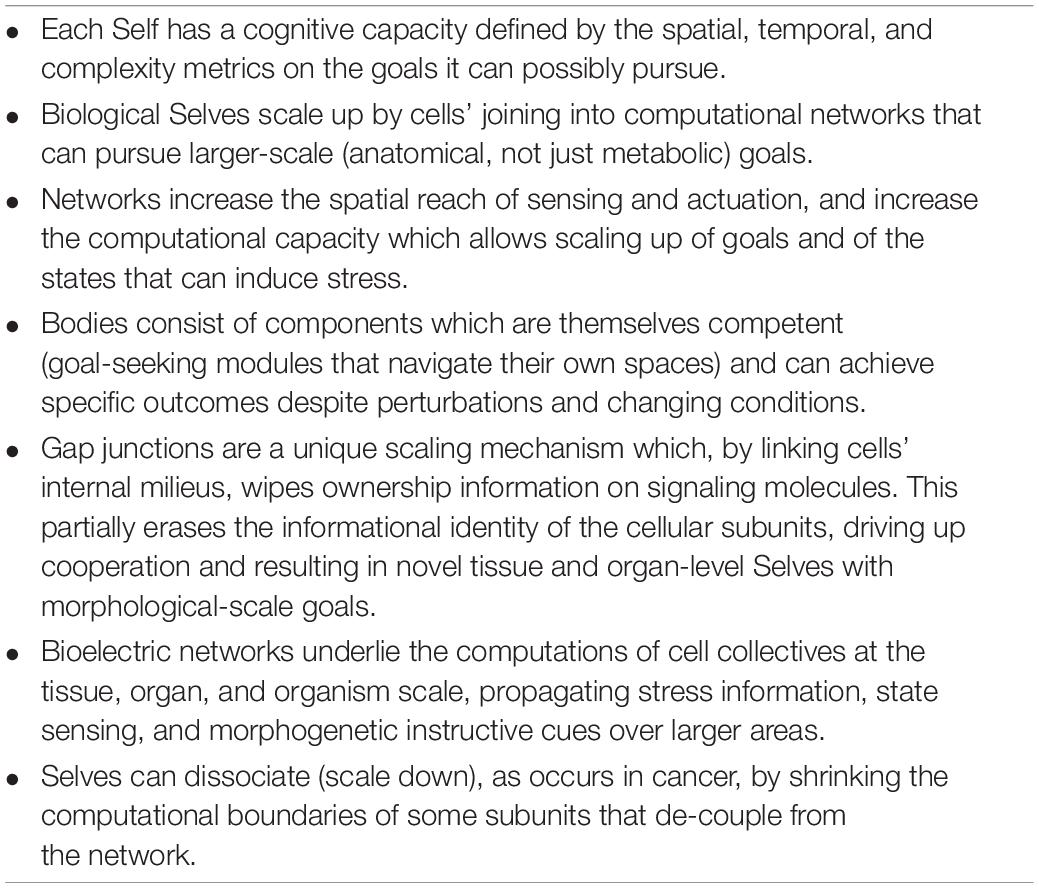

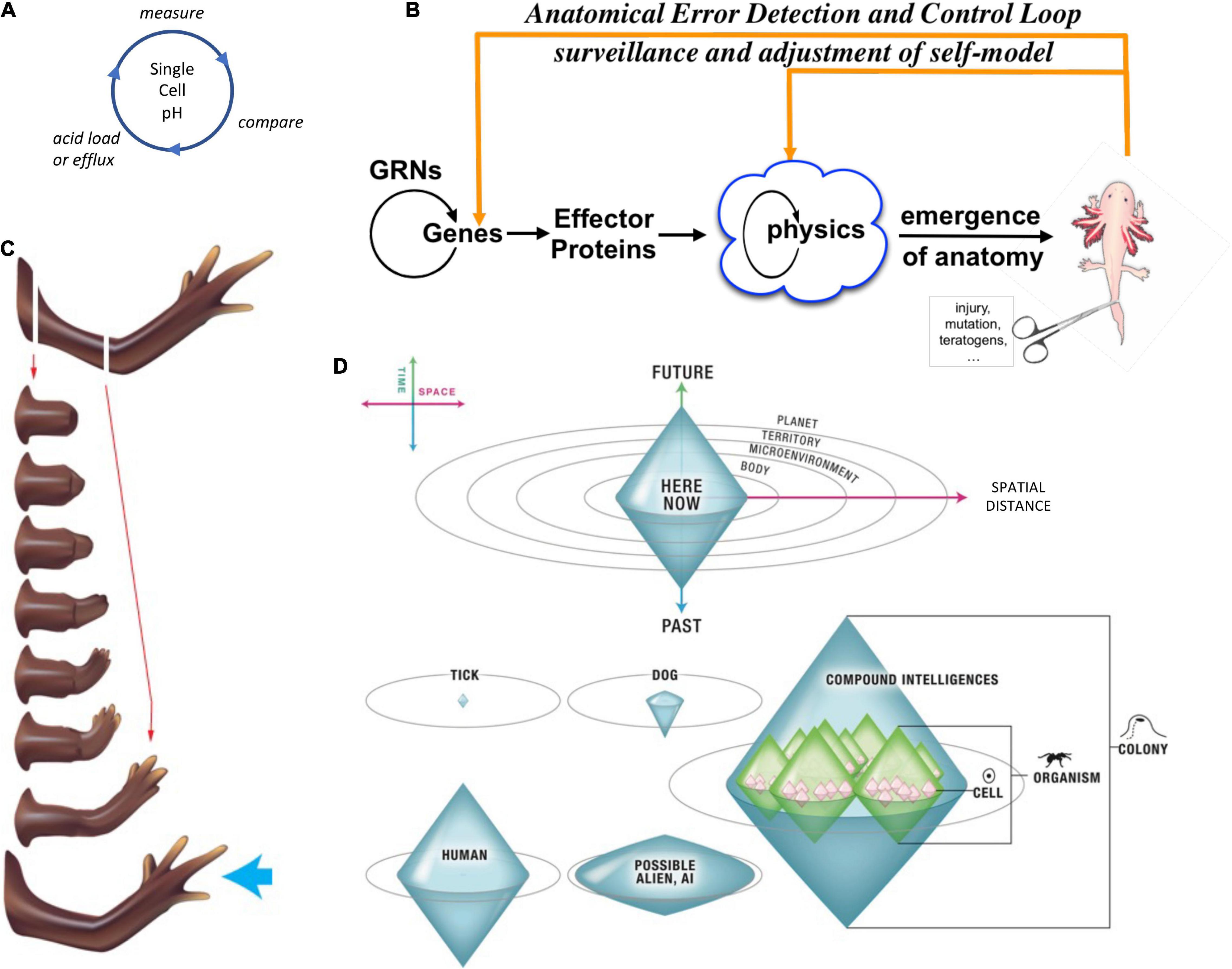

Selves can be classified and compared with respect to the scale of goals they can pursue [Figure 4, described in detail in Levin (2019)]. In this context, the goal-directed perspective adopted here builds on the work of Rosenblueth et al. (1943); Nagel (1979); and Mayr (1992), emphasizing plasticity (ability to reach a goal state from different starting points) and persistence (capacity to reach a goal state despite perturbations) (Schlosser, 1998).

Figure 4. Unconventional goal-directed agents and the scaling of the cognitive Self. (A) The minimal component of agency is homeostasis, for example the ability of a cell to execute the Test-Operate-Exit (Pezzulo and Levin, 2016) loop: a cycle of comparison with setpoint and adjustment via effectors, which allows it to remain in a particular region of state space. (B) This same capacity is scaled up by cellular networks into anatomical homeostasis: morphogenesis is not simply a feedforward emergent process but rather the ability of living systems to adjust and remodel to specific target morphologies. This requires feedback loops at the transcriptional and biophysical levels, which rely on stored information (e.g., bioelectrical pattern memories) against which to minimize error. (C) This is what underlies complex regeneration such as salamander limbs, which can be cut at any position and result in just the right amount and type of regenerative growth that stops when a correct limb is achieved. Such homeostatic systems are examples of simple goal-directed agents. (D) A focus on the size or scale of goals any given system can pursue allows plotting very diverse intelligences on the same graph, regardless of their origin or composition (Levin, 2019). The scale of their goal-directed activity is estimated (collapsed onto one axis of space and one of time, as in Relativity diagrams). Importantly, this way of visualizing the sophistication of agency is a schematic of goal space—it is not meant to represent the spatial extent of sensing or effector range, but rather the scale of events about which they care and the boundary of states that they can possibly represent or work to change. This defines a kind of cognitive light cone (a boundary to any agent’s area of concern); the largest area represents the “now,” with fading efficacy both backward (accessing past events with decreasing reliability) and forward (limited prediction accuracy for future events). 
Agents are compound entities, composed of (and comprising) other sub- or super-agents each of which has their own cognitive boundary of various sizes. Images by Jeremy Guay of Peregrine Creative.

The ability of a system to exert energy to work toward a state of affairs, overcoming obstacles (to the degree that its sophistication allows) to achieve a particular set of substates is very useful for defining Selves because it grounds the question in well-established control theory and cybernetics (i.e., systems “trying to do things” is no longer magical but is well-established in engineering), and provides a natural way of discovering, defining, and altering the preferences of a system. A common objection is: “surely we can’t say that thermostats have goals and preferences?” The TAME framework holds that whatever true goals and preferences are, there must exist primitive, minimal versions from which they evolved and these are, in an important sense, substrate- and scale-independent; simple homeostatic circuits are an ideal candidate for the “hydrogen atom” of goal-directed activity (Rosenblueth et al., 1943; Turner, 2019). A key tool for thinking about these problems is to ask what a truly minimal example of any cognitive capacity would be like, and to think about transitional forms that can be created just below that. It is logically inevitable that if one follows a complex cognitive capacity backward through phylogeny, one eventually reaches precursor versions of that capacity that naturally suggest the (misguided) question “is that really cognitive, or just physics?” Indeed, a kind of minimal goal-directedness permeates all of physics (Feynman, 1942; Georgiev and Georgiev, 2002; Ogborn et al., 2006; Kaila and Annila, 2008; Ramstead et al., 2019; Kuchling et al., 2020a), supporting a continuous climb of the scale and sophistication of goals.

Pursuit of goals is central to composite agency and the “many to one” problem because it requires distinct mechanisms (for measurement of states, storing setpoints, and driving activity to minimize the delta between the former and the latter) to be bound together into a functional unit that is greater than its parts. To co-opt a great quote (Dobzhansky, 1973), nothing in biology makes sense except in light of teleonomy (Pittendrigh, 1958; Nagel, 1979; Mayr, 1992; Schlosser, 1998; Noble, 2010, 2011; Auletta, 2011; Ellis et al., 2012). The degree to which a system can evaluate possible consequences of various actions, in pursuit of those goal states, can vary widely, but is essential to its survival. The expenditure of energy in ways that effectively reach specific states despite uncertainty, limitations of capability, and meddling from outside forces is proposed as a central unifying invariant for all Selves—a basis for the space of possible agents. This view suggests a semi-quantitative multi-axis option space that enables direct comparison of diverse intelligences of all sorts of material implementation and origins (Levin, 2019, 2020). Specifically (Figure 4), a “space-time” diagram can be created where the spatio-temporal scale of any agent’s goals delineates that Self and its cognitive boundaries.
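The three mechanisms named above (measurement of state, a stored setpoint, and activity that minimizes the difference between them) can be illustrated with a minimal sketch. It is a toy proportional controller, not a model of any specific biological circuit; the class name `Homeostat`, the `gain` and `tolerance` parameters, and the numerical values are all assumptions introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass
class Homeostat:
    """Toy goal-directed unit: a stored setpoint plus an error-reducing effector."""
    setpoint: float          # stored target state
    gain: float = 0.5        # fraction of the error corrected per cycle (assumed)
    tolerance: float = 1e-3  # error below which no action is taken (assumed)

    def step(self, state: float) -> float:
        """One cycle: measure the state, compare with the setpoint, act on the delta."""
        error = self.setpoint - state
        if abs(error) <= self.tolerance:
            return state                   # close enough: no corrective action
        return state + self.gain * error   # effector moves the state toward the setpoint


def run(agent: Homeostat, state: float, steps: int, perturbations=None) -> float:
    """Iterate the loop; `perturbations` maps a time step to an external push."""
    perturbations = perturbations or {}
    for t in range(steps):
        state += perturbations.get(t, 0.0)  # meddling from outside forces
        state = agent.step(state)
    return state


if __name__ == "__main__":
    agent = Homeostat(setpoint=37.0)
    # Plasticity: the same goal state is reached from different starting points.
    print(run(agent, 20.0, 60), run(agent, 50.0, 60))
    # Persistence: the goal state is regained despite a perturbation at step 10.
    print(run(agent, 20.0, 60, {10: -8.0}))
```

In this sketch, plasticity and persistence are not separate features that have to be programmed in; both follow from binding measurement, setpoint, and effector into a single loop.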

Note that the distances on Figure 4D represent not first-order capacities such as sensory perception (how far away can it sense), but second-order capacities of the size of goals (humble metabolic hunger-satiety loops or grandiose planetary-scale engineering ambitions) which a given cognitive system is capable of representing and working toward. At any given time, an Agent is represented by a single shape in this space, corresponding to the size and complexity of their possible goal domain. However, genomes (or engineering design specs) map to an ensemble of such shapes in this space because the borders between Self and world, and the scope of goals an agent’s cognitive apparatus can handle, can all shift during the lifetime of some agents—“in software” (another “great transition” marker). All regions in this space can potentially define some possible agent. Of course, additional subdivisions (dimensions) can easily be added, such as the Unlimited Associative Learning marker (Birch et al., 2020) or aspects of Active Inference (Friston and Ao, 2012; Friston et al., 2015b; Calvo and Friston, 2017; Peters et al., 2017).

Some agents, like microbes, have minimal memory (Vladimirov and Sourjik, 2009; Lan and Tu, 2016) and can concern themselves only with a very short time horizon and spatial radius (e.g., following local gradients). Some agents, such as rats, have more memory and some forward planning ability (Hadj-Chikh et al., 1996; Raby and Clayton, 2009; Smith and Litchfield, 2010), but are still precluded from, for example, effectively caring about what will happen 2 months hence, in an adjacent town. Some, like human beings, can devote their lives to causes of enormous scale (future state of the planet, humanity, etc.). Akin to Special Relativity, this formalization makes explicit the class of capacities (in terms of representation of classes of goals) that are forever inaccessible to a given agent (demarcating the edge of the “light cone” of its cognition).
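One way to make this comparison concrete is to treat each agent's goal domain as a simple record with a spatial bound and two temporal bounds. The sketch below is only a schematic of that idea: the class `LightCone`, its field names, and every number in it are placeholder orders of magnitude chosen for illustration, not measurements and not values taken from Levin (2019).

```python
from dataclasses import dataclass

DAY = 86_400.0  # seconds in a day


@dataclass(frozen=True)
class LightCone:
    """Schematic goal domain of an agent (all bounds are illustrative placeholders)."""
    name: str
    space_m: float   # largest spatial scale of goals it can represent, in metres
    future_s: float  # how far ahead its goals can extend, in seconds
    past_s: float    # how far back it can usefully deploy memory, in seconds

    def can_represent(self, distance_m: float, delay_s: float) -> bool:
        """True if a goal at this distance and this far in the future is inside the cone."""
        return distance_m <= self.space_m and delay_s <= self.future_s

    def contains(self, other: "LightCone") -> bool:
        """True if every bound of `other` lies within this agent's bounds."""
        return (other.space_m <= self.space_m
                and other.future_s <= self.future_s
                and other.past_s <= self.past_s)


# Placeholder values only: they encode the ordering described in the text.
microbe = LightCone("microbe", space_m=1e-4, future_s=10.0, past_s=10.0)
rat = LightCone("rat", space_m=1e3, future_s=1 * DAY, past_s=30 * DAY)
human = LightCone("human", space_m=1e7, future_s=1e10, past_s=1e10)

if __name__ == "__main__":
    # "2 months hence, in an adjacent town" (the town distance of 10 km is assumed).
    goal = (1e4, 60 * DAY)
    for agent in (microbe, rat, human):
        print(agent.name, agent.can_represent(*goal))
```

Further axes, such as a learning-capacity marker, could be added as extra fields without changing the comparison logic.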

In general, larger selves (1) are capable of working toward states of affairs that occur farther into the future [perhaps outlasting the lifetime of the agent itself, an important great transition, in the sense of West et al. (2015), along the cognitive continuum]; (2) deploy memories further back in time [their actions become less “mechanism” and more decision-making (Balazsi et al., 2011) because they are linked to a network of functional causes and information with larger diameter]; and (3) expend effort to manage sensing/effector activity in larger spaces [from subcellular networks to the extended mind (Clark and Chalmers, 1998; Turner, 2000; Timsit and Gregoire, 2021)]. Overall, increases of agency are driven by mechanisms that scale up stress (Box 1), that is, the scope of states that an agent can possibly be stressed about (in the sense of pressure to take corrective action). In this framework, stress (as a system-level response to distance from setpoint states), preferences, motivation, and the ability to functionally care about what happens are tightly linked. Homeostasis, necessary for life, evolves into allostasis (McEwen, 1998; Schulkin and Sterling, 2019) as new architectures allow tight, local homeostatic loops to be scaled up to measure, cause, and remember larger and more complex states of affairs (Di Paulo, 2000; Camley, 2018).

BOX 1. Stress as the glue of agency.

Tell me what you are stressed about and I will know a lot about your cognitive sophistication. Local glucose concentration? Limb too short? Rival is encroaching on your territory? Your limited lifespan? Global disparities in quality of life on Earth? The scope of states that an agent can possibly be stressed by, in effect, defines their degree of cognitive capacity. Stress is a systemic response to a difference between current state and a desired setpoint; it is an essential component to scaling of Selves because it enables different modules (which sense and act on things at different scales and in distributed locations) to be bound together in one global homeostatic loop (toward a larger purpose). Systemic stress occurs when one sub-agent is not satisfied about its local conditions, and propagates its unhappiness outward as hard-to-ignore signals. In this process, stress pathways serve the same function as hidden layers in a network, enabling the system to be more adaptive by connecting diverse modular inputs and outputs to the same basic stress minimization loop. Such networks scale stress, but stress is also what helps the network scale up its agency—a bidirectional positive feedback loop.

The key is that this stress signal is unpleasant to the other sub-agents, closely mimicking their own stress machinery (genetic conservation: my internal stress molecule is the same as your stress molecule, which contributes to the same “wiping of ownership” that is implemented by gap junctional connections). By propagating unhappiness in this way (in effect, turning up the global system “energy,” which facilitates a tendency to move in various spaces), this process recruits distant sub-agents to act, to reduce their own perception of stress. For example, if an organ primordium is in the wrong location and needs to move, the surrounding cells are more willing to get out of the way if by doing so they reduce the amount of stress signal they receive. It may be a process akin to run-and-tumble for bacteria, with stress as the indicator of when to move and when to stop moving, in physiological, transcriptional, or morphogenetic space. Another example is compensatory hypertrophy, in which damage in one organ induces other cells to take up its workload, growing or taking on new functions if need be (Tamori and Deng, 2014; Fontes et al., 2020). In this way, stress causes other agents to work toward the same goal, serving as an influence that binds subunits across space into a coherent higher Self and resists the “struggle of the parts” (Heams, 2012). Interestingly, stress spreads not only horizontally in space (across cell fields) but also vertically, in time: the effects of the stress response are among the things most easily transferred by transgenerational inheritance (Xue and Acar, 2018).

Additional implications of this view are that Selves: are malleable (the borders and scale of any Self can change over time); can be created by design or by evolution; and are multi-scale entities that consist of other, smaller Selves (and conversely, scale up to make larger Selves). Indeed they are a patchwork of agents [akin to Theophile Bordeu’s “many little lives” (Haigh, 1976; Wolfe, 2008)] that overlap with each other, and compete, communicate, and cooperate both horizontally (at their own level of organization) and vertically [with their component subunits and the super-Selves of which they are a part (Sims, 2020)].

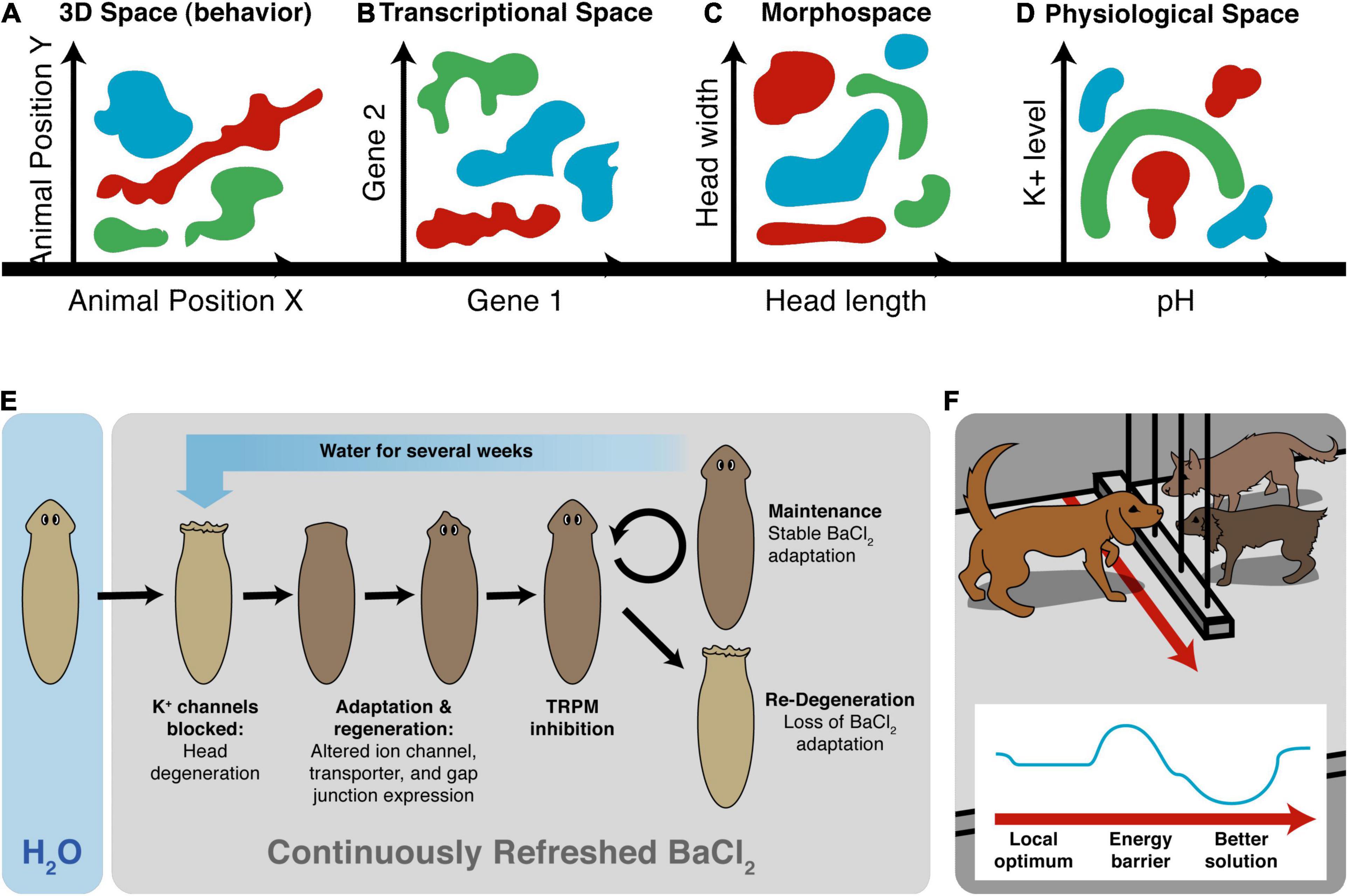

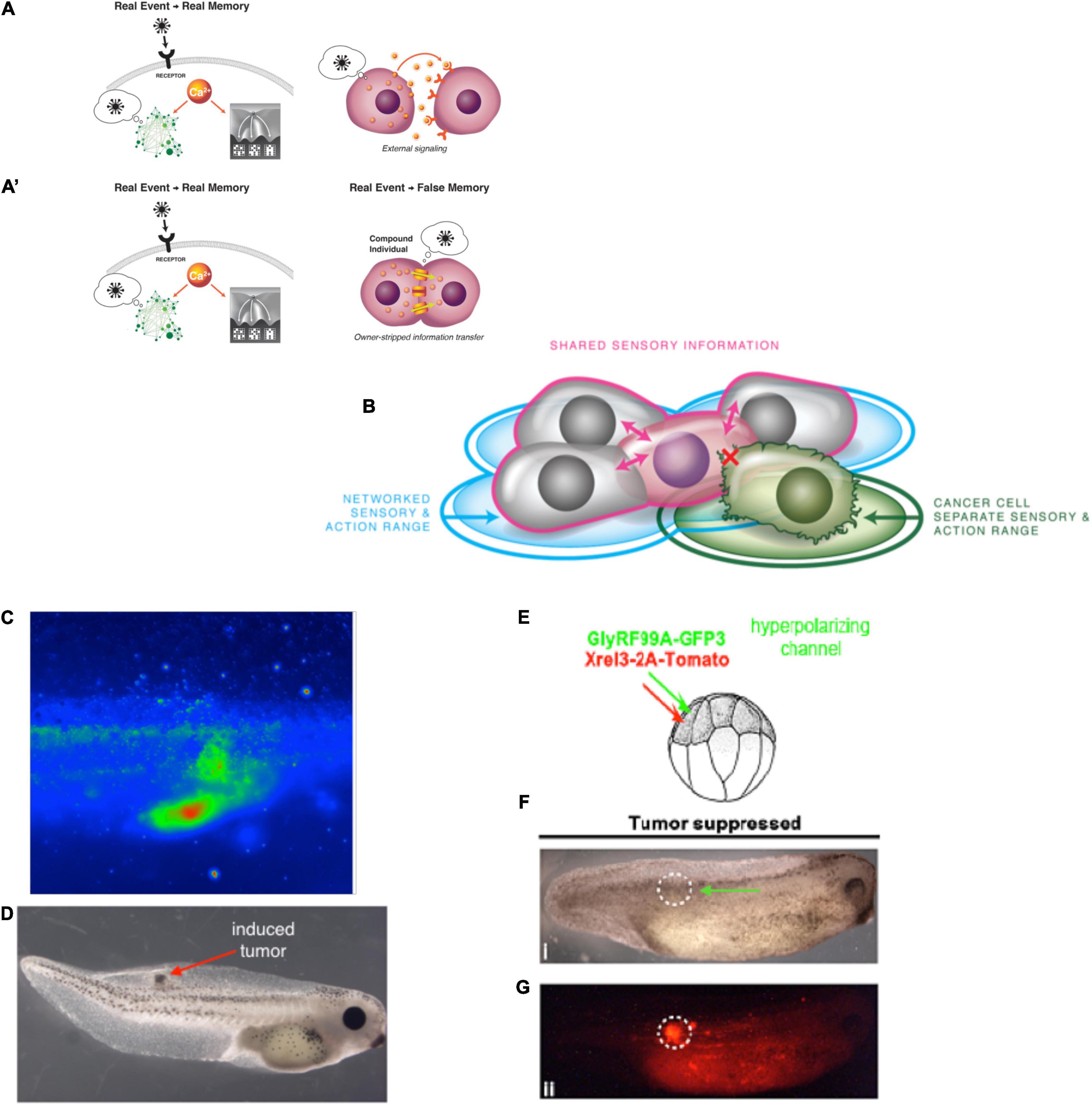

Another important invariant for comparing diverse intelligences is that they are all solving problems, in some space (Figure 5). It is proposed that the traditional problem-solving behavior we see in standard animals in 3D space is just a variant of an evolutionarily more ancient capacity to solve problems in metabolic, physiological, transcriptional, and morphogenetic spaces (as one possible sequential timeline along which evolution pivoted some of the same strategies to solve problems in new spaces). For example, when planaria are exposed to barium, a non-specific potassium channel blocker, their heads explode. Remarkably, they soon regenerate heads that are completely insensitive to barium (Emmons-Bell et al., 2019). Transcriptomic analysis revealed that relatively few genes out of the entire genome were regulated to enable the cells to resolve this physiological stressor using transcriptional effectors to change how ions and neurotransmitters are handled by the cells. Barium is not something planaria ever encounter ecologically (so there should not be innate evolved responses to barium exposure), and cells don’t turn over fast enough for a selection process (as occurs, e.g., with bacterial persisters after antibiotic exposure). The task of determining which genes, out of the entire genome, can be transcriptionally regulated to return to an appropriate physiological regime is an example of an unconventional intelligence navigating a large-dimensional space to solve problems in real-time (Voskoboynik et al., 2007; Elgart et al., 2015; Soen et al., 2015; Schreier et al., 2017). Also interesting is that the actions taken in transcriptional space (a set of mRNA states) map onto a path in physiological state space (the ability to perform many needed functions despite abrogated K+ channel activity, not just a single state).

Figure 5. Cognitive agents solve problems in diverse spaces. Intelligence is fundamentally about problem-solving, but this takes place not only in familiar 3D space as “behavior” (control of muscle effectors for movement) (A), but also in other spaces in which cognitive systems try to navigate, in order to reach better regions. This includes the transcriptional space of gene expression (B) here schematized for two genes, anatomical morphospace (C) here schematized for two traits, and physiological space (D) here schematized for two parameters. An example (E) of problem-solving is planaria, which, when placed in barium (causing their heads to explode due to general blockade of potassium channels), regenerate new heads that are barium-insensitive (Emmons-Bell et al., 2019). They solve this entirely novel (not primed by evolutionary experience with barium) stressor by a very efficient traversal in transcriptional space to rapidly up/down regulate a very small number of genes that allows them to conduct their physiology despite the essential K+ flux blockade. (F) The degree of intelligence of a system can be estimated by how effectively it navigates to optimal regions without being caught in a local maximum, illustrated as a dog which could achieve its goal on the other side of the fence, but only by going around, which temporarily takes it further from its goal (a measurable degree of patience or foresight of any system in navigating its space, which can be visualized as a sort of energy barrier in the space, inset). Images by Jeremy Guay of Peregrine Creative.

The common feature in all such instances is that the agent must navigate its space(s), preferentially occupying adaptive regions despite perturbations from the outside world (and from internal events) that tend to pull it into novel regions. Agents (and their sub- and super-agents) construct internal models of their spaces (Beer, 2014, 2015; Beer and Williams, 2015; Hoffman et al., 2015; Fields et al., 2017; Hoffman, 2017; Prentner, 2019; Dietrich et al., 2020; Prakash et al., 2020), which may or may not match the view of their action space developed by their conspecifics, parasites, and scientists. Thus, the space one is navigating is in an important sense virtual (belonging to some Agent’s self-model), is developed and often modified “on the fly” (in addition to that hardwired by the structure of the agent), and not only faces outward to infer a useful structure of its option space but also faces inward to map its own body and somatotopic properties (Bongard et al., 2006). The lower-level subsystems simplify the search space for the higher-level agent because their modular competency means that the higher-level system doesn’t need to manage all the microstates [a strong kind of hierarchical modularity (Zhao et al., 2006; Lowell and Pollack, 2016)]. In turn, the higher-level system deforms the option space for the lower-level systems so that they do not need to be as clever, and can simply follow local energy gradients.

The degree of intelligence, or sophistication, of an agent in any space is roughly proportional to its ability to deploy memory and prediction (information processing) in order to avoid local maxima. Intelligence involves being able to temporarily move away from a simple vector toward one’s goals in a way that results in bigger improvements down the line; the agent’s internal complexity has to facilitate some degree of complexity (akin to hidden layers in an artificial neural network which introduce plasticity between stimulus and response) in the goal-directed activity that enables the buffering needed for patience and indirect paths to the goal. This buffering enables the flip side of homeostatic problem-driven (stress reduction) behavior by cells: the exploration of the space for novel opportunities (creativity) by the collective agent, and the ability to acquire more complex goals [in effect, beginning the climb up Maslow’s hierarchy (Taormina and Gao, 2013)]. Of course, it must be pointed out that this way of conceiving intelligence is one of many, and is proposed here as a way to enable the concept to be experimentally ported over to unfamiliar substrates, while capturing what is essential about it in a way that does not depend on arbitrary restrictions that will surely not survive advances in synthetic bioengineering, machine learning, and exobiology.
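As a toy illustration of this criterion (not a model taken from any of the cited work), the sketch below contrasts a purely greedy agent with one that can look several steps ahead on a one-dimensional landscape, in the spirit of the dog-and-fence example of Figure 5F. The function `climb`, its `horizon` parameter, and the landscape values are hypothetical choices made only for illustration; a real agent's prediction would rest on an internal model rather than direct access to the landscape.

```python
def climb(landscape, start, horizon=1):
    """Hill-climb on a 1D fitness landscape (higher is better).

    horizon=1 gives a purely greedy agent. Larger horizons let the agent
    accept temporary losses to reach a better region that lies within
    `horizon` steps of its current position.
    """
    pos = start
    path = [pos]
    while True:
        lo = max(0, pos - horizon)
        hi = min(len(landscape) - 1, pos + horizon)
        # Best position visible within the agent's horizon.
        best = max(range(lo, hi + 1), key=lambda i: landscape[i])
        if landscape[best] <= landscape[pos]:
            return path  # nothing better in view: stop here
        # Take one step toward it, even if that step lowers fitness.
        pos += 1 if best > pos else -1
        path.append(pos)


# Hypothetical landscape: a local peak (index 3), a valley, then a higher peak (index 9).
landscape = [0, 1, 2, 3, 2, 1, 0, 1, 4, 6, 5]

greedy_path = climb(landscape, start=0, horizon=1)
patient_path = climb(landscape, start=0, horizon=5)

print("greedy stops at", greedy_path[-1])    # stuck on the local peak at index 3
print("patient stops at", patient_path[-1])  # crosses the valley to index 9
```

In this sketch the minimal horizon needed to escape the local peak (here, 5 steps) plays the role of the measurable "patience" described above: it is a property of the agent relative to a given barrier, not of the landscape alone.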

Another important aspect of intelligence that is space-agnostic is the capacity for generalization. For example, in the barium planaria example discussed above, it is possible that part of the problem-solving capacity is due to the cells’ ability to generalize in physiological space. Perhaps the cells recognize the physiological stresses induced by the novel barium stimulus as a member of the wider class of excitotoxicity induced by evolutionarily-familiar epileptic triggers, enabling them to deploy similar solutions (in terms of actions in transcriptional space). Such abilities to generalize have now been linked to measurement invariance (Frank, 2018), showing their ancient roots in the continuum of cognition.

Consistent with the above discussion, complex agents often consist of components that are themselves competent problem-solvers in their own (usually smaller, local) spaces. The relationship between wholes and their parts can be described as follows. An agent is an integrated holobiont to the extent that it distorts the option space, and the geodesics through it, for its subunits (perhaps akin to how matter and space affect each other in general relativity) to get closer to a high-level goal in its space. A similar scheme is seen in neuroscience, where top-down feedback helps lower layer neurons to choose a response to local features by informing them about more global features (Krotov, 2021).

At the level of the subunits, which know nothing of the higher problem space, this simply looks like they are minimizing free energy and passively doing the only thing they can do as physical systems: this is why if one zooms in far enough on any act of decision-making, all one ever sees is dumb mechanism and “just physics.” The agential perspective (Godfrey-Smith, 2009) looks different at different scales of observation (and its degree is in the eye of a beholder who seeks to control and predict the system, which includes the Agent itself, and its various partitions). This view is closely aligned with that of “upper directedness” (McShea, 2012), in which the larger system directs its components’ behavior by constraints and rewards for coarse-grained outcomes, not microstates.
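The idea that a higher-level agent reshapes the landscape while its subunits merely follow local gradients can be made concrete with a minimal numerical sketch. Everything here is an illustrative assumption rather than a model from the cited literature: the subunit is a plain gradient-descent routine (`descend`), its native landscape is a double well (`local_energy`), and the higher-level influence is an added quadratic bias toward a goal (`shaped_energy`).

```python
def descend(energy, x, step=0.01, iters=5000, eps=1e-4):
    """A 'dumb' subunit: it follows the local downhill slope of whatever
    landscape it is given and knows nothing about higher-level goals."""
    for _ in range(iters):
        # Central-difference estimate of the local slope.
        grad = (energy(x + eps) - energy(x - eps)) / (2 * eps)
        x -= step * grad
    return x


def local_energy(x):
    # Hypothetical native landscape of the subunit: wells at x = -1 and x = +1.
    return (x**2 - 1) ** 2


def shaped_energy(x, goal=1.0, strength=1.0):
    # The same landscape, deformed by a higher-level bias toward `goal`.
    # With strength > 0.5 the well at x = -1 disappears entirely.
    return local_energy(x) + strength * (x - goal) ** 2


start = -1.2
print(descend(local_energy, start))   # settles in the nearby well, close to -1
print(descend(shaped_energy, start))  # same rule, deformed space: ends close to +1
```

The subunit's rule is identical in both runs; only the space it moves through has changed. Zooming in on either trajectory shows nothing but local energy minimization, which is the point made above about mechanism at the lower scale.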

Note that these different competing and cooperating partitions are not just diverse components of the body (cells, microbiome, etc.) but also future and past versions of the Self. For example, one way to achieve the goal of a healthier metabolism is to lock the refrigerator at night and put the keys somewhere that your midnight self, which has a shorter cognitive boundary (is willing to trade long-term health for satiety right now) and less patience, is too lazy to find. Changing the option space, energy barriers, and reward gradients for your future self is a useful strategy for reaching complex goals despite the shorter horizons of the other intelligences that constitute your affordances in action space.

The most effective collective intelligences operate by simultaneously distorting the space to make it easy for their subunits to do the right thing with no comprehension of the larger-scale goals, but themselves benefit from the competency of the subunits which can often get their local job done even if the space is not perfectly shaped (because they themselves are homeostatic agents in their own space). Thus, instances of communication and control between agents (at the same or different levels) are mappings between different spaces. This suggests that both evolution’s, and engineers’, hard work is to optimize the appropriate functional mapping toward robustness and adaptive function.

Next, we consider a practical application of this framework to an unconventional example of cognition and flexible problem-solving: morphogenesis, which naturally leads to specific hypotheses of the origin of larger biological Selves (scaling) and its testable empirical (biomedical) predictions (Dukas, 1998). This is followed by an exploration of the implications of these concepts for evolution, and a few remarks on consciousness.

Somatic Cognition: An Example of Unconventional Agency in Detail

“Again and again terms have been used which point not to physical but to psychical analogies. It was meant to be more than a poetical metaphor…”

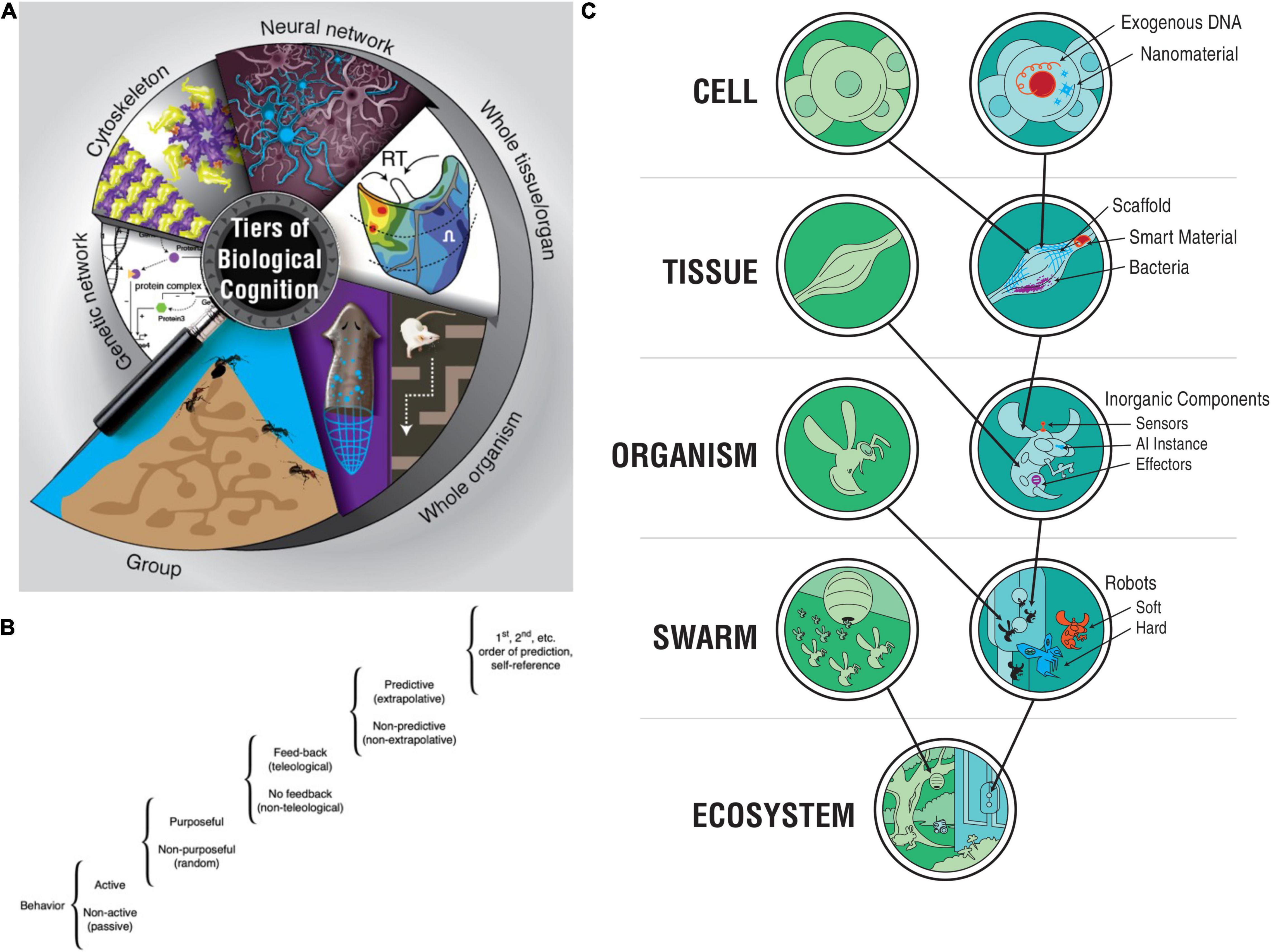

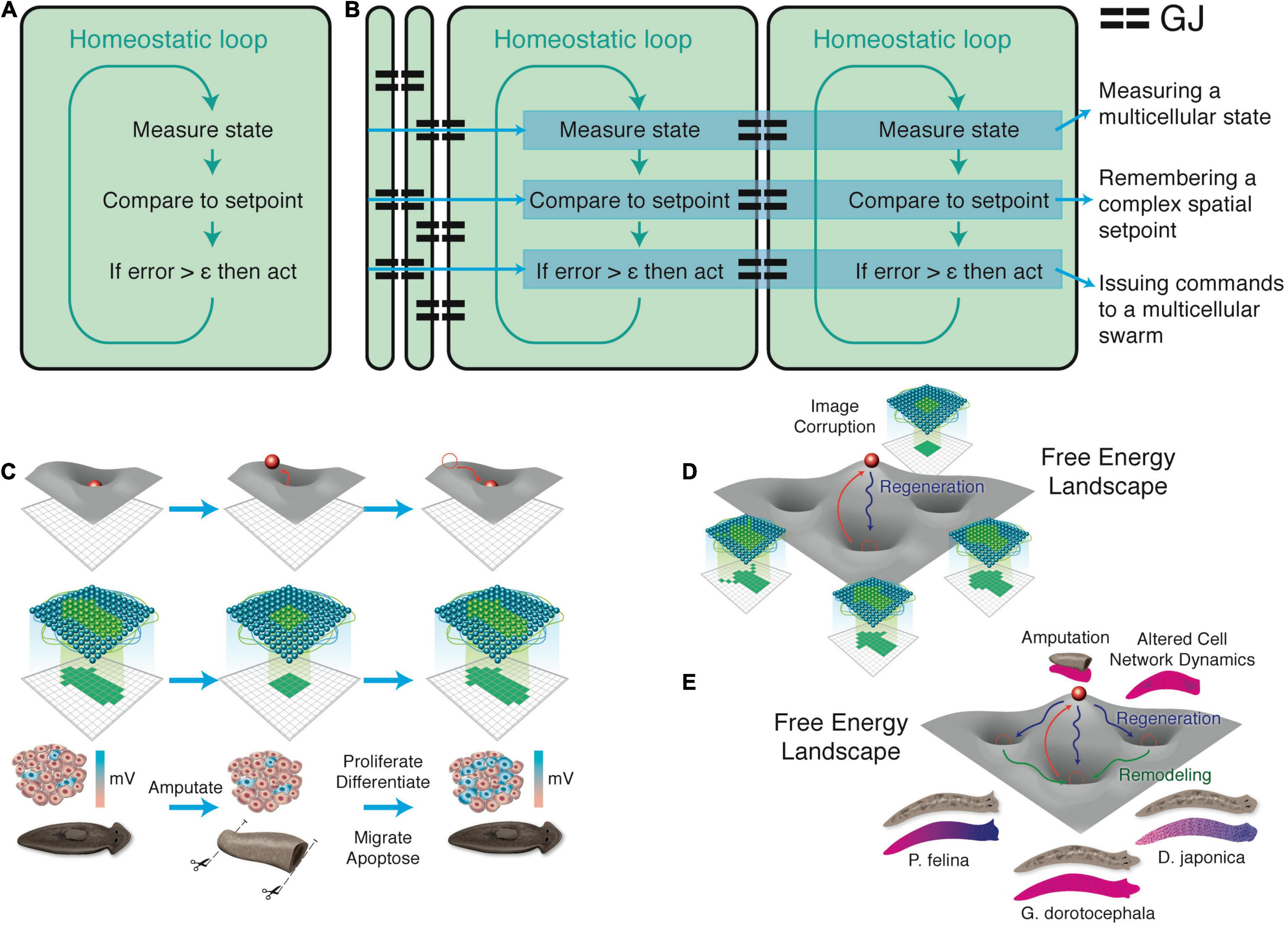

An example of TAME applied to basal cognition in an unconventional substrate is that of morphogenesis, in which the mechanisms of cognitive binding between subunits are now partially known, and testable hypotheses about cognitive scaling can be formulated [explored in detail in Friston et al. (2015a) and Pezzulo and Levin (2015, 2016)]. It is uncontroversial that morphogenesis is the result of collective activity: individual cells work together to build very complex structures. Most modern biologists treat it as clockwork [with a few notable exceptions around the recent data on cell learning (di Primio et al., 2000; Brugger et al., 2002; Norman et al., 2013; Yang et al., 2014; Stockwell et al., 2015; Urrios et al., 2016; Tweedy and Insall, 2020; Tweedy et al., 2020)], preferring a purely feed-forward approach founded on the idea of complexity science and emergence. On this view, there is a privileged level of causation, that of biochemistry, and all of the outcomes are to be seen as the emergent consequences of highly parallel execution of local rules (a cellular automaton in every sense of the term). Of course, it should be noted that the forefathers of developmental biology, such as Spemann (1967), were already well aware of the possible role of cognitive concepts in this arena, and others have occasionally pointed out detailed homologies (Grossberg, 1978; Pezzulo and Levin, 2015). This becomes clearer when we step away from the typical examples seen in developmental biology textbooks and look at some phenomena that, despite the recent progress in molecular genetics, remain important knowledge gaps (Figure 6).

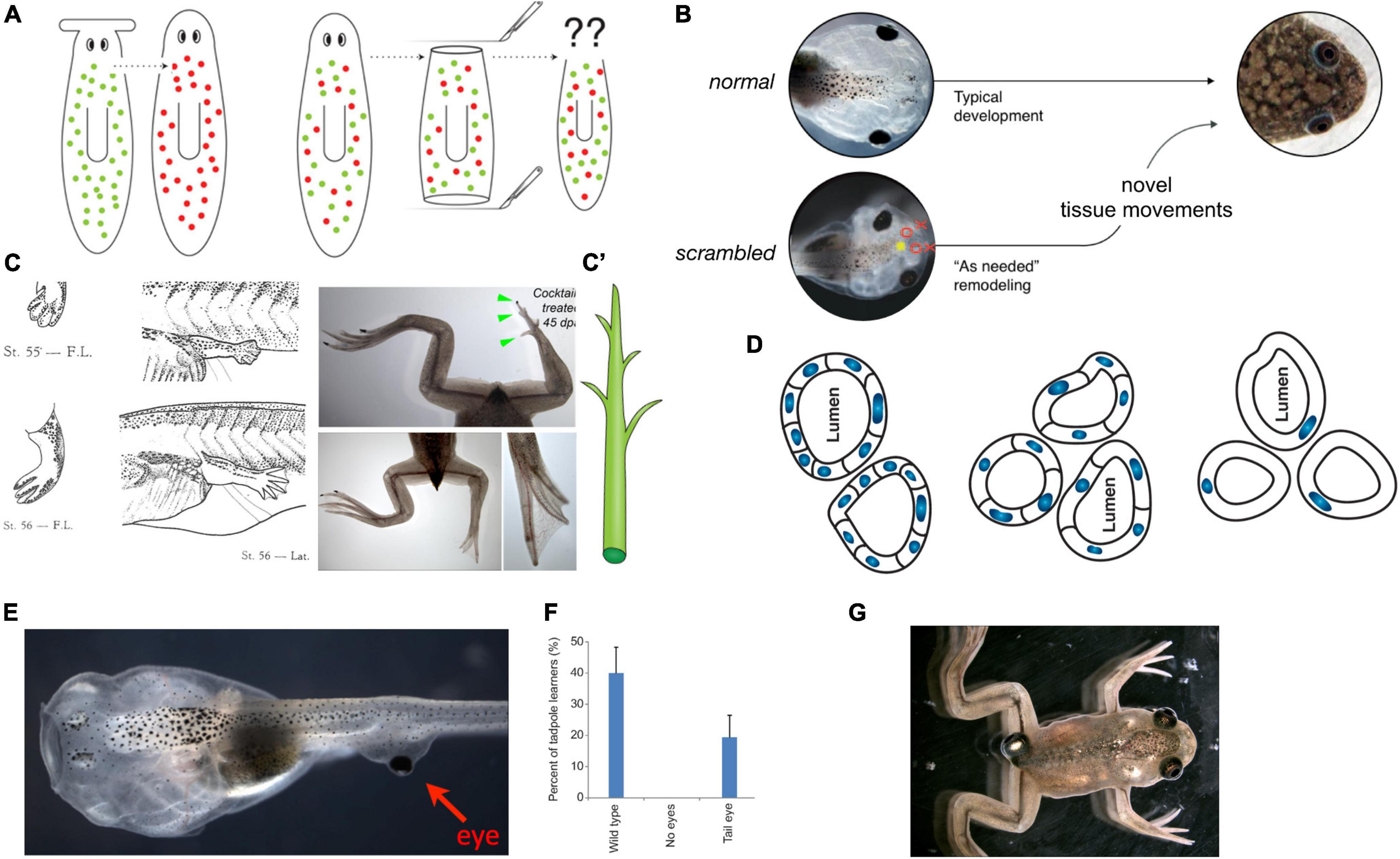

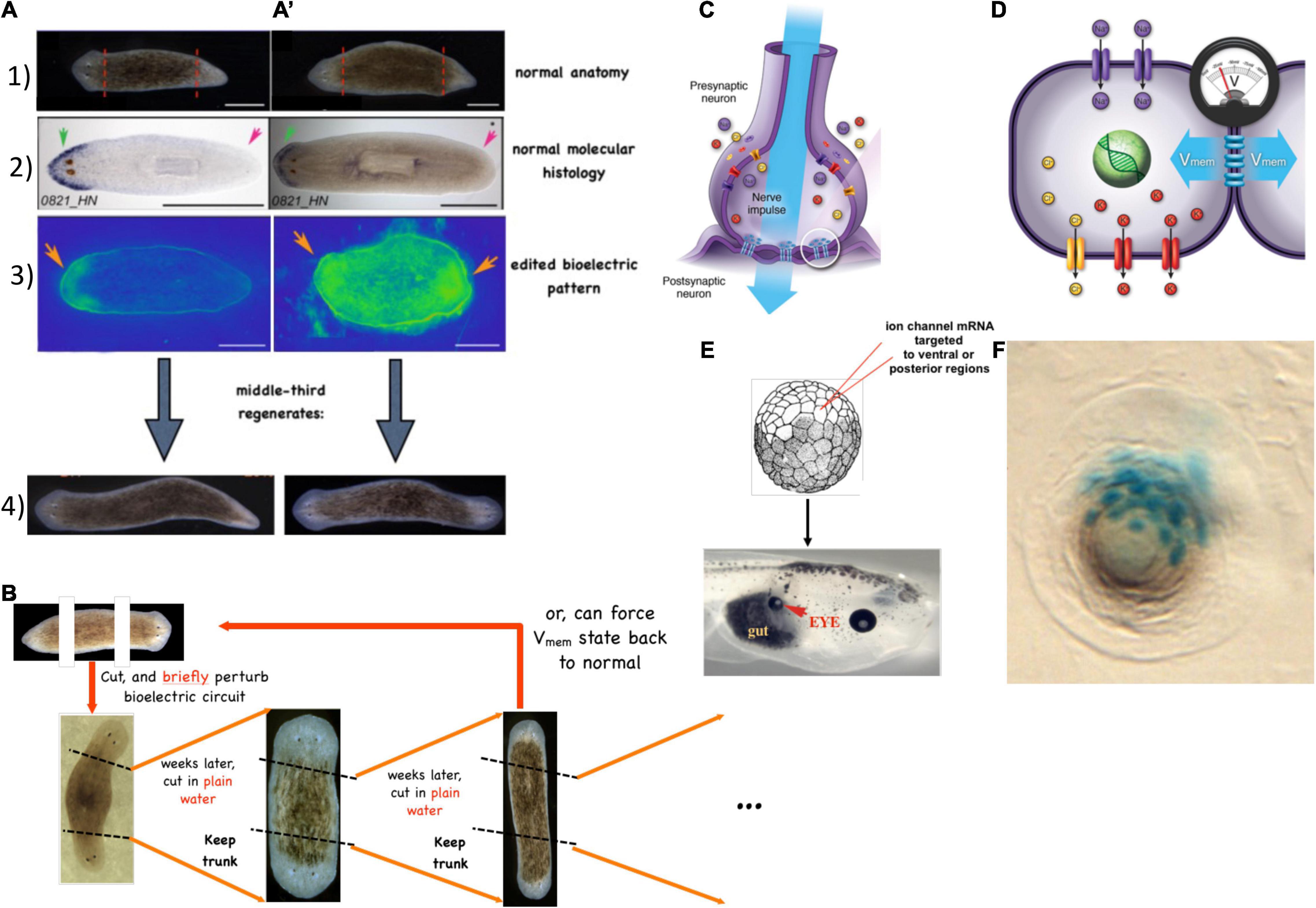

Figure 6. Morphogenesis as an example of collective intelligence and plasticity. The results of complex morphogenesis are the behavior in morphospace of a collective intelligence of cells. It is essential to understand this collective intelligence because, by itself, progress in molecular genetics is insufficient. For example, despite genomic information and much pathway data on the behavior of stem cells in planarian regeneration, there are no models predicting what happens when cells from a flat-headed species are injected into a round-headed species (A): what kind of head will they make, and will regeneration/remodeling ever stop, since the target morphology can never match what either set of cells expects? Development has the ability to overcome unpredictable perturbations to reach its goals in morphospace: tadpoles made with scrambled positions of craniofacial organs can make normal frogs (B) because the tissues will move from their abnormal starting positions in novel ways until a correct frog face is achieved (Vandenberg et al., 2012). This illustrates that the genetics seeds the development of hardware executing not an invariant set of movements but rather an error minimization (homeostatic) loop with reference to a stored anatomical setpoint (target morphology). The paths through morphospace are not unique, illustrated by the fact that when frog legs are induced to regenerate (C), the intermediate stages are not like the developmental path of limb development (forming a paddle and using programmed cell death to separate the digits) but rather like a plant (C′), in which a central core gives rise to digits growing as offshoots (green arrowheads), which nevertheless ends up being a very normal-looking frog leg (Tseng and Levin, 2013).
(D) The plasticity extends across levels: when newt cells are made very large by induced polyploidy, they not only adjust the number of cells that work together to build kidney tubules with correct lumen diameter, but can call up a completely different molecular mechanism (cytoskeletal bending instead of cell:cell communication) to make a tubule consisting in cross-section of just 1 cell wrapped around itself; this illustrates intelligence of the collective, as it creatively deploys diverse lower-level modules to solve novel problems. The plasticity is not only structural but functional: when tadpoles are created to (E) have eyes on their tails (instead of in their heads), the animals can see very well (Blackiston and Levin, 2013), as revealed by their performance in visual learning paradigms (F). Such eyes are also competent modules: they first form correctly despite their aberrant neighbors (muscle, instead of brain), then put out optic nerves which they connect to the nearby spinal cord, and later they ignore the programmed cell death of the tail, riding it backward to end up on the posterior of the frog (G). All of this reveals the remarkable multi-scale competency of the system which can adapt to novel configurations on the fly, not requiring evolutionary timescales for adaptive functionality (and providing important buffering for mutations that make changes whose disruptive consequences are hidden from selection by the ability of modules to get their job done despite changes in their environment). Panels (A,C′,D) are courtesy of Peregrine Creative. Panel (C) is from Xenbase and Aisun Tseng. Panels (E–G) are courtesy of Douglas Blackiston. Panel (B) is used with permission from Vandenberg et al. (2012), and courtesy of Erin Switzer.

Goal-Directed Activity in Morphogenesis

Morphogenesis (broadly defined) is not only a process that produces the same robust outcome from the same starting condition (development from a fertilized egg). In animals such as salamanders, cells will also re-build complex structures such as limbs, no matter where along the limb axis the amputation occurs, and stop when the structure is complete. While this regenerative capacity is not limitless, the basic observation is that the cells cooperate toward a specific, invariant endstate (the target morphology), from diverse starting conditions, and cease their activity when the correct pattern has been achieved. Thus, the cells do not merely perform a rote set of steps toward an emergent outcome, but modify their activity in a context-dependent manner to achieve a specific anatomical target morphology. In this, morphogenetic systems meet James’ test for minimal mentality: “fixed ends with varying means” (James, 1890).
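The logic of "fixed ends with varying means" can be sketched as a simple error-minimizing loop that compares the current state with a stored setpoint, acts to reduce the difference, and stops once the two match. The code below is a deliberately minimal abstraction under stated assumptions: the two-dimensional "morphospace", the proportional `gain`, the tolerance, and the function name `remodel` are all hypothetical and are not drawn from any specific biological mechanism.

```python
import math


def remodel(state, target, gain=0.2, tol=1e-3, max_steps=1000):
    """Error-minimizing loop: act until the measured state matches the stored setpoint.

    Returns the final state and the number of corrective steps taken.
    """
    state = list(state)
    steps = 0
    while steps < max_steps:
        error = [t - s for s, t in zip(state, target)]
        if math.hypot(error[0], error[1]) < tol:
            break  # target reached: activity ceases
        # Move a fraction of the remaining distance toward the setpoint.
        state = [s + gain * e for s, e in zip(state, error)]
        steps += 1
    return state, steps


target_morphology = (1.0, 2.0)  # hypothetical stored setpoint
for start in [(5.0, 5.0), (-3.0, 2.0), (0.0, -7.0)]:
    final, steps = remodel(start, target_morphology)
    print(start, "->", [round(v, 2) for v in final], "in", steps, "steps")
```

Each starting condition follows a different path and takes a different number of steps, yet all runs end at the same setpoint and then halt. A feed-forward sequence of fixed movements would behave differently: it would end in a different place for each starting configuration.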

For example, tadpoles turn into frogs by rearranging their craniofacial structures: the eyes, nostrils, and jaws move as needed to turn a tadpole face into a frog face (Figure 6B). Guided by the hypothesis that this was not a hardwired but an intelligent process that could reach its goal despite novel challenges, we made tadpoles in which these organs were in the wrong positions—so-called Picasso Tadpoles (Vandenberg et al., 2012). Amazingly, they tend to turn into largely normal frogs because the craniofacial organs move in novel, abnormal paths [sometimes overshooting and needing to return a bit (Pinet et al., 2019)] and stop when they get to the correct frog face positions. Similarly, frog legs that are artificially induced to regenerate create a correct final form but not via the normal developmental steps (Tseng and Levin, 2013). Students who encounter such phenomena and have not yet been inoculated with the belief that molecular biology is a privileged level of explanation (Noble, 2012) ask the obvious (and proper) question: how does it know what a correct face or leg shape is?

Examples of remodeling, regulative development (e.g., embryos that can be cut in half and produce normal monozygotic twins), and regeneration ideally illustrate the goal-directed nature of cellular collectives. They pursue specific anatomical states that are much larger than any individual cell and solve problems in morphospace in a context-sensitive manner; any swarm of miniature robots that could do this would be called a triumph of collective intelligence in the engineering field. Guided by the TAME framework, two questions come within reach. First, how does the collective measure current state and store the information about the correct target morphology? Second, if morphogenesis is not at the clockwork level on the continuum of persuadability but perhaps at that of the thermostat, could it be possible to re-write the setpoint without rewiring the machine (i.e., in the context of a wild-type genome)?