- ¹The Department of Gynecology and Obstetrics, Beijing Shijitan Hospital, Capital Medical University, Beijing, China

- ²The Department of Electronics and Information Engineering, Beihang University, Beijing, China

Objective: This study aimed to evaluate and validate the performance of deep convolutional neural networks when discriminating different histologic types of ovarian tumor in ultrasound (US) images.

Material and methods: Our retrospective study included 1142 US images from 328 patients, collected from January 2019 to June 2021. Two tasks were proposed based on the US images. Task 1 was to classify benign tumors and high-grade serous carcinoma in the original ovarian tumor US images, with benign ovarian tumors divided into six classes: mature cystic teratoma, endometriotic cyst, serous cystadenoma, granulosa-theca cell tumor, mucinous cystadenoma and simple cyst. In task 2, the same classification was performed on segmented US images. Deep convolutional neural networks (DCNNs) were applied to classify the different types of ovarian tumors in detail. We used transfer learning on six pre-trained DCNNs: VGG16, GoogleNet, ResNet34, ResNext50, DenseNet121 and DenseNet201. Several metrics were adopted to assess model performance: accuracy, sensitivity, specificity, F1-score and the area under the receiver operating characteristic curve (AUC).

Results: The DCNNs performed better on labeled US images than on original US images. The best predictive performance came from the ResNext50 model, which had an overall accuracy of 0.952 in directly classifying the seven histologic types of ovarian tumors. It achieved a sensitivity of 90% and a specificity of 99.2% for high-grade serous carcinoma, and a sensitivity of over 90% and a specificity of over 95% in most benign pathological categories.

Conclusion: DCNNs are a promising technique for classifying different histologic types of ovarian tumors in US images and can provide valuable computer-aided information.

1 Introduction

Ovaries are an important part of the female reproductive system. Ovarian tumors have many different histological types. Over 80% of patients with ovarian tumors have benign lesions. Benign ovarian tumors are mainly serous cystadenoma, endometriotic cyst, mature cystic teratoma and mucinous cystadenoma, which together account for nearly 90% of patients operated on for ovarian tumors (1). Treatment varies according to the histologic type and biological behavior of the tumor and the patient’s age and fertility needs. To make a treatment decision, the surgeon needs to determine the tumor size, whether the tumor is benign or malignant, and its histologic type as clearly as possible (2). It is therefore vitally important to determine the histological type of an ovarian tumor before surgery.

The preoperative diagnosis of ovarian tumors is highly dependent on imaging tests. Ultrasonography is widely used in the clinical diagnosis of ovarian tumors because it is simple, non-invasive, radiation-free, safe and affordable (3–5). Ultrasound images are interpreted manually by sonographers, and the accuracy of their interpretation plays an important role in the diagnosis and assessment of disease. However, variability in diagnostic results is inevitable because of differences in professional knowledge, clinical experience, physiological fatigue, and subjective judgment (6). With the development of artificial intelligence technology, the combination of computer technology and medical image analysis is expected to provide new solutions in terms of cost, efficiency, and accuracy.

In recent years, deep learning analysis has been widely used in medical image processing (7). Scientists have proposed many methods to process medical images using deep neural network models, such as Convolutional Neural Networks (CNN) (8), Fully Convolutional Networks (FCN) (9), or Recurrent Neural Networks (RNN) (10), which can segment medical images and assist in diagnosing diseases. Studies have covered the brain (11), lung (12), breast (13), liver (14), vascular arteries (15), thyroid (16) and more. DCNNs can assist in diagnosing diseases by processing images algorithmically.

However, research on and application of deep learning-based US image analysis in the field of ovarian tumors are rare. Current research focuses on classifying tumors as benign or malignant (17–21), but treatment is closely related to the specific pathological category, and a finer pathological classification can help the physician make a surgical decision. In this project, based on our ovarian ultrasound images, we propose to build a deep learning-based prediction model of ovarian tumors that classifies the pathological categories in detail, and to evaluate its clinical value in assisting the diagnosis of ovarian tumors, using postoperative histopathological examination results as the reference standard.

2 Material and methods

This retrospective study was approved by the institutional review board of Beijing Shijitan Hospital, and written informed consent was waived (approval number sjtky11-1x-2022 (085)).

2.1 Dataset collection

This study retrospectively enrolled patients with benign or malignant ovarian tumors who underwent preoperative ultrasound and surgery for ovarian tumors at Beijing Shijitan Hospital from January 2019 to June 2021, and whose diagnoses were confirmed by postoperative histopathological examination.

Inclusion criteria were as follows: patients who had preoperative diagnostic-quality US showing at least one persistent ovarian tumor (excluding physiologic cysts), and underwent surgery with subsequent histopathologic results.

Exclusion criteria were histopathologically confirmed uterine sarcoma or non-gynecologic tumors, inconclusive histopathologic results, or US images in which the tumor boundary was blurred and the lesion could not be delineated. The patient flowchart is shown in Figure 1.

2.2 Data preprocessing

After sonographer training, lesions were delineated along macroscopic lesion borders or anatomical structures using labelme software. The delineation results were reviewed by a sonographer with more than five years of experience. Given the limitations of medical image acquisition, we applied data augmentation to reduce overfitting and improve generalization. The original images were randomly cropped and flipped; these operations do not alter the gray-level features or texture information of the lesion. All augmented images were resized to 256 × 256 pixels. Python (version 3.7.3) was used to conduct all processing steps.
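The augmentation steps described above can be sketched as follows. This is a minimal illustration using plain Python lists as a stand-in for grayscale images, not the authors' pipeline: the function names, the 0.9 crop fraction, the 0.5 flip probability and the nearest-neighbour resize are all assumptions, since the source states only that images were randomly cropped, flipped and resized to 256 × 256.

```python
import random

def random_crop(image, crop_h, crop_w, rng):
    """Return a crop_h x crop_w window taken at a random position."""
    h, w = len(image), len(image[0])
    top = rng.randint(0, h - crop_h)
    left = rng.randint(0, w - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

def horizontal_flip(image):
    """Mirror the image left to right; pixel values are unchanged."""
    return [row[::-1] for row in image]

def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize: every output pixel copies an input pixel."""
    h, w = len(image), len(image[0])
    return [[image[r * h // out_h][c * w // out_w] for c in range(out_w)]
            for r in range(out_h)]

def augment(image, rng, crop_fraction=0.9, flip_prob=0.5, size=256):
    """Random crop, optional flip, then resize (parameter values are assumed)."""
    h, w = len(image), len(image[0])
    out = random_crop(image, max(1, int(h * crop_fraction)),
                      max(1, int(w * crop_fraction)), rng)
    if rng.random() < flip_prob:
        out = horizontal_flip(out)
    return resize_nearest(out, size, size)

# Synthetic 300 x 320 grayscale image used only for demonstration.
rng = random.Random(0)
demo = [[(r * 7 + c * 3) % 256 for c in range(320)] for r in range(300)]
augmented = augment(demo, rng)
```

Cropping, flipping and nearest-neighbour resizing only move or copy pixels, so every gray level in the output already exists in the input, which is consistent with the requirement that gray-level and texture information not be altered.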

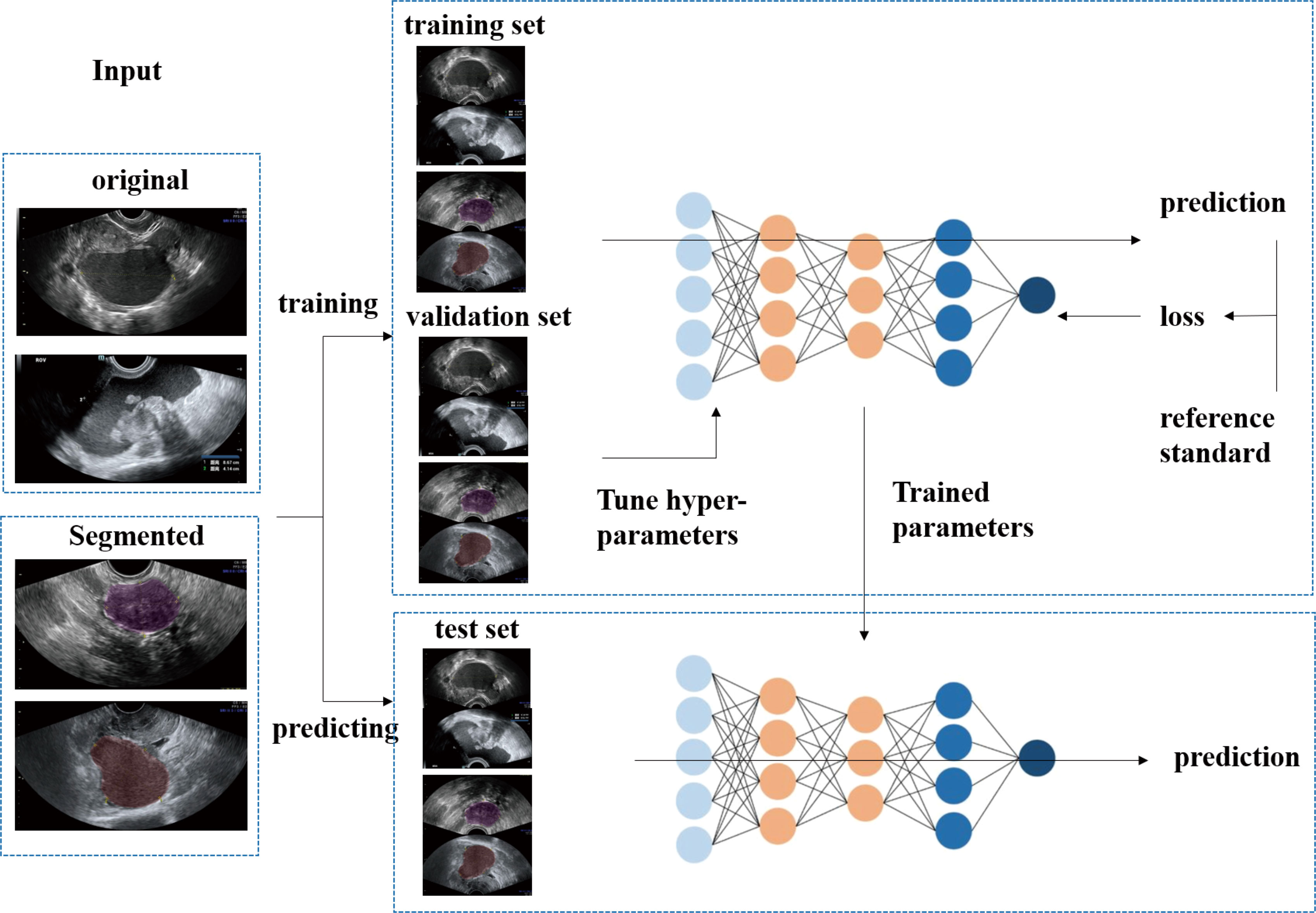

2.3 DCNN model training and interpretation

The procedure used for image processing is shown in Figure 2. Six representative DCNN architectures, VGG16, GoogleNet, ResNet34, ResNext50, DenseNet121 and DenseNet201 (22–26), were used to identify different histological types of ovarian tumors based on US images. We classified the US images into seven classes: six benign categories (mature cystic teratoma, endometriotic cyst, serous cystadenoma, granulosa theca cell tumor, mucinous cystadenoma, and simple cyst) and one malignant category (high-grade serous ovarian cancer). Each class was sampled at 70%, 10%, and 20%, and the samples were pooled to construct the training, validation, and test data sets, respectively. Every DCNN was trained and validated on these data sets.
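The per-class 70%/10%/20% sampling described above can be sketched as below. The function name `stratified_split`, the fixed seed and the rounding rule are illustrative assumptions. The source does not state whether sampling was done per image or per patient; the sketch simply splits whatever unit the label list indexes.

```python
import random
from collections import defaultdict

def stratified_split(labels, fractions=(0.7, 0.1, 0.2), seed=0):
    """Split sample indices into train/val/test sets, class by class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        n_train = round(len(idxs) * fractions[0])
        n_val = round(len(idxs) * fractions[1])
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]   # remainder goes to the test set
    return train, val, test

# Toy example: 7 classes with 50 samples each (not the study's class counts).
labels = [c for c in range(7) for _ in range(50)]
train_idx, val_idx, test_idx = stratified_split(labels)
```

Splitting within each class before pooling keeps the class proportions roughly equal across the three sets, which matters when one class (here, the malignant one) is much smaller than the others.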

Figure 2 The flow diagram shows the image input and the main processing steps for algorithms based on deep learning.

During training, the weights of the neural network were updated using the Adam optimizer with an initial learning rate of 0.001 and a batch size of 16. All models were trained for 200 epochs with the momentum set to 0.9. An Intel(R) Xeon(R) E5-2690 v4 CPU and an Nvidia GeForce GTX 1080 GPU were used for model training. Transfer learning was applied to each network for classification (27, 28): we used back-propagation to fine-tune the parameters of the fully connected layer of each pre-trained network on our dataset. All programs were executed in Python version 3.7.3.
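To make the optimizer settings concrete, the following sketch applies the standard Adam update rule to a single scalar weight. It assumes that the reported momentum of 0.9 corresponds to Adam's first-moment coefficient (`beta1`); the values of `beta2` and `eps` are common defaults and are not given in the source. The quadratic loss is a toy stand-in for the network loss.

```python
import math

def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar weight; returns (w, m, v)."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment (momentum) estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction for step t >= 1
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v

# Minimise f(w) = (w - 3)^2 for 200 steps, starting from w = 0.
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 201):
    grad = 2 * (w - 3)
    w, m, v = adam_step(w, grad, m, v, t)
```

Because Adam normalises the gradient by its running magnitude, each step moves the weight by roughly the learning rate, so the step size is largely independent of the raw gradient scale.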

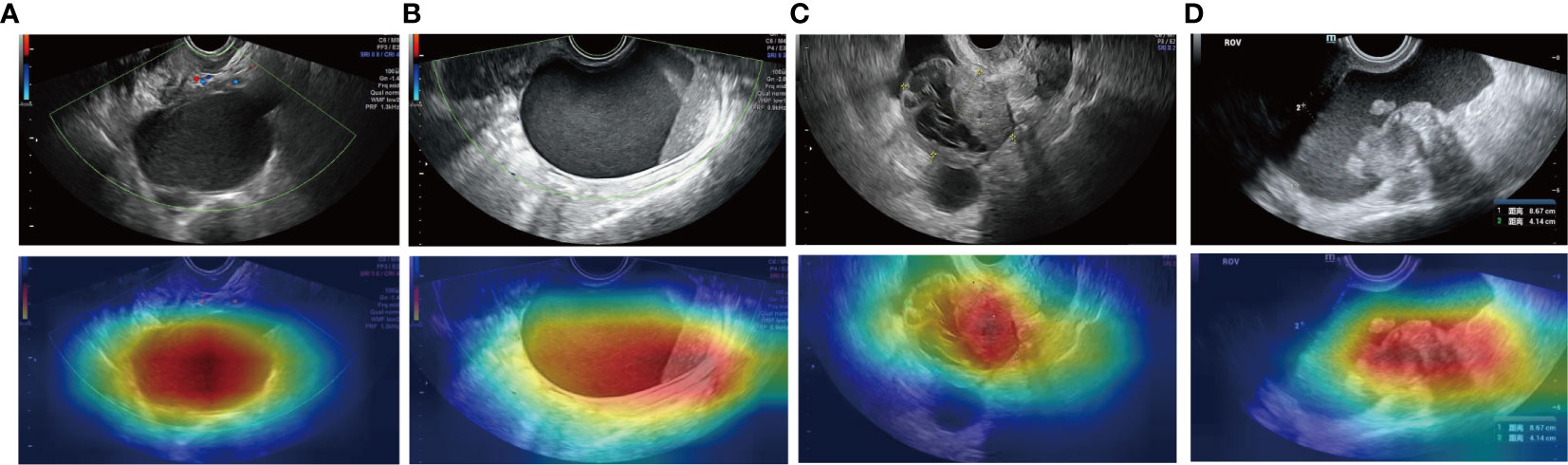

We used the class activation mapping (CAM) method to visualize the image regions most important to the decision of the deep learning model and thereby improve the interpretability of our model (29). The resulting heat maps show which parts of an input US image the DCNN focused on. All heat maps were generated using the OpenCV package (version 4.3.0.36).
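As a rough illustration of how a class activation map is formed (29), the map for one class is the weighted sum of the final convolutional feature maps, using the weights that connect each map to that class score. The sketch below uses plain Python lists and a hypothetical function name; the min-max normalisation is an assumption made for display purposes, and the upsampling and colour-mapping steps (done with OpenCV in the study) are omitted.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of final-layer feature maps for one class, scaled to [0, 1].

    feature_maps: list of K maps, each an H x W list of lists.
    class_weights: list of K weights linking each map to the class score.
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[sum(wk * fmap[r][c] for wk, fmap in zip(class_weights, feature_maps))
            for c in range(w)] for r in range(h)]
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    span = (hi - lo) or 1.0   # avoid division by zero for a flat map
    return [[(val - lo) / span for val in row] for row in cam]

# Two toy 2 x 2 feature maps and their weights for a single class.
maps = [
    [[0.0, 1.0], [0.0, 0.0]],
    [[0.0, 0.0], [2.0, 0.0]],
]
weights = [0.5, 1.0]
heat = class_activation_map(maps, weights)
```

In this toy case the bottom-left location receives the largest weighted activation and therefore the highest normalised value, which would appear as the hottest region in a rendered heat map.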

2.4 Statistical analysis

We compared the DCNN models using performance indicators calculated from their test results. The area under the receiver operating characteristic curve, accuracy, sensitivity, specificity, and F1 score were used to evaluate the performance of the 7-class classification task. Differences between models were considered statistically significant when P < 0.05. These measures were calculated using NumPy (version 1.16.2).
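For a multi-class task, sensitivity, specificity and F1 score are typically computed per class in a one-vs-rest fashion from the confusion matrix. The sketch below shows one way to do this; the function name and the toy 3-class matrix are illustrative only and do not reproduce the study's data.

```python
def per_class_metrics(confusion):
    """confusion[i][j] = number of class-i images predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                        # class k missed
        fp = sum(confusion[i][k] for i in range(n)) - tp   # others called k
        tn = total - tp - fn - fp
        sens = tp / (tp + fn) if tp + fn else 0.0
        spec = tn / (tn + fp) if tn + fp else 0.0
        prec = tp / (tp + fp) if tp + fp else 0.0
        f1 = 2 * prec * sens / (prec + sens) if prec + sens else 0.0
        results.append({"sensitivity": sens, "specificity": spec, "f1": f1})
    accuracy = sum(confusion[k][k] for k in range(n)) / total
    return accuracy, results

# Toy 3-class confusion matrix (rows: true class, columns: predicted class).
toy = [[8, 2, 0],
       [1, 9, 0],
       [0, 1, 9]]
accuracy, metrics = per_class_metrics(toy)
```

Overall accuracy is the fraction of images on the diagonal, whereas the per-class values treat one class as positive and all remaining classes as negative.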

3 Results

3.1 Patient and US images characteristics

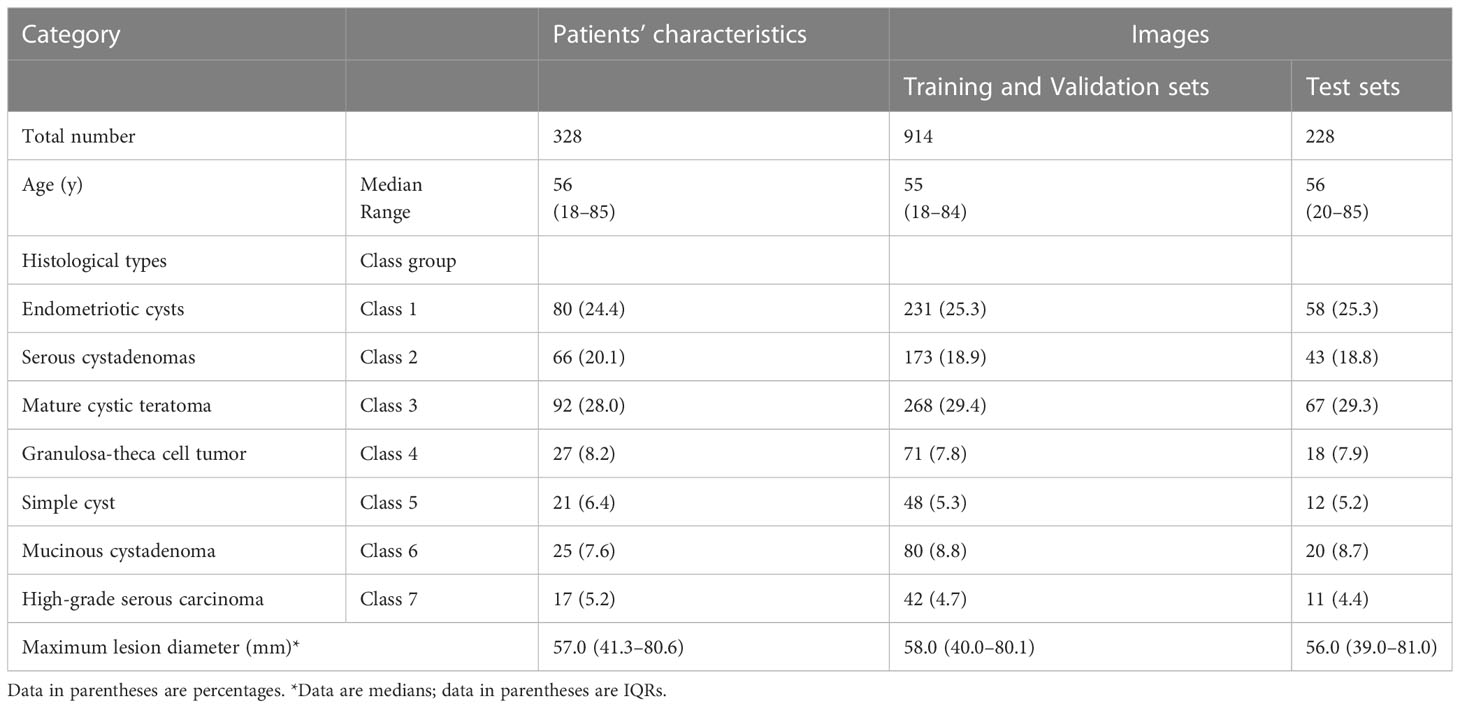

A total of 328 patients with ovarian tumors (290 with benign ovarian tumors and 38 with high-grade serous carcinomas) were retrospectively enrolled in this study, yielding 1142 individual US images. The images were further divided into different pathological categories. The characteristics of patients and image numbers of different pathological categories are shown in Table 1.

Table 1 Characteristics of patients and image numbers of different pathological categories in the Training, Validation, and Test Sets.

3.2 Performance of the seven-class classification task

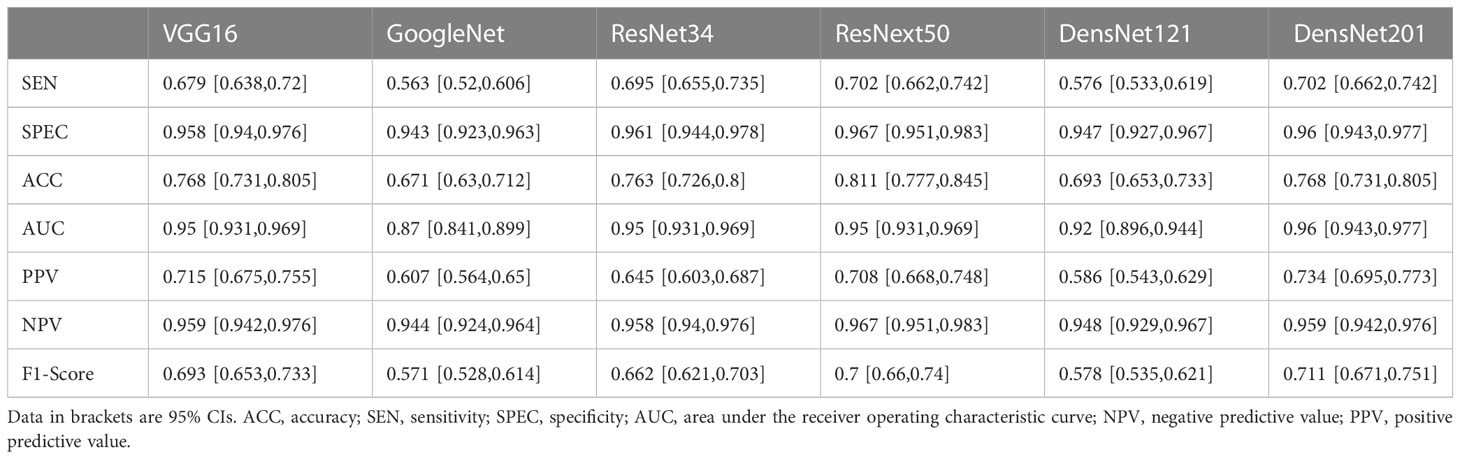

On the original US image test set, the performance of the DCNN models with transfer learning is shown in Table 2. The ResNext50 model achieved the highest accuracy, 0.811, with an AUC of 0.95 (95% CI: 0.931, 0.969), a sensitivity of 70.2% and a specificity of 96.7%.

Table 2 Diagnostic performance of six deep convolutional neural network (DCNN) models for classification of multiple histologic types of ovarian tumors in the original ultrasound image test set.

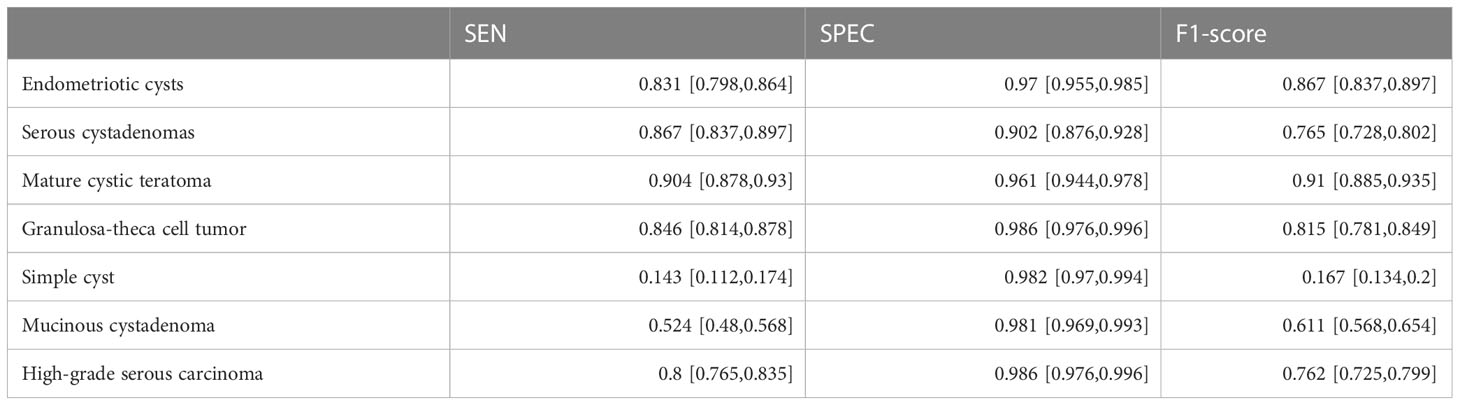

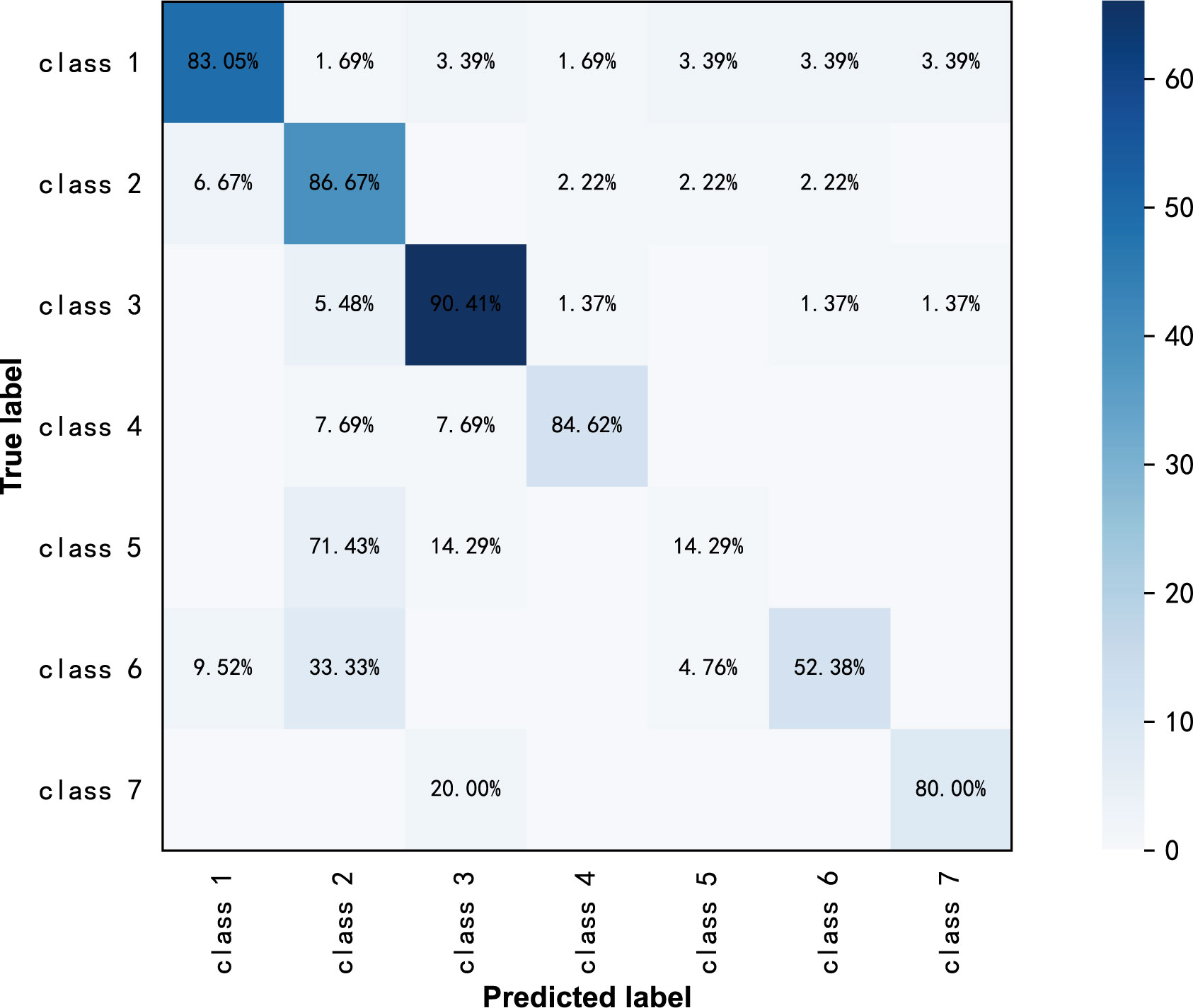

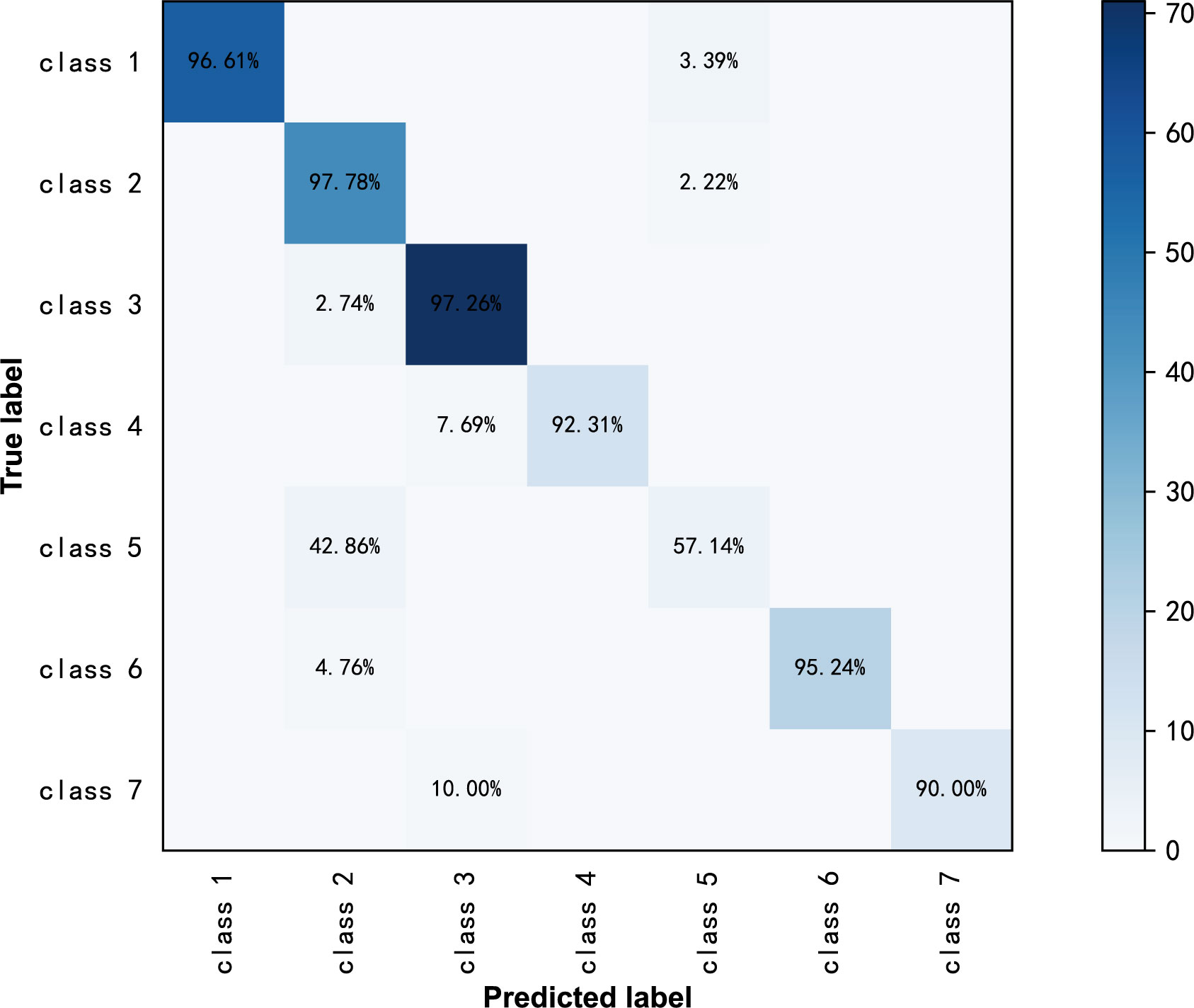

ResNext50 achieved a sensitivity of 80.00%–90.41% in most of the subdivided pathological categories. In high-grade serous carcinoma (class 7), the sensitivity was 80%, the specificity was 98.6% and the F1 score was 0.762 (Table 3). The confusion matrix is shown in Figure 3.

Table 3 Diagnostic performance of the ResNext50 model for seven-class classification in original ultrasound images.

Figure 3 Confusion matrix of ResNext50 models for seven-class classification in original ultrasound images.

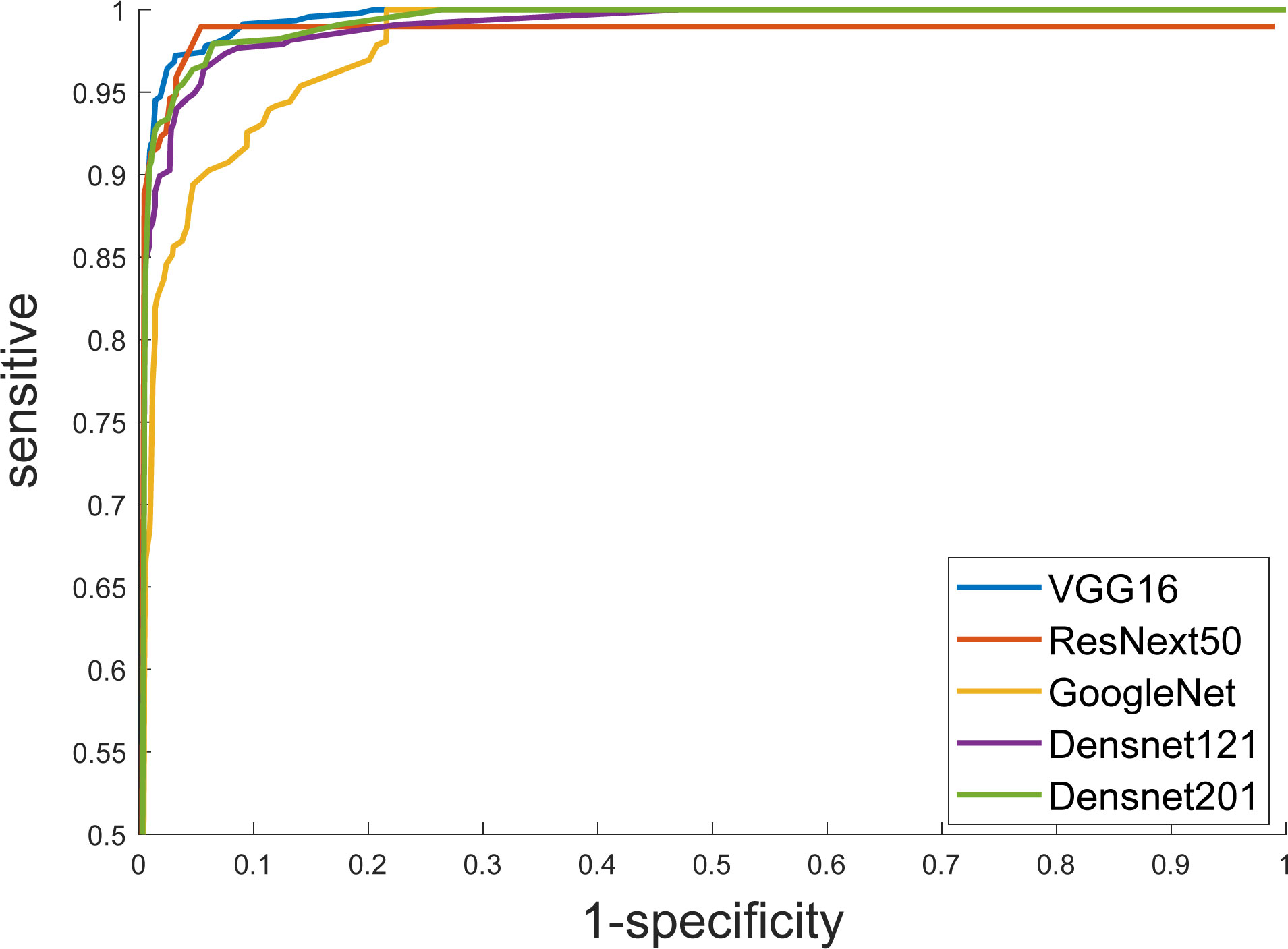

Furthermore, we fed the masked US images into the different DCNN models and obtained better diagnostic results than with the original US images. The ResNext50 model with transfer learning again showed the best discrimination performance, with an AUC of 0.997 (Figure 4), a sensitivity of 89.5%, a specificity of 99.2% and an F1 score of 0.905 (Table 4). The AUCs of the models did not differ significantly from one another (P > 0.05 in each comparison).
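For reference, the AUC of one class against the rest equals the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one. The sketch below computes it by direct pairwise comparison; the function name and the toy scores are illustrative. The source does not state how per-class AUCs were aggregated or which test was used to compare models, so neither is shown here.

```python
def auc_one_vs_rest(scores, is_positive):
    """AUC as the fraction of positive/negative pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for s, p in zip(scores, is_positive) if p]
    neg = [s for s, p in zip(scores, is_positive) if not p]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Toy predicted probabilities for one class and the matching ground truth.
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
truth = [True, True, True, False, False, False]
auc = auc_one_vs_rest(scores, truth)
```

In the toy data, one positive image (score 0.35) is ranked below one negative image (score 0.6), so eight of the nine positive/negative pairs are ordered correctly.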

Figure 4 Receiver operating characteristic (ROC) curves show the diagnostic performance of six DCNN models.

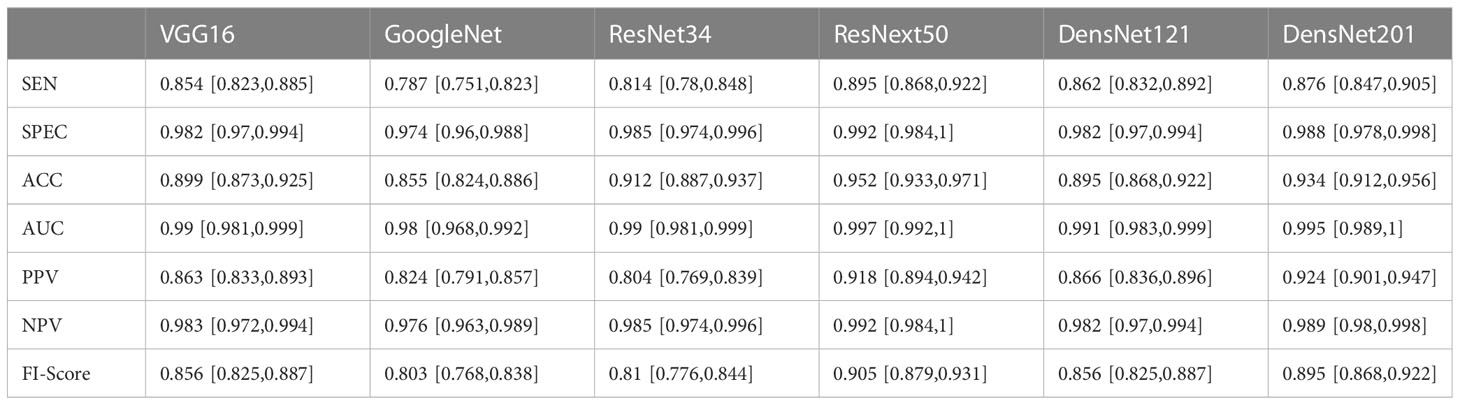

Table 4 Diagnostic performance of six deep convolutional neural network models for classifying multiple histologic types of ovarian tumors in the labeled ultrasound image test set.

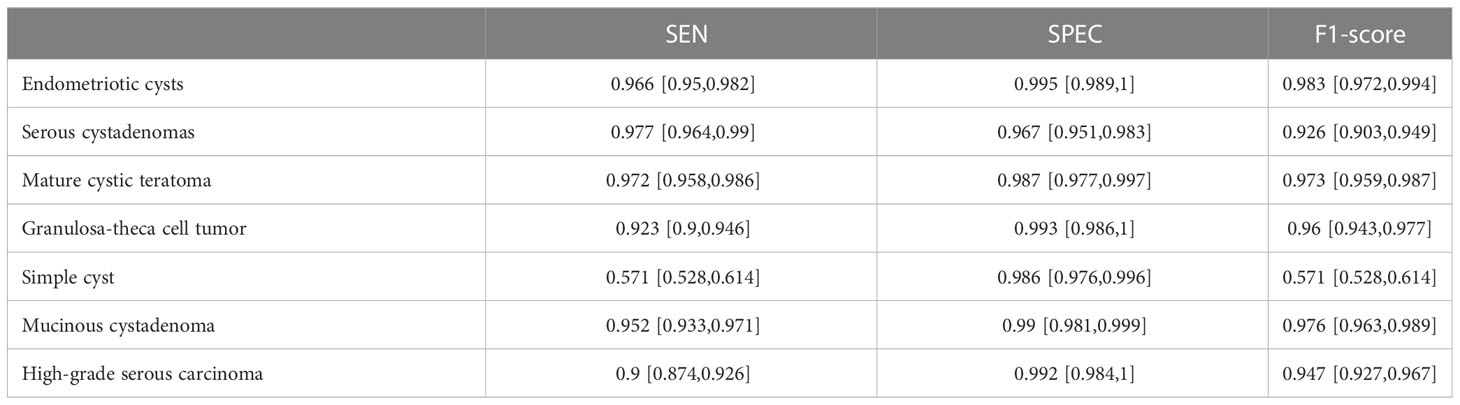

ResNext50 achieved a sensitivity greater than 90% in the subdivided pathologic categories. For high-grade serous carcinoma, the sensitivity was 90%, the specificity was 99.2%, and the F1 score was 0.947 (Table 5). The confusion matrix is shown in Figure 5.

Table 5 Diagnostic performance of the ResNext50 model for seven-class classification in labeled ultrasound images.

Figure 5 Confusion matrix of ResNext50 models for seven-class classification in labeled ultrasound images.

3.3 Visualizing and understanding DCNN

CAM heatmaps (Figure 6) show the localization maps generated by the fully trained ResNext50 model without any manual annotation. Red and yellow regions have the greatest predictive significance in the ResNext50 model, whereas blue and green regions have weaker predictive value. Redder colors indicate a higher DCNN score. For the images that were correctly diagnosed, the DCNN focused on the same areas as the clinicians did.

Figure 6 Examples of the mapping of class activation using the ResNext50 model. The model identified endometriotic cyst (A), mucinous cystadenoma (B), and high-grade serous ovarian cancer (C, D) correctly.

4 Discussion

Diagnosing the pathological categories of ovarian tumors from US images can help physicians select more appropriate treatments. In this study, we applied six DCNNs (VGG16, GoogleNet, ResNet34, ResNext50, DenseNet121, and DenseNet201) to ovarian tumor US images and set up a seven-class classification task in which benign ovarian lesions were divided into mature cystic teratoma, endometriotic cyst, serous cystadenoma, granulosa theca cell tumor, mucinous cystadenoma, and simple cyst. The DCNN models were evaluated on the original and labeled ovarian tumor US images. The results showed that ResNext50 had the highest overall accuracy, and that better results were obtained on the segmented ultrasound images, which are free of noise interference.

In recent years, medical image analysis based on convolutional neural networks has been widely applied to computer-aided diagnosis of diseases. ResNet achieved the highest classification accuracy in the 2015 ImageNet competition. In ovarian tumor ultrasound images, previously developed models have mostly been used to discriminate between benign and malignant ovarian lesions. There has been no study on the classification of ovarian tumors into different pathological categories, which is important for the selection of treatment and surgical procedure. We found that ResNext50 achieved the best overall accuracy in the test set, with an area under the receiver operating characteristic curve (AUC) of 0.997 (95% CI: 0.992, 1), a sensitivity of 89.5% and a specificity of 99.2%. For malignant ovarian tumors, the sensitivity (90% vs 96%), specificity (99.2% vs 87%), and AUC (0.99 vs 0.97) were similar to those of the expert assessment reported by Chen et al. (18).

From the confusion matrix in Figure 5, we can see that high-grade serous carcinoma has a 10% chance of being misclassified as serous cystadenoma, which is likely to occur in the early stages of the carcinoma. In such cases, further imaging evaluation is needed, such as contrast-enhanced ultrasound. Simple cysts can easily be misclassified as serous cystadenomas because of the similar ultrasound appearance of the two lesions. Endometriotic cyst, mature cystic teratoma, granulosa theca cell tumor and mucinous cystadenoma can be correctly diagnosed with more than 95% accuracy, demonstrating the excellent performance of the ResNext50 model.

CAM heatmaps are provided in Figure 6. Most ovarian tumors are cystic-solid tumors, and the degree of malignancy increases with the amount of solid component present in the ultrasound images. Figure 6 shows that the DCNN focuses on the extent of the lesion and even on the solid component of the tumor.

In this study, images were manually cropped. The DCNN produced better diagnostic results on the labeled US images than on the original US images. It is important to help the computer locate the lesion in advance. Despite the fact that most medical deep learning studies use manual ROI selection (30), the potential benefit of auto-segmentation should be explored in future studies.

Our study has several limitations. First, it is a single-center study, and a multi-center evaluation is needed to further develop and validate our model. Second, it is a retrospective study, and the data set is limited. Prospective studies are necessary to make further improvements. Third, our DCNN models were trained on single-modal US images. In clinical practice, multiple types of US images are used to diagnose ovarian cancer, including gray scale, color Doppler, and power Doppler. Investigating the performance of DCNNs on multiple types of US images could be a next step.

It is important to emphasize that computer-assisted image analysis should only be used as an aid in the triage of patients and should not be used to make a definitive diagnosis. Nevertheless, we demonstrate that deep learning algorithms based on ultrasound images can predict the type of ovarian tumor and have the potential to be clinically useful in the triage of women with an ovarian tumor.

5 Conclusions

In conclusion, we demonstrated that DCNNs can achieve high accuracy in distinguishing multiple pathological categories from ovarian ultrasound images. The DCNN-based model may prove to be a powerful clinical decision-support tool with more samples and further model calibration.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

WB, MY, and MW: study design and methods development. GC, SL, and ZT: lesions identification and marking. MW, SL and LC: results inference and implementation of methods. MW, GC, and SL: manuscript writing. WB, MY, MW, GC, SL, and LC: manuscript/results proof read and approval. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the Beijing Hospitals Authority’s Ascent Plan (grant number DFL20190701).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Gupta N, Yadav M, Gupta V, Chaudhary D, Patne SCU. Distribution of various histopathological types of ovarian tumors: a study of 212 cases from a tertiary care center of Eastern uttar pradesh. J Lab Physicians (2019) 11(1):75–81. doi: 10.4103/JLP.JLP_117_18

2. Armstrong DK, Alvarez RD, Bakkum-Gamez JN, Barroilhet L, Behbakht K, Berchuck A, et al. Ovarian cancer, version 2.2020, nccn clinical practice guidelines in oncology. J Natl Compr Canc Netw (2021) 19(2):191–226. doi: 10.6004/jnccn.2021.0007

3. Brown DL, Dudiak KM, Laing FC. Adnexal masses: us characterization and reporting. Radiology (2010) 254(2):342–54. doi: 10.1148/radiol.09090552

4. Campbell S, Gentry-Maharaj A. The role of transvaginal ultrasound in screening for ovarian cancer. Climacteric (2018) 21(3):221–6. doi: 10.1080/13697137.2018.1433656

5. Friedrich L, Meyer R, Levin G. Management of adnexal mass: a comparison of five national guidelines. Eur J Obstet Gynecol Reprod Biol (2021) 265:80–9. doi: 10.1016/j.ejogrb.2021.08.020

6. Glanc P, Benacerraf B, Bourne T, Brown D, Coleman BG, Crum C, et al. First international consensus report on adnexal masses: management recommendations. J Ultrasound Med (2017) 36(5):849–63. doi: 10.1002/jum.14197

7. He J, Baxter SL, Xu J, Xu J, Zhou X, Zhang K. The practical implementation of artificial intelligence technologies in medicine. Nat Med (2019) 25(1):30–6. doi: 10.1038/s41591-018-0307-0

8. Gao J, Jiang Q, Zhou B, Chen D. Convolutional neural networks for computer-aided detection or diagnosis in medical image analysis: an overview. Math Biosci Eng (2019) 16(6):6536–61. doi: 10.3934/mbe.2019326

9. Anthimopoulos M, Christodoulidis S, Ebner L, Geiser T, Christe A, Mougiakakou S. Semantic segmentation of pathological lung tissue with dilated fully convolutional networks. IEEE J BioMed Health Inform (2019) 23(2):714–22. doi: 10.1109/JBHI.2018.2818620

10. Do LN, Baek BH, Kim SK, Yang HJ, Park I, Yoon W. Automatic assessment of aspects using diffusion-weighted imaging in acute ischemic stroke using recurrent residual convolutional neural network. Diagnostics (Basel) (2020) 10(10):803. doi: 10.3390/diagnostics10100803

11. Abd El Kader I, Xu G, Shuai Z, Saminu S, Javaid I, Salim Ahmad I. Differential deep convolutional neural network model for brain tumor classification. Brain Sci (2021) 11(3):352. doi: 10.3390/brainsci11030352

12. Usuzaki T, Takahashi K, Umemiya K, Ishikuro M, Obara T, Kamimoto M, et al. Augmentation method for convolutional neural network that improves prediction performance in the task of classifying primary lung cancer and lung metastasis using ct images. Lung Cancer (2021) 160:175–8. doi: 10.1016/j.lungcan.2021.06.021

13. Du R, Chen Y, Li T, Shi L, Fei Z, Li Y. Discrimination of breast cancer based on ultrasound images and convolutional neural network. J Oncol (2022) 2022:7733583. doi: 10.1155/2022/7733583

14. Meng L, Zhang Q, Bu S. Two-stage liver and tumor segmentation algorithm based on convolutional neural network. Diagnostics (Basel) (2021) 11(10):1806. doi: 10.3390/diagnostics11101806

15. Huang F, Lian J, Ng KS, Shih K, Vardhanabhuti V. Predicting ct-based coronary artery disease using vascular biomarkers derived from fundus photographs with a graph convolutional neural network. Diagnostics (Basel) (2022) 12(6):1390. doi: 10.3390/diagnostics12061390

16. Webb JM, Meixner DD, Adusei SA, Polley EC, Fatemi M, Alizad A. Automatic deep learning semantic segmentation of ultrasound thyroid cineclips using recurrent fully convolutional networks. IEEE Access (2021) 9:5119–27. doi: 10.1109/access.2020.3045906

17. Wang H, Liu C, Zhao Z, Zhang C, Wang X, Li H, et al. Application of deep convolutional neural networks for discriminating benign, borderline, and malignant serous ovarian tumors from ultrasound images. Front Oncol (2021) 11:770683. doi: 10.3389/fonc.2021.770683

18. Chen H, Yang BW, Qian L, Meng YS, Bai XH, Hong XW, et al. Deep learning prediction of ovarian malignancy at us compared with O-rads and expert assessment. Radiology (2022) 304(1):106–13. doi: 10.1148/radiol.211367

19. Al-Karawi D, Al-Assam H, Du H, Sayasneh A, Landolfo C, Timmerman D, et al. An evaluation of the effectiveness of image-based texture features extracted from static b-mode ultrasound images in distinguishing between benign and malignant ovarian masses. Ultrason Imaging (2021) 43(3):124–38. doi: 10.1177/0161734621998091

20. Zhang L, Huang J, Liu L. Improved deep learning network based in combination with cost-sensitive learning for early detection of ovarian cancer in color ultrasound detecting system. J Med Syst (2019) 43(8):251. doi: 10.1007/s10916-019-1356-8

21. Christiansen F, Epstein EL, Smedberg E, Akerlund M, Smith K, Epstein E. Ultrasound image analysis using deep neural networks for discriminating between benign and malignant ovarian tumors: comparison with expert subjective assessment. Ultrasound Obstet Gynecol (2021) 57(1):155–63. doi: 10.1002/uog.23530

22. Shin HC, Roth HR, Gao M, Lu L, Xu Z, Nogues I, et al. Deep convolutional neural networks for computer-aided detection: cnn architectures, dataset characteristics and transfer learning. IEEE Trans Med Imaging (2016) 35(5):1285–98. doi: 10.1109/TMI.2016.2528162

23. Simonyan K, Zisserman A. Very deep convolutional networks for Large-scale image recognition. arXiv (2015) 1409.1556. doi: 10.48550/arXiv.1409.1556

24. Szegedy C, Wei L, Yangqing J, Pierre S, Scott R, Dragomir A, et al. “Going deeper with convolutions,” in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA (2015), 1–9. doi: 10.1109/CVPR.2015.7298594

25. He K, Zhang X, Ren S, Sun J. “Deep Residual Learning for Image Recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA (2016), 770–778. doi: 10.1109/CVPR.2016.90

26. Huang G, Liu Z, Laurens V, Weinberger KQ. Densely connected convolutional networks. IEEE Comput Soc (2016) 2261–69. doi: 10.1109/CVPR.2017.243

27. Zhuang F, Qi Z, Duan K, Xi D, He Q. A comprehensive survey on transfer learning. P IEEE (2020) 109(1):43–76. doi: 10.1109/JPROC.2020.3004555

28. Wenhe L, Xiaojun C, Yan Y, Yi Y, Alexander GH. Few-shot text and image classification via analogical transfer learning. ACM Trans Intell Syst Technol (TIST) (2018) 9(6):1–20. doi: 10.1145/3230709

29. Zhou B, Khosla A, Lapedriza A, Oliva A, Torralba A. Learning deep features for discriminative localization. CVPR IEEE Comput Soc (2016), 2921–9. doi: 10.1109/CVPR.2016.319

Keywords: deep convolutional neural network, deep learning, ultrasound, benign ovarian tumor, high-grade serous carcinoma

Citation: Wu M, Cui G, Lv S, Chen L, Tian Z, Yang M and Bai W (2023) Deep convolutional neural networks for multiple histologic types of ovarian tumors classification in ultrasound images. Front. Oncol. 13:1154200. doi: 10.3389/fonc.2023.1154200

Received: 30 January 2023; Accepted: 12 June 2023;

Published: 23 June 2023.

Edited by:

Kai Ding, University of Oklahoma Health Sciences Center, United States

Reviewed by:

Huiling Xiang, Sun Yat-sen University Cancer Center (SYSUCC), China

Elsa Viora, University Hospital of the City of Health and Science of Turin, Italy

Copyright © 2023 Wu, Cui, Lv, Chen, Tian, Yang and Bai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wenpei Bai, baiwp@bjsjth.cn
