- 1 Mind-Brain Group, Institute for Culture and Society (ICS), University of Navarra, Pamplona, Spain

- 2 IE Business School, IE University, Madrid, Spain

- 3 Basque Center on Cognition, Brain and Language, San Sebastian, Spain

Background: The use of electronic interventions to improve reading is becoming a common resource. This systematic review aims to describe the main characteristics of randomized controlled trials or quasi-experimental studies that have used these tools to improve first-language reading, in order to highlight the features of the most reliable studies and guide future research.

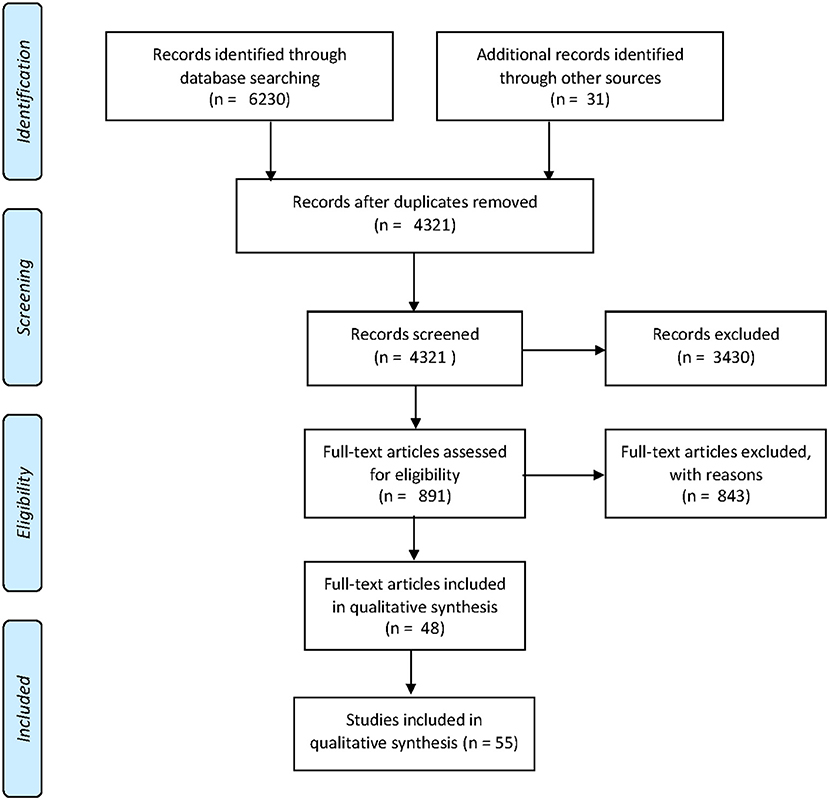

Methods: The whole procedure followed the PRISMA guidelines, and the protocol was registered before starting the process (doi: 10.17605/OSF.IO/CKM4N). Searches in Scopus, PubMed, Web of Science and an institutional reference aggregator (Unika) yielded 6,230 candidate articles. After duplicate removal, screening, and assessment against the eligibility criteria, 55 studies were finally included.

Results: The included studies investigated the improvement of first-language reading in children and adults, and all of them included a control group. Thirty-three different electronic tools were employed, most of them in English, and studies were very diverse in sample size, length of intervention, and control tasks. Risk of bias was analyzed with the PEDro scale, and all studies had a medium or low risk. However, risk of bias due to conflicts of interest could not be evaluated in most studies, since they did not include a statement on this issue.

Conclusion: Future research on this topic should include randomized intervention and control groups, with sample sizes over 65 per group, interventions longer than 15 h, and a proper disclosure of possible conflicts of interest.

Systematic Review Registration: The whole procedure followed the PRISMA guidelines, and the protocol was registered before starting the process in the Open Science Framework (doi: 10.17605/OSF.IO/CKM4N).

Introduction

Reading is a multifaceted ability involving the decoding of letters and words and language comprehension, which can be further broken into other components and precursors including orthography and alphabetics, phonics, phonemic awareness, vocabulary, comprehension, fluency, and motivation and attention.

Reading acquisition is one of the main keys to school success and a crucial component for empowering individuals to participate meaningfully in society. Yet, for a significant number of children, it is still a challenging skill to acquire. Globally, around 250 million children are unable to acquire basic literacy skills (UNESCO). Similarly, many students will not reach the grade-level proficiency needed to study or learn adequately when they enter high school, which will, in turn, increase their risk of dropping out early from the educational system and will possibly result in future underemployment and reduced economic success (Polidano and Ryan, 2017). Many different factors have been related to poor reading outcomes, such as prenatal and perinatal risk factors (Liu et al., 2016), gender, socio-economic factors (Linnakyla et al., 2004), or several mental health problems (Francis et al., 2019). Specific Learning Disabilities (SLD) are one of the main challenges. Among SLD, dyslexia is one of the most common, accounting for up to 80% of diagnosed learning disabilities (Shaywitz, 1998).

There is an extensive number of interventions for reading difficulties, given the social relevance and long-term consequences of this problem. Most of them aim to improve skills in five key areas, namely (i) phonemic awareness, (ii) phonics, (iii) fluency, (iv) vocabulary, and (v) comprehension (National Reading Panel, 2000). While traditional assessments rely on paper-based materials, normally used under the supervision of a professional therapist, the number of computer-based intervention tools to improve reading is growing rapidly (see Franceschini et al., 2015; Rello et al., 2017 for some examples). Computer-based interventions have several advantages over more traditional methods. Importantly, they typically require fewer human resources, and they can provide an attractive environment for children to work with. Additionally, they make it easier to apply a reading instruction method systematically to all students, reducing the influence of individual differences among teachers. Finally, they are usually programmed to adapt their pace of instruction to the progress of the students, hence facilitating individualized attention.

Given the novelty and the heterogeneity of electronic interventions, their efficacy has not been systematically evaluated. It has been noted that much of the published research aiming at evaluating these interventions follows unrandomized, small, single-sample, pre- and post-training protocols (Brooks, 2016). However, in order to be able to evaluate the soundness of these programs, especially in the case of rapidly maturing individuals such as children, it is critical to take into account age-related improvements. Such age-related improvements can only be separated from the effects of interest through the inclusion of experiments with a control group. More generally, a systematic approach to the evidence supporting these interventions must evaluate the risk of many other biases that derive from design decisions, such as the extent to which all those involved in the experiment were blinded to the treatment condition, or the a-priori statistical power of the studies, as the only way of guiding future research. Finally, in the case of a rapidly evolving field, it is of paramount importance that the evaluation of the evidence is up to date and includes the most recent literature.

Hence, we present a systematic review of the electronic interventions aimed to improve first-language reading skills. This systematic review seeks to compare any kind of intervention aimed at improving reading or any of its core components under the same standardized criteria, in order to determine guidelines for assessing the reliability of computer-based interventions, and discriminating which of those are effective. First, we present how we selected the research papers to be included in the review. Second, we attempt to analyze the quality of the selected interventions, proposing key aspects that could be improved in future studies. Finally, we present an overview of the efficacy of those interventions, taking into account the risk of bias of the studies. We believe that this work can benefit professionals who are developing technology-based training and researchers who are evaluating their interventions.

Materials and Methods

The design and reporting of results of this systematic review were carried out following the guidelines for Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) (Moher et al., 2009, 2015). A protocol was written and registered before starting data extraction in the Open Science Framework (Ostiz-Blanco and Arrondo, 2018). It was uploaded on May 14, 2019, and it is available at the following URL: https://osf.io/ckm4n/. Searches were carried out in Scopus (Elsevier), PubMed (Medline Plus) and Web of Science (core collection). Additionally, we used an institutional reference aggregator (Unika) based on the EBSCO service to combine references from 61 external databases (psychology profile) (EBSCO Discovery Service; University of Navarra, Búsqueda básica: UNIKA). A full list of the databases included in the psychology profile of Unika can be found in the Supplementary Material. Initially, searches were limited to the period between 2008 and September 2017, the date on which these searches were carried out. The rationale for the time limit was the fast pace at which computer technologies advance. Hence, any program created over 10 years ago was likely to be outdated. No other limitations were imposed during the search phase. The search was updated on March 2, 2020. Search terms were adapted for each database and limited to abstract, title or keywords.

The general query was (dyslexia OR reading OR “reading disorder” OR “reading difficulties”) AND (computer-based OR videogame OR “mobile application”). The references section of all included articles was used to find further articles of interest.
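As a rough illustration of how the general query above could be assembled before being adapted to each database, the following sketch builds the boolean string from its two term groups. The helper names (`quote`, `or_group`, `build_query`) are hypothetical, and the database-specific field restrictions (abstract, title or keywords) are not reproduced because their exact syntax is not given in the text.

```python
# Term groups taken from the general query described in the text.
CONDITION_TERMS = ["dyslexia", "reading", "reading disorder", "reading difficulties"]
TECHNOLOGY_TERMS = ["computer-based", "videogame", "mobile application"]


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def or_group(terms: list[str]) -> str:
    """Join terms with OR and wrap the whole group in parentheses."""
    return "(" + " OR ".join(quote(t) for t in terms) + ")"


def build_query() -> str:
    """Combine both groups with AND, mirroring the general query."""
    return f"{or_group(CONDITION_TERMS)} AND {or_group(TECHNOLOGY_TERMS)}"


if __name__ == "__main__":
    print(build_query())
```

Keeping the term groups as lists makes it straightforward to add a synonym or to wrap each group in whatever field qualifier a given database requires.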

The PICO (Participants, Intervention, Controls, Outcomes) framework was used to define the key characteristics of our systematic review as follows.

Participants

Samples considered to be drawn from the general population, that is, without specific disabilities or learning disorders were accepted. Therefore, the fact that a minor percentage of the sample had some of these problems was not a reason for exclusion. Additionally, participants with dyslexia or reading disorders/difficulties were also considered as a valid population. There were no age limitations. Articles were excluded if they were carried out in populations with specific disorders or disabilities other than dyslexia, although if the sample had a proportion of participants with such difficulties the article was not necessarily excluded.

Interventions

Articles had to deal with any technologically-based intervention aimed at improving reading skills. In this regard, our definition of reading intervention was atheoretical as we relied on the descriptions provided by the authors of the primary papers. However, interventions were broadly classified as supporting reading at the word level (decoding, i.e., phoneme-grapheme mapping), its precursors (phonological awareness -the sound structure of words- or vocabulary learning), or other related skills such as rhythm or attention.

Studies with participants of any age were included, although a majority of articles in children were expected. Whenever an article indicated that their technological intervention was aimed at improving reading or any of its core components, it was accepted. Interventions aimed at learning a second language were excluded.

Controls

All studies had to include a control group and between-group comparisons. Participants of the control group had to fulfill the same criteria as those described in the participants section. Any intervention in the control group was accepted. Hence, we included articles using passive controls such as “Treatment as Usual” (normal classroom) or wait-list, and also articles with an active control (another learning or even reading task).

Outcomes

At the methodological level, all studies had to be randomized or non-randomized longitudinal interventions (i.e., RCTs or quasi-experimental designs), but any duration or control task was permitted. We included both randomized and non-randomized studies, since the focus of our study was to show how current research is carried out in the field, and not the efficacy of the specific tools. Any outcome measuring an improvement in any of the reading components was accepted, including word reading accuracy, text reading accuracy, reading rate and fluency and phonological skills.

Any type of research format was accepted (articles, theses, congress proceedings, etc.). Reviews found were used to identify additional references.

Search results were imported to Mendeley. Duplicates were removed automatically using the function provided by this software, and manually in the cases where the automatic removal was not successful. Two researchers independently reviewed all titles and abstracts. Any article deemed potentially appropriate was downloaded, and the full text was reviewed to decide whether it fulfilled the inclusion criteria or had to be excluded, in which case the reasons for exclusion were recorded.

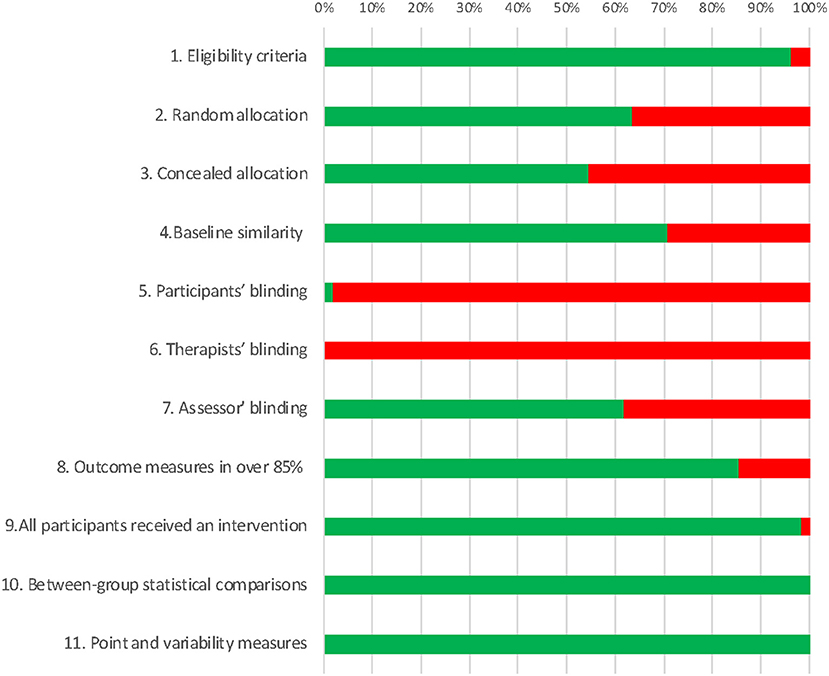

Two researchers extracted data independently and differences were solved by consensus. Data analysis was carried out employing tables and narrative synthesis. The following data was extracted from each article: trained skills (direct reading or other skills); hardware modality; language, country and duration of the intervention; type of control task; sample sizes and age; and results. Risk of bias was evaluated using the Physiotherapy Evidence Database tool (PEDro) (Blobaum, 2006; Physiotherapy Evidence Database, 2012). This scale evaluates 11 items: inclusion criteria and source, random allocation, concealed allocation, similarity at baseline, subject blinding, therapist blinding, assessor blinding, completeness of follow up, intention-to-treat analysis (the analysis of the results of a study according to the initial intervention assignment instead of according to the group at the end of the intervention time), between-group statistical comparisons, and point measures and variability (whether the study includes adequate measure of the size of the treatment effect and its variation, e.g., mean effect in each of the groups and its confidence interval). Each item is rated as “yes” or “no,” and the total PEDro score is the number of items met. Afterwards, studies were divided into three groups: high (less than four points), medium (between four and seven) or low risk of bias (between 8 and 11). Finally, studies were divided into four groups according to their combined sample size and the smallest effect size that they would be able to detect (assuming a two-sample t-test between two equally sized groups and 0.8 power): very large effects (Cohen's d over 1, combined sample size under 53), large effects (Cohen's d over 0.8, combined sample between 53 and 128), medium effects (Cohen's d over 0.5, combined sample size between 128 and 786), and small effects (Cohen's d over 0.2, combined sample size over 786) (Cohen, 1988). 
This categorization was driven by the fact that low power combined with a high proportion of statistically significant results could indicate a high proportion of false positives in the literature (Szucs and Ioannidis, 2017). Conflicts of interest declared in the included articles were also extracted (Cristea and Ioannidis, 2018).
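The two classifications described above can be sketched in a few lines of code. This is only an illustrative sketch: the function names are hypothetical, the handling of boundary values (a combined sample of exactly 53, 128 or 786) is an assumption because the ranges in the text share their endpoints, and the two-sided significance level of 0.05 is also assumed, since only the 0.8 power is stated. The sample-size helper uses a normal approximation rather than the exact two-sample t-test calculation, so it returns slightly smaller numbers than the thresholds quoted above.

```python
import math
from statistics import NormalDist


def risk_of_bias(pedro_score: int) -> str:
    """Map a total PEDro score (0-11 items met) to the risk categories in the text."""
    if not 0 <= pedro_score <= 11:
        raise ValueError("PEDro score must be between 0 and 11")
    if pedro_score < 4:
        return "high"
    if pedro_score <= 7:
        return "medium"
    return "low"


def detectable_effect(n_total: int) -> str:
    """Smallest effect category a study could consistently detect.

    Assumption: boundary values (53, 128, 786) are assigned to the
    better-powered category; the text does not specify this.
    """
    if n_total < 53:
        return "very large"
    if n_total < 128:
        return "large"
    if n_total < 786:
        return "medium"
    return "small"


def approx_n_per_group(d: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Normal-approximation sample size per group for a two-sided, two-sample test.

    Assumption: alpha = 0.05 (two-sided); only the power is given in the text.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / d ** 2)


if __name__ == "__main__":
    for d in (0.2, 0.5, 0.8):
        print(d, approx_n_per_group(d))
```

For a medium effect (d = 0.5) the approximation gives 63 participants per group, close to the 64 per group (128 combined) implied by the thresholds in the text.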

Results

The search in three databases and a reference aggregator yielded 6,230 results. After elimination of duplicates, screening and full text evaluation, 48 articles fulfilled inclusion criteria (Borman et al., 2008; Given et al., 2008; Jiménez and Rojas, 2008; Macaruso and Walker, 2008; Watson and Hempenstall, 2008; Deault et al., 2009; Ecalle et al., 2009; Macaruso and Rodman, 2009, 2011; Pindiprolu and Forbush, 2009; Shelley-Tremblay and Eyer, 2009; Wild, 2009; Arvans, 2010; Huffstetter et al., 2010; Jimenez and Muneton, 2010; Rasinski et al., 2011; Rogowsky, 2011; Saine et al., 2011; Soboleski, 2011; Tijms, 2011; Wolgemuth et al., 2011, 2013; Di Stasio et al., 2012; Falke, 2012; Ponce et al., 2012, 2013; Williams, 2012; Franceschini et al., 2013; Heikkilä et al., 2013; Hill-Stephens, 2013; Mcmurray, 2013; Reed, 2013; Savage et al., 2013; Kamykowska et al., 2014; Plony, 2014; Rello et al., 2015; Shannon et al., 2015; Beaudry, 2016; De Primo, 2016; Jackson, 2016; O'Callaghan et al., 2016; Deshpande et al., 2017; Messer and Nash, 2017; Moser et al., 2017; Nee Chee et al., 2017; Rosas et al., 2017; Daleen et al., 2018; Flis, 2018). Since some of the articles included more than one study, 55 studies were finally analyzed. A flowchart of the screening and inclusion of articles in our review is depicted in Figure 1. Excluded articles and the exclusion criteria they fulfilled are detailed in Supplementary Table 1; a Venn diagram summarizing the reasons for exclusion and the number of articles excluded for each of them is shown in Supplementary Figure 1.

Figure 1. Review flowchart: a flowchart of the screening and inclusion of articles in our systematic review.

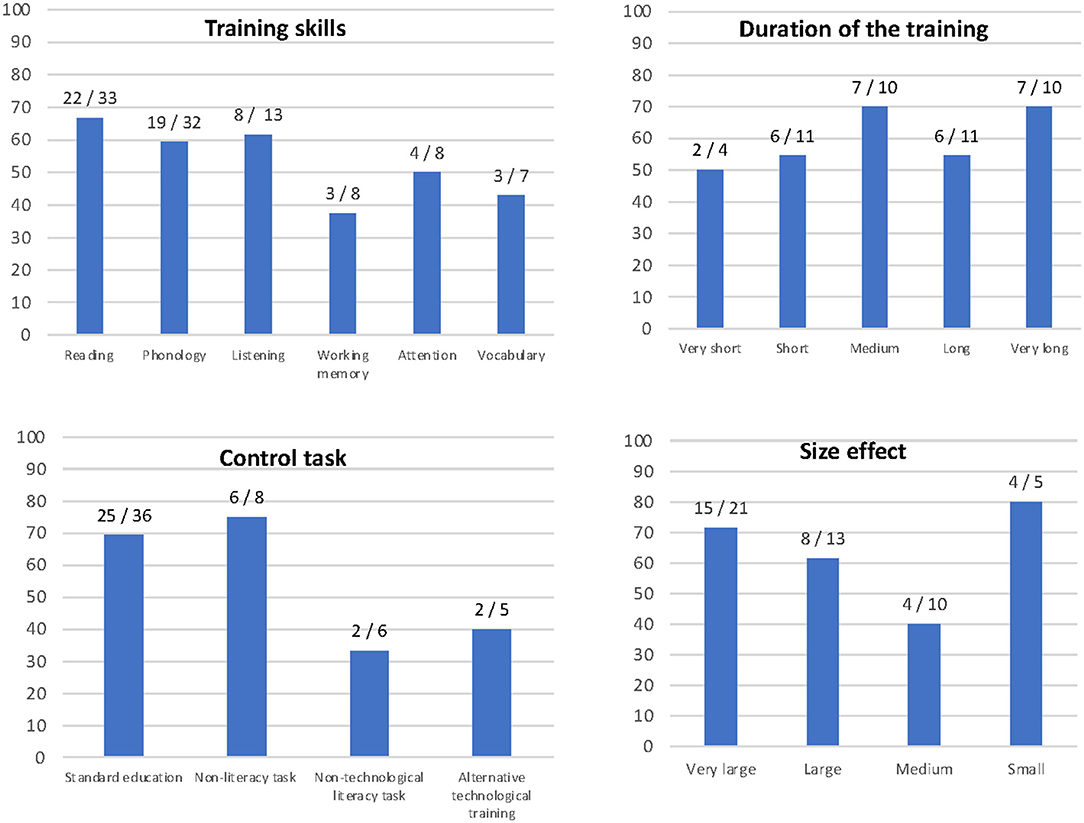

The key characteristics of all included studies are summarized in Table 1. Study methods and results were very heterogeneous, and 33 different training programs were included. The most employed tools were Fast ForWord (in seven studies), Abracadabra (in six) and Graphogame (in four). Most tools were present in only one study, and seven did not provide the name of the software being evaluated. Most tools were in English (69% of the studies), followed by Spanish (15%) and six other languages with only one study each. In fact, nearly half of studies had been carried out in the United States (49%). The vast majority (91%) used computers as hardware, instead of laptops/tablets (7%) or videogame systems (2%).

Table 1. Articles included in the systematic review, and main characteristics of the computerized training used in each of them.

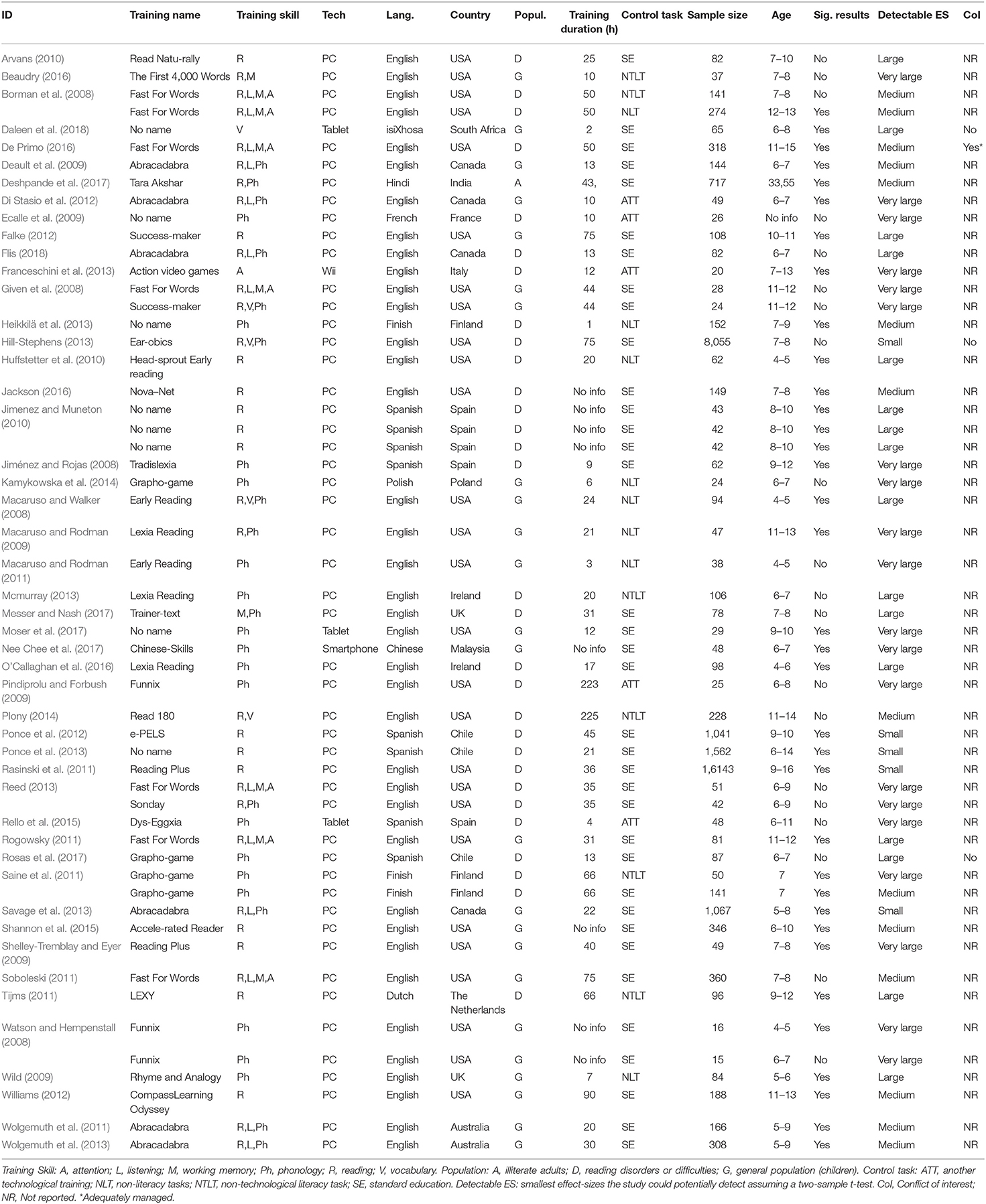

Regarding the reading-related skill trained, 60% of the interventions directly aimed to improve reading, 58% worked on phonology and the remaining studies addressed indirect skills such as oral comprehension (24%), working memory (15%), attention (15%) or vocabulary (13%). Studies were mainly carried out in two different kinds of population: children/adolescents either with (54%) or without (44%) reading difficulties. Only one study (Deshpande et al., 2017) included illiterate adults (2%). Consequently, the median age of the participants was 8.6 years old. Duration of the interventions was highly variable, ranging between 1.25 and 225 h.

The most common control task against which the interventions were compared was standard education (65% of the cases), whereas the remaining studies used active tasks: 15% used a non-linguistic task such as mathematics or art, 11% a non-technological reading intervention, and 9% a different technological reading training. Sample sizes were also heterogeneous, ranging between 15 and 16,243, with a median of 82. Only 9% of the studies had a sample size big enough to consistently detect small effects, and 29% of the studies could identify medium-sized effects. Conversely, 24% would only have been able to detect large effects, and 38% of the studies were only capable of consistently showing statistically significant very large effects.

Thirty-four studies (64%) reported statistically significant effects. Figure 2 shows the proportion of studies with significant results in relation to the different study characteristics, namely training skill, duration of the training, control task used and effect size that studies would have been able to consistently detect.

Figure 2. Statistically significant studies: proportion of studies with statistically significant results in relation to four study characteristics: training skill, duration of the training, control task used and effect size that studies would have been able to consistently detect.

Using the PEDro tool, we assessed the risk of bias for each of the studies included in the review (Figure 3; Supplementary Table 2.2). Twenty-six and 29 studies had a medium and low risk of bias, respectively. Regarding conflicts of interest, only one study reported competing interests that could pose a risk of bias for its conclusions, but such conflicts were adequately managed. Three studies declared no conflicts of interest. Importantly, 51 studies did not include a conflict of interest statement, and therefore the risk of bias due to this issue cannot be estimated.

Figure 3. Risk of bias assessment: percentage of included articles fulfilling each of the PEDro scale items.

Next, we report in detail the characteristics of those studies with the highest quality, since their results should be the most reliable for the research questions on this topic. Five studies were considered to have the highest quality in terms of a low risk of bias, at least a medium treatment length (over 15 h), and a sample size allowing the detection of medium-sized effects. Two of them were included within the same article by Borman et al. and used a similar methodology: they evaluated the effectiveness of Fast ForWord (a computerized reading intervention that uses the principles of neuroplasticity to improve reading and learning) in samples of individuals with low reading skills against an active control condition of arts and gymnastics activities, and utilized the Comprehensive Test of Basic Skills, Fifth Edition (CTBS/5). The first study tested 248 children between 7 and 8 years old and the second 453 between 12 and 13 years old. Only the second study reported statistically significant differences. Another study evaluated Graphogame (a computer game designed to provide intensive training in rapid recognition of grapheme-phoneme associations and further reading skills) in Finnish (Saine et al., 2011), in 50 seven-year-old children at risk of developing reading problems randomized to either a regular reading intervention or a computer-assisted intervention. The training took 66 h and performance was compared to usual classroom activities. Significant training-induced improvements were found on letter naming, reading fluency and spelling. Additionally, these groups were compared to the mainstream reading group. 
The other two studies used Abracadabra (a free access, web-based literacy tool that contains texts and strategies to support word reading, phonics, reading and listening comprehension, and reading fluency) on samples of children between 5 and 9 years old recruited from the general population of Canada and Australia, respectively, utilizing the normal classroom curriculum as the control task (Savage et al., 2013; Wolgemuth et al., 2013). One of the studies found statistically significant results in phonological awareness and reading after 30 h with a sample size of 308 participants, whereas the other study reported differences in phonological skill and letter knowledge after 22 h of training, with a sample size of 1,067 participants. It is important to highlight that none of these articles has a conflict of interest statement, so the possibility of undeclared competing interests cannot be completely ruled out. Also, the variety of designs (randomized at the individual level or the classroom level, comparing computerized trainings against other remediation measures or the normal classroom dynamic, among others), statistical analyses (such as ANCOVA, ANOVA, hierarchical linear models and linear regression) and completeness of reporting precluded the calculation of any meaningful common effect size from these studies.
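The three criteria used to single out the highest-quality studies (low risk of bias, more than 15 h of treatment, and a combined sample able to detect medium-sized effects) can be expressed as a simple filter. The sketch below is illustrative only: the `Study` record and the example entries are hypothetical and do not correspond to the included articles, and treating a combined sample of exactly 128 as sufficient is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Study:
    label: str        # hypothetical identifier, not a real citation
    pedro_score: int  # number of PEDro items met (0-11)
    hours: float      # total duration of the intervention in hours
    n_total: int      # combined sample size of both groups


def is_high_quality(study: Study) -> bool:
    """Apply the three quality criteria described in the text."""
    low_risk = study.pedro_score >= 8        # low risk of bias: 8 to 11 points
    long_enough = study.hours > 15           # at least a medium treatment length
    powered = study.n_total >= 128           # assumption: 128 itself counts
    return low_risk and long_enough and powered


if __name__ == "__main__":
    # Invented examples, each failing at most one criterion.
    examples = [
        Study("A", 9, 30, 308),
        Study("B", 9, 10, 308),   # too short
        Study("C", 6, 30, 308),   # medium risk of bias
        Study("D", 9, 30, 50),    # underpowered for medium effects
    ]
    print([s.label for s in examples if is_high_quality(s)])
```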

Discussion

Technologies evolve at a very fast pace, and educational digital interventions are no exception, whether at the school level (Hubber et al., 2016), at the university level (Arrondo et al., 2017) or in non-formal education (Ostiz-Blanco et al., 2016). The current systematic review provides an overview of the characteristics of published research using digital tools and interventions aimed at improving reading processes. The overarching objective of our analysis is to provide a description of the research available on this topic, in order to guide future investigations. Organizations such as What Works Clearinghouse provide guidance on which specific interventions have greater evidence-based support (U.S. Department of Education, Institute of Education Sciences, 2009, 2010, 2013a,b, 2017), and therefore we did not intend to evaluate the efficacy of existing tools. Instead, we present an overview of how research is carried out in this field of study, showing its strengths and weaknesses in order to reinforce the former and mitigate the latter.

From a methodological point of view, the protocol of this systematic review was pre-registered to reduce the risk of bias (Ostiz-Blanco and Arrondo, 2018), international guidelines were followed throughout its development and reporting (Moher et al., 2009, 2015), and searches were carried out over three different databases and an aggregator that combines results from over sixty additional databases. After screening over four thousand initial articles, our final review comprised 55 studies that included a control group and inter-group comparisons. Indeed, among the most frequent reasons for exclusion was the fact that many studies did not include such a group or only evaluated intraindividual changes between pre and post-intervention phases. However, without proper control groups and comparisons, studies can hardly assess efficacy, especially when dealing with populations developing very fast such as children.

As stated above, our systematic review was not designed to evaluate the efficacy of interventions. Moreover, the very different characteristics of the studies and tools reviewed hamper the possibility of adequately comparing research outcomes, even if effect sizes had been calculated. In any case, our review highlights a number of features that, from a methodological point of view, are shared by the highest quality studies included in our analysis. Five studies fulfilled the criteria to be considered of high quality (Borman et al., 2008; Saine et al., 2011; Savage et al., 2013; Wolgemuth et al., 2013): a low risk of bias, a treatment duration over 15 h and a combined sample size over 128, which makes them capable of detecting at least medium-sized effects. These studies showcase the experimental design that future studies should try to emulate; additionally, their results are the most informative regarding effectiveness evaluation.

Most intervention programs were implemented on computers, whereas we found few studies using smartphones, tablets or videogame systems. This might seem surprising since mobile technologies offer important advantages over desktop computers regarding usability and motivation, including the fact that they are touch- and movement-responsive or that children associate them with leisure activities. As there are long delays between the creation of a program, its testing and the publication of results, it is likely that this proportion will change over time, and upcoming studies will reflect an integration of this hardware within educational interventions. Similarly, the majority of the published interventions were carried out in English, some in Spanish, and very few in other languages. It is unknown whether the underrepresentation of other languages derives from a lack of tools for language training in those languages or a lack of publication of research results in international journals. In this regard, their potential world-wide audience could make digital systems especially suited for the implementation of programs that train language-independent reading-related skills, since such programs could be distributed with only minor changes (Burgstahler, 2015). Regarding the type of language skill trained, most studies provided either a direct reading training or phonological training. Nevertheless, the number of studies centered on the improvement of other skills, such as hearing or visual attention, was still relevant. Studies that directly trained reading skills had a higher proportion of statistically significant results in our review, and similar findings have been reported in the literature. This could indicate that direct language training has higher efficacy than other approaches and should be recommended as the default approach. However, indirect training could also have advantages in some cases. 
For example, it could be useful as an early intervention for very young children at risk of later developing reading problems (Lyytinen et al., 2009; Snowling, 2013). Furthermore, it could increase motivation, as the training does not focus on an area where the individual may feel impaired (Wouters et al., 2013). Remarkably, all high-quality studies included in our review used these direct-training approaches, which indicates both a higher level of evidence for such interventions and the need for further high-quality research on the effectiveness of non-direct trainings. Similarly, studies were typically aimed at primary school students who were either acquiring or consolidating their language skills. Research on other age segments, including preschoolers, secondary school students, or adults, is lacking. Duration of interventions was highly variable. Although it is not clear from our results whether longer interventions lead to better outcomes, this seems a reasonable assumption. In our data, very short interventions were related to a lower rate of positive results. The creation of engaging games that children can use independently for extended periods could be an effective strategy to obtain reading improvements without individuals feeling an increase in their educational workload over time.

Among the most useful aspects to take into account when developing future studies is the risk of bias of previously published research. Moreover, it has been recently proposed that reviews should only be considered systematic if they evaluate the risk of bias of included studies (Krnic Martinic et al., 2019), a step that is rarely carried out in systematic reviews in psychology (Leclercq et al., 2019). Risk of bias was assessed by using the PEDro scale (Blobaum, 2006; Physiotherapy Evidence Database, 2012). Results indicated that none of the studies here had a high risk of bias. This is partly explained by some of our a-priori inclusion criteria, which to some extent were more stringent than the options provided by the scale. For example, by requiring that studies had to include inter-group comparisons, items 10 and 11 of the scale were satisfied in all cases. However, other items of the scale, such as whether assessors were blinded to the treatment group of the participants, require complex organization when carrying out school-based research and were very rarely fulfilled. Future studies should be designed to try to overcome previous limitations and should improve randomization and the blinding of all those involved in the research project (participants, therapists and assessors). While we required that all studies included a control group, not all research involved the same type of controls, and this could greatly influence the interpretation of results. Two thirds of the interventions were carried out in addition to or instead of the standard classroom education and compared to the latter, whereas only a few studies used active linguistic tasks as controls. Relatedly, the few studies using active linguistic tasks had a reduced proportion of significant results. 
However, studies without such controls would at most be able to conclude that the methodology tested works, but would not be able to evaluate whether the training provides an improvement over any existing methodology. In this regard, since the development and implementation of newer methodologies have important associated costs, it would be hard to justify the expenses without evidence of their differential effectiveness. In addition to risk of bias, we evaluated sample sizes and their power to detect given effect sizes. The median sample size was 82 participants, whereas only one third of the studies could detect medium effect sizes and <10% small ones. While in theory larger sample sizes do not lead to a lower bias, it has been shown that small studies are more prone to publication bias (e.g., only being published if positive) and have more unstable results (e.g., are more dependent on analytic choices made by the researcher) (Kühberger et al., 2014; Rubin, 2017). Hence, sample size seems a key factor for improvement in future research.

The most researched interventions were the commercial programs Fast ForWord and Abracadabra. Four out of the five studies fulfilling our excellence criteria used these tools. As occurs in biomedical research, partnerships between universities and publishers seem a promising way to carry out well-powered and well-designed studies and manage their costs. However, this kind of research has its own conflicts of interest that should also be taken into account in future research. Relatedly, another avenue for improvement is making declarations of competing interests mandatory in articles. The great majority of the articles in our systematic review did not include one, even when they were dealing with commercial applications.

To conclude, we found an increasing number of studies that use computer games or apps to improve reading skills, and these tools seem a promising alternative in education. However, research is still in its infancy, and studies to date have important limitations that hinder their usefulness for guiding decisions in the educational domain. Future studies should be better-designed randomized controlled trials with larger sample sizes, and should be able to answer the question of whether a computerized intervention adds any value to existing methods. Partnerships between universities and publishers or other entrepreneurial initiatives could be a way of moving forward, but conflicts of interest in such cases should be disclosed.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

MO-B and GA: conceptualization. MO-B, GA, and JB: funding acquisition and formal analysis. GA: methodology and supervision. MO-B, GA, JB, IG-A, and PD-S: data extraction. MO-B, GA, JB, and LR: writing–original draft. MO-B, GA, JB, IG-A, PD-S, LR, and ML: writing–review and editing. All authors contributed to the article and approved the submitted version.

Funding

This research was supported by the Institute for Culture and Society (ICS, University of Navarra), Obra Social La Caixa, Fundación Caja Navarra, Fundación Banco Sabadell, and the Severo Ochoa program grant SEV-2015-049.

Conflict of Interest

MO-B, ML, and JB have developed a videogame to improve reading in people with dyslexia (Jellys). LR's research is also focused on computer-based tools to improve reading in people with dyslexia (DytectiveU). None of these computer-based tools are expected to yield any present or future direct economic profits.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We thank Stephanie Valencia (Carnegie Mellon University) for her assistance in writing the protocol for this systematic review.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.652948/full#supplementary-material

References

Arrondo, G., Bernacer, J., and Díaz Robredo, L. (2017). Visualización de modelos digitales tridimensionales en la enseñanza de anatomía: principales recursos y una experiencia docente en neuroanatomía. Educ. Med. 18, 267–269. doi: 10.1016/j.edumed.2016.06.022

Arvans, R. (2010). Improving reading fluency and comprehension in elementary students using read naturally (Dissertation). Western Michigan University, Michigan.

Beaudry, A. L. (2016). The impact of a computer-based shared reading program on second grade readers' vocabulary development, overall reading, and motivation (Dissertation). Widener University, Chester, PA.

Borman, G. D., Benson, J. G., and Overman, L. (2008). A Randomized Field Trial of the Fast ForWord Language Computer-Based Training Program. Educ. Eval. Policy Anal. 31, 82–106. doi: 10.3102/0162373708328519

Brooks, G. (2016). What Works for Children and Young People With Literacy Difficulties? 5th Edn. Available online at: http://www.interventionsforliteracy.org.uk/wp-content/uploads/2017/11/What-Works-5th-edition-Rev-Oct-2016.pdf (accessed August 23, 2021).

Burgstahler, S. (2015). Equal Access: Universal Design of Instruction. Washington, DC: University of Washington.

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences, 2nd Edn. London: Routledge.

Cristea, I.-A., and Ioannidis, J. P. A. (2018). Improving disclosure of financial conflicts of interest for research on psychosocial interventions. JAMA Psychiatry 75, 541–542. doi: 10.1001/jamapsychiatry.2018.0382

Klop, D., Marais, L., Msindwana, A., and De Wet, F. (2018). Learning new words from an interactive electronic storybook intervention. S. Afr. J. Commun. Disord. 65, e1–e8. doi: 10.4102/sajcd.v65i1.601

De Primo, L. D. (2016). Read more, read better: how sustained silent reading impacts middle school students' comprehension (Dissertation). Capella University, Minneapolis, MN.

Deault, L., Savage, R., and Abrami, P. (2009). Inattention and response to the ABRACADABRA web-based literacy intervention. J. Res. Educ. Eff. 2, 250–286. doi: 10.1080/19345740902979371

Deshpande, A., Desrochers, A., Ksoll, C., and Shonchoy, A. S. (2017). The impact of a computer-based adult literacy program on literacy and numeracy: evidence from India. World Dev. 96, 451–473. doi: 10.1016/j.worlddev.2017.03.029

Di Stasio, M. R., Savage, R., and Abrami, P. C. (2012). A follow-up study of the ABRACADABRA web-based literacy intervention in Grade 1. J. Res. Read. 35, 69–86. doi: 10.1111/j.1467-9817.2010.01469.x

EBSCO Discovery Service. EBSCO Information Services, Inc. | www.ebsco.com. Available online at: https://www.ebsco.com/products/ebsco-discovery-service (accessed August 23, 2021).

Ecalle, J., Magnan, A., Bouchafa, H., and Gombert, J. E. (2009). Computer-based training with ortho-phonological units in dyslexic children: new investigations. Dyslexia 15, 218–238. doi: 10.1002/dys.373

Falke, T. R. (2012). The effects of implementing a computer-based reading support program on the reading achievement of sixth graders (Dissertation). University of Missouri, Kansas City, MO.

Flis, A. (2018). ABRACADABRA: The effectiveness of a computer-based reading intervention program for students at-risk for reading failure, and students learning English as a second language (Dissertation). University of British Columbia, British Columbia.

Franceschini, S., Bertoni, S., Ronconi, L., Molteni, M., Gori, S., and Facoetti, A. (2015). “Shall we play a game?”: improving reading through action video games in developmental dyslexia. Curr. Dev. Disord. Reports 2, 318–329. doi: 10.1007/s40474-015-0064-4

Franceschini, S., Gori, S., Ruffino, M., Viola, S., Molteni, M., and Facoetti, A. (2013). Action video games make dyslexic children read better. Curr. Biol. 23, 462–466. doi: 10.1016/j.cub.2013.01.044

Francis, D. A., Caruana, N., Hudson, J. L., and McArthur, G. M. (2019). The association between poor reading and internalising problems: a systematic review and meta-analysis. Clin. Psychol. Rev. 67, 45–60. doi: 10.1016/j.cpr.2018.09.002

Given, B. K., Wasserman, J. D., Chari, S. A., Beattie, K., and Eden, G. F. (2008). A randomized, controlled study of computer-based intervention in middle school struggling readers. Brain Lang. 106, 83–97. doi: 10.1016/j.bandl.2007.12.001

Heikkilä, R., Aro, M., Nähri, V., Westerholm, J., and Ahonen, T. (2013). Does training in syllable recognition improve reading speed? a computer-based trial with poor readers from second and third grade. Sci. Stud. Read. 17, 398–414. doi: 10.1080/10888438.2012.753452

Hill-Stephens, E. (2013). The effects of a computer-based, multimedia, individualized instructional approach on oral reading fluency of at-risk learners (Dissertation). Capella University, Minneapolis, MN.

Hubber, P. J., Outhwaite, L. A., Chigeda, A., McGrath, S., Hodgen, J., and Pitchford, N. J. (2016). Should touch screen tablets be used to improve educational outcomes in primary school children in developing countries? Front. Psychol. 7:839. doi: 10.3389/fpsyg.2016.00839

Huffstetter, M., King, J. R., Onwuegbuzie, A. J., Schneider, J. J., and Powell-Smith, K. a. (2010). Effects of a computer-based early reading program on the early reading and oral language skills of at-risk preschool children. J. Educ. Stud. Placed Risk 15, 279–298. doi: 10.1080/10824669.2010.532415

Jackson, A. H. (2016). NovaNET's effect on the reading achievement of at-risk middle school students (Dissertation). Walden University, Minneapolis, MN.

Jimenez, J. E., and Muneton, M. A. (2010). Effects of computer-assisted practice on reading and spelling in children with learning disabilities. Psicothema 22, 813–821.

Jiménez, J. E., and Rojas, E. (2008). Efectos del videojuego Tradislexia en la conciencia fonologica y reconocimiento de palabras en niños disléxicos. Psicothema 20, 347–353.

Kamykowska, J., Haman, E., Latvala, J. M., Richardson, U., and Lyytinen, H. (2014). Developmental changes of early reading skills in six-year-old Polish children and GraphoGame as a computer-based intervention to support them. L1 Educ. Stud. Lang. Lit. 13, 1–13. doi: 10.17239/L1ESLL-2013.01.05

Krnic Martinic, M., Pieper, D., Glatt, A., and Puljak, L. (2019). Definition of a systematic review used in overviews of systematic reviews, meta-epidemiological studies and textbooks. BMC Med. Res. Methodol. 19:203. doi: 10.1186/s12874-019-0855-0

Kühberger, A., Fritz, A., and Scherndl, T. (2014). Publication bias in psychology: a diagnosis based on the correlation between effect size and sample size. PLoS ONE 9:e105825. doi: 10.1371/journal.pone.0105825

Leclercq, V., Beaudart, C., Ajamieh, S., Rabenda, V., Tirelli, E., and Bruyère, O. (2019). Meta-analyses indexed in PsycINFO had a better completeness of reporting when they mention PRISMA. J. Clin. Epidemiol. 115, 46–54. doi: 10.1016/j.jclinepi.2019.06.014

Linnakyla, P., Malin, A., and Taube, K. (2004). Factors behind low reading literacy achievement. Scand. J. Educ. Res. 48, 231–249. doi: 10.1080/00313830410001695718

Liu, L., Wang, J., Shao, S., Luo, X., Kong, R., Zhang, X., et al. (2016). Descriptive epidemiology of prenatal and perinatal risk factors in a Chinese population with reading disorder. Sci. Rep. 6:36697. doi: 10.1038/srep36697

Lyytinen, H., Ronimus, M., Alanko, A., Poikkeus, A.-M., and Taanila, M. (2009). Early identification of dyslexia and the use of computer game-based practice to support reading acquisition. Nord. Psychol. 59, 109–126. doi: 10.1027/1901-2276.59.2.109

Macaruso, P., and Rodman, A. (2009). Benefits of computer-assisted instruction for struggling readers in middle school. Eur. J. Spec. Needs Educ. 24, 103–113. doi: 10.1080/08856250802596774

Macaruso, P., and Rodman, A. (2011). Efficacy of computer-assisted instruction for the development of early literacy skills in young children. Read. Psychol. 32, 172–196. doi: 10.1080/02702711003608071

Macaruso, P., and Walker, A. (2008). The efficacy of computer-assisted instruction for advancing literacy skills in kindergarten children. Read. Psychol. 29, 266–287. doi: 10.1080/02702710801982019

Mcmurray, S. (2013). An evaluation of the use of Lexia Reading software with children in Year 3, Northern Ireland (6- to 7-year olds). J. Res. Spec. Educ. Needs 13, 15–25. doi: 10.1111/j.1471-3802.2012.01238.x

Messer, D., and Nash, G. (2017). An evaluation of the effectiveness of a computer-assisted reading intervention. J. Res. Read. 41, 1–19. doi: 10.1111/1467-9817.12107

Moher, D., Liberati, A., Tetzlaff, J., and Altman, D. G. (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 6:e1000097. doi: 10.1371/journal.pmed.1000097

Moher, D., Shamseer, L., Clarke, M., Ghersi, D., Liberati, A., Petticrew, M., et al. (2015). Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 4:1. doi: 10.1186/2046-4053-4-1

Moser, G. P., Morrison, T. G., and Wilcox, B. (2017). Supporting fourth-grade students' word identification using application software. Read. Psychol. 38, 349–368. doi: 10.1080/02702711.2016.1278414

National Reading Panel (2000). Teaching Children to Read: An Evidence-Based Assessment of the Scientific Research Literature on Reading and Its Implications for Reading Instruction. NIH Publ. No. 00–4769. National Inst of Child Health & Human Development.

Nee Chee, K., Yahaya, N., and Hasniza Ibrahim, N. (2017). Effectiveness of mobile learning application in improving reading skills in Chinese language and towards post-attitudes. Int. J. Mob. Learn. Organ. 11, 210–225. doi: 10.1504/IJMLO.2017.085347

O'Callaghan, P., McIvor, A., McVeigh, C., and Rushe, T. (2016). A randomized controlled trial of an early-intervention, computer-based literacy program to boost phonological skills in 4- to 6-year-old children. Br. J. Educ. Psychol. 86, 546–558. doi: 10.1111/bjep.12122

Ostiz-Blanco, M., and Arrondo, G. (2018). Improving Reading Through Videogames and Digital Apps: A Systematic Review (Protocol). Pamplona: University of Navarra. doi: 10.17605/OSF.IO/CKM4N

Ostiz-Blanco, M., Calafi, A. P., Azcárate, M. L., and Carrión, S. G. (2016). “ACMUS: comparative assessment of a musical multimedia tool,” in Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) eds R. Bottino, J. Jeuring, and R. Veltkamp (Cham: Springer International Publishing), 321–330.

Physiotherapy Evidence Database (2012). PEDro Scale. Available online at: https://pedro.org.au/english/resources/pedro-scale/ (accessed August 23, 2021).

Pindiprolu, S. S., and Forbush, D. (2009). Computer-based reading programs: a preliminary investigation of two parent implemented programs with students at-risk for reading failure. J. Int. Assoc. Spec. Educ. 10, 71–81.

Plony, D. A. (2014). The effects of read 180 on student achievement (Dissertation). Keiser University, Fort Lauderdale, FL.

Polidano, C., and Ryan, C. (2017). What happens to students with low reading proficiency at 15? evidence from Australia. Econ. Rec. 93, 600–614. doi: 10.1111/1475-4932.12367

Ponce, H. R., Lopez, M. J., and Mayer, R. E. (2012). Instructional effectiveness of a computer-supported program for teaching reading comprehension strategies. Comput. Educ. 59, 1170–1183. doi: 10.1016/j.compedu.2012.05.013

Ponce, H. R., Mayer, R. E., and Lopez, M. J. (2013). A computer-based spatial learning strategy approach that improves reading comprehension and writing. Educ. Technol. Res. Dev. 61, 819–840. doi: 10.1007/s11423-013-9310-9

Rasinski, T., Samuels, S. J., Hiebert, E., Petscher, Y., and Feller, K. (2011). The relationship between a silent reading fluency instructional protocol on students' reading comprehension and achievement in an urban school setting. Read. Psychol. 32, 75–97. doi: 10.1080/02702710903346873

Reed, M. S. (2013). A comparison of computer-based and multisensory interventions on at-risk students' reading achievement (Dissertation). Indiana University of Pennsylvania, Indiana, PA.

Rello, L., Bayarri, C., Otal, Y., and Pielot, M. (2015). “A computer-based method to improve the spelling of children with dyslexia,” in ACM SIGACCESS Conf. Comput. Access. (Rochester, NY).

Rello, L., Macias, A., Herrera, M., de Ros, C., Romero, E., and Bigham, J. P. (2017). “DytectiveU: a game to train the difficulties and the strengths of children with dyslexia,” in Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility (Baltimore, MD), 319–320.

Rogowsky, B. A. (2011). The impact of Fast ForWord on sixth grade students' use of Standard Edited American English (Dissertation). Wilkes University, Wilkes-Barre, PA.

Rosas, R., Escobar, J. P., Ramírez, M. P., Meneses, A., and Guajardo, A. (2017). Impact of a computer-based intervention in Chilean children at risk of manifesting reading difficulties/Impacto de una intervención basada en ordenador en niños chilenos con riesgo de manifestar dificultades lectoras. Infanc. Aprendiz. 40, 158–188. doi: 10.1080/02103702.2016.1263451

Rubin, M. (2017). An evaluation of four solutions to the forking paths problem: adjusted alpha, preregistration, sensitivity analyses, and abandoning the Neyman-Pearson approach. Rev. Gen. Psychol. 21, 321–329. doi: 10.1037/gpr0000135

Saine, N. L., Lerkkanen, M., Ahonen, T., Tolvanen, A., and Lyytinen, H. (2011). Computer-assisted remedial reading intervention for school beginners at risk for reading disability. Child Dev. 82, 1013–1028. doi: 10.1111/j.1467-8624.2011.01580.x

Savage, R., Abrami, P. C., Piquette, N., Wood, E., Deleveaux, G., Sanghera-Sidhu, S., et al. (2013). A (Pan-Canadian) cluster randomized control effectiveness trial of the ABRACADABRA web-based literacy program. J. Educ. Psychol. 105, 310–328. doi: 10.1037/a0031025

Shannon, L. C., Styers, M. K., Wilkerson, S. B., and Peery, E. (2015). Computer-assisted learning in elementary reading: a randomized control trial. Comput. Sch. 32, 20–34. doi: 10.1080/07380569.2014.969159

Shelley-Tremblay, J., and Eyer, J. (2009). Effect of the Reading Plus program on reading skills in second graders. J. Behav. Optom. 20, 59–66.

Snowling, M. J. (2013). Early identification and interventions for dyslexia: a contemporary view. J. Res. Spec. Educ. Needs 13, 7–14. doi: 10.1111/j.1471-3802.2012.01262.x

Soboleski, P. K. (2011). Fast ForWord: An investigation of the effectiveness of computer-assisted reading intervention (Dissertation). Bowling Green State University, Bowling Green, OH.

Szucs, D., and Ioannidis, J. P. A. (2017). Empirical assessment of published effect sizes and power in the recent cognitive neuroscience and psychology literature. PLoS Biol. 15:e2000797. doi: 10.1371/journal.pbio.2000797

Tijms, J. (2011). Effectiveness of computer-based treatment for dyslexia in a clinical care setting: Outcomes and moderators. Educ. Psychol. 31, 873–896. doi: 10.1080/01443410.2011.621403

U.S. Department of Education Institute of Education Sciences. (2009). What Works Clearinghouse. Adolescent Literacy Intervention Report: Accelerated Reader [TM].

U.S. Department of Education Institute of Education Sciences. (2010). What Works Clearinghouse. Students with Learning Disabilities Intervention Report: Herman Method [TM].

U.S. Department of Education Institute of Education Sciences. (2013a). What Works Clearinghouse. Beginning Reading Intervention Report: Read Naturally [TM].

U.S. Department of Education Institute of Education Sciences. (2013b). What Works Clearinghouse. Beginning Reading Intervention Report: Fast ForWord [TM].

U.S. Department of Education Institute of Education Sciences. (2017). What Works Clearinghouse. Beginning Reading Intervention Report: Success for All [TM].

UNESCO. Literacy. Available online at: https://en.unesco.org/themes/literacy (accessed August 23, 2021).

University of Navarra. Búsqueda básica: UNIKA. Eds.b.ebscohost.com. Available online at: https://eds.b.ebscohost.com/eds/search/basic?vid=3&sid=a67126af-33eb-43c0-aa14-719f27669949%40sessionmgr102 (accessed August 23, 2021).

Watson, T., and Hempenstall, K. (2008). Effects of a computer based beginning reading program on young children. Australas. J. Educ. Technol. 24, 258–274. doi: 10.14742/ajet.1208

Wild, M. (2009). Using computer-aided instruction to support the systematic practice of phonological skills in beginning readers. J. Res. Read. 32, 413–432. doi: 10.1111/j.1467-9817.2009.01405.x

Williams, Y. (2012). An evaluation of the effectiveness of integrated learning systems on urban middle school student achievement (Dissertation). University of Oklahoma, Norman, OK.

Wolgemuth, J., Savage, R., Helmer, J., Lea, T., Harper, H., Chalkiti, K., et al. (2011). Using computer-based instruction to improve Indigenous early literacy in Northern Australia: a quasi-experimental study The NT context. Australas. J. Educ. Technol. 27, 727–750. doi: 10.14742/ajet.947

Wolgemuth, J. R., Savage, R., Helmer, J., Harper, H., Lea, T., Abrami, P. C., et al. (2013). ABRACADABRA aids Indigenous and non-Indigenous early literacy in Australia: evidence from a multisite randomized controlled trial. Comput. Educ. 67, 250–264. doi: 10.1016/j.compedu.2013.04.002

Keywords: computer-based intervention, dyslexia, first-language, PEDro, PRISMA

Citation: Ostiz-Blanco M, Bernacer J, Garcia-Arbizu I, Diaz-Sanchez P, Rello L, Lallier M and Arrondo G (2021) Improving Reading Through Videogames and Digital Apps: A Systematic Review. Front. Psychol. 12:652948. doi: 10.3389/fpsyg.2021.652948

Received: 13 January 2021; Accepted: 10 August 2021;

Published: 16 September 2021.

Edited by:

Fang Huang, Qingdao University, China

Reviewed by:

Elena Florit, University of Verona, Italy

Nick Preston, University of Leeds, United Kingdom

Copyright © 2021 Ostiz-Blanco, Bernacer, Garcia-Arbizu, Diaz-Sanchez, Rello, Lallier and Arrondo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gonzalo Arrondo, garrondo@unav.es
