An Efficient Lossless Compression Method for Periodic Signals Based on Adaptive Dictionary Predictive Coding

Abstract

1. Introduction

2. Algorithm Introduction

2.1. Coding Algorithm Introduction

- (1) The first two samples, s(1) and s(2), of the input array to be coded have no two preceding adjacent samples. They therefore cannot be predicted and are output directly, unchanged.

- (2) Early in the coding process the dictionary is incomplete, so it may hold no predicted value for the current context. In that case, Equation (1), s′(k) = a·s(k−2) + b·s(k−1), computed from the two preceding adjacent samples, is used as the predicted value of the sample being coded.

| Algorithm 1. Steps of the coding algorithm |
|---|
| Input: sequence to be coded {s(k)}, k = 1, …, n; Output: coded sequence {e(k)} |
| Initialize: dictionary D ← empty |
| Initialize: address index k ← 0 |
| while k < n (n is the length of the input array {s(k)}) |
| do k ← k + 1 |
| if k < 3 |
| then e(k) ← s(k) |
| else |
| if D(s(k−2), s(k−1)) = NULL |
| then s′(k) ← a·s(k−2) + b·s(k−1) |
| else s′(k) ← D(s(k−2), s(k−1)) |
| end if |
| e(k) ← s(k) − s′(k) |
| D(s(k−2), s(k−1)) ← s(k) |
| end if |
| end while |
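The coding steps above can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the function name `adp_encode` is hypothetical, the dictionary is modelled as a Python `dict` keyed by the pair of preceding samples, and the default coefficients `a = -1`, `b = 2` (linear extrapolation) are an assumption, since the text here does not give their values.

```python
from typing import Dict, List, Tuple


def adp_encode(s: List[int], a: int = -1, b: int = 2) -> List[int]:
    """Turn samples s into residuals e, following the steps of Algorithm 1.

    a and b are the linear-prediction coefficients. The defaults are an
    assumption for illustration, not values taken from the paper.
    """
    dictionary: Dict[Tuple[int, int], int] = {}
    e: List[int] = []
    for k, value in enumerate(s):
        if k < 2:
            # The first two samples have no two-sample context: copy them.
            e.append(value)
            continue
        context = (s[k - 2], s[k - 1])
        if context in dictionary:
            # Context seen before: predict the sample that followed it last time.
            predicted = dictionary[context]
        else:
            # Unknown context: fall back to the linear predictor.
            predicted = a * s[k - 2] + b * s[k - 1]
        e.append(value - predicted)
        # Always refresh the entry so the dictionary tracks the signal.
        dictionary[context] = value
    return e


signal = [0, 3, 5, 3] * 4  # toy periodic signal with period 4
residuals = adp_encode(signal)
print(residuals)
```

On this toy signal every context has been seen once after the first period, so all later residuals are zero. That is the behaviour a downstream entropy or dictionary coder can exploit.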

2.2. Decoding Algorithm Introduction

- (1) The first two samples, e(1) and e(2), of the input array to be decoded have no two preceding adjacent samples. They therefore cannot be decoded by prediction and are output directly, unchanged.

- (2) Early in the decoding process the dictionary is incomplete, so it may hold no predicted value for the current context. In that case, Equation (2), u′(k) = a·u(k−2) + b·u(k−1), computed from the two preceding adjacent samples of the decoded output, u(k−2) and u(k−1), is used as the predicted value of the sample being decoded.

| Algorithm 2. Steps of the decoding algorithm |
|---|
| Input: sequence to be decoded {e(k)}, k = 1, …, n; Output: decoded sequence {u(k)} |
| Initialize: dictionary H ← empty |
| Initialize: address index k ← 0 |
| while k < n (n is the length of the input array {e(k)}) |
| do k ← k + 1 |
| if k < 3 |
| then u(k) ← e(k) |
| else |
| if H(u(k−2), u(k−1)) = NULL |
| then u′(k) ← a·u(k−2) + b·u(k−1) |
| else u′(k) ← H(u(k−2), u(k−1)) |
| end if |
| u(k) ← e(k) + u′(k) |
| H(u(k−2), u(k−1)) ← u(k) |
| end if |
| end while |
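The decoding steps can be sketched the same way. Again this is only an illustration under stated assumptions: `adp_decode` is a hypothetical name, the dictionary H is a Python `dict`, and the coefficients `a = -1`, `b = 2` are assumed values that must simply match whatever the encoder used. The decoder rebuilds the dictionary from its own output, so no side information is needed.

```python
from typing import Dict, List, Tuple


def adp_decode(e: List[int], a: int = -1, b: int = 2) -> List[int]:
    """Rebuild samples u from residuals e, following the steps of Algorithm 2.

    a and b must equal the encoder's coefficients. The defaults are an
    assumption for illustration, not values taken from the paper.
    """
    dictionary: Dict[Tuple[int, int], int] = {}
    u: List[int] = []
    for k, residual in enumerate(e):
        if k < 2:
            # The first two values were stored verbatim by the encoder.
            u.append(residual)
            continue
        context = (u[k - 2], u[k - 1])
        if context in dictionary:
            predicted = dictionary[context]
        else:
            predicted = a * u[k - 2] + b * u[k - 1]
        value = residual + predicted
        u.append(value)
        # Mirror the encoder's update so both dictionaries stay in step.
        dictionary[context] = value
    return u


residuals = [0, 3, -1, -4, -1, 6] + [0] * 10
print(adp_decode(residuals))
```

Because the prediction depends only on already-decoded samples, the reconstruction is exact as long as integer arithmetic is used throughout.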

3. Experiment and Result Analysis

3.1. Experiment

- (1)

- The first set of data is a periodic signal that changes period and amplitude with time to verify the feasibility of a lossless compression system.

- (2)

- The second set of data is a periodic signal with a period T = 200 and a signal length of L = 10 K. It is used to verify the effect of block size on compression efficiency. The formula of the signal iswhere = 0, 1, …, 50.

- (3)

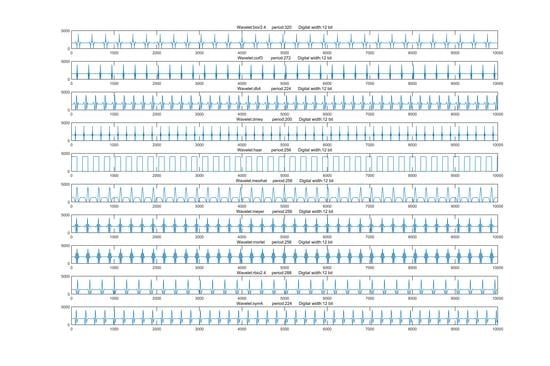

- The last set of data we selected is 10 different periodic signals, whose periods and amplitudes are different. Because the wavelet basis function has rich frequency domain information, we perform periodic extension of the wavelet basis function to obtain the above-mentioned 10 different sets of periodic signals. The detailed information of these 10 sets of periodic signals is shown in Table 1.

- (1) Segment the one-dimensional periodic signal along the period of the signal.

- (2) Arrange the segments into a two-dimensional signal.
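As a small illustration of these two steps, the sketch below cuts a 1-D signal into rows of one period each. The helper name `arrange_by_period` is hypothetical, and dropping any incomplete trailing period is an assumption made here for simplicity.

```python
from typing import List


def arrange_by_period(signal: List[int], period: int) -> List[List[int]]:
    """Cut a 1-D signal into consecutive rows of `period` samples each.

    Samples left over after the last complete period are discarded
    (an assumption of this sketch).
    """
    if period <= 0:
        raise ValueError("period must be positive")
    rows = len(signal) // period
    return [signal[r * period:(r + 1) * period] for r in range(rows)]


two_d = arrange_by_period(list(range(10)), period=4)
print(two_d)
```

When the row length matches the true period, each column holds samples from the same phase of the signal, which is what makes the 2-D arrangement highly correlated.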

- (1) Segment the 1-D signal along multiples of the signal's period.

- (2) Arrange the signal into a 2-D signal.

- (3) Divide the 2-D signal into 8×8 matrix blocks.

- (4) Perform a 2-D discrete cosine transform on each 8×8 matrix block.

- (5) Quantize the transformed output.
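The transform and quantization steps of this list, which read as the 2-D DCT comparison pipeline, can be sketched in plain Python. This is a hedged illustration only: the helper names are hypothetical, the orthonormal DCT-II is assumed as the transform variant, and the uniform quantizer with a `step` parameter is an assumption, since the quantization rule is not given in this text.

```python
import math


def dct_matrix(n=8):
    """Orthonormal DCT-II basis matrix of size n x n."""
    rows = []
    for u in range(n):
        scale = math.sqrt((1 if u == 0 else 2) / n)
        rows.append([scale * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                     for x in range(n)])
    return rows


def matmul(p, q):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))] for i in range(len(p))]


def dct2_block(block):
    """2-D DCT of a square block, computed as C * block * C^T."""
    c = dct_matrix(len(block))
    c_t = [list(col) for col in zip(*c)]
    return matmul(matmul(c, block), c_t)


def quantize(coeffs, step=1.0):
    """Uniform quantization; the step size is a hypothetical parameter."""
    return [[round(v / step) for v in row] for row in coeffs]


flat_block = [[10] * 8 for _ in range(8)]  # constant 8x8 test block
quantized = quantize(dct2_block(flat_block))
print(quantized[0][0])
```

A constant block puts all of its energy into the single DC coefficient, and every other quantized coefficient is zero. The rounding in `quantize` is also where this pipeline becomes lossy.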

- (1) Segment the 1-D signal along multiples of the signal's period.

- (2) Arrange the signal into a 2-D signal.

- (3) Perform a 3-layer lifting wavelet transform on the 2-D signal.

- (4) Quantize the transformed output.
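To make the lifting step concrete, the sketch below applies three levels of an integer Haar lifting transform (split, predict, update). Several things here are assumptions: the text does not say which wavelet is used, the sketch works on a 1-D row rather than the full 2-D signal for brevity, and the function names are hypothetical.

```python
from typing import List, Tuple


def haar_lift(x: List[int]) -> Tuple[List[int], List[int]]:
    """One lifting level of the integer Haar transform.

    Requires an even-length input. Returns (approximation, detail).
    """
    if len(x) % 2:
        raise ValueError("input length must be even")
    even, odd = x[0::2], x[1::2]
    # Predict step: each odd sample is predicted by its even neighbour.
    detail = [o - e for e, o in zip(even, odd)]
    # Update step: keep a running (floored) average in the approximation.
    approx = [e + (d >> 1) for e, d in zip(even, detail)]
    return approx, detail


def haar_lift_multilevel(x: List[int], levels: int = 3):
    """Apply haar_lift repeatedly to the approximation band."""
    approx = list(x)
    details = []
    for _ in range(levels):
        approx, detail = haar_lift(approx)
        details.append(detail)
    return approx, details


approx, details = haar_lift_multilevel([4, 6, 10, 12, 8, 8, 2, 0], levels=3)
print(approx, details)
```

Each level halves the approximation band, so three levels need an input length divisible by 8. A 2-D version would apply the same step along rows and then along columns.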

- (1) The actual value is predicted with a prediction formula.

- (2) The output result signal is calculated from the encoded number, its predicted value, and the prediction coefficient.
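These two steps read as the DPCM comparison method. Since the exact formulas are missing from this text, the sketch below assumes the simplest first-order form: the prediction is the previous sample scaled by an integer coefficient, and the output is the difference between the sample and its prediction. The names `dpcm_encode`, `dpcm_decode`, and `coeff` are hypothetical.

```python
from typing import List


def dpcm_encode(s: List[int], coeff: int = 1) -> List[int]:
    """First-order DPCM: output s[k] - coeff * s[k-1] (assumed form)."""
    if not s:
        return []
    e = [s[0]]  # the first sample has no predecessor and is stored as is
    for k in range(1, len(s)):
        predicted = coeff * s[k - 1]
        e.append(s[k] - predicted)
    return e


def dpcm_decode(e: List[int], coeff: int = 1) -> List[int]:
    """Invert dpcm_encode by adding the prediction back to each residual."""
    if not e:
        return []
    s = [e[0]]
    for k in range(1, len(e)):
        s.append(e[k] + coeff * s[k - 1])
    return s


residuals = dpcm_encode([0, 3, 5, 3])
print(residuals, dpcm_decode(residuals))
```

Unlike the dictionary-based predictor, this predictor never learns the waveform, so its residuals repeat every period instead of collapsing to zero.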

3.2. Experimental Evaluation Index

3.3. Result Analysis

4. Conclusions

- (1) The results show that the coder output has a strong "energy concentration" characteristic: it contains many zero values.

- (2) Our proposed method is strongly adaptive: changes in the amplitude and period of the periodic signal have little effect on its prediction accuracy.

- (3) Compared with 2-D DCT, 2-D LWT, and DPCM, our proposed method compresses periodic signals more effectively. Combined with LZW, it achieves a high compression ratio on periodic signals.

- (4) The complexity of the method proposed in this paper is low, at O(n).

Author Contributions

Funding

Conflicts of Interest

Table 1. Details of the 10 periodic signals.

| Wavelet | Period | Length | Digital Width (Bit) |
|---|---|---|---|
| bior2.4 | 320 | 102.4 K | 12 |
| coif3 | 272 | 74 K | 12 |
| db4 | 224 | 50 K | 12 |
| dmey | 200 | 40 K | 12 |
| haar | 256 | 65.5 K | 12 |
| mexihat | 256 | 65.5 K | 12 |
| meyer | 256 | 65.5 K | 12 |
| morlet | 256 | 65.5 K | 12 |
| rbio2.4 | 288 | 82.9 K | 12 |
| sym4 | 224 | 50 K | 12 |

| Coding Methods | CR (mean) | CR (min) | CR (max) | PSNR (dB) |
|---|---|---|---|---|
| Proposed method | 64.90 | 32.11 | 129.77 | - |
| 2-D DCT | 46.78 | 32.72 | 98.92 | 63.77 |
| 2-D LWT | 20.78 | 14.87 | 25.22 | 56.07 |
| DPCM | 21.00 | 11.31 | 50.63 | - |

| Coding Methods | Time Complexity |
|---|---|
| Proposed method | O(n) |
| DPCM | O(n) |
| 2-D DCT | O(n²) |
| 2-D LWT | O(n) |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dai, S.; Liu, W.; Wang, Z.; Li, K.; Zhu, P.; Wang, P. An Efficient Lossless Compression Method for Periodic Signals Based on Adaptive Dictionary Predictive Coding. Appl. Sci. 2020, 10, 4918. https://doi.org/10.3390/app10144918
