1. Introduction

Due to technical limitations [1], current satellites such as QuickBird, IKONOS, WorldView-2, and GeoEye-1 cannot acquire high spatial resolution multispectral (MS) images directly. Instead, they acquire an image pair with complementary features: a high spatial resolution panchromatic (PAN) image and a low spatial resolution MS image with rich spectral information. To obtain high-quality products, pansharpening fuses the MS and PAN images to generate a high resolution multispectral (HRMS) image with the spatial resolution of the PAN image and the spectral resolution of the MS image [2,3]. It can be cast as a typical image fusion [4] or super-resolution [5] problem and has a wide range of real-world applications, such as enhancing visual interpretation, monitoring land cover change [6], and object recognition [7].

Over decades of study, a large number of pansharpening methods have been proposed in the remote sensing literature [3]. Most of them fall into two main classes [2,3,8]: (1) component substitution (CS)-based methods and (2) multiresolution analysis (MRA)-based methods. The CS class first transforms the original MS image into a new space and then substitutes one component of the transformed MS image with the histogram-matched PAN image. Representative CS methods are Intensity-Hue-Saturation (IHS) [9], generalized IHS (GIHS) [10], principal component analysis (PCA) [11], and Brovey [12], among many others [13,14,15,16,17]. The MRA-based class, also known as the class of spatial methods, extracts the high spatial frequencies of the high resolution PAN image through multiresolution analysis tools (e.g., wavelets or Laplacian pyramids) to enhance the spatial information of the MS image. Representative MRA-based methods are high-pass filtering (HPF) [18], smoothing filter-based intensity modulation (SFIM) [19], and the generalized Laplacian pyramid (GLP) [20,21], among many others [22,23]. Both classes are fast and easy to implement. However, the CS-based methods cannot eliminate local dissimilarities between the PAN and MS images, which results in spectral distortion, whereas the MRA-based methods produce less spectral distortion but offer limited spatial enhancement. The CS-based and MRA-based methods therefore usually have complementary performance in improving the spatial quality of MS images while maintaining the corresponding spectral information.

To balance this trade-off between the CS-based and MRA-based methods, hybrid methods that combine both classes have been proposed in recent years. For example, Otazu et al. proposed the additive wavelet luminance proportional (AWLP) [24] method, which implements the “à trous” wavelet transform in the IHS space. Shah et al. [25] proposed a method that combines an adaptive PCA method with the discrete contourlet transform. Liao et al. [26] proposed a framework, called guided filter PCA (GFPCA), which performs a guided filter in the PCA domain. Although the hybrid methods outperform the individual CS-based or MRA-based methods, the improvements are limited by their hand-crafted design.

Recently, variational optimization (VO)-based methods [27,28,29,30,31] and learning-based methods have achieved significant progress over the classical methods in the spatial and spectral quality of the fused images. Among the learning-based methods, convolutional neural network (CNN)-based methods are the most popular, owing to their powerful capability and end-to-end learning strategy. For instance, Masi et al. introduced a three-layer CNN architecture for pansharpening in [32]. Another CNN-based model, which focuses on preserving spatial and spectral information, was designed by Yang et al. in [33]. Inspired by these works, Liu et al. [34] proposed a two-stream CNN architecture with an $\ell_1$-norm loss function to further improve the spatial quality. Zheng et al. [35] proposed a CNN-based method that uses a deep hyperspectral prior and a dual-attention residual network to address the problem that the discriminative ability of CNNs is sometimes hindered. Despite their ability to extract features automatically and their state-of-the-art performance, CNN-based methods usually require intensive computational resources [36]. In addition, unlike the CS-based and MRA-based methods, CNN-based methods lack interpretability and behave more like a black box. A detailed summary of the VO-based methods and relevant works can be found in [2]. We do not discuss the VO class further, since this paper focuses on a combination of the other three classes.

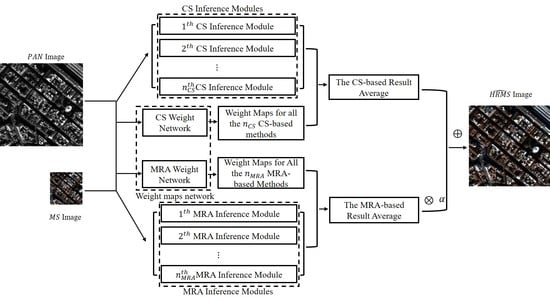

In this paper, we propose a pansharpening weight network (PWNet) to bridge the classical methods (i.e., CS-based and MRA-based methods) and the learning-based methods (typically the CNN-based methods). On the one hand, like the hybrid methods, PWNet combines the merits of the CS-based and MRA-based methods. On the other hand, like the learning-based methods, PWNet is data-driven, effective, and efficient. To achieve this, PWNet uses the CS-based and MRA-based methods as inference modules and uses a CNN to learn adaptive weight maps for weighting the results of the classical methods. Unlike the above hybrid methods with their hand-crafted design, PWNet can be seen as an automatic, data-driven hybrid method for pansharpening. In addition, the structure of PWNet is kept very simple to ease training and save computation time.

The main contributions of this work are as follows:

A model averaging network, called the pansharpening weight network (PWNet), is proposed. PWNet is the first attempt to combine the classical methods via an end-to-end trainable network.

PWNet integrates the complementary characteristics of the CS-based and MRA-based methods and the flexibility of the learning-based (typically the CNN-based) methods, providing an avenue to bridge the gap between them.

PWNet is data-driven and can automatically weight the contributions of different CS-based and MRA-based methods on different data sets. By visualizing the weight maps, we show that PWNet is adaptive and robust to different data sets.

Extensive experiments on three kinds of data sets show that the fusion results obtained by PWNet achieve state-of-the-art performance compared with the CS-based and MRA-based methods and with other CNN-based methods.

The paper is organized as follows. In Section 2, we briefly introduce the background of the CS-based, MRA-based, and learning-based methods. Section 3 introduces the motivation, network architecture, and other details of PWNet. In Section 4, we conduct the experiments, analyze the parameter settings and time complexity, and present comparisons with state-of-the-art methods at reduced and full scales. Finally, we draw conclusions in Section 5.

2. Related Work

Notations. We denote the low resolution multispectral (LRMS) image by $\mathrm{MS} \in \mathbb{R}^{w \times h \times N}$, where $w$, $h$, and $N$ are the width, the height, and the number of spectral bands of the LRMS image, respectively. We denote the high resolution PAN image by $P \in \mathbb{R}^{rw \times rh}$, where $r$ is the spatial resolution ratio between MS and PAN, and denote by $\widehat{\mathrm{MS}} \in \mathbb{R}^{rw \times rh \times N}$ the reconstructed HRMS image. We let $\mathrm{MS}_k$ represent the $k$th band of the LRMS image, where $k = 1, \ldots, N$, and let $\widetilde{\mathrm{MS}}_k$ represent the version of $\mathrm{MS}_k$ upsampled by ratio $r$. For notational simplicity, we also denote by $P$ the histogram-matched PAN image. Based on these symbols, we next briefly introduce the main idea of the CS-based, MRA-based, and learning-based methods.

2.1. The CS-Based Methods

The CS-based methods assume that the spatial and spectral information of the LRMS image can be separated by a projection or transformation of the original LRMS image [3,37]. The CS class usually has four steps: (1) upsample the LRMS image to the size of the PAN image; (2) use a linear transformation to project the upsampled LRMS image into another space; (3) replace the component containing the spatial information with the PAN image; (4) perform an inverse transformation to bring the transformed MS data back to their original space and obtain the pansharpened MS image (i.e., the estimated HRMS). Because the substitution changes the low spatial frequencies of the MS image, it usually introduces spectral distortion. Thus, a spectral matching procedure (i.e., histogram matching) is often applied before the substitution.

Mathematically, the above fusion process can be simplified so that the forward and backward transformations need not be computed explicitly, as shown in Figure 1. The CS class then has the following equivalent form:

$$\widehat{\mathrm{MS}}_k = \widetilde{\mathrm{MS}}_k + g_k \left(P - I_L\right), \quad k = 1, \ldots, N, \tag{1}$$

where $g_1, \ldots, g_N$ are the injection gains, and $I_L$ is a linear combination of the upsampled LRMS image bands, often called the intensity component, defined as

$$I_L = \sum_{i=1}^{N} w_i \widetilde{\mathrm{MS}}_i, \tag{2}$$

where the weights $w_1, \ldots, w_N$ usually correspond to the first row of the forward transformation matrix and measure the degree of spectral overlap between the MS and PAN channels.
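The detail-injection form of the CS class (each upsampled band plus a gain times the difference between the matched PAN image and the intensity component) can be illustrated with a minimal NumPy sketch. The function names `match_mean_std` and `cs_fuse` are hypothetical, the equal band weights and unit gains are illustrative defaults rather than the settings of any cited method, and histogram matching is approximated here by matching the mean and standard deviation only.

```python
import numpy as np

def match_mean_std(pan, reference):
    """Simplified stand-in for histogram matching (assumption):
    rescale `pan` so that its mean and std match those of `reference`."""
    pan_std = pan.std()
    scale = reference.std() / pan_std if pan_std > 0 else 1.0
    return (pan - pan.mean()) * scale + reference.mean()

def cs_fuse(ms_up, pan, weights=None, gains=None):
    """Component-substitution fusion in detail-injection form.

    ms_up : (H, W, N) MS image already upsampled to the PAN size.
    pan   : (H, W) PAN image.
    """
    ms_up = np.asarray(ms_up, dtype=float)
    pan = np.asarray(pan, dtype=float)
    n_bands = ms_up.shape[2]
    if weights is None:
        weights = np.full(n_bands, 1.0 / n_bands)  # equal weights (assumption)
    if gains is None:
        gains = np.ones(n_bands)                   # unit gains (assumption)
    # Intensity component: weighted sum of the upsampled MS bands.
    intensity = np.tensordot(ms_up, np.asarray(weights), axes=([2], [0]))
    # Spatial detail: matched PAN minus the intensity component.
    detail = match_mean_std(pan, intensity) - intensity
    # Inject the detail into every band, scaled by the band's gain.
    return ms_up + detail[:, :, None] * np.asarray(gains)[None, None, :]
```

With equal weights and unit gains, the band average of the fused image equals the matched PAN image, which is the substitution that the transform-based description performs implicitly.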

Numerous CS-based methods have been proposed to sharpen the LRMS images according to Equation (1) and the flowchart in Figure 1. The CS class includes IHS [9], which exploits the transformation into the IHS color space, and its generalized version GIHS [10]; PCA [11], based on the statistical irrelevance of each principal component; Brovey [12], based on a multiplicative injection scheme; Gram-Schmidt (GS) [13], which conducts the Gram-Schmidt orthogonalization procedure, and its adaptive version (GSA) [15], which uses a weighted average of the MS bands that minimizes the mean square error (MSE) with respect to a low-pass filtered version of the PAN image; band-dependent spatial detail (BDSD) [14] and its enhanced version (i.e., BDSD with physical constraints: BDSD-PC) [16]; partial replacement adaptive component substitution (PRACS) [17], based on the concept of partial replacement of the intensity component; and so on. The methods differ in the projections of the MS images used in the process and in the design of the injection gains. Although they perform very well in improving the spatial quality of LRMS images, they usually suffer from heavy spectral distortion in some scenarios, due to local dissimilarities or because the spatial structure is not well separated from the spectral information. Refer to [3] for a more detailed discussion.

2.2. The MRA-Based Methods

Unlike the CS-based methods, the MRA class is based on a multi-scale decomposition or a low-pass filter (equivalent to a single-scale decomposition) applied to the PAN image [3,37]. These methods first extract the spatial details over a wide range of scales from the high resolution PAN image, or from the difference between the PAN image and its low-pass filtered version $P_L$, and then inject the extracted spatial details into each band of the upsampled LRMS image. Figure 2 shows the general flowchart of the MRA-based methods.

Generally, for each band $k = 1, \ldots, N$, the MRA-based methods can be formulated as

$$\widehat{\mathrm{MS}}_k = \widetilde{\mathrm{MS}}_k + g_k \left(P - P_L\right). \tag{3}$$

As Equation (3) shows, different MRA-based methods are distinguished by the way of obtaining $P_L$ and by the design of the injection gains $g_k$. Several methods belonging to this class have been proposed, such as HPF [18], which uses a box mask and additive injection, SFIM [19], the decimated wavelet transform using an additive injection model (Indusion) [23], AWLP [24], GLP with a modulation transfer function (MTF)-matched filter (denoted by MTF-GLP) [21], its HPM injection version (MTF-GLP-HPM) [22] and context-based decision version (MTF-GLP-CBD) [38], the à trous wavelet transform using the model 3 (ATWT-TM3) [39], and so on.
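As a rough illustration of the MRA scheme, the following NumPy sketch obtains the low-pass PAN image with a box (mean) filter and injects the resulting detail additively, in the spirit of HPF. The function names `box_lowpass` and `mra_fuse`, the window size, the edge padding, and the unit gains are all assumptions made for this example and do not reproduce the exact settings of [18].

```python
import numpy as np

def box_lowpass(image, size=5):
    """Mean filter with an odd window `size`, using edge padding (assumption)."""
    image = np.asarray(image, dtype=float)
    pad = size // 2
    padded = np.pad(image, pad, mode="edge")
    out = np.zeros_like(image)
    # Sum the shifted copies of the padded image that cover the window.
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out / (size * size)

def mra_fuse(ms_up, pan, gains=None, size=5):
    """Additive detail injection: band + gain * (PAN - low-pass PAN).

    ms_up : (H, W, N) MS image already upsampled to the PAN size.
    pan   : (H, W) PAN image.
    """
    ms_up = np.asarray(ms_up, dtype=float)
    pan = np.asarray(pan, dtype=float)
    if gains is None:
        gains = np.ones(ms_up.shape[2])  # unit gains (assumption)
    detail = pan - box_lowpass(pan, size)
    return ms_up + detail[:, :, None] * np.asarray(gains)[None, None, :]
```

Replacing `box_lowpass` with a different filter, or making the gains depend on the image content, changes which member of the MRA family the sketch resembles.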

The MRA-based methods emphasize the extraction of multi-scale, local details from the PAN image; they reduce spectral distortion well but compromise spatial enhancement. To address this problem, many approaches have been proposed that use different decomposition schemes (e.g., morphological filters [40]) and optimize the injection gains.

2.3. The Learning-Based Methods

Apart from the traditional CS-based and MRA-based methods, learning-based methods have been proposed for or applied to pansharpening, among which the CNN-based methods are the most popular [41]. The CNN-based methods are very flexible, since a CNN can be designed with many different architectures. Owing to their end-to-end and data-driven properties, they achieve state-of-the-art performance in some studies [32,33,34,35]. Once a network architecture is designed, training image pairs, with low resolution MS (LRMS) images as network input and high resolution MS (HRMS) images as network output, are needed to learn the network parameters $\Theta$. The learning procedure depends on the choice of loss function and optimization method, and the learned result varies with these choices. However, such ideal image pairs are unavailable and are usually simulated, under the scale-invariance assumption, by properly downsampling both the PAN and the original MS images to a reduced resolution. The reduced-resolution MS images and the original MS images can then be used as input-output pairs.
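The simulation of training pairs at reduced resolution can be sketched as follows. This is a minimal illustration only: the function names are hypothetical, and plain block averaging is used as the downsampling operator, which is an assumption of this example (the text above only requires that the images be properly downsampled).

```python
import numpy as np

def block_mean_downsample(image, ratio):
    """Downsample by averaging non-overlapping ratio x ratio blocks.

    Works for (H, W) and (H, W, N) arrays; rows and columns that do not
    fill a complete block are cropped.
    """
    image = np.asarray(image, dtype=float)
    h, w = image.shape[:2]
    h_c, w_c = h - h % ratio, w - w % ratio
    image = image[:h_c, :w_c]
    new_shape = (h_c // ratio, ratio, w_c // ratio, ratio) + image.shape[2:]
    return image.reshape(new_shape).mean(axis=(1, 3))

def make_training_pair(ms, pan, ratio=4):
    """Build one reduced-resolution training sample.

    Inputs : the MS and PAN images, both degraded by `ratio`.
    Target : the original MS image, which plays the role of the HRMS image.
    """
    ms_lr = block_mean_downsample(ms, ratio)
    pan_lr = block_mean_downsample(pan, ratio)
    target = np.asarray(ms, dtype=float)
    return (ms_lr, pan_lr), target
```

After degradation, the PAN input has the same spatial size as the target, so a network trained on such pairs sees the same resolution ratio that it will face at full scale.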

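Given the input-output MS pairs of $\mathrm{MS}^{(i)}$ with low resolution and $Y^{(i)}$ with high resolution, and aided by the auxiliary PAN image $P^{(i)}$, $i = 1, \ldots, n$, the CNN-based methods optimize the parameters by minimizing the following cost function:

$$\mathcal{L}(\Theta) = \sum_{i=1}^{n} \left\| f_{\Theta}\left(\mathrm{MS}^{(i)}, P^{(i)}\right) - Y^{(i)} \right\|_F^2, \tag{4}$$

where $f_{\Theta}$ denotes a neural network that takes $\Theta$ as parameters, and $\|\cdot\|_F$ is the Frobenius norm, which is defined as the square root of the sum of the absolute squares of the elements.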
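To make the cost function concrete, the sketch below evaluates a Frobenius-norm cost over a set of training samples. The names `frobenius_cost` and `toy_network` are hypothetical; the toy "network" is a one-parameter stand-in rather than a CNN, it takes an already upsampled MS image for simplicity, and the use of the squared norm summed (not averaged) over samples is an assumption of this example.

```python
import numpy as np

def toy_network(theta, ms_up, pan):
    """Hypothetical one-parameter model: add theta times PAN to every band.
    A real CNN would replace this function."""
    return ms_up + theta * pan[:, :, None]

def frobenius_cost(network, theta, ms_inputs, pan_inputs, targets):
    """Sum over samples of the squared Frobenius norm of the residual
    between the network output and the high resolution target."""
    total = 0.0
    for ms_up, pan, target in zip(ms_inputs, pan_inputs, targets):
        residual = network(theta, ms_up, pan) - target
        # Squared Frobenius norm = sum of squared entries of the residual.
        total += np.sum(residual ** 2)
    return float(total)
```

In practice the parameters would be updated by a gradient-based optimizer; the sketch only shows what quantity is being minimized.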

To further improve the performance of CNN-based methods, recent work mainly resorts to deep residual architectures [42] or increases the depth of the model to extract multi-level abstract features [43]. However, these approaches require a large number of network parameters and impose a heavy computational burden [36]. Unlike the above CNN-based methods, which aim to generate the HRMS images or their residual images, we instead reduce the number of parameters and the computational requirements by learning weight maps for the CS-based and MRA-based methods. Refer to the following section for a more detailed discussion.
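The idea of weighting the outputs of classical methods with learned weight maps can be illustrated with a short NumPy sketch. It is not the PWNet architecture, which is described in the next section: the function names are hypothetical, the raw scores stand in for the output of a CNN, and the softmax normalization across methods as well as the per-pixel, per-band shape of the weight maps are assumptions of this example.

```python
import numpy as np

def softmax(scores, axis=0):
    """Numerically stable softmax along `axis`."""
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def weighted_fusion(candidates, scores):
    """Combine the outputs of M classical methods with weight maps.

    candidates : (M, H, W, N) pansharpened images from M CS/MRA methods.
    scores     : (M, H, W, N) raw scores, e.g. produced by a CNN.
    Returns the (H, W, N) weighted average, with weights that are
    non-negative and sum to one over the M methods at every position.
    """
    candidates = np.asarray(candidates, dtype=float)
    weights = softmax(np.asarray(scores, dtype=float), axis=0)
    return (weights * candidates).sum(axis=0)
```

Because only the weight maps are learned while the candidate images come from fixed classical methods, the number of trainable parameters can stay small compared with a network that generates the HRMS image directly.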