Semi-Supervised Segmentation for Coastal Monitoring Seagrass Using RPA Imagery

Abstract

1. Introduction

- Can FCNNs model high-resolution aerial imagery from a small set of geographically referenced image shapes?

- How does their performance compare with standard OBIA/GIS frameworks?

- How accurately can Zostera noltii and Zostera angustifolia be modeled, along with all other relevant coastal features within the study site?

2. Methods

2.1. Study Site

2.2. Data Collection

2.3. In Situ Survey

- Background sediment: dry sand and other bare ground

- Algae: Microphytobenthos, Enteromorpha and other macroalgae (including Fucus)

- Seagrass: Zostera noltii and Zostera angustifolia, merged into a single class

- Other plants: Saltmarsh
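The grouping listed above can be written down as a simple lookup table. The following is a minimal sketch only: the dictionary name, the helper function and the exact label strings are illustrative assumptions, not the authors' code or survey schema.

```python
# Hypothetical lookup from a surveyed cover type to the broad group it is
# listed under in the survey description. All names here are illustrative.
SURVEY_GROUPS = {
    "dry sand": "background sediment",
    "other bare ground": "background sediment",
    "microphytobenthos": "algae",
    "Enteromorpha": "algae",
    "other macroalgae": "algae",  # includes Fucus
    "Zostera noltii": "seagrass",        # both Zostera species are
    "Zostera angustifolia": "seagrass",  # merged into one seagrass class
    "saltmarsh": "other plants",
}


def group_of(cover_type: str) -> str:
    """Return the broad group for a surveyed cover type (KeyError if unknown)."""
    return SURVEY_GROUPS[cover_type]


if __name__ == "__main__":
    for cover in ("Zostera noltii", "Zostera angustifolia", "saltmarsh"):
        print(cover, "->", group_of(cover))
```

Keeping the two Zostera species as separate keys that resolve to the same value makes the merge explicit and easy to undo if the species were ever to be mapped separately.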

2.4. Data Pre-Processing for FCNNs

2.4.1. Polygons to Segmentation Masks for FCNNs

2.4.2. Vegetation, Soil and Atmospheric Indices for FCNNs

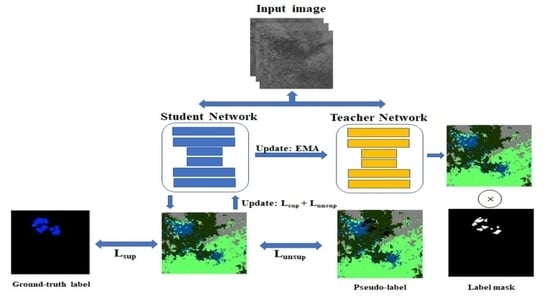

2.5. Fully Convolutional Neural Networks

2.5.1. Weighted Training for FCNNs

2.5.2. Supervised Loss

2.5.3. Unsupervised Loss

2.5.4. Training Parameters

2.6. OBIA

2.7. Accuracy Assessment

3. Results

3.1. SONY ILCE-6000 Results

3.2. MicaSense RedEdge3 Results

3.3. Habitat Maps

4. Discussion

4.1. FCNNs Convergence

4.2. SONY ILCE-6000 Analysis

4.3. MicaSense RedEdge3 Analysis

4.4. Overall Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RS | Remote Sensing |
| FCNN | Fully Convolutional Neural Network |
| MRS | Multi-Resolution Segmentation |
| OBIA | Object-Based Image Analysis |


P: precision; R: recall; F1: F1 score.

| Class | MicaSense OBIA: P | MicaSense OBIA: R | MicaSense OBIA: F1 | MicaSense FCNN: P | MicaSense FCNN: R | MicaSense FCNN: F1 | SONY OBIA: P | SONY OBIA: R | SONY OBIA: F1 | SONY FCNN: P | SONY FCNN: R | SONY FCNN: F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DS | 0.99 | 0.62 | 0.76 | 0.99 | 0.96 | 0.97 | 1.0 | 1.0 | 1.0 | 0.99 | 1.0 | 0.99 |
| OB | 0.56 | 0.42 | 0.48 | 0.99 | 0.97 | 0.98 | 0.99 | 0.98 | 0.99 | 0.99 | 0.97 | 0.98 |
| EM | 0.73 | 0.95 | 0.83 | 0.90 | 0.96 | 0.93 | 0.25 | 0.97 | 0.40 | 0.18 | 0.57 | 0.27 |
| MB | 0.008 | 0.72 | 0.01 | 0.66 | 0.89 | 0.76 | 1.0 | 0.88 | 0.93 | 0.30 | 0.99 | 0.46 |
| OM | 0.25 | 0.49 | 0.33 | 0.36 | 0.58 | 0.45 | 0.02 | 0.83 | 0.05 | 0.66 | 0.55 | 0.60 |
| SG | 0.67 | 0.95 | 0.78 | 0.31 | 0.70 | 0.43 | 0.64 | 0.93 | 0.76 | 0.27 | 0.93 | 0.42 |
| SM | 0.99 | 0.96 | 0.98 | 0.97 | 0.99 | 0.98 | 0.99 | 0.73 | 0.84 | 0.97 | 0.81 | 0.88 |
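The F1 columns in the table follow from the precision and recall columns through the standard harmonic-mean definition, F1 = 2PR / (P + R). The short sketch below recomputes two entries as a sanity check; the function name is an assumption made for illustration, and because the tabulated P and R are already rounded to two decimals, a recomputed F1 can differ from the reported one in the last digit.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # First (P, R) pair of the DS row: 0.99 and 0.62, reported F1 0.76.
    print(round(f1_score(0.99, 0.62), 2))
    # First (P, R) pair of the OB row: 0.56 and 0.42, reported F1 0.48.
    print(round(f1_score(0.56, 0.42), 2))
```

A high precision paired with a low recall (or the reverse) pulls F1 well below their arithmetic mean, which is why classes with very low precision in the table score poorly on F1 despite high recall.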


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hobley, B.; Arosio, R.; French, G.; Bremner, J.; Dolphin, T.; Mackiewicz, M. Semi-Supervised Segmentation for Coastal Monitoring Seagrass Using RPA Imagery. Remote Sens. 2021, 13, 1741. https://doi.org/10.3390/rs13091741
