Data driven archaeological predictive modeling is a fundamentally inductive process where significant datasets are proportionally compiled to make decision support tools based on rules defined by site locations. Datasets can include soil type or land-use maps, distance to water, topography (slope, aspect,

etc.) and defensive concerns (prominence). These can be compiled using logistic regression into models that note high and low probabilities of archaeological sites in a landscape [

11]. The utility of accurate predictive modeling to resource-starved cultural resource management needs little explanation; however, Harrower has noted that the ever increasing resolution of remotely sensed data has seen a move from more landscape focused studies based on inductive modeling to identifying individual sites in the landscape [

12]. Much has been published on the latter in recent years with exciting results, especially in the field of airborne laser scanning, but site-focused approaches to prospection are less useful as large-scale decision support tools. As Kvamme has noted, detection is as much about understanding the absence as the presence of sites, and this involves exploring and investigating the wider landscape [

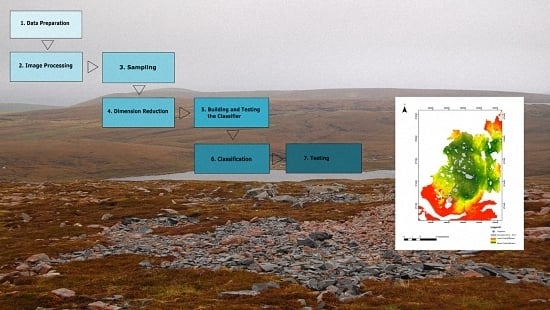

13]. Until recently, inductive predictive modeling using higher resolution data would have been time consuming to the point of redundancy. Protocols presented in this paper attempt to address this using machine-learning techniques, dimension reduction and classification to generate posterior probability maps useful as decision support tools.
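As an illustration of the logistic-regression approach mentioned at the start of this section (not the LDA-based method this paper adopts), the sketch below fits a model to hypothetical site and non-site samples of three standardized predictors (distance to water, slope and prominence) and then scores every cell of a synthetic raster to produce a probability surface. The data, the function names `fit_logistic` and `predict_proba`, and the use of plain gradient descent in place of a statistics package are all assumptions made for the example.

```python
import numpy as np


def predict_proba(X, w, b):
    """Logistic (sigmoid) transform of the linear predictor X @ w + b."""
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))


def fit_logistic(X, y, lr=0.1, n_iter=5000):
    """Fit a logistic regression by batch gradient descent on the mean log-loss.

    X: (n_samples, n_features) array of standardized predictor values
    y: (n_samples,) array, 1 for a site location and 0 for a non-site location
    """
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(n_iter):
        p = predict_proba(X, w, b)        # current site probabilities
        w -= lr * (X.T @ (p - y)) / n     # gradient of the mean log-loss w.r.t. w
        b -= lr * np.mean(p - y)          # gradient w.r.t. the intercept
    return w, b


rng = np.random.default_rng(0)
# Hypothetical standardized predictors: distance to water, slope, prominence.
# Synthetic "sites" sit closer to water, on gentler slopes and on higher ground.
sites = rng.normal([-1.0, -0.5, 1.0], 0.7, size=(100, 3))
non_sites = rng.normal([1.0, 0.5, -1.0], 0.7, size=(100, 3))
X = np.vstack([sites, non_sites])
y = np.concatenate([np.ones(100), np.zeros(100)])

w, b = fit_logistic(X, y)

# Score every cell of a hypothetical 50 x 50 raster stack holding the same layers.
cells = rng.normal(0.0, 1.0, size=(50 * 50, 3))
prob_map = predict_proba(cells, w, b).reshape(50, 50)
```

The fitted coefficients play the role of the weights that combine each environmental layer; a negative weight on distance to water, for example, means probability rises closer to water.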

The protocols used in this paper build on earlier research into the use of remotely sensed imagery and statistics to produce probability models. These approaches were used to develop what Chen et al. term ‘Direct Detection Models’, and the history and earlier iterations of this process are described more fully elsewhere [14,15]. Many of these applications were developed over the last two decades to help federal agencies in the USA comply with legislation that requires them to account for the impact of publicly funded projects on historic and archaeological sites (National Historic Preservation Act of 1966: Section 106) and to take responsibility for the management and preservation of these sites (National Historic Preservation Act of 1966: Section 110). Similar frameworks, such as the European Landscape Convention, which requires signatories to identify and assess significant changes within their cultural landscapes (ELC 6: C, 2000), lay a foundation for identifying and cataloguing sites in a European context. In both cases, the inventorying and recording of archaeological sites is crucial to their management and preservation. Traditional survey techniques are expensive and time-consuming. Direct detection models were developed to assist this process through the statistical analysis of data from a wide variety of sources, most notably imagery. These datasets come from a range of sensors, including synthetic aperture radar (SAR) and hyperspectral and multispectral instruments [16,17,18]. Because they can draw on multispectral data, direct detection models are well suited to identifying non-extant sites or sites with very subtle surface signatures. Early models used frequentist statistics to establish relationships between sites and background datasets [16]. A detection model developed for San Clemente Island, California, used data that included a high-resolution DEM derived from polarized SAR data collected by the JPL/NASA AirSAR platform (C-, L-, and P-band), polarized X-band SAR data collected by the GeoSAR platform (developed by JPL/NASA for use by the private sector), NDVI and Tasseled Cap indices derived from 4-band IKONOS multispectral satellite imagery, and digital elevation models generated from AirSAR C-band and GeoSAR X-band data. Points were randomly seeded away from known archaeological sites to act as non-sites. Individual Student’s t-tests were then performed comparing the mean site and non-site values for each of 11 datasets. Four of these 11 were repeatedly significant, and a predictive model was constructed using the best-performing threshold for each. Models were tested using a gain statistic, which compares the percentage of the total study area within each probability zone with the percentage of sites correctly identified in that zone [19]. The model produced gain statistics between 90 and 99.81; however, the highest values were calculated from zones covering only small percentages of the study area. Recent advances in computational capacity have allowed the use of statistical learning techniques. These methods can handle data of far greater dimensionality and allow more data to be used simultaneously; however, more data does not automatically produce better models. Higher dimensionality can result in overfitting, and the ability to understand causal relationships can be lost. Chen et al. presented a direct detection model for Fort Irwin that included many of the protocols employed in this paper, including band difference ratios and principal components analysis. Because their site data were represented as points, they employed a multi-ring annulus sampling approach, which resulted in very high dimensionality (d = 2160). Classification used linear discriminant analysis with a Bayes plug-in classifier, which assumes class-conditional normal distributions with a common covariance matrix. Results (as plotted on ROC curves) were promising, but producing a usable probability map was time-consuming given the large number of dimensions [14,15].
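To make the gain statistic concrete, the sketch below uses Kvamme's commonly cited form, G = 1 − (p_a / p_s), where p_a is the percentage of the study area in a probability zone and p_s is the percentage of known sites falling in it. Whether reference [19] uses exactly this form is an assumption here, and the zone figures are hypothetical, not values from the San Clemente Island model. The example shows why a near-perfect gain can come from a zone that covers very little of the landscape.

```python
def gain(pct_area: float, pct_sites: float) -> float:
    """Kvamme-style gain for one probability zone: 1 - (% area / % sites).

    pct_area:  percentage of the total study area classed in the zone
    pct_sites: percentage of known sites that fall inside the zone
    Values near 1 indicate strong performance, 0 is no better than chance,
    and negative values mean the zone holds fewer sites than its area implies.
    """
    if pct_sites <= 0:
        raise ValueError("pct_sites must be positive")
    return 1.0 - pct_area / pct_sites


# Hypothetical zones as (% of study area, % of sites captured).
zones = {
    "broad zone": (10.0, 80.0),
    "narrow zone": (0.5, 40.0),
}
for name, (area, sites) in zones.items():
    # Reported as a percentage, as the gain values in the text appear to be.
    print(f"{name}: gain = {100 * gain(area, sites):.2f}")
# broad zone: gain = 87.50
# narrow zone: gain = 98.75
```

The narrow zone scores higher yet captures only half as many sites, which is why very high gains drawn from small area percentages need to be read with caution.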

As noted above, intemperate weather coupled with the isolated nature of the sites means that only 20% of the NRFP landscape has been surveyed in two survey seasons, an area of less than 25 km². Resources therefore needed to be focused more efficiently, and it was hoped that a survey cost-efficacy model could be generated by combining a probability model, created from WorldView-2 (WV-2) imagery and other topographic data, with a least-cost model that records actual movement costs through the modern landscape. Many dykes are less than 2 m wide, so it was hoped that the higher resolution of WV-2 would enable identification at a finer scale. The protocols and applications presented in this paper build on those developed for direct detection modeling, but favor a linear discriminant analysis (LDA) classification approach over the frequentist approaches and Boolean merges used in earlier iterations [16,17,18]. They also use principal components analysis (PCA) to reduce redundancy rather than assessing relevance with Student’s t-tests.
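The PCA-then-LDA chain can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: pixels are treated as rows of a feature matrix (standing in for WV-2 bands, band difference ratios and topographic layers), the eight "bands" are synthetic and deliberately correlated, and the function names `pca_fit`, `pca_transform`, `lda_fit` and `lda_posterior` are hypothetical. LDA is written out directly as a Bayes plug-in classifier with a pooled covariance matrix, which is the class-conditional normal, common-covariance assumption described for the Fort Irwin model.

```python
import numpy as np


def pca_fit(X, k):
    """Return the column means and the top-k principal axes of X (rows = pixels)."""
    mu = X.mean(axis=0)
    # Rows of Vt are principal axes ordered by decreasing explained variance.
    _, _, Vt = np.linalg.svd(X - mu, full_matrices=False)
    return mu, Vt[:k]


def pca_transform(X, mu, axes):
    """Project centered pixels onto the retained principal axes."""
    return (X - mu) @ axes.T


def lda_fit(Z, y):
    """Class means, class priors and the inverse pooled within-class covariance."""
    classes = np.unique(y)
    means = np.array([Z[y == c].mean(axis=0) for c in classes])
    priors = np.array([np.mean(y == c) for c in classes])
    resid = np.vstack([Z[y == c] - means[i] for i, c in enumerate(classes)])
    cov = resid.T @ resid / (len(Z) - len(classes))
    return classes, means, priors, np.linalg.inv(cov)


def lda_posterior(Z, means, priors, cov_inv):
    """Bayes plug-in posterior P(class | z) from linear discriminant scores."""
    # delta_c(z) = z' S^-1 mu_c - 0.5 mu_c' S^-1 mu_c + log(pi_c)
    scores = (Z @ cov_inv @ means.T
              - 0.5 * np.sum(means @ cov_inv * means, axis=1)
              + np.log(priors))
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    expd = np.exp(scores)
    return expd / expd.sum(axis=1, keepdims=True)


rng = np.random.default_rng(1)
n_bg, n_dyke, n_bands = 400, 100, 8
# Synthetic, highly redundant "bands": 8 channels driven by 2 latent factors.
mixing = rng.normal(size=(2, n_bands))
latent = np.vstack([rng.normal([0.0, 0.0], 1.0, size=(n_bg, 2)),
                    rng.normal([2.5, -2.0], 1.0, size=(n_dyke, 2))])
X = latent @ mixing + rng.normal(0.0, 0.1, size=(n_bg + n_dyke, n_bands))
y = np.concatenate([np.zeros(n_bg, int), np.ones(n_dyke, int)])  # 1 = dyke pixel

mu, axes = pca_fit(X, k=3)          # 8 correlated bands reduced to 3 components
Z = pca_transform(X, mu, axes)
classes, means, priors, cov_inv = lda_fit(Z, y)
post = lda_posterior(Z, means, priors, cov_inv)
dyke_prob = post[:, 1]              # column order follows np.unique(y)
```

Reshaping `dyke_prob` back to the image grid would give a posterior probability map; reducing dimensionality first keeps the covariance matrix small and well conditioned, which is the practical problem the high-dimensional annulus approach ran into.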